3D Fully Convolutional Neural Networks with Intersection Over Union Loss for Crop Mapping from Multi-Temporal Satellite Images

Abstract

Information on cultivated crops is relevant for a large number of food security studies. Many studies have sought to generate this information from remote sensing images using machine learning methods. However, most of these methods do not account for the spatial-temporal relationships inherent in multi-temporal remote sensing images. In this paper, we explore the capability of a 3D Fully Convolutional Neural Network (FCN) to map crop types from multi-temporal images. In addition, we propose using the Intersection Over Union (IOU) loss function to increase the overlap between the predicted classes and the ground reference data. The proposed method was applied to identify soybean and corn in a study area in the US Corn Belt using multi-temporal Landsat images. Our method outperforms the compared methods, achieving a Kappa coefficient of 91.8%. We conclude that the IOU loss function is a better choice than the cross-entropy loss for learning individual crop types.

Index Terms— Crop mapping, deep learning, fully convolutional neural networks, time series.

1 Introduction

Multi-temporal remote sensing images are being generated at an unprecedented scale and rate by sources such as Sentinel-2 (5-day revisit), Landsat (16-day revisit), and PlanetScope (daily revisit). In light of this, there have been many scientific efforts to convert these huge quantities of multi-temporal images into useful information. One of these efforts is automatic crop mapping [1, 2, 3, 4], which is an active research area in remote sensing.

A decisive factor in crop classification from multi-temporal images is developing methods that can learn the temporal relationships in image time series. Traditional approaches such as the Multilayer Perceptron, Random Forest, Support Vector Machine, and Decision Tree [5, 6, 7, 8, 9] were developed for single-date images and cannot explicitly model the sequential relationships in multi-temporal data.

Recently, with the success of deep neural networks in learning high-level task-specific features, CNN- and LSTM-based methods have drawn increasing attention and achieved promising results in crop classification from multi-temporal images [3, 2, 10, 11, 1, 12]. While most deep learning-based crop mapping methods use a pixel-by-pixel approach, in this paper we design a Fully Convolutional Neural Network (FCN) for crop mapping. FCNs have been widely used in semantic segmentation, salient object detection, and brain tumor segmentation [13, 14, 15, 16]. They generate the segmentation map of the whole input image at once and are therefore computationally more efficient than classifying pixels one at a time. In addition, unlike pixel-by-pixel approaches, which take individual pixels as input, FCNs take into account the spatial relationships between adjacent pixels. To meet the needs of crop mapping, i.e., learning the sequential relationships in multi-temporal remote sensing data, we use 3D convolution operators as the building blocks of the FCN. These operators extract spatial and temporal features simultaneously, which should benefit crop mapping, since both the spatial and the temporal relationships in multi-temporal remote sensing data are important for accurate classification.
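To make the idea of a 3D convolution concrete, here is a minimal NumPy sketch (not the authors' Keras implementation) of a single-channel 3D cross-correlation, the operation that deep learning libraries call "convolution", with stride 1 and no padding. The function name `conv3d_valid`, the (time, height, width) axis order, and the 3x3x3 kernel are illustrative assumptions; the point is only that one kernel slides along the time axis and both spatial axes at once, so each output value mixes neighbouring pixels and neighbouring dates.

```python
import numpy as np


def conv3d_valid(volume: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 3D cross-correlation, stride 1, no padding ("valid").

    volume: (T, H, W) array, e.g. one band of an image time series.
    kernel: (kt, kh, kw) array that slides along time AND both spatial axes.
    """
    T, H, W = volume.shape
    kt, kh, kw = kernel.shape
    out = np.empty((T - kt + 1, H - kh + 1, W - kw + 1))
    for t in range(out.shape[0]):
        for y in range(out.shape[1]):
            for x in range(out.shape[2]):
                # One output value mixes kt dates and a kh x kw spatial window.
                out[t, y, x] = np.sum(volume[t:t + kt, y:y + kh, x:x + kw] * kernel)
    return out


# Toy input: 23 time steps of an 8x8 tile for a single band.
series = np.random.default_rng(0).random((23, 8, 8))
features = conv3d_valid(series, np.full((3, 3, 3), 1.0 / 27.0))  # 3x3x3 mean filter
print(features.shape)  # (21, 6, 6)
```

A framework layer such as Keras's `Conv3D` performs the same operation for many input channels (here, the six bands) and many learned filters; the explicit loops above are only for readability.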

To learn different crop types, most deep learning-based crop mapping methods use the cross-entropy loss [3, 11, 12, 1, 10, 17]. These methods have achieved promising results, but we hypothesize that there is still room for improvement with a loss function better suited to crop mapping. To guide the network toward more accurate crop-type predictions, we propose to learn the crop types by directly increasing the overlap between the prediction map and the ground reference mask, rather than using the cross-entropy loss, which only focuses on per-pixel prediction. To the best of our knowledge, this is the first attempt to use this loss function in crop mapping.

In summary, the main contribution of this paper is to learn to identify different crop types by increasing the overlap between the prediction map and the ground reference mask for each crop type, which may prompt a rethinking of the loss functions used to train deep neural networks for crop mapping. In conjunction with this loss function, we design a 3D FCN that simultaneously takes into account the spatial and temporal relationships in multi-temporal remote sensing data.

2 The proposed method

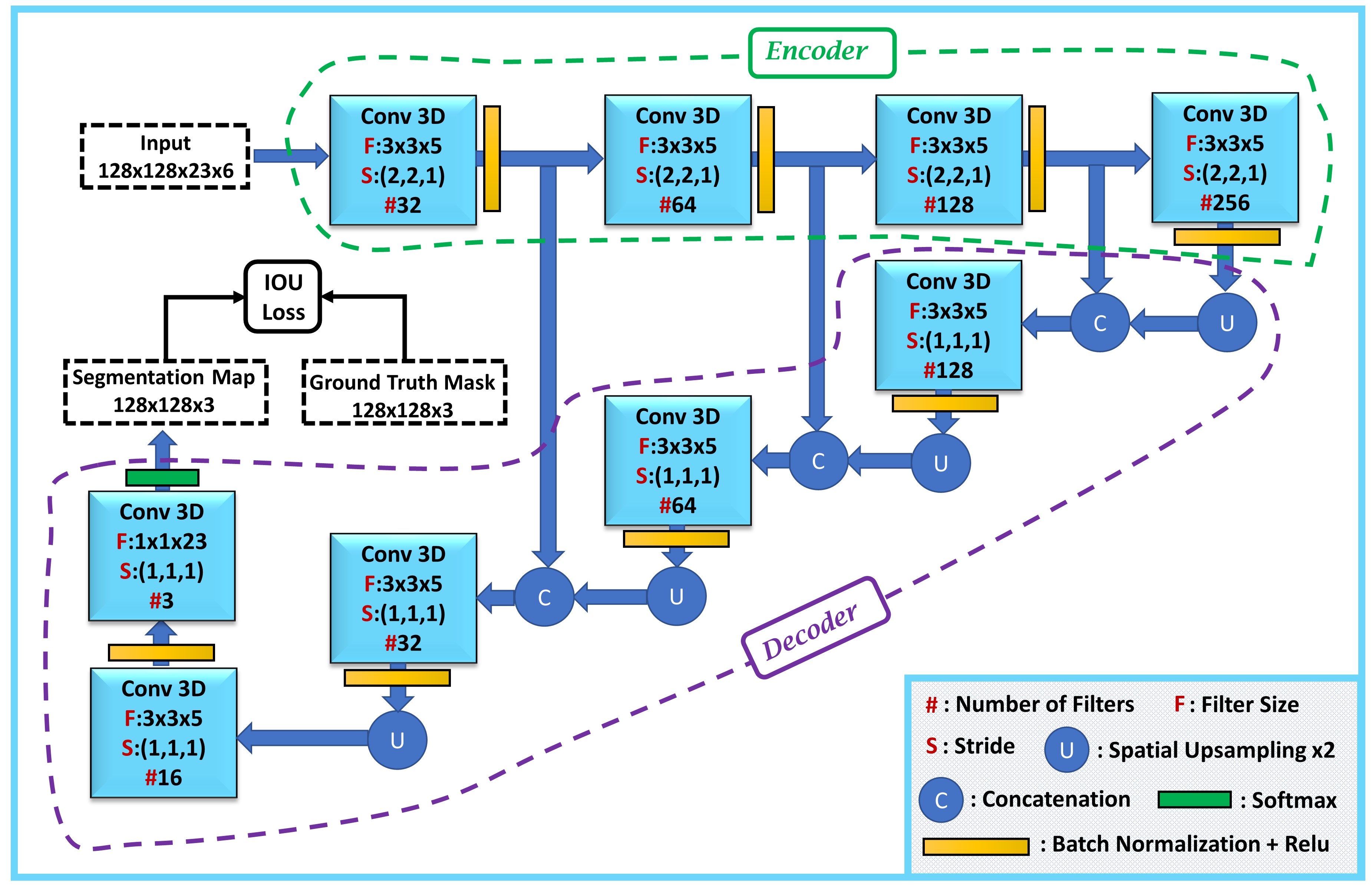

In this section, we describe the designed 3D FCN and the Intersection Over Union (IOU) loss function used to train it. The network (Fig. 1) has an encoder-decoder architecture and learns to generate a segmentation map of cropland from the input images. A key component of the proposed FCN is the 3D convolutional operator, which is better suited than a 2D convolutional operator to multi-temporal crop mapping because it extracts temporal features in addition to spatial features. In the 3D FCN architecture, the Encoder extracts features at four different levels, each carrying different information. At lower levels, the small receptive field lets the Encoder capture spatial and local information, whereas at higher levels the large receptive field lets it capture semantic and global information. To take advantage of both high-level global context and low-level details, features from different levels are merged in the Decoder through concatenation, as shown in Fig. 1. In conjunction with the 3D FCN, we propose to use the IOU loss to guide the FCN to output accurate segmentation maps.
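The multi-level merge described above can be illustrated with a small NumPy sketch of one skip connection: deeper, lower-resolution features are upsampled back to the resolution of a shallower Encoder level and concatenated with it along the channel axis. This is an illustration under stated assumptions, not the network in Fig. 1: the (time, height, width, channels) layout, downsampling only the spatial axes by a factor of 2 per level, nearest-neighbour upsampling (the actual Decoder may use learned upsampling), and the channel counts are all hypothetical.

```python
import numpy as np


def upsample_spatial(x: np.ndarray, factor: int = 2) -> np.ndarray:
    """Nearest-neighbour upsampling of the H and W axes of a (T, H, W, C) array."""
    return np.repeat(np.repeat(x, factor, axis=1), factor, axis=2)


def merge_skip(coarse: np.ndarray, fine: np.ndarray) -> np.ndarray:
    """Upsample deeper features and concatenate them with shallower Encoder features.

    coarse: (T, H/f, W/f, C1) features from a deeper, lower-resolution level.
    fine:   (T, H, W, C2) features from the matching Encoder level.
    Returns a (T, H, W, C1 + C2) array.
    """
    up = upsample_spatial(coarse, factor=fine.shape[1] // coarse.shape[1])
    if up.shape[:3] != fine.shape[:3]:
        raise ValueError(f"shape mismatch: {up.shape} vs {fine.shape}")
    return np.concatenate([up, fine], axis=-1)


# Toy shapes: 23 dates, a 16x16 tile, hypothetical channel counts.
fine = np.zeros((23, 16, 16, 8), dtype=np.float32)   # shallow level: local detail
coarse = np.ones((23, 8, 8, 16), dtype=np.float32)   # deeper level: global context
merged = merge_skip(coarse, fine)
print(merged.shape)  # (23, 16, 16, 24)
```

Concatenation (rather than addition) keeps both sets of features intact and lets the following convolutions learn how to combine global context with local detail.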

In contrast to most deep learning-based crop mapping methods, which use the cross-entropy loss to learn the crop types, we propose a loss function that directly increases the overlap between the prediction map and the ground reference mask. We argue that this is better suited to crop mapping than the cross-entropy loss, which only focuses on per-pixel prediction. Therefore, to increase the overlap between the prediction map and the ground reference, we maximize the Intersection Over Union (IOU) for each crop type by adopting the following loss function:

$$\mathcal{L}_{\mathrm{IOU}} = 1 - \frac{1}{C}\sum_{c=1}^{C} \mathrm{IOU}_c \tag{1}$$

where $C$ denotes the number of classes (three in our experiments: corn, soybean, and “other”), and $\mathrm{IOU}_c$, the IOU of class $c$, is defined as:

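$$\mathrm{IOU}_c = \frac{\sum_{i=1}^{M}\sum_{j=1}^{N} p_{ij}^{c}\, g_{ij}^{c}}{\sum_{i=1}^{M}\sum_{j=1}^{N}\left(p_{ij}^{c} + g_{ij}^{c} - p_{ij}^{c}\, g_{ij}^{c}\right)} \tag{2}$$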

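where $M$, $N$, $p$, and $g$ denote the total number of examples, the total number of pixels in each example, the prediction map, and the ground reference mask, respectively; $p_{ij}^{c}$ and $g_{ij}^{c}$ are their values for class $c$ at pixel $j$ of example $i$.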
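The following NumPy sketch computes this soft IOU loss, assuming the form $1 - \frac{1}{C}\sum_c \mathrm{IOU}_c$ with $\mathrm{IOU}_c = \sum p g / \sum (p + g - p g)$ summed over all examples and pixels. It is an illustration, not the authors' Keras code (which is in the repository linked in Section 3.2). The function name `iou_loss`, the (M, N, C) array layout, and the small `eps` added to the denominator are our assumptions; in a training framework the same expression would be written with differentiable tensor operations.

```python
import numpy as np


def iou_loss(p: np.ndarray, g: np.ndarray, eps: float = 1e-7) -> float:
    """Soft IOU loss: 1 minus the mean over classes of the per-class soft IOU.

    p: predicted class probabilities (e.g. softmax outputs), shape (M, N, C).
    g: one-hot ground reference mask, same shape.
    """
    # Sum over examples (axis 0) and pixels (axis 1), separately for each class.
    intersection = np.sum(p * g, axis=(0, 1))
    union = np.sum(p + g - p * g, axis=(0, 1))
    iou_per_class = intersection / (union + eps)  # eps avoids division by zero
    return float(1.0 - np.mean(iou_per_class))


labels = np.array([[0, 1, 2, 2]])            # M = 1 example, N = 4 pixels
g = np.eye(3)[labels]                        # one-hot mask, shape (1, 4, 3)
wrong = np.eye(3)[np.array([[1, 2, 0, 0]])]  # every pixel assigned a wrong class
uniform = np.full_like(g, 1.0 / 3.0)         # maximally uncertain prediction
print(round(iou_loss(g, g), 4))        # 0.0
print(round(iou_loss(wrong, g), 4))    # 1.0
print(round(iou_loss(uniform, g), 4))  # 0.8056
```

Because the sums run over all pixels before dividing, each class contributes one IOU term regardless of how many pixels it covers, whereas in the per-pixel cross-entropy each pixel contributes equally, so frequent classes dominate.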

As we show in the Experimental Results section, learning the crop types with this loss function improves performance compared with the cross-entropy loss.

3 Experiments

3.1 Study Area, Preprocessing, and Evaluation Metrics

Our experiments were conducted in the U.S. Corn Belt. We selected a 1700x1700-pixel area at the center of tile h18v07 of the Landsat Analysis Ready Data (ARD) grid system. We used Landsat ARD, which is publicly available on USGS’s EarthExplorer web portal, as the input to our method. At each observation date, this dataset contains six optical bands: red, green, blue, shortwave infrared 1, shortwave infrared 2, and near-infrared. We downloaded the Cropland Data Layer (CDL) from the CropScape web portal and used it as labels for training, validating, and testing the network. The selected region is mostly covered by corn and soybean. We therefore use three classes of interest, corn, soybean, and “other” (a merged class of all other land cover/land use types), and assign each pixel of the input image to one of them. We used Landsat multi-temporal data from April 22 to September 23, which covers the growing season of corn and soybean [3].
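The text does not show how the CDL raster is collapsed into the three classes, so the following is only a minimal sketch. It relies on the CDL's class codes for corn (1) and soybeans (5) and maps every other code to “other”; the label indices (0 = other, 1 = corn, 2 = soybean) and the function name `cdl_to_three_classes` are hypothetical.

```python
import numpy as np

# Cropland Data Layer (CDL) class codes for the two crops of interest.
CDL_CORN = 1
CDL_SOYBEAN = 5

# Hypothetical label indices for the three classes of interest.
OTHER, CORN, SOYBEAN = 0, 1, 2


def cdl_to_three_classes(cdl: np.ndarray) -> np.ndarray:
    """Collapse a CDL raster into corn / soybean / "other" labels."""
    labels = np.full(cdl.shape, OTHER, dtype=np.uint8)  # default: merged "other"
    labels[cdl == CDL_CORN] = CORN
    labels[cdl == CDL_SOYBEAN] = SOYBEAN
    return labels


cdl_tile = np.array([[1, 5, 121],
                     [5, 1, 111]])  # codes other than 1 and 5 become "other"
print(cdl_to_three_classes(cdl_tile).tolist())  # [[1, 2, 0], [2, 1, 0]]
```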

To preprocess the Landsat multi-temporal data for training and testing the model, we followed the same procedure as [3]. We first removed invalid pixels, i.e., pixels with fewer than seven valid observations after May 15 [3], where a valid observation is one that is not fill, shadow, cloud, or otherwise unclear. These pixels were excluded from the dataset and not used in training because they do not contain enough multi-temporal information. To fill the remaining gaps in the time series of the valid pixels, we applied linear interpolation, which yielded 23 time steps at seven-day intervals from April 22 to September 23. Finally, we normalized the data using the mean and standard deviation values.
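The preprocessing steps above can be sketched for a single pixel and band as follows. This is a hedged illustration rather than the code of [3]: the year 2018 is only an example, "after May 15" is read as strictly after, the Landsat quality flags are assumed to be already decoded into a boolean `valid_mask`, and the function names are hypothetical. The 23 target dates follow from the text: April 22 plus 22 steps of 7 days (154 days) lands on September 23.

```python
from datetime import date, timedelta

import numpy as np

SEASON_START = date(2018, 4, 22)  # example year; only month/day come from the text
# 23 target dates at 7-day intervals: April 22, April 29, ..., September 23.
TARGET_DATES = [SEASON_START + timedelta(days=7 * k) for k in range(23)]
CUTOFF = date(2018, 5, 15)


def is_valid_pixel(obs_dates, valid_mask, cutoff=CUTOFF, min_obs=7):
    """True if the pixel has at least `min_obs` valid observations after `cutoff`."""
    n_valid = sum(1 for d, ok in zip(obs_dates, valid_mask) if ok and d > cutoff)
    return n_valid >= min_obs


def interpolate_to_grid(obs_dates, values, valid_mask):
    """Linearly interpolate one band's valid observations onto TARGET_DATES.

    obs_dates must be sorted in time; invalid observations are dropped first.
    """
    x = np.array([d.toordinal() for d, ok in zip(obs_dates, valid_mask) if ok])
    y = np.array([v for v, ok in zip(values, valid_mask) if ok], dtype=float)
    grid = np.array([d.toordinal() for d in TARGET_DATES])
    # Outside the observed range np.interp repeats the first/last valid value.
    return np.interp(grid, x, y)


def standardize(x, mean, std):
    """Z-score normalization, e.g. with per-band statistics of the training data."""
    return (x - mean) / std


# Usage: an observation every 8 days, two of them flagged invalid (cloud, shadow, ...).
obs = [SEASON_START + timedelta(days=8 * k) for k in range(21)]
vals = [float(8 * k) for k in range(21)]  # a signal that grows linearly in time
mask = [k not in (3, 10) for k in range(21)]
print(is_valid_pixel(obs, mask))                           # True
print(interpolate_to_grid(obs, vals, mask)[:4].tolist())   # [0.0, 7.0, 14.0, 21.0]
```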

To evaluate the performance of the methods, we used Cohen’s kappa coefficient, macro-averaged producer’s accuracy, and macro-averaged user’s accuracy, following [3].
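For reference, the three metrics can all be computed from a confusion matrix. The NumPy sketch below assumes rows hold the reference (ground truth) class and columns the predicted class; the function names are illustrative. Producer's accuracy is the per-class recall and user's accuracy the per-class precision, each then averaged over classes.

```python
import numpy as np


def confusion_matrix(reference, predicted, n_classes):
    """Count matrix with rows = reference class and columns = predicted class."""
    idx = np.asarray(reference).ravel() * n_classes + np.asarray(predicted).ravel()
    return np.bincount(idx, minlength=n_classes * n_classes).reshape(n_classes, n_classes)


def kappa_pa_ua(cm):
    """Cohen's kappa, macro-averaged producer's accuracy and user's accuracy."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    p_observed = np.trace(cm) / total
    # Agreement expected by chance, from reference (row) and predicted (column) totals.
    p_expected = np.sum(cm.sum(axis=1) * cm.sum(axis=0)) / total**2
    kappa = (p_observed - p_expected) / (1.0 - p_expected)
    producers = np.diag(cm) / cm.sum(axis=1)  # per-class recall (1 - omission error)
    users = np.diag(cm) / cm.sum(axis=0)      # per-class precision (1 - commission error)
    return float(kappa), float(producers.mean()), float(users.mean())


cm = confusion_matrix([0, 0, 1, 1], [0, 1, 1, 1], n_classes=2)
print(cm.tolist())  # [[1, 1], [0, 2]]
print([round(v, 4) for v in kappa_pa_ua([[50, 10], [5, 35]])])  # [0.6939, 0.8542, 0.8434]
```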

3.2 Implementation details

We implemented our method in Keras [18] in the Microsoft Azure Machine Learning environment. The designed 3D FCN takes as input a 128x128x23x6 image and outputs a 128x128x3 segmentation map. In the input size, 128x128, 23, and 6 correspond to the spatial size, the number of time steps in the time series, and the number of optical bands, respectively. In the output size, 128x128 and 3 correspond to the spatial size of the segmentation map and the number of classes, respectively. We used stochastic gradient descent (SGD) with a momentum of 0.9 and a learning rate of 0.005. We split the training data into five folds; each fold was used once for validation while the remaining four were used for training, with a batch size of 2. This yielded five models whose softmax outputs were averaged at test time. The code is publicly available at: https://github.com/Sina-Mohammadi/3DFCNwithIOUlossforCropMapping
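The five-fold scheme and test-time averaging can be sketched in NumPy as below. This is our illustration, not the repository's code: we assume contiguous, unshuffled folds (the text only says the data were split into five sections), and the function names are hypothetical. In the real setting each softmax map would have shape 128x128x3.

```python
import numpy as np


def five_fold_splits(n_samples, n_folds=5):
    """Yield (train_idx, val_idx); each contiguous fold is used once for validation."""
    folds = np.array_split(np.arange(n_samples), n_folds)
    for k in range(n_folds):
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        yield train_idx, folds[k]


def ensemble_predict(softmax_maps):
    """Average per-model softmax maps of shape (H, W, C); return probs and class map."""
    mean_probs = np.mean(np.stack(softmax_maps, axis=0), axis=0)
    return mean_probs, np.argmax(mean_probs, axis=-1)


print([val.tolist() for _, val in five_fold_splits(10)])
# [[0, 1], [2, 3], [4, 5], [6, 7], [8, 9]]

# Two toy "models" predicting a 1x1 map with two classes.
probs, classes = ensemble_predict([np.array([[[0.5, 0.5]]]), np.array([[[0.25, 0.75]]])])
print(probs.tolist(), classes.tolist())  # [[[0.375, 0.625]]] [[1]]
```

In recent Keras versions, the optimizer described above would be created with `keras.optimizers.SGD(learning_rate=0.005, momentum=0.9)`.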

Table 1: Results on the 2018 test data (%). MA-PA: macro-averaged producer’s accuracy; MA-UA: macro-averaged user’s accuracy; CE: cross-entropy.

| Method | Kappa | MA-PA | MA-UA |
|---|---|---|---|
| Transformer | 88.6 | 90.4 | 92.1 |
| Random Forest | 88.7 | 91.4 | 91.4 |
| Multilayer Perceptron | 88.8 | 91.4 | 91.5 |
| DeepCropMapping (DCM) [3] | 89.3 | 91.7 | 91.9 |
| Ours (3D FCN + CE loss) | 91.3 | 93.7 | 93.6 |
| Ours (3D FCN + IOU loss) | 91.8 | 94.1 | 94.2 |

3.3 Experimental Results

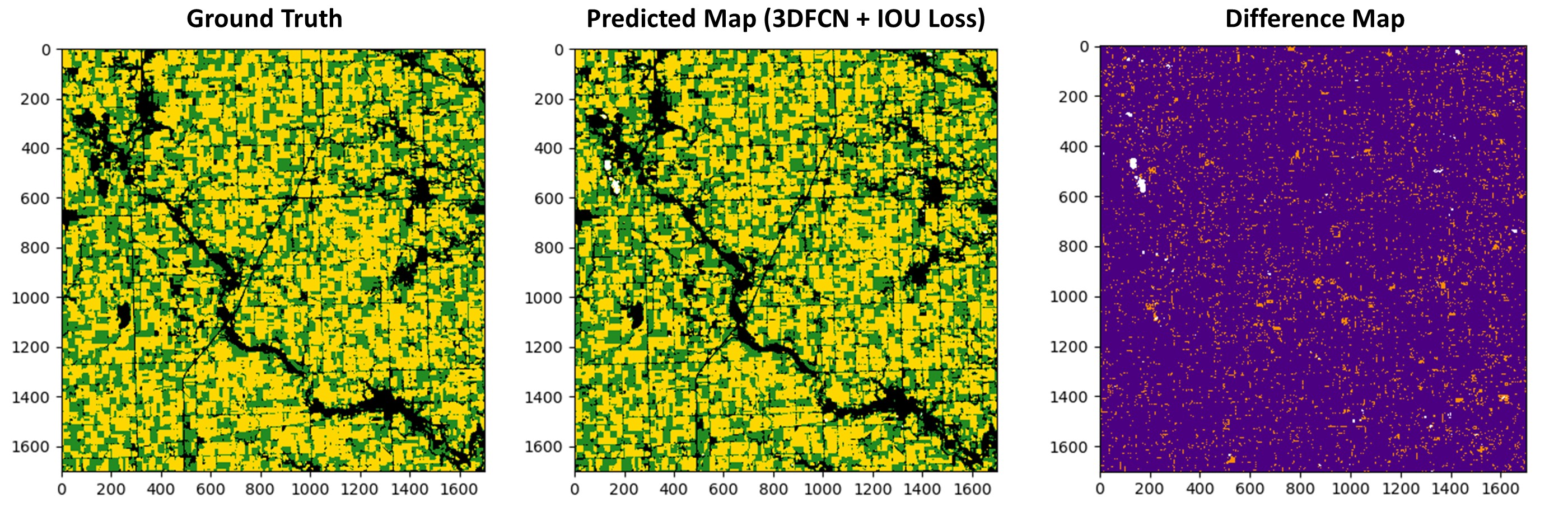

We used the data collected over the study area in 2015, 2016, and 2017 as our training set and tested the trained 3D FCNs on the data collected in 2018. We compared our method with the baseline classification models, namely Random Forest (RF), Multilayer Perceptron (MLP), and Transformer [19], using the same settings as in [3], as well as with the deep learning-based method DeepCropMapping (DCM) introduced in [3]. The results are shown in Table 1. Our method outperforms the other methods on all three evaluation metrics. Moreover, the adopted IOU loss function performs better than the cross-entropy loss, raising the Kappa coefficient from 91.3% to 91.8%. To visually assess the performance of the method, Fig. 2 shows the map predicted by the 3D FCN trained with the IOU loss, the ground reference, and the difference map.
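A difference map like the one in Fig. 2 simply marks where the predicted class disagrees with the reference. The NumPy sketch below is one possible encoding, not necessarily the one used for the figure: 1 for disagreement, 0 for agreement, and the hypothetical value -1 for pixels left out of the evaluation (for example, pixels excluded as invalid during preprocessing).

```python
import numpy as np


def difference_map(predicted, reference, valid=None):
    """Per-pixel disagreement map: 1 = wrong class, 0 = correct, -1 = not evaluated."""
    diff = (np.asarray(predicted) != np.asarray(reference)).astype(np.int8)
    if valid is not None:
        diff[~np.asarray(valid, dtype=bool)] = -1  # e.g. pixels excluded as invalid
    return diff


pred = np.array([[0, 1], [2, 2]])
ref = np.array([[0, 2], [2, 1]])
valid = np.array([[True, True], [False, True]])
diff = difference_map(pred, ref, valid)
print(diff.tolist())                          # [[0, 1], [-1, 1]]
print((diff == 1).sum() / (diff >= 0).sum())  # fraction of evaluated pixels in error
```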

4 Conclusion

In this study, a 3D FCN with an IOU loss function was successfully applied to map soybean and corn in the US Corn Belt. The experimental results show that the adopted IOU loss function, which maximizes the overlap between the prediction map and the ground reference mask for each crop type, improves performance compared with the widely used cross-entropy loss. Therefore, the IOU loss function is a better choice for learning individual crop types in this setting. In future work, we plan to apply the method to regions with more crop types to assess how much it can improve performance there.

5 Acknowledgement

This work was supported by Microsoft under the AI for Earth Microsoft Azure Compute Grant.

References

- [1] Shunping Ji, Chi Zhang, Anjian Xu, Yun Shi, and Yulin Duan, “3D convolutional neural networks for crop classification with multi-temporal remote sensing images,” Remote Sensing, vol. 10, no. 1, pp. 75, 2018.

- [2] Liheng Zhong, Lina Hu, and Hang Zhou, “Deep learning based multi-temporal crop classification,” Remote Sensing of Environment, vol. 221, pp. 430–443, 2019.

- [3] Jinfan Xu, Yue Zhu, Renhai Zhong, Zhixian Lin, Jialu Xu, Hao Jiang, Jingfeng Huang, Haifeng Li, and Tao Lin, “DeepCropMapping: A multi-temporal deep learning approach with improved spatial generalizability for dynamic corn and soybean mapping,” Remote Sensing of Environment, vol. 247, pp. 111946, 2020.

- [4] Mariana Belgiu, Wietske Bijker, Ovidiu Csillik, and Alfred Stein, “Phenology-based sample generation for supervised crop type classification,” International Journal of Applied Earth Observation and Geoinformation, vol. 95, pp. 102264, 2021.

- [5] Reza Khatami, Giorgos Mountrakis, and Stephen V Stehman, “A meta-analysis of remote sensing research on supervised pixel-based land-cover image classification processes: General guidelines for practitioners and future research,” Remote Sensing of Environment, vol. 177, pp. 89–100, 2016.

- [6] LeeAnn King, Bernard Adusei, Stephen V Stehman, Peter V Potapov, Xiao-Peng Song, Alexander Krylov, Carlos Di Bella, Thomas R Loveland, David M Johnson, and Matthew C Hansen, “A multi-resolution approach to national-scale cultivated area estimation of soybean,” Remote Sensing of Environment, vol. 195, pp. 13–29, 2017.

- [7] Fabian Löw, U Michel, Stefan Dech, and Christopher Conrad, “Impact of feature selection on the accuracy and spatial uncertainty of per-field crop classification using support vector machines,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 85, pp. 102–119, 2013.

- [8] Richard Massey, Temuulen T Sankey, Russell G Congalton, Kamini Yadav, Prasad S Thenkabail, Mutlu Ozdogan, and Andrew J Sánchez Meador, “MODIS phenology-derived, multi-year distribution of conterminous US crop types,” Remote Sensing of Environment, vol. 198, pp. 490–503, 2017.

- [9] Di Shi and Xiaojun Yang, “An assessment of algorithmic parameters affecting image classification accuracy by random forests,” Photogrammetric Engineering & Remote Sensing, vol. 82, no. 6, pp. 407–417, 2016.

- [10] Charlotte Pelletier, Geoffrey I Webb, and François Petitjean, “Temporal convolutional neural network for the classification of satellite image time series,” Remote Sensing, vol. 11, no. 5, pp. 523, 2019.

- [11] Carolyne Danilla, Claudio Persello, Valentyn Tolpekin, and John Ray Bergado, “Classification of multitemporal SAR images using convolutional neural networks and Markov random fields,” in 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS). IEEE, 2017, pp. 2231–2234.

- [12] Nataliia Kussul, Mykola Lavreniuk, Sergii Skakun, and Andrii Shelestov, “Deep learning classification of land cover and crop types using remote sensing data,” IEEE Geoscience and Remote Sensing Letters, vol. 14, no. 5, pp. 778–782, 2017.

- [13] Changqian Yu, Jingbo Wang, Chao Peng, Changxin Gao, Gang Yu, and Nong Sang, “Learning a discriminative feature network for semantic segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 1857–1866.

- [14] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L Yuille, “DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 40, no. 4, pp. 834–848, 2017.

- [15] Sina Mohammadi, Mehrdad Noori, Ali Bahri, Sina Ghofrani Majelan, and Mohammad Havaei, “CAGNet: Content-aware guidance for salient object detection,” Pattern Recognition, p. 107303, 2020.

- [16] Mehrdad Noori, Ali Bahri, and Karim Mohammadi, “Attention-guided version of 2D UNet for automatic brain tumor segmentation,” in 2019 9th International Conference on Computer and Knowledge Engineering (ICCKE). IEEE, 2019, pp. 269–275.

- [17] Marc Rußwurm and Marco Körner, “Multi-temporal land cover classification with sequential recurrent encoders,” ISPRS International Journal of Geo-Information, vol. 7, no. 4, pp. 129, 2018.

- [18] François Chollet et al., “Keras,” 2015.

- [19] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Lukasz Kaiser, and Illia Polosukhin, “Attention is all you need,” in Advances in Neural Information Processing Systems, 2017, pp. 5998–6008.