3D Map Reconstruction of an Orchard using an Angle-Aware Covering Control Strategy

Abstract

In recent years, unmanned aerial vehicles have become a reality in precision agriculture, mainly for monitoring, patrolling and remote sensing tasks, but also for 3D map reconstruction. In this paper, we present an approach in which a fleet of unmanned aerial vehicles performs remote sensing over an apple orchard to reconstruct a 3D map of the field. The covering control problem is formulated so as to combine the position of a monitoring target with the viewing angle. Moreover, the objective function of the controller is defined by an importance index, which is computed from a multi-spectral map of the field, obtained in a preliminary flight, using a semantic interpretation scheme based on a convolutional neural network. This objective function is then updated according to the history of the past coverage states, thus allowing the drones to take situation-adaptive actions. The effectiveness of the proposed covering control strategy has been validated through simulations in a Robot Operating System (ROS) environment.

keywords:

Precision farming, Agricultural robotics, Autonomous vehicles in agriculture, Covering control, Crop modeling.

1 Introduction

In modern agriculture, the role of Unmanned Aerial Vehicles (UAVs), also known as drones, is rapidly growing (Mammarella et al. (2021)). Thanks to their ability to perform in-field operations precisely and autonomously, this type of vehicle is leading to improvements within the Agriculture 4.0 framework (Radoglou-Grammatikis et al. (2020); Mammarella et al. (2020)). As detailed in Comba et al. (2019a), UAVs equipped with light and transportable sensors could extend, both in space and in time, the capability to monitor the crop status during the whole growing season. Within this context, UAVs are exploited, for example, to detect crop water stress (Guidoni et al. (2019)), soil erosion (Lima et al. (2021)), and fungal and pest infestations (Calou et al. (2020)). Recently, the potential of the informative content of 3D crop models for agricultural applications has been investigated in Comba et al. (2019b), as an alternative to the widely exploited 2D maps (Primicerio et al. (2015)). 3D map reconstruction through techniques like Structure-from-Motion (SfM) is a powerful tool, but it remains a challenging task because crop fields usually have poor and/or repetitive textures. Promising preliminary results have been achieved in vineyards where, thanks to a semantic interpretation approach first proposed by Comba et al. (2020b), several relevant crop parameters, such as the leaf area index (see Comba et al. (2020a)), have been remotely measured. To speed up and improve the data acquisition process, the use of fleets of drones is nowadays considered a promising solution.

Classical covering control techniques typically require drones to patrol over the environment by raising or lowering a density function (see e.g. Sugimoto et al. (2015)), according to the history of the past coverage states. On the other hand, to define and apply an effective covering control strategy to the selected field, it is crucial to identify which areas are of higher relevance, e.g. the crop canopy, and need to be observed from various viewing angles to improve the quality of the resulting map. This information can be summarized in a priority map, describing the distribution of the importance index function over the field. Approaches similar to the one presented in Comba et al. (2021) can be exploited to automatically retrieve the distribution of the 3D-point density function according to the field characteristics, starting from multi-spectral maps.

The main drawback of the available covering control strategies is the lack of situation-adaptive features: they do not properly adapt the control action to the relevance and observation rate of each region. This is because the importance index is defined as a monotonically decreasing function. Hence, if a drone approaches a well-observed region with a low importance index, the objective function becomes small and standard gradient-based coverage schemes tend to decelerate the drone instead of making it quickly leave that region.

To overcome the aforementioned limitations of current covering control strategies and to properly customize the density function distribution to the orchard of interest, in this paper we combine the semantic interpretation approach presented in Comba et al. (2021), used to derive a priority map, with the angle-aware covering control scheme described in Shimizu et al. (2021), with the objective of providing an effective strategy to reconstruct a 3D map from rich viewing angles. The proposed scheme has been validated in simulation on an apple orchard, first using a convolutional neural network and an averaging filter to identify the crop canopy and obtain the importance index field from a multi-spectral map, and then applying the distributed control strategy to a fleet of three UAVs. The preliminary results highlight the effectiveness of the covering controller and the adherence of the fleet behavior to the evolution of the density function, demonstrating the capability of achieving situation-adaptive and angle-aware monitoring.

The remainder of the paper is structured as follows. In Section 2, we present the selected covering control strategy to obtain a 3D map reconstruction using a fleet of drones. Then, in Section 3, we describe the algorithm used to extract the priority map from a given multi-spectral map. The preliminary results obtained by applying the angle-aware covering control to an apple orchard are presented in Section 4, while the main conclusions are drawn in Section 5.

2 Angle-aware covering control

2.1 Preliminary definitions

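Let us consider a generic nonlinear dynamical system

| $\dot{x} = f(x) + g(x)\,u$ | (1) |

with $x \in \mathbb{R}^n$, $u \in \mathcal{U} \subseteq \mathbb{R}^m$. The vector fields $f$ and $g$ are assumed to be Lipschitz continuous, and the system (1) has a unique solution on a given time interval. In this paper, we follow the approach in Shimizu et al. (2021), and we assume that the function $h$ is a zeroing control barrier function (ZCBF) for the set $\mathcal{C} = \{x : h(x) \geq 0\}$. It is shown in Ames et al. (2017) that any Lipschitz continuous controller satisfying the constraint

| $L_f h(x) + L_g h(x)\,u + \alpha(h(x)) \geq 0$ | (2) |

where $L_f h$ and $L_g h$ are the Lie derivatives of $h$ along the vector fields $f$ and $g$, and $\alpha$ is a class $\mathcal{K}$ function, renders the set $\mathcal{C}$ forward invariant. Then, the control problem aims at determining the control action closest to a given nominal input $u_{\mathrm{nom}}$ while satisfying the forward invariance of $\mathcal{C}$, i.e.

$$u^{*} = \arg\min_{u \in \mathcal{U}} \lVert u - u_{\mathrm{nom}} \rVert^{2} \quad \text{s.t.} \quad L_f h(x) + L_g h(x)\,u + \alpha(h(x)) \geq 0 .$$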
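As a minimal sketch of the barrier-function filtering idea described above, the following Python snippet computes the input closest to a nominal one under a single barrier constraint. Several things here are assumptions made for illustration and are not taken from the paper: the drone is modeled as a planar single integrator (so the drift term vanishes), the barrier `h` is a hypothetical "stay inside a disk" function, the class K function is linear with gain `gamma`, and input bounds and slack variables are ignored. Under these assumptions the quadratic program has one affine constraint and admits a closed-form solution, so no solver is needed.

```python
def cbf_filter(p, u_nom, center, radius, gamma=1.0):
    """Return the input closest to u_nom that satisfies a . u + b >= 0.

    Hypothetical barrier: h(p) = radius^2 - ||p - center||^2,
    which is non-negative inside the disk.
    """
    dx, dy = p[0] - center[0], p[1] - center[1]
    h = radius ** 2 - (dx * dx + dy * dy)
    a = (-2.0 * dx, -2.0 * dy)  # gradient of h; plays the role of L_g h for p_dot = u
    b = gamma * h               # linear class K term; L_f h = 0 for a single integrator
    residual = a[0] * u_nom[0] + a[1] * u_nom[1] + b
    norm_sq = a[0] ** 2 + a[1] ** 2
    if residual >= 0.0 or norm_sq == 0.0:
        return u_nom            # the nominal input already satisfies the constraint
    # Otherwise project u_nom onto the boundary of the half-space a . u + b >= 0.
    scale = -residual / norm_sq
    return (u_nom[0] + scale * a[0], u_nom[1] + scale * a[1])


# A drone near the edge of the unit disk, commanded to move outwards.
safe_u = cbf_filter(p=(0.9, 0.0), u_nom=(1.0, 0.0), center=(0.0, 0.0), radius=1.0)
```

In this toy case the outward velocity is reduced just enough for the constraint to hold with equality. With several constraints, input bounds or slack variables, the closed form no longer applies and a QP solver is required.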

2.2 Problem settings

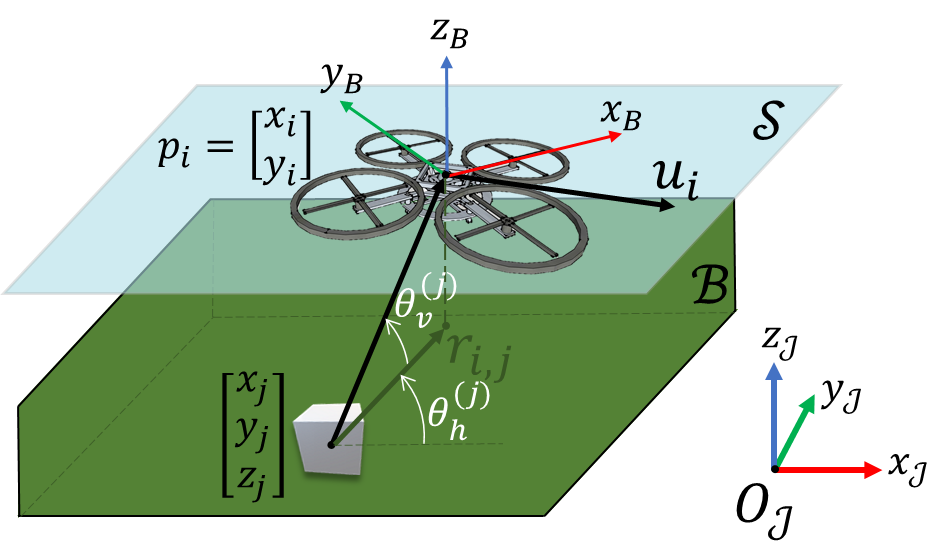

The selected scenario involves UAVs, locally controlled such that they are characterized by common and constant altitude and attitude. This implies that the location of each -th drone can be defined in a 2D space as with respect to an inertial frame as represented in Fig. 1. Hence, the dynamics of each drone is defined as , where is the control velocity input to be designed.

We assume that the target scenario (i.e. the field to be reconstructed using SfM techniques) is contained in an a-priori known, compact set , containing the ground surface. The objective is then to observe each point in the target field from rich viewing angles. This means that for the 3D map reconstruction we need to capture images of the target field from various and angles. In particular, is defined as the horizontal angle and is the vertical angle. Hence, the angle-aware coverage problem targets the coordinated region , which represents the target virtual field. Then, given the mapping , we have

| (3) |

The monitoring performance for the -th UAV with respect to the point is modeled by the distance between and the monitoring position . In particular, the performance function is defined as

| (4) |

where is a tuning parameter that depends on the sensor feature such that is small enough .

2.3 Objective function and controller design

The next step consists in discretizing the 5D field into a collection of 5D cells, i.e. polyhedra of the same volume , obtaining the new set . Let us assign to each -th cell an importance index , which should decay if is monitored by one of the UAVs and whose decay rate depends on . Then, we can define the following update rule for the importance index as

| (5) |

which renders each monotonically decreasing. The control objective is then to minimize an aggregate cost function, driving it towards zero so as to optimize the quality of the images collected by the drones. Moreover, to enhance the mission efficacy, a secondary objective is introduced to shape the UAVs' behavior according to :

- the drone shall escape from regions with small , which correspond to well-observed points ;

- the UAV shall slow down and remain close to regions with large .

This concept can be formalized as in Shimizu et al. (2021) introducing a partition of the sampling set as

| (6) |

and then describing the cost function rate as

| (7) |

with the metric defined as .

This switching mode is enforced into the QP-based controller by taking: i) , with a given , as a candidate ZCBF; ii) ; and iii) softening the constraints with the introduction of a slack variable . The final QP problem becomes

This QP-based controller is hard to implement and solve in real time, since the cardinality of tends to be very large. To address this issue, in Shimizu et al. (2021), the drone field was discretized into a collection of polygons , all of the same area , with corresponding centers of gravity . Then, according to the compression of onto by the mapping function , in Shimizu et al. (2021) the importance index was compressed onto as

| (8) |

3 Selected framework

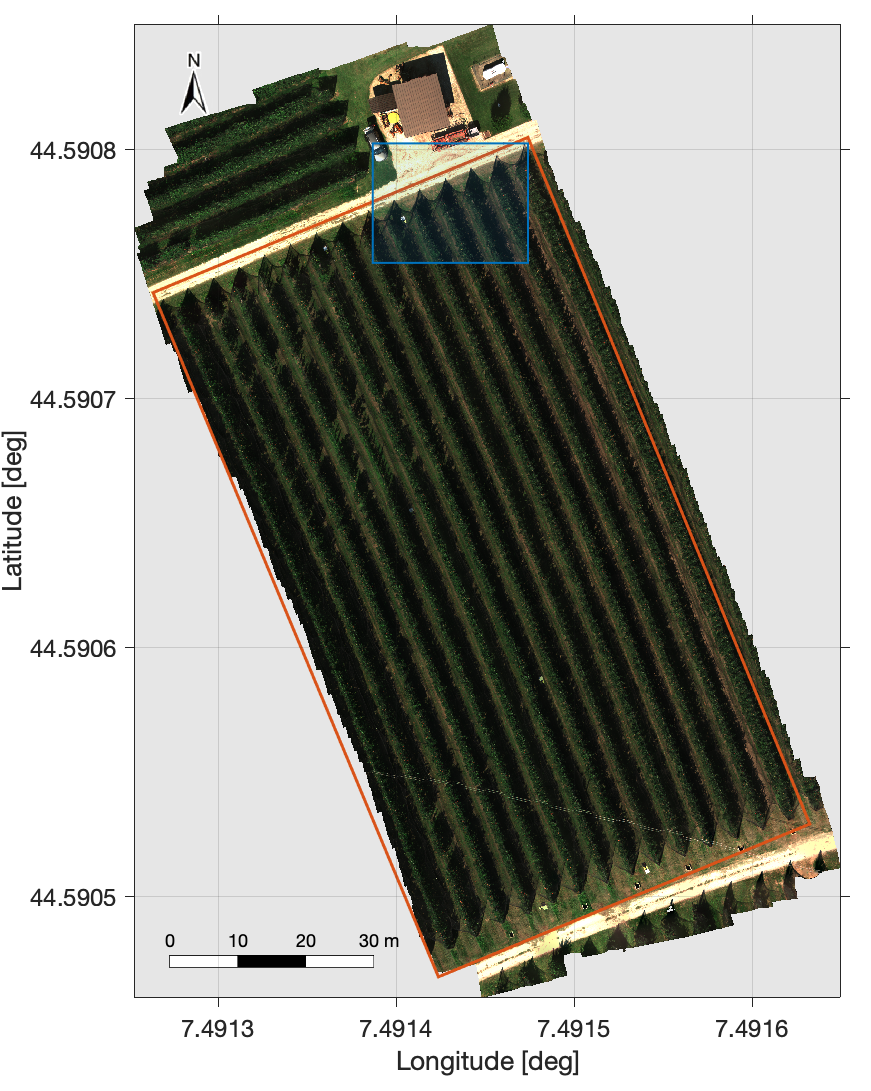

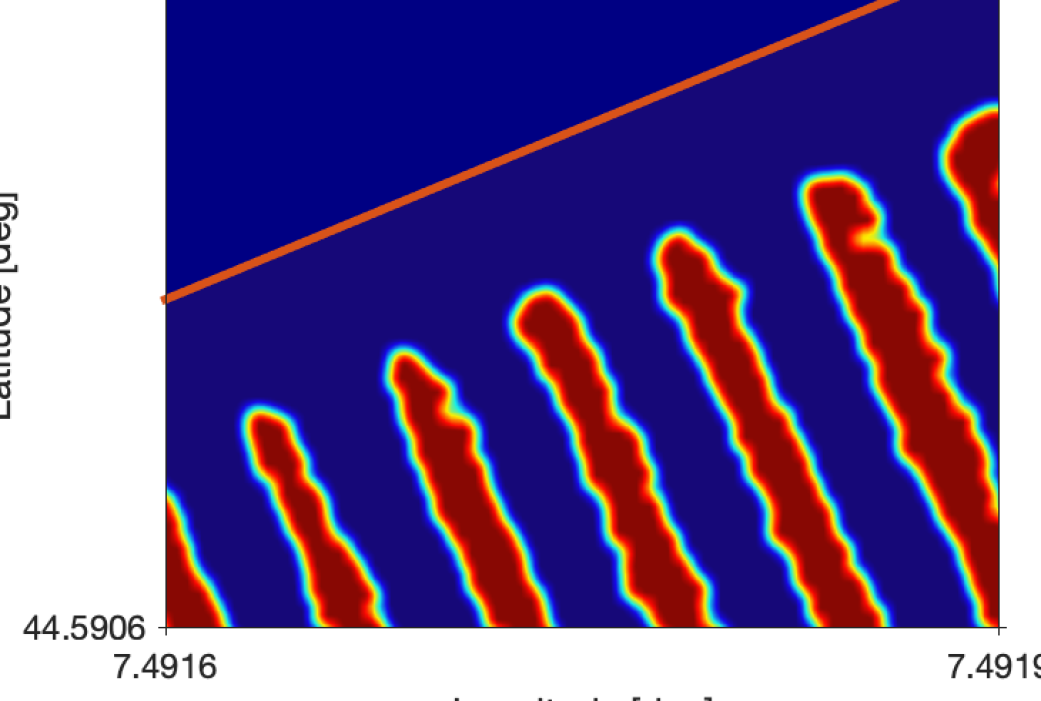

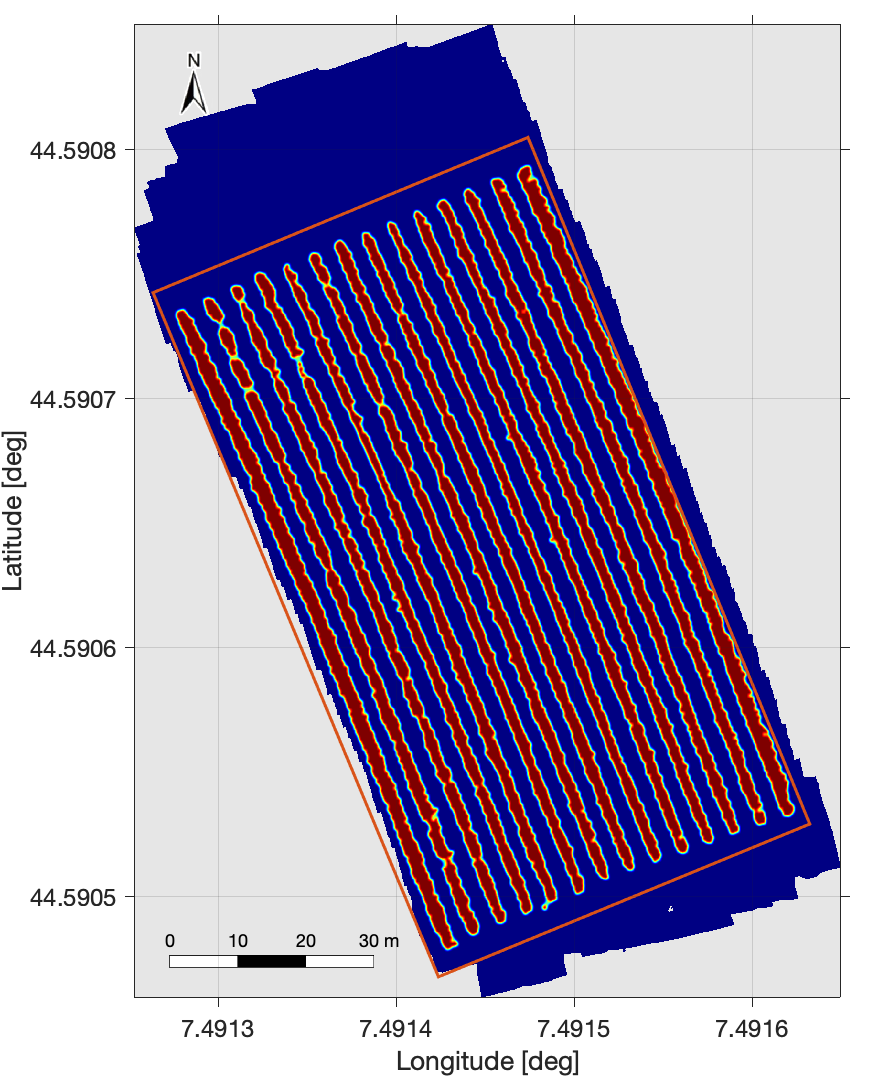

The selected case study considers an apple orchard of about 0.4 ha located in Chiamina, Piedmont, north-west Italy (44°35’26” N, 7°29’30” E) (see Fig. 2). Gala apples are cultivated in the orchard; the distance between trees is about 0.9 m, and the inter-row space, which is covered by dense grass, is 3.5 m wide.

To properly apply the proposed covering control strategy to the selected agricultural scenario, it is crucial to retrieve a specific importance index field , consistent with the features of the orchard and with the requirements of the main objective of 3D map reconstruction. The distribution of over the field is called the priority map, and it is used to select the regions of the orchard that are the target of the remote sensing task for 3D reconstruction. In particular, high-priority regions are those representing the crop canopy, while the rest of the map is considered less relevant.

In detail, the priority map of the orchard was derived by processing a multi-spectral orthomosaic of the site, obtained from an aerial imagery campaign performed on September 4th, 2020. In particular, a MAIA S2 multi-spectral camera (SAL Engineering and EOPTIS) was mounted on a DJI S900 hexacopter with a GNSS receiver (u-blox 6, Pixhawk avionics). The UgCS Pro mission planning software (SPH Engineering) was used to define a set of waypoints, in order to keep the flight height of the UAV close to 35 m with respect to the terrain and to guarantee a forward and side overlap between adjacent images greater than 80%. The resulting ground sample distance (GSD) and field of view (FOV), at 35 m of altitude, were equal to 1.75 cm/pixel and 22.4 × 16.8 m, respectively. Image correction and the calculation of true reflectance ratios were performed thanks to the incident light sensor (ILS), mounted on top of the drone, which measures the ambient light level for each shot in each band. In addition, geometric correction, co-registration (or stitching) and radiometric correction were performed with the MAIA image software (MultiCam Stitcher Pro). Finally, the multi-spectral map of the whole orchard was obtained by processing the image block with the Agisoft Metashape (2020) software. Using the position of six ground markers (determined in the field with a differential GNSS), the map was also georeferenced in the WGS84 EPSG:4326 reference system.
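The acquisition figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below derives the image size in pixels implied by the stated GSD and FOV, and the largest distance between consecutive image centers that still gives 80% overlap. The variable names are illustrative, and which side of the footprint lies along the flight direction is not stated in the text, so both sides are reported.

```python
gsd_m = 0.0175        # ground sample distance: 1.75 cm per pixel
fov_m = (22.4, 16.8)  # ground footprint of one image at 35 m, in metres
overlap = 0.80        # minimum forward and side overlap

# Image size implied by footprint / GSD.
pixels = tuple(round(side / gsd_m) for side in fov_m)

# Largest spacing between image centers that still yields the required overlap.
max_spacing_m = tuple(side * (1.0 - overlap) for side in fov_m)

print(pixels)         # (1280, 960)
print(max_spacing_m)  # roughly 4.48 m and 3.36 m
```

Since the overlap is required to be greater than 80%, the actual spacing between shots and between flight lines must be smaller than these bounds.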

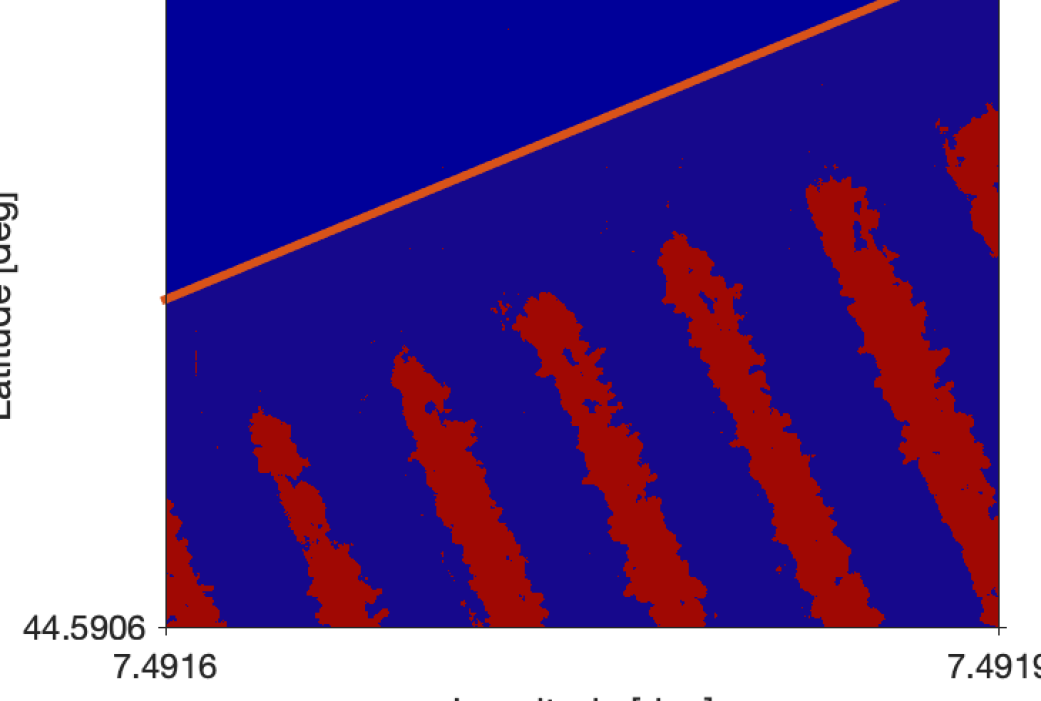

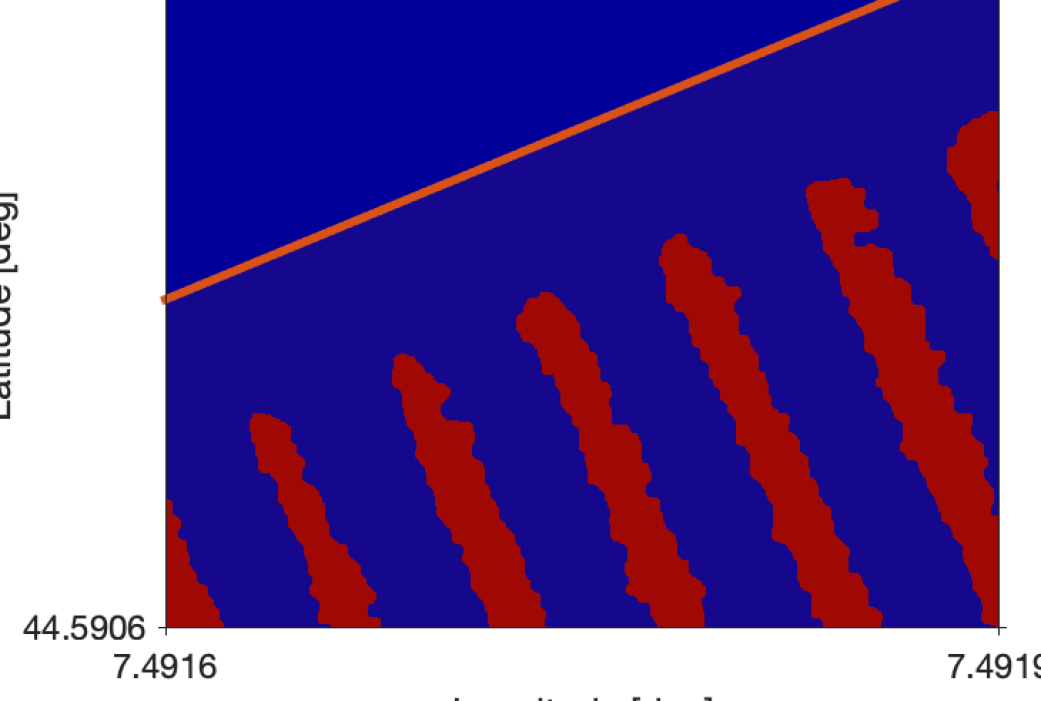

The procedure to retrieve the priority map from the multi-spectral orthomosaic is based on three main steps: 1) semantic interpretation by a convolutional neural network; 2) refinement by morphological operations; and, finally, 3) filtering and rescaling. Pixels representing the crop canopy within the multi-spectral imagery are detected by a properly trained U-Net convolutional neural network (Mathworks Matlab®, 2020) and reported in a categorical map (see Fig. 3(b)). To train, validate and test the U-Net, the orthomosaic was processed to select three different subsets of pixels, as described in Comba et al. (2021). The raw categorical map provided by the U-Net (Fig. 3(b)) was then processed with a sequence of morphological operators, in order to remove noise and small objects from the map and to refine the crop canopy boundaries (Fig. 3(c)). In particular, the operations performed in sequence are a closing and an opening, with a circular flat morphological structuring element of radius 5 and 10 pixels, respectively. Then, to eliminate sharp gradients between clusters, an averaging filter with a circular kernel was adopted (Fig. 3(d)). The final priority map is shown in Fig. 4 and is used as the initial distribution of the importance index for the covering control strategy, as described in the following section.
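The refinement and filtering steps can be illustrated on a toy binary map. The following is a plain-Python sketch, not the MATLAB pipeline used in the paper: the function names are hypothetical, a radius of 1 pixel is used for both operators instead of 5 and 10 so that the example fits a 9 × 9 grid, pixels outside the image are simply ignored at the borders, and the kernel radius of the averaging filter is an assumption because the text does not report it.

```python
def disk_offsets(radius):
    """Offsets (dy, dx) of a flat circular structuring element."""
    r = int(radius)
    return [(dy, dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1)
            if dy * dy + dx * dx <= radius * radius]


def neighbourhood(img, y, x, offsets):
    """Values of the in-bounds pixels covered by the element centred at (y, x)."""
    rows, cols = len(img), len(img[0])
    return [img[y + dy][x + dx] for dy, dx in offsets
            if 0 <= y + dy < rows and 0 <= x + dx < cols]


def apply_disk(img, radius, reduce_fn):
    """Apply reduce_fn to the disk neighbourhood of every pixel."""
    offsets = disk_offsets(radius)
    return [[reduce_fn(neighbourhood(img, y, x, offsets))
             for x in range(len(img[0]))] for y in range(len(img))]


def dilate(mask, radius):
    return apply_disk(mask, radius, max)


def erode(mask, radius):
    return apply_disk(mask, radius, min)


def closing(mask, radius):
    """Dilation followed by erosion: fills small holes."""
    return erode(dilate(mask, radius), radius)


def opening(mask, radius):
    """Erosion followed by dilation: removes small isolated objects."""
    return dilate(erode(mask, radius), radius)


def disk_average(img, radius):
    """Averaging filter with a circular kernel: smooths sharp edges."""
    return apply_disk(img, radius, lambda values: sum(values) / len(values))


# Toy categorical map: a 3 x 3 canopy block with a hole, plus an isolated speck.
raw = [[0] * 9 for _ in range(9)]
for y in range(2, 5):
    for x in range(2, 5):
        raw[y][x] = 1
raw[3][3] = 0  # misclassified pixel inside the canopy
raw[7][7] = 1  # isolated false positive

cleaned = opening(closing(raw, 1), 1)
priority = disk_average(cleaned, 1)
```

In this toy example the closing fills the hole inside the block and the opening removes the isolated pixel. Because the structuring element is comparable in size to the block, the opening also trims its corners. The averaging step then turns the binary mask into a map with smooth transitions at the canopy boundary.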

4 Numerical simulations

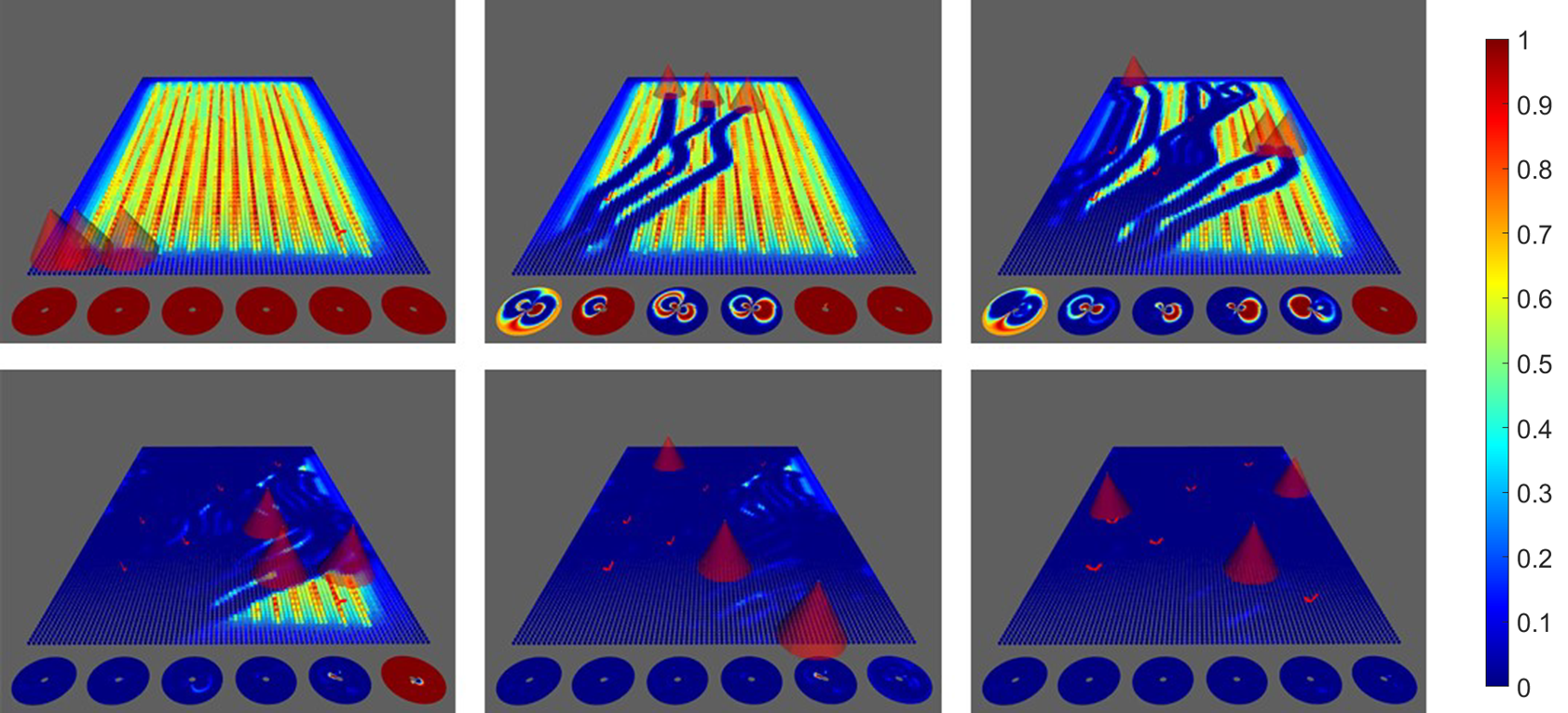

In this section, we describe the preliminary results obtained by applying the proposed approach to an apple orchard. In the selected framework, we cover the selected area with three drones, whose initial positions were selected as , , and . The local controller maintained a relative altitude of m with respect to the terrain, while the UAV velocity is constrained into the input space , limiting the drones' acceleration to less than 5 m/s². The other controller parameters were set as summarized in Table 1. In particular, allowed to drive at m, which is the orchard inter-row space, while set the drone velocity around m/s when monitoring high-importance regions.

| Parameter | Value | Parameter | Value | Parameter | Value |
|---|---|---|---|---|---|
|  | 0.5 |  | 5 |  | 5 |

From the priority map, retrieved as described in Section 3 and depicted in Fig. 4, we can observe how the importance index is distributed over the orchard. Indeed, the semantic interpretation algorithm automatically identified the areas of higher interest, i.e. the tree rows, and assigned them a higher value of (yellow areas), whereas much lower values of the importance index (blue areas) are found on the orchard boundaries and in the inter-row zones. This information is later fed to the Python simulator, which requires a further down-sampling of the priority map into a pixels representation (i.e. each pixel measures m) and then a compression of the map itself into a points field. In detail, each cell of the virtual field is a m × m × rad × rad polyhedron whose volume is m² rad², obtaining . The drone field was discretized into m² polygons .
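The down-sampling step mentioned above can be sketched as follows. The text does not state which resampling method was used, so block averaging is an assumption here, and the function name and the toy map are purely illustrative.

```python
def downsample(priority, factor):
    """Down-sample a 2D map by averaging non-overlapping factor x factor blocks.

    Rows and columns that do not fill a complete block are discarded.
    """
    rows = len(priority) // factor
    cols = len(priority[0]) // factor
    coarse = []
    for r in range(rows):
        coarse_row = []
        for c in range(cols):
            block = [priority[r * factor + i][c * factor + j]
                     for i in range(factor) for j in range(factor)]
            coarse_row.append(sum(block) / len(block))
        coarse.append(coarse_row)
    return coarse


# Toy 4 x 4 priority map reduced to 2 x 2.
fine = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.5],
    [0.0, 0.0, 0.5, 0.5],
]
coarse = downsample(fine, 2)
print(coarse)  # [[1.0, 0.0], [0.0, 0.5]]
```

Averaging preserves the mean importance of each block, which keeps narrow high-priority tree rows visible at the coarser resolution, although with a reduced peak value.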

The next step consisted in validating the proposed covering control algorithm in a ROS environment, where CVXOPT was used to solve the QP of the proposed controller with an update frequency of Hz. Fig. 5 reports some frames from the simulation interface, where it is possible to observe the evolution of the performance index according to the field coverage performed by the drones. In each frame, we can also observe the importance function with respect to the viewing angles for some specific check points. The drones took around minutes to cover the field and to collect the images from the selected viewing angles. In the second frame of the upper row of Fig. 5, we can observe the collision avoidance strategy, which makes the drones move away from each other. On the other hand, in the third frame we can observe how the two drones on the right follow the priority map to collect images along the rows. Then, in the lower row of frames, it is possible to observe how the drones progressively covered the entire area from rich viewing angles, achieving the primary objective.

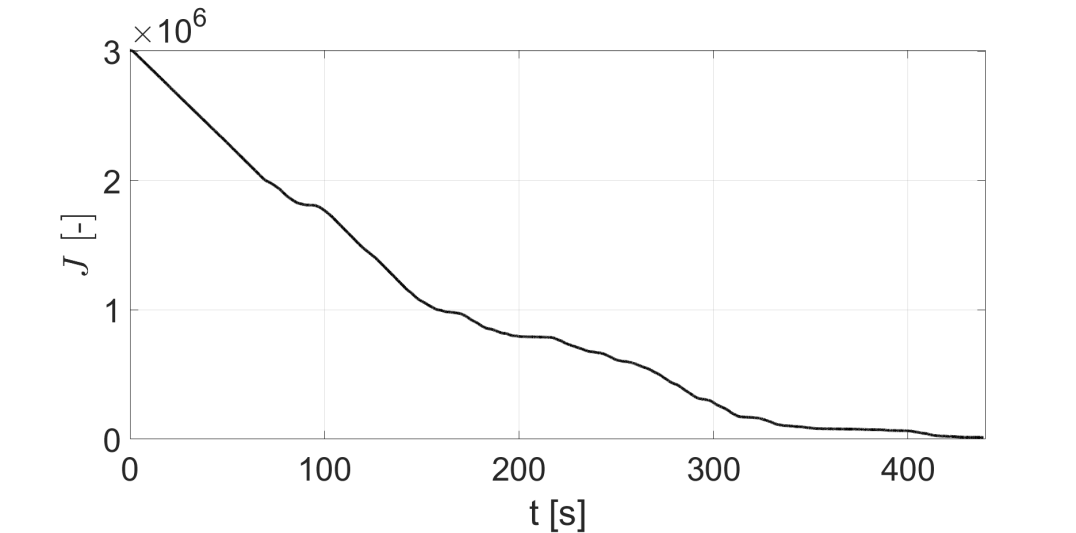

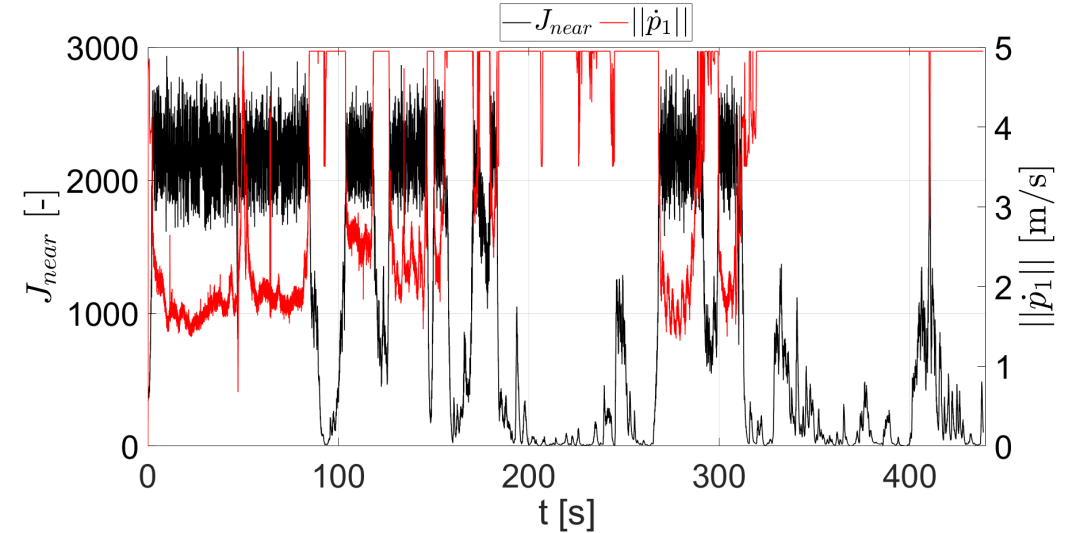

Fig. 6(a) shows the time series of the objective function . It is possible to observe that is monotonically decreasing and converges to . This implies that the UAVs would be able to collect ideal images when . Next, we needed to verify the secondary objective, related to the adherence of the drone behavior to the image sampling. In particular, we had to verify that the UAVs escape from regions characterized by small (low importance or well-observed in the past) and slow down when overflying high-relevance areas. From Fig. 6(b) we can observe that the UAVs' average velocity when covering a high-relevance area was close to m/s, whereas the airspeed was increased by the algorithm to 5 m/s when the parcel had low . This can be further verified by introducing an additional performance parameter defined as the sum of the importance index near the drone , namely . The results depicted in Fig. 6(b) show that the UAVs' speed tends to be small when the index is large (large ), and vice versa, thus highlighting the fulfillment of the secondary objective.

5 Conclusion

In this paper, we applied a customized covering control strategy that takes into account rich viewing angles to reconstruct 3D maps of an apple orchard. The proposed scheme is based on the preliminary extraction of a priority field from a multi-spectral map using a semantic interpretation approach. This made it possible to determine the areas of higher interest for the map reconstruction, which coincide with the crop rows. The effectiveness of the proposed scheme was finally demonstrated through numerical simulations.

References

- Ames et al. (2017) Ames, A.D., Xu, X., Grizzle, J.W., and Tabuada, P. (2017). Control barrier function based quadratic programs for safety critical systems. IEEE Transactions on Automatic Control, 62(8), 3861–3876.

- Calou et al. (2020) Calou, V.B.C., dos Santos Teixeira, A., Moreira, L.C.J., Lima, C.S., de Oliveira, J.B., and de Oliveira, M.R.R. (2020). The use of UAVs in monitoring yellow sigatoka in banana. Biosystems Engineering, 193, 115–125.

- Comba et al. (2019a) Comba, L., Biglia, A., Ricauda Aimonino, D., Barge, P., Tortia, C., and Gay, P. (2019a). Neural network clustering for crops thermal mapping. In VI International Symposium on Applications of Modelling as an Innovative Technology in the Horticultural Supply Chain Model-IT 1311, 513–520.

- Comba et al. (2021) Comba, L., Biglia, A., Ricauda Aimonino, D., Barge, P., Tortia, C., and Gay, P. (2021). Semantic interpretation of multispectral maps for precision agriculture: A machine learning approach. In Precision agriculture’21, 920–925. Wageningen Academic Publishers.

- Comba et al. (2019b) Comba, L., Biglia, A., Ricauda Aimonino, D., Barge, P., Tortia, C., and Gay, P. (2019b). 2D and 3D data fusion for crop monitoring in precision agriculture. In 2019 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), 62–67. IEEE.

- Comba et al. (2020a) Comba, L., Biglia, A., Ricauda Aimonino, D., Tortia, C., Mania, E., Guidoni, S., and Gay, P. (2020a). Leaf Area Index evaluation in vineyards using 3D point clouds from UAV imagery. Precision Agriculture, 21(4), 881–896.

- Comba et al. (2020b) Comba, L., Zaman, S., Biglia, A., Ricauda Aimonino, D., Dabbene, F., and Gay, P. (2020b). Semantic interpretation and complexity reduction of 3D point clouds of vineyards. Biosystems Engineering, 197, 216–230.

- Guidoni et al. (2019) Guidoni, S., Drory, E., Comba, L., Biglia, A., Ricauda Aimonino, D., and Gay, P. (2019). A method for crop water status evaluation by thermal imagery for precision viticulture: Preliminary results. In International Symposium on Precision Management of Orchards and Vineyards 1314, 83–90.

- Lima et al. (2021) Lima, F., Blanco-Sepúlveda, R., Gómez-Moreno, M.L., Dorado, J., and Peña, J.M. (2021). Mapping tillage direction and contour farming by object-based analysis of UAV images. Computers and Electronics in Agriculture, 187, 106281.

- Mammarella et al. (2020) Mammarella, M., Comba, L., Biglia, A., Dabbene, F., and Gay, P. (2020). Cooperative agricultural operations of aerial and ground unmanned vehicles. In 2020 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), 224–229. IEEE.

- Mammarella et al. (2021) Mammarella, M., Comba, L., Biglia, A., Dabbene, F., and Gay, P. (2021). Cooperation of unmanned systems for agricultural applications: A theoretical framework. Biosystems Engineering.

- Primicerio et al. (2015) Primicerio, J., Gay, P., Ricauda Aimonino, D., Comba, L., Matese, A., and Di Gennaro, S. (2015). NDVI-based vigour maps production using automatic detection of vine rows in ultra-high resolution aerial images. In Precision agriculture’15, 693–712. Wageningen Academic Publishers.

- Radoglou-Grammatikis et al. (2020) Radoglou-Grammatikis, P., Sarigiannidis, P., Lagkas, T., and Moscholios, I. (2020). A compilation of UAV applications for precision agriculture. Computer Networks, 172, 107148.

- Shimizu et al. (2021) Shimizu, T., Yamashita, S., Hatanaka, T., Uto, K., Mammarella, M., and Dabbene, F. (2021). Angle-aware coverage control for 3-D map reconstruction with drone networks. IEEE Control Systems Letters.

- Sugimoto et al. (2015) Sugimoto, K., Hatanaka, T., Fujita, M., and Huebel, N. (2015). Experimental study on persistent coverage control with information decay. In 2015 54th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), 164–169. IEEE.