A pJ/cycle Differential Ring Oscillator in nm CMOS for Robust Neurocomputing

Abstract

This paper presents a low-area, low-power CMOS differential current controlled oscillator (CCO) for neuromorphic applications. The oscillation frequency is improved over the conventional design by reducing the number of MOS transistors, thus lowering the load capacitance of each stage. The analysis shows that, for the same power consumption, the oscillation frequency can be increased by about compared with the conventional design without degrading the phase noise. Alternatively, the power consumption can be reduced at the same frequency. The prototype structures are fabricated in a standard nm CMOS technology, and measurements demonstrate that the proposed CCO operates from a V supply with maximum frequencies of MHz and energy/cycle ranging from pJ over the tuning range. Further, system-level simulations show that the nonlinearity of the current-to-frequency conversion by the CCO does not affect its use as a neuron in a Deep Neural Network if it is accounted for during training.

Index Terms:

Ring Oscillator, Low-power, Neuromorphic, Neurocomputing.

I Introduction

Deep Neural Networks (DNNs) have recently gained popularity in many applications, such as face recognition [1], speech recognition [2] and natural language processing [3], owing to their improved performance compared with other machine learning algorithms. Deploying these networks at the edge in the Internet of Things (IoT) is important for scalability and for fast response, since it reduces data transmission to the cloud [4, 5]. However, because of battery-life and processing constraints, low-power, low-area implementations of DNNs are imperative.

Neuromorphic implementations that use analog or physical computing are well suited to this context [6] and are known to be more energy efficient than digital baselines when the required precision is low [7]. Neuro-inspired spiking neural networks (SNNs) have also gained popularity because sparse activation promises lower energy dissipation [8]. In recent years, time-based computational circuits for DNNs/SNNs have gained popularity due to the reduced power supply voltage in scaled CMOS [9, 10]. An important building block in these designs is a digital delay cell. For example, it is used to build an oscillator that converts an analog current to a digital output (a rate-based neuron) in [11, 12, 13], or an integrate-and-fire neuron [9] with bio-plausible refractory period and spike-frequency adaptation features. The major requirements for an oscillator to function as a neuron are:

• Low area, since a large number of them are needed per chip.
• Low energy/cycle, to reduce operational energy.
• Robustness against power supply noise and other interference, since these circuits typically co-exist with a large amount of digital circuitry.

Interestingly, for NN implementations, linearity of the tuning curve of the oscillator-based neuron is not important since learning can be used to correct for it.

Given these requirements, a CMOS ring oscillator (RO) based structure is a good choice due to its low area and power requirements. To address the third requirement, robustness, differential ring oscillators are the preferred topology [14]. In this paper, we propose a new differential delay cell that uses fewer transistors than the conventional one, thus reducing energy/cycle through lower capacitance. Note that this differs from [15], where the authors reduce the number of stages. Given the popularity of rate-based SNNs converted from pre-trained artificial neural networks (ANNs) [16, 17], we show results from a chip fabricated in nm CMOS in which the proposed delay cell is used in a differential ring oscillator to convert input analog currents to a frequency, or rate. Nevertheless, the proposed delay cell can also be used in conventional mixed-signal applications as well as in differential delay-line-based neural networks [10]. Further, neuromorphic computation using coupled oscillators is also gaining popularity [18, 19], and the proposed oscillators can be used for such computations as well.

This paper is organized as follows. Section II gives a review of conventional ring oscillator based CCO (RO-CCO) structures. The proposed structure and analysis are introduced in section III. Section IV presents measurement results and shows a performance comparison. Section V discusses the neural network simulation with the proposed CCO neuron model, its potential for usage in spiking neural networks as well as the startup circuits. Finally, the conclusions are provided in section VI.

II Conventional RO-CCO Review

An RO consists of gain (delay) stages connected in a feedback loop and can be built in any standard CMOS process.

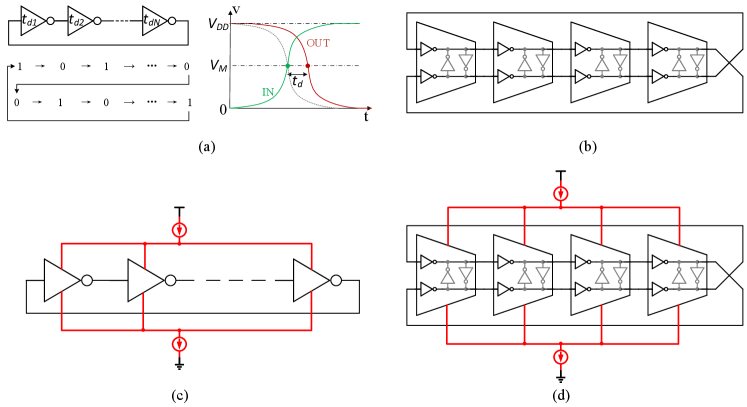

A single-ended RO consists of an odd number of inverter stages in a feedback loop (Fig. 1(a)) and provides a rail-to-rail output. The most common way to derive the oscillation frequency of an $N$-stage ring oscillator ($N$ odd) is to assume that each stage provides a delay $t_d$. For sustained oscillation, the signal must propagate through each of the delay stages twice to complete one period, as shown in Fig. 1(a). Therefore, the period is $2N t_d$, resulting in the oscillation frequency

$$f_{osc} = \frac{1}{2 N t_d} = \frac{I_{tail}}{2 N C_L V_{swing}} \qquad (1)$$

where $I_{tail}$ is the tail current, $C_L$ is the total load capacitance of a single stage and $V_{swing}$ is the amplitude of the voltage swing. The delay of each stage is defined as the interval between the VDD/2 crossings of the input and output signals of that stage, as illustrated in Fig. 1(a). The propagation delay is the most important parameter of this type of oscillator because it directly determines the oscillation frequency and the required number of stages, and hence the number of output phases and the power consumption. Moreover, the stability of the delay is reflected in the jitter in the time domain and in the phase noise in the frequency domain.
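As a quick numerical illustration of (1), the sketch below computes the ring frequency and the resulting energy per cycle. It assumes the per-stage delay is $t_d = C_L V_{swing}/I_{tail}$ and that the supply current equals the tail current; the component values are hypothetical and are not taken from the fabricated chip.

```python
def stage_delay(i_tail, c_load, v_swing):
    """Per-stage delay: time for I_tail to slew C_L through V_swing (assumed model)."""
    return c_load * v_swing / i_tail


def ro_frequency(i_tail, n_stages, c_load, v_swing):
    """Eq. (1): f_osc = 1 / (2 N t_d) = I_tail / (2 N C_L V_swing)."""
    return 1.0 / (2 * n_stages * stage_delay(i_tail, c_load, v_swing))


def energy_per_cycle(v_dd, i_tail, n_stages, c_load, v_swing):
    """Supply energy per oscillation period, assuming the supply current equals I_tail."""
    return v_dd * i_tail / ro_frequency(i_tail, n_stages, c_load, v_swing)


if __name__ == "__main__":
    # Hypothetical values: 100 nA tail current, 4 stages, 10 fF per stage, 0.5 V swing, 1.2 V supply.
    f = ro_frequency(100e-9, 4, 10e-15, 0.5)
    e = energy_per_cycle(1.2, 100e-9, 4, 10e-15, 0.5)
    print(f"f_osc = {f / 1e6:.2f} MHz, energy/cycle = {e * 1e15:.1f} fJ")
```

In this simple model the energy per cycle reduces to $2 N C_L V_{swing} V_{DD}$ and does not depend on the tail current, so lowering $C_L$ per stage translates directly into lower energy/cycle.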

A pseudo differential RO removes the odd-stage restriction of the single-ended RO and is therefore more flexible, since both odd and even numbers of stages are allowed. A -stage pseudo differential RO [14] is shown in Fig. 1(b). Each delay stage is composed of four inverters: two form the pseudo differential path, while the other two (grey) inverters form a cross-coupled regenerative pair that generates a hysteresis phase shift. A differential RO offers more output phases than a single-ended RO with the same number of stages. This is an important criterion in time-domain processing circuits, especially where multiple phases are needed for more accurate operation.

Although single-ended configurations consume less area, they are more susceptible to power supply variations and common-mode noise. In modern machine learning accelerators, where analog computing neurons co-exist with digital memory and processing, good noise rejection in the analog blocks is extremely important. A pseudo differential RO suppresses common-mode noise and has a better common-mode rejection ratio (CMRR) [14].

To further reduce the sensitivity to power supply variations and noise disturbances, current-starved structures, as shown in Fig. 1(c), have been introduced [20, 21]. Since the current source and current sink transistors provide negative feedback in the bias, the current variation is much smaller than in non-current-starved structures. Another important advantage is that current-starved structures set the output frequency through the bias current, hence the name current controlled oscillator (CCO).

Finally, by combining current starving with a differential architecture, the current-starved fully differential RO (Fig. 1(d)) achieves better power supply rejection ratio (PSRR) and CMRR than the other RO types (shown later in Fig. 5). Power supply noise and substrate noise are isolated by the current source and current sink, resulting in better PSRR, while common-mode disturbances such as temperature variations are suppressed by the differential structure.

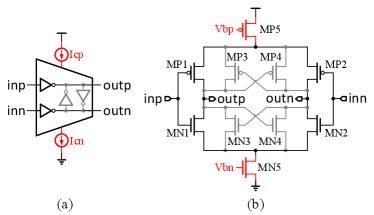

Although in practice the current-starving transistors are shared by all stages, the operation of a unit delay cell is easier to understand by assigning a current source and a current sink to it. Fig. 2 shows a single delay stage of the current-starved fully differential RO. MN1, MP1 and MN2, MP2 form the differential input and output pair. MN3, MP3 and MN4, MP4 form the positive feedback latch that provides the hysteretic phase shift. The controllable current source and current sink, MP5 and MN5, turn the pseudo differential stage into a fully differential one. The core stage is thus composed of a differential input-output path and a hysteresis delay cell (HDC), i.e., a positive-feedback latch of two cross-coupled inverters.

III Proposed Structure and Theoretical Analysis

As mentioned in Section I, two important metrics for oscillators used in neurocomputing are (a) high resistance to common-mode noise and (b) low energy/cycle. The former is addressed by the current-starved fully differential architecture described in the previous section; we address the latter in this section. However, lowering energy is often associated with an increase in thermal-noise-induced jitter. We propose a new oscillator structure that improves on the conventional structure in energy/cycle without adversely affecting jitter performance.

III-A Proposed Oscillator

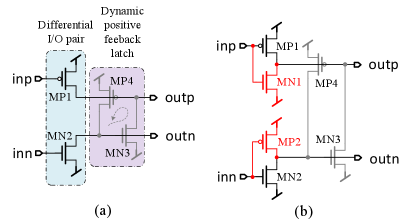

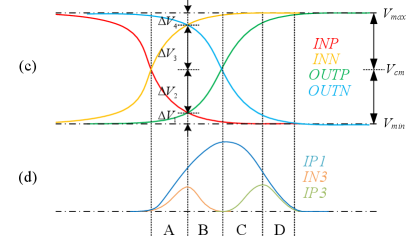

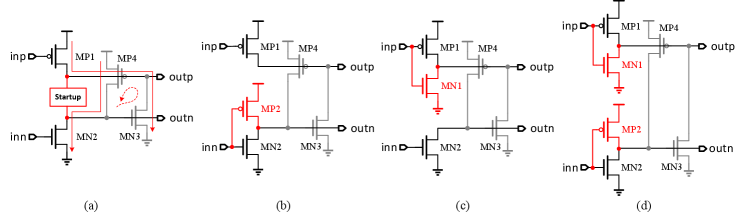

From the analysis of conventional ring oscillators in Section II and the simulations shown later in this section (Fig. 5), the current-starved fully differential structure has both better PSRR and CMRR. To provide differential inputs and outputs and a positive feedback hysteresis, each stage of the conventional differential RO uses four inverters (eight transistors, MN1-MN4 and MP1-MP4) in addition to the current-starving transistors (Fig. 2). Our novelty stems from the observation that only four transistors appear necessary to sustain oscillation, as shown in Fig. 3(a). MP1 and MN2 are the pull-up and pull-down differential I/O pair, while MN3 and MP4 serve as their respective loads. At the same time, MN3 and MP4 form the simplest dynamic positive feedback latch. Another way to interpret the proposed structure is as follows: MP1 and MN3 form a dynamic inverter since and are almost the same (apart from a certain phase shift); similarly, MP4 and MN2 form another dynamic inverter since and are almost the same (apart from a certain phase shift). Together, the two dynamic inverters form the regenerative cross-coupled pair that generates positive feedback and provides extra hysteresis phase shift. Every transistor acts as an active device for itself and as a load device for another transistor. This push-pull operation and transistor reuse make the cell compact and efficient.

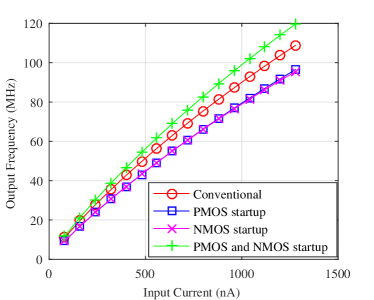

However, one problem is that and are strictly inverse only when oscillation is sustained, while and are inverse with a phase delay of . If, for some reason, the initial conditions of and (Fig. 3(a)) are the same, say V, then is guaranteed to be pulled up to , but the condition of stays at its initial value since both MN2 and MP4 are OFF. If the initial condition of was , then both outputs of this stage are at a high voltage, and the oscillator gets locked in this state and cannot start oscillating. A start-up circuit is therefore necessary to guarantee robust oscillation. Adding a single NMOS, a single PMOS, or both (Fig. 3(b)) eliminates this possible stable state and acts as a start-up circuit. In the above example, when both and are at V, the added MP2 forces to , driving the outputs of this stage to opposite polarities and breaking the locked state earlier. From SPICE simulations, the structure with both NMOS and PMOS start-up devices, shown in Fig. 3(b), gives the best frequency performance (see Section V) and is adopted in the rest of the paper.

III-B Frequency and Energy Dissipation

Reducing the number of transistors should increase the oscillation frequency per unit current. However, the charging currents also change, so the combined effect is not obvious. Intuitively, the proposed delay stage draws less charging and discharging current because MP3 and MN4 are removed, which cuts power consumption. To compare the charging/discharging currents and their effect on the output frequency precisely, we analyze the load capacitance and charging current of a single stage in more detail and derive a theoretical prediction.

First, the load capacitor of each stage for the conventional structure (Fig. 2) is given by:

| (2) |

In comparison, for the proposed structure (Fig. 3(b)), it reduces to

| (3) |

Thus, . It is therefore reasonable to expect the load capacitance to decrease by , since the number of transistors per stage decreases by the same amount.

Next, we analyze the charging process of node in Fig. 3(b); the discharging process of node is analogous. Fig. 3(c) illustrates the charging period, which is divided into four phases (A, B, C and D) according to the operating states of the transistors. and bound the oscillation swing, which is smaller than rail-to-rail because of the current-starved structure. In our design, mV, mV, and mV. For simplicity, we assume that , mV and mV such that mV.

Table I: Operating regions in charging phases A-D (0 = cut-off, 1 = linear, 2 = saturation)

| Phase | MP1 | MP3 | MN3 |
|---|---|---|---|
| A | 2 | 0 | 1 |
| B | 2 | 0 | |
| C | | 2 | 0 |
| D | 1 | 1 | 0 |

| (4) |

| (5) |

where and represent the charging currents of the conventional and proposed stages, respectively, in the four phases, which are given by

| (6) |

| (7) |

The expressions and approximate average values of , and in all phases are listed in Table I, where the charging process is divided into the four phases A, B, C and D described earlier. The operating regions of the MOSFETs are denoted 0, 1 and 2 for cut-off, linear and saturation, respectively.

The drain current of short-channel MOSFETs is assumed to follow the widely used alpha-power law [22], [23], where the carrier velocity saturation coefficient lies between to for sub-micron CMOS technology. Combining the above equations with the estimated values in Table I, the propagation delays of the proposed and conventional ROs are related by . Thus, the frequencies are related by:

| (8) |

This means that the frequency of the proposed RO increases compared with the conventional structure for the same input current. From (1), the average currents are related by:

| (9) |

This implies that the average current of the proposed RO decreases by compared with the conventional structure.
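For reference, the alpha-power law used above can be evaluated as in the sketch below. It covers only the cut-off and saturation regions (the linear-region expression is omitted), and the gain factor `k`, threshold `v_t` and exponent `alpha` are hypothetical placeholders rather than the values used for Table I.

```python
def alpha_power_id_sat(v_gs, v_t, k, alpha):
    """Saturation drain current in the alpha-power-law model: I_D = k * (V_GS - V_T)**alpha.

    alpha = 2 recovers the long-channel square law; smaller alpha models velocity saturation.
    Returns 0 in cut-off (V_GS <= V_T). Linear-region behaviour is not modelled here.
    """
    v_ov = v_gs - v_t
    if v_ov <= 0.0:
        return 0.0
    return k * v_ov ** alpha


if __name__ == "__main__":
    # Hypothetical device: k = 100 uA/V^alpha, V_T = 0.4 V, V_GS = 1.0 V.
    for alpha in (1.0, 1.3, 2.0):
        i_d = alpha_power_id_sat(1.0, 0.4, 1e-4, alpha)
        print(f"alpha = {alpha}: I_D = {i_d * 1e6:.1f} uA")
```

Comparing the currents for different `alpha` at the same 0.6 V overdrive shows why the exponent matters when per-phase currents are estimated: for an overdrive below 1 V, a smaller exponent gives a larger current.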

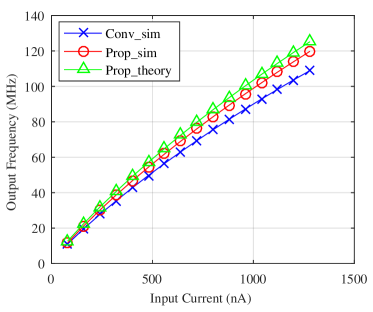

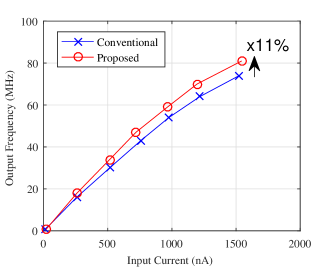

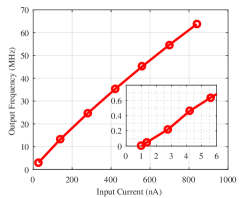

To verify these theoretical models, we simulated the current-frequency (I-F) transfer curves of the conventional and proposed -stage ROs using nm CMOS models in SPICE. Fig. 4 shows the simulation results together with the theoretical predictions of the output frequency. As predicted, the proposed oscillator produces a higher frequency than the conventional one for the same input current. However, the theoretical values are slightly greater than the simulated ones because of inaccuracy in estimating the current of transistor MP3.

III-C Robustness and Jitter

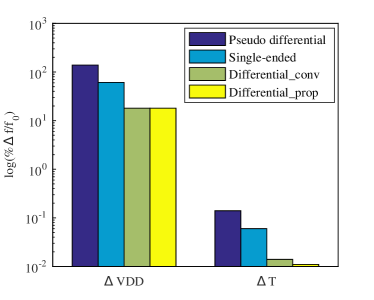

While the increase in oscillation frequency is welcome, it is not useful if the new oscillator degrades other key metrics such as robustness and jitter. We first evaluate the robustness of the proposed oscillator against the conventional one through simulations. The simulated line sensitivity () and temperature sensitivity () of four types of RO-CCO are shown in Fig. 5. As expected, the differential current-starved structures, both proposed and conventional, perform better ( for line sensitivity and for temperature sensitivity) than the non-current-starved pseudo differential RO ( for line sensitivity and for temperature sensitivity). Among current-starved structures, the differential ones are better than the single-ended one ( for line sensitivity and for temperature sensitivity), as expected. The line and temperature sensitivities of the proposed differential structure are not degraded compared with the conventional differential structure. The relatively high sensitivity to the power supply is traced back to the tail current sources leaving saturation at supply voltages below V; this can be solved by reducing the current range. With the supply confined to V, the sensitivity is only around .
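The two robustness metrics can be computed from swept frequency data as sketched below. The units are an assumption (line sensitivity in %/V and temperature sensitivity in ppm/°C, both normalized to a nominal frequency and computed from the end points of the sweep), since the reported values and units are not recoverable from the text; the sample data are hypothetical.

```python
def line_sensitivity_pct_per_v(freqs, vdds, f_nom):
    """End-point line sensitivity in %/V: (delta f / f_nom) / delta V_DD * 100."""
    df = freqs[-1] - freqs[0]
    dv = vdds[-1] - vdds[0]
    return df / f_nom / dv * 100.0


def temp_sensitivity_ppm_per_c(freqs, temps, f_nom):
    """Box-method temperature sensitivity in ppm/degC: (f_max - f_min) / f_nom / delta T * 1e6."""
    return (max(freqs) - min(freqs)) / f_nom / (max(temps) - min(temps)) * 1e6


if __name__ == "__main__":
    # Hypothetical sweeps around a 1 MHz nominal frequency.
    s_v = line_sensitivity_pct_per_v([0.99e6, 1.00e6, 1.01e6], [1.0, 1.1, 1.2], 1.0e6)
    s_t = temp_sensitivity_ppm_per_c([1.0000e6, 1.0004e6, 1.0010e6], [-20.0, 30.0, 80.0], 1.0e6)
    print(f"line: {s_v:.2f} %/V, temperature: {s_t:.1f} ppm/C")
```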

Following [24], the variance of the period jitter of a RO can be expressed as:

| (10) |

where,

| (11) |

is the number of delay stages, and and are technology-dependent noise factors for NMOS and PMOS, respectively. Thus,

| (12) |

In our implementation, the load capacitance and average current decrease by and , respectively.

| (13) |

So the jitter of the proposed CCO is expected to be approximately the same as that of the conventional structure. This has been confirmed in simulation and measurement; we present these results in the next section.

III-D Frequency to Digital Conversion

For use within a neural network system, the raw CCO frequencies often need to be converted to a digital word. The simplest method is to use the CCO output as the clock of a counter [12]. However, this method incurs a long conversion time () and, concomitantly, a large conversion energy. A different approach, following [11], is used in our work, as described next.
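To see why a plain counter is slow, note that counting whole CCO cycles over a window T gives floor(f T) counts, so resolving B bits at the maximum frequency f_max requires T = 2^B / f_max. The sketch below evaluates this; the 10-bit target and the 50 MHz full-scale frequency are illustrative assumptions only.

```python
import math


def counter_window(bits, f_max):
    """Window needed so that f_max produces 2**bits full-cycle counts."""
    return (2 ** bits) / f_max


def counter_output(f_osc, window):
    """Plain counter clocked by the CCO: number of complete cycles within the window."""
    return math.floor(f_osc * window)


if __name__ == "__main__":
    t_win = counter_window(10, 50e6)  # hypothetical 10-bit resolution at 50 MHz full scale
    print(f"window = {t_win * 1e6:.2f} us")
    print(counter_output(27.5e6, t_win))
```

Conversion time, and with it conversion energy, doubles for every extra bit, which motivates resolving fractions of a CCO period with the phase information of the ring instead.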

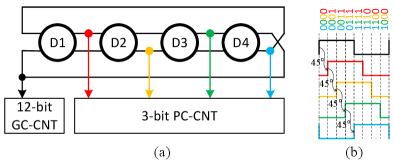

Fig. 6 shows the diagram of the time-to-digital converter (TDC), comprising a four-stage differential CCO and the sequential counters. Although the four-stage differential CCO outputs eight phases in total, only half of them carry unique phase information, while the rest are exact inverses of the unique phases. Hence only four phases, spaced 45° apart, as shown in Fig. 6(a), are used to generate the phase code counter (PC-CNT). The four-stage differential CCO thus yields only eight codes, so only 3 bits are available in the PC-CNT, which acts as the fine counter. The timing diagrams of the four selected outputs and the corresponding complementary symmetric phase codes are illustrated in Fig. 6(b). The inverse of the last phase of the PC-CNT clocks a Gray code counter (GC-CNT) that serves as the coarse counter. The number of bits in the GC-CNT depends on the input current range, while the number of bits in the PC-CNT determines the accuracy of the converter. A Gray code counter is selected for higher reliability in neural networks with tightly packed layouts. To enable wide dynamic range testing, a 12-bit GC-CNT is used in our design, but the bit width can be optimized in neural network applications.
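The coarse/fine readout can be sketched as follows. The sketch assumes that sampling the four unique phases (45° apart) yields a Johnson (twisted-ring) sequence in which exactly one bit changes every 45°, that this 4-bit pattern maps to a 3-bit fine count, and that the final count is 8 × coarse + fine; the exact code table of Fig. 6(b) may differ, and the function names are hypothetical.

```python
def sample_phases(theta_deg):
    """Idealized levels of the four unique CCO phases at oscillator phase theta (degrees).

    Phase k (k = 0..3) is delayed by 45*k degrees and is high during half of each period.
    """
    theta = theta_deg % 360.0
    return tuple(1 if (theta - 45.0 * k) % 360.0 < 180.0 else 0 for k in range(4))


def decode_fine(bits):
    """Map the 4-bit Johnson-style phase pattern to a fine count 0..7 (3 bits)."""
    ones = sum(bits)
    return ones - 1 if bits[0] else 7 - ones


def gray_to_binary(g):
    """Convert a Gray-coded coarse count to binary by cumulative XOR of right shifts."""
    b = g
    shift = g >> 1
    while shift:
        b ^= shift
        shift >>= 1
    return b


def total_count(gray_coarse, phase_bits):
    """Combined count: 8 fine steps per CCO period plus the fine residue."""
    return 8 * gray_to_binary(gray_coarse) + decode_fine(phase_bits)


if __name__ == "__main__":
    for i in range(8):
        bits = sample_phases(22.5 + 45.0 * i)
        print(i, bits, decode_fine(bits))
    print(total_count(0b110, sample_phases(200.0)))  # Gray 110 -> 4 periods, fine code 4 -> 36
```

Because both the phase pattern and the Gray count change by one bit per step, a sample taken mid-transition is off by at most one fine or coarse step.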

Both the phase code counter and the Gray code counter are energy efficient and reliable, since only one bit toggles per state transition. Regarding the number of CCO stages, more stages provide more valid output phases, and thus more bits in the PC-CNT, but a lower frequency, and thus fewer bits in the GC-CNT. Overall, the total number of bits is constant, but more CCO stages incur more area overhead. Assuming the same input current in both cases, the tail current of the CCO core is constant, and hence the energy per conversion due to the CCO is constant. The energy dissipated in the counters is lower for more CCO stages, but it is much smaller than the CCO energy dissipation. Hence, given the need for a small CCO footprint, a four-stage CCO core is designed in our work.
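The claim that the total resolution is independent of the number of stages follows from (1). Assuming $C_L$ and $V_{swing}$ per stage stay fixed as $N$ changes, an $N$-stage differential ring provides $2N$ distinct codes per period while its frequency scales as $1/(2N)$, so the number of fine steps in a window $T$ is $I_{tail} T/(C_L V_{swing})$. The sketch below checks this numerically with hypothetical values.

```python
def fine_steps(i_tail, n_stages, c_load, v_swing, window):
    """Total quantization steps in a window for an N-stage differential CCO.

    Frequency from Eq. (1); 2N codes per period from the N unique phases and their inverses.
    """
    f_osc = i_tail / (2 * n_stages * c_load * v_swing)
    codes_per_period = 2 * n_stages
    return codes_per_period * f_osc * window


if __name__ == "__main__":
    # Hypothetical: 100 nA, 10 fF per stage, 0.5 V swing, 10 us window.
    for n in (3, 4, 8):
        print(n, round(fine_steps(100e-9, n, 10e-15, 0.5, 10e-6), 6))
```

What additional stages do change is area, which is why the smaller four-stage core is preferred.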

IV Measurement Results

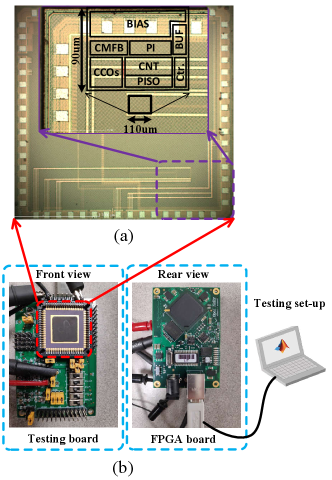

The prototype chip has been fabricated in a nm standard CMOS technology and includes a four-stage conventional CCO and the proposed CCO, with output inverter buffers and peripheral circuits. The die micrograph and major sub-blocks are shown in Fig. 7(a). The entire block occupies . The test board is shown in Fig. 7(b): the configuration switches, test points and power supply are on the front side, while an FPGA board is attached to the rear side. This section presents the measurement results and explains the test conditions and methodology.

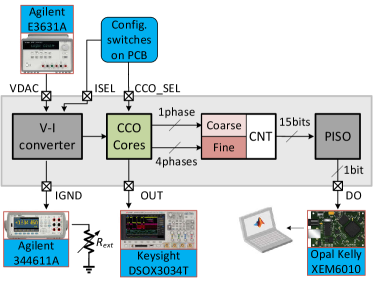

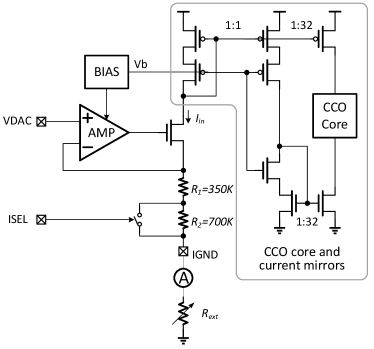

The test setup is illustrated in Fig. 8(a). The bias current of the CCO can be set in coarse steps with an external resistor on the board and in fine steps with a voltage from an on-board digital-to-analog converter (DAC). The resistor and voltage are converted into a reference current by a rail-to-rail operational amplifier based V-I converter (Fig. 8(b)), which is then copied by current mirrors as the starving current of the CCO core. The range and amplitude of the current are also programmable through the configuration bit . CCO core selection is configured by . The oscillator output is converted to a digital code by the coarse/fine digital quantizer described earlier. The bit stream is serialized and sent to the FPGA for storage and analysis, so the oscillation frequency can be inferred from the digital code without measuring a high-frequency signal directly.

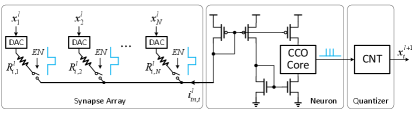

Note that the V-I converter is used only for convenience of testing. In an actual neural network, the input current to the CCO comes from a synaptic array. Fig. 8(c) shows an example of how the CCO can be integrated into a neural network system with resistive synapses [25]. Here the CCO is used as the neuron in the layer, and the count output of one neuron feeds a digital-to-analog converter (DAC) in the next layer to create voltage inputs. This example implementation merely clarifies that the V-I converter is not part of the neuron.

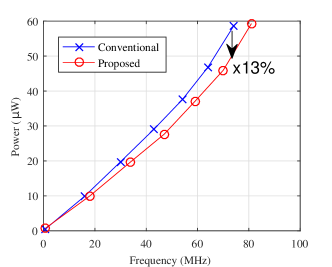

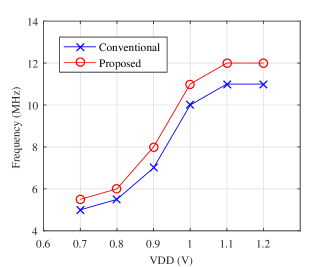

Measured frequency transfer curves of the conventional and proposed CCOs are plotted in Fig. 9(a) for different input currents obtained by varying the DAC voltage over a wide range. The measured frequency of the proposed CCO indeed achieves improvement over the conventional one for the same input current, ranging from approximately 0 to , close to the simulation result in Section III. Fig. 9(b) shows the measured power consumption at different oscillation frequencies. At the same oscillation frequency, the proposed CCO consumes less energy owing to its fewer transistors and correspondingly smaller capacitance. At the highest V, the energy/conversion step including the V-I converter ranges from . At a lower V and excluding the V-I converter, the energy/conversion step ranges from . Equivalently, this energy efficiency can be quoted as . Finally, the dependence of the oscillation frequency on the supply voltage is tested. The measured frequencies of the conventional and proposed CCOs at different supply voltages are shown in Fig. 9(c). The test condition is V with set to low (the total resistance is 1.05 in this case). The result shows a sharp drop in frequency for below V, which is traced back to the reference circuit not functioning properly at these voltages, resulting in a change in the starving current. Nevertheless, the proposed oscillator produces higher frequencies than the conventional design for all values of .

Table II: Comparison of oscillators used in neuromorphic applications

| Reference | Technology (nm) | Topology | Power Eff. | Frequency |
|---|---|---|---|---|
| This work | 65 | Differential, 4-stage | 0.11-0.38¹ | 80 |
| Lee 2018 [26] | 2000 (SOI) | Single-ended, 3-stage | 360 | - |
| Yi 2019 [11] | 65 | Differential, 4-stage | 0.71 | 70 |
| Yao 2017 [12] | 350 | Single-ended, relaxation | 0.2 | 4 |
| Basu 2010 [27] | 350 | Single-ended, relaxation | 17.4 | 100 |
| Sahoo 2017 [9] | 65 | Single-ended | - | 1.5 |

¹ Excluding V-I converter power.

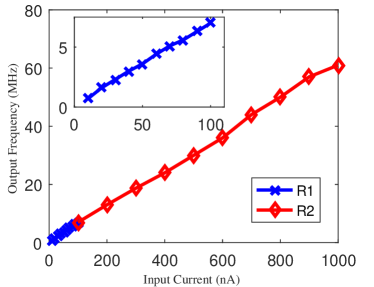

As mentioned before, the CCO frequency can be tuned both by the input voltage and by the external resistor . Fig. 10 shows the transfer curves, where sweeps from 100 mV to 1 V. First, the external resistor is fixed to and the configuration bit is set low, making the total resistance . Tuning the input voltage from 100 mV to 1 V covers input currents from 10 nA to 100 nA, as shown by the blue line (R1). Then, the external resistor is fixed to and is set high, making the total resistance . Tuning the input voltage from 100 mV to 1 V covers input currents from 100 nA to 1000 nA, as shown by the red line (R2). The measured points show that the two tuning ranges match well, so one can act as coarse tuning and the other as fine tuning.
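The two tuning ranges follow from an ideal V-I converter, I = V_in / R_total. In the sketch below the two R_total values (10 MΩ and 1 MΩ) are inferred from the quoted current ranges rather than read from the design, and op-amp offset and mirror errors are ignored.

```python
def vi_current(v_in, r_total):
    """Ideal op-amp V-I converter: the loop forces V_in across R_total, so I = V_in / R_total."""
    return v_in / r_total


def sweep(r_total, v_start=0.1, v_stop=1.0, points=10):
    """Reference currents for a linear input-voltage sweep."""
    step = (v_stop - v_start) / (points - 1)
    return [vi_current(v_start + k * step, r_total) for k in range(points)]


if __name__ == "__main__":
    # Inferred (hypothetical) totals: 10 MOhm for range R1, 1 MOhm for range R2.
    for label, r in (("R1", 10e6), ("R2", 1e6)):
        currents = sweep(r)
        print(f"{label}: {currents[0] * 1e9:.0f} nA to {currents[-1] * 1e9:.0f} nA")
```

The two ranges meet at 100 nA, so the resistor setting selects the decade and the voltage sweep moves within it.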

Table III: Comparison with recent time-based ADC architectures

| Reference | Technology (nm) | Architecture | Supply (V) | Power | Area | Bandwidth | FOM (fJ/conv-step) | Application Comments |
|---|---|---|---|---|---|---|---|---|
| This work | 65 | Novel CCO + TDC | 1.2 | 0.02 | 0.004 | 0.5 | 79¹ | silicon neuron in neuromorphic applications |
| Leene 2018 [28] | 65 | VCO + | 0.5 | 1.3 | 0.006 | 0.011 | 175 | electrode bio-potential recordings |
| Li 2017 [29] | 130 | VCO + | 1.2 | 1.05 | 0.13 | 0.4 | 118 | continuous-time delta-sigma modulator |
| Young 2014 [30] | 65 | OTA + VCO | 1.2 | 38 | 0.49 | 50 | 294 | continuous-time delta-sigma modulator |
| Taylor 2013 [31] | 65 | VCO | 0.9 | 11.5 | 0.075 | 5.08 | 246 | digital wireless receiver, CT delta-sigma modulator |
| Kim 2014 [32] | 130 | delay cell + 3-D Vernier | 1.5 | 0.33 | 0.28 | 0.5 | 400 | time-of-flight (ToF) application |
| Tu 2017 [33] | 40 | PWM + | 1.2 | 0.02 | 0.015 | 0.005 | 1643 | CMOS Image Sensor, X-ray detector |
| Jayaraj 2019 [34] | 65 | VCO + | 1.2 | 1 | 0.06 | 2.5 | 151 | continuous-time (CT) ADC |
| Jayaraj 2019 [35] | 65 | VCO + | 1 | 0.1 | 0.06 | 2.3 | 8.6 | continuous-time (CT) ADC |
| Zhong 2018 [36] | 40 | VCO + | 1.1 | 0.91 | 0.086 | 5.2 | - | continuous-time (CT) ADC |

¹ FOM is based on simulation since the V-I converter does not support high-frequency input.

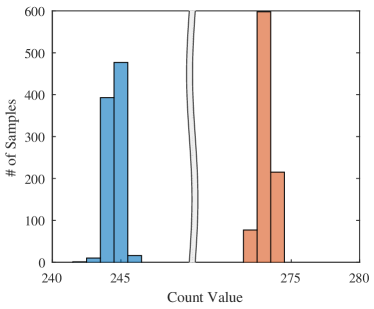

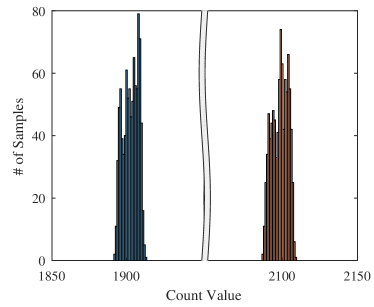

To test the jitter performance, the CCO outputs are connected to the on-chip time-to-digital converter (TDC), as shown in Fig. 8 and described in the earlier section. A parallel-in serial-out (PISO) circuit is used to reduce the number of output pads: the 15-bit parallel data is loaded into the register simultaneously and shifted out serially, one bit at a time, under clock control. In our testing, the serial output data is sampled by the FPGA and then passed to a PC for decoding. Fig. 11(a) and (b) show the measured period jitter of the conventional and proposed structures at a low and a high frequency, respectively. The counter window duration is set to 50 in the measurement. The two structures perform approximately the same, with around 0.2% jitter at the lower frequency and around 0.25% at the higher frequency.
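A behavioural model of the PISO readout is sketched below. It assumes MSB-first shifting and that the 15-bit word is the 12-bit GC-CNT value followed by the 3-bit PC-CNT value (12 + 3 = 15 matches the counter widths given earlier, but the actual bit order on the chip is an assumption); the function names are hypothetical.

```python
WIDTH = 15
FINE_BITS = 3  # assumed: low 3 bits carry the phase-code (fine) count


def piso_shift_out(word, width=WIDTH):
    """Parallel load, then shift out one bit per clock, MSB first."""
    return [(word >> (width - 1 - i)) & 1 for i in range(width)]


def deserialize(bits):
    """FPGA/PC side: rebuild the word from the MSB-first bit stream."""
    value = 0
    for b in bits:
        value = (value << 1) | b
    return value


def split_word(word):
    """Separate the assumed coarse (Gray) field from the fine field."""
    return word >> FINE_BITS, word & ((1 << FINE_BITS) - 1)


if __name__ == "__main__":
    word = (0b000000000110 << FINE_BITS) | 0b100  # Gray coarse 110, fine 4
    stream = piso_shift_out(word)
    print(stream)
    print(split_word(deserialize(stream)))  # (6, 4)
```

The Gray field still has to be converted to binary before the coarse and fine counts are combined.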

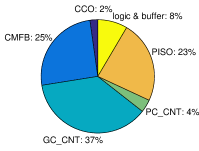

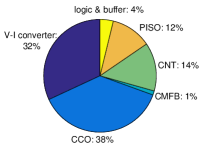

Fig. 12 shows the relative area utilization and the simulated power breakdown. The 4-stage CCO core contributes a small area of . It may be noted that although the V-I converter consumes a significant system power in our testing, this block is not an intrinsic part of our design but is there only for testing as mentioned earlier.

Table II compares the characteristics and performance of various oscillators used in neuromorphic applications. The proposed CCO has one of the highest energy efficiencies reported so far, surpassed only by [12]; however, [12] is a single-ended design with a much lower operating frequency. Further, compared with the other differential design [11], the proposed work uses less area.

Further, a detailed performance comparison with recent time-based ADC architectures is summarized in Table III. To compare with other ADCs, the center frequency of the CCO is set to MHz in the prototype ADC. With an input analog bandwidth of kHz and running at MS/s, it consumes on average from V with a full input signal swing, of which is drawn by the digital circuits and the buffer, while is drawn by the analog part (including the CCO core and bias). The proposed CCO structure consumes less area and less energy by using fewer transistors. Moreover, the Gray code counter and the phase code counter consume less dynamic energy because only one bit changes per transition. These features lead to high energy efficiency of the time-based ADC, quantified by the figure of merit (FOM):

| (14) |

where is the total power consumed by the CCO-based ADC. The ADC achieves an FOM in the range of fJ/conv-step for different input frequencies. Note that [35] achieves a much lower FOM by using a order noise-shaping loop; however, this benefit comes mostly from reduced quantization noise and is orthogonal to the oscillator improvements we report. Moreover, it comes with a X area penalty, which may not suit neuron designs.
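For readers reproducing the comparison, the sketch below evaluates the widely used Walden figure of merit, FOM = P / (2 BW · 2^ENOB) with ENOB = (SNDR - 1.76)/6.02. That (14) takes exactly this form is an assumption, since the expression is not recoverable here, and the power, bandwidth and SNDR values are hypothetical.

```python
def enob(sndr_db):
    """Effective number of bits from SNDR in dB."""
    return (sndr_db - 1.76) / 6.02


def walden_fom(power_w, bandwidth_hz, sndr_db):
    """Walden FOM in J/conversion-step, using the Nyquist-equivalent rate 2*BW."""
    return power_w / (2.0 * bandwidth_hz * 2.0 ** enob(sndr_db))


if __name__ == "__main__":
    # Hypothetical ADC: 100 uW, 1 MHz bandwidth, 61.96 dB SNDR (10.0 effective bits).
    fom = walden_fom(100e-6, 1e6, 61.96)
    print(f"ENOB = {enob(61.96):.2f}, FOM = {fom * 1e15:.1f} fJ/conv-step")
```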

V Discussion

V-A Neural Network Simulation

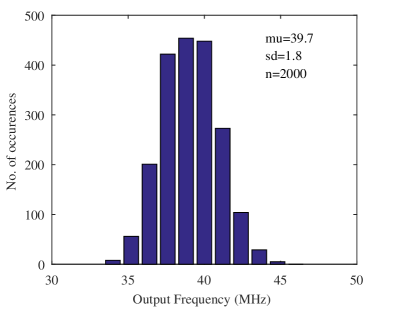

In this section, we explore the potential application of the proposed CCO as a neuron in neural network (NN) implementations. The custom activation function of the proposed neuron is modelled using experimental results of the CCO (Fig. 9 and Fig. 11). The I-F transfer curve of the CCO is highly sensitive to process variations, so the neurons on a chip are expected to exhibit mismatch. Fig. 13 shows the CCO mismatch obtained through Monte Carlo (MC) simulation. As seen from the figure, the standard deviation is MHz at a mean frequency of MHz, giving a coefficient of variation of . The mismatch is characterized by performing MC runs while sweeping the input current. The resulting variability in the slope of the I-F curve is included in the NN simulation as shown next. Synaptic non-idealities are a separate issue that must also be considered for the full system. This is beyond the scope of this work, but many strategies to handle synaptic non-idealities such as mismatch, low precision and write non-linearity have been published [12, 37, 38, 39, 40].

First, the neural networks are trained and tested with the ReLU activation function to establish a benchmark for evaluating the proposed activation function. For the hardware simulation, we adopted two strategies. In the first, the networks are trained with ReLU activation and the custom activation function is introduced only during inference. In the second, the custom activation is used during both training and inference. The simulation details are given in Algorithm 1.
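Algorithm 1 is not reproduced here, but the idea of a mismatched CCO activation can be sketched as below. This is a hypothetical stand-in: the compressive curve i/(1 + i/i_sat) replaces the measured I-F characteristic, each neuron receives a slope drawn from a Gaussian with mean 1 and the Monte Carlo coefficient of variation `cv`, and optional Gaussian output noise mimics the noise injection mentioned in the results. In practice this would be written as a differentiable layer in the training framework so it can be used during training as well as inference.

```python
import random


def make_cco_activation(n_neurons, cv, noise_std=0.0, i_sat=4.0, seed=0):
    """Return a per-neuron activation modelling CCO mismatch, plus the sampled slopes.

    Hypothetical model: rate_j = slope_j * i / (1 + i / i_sat) for i > 0, else 0.
    slope_j ~ N(1, cv), clipped at 0; optional additive Gaussian noise on the output.
    """
    rng = random.Random(seed)
    slopes = [max(0.0, rng.gauss(1.0, cv)) for _ in range(n_neurons)]

    def activate(currents):
        out = []
        for slope, i in zip(slopes, currents):
            i = max(0.0, i)  # no oscillation for non-positive input current (ReLU-like)
            rate = slope * i / (1.0 + i / i_sat)  # compressive stand-in for the measured I-F curve
            if noise_std > 0.0:
                rate += rng.gauss(0.0, noise_std)
            out.append(rate)
        return out

    return activate, slopes


if __name__ == "__main__":
    ideal, _ = make_cco_activation(3, cv=0.0)
    print(ideal([-1.0, 0.0, 1.0]))  # [0.0, 0.0, 0.8]
    mismatched, slopes = make_cco_activation(3, cv=0.1, seed=1)
    print(slopes, mismatched([1.0, 1.0, 1.0]))
```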

Table IV: Test accuracy of software and hardware simulations (mean of 3 trials)

| Simulation | MNIST ANN | MNIST CNN | Fashion MNIST ANN | Fashion MNIST CNN | CIFAR-10 ANN | CIFAR-10 CNN |
|---|---|---|---|---|---|---|
| Software (ReLU) | 0.982 | 0.9926 | 0.8919 | 0.9174 | 0.5284 | 0.7478 |
| Hardware, custom activation at inference only | 0.9812 | 0.9915 | 0.8846 | 0.9088 | 0.5014 | 0.6974 |
| Hardware, custom activation in training and inference | 0.9829 | 0.9931 | 0.8937 | 0.9135 | 0.5257 | 0.7435 |

We used three datasets (MNIST [41], Fashion MNIST [42] and CIFAR-10 [43]) and two network topologies (one ANN and one CNN) to evaluate the proposed neuron model. Since the primary aim of these simulations is to verify the viability of the CCO-based neuron rather than to explore network architectures, we used the same ANN and CNN architectures for all datasets and compared the hardware simulations with their pure software counterparts. The ANN comprises two fully connected hidden layers with 800 and 300 neurons, respectively. The CNN architecture can be described as: , where the input dimension is , represents a convolution layer with convolution filters of size, represents a 2D max-pooling layer, represents a dropout layer with dropout rate, and represents a fully connected dense layer with neurons. All models are trained and tested on Google Colaboratory, on a server with an Intel Xeon CPU and an NVIDIA Tesla K80 GPU. All models are trained with categorical cross-entropy loss and the Adam optimizer, and the testing accuracies for all datasets and network topologies are averaged over 3 trials.

The results (mean across 3 trials) are shown in Table IV. The key observations are as follows. First, for simple datasets like MNIST, there is almost no loss of accuracy even if the custom activation function is introduced only during inference. For the more complex Fashion-MNIST dataset, there is a small loss in testing accuracy (under one percentage point), while for the still more complex CIFAR-10 dataset the loss becomes significant (roughly 2.7 to 5.0 percentage points). Second, the loss in accuracy can be almost completely recovered, even for complex datasets like CIFAR-10, if the custom activation is introduced during training; i.e., weight learning through back-propagation is able to correct for the non-idealities of the custom activation. Third, in some cases, when the custom activation is introduced during training, the testing accuracies slightly surpass the software accuracy. This can be attributed to the Gaussian noise injected in the custom activation acting as a regularizer and improving overall network performance [44]. For all datasets and both hardware simulation settings, the loss of accuracy is similar for the ANN and CNN topologies. Since the ANN uses only two hidden layers while the CNN consists of a much larger number of layers, this observation supports the scalability of the proposed neuron model. Finally, we evaluated in detail the variability in classifier performance caused by random variation of the custom activation function, described in step of Algorithm 1. For the MNIST classification task with the ANN and CNN, we evaluated the classification accuracies over trials, with the slopes of the custom activation randomly generated for each trial. The standard deviations of classification accuracy for the ANN and CNN are and respectively. This shows that the performance variation introduced by slope variability is very small, further supporting the viability of the proposed neuron model.
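The accuracy losses discussed above can be reproduced directly from Table IV. The sketch below assumes the second and third rows of the table correspond to the inference-only and training-plus-inference strategies, as the discussion indicates, and computes the loss relative to the software baseline in percentage points; a negative value means the hardware-aware model scored higher than the software one. The function name `loss_pp` is hypothetical.

```python
# Test accuracies transcribed from Table IV as (ANN, CNN) pairs.
SOFTWARE = {
    "MNIST": (0.982, 0.9926),
    "Fashion-MNIST": (0.8919, 0.9174),
    "CIFAR-10": (0.5284, 0.7478),
}
INFERENCE_ONLY = {
    "MNIST": (0.9812, 0.9915),
    "Fashion-MNIST": (0.8846, 0.9088),
    "CIFAR-10": (0.5014, 0.6974),
}
TRAIN_AND_INFERENCE = {
    "MNIST": (0.9829, 0.9931),
    "Fashion-MNIST": (0.8937, 0.9135),
    "CIFAR-10": (0.5257, 0.7435),
}


def loss_pp(reference, hardware):
    """Accuracy loss in percentage points; negative means hardware beat software."""
    return {
        ds: tuple(round(100 * (r - h), 2) for r, h in zip(reference[ds], hardware[ds]))
        for ds in reference
    }


if __name__ == "__main__":
    for label, hw in (("inference only", INFERENCE_ONLY),
                      ("training + inference", TRAIN_AND_INFERENCE)):
        for ds, (ann, cnn) in loss_pp(SOFTWARE, hw).items():
            print(f"{label:22s} {ds:14s} ANN {ann:+.2f} pp  CNN {cnn:+.2f} pp")
```

With these numbers, inference-only substitution costs about 0.08 to 0.11 points on MNIST, 0.73 to 0.86 on Fashion-MNIST and 2.70 to 5.04 on CIFAR-10, while training with the custom activation brings the CIFAR-10 losses down to 0.27 (ANN) and 0.43 (CNN).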

Changes in supply voltage and temperature are ideally common-mode perturbations and affect the CCO less owing to its differential structure. Moreover, since the neurons share the power supply, if all neurons are affected by the same factor, ReLU-based neural networks are ideally unaffected: ReLU is positively homogeneous (max(0, kx) = k·max(0, x) for k > 0), so a common gain rescales the activations without changing the classification decision. However, due to mismatch between neurons, there will be some effect in real implementations. To capture this effect, Monte-Carlo simulations were performed to obtain the distribution of supply voltage sensitivity (temperature sensitivity is almost negligible, as shown in Section III). The resulting sensitivity distribution had a mean of and standard deviation of when the supply was varied in the range of V. Including this as an additional perturbation of the neuron outputs during inference, we re-evaluated the CIFAR-10 results and observed a negligible reduction in accuracy of , confirming our hypothesis that the network is relatively robust against supply variations.
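The argument that a common supply-induced gain leaves a ReLU network ideally unaffected can be checked on a toy example. The sketch below uses a hypothetical bias-free two-layer network with arbitrary weights; biases are assumed zero because a bias that does not scale with the supply breaks the exact scaling. A common gain only rescales the outputs, while per-neuron mismatch can flip the decision. All names and values are illustrative assumptions.

```python
# Toy bias-free network: 2 inputs -> 3 ReLU neurons -> 2 outputs.
# Weights are arbitrary illustration values, not trained parameters.
W1 = [[1.0, -0.5], [0.2, 0.8], [-0.3, 0.6]]
W2 = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]
X = [1.0, 0.5]


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def forward(gains):
    """gains[i] scales hidden neuron i's output, e.g. a supply-induced change
    in that neuron's I-F gain (a hypothetical stand-in for the CCO)."""
    hidden = [g * max(0.0, a) for g, a in zip(gains, matvec(W1, X))]
    return matvec(W2, hidden)


def argmax(v):
    return max(range(len(v)), key=v.__getitem__)


if __name__ == "__main__":
    base = forward([1.0, 1.0, 1.0])
    common = forward([1.2, 1.2, 1.2])      # same factor for every neuron
    mismatch = forward([0.8, 1.1, 1.0])    # neuron-to-neuron variation
    print(argmax(base), argmax(common), argmax(mismatch))
```

Here the common 20% gain leaves the winning class unchanged, whereas a mismatch of the same order between two hidden neurons changes it, which is why the Monte-Carlo sensitivity spread, rather than the mean shift, is the quantity of interest.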

V-B CCO as Spiking Neuron

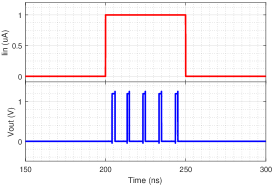

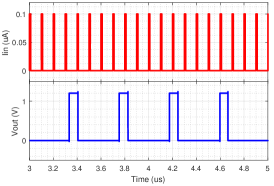

The earlier sections showed the use of the CCO as a rate-encoded neuron, similar to its use in [11, 12]. However, it may also be used as a spiking neuron with precisely timed output spikes, as needed in spiking neural networks (SNN). While one way to use the CCO as a spiking neuron is shown in [9], we show next how some of the critical features can be included in the CCO much more easily. We designate one of the phases of the CCO as the spike phase, and the corresponding output of the fine counter is plotted as the spike output.

First, we show in SPICE simulations that adding a leak current source [45] next to the PMOS input current mirror in Fig. 8 suppresses spurious neuron spikes at low input currents. The resulting I-F curve, plotted in Fig. 14(a), shows that valid neuron firing indeed starts only for input currents larger than . Next, the response of the CCO neuron to a step of magnitude is shown in Fig. 14(b). As expected, the neuron fires spikes only while the step is applied. Lastly, to show how such a neuron can be integrated in a network, we plot the response of the CCO neuron to spike inputs that may come from other neurons in the network. As shown in Fig. 14(c), for input synaptic current pulses of magnitude , the neuron integrates the charge on the PMOS current mirror and produces one output spike for every input spikes.
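The behavior in Fig. 14 can be approximated at a high level by a leaky integrate-and-fire model. The following is a minimal behavioral sketch, not a circuit model of the CCO: it assumes a constant leak current that drains the integrated charge (floored at zero), a fixed threshold charge per output spike, and a subtractive reset. The function name `simulate_if_neuron` and all parameter values are hypothetical and unitless.

```python
def simulate_if_neuron(i_in, dt, q_th, i_leak):
    """Behavioral integrate-and-fire sketch (hypothetical, unitless).

    i_in   : input current samples, one per time step of length dt
    q_th   : charge needed for one output spike (assumed value)
    i_leak : constant leak current (assumed value)
    Returns the indices of the time steps that emit an output spike.
    """
    q = 0.0
    spikes = []
    for k, i in enumerate(i_in):
        # The leak drains charge; stored charge cannot go below zero.
        q = max(0.0, q + (i - i_leak) * dt)
        if q >= q_th:
            spikes.append(k)
            q -= q_th  # subtractive reset keeps any residual charge
    return spikes


if __name__ == "__main__":
    print(simulate_if_neuron([0.5] * 20, 1.0, 10.0, 1.0))  # below leak
    print(simulate_if_neuron([3.0] * 20, 1.0, 10.0, 1.0))  # constant drive
    pulses = [5.0 if k % 4 == 0 else 0.0 for k in range(32)]
    print(simulate_if_neuron(pulses, 1.0, 20.0, 0.0))       # pulse train
```

With a leak of 1 and a threshold of 10, a constant input of 0.5 never fires while an input of 3 fires every fifth step; with no leak and a threshold of 20, unit-width pulses of height 5 produce one output spike for every four input pulses, illustrating the kind of pulse counting shown in Fig. 14(c).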

V-C Startup Circuit

In Section III, we proposed the novel CCO structure and explained the necessity of a start-up circuit. Robust oscillation can be ensured by a start-up circuit consisting of a PMOS transistor, an NMOS transistor, or both. As shown in Fig. 15(a), a start-up circuit is placed at the differential output nodes to eliminate the indeterminate state. The start-up circuit can be a single PMOS acting as a pull-up device to eliminate the uncertainty of , as shown in Fig. 15(b); a single NMOS acting as a pull-down device to eliminate the uncertainty of , as shown in Fig. 15(c); or both a PMOS and an NMOS to eliminate the uncertainty of both differential outputs, as shown in Fig. 15(d).

The different start-up circuits result in different oscillation frequencies. Fig. 16 compares the simulated output frequencies of the conventional structure and of the proposed structure with the three start-up circuits. The CCO with both PMOS and NMOS start-up devices achieves a higher frequency than the conventional one, while the CCOs with a single PMOS or NMOS start-up device degrade the frequency by around compared to the conventional one. Hence, we use only the start-up circuit with both PMOS and NMOS devices in the proposed structure.

VI Conclusion

In this paper, an energy- and area-efficient fully differential CMOS current-controlled ring oscillator (CCO) is presented as a suitable and compact structure for neurocomputing applications. The CCO achieves a higher frequency while consuming less area and energy because it uses fewer transistors than the conventional structure. By eliminating unnecessary transistors, the proposed structure is composed of only the simplest dynamic positive-feedback latch and differential pairs, saving area. The CCO can be tuned by both the input voltage and an external variable resistor. The measurement results show that our design achieves an 11% frequency improvement and a 13% improvement in energy efficiency without degrading the jitter and phase noise characteristics. Simulations of both ANN and CNN with the proposed CCO characteristics as the neuron transfer curve show almost no degradation in accuracy if the CCO nonlinearity is included in training.

References

- [1] Y. Taigman, M. Yang, M. Ranzato, and L. Wolf, “DeepFace: Closing the gap to human-level performance in face verification,” in Computer Vision and Pattern Recognition (CVPR), 2014, pp. 1701–08.

- [2] L. Deng et al., “Recent advances in deep learning for speech research at Microsoft,” in IEEE Intl. Conf. on Acoustics, Speech and Signal Processing (ICASSP), 2013, pp. 8604–08.

- [3] R. Collobert, J. Weston, L. Bottou, M. Karlen, K. Kavukcuoglu, and P. Kuksa, “Natural language processing (almost) from scratch,” J. Machine Learning Research, vol. 12, pp. 2493–2537, 2011.

- [4] V. M. Suresh et al., “Powering the IoT through embedded machine learning and LoRa,” in IEEE World Forum on IoT (WF-IOT), 2018, pp. 349–354.

- [5] A. Basu, Y. Chen, and E. Yao, “Big data management in neural implants: The neuromorphic approach,” in Emerging Technology and Architecture for Big-data Analytics, A. Chattopadhyay, C. H. Chang, and H. Yu, Eds. Cham: Springer, 2017.

- [6] A. Basu et al., “Low-power, adaptive neuromorphic systems: Recent progress and future directions,” IEEE Journal on Emerging and Selected Topics in Circuits and Systems (JETCAS), vol. 8, no. 1, pp. 6–27, 2018.

- [7] R. Sarpeshkar, “Analog versus digital: extrapolating from electronics to neurobiology,” Neural Computation, vol. 10, no. 7, pp. 1601–38, 1998.

- [8] M. Pfeiffer and T. Pfeil, “Deep learning with spiking neurons: opportunities and challenges,” Frontiers in Neuroscience, vol. 12, 2018.

- [9] B. D. Sahoo, “Ring oscillator based sub-1v leaky integrate-and-fire neuron circuit,” in IEEE Symp. Circuits and Systems (ISCAS). IEEE, 2017, pp. 1–4.

- [10] L. Everson, M. Liu, N. Pande, and C. H. Kim, “A 104.8TOPS/W One-Shot Time-Based Neuromorphic Chip Employing Dynamic Threshold Error Correction in 65nm,” in IEEE Asian Solid-State Circuits Conference (ASSCC), 2018.

- [11] Y. Chen, Z. Wang, A. Patil, and A. Basu, “A 2.86-TOPS/W Current Mirror Cross-Bar Based Machine-Learning and Physical Unclonable Function Engine for Internet-of-Things Applications,” IEEE Trans. on Circuits and Systems-I, vol. 66, no. 6, pp. 2240–52, 2019.

- [12] E. Yao and A. Basu, “VLSI Extreme Learning Machine: A Design Space Exploration,” IEEE Trans. on VLSI, vol. 25, no. 1, pp. 60–74, 2017.

- [13] A. Patil, S. Shen, E. Yao, and A. Basu, “Hardware Architecture for Large Parallel Array of Random Feature Extractors applied to Image Recognition,” Neurocomputing, vol. 261, pp. 193–203, 2017.

- [14] W. Bae, H. Ju, K. Park, S. Cho, and D. Jeong, “A 7.6 mW 414 fs RMS-jitter 10 GHz phase-locked loop for a 40 Gb/s serial link transmitter based on a two-stage ring oscillator in 65 nm CMOS,” IEEE J. Solid-State Circuits, vol. 51, no. 10, pp. 2357–2367, 2016.

- [15] K. R. Lakshmikumar and et. al., “A Process and Temperature Compensated Two-Stage Ring Oscillator,” in Custom Integrated Circuits Conference (CICC), 2007, pp. 691–4.

- [16] A. Sengupta, Y. Ye, R. Wang, C. Liu, and K. Roy, “Going deeper in spiking neural networks: VGG and residual architectures,” Frontiers in Neuroscience, vol. 13, 2019.

- [17] B. Rueckauer, I.-A. Lungu, Y. Hu, M. Pfeiffer, and S.-C. Liu, “Conversion of continuous-valued deep networks to efficient event-driven networks for image classification,” Frontiers in Neuroscience, vol. 11, p. 682, 2017.

- [18] A. Raychowdhury, A. Parihar, et al., “Computing With Networks of Oscillatory Dynamical Systems,” Proc. of IEEE, vol. 107, no. 1, pp. 73–89, 2019.

- [19] S. Dutta, A. Parihar, et al., “Programmable coupled oscillators for synchronized locomotion,” Nature Communications, vol. 10, no. 3299, 2019.

- [20] A. Hajimiri, S. Limotyrakis, and T. H. Lee, “Jitter and phase noise in ring oscillators,” IEEE Journal of Solid-State Circuits, vol. 34, no. 6, pp. 790–804, 1999.

- [21] P. Dudek, S. Szczepanski, and J. V. Hatfield, “A high-resolution CMOS time-to-digital converter utilizing a vernier delay line,” IEEE Journal of Solid-State Circuits, vol. 35, no. 2, pp. 240–247, 2000.

- [22] T. Sakurai and A. R. Newton, “Alpha-Power Law MOSFET Model and its Applications to CMOS Inverter Delay and Other Formulas,” IEEE J. Solid-State Circuits, vol. 25, 1990.

- [23] K. A. Bowman, B. L. Austin, J. C. Eble, X. Tang, and J. D. Meindl, “A physical alpha-power law MOSFET model,” IEEE Journal of Solid-State Circuits, vol. 34, no. 10, pp. 1410–1414, 1999.

- [24] A. A. Abidi, “Phase noise and jitter in CMOS ring oscillators,” IEEE J. Solid-State Circuits, vol. 41, no. 8, pp. 1803–1816, 2006.

- [25] P. Yao, H. Wu, et al., “Fully hardware-implemented memristor convolutional neural network,” Nature, vol. 577, 2020.

- [26] J.-J. Lee, J. Park, M.-W. Kwon, S. Hwang, H. Kim, and B.-G. Park, “Integrated neuron circuit for implementing neuromorphic system with synaptic device,” Solid-State Electronics, vol. 140, pp. 34–40, 2018.

- [27] A. Basu and P. E. Hasler, “Nullcline-based design of a silicon neuron,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 57, no. 11, pp. 2938–2947, 2010.

- [28] L. B. Leene and T. G. Constandinou, “A 0.006 mm² 1.2 μW Analog-to-Time Converter for Asynchronous Bio-Sensors,” IEEE Journal of Solid-State Circuits, vol. 53, no. 9, pp. 2604–2613, 2018.

- [29] S. Li, A. Mukherjee, and N. Sun, “A 174.3-dB FoM VCO-Based CT ΔΣ Modulator With a Fully-Digital Phase Extended Quantizer and Tri-Level Resistor DAC in 130-nm CMOS,” IEEE Journal of Solid-State Circuits, vol. 52, no. 7, pp. 1940–1952, 2017.

- [30] B. Young, K. Reddy, S. Rao, A. Elshazly, T. Anand, and P. K. Hanumolu, “A 75dB DR 50MHz BW 3rd order CT-ΔΣ modulator using VCO-based integrators,” in 2014 Symposium on VLSI Circuits Digest of Technical Papers. IEEE, 2014, pp. 1–2.

- [31] G. Taylor and I. Galton, “A reconfigurable mostly-digital delta-sigma ADC with a worst-case FOM of 160 dB,” IEEE Journal of Solid-State Circuits, vol. 48, no. 4, pp. 983–995, 2013.

- [32] Y. Kim and T. W. Kim, “An 11 b 7 ps resolution two-step time-to-digital converter with 3-D Vernier space,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 61, no. 8, pp. 2326–2336, 2014.

- [33] C.-C. Tu, Y.-K. Wang, and T.-H. Lin, “A low-noise area-efficient chopped VCO-based CTDSM for sensor applications in 40-nm CMOS,” IEEE Journal of Solid-State Circuits, vol. 52, no. 10, pp. 2523–2532, 2017.

- [34] A. Jayaraj, M. Danesh, S. T. Chandrasekaran, and A. Sanyal, “Highly Digital Second-Order VCO ADC,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 66, no. 7, pp. 2415–2425, 2019.

- [35] A. Jayaraj, A. Das, S. Arcot, and A. Sanyal, “8.6fJ/step VCO-Based CT 2nd-Order ΔΣ ADC,” in 2019 IEEE Asian Solid-State Circuits Conference (A-SSCC), 2019, pp. 197–200.

- [36] Y. Zhong, S. Li, A. Sanyal, X. Tang, L. Shen, S. Wu, and N. Sun, “A Second-Order Purely VCO-Based CT ΔΣ ADC Using a Modified DPLL in 40-nm CMOS,” in 2018 IEEE Asian Solid-State Circuits Conference (A-SSCC), 2018, pp. 93–94.

- [37] R. A. John et al., “Optogenetics inspired transition metal dichalcogenide neuristors for in-memory deep recurrent neural networks,” Nature Communications, vol. 11, no. 1, pp. 1–9, 2020.

- [38] A. Bhaduri et al., “Spiking neural classifier with lumped dendritic nonlinearity and binary synapses: a current mode VLSI implementation and analysis,” Neural Computation, vol. 30, no. 3, pp. 723–60, 2018.

- [39] S. Roy and A. Basu, “An online unsupervised structural plasticity algorithm for spiking neural networks,” IEEE Trans. on Neural Networks and Learning Systems, vol. 28, no. 4, pp. 900–910, 2016.

- [40] D. Querlioz et al., “Immunity to device variations in a spiking neural network with memristive nanodevices,” IEEE Trans. on Nanotechnology, vol. 12, no. 3, pp. 288–95, 2013.

- [41] Y. LeCun, C. Cortes, and C. Burges, “The MNIST database of handwritten digits,” http://yann.lecun.com/exdb/mnist/, 1998.

- [42] H. Xiao, K. Rasul, and R. Vollgraf, “Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms,” arXiv preprint arXiv:1708.07747, 2017.

- [43] A. Krizhevsky, G. Hinton, et al., “Learning multiple layers of features from tiny images,” 2009.

- [44] H. Noh, T. You, J. Mun, and B. Han, “Regularizing deep neural networks by noise: Its interpretation and optimization,” in Advances in Neural Information Processing Systems, 2017, pp. 5109–5118.

- [45] G. Indiveri et al., “Neuromorphic silicon neuron circuits,” Frontiers in Neuroscience, vol. 5, 2011.