A 2-Level Domain Decomposition Preconditioner for KKT Systems with Heat-Equation Constraints111To appear in the proceedings of The 27th International Conference on Domain Decomposition Methods (DD27)

Abstract

Solving optimization problems with transient PDE-constraints is computationally costly due to the number of nonlinear iterations and the cost of solving large-scale KKT matrices. These matrices scale with the size of the spatial discretization times the number of time steps. We propose a new two level domain decomposition preconditioner to solve these linear systems when constrained by the heat equation. Our approach leverages the observation that the Schur-complement is elliptic in time, and thus amenable to classical domain decomposition methods. Further, the application of the preconditioner uses existing time integration routines to facilitate implementation and maximize software reuse. The performance of the preconditioner is examined in an empirical study demonstrating the approach is scalable with respect to the number of time steps and subdomains.

1 Introduction

This paper develops a new domain-decomposition method for solving the KKT system with heat-equation constraints. This effort is driven by the quadratic optimization problem of the form

| (1) | |||||

| s.t. | |||||

This quadratic PDE-constrained optimization problem finds a control such that the solution to the heat equation matches the target . The spatial domain is , the time interval is , and the heat conductivity is . Uniform homogenous boundary conditions are assumed for all time, and the initial condition is prescribed by .

Many nonlinear methods use a series of quadratic approximations of the form represented by Eq. 1 to solve PDE-constrained optimization problems (see for instance sequential quadratic programming methods [8, 15, 27]). There have been several studies focused on developing scalable preconditioners for the saddle-point system that arises from the first-order necessary conditions. Often preconditioners for saddle-point systems take the form of approximate factorization block preconditioners [3]. These were explored for KKT systems in [4, 5]. Our work relies heavily on the block preconditioners from the Wathen group [23, 24, 25].

This effort focuses on transient PDE constraints where the size of the system scales with the number of spatial unknowns times the number of time steps, resulting in substantial computational effort. To alleviate this, a number of efforts have proposed accelerating the time solve using adaptive space-time discretizations [17, 16], parareal [20, 27, 12], multigrid approaches [6, 7, 10, 13, 19], block preconditioning [23, 24], and domain decomposition methods [14].

Our approach is also built on block preconditioning ideas. A difference is that our technique exploits an observation that the Schur-complement of the KKT system is elliptic in time (see [11, 18]). This allows us to leverage existing two level domain decomposition approaches for elliptic systems to improve the parallel scalability of the block preconditioner. Good performance is achieved by algorithmic choices that ensure the forward and backward in time integrators can be applied on the fine level.

2 Discrete System and Block Preconditioner

In this article the PDE in Eq. 1 will be discretized on a D Cartesian grid using first order backward Euler in time, and a second order finite difference stencil in space. A row of the discrete space-time system for the heat equation satisfies:

| (2) |

Here are the interior space indices defined over and . The exterior indices are eliminated using the homogenous boundary conditions. The superscript time index runs from . Each is referred to below as a time-node. The control variable ’s index matches the implicit index on , therefore is associated with the th time interval. For a single time interval, Eq. 2 rewritten in matrix form is

| (3) |

and the global space-time system is

| (4) |

The right hand side includes contributions from the initial conditions. The matrix is block lower triangular and the matrix is block diagonal.

The linear system whose solution solves the quadratic optimization problem from Eq. 1 is the celebrated KKT system where

| (5) |

The final row is the discrete form of the PDE constraint, enforced by the Lagrange multiplier . We will also refer to as the adjoint solution. and are identity matrices scaled by . The matrix is a saddle point matrix, whose structure is frequently observed in numerical optimization. Many effective block preconditioners have been developed for this class of matrix [2, 3, 4]. We focus on the block preconditioning approach developed by Wathen and collaborators for solving linearized PDE-constrained optimization problems [23, 24, 25].

We write a block LDU factorization of the matrix

| (6) |

where the Schur-complement is . Following [23], is preconditioned using the block diagonal operator

| (7) |

This preconditioner leverages the result in [21], and approximately inverts the block diagonal in the LDU factorization. The matrix used in the approximate Schur complement is block lower triangular (similar to ), a fact that we will exploit below. The choice of integrates the state Jacobian and the effects of the regularization parameter. In [23, 24] and [26], this approximation is developed and shown to lead to robust performance with respect to .

3 Two-Level Domain Decomposition Schur-Complement

We propose a new domain decomposition approach for approximately inverting . This is motivated by the observation that the operator is elliptic in time (see [18] and [11]). For simplicity, we show this discretely using only the term . Consider the ODE discretized over three time steps with forward Euler: . With , the Schur-complement is

| (8) |

Examining the second row it is clear the operator has a D Laplacian stencil in time, with a positive perturbation on the diagonal. To take advantage of this ellipticity, we will apply existing domain decomposition approaches to the operator. This ellipticity principle enables scalable performance of a preconditioned Krylov method. We also impose an efficiency constraint that the computational kernels in our preconditioner use the time integration method for the state and adjoint unknowns.

The ellipticity principle is realized by considering a restricted additive Schwarz (RAS) method with subdomains (see [9]). Each subdomain contains the spatial unknowns associated with a subset of time steps. For instance, if there are time steps, then for the subdomains contain time-nodes , , and (the time-node is the excluded initial condition). Notice that the time-nodes are overlapped but the time steps are not. With these subsets, boolean operators are defined that restrict a space-time vector to the time-nodes in a subdomain, giving the RAS preconditioner

| (9) |

where is the (boolean) partition of unity matrix (Defn. 1.11 of [9]). RAS is known to lead to effective preconditioners for elliptic problems and can be extended to a multi-level schemes. While the ellipticity principle is exploited in , the explicit formation of the product does not satisfy the efficiency constraint.

To satisfy the efficiency constraint note that the range of is nonzero on time-nodes in the subdomain and one time-node earlier. For instance, if the subdomain contains nodes then the range is nonzero on . Let be a new extended restriction operator whose action produces a space-time vector for time-nodes that are nonzero in the range of . Further, choose so that

| (10) |

The operator restricts the space-time vector to the time-nodes contained in the earlier time step relative to the subdomain. Because is a restriction operator that represents the nonzero range of , we have that . Recalling that is diagonal, we can rewrite the subdomain solve in as

| (11) |

Using the constraint in Eq. 10, we have the additional identities and . This permits a final rewrite of the operator in Eq. 11

| (12) |

where and . The inverse action of is easily computed in a matrix free way on the time-nodes in the extended subdomain. Motivated by this equivalence, we define a new one-level preconditioner

| (13) |

The term in parentheses is different from the term inverted in Eq. 9. The difference is that the inverse computed in Eq. 9 is constrained to have a zero initial condition outside of the subdomain. This revised operator satisfies our efficiency constraint as computing and is done using the time integration method.

To obtain scalability with respect to the number of subdomains a coarse grid correction is required. Again leveraging the elliptic nature of the Schur-complement we consider the Nicolaides coarse space developed for solving the Poisson problem [22]. Following the presentation in [9], the Nicolades coarse space is defined as

| (14) |

The columns of form a constant and linear basis over the subdomain time-nodes. The coarse restriction is used in the definition of the coarse operator . The coarse solve is applied in a multiplicative way

| (15) |

Due to the structure of the coarse operator can be constructed in parallel. This does represent a violation of the efficiency constraint to be addressed by future work.

4 Numerical Experiments

To demonstrate this approach we discretize the quadratic optimization problem from Eq. 1 as described in Sec. 2. The D spatial domain is , and the time domain is . The initial conditions and target solutions are

| (16) |

The regularization parameter varies over five orders of magnitude. Experiments were run with , , and mesh points. Qualitatively, variation with number of spatial points was not a factor in the convergence. This is not surprising as the implicit operator in space is inverted with a direct solve. As a result, the computations below are all for the case of mesh points. Recall that homogeneous boundary conditions are removed, giving unknowns in each time step. The linear system (Eq. 5) is solved using right preconditioned GMRES from PyAMG [1] iterated until a relative residual tolerance of is achieved.

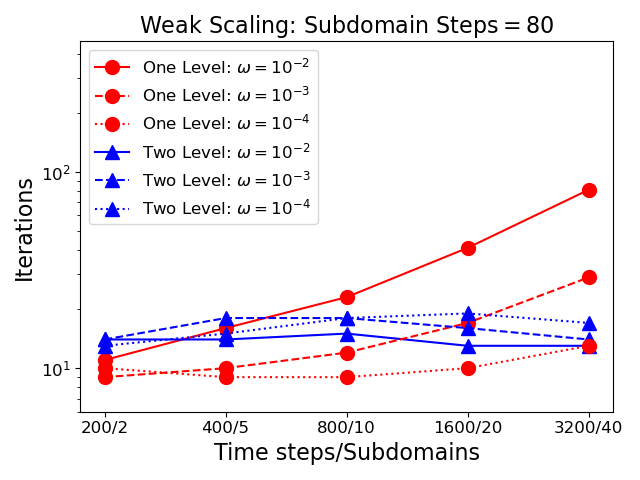

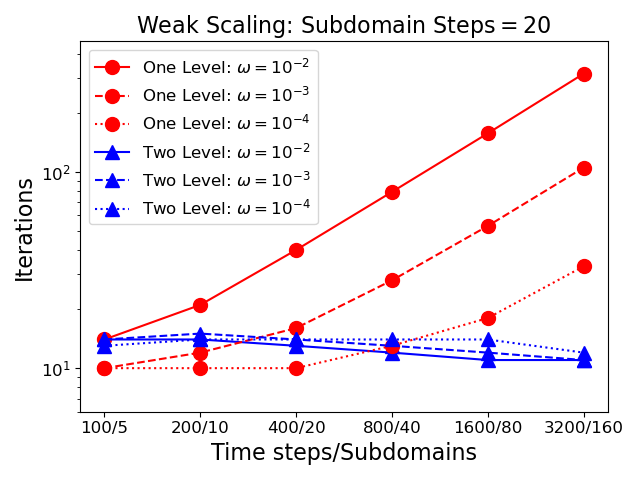

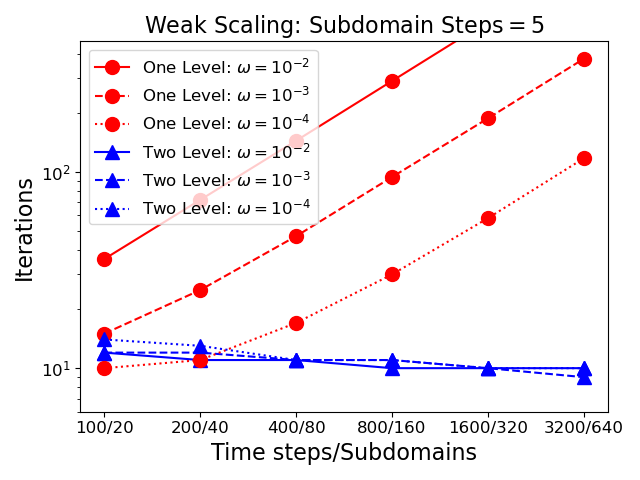

Figure 1 presents three weak-scaling studies ranging from to time steps. The number of time steps per subdomain is fixed at in the left plot, in the center plot, and in the right plot. For the case of steps, the fewest number of time steps is (the minimum number of time steps evenly divisible by in the chosen sequence). The plots, show the number of iterations as a function of time step count for GMRES preconditioned using from Eqn 7 with Schur complement approximations for the one level case (circle markers), and for the two level case (triangle markers). Different values for the regularization parameter are indicated using solid (), dashed () or dotted () lines. These plots demonstrate that the performance of the two level method is independent of both the number of subdomains, and the number of time steps. Further, independence holds regardless of the value of the regularization parameter. As anticipated the one level method has substantial growth with the number of time steps, and variability with the regularization parameter. However, it is worth noting that dependent on the number of subdomains and the size of the regularization parameter the one level method may be faster despite its lack of scalability. For instance, when using subdomains and a regularization parameter of the one-level method takes the same number of iterations but lacks the synchronization and added cost of the two level method.

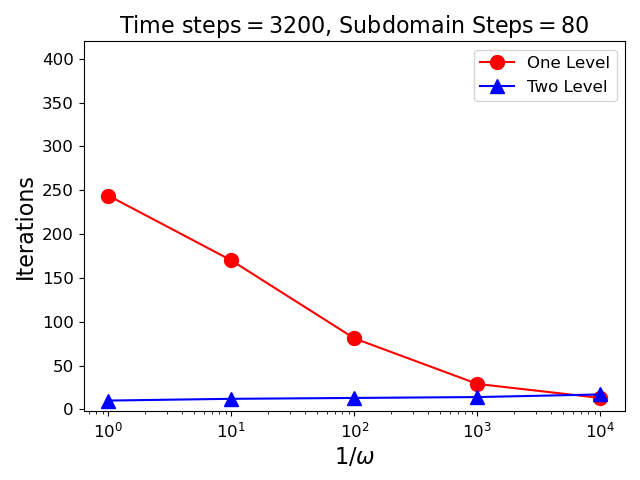

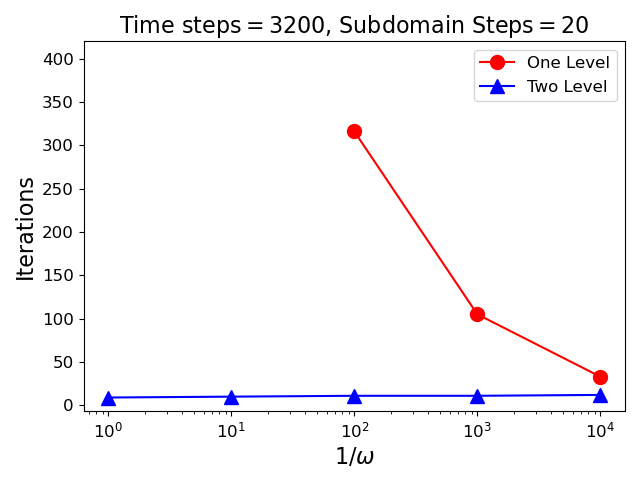

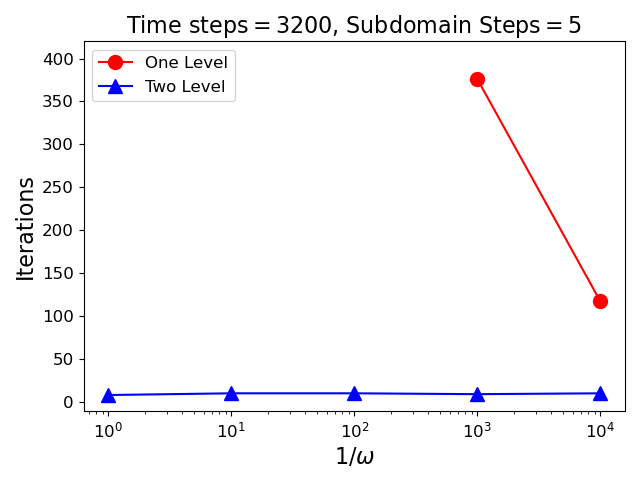

The scaling with respect to the regularization parameter is investigated in Figure 2. In these plots the preconditioned iteration counts are plotted as a function of the inverse regularization parameter. Data points are excluded when the number of iterations exceeded the maximum iteration count for GMRES (in this case ). Here again the two level method scales well, yielding essentially flat iteration counts as a function of the regularization parameter. The one level method shows strong dependence on , though it improves dramatically for smaller values.

5 Conclusion

In this paper, motivated by results in block preconditioning and the elliptic-in-time nature of the KKT system, we develop a two level domain decomposition preconditioner that facilitates a parallel-in-time solver for the discrete optimality system constrained by the heat equation. While limited in their breadth, initial results for this approach show excellent scalability with respect to the number of time steps, subdomains, and the regularization parameter. Future work will focus on achieving improved scaling by including more levels in the hierarchy, and applying this technique to a broader class of problems and discretizations.

Acknowledgements

The author is indebted anonymous reviewers whose comments resulted in a significant strengthening of the paper. The author also acknowledges support from the SEA-CROGS project and the Early Career program, both funded by the DOE Office of Science. This article has been authored by an employee of National Technology & Engineering Solutions of Sandia, LLC under Contract No. DE-NA0003525 with the U.S. Department of Energy (DOE). The employee owns all right, title and interest in and to the article and is solely responsible for its contents. The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the United States Government retains a non-exclusive, paid-up, irrevocable, world-wide license to publish or reproduce the published form of this article or allow others to do so, for United States Government purposes. The DOE will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan https://www.energy.gov/downloads/doe-public-access-plan. This paper describes objective technical results and analysis. Any subjective views or opinions that might be expressed in the paper do not necessarily represent the views of the U.S. Department of Energy or the United States Government. Sandia report number: SAND2023-03175O.

References

- [1] N Bell, L N Olson, and J Schroder. PyAMG: Algebraic multigrid solvers in python. Journal of Open Source Software, 7(72):4142, 2022.

- [2] M Benzi and G H Golub. A preconditioner for generalized saddle point problems. SIAM Journal on Matrix Analysis and Applications, 26(1):20–41, 2004.

- [3] M Benzi, G H Golub, and J Liesen. Numerical solution of saddle point problems. Acta numerica, 14:1–137, 2005.

- [4] M Benzi, E Haber, and L Taralli. A preconditioning technique for a class of PDE-constrained optimization problems. Advances in Computational Mathematics, 35(2):149–173, 2011.

- [5] G Biros and O Ghattas. Parallel Lagrange–Newton–Krylov–Schur methods for PDE-constrained optimization. Part I: The Krylov–Schur solver. SIAM Journal on Scientific Computing, 27(2):687–713, 2005.

- [6] A Borzı. Multigrid methods for parabolic distributed optimal control problems. Journal of Computational and Applied Mathematics, 157(2):365–382, 2003.

- [7] A Borzi and V Schulz. Multigrid methods for PDE optimization. SIAM review, 51(2):361–395, 2009.

- [8] R H Byrd, F E Curtis, and J Nocedal. An inexact SQP method for equality constrained optimization. SIAM Journal on Optimization, 19(1):351–369, 2008.

- [9] V Dolean, P Jolivet, and F Nataf. An Introduction to Domain Decomposition Methods. Society for Industrial and Applied Mathematics, Philadelphia, PA, 2015.

- [10] R D Falgout, J-M Maldague, I Meyers, J Munar, E Neville, and T Overman. TriMGRIT: An Extension of Multigrid Reduction in Time for Constrained Optimization. In PinT 2020 – 9th Parallel-in-Time Workshop, 2020.

- [11] M J Gander and F Kwok. Schwarz methods for the time-parallel solution of parabolic control problems. In Domain decomposition methods in science and engineering XXII, pages 207–216. Springer, 2016.

- [12] M J Gander, F Kwok, and J Salomon. PARAOPT: A parareal algorithm for optimality systems. SIAM Journal on Scientific Computing, 42(5):A2773–A2802, 2020.

- [13] S Günther, N R Gauger, and J B Schroder. A non-intrusive parallel-in-time approach for simultaneous optimization with unsteady PDEs. Optimization Methods and Software, 34(6):1306–1321, 2019.

- [14] M Heinkenschloss. A time-domain decomposition iterative method for the solution of distributed linear quadratic optimal control problems. Journal of Computational and Applied Mathematics, 173(1):169–198, 2005.

- [15] M Heinkenschloss and D Ridzal. A matrix-free trust-region SQP method for equality constrained optimization. SIAM Journal on Optimization, 24(3):1507–1541, 2014.

- [16] U Langer, O Steinbach, F Tröltzsch, and H Yang. Space-time finite element discretization of parabolic optimal control problems with energy regularization. SIAM Journal on Numerical Analysis, 59(2):675–695, 2021.

- [17] U Langer, O Steinbach, F Tröltzsch, and H Yang. Unstructured space-time finite element methods for optimal control of parabolic equations. SIAM Journal on Scientific Computing, 43(2):A744–A771, 2021.

- [18] R M Lewis and S G Nash. Model problems for the multigrid optimization of systems governed by differential equations. SIAM Journal on Scientific Computing, 26(6):1811–1837, 2005.

- [19] S Lin. Multilevel-in-Time Methods for Optimal Control of PDEs and Training of Recurrent Neural Networks. PhD thesis, Rice University, 2022.

- [20] Y Maday and G Turinici. A parareal in time procedure for the control of partial differential equations. Comptes Rendus Mathematique, 335(4):387–392, 2002.

- [21] M F Murphy, G H Golub, and A J Wathen. A note on preconditioning for indefinite linear systems. SIAM Journal on Scientific Computing, 21(6):1969–1972, 2000.

- [22] R A Nicolaides. Deflation of Conjugate Gradients with Applications to Boundary Value Problems. SIAM Journal on Numerical Analysis, 24(2):355–365, 1987.

- [23] J W Pearson, M Stoll, and A J Wathen. Regularization-robust preconditioners for time-dependent PDE-constrained optimization problems. SIAM Journal on Matrix Analysis and Applications, 33(4):1126–1152, 2012.

- [24] J W Pearson, M Stoll, and A J Wathen. Preconditioners for state-constrained optimal control problems with moreau–yosida penalty function. Numerical Linear Algebra with Applications, 21(1):81–97, 2014.

- [25] T Rees, H S Dollar, and A J Wathen. Optimal solvers for PDE-constrained optimization. SIAM Journal on Scientific Computing, 32(1):271–298, 2010.

- [26] A Schiela and S Ulbrich. Operator preconditioning for a class of inequality constrained optimal control problems. SIAM Journal on Optimization, 24(1):435–466, 2014.

- [27] S Ulbrich. Generalized SQP methods with “parareal” time-domain decomposition for time-dependent PDE-constrained optimization. In Real-time PDE-constrained optimization, pages 145–168. SIAM, 2007.