A Benchmark for Multi-Modal Lidar SLAM with Ground Truth in GNSS-Denied Environments

Abstract

Lidar-based simultaneous localization and mapping (SLAM) approaches have obtained considerable success in autonomous robotic systems. This is in part owing to the high accuracy of robust SLAM algorithms and the emergence of new and lower-cost lidar products. This study benchmarks current state-of-the-art lidar SLAM algorithms with a multi-modal lidar sensor setup showcasing diverse scanning modalities (spinning and solid-state) and sensing technologies, and lidar cameras, mounted on a mobile sensing and computing platform. We extend our previous multi-modal multi-lidar dataset with additional sequences and new sources of ground truth data. Specifically, we propose a new multi-modal multi-lidar SLAM-assisted and ICP-based sensor fusion method for generating ground truth maps. With these maps, we then match real-time pointcloud data using a normal distributions transform (NDT) method to obtain the ground truth with full 6 DOF pose estimation. This novel ground truth data leverages high-resolution spinning and solid-state lidars. We also include new open road sequences with GNSS-RTK data and additional indoor sequences with motion capture (MOCAP) ground truth, complementing the previous forest sequences with MOCAP data. We perform an analysis of the positioning accuracy achieved with ten different SLAM algorithm and lidar combinations. We also report the resource utilization in four different computational platforms and a total of five settings (Intel and Jetson ARM CPUs). Our experimental results show that current state-of-the-art lidar SLAM algorithms perform very differently for different types of sensors. More results, code, and the dataset can be found at: github.com/TIERS/tiers-lidars-dataset-enhanced.

Index Terms:

Autonomous driving, LiDAR SLAM benchmark, solid-state LiDAR, SLAM

I Introduction

Lidar sensors have been adopted as the core perception sensor in many applications, from self-driving cars [1] to unmanned aerial vehicles [2], including forest surveying and industrial digital twins [3]. High-resolution spinning lidars enable a high degree of awareness of the surrounding environment. Denser 3D pointclouds and maps are in increasing demand to support the next wave of ubiquitous autonomous systems as well as more detailed digital twins across industries. However, higher angular resolution comes at increased cost in analog lidars, requiring a higher number of laser beams or more compact electronics and optics. New solid-state and other digital lidars are paving the way to cheaper and more widespread 3D lidar sensors capable of dense environment mapping [4, 5, 6, 7].

So-called solid-state lidars overcome some of the challenges of spinning lidars in terms of cost and resolution, providing more sensing range at significantly lower cost [9], but introduce new limitations such as a relatively small field of view (FoV) [8, 6]. Other limitations that affect traditional approaches to lidar data processing include irregular scanning patterns and increased motion blur.

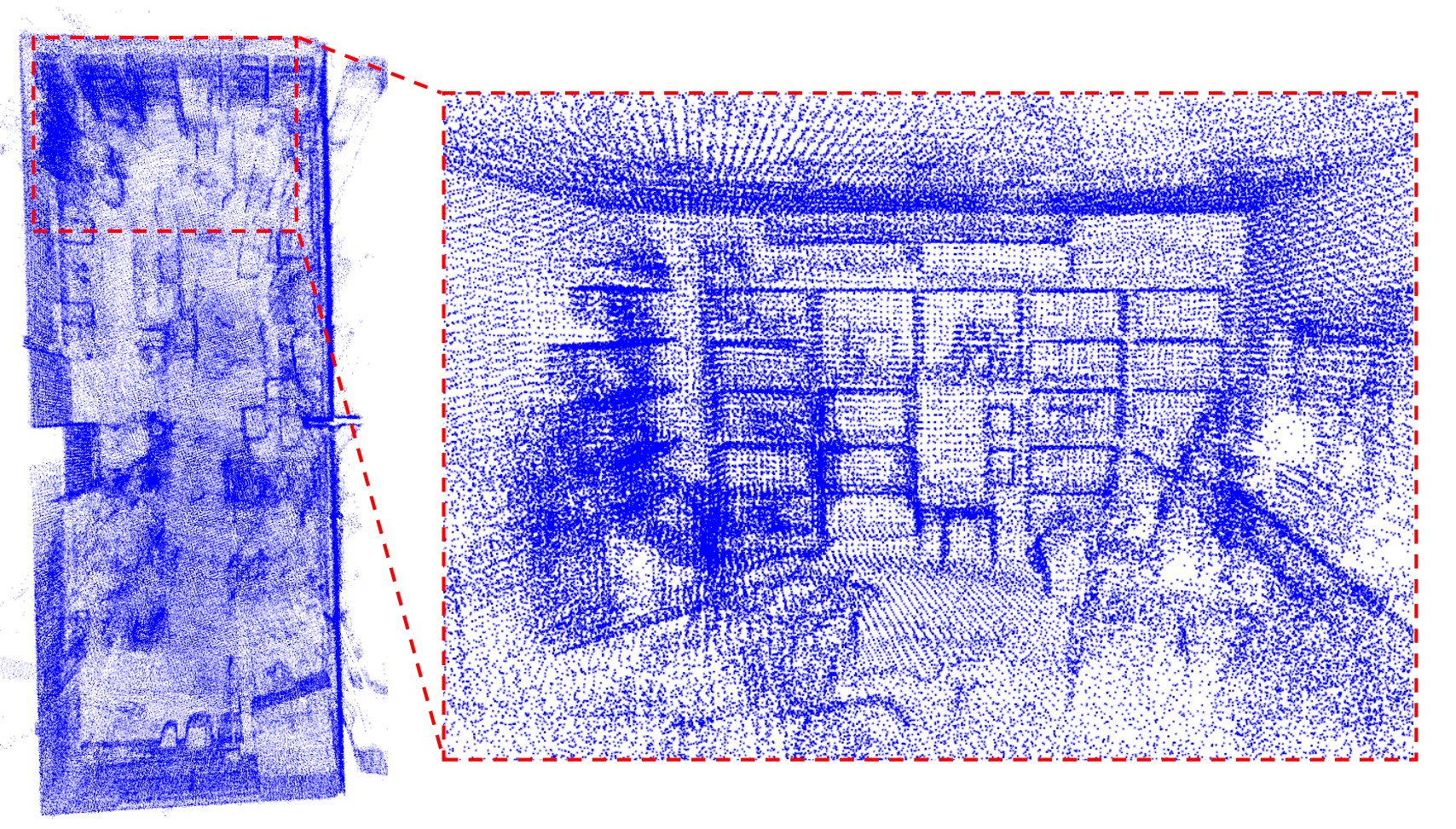

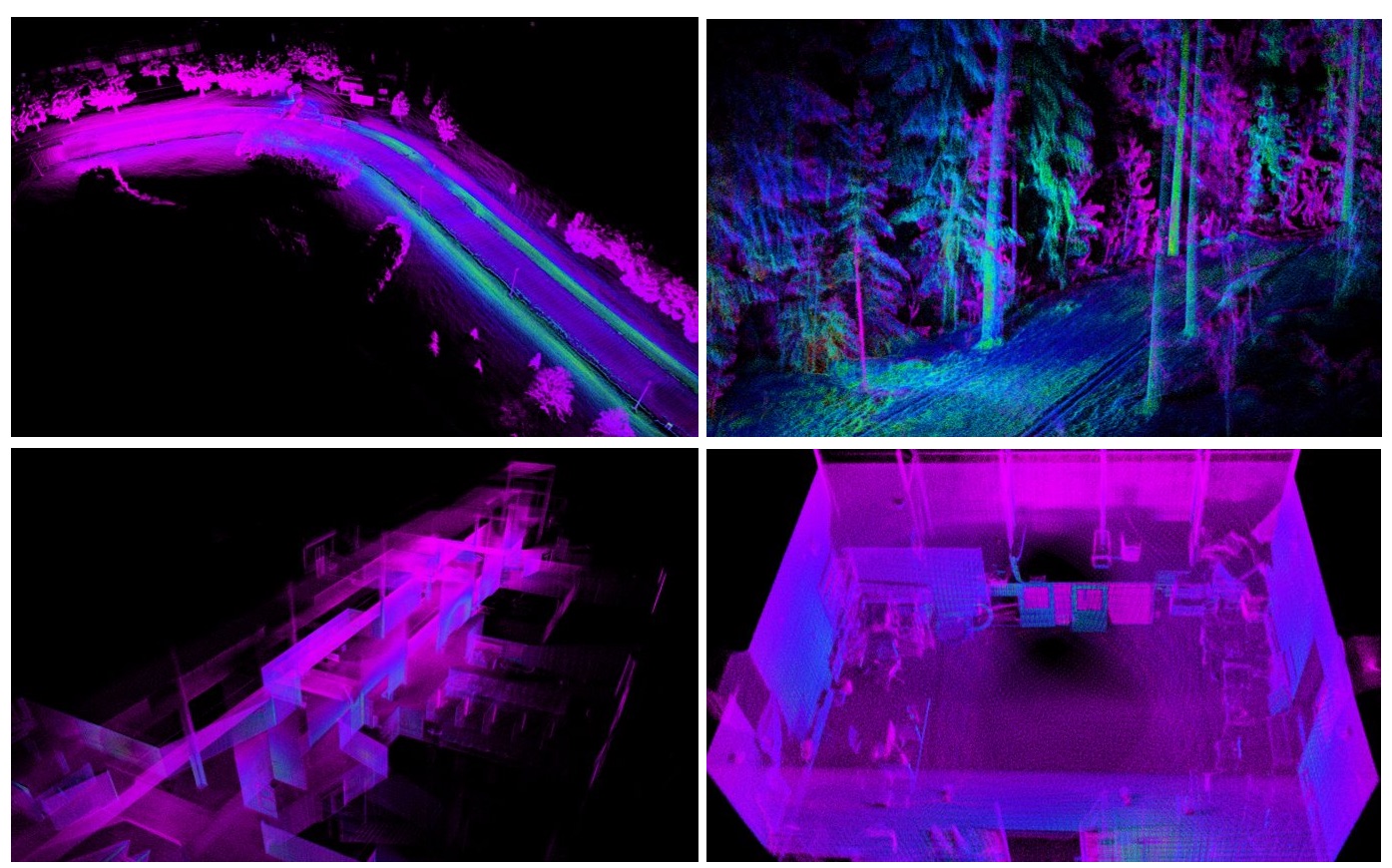

Despite their increasing popularity, few works have benchmarked the performance of both spinning and solid-state lidars in diverse environments, which limits the development of more general-purpose lidar-based SLAM algorithms [9]. To bridge this gap in the literature, we present a benchmark that compares lidars of different modalities (spinning, solid-state) in diverse environments, including indoor offices, long corridors, halls, forests, and open roads. To allow for a more accurate and fair comparison, we introduce a new method for ground truth generation in larger indoor spaces (see Fig. 1(a)). This enhanced ground truth enables a significantly higher degree of quantitative benchmarking and comparison with respect to our previous work [9]. We hope that the extended dataset and ground truth labels, as well as the more detailed data, will provide a performance reference for multi-modal lidar sensors in both structured and unstructured environments to both academia and industry.

In summary, this work evaluates state-of-the-art SLAM algorithms with a multi-modal multi-lidar platform as an extension of our previous work [9]. The main contributions of this work are as follows:

1. a ground truth trajectory generation method for environments where MOCAP or GNSS/RTK are unavailable that leverages the multi-modality of the data acquisition platform and high-resolution sensors;

2. a new dataset with data from 5 different lidar sensors, one lidar camera, and one stereo fisheye camera in a variety of environments as illustrated in Fig. 1(b). Ground truth data is provided for all sequences;

3. the benchmarking of ten state-of-the-art filter-based and optimization-based SLAM methods on our proposed dataset in terms of odometry accuracy, memory consumption, and computing resource consumption. The results indicate the limitations of current SLAM algorithms and potential future research directions.

The structure of the paper is as follows. Section II surveys recent progress in SLAM and existing lidar-based SLAM benchmarks. Section III provides an overview of the configuration of the proposed sensor system and the ground truth generation methodology. Section IV presents the detailed benchmark. Section V concludes the study and suggests future work.

| Sensor | IMU | Type | Channels | FoV | Angular Resolution | Range | Freq. | Points |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Velodyne VLP-16 | N/A | spinning | 16 | 360°×30° | V:2.0°, H:0.4° | 100 m | 10 Hz | 300,000 pts/s |
| Ouster OS1-64 | ICM-20948 | spinning | 64 | 360°×45° | V:0.7°, H:0.18° | 120 m | 10 Hz | 1,310,720 pts/s |
| Ouster OS0-128 | ICM-20948 | spinning | 128 | 360°×90° | V:0.7°, H:0.18° | 50 m | 10 Hz | 2,621,440 pts/s |
| Livox Horizon | BOSCH BMI088 | solid-state | N/A | 81.7°×25.1° | N/A | 260 m | 10 Hz | 240,000 pts/s |
| Livox Avia | BOSCH BMI088 | solid-state | N/A | 70.4°×77.2° | N/A | 450 m | 10 Hz | 240,000 pts/s |
| RealSense L515 | BOSCH BMI085 | lidar camera | N/A | 70°×43°(°) | N/A | 9 m | 30 Hz | - |
| RealSense T265 | BOSCH BMI055 | fisheye cameras | N/A | 163±5° | N/A | N/A | 30 Hz | - |

| Sequence | Description | Ground Truth | Sensor setup |
| --- | --- | --- | --- |
| Forest01-03 | Previous dataset [9] | MOCAP/SLAM | |
| Indoor01-05 | Previous dataset [9] | MOCAP/SLAM | |
| Road01-02 | Previous dataset [9] | SLAM | |
| Indoor06 | Lab space (easy) | MOCAP | |
| Indoor07 | Lab space (hard) | MOCAP | |
| Indoor08 | Classroom space | SLAM+ICP | |
| Indoor09 | Corridor (short) | SLAM+ICP | |
| Indoor10 | Corridor (long) | SLAM+ICP | |
| Indoor11 | Hall (large) | SLAM+ICP | |
| Road03 | Open road | GNSS RTK | |

II Related Works

Owing to high accuracy, versatility, and resilience across environments, 3D lidar SLAM has received much study as a crucial component of robotic and autonomous systems [10]. In this section, we limit the scope to the well-known and well-tested 3D lidar SLAM methods. We also include an overview of the most recent 3D lidar SLAM benchmarks.

II-A 3D Lidar SLAM

The primary types of 3D lidar SLAM algorithms today are lidar-only [11], and loosely-coupled [12] or tightly-coupled [13] with IMU data. Tightly-coupled approaches integrate the lidar and IMU data at an early stage, in opposition to SLAM methods that loosely fuse the lidar and IMU outputs towards the end of their respective processing pipelines.

In terms of lidar-only methods, an early work by Zhang et al. on Lidar Odometry and Mapping (LOAM) introduced, already in 2014, a method that achieves low drift and low computational complexity [14]. Since then, there have been multiple variations of LOAM that enhance its performance. By incorporating ground point segmentation and a loop closure module, LeGO-LOAM is more lightweight, achieving the same accuracy at reduced computational expense and with lower long-term drift [15]. However, lidar-only approaches are mainly limited by a high susceptibility to featureless landscapes [16, 17]. By incorporating IMU data into the state estimation pipeline, SLAM systems naturally become more precise and flexible.

In LIOM [13], the authors proposed a novel tightly-coupled approach with lidar-IMU fusion based on graph optimization, which outperformed the state-of-the-art lidar-only and loosely-coupled methods. Owing to the better performance of tightly-coupled approaches, subsequent studies have focused in this direction. Another practical tightly-coupled method is FAST-LIO [18], which provides computational efficiency and robustness by fusing the feature points with IMU data through an iterated extended Kalman filter. Extending FAST-LIO, FAST-LIO2 [19] integrates a dynamic data structure, the ikd-tree, into the system, which allows for incremental map updates at every step, addressing computational scalability issues while inheriting the tightly-coupled fusion framework from FAST-LIO.

The vast majority of these algorithms function well with spinning lidars. Nonetheless, new approaches are in demand, since new sensors such as solid-state Livox lidars have emerged with novel sensing modalities, smaller FoVs, and irregular sampling patterns [9]. Multiple recent studies have adapted SLAM algorithms to fit these new lidar characteristics. Loam livox [20] is a robust, real-time LOAM algorithm for these types of lidars. LiLi-OM [6] is a tightly-coupled method that jointly minimizes the cost derived from lidar and IMU measurements for both solid-state and conventional lidars.

II-B SLAM benchmarks

There are various multi-sensor datasets available online. We provided a systematic comparison of the popular datasets in Table III of our previous work [9]. Among these datasets, not all include an analytical benchmark of 3D lidar SLAM based on multi-modal lidars. The KITTI benchmark [23] is the most significant one, with capabilities for evaluating several tasks including odometry, SLAM, object detection, and tracking, among others.

III Data Collection

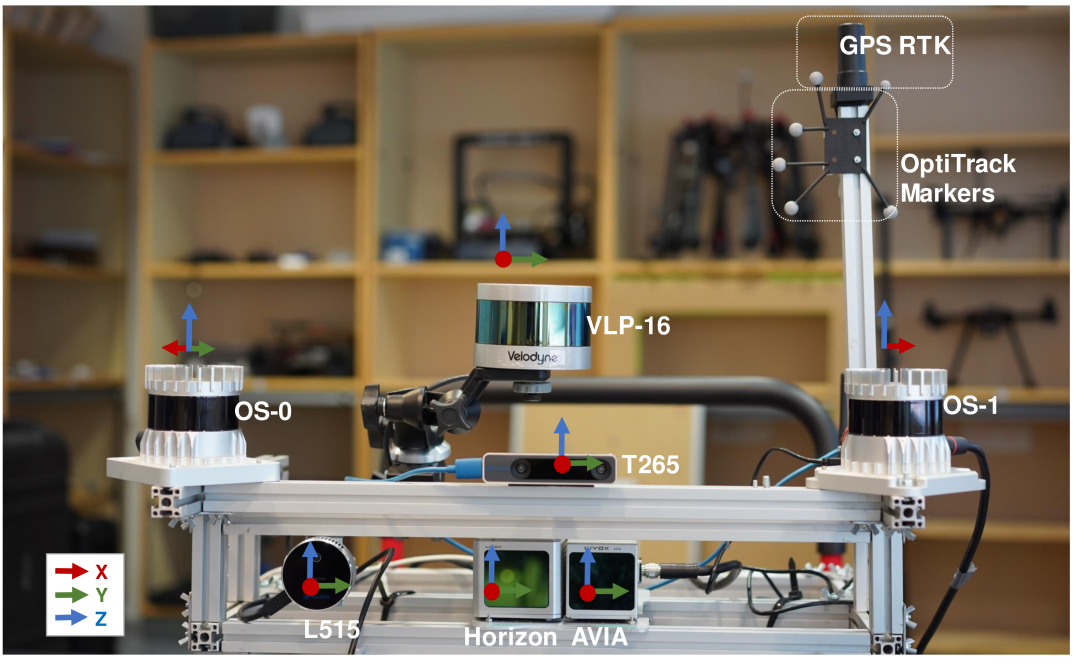

Our data collection platform is shown in Fig. 1(b), and details of sensors are listed in Table I. The platform has been mounted on a mobile wheeled vehicle to adapt to varying environments. In most scenarios, the platform is manually pushed or teleoperated, except for the forest environment where the platform is handheld.

III-A Data Collection Platform

The data collection platform contains various lidar sensors, from traditional spinning lidars with different resolutions to novel solid-state lidars featuring non-repetitive scanning patterns. A lidar camera and a stereo fisheye camera are also included. There are three spinning lidars: a 16-channel Velodyne lidar (VLP-16), a 64-channel Ouster lidar (OS1), and a 128-channel Ouster lidar (OS0). The OS0 and OS1 sensors are mounted on the left and right sides, where the OS1 is turned 45 degrees clockwise and the OS0 is turned 45 degrees anticlockwise. The Velodyne lidar is at the top-most position. Two solid-state lidars, the Horizon and the Avia, are installed in the center of the frame. The Optitrack marker set for the MOCAP-based ground truth and the antenna for the GNSS/RTK ground truth are both fixed on top of the aluminum stick to maximize their visibility and detection range. All sensors are connected through a Gigabit Ethernet router to a computer featuring an Intel i7-10750H processor, 64 GB of DDR4 RAM, and 1 TB of SSD storage. The data collection system, including sensor drivers and online calibration scripts, runs entirely on ROS Melodic under Ubuntu 18.04, owing to the wider variety of ROS-based lidar SLAM methods available for Melodic.

III-B Calibration and Synchronization

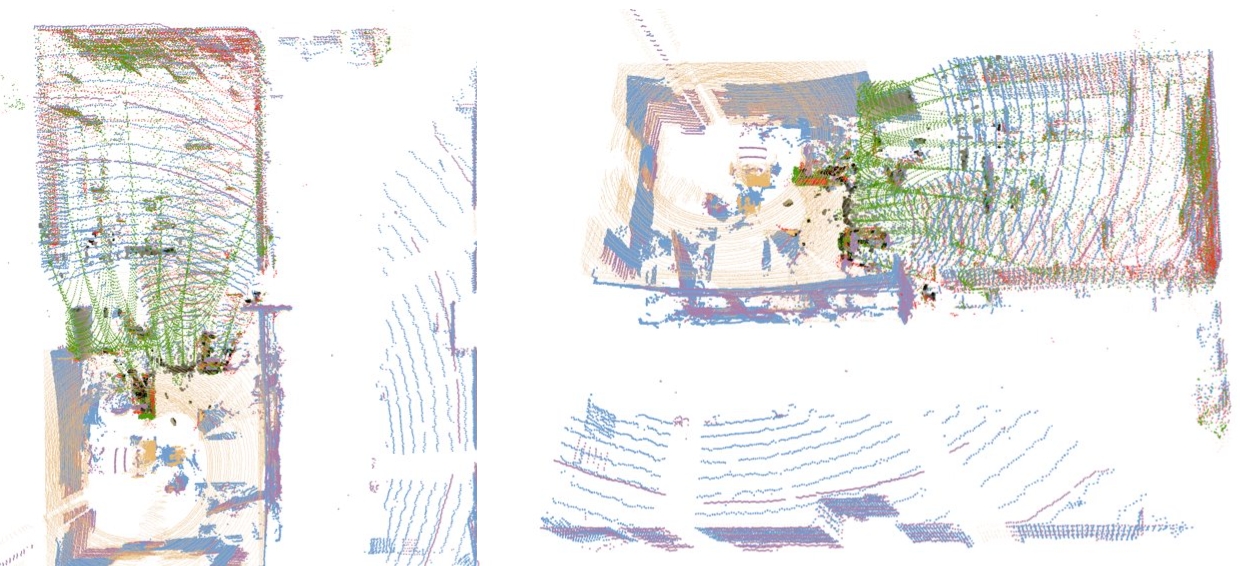

Efficient extrinsic parameter calibration is crucial for multi-sensor platforms, especially for handmade devices where the extrinsic parameters may change due to unstable connections or distortion of the material during transit. As in our previous work [9], we calculated the extrinsic parameters of the sensors before each data collection session. Fig. 2 shows the calibration result of sample lidar data from one of the indoor data sequences.

Different from our previous work [9], where the timestamps of the Ouster and Livox lidars were based on their own clocks, we synchronized all lidar sensors in Ethernet mode via the software-based Precision Time Protocol (PTP) [24]. We compared the orientation estimates from the sensors' built-in IMUs with the SLAM results from the lidars and concluded that the latency of our system is below 5 ms.
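To make the synchronization step more concrete, the following is a minimal sketch of the standard PTP delay request-response arithmetic, which estimates the offset of a sensor clock from four timestamps under the assumption of a symmetric network path. The class and function names are hypothetical and chosen for illustration; the sketch does not reproduce the configuration used on the platform.

```python
from dataclasses import dataclass


@dataclass
class PtpExchange:
    """Four timestamps (in seconds) of one PTP delay request-response exchange."""
    t1: float  # master sends Sync (master clock)
    t2: float  # slave receives Sync (slave clock)
    t3: float  # slave sends Delay_Req (slave clock)
    t4: float  # master receives Delay_Req (master clock)


def clock_offset(x: PtpExchange) -> float:
    """Slave-minus-master clock offset, assuming a symmetric path delay."""
    return ((x.t2 - x.t1) - (x.t4 - x.t3)) / 2.0


def path_delay(x: PtpExchange) -> float:
    """One-way path delay under the same symmetry assumption."""
    return ((x.t2 - x.t1) + (x.t4 - x.t3)) / 2.0


if __name__ == "__main__":
    # Hypothetical exchange: slave clock runs 3 ms ahead, one-way delay is 1 ms.
    exchange = PtpExchange(t1=10.000, t2=10.004, t3=10.010, t4=10.008)
    print(f"offset: {clock_offset(exchange) * 1e3:.3f} ms")
    print(f"delay:  {path_delay(exchange) * 1e3:.3f} ms")
```

Once the offset is known, the slave clock is corrected by subtracting it, so that pointclouds from different lidars share a common time base.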

III-C SLAM-assisted Ground Truth Map

To provide accurate ground truth for large-scale indoor and outdoor environments, where a MOCAP system is unavailable or GNSS/RTK positioning becomes unreliable due to multi-path effects, we propose a SLAM-assisted, solid-state lidar-based ground truth map generation framework.

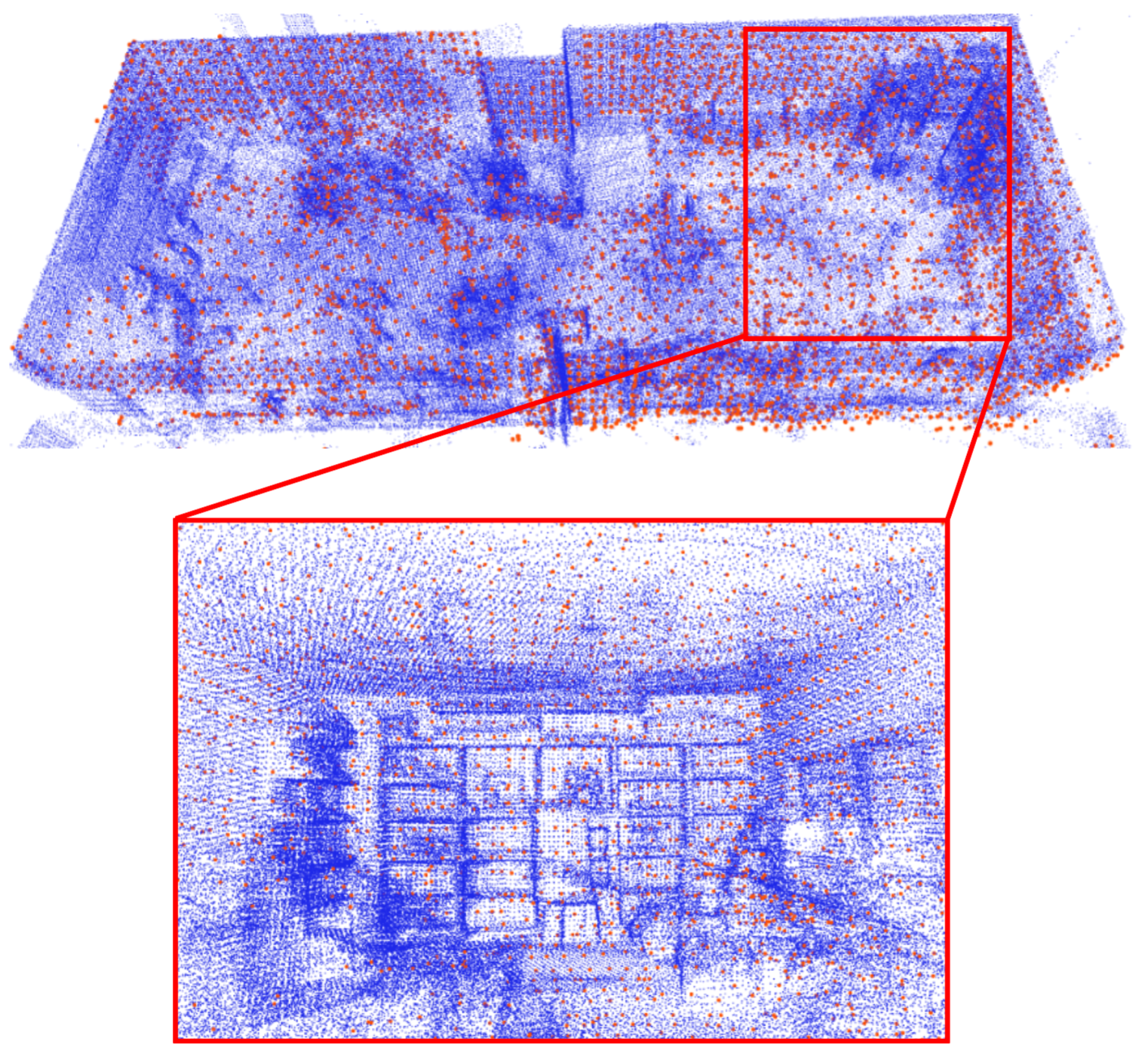

Inspired by the prior map generation method in [25], where a survey-grade 3D imaging laser scanner (Leica BLK360) is utilized to obtain static pointclouds of the target environment, we employ a low-cost solid-state lidar (Livox Avia) and a high-resolution spinning lidar to collect undistorted pointclouds from the environment. According to the Livox Avia datasheet, the range accuracy of the Avia sensor is 2 cm, with a maximum detection range of 450 m. Due to the non-repetitive scanning pattern, the environment coverage of the pointcloud within the FoV increases with time. Therefore, we integrate multiple frames while the platform is stationary to obtain a more detailed, undistorted sampling of the environment. Each integrated pointcloud contains more than 240,000 points. The Livox built-in IMU is used to detect the stationary state of the platform: the platform is considered stationary when the acceleration values are smaller than 0.01 along all axes. After gathering multiple undistorted pointcloud submaps from the target environment, the next step is to match and merge all submaps into a global map with ICP. As the ICP process requires a good initial guess, we employ a high-resolution spinning lidar (OS0) with a 360-degree horizontal FoV to provide an initial pose estimate by running a real-time SLAM algorithm. This process is outlined in Algorithm 1. A dense, high-definition ground truth map is then obtained by denoising the map generated by this algorithm. Fig. 1(a) shows the ground truth map of sequence Indoor08 generated with Algorithm 1.
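The stationary-frame integration described above could be sketched as follows. This is an illustrative sketch rather than the authors' implementation: the function names are hypothetical, the 0.01 threshold is taken from the text without units, and the accelerations are assumed to be gravity-compensated.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Threshold quoted in the text; units and gravity compensation are assumptions.
ACC_THRESHOLD = 0.01


def is_stationary(imu_window: Sequence[Vec3], threshold: float = ACC_THRESHOLD) -> bool:
    """True if every acceleration component in the window stays below the threshold."""
    return bool(imu_window) and all(
        abs(component) < threshold for sample in imu_window for component in sample
    )


def integrate_stationary_frames(
    frames: Sequence[Sequence[Vec3]],
    imu_windows: Sequence[Sequence[Vec3]],
    threshold: float = ACC_THRESHOLD,
) -> List[List[Vec3]]:
    """Accumulate consecutive frames captured at rest into one submap per stop."""
    submaps: List[List[Vec3]] = []
    current: List[Vec3] = []
    for points, imu_window in zip(frames, imu_windows):
        if is_stationary(imu_window, threshold):
            current.extend(points)   # denser coverage with every extra frame
        elif current:
            submaps.append(current)  # platform started moving: close the submap
            current = []
    if current:
        submaps.append(current)
    return submaps


if __name__ == "__main__":
    still = [(0.001, -0.002, 0.0)]
    moving = [(0.2, 0.0, 0.0)]
    frames = [[(0.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)], [(2.0, 0.0, 0.0)], [(3.0, 0.0, 0.0)]]
    submaps = integrate_stationary_frames(frames, [still, still, moving, still])
    print([len(s) for s in submaps])
```

Frames recorded while the platform moves are simply skipped here, since only frames captured at rest are free of motion distortion.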

Let $\mathcal{P}_{s}$ be the pointcloud produced by the spinning lidar, $\mathcal{P}_{l}$ be the pointcloud generated by the solid-state lidar, and $\mathcal{I}$ be the IMU data from the built-in IMU. Our previous work has shown that a high-resolution spinning lidar has the most robust performance in diverse environments. Therefore, LeGO-LOAM [15] is run with a high-resolution spinning lidar (OS0-128) and outputs the estimated pose for each submap.

The cache $\mathcal{C}$ stores the submaps and their associated poses. Let $(\mathcal{S}_{k}, \mathbf{T}_{k})$ be the $k$-th pointcloud and its associated pose in $\mathcal{C}$. The submap $\mathcal{S}_{k}$ is first transformed to the map coordinate frame as $\mathcal{S}_{k}^{m}$ based on the estimated pose $\mathbf{T}_{k}$; GICP is then applied to $\mathcal{S}_{k}^{m}$ to iteratively minimize the Euclidean distance between closest points against the map pointcloud $\mathcal{M}$; finally, $\mathcal{S}_{k}^{m}$ is transformed by the transformation matrix produced by the GICP process and merged into the map $\mathcal{M}$. The resulting map $\mathcal{M}$ is treated as the ground truth map.
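The merge loop described above (transform each submap with its SLAM pose, refine against the current map, then merge) can be illustrated with a simplified sketch. As loudly stated assumptions: the sketch works in 2D, uses plain point-to-point ICP as a stand-in for GICP, and relies on brute-force nearest-neighbor search; all names are hypothetical and it is not the authors' Algorithm 1.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (tx, ty, yaw) in the map frame


def transform(points: Sequence[Point], pose: Pose) -> List[Point]:
    """Apply a 2D rigid transform to every point."""
    tx, ty, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def nearest(p: Point, cloud: Sequence[Point]) -> Point:
    """Brute-force closest point (a k-d tree would be used in practice)."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def icp_step(src: Sequence[Point], dst: Sequence[Point]) -> Pose:
    """One ICP iteration: match closest points, then solve the best rigid fit."""
    matches = [nearest(p, dst) for p in src]
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    mx, my = sum(q[0] for q in matches) / n, sum(q[1] for q in matches) / n
    # Closed-form 2D rotation from the centered correspondences.
    cross = sum((p[0] - sx) * (q[1] - my) - (p[1] - sy) * (q[0] - mx)
                for p, q in zip(src, matches))
    dot = sum((p[0] - sx) * (q[0] - mx) + (p[1] - sy) * (q[1] - my)
              for p, q in zip(src, matches))
    yaw = math.atan2(cross, dot)
    c, s = math.cos(yaw), math.sin(yaw)
    return (mx - (c * sx - s * sy), my - (s * sx + c * sy), yaw)


def refine(src: Sequence[Point], dst: Sequence[Point], iterations: int = 20) -> List[Point]:
    """Iteratively align src to dst, starting from the SLAM-based initial guess."""
    aligned = list(src)
    for _ in range(iterations):
        aligned = transform(aligned, icp_step(aligned, dst))
    return aligned


def build_map(cache: Sequence[Tuple[Sequence[Point], Pose]], iterations: int = 20) -> List[Point]:
    """Merge cached (submap, pose) pairs into one global map."""
    global_map: List[Point] = []
    for submap, pose in cache:
        in_map = transform(submap, pose)  # initial guess from SLAM
        if global_map:
            in_map = refine(in_map, global_map, iterations)
        global_map.extend(in_map)
    return global_map


if __name__ == "__main__":
    shape = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (0.0, 1.0), (0.0, 2.0)]
    # The second pose is slightly wrong on purpose; the refinement should correct it.
    merged = build_map([(shape, (0.0, 0.0, 0.0)), (shape, (0.05, -0.03, 0.02))])
    print(max(math.dist(a, b) for a, b in zip(merged[:6], merged[6:])))
```

The sketch also shows why a good initial guess matters: closest-point matching only yields correct correspondences when the initial pose error is small compared with the spacing between points.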

After the ground truth map is generated, we employ the normal distributions transform (NDT) method in [26] to match the real-time pointcloud data from the spinning lidar against the high-resolution (HR) map, as Figure 4 shows, to obtain the platform position in the ground truth map. The matching result from the NDT localizer is treated as the ground truth.

| Sequence | FLIO_OS0 | FLIO_OS1 | FLIO_Velo | FLIO_Avia | FLIO_Hori | LLOM_Hori | LLOMR_OS1 | LIOL_Hori | LVXM_Hori | LEGO_Velo |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Indoor06 | 0.015 / 0.006 | 0.032 / 0.011 | N/A | 0.205 / 0.093 | 0.895 / 0.447 | N/A | 0.882 / 0.326 | N/A | N/A | 0.312 / 0.048 |
| Indoor07 | 0.022 / 0.007 | 0.025 / 0.013 | 0.072 / 0.031 | N/A | N/A | N/A | N/A | N/A | N/A | 0.301 / 0.081 |
| Indoor08 | 0.048 / 0.030 | 0.042 / 0.018 | 0.093 / 0.043 | N/A | N/A | N/A | N/A | N/A | N/A | 0.361 / 0.100 |
| Indoor09 | 0.188 / 0.099 | N/A | 0.472 / 0.220 | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| Indoor10 | 0.197 / 0.072 | 0.189 / 0.074 | 0.698 / 0.474 | 0.968 / 0.685 | 0.322 / 0.172 | 1.122 / 0.404 | 1.713 / 0.300 | 0.641 / 0.469 | N/A | 0.930 / 0.901 |
| Indoor11 | 0.584 / 0.080 | 0.105 / 0.041 | 0.911 / 0.565 | 0.196 / 0.098 | 0.854 / 0.916 | 0.1.097 / 0.0.45 | 1.509 / 0.379 | N/A | N/A | N/A |
| Road03 | 0.123 / 0.032 | 0.095 / 0.037 | 1.001 / 0.512 | 0.211 / 0.033 | 0.351 / 0.043 | 0.603 / 0.195 | N/A | 0.103 / 0.058 | 0.706 / 0.396 | 0.2464 / 0.063 |
| Forest01 | 0.138 / 0.054 | 0.146 / 0.087 | N/A | 0.142 / 0.074 | 0.125 / 0.062 | 0.116 / 0.053 | 0.218 / 0.110 | 0.054 / 0.033 | 0.083 / 0.041 | 0.064 / 0.032 |
| Forest02 | 0.127 / 0.065 | 0.121 / 0.069 | N/A | 0.211 / 0.077 | 0.348 / 0.077 | 0.612 / 0.198 | N/A | 0.125 / 0.073 | 0.727 / 0.414 | 0.275 / 0.077 |

Values are reported as (CPU utilization (%), RAM utilization (MB), pose publication rate (Hz)).

| Platform | FLIO_OS0 | FLIO_OS1 | FLIO_Velo | FLIO_Avia | FLIO_Hori | LLOM_Hori | LLOMR_OS1 | LIOL_Hori | LVXM_Hori | LEGO_Velo |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Intel PC | (79.4, 384.5, 74.0) | (73.7, 437.4, 67.5) | (69.9, 385.2, 98.6) | (65.0, 423.8, 98.3) | (65.7, 423.8, 103.7) | (126.2, 461.6, 14.5) | (112.3, 281.5, 25.8) | (186.1, 508.7, 19.1) | (135.4, 713.7, 14.7) | (28.7, 455.4, 9.8) |
| AGX MAX | (40.9, 385.3, 13.6) | (54.5, 397.5, 21.2) | (44.4, 369.7, 29.1) | (40.8, 391.5, 32.3) | (37.6, 408.4, 34.7) | (128.5, 545.4, 9.1) | (70.8, 282.3, 9.6) | (247.2, 590.3, 9.6) | (162.3, 619.0, 10.5) | (42.4, 227.8, 7.0) |
| AGX 30 W | (55.1, 398.8, 13.2) | (73.9, 409.2, 15.4) | (58.3, 367.6, 21.4) | (47.4, 413.4, 24.5) | (50.5, 387.9, 26.8) | (168.5, 658.5, 1.5) | (107.1, 272.2, 6.5) | (188.1, 846.0, 4.1) | (185.86, 555.81, 5.0) | (62.8, 233.4, 3.5) |
| UP Xtreme | (90.9, 401.8, 47.3) | (125.9, 416.2, 58.0) | (110.5, 380.5, 89.6) | (113.2, 401.2, 90.7) | (109.7, 422.8, 91.0) | (130.1, 461.1, 12.8) | (109.0, 253.5, 13.6) | (298.2, 571.8, 14.0) | (189.6, 610.4, 7.9) | (39.7, 256.6, 9.1) |
| NX 15 W | (53.7, 371.1, 14.3) | (73.3, 360.4, 14.2) | (57, 331.5, 19.5) | (51.2, 344.8, 21.9) | (47.5, 370.7, 23.4) | N/A | N/A | (239.0, 750.5, 4.54) | (198.0, 456.7, 5.5) | (36.9, 331.4, 3.7) |

IV SLAM benchmark

In this study, we evaluated popular 3D Lidar SLAM algorithms in multiple data sequences of various scenarios, including indoor, outdoor, and forest environments.

IV-A Ground Truth Evaluation

The evaluation of the accuracy of the proposed ground truth prior map method is challenging for some scenes in the dataset, as neither GNSS nor MOCAP systems are available in indoor environments such as long corridors. Figure 5 (a), (b), (c) shows the standard deviations of the ground truth generated by the proposed method during the first 10 seconds of sequence Indoor09, when the device is stationary. The standard deviations along the $x$, $y$, and $z$ axes are 2.2 cm, 4.1 cm, and 2.5 cm, respectively, or about 5.3 cm overall when combined as the root sum of squares. However, evaluating localization performance when the device is in motion is more difficult. To better understand the order of magnitude of the accuracy, we compare the NDT-based ground truth values with the MOCAP-based ground truth values in sequence Indoor06. The results in Fig. 5 (d) show that the maximum difference does not exceed 5 cm.

IV-B Lidar Odometry Benchmarking

Different types of SLAM algorithms are selected and tested in our experiments. The lidar-only algorithms LeGO-LOAM (LEGO, https://github.com/RobustFieldAutonomyLab/LeGO-LOAM) and Livox-Mapping (LVXM, https://github.com/Livox-SDK/livox_mapping) are applied to data from the VLP-16 and the Horizon, respectively. FAST-LIO (FLIO, https://github.com/hku-mars/FAST_LIO) [27], a tightly-coupled method based on an iterated extended Kalman filter, is applied to both spinning and solid-state lidars with built-in IMUs. LiLi-OM (https://github.com/KIT-ISAS/lili-om) [28], a tightly-coupled lidar-inertial SLAM system based on sliding window optimization, is tested with the OS1 and the Horizon. Furthermore, LIO-LIVOX (LIOL, https://github.com/Livox-SDK/LIO-Livox), a tightly-coupled method featuring sliding window optimization developed for the Horizon lidar, has also been tested on Horizon lidar data.

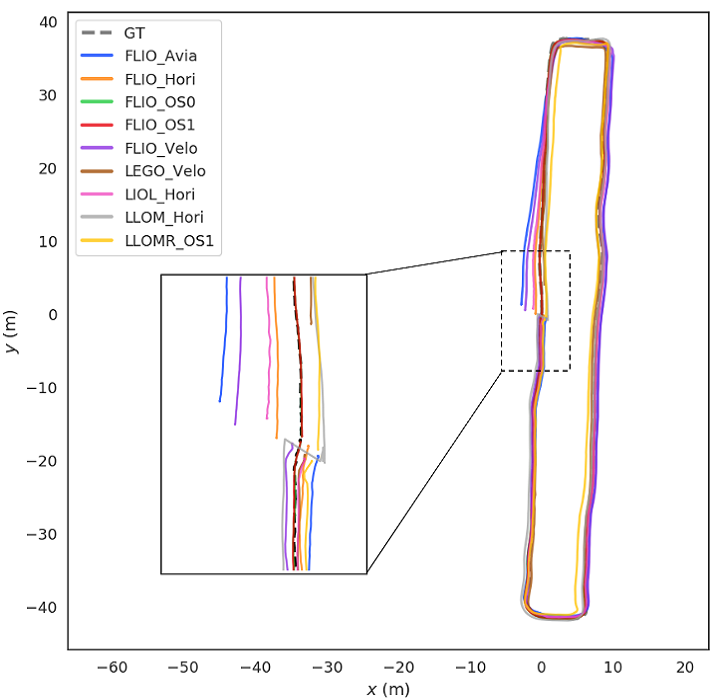

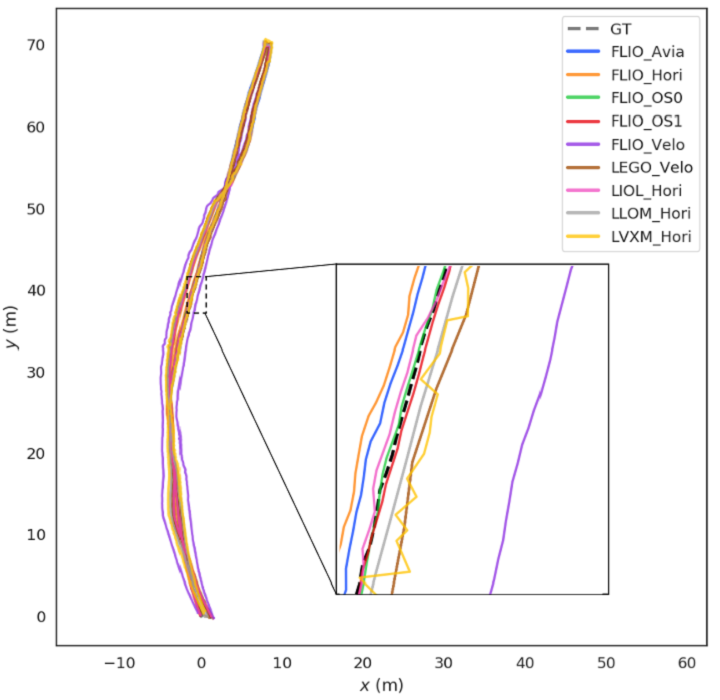

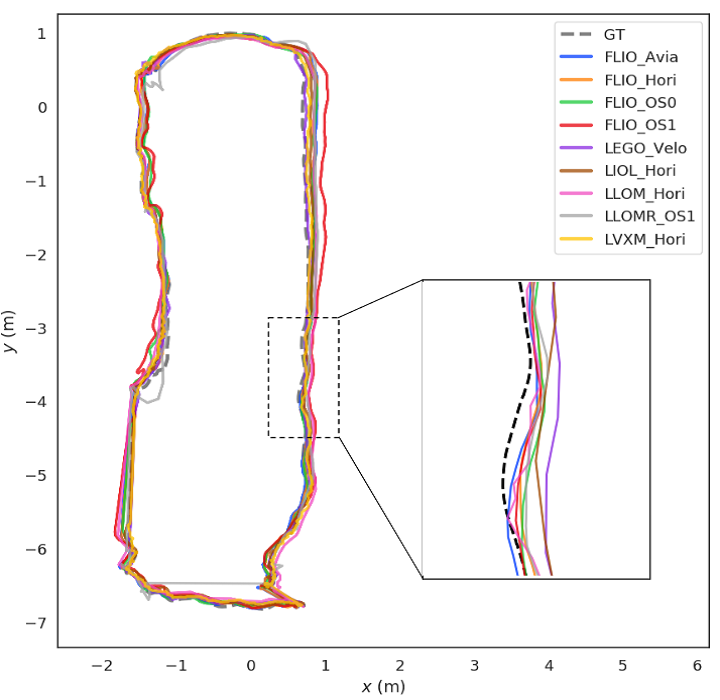

We provide a quantitative analysis of the odometry error based on the ground truth in Table III. To compare the trajectories in the same coordinate frame, we treat the OS0 frame as the reference frame and transform all trajectories generated by the selected SLAM methods into it. The absolute pose error (APE) [29] is employed as the core evaluation metric. We calculate the error of each trajectory with the open-source EVO toolset (https://github.com/MichaelGrupp/evo.git).
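As an illustration of the metric, the translational part of the APE can be sketched in a few lines. This is a simplified stand-in under stated assumptions and not the EVO implementation: the function name is hypothetical, the two trajectories are assumed to be already time-associated and expressed in the same reference frame, and the mean and standard deviation are just one common way to summarize the per-pose errors.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def translational_ape(gt: Sequence[Vec3], est: Sequence[Vec3]) -> Tuple[float, float]:
    """Mean and standard deviation of the per-pose position error.

    Assumes gt[i] and est[i] refer to the same timestamp and the same frame.
    """
    if not gt or len(gt) != len(est):
        raise ValueError("trajectories must be non-empty and of equal length")
    errors = [math.dist(g, e) for g, e in zip(gt, est)]
    mean = sum(errors) / len(errors)
    std = math.sqrt(sum((e - mean) ** 2 for e in errors) / len(errors))
    return mean, std


if __name__ == "__main__":
    ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    estimate = [(0.0, 0.0, 0.0), (1.0, 0.3, 0.4), (2.0, 1.0, 0.0)]
    mean, std = translational_ape(ground_truth, estimate)
    print(f"APE mean: {mean:.3f} m, std: {std:.3f} m")
```

In practice, timestamp association between the estimated and ground truth poses has to be carried out before such a comparison is meaningful.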

From the results, we can conclude that FAST-LIO with the high-resolution spinning lidars OS0 and OS1 has the most robust performance, completing the trajectories of the different sequences with promising accuracy. In particular, for sequence Indoor09, which showcases a long corridor, all other methods failed, whereas FAST-LIO with a high-resolution lidar still completed the trajectory. With appropriate algorithms, solid-state lidar-based SLAM systems such as LIOL_Hori perform as well as or even better than rotating lidars in outdoor environments, but significantly worse in indoor environments. For the open road sequence Road03, all SLAM methods perform well, and the trajectories are completed without major disruptions. For the indoor sequence Indoor06, Avia-based and Horizon-based FLIO are able to reconstruct the sensor trajectory, but significant drift accumulates. In all of these sequences, all the methods applied to spinning lidars perform satisfactorily. This result can be expected, as they have a full view of the environment, which has a clear geometry. For the sequence Indoor10, which also showcases a long corridor, almost all methods can again reconstruct the complete trajectory. The best performance comes from OS0-FLIO and OS1-FLIO, with correct alignment between the first and last positions. We hypothesize that this is due to the high channel counts of the OS0 and OS1 (128 and 64, respectively), which lead to lower accumulated angular drift.

In addition to the quantitative trajectory analysis, we visualize trajectories generated by selected methods in 3 representative environments (indoors, outdoors, forest) in Fig. 6. Full reconstructed paths are available in the dataset repository.

IV-C Run-time evaluation across certain computing platforms

We conducted this experiment on 4 different platforms. First, a Lenovo Legion Y7000P with 16 GB RAM, a 6-core Intel i5-9300H (2.40 GHz) and an Nvidia GTX 1660Ti (1536 CUDA cores, 6 GB VRAM). Then, the Jetson Xavier AGX, a popular computing platform for mobile robots, has an 8-core ARMv8.2 64-bit CPU (2.25 GHz), 16 GB RAM and 512-core Volta GPU. From its 7 power modes, we chose MAX and 30 W (6 core only) modes. The Nvidia Xavier NX is also a common embedded computing platform with a 6-core ARM v8.2 64-bit CPU, 8 GB RAM, and 384-core Volta GPU with 48 Tensor cores. For the NX, we choose the 15 W power mode (all 6 cores). Finally, the UP Xtreme board features an 8-core Intel i7-8665UE (1.70 GHz) and 16 GB RAM.

These platforms all run ROS Melodic on Ubuntu 18.04. The CPU and memory utilization is measured with a ROS resource monitor tool (https://github.com/alspitz/cpu_monitor). Additionally, to minimize differences in the operating environment, we unified the dependencies used by each SLAM system to the same versions, and each hyperparameter of the SLAM systems is configured with its default value. The results are shown in Table IV.

The memory utilization of each selected SLAM approach is roughly equivalent across the two processor architectures. However, the CPU utilization of the same SLAM algorithm is generally higher on the Intel processors than on the ARM platforms, and the highest publishing frequency is also obtained on Intel. LeGO-LOAM has the lowest CPU utilization, but its accuracy is towards the low end (see Table III) and it has a very low pose publishing frequency. FAST-LIO performs well, especially on embedded computing platforms, with good accuracy, low resource utilization, and high pose publishing frequency. In contrast, LIO-LIVOX has the highest CPU utilization due to the computational complexity of the frame-to-model registration method applied to estimate the pose.
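For reference, a pose publication rate like the one reported in Table IV can be derived from the timestamps of the published poses. The following is a minimal sketch with a hypothetical function name; it assumes sorted timestamps in seconds and is not the measurement script used in the experiments.

```python
from typing import Sequence


def publication_rate_hz(stamps: Sequence[float]) -> float:
    """Average message rate from sorted message timestamps in seconds."""
    if len(stamps) < 2:
        raise ValueError("need at least two timestamps")
    duration = stamps[-1] - stamps[0]
    if duration <= 0.0:
        raise ValueError("timestamps must span a positive duration")
    # n messages delimit n - 1 publication intervals.
    return (len(stamps) - 1) / duration


if __name__ == "__main__":
    pose_stamps = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
    print(f"{publication_rate_hz(pose_stamps):.1f} Hz")
```

Comparing this rate against the 10 Hz frame rate of the lidars in Table I gives a rough indication of whether a method keeps up with the incoming data on a given platform.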

A final takeaway is in the generalization of the studied methods. Many state-of-the-art methods are only applicable to a single lidar modality. In addition, those that have higher flexibility (e.g., FLIO) still lack the ability to support a point-cloud resulting from the fusion of both types of lidars.

V Conclusion

In this paper, we provide lidar datasets covering the characteristics of various environments (indoor, outdoor, forest), and systematically evaluate 5 open-source SLAM algorithms in terms of lidar odometry accuracy and computational resource consumption. The experiments cover 9 sequences across 4 computing platforms. The inclusion of the Nvidia Jetson Xavier platforms provides a further reference for the application of various SLAM algorithms on computationally resource-constrained devices such as drones. Overall, we found that in both indoor and outdoor environments, the spinning lidar-based FLIO exhibited good performance with low resource consumption, which we believe is due to the ability of the spinning lidar to obtain a full view of the environment. However, in the forest environment, the LIOL algorithm based on solid-state lidar has the best performance in terms of accuracy and mapping quality, although it has the highest CPU utilization due to the sliding window optimization.

Finally, we aim to further extend our dataset to provide more refined and difficult sequences and open source it in the future. In this paper, our benchmark tests only focus on SLAM algorithms based on spinning lidar and solid-state lidar. In the future, we will add benchmark tests based on cameras and even SLAM algorithms based on multiple sensor fusions.

Acknowledgment

This research work is supported by the Academy of Finland’s AeroPolis project (Grant 348480).

References

- [1] Q. Li, J. P. Queralta, T. N. Gia, Z. Zou, and T. Westerlund, “Multi-sensor fusion for navigation and mapping in autonomous vehicles: Accurate localization in urban environments,” Unmanned Systems, vol. 8, no. 03, pp. 229–237, 2020.

- [2] N. Varney, V. K. Asari, and Q. Graehling, “Dales: a large-scale aerial lidar data set for semantic segmentation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2020, pp. 186–187.

- [3] J. Yang, Z. Kang, S. Cheng, Z. Yang, and P. H. Akwensi, “An individual tree segmentation method based on watershed algorithm and three-dimensional spatial distribution analysis from airborne lidar point clouds,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 13, pp. 1055–1067, 2020.

- [4] D. Van Nam and K. Gon-Woo, “Solid-state lidar based-slam: A concise review and application,” in 2021 IEEE International Conference on Big Data and Smart Computing (BigComp). IEEE, 2021, pp. 302–305.

- [5] L. Qingqing, Y. Xianjia, J. P. Queralta, and T. Westerlund, “Adaptive lidar scan frame integration: Tracking known mavs in 3d point clouds,” in 2021 20th International Conference on Advanced Robotics (ICAR). IEEE, 2021, pp. 1079–1086.

- [6] K. Li, M. Li, and U. D. Hanebeck, “Towards high-performance solid-state-lidar-inertial odometry and mapping,” IEEE Robotics and Automation Letters, vol. 6, no. 3, pp. 5167–5174, 2021.

- [7] J. P. Queralta, L. Qingqing, F. Schiano, and T. Westerlund, “Vio-uwb-based collaborative localization and dense scene reconstruction within heterogeneous multi-robot systems,” arXiv preprint arXiv:2011.00830, 2020.

- [8] J. Lin and F. Zhang, “Loam livox: A fast, robust, high-precision lidar odometry and mapping package for lidars of small fov,” in 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020, pp. 3126–3131.

- [9] Q. Li, X. Yu, J. P. Queralta, and T. Westerlund, “Multi-modal lidar dataset for benchmarking general-purpose localization and mapping algorithms,” arXiv preprint arXiv:2203.03454, 2022.

- [10] C. Cadena, L. Carlone, H. Carrillo, Y. Latif, D. Scaramuzza, J. Neira, I. Reid, and J. J. Leonard, “Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age,” IEEE Transactions on robotics, vol. 32, no. 6, pp. 1309–1332, 2016.

- [11] D. Rozenberszki and A. L. Majdik, “Lol: Lidar-only odometry and localization in 3d point cloud maps,” in 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020, pp. 4379–4385.

- [12] W. Zhen, S. Zeng, and S. Scherer, “Robust localization and localizability estimation with a rotating laser scanner,” in 2017 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2017, pp. 6240–6245.

- [13] H. Ye, Y. Chen, and M. Liu, “Tightly coupled 3d lidar inertial odometry and mapping,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 3144–3150.

- [14] J. Zhang and S. Singh, “Loam: Lidar odometry and mapping in real-time.” in Robotics: Science and Systems, vol. 2, no. 9, 2014.

- [15] T. Shan and B. Englot, “Lego-loam: Lightweight and ground-optimized lidar odometry and mapping on variable terrain,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 4758–4765.

- [16] Q. Li, P. Nevalainen, J. Peña Queralta, J. Heikkonen, and T. Westerlund, “Localization in unstructured environments: Towards autonomous robots in forests with delaunay triangulation,” Remote Sensing, vol. 12, no. 11, p. 1870, 2020.

- [17] P. Nevalainen, P. Movahedi, J. P. Queralta, T. Westerlund, and J. Heikkonen, “Long-term autonomy in forest environment using self-corrective slam,” in New Developments and Environmental Applications of Drones. Springer, 2022, pp. 83–107.

- [18] W. Xu and F. Zhang, “Fast-lio: A fast, robust lidar-inertial odometry package by tightly-coupled iterated kalman filter,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 3317–3324, 2021.

- [19] W. Xu, Y. Cai, D. He, J. Lin, and F. Zhang, “Fast-lio2: Fast direct lidar-inertial odometry,” IEEE Transactions on Robotics, 2022.

- [20] J. Lin and F. Zhang, “Loam livox: A fast, robust, high-precision lidar odometry and mapping package for lidars of small fov,” in 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2020, pp. 3126–3131.

- [21] J. Lin and F. Zhang, “R3live: A robust, real-time, rgb-colored, lidar-inertial-visual tightly-coupled state estimation and mapping package,” in 2022 International Conference on Robotics and Automation (ICRA). IEEE, 2022, pp. 10672–10678.

- [22] T.-M. Nguyen, M. Cao, S. Yuan, Y. Lyu, T. H. Nguyen, and L. Xie, “Viral-fusion: A visual-inertial-ranging-lidar sensor fusion approach,” IEEE Transactions on Robotics, vol. 38, no. 2, pp. 958–977, 2021.

- [23] A. Geiger, P. Lenz, C. Stiller, and R. Urtasun, “Vision meets robotics: The kitti dataset,” The International Journal of Robotics Research, vol. 32, no. 11, pp. 1231–1237, 2013.

- [24] M. Lixia, A. Benigni, A. Flammini, C. Muscas, F. Ponci, and A. Monti, “A software-only ptp synchronization for power system state estimation with pmus,” IEEE Transactions on Instrumentation and Measurement, vol. 61, no. 5, pp. 1476–1485, 2012.

- [25] M. Ramezani, Y. Wang, M. Camurri, D. Wisth, M. Mattamala, and M. Fallon, “The newer college dataset: Handheld lidar, inertial and vision with ground truth,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 4353–4360.

- [26] P. Biber and W. Straßer, “The normal distributions transform: A new approach to laser scan matching,” in Proceedings 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No. 03CH37453), vol. 3. IEEE, 2003, pp. 2743–2748.

- [27] W. Xu and F. Zhang, “Fast-lio: A fast, robust lidar-inertial odometry package by tightly-coupled iterated kalman filter,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 3317–3324, 2021.

- [28] K. Li, M. Li, and U. D. Hanebeck, “Towards high-performance solid-state-lidar-inertial odometry and mapping,” IEEE Robotics and Automation Letters, vol. 6, no. 3, pp. 5167–5174, 2021.

- [29] J. Sturm, N. Engelhard, F. Endres, W. Burgard, and D. Cremers, “A benchmark for the evaluation of rgb-d slam systems,” in 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2012, pp. 573–580.