A binary-activation, multi-level weight RNN and training algorithm for ADC-/DAC-free and noise-resilient processing-in-memory inference with eNVM

Abstract.

We propose a new algorithm for training neural networks with binary activations and multi-level weights, which enables efficient processing-in-memory circuits with embedded nonvolatile memories (eNVM). Binary activations obviate costly DACs and ADCs. Multi-level weights leverage multi-level eNVM cells. Compared to existing algorithms, our method not only works for feed-forward networks (e.g., fully-connected and convolutional), but also achieves higher accuracy and noise resilience for recurrent networks. In particular, we present an RNN-based trigger-word detection PIM accelerator, with detailed hardware noise models and circuit co-design techniques, and validate our algorithm’s high inference accuracy and robustness against a variety of real hardware non-idealities.

1. Introduction

Processing-in-memory (PIM) architectures for hardware neural network (NN) inference have gained increasing traction as they solve the memory bottleneck of traditional von Neumann architectures (Shafiee et al., 2016). While PIM architectures apply to different types of memories, including SRAM and eDRAM, it is especially advantageous to use embedded non-volatile memories (eNVM), due to their higher storage density and multi-level-cell (MLC) capability (Sheu et al., 2011; Bedeschi et al., 2008; Ma et al., 2019). Moreover, their non-volatility and power efficiency are especially well suited for inference tasks that require relatively fixed NN parameters. PIM with eNVMs not only avoids high-energy, long-latency, off-chip DRAM accesses by densely storing all NN parameters on-chip, it also minimizes inefficient on-chip data movement and intermediate data generation by embedding critical multiplication and accumulation (MAC) computations within the memory arrays.

The resulting highly-efficient MAC computations within the memory arrays are, however, analog in nature, which raises some issues. First, analog computations are sensitive to noise from the memory devices and circuits. Luckily, inherent noise resilience of NN models ought to ameliorate this concern. Second and more importantly, typical NNs use high-precision neuron activations that require DACs and ADCs in order to feed inputs to and resolve MAC outputs from the memory arrays, respectively. These circuit blocks introduce significant area, power, and latency overheads. For example, the die photo in (Zhang et al., 2016) shows the area of 5-bit input DACs consume more than one third of the area of the SRAM PIM array. This translates to even greater relative overhead for an eNVM-based PIM. Thorough design space exploration of a ReRAM PIM architecture in (Shafiee et al., 2016) shows the optimal configuration is to share one 8-bit ADC across all 128 columns of a 128x128 memristor array. Yet, this single ADC still occupies 48 times the area and consumes 6.7 times the energy of the entire memristor array. In fact, given this high cost of ADCs and DACs, many PIM designs resort to feeding and/or resolving activations one bit at a time in a sequential fashion, which then results in large latency penalties (Shafiee et al., 2016; Song et al., 2017). Either way, the high overhead introduced by ADCs and DACs defeats the original goal of attaining speed, power, and area efficiency by using PIM, which has recently drawn wide attention across the device, circuit, and algorithm communities (Ward-Foxton, 2020; Kendall et al., 2020; Xiao et al., 2020). Thirdly, weights of conventional NNs usually require higher resolution (e.g., 8 bits) than are available in typical MLCs (e.g., 2 or 3 bits), which requires each weight to be across multiple memory cells and further degrades area efficiency.

Reducing bit precision of both activations and weights can mitigate DAC and ADC overhead and reduce the number of cells needed for each weight. Hence, some PIM designs implement binary neural networks (BNN), with both binary weights and binary activations, obviating DACs and ADCs entirely, and only needing one single-level cell (SLC) per weight (Khwa et al., 2018). However, BNNs are not optimal for two reasons. First, the most popular BNN training algorithms use the straight-through estimator (STE) to get around BNN’s indifferentiability problem during backpropagation (Bengio et al., 2013; Hubara et al., 2017; Rastegari et al., 2016). However, as we will show in Sections 4.1 and 4.2, STE for binarizing activations is effective for training feedforward NNs, such as fully-connected (FC) and convolutional NNs (CNN), but works poorly for recurrent NNs (RNN). Second, binary weights in BNNs are too stringent to maintain high inference accuracy and do not take full advantage of the MLC capabilities of eNVMs.

This paper proposes an ADC-/DAC-free PIM architecture using dense MLC eNVMs (Figure 1(c)), which addresses all of the aforementioned issues of prior PIM designs. The major contributions of this work are:

-

•

To enable this optimal PIM architecture, we present a new noisy neuron annealing (NNA) algorithm to train NNs with binary activations (BA) and multi-level weights (MLW) that take full advantage of dense MLCs. This algorithm achieves higher inference accuracy than using STE to train BA-MLW RNNs.

-

•

We design an ADC-/DAC-free trigger word detection PIM accelerator with MLC eNVM, using a BA-MLW gated recurrent unit (GRU). Simulation results demonstrate superior inference accuracy and noise resilience compared to alternative algorithms.

-

•

Using detailed hardware noise models and circuit co-design techniques, we validate our NNA training algorithm yields high inference accuracy and robustness against a variety of real hardware noise sources.

-

•

We further demonstrate the generality of our NNA algorithm by also applying it to feedforward networks.

2. Background

MLC eNVM for PIM. Before we introduce the PIM architecture, it is important to first review the technologies available for its critical building block – the memory array. Although the memory array can be built with conventional SRAM cells, it is more advantageous to use eNVM, including traditional embedded Flash (eFlash) (Fick et al., 2017), or emerging resistive RAM (ReRAM) (Sheu et al., 2011) and phase change memory (PCM) (Bedeschi et al., 2008), or more recently, the purely-CMOS MLC eNVM (CMOS-MLC) (Ma et al., 2019). Compared with SRAM, which is inherently binary (single level cells, SLC), eNVM’s SLCs offer much higher area efficiency. Moreover, eNVM is often analog in nature that enables MLC capability for even higher storage density. Programming eNVM typically involves a continuous change in the conductivity of the memory devices, enabling them to store multiple levels of transister channel current in the cases of eFlash and CMOS-MLC, or multiple levels of resistor conductivity in the cases of ReRAM and PCM. The programming speed of eNVM is much slower than SRAM, but NN parameters are typically written infrequently and held constant during inference, rendering programming speed non-critical for inference-only applications. In fact, eNVM’s non-volatility offers energy savings and obviates reloading weights at power-up.

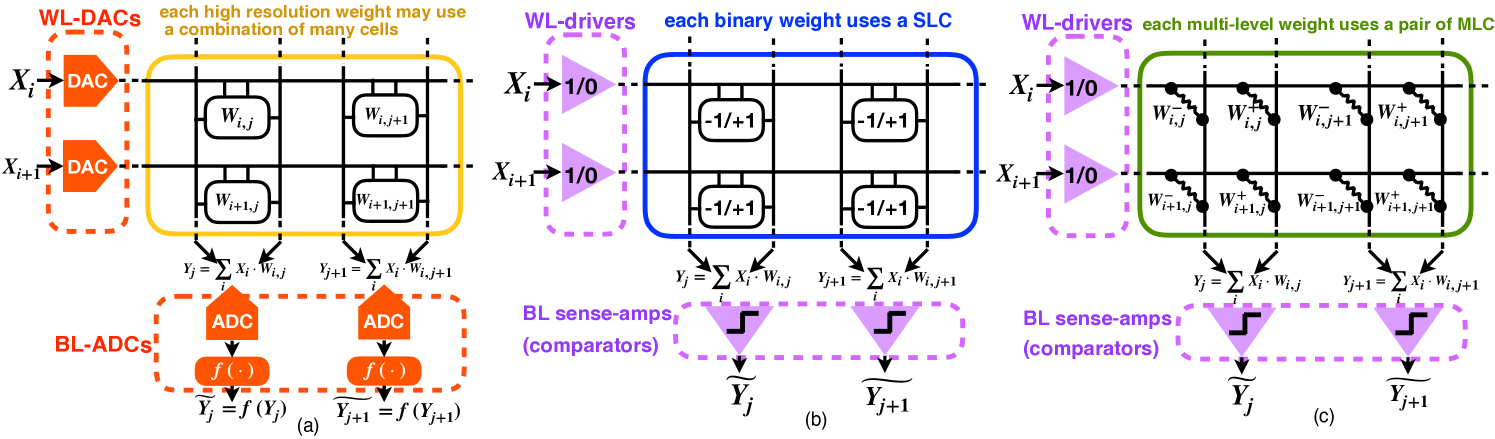

PIM for NN inference. A conventional PIM architecture for NN inference is shown in Figure 1a (Shafiee et al., 2016). The weight matrix of a NN is directly mapped into the memory array and, because weights for NNs typically require high resolution (e.g., bits), multiple lower-resolution memory cells are often combined to represent one weight. This PIM structure can perform a matrix-vector multiplication in one step. Each input activation (i.e., ) is simultaneously fed into individual wordlines as an analog voltage signal via a wordline DAC (WL-DAC), which then becomes a current through each memory cell proportional to the product of the input voltage and memory-cell conductance. MAC results are accumulated along corresponding sets of parallel bitlines (BLs) and resolved by column ADCs before being sent to digital nonlinear activation function units. A pair of columns are used to support both positive and negative weight values and each column pair corresponds to a single neuron. Again, as discussed in Section 1, while these DACs and ADCs support high-resolution activation, they impose large area and power overheads.

Quantization and BNN. Many different NN quantization algorithms have been proposed to reduce the bit widths of weights and/or activations while maximizing accuracy (Hubara et al., 2017; Rastegari et al., 2016; Li et al., 2016), in order to reduce storage and computation. For PIM, aggressive quantization can further relieve AD/DA resolution requirements for activations. In particular, BNNs with 1-bit activations and weights, can translate into much simpler PIM circuits, as shown in Figure 1b (compared to the conventional PIM architecture in Figure 1a). Since the activations are binary, WL-DACs and BL-ADCs in Figure 1a can be replaced by digital WL-drivers and conventional sense-amp comparators, respectively, both of which are compact peripheral components in standard memories. However, PIM implementations of BNNs have two major drawbacks. First, as shown in Figure 1b, binary weights use eNVM cells as 1-bit SLCs, not taking advantage of their MLC capability. Second, the most popular existing algorithm for training binary activations uses STE (Bengio et al., 2013; Hubara et al., 2017), which is effective for feedforward NNs, but performs poorly on RNNs.

3. Training BA-MLW NNs for optimal PIM implementation

To avoid DACs and ADCs and fully leverage MLCs, we propose a BA-MLW NN structure, shown in Figure 1c, as an optimal design for PIM. For simplicity, memory devices are illustrated as resistor cells (omitting access transistors), corresponding to ReRAM or PCM, with multi-level weights encodes via their conductance values; other eNMV technologies, such as eFLASH and CMOS-MLC, directly encode weights into access transistor channel currents. The current difference across a pair of cells represent one postive or negative weight value. Moreover, binary activation (BA) only requires digital WL-drivers and sense-amps, obviating expensive DACs and ADCs. In order to effectively train BA-MLW NNs, we propose a new algorithm that achieves high accuracy and resilience to quantization and noise for both feedforward and recurrent NNs.

3.1. Training binary activations (BA)

Binarizing the activations while maintaining high performance is challenging, because it not only restricts the expressive capacity of the neurons, but also introduces discrete computation nodes that preclude gradient propagation during training. We first review the STE algorithm prior to introducing our proposed BA training algorithm.

Reviewing STE. STE applies to a stochastic binary neuron (SBN) (Bengio et al., 2013). During forward propagation of training, each neuron generates a binary output from a Bernoulli sample

| (1) |

in which is a pre-activation from the linear MAC and the logistic function has a tunable slope (Chung et al., 2016). The SBN function is discrete with random sampling and, thus, does not have a well-defined gradient. Hence, STE simply passes through the gradient of the continuous sigmoid function during backpropagation

| (2) |

in which is the loss function. In other words, it ignores the random discrete sampling process, and pretends the forward propagation implements a sigmoid function. The issue with STE is that propagating gradients w.r.t. the sample-independent mean () while ignoring the random sampling outcome can cause discrepancies between the forward and backward passes (Jang et al., 2016). In fact, STE is a biased estimator of the expected gradient, which cannot even guarantee the correct sign when back-propagating through multiple hidden layers (Bengio et al., 2013). Nonetheless, STE has been found to work better in practice than other more complicated gradient estimators for feedforward NNs (Bengio et al., 2013), which we also verify in Section 4.2. However, as shown in Section 4.1, STE performs poorly when training RNNs with BAs.

Proposed noisy neuron annealing (NNA) algorithm. We use the following noisy continuous neuron (NCN) function during the forward pass of training

| (3) |

in which we add an i.i.d. zero-mean Gaussian random variable (RV) to each pre-activation before passing into a continuous sigmoid function with temperature . Equation 3 can be broken down into two steps: (i) a noise injection step, , and (ii) a continuous relaxing step, . This noise injection step – random noise added into pre-activations – corresponds to quantization noise, due to binarizing activations and quantizing weights, that flows forward through the MAC. Therefore, if we train the NN with noise explicitly added into pre-activations, the NN would develop resilience to these quantization errors. The continuous relaxing step is inspired by the Gumbel-softmax trick (Jang et al., 2016; Maddison et al., 2016), which uses a sharpened sigmoid to approximate the binary step function while still allowing smooth gradients to flow. As Section 4.1 will show, it is important to start from a large value of the hyperparameter to begin training with large noise and then anneal down to a smaller value, hence, the name of our training strategy – “noisy neuron annealing” (NNA) algorithm.

Related to the additive noise in variational autoencoders, the Gaussian noise distribution complies to the “mean and variance” form required by the re-parameterization trick (Maddison et al., 2016). This has a nice Gaussian gradient identity property (Rezende et al., 2014) that allows reversing the order between taking the expectation and taking the derivative. Combined with the continuous relaxation step, backpropagation through the entire NCN function does not encounter any discrete or sampling nodes:

| (4) |

During inference, we use the following noisy binary neuron (NBN) function:

| (5) |

which also has an additive i.i.d. Gaussian RV , but replaces the continuous sigmoid in NCN with a discrete step function. In Sections 4.1 and 4.2, we evaluate the noise resilience of trained NNs by sweeping .

Prior work has studied the regularization effect of noise injection regarding its impact on NN generalization and noise resilience (Murray et al., 1994; Jim et al., 1996; An, 1996; Reed et al., 1995). They use Taylor expansion of the loss function to show that adding Gaussian noise is akin to adding an extra regularization penalty term to the original loss function , such that the effective loss becomes

| (6) |

where refers to a certain noise-injected node. In our case, includes all pre-activations. The regularization term, , penalizes large gradients of w.r.t. noise-injected nodes, encouraging these nodes to find “flatter regions” of the solution space that are less sensitive to noise perturbations. Hyperparameter controls the tradeoff between reducing the raw error and enhancing noise resilience. Specifically, for NCN activations to counteract the noise, they tend to give up the highly-expressive but noise-prone transition region of the sigmoid and, instead, develop a bimodal pre-activation distribution to push them into the saturated regions, close to 1 or 0, that are highly immune to noise (Salakhutdinov et al., 2009). Equation 6 also provides a quantitative metric to estimate the noise resilience a NN acquires during training. We derive a detailed form to calculate this penalty term in Section 4.1 to compare amongst different NNs.

| weight | |||||||

|---|---|---|---|---|---|---|---|

3.2. Training multi-level weights (MLW)

Our NNA algorithm not only endows the NN with high resilience to binarizing activations, but also enables MLWs to leverage dense MLCs with high inference accuracy. Each weight can be quantized down to a small number of levels capable of encoding with one pair of MLCs (Figure 1c), as opposed to needing to combine multiple memory cells for high-resolution weights (Figure 1a).

To quantize MLWs from full-precision (FP) weights, we first determine a suitable clipping range for each weight matrix based on the weight distribution statistics from pre-training with FP weights. Then during fine-tuning with weight quantizations, we clip each weight matrix into prior to quantizing the weights into evenly-spaced levels within this range. We follow the same practice as (Hubara et al., 2017) for training, i.e., we use the quantized weights in the forward pass, but still keep the FP weights and accumulate gradients onto FP weights in the backward pass. After training is complete, the FP weights can be discarded and only the quantized weights are used for inference. For the special cases of 3- and 2-level weights, we use the training algorithm in (Li et al., 2016) for 3-level (ternary) and (Rastegari et al., 2016) for 2-level (binary) weights.

Table 1 shows how to represent a 7-level weight via the current differential across a pair of 4-level (2-bit) MLCs. Following the same principle, a 15-level weight can be encoded with a pair of 8-level (3-bit) MLCs, while a 3-level weight can use a pair of binary cells (1-bit, SLC).

4. Application case studies

We present two case studies that apply our NNA algorithm to (1) an RNN for a trigger word detection task and (2) a feedforward NN for handwritten digit recognition. We focus on the first case study to thoroughly demonstrate the merits of a BA-MLW RNN trained using the NNA algorithm. The second case study confirms the algorithm further generalizes to feedforward NNs.

4.1. A trigger word detection PIM accelerator using BA-MLW GRU with MLC eNVM

Trigger word detection is an important always-ON task for speech-activated edge devices, for which power and cost efficiency is paramount. We use the Speech Commands dataset from (Warden, 2018), which consists of over 105,000 audio clips of various words uttered by thousands of different people, with a total of 12 classification categories: 10 designated keywords, silence, and unknown words. We first present the architectural designs of the NN and PIM accelerator (Section 4.1.1), then elaborate on the software training results using different training methods (Section 4.1.2), and finally evaluate expected hardware performance with detailed noise models (Section 4.1.3).

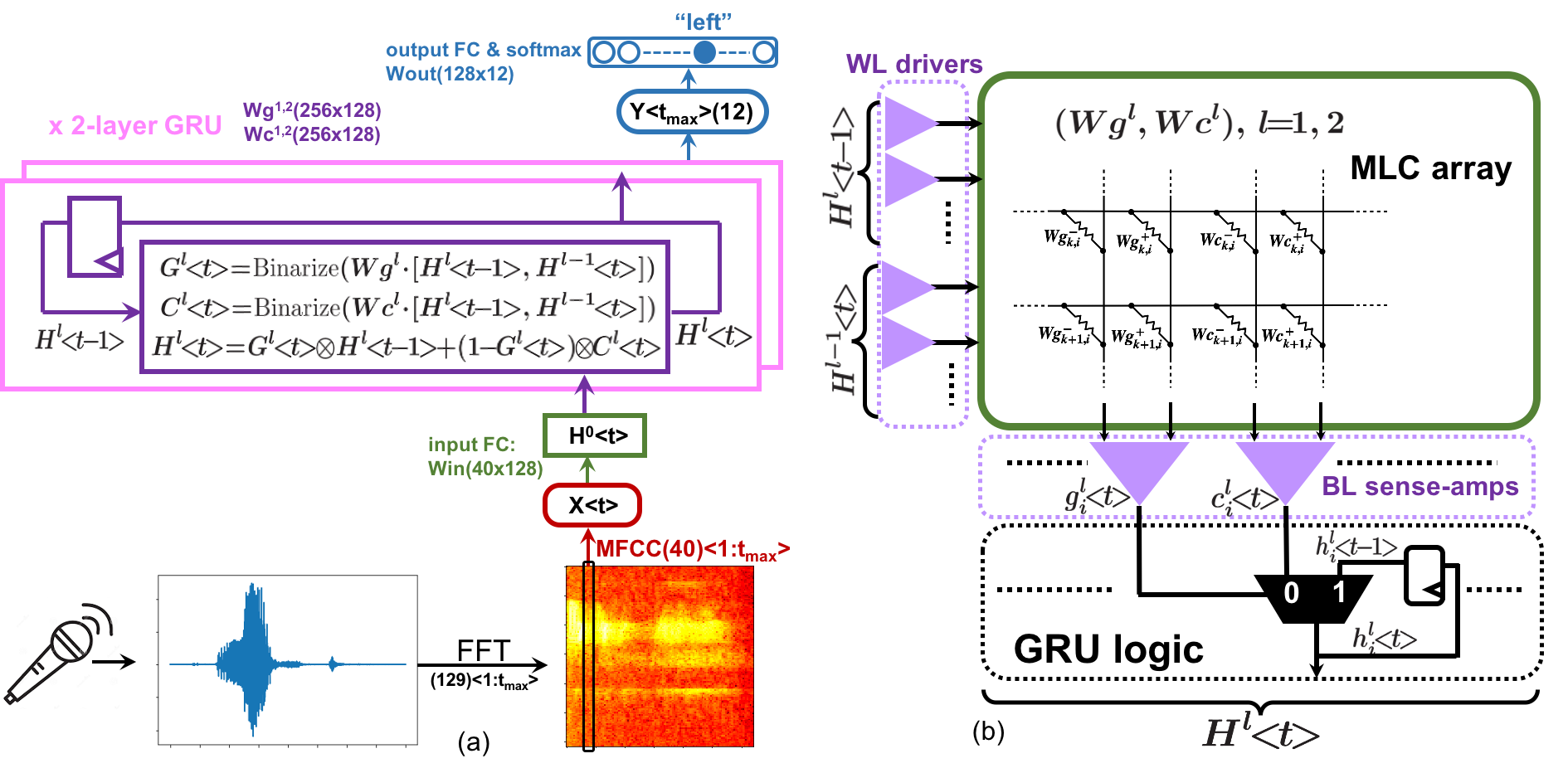

4.1.1. The architecture of the NN and PIM accelerator

The NN model structure. RNNs are well-suited to this speech-recognition task. Figure 2a illustrates a 2-layer gated recurrent unit (GRU) (Cho et al., 2014) with BA and MLW. We first perform FFT (window=16ms, stride=8ms) on the raw audio signals and then extract 40 Mel-frequency cepstral coefficients (MFCC) per 8ms timestep. Each MFCC vector passes through an input FC layer that encodes it into a 128 dimensional binary vector as the input to the first layer of a 2-layer stacked GRU (both layers use 128 dimensional vectors). We use the following modified version of GRU equations (Ng et al., 2018):

| (7) | ||||

| (8) | ||||

| (9) | ||||

| (10) | ||||

| (11) |

where denotes the timestep, is the layer number, and the gate (), candidate (), hidden state (), and the input encoding are all 128 dimensional activation vectors, trained using our NNA algorithm. The activation function refers to NCN (Equation 3) during training, and NBN (Equation 5) for evaluation, and simply uses the binary step function for PIM deployment (Figure 2a and 2b). Compared with the original GRU equations from (Cho et al., 2014), we remove the reset gate since it has minimal effect on the accuracy of this task, but greatly simplifes circuit design (Figure 2b). After the GRU processes inputs from all timesteps, the final timestep output of the top layer feeds into an output FC layer followed by a 12-way softmax that yields the classification.

PIM circuit design for the GRU. The BA-MLW GRU equations can be mapped into compact PIM circuits shown in Figure 2b. The MLC array implements MAC computations and the column sense-amps resolve the binarized and . serves as the multiplexer selection signal (since in Equation 11 for binary signals) to choose either to keep the binary hidden state saved from the previous timestep or to update it with the current binary candidate state. Since all analog MAC signals are encapsulated inside the MLC array, all input/output interface signals are binary, and the GRU logic outside the array is totally digital, this PIM design does not require any ADCs or DACs.

Noise-induced loss penalty terms for GRU. For the GRU, we can derive the noise-induced regularization penalty term in Equation 6 by taking the derivatives of loss w.r.t. the pre-activations of candidates () and gates ().

| (12) |

| (13) | ||||

| (14) |

where a lowercase letter with subscript denotes one element of the corresponding uppercase vector, and and regularize gates and candidates, respectively. The forms of Equations 13 and 14 have intuitive interpretations when minimizing them during training. To minimize , one way is to reduce the derivative of the gate () by pushing away from zero (the steep slope region of sigmoid), so that the gate is firmly ON or firmly OFF; alternatively, it can try to make the candidate of the current timestep equal to the hidden state of the previous timestep , such that the new hidden state would be the same regardless of . Either way, minimizing makes immune to noise injected into . To minimize , training will either reduce the gradient of candidate (), by pushing into the saturated flat regions of sigmoid, or try to turn on the gate to preserve the hidden state from the previous timestep disregarding the new candidate . Either way, it desensitizes to noise injected into . Equations 12–14 provide a quantitative metric to assess the resilience to quantization and noise in a trained GRU, which we use to compare different training schemes.

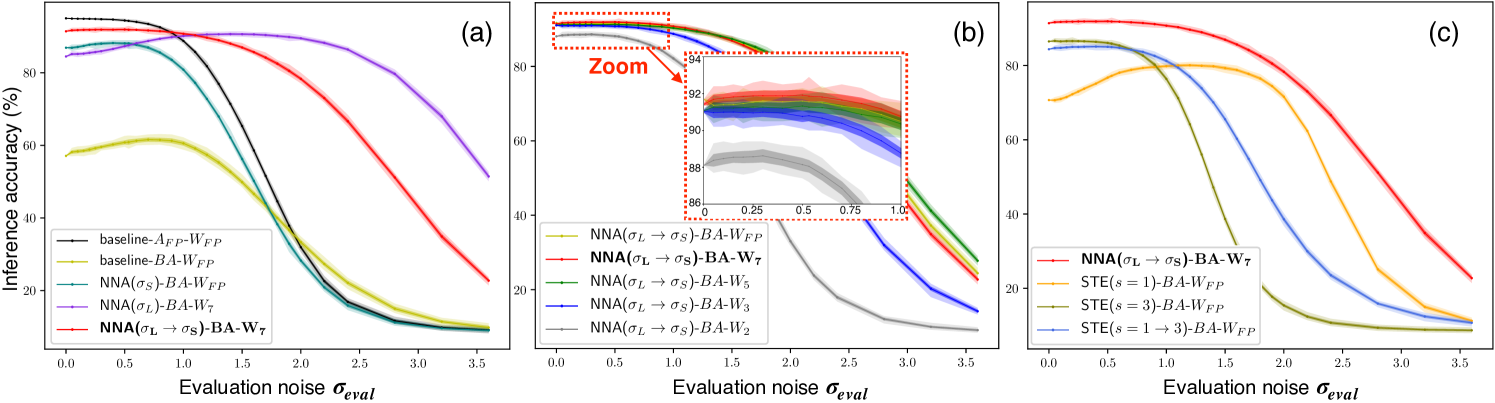

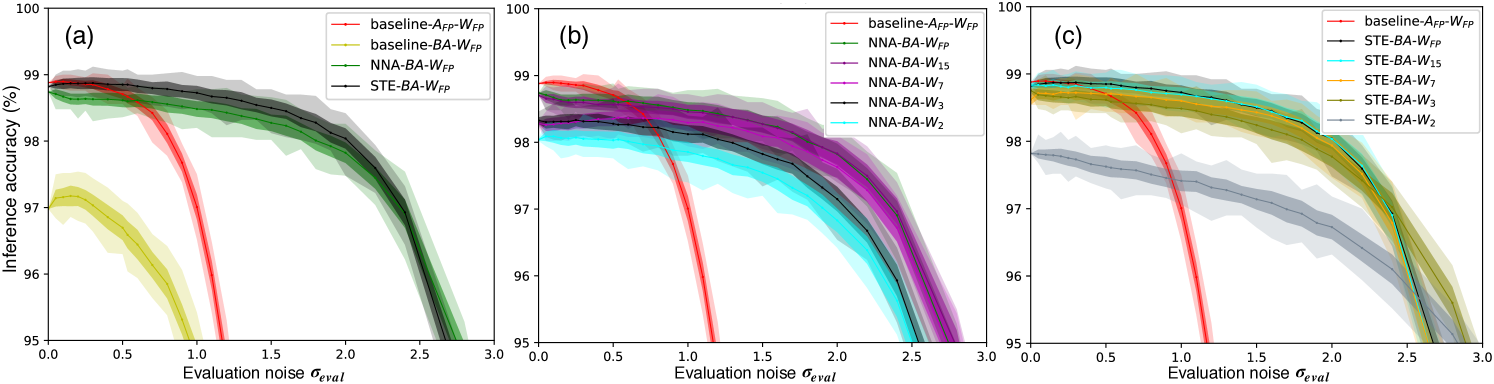

4.1.2. Software training results

To quantitatively compare NN accuracy and noise-resilience trained with different methods, we inject the same type of Gaussian training noise during inference and sweep the noise sigma – a common practice adopted in previous NN noise-resilience studies (Yang and Sze, 2019; Klachko et al., 2019; He et al., 2019; Moon et al., 2019; Bennett et al., 2020). We first compare different methods of binarizing activations, then explore different quantization levels for the weights, and, finally, compare RNN training performance using our NNA algorithm versus the popular STE algorithm. Results are summarized in Figure 3.

Comparing training schemes for binarizing activations. As a full-precision (FP) baseline, we first train the NN with FP sigmoid activations and FP weights, without noise injection or quantization. During inference, we add noise to its pre-activations and evaluate it with both FP sigmoid activations (baseline--, black), and binarized activations (baseline--, yellow), shown in Figure 3a. Trained without noise injection, baseline-- cannot maintain its high accuracy at large , making it vulnerable to quantization errors, leading to the poor performance of baseline--.

To endow the NN with resilience to quantization errors, we use our NNA algorithm and retrain from the FP sigmoid baseline (which is a good initialization point to speed up retraining). Initially, we use large training noise , and plot the inference accuracy with BAs and 7-level weights (NNA()--, purple) in Figure 3a. Results show high accuracy across a wider range of than baseline--, demonstrating stronger resilience to quantization errors. However, the accuracy peaks at a large around , and lower at small , because it is trained to minimize its loss in the presence of this large additive noise. This noise-resilience profile might suit certain noisy circuit environments, e.g., noisy power supply.

In order to also achieve high accuracy at small , we anneal down to a small and further retrain. As shown in Figure 3a (NNA()--, red), peak accuracy is achieved at smaller , demonstrating the efficacy of NNA with annealing (from to ). In comparison, directly retraining with from the baseline (NNA()--, green), without the intermediate stage, results in much worse accuracy and noise tolerance than NNA with annealing.

In practice, we find the choices of the hyperparameter quite flexible. For the initial large noise, we use a to be about 20% of the standard deviation (STD) of the inherent pre-activation distribution, corresponding to for this GRU, though a wide range of values all work similarly well; for the annealed noise, we find all achieves optimal results. For temperature in NCN, we find to be optimal: If too small, RNN’s gradient explodes. If too large, BA is not sufficiently approximated.

| Network | FP baseline | NNA() | NNA() | NNA() |

|---|---|---|---|---|

| Pg+Pc | 1.0000 | 0.5034 | 0.5018 | 0.8272 |

Loss penalty term interpretations. To understand why the annealing procedure of NNA is critical, we compare the loss penalty terms ( from Equation 13 and 14) of the four networks in Figure 3a. Shown in Table 2, we normalize them by the penalty value of baseline--, since only the relative values matter for comparison. After retraining with , NNA()--’s penalty term reduces to half of the baseline penalty, explaining its higher resilience to noise and quantization errors. After further retraining with annealed , NNA()-- maintains this small penalty value, even though provides less regularization effect – the smaller multiplier in Equation 12 (compared to previous ) makes the retraining prioritize reducing the raw error (thus higher peak accuracy) over enhancing noise resilience. This means the network can still “memorize” its previous large-noise training regularization effect even after annealing to fine-tune with smaller noise. In contrast, without the intermediate “experience” of large noise training, NNA()-- has much less regularization effect to reduce its loss penalty.

It should also be pointed out that if evaluated with FP activations and zero noise, all these networks achieve similarly high accuracy as the purely FP sigmoid network, but their accuracy and noise resilience are dramatically different after using binary activations. This implies that trained through different stages, the 4 networks in Figure 3a find distinct regions in the global solution space: retraining with finds a promising solution region that’s insensitive to quantization errors, while the further fine-tuning with only does a local search to optimize accuracy at the small noise range; in contrast, training without noise injection or only with small noise will not discover the solution region that’s resilient to noise and quantization due to lack of regularization penalty.

Weight quantization levels. Figure 3b shows the GRU’s performance across different numbers of weight quantization levels and confirms the high resilience to weight quantization offered by our NNA algorithm. The resulting performance of 7-level (implemented with a pair of 4-level cells) and 5-level (a pair of 3-level cells) quantization are almost the same as using FP weights. GRUs with 7-level weights achieve the highest peak accuracy at the lower end of range. Accuracy for GRUs with ternary weights (a pair of 2-level cells) degrades more quickly as increases. In contrast, GRUs with binary weights, which can be implemented with SRAMs), suffer the most accuracy degradation across the entire range. Our NNA algorithm’s high resilience to weight quantization makes it possible to use a pair of 3 or 4-level MLCs to achieve performance comparable to GRUs with FP weights. Even GRUs with tenary weights can achieve relatively high inference accuracy for applications with small . Therefore, our optimal PIM architecture (Figure 1c) avoids combining multiple cells to achieve high-resolution weights (Figure 1a, (Shafiee et al., 2016)), thereby saving area and simplifying circuit design.

NNA vs STE. We also experiment with SBN trained with STE, and compare its performance with our NNA algorithm in Figure 3c. We try 3 different settings for slope : (yellow), (blue), and annealing from to (, green) (Chung et al., 2016). Even with FP weights, all three GRUs trained with STE achieve worse inference accuracy compared to using NNA (NNA()--, red).

We should also point out an important distinction between the forms of SBN (Equation 1) and our NCN (Equation 3): SBN only has one parameter , whereas NCN has two degrees of freedom using and . On one hand, SBN uses to control the sigmoid slope (corresponding to in NCN), and similar to , we find needs to be no greater than about 3, in order not to run into exploding gradient problem. On the other hand, also controls the stochasticity of the Bernoulli sampling: a smaller introduces more randomness thus a higher noise resilience range, as can be seen from Figure 3c, comparing , and . However, SBN cannot seperately control the the sigmoid slope and the stochasticity. In contrast, our NCN has independent controls: is chosen to approximate binary outputs while avoiding exploding gradients, whereas the magnitude of noise injection is seperately controlled by . This flexiblity enables us to effectively implement NNA algorithm’s annealing procedure using NCN.

From a mathematical rigor point of view, in contrast to the Gaussian RVs used in NCN, the Bernoulli RVs used in SBN do not comply with the “location-scale” distribution required for using the reparameterization trick (Maddison et al., 2016). Therefore, it is mathematically illegal for STE to change the order between taking expectation and taking derivative for Bernoulli RVs (Equation 2). Increasing the slope can alleviate the discrepancy between the forward and backward pass of SBN (making the math less wrong, which explains the higher accuracy with in Figure 3c), but due to the lack of separate controls, changing inevitably changes both the sampling randomness and sigmoid’s gradient, and cannot be too large which will cause exploding gradients.

4.1.3. Hardware noise model and validation results

Need for accurate hardware noise models. So far we have used the same type of additive random Gaussian noise for both training and inference as and in Figure 3, consistent with prior work that evaluate and compare NN noise resilience (Yang and Sze, 2019; Klachko et al., 2019; He et al., 2019; Moon et al., 2019; Bennett et al., 2020). While prior hardware noise modeling work generally over-simplify by lumping all the noise sources into a single random Gaussian RV, real circuits have a variety of noise sources with properties different from these additive Gaussian RVs, mandating realistic models to faithfully evaluate their impact on hardware inference accuracy.

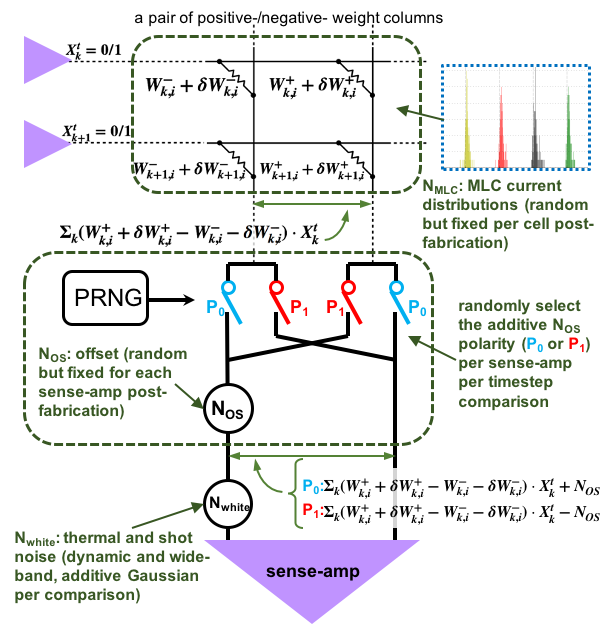

To evaluate how real circuit noise impacts inference accuracy, we account for the detailed profiles of three important sources of physical noise in PIM circuits shown in Figure 4: (1) the weight noise due to variability of eNVM devices, (2) an offset error due to device mismatch in the sensing circuitry, and (3) a white noise source from thermal and shot noise of the circuits. In the following subsections, we first elaborate on the distinctive characteristics of these three noise sources and present our simulation methodology of the overall noise model; then we introduce the devices and circuit designs of the PIM implementation used for deriving the statistics to build the noise model; finally, we present the hardware validation experiment results.

(1) comes from eNVM device manufacturing and programming variability, modeled as noise in programmed MLC weight levels, i.e., per-cell static weight error or deviating from the intended/ideal value or (Figure 4). Depending on input activations of each timestep, each cell’s weight error selectively contributes noise to the total weight noise of added to each corresponding element of MAC pre-activations.

(2) models transistor-mismatch-induced offset as a sense-amp input-referred static error. Each sense-amp’s random offset is determined after fabrication, which adds a fixed asymmetric bias term into the MAC computation associated with the comparisons of each sense-amp. This offset proves to be detrimental to inference accuracy due to its lack of dynamic randomness, but most prior noise-modeling studies overlook this important source of error.

Among the few papers that address this offset error, (Moon et al., 2019) simplifies it into a Gaussian RV, lumped with other noise sources by adding their variances together, and proposes per-chip, post-fabrication retraining; (Bankman et al., 2019) uses on-chip digital calibration circuitry to cancel the offset after fabrication. In contrast, we dedicate the realistic static noise model to each offset error, and leverage a simple yet effective circuit technique that avoids the need for individual retraining or the overhead of offset calibration. Our solution is to randomly flip the polarity of the added using a set of switches controlled by a pseudo random number generator (PRNG), as shown in Figure 4, such that each fixed magnitude is presented to each element of pre-activations with a random polarity per sense-amp comparison per timestep, thereby converting the effect of each sense-amp offset into a Bernoulli RV. Compared with the smooth “bell-shaped” Gaussian noise with which our NNs are trained, Bernoulli distributions have very different forms, but they can guarantee per-element zero-mean and dynamic randomness across timesteps. These are the traits of noise that NNs trained with our NNA algorithm are surprisingly resilient to, as we will show in our validation experiments.

(3) represents thermal and shot noise from the circuits, which are dynamic random white noise, modeled as an input-referred Gaussian noise source at each sense-amp. This is the only truly random and dynamic source of hardware noise, with exactly the same properties as the Gaussian noise used for training.

The overall noise model accounts for the three aforementioned noise sources, such that the total noise added into each element of pre-activations at each timestep is: . When simulating the inference performance of a single chip, , and are determined/static after fabrication and weight programming, so we only randomly sample them once and fix them during inference on all validation examples; is dynamic and so we randomly generate a new sample for each sense-amp comparison; the PRNG dynamically generates a random selection of to add or subtract the fixed magnitude for each corresponding sense-amp comparison. We validate hardware performance at the presence of all these noise sources by simulating cycle-accurate inference across GRU timesteps.

Device models and circuit simulations. For eNVM devices, we evaluate three promising emerging eNVMs (ReRAM, PCM, and CMOS-MLC) and model their corresponding based on measured data from (Sheu et al., 2011), (Bedeschi et al., 2008), and (Ma et al., 2019), respectively. We design and simulate all peripheral circuits targeting a 16nm FinFET technology (Wu et al., 2014). The sensing circuits that resolve BAs adopt the dynamic bitline discharge technique commonly used in memory reading circuitry, with the self-timed StrongArm-type sense-amp from (Miyahara et al., 2008). Sensing via variable bitline discharge time automatically handles the wide statistical range of bitline currents resulting from PIM-based MAC computations. Transistor sizes in the sense-amp pose a tradeoff between area versus , i.e., enlarging the transistor sizes can reduce at the expense of a larger sense-amp area overhead. We use Monte Carlo simulations to measure the of a range of sense-amp sizes, and evaluate their impacts on inference accuracy.

|

|

|

|

||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Accuracy range (%) | |||||||||||

|

PCM | ReRAM | CMOS-MLC | ||||||||

| Accuracy range (%) |

Validation results are summarized in Table 3 and Figure 5. We choose NNA()-- as the optimal design target for circuit implementation and evaluation of the NNA algorithm’s resilience to hardware noise. We establish a baseline accuracy (%) corresponding to when only is present and both and are zero. This baseline accuracy is on par with the peak software inference accuracy in Figure 3. The magnitude of for real circuits turns out to be too small to have measurable impacts on accuracy. We first focus on exploring the performance impact of and the polarity flipping circuit technique. We then simulate with the entire noise model across three promising eNVM technologies.

As summarized by the upper two rows of Table 3, when we inject the modeled but fix their polarities, the validation accuracy drops to %. However, by randomly flipping the polarity of each using the PRNG, validation accuracy of the NN recovers to %, proving the effectiveness of this simple random polarity-flipping technique. The modeled statistical magnitudes of are derived from our sensing circuitry design with a reasonable sense-amp sizing choice that requires no more than 8 fins of minimum-length FinFETs for each input differential pair transistor. Therefore, our PIM accelerator design ensures minimal peripheral sensing circuitry overhead (mostly from sense-amps) thanks to BAs, in contrast to area- and power-consuming ADCs otherwise needed for higher-precision activations. These validation results also reveal that our NNA algorithm results in NNs that are resilient to noise profiles beyond the additive Gaussian noise with which they are trained (consistent with discoveries in (Joshi et al., 2020; Merolla et al., 2016)) and they are particularly tolerant to dynamic random zero-mean noise, whereas the exact shape of the distribution is less important (no need to be smooth or bell-shaped).

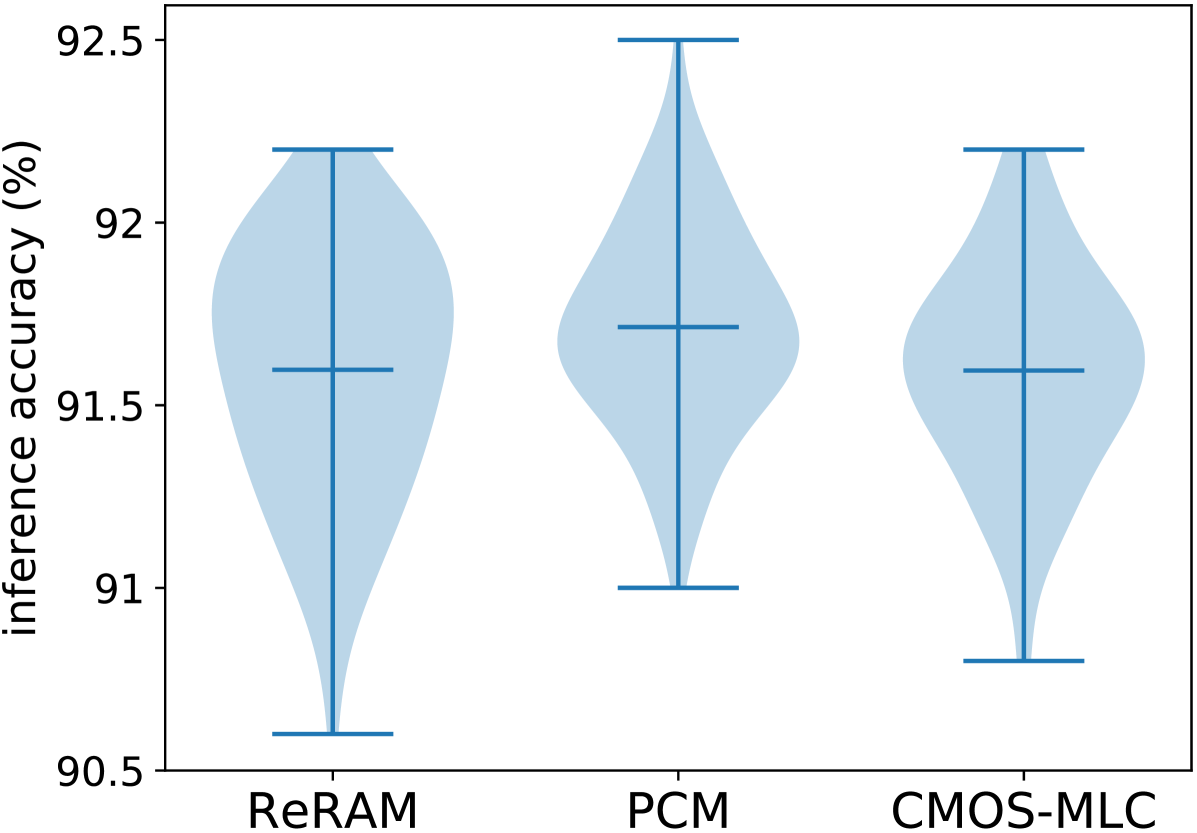

Finally, we evaluate inference performance for PIM designs with binary activations and 7-level weights (each weight implemented with a pair of 2-bit (4-level) MLCs) using the three promising eNVM technologies (PCM, ReRAM, and CMOS-MLC) and with all three types of noise sources (plus random polarity flipping). Figure 5 and the lower two rows of Table 3 summarize the validation results. PCM, ReRAM, and CMOS-MLC achieve hardware inference accuracy of = ()%, ()%, and ()%, respectively, validating that PIM circuits with all three eNVM technologies can achieve performance comparable to the software accuracy (Figure 3) even in the presence of realistic device and circuit non-idealities.

4.2. Training feedforward BA-MLW NN: LeNet5 for MNIST

To demonstrate the generalizability of our NNA algorithm to feedforward networks, we use the MNIST dataset and train BA-MLW networks using the LeNet5 architecture that comprises 2 CNN layers followed by 3 FC layers (LeCun et al., 1998). We compare the accuracy and noise resilience of the FP baseline with our NNA algorithm and STE, and the results are shown in Figure 5. Both STE and NNA are resilient to binarizing activations, and achieve peak accuracy comparable to the FP network and tolerate a wide range of , with STE slightly outperforming NNA (Figure 5a). Both STE and NNA are also resilient to weight quantization (Figure 5b and 5c), with no loss of accuracy when quantizing weights down to 15 levels (a pair of 8-level cells), but slight accuracy degradation with 7-level weights. Compared with the GRU in Section 4.1, LeNet5 needs more quantization levels due to its wider weight distribution ranges and smaller numbers of parameters in the CNN layers (especially the first CNN layer). Although not elaborated in this paper, layer-wise customized choices of quantization levels should further optimize performance.

Results of these two case studies show that the effectiveness of STE on feedforward networks does not easily translate to RNNs. While on the other hand, our NNA algorithm works well for both RNN and feedforward networks.

5. Related work

Most prior quantization studies have been focused on feedforward networks, whereas quantizing RNNs turns out to be more challenging. Consistent with our results, quantization techniques that work well for feedforward NNs (e.g., STE) have been found to work poorly for RNNs (Hubara et al., 2017). Existing RNN quantization studies find that to maintain accuracy, more bits are required for RNNs than for feedforward networks, especially for the activations. Hence, prior work either use FP activations (Ott et al., 2016), or need multiple bits per activation (He et al., 2016; Hubara et al., 2017), which would require costly DACs and ADCs for PIM implementations. In contrast, our work not only quantizes the weights but also binarizes activations of RNNs, enabling the optimal BA-MLW RNN structure for efficient PIM implementation.

Our NNA algorithm is largely inspired by the reparameterization trick (Rezende et al., 2014) and the Gumbel-softmax trick (Maddison et al., 2016; Jang et al., 2016). Introduced in the context of variational inference, the reparameterization trick reformulates the sampling process of certain probability distributions (e.g., those having a “location-scale” form), which allows the expected gradient w.r.t. parameters of these distributions to propagate. Gumbel-softmax uses the Gumbel RVs to attain an equivalent sampling process from categorical distributions. Moreover, it uses a continuous relaxation trick to solve the gradient propagation problem of sampling from discrete distributions. (Murray et al., 1994; Jim et al., 1996; An, 1996; Reed et al., 1995) study the generalization effects of noise injection to NNs’ inputs, weights, or activations. Additive Gaussian noise has also been used for learning binary encodings of documents with a multi-layer feedforward autoencoder (Salakhutdinov et al., 2009). Our paper differentiates from these works in that we apply these techniques (noise injection and methods of propagating gradients through stochastic sampling nodes) to training BA-MLW RNNs in order to yield an optimal PIM circuit implementation that obviates DACs and ADCs. Moreover, we propose an effective noise annealing procedure in our NNA algorithm and use noise injection’s regularization penalty effects to explain why our new algorithm enables high resilience to quantization and noise.

A notable recent work that proposes an end-to-end analog NN implementation also strives to address the issues of AD/DA overhead and device/circuit non-idealities that have been plaguing NNs’ PIM implementations (Ward-Foxton, 2020; Kendall et al., 2020). Their solution exclusively applies to energy-based NN models that leverage the physical Kirchhoff’s current law complied by a memristive crossbar network to find the corresponding NN model’s mathematical minimal energy solution that is naturally represented by neurons’ analog voltages, thereby avoiding ADC or DAC for hidden neurons during inference. To tackle device/circuit non-idealities, they adopt “chip-in-the-loop” training to tailor the NN model to each individual chip’s variability after fabrication. In contrast, our approach targets the commonly used feedforward and recurrent NN models, rather than energy-base models. The BA-MLW NN models trained with our proposed NNA algorithm not only eliminate AD/DA overhead, but also are resilient to a wide range and variety of hardware noise profiles. As we have shown in the hardware validation section, the same pre-trained model can achieve high performance across different chips, which avoids the overhead of post-fabrication on-chip training or calibration.

6. Conclusions and Future Work

There are two critical road blocks towards efficient PIM implementations of NN inference: the overwhelming power, area, and speed overhead from peripheral AD/DA circuitry, and inference accuracy degradation due to device and circuit non-idealities. We propose solving both problems by co-designing highly noise-resilient BA-MLW NN models (whose BAs obviate ADCs and DACs), trained using our novel NNA algorithm. The proposed noise injection and annealing based training procedure endows our NNs with not only high resilience to heavy quantizations, but also strong robustness against a variety of noise sources. Compared with a FP baseline and an alternative quantization algorithm (i.e., STE), our NNA algorithm achieves superior accuracy and noise resilience especially when applied to RNNs.

We demonstrate the architectural and circuit designs of a trigger word detecting PIM accelerator that implements a BA-MLW GRU trained with our NNA algorithm, and design detailed circuit noise models to evaluate its impact on inference performance. Assisted with a simple yet effective offset polarity random flipping circuit technique, our NNs maintain software-equivalent inference accuracy in the presence of the wide range and variety of noise encountered in real PIM circuits, revealing our NNs’ surprisingly strong resilience to noise profiles even beyond the additive Gaussian RVs with which they are trained. Our proposed circuit and algorithm co-design strategies can help pave the path towards more efficient PIM implementations of NNs.

References

- (1)

- An (1996) G. An. 1996. The effects of adding noise during backpropagation training on a generalization performance. Neural computation 8, 3 (1996), 643–674.

- Bankman et al. (2019) D. Bankman, L. Yang, B. Moons, M. Verhelst, and B. Murmann. 2019. An Always-On 3.8 J/86% CIFAR-10 Mixed-Signal Binary CNN Processor With All Memory on Chip in 28-nm CMOS. IEEE Journal of Solid-State Circuits 54, 1 (2019), 158–172.

- Bedeschi et al. (2008) F. Bedeschi et al. 2008. A bipolar-selected phase change memory featuring multi-level cell storage. IEEE JSSC 44, 1 (2008), 217–227.

- Bengio et al. (2013) Y. Bengio et al. 2013. Estimating or propagating gradients through stochastic neurons for conditional computation. arXiv:1308.3432 (2013).

- Bennett et al. (2020) Christopher H Bennett, T Patrick Xiao, Ryan Dellana, Ben Feinberg, Sapan Agarwal, Matthew J Marinella, Vineet Agrawal, Venkatraman Prabhakar, Krishnaswamy Ramkumar, Long Hinh, et al. 2020. Device-aware inference operations in SONOS nonvolatile memory arrays. In 2020 IEEE International Reliability Physics Symposium (IRPS). IEEE, 1–6.

- Cho et al. (2014) K. Cho et al. 2014. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv:1406.1078 (2014).

- Chung et al. (2016) J. Chung et al. 2016. Hierarchical multiscale recurrent neural networks. arXiv:1609.01704 (2016).

- Fick et al. (2017) L. Fick et al. 2017. Analog in-memory subthreshold deep neural network accelerator. In IEEE CICC. 1–4.

- He et al. (2016) Q. He et al. 2016. Effective quantization methods for recurrent neural networks. arXiv:1611.10176 (2016).

- He et al. (2019) Zhezhi He, Jie Lin, Rickard Ewetz, Jiann-Shiun Yuan, and Deliang Fan. 2019. Noise injection adaption: End-to-end ReRAM crossbar non-ideal effect adaption for neural network mapping. In Proceedings of the 56th Annual Design Automation Conference 2019. 1–6.

- Hubara et al. (2017) I. Hubara et al. 2017. Quantized neural networks: Training neural networks with low precision weights and activations. The Journal of Machine Learning Research 18, 1 (2017), 6869–6898.

- Jang et al. (2016) E. Jang et al. 2016. Categorical reparameterization with Gumbel-softmax. arXiv:1611.01144 (2016).

- Jim et al. (1996) K.-C. Jim et al. 1996. An analysis of noise in recurrent neural networks: convergence and generalization. IEEE Transactions on neural networks 7, 6 (1996), 1424–1438.

- Joshi et al. (2020) Vinay Joshi, Manuel Le Gallo, Simon Haefeli, Irem Boybat, Sasidharan Rajalekshmi Nandakumar, Christophe Piveteau, Martino Dazzi, Bipin Rajendran, Abu Sebastian, and Evangelos Eleftheriou. 2020. Accurate deep neural network inference using computational phase-change memory. Nature Communications 11, 1 (2020), 1–13.

- Kendall et al. (2020) Jack Kendall, Ross Pantone, Kalpana Manickavasagam, Yoshua Bengio, and Benjamin Scellier. 2020. Training End-to-End Analog Neural Networks with Equilibrium Propagation. arXiv:cs.NE/2006.01981

- Khwa et al. (2018) W.-S. Khwa et al. 2018. A 65nm 4Kb algorithm-dependent computing-in-memory SRAM unit-macro with 2.3 ns and 55.8 TOPS/W fully parallel product-sum operation for binary DNN edge processors. In IEEE ISSCC. 496–498.

- Klachko et al. (2019) Michael Klachko, Mohammad Reza Mahmoodi, and Dmitri Strukov. 2019. Improving noise tolerance of mixed-signal neural networks. In 2019 International Joint Conference on Neural Networks (IJCNN). IEEE, 1–8.

- LeCun et al. (1998) Y. LeCun et al. 1998. Gradient-based learning applied to document recognition. Proc. IEEE 86, 11 (1998), 2278–2324.

- Li et al. (2016) F. Li et al. 2016. Ternary weight networks. arXiv:1605.04711 (2016).

- Ma et al. (2019) Siming Ma, Marco Donato, Sae Kyu Lee, David Brooks, and Gu-Yeon Wei. 2019. Fully-CMOS Multi-Level Embedded Non-Volatile Memory Devices With Reliable Long-Term Retention for Efficient Storage of Neural Network Weights. IEEE Electron Device Letters 40, 9 (2019), 1403–1406.

- Maddison et al. (2016) C. J. Maddison et al. 2016. The concrete distribution: A continuous relaxation of discrete random variables. arXiv:1611.00712 (2016).

- Merolla et al. (2016) Paul Merolla, Rathinakumar Appuswamy, John Arthur, Steve K Esser, and Dharmendra Modha. 2016. Deep neural networks are robust to weight binarization and other non-linear distortions. arXiv preprint arXiv:1606.01981 (2016).

- Miyahara et al. (2008) Masaya Miyahara, Yusuke Asada, Daehwa Paik, and Akira Matsuzawa. 2008. A low-noise self-calibrating dynamic comparator for high-speed ADCs. In 2008 IEEE Asian Solid-State Circuits Conference. IEEE, 269–272.

- Moon et al. (2019) Suhong Moon, Kwanghyun Shin, and Dongsuk Jeon. 2019. Enhancing reliability of analog neural network processors. IEEE Transactions on Very Large Scale Integration (VLSI) Systems 27, 6 (2019), 1455–1459.

- Murray et al. (1994) A. Murray et al. 1994. Enhanced MLP performance and fault tolerance resulting from synaptic weight noise during training. IEEE Transactions on neural networks 5, 5 (1994), 792–802.

- Ng et al. (2018) A. Ng et al. 2018. Recurrent neural network: gated recurrent unit (GRU). https://www.youtube.com/watch?v=xSCy3q2ts44

- Ott et al. (2016) J. Ott et al. 2016. Recurrent neural networks with limited numerical precision. arXiv:1608.06902 (2016).

- Rastegari et al. (2016) M. Rastegari et al. 2016. Xnor-net: Imagenet classification using binary convolutional neural networks. In European Conference on Computer Vision. Springer, 525–542.

- Reed et al. (1995) R. Reed et al. 1995. Similarities of error regularization, sigmoid gain scaling, target smoothing, and training with jitter. IEEE Transactions on Neural Networks 6, 3 (1995), 529–538.

- Rezende et al. (2014) D. Rezende et al. 2014. Stochastic backpropagation and approximate inference in deep generative models. arXiv:1401.4082 (2014).

- Salakhutdinov et al. (2009) R. Salakhutdinov et al. 2009. Semantic hashing. International Journal of Approximate Reasoning 50, 7 (2009), 969–978.

- Shafiee et al. (2016) A. Shafiee et al. 2016. ISAAC: A convolutional neural network accelerator with in-situ analog arithmetic in crossbars. ACM SIGARCH Computer Architecture News 44, 3 (2016), 14–26.

- Sheu et al. (2011) S.-S. Sheu et al. 2011. A 4Mb embedded SLC resistive-RAM macro with 7.2 ns read-write random-access time and 160ns MLC-access capability. In IEEE ISSCC. 200–202.

- Song et al. (2017) L. Song et al. 2017. Pipelayer: A pipelined ReRAM-based accelerator for deep learning. In IEEE HPCA. 541–552.

- Ward-Foxton (2020) Sally Ward-Foxton. 2020. Research Proves End-to-End Analog Chips for AI Computation Possible. EETimes (2020). https://www.eetimes.com/research-breakthrough-promises-end-to-end-analog-chips-for-ai-computation/

- Warden (2018) P. Warden. 2018. Speech commands: A dataset for limited-vocabulary speech recognition. arXiv:1804.03209 (2018).

- Wu et al. (2014) S.-Y. Wu et al. 2014. An enhanced 16nm CMOS technology featuring 2 nd generation FinFET transistors and advanced Cu/low-k interconnect for low power and high performance applications. In IEEE IEDM. IEEE, 3–1.

- Xiao et al. (2020) T Patrick Xiao, Christopher H Bennett, Ben Feinberg, Sapan Agarwal, and Matthew J Marinella. 2020. Analog architectures for neural network acceleration based on non-volatile memory. Applied Physics Reviews 7, 3 (2020), 031301.

- Yang and Sze (2019) Tien-Ju Yang and Vivienne Sze. 2019. Design Considerations for Efficient Deep Neural Networks on Processing-in-Memory Accelerators. In 2019 IEEE International Electron Devices Meeting (IEDM). IEEE, 22–1.

- Zhang et al. (2016) J. Zhang et al. 2016. A machine-learning classifier implemented in a standard 6T SRAM array. In IEEE VLSI-Circuits. 1–2.