A Birotation Solution for Relative Pose Problems

Abstract

Relative pose estimation, a fundamental computer vision problem, has been extensively studied for decades. Existing methods either estimate and decompose the essential matrix or directly estimate the rotation and translation to obtain the solution. In this article, we break the mold by tackling this traditional problem with a novel birotation solution. We first introduce three basis transformations, each associated with a geometric metric to quantify the distance between the relative pose to be estimated and its corresponding basis transformation. Three energy functions, designed based on these metrics, are then minimized on the Riemannian manifold by iteratively updating the two rotation matrices. The two rotation matrices and the basis transformation corresponding to the minimum energy are ultimately utilized to recover the relative pose. Extensive quantitative and qualitative evaluations across diverse relative pose estimation tasks demonstrate the superior performance of our proposed birotation solution. Source code, demo video, and datasets will be available at mias.group/birotation-solution upon publication.

Index Terms:

Relative pose, birotation solution, basis transformationsI Introduction

Relative pose estimation is the core of various computer vision applications, including stereo camera extrinsic calibration [1, 2, 3], visual odometry [4, 5, 6], and so forth. This process estimates a transformation matrix, defined as follows:

| (1) |

where denotes the rotation matrix, represents the translation vector, and is a column vector of zeros. This problem can be solved by estimating an essential matrix , which satisfies the following epipolar constraint:

| (2) |

and decomposing it into and , where and represent a pair of 3-D correspondences in the reference and target camera coordinate systems, respectively111In this article, the subscripts “1” and “2” denote the reference and target views, respectively. . Alternatively, can be directly estimated based on the following relation:

| (3) |

where denotes the homogeneous coordinates of . Although both methods provide viable solutions for determining the relative pose, they still have significant limitations.

In the first approach, the essential matrix inherently possesses only five degrees of freedom (DoFs), yet its estimation typically requires solving for eight or nine unknown parameters, which can undermine both the uniqueness and stability of the solution [7, 8]. In particular, overfitting to inaccurately matched correspondences can substantially impair the performance of relative pose estimation [9]. To address this issue, researchers have resorted to correspondence refinement strategies [10, 11, 12, 13] and additional geometric constraints [8, 14]. Additionally, this approach cannot solve pure rotation problems, as the essential matrix degenerates to a zero matrix under such conditions [15].

In the second approach, the choice of the optimization algorithm hinges on the availability of depth information. When depth is known, relative pose estimation can be formulated as a perspective-n-point (PnP) problem [16], which can be solved reliably and efficiently using methods such as efficient PnP [17]. In contrast, when depth is unknown, the scale of the translation vector becomes ambiguous, necessitating its treatment as a unit vector [18]. Normalizing , nevertheless, increases the risk of the optimization process falling into local minima, posing significant challenges in achieving stable and accurate solutions [19].

Substituting the translation vector with a rotation matrix and using two rotation matrices to solve the relative pose problem can avoid the instability caused by the absence of depth information while introducing only one additional dimensionality to the solution space [19]. Although this concept has been investigated in previous studies [2, 3, 19], its practical application remains predominantly confined to stereo camera extrinsic online calibration, where the rotation matrix approximates the identity matrix and the translation vector aligns closely with the X-axis [3, 19]. Therefore, these methods fall short of providing a stable solution for general relative pose estimation problems.

To address this well-studied yet enduring problem, we propose a novel birotation solution in this article. Our study effectively expands existing methods, initially designed for stereo camera extrinsic online calibration, to tackle a broader range of relative pose problems. Specifically, we first define three basis transformations along the X-, Y-, and Z-axes. Three geometric metrics are then designed to quantify the distances between the relative pose to be estimated and these basis transformations. Each metric can be employed to construct an energy function , which includes a regularization term to guarantee the uniqueness of the solution. The energy function is minimized on the Riemannian manifold through iterative updates of the reference and target camera coordinate systems with two independent rotation matrices, and . These optimization processes yield three locally optimal birotation solutions, each defined by a pair of rotations, a scaling factor, and a coordinate axis. The birotation solution with the minimal geometric metric is then selected as the optimal solution, from which the relative pose can be directly derived. Extensive experiments across a variety of relative pose estimation tasks demonstrate the feasibility of our proposed solution and its greater accuracy over all other state-of-the-art (SoTA) methods.

In a nutshell, our contributions are as follows:

-

•

We revisit the relative pose problem from a fresh perspective and propose a novel birotation solution.

-

•

We introduce three basis transformations and corresponding geometric metrics to expand relevant prior works, which primarily focus on stereo camera extrinsic calibration, to solve general relative pose problems.

-

•

We propose a highly efficient algorithm for relative pose estimation based on the birotation solution, which simultaneously optimizes three energy functions derived from geometric metrics.

-

•

We demonstrate the feasibility and superiority of our solution through quantitative and qualitative evaluations across diverse relative pose estimation tasks.

The remainder of this article is organized as follows: Sect. II reviews previous relative pose estimation algorithms. The proposed basis transformations, geometric metrics, birotation solutions, and the relative pose estimation algorithm are detailed in Sect. III. Sect. IV presents experimental results that demonstrate the effectiveness and superiority of our algorithm. The potential risks associated with the uniqueness of the birotation solution are discussed in Sect. V. Finally, we conclude this article and suggest directions for future work in Sect. VI.

II Related Work

For the relative pose problem, the essential matrix estimation process under the epipolar constraint in (2) can be formulated as follows:

| (4) |

where represents the number of 3-D correspondences, and and denote the -th pair of 3-D correspondences in the reference and target camera coordinate systems, respectively. Although the eight-point method [7] can effectively solve such an -point problem, it necessitates highly accurate correspondences. The five-point method, combined with random sample consensus (RANSAC) [8, 9], proves effective in addressing cases where correspondences are less reliable. Furthermore, existing local optimization approaches [20, 21, 14] either suffer from low efficiency or are prone to becoming trapped in local minima. While manifold-based approaches [22, 23, 14] provide insightful constructions of the solution space, they often fail to guarantee iterative convergence in complex relative pose problems [12]. To overcome these challenges, the study [12] reformulates the -point problem as a quadratically constrained quadratic program (QCQP) and utilizes semi-definite relaxation to achieve a globally optimal solution, demonstrating polynomial-time convergence.

Different from the above-mentioned methods, the study [18] directly estimates and by solving the following optimization problem based on the epipolar constraint:

| (5) |

where denotes cross-product matrix of . However, this method demonstrates limited stability in estimating , primarily due to the significant non-convexity of the energy function introduced by and the normalization process of [19].

In recent years, data-driven methods, such as PoseNet [24], have gained significant popularity. Prior studies predominantly utilize convolutional neural networks, often employing a ResNet-18, to directly regress the 6-DoF relative poses, which include three Euler angles and three translation vector elements. Nevertheless, these methods often demonstrate limited interpretability, generalization, and accuracy. Recent advancements, such as SC-Depth [25] and SCIPaD [26], have incorporated a monocular depth estimation branch to improve the overall performance of relative pose estimation. However, the insufficient geometric constraints between depth and relative pose incorporated during training limit the accuracy of these approaches.

As discussed above, the use of birotation for relative pose estimation remains confined to stereo camera extrinsic rectification. The study [3] uses two independent rotation matrices, and , to separately rotate the left and right camera coordinate systems. They derive the Euler angles for and by formulating and solving the following optimization problem based on stereo rectification:

| (6) |

where and denote the -th row vector of and , respectively. The recent study [19] improves this approach by incorporating geometry constraints to ensure solution uniqueness. Nonetheless, these approaches are applicable only to relatively ideal stereo vision models (with small perturbations) and cannot be generalized for broader relative pose estimation problems.

III Methodology

While it is geometrically feasible to obtain an ideal stereo vision model (a transformation along the X-axis) by rotating two cameras, solving for such a pure rotation problem becomes highly challenging, particularly when the actual relative pose significantly deviates from the ideal model. This challenge arises because existing birotation-based methods [3, 19] primarily utilize local optimization algorithms to address a non-convex problem. Their effectiveness is influenced by both the initialization and the penalty term in each energy function, which can misdirect the optimization process. This greatly limits their applicability to general relative pose problems. Therefore, Sect. III-A first introduces two additional transformations along the Y- and Z-axes, respectively. Subsequently, Sect. III-B defines the geometric metrics corresponding to these three basis transformations. These geometric metrics are then employed to formulate energy functions, the minimization of which yields three birotation solutions, as presented in Sect. III-C. Building upon these solutions, a novel relative pose estimation algorithm is introduced in Sect. III-D.

III-A Basis Transformations

Previous birotation-based methods [3, 19] have been confined to an ideal stereo vision model (both cameras have an identical intrinsic matrix), primarily because their energy functions are formulated based on the constraint that and , a given pair of correspondences in the two images, have the same vertical coordinates (i.e., ). Similar constraints can be observed when a single camera translates solely along either the Y-axis or the Z-axis. Specifically, the horizontal coordinates of and are identical (i.e., ) in the former case, while the ratios of their horizontal to vertical coordinates are identical (i.e., ) in the latter case.

Nevertheless, in general relative pose problems, the intrinsic matrices of the reference and target cameras may differ. It is therefore necessary to reformulate the above-mentioned constraints based on the following basis transformations:

| (7) |

where , , and are unit vectors representing pure translations along the X-, Y-, and Z-axes of the camera coordinate system, respectively, and denotes the scaling factor corresponding to each transformation. and have the following relation:

| (8) |

where represents the normalized coordinates of with respect to depth, denotes the homogeneous coordinates of , and

| (9) |

and

| (10) |

represent the intrinsic matrices of the reference and target cameras, respectively. By combining (7) and (8), we can reformulate the aforementioned three constraints into the following expressions:

| X-Axis: | (11) | |||

| Y-Axis: | (12) | |||

| Z-Axis: | (13) | |||

which serve as the foundation for developing geometric metrics in the subsequent subsection.

III-B Geometric Metrics

Existing methods [3, 19] formulate the energy function as the sum of absolute residuals calculated based on the constraint presented in (11). In this subsection, we comprehensively analyze the drawbacks of existing methods in terms of absolute residual accumulation, and introduce three geometric metrics defined to quantify the distances between the relative pose to be estimated and the basis transformations.

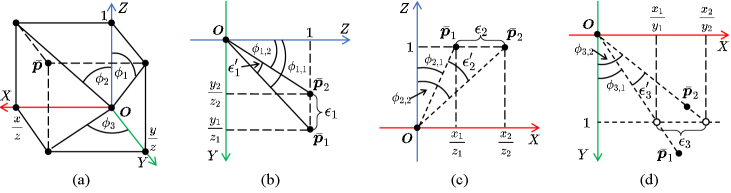

As illustrated in Fig. 1(a), and , the normalized coordinates of a pair of correspondences with respect to depth, can be respectively expressed using and as follows:

| (14) |

These angles satisfy the following relationship:

| (15) |

Combining (14) and (15) results in the following expressions:

| (16) |

According to (14) and (16), an absolute residual vector , formulated based on the geometric constraints presented in (11)-(13), can be expressed in terms of and as follows:

| (17) | ||||

, the -th element of , is illustrated in Fig. 1(b)-(d), respectively. As the relative pose to be estimated nears alignment with the -th basis transformation, approaches zero. The ratio of to can be formulated as follows:

| (18) | ||||

which leads to the following expression:

| (19) |

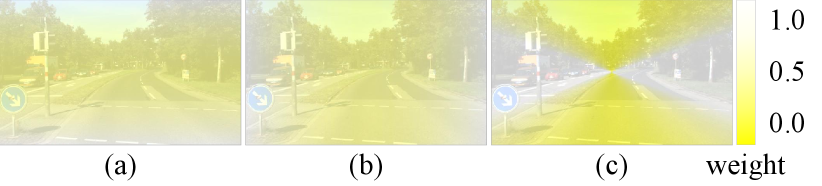

indicating that increases monotonically with respect to . Therefore, the implicit weights of residuals currently in use are subject to pixel coordinates. To illustrate this bias, we visualize the normalized implicit weights under different constraints, as shown in Fig. 2. Specifically, under the first constraint, the implicit weight increases along the vertical axis (see Fig. 2(a)), peaking near the top and bottom image boundaries and reaching a minimum near the horizontal midline. Similarly, under the second constraint, the implicit weight increases along the horizontal axis (see Fig. 2(b)), peaking near the left and right image boundaries and reaching a minimum near the vertical midline. Nonetheless, as illustrated in Fig. 2(c), under the third constraint, the implicit weights along the horizontal midline reach the maximum, whereas those along the vertical midline reach the minimum. In addition, the implicit weights are symmetric about the image center and decrease monotonically as the line passing through the center rotates from the horizontal to the vertical midline. This phenomenon emphasizes the instability of previous relative pose estimation approaches, particularly when correspondences from specific regions, such as treetops or sky, are utilized.

To overcome this limitation, we adjust the three constraints in (11)-(13) using an inverse trigonometric function, as expressed as follows:

| X-Axis: | (20) | |||

| Y-Axis: | (21) | |||

| Z-Axis: | (22) |

The new absolute residual vector can therefore be expressed as follows:

| (23) |

which eliminates the influence of implicit weights in residual accumulation. Comparisons between the absolute residuals and are also given in Fig. 1(b)-(d).

In accordance with the new constraints defined in (20)-(22), we introduce three geometric metrics that correspond to the three basis transformations as follows:

| (24) | |||

| (25) | |||

| (26) |

The value of represents the distance between the actual relative pose and the -th basis transformation. A smaller indicates a closer alignment between the actual relative pose and the -th basis transformation. When exactly one of the three distances approaches zero, the actual relative pose aligns with the corresponding basis transformation. Additionally, it is worth noting that when the camera remains stationary, all three distances approach zero.

III-C The Birotation Solution

Building on the above-mentioned basis transformations and geometric metrics, we introduce three birotation solutions to the relative pose estimation problem.

We use two rotation matrices, and , to apply pure rotation transformations to the reference and target camera coordinate systems, respectively, in order to align them with the -th basis transformation. This process can be formulated as follows:

| (27) |

where denotes the transformed coordinates of in the new camera coordinate systems. We refer to (27) as the -th birotation model for each , where the corresponding parameters , , , and together constitute the -th birotation solution for the relative pose problem.

By substituting the -th birotation model into the geometric metrics presented in (24)-(26), the three geometric metrics can be reformulated as functions of and , as follows:

| (28) |

where forms an even permutation [27] of the set when , and an odd permutation when . Given the scaling factor , the -th birotation solution can be obtained by minimizing .

The relative pose and the essential matrix can be derived from the birotation solutions. By multiplying both sides of with the matrix , we have the following expression:

| (29) |

According to the -th birotation model defined in (27), the following relationship holds:

| (30) |

By combining (29) and (30), the rotation matrix and translation vector can be determined from the -th birotation solution as follows:

| (31) |

Ideally, the three birotation solutions yield an identical relative pose. The essential matrix can then be derived from the birotation solutions as follows:

| (32) | ||||

However, it should be noted here that all three birotation solutions are not unique, as each solution has six DoFs (three from each rotation matrix), while the relative pose problem with ambiguous scale has only five DoFs. Specifically, rotating both the reference and target camera coordinate systems by the same angle around the translation vector does not change their relative pose, which geometrically demonstrates the redundant DoF in each birotation solution. Thus, additional geometric constraints should be incorporated into our proposed relative pose estimation algorithm, detailed in the next subsection, to resolve this ambiguity.

III-D Relative Pose Estimation Algorithm

According to the constraints presented in (20)-(22) and the birotation models defined in (27), we can obtain three -entry residual vectors using pairs of correspondences, as expressed as follows:

| (33) |

where

| (34) | |||

and denote the rotation vectors corresponding to the rotation matrices and in the -th birotation model, respectively, and represent the normalized coordinates of the -th pair of correspondences with respect to depth, and denote the -th and -th row vector of , respectively, and and are the -th and -th row vector of , respectively. , the discretized approximation of defined in (28), can be yielded by accumulating residuals defined in (34) as follows:

| (35) |

where is a diagonal matrix in which each element adaptively takes a value of either 0 or 1 to indicate the reliability of the -th pair of correspondences. By incorporating a regularization term into , the complete energy function for the -th birotation model can be formulated as follows:

| (36) |

where denotes the regularization term, and represents the regularization parameter. The regularization term is incorporated to reduce the energy function’s non-convexity and ensure the solution’s uniqueness.

The -th birotation solution can be yielded by minimizing (36) using our newly designed iterative optimization approach. Specifically, based on (31), the three birotation solutions are initialized using the prior rotation and translation as follows:

| (37) | |||

| (38) | |||

| (39) | |||

| (40) | |||

| (41) |

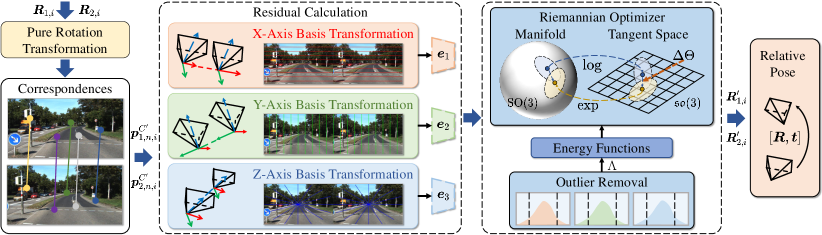

An overview of a single iteration of the optimization process is illustrated in Fig. 3. We first derive a first-order approximation of , which is then applied to the energy function as follows:

| (42) |

where denotes the increment vector at each iteration, and

| (43) |

is a matrix storing the gradients of with respect to and computed using the left perturbation model [18, 19], which avoids the need to compute the Baker-Campbell-Hausdorff Jacobian matrix at each iteration. Under the definitions of and , we can derive the following expressions:

| (44) | |||

| (45) | |||

| (46) |

Setting the derivative of (42) with respect to to zero yields the following expression:

| (47) |

Subsequently, and are updated using the left perturbation model, as follows:

| (48) |

where and denote the updated rotation matrices after one iteration. The iterative process terminates when either the value or the change rate of falls below predefined thresholds. After the iteration terminates, the final solution is selected from the three birotation solutions based on the following criteria:

| (49) |

where weighting coefficients can be empirically adjusted to prioritize one of the three birotation solutions, which can greatly enhance the reliability of the final solution. The relative rotation and the line along which the translation vector lies can then be derived from , , and their corresponding index using (31).

In addition, although the absolute value of the scaling factor is unknown, its sign can be determined based on the transformed coordinates and using the following expression when and are positive:

| (50) |

When the depth of either or is negative, the direction of the translation is determined by computing the depths of both and . The translation yielding two positive depths is selected as the valid solution. With convergence-related parameters fixed, the computational complexity of our relative pose estimation algorithm remains .

IV Experiments

Sect. IV-A provides implementation details on the proposed algorithm and the methods used for comparison. Sect. IV-B introduces the four public datasets utilized for performance comparison, along with the datasets newly created for ablation studies and robustness analyses. The evaluation metrics are detailed in Sect. IV-C. Sect. IV-D presents extensive quantitative and qualitative comparisons between the proposed method and previous SoTA approaches. In Sect. IV-E, ablation studies are conducted to evaluate the contributions of the energy functions and the effectiveness of the constraint adjustments. Finally, Sect. IV-F analyzes the robustness of our approach under different initialization strategies, correspondence noise levels, and matching error rates.

IV-A Implementation Details

IV-A1 Correspondence Matching

For both comparisons with SoTA methods and ablation studies, we adopt LoFTR [28], trained on the MegaDepth dataset [29], to obtain dense correspondences. This is primarily because in (28) can be closely approximated with in (35) when correspondences are dense. Additionally, we employ SIFT [30] in conjunction with LightGlue [31], also trained on the MegaDepth dataset, to further evaluate the robustness of our algorithm over other relative pose estimation algorithms under sparse correspondence conditions.

| Algorithm | Dense Correspondence | Sparse Correspondence | ||||||

| @ | @ | @ | @ | @ | @ | @ | @ | |

| Nistér [8] | 0.56 | 6.84 | 15.24 | 31.49 | 0.74 | 7.82 | 16.54 | 33.44 |

| Helmke et al. [14] | 0.05 | 0.89 | 2.76 | 7.42 | 0.08 | 2.21 | 5.51 | 14.11 |

| Zhao [12] | 0.00 | 0.04 | 0.23 | 1.49 | 0.00 | 0.07 | 0.40 | 2.12 |

| Ling et al. [18] | 0.83 | 8.39 | 16.55 | 32.15 | 0.58 | 8.11 | 17.18 | 34.56 |

| Zhao et al. [19] | 0.63 | 6.34 | 12.83 | 26.16 | 0.74 | 6.62 | 13.93 | 29.48 |

| Ours | 1.08 | 9.56 | 18.65 | 33.08 | 1.05 | 8.53 | 17.75 | 33.94 |

| Algorithm | Dense Correspondence | Sparse Correspondence | ||||||

| @ | @ | @ | @ | @ | @ | @ | @ | |

| Nistér [8] | 6.57 | 26.84 | 41.65 | 62.01 | 6.95 | 26.74 | 41.18 | 60.74 |

| Helmke et al. [14] | 0.84 | 6.03 | 11.58 | 22.80 | 1.50 | 10.17 | 18.68 | 33.75 |

| Zhao [12] | 0.04 | 0.76 | 2.44 | 9.04 | 0.07 | 0.96 | 2.86 | 9.78 |

| Ling et al. [18] | 9.50 | 31.81 | 46.59 | 65.51 | 10.00 | 31.13 | 45.13 | 63.53 |

| Zhao et al. [19] | 5.04 | 21.25 | 33.36 | 52.27 | 5.61 | 21.85 | 33.71 | 52.19 |

| Ours | 10.15 | 32.02 | 45.89 | 63.45 | 9.49 | 29.19 | 41.67 | 58.22 |

| Rotation Angle | Algorithm | Dense Correspondence | Sparse Correspondence | ||||||

| @ | @ | @ | @ | @ | @ | @ | @ | ||

| Nistér [8] | 47.90 | 52.06 | 52.89 | 53.52 | 64.87 | 70.69 | 71.86 | 72.73 | |

| Helmke et al. [14] | 47.92 | 52.59 | 53.57 | 54.44 | 67.24 | 73.91 | 75.47 | 76.77 | |

| Zhao [12] | 89.00 | 96.33 | 97.80 | 98.90 | 90.13 | 96.71 | 98.03 | 99.01 | |

| Ling et al. [18] | 88.75 | 96.25 | 97.75 | 98.87 | 88.82 | 96.27 | 97.76 | 98.88 | |

| Zhao et al. [19] | 68.36 | 74.09 | 75.24 | 76.10 | 76.11 | 82.19 | 83.41 | 84.32 | |

| Hartley [34] | 91.45 | 97.15 | 98.29 | 99.14 | 92.83 | 97.61 | 98.57 | 99.28 | |

| Ours | 91.77 | 97.26 | 98.35 | 99.18 | 90.54 | 96.85 | 98.11 | 99.05 | |

| Nistér [8] | 53.47 | 58.09 | 59.02 | 59.71 | 66.30 | 72.51 | 73.75 | 74.68 | |

| Helmke et al. [14] | 52.02 | 56.99 | 58.00 | 58.75 | 68.76 | 75.76 | 77.37 | 78.69 | |

| Zhao [12] | 89.55 | 96.52 | 97.91 | 98.96 | 89.69 | 96.42 | 97.76 | 98.77 | |

| Ling et al. [18] | 88.87 | 96.29 | 97.77 | 98.89 | 88.94 | 96.31 | 97.79 | 98.89 | |

| Zhao et al. [19] | 69.56 | 75.69 | 76.92 | 77.83 | 77.34 | 83.81 | 85.10 | 86.06 | |

| Hartley [34] | 90.80 | 96.63 | 97.80 | 98.68 | 91.64 | 96.91 | 97.97 | 98.76 | |

| Ours | 90.67 | 96.59 | 97.78 | 98.66 | 90.63 | 96.88 | 98.13 | 99.06 | |

| Nistér [8] | 54.00 | 58.72 | 59.66 | 60.37 | 68.67 | 75.69 | 77.09 | 78.14 | |

| Helmke et al. [14] | 51.75 | 57.20 | 58.39 | 59.43 | 72.44 | 80.18 | 81.83 | 83.08 | |

| Zhao [12] | 87.54 | 94.50 | 95.90 | 96.94 | 82.82 | 89.20 | 90.48 | 91.44 | |

| Ling et al. [18] | 88.83 | 96.28 | 97.77 | 98.88 | 88.90 | 96.30 | 97.78 | 98.89 | |

| Zhao et al. [19] | 69.97 | 76.72 | 78.07 | 79.08 | 80.28 | 87.01 | 88.36 | 89.37 | |

| Hartley [34] | 89.72 | 95.53 | 96.69 | 97.56 | 90.24 | 96.15 | 97.33 | 98.22 | |

| Ours | 90.03 | 96.23 | 97.47 | 98.40 | 89.75 | 96.14 | 97.41 | 98.37 | |

IV-A2 Compared SoTA Algorithms

We first compare our algorithm with the classical five-point algorithm used in conjunction with RANSAC [8] (implemented in OpenCV with default parameters), which is an explicit solver whose output is then used as the initialization for subsequent local optimization methods. These local optimization methods include the manifold-based method [14], the method that directly estimates rotation and translation [18], and the birotation-based method [19]. Moreover, we also use the published code of the QCQP-based global optimization method [12] to conduct comparative experiments. In addition, the homography estimation method [34], as implemented in OpenCV (all parameters are set by default), is also used for further comparison under the pure rotation condition. In the monocular visual odometry task, we also compare our method with two SoTA data-driven methods, SC-Depth [25] and SCIPaD [26], both trained on sequences 00–08 of the KITTI Odometry dataset [35].

IV-A3 Parameter Settings in Our Algorithm

The regularization parameter defined in (36) is set to . The coefficients in (49) are empirically set to in the stereo camera extrinsic calibration experiment, in the monocular visual odometry experiment, and the default values in all other experiments. This is because the ground-truth relative poses in the stereo camera extrinsic calibration and monocular visual odometry tasks typically align more closely with the first and third basis transformations defined in (7), respectively. Our method is also initialized using the output of the five-point algorithm with RANSAC [8]. In addition, the upper quartile method [36] is employed in each iteration to compute in (36) and remove outliers. The iteration terminates when either the value of falls below or its rate of change falls below .

| Scenes | Correspondence | Algorithm | @1∘ | @3∘ | @5∘ | @10∘ | ||||||

| Indoor | Dense | Nistér [8] | 1.85 | 6.07 | 1.77 | 6.02 | 6.52 | 11.23 | 5.47 | 26.30 | 42.40 | 64.50 |

| Helmke et al. [14] | 8.61 | 8.77 | 7.01 | 17.33 | 12.30 | 29.34 | 0.37 | 6.35 | 13.99 | 34.02 | ||

| Zhao [12] | 2.67 | 13.74 | 3.13 | 61.06 | 15.74 | 75.79 | 0.00 | 4.99 | 16.18 | 29.89 | ||

| Ling et al. [18] | 0.37 | 2.51 | 0.49 | 5.77 | 2.44 | 8.85 | 30.21 | 60.10 | 72.95 | 84.06 | ||

| Zhao et al. [19] | 41.53 | 4.11 | 6.28 | 6.69 | 2.22 | 3.79 | 32.08 | 66.01 | 77.34 | 86.74 | ||

| Ours | 0.27 | 2.14 | 0.43 | 0.70 | 1.52 | 2.85 | 39.10 | 70.74 | 81.41 | 90.25 | ||

| Sparse | Nistér [8] | 2.01 | 7.52 | 2.24 | 11.98 | 6.29 | 13.60 | 6.44 | 25.48 | 40.79 | 62.34 | |

| Helmke et al. [14] | 2.65 | 7.93 | 3.82 | 15.89 | 13.08 | 24.95 | 0.63 | 7.63 | 16.28 | 34.78 | ||

| Zhao [12] | 2.74 | 13.34 | 3.42 | 51.61 | 15.65 | 67.92 | 0.00 | 5.28 | 17.18 | 31.65 | ||

| Ling et al. [18] | 0.40 | 2.43 | 0.43 | 7.73 | 2.54 | 10.97 | 29.00 | 57.31 | 69.27 | 81.31 | ||

| Zhao et al. [19] | 41.09 | 6.59 | 4.97 | 13.47 | 2.79 | 5.20 | 35.55 | 68.01 | 78.28 | 86.85 | ||

| Ours | 0.25 | 2.17 | 0.38 | 2.85 | 1.66 | 4.19 | 36.67 | 70.41 | 80.60 | 89.47 | ||

| Outdoor | Dense | Nistér [8] | 1.31 | 6.40 | 1.03 | 2.36 | 3.35 | 15.82 | 2.86 | 16.19 | 28.36 | 52.39 |

| Helmke et al. [14] | 2.24 | 6.18 | 3.19 | 6.51 | 7.50 | 17.51 | 0.77 | 8.28 | 19.38 | 44.64 | ||

| Zhao [12] | 1.10 | 1.24 | 0.66 | 4.48 | 6.00 | 11.83 | 0.00 | 11.72 | 40.89 | 67.99 | ||

| Ling et al. [18] | 0.40 | 1.40 | 0.29 | 0.40 | 1.55 | 7.46 | 13.55 | 39.69 | 56.50 | 76.31 | ||

| Zhao et al. [19] | 0.26 | 0.87 | 0.22 | 1.32 | 0.87 | 1.67 | 44.71 | 80.12 | 88.07 | 94.04 | ||

| Ours | 0.23 | 0.96 | 0.20 | 0.12 | 0.64 | 1.30 | 52.15 | 83.69 | 90.22 | 95.11 | ||

| Sparse | Nistér [8] | 1.22 | 5.91 | 1.15 | 2.37 | 3.43 | 14.89 | 4.71 | 19.61 | 32.86 | 55.13 | |

| Helmke et al. [14] | 2.54 | 6.83 | 3.76 | 8.55 | 6.79 | 17.42 | 1.84 | 10.31 | 22.49 | 46.63 | ||

| Zhao [12] | 0.43 | 1.14 | 0.31 | 1.36 | 6.01 | 9.34 | 0.19 | 11.86 | 40.22 | 68.71 | ||

| Ling et al. [18] | 0.39 | 1.28 | 0.34 | 0.46 | 1.62 | 7.58 | 16.98 | 42.38 | 57.84 | 76.46 | ||

| Zhao et al. [19] | 0.25 | 0.83 | 0.24 | 1.31 | 0.83 | 1.41 | 52.36 | 83.12 | 89.87 | 94.93 | ||

| Ours | 0.25 | 0.77 | 0.23 | 0.10 | 0.55 | 0.91 | 64.36 | 88.06 | 92.83 | 96.42 |

IV-B Datasets

IV-B1 Public Datasets

- •

- •

-

•

The KITTI Odometry dataset [35] consists of well-rectified stereo video sequences and ground-truth trajectories collected from 22 real-world driving scenarios. Existing data-driven approaches, including the two networks we compare [25, 26], are typically trained on sequences 0 to 8 and evaluated on sequences 9 and 10. In our experiments, we evaluate all explicitly programming-based algorithms on sequences 0 to 10. Although this may introduce a slight bias when compared to data-driven approaches, the experimental results presented in Sect. IV-D3 demonstrate the superiority of our method over them. Additionally, we select 300 consecutive video frames from KITTI Odometry sequence 0, where the relative pose closely aligns with the third basis transformation, to conduct an ablation study. The results demonstrate the advantage of using three energy functions for relative pose estimation over a single energy function. The images used in the ablation study are publicly available for result reproduction.

-

•

The KITTI Raw dataset [35] consists of raw video sequences and ground-truth trajectories collected from 56 real-world driving scenarios. In our experiments, we select around 500 images as reference frames and generate three subsets of target frames by applying random rotations with angles of up to , , and , respectively. These subsets are used to evaluate the performance of relative pose estimation algorithms when only rotation is involved. The images used for the pure rotation experiment are publicly available for result reproduction.

IV-B2 New Real-World Datasets

As discussed above, ablation studies are required to compare the performance of our algorithm when using three energy functions versus a single energy function. Therefore, in addition to the 300 images selected from the KITTI Odometry dataset, we also create two additional datasets (each containing 300 image pairs), in which the cameras are positioned on the left and right, and top and bottom, respectively. Given that a relatively ideal experimental setup may not comprehensively reflect the performance of our algorithm, we introduce slight perturbations to the stereo rig in practice. Moreover, we use the same stereo rig to collect an additional 600 image pairs (300 indoor and 300 outdoor) for the stereo camera extrinsic online calibration experiment.

IV-B3 New Synthetic Datasets

We also create two synthetic datasets to further evaluate the robustness of our algorithm. We first generate a synthetic 3-D point cloud by uniformly sampling points within a cubic volume in the world coordinate system. All cameras have the same intrinsic parameters. The reference camera coordinate system is aligned with the world coordinate system, whereas the target camera coordinate systems are randomly generated. The synthetic 3-D points are then projected onto each camera’s image plane to generate corresponding 2-D pixel coordinates. The details of the two synthetic datasets are as follows:

-

•

The first synthetic dataset is created by adding Gaussian noise to the 2-D correspondences, with the standard deviation ranging from 0 to 2 pixels in increments of 0.02 pixel. For each noise level, we generate 100 pairs of reference and target views, each observing 200 3-D points.

-

•

The second synthetic dataset is created under varying correspondence matching error rates, ranging from 0 to 0.3 in increments of 0.01. Correct correspondences are perturbed with slight Gaussian noise ( pixel), while erroneous correspondences are perturbed with stronger Gaussian noise ( pixels) to simulate outliers. For each error rate, we generate 100 pairs of reference and target views, each observing 200 3-D points.

All the synthetic datasets are publicly available for result reproduction.

IV-C Evaluation Metrics

To quantify the algorithm’s performance with respect to individual motion components, we follow the studies [18] and [19] to compute the average absolute rotation error in radians and average absolute translation error in millimeters, respectively, where denotes the total number of frames used for evaluation, denotes the -th rotation vector derived from the estimated rotation matrix , represents the ground-truth rotation vector, and and denote the -th estimated and ground-truth translation vectors, respectively. To further quantify the overall performance of the algorithm in rotation and translation estimation, we follow the study [12] to compute the rotation error and translation error , both measured in degrees. Moreover, we follow the study [37] to quantify the algorithm’s overall relative pose estimation performance using the area under the cumulative pose error curve (AUC) in percents, computed at thresholds of , , , and , respectively, where the pose error is defined as the maximum of and . In this article, denotes the AUC value at the threshold , with higher values indicating better performance. It is important to note that when only rotational motion is involved, the AUC is calculated solely based on the rotation error. Additionally, when evaluating the algorithm’s performance on the monocular visual odometry task, we adopt commonly used metrics as presented in [35], including the average translational error (%), the rotational error (m), and the absolute trajectory error (ATE) in meters [38]. To ensure fair comparisons, following the study [26], the scales of all estimated trajectories are aligned with the ground truth using 7-DoF optimization.

IV-D Comparisons with SoTA Algorithms

This subsection first evaluates the performance of our algorithm under challenging conditions. Additional experiments on the pure rotation dataset are then conducted to validate the effectiveness of our method when only rotations are involved. Finally, experiments on stereo camera extrinsic online calibration and monocular visual odometry are conducted to demonstrate the practicality of our algorithm in specific tasks.

IV-D1 Performance under Challenging Conditions

Since this study aims to extend the existing birotation-based approach [19] to general relative pose problems, it is necessary to evaluate the performance of our method on two public datasets, ScanNet and YFCC100M, where the ground-truth relative poses can be significantly different from any of the three predefined basis transformations, making correspondence matching considerably more challenging. Following the study [37], we compute the AUC (%) across varying thresholds (∘) to quantify the performance of relative pose estimation algorithms on both datasets.

As shown in Table I, our algorithm achieves SoTA performance on the ScanNet dataset for both dense and sparse correspondences, which demonstrates the effectiveness of our method in indoor scenarios. Moreover, the outdoor relative pose estimation results presented in Table II indicate that our approach performs on par with the best-performing method [18] when correspondences are dense, and achieves the second-best performance when correspondences are sparse. Notably, while all methods use the output of the algorithm [8] as the initial relative pose, the previous birotation-based approach [19] performs significantly worse than the algorithm [8], whereas our method achieves substantial improvements. This underscores the limitation of the previous study [19], which was primarily designed for relatively ideal stereo vision models, and demonstrates the effectiveness of our approach in broadening the applicability of the birotation solution by introducing two additional basis transformations. In addition, although our method performs slightly worse than the algorithm presented in [18] in outdoor scenarios, this discrepancy may be attributed to the outlier removal strategy incorporated in their approach. Unlike the upper quartile method used in our algorithm, their strategy depends on an empirically tuned hyperparameter (extensive hyperparameter tuning experiments are conducted in this study to ensure fair comparisons), which limits its practicality across diverse datasets.

IV-D2 Performance under Pure Rotation Conditions

Pure rotation represents a corner case in relative pose estimation. Nonetheless, most existing algorithms developed for general relative pose problems rarely take this corner case into consideration. Therefore, it is essential to evaluate the performance of existing methods under pure rotation conditions using the curated pure rotation dataset detailed in Sect. IV-B1. The results are shown in Table III.

As expected, the approaches [8, 14] that estimate the essential matrix perform poorly across all thresholds, which validates the limitations of these methods in this corner case, as discussed in Sect. I. While the approach [12] also estimates the essential matrix, it achieves significantly better performance, primarily because it incorporates statistical analyses to explicitly identify pure rotation cases. In addition, the algorithm [18], which directly estimates relative poses, demonstrates strong robustness in pure rotation scenarios. We attribute this to the normalization of the translation vector and the decoupled optimization of rotation and translation in their method.

Moreover, the previous birotation-based method [19] struggles to stably solve pure rotation problems, primarily due to its sensitivity to the initialization of relative poses induced by the penalty term. In contrast, our proposed algorithm demonstrates superior robustness across all evaluation thresholds, regardless of whether the correspondences are dense or sparse. In addition, our approach performs on par with the homography estimation approach [34], which is specifically designed for pure rotation scenarios, further validating the accuracy of our algorithm in this corner case.

IV-D3 Performance on Two Specific Tasks

Additionally, we conduct extensive experiments to demonstrate the applicability of our relative pose estimation algorithm to two specific computer vision tasks: stereo camera extrinsic online calibration and monocular visual odometry.

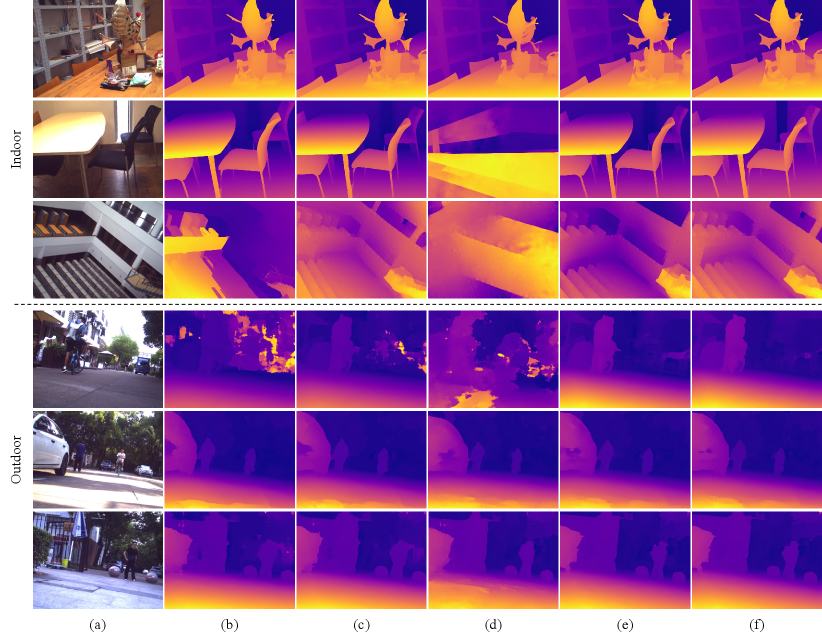

In addition to AUC, we follow the studies [18] and [19] to compute the average absolute rotation and translation errors in the stereo camera extrinsic online calibration experiment. As shown in Table IV, our algorithm consistently achieves SoTA performance in both indoor and outdoor scenarios. Notably, it increases the three translation components by approximately , , and in indoor scenarios, and by , , and in outdoor scenarios. As expected, the translation vectors estimated by the algorithm [18] are occasionally unsatisfactory, primarily due to the significant non-convexity of its energy function shown in (5). Moreover, although the previous birotation-based method [19], specifically designed for stereo camera extrinsic online calibration, achieves relatively high AUC, it yields unexpectedly large average absolute rotation and translation errors in indoor scenarios due to outliers in the estimated relative poses. These outliers are primarily caused by the inherent non-uniqueness of the birotation solution, as discussed in Sect. V. In contrast, our method ensures a unique solution by incorporating a regularization term into the energy function defined in (36). Furthermore, in outdoor scenarios, our method outperforms the method [19], primarily because the adjustments in constraints defined in (20)-(22) eliminate the influence of implicit weights in residual accumulation, as described in Sect. III-B. In addition, we also provide qualitative comparisons of different relative pose estimation approaches by visualizing disparity maps estimated using pre-trained deep stereo networks [39, 40]. These disparity maps are generated from stereo images rectified using the extrinsic parameters estimated by different relative pose estimation algorithms. As illustrated in Fig. 4, our method produces disparity maps with fewer erroneous regions compared to other approaches, which further validates its greater accuracy in both outdoor and indoor scenarios.

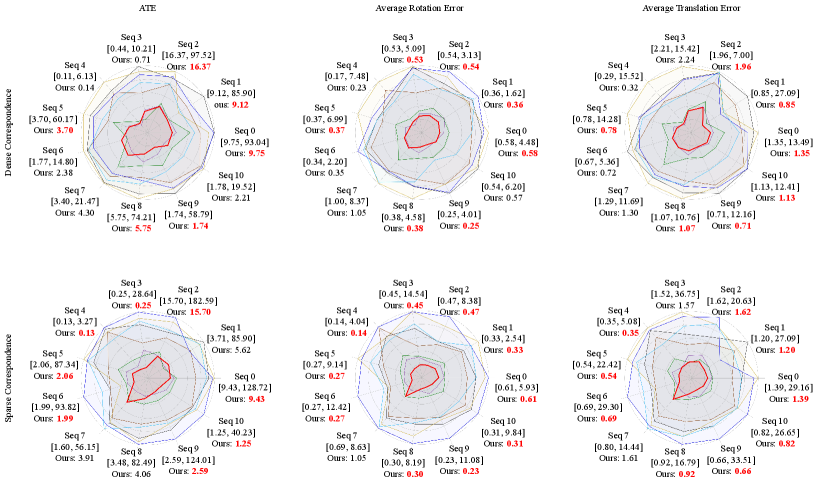

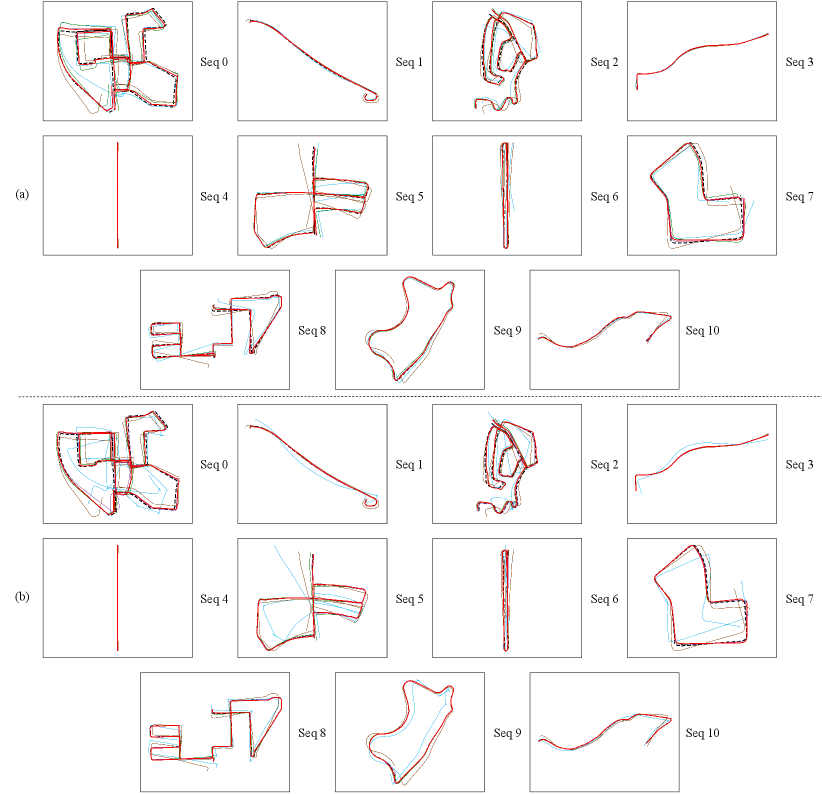

Quantitative comparisons of relative pose estimation algorithms for monocular visual odometry are depicted in Fig. 5, where our method achieves SoTA performance across all three matrices. Specifically, when correspondences are dense, our method achieves improvements of around in ATE, in , and in , while these gains increase to around , , and when using sparse correspondences. Notably, our algorithm outperforms existing approaches on Seqs 0, 2, 5, 8, and 9, which were collected along complex motion trajectories. These results underscore the robustness of our method for monocular visual odometry, particularly in challenging scenarios. As expected, the previous birotation-based method [19] performs poorly on this task, as ground-truth relative poses are mostly aligned with the third basis transformation, making the approach originally designed for relatively ideal stereo vision model infeasible. Moreover, the two SoTA data-driven approaches underperform several explicit programming-based methods, as current learning-based visual odometry frameworks lack sufficient geometric constraints during training to ensure accurate relative pose estimation. We also visualize the trajectories obtained by the five top-performing approaches to further compare their monocular visual odometry performance. As illustrated in Fig. 6, our approach yields trajectories that closely align with the ground truth in all sequences when using both dense and sparse correspondences, which demonstrates its high accuracy and robustness in the monocular visual odometry task.

IV-E Ablation Studies

| Ground-Truth Relative Pose | @ | @ | @ | @ | |||

| Left-right | 55.94 | 85.02 | 91.01 | 95.51 | |||

| 54.35 | 83.37 | 89.62 | 94.54 | ||||

| 0.19 | 0.29 | 0.30 | 0.54 | ||||

| 55.94 | 85.02 | 91.01 | 95.51 | ||||

| Top-bottom | 3.69 | 20.66 | 32.16 | 48.49 | |||

| 8.84 | 44.67 | 63.69 | 81.36 | ||||

| 1.10 | 5.33 | 7.85 | 11.79 | ||||

| 8.93 | 44.54 | 63.61 | 80.94 | ||||

| Front-back | 2.13 | 6.73 | 8.71 | 10.49 | |||

| 1.69 | 3.89 | 4.67 | 5.69 | ||||

| 14.61 | 63.97 | 77.91 | 88.85 | ||||

| 14.61 | 63.97 | 77.91 | 88.85 |

In our proposed method, three energy functions are simultaneously optimized to obtain the optimal birotation solution. To validate the necessity of this strategy, we conduct an ablation study on the contribution of each energy function. As shown in Table V, optimizing a single energy function achieves performance comparable to optimizing all three energy functions when the ground-truth relative poses are closely aligned with the corresponding basis transformation. However, its performance degrades significantly in the other two cases, demonstrating the practical necessity of optimizing all three energy functions.

| Dataset | Adjustments | @ | @ | @ | @ |

| ScanNet [32] | 0.97 | 9.17 | 17.28 | 31.03 | |

| 1.08 | 9.56 | 18.65 | 33.08 | ||

| YFCC100M [33] | 9.49 | 29.73 | 42.61 | 59.46 | |

| 10.15 | 32.02 | 45.89 | 63.45 |

| Dataset | Algorithm | Dense Correspondence | Sparse Correspondence | ||||||

| @ | @ | @ | @ | @ | @ | @ | @ | ||

| ScanNet | Nistér [8] | 0.56 | 6.84 | 15.24 | 31.49 | 0.74 | 7.82 | 16.54 | 33.44 |

| +Ours | 1.08 | 9.56 | 18.65 | 33.08 | 1.05 | 8.53 | 17.75 | 33.94 | |

| Hartley [7] | 0.50 | 7.50 | 16.28 | 32.71 | 0.55 | 6.92 | 15.38 | 32.14 | |

| +Ours | 0.98 | 9.22 | 18.12 | 32.74 | 1.09 | 8.65 | 17.69 | 33.57 | |

| Barath et al. [13] | 0.89 | 9.30 | 18.26 | 34.41 | 0.66 | 8.01 | 17.15 | 35.11 | |

| +Ours | 1.11 | 9.59 | 18.86 | 34.07 | 1.08 | 8.98 | 18.23 | 34.46 | |

| YFCC100M | Nistér [8] | 6.57 | 26.84 | 41.65 | 62.01 | 6.95 | 26.74 | 41.18 | 60.74 |

| +Ours | 10.15 | 32.02 | 45.89 | 63.45 | 9.49 | 29.19 | 41.67 | 58.22 | |

| Hartley [7] | 8.60 | 30.16 | 44.83 | 64.05 | 8.04 | 28.24 | 42.31 | 61.54 | |

| +Ours | 10.14 | 32.06 | 45.83 | 63.23 | 9.61 | 29.35 | 41.93 | 58.41 | |

| Barath et al. [13] | 9.36 | 33.17 | 48.53 | 67.40 | 9.44 | 32.32 | 46.48 | 64.34 | |

| +Ours | 10.60 | 33.22 | 47.40 | 64.88 | 9.89 | 30.27 | 42.90 | 59.39 | |

In addition, to demonstrates the superiority of the adjusted constraints shown in (20)-(22) for residual accumulation, we conduct another ablation study on the ScanNet and YFCC100M datasets, both of which exhibit high diversity in ground-truth relative poses. As shown in Table VI, the adjusted constraints enable our algorithm to increase the average AUC by approximately on the ScanNet dataset and around on the YFCC100M dataset across different thresholds.

IV-F Robustness Analyses

We first evaluate the performance of our algorithm with respect to different initialization strategies on the ScanNet and YFCC100M datasets. In addition to the default method [8], we also use two other explicit pose estimation algorithms: the direct linear transformation approach [7] and the five-point algorithm combined with marginalizing sample consensus (MAGSAC) [13]. As shown in Table VII, our method performs stably across different initial relative poses on both datasets. Moreover, it achieves significant improvements over the methods used for initialization on the ScanNet dataset. However, on the YFCC100M dataset, our method performs slightly worse than the algorithm [13], as MAGSAC is particularly effective at removing a large number of outliers.

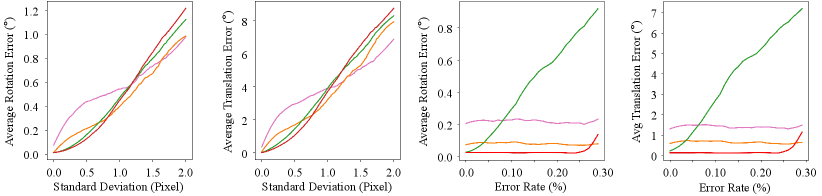

We also compute the average rotation and translation errors of the four top-performing algorithms on the two synthetic datasets to evaluate the robustness of our method under varying correspondence noise levels and matching error rates, respectively. As illustrated in Fig. 7(a)–(b), when the standard deviation of Gaussian noise on correspondences is below 0.8 pixels, our algorithm outperforms existing approaches. Nonetheless, as the standard deviation exceeds 0.8 pixels, the average rotation and translation errors of our method increase significantly compared to others. Additionally, as shown in Fig. 7(c)-(d), our method consistently achieves the lowest rotation and translation errors compared to existing approaches when the correspondence matching error rate is below 0.28. Nevertheless, as the proportion of incorrect correspondences increases, the accuracy of our method gradually degrades. These results indicate that our method is highly effective in scenarios with relatively accurate correspondences, but its performance degrades under conditions of extremely low-quality correspondence matching.

V Discussion

As mentioned earlier in the stereo camera extrinsic online calibration experiment, recovering the relative pose from a birotation solution is non-unique, resulting in outliers in the estimated relative poses. This section provides detailed analyses of this issue.

We begin by revisiting the non-uniqueness in essential matrix decomposition. Four possible solutions exist because the epipolar constraint remains satisfied when one or both camera coordinate systems are rotated by radians around the translation vector. Notably, when the relative pose aligns with one of the basis transformations, the epipolar constraint (2) simplifies to the corresponding constraint in (11), (12), and (13). Therefore, similar to the essential matrix decomposition, recovering the relative pose from a birotation solution also yields four possible solutions. Specifically, the constraint in (11) can be derived from the following two transformations:

| (51) | ||||

| (52) |

where

| (53) |

denotes a -radian rotation around the X-axis. The first birotation solution thus corresponds to four possible relative poses expressed as follows:

| (54) | ||||

| (55) | ||||

| (56) | ||||

| (57) |

Similarly, the constraint in (12) can also be derived from the following two transformations:

| (58) | ||||

| (59) |

where

| (60) |

denotes a -radian rotation around the Y-axis. The second birotation solution corresponds to four possible relative poses expressed as follows:

| (61) | ||||

| (62) | ||||

| (63) | ||||

| (64) |

Additionally, the constraint represented in (13) can be derived from the following two transformations:

| (65) | ||||

| (66) |

where

| (67) |

denotes a -radian rotation around the Z-axis. The third birotation solution thus corresponds to four possible relative poses expressed as follows:

| (68) | ||||

| (69) | ||||

| (70) | ||||

| (71) |

Similar to the process of determining the correct relative pose after essential matrix decomposition, all possible relative poses from birotation solutions can be enumerated, and the correct one can be identified by checking the depth signs of the reconstructed 3-D points. Nonetheless, in our proposed relative pose estimation algorithm, this process can be omitted when performing appropriate initialization. When the initial relative pose obtained from essential matrix decomposition is close to the correct solution, the regularization term in (36) ensures that the birotation solution does not converge to incorrect ones. Although our approach consistently produces unique solutions, as demonstrated in our experiments, there remains a potential risk of yielding non-unique solutions in practical applications, which we leave for future work.

VI Conclusion and Future Work

This article introduced a novel birotation solution for general relative pose problems. To expand the applicability of existing birotation-based methods, this study first presented three basis transformations and the corresponding geometric metrics, which serve as the foundation for the formulation of three energy functions. Relative poses can be accurately and robustly estimated by simultaneously minimizing these energy functions. This high-performing relative pose estimation algorithm significantly overcame the limitations of existing birotation-based methods, particularly by reducing reliance on initialization quality and alleviating the non-uniqueness in relative pose recovery. Extensive experiments conducted on both real-world and synthetic datasets demonstrate the superior performance of our algorithm over existing relative pose estimation methods across varying correspondence densities, as well as its strong robustness to different correspondence noise levels and matching error rates.

In the future, we are committed to further advancing our approach along the following three directions: (1) improving its efficiency by avoiding the simultaneous optimization of all three energy functions, (2) enhancing its robustness by incorporating a self-evolving correspondence matching network, and (3) ensuring the uniqueness of relative pose recovery from a birotation solution.

References

- [1] E. Dexheimer et al., “Information-theoretic online multi-camera extrinsic calibration,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 4757–4764, 2022.

- [2] T. Dang et al., “Continuous stereo self-calibration by camera parameter tracking,” IEEE Transactions on Image Processing, vol. 18, no. 7, pp. 1536–1550, 2009.

- [3] P. Hansen et al., “Online continuous stereo extrinsic parameter estimation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2012, pp. 1059–1066.

- [4] D. Nistér et al., “Visual odometry,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2004, DOI: 10.1109/CVPR.2004.1315094.

- [5] A. Levin and R. Szeliski, “Visual odometry and map correlation,” in Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2004, DOI: 10.1109/CVPR.2004.1315088.

- [6] W. Chen et al., “LEAP-VO: Long-term effective any point tracking for visual odometry,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024, pp. 19 844–19 853.

- [7] R. I. Hartley, “In defense of the eight-point algorithm,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 19, no. 6, pp. 580–593, 1997.

- [8] D. Nistér, “An efficient solution to the five-point relative pose problem,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 26, no. 6, pp. 756–770, 2004.

- [9] O. Chum and J. Matas, “Optimal randomized RANSAC,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 30, no. 8, pp. 1472–1482, 2008.

- [10] T. Wei et al., “Generalized differentiable RANSAC,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2023, pp. 17 649–17 660.

- [11] Y.-Y. Jau et al., “Deep keypoint-based camera pose estimation with geometric constraints,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 4950–4957.

- [12] J. Zhao, “An efficient solution to non-minimal case essential matrix estimation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 4, pp. 1777–1792, 2020.

- [13] D. Barath et al., “MAGSAC: Marginalizing sample consensus,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 10 197–10 205.

- [14] U. Helmke et al., “Essential matrix estimation using Gauss-Newton iterations on a manifold,” International Journal of Computer Vision, vol. 74, pp. 117–136, 2007.

- [15] Q. Cai et al., “Equivalent constraints for two-view geometry: Pose solution/pure rotation identification and 3D reconstruction,” International Journal of Computer Vision, vol. 127, pp. 163–180, 2019.

- [16] Y. Zheng et al., “Revisiting the PnP problem: A fast, general and optimal solution,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2013, pp. 2344–2351.

- [17] V. Lepetit et al., “EPnP: An accurate O(n) solution to the PnP problem,” International Journal of Computer Vision, vol. 81, pp. 155–166, 2009.

- [18] Y. Ling and S. Shen, “High-precision online markerless stereo extrinsic calibration,” in IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2016, pp. 1771–1778.

- [19] H. Zhao et al., “Dive deeper into rectifying homography for stereo camera online self-calibration,” in IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2024, pp. 14 479–14 485.

- [20] K. Kanatani and Y. Sugaya, “Unified computation of strict maximum likelihood for geometric fitting,” Journal of Mathematical Imaging and Vision, vol. 38, pp. 1–13, 2010.

- [21] Z. Zhang, “Determining the epipolar geometry and its uncertainty: A review,” International Journal of Computer Vision, vol. 27, pp. 161–195, 1998.

- [22] Y. Ma et al., “Optimization criteria and geometric algorithms for motion and structure estimation,” International Journal of Computer Vision, vol. 44, pp. 219–249, 2001.

- [23] R. Tron and K. Daniilidis, “The space of essential matrices as a Riemannian quotient manifold,” SIAM Journal on Imaging Sciences, vol. 10, no. 3, pp. 1416–1445, 2017.

- [24] A. Kendall et al., “PoseNet: A convolutional network for real-time 6-DOF camera relocalization,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2015, pp. 2938–2946.

- [25] J.-W. Bian et al., “Unsupervised scale-consistent depth learning from video,” International Journal of Computer Vision, vol. 129, no. 9, pp. 2548–2564, 2021.

- [26] Y. Feng et al., “SCIPaD: Incorporating spatial clues into unsupervised pose-depth joint learning,” IEEE Transactions on Intelligent Vehicles, 2024, DOI: 10.1109/TIV.2024.3460868.

- [27] J. D. Dixon and B. Mortimer, Permutation groups. Springer Science & Business Media, 1996, vol. 163.

- [28] J. Sun et al., “LoFTR: Detector-free local feature matching with transformers,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 8922–8931.

- [29] Z. Li and N. Snavely, “MegaDepth: Learning single-view depth prediction from internet photos,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 2041–2050.

- [30] D. G. Lowe, “Distinctive image features from scale-invariant keypoints,” International Journal of Computer Vision, vol. 60, pp. 91–110, 2004.

- [31] P. Lindenberger et al., “LightGlue: Local feature matching at light speed,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 17 627–17 638.

- [32] A. Dai et al., “ScanNet: Richly-annotated 3D reconstructions of indoor scenes,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 5828–5839.

- [33] B. Thomee et al., “YFCC100M: The new data in multimedia research,” Communications of the ACM, vol. 59, no. 2, pp. 64–73, 2016.

- [34] R. Hartley and A. Zisserman, Multiple view geometry in computer vision. Cambridge University Press, 2003.

- [35] A. Geiger et al., “Vision meets robotics: The KITTI dataset,” The International Journal of Robotics Research, vol. 32, no. 11, pp. 1231–1237, 2013.

- [36] P. J. Huber, “Robust estimation of a location parameter,” in Breakthroughs in statistics: Methodology and distribution. Springer, 1992, pp. 492–518.

- [37] P.-E. Sarlin et al., “SuperGlue: Learning feature matching with graph neural networks,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 4938–4947.

- [38] J. Sturm et al., “A benchmark for the evaluation of RGB-D SLAM systems,” in 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2012, pp. 573–580.

- [39] J.-R. Chang and Y.-S. Chen, “Pyramid stereo matching network,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 5410–5418.

- [40] L. Lipson et al., “RAFT-Stereo: Multilevel recurrent field transforms for stereo matching,” in 2021 International Conference on 3D Vision (3DV). IEEE, 2021, pp. 218–227.