A Comprehensive Survey on

Graph Anomaly Detection with Deep Learning

Abstract

Anomalies are rare observations (e.g., data records or events) that deviate significantly from the others in the sample. Over the past few decades, research on anomaly mining has received increasing interests due to the implications of these occurrences in a wide range of disciplines - for instance, security, finance, and medicine. For this reason, anomaly detection, which aims to identify these rare observations, has become one of the most vital tasks in the world and has shown its power in preventing detrimental events, such as financial fraud, network intrusions, and social spam. The detection task is typically solved by identifying outlying data points in the feature space, which, inherently, overlooks the relational information in real-world data. At the same time, graphs have been prevalently used to represent the structural/relational information, which raises the graph anomaly detection problem - identifying anomalous graph objects (i.e., nodes, edges and sub-graphs) in a single graph, or anomalous graphs in a set/database of graphs. Conventional anomaly detection techniques cannot tackle this problem well because of the complexity of graph data (e.g., irregular structures, relational dependencies, node/edge types/attributes/directions/multiplicities/weights, large scale, etc.). However, thanks to the advent of deep learning in breaking these limitations, graph anomaly detection with deep learning has received a growing attention recently. In this survey, we aim to provide a systematic and comprehensive review of the contemporary deep learning techniques for graph anomaly detection. Specifically, we provide a taxonomy that follows a task-driven strategy and categorizes existing work according to the anomalous graph objects that they can detect. We especially focus on the challenges in this research area and discuss the key intuitions, technical details as well as relative strengths and weaknesses of various techniques in each category. From the survey results, we highlight 12 future research directions spanning unsolved and emerging problems introduced by graph data, anomaly detection, deep learning and real-world applications. Additionally, to provide a wealth of useful resources for future studies, we have compiled a set of open-source implementations, public datasets, and commonly-used evaluation metrics. With this survey, our goal is to create a “one-stop-shop” that provides a unified understanding of the problem categories and existing approaches, publicly available hands-on resources, and high-impact open challenges for graph anomaly detection using deep learning.

Index Terms:

Anomaly detection, outlier detection, fraud detection, rumor detection, fake news detection, spammer detection, misinformation, graph anomaly detection, deep learning, graph embedding, graph representation, graph neural networks.1 Introduction

Anomalies were first defined by Grubbs in 1969 [grubbs1969procedures] as “one that appears to deviate markedly from other members of the sample in which it occurs” and the studies on anomaly detection were initiated by the statistics community in the 19th century. To us, anomalies might appear as social spammers or misinformation in social media; fraudsters, bot users or sexual predators in social networks; network intruders or malware in computer networks and broken devices or malfunctioning blocks in industry systems, and they often introduce huge damage to the real-world systems they appear in. According to FBI’s 2014 Internet Crime Report111https://www.fbi.gov/file-repository/2014_ic3report.pdf/view, the financial loss due to crime on social media reached more than $60 million in the second half of the year alone and a more up-to-date report222https://www.zdnet.com/article/online-fake-news-costing-us-78-billion-globally-each-year/ indicates that the global economic cost of online fake news reached around $78 billion a year in 2020.

In computer science, the research on anomaly detection dates back to the 1980s, and detecting anomalies on graph data has been an important data mining paradigm since the beginning. However, the extensive presence of connections between real-world objects and advances in graph data mining in the last decade have revolutionized our understanding of the graph anomaly detection problems such that this research field has received a dramatic increase in interest over the past five years. One of the most significant changes is that graph anomaly detection has evolved from relying heavily on human experts’ domain knowledge into machine learning techniques that eliminate human intervention, and more recently, to various deep learning technologies. These deep learning techniques are not only capable of identifying potential anomalies in graphs far more accurately than ever before, but they can also do so in real-time.

For our purposes today, anomalies, which are also known as outliers, exceptions, peculiarities, rarities, novelties, etc., in different application fields, refer to abnormal objects that are significantly different from the standard, normal, or expected. Although these objects rarely occur in real-world, they contain critical information to support downstream applications. For example, the behaviors of fraudsters provide evidences for anti-fraud detection and abnormal network traffics reveal signals for network intrusion protection. Anomalies, in many cases, may also have real and adverse impacts, for instance, fake news in social media can create panic and chaos with misleading beliefs [hooi2016birdnest, ahmed2019combining, nguyen2020fang, tam2019anomaly], untrustworthy reviews in online review systems can affect customers’ shopping choices [yu2016survey, benamira2019semi, kumar2018rev2], network intrusions might leak private personal information to hackers [mongiovi2013netspot, miller2013efficient, DBLP:journals/compsec/MiaoSZ20, perozzi2016scalable], and financial frauds can cause huge damage to economic systems [xuexiong2022, 10.1145/3394486.3403361, 10.1145/3394486.3403354, DBLP:conf/www/GuoLAHHZZ19].

Anomaly detection is the data mining process that aims to identify the unusual patterns that deviate from the majorities in a dataset [iglewicz1993detect, chandola2009anomaly, Fraud2020]. In order to detect anomalies, conventional techniques typically represent real-world objects as feature vectors (e.g., news in social media are represented as bag-of-words [sun2018detecting], and images in web pages are represented as color histograms [DBLP:conf/icdm/WuHPZCZ14]), and then detect outlying data points in the vector space [DBLP:conf/ijcai/WangL20, DBLP:conf/kdd/PangCCL18, DBLP:journals/corr/abs-2007-02500], as shown in Fig. 1(a). Although these techniques have shown power in locating deviating data points under tabulated data format, they inherently discard the complex relationships between objects [akoglu2015graph].

Yet, in reality, many objects have rich relationships with each other, which can provide valuable complementary information for anomaly detection. Take online social networks as an example, fake users can be created using valid information from normal users or they can camouflage themselves by mimicking benign users’ attributes [hooi2017graph, CARE-GNN]. In such situations, fake users and benign users would have near-identical features, and conventional anomaly detection techniques might not be able to identify them using feature information only. Meanwhile, fake users always build relationships with a large number of benign users to increase their reputation and influence so they can get unexpected benefits, whereas benign users rarely exhibit such activities [pandit2007netprobe, Densealert]. Hence, these dense and unexpected connections formed by fake users denote their deviations to the benigns and more comprehensive detection techniques should take these structural information into account to pinpoint the deviating patterns of anomalies.

To represent the structural information, Graphs, in which nodes/vertices denote real objects, and the edges denote their relationships, have been prevalently used in a range of application fields [liu2008spotting, wu2014multi, gao2017collaborative, aggarwal2011outlier, wu2017multiple, NIPS20201], including social activities, e-commerce, biology, academia and communication. With the structural information contained in graphs, detecting anomalies in graphs raises a more complex anomaly detection problem in non-Euclidean space - graph anomaly detection (GAD) that aims to identify anomalous graph objects (i.e., nodes, edges or sub-graphs) in a single graph as well as anomalous graphs among a set/database of graphs [akoglu2015graph, DBLP:journals/jiis/ChenHS12, GBGP]. As a toy example shown in Fig. 1(b), given an online social network, graph anomaly detection aims to identify anomalous nodes (i.e., malicious users), anomalous edges (i.e., abnormal relations) and anomalous sub-graphs (i.e., malicious user groups). But, because the copious types of graph anomalies cannot be directly represented in Euclidean feature space, it is not feasible to directly apply traditional anomaly detection techniques to graph anomaly detection, and researchers have intensified their efforts to GAD recently.

Amongst earlier works in this area, the detection methods relied heavily on handcrafted feature engineering or statistical models built by domain experts [akoglu2010oddball, eswaran2018spotlight, li2014probabilistic]. This inherently limits these techniques’ capability to detect unknown anomalies, and the exercise tended to be very labor-intensive. Many machine learning techniques, such as matrix factorization [li2017radar, DBLP:journals/pnas/MahoneyD09] and SVM [DBLP:journals/pr/ErfaniRKL16], have also been applied to detect graph anomalies. However, real-world networks often contain millions of nodes and edges that result in extremely high dimensional and large-scale data, and these techniques do not easily scale up to such data efficiently. Practically, they exhibit high computational overhead in both the storage and execution time [DBLP:journals/jbd/ThudumuBJS20]. These general challenges associated with graph data are significant for the detection techniques, and we categorize them as data-specific challenges (Data-CHs) in this survey. A summary of them is provided in Appendix A.

| Surveys | AD | DAD | GAD | GADL | Source Code | Dataset | ||||

|---|---|---|---|---|---|---|---|---|---|---|

| Node | Edge | Sub-graph | Graph | Real-world | Synthetic | |||||

| Our Survey | ||||||||||

| Chandola et al. [chandola2009anomaly] | - | - | - | - | - | - | - | - | - | |

| Boukerche et al. [DBLP:journals/csur/BoukercheZA20] | - | - | - | - | - | - | - | - | ||

| Bulusu et al. [DBLP:journals/corr/abs-2003-06979] | - | - | - | - | - | - | - | - | ||

| Thudumu et al. [DBLP:journals/jbd/ThudumuBJS20] | - | - | - | - | - | - | - | |||

| Pang et al. [DBLP:journals/corr/abs-2007-02500] | - | - | - | |||||||

| Chalapathy and Chawla [chalapathy2019deep] | - | - | - | - | - | - | ||||

| Akoglu et al. [akoglu2015graph] | - | - | - | - | - | - | - | - | ||

| Ranshous et al. [ranshous2015anomaly] | - | - | - | - | - | - | - | |||

| Jennifer and Kumar [d2021anomaly] | - | - | - | - | - | - | - | - | ||

| Eltanbouly et al. [DBLP:conf/iciot3/EltanboulyBACE20] | - | - | - | - | - | - | - | |||

| Fernandes et al. [DBLP:journals/telsys/FernandesRCAP19] | - | - | - | - | - | - | - | |||

| Kwon et al. [kwon2019survey] | - | - | - | - | - | - | - | |||

| Gogoi et al. [DBLP:journals/cj/GogoiBBK11] | - | - | - | - | - | - | - | - | ||

| Savage et al. [savage2014anomaly] | - | - | - | - | - | - | - | - | ||

| Yu et al. [yu2016survey] | - | - | - | - | - | - | - | - | ||

| Hunkelmann et al. [ahmed2019combining] | - | - | - | - | - | - | - | - | ||

| Pourhabibi et al. [Fraud2020] | - | - | - | - | - | - | ||||

| * AD: Anomaly Detection, DAD: Anomaly Detection with Deep Learning, GAD: Graph Anomaly Detection. | ||||||||||

| * GADL: Graph Anomaly Detection with Deep Learning. | ||||||||||

|

* -: not included, |

||||||||||

Non-deep learning based techniques also lack the capability to capture the non-linear properties of real objects [DBLP:journals/corr/abs-2007-02500]. Hence, the representations of objects learned by them are not expressive enough to fully support graph anomaly detection. To tackle these problems, more recent studies seek the potential of adopting deep learning techniques to identify anomalous graph objects. As a powerful tool for data mining, deep learning has achieved great success in data representation and pattern recognition [DBLP:conf/wsdm/WangNWYL20, zhang2019unsupervised, DBLP:conf/icdm/WangZ00ZX20]. Its deep architecture with layers of parameters and transformations appear to suit the aforementioned problems well. The more recent studies, such as deep graph representation learning and graph neural networks (GNNs), further enrich the capability of deep learning for graph data mining [ijcai2020-693, wu2020comprehensive, cui2018survey, NIPS20202, su2021comprehensive]. By extracting expressive representations such that graph anomalies and normal objects can be easily separated, or the deviating patterns of anomalies can be learned directly through deep learning techniques, graph anomaly detection with deep learning (GADL) is starting to take the lead in the forefront of anomaly detection. As a frontier technology, graph anomaly detection with deep learning, hence, is expected to generate more fruitful results on detecting anomalies and secure a more convenient life for the society.

1.1 Challenges in GAD with Deep Learning

Due to the complexity of anomaly detection and graph data mining [noble2003graph, DBLP:conf/ijcai/TengYEL18, shah2016edgecentric, DBLP:conf/icdm/WangGF17, GAL], in addition to the prior mentioned data-specific challenges, adopting deep learning techniques for graph anomaly detection also faces a number of challenges from the technical side. These challenges associated with deep learning are categorized as technique-specific challenges (Tech-CHs), and they are summarized as follows.

Tech-CH1. Anomaly-aware training objectives. Deep learning models rely heavily on the training objectives to fine-tune all the trainable parameters. For graph anomaly detection, this necessitates appropriate training objectives or loss functions such that the GADL models can effectively capture the differences between benign and anomalous objects. Designing anomaly-aware objectives is very challenging because there is no prior knowledge about the ground-truth anomalies as well as their deviating patterns versus the majority. How to effectively separate anomalies from normal objects through training remains critical for deep learning-based models.

Tech-CH2. Anomaly interpretability. In real-world scenarios, the interpretability of detected anomalies is also vital because we need to provide convincing evidence to support the subsequent anomaly handling process. For example, the risk management department of a financial organization must provide lawful evidence before blocking the accounts of identified anomalous users. As deep learning has been limited for its interpretability [DBLP:journals/jzusc/ZhangZ18, DBLP:journals/corr/abs-2007-02500], how to justify the detected graph anomalies remains a big challenge for deep learning techniques.

Tech-CH3. High training cost. Although D(G)NNs are capable of digesting rich information (e.g., structural information and attributes) in graph data for anomaly detection, these GADL models are more complex than conventional deep neural networks or machine learning methods due to the anomaly-aware training objectives. Such complexity inherently leads to high training costs in both time and computing resources.

Tech-CH4. Hyperparameter tuning. D(G)NNs naturally exhibit a large set of hyperparameters, such as the number of neurons in each neural network layer, the learning rate, the weight decay and the number of training epochs. Their learning performance is significantly affected by the values of these hyperparameters. However, it remains a serious challenge to effectively select the optimal/sub-optimal settings for the detection models due to the lack of labeled data in real scenarios.

Because deep learning models are sensitive to their associated hyperparameters, setting well-performing values for the hyperparameters is vital to the success of a task. Tuning hyperparameter is relatively trivial in supervised learning when labeled data are available. For instance, users can find an optimal/sub-optimal set of hyperparameters (e.g., through random search, grid search) by comparing the model’s outputs with the ground-truth. However, unsupervised anomaly detection has no accessible labeled data to judge the model’s performance under different hyperparameter settings [akoglu2021anomaly, zhao2020automating]. Selecting the ideal hyperparameter values for unsupervised detection models persists as a critical obstacle to applying them in a wide range of real scenarios.

1.2 Existing Anomaly Detection Surveys

Recognizing the significance of anomaly detection, many review works have been conducted in the last ten years covering a range of anomaly detection topics: anomaly detection with deep learning, graph anomaly detection, graph anomaly detection with deep learning, and particular applications of graph anomaly detection such as social media, social networks, fraud detection and network security, etc.

There are some representative surveys on generalized anomaly detection techniques - [chandola2009anomaly], [DBLP:journals/csur/BoukercheZA20] and [DBLP:journals/jbd/ThudumuBJS20]. But only the most up-to-date work in Thudumu et al. [DBLP:journals/jbd/ThudumuBJS20] covers the topic of graph anomaly detection. Recognizing the power of deep learning, the three contemporary surveys, Ruff et al. [ruff2021unifying], Pang et al. [DBLP:journals/corr/abs-2007-02500] and Chalapathy and Chawla [chalapathy2019deep] specifically review deep learning based anomaly detection techniques specifically.

As for graph anomaly detection, Akoglu et al. [akoglu2015graph], Ranshous et al. [ranshous2015anomaly], and Jennifer and Kumar [d2021anomaly] put their concentration on graph anomaly detection, reviewing many conventional approaches in this area, including statistical models and machine learning techniques. Other surveys are dedicated to particular applications of graph anomaly detection, such as computer network intrusion detection and anomaly detection in online social networks, e.g., [yu2016survey, ahmed2019combining, Fraud2020], and [DBLP:conf/iciot3/EltanboulyBACE20, DBLP:journals/telsys/FernandesRCAP19, kwon2019survey, DBLP:journals/cj/GogoiBBK11, savage2014anomaly]. These works provided solid reviews of the application of anomaly detection/graph anomaly detection techniques in these high demand and vital domains. However, none of the mentioned surveys are dedicated to techniques on graph anomaly detection with deep learning, as shown in Table I, and hence do not provide a systematic and comprehensive review of these techniques.

1.3 Contributions

Our contributions are summarized as follows:

-

•

The first survey in graph anomaly detection with deep learning. To the best of our knowledge, our survey is the first to review the state-of-the-art deep learning techniques for graph anomaly detection. Most of the relevant surveys focus either on conventional graph anomaly detection methods using non-deep learning techniques or on generalized anomaly detection techniques (for tabular/point data, time series, etc.). Until now, there has been no dedicated and comprehensive survey on graph anomaly detection with deep learning. Our work bridges this gap, and we expect that an organized and systematic survey will help push forward research in this area.

-

•

A systematic and comprehensive review. In this survey, we review the most up-to-date deep learning techniques for graph anomaly detection published in influential international conferences and journals in the area of deep learning, data mining, web services, and artificial intelligence, including: TKDE, TKDD, TPAMI, NeurIPS, SIGKDD, ICDM, WSDM, SDM, SIGMOD, IJCAI, AAAI, ICDE, CIKM, ICML, WWW, CVPR, and others. We first summarize seven data-specific and four technique-specific challenges in graph anomaly detection with deep learning. We then comprehensively review existing works from the perspectives of: 1) the motivations behind the deep methods; 2) the main ideas for identifying graph anomalies; 3) a brief introduction to conventional non-deep learning techniques; and 4) the technical details of deep learning algorithms. A brief timeline of graph anomaly detection and reviewed works is given in Fig. 2.

-

•

Future directions. From the survey results, we highlight 12 future research directions covering emerging problems introduced by graph data, anomaly detection, deep learning models, and real-world applications. These future opportunities indicate challenges that have not been adequately tackled, and so more effort is needed in the future.

-

•

Affluent resources. Our survey also provides an extensive collection of open-sourced anomaly detection algorithms, public datasets, synthetic dataset generating techniques, as well as commonly used evaluation metrics to push forward the state-of-the-art in graph anomaly detection. These published resources offer benchmark datasets and baselines for future research.

-

•

A new taxonomy. We have organized this survey with regard to different types of anomalies (i.e., nodes, edges, sub-graphs, and graphs) existing in graphs or graph databases. We also pinpoint the differences and similarities between different types of graph anomalies.

The rest of this survey is organized as follows. In Section 2, we provide preliminaries about the different types of settings. From Section 3 to Section 9, we review existing techniques for detecting anomalous nodes, edges, sub-graphs and graphs, respectively. In Section 10, we first provide a collection of published graph anomaly detection algorithms and datasets and then summarize commonly used evaluation metrics and synthetic data generation strategies. We highlight 12 future directions concerning deep learning in graph anomaly detection in Section 11 and summarize our survey in Section 12. A concrete taxonomy of our survey is given in Appendix B.

2 Preliminaries

In this section, we provide definitions of different types of graphs mostly used in node/edge/sub-graph-level anomaly detection (Section 3 to Section 7). For consistency, we have followed the conventional categorization of graphs as in existing works [akoglu2015graph, ranshous2015anomaly, kwon2019survey] and categorize them as static graphs, dynamic graphs, and graph databases. Unless otherwise specified, all graphs mentioned in the following sections are static. Meanwhile, as graph-level anomaly detection is discussed far away on page 13, to enhance readability, the definition for the graph database is given closer to the material in Section 8.

Definition 1 (Plain Graph). A static plain graph comprises a node set and an edge set where is the number of nodes and denotes an edge between nodes and . The adjacency matrix restores the graph structure, where if node and is connected, otherwise .

Definition 2 (Attributed Graph). A static attributed graph comprises a node set , an edge set and an attribute set . In an attributed graph, the graph structure follows the definition in Definition 1. The attribute matrix consists of nodes’ attribute vectors, where is the attribute vector associated with node and is the vector’s dimension. Hereafter, the terms attribute and feature are used interchangeably.

Definition 3 (Dynamic Graph). A dynamic graph comprises nodes and edges changing overtime. is the nodes set in the graph at a specific time step , is the corresponding edge set, and are the node attribute matrix and edge attribute matrix at time step in the graph if existed.

In reality, the nodes or edges might also be associated with numerical or categorical labels to indicate their classes (e.g., normal or abnormal). When label information is available/partially-available, supervised/semi-supervised detection models could be effectively trained.

3 Anomalous node detection (ANOS ND)

Anomalous nodes are commonly recognized as individual nodes that are significantly different from others. In real-world applications, these nodes often represent abnormal objects that appear individually, such as a single network intruder in computer networks, an independent fraudulent user in online social networks or a specific fake news on social media. In this section, we specifically focus on anomalous node detection in static graphs. The reviews on dynamic graphs can be found in Section 4. Table II at the end of Section 4 provides a summary of techniques reviewed for ANOS ND.

When detecting anomalous nodes in static graphs, the differences between anomalies and regular nodes are mainly drawn from the graph structural information and nodes/edges’ attributes [li2017radar, bojchevski2018bayesian, zhu2020mixedad, perozzi2014focused]. Given prior knowledge (i.e., community structure, attributes) about a static graph, anomalous nodes can be further categorized into the following three types:

-

•

Global anomalies only consider the node attributes. They are nodes that have attributes significantly different from all other nodes in the graph.

-

•

Structural anomalies only consider the graph structural information. They are abnormal nodes that have different connection patterns (e.g., connecting different communities, forming dense links with others).

-

•

Community anomalies consider both node attributes and graph structural information. They are defined as nodes that have different attribute values compared to other nodes in the same community.

In Fig. 3, node 14 is a global anomaly because its 4th feature value is 1 while all other nodes in the graph have the value of 0 for the corresponding feature. Nodes 5, 6, and 11 are identified as structural anomalies because they have links with other communities while other nodes in their community do not form cross-community links. Nodes 2 and 7 are community anomalies because their feature values are different from others in the communities they belong to.

3.1 ANOS ND on Plain Graphs

Plain graphs are dedicated to representing the structural information in real-world networks. To detect anomalous nodes in plain graphs, the graph structure has been extensively exploited from various angles. Here, we first summarize the representative traditional non-deep learning approaches, followed by a more recent, advanced detection technique based on representation learning.

3.1.1 Traditional Non-Deep Learning Techniques

Prior to the recent advances in deep learning and other state-of-the-art data mining technologies, traditional non-deep learning techniques have been widely used in many real-world networks to identify anomalous entities. A key idea behind these techniques was to transform the graph anomaly detection into a traditional anomaly detection problem, because the graph data with rich structure information can not be handled by the traditional detection techniques (for tabular data only) directly. To bridge the gap, many approaches [akoglu2010oddball, DBLP:conf/kdd/DingKBKC12, hooi2016fraudar] used the statistical features associated with each node, such as in/out degree, to detect anomalous nodes.

For instance, OddBall [akoglu2010oddball] employs the statistical features (e.g., the number of 1-hop neighbors and edges, the total weight of edges) extracted from each node and its 1-hop neighbors to detect particular structural anomalies that: 1) form local structures in shape of near-cliques or stars; 2) have heavy links with neighbors such that the total weight is extremely large; or 3) have a single dominant heavy link with one of the neighbors.

With properly selected statistical features, anomalous nodes can be identified with respect to their deviating feature patterns. But, in real scenarios, it is very hard to choose the most suitable features from a large number of candidates, and domain experts can always design new statistics, e.g., the maximum/minimum weight of edges. As a result, these techniques often carry prohibitive cost for assessing the most significant features and do not effectively capture the structural information.

3.1.2 Network Representation Based Techniques

To capture more valuable information from the graph structure for anomaly detection, network representation techniques have been widely exploited. Typically, these techniques encode the graph structure into an embedded vector space and identify anomalous nodes through further analysis. Hu et al. [hu2016embedding], for example, proposed an effective embedding method to detect structural anomalies that are connecting with many communities. It first adopts a graph partitioning algorithm (e.g., METIS [DBLP:journals/siamsc/KarypisK98]) to group nodes into communities ( is a user-specified number). Then, the method employs a specially designed embedding procedure to learn node embeddings that could capture the link information between each node and communities. Denoting the embedding for node as , the procedure initializes each with regard to the membership of node to community (if node belongs to the community, then ; otherwise, 0.) and optimizes node embeddings such that directly linked nodes have similar embeddings and unconnected nodes are dissimilar.

After generating the node embeddings, the link information between node and communities is quantified for further anomaly detection analysis. For a given node , such information is represented as:

| (1) |

where comprises node ’s neighbors. If has many links with community , then the value in the corresponding dimension will be large.

In the last step, Hu et al. [hu2016embedding] formulate a scoring function to assign anomalousness scores, calculated as:

| (2) |

As expected, structural anomalies receive higher scores as they connect to different communities. Indeed, given a predefined threshold, nodes with above-threshold scores are identified as anomalies.

To date, many plain network representation methods such as Deepwalk [perozzi2014deepwalk], Node2Vec [grover2016node2vec] and LINE [tang2015line] have shown their effectiveness in generating node representations and been used for anomaly detection performance validation [bandyopadhyay2020outlier, bandyopadhyay2019outlier, yu2018netwalk, cai2020structural]. By pairing the conventional anomaly detection techniques such as density-based techniques [breunig2000lof] and distance-based techniques [aggarwal2001outlier] with node embedding techniques, anomalous nodes can be identified with regard to their distinguishable locations (i.e., low-density areas or far away from the majorities) in the embedding space.

3.1.3 Reinforcement Learning Based Techniques

The success of reinforcement learning (RL) in tackling real-world decision making problems has attracted substantial interests from the anomaly detection community. Detecting anomalous nodes can be naturally regarded as a problem of deciding which class a node belongs to - anomalous or benign. As a special scenario of the general selective harvesting task, the anomalous node detection problem can be approached by a recent work in [morales2021selective] that intuitively combines reinforcement learning and network embedding techniques for selective harvesting. The proposed model, NAC, is trained with labeled data without any human intervention. Specifically, it first selects a seed network consisting of partially observed nodes and edges. Then, starting from the seed network, NAC adopts reinforcement learning to learn a node selection plan such that anomalous nodes in the undiscovered area can be identified. This is achieved by rewarding selection plans that can choose labeled anomalies with higher gains. Through offline training, NAC will learn an optimal/suboptimal anomalous node selection strategy and discover potential anomalies in the undiscovered graph step by step.

3.2 ANOS ND on Attributed Graphs

In addition to the structural information, real-world networks also contain rich attribute information affiliated with nodes [hamilton2017inductive, hamilton2017representation]. These attributes provide complementary information about real objects and together with graph structure, more hidden anomalies that are non-trivial can now be detected.

For clarity, we distinguish between deep neural networks and graph neural networks in this survey. We review deep neural network (Deep NN) based techniques, GCN based techniques, and reinforcement learning based techniques for ANOS ND as follows. Due to page limitations, other existing works including traditional non-deep learning techniques, GAT [velivckovic2017graph] based techniques, GAN based techniques, and network representation based techniques are surveyed in Appendix C.

3.2.1 Deep NN Based Techniques

The deep learning models such as autoencoder and deep neural networks provide solid basis for learning data representations. Adopting these models for more effective anomalous node detection have drawn substantial interest recently.

For example, Bandyopadhyay et al. [bandyopadhyay2020outlier] developed an unsupervised deep model, DONE, to detect global anomalies, structural anomalies and community anomalies in attributed graphs. Specifically, this work measures three anomaly scores for each node that indicate the likelihood of the situations where 1) it has similar attributes with nodes in different communities (); or 2) it connects with other communities (); or 3) it belongs to one community structurally but the attributes follow the pattern of another community (). If a particular node exhibits any of these characteristics, then it is assigned a higher score and is anomalous.

To acquire these scores, DONE adopts two separate autoencoders (AE), i.e., a structure AE and an attribute AE, as shown in Fig. 4. Both are trained by minimizing the reconstruction errors and preserving the homophily that assumes connected nodes have similar representations in the graph. When training the AEs, nodes exhibiting the predefined characteristics are hard to reconstruct and therefore introduce more reconstruction errors because their structure or attribute patterns do not conform to the standard behavior. Hence, the adverse impact of anomalies should be alleviated to achieve the minimized error. Accordingly, DONE specially designs an anomaly-aware loss function with five terms: , , , , and . and are the structure reconstruction error and attribute reconstruction error that can be written as:

| (3) |

and

| (4) |

where is the number of nodes, and store the structure information and attributes of node , and are the reconstructed vectors. and are proposed to maintain the homophily and they are formulated as:

| (5) |

and

| (6) |

where and are the learned latent representations from the structure AE and attribute AE, respectively. poses further restrictions on the generated representations for each node by the two AEs such that the graph structure and node attributes complement each other. It is formulated as:

| (7) |

By minimizing the sum of these loss functions, the anomaly scores of each node are quantified, and the top-k nodes with higher scores are identified as anomalies.

3.2.2 GCN Based Techniques

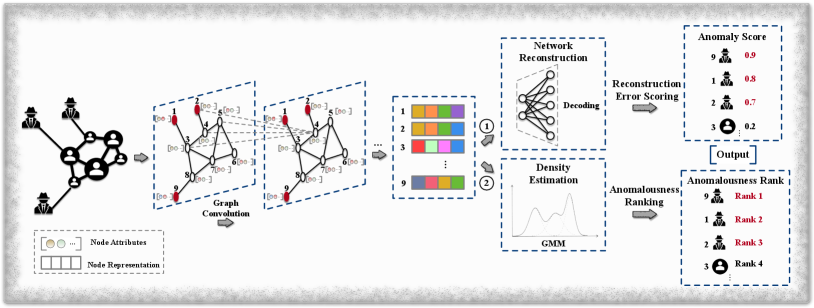

Graph convolutional neural networks (GCNs) [kipf2016gcn] have accomplished decent success in many graph data mining tasks (e.g., link prediction, node classification, and recommendation) owing to its capability of capturing comprehensive information in the graph structure and node attributes. Therefore, many anomalous node detection techniques start to investigate GCNs. Fig. 5 illustrates a general framework of existing works in this line.

In [ding2019deep], Ding et al. measured an anomaly score for each node using the network reconstruction errors of both the structure and attribute. The proposed method, DOMINANT, comprises three parts, namely, the graph convolutional encoder, the structure reconstruction decoder, and the attribute reconstruction decoder. The graph convolutional encoder generates node embeddings through multiple graph convolutional layers. The structure reconstruction decoder tends to reconstruct the network structure from the learned node embeddings, while the attribute reconstruction decoder reconstructs the node attribute matrix. The whole neural network is trained to minimize the following loss function:

| (8) |

where is the coefficient, depicts the adjacency matrix of the graph, and quantify reconstruction errors with regard to the graph structure and node attributes, respectively. When the training is finished, an anomaly score is then assigned to each node according to its contribution to the total reconstruction error, which is calculated by:

| (9) |

where and are the structure vector and attribute vector of node , and are their corresponding reconstructed vectors. The nodes are then ranked according to their anomaly scores in descending order, and the top-k nodes are recognized as anomalies.

To enhance the performance of anomalous node detection, later work by Peng et al. [peng2020deep] further explores node attributes from multiple attributed views to detect anomalies. The multiple attributed views are employed to describe different perspectives of the objects [sheng2019multi, 6848779, wu2013multi]. For example, in online social networks, user’s demographic information and posted contents are two different attributed views, and they characterize the personal information and social activities, respectively. The underlying intuition of investigating different views is that anomalies might appear to be normal in one view but abnormal in another view.

For the purpose of capturing these signals, the proposed method, ALARM, applies multiple GCNs to encode information in different views and adopts a weighted aggregation of them to generate node representations. This model’s training strategy is similar to DOMINANT [ding2019deep] in that it aims to minimize the network reconstruction loss and attribute reconstruction loss and can be formulated as:

| (10) |

where is coefficient to balance the errors, is the element at coordinate in the adjacency matrix , is the corresponding element in the reconstructed adjacency matrix , is the original node feature matrix and is the reconstructed node feature matrix. Lastly, ALARM adopts the same scoring function as [ding2019deep], and nodes with top-k highest scores are anomalous.

Instead of spotting unexpected nodes using their reconstruction errors, Li et al. [li2019specae] proposed SpecAE to detect global anomalies and community anomalies via a density estimation approach, Gaussian Mixture Model (GMM). Global anomalies can be identified by only considering the node attributes. For community anomalies, the structure and attributes need to be jointly considered because of their distinctive attributes to the neighbors. Accordingly, SpecAE investigates a graph convolutional encoder to learn node representations and reconstruct the nodal attributes through a deconvolution decoder. The parameters in the GMM are then estimated using the node representations. Due to the deviating attribute patterns of global and community anomalies, normal nodes are expected to exhibit greater energies in GMM, and the k nodes with the lowest probabilities are deemed to be anomalies.

In [wang2019fdgars], Wang et al. developed a novel detection model that identify fraudsters using their relations and features. Their proposed method, Fdgars, first models online users’ reviews and visited items as their features, and then identifies a small portion of significant fraudsters based on these features. In the last step, a GCN is trained in a semi-supervised manner by using the user-user network, user features, and labeled users. After training, the model can directly label unseen users.

A more recent work, GraphRfi [GraphRfi], also explores the potential of combining anomaly detection with other downstream graph analysis tasks. It targets on leveraging anomaly detection to identify malicious users and provide more accurate recommendations to service benign users by alleviating the impact of these untrustworthy users. Specifically, a GCN framework is deployed to encode users and items into a shared embedding space for recommendation and users are classified as fraudsters or normal users through an additional neural random forest using their embeddings. For rating prediction between users and items, the framework reduces the corresponding impact of suspicious users by assigning less weights to their training loss. At the same time, the rating behavior of users also provides auxiliary information for fraudster detection. The mutually beneficial relationship between these two applications (anomaly detection and recommendation) indicates the potential of information sharing among multiple graph learning tasks.

3.2.3 Reinforcement Learning Based Techniques

In contrast to NAC, Ding et al. [ding2019interactive] investigated to the use of reinforcement learning for anomalous node detection in attributed graphs. Their proposed algorithm, GraphUCB, models both attribute information and structural information, and inherits the merits of the contextual multi-armed bandit technology [langford2008epoch] to output potential anomalies. By grouping nodes into clusters based on their features, GraphUCB forms a -armed bandit model and measures the payoff of selecting a specific node as a potential anomaly for expert evaluation. With experts’ feedback on the predicted anomalies, the decision-making strategy is continuously optimized. Eventually, the most potential anomalies can be selected.

4 ANOS ND on Dynamic Graphs

Real-world networks can be modeled as dynamic graphs to represent evolving objects and the relationships among them. In addition to structural information and node attributes, dynamic graphs also contain rich temporal signals [DBLP:conf/wsdm/RossiGNH13], e.g., the evolving patterns of the graph structure and node attributes. On the one hand, these information inherently makes anomalous node detection on dynamic graphs more challenging. This is because dynamic graphs usually introduce large volume of data and temporal signals should also be captured for anomaly detection. But, on the other hand, they could provide more details about anomalies [ranshous2015anomaly, akoglu2015graph, wang2019detecting]. In fact, some anomalies might appear to be normal in the graph snapshot at each time stamp, and, only when the changes in a graph’s structure are considered, do they become noticeable.

In this section, we review the network representation based techniques and GAN based techniques as follows. Relevant techniques from traditional non-deep learning approaches are reviewed in Appendix D.

| Graph Type | Approach | Category | Objective Function | Measurement | Outputs |

| Static Graph - Plain | [hu2016embedding] | NR | Anomaly Score | ||

| DCI [wang2021decoupling] | NR | Anomaly Prediction | Predicted Label | ||

| NAC [morales2021selective] | RL | Cumulative reward | - | Anomalies | |

| Static Graph - Attributed | ALAD [liu2017accelerated] | Non-DP | Anomaly Score | ||

| Radar [li2017radar] | Non-DP | Residual Analysis | Residual Value | ||

| ANOMALOUS [peng2018anomalous] | Non-DP | Residual Analysis | Residual Value | ||

| SGASD [wu2017adaptive] | Non-DP | Anomaly Prediction | Predicted Label | ||

| DONE [bandyopadhyay2020outlier] | DNN | Anomaly Scores | |||

| DOMINANT [ding2019deep] | GCN | Anomaly Score | |||

| ALARM [peng2020deep] | GCN | Anomaly Score | |||

| SpecAE [li2019specae] | GCN | Density Estimation | Anomalousness Rank | ||

| Fdgars [wang2019fdgars] | GCN | Anomaly Prediction | Predicted Label | ||

| GraphRfi [GraphRfi] | GCN | Anomaly Prediction | Predicted Label | ||

| ResGCN [pei2021resgcn] | GCN | Anomaly Score | |||

| GraphUCB [ding2019interactive] | RL | Expert Judgment | - | Anomalies | |

| AnomalyDAE [fan2020anomalydae] | GAT | Reconstruction Loss | Anomalousness Rank | ||

| SemiGNN [SemiGNN] | GAT | Anomaly Prediction | Predicted Label | ||

| AEGIS [ding2020inductive] | GAN | Anomaly Score | |||

| REMAD [zhang2019robust] | NR | Residual Analysis | Residual Value | ||

| CARE-GNN [CARE-GNN] | NR | Anomaly Prediction | Predicted Label | ||

| SEANO [liang2018semi] | NR | Anomaly Score | Discriminator’s Output | ||

| OCGNN [wang2020ocgnn] | NR | Location in Embedding Space | Distance to Hypersphere Center | ||

| GAL [GAL] | NR | Anomaly Prediction | Predicted Label | ||

| CoLA [liu2021anomaly] | NR | Anomaly Score | |||

| COMMANDER [ding2021cross] | NR | Anomaly Score | |||

| FRAUDRE [zhangge1] | NR | Anomaly Prediction | Predicted Label | ||

| Meta-GDN [ding2021few] | NR | Anomaly Score | |||

| Dynamic Graph - Plain | NetWalk [yu2018netwalk] | DNN | Anomaly Score | Nearest Distance to Cluster Centers | |

| Dynamic Graph - Attributed | MTHL [teng2017anomaly] | Non-DP | Anomaly Score | Distance to Hypersphere Centroid | |

| OCAN [zheng2019one] | GAN | Anomaly Score | Discriminator’s Output | ||

| * Non-DP: Non-Deep Learning Techniques, DNN: Deep NN Based Techniques, GCN: GCN Based Techniques, RL: Reinforcement Learning Based Techniques. | |||||

| * GAT: GAT Based Techniques, NR: Network Representation Based Techniques, GAN: Generative Adversarial Network Based Techniques. | |||||

4.1 Network Representation Based Techniques

Following the research line of encoding a graph into an embedding space, after which anomaly detection is performed, dynamic network representation techniques have been investigated in the more recent works. Specifically, in [yu2018netwalk], Yu et al. presented a flexible deep representation technique, called NetWalk, for detecting anomalous nodes in dynamic (plain) graphs using only the structure information. It adopts an autoencoder to learn node representations on the initial graph and incrementally updates them when new edges are added or existing edges are deleted. To detect anomalies, NetWalk first executes the streaming -means clustering algorithm [ailon2009streaming] to group existing nodes in the current time stamp into different clusters. Then, each node’s anomaly score is measured with regard to its closest distance to the clusters. When the node representations are updated, the cluster centers and anomaly scores are recalculated accordingly.

4.2 GAN Based Techniques

In practice, anomaly detection is facing great challenges from the shortage of ground-truth anomalies. Consequently, many research efforts have been invested in modeling the features of anomalies or regular objects such that anomalies can be identified effectively. Among these techniques, generative adversarial networks (GAN) [goodfellow2014generative] have received extensive attention because of its impressive performance in capturing real data distribution and generating simulated data.

Motivated by the recent advances in “bad” GAN [dai2017good], Zheng et al. [zheng2019one] circumvented the fraudster detection problem using only the observed benign users’ attributes. The basic idea is to seize the normal activity patterns and detect anomalies that behave significantly differently. The proposed method, OCAN, starts by extracting the benign users’ content features using their historical social behaviors (e.g., historical posts, posts’ URL), for which this method is classified into the dynamic category. A long short-term memory (LSTM) based autoencoder [DBLP:conf/icml/SrivastavaMS15] is employed to achieve this and as assumed, benign users and malicious users are in separate regions in the feature space. Next, a novel one-class adversarial net comprising a generator and a discriminator is trained. Specifically, the generator produces complementary data points that locate in the relatively low density areas of benign users. The discriminator, accordingly, aims to distinguish the generated samples from the benign users. After training, benign users’ regions are learned by the discriminator and anomalies can hence be identified with regard to their locations.

Both NetWalk [yu2018netwalk] and OCAN [zheng2019one] approach the anomalous node detection problem promisingly, however, they respectively only consider the structure or attributes. By the success of static graph anomaly detection techniques that analyze both aspects, when the structure and attribute information in dynamic graphs are jointly considered, an enhanced detection performance can be foreseen. We therefore highlight this unexplored area for future works in Section 11.

5 Anomalous edge detection (ANOS ED)

In contrast to anomalous node detection, which targets individual nodes, ANOS ED aims to identify abnormal links. These links often inform the unexpected or unusual relationships between real objects [chang2021f], such as the abnormal interactions between fraudsters and benign users shown in Fig. 1, or suspicious interactions between attacker nodes and benign user machines in computer networks. Following the previous taxonomy, in this section, we review the state-of-the-art ANOS ED methods for static graphs, and Section 6 summarizes the techniques for dynamic graphs. A summary is provided in Table III. This section includes methods based on deep NNs, GCNs and network representations. The non-deep learning techniques are reviewed in Appendix E.

5.1 Deep NN Based Techniques

Similar to deep NN based ANOS ND techniques, autoencoder and fully connected network (FCN) have also been used for anomalous edge detection. As an example, Ouyang et al. [DBLP:conf/ijcnn/Ouyang0020] approached the problem by modeling the distribution of edges through deep models to identify the existing edges that are least likely to appear as anomalies (as shown in Fig. 6). The probability of each edge is decided by and which measure the edge probability using node with its neighbors and node with its neighbors , respectively. To calculate , the proposed method, UGED, first encodes each node into a lower-dimensional vector through a FCN layer and generates node ’s representation by a mean aggregation of itself and its neighbors’ vectors. Next, the node representations are fed into another FCN to estimate . The prediction is expressed as , where represents the trainable parameters, and is ’s representation. UGED’s training scheme aims to maximize the prediction of existing edges via a cross-entropy-based loss function, . After training, an anomaly score is assigned to each edge using the average of and . As such, existing edges that have a lower probability will get higher scores and the top-k edges are reported as anomalous.

5.2 GCN Based Techniques

Following the line of modeling edge distributions, some studies leverage GCNs to better capture the graph structure information. Duan et al. [AANE] demonstrated that the existence of anomalous edges in the training data prevents traditional GCN based models from capturing real edge distributions, which leads to sub-optimal detection performance. This inherently raises a problem: to achieve better detection performance, the node embedding process should alleviate the negative impact of anomalous edges, but these edges are detected using the learned embeddings. To tackle this, the proposed method, AANE, jointly considers these two issues by iteratively updating the embeddings and detection results during training.

In each training iteration, AANE generates node embeddings through GCN layers and learns an indicator matrix to spot potential anomalous edges. Given an input graph with adjacency matrix , each term in is 1 if , and 0 otherwise. Here, is the predicted link probability between nodes and , which is calculated as the hyperbolic tangent of and ’s embeddings, and is a predefined threshold. By this, an edge is identified as anomalous when its predicted probability is less than the average of all links associated with the node by a predefined threshold.

The total loss function of AANE contains two parts: an anomaly-aware loss () and an adjusted fitting loss (). is proposed to penalize the link prediction results and the indicator matrix such that anomalous edges will have lower prediction probabilities when they are marked as 1 in . This is formulated as:

| (11) |

where is the node set, is the set of ’s neighbors. quantifies the reconstruction loss with regard to the removal of potential anomalous edges, denoted as:

| (12) |

where is an adjusted adjacency matrix that removes all predicted anomalies from the input adjacency matrix . By minimizing these two losses, AANE identifies the top-k edges with lowest probabilities as anomalies.

5.3 Network Representation Based Techniques

Instead of using node embeddings for ANOS ED, edge representations learned directly from the graph are also feasible for distinguishing anomalies. If the edge representations well-preserve the graph structure and interaction content (e.g., messages in online social networks, co-authored papers in citation networks) between pairs of nodes, an enhanced detection performance can then be expected. To date, several studies, such as Xu et al. [DBLP:journals/ijdsa/XuWCY20], have shown promising results in generating edge representations. Although they are not specifically designed for graph anomaly detection, they pinpoint a potential approach to ANOS ED. This is highlighted as a potential future direction in Section 11.1.

6 ANOS ED on Dynamic Graphs

Dynamic graphs are powerful in reflecting the appearance/disappearance of edges over time [ranshous2016scalable]. Anomalous edges can be distinguished by modeling the changes in graph structure and capturing the edge distributions at each time step. Recent approaches to ANOS ED on dynamic graphs are reviewed in this section.

6.1 Network Representation Based Techniques

The intuition of network representation based techniques is to encode the dynamic graph structure information into edge representations and apply the aforementioned traditional anomaly detection techniques to spot irregular edges. This is quite straightforward, but there remain vital challenges in generating/updating informative edge representations when the graph structure evolves. To mitigate this challenge, the ANOS ND model NetWalk [yu2018netwalk] is also capable of detecting anomalous edges in dynamic graphs. Following the line of distance-based anomaly detection, NetWalk encodes edges into a shared latent space using node embeddings, and anomalies are identified based on their distances to the nearest edge-cluster centers in the latent space. Practically, Netwalk generates edge representations as the Hadamard product of the source and destination nodes’ representations, denoted as: . When new edges arrive or existing edges disappear, the node and edge representations are updated from random walks in the temporary graphs at each time stamp, after which the edge-cluster centers and edge anomaly scores are recalculated. Finally, the top-k farthest edges to the edge-clusters are reported as anomalies.

6.2 GCN Based Techniques

Although NetWalk is capable of detecting anomalies in dynamic graphs, it simply updates edge representations without considering the evolving patterns of long/short-term nodes and the graph’s structure. For more effective ANOS ED, Zheng et al. [zheng2019addgraph] intuitively combined temporal, structural and attribute information to measure the anomalousness of edges in dynamic graphs. They propose a semi-supervised model, AddGraph, which comprises a GCN and Gated Recurrent Units (GRU) with attention [cui2019hierarchical] to capture more representative structural information from the temporal graph in each time stamp and dependencies between them, respectively.

At each time stamp , GCN takes the output hidden state () at time to generate node embeddings, after which the GRU learns the current hidden state from the node embeddings and attentions on previous hidden states (as shown in Fig. 7). After getting the hidden state of all nodes, AddGraph assigns an anomaly score to each edge in the temporal graph based on the nodes associated with it. The proposed anomaly scoring function is formulated as:

| (13) |

where and are the corresponding nodes, is the weight of the edge, and are trainable parameters, and are hyper-parameters, and is the non-linear activation function. To learn and , Zheng et al. further assumed that all existing edges in the dynamic graph are normal in the training stage, and sampled non-existing edges as anomalies. Specifically, they form the loss function as:

| (14) |

where is the edge set, are sampled non-existing edges at time stamp , is a hyper-parameter, and regularizes all trainable parameters in the model. After training, the scoring function identifies anomalous edges in the test data by assigning higher anomaly scores to them based on Eq. 13.

7 Anomalous sub-graph detection (ANOS SGD)

In real life, anomalies might also collude and behave collectively with others to garner benefits. For instance, fraudulent user groups in an online review network, as shown in Fig. 1, may post misleading reviews to promote or besmirch certain merchandise. When these data are represented as graphs, anomalies and their interactions usually form suspicious sub-graphs, and ANOS SGD is proposed to distinguish them from the benign.

Unlike individual and independent graph anomalies, i.e., single nodes or edges, each node and edge in a suspicious sub-graph might be normal. However, when considered as a collection, they turn out to be anomalous. Moreover, these sub-graphs also vary in size and inner structure, making anomalous sub-graph detection more challenging than ANOS ND/ED [dGraphScan]. Although extensive effort has been placed on circumventing this problem, deep-learning techniques have only begun to address this problem in the last five years. For reference, traditional non-deep learning based techniques are briefly introduced in Appendix F, and a summary of techniques reviewed for ANOS SGD is provided in Table III at the end of Section 9.

Due to the flexibility of heterogeneous graphs in representing the complex relationships between different kinds of real objects, several recent works have taken advantage of deep network representation techniques to detect real-world anomalies through ANOS SGD. For instance, Wang et al. [wang2018deep] represented online shopping networks as bipartite graphs (a specific type of heterogeneous graph that has two types of nodes and one type of edge), in which users are source nodes and items are sink nodes. Fraudulent groups are then detected based on suspicious dense blocks that form in these graphs.

Wang et al. [wang2018deep] aimed to learn anomaly-aware representations of users such that suspicious users in the same group will be located closely in the vector space, while benign users will be far away (as shown in the embedding space in Fig. 8). According to the observation that user nodes belonging to one fraudulent group are more likely to connect with the same item nodes, the developed model, DeepFD, measures similarities in the behavior of two users, , as the percentage of items shared among all the items they have reviewed. User representations are then generated through a traditional autoencoder, which is trained using three losses and follows the encoding-decoding process. The first loss is the reconstruction loss that ensures the bipartite graph structure can be reconstructed properly using the learned user representations and item representations. The second term preserves the user similarity information in the learned user representations. That is, if two users have similar behaviors, their representations should also be similar. This loss is formulated as:

| (15) |

where is the number of user nodes, measures the similarity of user and ’s representations using an RBF kernel or other alternative. The third loss regularizes all trainable parameters. Finally, the suspicious dense blocks, which are expected to form dense regions in the vector space, are detected using DBSCAN [DBSCAN].

Another work, FraudNE [FraudNE], also models online review networks as bipartite graphs and further detects both malicious users and associated manipulated items following the dense block detection principle. Unlike DeepFD, FraudNE aspires to encode both types of nodes into a shared latent space where suspicious users and items belonging to the same dense block are very close to each other while others distribute uniformly (as shown in Fig. 8). FraudNE adopts two traditional autoencoders, namely, a source node autoencoder and a sink node autoencoder, to learn user representations and item representations, respectively. Both autoencoders are trained to jointly minimize their corresponding reconstruction losses and a shared loss function, and the total loss can be formulated as:

| (16) |

where and are hyperparameters, and regularizes all trainable parameters. Specifically, the reconstruction losses (i.e., and ) measure the gap between the input user/item features (extracted from the graph structure) and their decoded features. The shared loss function is proposed to restrict the representation learning process such that each linked pair of users and items get similar representations. As the DBSCAN [DBSCAN] algorithm is convenient to apply for dense region detection, FraudNE also uses it to distinguish the dense sub-graphs formed by suspicious users and items.

To date, only a few works have put their efforts into using deep learning techniques for ANOS SGD. However, with intensifying research interest in sub-graph representation learning, we encourage more studies on ANOS SGD and highlight this as a potential future in Section 11.1.

8 Anomalous graph detection (ANOS GD)

Beyond anomalous node, edge, and sub-graph, graph anomalies might also appear as abnormal graphs in a set/database of graphs. Typically, a graph database is defined as:

Definition 4 (Graph Database). A graph database contains individual graphs. Here, each graph is comprised of a node set and an edge set . and are the node attribute matrix and edge attribute matrix of if it is an attributed graph.

This graph-level ANOS GD aims to detect individual graphs that deviate significantly from the others. A concrete example of ANOS GD is unusual molecule detection. When chemical compounds are represented as molecular/chemical graphs where the atoms and bonds are represented as nodes and edges [6702420, sun2021sugar], unusual molecules can be identified because their corresponding graphs have structures and/or features that deviate from the others. Brain disorders detection is another example. A brain disorder can be diagnosed by analyzing the dynamics of brain graphs at different stages of aging in sequence and finding an inconsistent snapshot at a specific time stamp.

The prior reviewed techniques (i.e., ANOS ND/ED/SGD) are not compatible with ANOS GD because they are dedicated to detecting anomalies in a single graph, whereas ANOS GD is directed at detecting graph-level anomalies. This problem is commonly approached by: 1) measuring the pairwise proximities of graphs using graph kernels [manzoor2016fast]; 2) detecting the appearance of anomalous graph signals created by abnormal groups of nodes [hooi2018changedar]; or 3) encoding graphs using frequent motifs [noble2003graph]. However, none of these methods are deep learning-based. As the time of writing, very few studies in ANOS GD with deep learning have been undertaken. As such, this is highlighted as a potential future direction in Section 11.1.

8.1 GNN Based Techniques

Motivated by the success of GNNs in various graph classification tasks, the most recent works in ANOS GD employ GNNs to classify single graphs as normal/abnormal in the given graph database. Specifically, Dou et al. [dou2021user] transformed fake news detection into an ANOS GD problem by modeling news as tree-structured propagation graphs where the root nodes denote pieces of news, and child nodes denote users who interact with the root news. Their end-to-end framework, UPFD, extracts two embeddings for the news piece and users, respectively, via a text embedding model (e.g. word2vec, BERT) and a user engagement embedding process. For each news graph, its latent representation is a flattened concatenation of these two embeddings, which is input to train a neural classifier with the label of the news. Corresponding propagation graphs that are labeled as fake by the trained model are regarded as anomalous.

Another representative work by Zhao and Akoglu [zhao2020using] employed a GIN model and one-class classification (i.e., DeepSVDD [ruff2018deep]) loss to train a graph-level anomaly detection framework in an end-to-end manner. For each individual graph in the graph database, its graph-level embedding is generated by applying mean-pooling over its nodes’ node-level embeddings. A graph is eventually depicted as anomalous if it lies outside the learned hypersphere, as shown in Fig. 9.

8.2 Network Representation Based Techniques

It is also possible to apply general graph-level network representation techniques to ANOS GD. With these methods, the detection problem is transformed into a conventional outlier detection problem in the embedding space. In contrast to D(G)NN based techniques that can detect graph anomalies in an end-to-end manner, adopting these representation techniques for anomaly detection is two-staged. First, graphs in the database are encoded into a shared latent space using graph-level representation techniques, such as Graph2Vec [narayanan2017graph2vec], FGSD [verma2017hunt]. Then, the anomalousness of each single graph is measured by an off-the-shelf outlier detector. Essentially, this kind of approach involves pairing existing methods in both stages, yet, the stages are disconnected from each other and, hence, the detection performance can be subpar since the embedding similarities are not necessarily designed for the sake of anomaly detection.

9 ANOS GD on Dynamic Graphs

For dynamic graph environments, graph-level anomaly detection endeavors to identify abnormal graph snapshots/temporal graphs. Similar to ANOS ND and ED on dynamic graphs, given a sequence of graphs, anomalous graphs can be distinguished regarding their unusual evolving patterns, abnormal graph-level features, or other characteristics.

| Graph Type | Approach | Category | Objective Function | Measurement | Outputs |

| Anomalous Edge Detection Techniques | |||||

| Static Graph - Plain | UGED [DBLP:conf/ijcnn/Ouyang0020] | DNN | Anomaly Score | ||

| AANE [AANE] | GCN | Anomaly Ranking | Edge Existing Probability | ||

| Static Graph - Attributed | eFraudCom [zhangge2] | NR | Anomaly Prediction | Predicted Label | |

| Dynamic Graph - Plain | NetWalk [yu2018netwalk] | NR | Anomaly Score | Nearest Distance to Cluster Centers | |

| Dynamic Graph - Attributed | AddGraph [zheng2019addgraph] | GCN | Anomaly Score | ||

| Anomalous Sub-graph Detection Techniques | |||||

| Static Graph - Plain | DeepFD [wang2018deep] | NR | Density-based Method (DBSCAN) | Dense sub-graphs | |

| FraudNE [FraudNE] | NR | Density-based Method (DBSCAN) | Dense sub-graphs | ||

| Anomalous Graph Detection Techniques | |||||

| Graph Database - Attributed | UPFD [dou2021user] | NR | Anomaly Prediction | Predicted Label | |

| OCGIN [zhao2020using] | GNN | Location in Embedding Space | Distance to Hypersphere Center | ||

| Dynamic Graph - Plain | DeepSphere [teng2018deep] | DNN | Location in Embedding Space | Anomalous Label | |

| GLAD-PAW [wan2021glad] | GNN | Anomaly Prediction | Predicted Label | ||

| * DNN: Deep NN Based techniques, GCN: GCN Based Techniques, NR: Network Representation Based Techniques. | |||||

| * GNN: Graph Neural Network Based Techniques. | |||||

In order to derive each graph snapshot/temporal graph’s characteristics, the commonly used GNN, LSTM and autoencoder are feasible to apply. For instance, Teng et al. [teng2018deep] applied a LSTM-autoencoder to detect abnormal graph snapshots, as shown in Fig. 10. In their proposed model, DeepSphere, a dynamic graph is described as a collection of three-order tensors, where each , and the slices along the time dimension are the adjacency matrices of graph snapshots. To identify abnormal tensors, DeepSphere first embeds each graph snapshot into a latent space using an LSTM autoencoder, and then leverages a one-class classification objective [ruff2018deep] that learns a hypersphere such that normal snapshots are covered, and anomalous snapshots lay outside. The LSTM autoencoder takes the adjacency matrices as input sequentially and attempts to reconstruct these input matrices through training. The hypersphere is learned through a single neural network layer and its objective function is formulated as:

| (17) |

where is the latent representation generated by the LSTM autoencoder, a is the centroid of the hypersphere, is the radius, is the outlier penalty (), is the number of training graph snapshots, and is a hyperparameter. The overall objective function of DeepSphere is represented as:

| (18) |

where is the reconstruction loss of the LSTM autoencoder. When the training is finished, DeepSphere spots a given unseen data as anomalous if its embedding lies outside the learned hypersphere with a radius of .

In addition to all ANOS ND, ED, SGD, and GD techniques reviewed above, it is worth mentioning that perturbed graphs, which adversarial models generate to attack graph classification algorithms or GNNs [zhang2021backdoor, dai2018adversarial, 9338329], can also be regarded as (intensional) anomalies. In a perturbed graph, the nodes and edges are modified deliberately to deviate from the others. We have not reviewed these in this survey because their main purpose is to attack a GNN model. The key idea behind these methods is the attacking/perturbation strategy, and studies in this sphere seldom focus on a detection or reasoning module to identify the perturbed graph or its sub-structures, i.e., anomalous nodes, edges, sub-graphs, or graphs.

| Model | Language | Platform | Graph | Code Repository |

| AnomalyDAE [fan2020anomalydae] | Python | Tensorflow | Static Attributed Graph | https://github.com/haoyfan/AnomalyDAE |

| MADAN [gutierrez2020multi] | Python | - | Static Attributed Graph | https://github.com/leoguti85/MADAN |

| PAICAN [bojchevski2018bayesian] | Python | Tensorflow | Static Attributed Graph | http://www.kdd.in.tum.de/PAICAN/ |

| ONE [bandyopadhyay2019outlier] | Python | - | Static Attributed Graph | https://github.com/sambaranban/ONE |

| DONE&AdONE [bandyopadhyay2020outlier] | Python | Tensorflow | Static Attributed Graph | https://bit.ly/35A2xHs |

| SLICENDICE [nilforoshan2019slicendice] | Python | - | Static Attributed Graph | http://github.com/hamedn/SliceNDice/ |

| FRAUDRE [zhangge1] | Python | Pytorch | Static Attributed Graph | https://github.com/FraudDetection/FRAUDRE |

| SemiGNN [SemiGNN] | Python | Tensorflow | Static Attributed Graph | https://github.com/safe-graph/DGFraud |

| CARE-GNN [CARE-GNN] | Python | Pytorch | Static Attributed Graph | https://github.com/YingtongDou/CARE-GNN |

| GraphConsis [GraphConsis] | Python | Tensorflow | Static Attributed Graph | https://github.com/safe-graph/DGFraud |

| GLOD [zhao2020using] | Python | Pytorch | Static Attributed Graph | https://github.com/LingxiaoShawn/GLOD-Issues |

| OCAN [zheng2019one] | Python | Tensorflow | Static Graph | https://github.com/PanpanZheng/OCAN |

| DeFrauder [DBLP:conf/ijcai/DhawanGK019] | Python | - | Static Graph | https://github.com/LCS2-IIITD/DeFrauder |

| GCAN [lu2020gcan] | Python | Keras | Heterogeneous Graph | https://github.com/l852888/GCAN |

| HGATRD [huang2020heterogeneous] | Python | Pytorch | Heterogeneous Graph | https://github.com/201518018629031/HGATRD |

| GLAN [yuan2019jointly] | Python | Pytorch | Heterogeneous Graph | https://github.com/chunyuanY/RumorDetection |

| GEM [liu2018heterogeneous] | Python | - | Heterogeneous Graph | https://github.com/safe-graph/DGFraud/tree/master/algorithms/GEM |

| eFraudCom [zhangge2] | Python | Pytorch | Heterogeneous Graph | https://github.com/GeZhangMQ/eFraudCom |

| DeepFD [wang2018deep] | Python | Pytorch | Bipartite Graph | https://github.com/JiaWu-Repository/DeepFD-pyTorch |

| ANOMRANK [yoon2019fast] | C++ | - | Dynamic Graph | https://github.com/minjiyoon/anomrank |

| MIDAS [DBLP:conf/aaai/0001HYSF20] | C++ | - | Dynamic Graph | https://github.com/Stream-AD/MIDAS |

| Sedanspot [eswaran2018sedanspot] | C++ | - | Dynamic Graph | https://www.github.com/dhivyaeswaran/sedanspot |

| F-FADE [chang2021f] | Python | Pytorch | Dynamic Graph | http://snap.stanford.edu/f-fade/ |

| DeepSphere [teng2018deep] | Python | Tensorflow | Dynamic Graph | https://github.com/picsolab/DeepSphere |

| Changedar [hooi2018changedar] | Matlab | - | Dynamic Graph | https://bhooi.github.io/changedar/ |

| UPFD [dou2021user] | Python | Pytorch | Graph Database | https://github.com/safe-graph/GNN-FakeNews |

| OCGIN [zhao2020using] | Python | Pytorch | Graph Database | https://github.com/LingxiaoShawn/GLOD-Issues |

| DAGMM [zong2018deep] | Python | Pytorch | Non Graph | https://github.com/danieltan07/dagmm |

| DevNet [pang2019deep] | Python | Tensorflow | Non Graph | https://github.com/GuansongPang/deviation-network |

| RDA [zhou2017anomaly] | Python | Tensorflow | Non Graph | https://github.com/zc8340311/RobustAutoencoder |

| GAD [GAD] | Python | Tensorflow | Non Graph | https://github.com/raghavchalapathy/gad |

| Deep SAD [ruff2019deep] | Python | Pytorch | Non Graph | https://github.com/lukasruff/Deep-SAD-PyTorch |

| DATE [10.1145/3394486.3403339] | Python | Pytorch | Non Graph | https://github.com/Roytsai27/Dual-Attentive-Tree-aware-Embedding |

| STS-NN [STS-NN] | Python | Pytorch | Non Graph | https://github.com/JiaWu-Repository/STS-NN |

| * -: No Dedicated Platforms. | ||||

| Category | Dataset | #G | #N | #E | #FT | #AN | REF | URL |

| Citation Networks | ACM | 1 | 16K | 71K | 8.3K | - | [ding2019deep, ding2019interactive, fan2020anomalydae, ding2020inductive] | http://www.arnetminer.org/open-academic-graph |

| Cora | 1 | 2.7K | 5.2K | 1.4K | - | [li2019specae, bandyopadhyay2020outlier, bandyopadhyay2019outlier, liang2018semi, bojchevski2018bayesian] | http://linqs.cs.umd.edu/projects/projects/lbc | |

| Citeseer | 1 | 3.3K | 4.7K | 3.7K | - | [bandyopadhyay2020outlier, bandyopadhyay2019outlier, liang2018semi, perozzi2016scalable] | http://linqs.cs.umd.edu/projects/projects/lbc | |

| Pubmed | 1 | 19K | 44K | 500 | - | [li2019specae, bandyopadhyay2020outlier, bandyopadhyay2019outlier, liang2018semi] | http://linqs.cs.umd.edu/projects/projects/lbc | |

| DBLP | 1 | - | - | - | - | [yu2018netwalk, eswaran2018sedanspot, bojchevski2018bayesian, hu2016embedding, perozzi2016scalable] | http://www.informatik.uni-trier.de/˜ley/db/ | |

| Social Networks | Enron | - | 80K | - | - | - | [li2017radar, peng2018anomalous, zhang2019robust, gutierrez2020multi, wang2019detecting, yoon2019fast, eswaran2018sedanspot, eswaran2018spotlight, rayana2016less] | http://odds.cs.stonybrook.edu/#table2 |

| UCI Message | 1 | 5K | - | - | - | [yu2018netwalk, cai2020structural, zheng2019addgraph] | http://archive.ics.uci.edu/ml | |

| Google+ | 4 | 75M | 11G | - | - | - | https://wangbinghui.net/dataset.html | |

| Twitter Sybil | 3 | 41M | - | - | 100K | - | https://wangbinghui.net/dataset.html | |

| Twitter WorldCup2014 | - | 54K | - | - | - | [rayana2016less] | http://shebuti.com/SelectiveAnomalyEnsemble/ | |

| Twitter Security2014 | - | 130K | - | - | - | [rayana2016less] | http://shebuti.com/SelectiveAnomalyEnsemble/ | |

| Reality Mining | - | 9.1K | - | - | - | [rayana2016less] | http://shebuti.com/SelectiveAnomalyEnsemble/ | |

| NYTNews | - | 320K | - | - | - | [rayana2016less] | http://shebuti.com/SelectiveAnomalyEnsemble/ | |

| Politifact | 314 | 41K | 40K | - | 157 | [dou2021user] | https://github.com/safe-graph/GNN-FakeNews | |

| Gossipcop | 5.4K | 314K | 308K | - | 2.7K | [dou2021user] | https://github.com/safe-graph/GNN-FakeNews | |

| Co-purchasing Networks | Disney | 1 | 124 | 334 | 30 | 6 | [li2017radar, peng2018anomalous, liu2017accelerated, zhang2019robust, gutierrez2020multi] | https://www.ipd.kit.edu/mitarbeiter/muellere/consub/ |

| Amazon-v1 | 1 | 314K | 882K | 28 | 6.2K | [peng2018anomalous, hooi2017graph, zhu2020mixedad, kumar2018rev2, bojchevski2018bayesian, hu2016embedding, hooi2016fraudar] | https://www.ipd.kit.edu/mitarbeiter/muellere/consub/ | |

| Amazon-v2 | 1 | 11K | - | 25 | 821 | - | https://github.com/dmlc/dgl/blob/master/python/dgl/data/fraud.py | |

| Elliptic | 1 | 203K | 234K | 166 | 4.5K | - | https://www.kaggle.com/ellipticco/elliptic-data-set | |

| Yelp | 1 | 45K | - | 32 | 6.6K | - | https://github.com/dmlc/dgl/blob/master/python/dgl/data/fraud.py | |

| Transportation Networks | New York City Taxi | - | - | - | - | - | [teng2018deep, teng2017anomaly, eswaran2018spotlight] | http://www.nyc.gov/html/tlc/html/about/triprecorddata.shtml |

| * -: Not Given, #G: Number of Graphs, #N: Number of Nodes, #E: Number of Edges, #FT: Number of Features, #AN: Number of Anomalies, REF: References. | ||||||||

10 Published Algorithms and Datasets