A Convex Geodesic Selective Model for Image Segmentation

Abstract

Selective segmentation is an important application of image processing. In contrast to global segmentation in which all objects are segmented, selective segmentation is used to isolate specific objects in an image and is of particular interest in medical imaging – permitting segmentation and review of a single organ. An important consideration is to minimise the amount of user input to obtain the segmentation; this differs from interactive segmentation in which more user input is allowed than selective segmentation. To achieve selection, we propose a selective segmentation model which uses the edge-weighted geodesic distance from a marker set as a penalty term. It is demonstrated that this edge-weighted geodesic penalty term improves on previous selective penalty terms. A convex formulation of the model is also presented, allowing arbitrary initialisation. It is shown that the proposed model is less parameter dependent and requires less user input than previous models. Further modifications are made to the edge-weighted geodesic distance term to ensure segmentation robustness to noise and blur. We can show that the overall Euler-Lagrange equation admits a unique viscosity solution. Numerical results show that the result is robust to user input and permits selective segmentations that are not possible with other models.

Keywords. Variational model, partial differential equations, image segmentation, additive operator splitting, viscosity solution, geodesic.

1. Introduction

Segmentation of an image into its individual objects is one incredibly important application of image processing techniques. Segmentation can take two forms; firstly global segmentation for isolation of all foreground objects in an image from the background and secondly, selective segmentation for isolation of a subset of the objects in an image from the background. A comprehensive review of selective segmentation can be found in [7, 19] and in [45] for medical image segmentation where selection refers to extraction of single organs.

Approaches to image segmentation broadly fall into two classes; region-based and edge-based. Some region-based approaches are region growing [1], watershed algorithms [40], Mumford-Shah [29] and Chan-Vese [11]. The final two of these are partial differential equations (PDEs)-based variational approaches to the problem of segmentation. There are also models which mix the two classes to use the benefits of the region-based and edge-based approaches and will incorporate features of each. Edge-based methods aim to encourage an evolving contour towards the edges in an image and normally require an edge detector function [8]. The first edge-based variational approach was devised by Kass et al. [22] with the famous snakes model, this was further developed by Casselles et al. [8] who introduced the Geodesic Active Contour (GAC) model. Region-based global segmentation models include the well known works of Mumford-Shah [29] and Chan-Vese [11]. Importantly they are non-convex and hence a minimiser of these models may only be a local, not the global, minimum. Further work by Chan et al. [10] gave rise to a method to find the global minimiser for the Chan-Vese model under certain conditions.

This paper is mainly concerned with selective segmentation of objects in an image, given a set of points near the object or objects to be segmented. It builds in such user input to a model using a set where is the image domain [4, 5, 17]. Nguyen et al. [30] considered marker sets and which consist of points inside and outside, respectively, the object or objects to be segmented. Gout et al. [17] combined the GAC approach with the geometrical constraint that the contour passes through the points of . This was enforced with a distance function which is zero at and non-zero elsewhere. Badshah and Chen [4] then combined the Gout et al. model with [11] to incorporate a constraint on the intensity in the selected region, thereby encouraging the contour to segment homogeneouss regions. Rada and Chen [36] introduced a selective segmentation method based on two-level sets which was shown to be more robust than the Badshah-Chen model. We also refer to [5, 23] for selective segmentation models which include different fitting constraints, using coefficient of variation and the centroid of respectively. None of these models have a restriction on the size of the object or objects to be detected and depending on the initialisation these methods have the potential to detect more or fewer objects than the user desired. To address this and to improve on [36], Rada and Chen [37] introduced a model combining the Badshah-Chen [4] model with a constraint on the area of the objects to be segmented. The reference area used to constrain the area within the contour is that of the polygon formed by the markers in . Spencer and Chen [39] introduced a model with the distance fitting penalty as a standalone term in the energy functional, unbounding it from the edge detector term of the Gout et al. model.

All of the above selective segmentation models discussed are non-convex and hence the final result depends on the initialisation. Spencer and Chen [39], in the same paper, reformulated the model they introduced to a convex form using convex relaxation and an exact penalty term as in [10]. Their model uses Euclidean distance from the marker set as a distance penalty term, however we propose replacing this with the edge-weighted geodesic distance from (we call this simply the geodesic distance). This distance increases at edges in the image and is more intuitive for selective segmentation. The proposed model is given as a convex relaxed model with exact penalty term and we give a general existence and uniqueness proof for the viscosity solution to the PDE given by its Euler-Lagrange equation, which is also applicable to a whole class of PDEs arising in image segmentation. We note that the use of geodesic distance for segmentation has been considered before [6, 34], however the models only use geodesic distance as the fitting term within the regulariser, so are liable to make segmentation errors for poor initialisation or complex images. Here we take a different approach, by including geodesic distance as a standalone fitting term, separate from the regulariser, and using intensity fitting terms to ensure robustness.

In this paper we only consider 2D images, however for completion we remark that 3D segmentation models do exist [25, 44] and it is simple to extend the proposed model to 3D. The contributions of this paper can be summarised as follows:

-

•

We incorporate the geodesic distance as a distance penalty term within the variational framework.

-

•

We propose a convex selective segmentation model using this penalty term and demonstrate how it can achieve results which cannot be achieved by other models.

-

•

We improve the geodesic penalty term, focussing on improving robustness to noise and improving segmentation when object edges are blurred.

-

•

We give an existence and uniqueness proof for the viscosity solution for the PDEs associated with a whole class of segmentation models (both global and selective).

We find that the proposed model gives accurate segmentation results for a wide range of parameters and, in particular, when segmenting the same objects from the same modality images, i.e. segmenting lungs from CT scans, the parameters are very similar from one image to the next to obtain accurate results. Therefore, this model may be used to assist the preparation of large training sets for deep learning studies [32, 41, 42] that concern segmentation of particular objects from images.

The paper is structured as follows; in §2 we review some global and selective segmentation models. In §3 we discuss the geodesic distance penalty term, propose a new convex model and also address weaknesses in the naïve implementation of the geodesic distance term. In §4 we discuss the non-standard AOS scheme, introduced in [39], which we use to solve the model. In §5 we give an existence and uniqueness proof for a general class of PDEs arising in image segmentation, thereby showing that for a given initialisation the solution to our model is unique. In §6 we compare the results of the proposed model to other selective segmentation models, show that the proposed model is less parameter dependent than other models and is more robust to user input. Finally, in §7 we provide some concluding remarks.

2. Review of Variational Segmentation Models

Although we focus on selective segmentation, it is illuminating to introduce some global segmentation models first. Throughout this paper we denote the original image by with image domain .

2.1. Global Segmentation

The model of Mumford and Shah [29] is one of the most famous and important variational models in image segmentation. We will review its two-dimensional piecewise constant variant, commonly known as the Chan-Vese model [11], which takes the form

| (1) |

where the foreground is the subdomain to be segmented, the background and are fixed non-negative parameters. The values and are the average intensities of inside and respectively. We use a level set function

to track (an idea popularised by Osher and Sethian [31]) and reformulate (1) as

| (2) |

with a smoothed Heaviside function such as for some , we set throughout. We solve this in two stages, first with fixed we minimise with respect to and , obtaining

| (3) |

and secondly, with and fixed we minimise (2) with respect to . This requires the calculation of the associated Euler-Lagrange equations. A drawback of the Chan-Vese energy functional (2) is that it is non-convex. Therefore a minimiser may only be a local minimum and not the global minimum and the final segmentation result is dependent on the initialisation. Chan et al. [10] reformulated (2) using an exact penalty term to obtain an equivalent convex model – we use this same technique in §2.2 for the Geodesic Model.

2.2. Selective Segmentation

Selective segmentation models make use of user input, i.e. a marker set of points near the object or objects to be segmented. Let be such a marker set. The aim of selective segmentation is to design an energy functional where the segmentation contour is close to the points of .

Early work. An early model by Caselles et al. [8], commonly known as the Geodesic Active Contour (GAC) model, uses an edge detector function to ensure the contour follows edges, the functional to minimise is given by

The term is an edge detector, one example is with a tuning parameter. It is common to smooth the image with a Gaussian filter where is the kernel size, i.e. use as the edge detector. This mitigates the effect of noise in the image, giving a more accurate edge detector. Gout et al. [25] built upon the GAC model by incorporating a distance term into this integral, i.e. the integrand is . The distance term is a penalty on the distance from , this model encourages the contour to be near to the set whilst also lying on edges. However this model struggles when boundaries between objects and their background are fuzzy or blurred. To address this, Badshah and Chen [4] introduced a new model which adds the intensity fitting terms from the Chan-Vese model (1) to the Gout et al. model. However, their model has poor robustness [36]. To improve on this, Rada and Chen [37] introduced a model which adds an area fitting term into the Badshah-Chen model and is far more robust.

The Rada-Chen model [37]. We first briefly introduce this model, defined by

| (4) |

where are fixed non-negative parameters. There is freedom in choosing the distance term , see [37] for some examples. is the area of the polygon formed from the points of and . The final term of this functional puts a penalty on the area inside a contour being very different to . One drawback of the Rada-Chen model is that the selective fitting term uses no location information from the marker set . Therefore the result can be a contour which is separated over the domain into small parts, whose sum area totals the area fitting term.

Nguyen et al. [30]. This model is based on the GAC model and uses likelihood functions as fitting terms, it has the energy functional

where and are the normalised log-likelihoods that the pixel is in the foreground and background respectively. is the probability that pixel belongs to the foreground, and minimisation is constrained, requiring , so is convex. This model is good for many examples, see [30], however fails when the boundary of the object to segment is non-smooth or has fine structures. Also, the final result is sometimes sensitive to the marker sets used.

The Spencer-Chen model [39]. The authors introduced the following model

| (5) |

where are fixed non-negative parameters. Note that the regulariser of this model differs from the Rada-Chen model (4) as the distance function has been separated from the edge detector term and is now a standalone penalty term . The authors use normalised Euclidean distance from the marker set as their distance penalty term. We will discuss this later in §3 as it is one of the key improvements we make to the Spencer-Chen model, replacing the Euclidean distance term with a geodesic distance term.

Convex Spencer-Chen model [39]. Spencer and Chen use the ideas of [10] to reformulate (5) into a convex minimisation problem. It can be shown that the Euler-Lagrange equations for have the same stationary solutions as for

| (6) |

with the minimisation constrained to . This is a constrained convex minimisation which can be reformulated to an unconstrained minimisation using an exact penalty term in the functional, which encourages the minimiser to be in the range . In [39] the authors use a smooth approximation to given by

| (7) |

and perform the unconstrained minimisation of

| (8) |

When , the above functional has the same set of stationary solutions as . It permits us to choose arbitrary initialisation to obtain the desired selective segmentation result due to its complexity.

3. Proposed Convex Geodesic Selective Model

We propose an improved selective model, based on the Spencer-Chen model, which uses geodesic distance from the marker set as the distance term, rather than the Euclidean distance. Increasing the distance when edges in the image are encountered gives a more accurate reflection of the true similarity of pixels in an image from the marker set. We propose minimising the convex functional

| (10) |

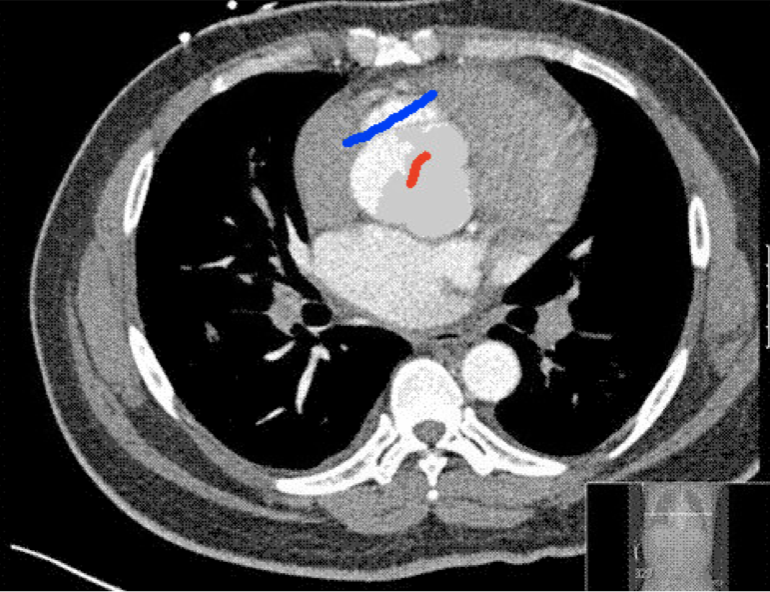

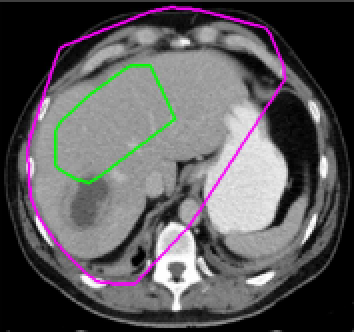

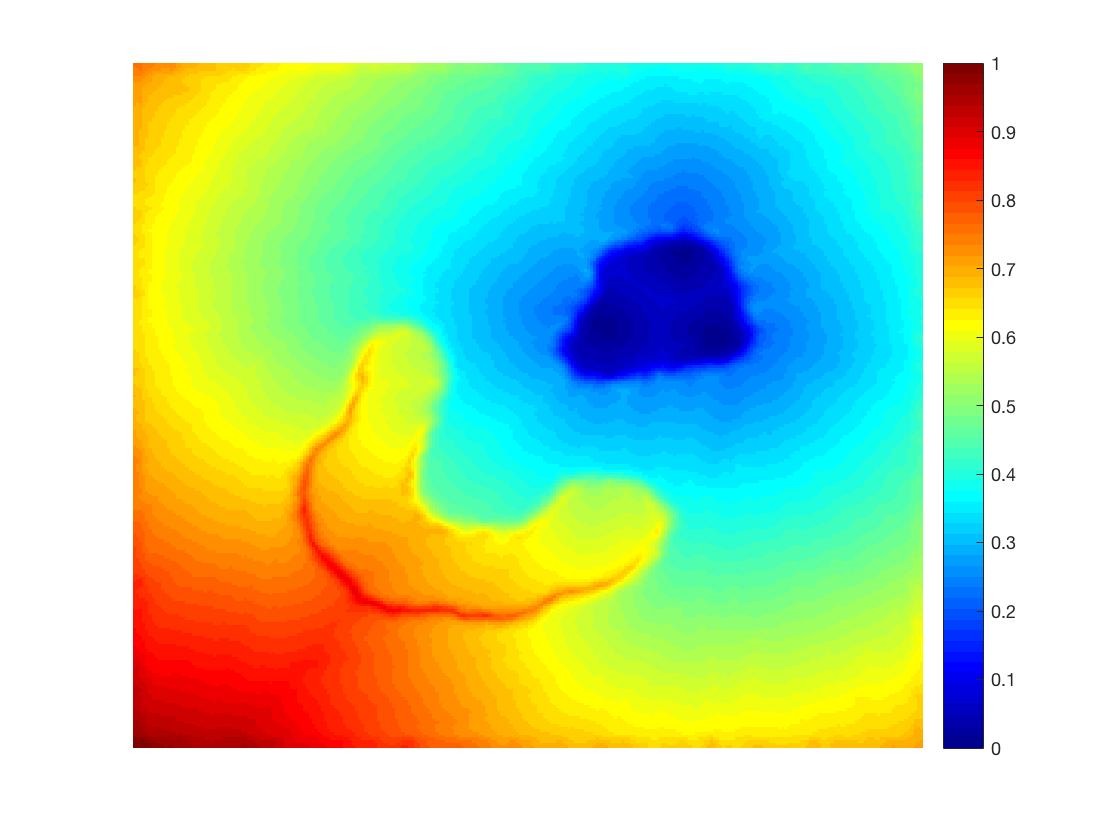

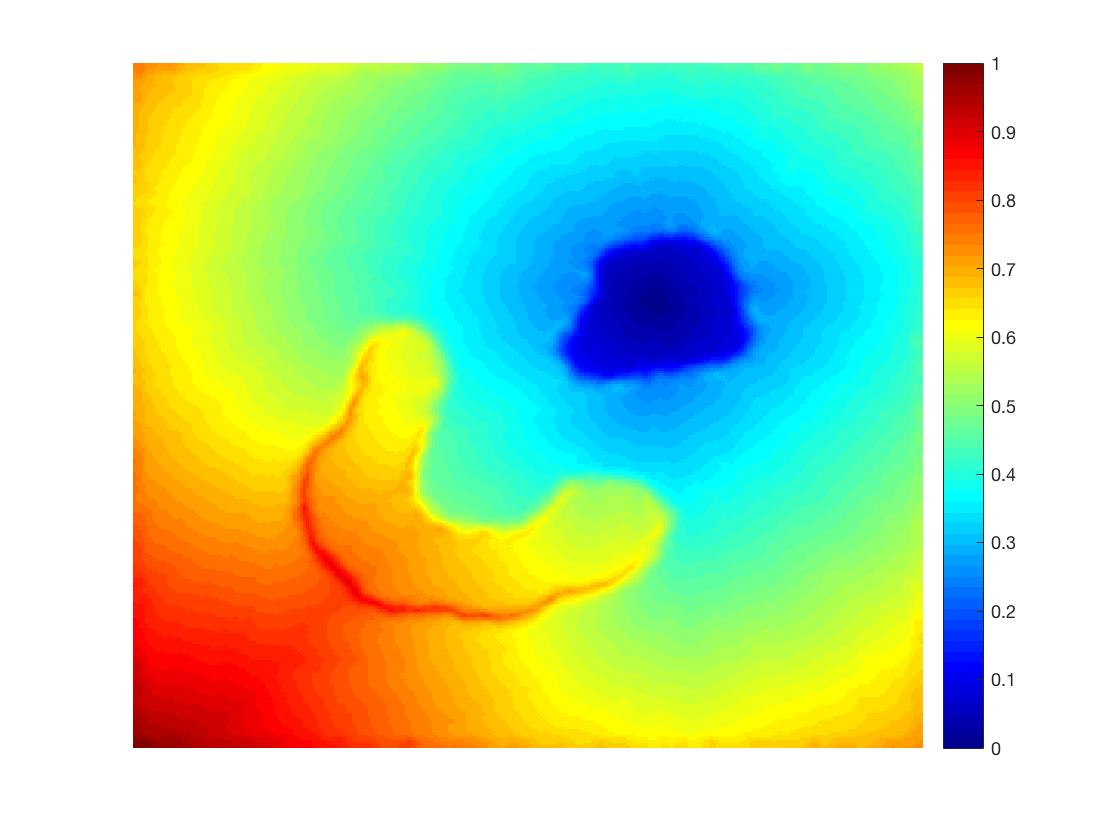

where is the edge-weighted geodesic distance from the marker set. In Figure 1, we compare the normalised geodesic distance and the Euclidean distance from the same marker point (i.e. set has one point in it); clearly the former gives a more intuitively correct distance penalty than the latter. We will refer to this proposed model as the Geodesic Model.

(i) (ii) (iii) (iv)

3.1. Computing the Geodesic Distance Term

The geodesic distance from the marker set is given by for and for , where is the solution of the following PDE

| (11) |

where is defined later on with respect to the image contents.

If (i.e. ) then the distance penalty is simply the normalised Euclidean distance as used in the Spencer-Chen model (5). We have free rein to design as we wish. Looking at the PDE in (11), we see that when small this results in a small gradient in our distance function and it is almost flat. When is large, we have a large gradient in our distance map. In the case of selective image segmentation, we want small gradients in homogeneous areas of the image and large gradients at edges. If we set

| (12) |

this gives us the desired property that in areas where , the distance function increases by some small ; here image is scaled to . At edges, is large and the geodesic distance increases here. We set value of and throughout. In Figure 1, we see that the geodesic distance plot gives a low distance penalty on the triangle, which the marker indicates we would like segmented. There is a reasonable penalty on the background, and all other objects in the image have a very high distance penalty (as the geodesic to these points must cross two edges). This contrasts with the Euclidean distance, which gives a low distance penalty to some background pixels and maximum penalty to the pixels furthest away.

3.2. Comparing Euclidean and Geodesic Distance Terms

We briefly give some advantages of using the geodesic distance as a penalty term rather than Euclidean distance and a remark on the computational complexity for both distances.

-

1.

Parameter Robustness. The Geodesic Model is more robust to the choice of the fitting parameter , as the penalty on the inside of the shape we want segmented is consistently small. It is only outside the shape where the penalty is large. Whereas with the Euclidean distance term we always have a penalty inside the shape we actually want to segment. This is due to the nature of the Euclidean distance which does not discriminate on intensity – this penalty can also be quite high if our marker set is small and doesn’t cover the whole object.

-

2.

Robust to Marker Set Selection. The geodesic distance term is far more robust to point selection, for example we can choose just one point inside the object we want to segment and this will give a nearly identical geodesic distance compared to choosing many more points. This is not true of the Euclidean distance term which is very sensitive to point selection and requires markers to be spread in all areas of the object you want to segment (especially at extrema of the object).

Remark 1 (Computational Complexity.).

The main concern of using the geodesic penalty term, which we obtain by solving PDE (11), would be that it takes a significant amount of time to compute compared to the Euclidean distance. However, using the fast marching algorithm of Sethian [38], the complexity of computing is for an image with pixels. This is is only marginally more complex than computing the Euclidean distance which has complexity [28].

3.3. Improvements to Geodesic Distance Term

We now propose some modifications to the geodesic distance. Although the geodesic distance presents many advantages for selective image segmentation, we have three key disadvantages of this fitting term, which the Euclidean fitting term does not suffer.

-

1.

Not robust to noise. The computation of the geodesic distance depends on in (see (11)). So, if an image contains a lot of noise, each noisy pixel appears as an edge and we get a misleading distance term.

-

2.

Objects far from with low penalty. As the geodesic distance only uses marker set for its initial condition (see (11)), this can result in objects far from having a low distance penalty, which is clearly not desired.

-

3.

Blurred edges. If we have two objects separated by a blurry edge and we have marker points only in one object, the geodesic distance will be low to the other object, as the edge penalty is weakly enforced for a blurry edge. We would desire low penalty inside the object with markers and a reasonable penalty in the joined object.

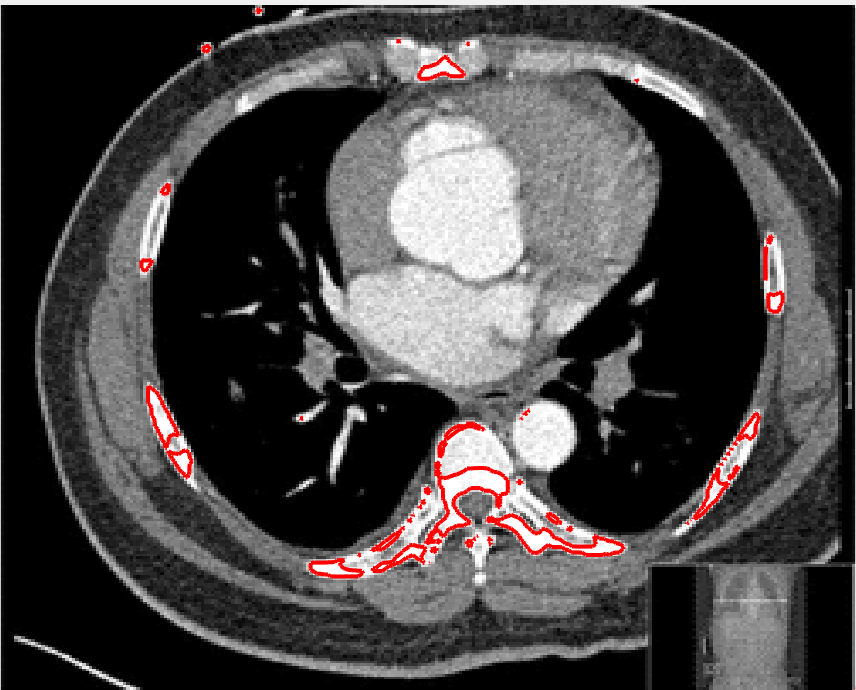

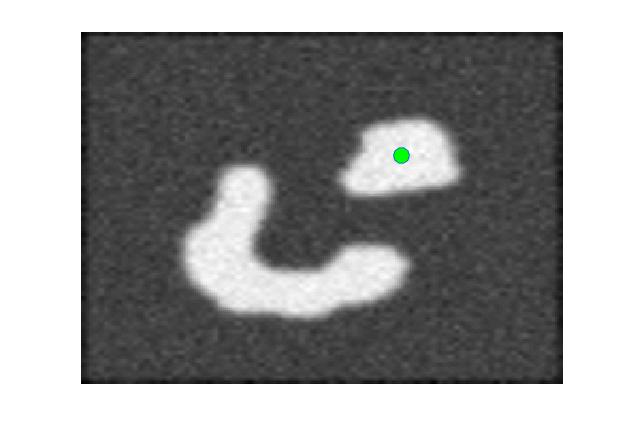

In Figure 2, each column shows an example for each of the problems listed above. We now propose solutions to each of these problems.

Problem 1: Noise Robustness. A naïve solution to the problem of noisy images would be to apply a Gaussian blur to to remove the effect of the noise, so we change to

| (13) |

where is a Gaussian convolution with standard deviation . However, the effect of Gaussian convolution is that it also blurs edges in the image. This then gives us the same issues described in Problem 3. We see in Figure 3 column 3, that the Gaussian convolution reduces the sharpness of edges and this results in the geodesic distance being very similar in adjacent objects – therefore we see more pixels with high geodesic distance.

Our alternative to Gaussian blur is to consider anisotropic TV denoising. We refer the reader to [9, 33] for information on the model, here we just give the PDE which results from its minimisation:

| (14) |

where are non-negative parameters (we fix throughout ). It is proposed to apply a relatively small number of cheap fixed point Gauss-Seidel iterations (between 100 and 200) to the discretised PDE. We cycle through all pixels and update as follows

| (15) |

where , , and . We update all pixels once per iteration and solve the PDE in (11) with replaced by

| (16) |

where represents the Gauss-Seidel iterative scheme and is the number of iterations performed (we choose in our tests). In the final column of Figure 3 we see that the geodesic distance map more closely resembles that of the clean image than the Gaussian blurred map in column 3 and in Figure 4 we see that the segmentation results are qualitatively and quantitatively better using the anisotropic smoothing technique.

Problem 2: Objects far from with low penalty.

In Figure 2 column 2 we see that the geodesic distance to the outside of the patient is lower than to their ribs. This is due to the fact that the region outside the body is homogeneous and there is almost zero distance penalty in this region. Similarly for Figure 3 column , the distances from the marker set to many surrounding objects is low, even though their Euclidean distance from the marker set is high. We wish to have the Euclidean distance incorporated somehow. Our solution is to modify the term from (16) to

| (17) |

In Figure 5 the effect of this is clear, as increases, the distance function resembles the Euclidean distance more. We use value in all experiments as it adds a reasonable penalty to pixels far from the marker set.

Problem 3: Blurred edges.

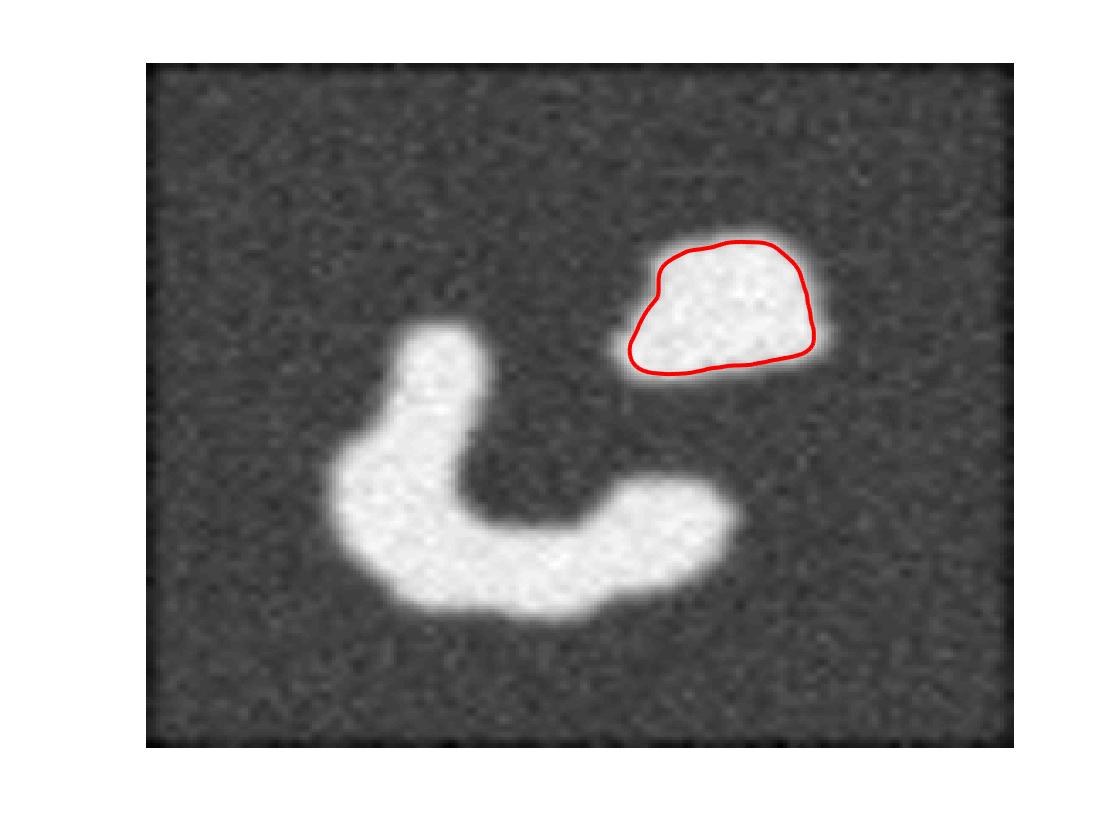

If there are blurred edges between objects in an image, the geodesic distance will not increase significantly at this edge. Therefore the final segmentation result is liable to include unwanted objects. We look to address this problem through the use of anti-markers. These are markers which indicate objects that we do not want to segment, i.e. the opposite of marker points, we denote the set of anti-marker points by .

We propose to use a geodesic distance map from the set denoted by which penalises pixels near to the set and doesn’t add any penalty to those far away. We could naïvely choose where is the normalised geodesic distance from . However this puts a large penalty on those pixels inside the object we actually want to segment (as to those pixels is small). To avoid this problem, we propose the following anti-marker distance term

where is a tuning parameter. We choose throughout. This distance term ensures rapid decay of the penalty away from the set but still enforces high penalty around the anti-marker set itself. See Figure 6 where a segmentation result with and without anti-markers is shown. As decays rapidly from , we do require that the anti-marker set be close to the blurred edge and away from the object we desire to segment.

3.4. The new model and its Euler-Lagrange equation

The Proposed Geodesic Model. Putting the above ingredients together, we propose the model

|

|

(18) |

where and is the geodesic distance from the marker set . We compute using (11) where defined in (17). Using Calculus of Variations, solving (18) with respect to , with fixed, leads to

| (19) |

and the minimisation with respect to (with and fixed) gives the PDE

| (20) |

in , where we replace with to avoid zero denominator; we choose throughout. We also have Neumann boundary conditions on where is the outward unit normal vector.

Next we discuss a numerical scheme for solving this PDE (20). However it should be remarked that updating should be done as soon as is updated; practically converge very quickly since the object intensity does not change much.

4. An additive operator splitting algorithm

Additive Operator Splitting (AOS) is a widely used method [14, 27, 43] as seen from more recent works [2, 3, 4, 5, 37, 39] on the diffusion type equation such as

| (21) |

AOS allows us to split the two dimensional problem into two one-dimensional problems, which we solve and then combine. Each one dimensional problem gives rise to a tridiagonal system of equations which can be solved efficiently, hence AOS is a very efficient method for solving diffusion-like equations. AOS is a semi-implicit method and permits far larger time-steps than the corresponding explicit schemes would. Hence AOS is more stable than an explicit method [43]. We rewrite the above equation as

and after discretisation, we can rewrite this as [43]

where is the time-step, and . For notational convenience we write . The matrix can be obtained as follows

|

|

and similarly to [37, 39], for the half points in we take the average of the surrounding pixels, e.g. . Therefore we must solve two tridiagonal systems to obtain , the Thomas algorithm allows us to solve each of these efficiently [43]. The AOS method described here assumes does not depend on , however in our case it depends on (see (20)) which has jumps around 0 and 1, so the algorithm has stability issues. This was noted in [39] and the authors adapted the formulation of (20) to offset the changes in . Here we repeat their arguments for adapting AOS when the exact penalty term is present (we refer to Figures 7 and 8 for plots of the penalty function and its derivative, respectively).

The main consideration is to extract a linear part out of the nonlinearity in . If we evaluate the Taylor expansion of around and and group the terms into the constant and linear components in , we can respectively write and . We actually find that and denote the linear term as from now on. Therefore, for a change in of around and , we can approximate the change in by .

To focus on the jumps, define the interval in which jumps as

and refine the linear function by

Using these we can now offset the change in by changing the formulation (21) to

or in AOS form which, following the derivation in [39], can be reformulated as

where . We note that is invertible as it is strictly diagonally dominant. This scheme improves on (21) as now, changes in are damped. However, it is found in [39] that although this scheme does satisfy most of the discrete scale space conditions of Weickert [43] (which guarantee convergence of the scheme), it does not satisfy all of them. In particular the matrix doesn’t have unit row sum and is not symmetrical. The authors adapt the scheme above to the equivalent

| (22) |

where the matrix does have unit row sum, however the matrix is not always symmetrical. We can guarantee convergence for (in which case must be symmetrical) but we desire to use a small to give a small interval . We find experimentally that convergence is achieved for any small value of , this is due to the fact that at convergence the solution is almost binary [10]. Therefore, although initially is asymmetrical at some pixels, at convergence all pixels have values which fall within and is a matrix with all diagonal entries . Therefore we find that at convergence is symmetrical and the discrete scale space conditions are all satisfied. In all of our tests we fix .

5. Existence and Uniqueness of the Viscosity Solution

In this section we use the viscosity solution framework and the work of Ishii and Sato [20] to prove that, for a class of PDEs in image segmentation, the solution exists and is unique. In particular, we prove the existence and uniqueness of the viscosity solution for the PDE which is determined by the Euler-Lagrange equation for the Geodesic Model. Throughout, we will assume is a bounded domain with boundary.

From the work of [12, 20], we have the following Theorem for analysing the solution of a partial differential equation of the form where , is the set of symmetric matrices, is the gradient of and is the Hessian of . For simplicity, and in a slight abuse of notation, we use for the vector of a general point in .

Theorem 2 (Theorem 3.1 [20]).

| (23) |

| (24) |

| (25) |

Conditions (C1)–(C2).

-

(C1)

for .

-

(C2)

for and .

Conditions (I1)–(I7). Assume is a bounded domain in with boundary.

-

(I1)

.

-

(I2)

There exists a constant such that for each the function is non-decreasing on .

-

(I3)

is continuous at for any in the sense that

holds. Here and denote, respectively, the upper and lower semi-continuous envelopes of , which are defined on .

-

(I4)

, where is the Hölder functional space.

-

(I5)

For each the function is positively homogeneous of degree one in , i.e. for all and .

-

(I6)

There exists a positive constant such that for all and . Here denotes the unit outward normal vector of at .

-

(I7)

For each there exists a non-decreasing continuous function satisfying such that if and satisfy

(26) then

for all , with , and .

5.1. Existence and uniqueness for the Geodesic Model

We now prove that there exists a unique solution for the PDE (20) resulting from the minimisation of the functional for the Geodesic Model (18).

Remark 3.

It is important to note that although the values of and depend on , they are fixed when we solve the PDE for and therefore the probem is a local one and Theorem 2 can be applied. Once we update and , using the updated , then we fix them again and apply Theorem 2. In practice, as we near convergence, we find and stabilise so we typically stop updating and once the change in both values is below a tolerance.

To apply the above theorem to the proposed model (20), the key step will be to verify the nine conditions. First, we multiply (20) by the factor , obtaining the nonlinear PDE

| (27) | ||||

where . We can rewrite this as

| (28) |

where , , , is the Hessian of and

| (29) |

Theorem 4 (Theory for the Geodesic Model).

The parabolic PDE with , as defined in (28) and Neumann boundary conditions has a unique solution in .

Proof. By Theorem 2, it remains to verify that satisfies (C1)–(C2) and (I1)–(I7). We will show that each of the conditions is satisfied. Most are simple to show, the exception being (I7) which is non-trivial.

(C1): Equation (28) only has dependence on in the term , we therefore have a restriction on the choice of , requiring for . This is satisfied for with defined as in (7).

(C2): We find for arbitrary that and so . It follows that , therefore this condition is satisfied.

(I1): is only singular at , however it is continuous elsewhere and satisfies this condition.

(I2): In the only term which depends on is . With defined as in (7), in the limit this function is a step function from on , on and on . So we can choose any constant . With there is smoothing at the end of the intervals, however there is still a lower bound on for and we can choose any constant .

(I3): is continuous at for any because Hence this condition is satisfied.

(I4): The Euler-Lagrange equations give Neumann boundary conditions

on , where is the outward unit normal vector, and we see that and therefore this condition is satisfied.

(I5): By the definition above, . So this condition is satisfied.

(I6): As before we can use the definition, . So we can choose and the condition is satisfied.

(I7): This is the most involved condition to prove and uses many other results. For clarity of the overall paper, we postpone the proof to Appendix A.

5.2. Generalisation to other related models

Theorems 2 and 4 can be generalised to a few other models. This amounts to writing each model as a PDE of the form (28) where is monotone and are bounded. This is summarised in the following Corollary:

Corollary 5.

Assume that and are fixed, with the terms and respectively defined as follows for a few related models:

-

•

Chan-Vese [11]: , .

-

•

Chan-Vese (Convex) [10]: , .

- •

-

•

Nguyen et al. [30]: , .

-

•

Spencer-Chen (Convex) [39]: , .

Then if we define a PDE of the general form

with

-

(i)

Neumann boundary conditions ( the outward normal unit vector)

-

(ii)

satisfies if

-

(iii)

and are bounded; and

-

(iv)

or ,

we have a unique solution for a given initialisation. Consequently we conclude that all above models admit a unique solution.

Proof. The conditions (i)–(iv) are hold for all of these models. All of these models require Neumann boundary conditions and use the permitted G(x). The monotonicity of is discussed in the proof of (C1) for Theorem 4 and the boundedness of and is clear in all cases.

Remark 6.

Remark 7.

We cannot apply the classical viscosity solution framework to the Rada-Chen model [37] as this is a non-local problem with .

6. Numerical Results

In this section we will demonstrate the advantages of the Geodesic Model for selective image segmentation over related and previous models. Specifically we shall compare

-

•

M1 — the Nguyen et al. (2012) model [30];

-

•

M2 — the Rada-Chen (2013) model [37];

-

•

M3 — the convex Spencer-Chen (2015) model [39];

-

•

M4 — the convex Liu et al. (2017) model [26];

-

•

M5 — the reformulated Rada-Chen model with geodesic distance penalty (see Remark 8);

-

•

M6 — the reformulated Liu et al. model with geodesic distance penalty (see Remark 8);

-

•

M7 — the proposed convex Geodesic Model (Algorithm 1).

Remark 8 (A note on M5 and M6).

We include M5 – M6 to test how the geodesic distance penalty term can improve M2 [37] and M4 [26]. These were obtained as follows:

-

•

we extend M2 to M5 simply by including the geodesic distance function in the functional.

-

•

we extend M4 to M6 with a minor reformulation to include data fitting terms. Specifically, the model M6 is

(30) for non-negative fixed parameters, and as defined in (7) and as defined for the convex Liu et al. model. This is a convex model and is the same as the proposed Geodesic Model M7 but with weighted intensity fitting terms.

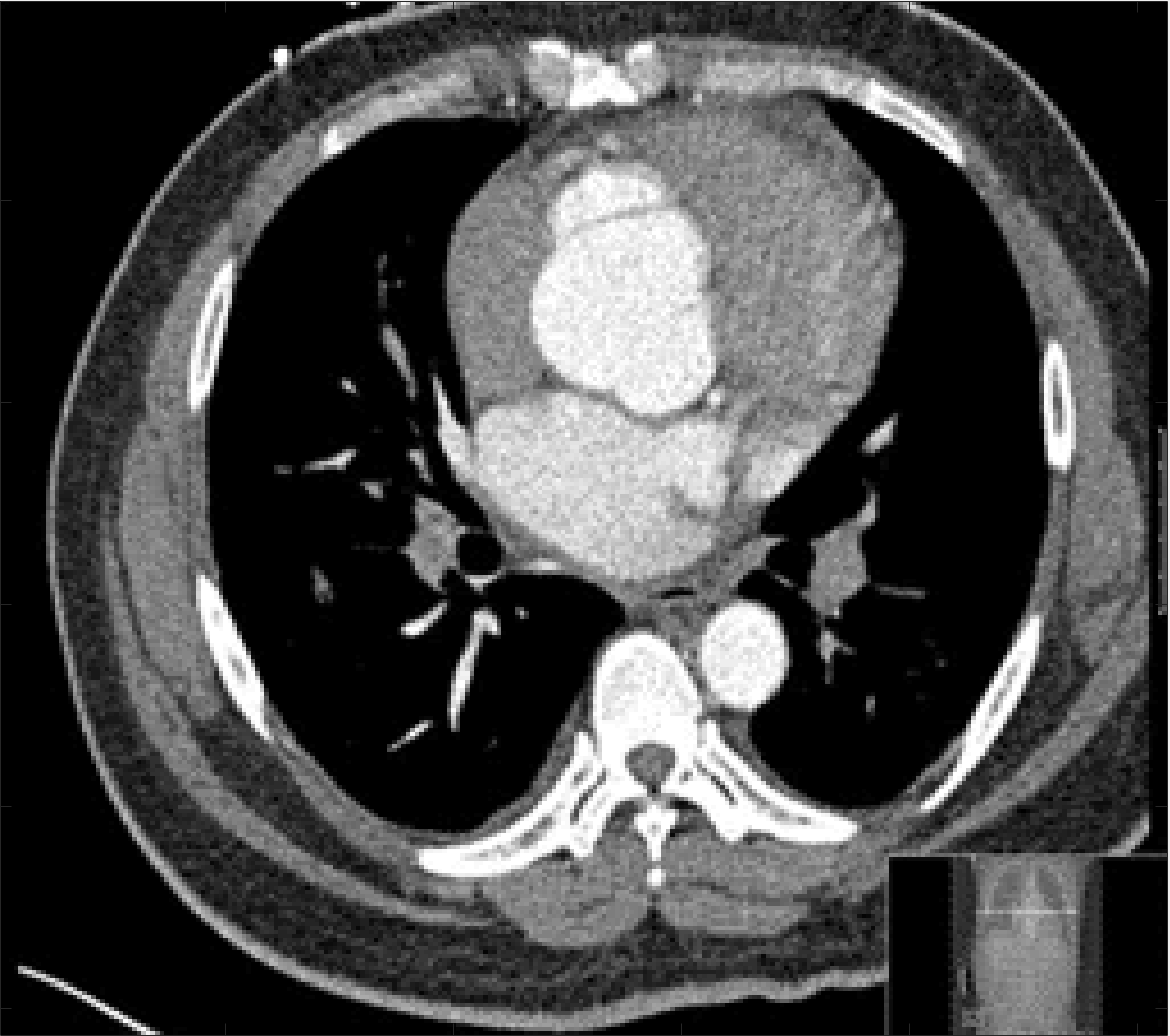

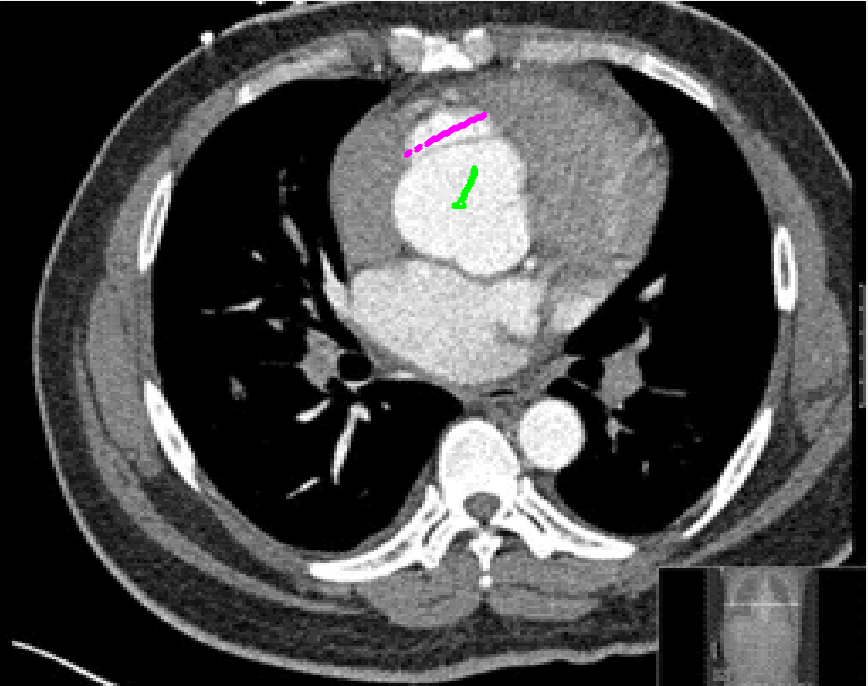

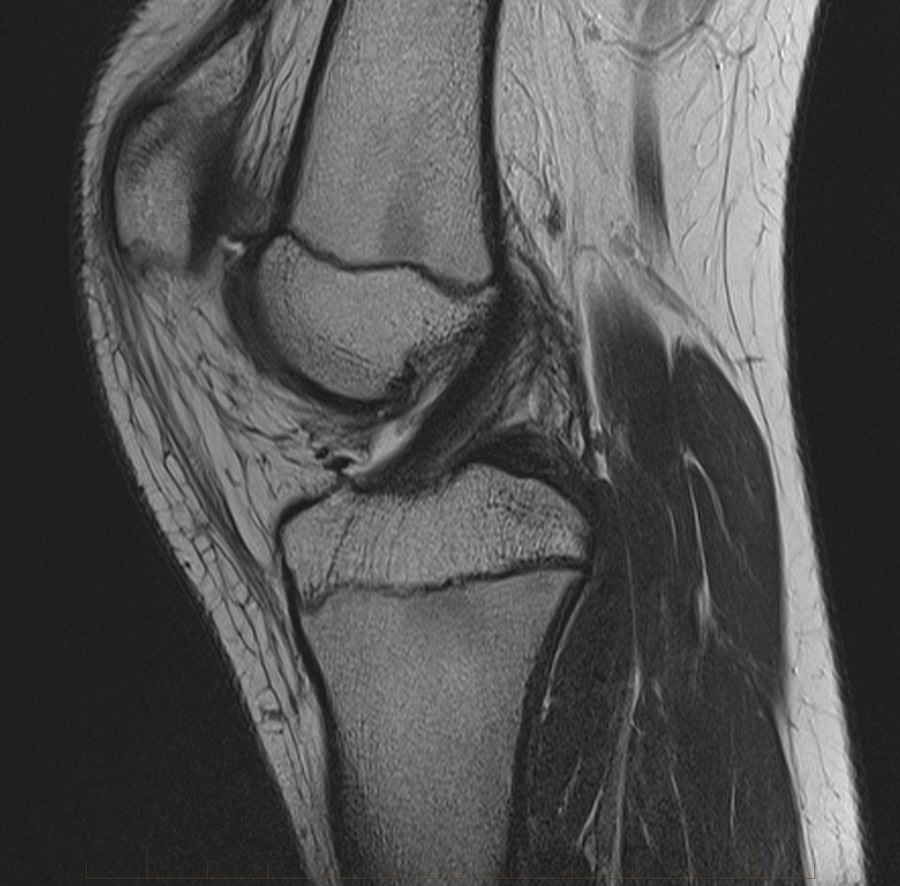

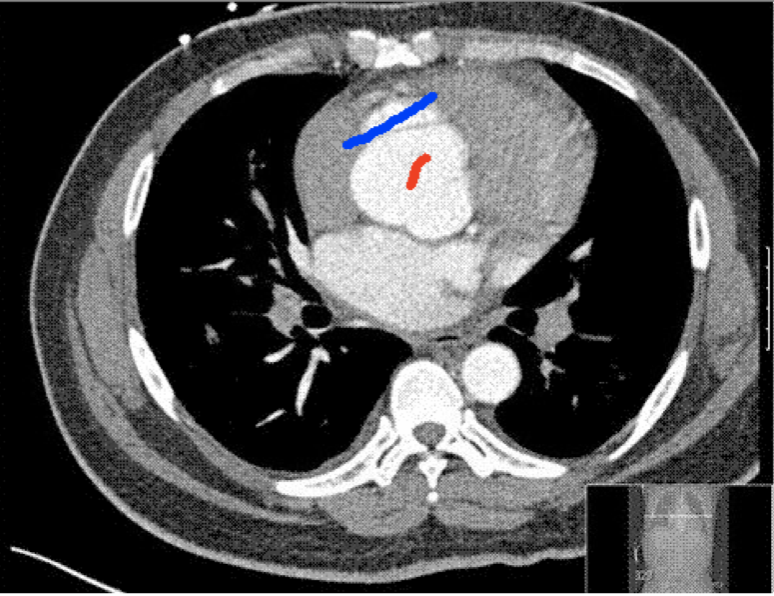

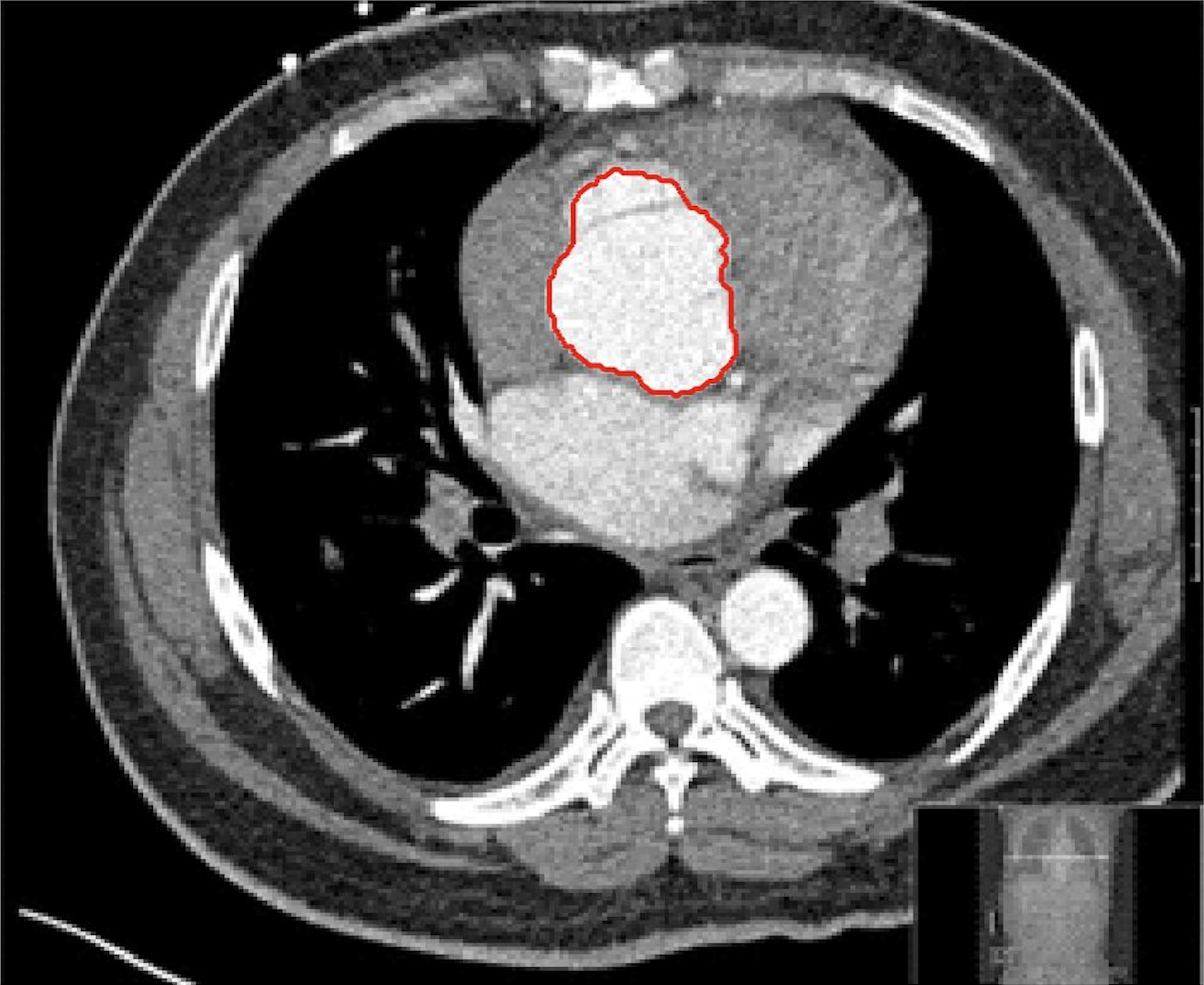

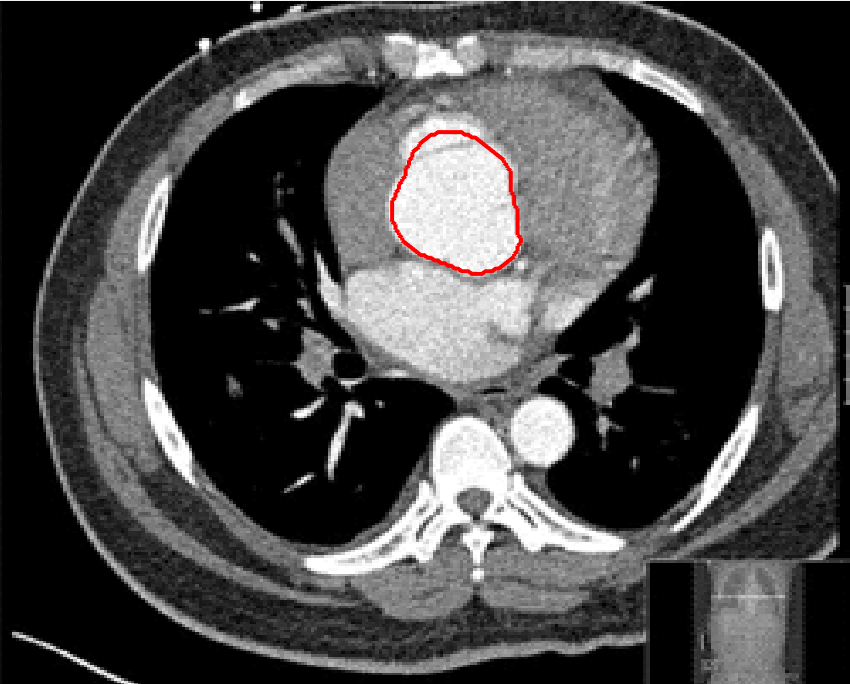

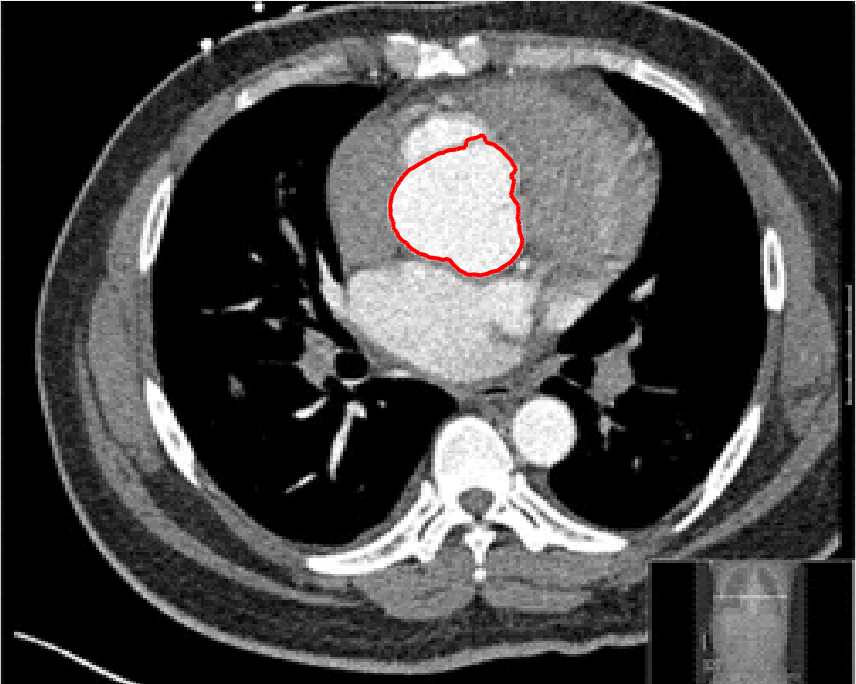

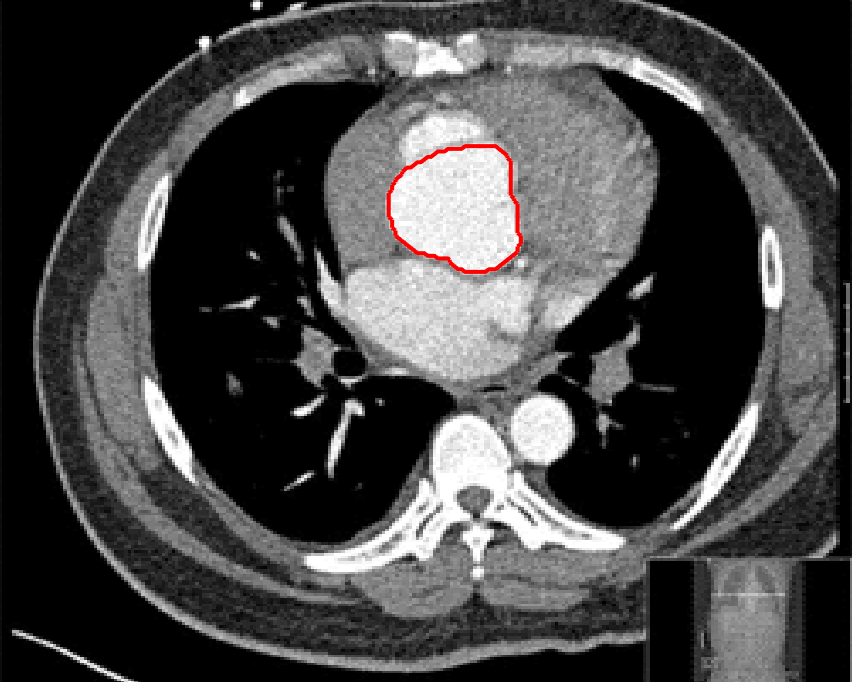

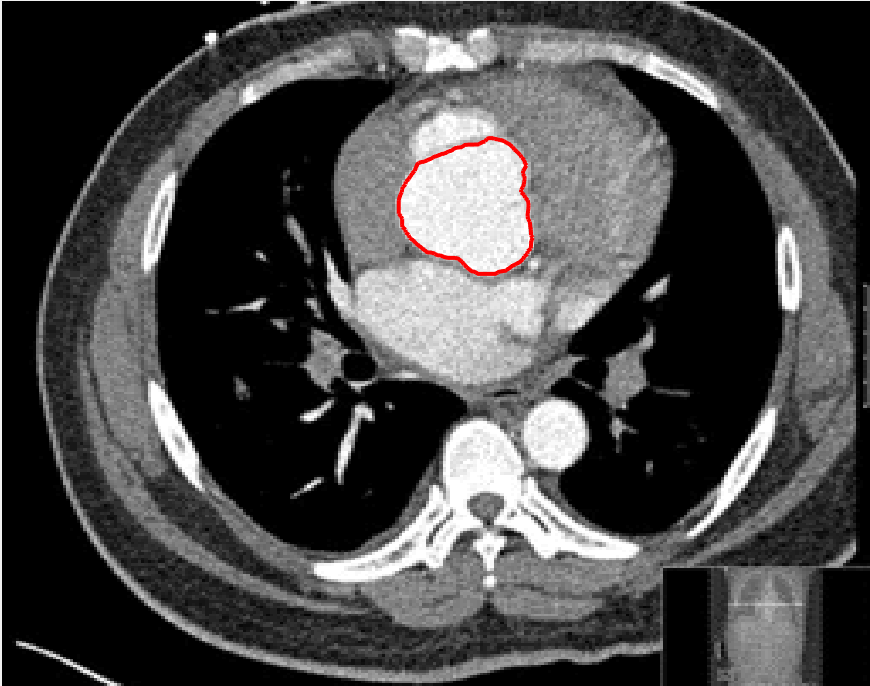

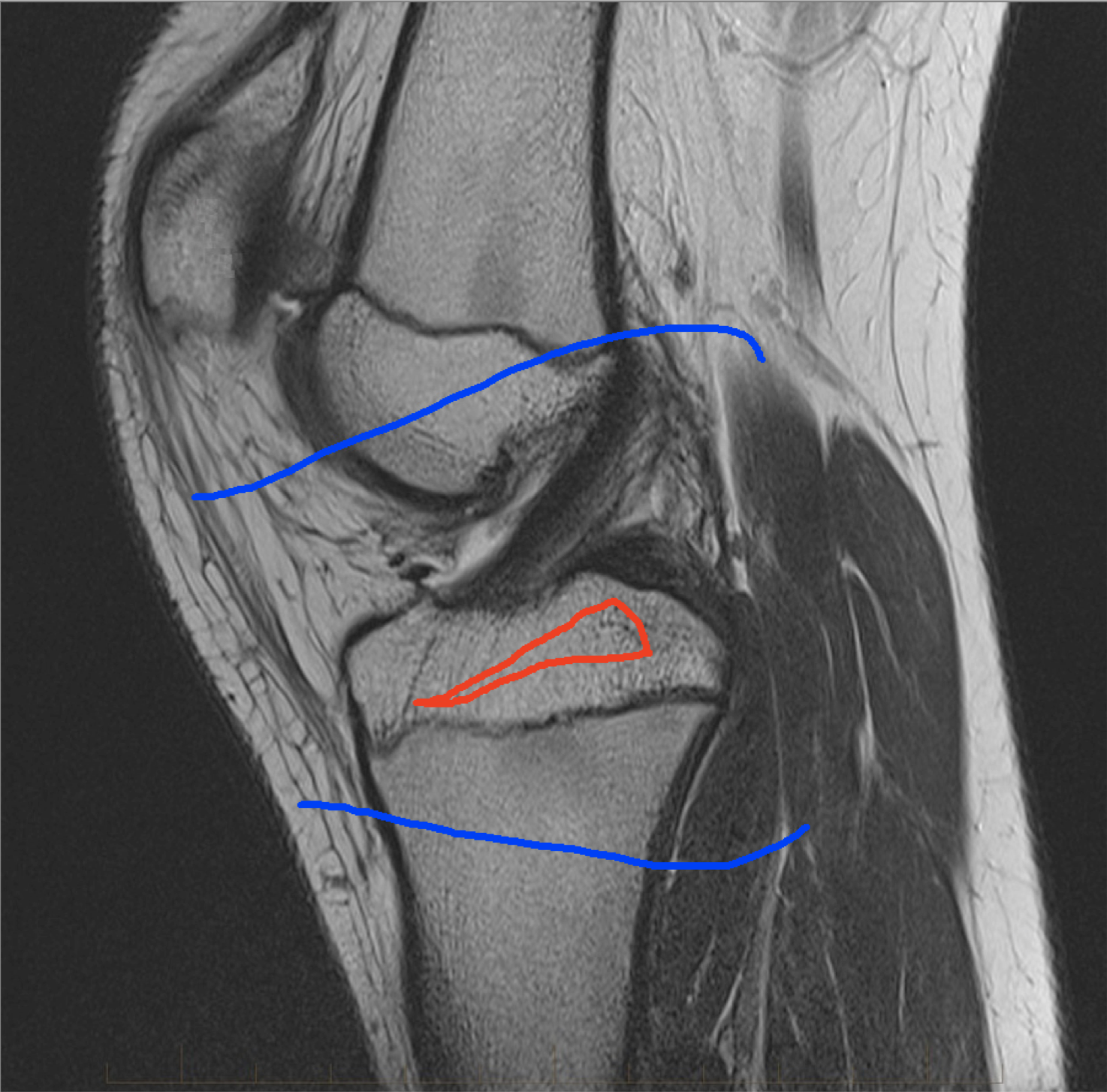

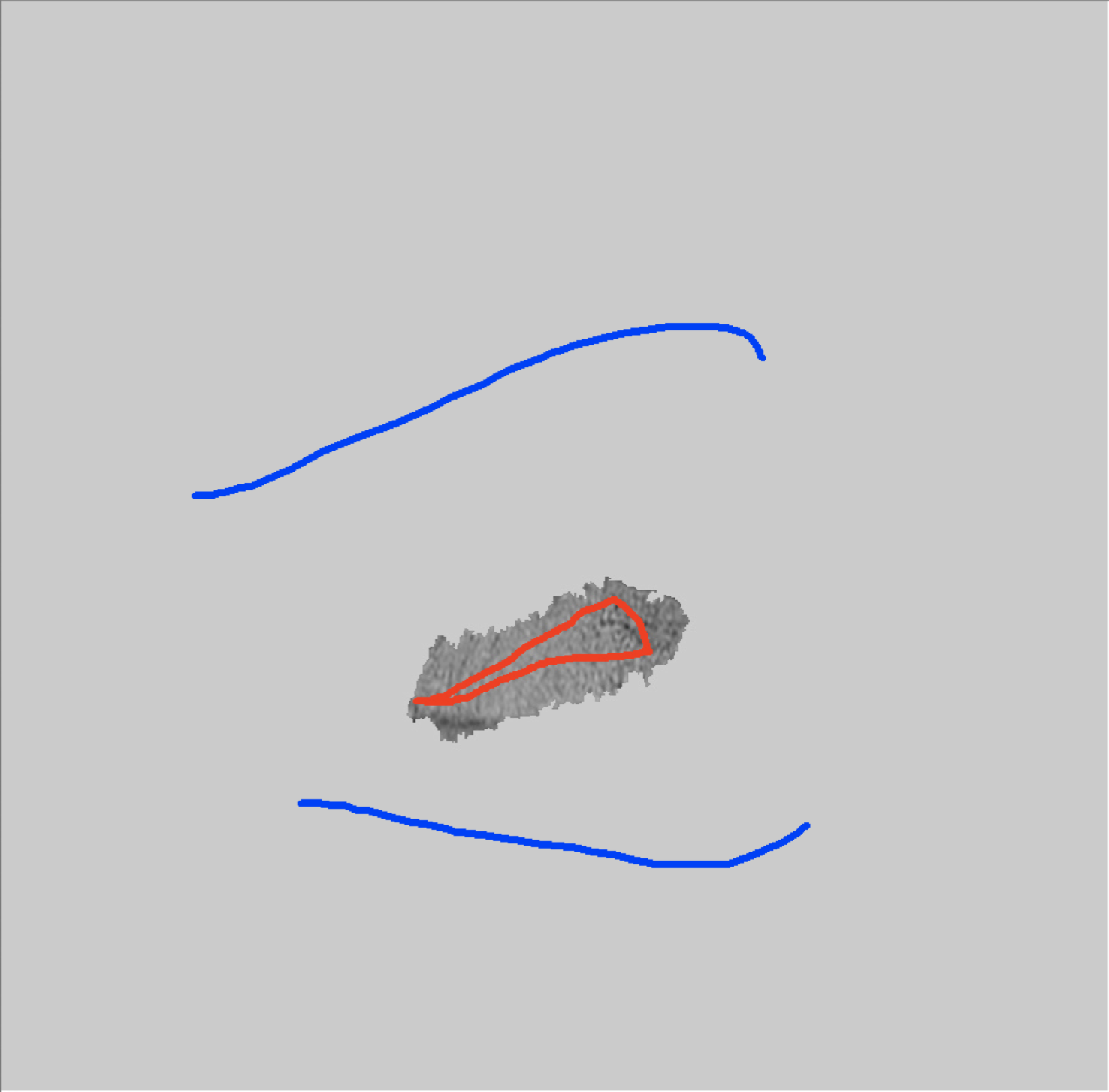

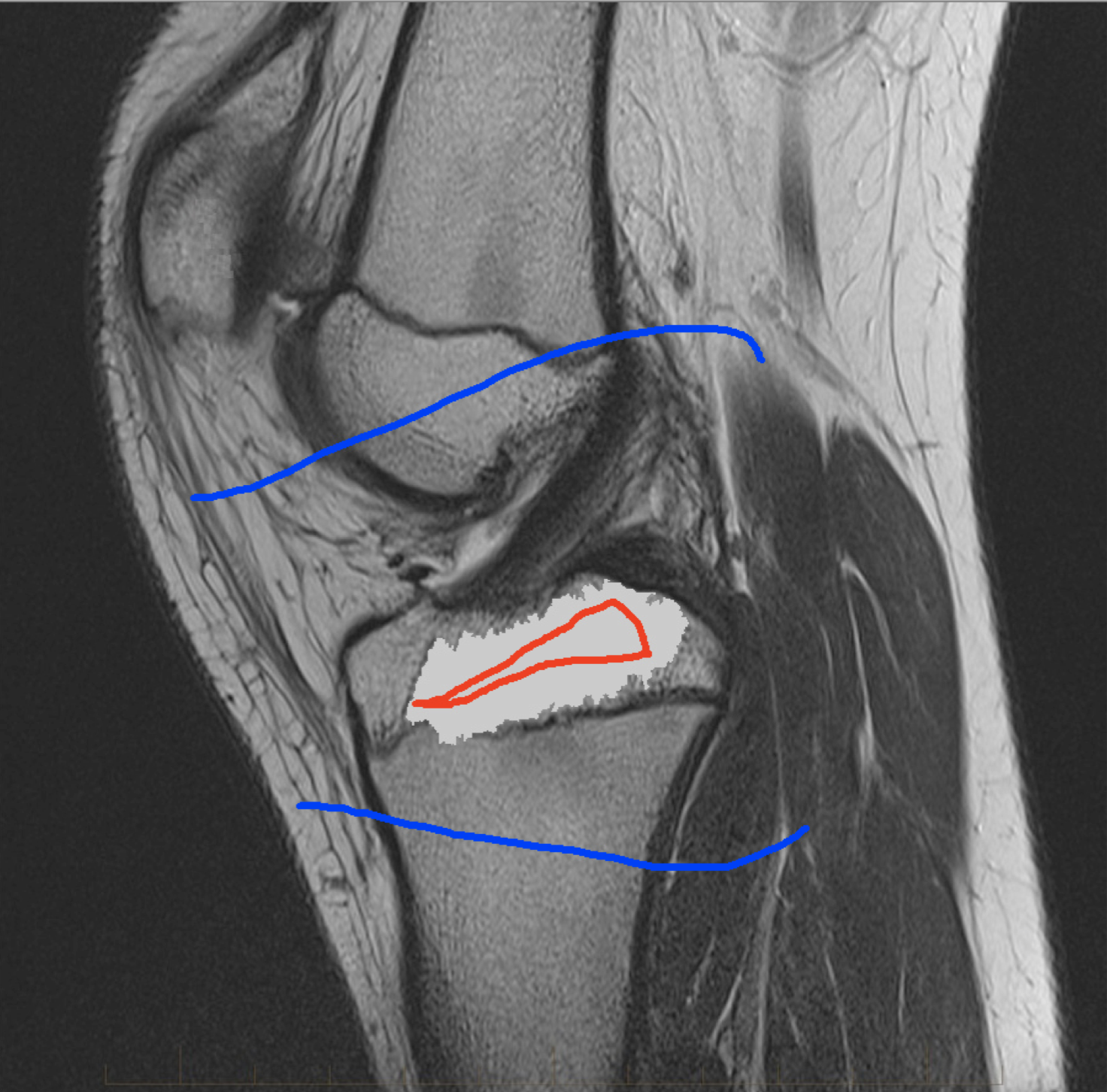

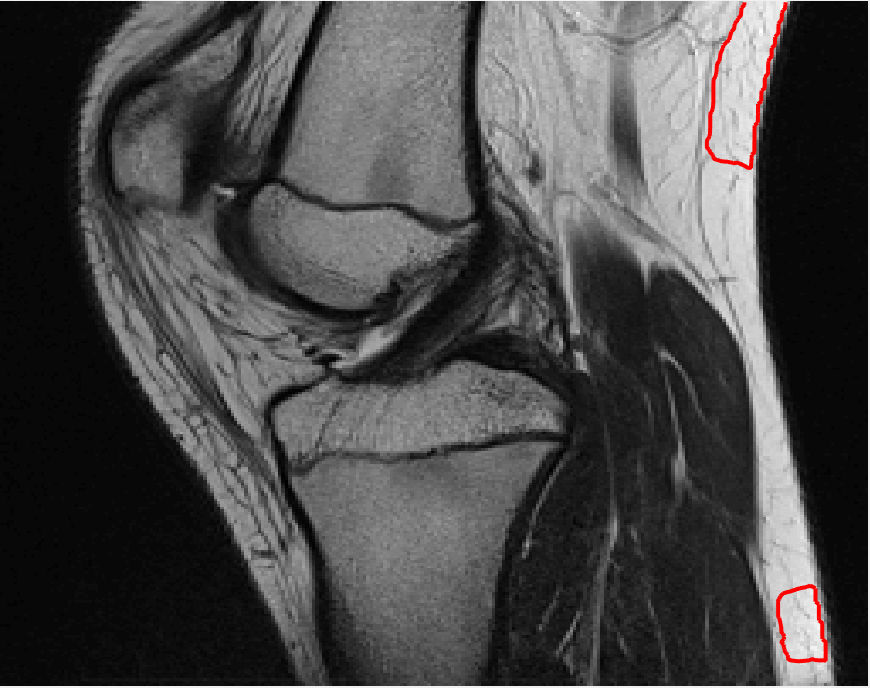

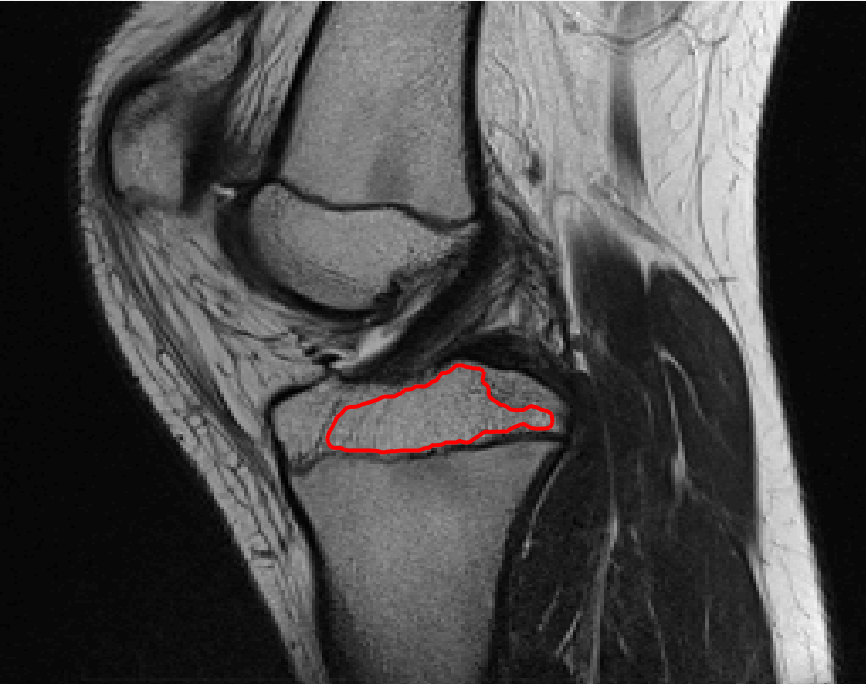

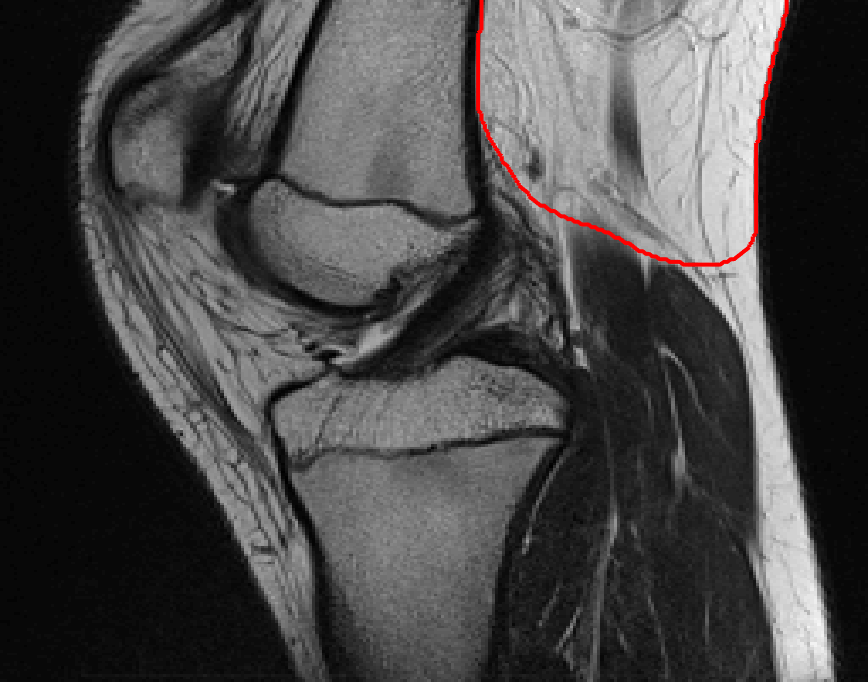

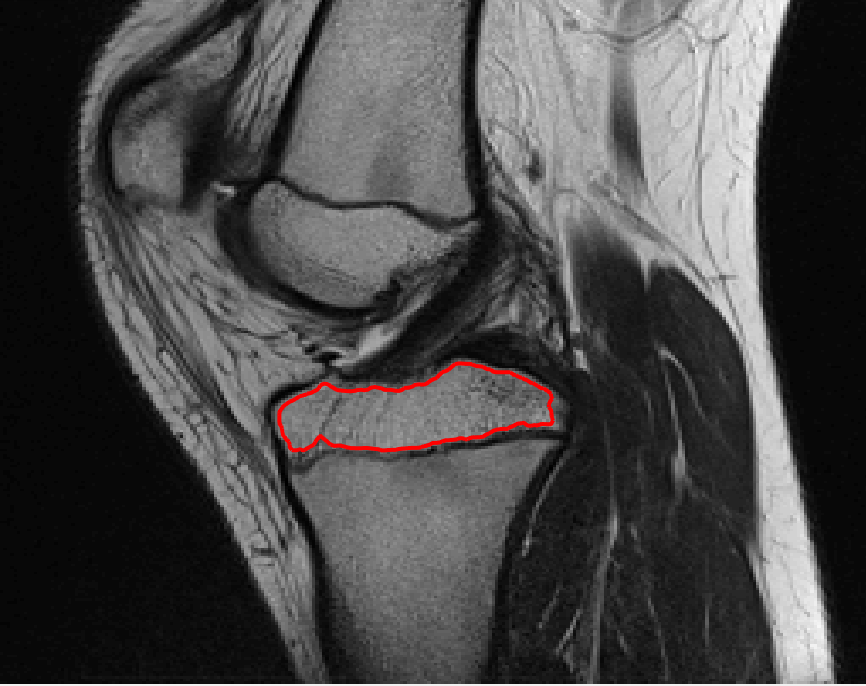

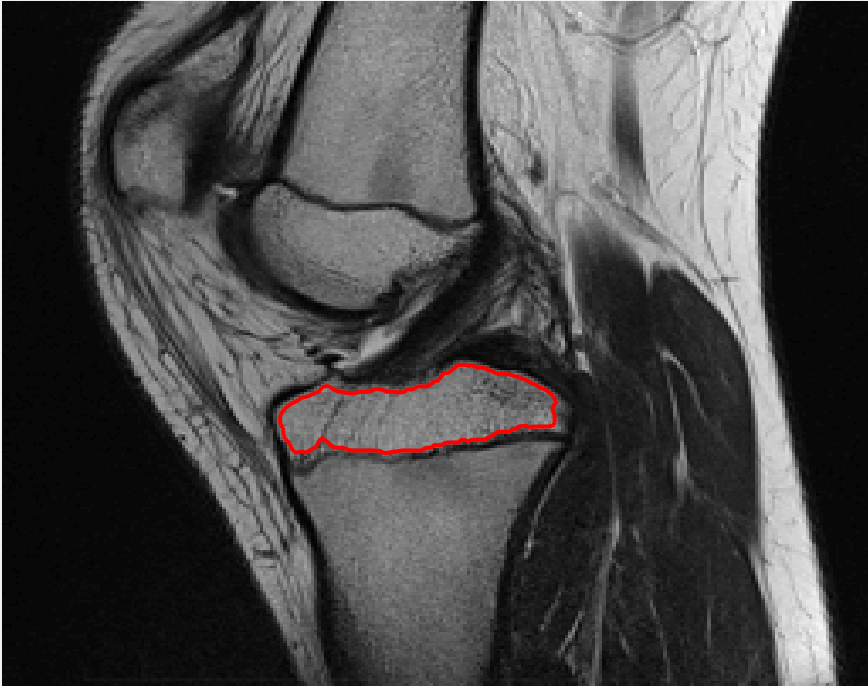

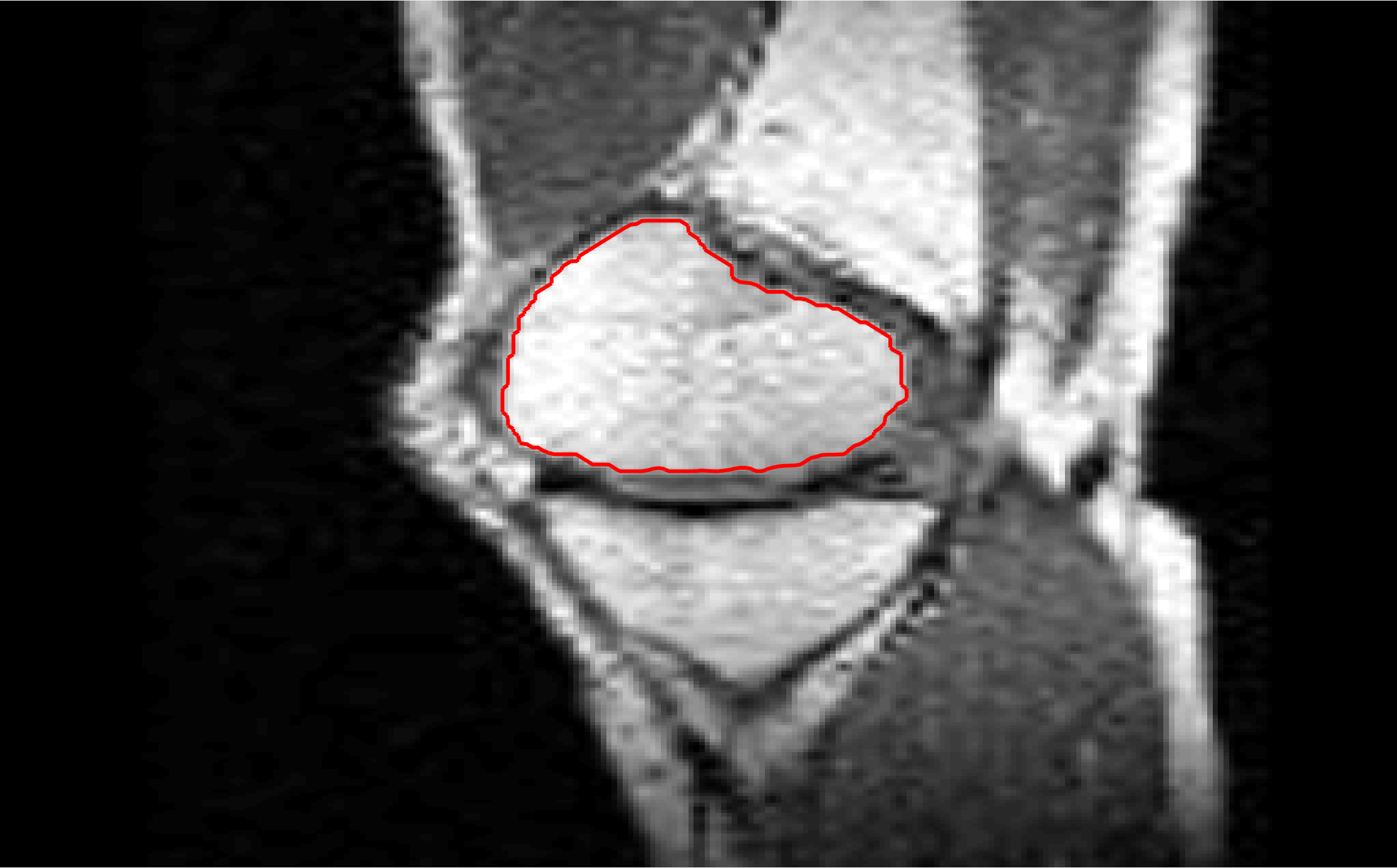

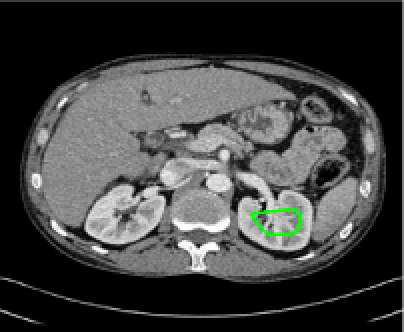

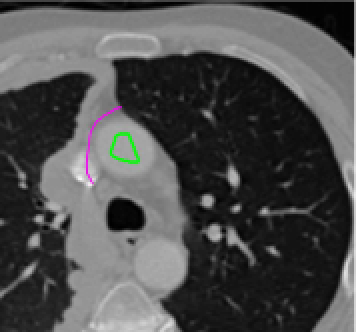

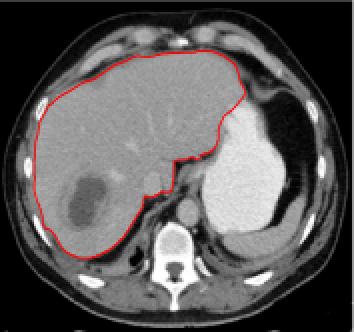

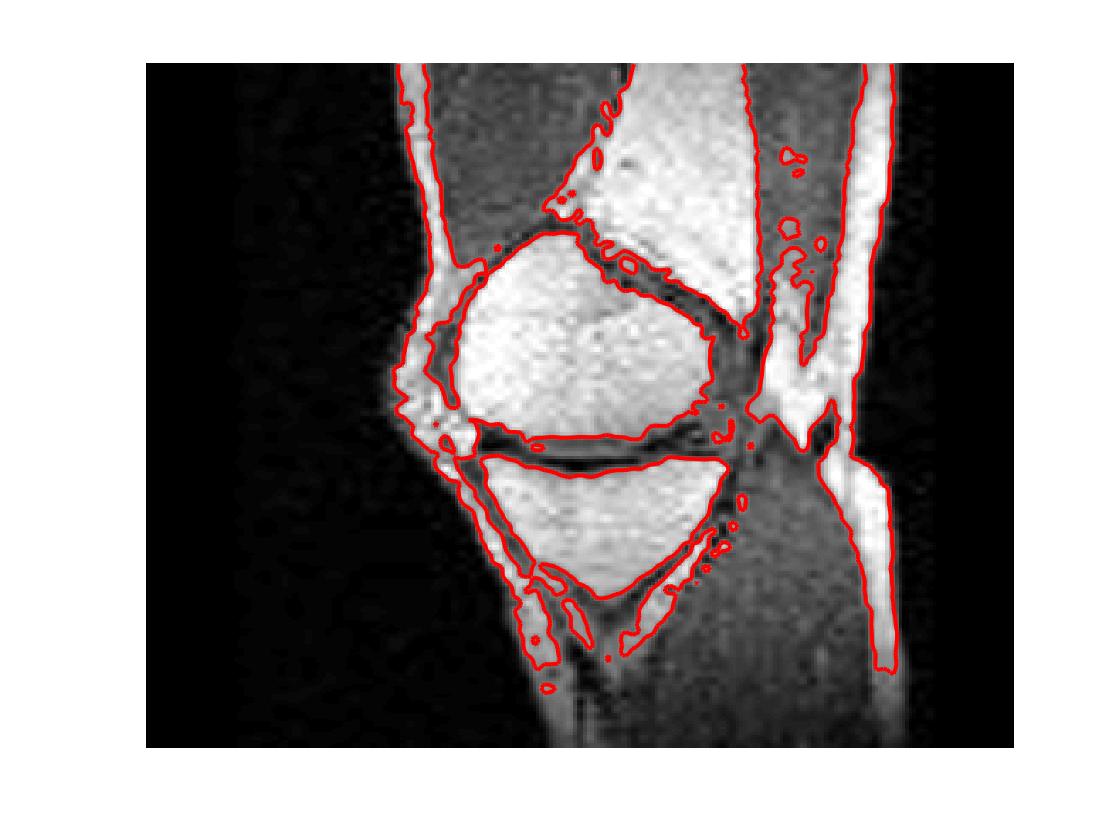

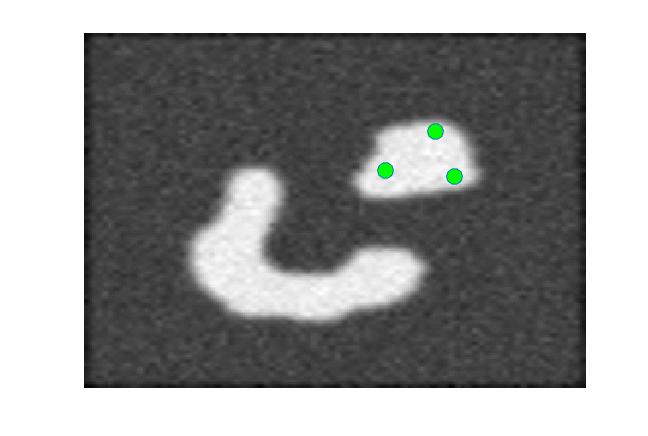

Four sets of test results are shown below. In Test 1 we compare models M1 – M6 to the proposed model M7 for two images which are hard to segment. The first is a CT scan from which we would like to segment the lower portion of the heart, the second is an MRI scan of a knee and we would like to segment the top of the Tibia. See Figure 9 for the test images and the marker sets used in the experiments. In Test 2 we will review the sensitivity of the proposed model to the main parameters. In Test 3 we will give several results achieved by the model using marker and anti-marker sets. In Test 4 we show the initialisation independence and marker independence of the Geodesic Model on real images.

For M7, we denote by the thresholded at some value to define the segmented region. Although the threshold can be chosen arbitrarily in from the work by [10, Thm 1] and [39], we usually take .

Quantitative Comparisons. To measure the quality of a segmentation, we use the Tanimoto Coefficient (TC) (or Jaccard Coefficient [21]) defined by

where GT is the ‘ground truth’ segmentation and is the result from a particular model. This measure takes value one for a segmentation which coincides perfectly with the ground truth and reduces to zero as the quality of the segmentation gets worse. In the other tests, where a ground truth is not available, we use visual plots.

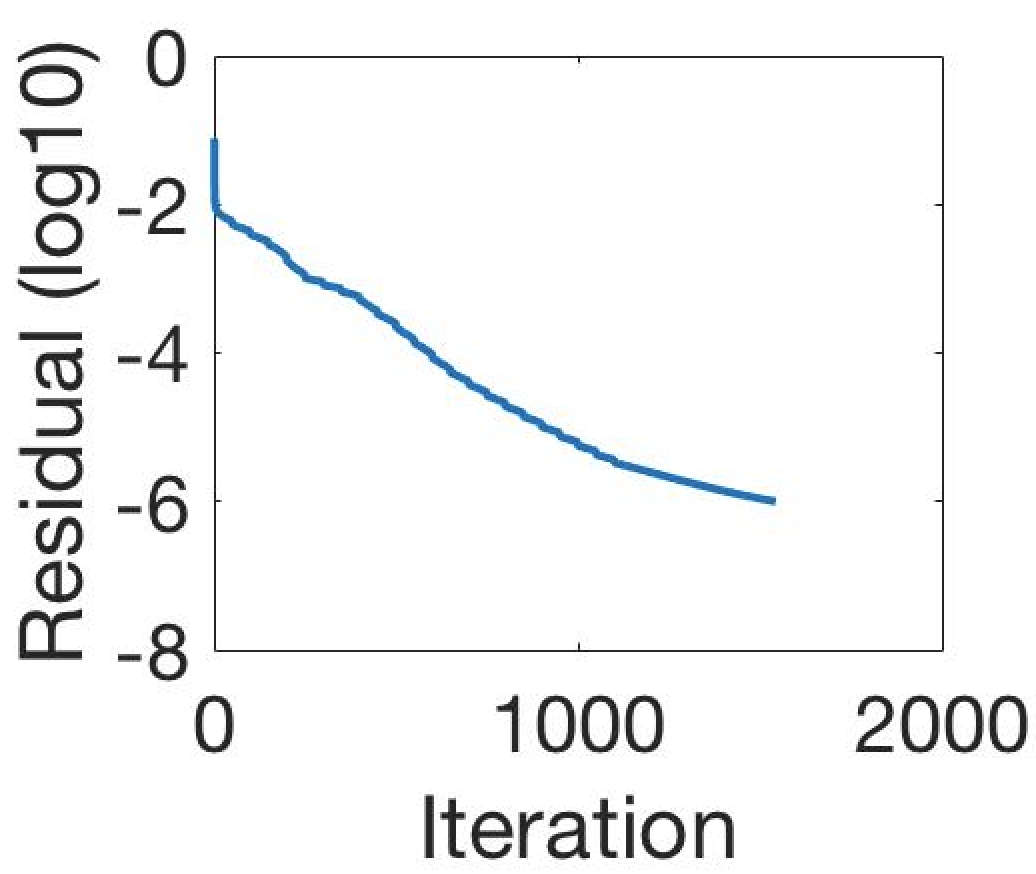

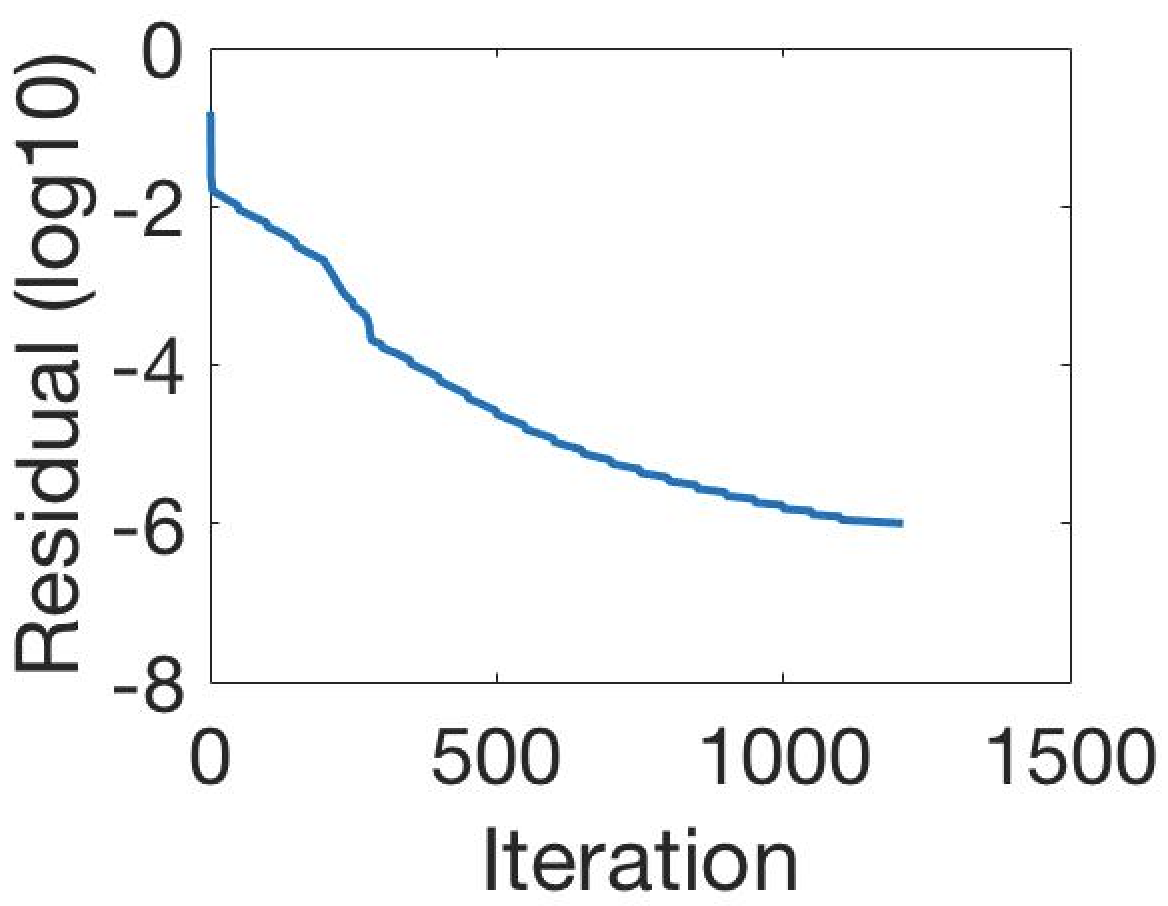

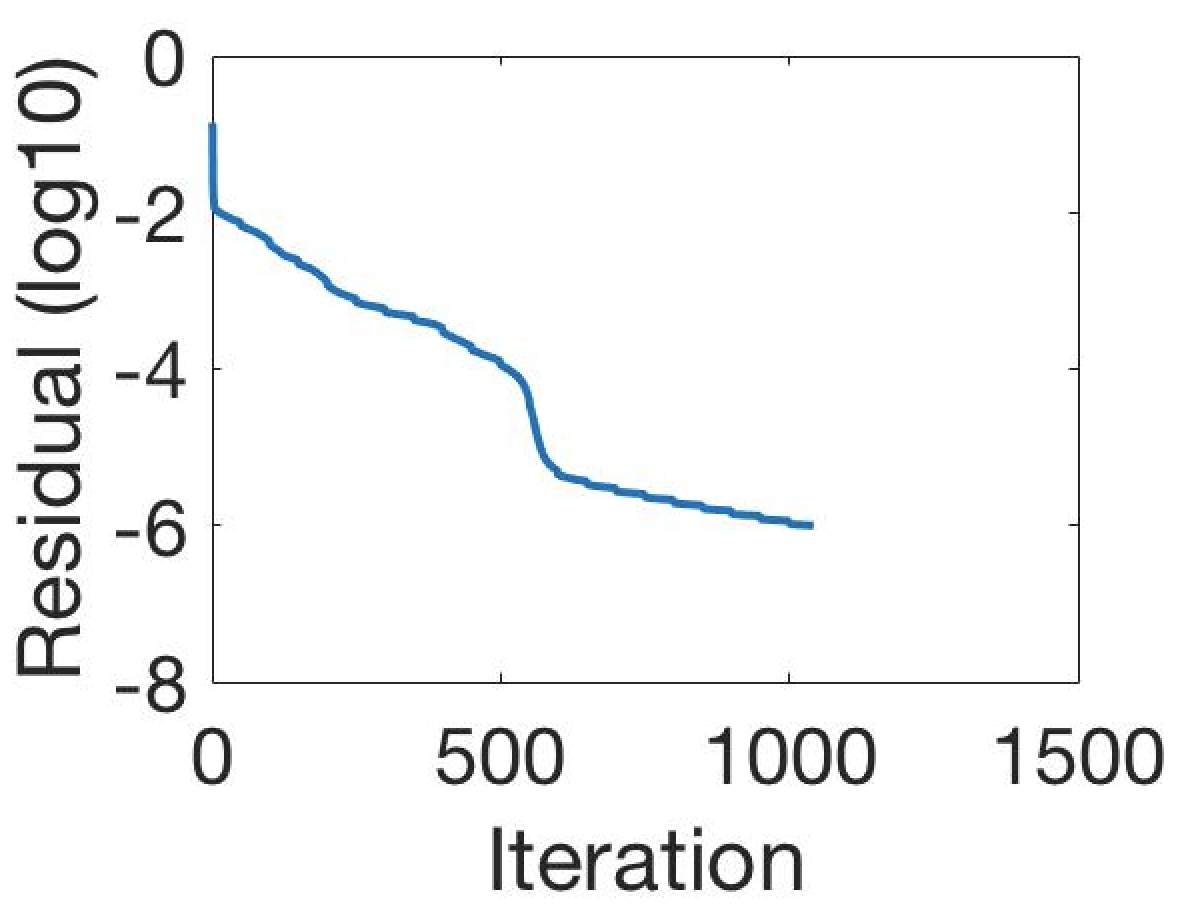

Parameter Choices and Implementation. We set , and vary and . Following [10] we let . To implement the marker points in MATLAB we use roipoly for choosing a small number of points by clicking and also freedraw which allows the user to draw a path of marker points. The stopping criteria used is the dynamic residual falling below a given threshold, i.e. once the iterations stop (we use in the tests shown).

Test 1 – Comparison of models M1 – M7.

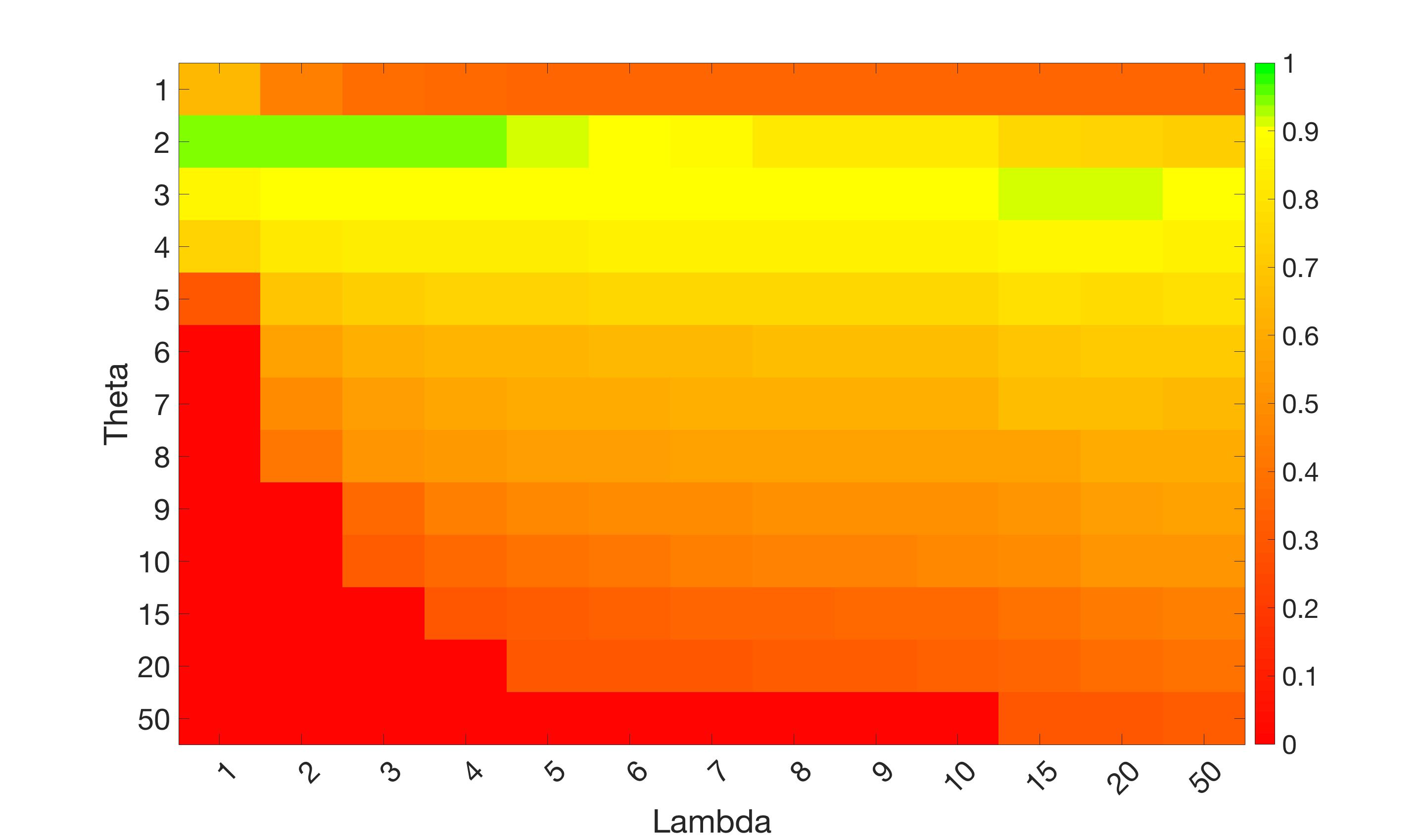

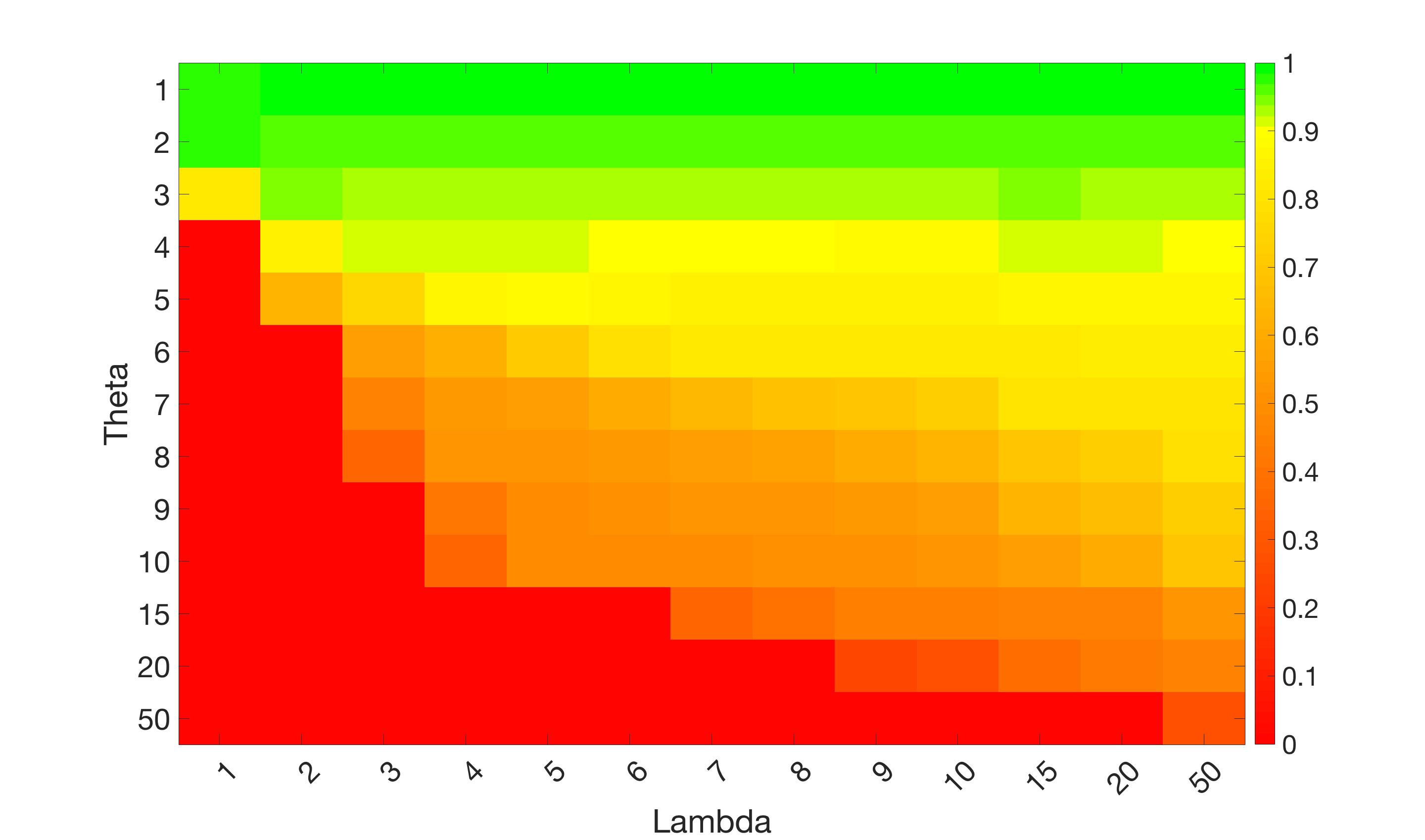

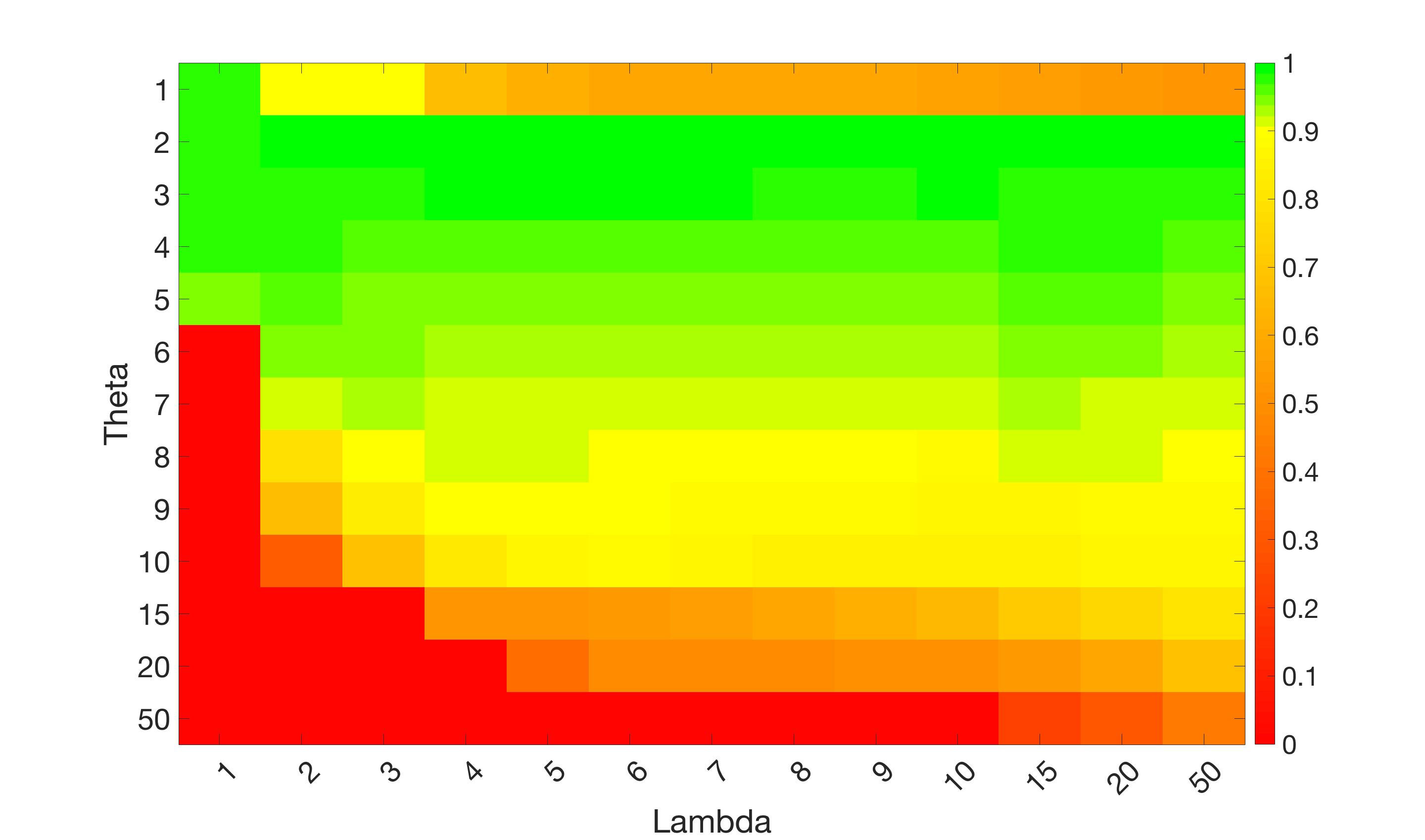

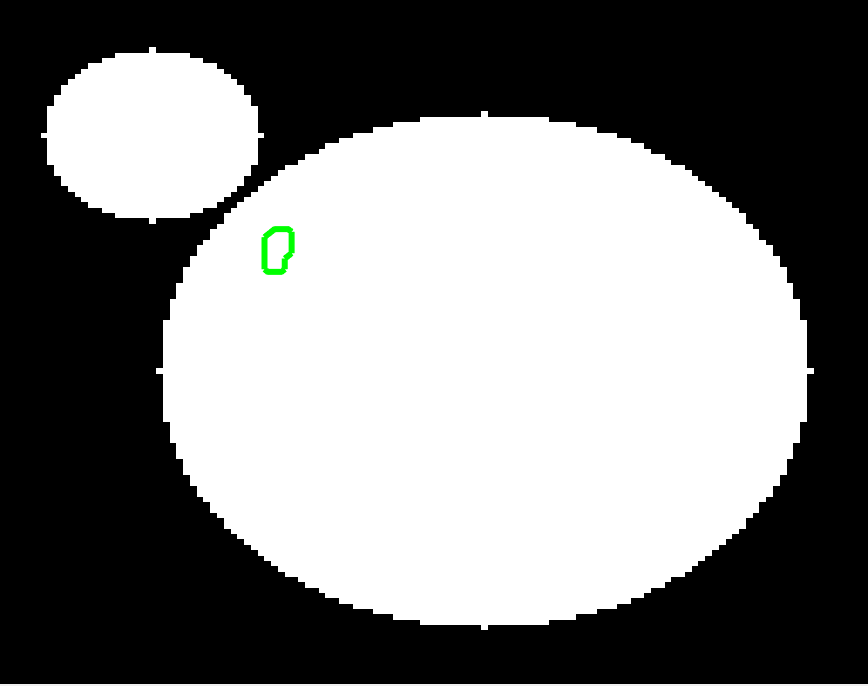

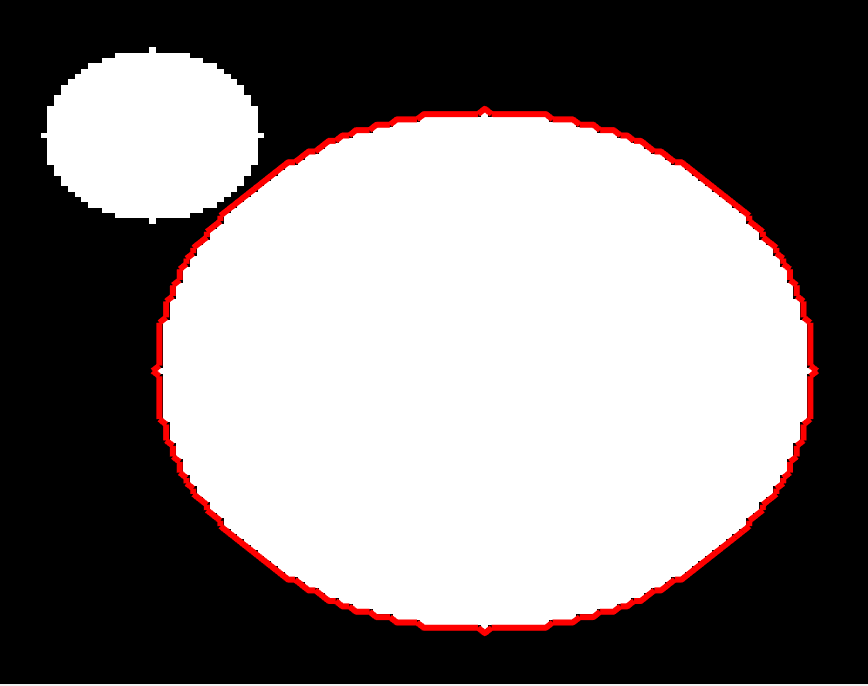

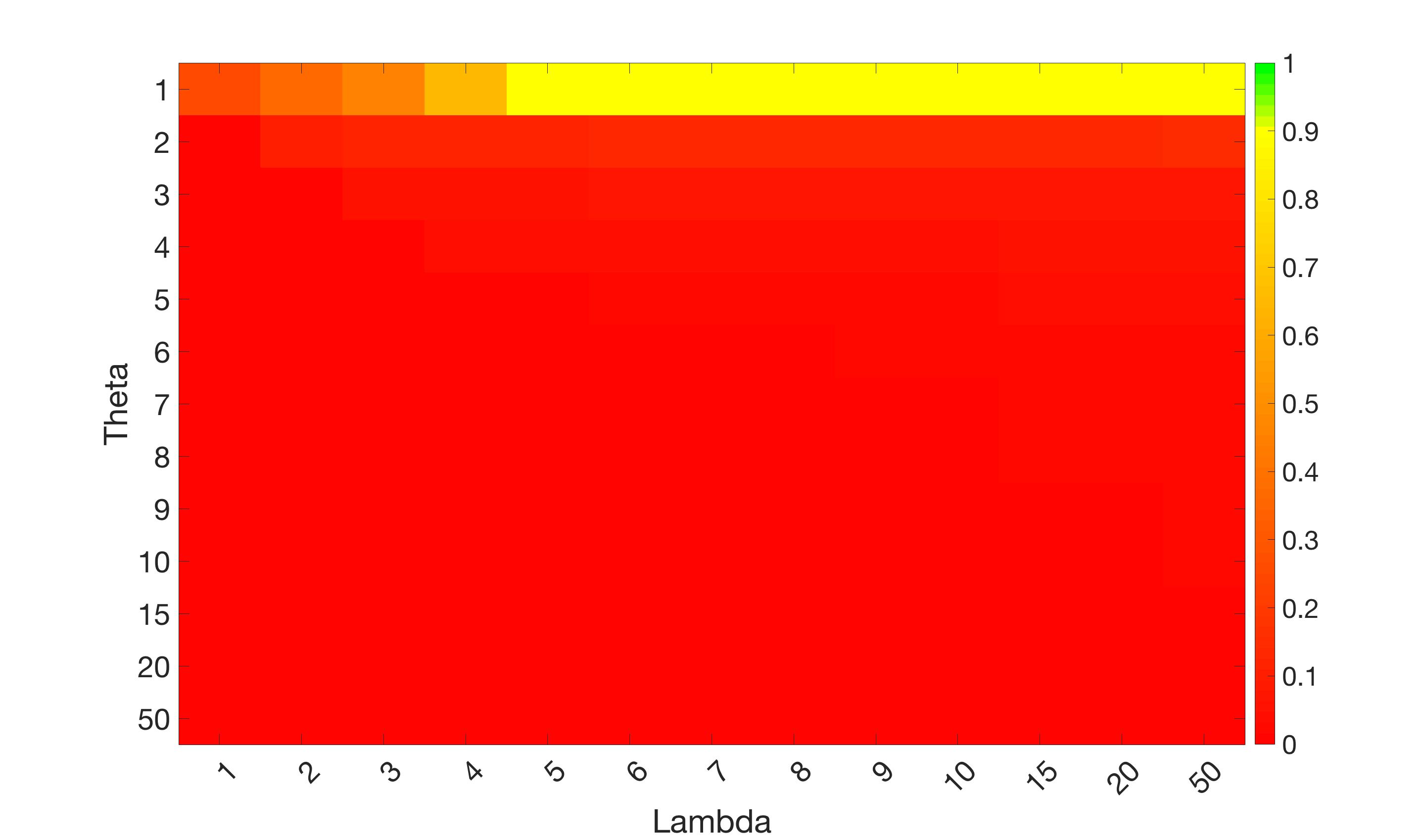

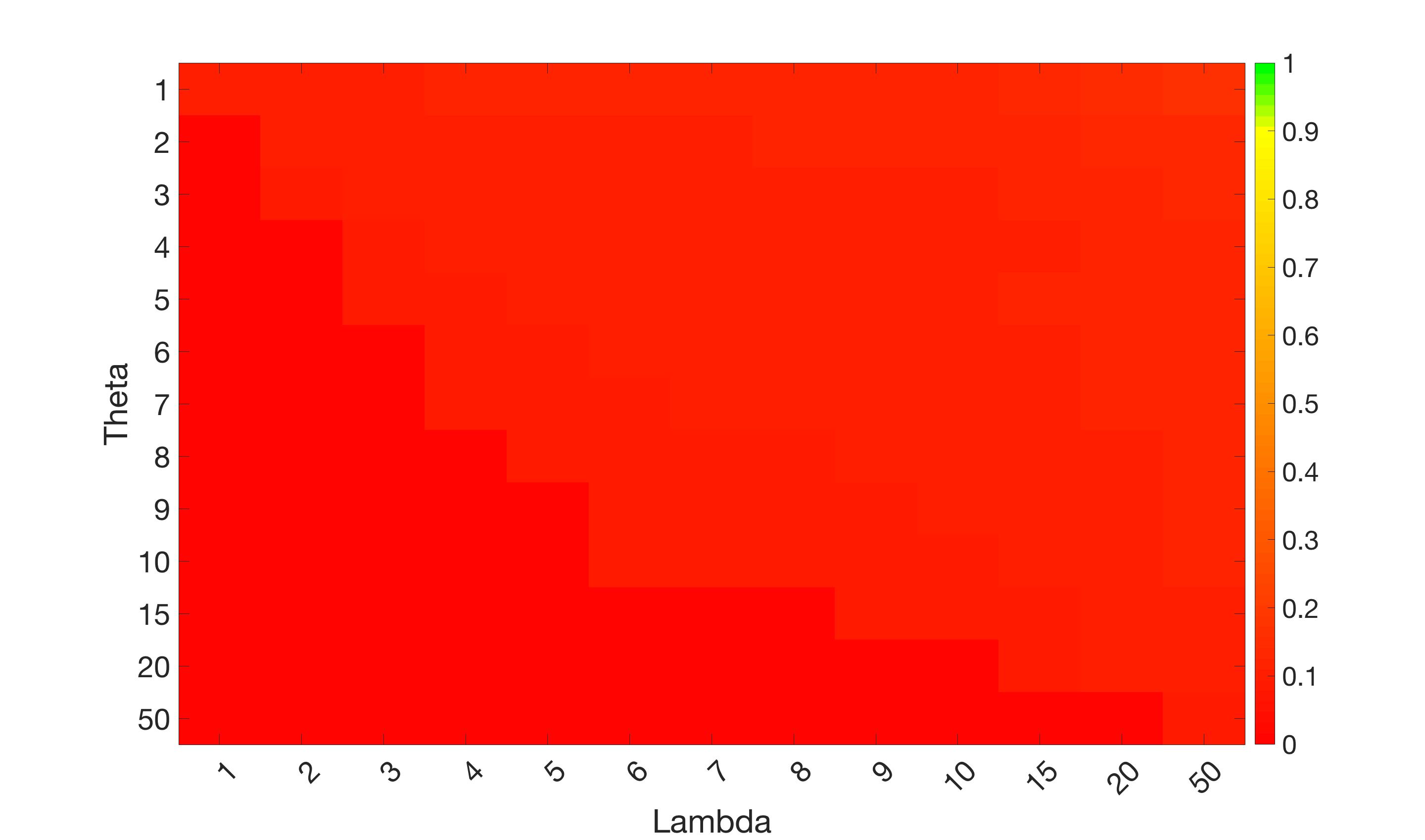

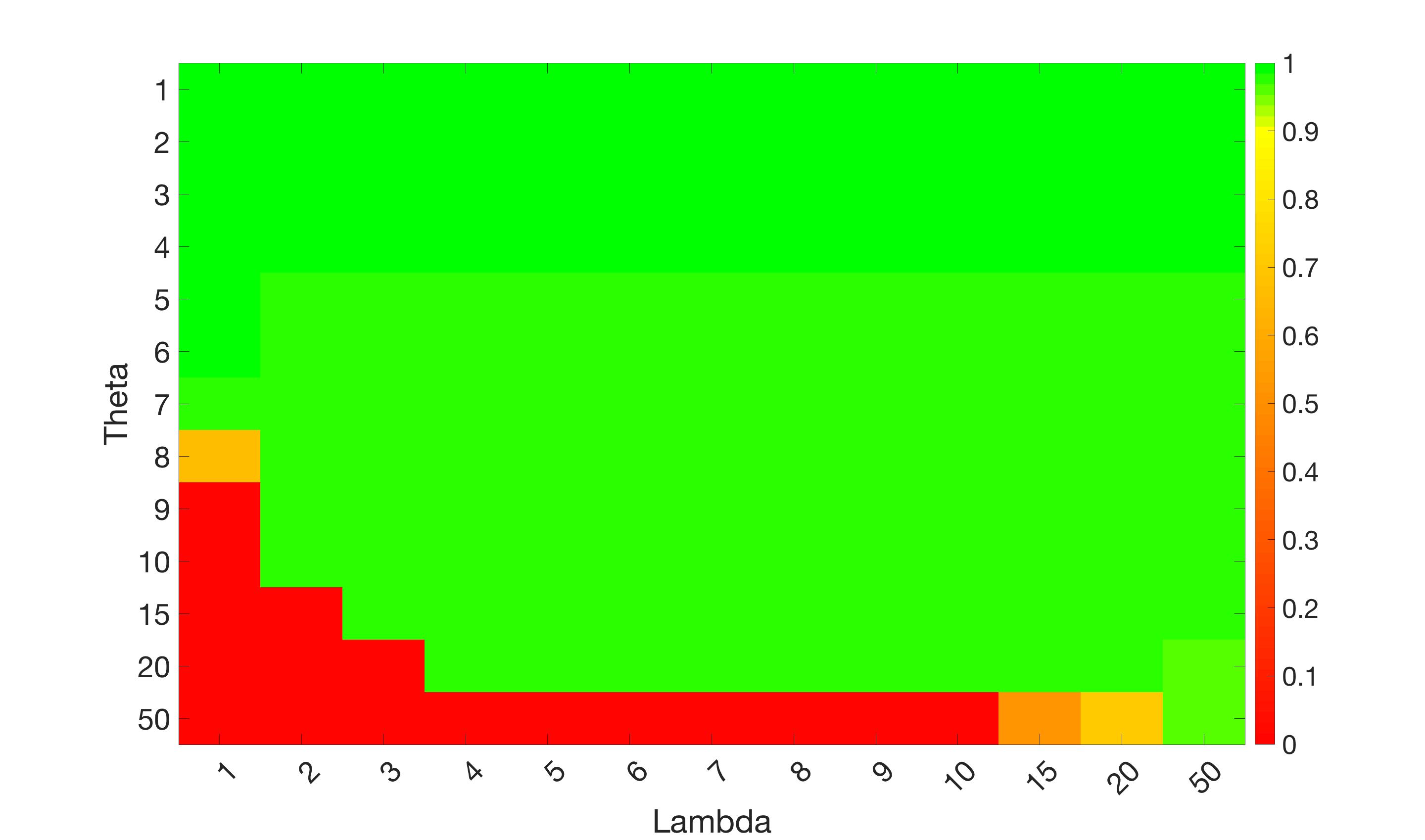

In this test we give the segmentation results for models M1 – M7 for the two challenging test images shown in Figure 9. The marker and anti-marker sets used in the experiments are also shown in this figure. After extensive parameter tuning, the best final segmentation results for each of the models are shown in Figures 10 and 11. For M1 – M4 we obtain incorrect segmentations in both cases. In particular, the results of M2 and M4 are interesting as the former gives poor results for both images, and the latter gives a reasonable result for Test Image 1 and a poor result for Test Image 2. In the case of M2, the regularisation term includes the edge detector and the distance penalty term (see (4)). It is precisely this which permits the poor result in Figures 10(b) and 11(b) as the edge detector is zero along the contour and the fitting terms are satisfied there (both intensity and area constraints) – the distance term is not large enough to counteract the effect of these. In the case of M4, the distance term and edge detector are separated from the regulariser and are used to weight the Chan-Vese fitting terms (see (9)). The poor segmentation in Figure 11(b) is due to the Chan-Vese terms encouraging segmentation of bright objects (in this case), weighting enforces these terms at all edges in the image and near . In experiments, we find that M4 performs well when the object to segment is of approximately the highest or lowest intensity in the image, however when this is not the case, results tend to be poor. We see that, in both cases, models M5 and M6 give much improved results to M2 and M4 (obtained by incorporating the geodesic distance penalty into each). The proposed Geodesic Model M7 gives an accurate segmentation in both cases. It remains to compare M5, M6 and M7. We see that M5 is a non-convex model (and cannot be made convex [39]), therefore results are initialisation dependent. It also requires one more parameter than M6 and M7, and an accurate set to give a reasonable area constraint in (4). These limitations lead us to conclude M6 and M7 are better choices than M5. In the case of M6, it has the same number of parameters as M7 and gives good results. M6 can be viewed as the model M7 with weighted intensity fitting terms (compare (18) and (30)). Experimentally, we find that the same quality of segmentation result can be achieved with both models generally, however M6 is more parameter sensitive than M7. This can be seen in the parameter map in Figure 12 with M7 giving an accurate result for a wider range of parameters than M6. To show the improvement of M7 over previous models, we also give an image in Figure 13 which can be accurately segmented with M7 but the correct result is never achieved with M6 (or M3). Therefore we find that M7 outperforms all other models tested M1 – M6.

Remark 9.

Models M2 – M7 are coded in MATLAB and use exactly the same marker/anti-marker set. For model M1, the software of Nguyen et al. requires marker and anti-marker sets to be input to an interface. These have been drawn as close as possible to match those used in the MATLAB code.

(i) (ii) (iii) (iv)

Test 2 – Test of M7’s sensitivity to changes in its main parameters. In this test we demonstrate that the proposed Geodesic Model is robust to changes in the main parameters. The main parameters in (20) are and . In all tests we set , which is simply a rescaling of the other parameters, and we set . In the first example, in Figure 12, we compare the TC value for various and values for segmentation of a bone in a knee scan. We see that the segmentation is very good for a larger range of and values. For the second example, in Figure 13, we show an image and marker set for which the Spencer-Chen model (M3) and modified Liu et al. model M6 cannot achieve the desired segmentation for any parameter range, but which can be attained for the Geodesic Model for a vast range of parameters. The final example, in Table 1, compares the TC values for various values with fixed parameters and . We use the images and ground truth as shown in Figures 12 and 13: on the synthetic circles image we obtain a perfect segmentation for all values of tested, and in the case of the knee segmentation the results are almost identical for any , above which the quality slowly deteriorates.

| Knee Segmentation (Figure 12) | Circle Segmentation (Figure 13) | |

|---|---|---|

| 0.97287 | 1.00000 | |

| 0.97287 | 1.00000 | |

| 0.97235 | 1.00000 | |

| 0.96562 | 1.00000 | |

| 0.94463 | 1.00000 | |

| 0.90660 | 1.00000 | |

| 0.89573 | 1.00000 | |

| 0.89159 | 1.00000 |

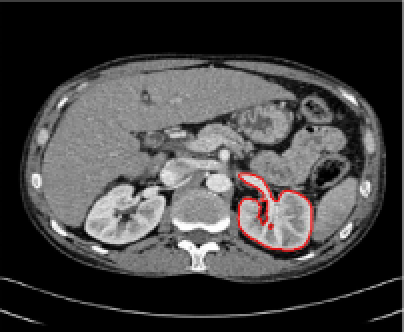

Test 3 – Further Results from the Geodesic Model M7. In this test we give some medical segmentation results obtained using the Geodesic Model M7. The results are shown in Figure 14. In the final two columns we use anti-markers to demonstrate how to overcome blurred edges and low contrast edges in an image. These are challenging and it is pleasing to see the correctly segmented results.

Test 4 – Initialisation and Marker Set Independence. In the first example, in Figure 16, we see how the convex Geodesic Model M7 gives the same segmentation result regardless of initialisation, as expected of a convex model. Hence the model is flexible in implementation. From many experiments it is found that using the polygon formed by the marker points as the initialisation converges to the final solution faster than using an arbitrary initialisation. In the second example, in Figure 16, we show intuitively how Model M7 is robust to the number of markers and the location of the markers within the object to be segmented. The Euclidean distance term, used in the Spencer-Chen model M3, is sensitive to the position and number of marker points, however, regardless of where the markers are chosen, and how many are chosen, the geodesic distance map will be almost identical.

(i) (ii) (iii) (iv) (v)

7. Conclusions

In this paper a new convex selective segmentation model has been proposed, using geodesic distance as a penalty term. This model gives results that are unachievable by alternative selective segmentation models and is also more robust to the parameter choices. Adaptations to the penalty term have been discussed which make it robust to noisy images and blurry edges whilst also penalising objects far from the marker set (in a Euclidean distance sense). A proof for the existence and uniqueness of the viscosity solution to the PDE given by the Euler-Lagrange equation for the model has been given (which applies to an entire class of image segmentation PDEs). Finally we have confirmed the advantages of using the geodesic distance with some experimental results. Future works will look for further extension of selective segmentation to other frameworks such as using high order regularizers [46, 13] where only incomplete theories exist.

Acknowledgements

The first author wishes to thank the UK EPSRC, the Smith Institute for Industrial Mathematics, and the Liverpool Heart and Chest Hospital for supporting the work through an Industrial CASE award. Thanks also must go to Professor Joachim Weickert (Saarland, Germany) for fruitful discussions at the early stages of this work. The second author is grateful to the UK EPSRC for the grant EP/N014499/1.

Appendix A — Proof that Condition (I7) Holds in Theorem 4

Using the assumption in (26), we write

Note that matrix from (29) is a real symmetric matrix and decomposes as with orthonormal and . Successively define and for all , an orthonormal basis, and obtain

Therefore, we can write

We now focus on reformulating the first term, we start by decomposing as follows

so we have where

Using this we compute

Substituting this in the overall trace sum we have

as ( is bounded) for all . Focussing on the first term in this expression we compute

where . This uses inequality (see [15, 16, 17, 18, 24, 35]). We now note that is Lipschitz continuous with Lipschitz constant .

Note. In the Geodesic Model we fix . Therefore, assuming and as Lipschitz requires us to assume that the underlying is a smooth function [16]. Thankfully, is typically provided as a smoothed image after some filtering (e.g. Gaussian smoothing) and we can assume regularity of .

Remark 10.

It is less clear that is Lipschitz, we now prove it explicitly. Firstly, it is relatively easy to prove that

by letting and we find . We now need to prove that is Lipschitz also. Take , then by a remark in [16], we can conclude such that

and so is Lipschitz with constant .

After some computations we obtain

Following the results in [15, 16, 17, 18, 24, 35] we have

so overall

where . Finally, we note that . If we now write

where (we must assume are bounded). Hence we have

and setting , this is a non-decreasing continuous function, maps and as required. We have proven that condition (I7) is satisfied.

References

- [1] R. Adams and L. Bischof. Seeded region growing. IEEE Transactions on Pattern Analysis and Machine Intelligence, 16(6):641–647, 1994.

- [2] N. Badshah and Ke Chen. Multigrid Method for the Chan-Vese Model in Variational Segmentation. Communications in Computational Physics, 4(2):294–316, 2008.

- [3] N. Badshah and Ke Chen. On two multigrid algorithms for modeling variational multiphase image segmentation. IEEE Transactions on Image Processing, 18(5):1097–1106, 2009.

- [4] N. Badshah and Ke Chen. Image selective segmentation under geometrical constraints using an active contour approach. Communications in Computational Physics, 7(4):759–778, 2010.

- [5] N. Badshah, Ke Chen, H. Ali, and G. Murtaza. A coefficient of variation based image selective segmentation model using active contours. East Asian J. Appl. Math., 2:150–169, 2012.

- [6] Xue Bai and Guillermo Sapiro. Geodesic matting: A framework for fast interactive image and video segmentation and matting. International journal of computer vision, 82(2):113–132, 2009.

- [7] Dhruv Batra, Adarsh Kowdle, Devi Parikh, Jiebo Luo, and Tsuhan Chen. Interactive co-segmentation of objects in image collections. Springer Science & Business Media, 2011.

- [8] Vicent Caselles, Ron Kimmel, and Guillermo Sapiro. Geodesic Active Contours. International Journal of Computer Vision, 22(1):61–79, 1997.

- [9] Francine Catté, Pierre-Louis Lions, Jean-Michel Morel, and Tomeu Coll. Image selective smoothing and edge detection by nonlinear diffusion. SIAM Journal on Numerical analysis, 29(1):182–193, 1992.

- [10] Tony F. Chan, Selim Esedoglu, and Mila Nikolova. Algorithms for Finding Global Minimizers of Image Segmentation and Denoising Models. SIAM Journal on Applied Mathematics, 66(5):1632–1648, 2006.

- [11] Tony F. Chan and Luminita A. Vese. Active contours without edges. IEEE Transactions on Image Processing, 10(2):266–277, 2001.

- [12] M. G. Crandall, H. Ishii, and P.-L. Lions. User’s guide to viscosity solutions of second order partial differential equations. ArXiv Mathematics e-prints, June 1992.

- [13] J. Duan, W. Lu, Z. Pan, and L. Bai. New second order mumford-shah model based on -convergence approximation for image processing. Infrared Physics and Technology, 76:641–647, 2016.

- [14] D. G. Gordeziani and G.V. Meladze. Simulation of the third boundary value problem for multidimensional parabolic equations in an arbitrary domain by one-dimensional equations. USSR Computational Mathematics and Mathematical Physics, 14:249–253, 1974.

- [15] Christian Gout and Carole Le Guyader. Segmentation of complex geophysical structures with well data. Computational Geosciences, 10(4):361–372, 2006.

- [16] Christian Gout, Carole Le Guyader, and Luminita Vese. Image segmentation under interpolation conditions. Preprint CAM-IPAM, page 44 pages, 2003.

- [17] Christian Gout, Carole Le Guyader, and Luminita A. Vese. Segmentation under geometrical conditions using geodesic active contours and interpolation using level set methods. Numerical Algorithms, 39(1-3):155–173, 2005.

- [18] Laurence Guillot and Maïtine Bergounioux. Existence and uniqueness results for the gradient vector flow and geodesic active contours mixed model. arXiv preprint math/0702255, 2007.

- [19] Jia He, Chang-Su Kim, and C-C Jay Kuo. Interactive Segmentation Techniques: Algorithms and Performance Evaluation. Springer Science & Business Media, 2013.

- [20] Hitoshi Ishii and Moto-Hiko Sato. Nonlinear oblique derivative problems for singular degenerate parabolic equations on a general domain. Nonlinear Analysis: Theory, Methods and Applications, 57(7):1077 – 1098, 2004.

- [21] Paul Jaccard. The distribution of the flora in the alpine zone.1. New Phytologist, 11(2):37–50, 1912.

- [22] Michael Kass, Andrew Witkin, and Demetri Terzopoulos. Snakes: Active contour models. International Journal of Computer Vision, 1(4):321–331, 1988.

- [23] M. Klodt, F. Steinbrücker, and Daniel Cremers. Moment Constraints in Convex Optimization for Segmentation and Tracking. Advanced Topics in Computer Vision, pages 1–29, 2013.

- [24] Carole Le Guyader. Imagerie Mathématique: segmentation sous contraintes géométriques Théorie et Applications. PhD thesis, INSA de Rouen, 2004.

- [25] Carole Le Guyader and Christian Gout. Geodesic active contour under geometrical conditions: Theory and 3D applications. Numerical Algorithms, 48(1-3):105–133, 2008.

- [26] Chunxiao Liu, Michael Kwok-Po Ng, and Tieyong Zeng. Weighted variational model for selective image segmentation with application to medical images. Pattern Recognition, pages –, 2017.

- [27] T. Lu, P. Neittaanmäki, and X-C. Tai. A parallel splitting up method and its application to navier-stokes equations. Applied Mathematics Letters, 4(2):25 – 29, 1991.

- [28] Calvin R Maurer, Rensheng Qi, and Vijay Raghavan. A linear time algorithm for computing exact euclidean distance transforms of binary images in arbitrary dimensions. IEEE Transactions on Pattern Analysis and Machine Intelligence, 25(2):265–270, 2003.

- [29] D. Mumford and J. Shah. Optimal approximation of piecewise smooth functions ans associated variational problems. Commu. Pure and Applied Mathematics, 42:577–685, 1989.

- [30] Thi Nhat Anh Nguyen, Jianfei Cai, Juyong Zhang, and Jianmin Zheng. Robust interactive image segmentation using convex active contours. IEEE transactions on image processing : a publication of the IEEE Signal Processing Society, 21(8):3734–43, 2012.

- [31] S. Osher and J. Sethian. Fronts propagating with curvature-dependent speed: algorithms based on Hamilton-Jacobi formulations. Journal of Computational Physics, 79(1):12–49, 1988.

- [32] S. M. Patel and J. N. Dharwa. Accurate detection of abnormal tissues of brain MR images using hybrid clustering and deep learning based classifier methods of image segmentation. International Journal of Innovative Research in Science, Engineering and Technology, 6, 2017.

- [33] Pietro Perona and Jitendra Malik. Scale-space and edge detection using anisotropic diffusion. IEEE Transactions on pattern analysis and machine intelligence, 12(7):629–639, 1990.

- [34] Alexis Protiere and Guillermo Sapiro. Interactive image segmentation via adaptive weighted distances. IEEE Transactions on image Processing, 16(4):1046–1057, 2007.

- [35] Lavdie Rada. Variational models and numerical algorithms for selective image segmentation. PhD thesis, University of Liverpool, 2013.

- [36] Lavdie Rada and Ke Chen. A new variational model with dual level set functions for selective segmentation. Communications in Computational Physics, 12(1):261–283, 2012.

- [37] Lavdie Rada and Ke Chen. Improved Selective Segmentation Model Using One Level-Set. Journal of Algorithms and Computational Technology, 7(4):509–540, 2013.

- [38] James A Sethian. A fast marching level set method for monotonically advancing fronts. Proceedings of the National Academy of Sciences, 93(4):1591–1595, 1996.

- [39] Jack Spencer and Ke Chen. A Convex and Selective Variational Model for Image Segmentation. Communications in Mathematical Sciences, 13(6):1453–1472, 2015.

- [40] L. Vincent and P. Soille. Watersheds in digital spaces: an efficient algorithm based on immersion simulations. IEEE Trans. Pattern Analysis Machine Intell., 13(6):583–598, 1991.

- [41] G. T. Wang, W. Q. Li, S. Ourselin, and T. Vercauteren. Automatic brain tumor segmentation using cascaded anisotropic convolutional neural networks. https://arxiv.org/pdf/1709.00382.pdf, preprint, 2017.

- [42] G. T. Wang, W. Q. Li, M. A. Zuluaga, R. Pratt, P. A. Patel, M. Aertsen, T. Doel, A. L. David, J. Deprest, S. Ourselin, and T. Vercauteren. Interactive medical image segmentation using deep learning with image-specific fine-tuning. CoRR, abs/1710.04043, 2017.

- [43] J. Weickert, B. M. Ter Haar Romeny, and M. A. Viergever. Efficient and reliable schemes for nonlinear diffusion filtering. IEEE Transactions on Image Processing, 7(3):398–410, 1998.

- [44] J. P. Zhang, Ke Chen, and B. Yu. A 3D multi-grid algorithm for the CV model of variational image segmentation. International Journal of Computer Mathematics, 89(2):160–189, 2012.

- [45] Feng Zhao and Xianghua Xie. An overview of interactive medical image segmentation. Annals of the BMVA, 2013(7):1–22, 2013.

- [46] W. Zhu, X.-C. Tai, and T. F. Chan. Image segmentation using euler’s elastica as the regularization. Journal of Scientific Computing, 57:414–438, 2013.