A correlation structure for the analysis of Gaussian and non-Gaussian responses in crossover experimental designs with repeated measures

Abstract

In this study, we propose a family of correlation structures for crossover designs with repeated measures for both, Gaussian and non-Gaussian responses using generalized estimating equations (GEE). The structure considers two matrices: one that models between-period correlation and another one that models within-period correlation. The overall correlation matrix, which is used to build the GEE, corresponds to the Kronecker between these matrices. A procedure to estimate the parameters of the correlation matrix is proposed, its statistical properties are studied and a comparison with standard models using a single correlation matrix is carried out. A simulation study showed a superior performance of the proposed structure in terms of the quasi-likelihood criterion, efficiency, and the capacity to explain complex correlation phenomena/patterns in longitudinal data from crossover designs

keywords:

Carry-over effect; Generalized Estimating Equations; Kronecker correlation; Overdispersed Count Data1 Introduction

Experimental designs are a very useful tool to analyze the effects of treatments applied to a set of experimental units (Hinkelmann and Kempthorne, 2005). In this kind of studies, it is frequent that experimental units are observed at a unique time point, this is known as a transversal experiment; notwithstanding, sometimes experimental units are observed several times during the study, keeping the same treatment, giving rise to longitudinal studies (Davis, 2002).

There are situations in which an experimental unit receives all treatments, each one in a different period, this induces a setup where a sequence of treatments is applied to each unit. This kind of designs is known as crossover design (Jones and Kenward, 2015).

In the scope of crossover designs, published results focus on the case of a normally distributed (Jones and Kenward, 2015) or binary (two possible outputs, namely success or failure) response variable. The latter case has been treated by means of generalized linear models for binary data (Ratkowsky et al. (1992), Curtin (2017) and Li et al. (2018)).

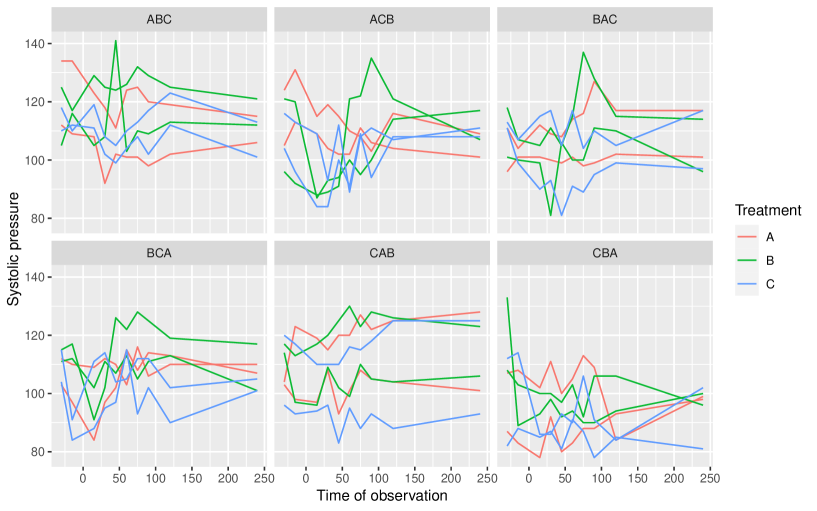

Jones and Kenward (2015, pag 204) described a crossover experiment with three treatments to control arterial pressure: treatment A is a placebo, treatments B and C are 20 and 40 mg doses of a test drug. Thus, there were six three-period sequences: ABC, ACB, BCA, BAC, CAB, and CBA, each one of them was applied to two individuals. In each period, 10 consecutive measurements of diastolic arterial pressure were taken: 30 and 15 minutes before, and 15, 30, 45, 60, 75, 90, 120 and 240 minutes after the administration of the treatment, as shown in Table 1.

| Sequence | Period 1 | Period 2 | Period 3 | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

|

||||||||||

| ⋮ | ⋮ | ⋮ | ⋮ | ||||||||||

|

|

|

|

. A second experiment carried out by researchers of the Department of Animal Sciences of Universidad Nacional de Colombia, was focused on inferring the effects of two diets, A (grass) and B (a mixture of grass and paper recycling waste) on dairy cattle performance. Eight cows split into two groups of four received the diets in such a way that the first group was fed diet A from day 1 to day 42, and diet B from day 43 to day 84, while the second group was fed diet B during the first period and diet A during the second one. Measurements of milk yield and quality, live weight and body condition score were taken at 1, 14, 28, 42, 56, 70 and 84 days (Jaime, 2019); the design structure is shown in Table2.

| Sequence | Period 1 | Period 2 | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

||||||||||||

|

|

|

To analyze this sort of designs, Basu and Santra (2010), Josephy et al. (2015), Hao et al. (2015), Lui (2015), Rosenkranz (2015), Grayling et al. (2018), Madeyski and Kitchenham (2018) and Kitchenham et al. (2018) used mixed models for crossover designs with Gaussian response and a single observation per period including additive carryover effects. Biabani et al. (2018) presented a review on crossover designs in neuroscience, all papers considered Gaussian responses and did not account for carryover effects due to the presence of a washout period, even when it lasted a few days. On the other hand, Oh et al. (2003), Curtin (2017) and Li et al. (2018) used generalized linear models for crossover designs of two periods and two sequences of two treatments with a continuous (normal or gamma) or binary response variable and each experimental unit observed once per period. They used generalized estimating equations (GEE) to estimate model parameters. A Bayesian formulation of generalized linear models where the response was the survival time with a single observation per period was presented in Shkedy et al. (2005) and Liu and Li (2016).

Moreover, Dubois et al. (2011), Diaz et al. (2013) and Forbes et al. (2015) used Gaussian mixed models to analyze records from crossover designs with repeated measures using the area under curve as a strategy to obtain a single observation per period and one experimental unit, and they did not account for carryover effects.

In all aforementioned approaches, it is assumed that the crossover design considers a washout period between treatments and that the response variable of each individual is observed once per period. These assumptions are not fulfilled in the experiments described above because of two reasons: i) in patients with arterial hypertension the treatment cannot be stopped, while in the case of dairy cattle, cows must be fed every day; moreover, in both studies, the placebo is part of the treatment design, so considering it as a washout period would modify the experiment, ii) in each treatment, experimental units were observed several times per period. Therefore, there is a necessity for developing a consistent methodology to analyze this sort of experiments. In this study, we develop a methodology to analyze data from crossover designs with repeated measures using GEE and considering two correlation structures, between and within periods, which are combined via a Kronecker product to yield the overall correlation matrix. These approach was found to improve the estimation of parameters of interest with respect to methods based on GEE that consider a single correlation structure for all the observations of a subject; in addition, this method accounts for carryover effects, hence, it does not need a washout period.

In the second section, we present some background and define the methodology, in the third section we propose a method to estimate the correlation matrix, and provide theoretical results that support our estimation and modelling approaches. In the fourth section, we present a simulation study showing some advantages of our model as compared to the model with a single correlation structure for each period. Lastly, in the fifth section, the methodology is applied to real data from the aforementioned arterial pressure and dairy cattle experiments.

2 Crossover designs with repeated measures

A crossover design has the following components (Patterson, 1951): i) Sequences which are each one of the distinct treatment combinations to be sequentially and randomly applied to the experimental units, ii) Treatments, which are randomly applied to each experimental unit within each sequence, iii) Periods, the time intervals in which the treatments that make up a sequence are applied, usually, each period is the same for all sequences and, consequently, the number of periods is equal to the number of elements of each sequence, iv) Experimental unit, the subjects or elements that receive the treatments, in each sequence there are experimental units, so the total number of experimental units in the study is denoted by .

Another important feature of the crossover design is the existence of carryover effects, defined in Vegas et al. (2016) as follows: persistency of the effect of a treatment over those that will be applied later, i.e., the treatment is applied in a given period, but its effect still affects the response in later periods when other treatments are applied, this residual effect is known as carryover effect. When the effect persists after one period, it is known as first order carryover effect, and when it persists after two periods, it is called a second order effect and so on (Patterson, 1951).

In a crossover design with sequences of length (the number of elements comprising each sequence) , is defined as the th respose of the th experimental unit at th period. Let be the number of observations of the th experimental unit during the th period. Then, vector is defined as:

| (1) |

Also, vector is defined as:

| (2) |

which has dimension , where is the number of periods.

According to these definitions, the ideas presented when discussing the arterial pressure and dairy cattle experiments, and assuming that has a distribution in the exponential family, we propose the following model based on GEE:

| (3) |

where is the link function related to the exponential family, is the vector of the design matrix corresponding to th response from the th experimental unit at the th period, is the vectors of fixed effects, is the overall mean, is the effect of the th sequence, is the effect of the th period, is the effect of treatment applied in the period to th experimental unit (), is the first order carryover effect of treatment, is the carry over effect of order of treatment. The carryover effects are considered in the two experiments discussed above because there was not a washout period. Due to the fact that observations of the same experimental unit are correlated, parameter estimation is carried out using GEE (Liang and Zeger, 1986). To this end, the following system of equations has to be solved:

| (4) |

where , , is the th column of the design matrix of the ith experimental unit, and the covariance component is defined as:

| (5) |

where is a diagonal matrix, is the variance function corresponding to the exponential family, and is the correlation matrix related to the covariance matrix as follows:

3 Kronecker correlation matrix

Due to the repeated measures structure in the crossover design, we propose a correlation structure of the form:

| (6) |

where is the within-period correlation matrix, is the between-period correlation matrix, and represents the Kronecker product (Harville, 1997). To estimate this matrix, we propose the following modified GEE to estimate is:

where is defined as in Equation (5), , is the th column of the design matrix of the th experimental unit.

The estimating equation for is:

| (7) |

where is a diagonal matrix, ,

, and is the th observed Pearson residual defined as:

| (8) |

and . On the other hand, we propose the following estimator for :

| (9) |

where is the vector of Pearson residuals for the th experminetal unit at the th period, and is the average (over i), , with

Theorem 3.1.

The estimator of given by is asymptotically unbiased and consistent.

Proof.

See appendix A ∎

The form of in (6) is given by correlation structures such as: independence, autoregressive, exchangeable, etc (Davis, 2002).

The correlation structures are compared via the quasi-likelihood information criterion (, Pan (2001)), which is defined as:

| (10) |

where is the estimated expected value of observation under the model assuming the correlation matrix , is the estimated covariance matrix of under independence, and is the covariance matrix of under the model with correlation matrix defined as in (6).

4 Simulation Study

A simulation study was carried out to evaluate the proposed model. The setup was a crossover model with three periods , five repetitions and two sequences within each period. The number of replicates per sequence varied from 2 to 100, and for each replicate, the simulation was ran 100 times. The following parameters are used in the simulation:

| (11) | |||

Four GEE models were fit per replicate, all had the same linear predictor and differed in the correlation structure: 1) independence, 2) first order autoregressive, 3) exchangeable, and 4) the Kronecker matrix proposed in this paper. Both, the simulations and fitted model were carried out using the libraries gee (Carey., 2019) and geeM (McDaniel et al., 2013) of R (R Core Team, 2022)

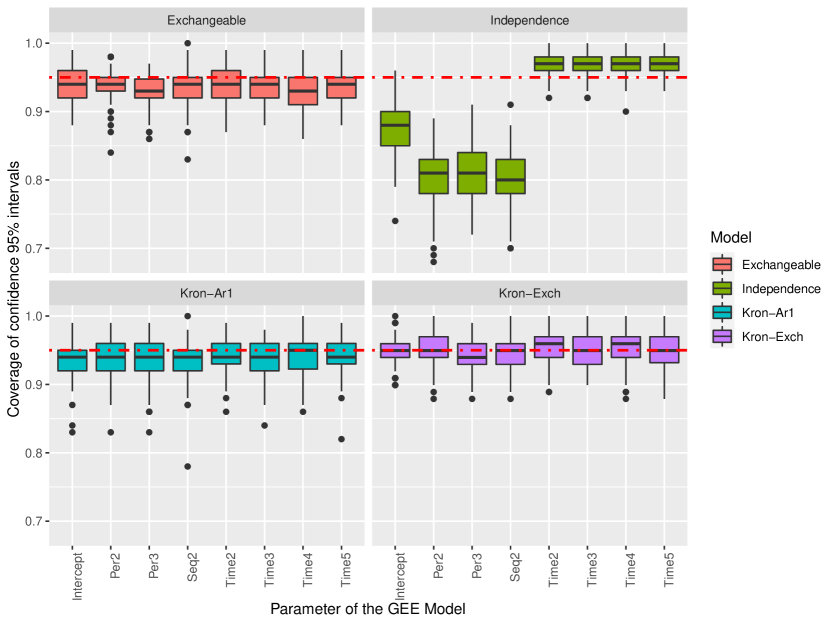

Figure 1 shows the proportion of times that each model had the smallest QIC relative to the 100 simulations for each number of replications per sequence (the mean and 95% confidence interval are shown). Moreover, an analysis of parameter estimation under each one of these models using the simulated data was performed; Figure 2 presents 95% confidence intervals for the location parameters under each model. In the simulation study it is observed that most of the times the lowest is obtained for the model with the true correlation structure. This suggets that the proposed estimation method along with theorem 3.1, which will allow to detect kronecker correlation structures in the data of the crossover design. In addition, this behavior is maintained even when the number of replicas within the design is low.

Regarding the coverage of confidence intervals for each parameter, in Figure 2 it can be noticed that the model with independence structure has a very low coverage for period and sequence effects; this will not allow a correct inference about the parameters of interest in the design. The other three models had more appropriate coverages, but the model with the within-period xchangeable matrix (that is, the true structure) exhibited values closer to 95%.

As to the time effects, corresponding coverages were close to 95% in all four models; however, a subcoverage was observed for the model with a within-period exchangeable structure and an overcoverage for the independence model. The true model showed coverages corresponding to 95%.

5 Application

The systolic blood pressure data is presented in Figure 3. The model used to analyze this experiment had a linear predictor considering fixed effects of treatment, period, baseline (the two measurements taken before applying the treatment), first and second order linear and quadratic carryover effects as a function of time. On the other hand, the model use to analyze data from the second experiment had a linear predictor considering fixed effects of baseline, treatment, period, and first order linear carryover effects as a function of time (quadratic effects were not considered because there were three observations within each period). The structures of the working correlation matrices were: i) independence, ii) first order autoregressive, and iii) fitted individually and were compared via the to determine the one exhibiting the best fit to each dataset. Table 3 presents the for each correlation structure in both datasets.

| Matriz | presión arterial | vacas |

|---|---|---|

| 46086 | 200.65 | |

| 44166 | 139.70 | |

| 45059 | 138.85 | |

| 44923 | 143.85 | |

| 44158 | 138.27 | |

| 43400 | 180.34 | |

| 43393 | 138.22 |

| Parameter | Estimate | Robust SE | Robust | -value |

|---|---|---|---|---|

| Intercept | 106.2917 | 3.4327 | 30.9641 | 0.001 |

| Base | 8.9587 | 1.9515 | 4.5908 | 0.001 |

| Time | 1.0754 | 0.4209 | 2.5548 | 0.0106 |

| Period 2 | 5.9752 | 3.0859 | 1.9363 | 0.0528 |

| Period 3 | 10.4117 | 5.1565 | 2.0191 | 0.0435 |

| Treatment B | -1.3032 | 2.0353 | -0.6403 | 0.5220 |

| Treatment C | -10.8840 | 3.4096 | -3.1922 | 0.0014 |

| Carry-over B | -7.2221 | 4.0008 | -1.8052 | 0.0710 |

| Carry-over C | -12.1513 | 5.6162 | -2.1636 | 0.0305 |

| Time2 | -0.0526 | 0.0202 | -2.6091 | 0.0091 |

| Time Carry over B | 0.9191 | 0.7102 | 1.2940 | 0.1957 |

| Time Carry over C | -0.1007 | 0.5789 | -0.1739 | 0.8619 |

| Time Carry over B | -0.0459 | 0.0405 | -1.1325 | 0.2574 |

| Time Carry over C | 0.0209 | 0.0410 | 0.5097 | 0.6103 |

| Parameter | Estimate | Robust SE | Robust | -value |

|---|---|---|---|---|

| Intercept | 19.5505 | 1.0248 | 10.2950 | 0.001 |

| Base | 0.5850 | 0.0629 | 9.2961 | 0.001 |

| Time | -0.6293 | 0.0949 | -6.6321 | 0.001 |

| Period 2 | 2.3908 | 1.0395 | 2.3000 | 0.0214 |

| Treatment A | 1.1217 | 0.8341 | 1.3448 | 0.1787 |

| Carry-over A | -4.2480 | 1.4128 | -3.0069 | 0.0026 |

| Time2 | 0.0117 | 0.0018 | 6.4550 | 0.001 |

| Time Carry over A | -0.0702 | 0.0260 | -2.6949 | 0.0070 |

According to 3 the and correlation matrices had the smallest QIC values for the arterial pressure and dairy cattle experiments, respectively. Hence, correlation matrices having the Kronecker structure had the best fit in the two datasets. The correlation matrices estimated using equations (7) and (9) for the arterial pressure data are, respectively:

| (12) | ||||

| (13) |

and for the dairy cattle dataset:

| (14) | ||||

| (15) |

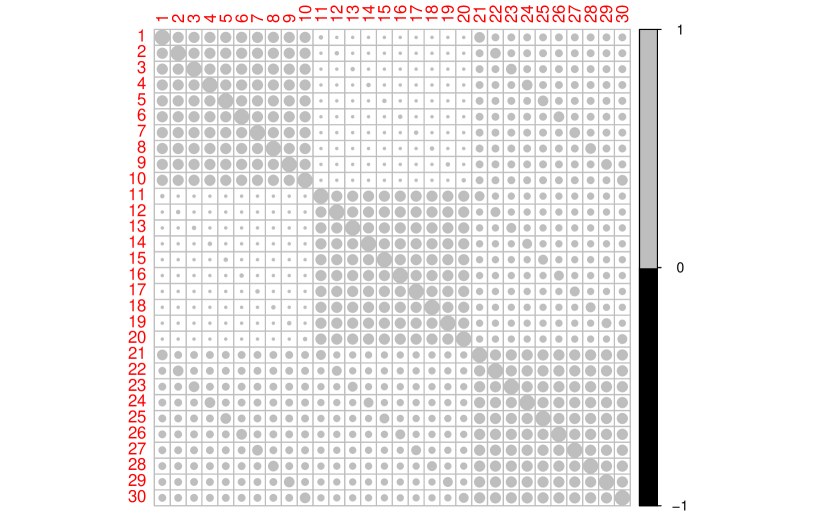

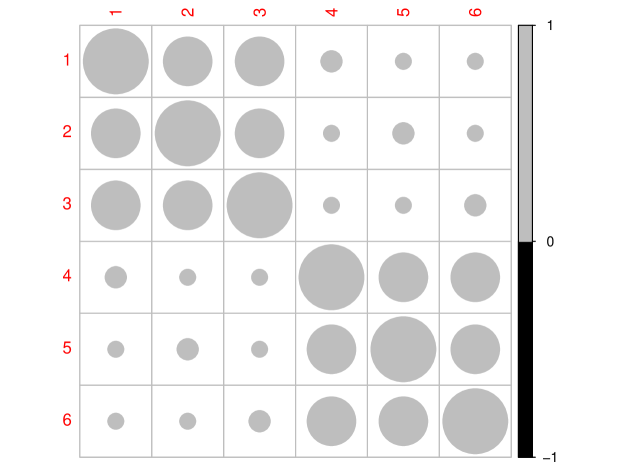

Figure 4 shows the matrix computed as the Kronecker product of matrices in equations (12) and (13). Notice the positive correlation between periods 1 and 3, a small positive correlation between periods 1 and 2. On the other hand, the matrix obtained as the Kronecker product of matrices in equations (14) and (15) is shown in Figure 5 where a positive but small correlation between periods 1 and 2 can be seen.

The matrices with the Kronecker structure are used to estimate the location parameters of the linear model (since these had the smallest QIC) yielding Table 4 4 for the arterial pressure data and Table 5 for the dairy cattle data. Each table shows the estimates, their standard errors (computed using the “sandwich” variance estimator (Hardin and Hilbe, 2003), the z statistic and the p-value corresponding to the null hypothesis

Table 4 shows significant effects of baseline, treatment C (with respect to A), time (linear and quadratic), period, and carryover effect of treatment C. The interaction between carryover effects and time was not significant, which means that the effect of the previous treatment remains the same during the rest of the experiment. Moreover, Table 5 shows significant effects of baseline (milk yield before the beginning of the experiment), linear (negative) and quadratic (positive) regression coefficients of time, which means that milk yield shows a convex behavior during the experiment. There was no significant treatment effect, but there was a significant carryover effect of the paper-based diet over the grass-based diet, i.e., the cows being fed paper took a time to recover after changing to the grass-based diet.

6 Conclusions

Defining the correlation matrix structure is a highly relevant decision when using GEE, a proper specification of correlation structures in longitudinal data analysis improves estimation efficiency, leading to more reliable statistical inferences (Hin and Wang, 2009) . Hence, in this paper we develop a family of correlation structures that combine some of the classical structures used in longitudinal data analysis with an additional matrix that allows more flexibility in the overall correlation structure by adding a few parameters. Moreover, we provide an explicit estimation method of these parameters which features some sound statistical properties.

The theoretical results supporting some asymptotic properties of the proposed estimators are illustrated through the simulation study where a gain in goodness of fit was observed across the different simulation scenarios. The QIC was able to select the correct model, which had the proposed correlation structure. In addition, the confidence intervals built from the GEE showed a coverage that matched the nominal value. The estimation by intervals for each of the parameters presents coverage close to 95%, which shows a correct theoretical specification of each univariate interval.

As to the real data analysis, the results for the arterial pressure data showed the importance of accounting for carryover effects as they are useful for correctly estimating and interpreting the treatment affects across the time. If not included in the model, these residual effects may induce confusion problems. On the other hand, in the dairy cattle data, significant carryover effects were detected as well. Regarding the estimates of correlation matrices in both datasets, the QIC selected the proposed correlation matrix. Thus, the theoretical and empirical results from real and simulated data analyses suggest that the proposed methodology is promising and may be applied to perform better inferences from data obtained under crossover designs without washout periods and a repeated measures structure.

References

- Basu and Santra (2010) S. Basu and S. Santra. A joint model for incomplete data in crossover trials. Journal of Statistical Planning and Inference, 140(10):2839–2845, 2010.

- Biabani et al. (2018) M. Biabani, M. Farrell, M. Zoghi, G. Egan, and S. Jaberzadeh. Crossover design in transcranial direct current stimulation studies on motor learning: potential pitfalls and difficulties in interpretation of findings. Reviews in the Neurosciences, 29(4):463–473, 2018.

- Carey. (2019) V. J. Carey. gee: Generalized Estimation Equation Solver, 2019. URL https://CRAN.R-project.org/package=gee. R package version 4.13-20.

- Cordeiro (2004) G. M. Cordeiro. On pearson’s residuals in generalized linear models. Statistics & probability letters, 66(3):213–219, 2004.

- Cordeiro and McCullagh (1991) G. M. Cordeiro and P. McCullagh. Bias correction in generalized linear models. Journal of the Royal Statistical Society: Series B (Methodological), 53(3):629–643, 1991.

- Cox and Snell (1968) D. R. Cox and E. J. Snell. A general definition of residuals. Journal of the Royal Statistical Society: Series B (Methodological), 30(2):248–265, 1968.

- Curtin (2017) F. Curtin. Meta-analysis combining parallel and crossover trials using generalised estimating equation method. Research Synthesis Methods, 8(3):312–320, 2017.

- Davis (2002) C. S. Davis. Statistical Methods for the Analysis of Repeated Measurements. Springer, San Diego, 2002.

- Diaz et al. (2013) F. J. Diaz, M. J. Berg, R. Krebill, T. Welty, B. E. Gidal, R. Alloway, and M. Privitera. Random-effects linear modeling and sample size tables for two special crossover designs of average bioequivalence studies: the four-period, two-sequence, two-formulation and six-period, three-sequence, three-formulation designs. Clinical pharmacokinetics, 52(12):1033–1043, 2013.

- Dubois et al. (2011) A. Dubois, M. Lavielle, S. Gsteiger, E. Pigeolet, and F. Mentré. Model-based analyses of bioequivalence crossover trials using the stochastic approximation expectation maximisation algorithm. Statistics in medicine, 30(21):2582–2600, 2011.

- Forbes et al. (2015) A. B. Forbes, M. Akram, D. Pilcher, J. Cooper, and R. Bellomo. Cluster randomised crossover trials with binary data and unbalanced cluster sizes: Application to studies of near-universal interventions in intensive care. Clinical Trials, 12(1):34–44, 2015.

- Grayling et al. (2018) M. J. Grayling, A. P. Mander, and J. M. Wason. Blinded and unblinded sample size reestimation in crossover trials balanced for period. Biometrical Journal, 60(5):917–933, 2018.

- Hao et al. (2015) C. Hao, D. von Rosen, and T. von Rosen. Explicit influence analysis in two-treatment balanced crossover models. Mathematical Methods of Statistics, 24(1):16–36, 2015.

- Hardin and Hilbe (2003) J. W. Hardin and J. Hilbe. Generalized Estimating Equations. Chapman & Hall, Boca Raton, 2003.

- Harville (1997) D. A. Harville. Matrix algebra from a statistician’s perspective, volume 1. Springer, 1997.

- Hin and Wang (2009) L.-Y. Hin and Y.-G. Wang. Working-correlation-structure identification in generalized estimating equations. Statistics in medicine, 28(4):642–658, 2009.

- Hinkelmann and Kempthorne (2005) K. Hinkelmann and O. Kempthorne. Design and Analysis of Experiments. Wiley series in probability and mathematical statistics. Applied probability and statistics. Wiley, New York, Vol 2, 2005.

- Jaime (2019) G. Jaime. Uso de un residuo de papel como suplemento para vacas lecheras. Tesis de maestría, Universidad Nacional de Colombia, Sede Bogota, 2019.

- Jones and Kenward (2015) B. Jones and M. G. Kenward. Design and Analysis of Cross-Over Trials Third Edition. Chapman & Hall/CRC, Boca Raton, 2015.

- Josephy et al. (2015) H. Josephy, S. Vansteelandt, M.-A. Vanderhasselt, and T. Loeys. Within-subject mediation analysis in ab/ba crossover designs. The international journal of biostatistics, 11(1):1–22, 2015.

- Kitchenham et al. (2018) B. Kitchenham, L. Madeyski, F. Curtin, et al. Corrections to effect size variances for continuous outcomes of cross-over clinical trials. Statistics in medicine, 37(2):320–323, 2018.

- Lancaster (1965) H. Lancaster. The helmert matrices. The American Mathematical Monthly, 72(1):4–12, 1965.

- Li et al. (2018) F. Li, A. B. Forbes, E. L. Turner, and J. S. Preisser. Power and sample size requirements for gee analyses of cluster randomized crossover trials. Statistics in Medicine, 2018.

- Liang and Zeger (1986) K.-Y. Liang and S. L. Zeger. Longitudinal data analysis using generalized linear models. Biometrika, 73(1):13–22, 1986.

- Liu and Li (2016) F. Liu and Q. Li. A bayesian model for joint analysis of multivariate repeated measures and time to event data in crossover trials. Statistical Methods in Medical Research, 25(5):2180–2192, 2016.

- Lui (2015) K.-J. Lui. Test equality between three treatments under an incomplete block crossover design. Journal of biopharmaceutical statistics, 25(4):795–811, 2015.

- Madeyski and Kitchenham (2018) L. Madeyski and B. Kitchenham. Effect sizes and their variance for ab/ba crossover design studies. Empirical Software Engineering, 23(4):1982–2017, 2018.

- McDaniel et al. (2013) L. S. McDaniel, N. C. Henderson, and P. J. Rathouz. Fast pure R implementation of GEE: application of the Matrix package. The R Journal, 5:181–187, 2013. URL https://journal.r-project.org/archive/2013-1/mcdaniel-henderson-rathouz.pdf.

- Oh et al. (2003) H. S. Oh, S.-g. Ko, and M.-S. Oh. A bayesian approach to assessing population bioequivalence in a 2 2 2 crossover design. Journal of Applied Statistics, 30(8):881–891, 2003.

- Pan (2001) W. Pan. Akaike’s information criterion in generalized estimating equations. Biometrics, 57:120–125, 2001.

- Patterson (1951) H. D. Patterson. Change-over trials. Journal of the Royal Statistical Society. Series B (Methodological), 13:256–271, 1951.

- R Core Team (2022) R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria, 2022. URL https://www.R-project.org/.

- Ratkowsky et al. (1992) D. Ratkowsky, R. Alldredge, and M. Evans. Cross-over Experiments. Statistics A Series of Textbooks and Monographs, Washington, 1992.

- Rosenkranz (2015) G. K. Rosenkranz. Analysis of cross-over studies with missing data. Statistical methods in medical research, 24(4):420–433, 2015.

- Shkedy et al. (2005) Z. Shkedy, G. Molenberghs, H. V. Craenendonck, T. Steckler, and L. Bijnens. A hierarchical binomial-poisson model for the analysis of a crossover design for correlated binary data when the number of trials is dose-dependent. Journal of biopharmaceutical statistics, 15(2):225–239, 2005.

- Srivastava et al. (2008) M. S. Srivastava, T. von Rosen, and D. Von Rosen. Models with a kronecker product covariance structure: estimation and testing. Mathematical Methods of Statistics, 17(4):357–370, 2008.

- Vegas et al. (2016) S. Vegas, C. Apa, and N. Juristo. Crossover designs in software engineering experiments: Benefits and perils. IEEE Transactions on Software Engineering, 42(2):120–135, 2016.

Appendix A

Theorem A.1.

The estimator of given by is asymptotically unbiased and consistent.

Proof.

First, asymptotic properties of Pearson’s residuals concerning expectation, variance, and residuals are explored. Define the theoretical Pearson residuals of response of the th response of the th experimental unit at the th period as:

| (16) |

and adapting the results of Cox and Snell (1968) it is true that:

| (17) | ||||

| (18) |

where

and is the element at position of the inverse of the Fisher information matrix, and according to Cordeiro and McCullagh (1991), the bias of () is given by:

| (19) | ||||

| (20) | ||||

where is a vector of appropriate size whose entries are all equal to 1, is a diagonal matrix with elements on the diagonal given by the variance of the estimated linear predictors, i.e. , the diagonal of the matrix

| (21) |

i.e., and is the design matrix of the parametric effects described in Equation (3). Now, computing the expected values by taking into account the properties of the exponential family we obtain:

| (22) | ||||

| (23) | ||||

| (24) | ||||

| (25) | ||||

| (26) | ||||

| (27) |

where is the element of the diagonal of the matrix defined in Equation (20). From Equation (22) and the bias of given in Equation (19), it follows that:

| (28) |

where is a vector of zeros with a 1’s at the th position. From equations (23) and (24), we get:

| (29) |

and therefore, from equations (28), (29) and (17):

| (30) |

where

from equations (25), (26) y (27):

| (31) |

and from equations (19) and (27) it follows that:

| (32) |

therefore, from the results (31) and (32) we have that:

| (33) |

where is a vector of ones and

By theorem 2 of Liang and Zeger (1986) it is known that the GEE estimator of are consistent and unbiased, i.e.,

| (34) |

thus from (30) and (33), we find that:

| (35) | ||||

| (36) |

and furthermore, by Section 3 of Cordeiro (2004) and equations (35) and (36) it follows that:

| (37) |

Let

| (38) |

be a matrix whose first column is and the following columns are:

| (39) |

where

then the matrix is a Helmert matrix (Lancaster, 1965) and therefore:

| (40) |

If is defined as the estimated Pearson residual of the th experimental unit in the th period and the th observation, i.e.,

and the matrix of residuals of the th individual, where the first row has the Pearson residuals defined in Equation (8) corresponding to the first period, and the second row of the corresponding to the second period and so on until completing a matrix with rows and columns, i.e.:

| (41) |

By Equation (35) and the correlation assumption given in Equation (6) it is true that:

| (42) | |||

And defining as:

and since is orthogonal, then is also orthogonal. Thus (Srivastava et al., 2008):

| (43) |

and according to Equation (42), we get:

where is:

| (44) |

with is the matrix of the average residuals defined in Equation (41) for each period, that is,

and

Now by the properties of the Pearson residuals we have that:

and by the properties given in Equation (40) and because we assume that the experimental units are independent, that is, , for all and that Equation (3) is true, then:

furthermore,

| (45) |

By the central limit theorem, we get that:

| (46) |

By Equations (37) and (45) it follows that:

| (47) |

and by Equations (40), (46) y (47) that:

and partitioning as follows:

Then it is obtained that

Similarly, it is found that:

| (48) | ||||

| (49) | ||||

Therefore since , , from Equation (48) we get:

| (50) |

| (51) |

By Theorem 2 in Liang and Zeger (1986) it is known that is consistent and unbiased for , by Equations (50), (46) and (47), we have that

| (52) |

is a consistent and asymptotically unbiased estimator for , which proves the theorem ∎