A Cross-Level Information Transmission Network for Predicting Phenotype from New Genotype: Application to Cancer Precision Medicine

Abstract

An unsolved fundamental problem in biology and ecology is to predict observable traits (phenotypes) from a new genetic constitution (genotype) of an organism under environmental perturbations (e.g., drug treatment). The emergence of multiple omics data provides new opportunities but imposes great challenges in the predictive modeling of genotype-phenotype associations. Firstly, the high-dimensionality of genomics data and the lack of labeled data often make the existing supervised learning techniques less successful. Secondly, it is a challenging task to integrate heterogeneous omics data from different resources. Finally, the information transmission from DNA to phenotype involves multiple intermediate levels of RNA, protein, metabolite, etc. The higher-level features (e.g., gene expression) usually have stronger discriminative power than the lower level features (e.g., somatic mutation). To address above issues, we proposed a novel Cross-LEvel Information Transmission network (CLEIT) framework. CLEIT aims to explicitly model the asymmetrical multi-level organization of the biological system. Inspired by domain adaptation, CLEIT first learns the latent representation of high-level domain then uses it as ground-truth embedding to improve the representation learning of the low-level domain in the form of contrastive loss. In addition, we adopt a pre-training-fine-tuning approach to leveraging the unlabeled heterogeneous omics data to improve the generalizability of CLEIT. We demonstrate the effectiveness and performance boost of CLEIT in predicting anti-cancer drug sensitivity from somatic mutations via the assistance of gene expressions when compared with state-of-the-art methods.

Introduction

Advances in next-generation sequencing have generated abundant and diverse omics data. They provide us with unparalleled opportunities to reveal the secrets of biology. An unsolved problem in biology is how to predict observable traits (phenotypes) given a new genetic constitution (genotype) under environmental perturbations. The predictive modeling of genotype-phenotype associations will answer not only many fundamental questions in biology but also address urgent needs in biomedicine. A typical application is anti-cancer precision medicine. Given a new cancer patient’s genetic information, what is the best existing drug to treat this patient? This is different from Genome Wide Association Study (GWAS) and Transcriptome Wide Association Study (TWAS), whose goal is to identify statistical correlations between observed genotype and phenotype. The predicting phenotype from a new genotype is a challenging task due to the asymmetrical multi-level hierarchical organization of the biological system. Cell-, tissue-, and organism-level phenotypes do not arise directly from DNAs but through multiple intermediate molecular or cellular phenotypes that are characterized by biological pathways, protein interactions, and gene expressions, etc (Blois 1984). In other words, in the information transmission process from DNA to RNA to protein to the observed phenotype of interest, the higher-level features (e.g., gene expression) usually have stronger discriminative power than the lower level features (e.g., somatic mutation) in a supervised learning task that is independent on the machine learning model applied. This premise is supported by multiple studies such as cancer (Costello et al. 2014), drug combination (Menden et al. 2019), and microbiome (Lloyd-Price et al. 2019). Therefore, a multi-level approach is needed to simulate the asymmetrical hierarchical information transmission process for linking the genotype to the phenotype (Hart and Xie 2016). Furthermore, the interpretability of machine learning model is critical for the biomedical application. The multi-scale modeling of genotype-phenotype associations will facilitate opening the black box of machine learning (Yang et al. 2019). In addition to the above fundamental challenge, the predictive modeling of genotype-phenotype associations faces several technical difficulties that hinder the application of existing machine learning methods. Firstly, omics data are often in an extremely high dimension. Secondly, the labeled data are scarce compared with unlabeled data. Finally, it is not a trivial task to integrate heterogeneous omics data from different resources.

To address the aforementioned challenges, we develop a novel neural network-based framework: Cross-LEvel Information Transmission (CLEIT) network. Inspired by domain adaptation, CLEIT first learns the latent representation of a high-level domain then uses it as ground-truth embedding to improve the representation learning of the low-level domain in the form of contrastive loss. In addition, we adopt a pre-training-fine-tuning approach to leveraging the unlabeled heterogeneous omics data to improve the generalizability of CLEIT. We demonstrate that CLEIT is effective in predicting anti-cancer drug sensitivity from somatic mutations, and significantly outperforms other state-of-the-art methods. Precision anti-cancer therapy that is tailed to individual patients based on their genetic profile has gained tremendous interest in clinical (TheAmericanCancerSociety 2020). Cancer acquires numerous mutations during its somatic evolution. Both driver and passenger mutations collectively confer cancer phenotypes and are associated with drug responses (Aparisi et al. 2019). Thus it is necessary to use the entire mutation profile of cancer for the prediction of anti-cancer drug sensitivity. The machine learning models that can explicitly model hierarchical biological processes will no doubt facilitate the development of precision medicine.

Related Work

CLEIT borrowed some ideas from widely used domain adaptation techniques. Domain adaptation aims at transferring the knowledge a trained predictive model has gained on the source domain with sufficient labeled data to the target domain without or with limited labeled data when the source and target domains are of different data distributions. In particular, feature-based domain adaptation approaches (Weiss, Khoshgoftaar, and Wang 2016) have gained popularity along with the advancement in deep learning techniques due to its power in feature representation learning. It aims to learn a shared feature representation by minimizing the discrepancy across different domains while leveraging supervised loss from labeled source examples to maintain trait space’s discriminative power. To achieve discrepancy reduction, there are typically two main methodologies.

The first one focuses on exploring proper statistical distribution discrepancy metrics. For example, maximum mean discrepancy (MMD) (Gretton et al. 2012) is used in deep domain confusion (DDC) (Tzeng et al. 2014) as domain confusion loss on the domain adaptation layer to learn domain invariant features in addition to the regular classification task. Deep adaptation network (DAN) (Long et al. 2015), and its follow-up works (Long et al. 2016, 2017) explored the idea of using multi-kernel MMD to match mean embedding of the multi-layer representations across domain and enhanced the feature transferability. In addition to MMD, CORAL (Sun, Feng, and Saenko 2015) matches the data distributions with second-order statistics (co-variance) on linear transformed inputs, and is further developed into its non-linear variant in Deep CORAL (Sun and Saenko 2016). Furthermore, Wasserstein distance is employed in JDOT (Courty et al. 2017) and WGDRL (Shen et al. 2017).

The other scheme is inspired by domain adaptation theory (Ben-David et al. 2007, 2010). It states that predictions must be made based on features that can not discriminate between source and target domains to achieve effective domain adaptation transfer. It intends to minimize the distribution difference across domains by adopting an adversarial objective with a trainable domain discriminator. Domain adversarial neural network (DANN) (Ganin et al. 2016) learned domain invariant features by a minimax game between the domain classifier and the feature generator with layer sharing and customized gradient reversal layer. Later, adversarial discriminative domain adaptation (ADDA) (Tzeng et al. 2017) achieved domain adaptation via a general framework consisting of discriminative modeling, untied weight sharing, and a GAN loss. ADDA first performs the discriminative task with labeled source domain samples and then utilizes the GAN (Goodfellow et al. 2014) architecture to learn the mapping from target domain samples to source domain feature space.

In addition to the above-mentioned discrepancy reduction approaches, encoder-decoder models are also widely used in domain adaptation, where domain invariant features are learned via shared intermediate representation while domain-specific features are preserved with reconstruction loss. Representative works include marginalized denoising autoencoder (Chen et al. 2012), multi-task autoencoders (Ghifary et al. 2015), deep reconstruction classification network (Ghifary et al. 2016). Moreover, domain separation network (DSN) (Bousmalis et al. 2016) was proposed to explicitly separate private representations for each domain and shared representations across domains. The shared representation is learned similarly as DANN (Ganin et al. 2016) or with MMD (Gretton et al. 2012), while the private representations are learned via orthogonality constraint against the shared representation. DSN can achieve better generalization across domains with the reconstruction through the concatenation of shared and private representations than other methods.

Contributions

CLEIT aims to address an important problem of multi-scale modeling of genotype-phenotype associations. Although CLEIT borrowed some ideas from the domain adaptive transfer learning, there is a significant difference between those approaches and CLEIT. The goal of classic domain adaptation is to use the label information from the source domain data to boost the performance of supervised tasks in the target domain without abundant labels. The feature in the target domain usually has a similar discriminative power to that in the source domain. While in our case, we focus on resolving the inherent discriminative power discrepancy between two domains, which have a hierarchy relation. The feature of the high-level domain has higher discriminative power than that of the low-level domain. Moreover, the entity types of source and target domains are usually the same in conventional domain adaptation. In our case, they are of different types. Specifically, our goal for information transmission is to solely push the latent representation of the low-level domain to approximate the one of the high-level domain, that is, the feature representation learned from the high-level domain is fixed and used as ground-truth feature representation of the low-level domain. In this setting, the latent space where the cross-level information transmission happened is no longer a symmetrical consensus from different domains. To boost the discrimination power of the low-level domain, the high-level and low-level domain is used as an input and an output, respectively. A mapping function is learned between them.

The major contributions of this research are summarized as follows.

- We design a pre-training-fine-tuning strategy to fully utilize both labeled and unlabeled omics data that are naturally noisy, high-dimensional, heterogeneous, and sparse.

- We propose a novel neural network framework that can explicitly model asymmetrical cross-level information transmissions in a complex system to boost the discriminative power of the low-level domain. The multi-level hierarchical structure is the fundamental characteristic of the biological and ecological system. The proposed architecture is general and can be applied to model various machine learning tasks in a multi-level system.

- In terms of biomedical application, the CLEIT model significantly improves individualized anti-cancer sensitivity prediction using only somatic mutation data. The oncology panel of somatic mutations has been routinely performed in the cancer treatment. The application of CLEIT may improve the effectiveness of cancer treatment and achieve precision medicine.

Method

Problem formulation

We denote a data domain as , where stands for the feature space and samples within domain , . is the affiliated marginal distribution. In this work, we consider two domains and , namely the high-level domain and low-level domain, where , . In addition, one common task of interest is to predict phenotype or other outcomes. This task can be done individually from both of the domains but with different performance, where can achieve superior performance to independent on machine learning models applied to them. Here, the performance difference is due to the nature of each domain’s data, instead of the volume of labeled samples as in a classical domain adaptation setting. However, although feature space and are not the same, the entities cross the feature spaces are hierarchically related, such as the multi-level hierarchical organization of omics data of a biological system. Based on this realization, the aim is to utilize the knowledge learned from to boost the predictive power of .

CLEIT framework

To use the knowledge learned from to boost the performance of , we propose a Cross-LEvel-Information Transmission (CLEIT) framework. The strategy of CLEIT is to encode the data from both domains into certain ”higher-level” features. The embedded ”high-level” feature has the direct implication of the task of interests and achieves the cross-level information transmission through transferring knowledge via learned representations cross domains.

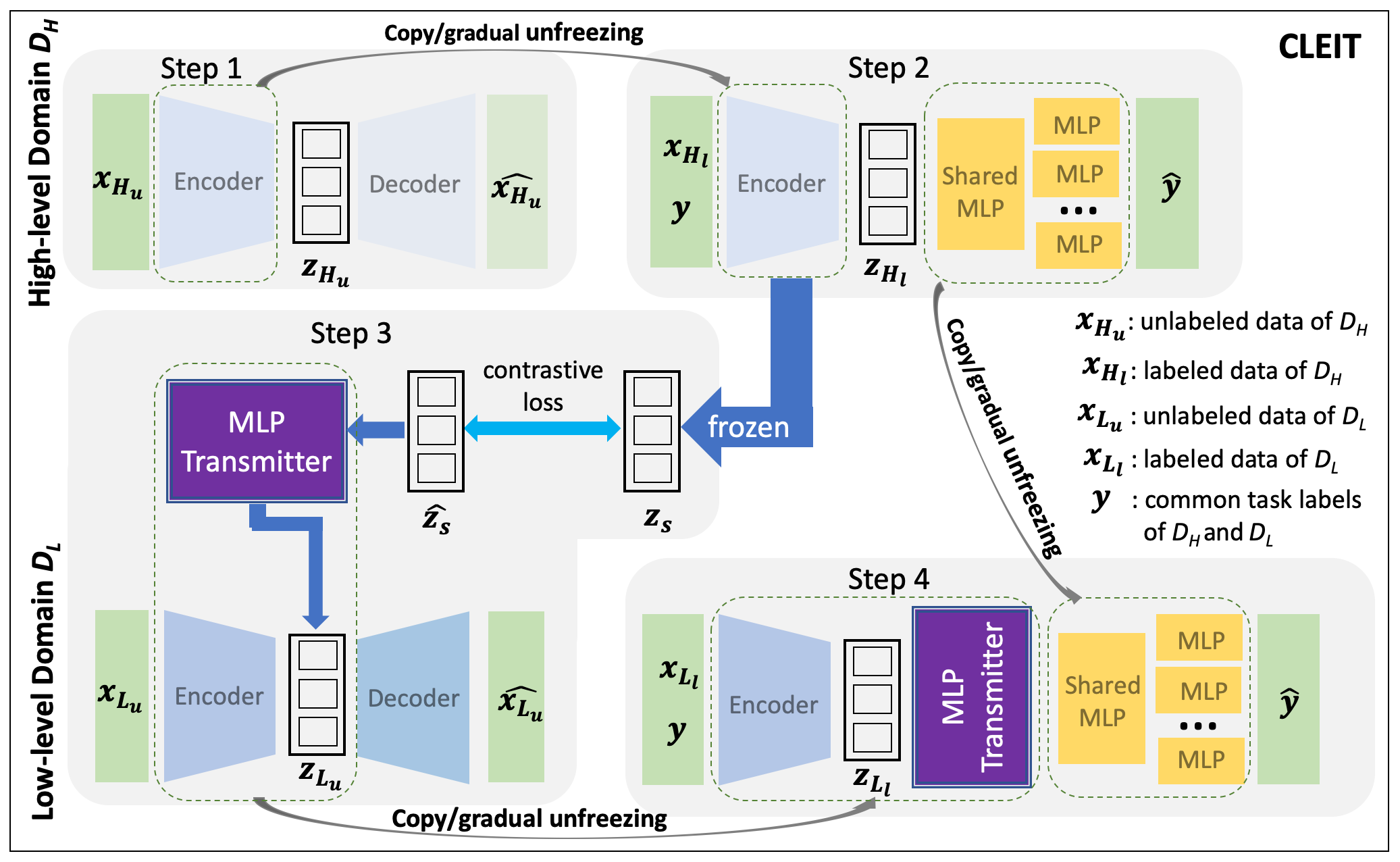

Figure 1 shows the overall framework of CLEIT. The training of CLEIT involves four steps: 1) learning an embedding of from unlabeled data using variational autoencoder (VAE) (Kingma and Welling 2013), 2) fine-turning the pre-trained embedding of from the step 1 using a multi-layer perceptron (MLP) in the setting of multi-task supervised learning, 3) learning an embedding of from unlabeled data using VAE along with training a MLP-based transmitter for the regularization of the embedding by the embedding, and 4) supervised learning of the final predictive model of using an architecture that appends the pre-trained multi-task MLP from step 2 as well as the pre-trained VAE encoder and the transmitter of from step 3. We denoted unlabeled samples as and labeled samples as , where stands for the number of samples in corresponding data sets. Furthermore, is used to symbolize the latent vectors(variables) learned in different phases throughout the training. Samples from the are similarly denoted.

High-level domain encoder training and fine-tuning

For the pre-training of , we first constructed a VAE (Kingma and Welling 2013) to ”warm” the encoder with standard input reconstruction task. In the fine-tuning step, for a label space that has multiple tasks, we first appended several shared layers after the pre-trained encoder module, then for each task we append additional fully connected layer to make complete predictor per task. In our setting, the task is the anti-cancer sensitivity of a drug. We primarily consider a regression problem, thus the loss in use is the masked scaled-invariant mean squared error which allows the missing values in the multi-dimension label space, as defined below,

| (1) |

where is the predicted drug sensitivity score (vector), and is an indicator vector of length , that stands for the availability of ground-truth label for this sample and accordingly is the total number of tasks with ground truth score for this sample. For the fine-tuning of the encoder, we started with updating only appended predictor modules and then employed gradual unfreezing and layer-wise decayed learning rates on encoder updating. After the training of , the encoder is frozen to generate ”ground-truth” latent representation of . In addition, the appended predictor modules (including shared and individual) can also be preserved to serve the initialized predictor in to facilitate corresponding training process.

Low-level domain encoder and transmitter

The pre-training of is largely the same as the one used for the encoder training of . Different from , the encoder training in is merely an intermediate step to improve the final predictive performance of tasks of interest. As shown in Figure 1, we also used a VAE to pre-train the encoder with unlabeled data, followed by the fine-tuning of supervised modules. The key innovation lies in the information transmitter module between the hidden representations of two domains. As introduced earlier, we considered the latent representation generated by encoder as ”ground-truth” representation to which encoder approximates. We utilized the additional transmitter module to explicitly bridge the asymmetrical encoding process between and . Specifically, the transmitter is responsible for the minimization of the difference between the hidden representations of two domains.

According to the formation of our training scheme, we are leveraging the stochastic encoder in the CLEIT framework. The latent variable z can be seen as multi-variate Gaussian variable. Thus, proper distribution distance loss can be used to measure the difference between representations. Such cross-level information transmission loss between representations can then be used as additional regularization to guide the training of encoder of . We can then train the encoder in a multi-task setting, where the training loss is defined as weighted combination of VAE loss () and cross-level information regularization loss () as shown below,

| (2) |

where is a user-specified hyper-parameter to balance the loss terms, when , the training does not use any information transmitted across domains. stands for additional transmission function, whose job is to explicitly transform (latent representation learned with auto-encoder training objective) to a representation that mimics the latent representation of . Thus, we have which stands for the transmitted representation. In this work, a two layer fully connected MLP is used as the transmission function. We used contrastive loss for the transmitter. The contrastive loss is one of the newest losses being used in self-supervised learning framework (Chen et al. 2020). We consider the hidden representation pair of the same sample in difference domains as positive, and the contrastive loss is defined as,

| (3) |

where

| (4) |

and

| (5) |

is the indicator vector of condition and the cross-level information regularization loss computed as the average contrastive loss between all positive pairs within one batch.

Low-level domain supervised learning

Since we are incorporating the cross-level information regularization loss into the encoder training, this requires us to leverage all the samples that have the features of both domains regardless of the label’s availability in this encoder training phase. The pre-training of encoder will terminate once stop condition is satisfied. The stopping condition can be maximum number of epochs or early stopping with validation dataset. In the supervised fine-tuning phase of task, the inherited predictor modules from the training of are appended after encoder and transmitter to avoid the initial training on these modules. Like encoder fine-tuning, we also adopted similar gradual unfreezing for the encoder and the transmitter module (when it is not an identity function) as well as layer-wise decayed learning rate.

A detailed fine-tuning procedure can be found in (Procedure 1).

Input:

Experiments

Datasets

We evaluate the performance of CLEIT on a real-world problem: predicting anti-cancer drug sensitivity given the mutation profile of cell lines. The mutation profile (oncology panel) has been implemented in clinic, but has weaker discriminative power for drug sensitivity prediction than the gene expression profile that is not a clinical standard yet. We collected and integrated data from several diverse resources: cancer cell line data from CCLE (Ghandi et al. 2019), pan-cancer data from Xena (Goldman et al. 2018), drug sensitivity data from GDSC (Yang et al. 2012), and gene-gene interactions from STRING (Szklarczyk et al. 2019). CCLE includes 1270 and 1697 cancer cell line samples with the gene expression profile and the somatic mutation profile, respectively. The pan-cancer data sets include 9808 and 9093 tumor samples with the gene expression profile and the somatic mutation profile, respectively. All gene expression data are metricized by the standard transcripts per million base for each gene, with additional log transformation. For the somatic mutation data, we kept only non-silent ones then propagated the mutated genes in each sample on a STRING gene-gene interaction network using pyNBS (Huang et al. 2018). Then, we only kept genes belonging to cancer gene consensus (Futreal et al. 2004). 563 and 562 CGC genes are selected for the gene expression and the somatic mutation, respectively. Furthermore, we matched the omics data of CCLE cell lines against GDSC drug sensitivity score measured by Area Under Drug Response Curve (AUC). In total we assembled 575 CCLE cell lines with both mutation and gene expression, which are associated with the 265 anti-cancer drugs. 222 cell lines have only mutation information. These data are used as labeled data in our study. Finally, the matched unlabeled samples are also identified to facilitate the pre-training. The gene expression profile is considered as , while the mutation as . A brief summary of the pre-processed data are shown in Table 1.

| Category |

|

|

|

||||||

|---|---|---|---|---|---|---|---|---|---|

|

|

575 | NA | ||||||

|

|

575 | 222 | ||||||

|

NA |

|

|

| Drug-wise | Sample-wise | |||

|---|---|---|---|---|

| Method | Pearson | RMSE | Pearson | RMSE |

| MLP (Mutation only) | 0.0414 | 0.1679 | 0.6441 | 0.1659 |

| VAE+MLP (Mutation only) | 0.0628 | 0.1544 | 0.6630 | 0.1491 |

| DDC | 0.0817 | 0.1541 | 0.6673 | 0.1524 |

| CORAL | 0.0732 | 0.1579 | 0.6624 | 0.1507 |

| DANN | 0.0969 | 0.1517 | 0.6773 | 0.1486 |

| ADDA | 0.0967 | 0.1520 | 0.6827 | 0.1488 |

| DSN | 0.1413 | 0.1419 | 0.6922 | 0.1322 |

| CLEIT (w/o transmitter) | 0.1456 | 0.1343 | 0.6930 | 0.1238 |

| CLEIT (MMD) | 0.1462 | 0.1384 | 0.6943 | 0.1351 |

| CLEIT (WGAN) | 0.1223 | 0.1504 | 0.6843 | 0.1428 |

| CLEIT (Contrastive) | 0.1630 | 0.1209 | 0.7171 | 0.1158 |

Experiment Set-up

We evaluated the performance of CLEIT by the task of predicting drug sensitivity on hold-out test over the labeled mutation-only test data. To be noted, both the prediction and ground truth are in the format of a matrix. Each row represents the sensitivity scores of a particular sample (cell line) against all drugs, and each column stands for the sensitivity scores of a specific drug against all tested cell lines. Thus the evaluation needs to be done by both sample-wise (per sample) and drug-wise (per drug). The evaluation metrics in use include the Pearson correlation and RMSE (root mean squared error). In addition, because of the incompleteness of the ground truth matrix, the prediction entries without a ground truth sensitivity score are filtered out in the calculation of each evaluation metric.

Training procedure of CLEIT

The training procedure of CLEIT is as follows. In the pre-training, we employed early stopping by tracking the VAE loss performance of labeled . While for the fine-tuning of , we set the maximum number of training epochs according to cross-validation experiment results. For the pre-training of , we used the labeled as validation set to apply early stopping strategy. Finally, we split into 90% training and 10% validation set for the fine-tuning of , where the validation set is used for early stopping. The final trained model are used to make predictions on labeled mutation-only test set. We repeat the fine-tuning 3 times.

Baseline models

We compared CLEIT with the following base-line models: MLP without and with the VAE pre-training for as well as several of the most popular domain adaptation algorithms that are used to transfer the knowledge learned from to . They include Deep Domain Confusion (DDC) network (Tzeng et al. 2014), Correlation Alignment (CORAL) (Sun, Feng, and Saenko 2015), Domain Adversarial Neural Network (DANN)(Ganin et al. 2016), Adversarial Domain Adaptation Network (ADDA) (Tzeng et al. 2017) and Domain Separation Network (DSN) (Bousmalis et al. 2016). Specifically, DDC, CORAL, DANN, and ADDA only made use of the labeled data, while DSN utilized both the unlabeled and labeled data. For domain adversarial loss in DSN we employed the MMD variant for the stability of training.

To evaluate the contribution of different components in CLEIT, we performed ablation studies by 1) removing the transmitter, 2) change the loss function to Maximum Mean Discrepancy (MMD) loss (Gretton et al. 2012) and Wasserstein-GAN (WGAN) (Arjovsky, Chintala, and Bottou 2017). Evaluation results on test data for CLEIT, base-line models, and ablation studies are summaries in Table 2.

Hyperparameters of CLEIT

Neural network-related models are constructed using TensorFlow 2.1. The detailed architecture of CLEIT and hyperparameters are listed in Table 3,

| Components |

|

|||||

|---|---|---|---|---|---|---|

|

|

|||||

|

|

|||||

|

|

|||||

|

|

|||||

|

|

|||||

|

|

|||||

|

|

Results and Discussion

Comparison with state-of-the-art models.

The results for both drug-wise and sample-wise evaluation are shown in Table 2. As seen in Table 2, all base-line domain adaptation models outperform the simple models with MLP only and VAE plus MLP in the drug-wise setting. It implies that will benefit from the knowledge transfer from . Furthermore, CLEIT models significantly outperform all other models in consideration (t-test p-value 0.05). The best performed model is the CLEIT that uses the contrastive loss. Compared with the best performed state-of-the-art model (DSN), the accuracy of CLEIT, when measured by Pearson’s correlation, improves 13.3% and 3.5% for the drug-wise and the sample-wise test, respectively.

Ablation studies.

In addition, the pre-training is helpful in improving the model performance, as suggested by the MLP models in Table 2. CLEIT models that incorporate MLP-transmission function show significantly better performance than the one without, suggesting that the transmission function plays a role in CLEIT. Choice of the loss function in the information transmission is also important. It is clear that contrastive loss performs better than MMD and WGAN. It is noted that MMD is used in DSN. When CLEIT uses MMD as the loss function to measure the domain discrepancy, the major difference between CLEIT and MMD is that CLEIT treats the information transmission between two domains asymmetrical, while DSN considers domain adaptation symmetrical. The results in Table 2 show that CLEIT-MMD outperforms DSN in both drug-wise and sample-wise settings. It indicates that the explicit modeling of hierarchical organization of and is important.

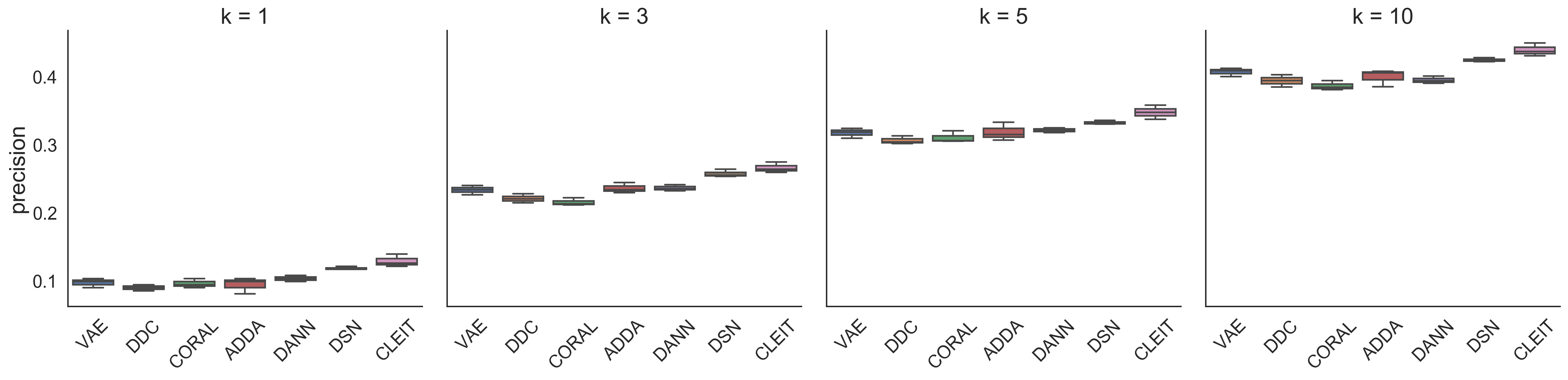

Prediction of top-ranked cell-line specific anti-cancer therapies.

Furthermore, CLEIT can be used to predict the best therapy for a new patient using only mutation data for precision medicine. We compared the performance of different methods with the precision of top- () predictions ranked by the AUC scores, which is defined as the ratio of drugs with top- smallest predicted scores per cell line among the drugs with top- ground-truth scores. Mutation only test results can be found below in Figure 2. Clearly, CLEIT model also outperforms other models in this scenario. Compared with the second best performed model DSN, CLEIT improves the performance by approximate 5% when = 5.

Conclusion

In this paper, we propose a novel machine learning framework CLEIT for the predictive modeling of genotype-phenotype associations by explicitly modeling the asymmetric cross-level information transmission in a biological system. Using the anti-cancer drug sensitivity prediction with only mutation data as a benchmark, CLEIT clearly outperforms existing methods and demonstrates its potential in precision medicine. Nevertheless, the performance of CLEIT could be further improved by incorporating domain knowledge. For example, an autoencoder module that can model gene-gene interactions and biological pathways will be greatly helpful. Under the framework of CLEIT, it is not difficult to integrate other omics data such as epigenomics and proteomics. They may further improve the performance of CLEIT.

Ethic Statement

This research addresses a fundamental problem in biology and ecology and will benefit broad scientific communities in both basic and translational studies. One of the immediate application of this research is in precision medicine. The proposed CLEIT can be used to inform the most effective therapy for a cancer patient based on her/his somatic mutation profile that is often included in the diagnosis. Caution should be taken when applying this research to precision medicine. The same as any machine learning techniques in the biomedical application, the outcome from this research only helps for the decision making but does not provide the final clinical decision, which should be made by a health professional.

References

- Aparisi et al. (2019) Aparisi, F.; Amado, H.; Calabuig-Fariñas, S.; Torres, S.; Pomares, A.; Jantus, E.; Blasco, A.; González-Cruz, V.; and Camps, C. 2019. Passenger mutations in cancer evolution. Cancer Reports and Reviews 3. doi:10.15761/CRR.1000188.

- Arjovsky, Chintala, and Bottou (2017) Arjovsky, M.; Chintala, S.; and Bottou, L. 2017. Wasserstein gan. arXiv preprint arXiv:1701.07875 .

- Ben-David et al. (2010) Ben-David, S.; Blitzer, J.; Crammer, K.; Kulesza, A.; Pereira, F.; and Vaughan, J. W. 2010. A theory of learning from different domains. Machine learning 79(1-2): 151–175.

- Ben-David et al. (2007) Ben-David, S.; Blitzer, J.; Crammer, K.; and Pereira, F. 2007. Analysis of representations for domain adaptation. In Advances in neural information processing systems, 137–144.

- Blois (1984) Blois, M. S. 1984. Information and medicine: the nature of medical descriptions. University of California Press.

- Bousmalis et al. (2016) Bousmalis, K.; Trigeorgis, G.; Silberman, N.; Krishnan, D.; and Erhan, D. 2016. Domain separation networks. In Advances in neural information processing systems, 343–351.

- Chen et al. (2012) Chen, M.; Xu, Z.; Weinberger, K.; and Sha, F. 2012. Marginalized denoising autoencoders for domain adaptation. arXiv preprint arXiv:1206.4683 .

- Chen et al. (2020) Chen, T.; Kornblith, S.; Norouzi, M.; and Hinton, G. 2020. A simple framework for contrastive learning of visual representations. arXiv preprint arXiv:2002.05709 .

- Costello et al. (2014) Costello, J. C.; Heiser, L. M.; Georgii, E.; Gönen, M.; Menden, M. P.; Wang, N. J.; Bansal, M.; Hintsanen, P.; Khan, S. A.; Mpindi, J.-P.; et al. 2014. A community effort to assess and improve drug sensitivity prediction algorithms. Nature biotechnology 32(12): 1202.

- Courty et al. (2017) Courty, N.; Flamary, R.; Habrard, A.; and Rakotomamonjy, A. 2017. Joint distribution optimal transportation for domain adaptation. In Advances in Neural Information Processing Systems, 3730–3739.

- Futreal et al. (2004) Futreal, P. A.; Coin, L.; Marshall, M.; Down, T.; Hubbard, T.; Wooster, R.; Rahman, N.; and Stratton, M. R. 2004. A census of human cancer genes. Nature reviews cancer 4(3): 177–183.

- Ganin et al. (2016) Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; and Lempitsky, V. 2016. Domain-adversarial training of neural networks. The Journal of Machine Learning Research 17(1): 2096–2030.

- Ghandi et al. (2019) Ghandi, M.; Huang, F. W.; Jané-Valbuena, J.; Kryukov, G. V.; Lo, C. C.; McDonald, E. R.; Barretina, J.; Gelfand, E. T.; Bielski, C. M.; Li, H.; et al. 2019. Next-generation characterization of the cancer cell line encyclopedia. Nature 569(7757): 503–508.

- Ghifary et al. (2015) Ghifary, M.; Bastiaan Kleijn, W.; Zhang, M.; and Balduzzi, D. 2015. Domain generalization for object recognition with multi-task autoencoders. In Proceedings of the IEEE international conference on computer vision, 2551–2559.

- Ghifary et al. (2016) Ghifary, M.; Kleijn, W. B.; Zhang, M.; Balduzzi, D.; and Li, W. 2016. Deep reconstruction-classification networks for unsupervised domain adaptation. In European Conference on Computer Vision, 597–613. Springer.

- Goldman et al. (2018) Goldman, M.; Craft, B.; Brooks, A.; Zhu, J.; and Haussler, D. 2018. The UCSC Xena Platform for cancer genomics data visualization and interpretation. BioRxiv 326470.

- Goodfellow et al. (2014) Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; and Bengio, Y. 2014. Generative adversarial nets. In Advances in neural information processing systems, 2672–2680.

- Gretton et al. (2012) Gretton, A.; Borgwardt, K. M.; Rasch, M. J.; Schölkopf, B.; and Smola, A. 2012. A kernel two-sample test. Journal of Machine Learning Research 13(Mar): 723–773.

- Hart and Xie (2016) Hart, T.; and Xie, L. 2016. Providing data science support for systems pharmacology and its implications to drug discovery. Expert opinion on drug discovery 11(3): 241–256.

- Huang et al. (2018) Huang, J. K.; Jia, T.; Carlin, D. E.; and Ideker, T. 2018. pyNBS: a Python implementation for network-based stratification of tumor mutations. Bioinformatics 34(16): 2859–2861.

- Kingma and Welling (2013) Kingma, D. P.; and Welling, M. 2013. Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114 .

- Lloyd-Price et al. (2019) Lloyd-Price, J.; Arze, C.; Ananthakrishnan, A. N.; Schirmer, M.; Avila-Pacheco, J.; Poon, T. W.; Andrews, E.; Ajami, N. J.; Bonham, K. S.; Brislawn, C. J.; et al. 2019. Multi-omics of the gut microbial ecosystem in inflammatory bowel diseases. Nature 569(7758): 655–662.

- Long et al. (2015) Long, M.; Cao, Y.; Wang, J.; and Jordan, M. 2015. Learning transferable features with deep adaptation networks. In International conference on machine learning, 97–105. PMLR.

- Long et al. (2016) Long, M.; Zhu, H.; Wang, J.; and Jordan, M. I. 2016. Unsupervised domain adaptation with residual transfer networks. In Advances in neural information processing systems, 136–144.

- Long et al. (2017) Long, M.; Zhu, H.; Wang, J.; and Jordan, M. I. 2017. Deep transfer learning with joint adaptation networks. In International conference on machine learning, 2208–2217. PMLR.

- Menden et al. (2019) Menden, M. P.; Wang, D.; Mason, M. J.; Szalai, B.; Bulusu, K. C.; Guan, Y.; Yu, T.; Kang, J.; Jeon, M.; Wolfinger, R.; et al. 2019. Community assessment to advance computational prediction of cancer drug combinations in a pharmacogenomic screen. Nature communications 10(1): 1–17.

- Shen et al. (2017) Shen, J.; Qu, Y.; Zhang, W.; and Yu, Y. 2017. Wasserstein distance guided representation learning for domain adaptation. arXiv preprint arXiv:1707.01217 .

- Sun, Feng, and Saenko (2015) Sun, B.; Feng, J.; and Saenko, K. 2015. Return of frustratingly easy domain adaptation. arXiv preprint arXiv:1511.05547 .

- Sun and Saenko (2016) Sun, B.; and Saenko, K. 2016. Deep coral: Correlation alignment for deep domain adaptation. In European conference on computer vision, 443–450. Springer.

- Szklarczyk et al. (2019) Szklarczyk, D.; Gable, A. L.; Lyon, D.; Junge, A.; Wyder, S.; Huerta-Cepas, J.; Simonovic, M.; Doncheva, N. T.; Morris, J. H.; Bork, P.; et al. 2019. STRING v11: protein–protein association networks with increased coverage, supporting functional discovery in genome-wide experimental datasets. Nucleic acids research 47(D1): D607–D613.

- TheAmericanCancerSociety (2020) TheAmericanCancerSociety. 2020. Precision or Personalized Medicine. https://www.cancer.org/treatment/treatments-and-side-effects/treatment-types/precision-medicine.

- Tzeng et al. (2017) Tzeng, E.; Hoffman, J.; Saenko, K.; and Darrell, T. 2017. Adversarial discriminative domain adaptation. In Proceedings of the IEEE conference on computer vision and pattern recognition, 7167–7176.

- Tzeng et al. (2014) Tzeng, E.; Hoffman, J.; Zhang, N.; Saenko, K.; and Darrell, T. 2014. Deep domain confusion: Maximizing for domain invariance. arXiv preprint arXiv:1412.3474 .

- Weiss, Khoshgoftaar, and Wang (2016) Weiss, K.; Khoshgoftaar, T. M.; and Wang, D. 2016. A survey of transfer learning. Journal of Big data 3(1): 9.

- Yang et al. (2019) Yang, J. H.; Wright, S. N.; Hamblin, M.; McCloskey, D.; Alcantar, M. A.; Schrübbers, L.; Lopatkin, A. J.; Satish, S.; Nili, A.; Palsson, B. O.; et al. 2019. A white-box machine learning approach for revealing antibiotic mechanisms of action. Cell 177(6): 1649–1661.

- Yang et al. (2012) Yang, W.; Soares, J.; Greninger, P.; Edelman, E. J.; Lightfoot, H.; Forbes, S.; Bindal, N.; Beare, D.; Smith, J. A.; Thompson, I. R.; et al. 2012. Genomics of Drug Sensitivity in Cancer (GDSC): a resource for therapeutic biomarker discovery in cancer cells. Nucleic acids research 41(D1): D955–D961.