A Distributed Primal-Dual Method for Constrained Multi-agent Reinforcement Learning with General Parameterization

Abstract

This paper proposes a novel distributed approach for solving a cooperative Constrained Multi-agent Reinforcement Learning (CMARL) problem, where agents seek to minimize a global objective function subject to shared constraints. Unlike existing methods that rely on centralized training or coordination, our approach enables fully decentralized online learning, with each agent maintaining local estimates of both primal and dual variables. Specifically, we develop a distributed primal-dual algorithm based on actor-critic methods, leveraging local information to estimate Lagrangian multipliers. We establish consensus among the Lagrangian multipliers across agents and prove the convergence of our algorithm to an equilibrium point, analyzing the sub-optimality of this equilibrium compared to the exact solution of the unparameterized problem. Furthermore, we introduce a constrained cooperative Cournot game with stochastic dynamics as a test environment to evaluate the algorithm’s performance in complex, real-world scenarios.

Keywords: Constrained Multi-Agent Reinforcement Learning, Primal-Dual Algorithm, Actor-Critic Algorithm.

1 Introduction

Reinforcement Learning (RL) has gained significant attention in recent years due to its success in solving complex decision-making problems across diverse domains [1], [2]. Traditional RL focuses on minimizing cumulative costs for a single agent or a set of agents acting independently. However, many real-world scenarios, such as traffic control [3], finance [4], and smart grids [5], involve multiple agents that must cooperate or compete in a shared environment, which motivates the study of Multi-agent Reinforcement Learning (MARL). In this framework, agents interact with each other and the environment, seeking to optimize their individual or joint policies while accounting for the influence of other agents’ actions (see, e.g., [6], [7], [8]). Moreover, a critical requirement for multi-agent systems in real-world scenarios is ensuring the independence of agents during online learning and operation. In many practical applications, centralized controllers face challenges related to scalability, real-time processing, and communication efficiency. To address these issues, distributed methods have been developed [9], [10], [11], [12].

While MARL holds great promise, many practical applications impose constraints that must be respected to ensure safety, fairness, or efficiency. For example, in networked microgrid management, maintaining power balance and preventing system overloads are critical for system stability [13]; similarly, in electric vehicle rebalancing systems, agents must consider factors such as battery life and access to charging stations [14]. Incorporating these types of constraints into reinforcement learning leads to CMARL, which extends MARL frameworks to handle complex environments where satisfying constraints is as crucial as minimizing objective costs.

CMARL is framed within the Constrained Markov Game (CMG) framework [15], where each agent has its own local constraints and objective costs. In this work, we address a cooperative CMARL problem in which all agents aim to minimize a global objective function subject to global constraints, which are composed of local costs. We propose and analyze a distributed online method for solving this cooperative CMARL problem.

Although recent advancements in constrained single-agent reinforcement learning have demonstrated a zero duality gap [16], [17], the duality gap for the cooperative CMARL problem can be non-zero [18]. This inherent complexity poses significant challenges for algorithms attempting to satisfy global constraints [18]. In our study, we analyze the feasibility and sub-optimality of the equilibrium point of the proposed online algorithm.

Related Works: A widely used approach for solving single-agent constrained reinforcement learning problems is the primal-dual method, which demonstrates a zero duality gap by converting the original problem into an unconstrained Markov decision process with a Lagrangian cost [16], [19], [17]. This relaxed MDP is solved through alternating updates of the primal and dual variables. This approach has been extended to cooperative CMARL by relaxing the constrained problem into an unconstrained cooperative MARL [20], [18], [21]. Such relaxation enables the use of existing MARL algorithms, such as distributed actor-critic methods for networked agents [11].

Although there are many works on CMARL, only a few address the general problem where agents’ local costs are coupled through global constraint functions. For instance, in the CMARL formulation of [22], each agent’s actions indirectly influence others through state transition dynamics, but the method requires some coordination, preventing full decentralization. In contrast, [21] achieves decentralization through parameter sharing among agents, though it solves a distributed constrained MDP with networked agents rather than a true CMARL problem. This approach assumes homogeneous agents, with policies converging to a consensus. A recent extension by [23] builds on this by reducing gradient estimation variance for improved scalability.

For the general CMARL formulation, [24] adopts the centralized training, decentralized execution framework, improving computational efficiency and scalability in large-scale multi-agent environments. It separates an agent’s policy into two components: a base policy for reward maximization and a perturbation policy for constraint satisfaction. However, it requires communication between agents during execution, which distinguishes it from fully distributed methods.

Another notable work is [25], which operates in distributed settings. They propose a scalable method for general utility and constraint functions, modeled as nonlinear functions of the state-action occupancy measure. Their approach decomposes the state space for each agent, directly estimating local state-action occupancy measures while leveraging spatial correlation decay and truncated policy gradient estimators for scalability and convergence. However, despite its general policy parameterization, directly estimating local occupancy measures remains challenging in large state-action spaces.

Contributions: In this work, we propose a distributed algorithm for the CMARL problem with networked agents, where each agent maintains its own local primal and dual variables. Specifically, we estimate the Lagrangian multipliers of the global CMARL problem using only local information, enabling a fully distributed policy update method. To the best of our knowledge, this problem has not been addressed previously. Our approach builds on the method of [11] for unconstrained MARL. As such, our method can be viewed as a constrained extension of [11]. The main contributions of the article are as follows.

1. We propose a fully distributed formulation for Lagrange multipliers in the cooperative CMARL problem over networked agents.

2. We develop a distributed online primal-dual algorithm based on actor-critic methods with general function approximation.

3. We prove the consensus of the Lagrange multipliers and the convergence of the proposed algorithm to an equilibrium point.

4. We analyze feasibility of constraints, and sub-optimality of the algorithm’s equilibrium point compared to the exact solution of the unparameterized primal CMARL problem.

Notation: Let denote the -dimensional Euclidean space, and let represent the Euclidean norm for vectors and the spectral norm for matrices. The norm, denoted by , is the sum of the absolute values of the vector components. We define the gradient operator by . The notation represents a vector of ones, and denotes the indicator function for the set . Additionally, denotes the identity matrix of size . We define the matrix as the projection matrix onto the consensus subspace, given by where denotes the Kronecker product. The orthogonal projection onto the subspace orthogonal to the consensus subspace is given by: For any vector , the consensus component is defined by , where for . The disagreement component of relative to the consensus subspace is given by . Thus, any vector can be decomposed as Furthermore, we denote the projected dynamical system corresponding to the projection operator using where .
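The consensus and disagreement decomposition described in the notation can be illustrated numerically. The following is a minimal sketch in plain Python, assuming that the consensus projection amounts to replacing every agent's local vector with the average over agents (which is what a projection of the form "averaging matrix Kronecker identity" does). The function names and the example values are illustrative assumptions, not the paper's notation.

```python
from typing import List, Tuple

Vector = List[float]


def consensus_average(stacked: List[Vector]) -> Vector:
    """Average of the agents' local vectors (one row per agent)."""
    n_agents = len(stacked)
    dim = len(stacked[0])
    return [sum(row[k] for row in stacked) / n_agents for k in range(dim)]


def decompose(stacked: List[Vector]) -> Tuple[List[Vector], List[Vector]]:
    """Split stacked local vectors into consensus and disagreement components."""
    avg = consensus_average(stacked)
    # Consensus component: every agent holds the same averaged vector.
    consensus = [list(avg) for _ in stacked]
    # Disagreement component: each agent's deviation from the average.
    disagreement = [[x - a for x, a in zip(row, avg)] for row in stacked]
    return consensus, disagreement


# Three agents, each holding a 2-dimensional local vector (hypothetical values).
x = [[1.0, 4.0], [3.0, 0.0], [5.0, 2.0]]
consensus, disagreement = decompose(x)
# Recombining the two components recovers the original stacked vector.
recombined = [[c + d for c, d in zip(cr, dr)] for cr, dr in zip(consensus, disagreement)]
```

The disagreement rows sum to zero in every coordinate, which is the sense in which the disagreement component is orthogonal to the consensus subspace.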

2 Problem Formulation of Distributed CMARL

In this section, we formally define the cooperative CMARL problem based on the CMG framework. We then propose a novel distributed problem formulation for CMARL, in which networked agents update their policies using only local information. To begin, we consider a CMG, which is represented as a tuple . This framework involves agents, subject to constraints, where agents and constraints are indexed by and , respectively. The components of the CMG tuple include the following: is the finite state space. At each time step , a random state is drawn. represents the finite joint action space, where is the action space for agent . The joint action of all agents is denoted by , where is the action of agent , and denotes the joint action vector of all agents except agent , i.e., . Thus, the joint action can be succinctly expressed as . is the probability transition kernel, representing the probability of transitioning from state to state under the joint action . represents the set of local costs for each agent. The local cost describes the expected cost for agent at state under the joint action . Specifically, , where is the immediate cost incurred by agent at time step . represents the set of local constraint cost for each agent. The local constraint cost describes the expected -th constraint cost for agent under state and joint action , i.e., , where is the immediate -th constraint cost incurred by agent at time step . is the upper bound vector for the set of constraints, where each corresponds to the upper bound for the -th cost function.

CMARL is formulated within the CMG framework, where each agent operates under an individual policy , with representing the probability simplex over the action space . Since the agents make decisions independently, the joint policy of all agents is expressed as the product of individual policies, .
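To make the product structure of the joint policy concrete, here is a small sketch in plain Python. It assumes a single fixed state and represents each agent's policy as a list of action probabilities; the numerical values and function names are hypothetical.

```python
import itertools
import random


def joint_probability(policies, joint_action):
    """Probability of a joint action when agents choose independently."""
    prob = 1.0
    for pi_i, a_i in zip(policies, joint_action):
        prob *= pi_i[a_i]
    return prob


def sample_joint_action(policies, rng):
    """Each agent samples its own action without observing the others' draws."""
    return tuple(
        rng.choices(range(len(pi_i)), weights=pi_i)[0] for pi_i in policies
    )


# Hypothetical per-agent action distributions at one fixed state.
policies = [[0.5, 0.5], [0.25, 0.75], [0.1, 0.2, 0.7]]
action_sets = [range(len(pi_i)) for pi_i in policies]

# The product of valid individual policies is a valid joint distribution.
total = sum(
    joint_probability(policies, a) for a in itertools.product(*action_sets)
)
sample = sample_joint_action(policies, random.Random(0))
```

Because the joint distribution factorizes, no agent needs access to another agent's policy in order to act.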

In this study, we focus on a general class of parameterized policies. Each agent operates with a set of policy parameters , where is a compact and convex set. This assumption is particularly beneficial in environments with large state spaces , as it facilitates more efficient policy representation. The policy parameters for all agents are concatenated as , where , forming the product parameter space. The corresponding product parameterized policy is denoted by . Furthermore, we define , where represents the concatenated parameters of all agents except agent . Therefore, the overall parameter vector can be expressed as .

Assumption 1

For any agent , state , and action , the policy holds for all . Moreover, the policy is continuously twice differentiable with respect to .

Assumption 2

Under any product parameterized policy, the Markov chain is assumed to be irreducible and aperiodic. Consequently, the Markov chain has a unique stationary distribution, which is denoted by for any product policy .

Assumption 3

The sequences are real-valued, uniformly bounded, and mutually independent for all time steps .

Remark 1

Without loss of generality, we assume that both the immediate cost and the constraint cost are bounded within the interval .

In CMARL, agents collaborate to find an optimal, feasible policy that minimizes the global objective cost function, which is defined by the total average of the local objective costs across all agents. Although each agent operates independently and utilizes only its local information, their collective goal is to minimize this global objective function. The formal definition of the global objective cost is expressed as:

| (1) | ||||

Here, the local cost function is defined as , representing the average cost across all agents. In a similar manner to the global objective cost, we define the global constraint functions, which agents are expected not to violate. The global constraint functions are given by:

| (2) | ||||

where represents the average constraint cost across all agents for the -th constraint. Additionally, we denote . It is important to note that the global constraint functions must be satisfied collectively by all agents and are not specific to any single agent. In fact, the constraint functions in (2) impose implicit restrictions on the product parameter space . Therefore, we define the feasible product parameter space as , where . The global Lagrangian function for this stochastic multi-agent optimization problem is then expressed as:

| (3) | ||||

where represents the expected global Lagrangian cost, is the global Lagrange multiplier vector associated with the global constraint functions, and for all . Using this formulation, the cooperative CMARL problem can be written as a minimax optimization problem . Thus, the corresponding dual problem is expressed as . Furthermore, it is worth noting that the primal and dual problems of CMARL may have different solutions, since the cooperative CMARL problem has a nonzero duality gap [18]. Nevertheless, we are interested in solving the dual problem, as it is more suitable for distributed constrained optimization [26], [27]. For a given Lagrange multiplier vector, the inner minimization in the dual problem reduces to an unconstrained MARL problem. In this framework, each agent is assigned a local Lagrangian cost and works collaboratively to minimize the global Lagrangian function, relying solely on local information. By utilizing this approach, we can alternately update the primal variables and the Lagrange multipliers. In this work, we propose a distributed method for updating the Lagrange multipliers to efficiently solve the dual problem.

To address the CMARL problem in a distributed manner, we introduce the concept of locally estimated Lagrangian multipliers, denoted as , where represents the local estimate by the -th agent of the global Lagrange multiplier . The vector of local estimates for the -th agent is denoted as . Additionally, the augmented vector of all local estimates across all agents is represented as .

By defining and considering that each agent operates with local parameters , we introduce the decomposed Lagrangian objective function as follows:

| (4) |

where represents the expected local Lagrangian cost for agent . Accordingly, we define the immediate local Lagrangian cost for agent at time step as , and the global Lagrangian cost at time step , aggregated over all agents, as .

For a given set of locally estimated Lagrange multipliers, the decomposed Lagrangian objective function can be treated as an unconstrained objective cost function, which is solvable using distributed actor-critic methods, as described in [11]. Furthermore, when the locally estimated Lagrange multipliers satisfy the consensus condition for all and , the decomposed Lagrangian objective function becomes equivalent to the global Lagrangian function in (3), i.e., .
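The equivalence under consensus can be checked on immediate costs. The sketch below is an illustration under a stated assumption: the immediate local Lagrangian cost is taken to be the agent's objective cost plus the multiplier-weighted slack of its own constraint costs relative to the bounds. The exact expression used in the paper is not reproduced here, so this form, the function names, and the numbers are all assumptions.

```python
def local_lagrangian_cost(cost, constraint_costs, bounds, multipliers):
    """Assumed local form: objective cost plus weighted local constraint slack."""
    return cost + sum(
        lam * (g - b) for lam, g, b in zip(multipliers, constraint_costs, bounds)
    )


def global_lagrangian_cost(costs, constraint_costs, bounds, multipliers):
    """Same expression applied to agent-averaged costs with one shared multiplier."""
    n = len(costs)
    avg_cost = sum(costs) / n
    avg_constraints = [
        sum(g[k] for g in constraint_costs) / n for k in range(len(bounds))
    ]
    return local_lagrangian_cost(avg_cost, avg_constraints, bounds, multipliers)


# Hypothetical immediate costs for 3 agents and 2 constraints.
costs = [0.2, 0.6, 0.4]
constraint_costs = [[0.5, 0.1], [0.3, 0.9], [0.7, 0.2]]
bounds = [0.4, 0.5]
shared = [1.5, 0.5]  # all local multiplier estimates agree (consensus)

decomposed = sum(
    local_lagrangian_cost(c, g, bounds, shared)
    for c, g in zip(costs, constraint_costs)
) / len(costs)
global_value = global_lagrangian_cost(costs, constraint_costs, bounds, shared)
```

When the local estimates differ across agents, the two quantities generally no longer coincide, which is why consensus of the multipliers matters for the analysis.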

Accordingly, for a continuously differentiable policy and given , the gradient of the decomposed Lagrangian objective function with respect to the parameters is given by the policy gradient theorem for MARL [11]:

| (5) |

where is the advantage function for agent , defined as:

The term refers to the global differential expected action-value function of the decomposed Lagrangian objective, and is defined as follows [28]:

We parameterize the function linearly as follows where represents the feature vector corresponding to each state-action pair, and each agent maintains its own local set of weights .
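The roles of the linear critic and the per-agent advantage can be sketched as follows. The advantage form used here (the action value at the taken joint action minus its average over the agent's own action, with the other agents' actions held fixed) follows the networked actor-critic setting of [11]; the feature map `phi`, the softmax policy over per-action preferences, and all numbers are hypothetical choices made for illustration only.

```python
import math


def softmax(preferences):
    """Numerically stable softmax over one agent's action preferences."""
    m = max(preferences)
    exps = [math.exp(p - m) for p in preferences]
    z = sum(exps)
    return [e / z for e in exps]


def q_value(weights, features):
    """Linear critic: inner product of local weights and shared features."""
    return sum(w * f for w, f in zip(weights, features))


def phi(state, joint_action):
    """Hypothetical feature map, used only for this illustration."""
    return [1.0, state, float(sum(joint_action)), state * joint_action[0]]


def advantage(agent, state, joint_action, policy_i, weights):
    """Q at the taken joint action minus its mean over the agent's own action."""
    q_taken = q_value(weights, phi(state, joint_action))
    baseline = 0.0
    for a_i, prob in enumerate(policy_i):
        alt = list(joint_action)
        alt[agent] = a_i  # vary only this agent's action
        baseline += prob * q_value(weights, phi(state, tuple(alt)))
    return q_taken - baseline


def score(policy_i, a_i):
    """Gradient of log softmax w.r.t. the per-action preferences."""
    return [(1.0 if k == a_i else 0.0) - p for k, p in enumerate(policy_i)]


policy_0 = softmax([0.2, -0.1, 0.4])
weights_0 = [0.1, 0.5, -0.3, 0.2]
state, joint_action = 1.5, (2, 0, 1)
adv = advantage(0, state, joint_action, policy_0, weights_0)
# One-sample gradient estimate: advantage times score function.
grad_sample = [adv * g for g in score(policy_0, joint_action[0])]
```

Since costs are minimized, an actor step would move the parameters against `grad_sample`. The baseline depends only on the other agents' actions, so the advantage averages to zero under the agent's own policy.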

3 Algorithm

According to the CMARL problem formulation, the proposed algorithm, outlined in Algorithm 1, operates in two phases during each iteration. In the first phase, for a given , agents update their critic and actor weights using local information to solve an unconstrained multi-agent problem for the decomposed Lagrangian objective function. In this phase, each agent computes and utilizes as a surrogate immediate objective cost. This phase is based on Algorithm 1 of [11]. Each agent maintains its critic parameters, denoted by , which must be shared locally according to the communication graph, with the weight matrix denoted by for all . The critic update rule for each agent in this phase is given by:

| (6) | ||||

Here, represents the critic learning rate, and the temporal difference (TD) error is updated using the following rule:

| (7) |
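A critic step of this kind can be sketched in plain Python. The sketch is modeled on the structure of Algorithm 1 in [11] (a local TD step followed by a weighted average over neighbors), with the immediate local Lagrangian cost used as the surrogate cost. The precise form of (6) and (7) is not reproduced here, so the running average-cost estimate, the TD error expression, and every name below are assumptions.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def critic_step(weights, avg_costs, lagr_costs, phi_t, phi_next, mixing, beta):
    """One synchronous critic update for all agents.

    weights[i]   : agent i's local critic weights
    avg_costs[i] : agent i's running estimate of its average Lagrangian cost
    lagr_costs[i]: agent i's immediate local Lagrangian cost
    mixing[i][j] : consensus weight agent i places on agent j
    """
    n, dim = len(weights), len(phi_t)
    local, new_avg = [], []
    for i in range(n):
        # Differential (average-cost) TD error computed from local information.
        td = (lagr_costs[i] - avg_costs[i]
              + dot(weights[i], phi_next) - dot(weights[i], phi_t))
        local.append([w + beta * td * f for w, f in zip(weights[i], phi_t)])
        new_avg.append((1.0 - beta) * avg_costs[i] + beta * lagr_costs[i])
    # Consensus: each agent averages the locally updated weights of its neighbors.
    mixed = [
        [sum(mixing[i][j] * local[j][k] for j in range(n)) for k in range(dim)]
        for i in range(n)
    ]
    return mixed, new_avg


# Two agents with hypothetical costs and features, fully connected averaging.
weights = [[0.0, 0.0], [0.0, 0.0]]
mixed, new_avg = critic_step(
    weights, avg_costs=[0.0, 0.0], lagr_costs=[1.0, 3.0],
    phi_t=[1.0, 0.0], phi_next=[0.0, 1.0],
    mixing=[[0.5, 0.5], [0.5, 0.5]], beta=0.5,
)
```

With uniform mixing weights both agents end the step with identical critic weights, which is the mechanism behind the critic consensus discussed in Section 4.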

Additionally, in the first phase, we update the actors locally using the policy gradient theorem for MARL, as described in (5), for sample-based gradient estimation of the Lagrangian objective cost. The actor update is defined as follows:

| (8) | ||||

where represents the actor learning rate. The parameter is updated in the descent direction within the set , where denotes the projection onto the set . Next, we describe the second phase, which focuses on updating the locally estimated Lagrange multipliers. This phase also involves estimating the constraint cost function. Since agents do not have access to the global constraint cost, these functions are estimated locally using a recursive update as follows:

| (9) |

where and are defined for notational simplicity. Finally, we present the update rule for the Lagrange multipliers, which involves taking a step in the ascent direction with respect to the decomposed Lagrangian objective function using the estimated values:

| (10) |

where represents the learning rate for the Lagrange multipliers, and denotes the projection onto the set .
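The second phase can be sketched as follows. This is an illustration under assumptions, since the exact form of (9) and (10) is not reproduced here: the local constraint estimate is taken to be an exponential running average of the agent's immediate constraint costs, and the multiplier step is taken to be a consensus average over neighbors, followed by an ascent step on the estimated constraint violation and a projection onto a box. The names `beta_est`, `beta_lam`, and `lam_max` are hypothetical.

```python
def project_box(vector, upper):
    """Projection onto the box [0, upper] in every coordinate."""
    return [min(max(x, 0.0), upper) for x in vector]


def dual_step(multipliers, estimates, constraint_costs, bounds,
              mixing, beta_est, beta_lam, lam_max):
    """One synchronous update of local constraint estimates and multipliers."""
    n, m = len(multipliers), len(bounds)
    # Running estimate of each agent's constraint costs (assumed form of (9)).
    new_est = [
        [(1.0 - beta_est) * estimates[i][k] + beta_est * constraint_costs[i][k]
         for k in range(m)]
        for i in range(n)
    ]
    new_mult = []
    for i in range(n):
        # Consensus average of the neighbors' multiplier estimates.
        mixed = [sum(mixing[i][j] * multipliers[j][k] for j in range(n))
                 for k in range(m)]
        # Ascent on the estimated violation, then projection (assumed form of (10)).
        stepped = [mixed[k] + beta_lam * (new_est[i][k] - bounds[k])
                   for k in range(m)]
        new_mult.append(project_box(stepped, lam_max))
    return new_mult, new_est


uniform = [[0.5, 0.5], [0.5, 0.5]]
# Violated constraint: both agents' multipliers move up from their average.
violated, _ = dual_step([[0.0], [1.0]], [[0.0], [0.0]], [[1.0], [1.0]],
                        bounds=[0.5], mixing=uniform,
                        beta_est=1.0, beta_lam=0.1, lam_max=10.0)
# Slack constraint at zero multipliers: the projection keeps them at zero.
slack, _ = dual_step([[0.0], [0.0]], [[0.0], [0.0]], [[0.0], [0.0]],
                     bounds=[0.5], mixing=uniform,
                     beta_est=1.0, beta_lam=0.1, lam_max=10.0)
```

The averaging step is what drives the local estimates toward a common value, while the projection keeps every estimate inside the admissible multiplier set.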

4 Theoretical Results

This section consists of two parts. In the first part, we prove the convergence of Algorithm 1 to a stationary point of the update rules in (6)-(10). In the second part, we analyze the sub-optimality of the limit point by deriving an upper bound on the gap between the obtained solution and the optimal solution.

4.1 Convergence of Algorithm

Assumption 4

The learning rates , , and should satisfy the following conditions:

Assumption 5

(Slater’s condition). There exists some policy and constants such that for all .

Assumption 6

Consider the sequence of nonnegative random matrices , which satisfies the following conditions:

-

1.

Each matrix is doubly stochastic, i.e., and .

-

2.

There exists a constant such that for the matrix , all diagonal elements and any other positive entries satisfy .

-

3.

The spectral norm of the matrix is denoted by , and it is assumed that .

-

4.

Given the -algebra generated by the random variables up to time , the matrix is conditionally independent of the variables and for all .
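For intuition about conditions 1 and 2, the sketch below builds Metropolis weights on a fixed undirected graph. This is one common deterministic construction of a doubly stochastic matrix with positive diagonal; it is offered as an example only, since the assumption itself allows random, time-varying matrices and the paper does not state which weights it uses. The graph and the values being averaged are hypothetical.

```python
def metropolis_weights(neighbors):
    """Doubly stochastic weights for an undirected graph.

    neighbors: dict mapping each agent index to the set of its neighbors.
    """
    n = len(neighbors)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in neighbors[i]:
            W[i][j] = 1.0 / (1 + max(len(neighbors[i]), len(neighbors[j])))
        # Remaining mass stays on the diagonal, which is therefore positive.
        W[i][i] = 1.0 - sum(W[i])
    return W


def mix(W, values):
    """One consensus round: every agent averages over its neighbors."""
    n = len(values)
    return [sum(W[i][j] * values[j] for j in range(n)) for i in range(n)]


# Path graph on 4 agents: 0 - 1 - 2 - 3.
path = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
W = metropolis_weights(path)

values = [0.0, 1.0, 2.0, 3.0]
for _ in range(100):
    values = mix(W, values)
```

Repeated mixing preserves the network-wide average and shrinks the disagreement between agents, which is the behavior the spectral condition in item 3 quantifies.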

Assumption 7

The set is sufficiently large such that there exists at least one local minimum of in its interior, given .

This assumption is standard in the analysis of actor-critic algorithms, as it facilitates the convergence analysis of the algorithm. In practice, however, the parameter can be updated without the need for projection, as discussed in [11].

Proposition 1

Under Assumptions 1-7, for given locally estimated Lagrange multipliers , the distributed actor-critic algorithm with linear critic approximation and local update rules (6)-(8), when applied to minimize the decomposed Lagrangian objective, ensures that the policy parameters for all converge almost surely to a point within the set of asymptotically stable equilibrium points of the projected dynamical system (11). Here, represents the minimizer of the Mean Square Projected Bellman Error (MSPBE) corresponding to the policy . Additionally, the critic weights achieve consensus at the point almost surely.

| (11) |

Proof: It is evident that for a fixed , the decomposed Lagrangian objective function reduces to an unconstrained multi-agent objective. Now, from Theorems 4.6 and 4.7 in [11], which guarantee the convergence of unconstrained MARL actor-critic, it can be concluded that the actor will converge to a point in the set of asymptotically stable equilibrium points defined by (11).

Remark 2

According to Proposition 1, for a good choice of the basis function of the critic approximator, which satisfies , for a sufficiently small , the actor converges to the -neighborhood of the local minima of .

Proof: The recursion (9) is driven only by immediate local costs, and these local costs are independent of each other constraints. By applying Lemma 5.2 from [11], which addresses the boundedness of updates for local independently, we can conclude that almost surely. Consequently, , and therefore .

Theorem 1

The disagreement component of the locally estimated Lagrange multipliers converges to zero almost surely, i.e., almost surely.

Proof: The recursion for the vector is given by:

| (12) |

To analyze the disagreement component, define:

The disagreement component is then given by Given that , we can further simplify to obtain:

Our goal is to show that is bounded. Consider the filtration . Then, we have:

where , , and . It is straightforward to show that the maximum eigenvalue of is less than or equal to 1. Utilizing Assumption 6, along with the Cauchy-Schwarz inequality and the Rayleigh quotient inequality, and considering the doubly stochastic property of , which ensures that , we obtain the following inequality:

| (13) |

Now, we can show that . At each iteration, for is a point in the convex set . Additionally, the local consensus update for each agent is a convex combination of . Consequently, it holds that . Also, from the non-expansive property of convex projection:

Using Lemma 1, there exists some constant such that . Considering the bound and taking expectations from both sides of (13), and applying Jensen’s inequality, we have:

Let for simplicity. Since and , there exists a sufficiently large such that for any and for some . Thus,

| (14) |

We define the function . With straightforward analysis, it can be shown that for given parameters , there exist such that . Accordingly, using the inequality in (14), there exist positive constants and that satisfy:

| (15) |

for all . By recursively substituting (15), it holds that . Thus, we can conclude for a positive constant . Equivalently, . Summing over , we obtain Finally, by Fubini’s theorem, we have . Hence, .

Assumption 8

Suppose the total number of policy parameters is . There exists a unique continuous function , which represents the converged point of the actor dynamics in (11) for a given .

Remark 3

Lemma 2

Let the projected dynamical system for be defined as follows:

| (16) |

where . Additionally, let the compact set denote the asymptotically stable equilibrium points of this projected dynamical system. Using this ODE, the convergence of can be established through the following theorem.

Proof: By condition 2 of Assumption 6, there exist such that . We can then rewrite (10) for all as follows:

| (17) |

By Theorem 1, we have almost surely, for all . Consequently, for any , there exists a sufficiently large such that almost surely. Equivalently,

| (18) |

Furthermore, by Lemma 2, almost surely. Consequently, by applying Kushner-Clark Lemma (Theorem 5.3.1 of [29]) under Assumptions 4 and 8, the recursion (10) converges almost surely to a point in the set . Therefore, as per Theorem 1, we conclude that almost surely.

The following proposition gives a condition under which the constraints are satisfied at the consensus value of the Lagrange multipliers, i.e., .

Proposition 2

Suppose . Then all constraints are satisfied.

Proof: We argue by contradiction. Assume the claim is not true. Then, for a sufficiently small and for all , we have Using the definition of the projected dynamical system in (16), we obtain:

This is a contradiction, since is an interior equilibrium point of the dynamics (16). Hence, the original claim is true.

4.2 Duality Gap Evaluation at Equilibrium Point

According to [18], CMARL problems, without parameterization, can generally exhibit a strictly positive duality gap. Specifically, for non-parameterized CMARL problems defined over product policies, this duality gap is denoted by . Formally, the duality gap is defined as where, and Since the parameterized policy class is a subset of the policy space , and given that the parameterized problem represents a nonlinear optimization problem, this duality gap can also exist in general parameterized problems.

In this section, we analyze the impact of errors arising from individual and product parameterizations of each policy within the parameterized policy space. Additionally, we examine how the local convergence of the Lagrange multipliers, resulting from solving a nonlinear maximin problem, affects the discrepancy between the value of the primal non-parameterized problem and the convergence points of our proposed algorithm. To quantify this, we define the duality gap for the parameterized problem as , where and are the converged points of the actor parameters and Lagrange multipliers, respectively, from the proposed algorithm.

The next lemma introduces a search space for the Lagrange multipliers of the non-parameterized dual problem, ensuring that the optimal Lagrange multiplier vector, denoted as , lies within these boundaries.

Lemma 3

(Lemma 1 [18]) Any optimal Lagrange multiplier satisfies the range for all .

According to Lemma 3, we define the set , which serves as the domain in which the Lagrange multiplier variables are confined when solving the dual problem. These bounds, or any other estimated bounds, can be applied within our proposed algorithm.

Definition 1

A parameterization is an -individual parameterization of policies in if, for some , there exists a parameter for every policy such that

Definition 2

A parameterization is an -product parameterization of policies in if, for some , there exists for any a parameter such that

Lemma 4

Assume an -individual parameterization for each agent . Then is an upper bound for in the -product parameterization.

Proof: By considering the difference between the policy and the parameterized policy :

Using the triangle inequality, we can bound the above expression by:

For term , we have and For term , we note that Since is an -product parameterized policy for any product policy in , using induction for inequality, it follows that Combining these results, we obtain
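The induction step in this proof rests on a telescoping argument: replacing one factor of a product distribution at a time changes the product by at most the change in that factor. The sketch below checks the resulting inequality numerically, assuming the error is measured in the L1 norm at a single fixed state. The lemma's exact constant and norm are not reproduced here, so this choice and the example policies are assumptions.

```python
import itertools


def l1_distance(p, q):
    return sum(abs(a - b) for a, b in zip(p, q))


def product_distribution(marginals):
    """Joint distribution over joint actions for independent agents."""
    supports = [range(len(m)) for m in marginals]
    probs = []
    for joint in itertools.product(*supports):
        pr = 1.0
        for m, a in zip(marginals, joint):
            pr *= m[a]
        probs.append(pr)
    return probs


# Hypothetical target policies and their parameterized approximations.
target = [[0.5, 0.5], [0.2, 0.8], [0.1, 0.3, 0.6]]
approx = [[0.45, 0.55], [0.25, 0.75], [0.15, 0.3, 0.55]]

individual_errors = [l1_distance(p, q) for p, q in zip(target, approx)]
product_error = l1_distance(
    product_distribution(target), product_distribution(approx)
)
```

In this example the product error stays below the sum of the individual errors, in line with the additive way per-agent approximation errors enter the product parameterization.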

Lemma 5

(Lemma 3 [30]): The distance between the stationary distributions and , which are induced by the policies , can be upper bounded by:

| (19) |

where .

In Lemma 5, , known as Kemeny’s constant, represents the average number of steps required to reach a target state in the Markov chain induced by the policy . It is calculated as the mean first passage time for each state, weighted according to the steady-state distribution. This constant is also related to the mixing time of the Markov chain, providing a measure of how quickly the chain converges to its stationary distribution.
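Kemeny's constant can be computed directly from this description for a small chain. The sketch below uses simple fixed-point iterations in plain Python and assumes an irreducible, aperiodic transition matrix, as in Assumption 2. It adopts the convention that the passage time from a state to itself is zero (some references add one to the constant); the transition matrix is hypothetical.

```python
def stationary_distribution(P, iters=5000):
    """Power iteration for the stationary distribution of a row-stochastic P."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi


def mean_first_passage_to(P, target, iters=5000):
    """m[i]: expected number of steps to first reach `target` from state i."""
    n = len(P)
    m = [0.0] * n
    for _ in range(iters):
        # First-step analysis: one step, then continue from any non-target state.
        m = [
            0.0 if i == target
            else 1.0 + sum(P[i][k] * m[k] for k in range(n) if k != target)
            for i in range(n)
        ]
    return m


def kemeny_constant(P, start):
    """Stationary-weighted mean first passage time from `start`."""
    pi = stationary_distribution(P)
    return sum(
        pi[j] * mean_first_passage_to(P, j)[start] for j in range(len(P))
    )


# Hypothetical 3-state chain with strictly positive transition probabilities.
P = [[0.5, 0.3, 0.2],
     [0.2, 0.6, 0.2],
     [0.1, 0.4, 0.5]]
constants = [kemeny_constant(P, s) for s in range(len(P))]
```

The computed value is the same for every starting state, which is the defining property that makes it a constant of the chain rather than of a particular state.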

Lemma 6

Let and be the occupation measure induced by any stationary policy and , respectively, where is an -product approximation of . Then, it follows that:

| (20) |

Proof: Expressing the total variation distance between the stationary distributions and :

By adding and subtracting the term and using triangle inequality we obtain:

| (21) |

, and from Lemma 5, Finally

The following lemma bounds the difference in the values of the Lagrangian functions in terms of the norm of the Lagrangian multipliers and the distance between the stationary distributions of the induced policies.

Lemma 7

The difference in the values of the Lagrangian functions and for two pairs and is bounded by:

| (22) |

Proof: We can express the difference in the Lagrangian values as the sum of two terms:

Then have Also, . Combining the bounds for and , we obtain the desired result.

Let the pair be the solution to the problem , and let be the solution to the problem .

Proposition 3

The duality gap for the parameterized problem is bounded as follows:

| (23) |

where , and .

Proof: Using Lemma 7, we have:

Since is a distance metric, we can apply the triangle inequality where is an -product parameterization of . Finally, using Proposition 3, we have and . Substituting these bounds into the earlier inequality and using the relationship between the duality gap and the optimal values, , we conclude the proof.

5 Experiments

In this section, we define a cooperative and dynamically stochastic model of the Cournot game to evaluate the proposed algorithm in a complex, real-world economic scenario.

In this problem, the deterministic objective cost for each agent is defined as , where represents the production level of each agent, and represents the unit price. Therefore, is the price proposed by each agent. The function , which represents the market price, depends on the agents’ actions and the state of the game. We define this function as , where is the total number of agents. The state variable is assumed to lie within the interval . The reason for choosing this model is that market demand parameters are not necessarily constant and may vary over time. This approach provides greater flexibility and is more representative of real-world scenarios. Typically, these parameters depend on the production levels of each agent, meaning that the defined state will be a function of the agents’ actions.

In the original Cournot game model, both the state space and the action space of each agent are continuous. The state and action spaces can be discretized using an appropriate method. As a result, a probability distribution for state transitions under the agents’ actions can be expressed as .

Now, based on the previous definitions and considering the limitations of the real-world problem, constraints can be incorporated into the model. The upper bound for the market price, defined for each agent, is given by where is a weight assigned to each agent to differentiate the constraint costs across agents, thereby improving the model’s flexibility.

In this experiment, we use the described model with the number of agents set to . For sampling from the state space, 10 states are selected, evenly spaced from the interval . Additionally, for sampling the actions of each agent, 10 samples with equal spacing are selected from the interval . Therefore, and for all . As a result, the total number of state-action pairs is . Moreover, we set , with weights , , , , and , and an upper bound of for the experiment.

For random state transitions under a given state-action pair, a binomial distribution is considered. This distribution in each state depends on the total actions of all agents, such that as the sum of actions increases, the probability of transitioning to states with lower values also increases by shifting the mean of the distribution as a function of the sum of the agents’ actions. This state transition probability function can be adapted to better reflect real economic systems. This modeling makes the environment relatively challenging and highly stochastic for multi-agent learning. Additionally, the learning rates for the critic, actor, and Lagrange multipliers are set according to Assumption 4, with , , and , respectively.
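A transition kernel of the kind described here can be sketched as below. The exact mapping from the agents' actions to the binomial parameter is not given in the text, so the linear link used in this sketch, the action range `action_max`, and the decision to ignore the current state are all assumptions made for illustration.

```python
import math


def binomial_pmf(n_trials, p):
    """Probabilities of 0..n_trials successes."""
    return [
        math.comb(n_trials, k) * p ** k * (1.0 - p) ** (n_trials - k)
        for k in range(n_trials + 1)
    ]


def transition_probs(joint_action, action_max, n_states):
    """Distribution over next-state indices 0..n_states-1.

    Assumed link: the larger the total production, the smaller the success
    probability, so probability mass shifts toward lower-valued states.
    """
    load = sum(joint_action) / (len(joint_action) * action_max)  # in [0, 1]
    return binomial_pmf(n_states - 1, 1.0 - load)


def mean_index(probs):
    return sum(k * p for k, p in enumerate(probs))


# Five agents, ten discrete states, hypothetical action range [0, 1].
low_output = transition_probs([0.1] * 5, action_max=1.0, n_states=10)
high_output = transition_probs([0.9] * 5, action_max=1.0, n_states=10)
```

Comparing the two cases shows the intended qualitative effect: higher total production moves the mean of the next-state distribution toward lower-valued states.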

We also assume that the critic is linear for all agents, with the dimension of its parameters set to 20. The features of each state-action pair are shared among the agents and uniformly sampled from the interval . Furthermore, the policy for each agent is parametric and linear, implemented using a softmax policy. The dimension of the policy parameters for each agent is 10. While the policy features for each agent are also uniformly sampled from the interval , each agent has its own specific policy features.

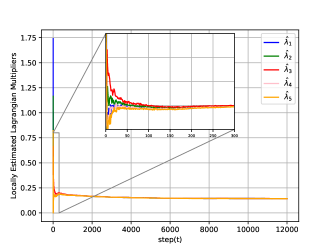

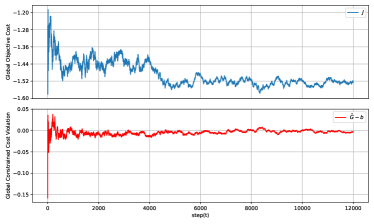

The experimental results are depicted in Figures 1 and 2. Figure 1 shows that the locally estimated Lagrange multipliers reach consensus and subsequently converge, in agreement with Theorems 1 and 2. Figure 2 shows the global objective cost and the global constraint cost during training: the algorithm reduces the objective cost while keeping constraint violations near zero.

6 Conclusion

This paper introduced a distributed approach for solving cooperative CMARL problems, allowing agents to minimize a global objective function while respecting shared constraints. By leveraging a decentralized primal-dual algorithm based on the actor-critic method, our approach enables each agent to estimate the Lagrangian multipliers locally, achieving consensus and ensuring convergence to an equilibrium. We validated the method on a constrained cooperative Cournot game with stochastic dynamics. The results highlight the potential of our method for scalable, decentralized solutions in real-world applications such as smart grids and autonomous systems. Future work will explore adaptations for more complex environments and dynamic constraints.

References

- [1] D. Silver, A. Huang, C. J. Maddison, A. Guez, L. Sifre, G. Van Den Driessche, J. Schrittwieser, I. Antonoglou, V. Panneershelvam, M. Lanctot et al., “Mastering the game of Go with deep neural networks and tree search,” Nature, vol. 529, no. 7587, pp. 484–489, 2016.

- [2] J. Kober, J. A. Bagnell, and J. Peters, “Reinforcement learning in robotics: A survey,” The International Journal of Robotics Research, vol. 32, no. 11, pp. 1238–1274, 2013.

- [3] T. Chu, J. Wang, L. Codecà, and Z. Li, “Multi-agent deep reinforcement learning for large-scale traffic signal control,” IEEE Transactions on Intelligent Transportation Systems, vol. 21, no. 3, pp. 1086–1095, 2020.

- [4] U. Pham, Q. Luu, and H. Tran, “Multi-agent reinforcement learning approach for hedging portfolio problem,” Soft Computing, vol. 25, no. 12, pp. 7877–7885, 2021.

- [5] M. Roesch, C. Linder, R. Zimmermann, A. Rudolf, A. Hohmann, and G. Reinhart, “Smart grid for industry using multi-agent reinforcement learning,” Applied Sciences, vol. 10, no. 19, p. 6900, 2020.

- [6] K. Zhang, Z. Yang, and T. Başar, “Multi-agent reinforcement learning: A selective overview of theories and algorithms,” Handbook of Reinforcement Learning and Control, 2021.

- [7] T. T. Nguyen, N. D. Nguyen, and S. Nahavandi, “Deep reinforcement learning for multiagent systems: A review of challenges, solutions, and applications,” IEEE Transactions on Cybernetics, vol. 50, no. 9, pp. 3826–3839, 2020.

- [8] A. Oroojlooy and D. Hajinezhad, “A review of cooperative multi-agent deep reinforcement learning,” Applied Intelligence, vol. 53, no. 11, pp. 13677–13722, 2023.

- [9] Y. Zhang and M. M. Zavlanos, “Cooperative multiagent reinforcement learning with partial observations,” IEEE Transactions on Automatic Control, vol. 69, no. 2, pp. 968–981, 2023.

- [10] L. Cassano, K. Yuan, and A. H. Sayed, “Multiagent fully decentralized value function learning with linear convergence rates,” IEEE Transactions on Automatic Control, vol. 66, no. 4, pp. 1497–1512, 2020.

- [11] K. Zhang, Z. Yang, H. Liu, T. Zhang, and T. Başar, “Fully decentralized multi-agent reinforcement learning with networked agents,” in Proceedings of the 35th International Conference on Machine Learning (ICML), vol. 80. PMLR, 2018, pp. 5872–5881.

- [12] B. Yongacoglu, G. Arslan, and S. Yüksel, “Decentralized learning for optimality in stochastic dynamic teams and games with local control and global state information,” IEEE Transactions on Automatic Control, vol. 67, no. 10, pp. 5230–5245, 2021.

- [13] Q. Zhang, K. Dehghanpour, Z. Wang, F. Qiu, and D. Zhao, “Multi-agent safe policy learning for power management of networked microgrids,” IEEE Transactions on Smart Grid, vol. 12, no. 2, pp. 1048–1062, 2021.

- [14] S. He, Y. Wang, S. Han, S. Zou, and F. Miao, “A robust and constrained multi-agent reinforcement learning electric vehicle rebalancing method in AMoD systems,” in 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2023, pp. 5637–5644.

- [15] E. Altman and A. Shwartz, “Constrained Markov games: Nash equilibria,” in Advances in Dynamic Games and Applications. Springer, 2000, pp. 213–221.

- [16] S. Paternain, L. Chamon, M. Calvo-Fullana, and A. Ribeiro, “Constrained reinforcement learning has zero duality gap,” in Advances in Neural Information Processing Systems (NeurIPS), vol. 32, 2019.

- [17] S. Paternain, M. Calvo-Fullana, L. F. Chamon, and A. Ribeiro, “Safe policies for reinforcement learning via primal-dual methods,” IEEE Transactions on Automatic Control, vol. 68, no. 3, pp. 1321–1336, 2022.

- [18] Z. Chen, Y. Zhou, and H. Huang, “On the hardness of constrained cooperative multi-agent reinforcement learning,” in Proceedings of the 12th International Conference on Learning Representations (ICLR), 2024.

- [19] S. Bhatnagar and K. Lakshmanan, “An online actor-critic algorithm with function approximation for constrained Markov decision processes,” Journal of Optimization Theory and Applications, vol. 153, pp. 688–708, 2012.

- [20] R. B. Diddigi, D. S. K. Reddy, P. KJ, and S. Bhatnagar, “Actor-critic algorithms for constrained multi-agent reinforcement learning,” in Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems, 2019, pp. 1931–1933.

- [21] S. Lu, K. Zhang, T. Chen, T. Başar, and L. Horesh, “Decentralized policy gradient descent ascent for safe multi-agent reinforcement learning,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 10, 2021, pp. 8767–8775.

- [22] Y. Zhao, Y. Yang, Z. Lu, W. Zhou, and H. Li, “Multi-agent first order constrained optimization in policy space,” in Advances in Neural Information Processing Systems (NeurIPS), vol. 36, 2023, pp. 39189–39211.

- [23] R. Hassan, K. S. Wadith, M. M. Rashid, and M. M. Khan, “DePAint: A decentralized safe multi-agent reinforcement learning algorithm considering peak and average constraints,” Applied Intelligence, pp. 1–17, 2024.

- [24] Z. Yang, H. Jin, R. Ding, H. You, G. Fan, X. Wang, and C. Zhou, “DeCOM: Decomposed policy for constrained cooperative multi-agent reinforcement learning,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 37, no. 9, 2023, pp. 10861–10870.

- [25] D. Ying, Y. Zhang, Y. Ding, A. Koppel, and J. Lavaei, “Scalable primal-dual actor-critic method for safe multi-agent reinforcement learning with general utilities,” in Advances in Neural Information Processing Systems (NeurIPS), vol. 36, 2024.

- [26] T.-H. Chang, A. Nedić, and A. Scaglione, “Distributed constrained optimization by consensus-based primal-dual perturbation method,” IEEE Transactions on Automatic Control, vol. 59, no. 6, pp. 1524–1538, 2014.

- [27] A. Nedić and A. Ozdaglar, “Distributed subgradient methods for multi-agent optimization,” IEEE Transactions on Automatic Control, vol. 54, no. 1, pp. 48–61, 2009.

- [28] R. S. Sutton and A. G. Barto, Reinforcement Learning: An Introduction, 2nd ed. MIT Press, 2018.

- [29] H. J. Kushner and D. S. Clark, Stochastic Approximation Methods for Constrained and Unconstrained Systems. Springer, 1978.

- [30] Y. Zhang and K. W. Ross, “On-policy deep reinforcement learning for the average-reward criterion,” in Proceedings of the 38th International Conference on Machine Learning (ICML), vol. 139. PMLR, 2021, pp. 12535–12545.