A Domain-Agnostic Approach for Characterization of Lifelong Learning Systems

Abstract

Despite the advancement of machine learning techniques in recent years, state-of-the-art systems lack robustness to “real world” events, where the input distributions and tasks encountered by the deployed systems will not be limited to the original training context, and systems will instead need to adapt to novel distributions and tasks while deployed. This critical gap may be addressed through the development of “Lifelong Learning” systems that are capable of 1) Continuous Learning, 2) Transfer and Adaptation, and 3) Scalability. Unfortunately, efforts to improve these capabilities are typically treated as distinct areas of research that are assessed independently, without regard to the impact of each separate capability on other aspects of the system. We instead propose a holistic approach, using a suite of metrics and an evaluation framework to assess Lifelong Learning in a principled way that is agnostic to specific domains or system techniques. Through five case studies, we show that this suite of metrics can inform the development of varied and complex Lifelong Learning systems. We highlight how the proposed suite of metrics quantifies performance trade-offs present during Lifelong Learning system development - both the widely discussed Stability-Plasticity dilemma and the newly proposed relationship between Sample Efficient and Robust Learning. Further, we make recommendations for the formulation and use of metrics to guide the continuing development of Lifelong Learning systems and assess their progress in the future.

keywords:

lifelong learning , reinforcement learning , continual learning , system evaluation , catastrophic forgetting[org:apl]organization=Johns Hopkins University Applied Physics Laboratory,addressline=11100 Johns Hopkins Rd., city=Laurel, postcode=20723, state=MD, country=USA \affiliation[org:tdy]organization=Teledyne Scientific Company - Intelligent Systems Laboratory,addressline=19 T.W. Alexander Drive, city=RTP, postcode=27709, state=NC, country=USA \affiliation[org:uta]organization=Department of Computer Science, University of Texas at Austin,addressline=, city=Austin, postcode=, state=TX, country=USA \affiliation[org:usc]organization=Department of Computer Science, University of Southern California,addressline=, city=Los Angeles, postcode=, state=CA, country=USA \affiliation[org:lu]organization=Department of Computer Science, Loughborough University,addressline=, city=Loughborough, postcode=, state=England, country=UK \affiliation[org:umich]organization=Department of Electrical Engineering and Computer Science, University of Michigan,addressline=, city=Ann Arbor, postcode=, state=MI, country=USA \affiliation[org:sri]organization=SRI International,addressline=201 Washington Rd, city=Princeton, postcode=, state=NJ, country=USA \affiliation[org:utsa]organization=University of Texas at San Antonio,addressline=, city=San Antonio, postcode=, state=TX, country=USA \affiliation[org:umass]organization=Department of Computer Science, University of Massachusetts Amherst,addressline=, city=Amherst, postcode=, state=MA, country=USA \affiliation[org:snl]organization=Sandia National Laboratories,addressline=, city=Albuquerque, postcode=, state=NM, country=USA \affiliation[org:upenn]organization=Department of Computer and Information Science, University of Pennsylvania,addressline=, city=Philadelphia, postcode=, state=PA, country=USA \affiliation[org:brown]organization=Department of Computer Science, Brown University,addressline=, city=Providence, postcode=, state=RI, country=USA \affiliation[org:hrl]organization=Information and Systems Sciences Laboratory, HRL Laboratories,addressline=3011 Malibu Canyon Road, city=Malibu, postcode=90265, state=CA, country=USA \affiliation[org:vb]organization=Department of Computer Science, Vanderbilt University,addressline=, city=Nashville, postcode=, state=TN, country=USA \affiliation[org:anl]organization=Argonne National Laboratory,addressline=9700 S Cass Ave, city=Lemont, postcode=, state=IL, country=USA

1 Introduction

While machine learning (ML) has made dramatic advances in the past decade, deployment and use of data-driven ML-based systems in the real world faces a crucial challenge: the input distributions and tasks encountered by the deployed system will not be limited to the original training context, and systems will need to accommodate novel distributions and tasks while deployed. We define the challenge of Lifelong Learning (LL) as enabling a system to learn and retain knowledge of multiple tasks over its operational lifetime. Addressing this challenge requires new approaches to both algorithm development and assessment. The DARPA Lifelong Learning Machines (L2M) program was initiated in 2018 to stimulate fundamental algorithmic advances in LL and to assess these LL capabilities in complex environments. The program focused on both reinforcement learning (RL) and classification systems in diverse domains, such as CARLA (Dosovitskiy et al., 2017) (3D simulator for autonomous driving), StarCraft (Vinyals et al., 2017) (real-time strategy game), AI Habitat (Savva et al., 2019) (photorealistic 3D simulator for indoor environments), AirSim (Shah et al., 2018) (3D drone simulator), and L2Explorer (Johnson et al., 2022) (open-world exploration). The diversity of domains was motivated primarily by the research consideration of exploring LL in a broad array of contexts, and it resulted in each research team developing LL systems for their respective domains.

Throughout this work, we use the term “LL system” rather than “LL algorithm”, as the developed systems were composed of many different interacting components (e.g. regularization, experience replay, task change detection, etc.). The capability to do LL is a property of the overall system rather than any one component, and multiple metrics are needed to characterize LL systems.

The evaluation of these LL systems faced two key questions: (1) what metrics are most suitable for assessing LL, and (2) how can one apply these LL Metrics in a consistent way to different LL systems, each operating in a different domain? In particular, a primary purpose of this evaluation was to measure progress over the course of the program and to assess the strengths and weaknesses of different systems in an environment-agnostic manner, thereby providing deeper insight into LL.

The rest of this paper is organized as follows: In Section 2, we give an overview on LL systems, as well as different approaches for evaluating them. In Section 3, we introduce the core components of our approach for evaluating LL–conditions of LL, evaluation scenarios, and evaluation protocols. In Section 4, we define the metrics we use to evaluate LL systems. In Section 5, we describe a set of case studies that demonstrate the application of these metrics to varied domains. In Section 6, we conclude with insights from these case studies and give recommendations for assessing and advancing LL systems. Throughout this work, we introduce and use a number of terms which are defined in A.

2 Background

The area of machine LL has recently seen a large amount of attention in the research community (Silver et al., 2013; Chen and Liu, 2018a; Parisi et al., 2019; Hadsell et al., 2020; De Lange et al., 2021), especially through its connections to other subfields such as multi-task (Caruana, 1997; Zhang and Yang, 2021), transfer (Zhuang et al., 2019), incremental batch (Kemker et al., 2018), and online (Hoi et al., 2018) learning; as well as domain adaptation (Csurka, 2017) and generalization (Zhou et al., 2022). The distinguishing characteristic of LL is that a deployed system encounters a sequence of tasks over its lifetime, with no prior knowledge of the number, structure, duration, or re-occurrence probability of those tasks. The two key challenges are to retain expertise on previously learned tasks, thereby avoiding catastrophic forgetting (McCloskey and Cohen, 1989; Ratcliff, 1990; French, 1992, 1999; McClelland et al., 1995; Goodfellow et al., 2013), and to transfer acquired expertise to facilitate learning of new tasks (Pratt et al., 1991; Sharkey and Sharkey, 1993). Ultimately, an ideal LL system leverages relationships among tasks to improve performance across all tasks it encounters, even if the input distributions of those tasks change over a lifetime. Earlier work considered the challenges of developing algorithms to avoid forgetting and enhance transfer (Pratt, 1992; Ring, 1997).

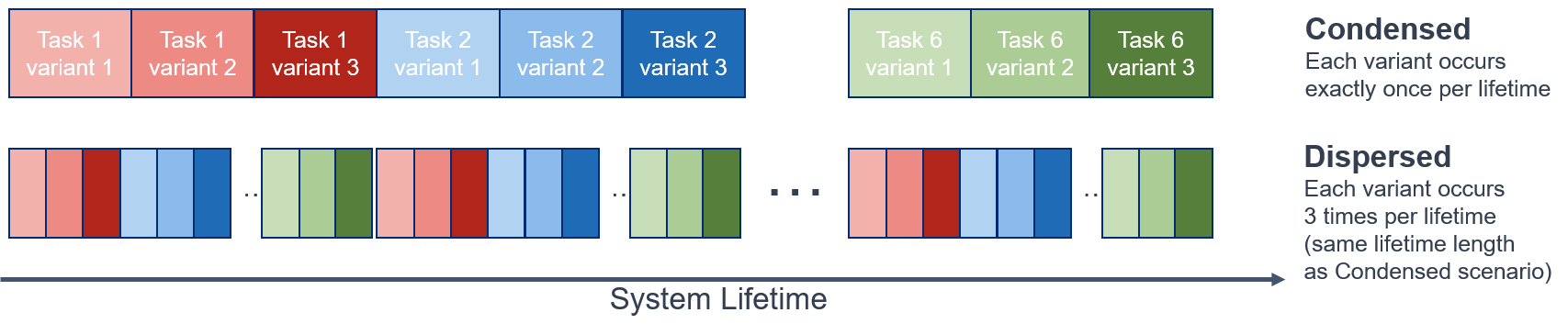

As different methods and algorithms for LL have been developed, various approaches have been taken for evaluating these systems. A key distinction has been made between evaluation scenarios and metrics: evaluation scenarios (as shown in Figure 1) set up the structure of the lifetime of the LL system–what tasks occur, how they are presented, and how often–whereas metrics assess how well the system performed over that lifetime. We recommend Mundt et al. (2022) as a concurrently-developed work focusing on the challenges of categorizing different LL algorithms and evaluations in terms of transparency, replicability, and contextualization. When constructing a set of metrics, it is important to decide what they should be assessing. Zhu et al. (2020) frame metrics for LL as assessing either generalization (how prior knowledge facilitates initial learning on a new task) or mastery (how prior knowledge facilitates eventual performance on a new task). The suite of metrics defined in this paper extends these concepts by defining conditions of LL in Section 3.1.

2.1 Evaluation Scenarios for Different Learning Paradigms

The difficulty of quantitatively evaluating LL systems has led to a variety of approaches, both specific to the learning type and more general. Quantitatively assessing the performance of classification LL systems is often more straightforward than assessing RL systems because there are straightforward ways of generating tasks from a dataset (e.g., by splitting sets of classes into tasks, or by inducing domain shifts). However, while evaluating the LL capability of a classification system is still challenging, the evaluation scenarios used to do so tend to be specific to the classification context, such as incremental class learning, e.g., Hsu et al. (2018). Despite this, there are broader insights that are applicable for RL as well, as noted by Farquhar and Gal (2019). In particular, Hayes et al. (2018b) identify different methods of setting up the sequence of observations that constitute each lifetime of the system: sampling from different tasks in an i.i.d. fashion, grouping them by task or by class labels within a task, or (most challenging) sampling and grouping them in a non-i.i.d. fashion.

Evaluation of lifelong RL faces additional challenges: (1) RL can be highly variable within and across training runs, and across rollouts of a fixed policy (Chan et al., 2020), (2) rewards across different tasks may have different scales or extrema, or may be unbounded, and (3) it is nontrivial to design tasks with well-characterized relationships (see, e.g., Carroll and Seppi (2005)). Nonetheless, work on RL generalization and transfer offers valuable insight for LL. Kirk et al. (2021) propose a useful formalism of a “contextual Markov decision process (MDP)” where for each episode encountered by the system, the state of the MDP encodes an unseen “context” (e.g., random seeds and parameters used to specify the task). During training and test, the system encounters episodes sampled from training and test context sets respectively, with generalization assessed using zero-shot forward transfer and a “generalization gap” metric (difference in expected rewards between train and test). One of their key recommendations is to specify tasks using a combination of procedural content generation (which varies based on parameters inherent to the environment) and explicitly specified parameters. In CORA, Powers et al. (2021) present a different approach for RL performance assessment. They handcrafted benchmark tasks for four different environments (Atari (Bellemare et al., 2013), ProcGen (Cobbe et al., 2020), MiniHack (Samvelyan et al., 2021) and AI2-Thor (Kolve et al., 2017)), and proposed a standard evaluation protocol ( tasks presented sequentially, cycled times).

2.2 Metrics for Different Learning Paradigms

Metrics commonly used to assess the performance of classification LL systems include average task accuracy (ACC), forward transfer (FT) and backward transfer (BT) (also denoted FWT and BWT, respectively), as well as model size, storage and computational efficiency (Rodríguez et al., 2018; Lopez-Paz and Ranzato, 2017). Other metrics specifically developed for classification LL include Cumulative Gain, which tracks ACC after each task exposure during the course of the system’s lifetime (Prado et al., 2020), , an extension of ACC that compares the accuracy to an offline learner (Hayes et al., 2018b), and Performance Drop (Balaji et al., 2020), which uses the baseline of a multi-task model trained jointly on all tasks.

Metrics used for assessing lifelong RL include those introduced by Powers et al. (2021) for use in CORA: Forgetting (change in performance on a task before and after learning a new task) and zero-shot FT (change in performance after learning a new task, relative to a random agent). They also present baseline algorithms demonstrating the value of the metrics and tasks. Zhu et al. (2020) also propose metrics for two-task transfer learning, comparing performance with and without prior task exposure: initial performance, asymptotic performance, accumulated reward (measured by an area under the curve (AUC) calculation), and time to a threshold performance. They also propose a Transfer Ratio (asymptotic performance measured as a ratio), and performance sensitivity (variance in performance with different hyperparameter settings).

In summary, there is currently no clear guidance for defining tasks or scenarios to exercise LL, other than the guidance of having multiple tasks with some kind of structured similarity and presenting tasks to the system without specifying the order beforehand. There are also no universally accepted metrics for LL, though FT is often used for both classification and RL, and average (or cumulative) change in performance is used in RL. Overall, there is no agreed-upon standard for how to assess LL systems across different environments in a uniform manner.

2.3 DARPA L2M Program Context

The L2M program was initiated to stimulate fundamental advances in lifelong ML systems. Of particular interest were systems operating in complex and challenging environments and potentially applicable to a broad array of domains (including autonomous driving, embodied search, and real-time strategy). To this end, research conducted under the program coalesced into five different domains.

|

Environment | Domain | ||||

|---|---|---|---|---|---|---|

| SG-UPenn (5.1) |

|

|

||||

| SG-Teledyne (5.2) |

|

|

||||

| SG-HRL (5.3) |

|

|

||||

| SG-Argonne (5.4) |

|

|

||||

| SG-SRI (5.5) |

|

|

Table 1 provides information on the five LL systems that were developed as part of the program, along with their associated environments/domains. In this work, we focused on the evaluation of systems within these five environments, but the concepts and methods are broadly applicable and could work well in conjunction with a library like Avalanche (Lomonaco et al., 2021). We treated each LL system as a black box, intentionally omitting details of the constituent components. Each system was developed by a different research team and their algorithmic advances are described in publications contained in Section 5.

2.4 Evaluation of LL systems

How exactly to assess such a wide variety of LL systems operating in diverse environments was a major challenge addressed during the course of the L2M Program. We emphasize that the goal was not to identify the “best” LL system, as each environment required different learning strategies. Instead, the goal was to provide deeper insight into the strengths and weaknesses of LL systems in an environment-agnostic manner. The L2M Program test and evaluation (T&E) team and research teams collaboratively identified and defined the following key components of an LL evaluation:

-

1.

The Conditions of LL the system needed to demonstrate, which are defined in Section 3.1. These conditions specify diverse criteria identifying different components of the overall phenomena of LL.

-

2.

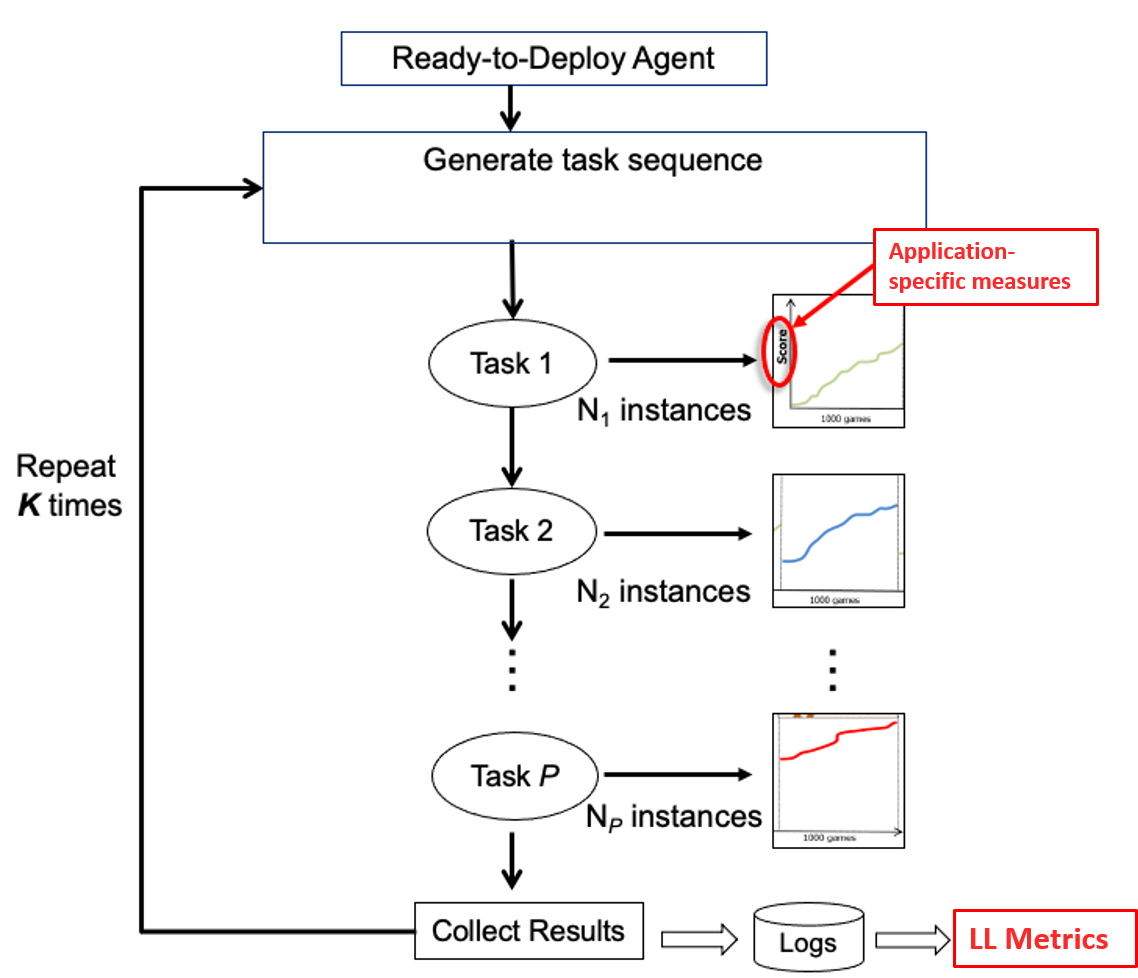

The Evaluation Scenarios that exercise the LL system for the purpose of computing metrics. This is an environment-agnostic template that defined the number of tasks and constraints on their relationships, as well as how they are sequenced in a given “lifetime” (or run) of the LL system. An example is demonstrated in Figure 1 and details are provided in Section 3.2.

-

3.

The overall Evaluation Protocol specifies how multiple lifetimes are set up, and consists of the Evaluation Scenarios as well as details (e.g. number of lifetimes) for obtaining statistically reliable metrics. Evaluation Protocols are discussed in Section 3.3.

-

4.

The set of LL Metrics (described in Section 4) that assess the conditions of LL. We discovered early on that a single metric would not be sufficient to cover all the conditions, and multiple metrics would be needed to characterize the LL systems.

3 Evaluation Approach

We consider three key aspects of evaluating LL systems–the conditions of LL (Section 3.1), scenarios that systems encounter (Section 3.2), and the overall protocols that specify an evaluation (Section 3.3).

3.1 Conditions of Lifelong Learning

We assert that an LL system must satisfy three necessary and sufficient conditions:

-

1.

Continuous Learning: The LL system learns a nonstationary stream of tasks (both novel and recurring), continually consolidating new information to improve performance while coping with irrelevance and noise.

-

2.

Transfer and Adaptation: As learning progresses, the LL system performs better on average on the next task it experiences, for both novel and known tasks (forward and backward transfer), maintaining performance during rapid changes in the ongoing task (adaptation).

-

3.

Scalability: The LL system continues learning for an arbitrarily long lifetime using limited resources (e.g., memory, time) in a scalable way.

These three conditions of LL have been used to drive the development of LL Metrics. They are similar to the notion of ‘generalization’ and ‘mastery’ introduced by Zhu et al. (2020), and two of our metrics can measure these concepts. The jumpstart formulation of FT (a Transfer and Adaptation metric) can be considered a measure of ‘generalization,’ and performance relative to a Single Task Expert (RP)- a Scalability metric - can be considered a measure of ‘mastery.’ It is important to point out that these conditions are partially independent; indeed, it is possible for a system to demonstrate LL in one condition but not in another. Because of this, it is all the more critical to use multiple measures to assess LL systems. The relationship between the Metrics, the Conditions of LL, and Scenario requirements associated with assessing them are discussed further in Section 4.

It is also worth noting the relationship between the above definition and related terms such as “Continual Learning” (Chen and Liu, 2018b). There are two aspects here. First, are the learning experiences from different tasks intermixed as an i.i.d sequence (online or streaming learning (Hayes et al., 2018a)) or as a non-i.i.d sequence with same-task experiences being batched together? Second, do new learning experiences expand the domain of already-learned tasks (incremental class learning), or are they entirely new tasks with new input and output domains (incremental task learning) (van de Ven and Tolias, 2018)?

Lifelong Learning, as defined above, is incremental task learning with same-task experiences batched together and with the additional constraint that the system leverage prior knowledge to become a more effective and efficient learner. The term “Continual Learning” has historically been used to loosely refer to either incremental task or class learning. However, over the past few years, it has been used more synonomously with Lifelong Learning. To avoid confusion, we consistently use the term “Lifelong Learning” in this paper.

3.2 Evaluation Scenarios

An Evaluation Scenario describes the patterns and frequency of task or task variant repetitions in sequence, and can facilitate evaluating LL systems with respect to specific metrics as well as provide insight into their strengths and weaknesses. Since certain task sequences are required to reasonably explore LL metrics, specifying a particular Scenario is a critical step in characterizing the performance of an LL system.

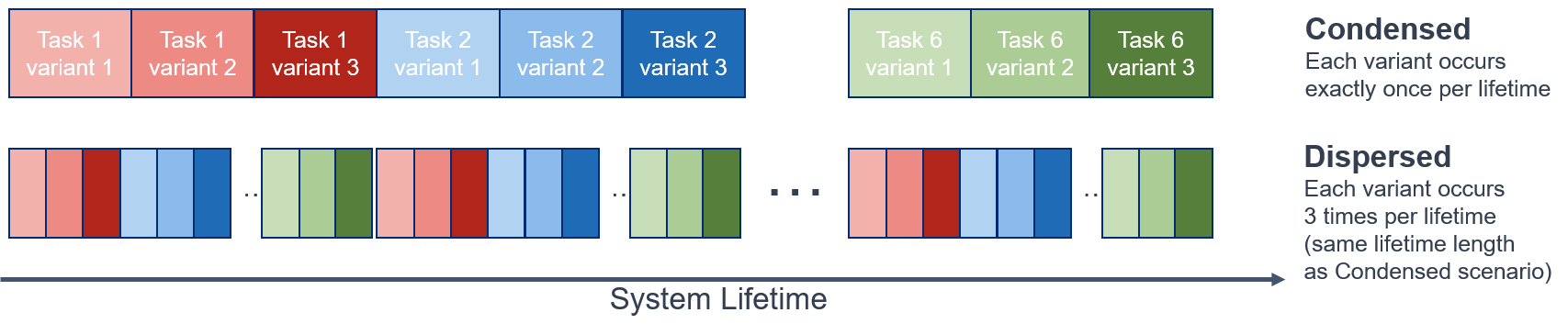

Two of the main scenario types used to accomplish this were Condensed and Dispersed Scenarios. Both scenario types are illustrated in Figure 2, with further details in B. Each involved a sequence of multiple tasks and variants. Individual runs had different permutation orders.

In particular, Condensed Scenarios involved concentrating all of the experience per task in one longer block. Dispersed Scenarios involved the same amount of experience per task, but with interleaved tasks in shuffled segments rather than appearing in sequence. These two scenario types were chosen to explore differences in system performance based on task ordering and appearance (since an operationalized system will not have prior knowledge of task sequences), and to ensure enough task repetitions for reasonably evaluating whether a system retained expertise on previously seen tasks. In Section 5, we see that some LL systems perform differently in various scenarios. These differences enable us to identify the characteristics, strengths, and weaknesses of an LL system.

In developing these scenario structures, we built on existing work in this area. For example, van de Ven and Tolias (2019a) proposed the class-incremental learning scenario, which is similar in structure to our condensed scenario. Concurrently to our work, Cossu et al. (2021) built off this and suggested the class-incremental with repetition scenario, which is similar to our dispersed scenario. Similarly, Stojanov et al. (2019) designs a class-incremental scenario that features parametric variation in its task design. Our framework differs in two key ways from these. First, it is meant to be more general than these scenarios, as it can accommodate LL systems that perform classification and/or reinforcement learning. Second, it incorporates task variants into its structure, which can help evaluate LL systems on environments with similar sets of tasks. Ultimately, the existence of these other scenarios is beneficial for exploring the combinatorial design space of LL scenarios, and benchmarks can be shared and extended. See B for a full example of what an Evaluation Scenario looks like.

3.3 Evaluation Protocols

In order to evaluate a particular LL system (consisting of a fixed set of hyperparameters, algorithms, and components), we recommend the use of an Evaluation Protocol. An Evaluation Protocol is a complete specification for conducting LL Scenarios to ensure reproducibility and obtain statistically reliable LL Metrics.

In addition to one or more Evaluation Scenarios, this specification consists of details about pre-deployment training (e.g., pretraining on a fixed dataset like ImageNet), and how multiple lifetimes (runs) should be generated for each scenario. This evaluation approach was used in the L2M program to foster experimentation on LL Metrics and to help researchers evaluate the performance and progress of their LL systems.

In addition to the Scenario specification, an Evaluation Protocol contains details for obtaining statistically reliable LL Metrics. As has been noted in the literature (Agarwal et al., 2021; Colas et al., 2018, 2019; Henderson et al., 2018; Dror et al., 2019), the training process for deep RL systems is noisy and variable, making it challenging to robustly evaluate them.

Our approach to generate statistically reliable LL Metrics is based on guidance in NIST/SEMATECH (2012), and similar to Colas et al. (2018). More details on this approach are provided in D. In contrast to much of the literature, which considers the problem of comparing two or more algorithms, here we focus on the challenge of obtaining reliable estimates of a system’s performance (with respect to the metrics defined in Section 4). Given such reliable estimates, we are able to determine whether a system is meeting a particular threshold. We further propose the use of LL thresholds in Section 4 to determine whether a system is demonstrating LL or not.

4 Lifelong Learning Metric Definitions

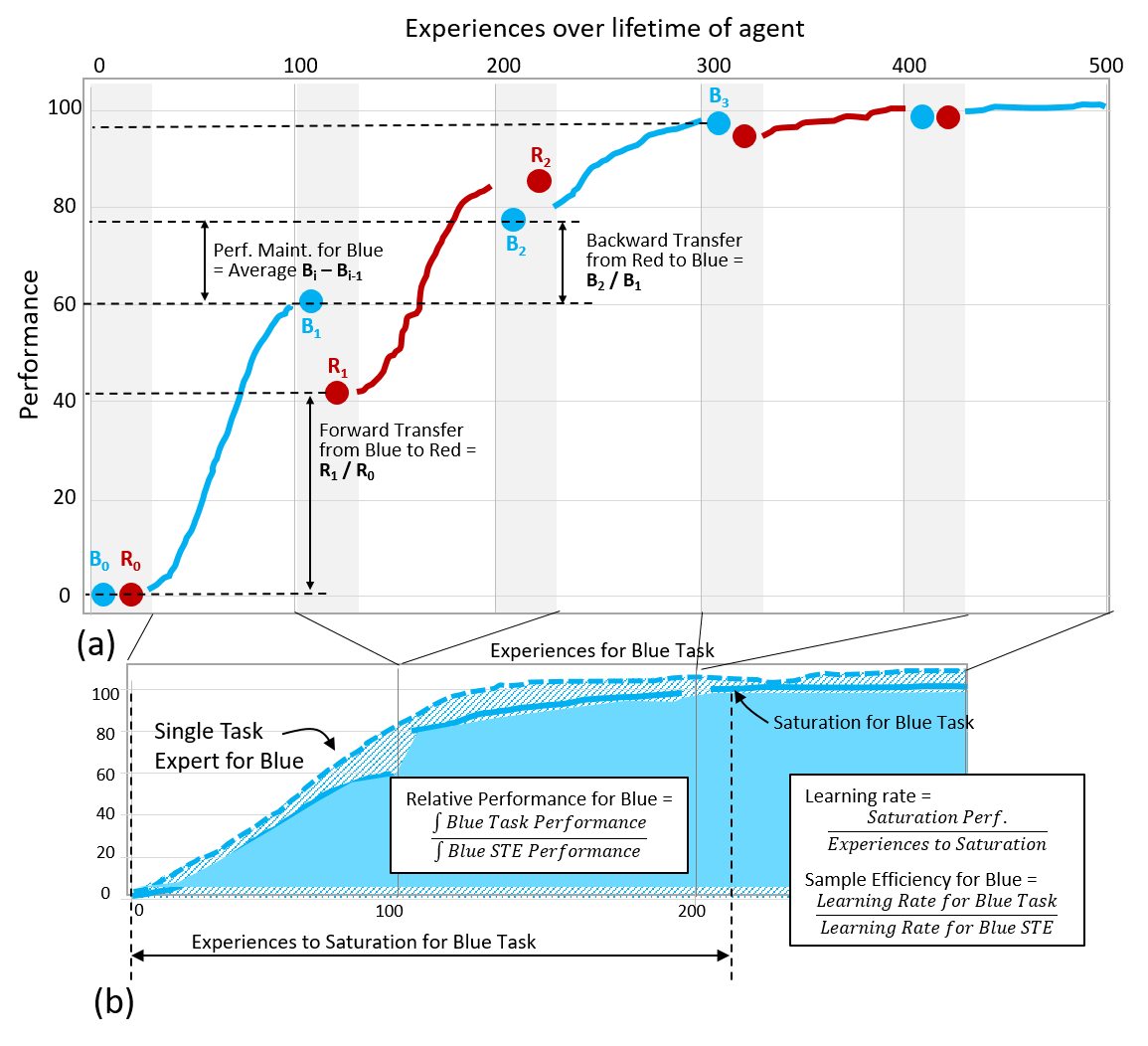

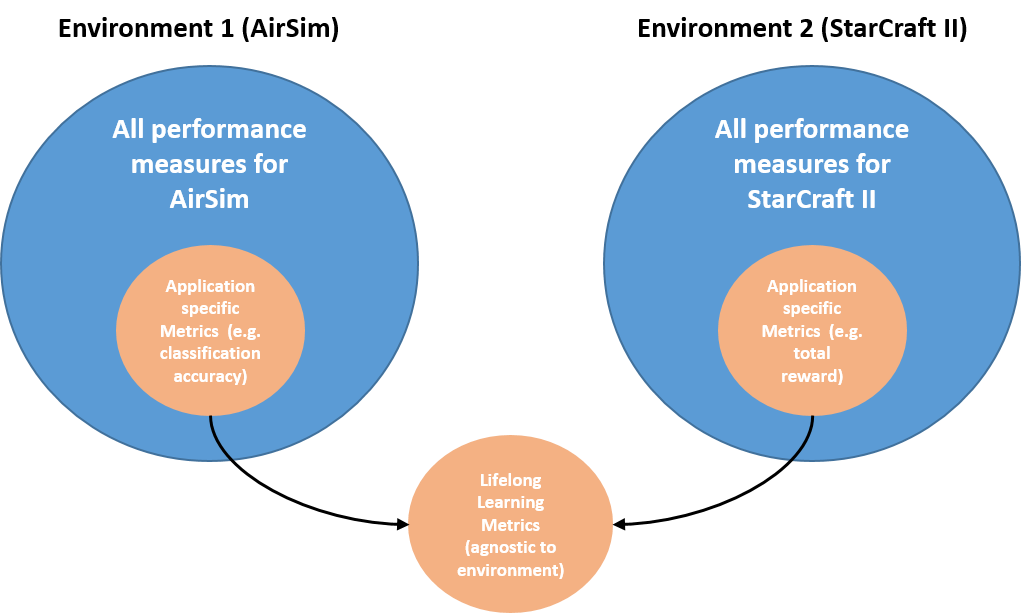

The Lifelong Learning Metrics are scenario, domain, environment and task-agnostic measures that characterize one or more Lifelong Learning (LL) capabilities across the lifetime of the system. This suite of LL Metrics, summarized in Table 2 and visualized in Figure 3, operates on application-specific performance measures (Section 4.1), making the evaluation methodology as separate as possible from the implementation details of a particular system.

| Metric Name | LL Condition | Assesses the LL system’s ability to: | ||||

|---|---|---|---|---|---|---|

|

|

Avoid catastrophic forgetting despite the introduction of new parameters or tasks | ||||

|

|

Use expertise in a known task to facilitate learning a new task | ||||

|

|

Use expertise in a new task to improve performance on a known task | ||||

|

Scalability | Match or exceed the performance of a single-task expert | ||||

|

Scalability | Make better use of learning experiences than an equivalent single-task expert |

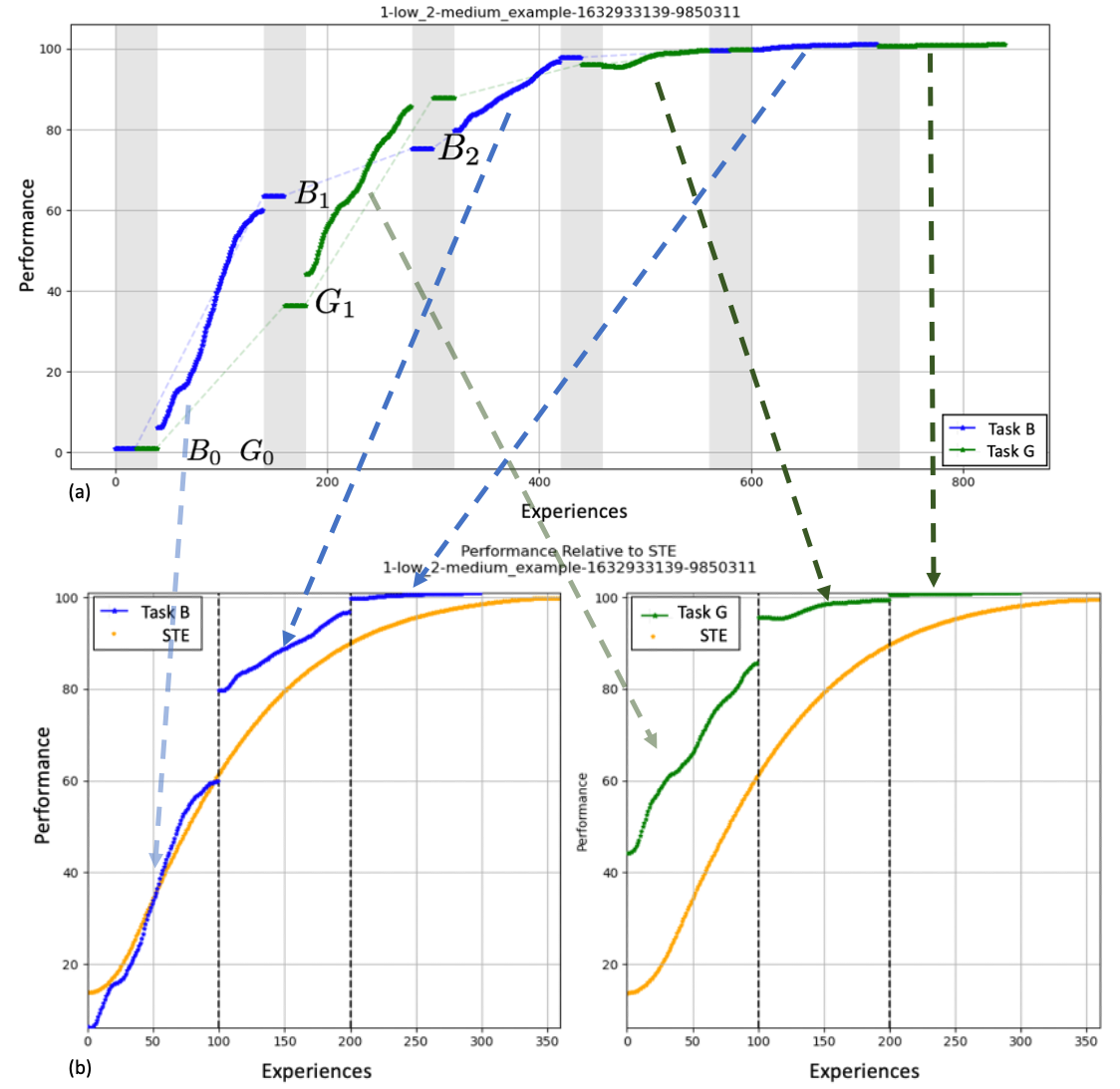

(a) White regions in the graph indicate Learning Blocks, and shaded regions indicate Evaluation Blocks. and refer to performance in the th Evaluation Block for the Blue and Red tasks, respectively. Horizontal dashed lines indicate relevant evaluation performance comparison points referred to in the example formulations of Performance Maintenance, Forward Transfer, and Backward Transfer Metrics.

(b) Single task expert (dashed blue) and LL system (solid blue) curves for the scenario shown in Fig. A. Vertical lines indicate the boundaries between each of the three Learning Blocks for the Blue Task stitched from above and overlaid with the Single task expert performance output of the same number of Learning Experiences. Experiences to Saturation and the Saturation Value for the Blue Task are also indicated on the figure to illustrate the example formulations of Sample Efficiency and Relative Performance Metrics.

The metrics are meant to work in a complementary manner in order to illustrate and characterize system capability. Thus, there is some overlap in the conditions they measure, as shown in Table 2, as well as in the means employed to do so. This approach ensures that no single metric value is responsible for fully quantifying an LL system’s performance and instead encourages deeper analysis into specific performance characteristics and the trade-offs between them.

The relationship between these metrics and the trade-offs illuminated by the case studies in Section 5 are explored further in Section 6. An in-depth discussion of the context of these metrics and their use can be found in New et al. (2022). Detailed formulations from New et al. (2022) are provided in C.2, and a publicly-available Python implementation of the metrics and a logging framework for systems that generate them are available online (Nguyen, 2022a, b).

4.1 Application-specific measures

As shown in Figure 4, an LL system performing tasks in its environment as specified by the Evaluation Protocol will generate some number of application-specific measures. Each learning experience (LX)– the minimum amount of experience with a task that enables some learning activity on the part of the system – is assumed to generate one or more scenario, domain, environment, and application-specific performance measures. A chosen subset of these application-specific measures is tracked and used to compute the LL Metrics. It is important to note that a task’s application-specific performance measures in a scenario will only be compared to the same task’s same application-specific performance measures. For example, consider an LL system that encounters two tasks and . Before encountering task , the system has a performance value for task of ; after encountering task , the system has a performance value for task of . Then, as defined in Section 4.3.2, we can assess how learning changes performance on with the backward transfer (BT) score:

There are no comparisons made between the performance values of and to compute these LL Metrics, so there is correspondingly no need to choose only one application-specific measure to assess an LL system’s performance across all tasks. In order to summarize the LL system’s performance for each Metric in a scenario, we used mean aggregation, but other options are possible.

In the following section we discuss each LL Condition, including the motivation for assessing it, the metrics associated with doing so, and the question that the metric attempts to address. At the end of each subsection, we provide LL threshold values for the metrics associated with that LL Condition.

4.2 Continuous Learning Metrics

A system demonstrating Continuous Learning will consolidate new information to improve performance while coping with irrelevance, noise, and distribution shift. The LL system needs to discover and adaptively select or ignore information that may be relevant or irrelevant. In particular, a Lifelong Learner must not be plagued by catastrophic forgetting, and performance must quickly recover when the agent is re-introduced to tasks whose performance may have degraded. While we have a metric to address whether a system has catastrophically forgotten task data, our attempt at formulating a metric to address whether a system recovers after a drop in performance was unsuccessful and is discussed more in Section 6.

4.2.1 Performance Maintenance (PM)

A Lifelong Learner should be capable of maintaining performance on each of its tasks. Performance Maintenance (PM) measures whether an LL system catastrophically forgets a previously learned task and compares a system’s performance when it first has the opportunity to learn a task to subsequent times experiencing the task. An important caveat here is that PM does not measure absolute performance levels; rather, it measures a change in performance over the course of the system’s lifetime. While there is some overlap between what PM and BT measure ( Section 4.3), BT compares a particular task’s evaluation blocks (EBs) immediately before and after learning a new task, whereas PM can be computed using any sequence of EBs, independent of how many other tasks were learned between.

4.2.2 LL threshold value for Performance Maintenance

The LL threshold value for PM is zero - this value indicates that, on average, there are no differences between initial and subsequent performances on a task. A positive value would indicate improvement over the course of a lifetime - a potential indicator of transfer. A negative value indicates forgetting. It is worth noting that this metric may be particularly sensitive to high variance in the application-specific measure ranges, since the metric computes a difference rather than use a ratio or a contrast.

| Case | Interpretation | ||

|---|---|---|---|

| PM 0 |

|

||

| PM = 0 | No forgetting; no additional learning. | ||

| PM 0 | (Does not demonstrate LL) Indicates forgetting. |

4.3 Transfer and Adaptation Metrics

One of the hallmark capabilities of a system capable of LL is the ability to leverage experience on one task toward improving performance on another. Without assuming knowledge of the details of how a system may accomplish this, we can measure progress toward this aim by computing both forward and backward transfer. At the very least, we expect that an LL system will not exhibit catastrophic forgetting, where learning a new task interferes with performance of a previously learned task.

For this particular suite of metrics, forward transfer (FT) was formulated as a jumpstart measure as introduced by Taylor and Stone (2007), where performance changes were assessed at the beginning of a learning block, measuring whether the system got a “jumpstart” on a future task. We used this formulation for FT for two primary reasons. First, the intention of these metrics was to be as domain-agnostic as possible, and addressing the nuance of how a learning curve changed could require a substantial amount of computational resources. Second, the preference was for a single value to express a system’s performance for each of the metrics, where possible. Transfer has been defined differently by others, but a jumpstart measure enables evaluation of the beginning of a system’s lifetime, which we felt was most appropriate given that we were assessing widely different systems. An important implication of this formulation to note is that for interpretability purposes, a forward transfer value is computed for only the first two tasks in a sequence.

4.3.1 Forward Transfer (FT)

FT involves a system utilizing experience from prior, seen tasks to improve on a future, unseen task. Importantly, since a primary aim in developing these metrics is their application without consideration of task specifics, we compute FT only in the first instance of each task pair as the ratio of the application-specific measure in an evaluation block before and after another task is learned. As formulated, this metric measures whether the LL system leverages data from a previously learned task to learn a new task, and it requires the presence of Evaluation Blocks before and after each new task’s first Learning Block in order to be computed. An important note about FT is that order of the tasks is important. FT may be present from Task A B, but not Task B A.

4.3.2 Backward Transfer (BT)

A system demonstrating BT will use expertise in a new task to improve performance on a known task. Unlike FT, which is only computed on the first instance of each task pair, BT can be computed for each task after every learning block (LB). This metric measures whether an LL system leverages data from a new task to improve performance on a previously learned task, and it requires EBs between each LB to measure the performance after new tasks are learned. BT is computed for each task where scenario structure allows.

4.3.3 LL Thresholds for Forward and Backward Transfer

Table 4 shows the LL threshold values for both FT and BT. A value of 1 would demonstrate no change in task performance, meaning neither forgetting nor transfer, whereas values above or below 1 would indicate transfer and interference, respectively.

| Case | Interpretation |

|---|---|

| BT / FT 1 | (Demonstrates LL) Indicates positive forward transfer. |

| BT / FT = 1 | No transfer or forgetting |

| BT / FT 1 | (Does not demonstrate LL) Indicates interference. |

4.4 Scalability Metrics

A fundamental capability for operationalized or deployable ML systems is the use of limited resources (e.g., memory, time) to accomplish or learn tasks in a scalable way. We expect an LL system to be able to sustain learning activity for arbitrarily long lifetimes including many tasks, though in practice,“arbitrarily long” and “many tasks” are relative to typical operational timescales of the application domain. While there are several ways to assess the use of limited resources, one domain-agnostic methods for doing so (used by Hayes et al. (2018b)) is to compare the performance of an LL system that is trying to learn many tasks to a single-task expert (STE) system that is learning just one task. The Sustainablity metrics assess essential components of LL because it is useful to see if an LL system is being outperformed by individual subsystems trained for each task. Scalability Metrics are an important component of system performance, in addition to being a proxy for task capacity.

4.4.1 Performance Relative to a Single Task Expert (RP)

An LL system with good performance relative to a Single Task Expert (RP) will perform well on each of its tasks when directly compared to the corresponding STE, often leveraging data from other tasks to do so. As formulated, RP measures how the performance of an LL system compares to a non LL system with comparable training. RP is related to the Transfer metrics in that a system that exhibits strong FT or BT should benefit from these effects. However, RP offers a more complete look at performance that combines all of the experience on a particular task and compares it to the performance of a STE with a similar amount of experience.

4.4.2 Sample Efficiency (SE)

Lifelong Learners are expected to sustain learning over long periods of time. The rate of performance gain of a system is a part of scalability; a system that learns quickly is efficient with the amount of experience it is exposed to. As formulated, sample efficiency (SE) describes the rate of task performance gain with additional experience. This metric measures the performance gain of the LL system by comparing the absolute level of performance (the “saturation value”) achieved by the LL system and the number of learning experiences required to get there with the corresponding STE values.

4.4.3 LL Thresholds for Relative Performance and Sample Efficiency

Determining threshold values for LL is more nuanced for the Scalability metrics. Ideally, we want the performance of an LL system to match or exceed that of an STE, as reflected in the determination of the LL thresholds in Table 5.

| Case | Interpretation |

|---|---|

| RP / SE 1 | (Demonstrates LL) Indicates Performance / Performance Gain above level of STE |

| RP / SE = 1 | Indicates Performance / Performance Gain exactly at level of STE |

| RP / SE 1 | (Does not demonstrate LL) Indicates Performance / Performance Gain below level of STE |

5 Case Studies with Lifelong Learning Systems

In this section, we examine five System Group case studies, all of which exercised the suite of LL Metrics. These metrics were computed on LL systems developed during the DARPA Lifelong Learning Machines (L2M) Program using various techniques and in different environments, as shown in Table 1. Over the course of L2M, we conducted multiple system evaluations, which are denoted by M12, M15, and M18. Each of the following subsections contains a brief overview of the corresponding LL system developed by each SG team, a description of the tasks used in each of the environments (summarized in Table 18), and a discussion of results and insights provided by the Metrics. For more details regarding the specific implementation of these systems and/or the results they generate, please see the referenced published work.

5.1 System Group UPenn - AIHabitat

5.1.1 System Overview

This section describes a case study on the development of the LL system led by SG-UPenn, a modular system that performs both classification and reinforcement learning (RL) tasks in realistic service robot settings. The core of the system, which integrates factorized models (deconvolutional factorized convolutional neural networks (DF-CNNs) for supervised learning (Lee et al., 2019) and lifelong policy gradients for faster training without forgetting (LPG-FTW) for RL (Mendez et al., 2020)), is divided into separate classification and RL pipelines, with the perception-action loop of a mobile robot. The system includes additional optional modules that can be combined with the core classification and RL pipelines, including a task-agnostic feature meta-learning module using meta Kronecker factorization optimization (Meta-KFO) (Arnold et al., 2021), intrinsic motivation via meta-learned intrinsic reward functions (Zheng et al., 2020), an alternative core RL algorithm based on the advantage actor critic (A2C) algorithm (Mnih et al., 2016), a self-supervised exploration module based on active visual mapping for robot navigation (Ramakrishnan et al., 2020), and a Markov decision process (MDP)-based curriculum learning module (Narvekar et al., 2020). These components can be turned on and off depending on the problem domain, and characterizing their effects through the set of LL Metrics proposed in this paper was a focus of the experimentation discussed in this case study. The task settings and select experimental results for the two pipelines are described below.

5.1.2 Classification Experimental Context

Classification. Lifelong classification experiments were carried out by SG-UPenn over data sets collected by simulated agents performing random walks through household environments in the AI Habitat simulator (Savva et al., 2019) using the Matterport 3D data set (Chang et al., 2017), resulting in realistic observations for household service robots derived from real world sensor data. All experiments were conducted over a fixed curriculum of object classification tasks, where each task required a mobile agent to classify a set of objects taken from an object superclass, e.g. classifying {chair, sofa, cushion, misc_seating} from the superclass seating_furniture.

5.1.3 Classification Experimental Results

| Configuration | PM | FT | BT | RP | SE |

|---|---|---|---|---|---|

| DF-CNN | |||||

| META-KFO |

This case study focuses on a specific classification experiment for which the proposed set of LL Metrics was particularly informative. The goal of this experiment was to determine the differences in performance between factorized classification models and meta-learned classification models in a lifelong supervised learning setting. To explore this, SG-UPenn ran the same set of Lifelong classification experiments over two configurations of the system: the (factorized) DF-CNN core classification pipeline and the (meta-learned) META-KFO module. The results (Table 6) show that, while both approaches show good LL performance, META-KFO provides faster learning (higher SE) whereas the DF-CNN provides more stable learning through better catastrophic forgetting mitigation (higher PM and BT, with lower standard deviations). As such, SG-UPenn prioritized future development of the DF-CNN pipeline due to the stability afforded by the factorized method.

5.1.4 Reinforcement Learning Experimental Context

Reinforcement Learning. Lifelong RL experiments were carried out in the AI Habitat simulator using the Matterport 3D data set. All experiments were conducted over a fixed curriculum of object search tasks in the form of “find a given object (e.g. a chair, a cabinet, a sink, or a plant) in a given household environment (e.g. an apartment or a town house).” The agents observed RGB images from a head-mounted camera, and their actions were direct control commands.

5.1.5 Reinforcement Learning Experimental Results

| Configuration | PM | FT | BT | RP | SE |

|---|---|---|---|---|---|

| M12 | |||||

| M15 | |||||

| M18 | 0.03 |

The first RL experiment (M12) hypothesized that intrinsic motivation would improve FT, RP, and SE in LL settings, making it an effective mechanism for knowledge reuse in lifelong RL. To test this hypothesis, SG-UPenn used the intrinsic motivation module combined with the core A2C RL algorithm. The results did not support this hypothesis, instead showing that intrinsic motivation is not an effective mechanism for lifelong learning, as shown in the M12 column of Table 7. The main issue identified was that the system was highly susceptible to catastrophic forgetting, as evidenced by the particularly low PM score. To overcome this problem, SG-UPenn focused system development on factorized methods instead, which are specifically designed to mitigate catastrophic forgetting.

The next set of RL experiments (M15) focused on evaluating the effectiveness of the factorized LPG-FTW algorithm in the realistic Habitat/Matterport environment. This system configuration used the core LPG-FTW algorithm with no additional modules. The results show significant improvement compared to the intrinsic motivation pipeline across all of the Lifelong Learning Metrics, with the exception of comparable RP. SG-UPenn notes that while the PM score was still negative, it is significantly higher than the intrinsic motivation pipeline, which shows increased mitigation of catastrophic forgetting. SG-UPenn continued to develop the LPG-FTW-based system with additional network architecture search and hyperparameter tuning that targeted the PM metric. Shown in the M18 column of Table 7, this resulted in significant improvements to both PM and FT. Contrary to the experimental results in the original LPG-FTW paper (Mendez et al., 2020), there is still relatively low performance with respect to single task experts (i.e. in the RP and SE metrics). SG-UPenn hypothesizes that this performance drop is due to the increased challenge of learning in high fidelity environments, and the higher task complexity that such environments entail.

5.2 System Group Teledyne - AirSim

5.2.1 System Overview

This section describes a case study on the development of the LL system led by SG-Teledyne. It consists of six key components, the core of which is the Uncertainty-Modulated Learning (UML) (Brna et al., 2019) algorithm. UML enables adaptation and learning in response to multiple types of uncertainty. Inspired by mechanisms of neuromodulation, UML compares its internal hypotheses against expectations and adapts its behavior based on the level of mismatch. Under high uncertainty, it re-configures itself and re-evaluates its inputs, allowing robust operation in noisy environments or in the presence of new conditions. Under low uncertainty, the algorithm can more confidently engage in long-term adaptation to learn new tasks or tune its knowledge base. Because uncertainty serves to gate learning and the type of adaptation in the system, it can prevent catastrophic forgetting and promote behaviorally-relevant adaptation. Furthermore, under very high uncertainty conditions, UML protects existing knowledge to allow one-shot learning of novel information. Finally, the algorithm can use its internal measures of uncertainty to actively seek new information to optimize learning and resource utilization (Brown et al., 2022). A limitation of UML is that it requires a robust representation of its inputs. Nonetheless, it has proven to work well when using the output layer of deep neural networks trained on datasets such as ImageNet (Deng et al., 2009) or COCO (Lin et al., 2014). Another limitation is that it learns to recognize tasks by the difference in the context of each task. Therefore, there is a requirement that each task possesses a sufficiently different context.

5.2.2 Experimental Context

The UML algorithm has been evaluated in multiple machine learning (ML) domains, including classification (Basu et al., 2017)), embodied agents (Brna et al., 2019), (Brown et al., 2022), and reinforcement learning. Under DARPA L2M, UML was evaluated using an embodied agent. Data was generated using AirSim (Shah et al., 2018) in a custom Unreal Engine 4 environment. The classification tasks were split into two “Asset Groups” loosely corresponding to notional municipal interest groups: EMA (Emergency Management) vehicles and DOT (Department of Transportation) traffic control assets (e.g., stop signs, traffic lights, etc.). Each asset group contained 2-3 individual classes of objects. The classification problems associated with each asset group formed tasks, and variants of those tasks were generated using different environmental conditions (e.g., time of day).

Experiments were conducted on permutations in ordering of these task variants, with a full evaluation across tasks being conducted after each exposure to a task.

5.2.3 Experimental Results

Table 8 shows aggregate results across all such runs generated using the SG-Teledyne system, which matched or exceeded the LL threshold value in all 5 metrics across the collected runs. These metrics enabled us to evaluate the performance of individual components in the system and their impact on LL capabilities. In an ablation experiment, Teledyne (TDY) showed that the memory consolidation technique in one of the system components (C5) was responsible for a significant gain in FT, but at the expense of PM, while other metrics remained relatively constant. These metrics enabled a deeper analysis and more complete understanding of the the impact of this component as it relates to the LL characteristics.

| Configuration | PM | FT | BT | RP | SE |

|---|---|---|---|---|---|

| TDY UML Agent | |||||

| TDY C5 Ablation |

5.3 System Group HRL - CARLA

5.3.1 System Overview

This section describes a case study on the Super Turing Evolving Lifelong Learning ARchitecture (STELLAR), the LL system developed by SG-HRL. STELLAR is a general-purpose, scalable autonomous system capable of continual online RL that is applicable to a wide range of autonomous system applications, including autonomous ground vehicles (both on-road and off-road), autonomous undersea vehicles, and autonomous aircraft, among others. It consists of a deep convolutional encoder that feeds into an actor-critic network and is trained using Proximal Policy Optimization (Schulman et al., 2017). Importantly, STELLAR integrated 11 innovative components that solve different challenges and requirements for LL. It employed Sliced Cramer Preservation (SCP) (Kolouri et al., 2020), or the sketched version of it (SCP++) (Li et al., 2021), and Complex Synapse Optimizer (Benna and Fusi, 2016) to overcome catastrophic forgetting of old tasks; Self-Preserving World Model (Ketz et al., 2019) and Context-Skill Model (Tutum et al., 2021) for backward transfer to old tasks as well as forward transfer to their variants; Neuromodulated Attention (Zou et al., 2020) for rapid performance recovery when an old task repeats; Modulated Hebbian Network (Ladosz et al., 2022) and Plastic Neuromodulated Network (Ben-Iwhiwhu et al., 2021) for rapid adaptation to new tasks; Reflexive Adaptation (Maguire et al., 2021) and Meta-Learned Instinct Network (Grbic and Risi, 2021) to safely adapt to new tasks; and Probabilistic Program Neurogenesis (Martin and Pilly, 2019) to scale up the learning of new tasks during fielded operation. More details on the precise effect of each of these components are beyond the scope of this paper; however, this case study outlines how the integrated system dynamics demonstrated LL using the proposed metrics, and how these metrics shaped the advancement of the SG-HRL system.

5.3.2 Experimental Context

STELLAR was evaluated within the CARLA driving simulator (Dosovitskiy et al., 2017) in both the Condensed and Dispersed LL Scenarios (described in Section 3.2), which were each based on three tasks with two variants per task. The agent was required to drive safely from one point to another within a designated lane (either correct or opposite) in traffic. It was given positive rewards in each time step (every 50 ms) for distance traveled towards the destination and increasing speed within the designated lane. It was given negative rewards for distance traveled away from the destination and decreasing speed within the designated lane, as well as any collision. A given episode was terminated when the destination was reached, a maximum number of time steps had elapsed, or there was any collision. SG-HRL employed two vehicle models (Audi TT [car], Kawasaki Ninja [motorcycle]) with built-in differences in physical parameters such as for the body (e.g., mass, drag coefficient) and wheels (e.g., friction, damping rate, maximum steering angle, radius). The vehicle models also differed in camera orientation (0∘ yaw for car vs. 45∘ yaw for motorcycle).

The same architecture as the STELLAR systems was used to train the STEs to saturation, thereby characterizing the ability of the STEs to learn each task. SG-HRL collected 10 STE runs per task, which were all initialized with the same “ready-to-deploy” state as the STELLAR system.

5.3.3 Experimental Results

Given that the STELLAR system integrates the 11 components listed above with the specific intent to achieve various LL capabilities, SG-HRL expected the metrics to reveal such properties of the system. Indeed in both Condensed and Dispersed scenarios, the STELLAR system exceeded the threshold for LL for 4 of the 5 metrics, with only a non-catastrophic degradation in PM of old tasks through the lifetimes (Table 9).

| Configuration | PM | FT | BT | RP | SE |

|---|---|---|---|---|---|

| Condensed (n=33) | |||||

| Dispersed (n=30) |

Further, as shown in Table 9, SG-HRL found that the performance was not significantly different between the Condensed and Dispersed scenarios. However, all the LL Metrics were numerically lower for the Dispersed scenario, with the decrements being significant at for two metrics; namely, FT ( = 0.089, Mann-Whitney U Test) and RP ( = 0.038, Mann-Whitney U Test). Potential explanations for the across-the-board numerical decrements in the metrics include: the increased cost of switching among tasks in the Dispersed scenario, greater interference from other tasks in the intervals between learning blocks for a given task, or the lack of any dependence of the strength of the consolidation mechanisms (SCP++, Self-Preserving World Model) on the performance levels acquired in the preceding learning blocks. In the Dispersed scenario, task performances in earlier learning blocks are not expected to be high due to shorter durations. In this case, strong preservation of sub-optimal task representations would interfere with subsequent learning blocks. Thus, the hyperparameters that control the degree of preservation should be reduced to improve all the LL Metrics.

| Configuration | PM | FT | BT | RP | SE |

|---|---|---|---|---|---|

| Dispersed | |||||

| Reduced SCP++ stiffness |

The STELLAR system requires considerable analysis to assess how each component contributes to various LL capabilities. This case study represents one such analysis to illustrate the impact on the metrics. SG-HRL hypothesized that stronger consolidation mechanisms would reduce LL in the Dispersed scenario which, unlike the Condensed scenario, has task repetitions. SG-HRL also predicted that strong consolidation of sub-optimal task representations after each task would negatively impact subsequent learning blocks. Data was collected for the Dispersed scenario with the SCP++ stiffness coefficient reduced to 10% of the nominal value (Table 10). As expected, SCP++ stiffness reduction resulted in improvements in 3 of the 5 metrics; namely, PM (from -2.73 to 0.26), BT by about 10%, and RP by about 30%. But the manipulation also caused decrements in the other 2 metrics; namely, FT by about 4% and SE by about 50%. Of these effects, the improvement in RP ( = 0.022, Wilcoxon Signed Rank Test) and the decrement in SE (=0.0026, Wilcoxon Signed Rank Test) were statistically significant, and the improvement in PM (=0.055, Wilcoxon Signed Rank Test) was significant at . More work will be needed to understand the dynamics of LL for task repetitions in the context of the multi-component STELLAR system. It may be the case that the degree of consolidation (structural regularization, interleaving of explicit/generative replays) should be further contingent on task learning, and SG-HRL anticipates testing this in the future.

5.4 System Group Argonne - L2Explorer

5.4.1 System Overview

This section describes a case study on the development of the LL system led by SG-Argonne. The system’s design was inspired by the brains of insects and other small animals with the motivation of developing systems capable of LL that can operate effectively at the edge (Yanguas-Gil et al., 2019).

In particular, it focuses on the use of: 1) modulatory learning and processing, which control how information is processed, as well as when and where learning takes place (Daram et al., 2020); 2) metaplasticity models, which modulate synaptic plasticity rules that keep either a memory or an internal state in order to preserve useful information (van de Ven and Tolias, 2019b); 3) broadly trained representations, which apply transfer learning to minimize what the system needs to learn during deployment, and 4) structural sparsity, which minimizes the impact of forgetting by curtailing gradient propagation in stochastic gradient descent methods (Madireddy et al., 2020).

In the context of RL, Argonne adapted these principles to propose two types of algorithms. First, they proposed a lifelong deep Q learning algorithm (Mnih et al., 2013) aimed at solving problems where a consistent policy is learned across a series of independent tasks without specific task labels. Second, they proposed a lifelong cross entropy algorithm, which applies to situations involving short, potentially contradictory tasks, where no prior information is available that would lead to accurate and consistent computations of the value of each state. For the case of deep Q learning, Argonne’s system realizes short term and long term memory buffers by implementing periodic shuffling. The size of the buffers is kept within the length of a single task.

5.4.2 Experimental Context

Over the course of the project, SG-Argonne worked in two different environments. The first and more complex environment was L2Explorer (Johnson et al., 2022), a first-person point of view environment built on top of the Unity engine (Juliani et al., 2018) that allows the creation of tasks involving open-world exploration. Argonne designed a series of tasks emphasizing different aspects of a complex policy involving target identification and selection, navigation through obstacles, navigation towards landmarks, and foraging objects while avoiding hazards. The same tasks were implemented in Roundworld, a lightweight, first-person point of view environment developed by Argonne that comprises a simpler set of objects and visual inputs, allowing us to evaluate the algorithm across two different environments.

5.4.3 Experimental Results

Table 11 shows the performance of the deep Q learning algorithms in the two different environments. In both cases there is a consistent evidence of both forward and backward transfer across tasks in the proposed scenario. One of the characteristic aspects of these environments is their task variability, both by design and driven by the open world nature of the environments. In the context of RL, this leads to large fluctuations in the values of PM and BT for both environments, with standard deviations more than one order of magnitude higher than those typically observed in image classification scenarios. On the other hand, both scenarios show values of FT, RP, and SE that are consistent with the presence of LL behaviors.

| Environment | Scenario | Agent | PM | FT | BT | RP | SE |

|---|---|---|---|---|---|---|---|

| L2Explorer | Condensed | M18 | |||||

| Roundworld | Condensed | M18 |

Having access to different metrics allows for deeper insight into variations in the system’s performance. Overall, the results obtained point to a complex picture in which the same Lifelong Learning system can exhibit different behavior depending on how well it can transfer information during its lifetime. However, further studies are needed in order to fully explore how the behavior of the agent depends on task sequence and its ability to effectively transfer relevant policies across tasks.

5.5 System Group SRI - StarCraft II

5.5.1 System Overview

This section describes a case study on the development of the LL system led by SG-SRI. The system is targeted at real-time strategy games where task change occurs naturally and throughout game play. For example, a competent Starcraft-2 (SC2) player is able to adapt their tactics to different enemy units. This section applies lifelong RL techniques to micromanagement tasks in SC2. This case study shows that the proposed metrics (a) validate that the negative effects of task drift are mitigated, (b) drive algorithm development to improve metrics, and (c) provide insights into software integration of multiple continual learners.

Components of the SG-SRI system (Sur et al., 2022; Daniels et al., 2022) include: (i) WATCH (Faber et al., 2021, 2022), a Wasserstein-based statistical changepoint detection that detects changes in the environment; (ii) Self-Taught Associative Memory (STAM) (Smith et al., 2021), to generate feature maps from RGB images in a continually updated manner; (iii) Danger detection, using the continual learner deep streaming linear discriminant analysis (DeepSLDA) (Hayes and Kanan, 2020); (iv) Compression, using the REMIND algorithm (Hayes et al., 2020) that uses Product Quantization (PQ); and (v) Sleep phase, implemented using the Eigentask framework (Raghavan et al., 2020).

5.5.2 Experimental Context

The tasks are defined using different SC2 maps called “minigames“ (Vinyals et al., 2017). The system is evaluated on the minigames of DefeatRoaches, DefeatZerglingsAndBanelings and CollectMineralShards. To each task, SG-SRI added a variant of the task that spawns two groups of enemies on each side of the map, creating a total of 3 tasks and 2 variants each. In the case of Collect, the variant has fog enabled (partial observability). SG-SRI notes that combat related tasks (Defeat) are most similar to each other (due to their reward structure) and represent 4 out of 6 tasks, so high forward transfer (jumpstart) is expected even for the single task learner.

5.5.3 Experimental Results

Table 12 shows evolution of the Eigentask algorithm driven by the proposed LL Metrics, with the current version of the system (denoted M18) achieving the criteria of lifelong learning in all but one metric (PM) in the condensed scenario and achieving the criteria of LL in several metrics for the alternating scenario. These versions, denoted as M12, M15, M18, correspond to the evaluations performed under L2M. These versions primarily differ in the generative replay architecture. The M12 model connects the autoencoders and policies one after another, whereas M15 uses a two-headed architecture using a common latent space and M18 uses hidden replay. In both scenarios, the metrics show that the M18 version that uses hidden replay is a significant improvement. Of note, the reported metrics have significantly lower variance with the M18 model compared to the M15 and M12 versions for the condensed scenario.

| Scenario | Agent | PM | FT | BT | RP | SE |

|---|---|---|---|---|---|---|

| M12 | ||||||

| Condensed | M15 | |||||

| M18 | ||||||

| M12 | ||||||

| Alternating | M15 | |||||

| M18 |

To study the effect that change detection and compression had on the overall performance of the LL system, SG-SRI performed an ablation experiment against the baseline Eigentask component in two different scenario types. PM and BT values are compared in Table 13, showing that triggering the sleep phase by statistical changepoint detection results in significantly higher PM compared to triggering it by a hand-coded schedule. This demonstrates the importance of task detection in LL systems in the task-agnostic setting and also shows that the compression of wake phase observations results in significantly higher PM. This ablation experiment demonstrates how the metrics shed insight on the impact of various system components during the development of the SG-SRI LL system.

| Agent | Performance Maintenance | Backward Transfer | ||

|---|---|---|---|---|

| Condensed | Pairwise | Condensed | Pairwise | |

| Single Task Learner (STL) | () | () | () | () |

| Eigentask (M15) | () | () | () | () |

| Eigentask + Change detection | () | () | () | () |

| Eigentask + Compression | () | () | () | () |

5.6 Summary of Case Studies of Systems Demonstrating LL

In this section we have reviewed five System Group case studies, all of which operated in different environments and employed different algorithms. Each of them used the suite of LL Metrics to inform their system development and evaluate whether their systems demonstrated the Conditions of Lifelong Learning in various experiments. In Table 14 we see that across all of the System Groups, the Lifelong Learning thresholds were met or exceeded for 52 out of 90 metrics, with Performance Maintenance only meeting the LL Threshold values in 3 of the 18 configurations compared to 13 configurations for Forward Transfer. This is unsurprising given that Performance Maintenance and Forward Transfer represent different aspects of the well-known performance trade-off between stability and plasticity, which we discuss further in Section 6.

| SG | Config | PM | FT | BT | RP | SE |

| UPenn | DF-CNN | < | < | |||

| META-KFO | < | < | < | < | ||

| RL M12 | ||||||

| RL M15 | < | |||||

| RL M18 | < | < | ||||

| Teledyne | C5 Ablated | < | ||||

| UML | < | |||||

| HRL | Condensed | < | < | |||

| Dispersed | < | < | < | |||

| SCP Ablation | < | < | ||||

| Argonne | L2Explorer | < | ||||

| Roundworld | < | |||||

| SRI | M12 Condensed | |||||

| M15 Condensed | < | < | ||||

| M18 Condensed | < | < | ||||

| M12 Alternating | ||||||

| M15 Alternating | < | |||||

| M18 Alternating |

6 Discussion

In this work, we have proposed and investigated a suite of domain- and technique- agnostic metrics to enable a systems-level development approach for evaluating Lifelong Learning systems. Such an approach is critical to supporting the multi-objective nature of Lifelong Learning (LL) system development, especially because increasingly complex solutions are required to advance the state of the art towards LL. A strength of our approach is that it simultaneously considers and quantifies varied capabilities of LL systems, rather than focusing on any single aspect of performance. By using the full suite of metrics to evaluate the System Group case studies, we were able to identify and study the performance trade-offs inherent to LL. Next, we discuss known performance trade-offs seen with these metrics, propose a new trade-off, and make recommendations for creating additional metrics for future investigations based on the accomplishments of the DARPA Lifelong Learning Machines (L2M) program.

6.1 LL Performance Trade-offs

We have argued that LL is complex and cannot be characterized by a single scalar value. This has motivated our development of a suite of metrics.

Designing an LL system must consider the following trade-offs:

-

1.

Stability vs. Plasticity: Should a system stably maintain all information it has encountered up to some point, even if that results in less flexibility to adapt to changes?

-

2.

Optimal Performance vs. Computational Cost: Should a system be optimized for maximum performance, even if that comes at a high computational cost?

-

3.

Sample Efficient vs. Robust Learning: Should a system prioritize a fast performance gain, even if it is less robust to noise or changes in the environment?

The most widely discussed trade-off in LL literature is the relationship between Stability, where a system has reliable or low-variance performance, and Plasticity, where a system is flexible and adaptable to changes (see, e.g., discussion in Mermillod et al. (2013); Grossberg (1988)). Performance Maintenance (PM) is a measure of stability, since it assesses how well a system retains task knowledge gained over the course of its lifetime; forward transfer (FT) is a measure of plasticity, as it assesses how well a system can apply knowledge from one task to another. In some cases, like the stiffness parameter experiment examined in SG-HRL’s case study (see Table 10), there is an explicit parameter that can be tuned, depending on the needs of the particular application, to prioritize reliability or flexibility. This results in somewhat expected behavior changes. In other cases, the trade-off is seen as a byproduct of targeting improvements in transfer, like in SG-Teledyne’s addition of a memory consolidation component (see Table 8), which manages the system’s stored knowledge. This addition caused marked improvement in FT– a measure of Plasticity – but at the cost of PM, a measure of Stability.

It is understood that LL systems operating in diverse environments will have varied design considerations; the availability or restriction of computational resources is one such factor. This can result in an intentional decision to choose system components that are less performant but cheaper computationally. While this discussion surfaces in the literature, particularly with regard to deployment considerations, we chose not to measure the computational resource expenditure for these evaluations. Instead, we allowed system groups to make their own assessments of progress in their domain. Even if an LL system is initially very computationally intensive, it may be possible to develop a more efficient system in the future. In non-LL, existing techniques for managing model complexity include: model distillation (Hinton et al., 2015; Gou et al., 2021), intelligently-designed model scaling strategies (Tan and Le, 2019), and investigations of broad scaling phenomena (Kaplan et al., 2020). These approaches could potentially be extended to LL; in Hayes et al. (2020), SG-SRI built on a technique called progress & compress (Schwarz et al., 2018). We see the addition of a metric to standardize the measurement of resource utilization as an excellent extension of this suite, and we summarize some initial efforts in this area in G. We collect our comments, observations and recommendations for the design and use of such a metric in Section 6.2.

We hypothesize that, as more progress is made to develop LL systems, more of these system design/performance trade-offs will be discovered. One trade-off that we observed in the SG-UPenn case study (Section 5.1) was between sample-efficient and robust learning. The system’s robustness to task or parameter changes was measured using the PM metric, and efficiency was measured via the sample efficiency (SE) metric. We can imagine a situation where a system may have an extremely robust representation of a wide range of tasks – along the lines of a subject matter expert for a particular problem space – but perhaps amassing that knowledge required significant training data and time. Conversely, a system may demonstrate aptitude for rapid mastery, but lack the broader experience to capably handle the details or nuance of edge cases.

The trade-off, then, may be that in some circumstances, optimizing for robustness comes at the cost of learning efficiency and vice versa. This goal is particularly relevant in data-poor contexts or where the cost of training is high; both of these apply in many robotic applications (like the SG-UPenn service robot setting). The LL system the SG-UPenn team built to address these challenges includes modularized components and factorized models, an approach that is well-suited to these conditions. Correspondingly, we see that when modifications were made between M15 and M18 systems to target gains in PM (Table 7), the resulting M18 results improved in PM, but at the cost of a lower Sample Efficiency. This demonstrates a consequence of the opposing aims of Robust and Sample Efficient learning. We imagine that this trade-off may not be applicable to problems with low-cost or abundant training data. However, it is apparent in this particular example, because SG-UPenn’s system design is intended for eventual transfer to service robot settings.

6.2 General Considerations for Formulation and Use of Metrics

One of the challenges of measuring LL performance is evaluating over the space of possible task sequences. Because these tasks may require orthogonal skills, it is an immense challenge to quantify a priori what ideal or even ‘good’ performance looks like for such a sequence. The standard we chose for determining thresholds for LL, which can certainly over-penalize an LL system, was perfection – perfect transfer between tasks and perfect memory of a task over the entire duration of a scenario. Over the course of the agent’s lifetime, any interference, forgetting, or performance not equal to or better than an STE was considered to be below the threshold for LL. Meeting this threshold for all lifelong learning conditions is likely to be difficult in real-world conditions. Determining an appropriate upper bound for performance on a sequence of tasks is a fundamental challenge – one that requires leveraging information like task difficulty and task similarity (and thus task transferability) – and was out of scope for this work. Below we outline some specific recommendations for metric design, some of which pose particularly unique challenges in the LL domain.

-

1.

Do not design metrics that rely on idealized performance curves

Despite knowing that we lack the ability to quantify what good performance is for a given sequence of tasks, there were some unanticipated difficulties in using the metrics related to some key assumptions about the nature and behavior of LL systems:-

(a)

Assumption 1: Learning a particular sequence of tasks is possible.

When we develop metrics to evaluate any machine learning system, we are often doing so based on an implicit assumption that a task is learnable by the system or, at least, that the system is capable of demonstrating some performance gain overthe course of its learning experiences (LXs). In the absence of baseline approaches on the same sequence of tasks to compare to, we may not even be able to say whether a sequence of tasks is learnable at all without running a cost-prohibitive number of experiments. In fact, the idea of learnability in the Lifelong Learning context has only recently been investigated in works such as Geisa et al. (2021), who explores the relationship between weak and strong learnability for both in-distribution (i.e. non-LL) and out of distribution problems. As the theory of learnability for Lifelong Learning is still developing, we must design our metrics acknowledging the potential for systems to demonstrate no learning on some tasks and, importantly, address whether or not those runs should be considered in computing the LL metrics. The results shown in Section 5 included all runs, independent of whether tasks demonstrated learning.

-

(b)

Assumption 2: In learning a sequence of tasks, performance on a previously learned task may drop, but it can and will “bounce back” when the task is shown later.

This assumption drove the design of the Performance Recovery metric, which in theory was designed to measure whether an LL system’s performance recovers after a change is introduced to its environment. To compute Performance Recovery, we first calculated the number of learning experiences the system required in order to get back to the previously attained value after a drop (recovery time), and computed the change in this number of experiences over the course of the system’s lifetime (i.e., fitted a line to the recovery times and computed the slope of the line). The idea was that a system demonstrating LL would adapt more quickly to changes as it amassed more experience.Of note, Performance Recovery could only be assessed for scenarios with many task repetitions. The use of this metric proved to be problematic, in particular because some systems would fail to “bounce back” sufficiently. This dependency of final system performance on initial LXs has been observed in the broader deep reinforcement learning (RL) space (Nikishin et al., 2022), where it was aligned with the concept of “primacy bias” from human cognition studies (Marshall and Werder, 1972). Beyond this binary challenge of a system returning to previous performance or not; given the variability in the application-specific measures, it also remained difficult to discern when performance has actually “bounced back” and to what should the new performance be compared, and how should we handle noise in these measurements? Dror et al. (2019) recommends the use of the Almost Stochastic Dominance test to mitigate the variability issue we faced, but we were unable to implement this due to the computational expense associated with this analysis.

-

(c)

Assumption 3: We can identify when a task has been “learned,” or at least, when the system performance has saturated.

Computing whether a system’s performance has saturated (and to what value) is not straightforward, in part due to the heteroskedastic nature of the learning curves. There is unpredictability to system learning, and coupling this with noisy learning makes this computation even more of a challenge. In addition, the notion of “saturation” may be ill-defined, particularly when the distribution of an environment within a learning block is nonstationary. In the case of this suite of metrics, Sample Efficiency explicitly relies on the computation of a saturation value, and Performance Maintenance compares an average of the most recent training performance to future evaluation performances – with the implicit assumption that a system has reached a stable, if not maximal, performance value at the end of a learning block.

In light of these challenges, we recommend designing an assessment – even a simple performance threshold specific to an environment – to determine whether a system has learned and to lend insight into computed metric values.

-

(a)

-

2.

Do not avoid metrics that measure overlapping concepts.