A Dynamical Approach to Operational Risk Measurement

Abstract

We propose a dynamical model for the estimation of Operational Risk in banking institutions. Operational Risk is the risk that a financial loss occurs as the result of failed processes. Examples of operational losses are the ones generated by internal frauds, human errors or failed transactions. In order to encompass the most heterogeneous set of processes, in our approach the losses of each process are generated by the interplay among random noise, interactions with other processes and the efforts the bank makes to avoid losses. We show how some relevant parameters of the model can be estimated from a database of historical operational losses, validate the estimation procedure and test the forecasting power of the model. Some advantages of our approach over the traditional statistical techniques are that it allows to follow the whole time evolution of the losses and to take into account different-time correlations among the processes.

1 Introduction

Operational Risk (OR) is defined as “the risk of [financial] loss resulting from inadequate or failed internal processes, people and systems or from external events”, including legal risk, but excluding strategic and reputation linked risks. The previous definition is taken from The New Basel Capital Accord or Basel II (Basel Committee, 2005), which can be viewed as the turning point for the OR management within banking institutions. In fact Basel II considers OR to be a critical risk factor. The accord requires banks to cope with OR by setting a side a certain capital charge, and it proposes three methods for calculating it.

The first method is the Basic Indicator Approach (BIA) that sets that capital to the of the bank’s gross income. The second is the STandardized Approach (STA), which is a simple generalization of the BIA: the percentage of the gross income is different for each business line and goes from to . The BIA and the STA share the fundamental drawback that, because of their inherent assumption that the capital charge is only a linear function of the bank’s gross income (per business line), they seem not to be solidly founded. Moreover, they give no additional insight into the dynamics governing the production of operational losses and thus they do not indicate any strategies for lowering them: in other words they do not offer a path toward OR management (Mc Neil et al., 2005; Cruz, 2002).

The third method is the Advanced Measurement Approach (AMA) which allows each bank to develop a model of its own that has to satisfy certain requirements and has to be approved by the national regulatory institutions. In particular, all the AMAs are required to use a historical database of internal and external losses. However, because the interest in OR is recent, and because only small, and sometimes unreliable databases of operational losses exist, the assessments of domain experts must also be considered. In addition, every AMA has to respect the classification of losses in eight business lines (like the STAs) and by seven different event types. Usually all the AMAs identify the capital charge with the Value-at-Risk (VaR) over the time horizon of one year and with a confidence level of , defined as the maximum potential loss not to be exceeded in one year with a confidence level of , i.e. the 99.9 percentile of the loss distribution. The VaR has a straightforward interpretation: the probability of registering a loss that is higher than the VaR in one year is equal to 0.001 and thus such a loss is registered on average every 1000 years.

Probably the most widely used AMA is the Loss Distribution Approach (LDA), which calculates the loss distribution separately, modeling the distribution of the number of losses over a certain time horizon (frequency) and the distribution of the impact of a single loss (severity) for each couple (business line, event type). The LDA relies on two assumptions: that frequency and severity for each couple are independent and that the frequency and severity of a couple are independent of the frequency and severity of all the other couples. From this point of view, the main defect of the LDA is that it completely neglects the correlations that may exist among the different couples. Advanced statistical tools aiming at considering the correlations have been proposed (Gourier et al., 2009; Neil et al., 2005; Cowell et al., 2007; Cornalba and Giudici, 2004), but there is still no general consensus regarding their effectiveness.

We believe that there are several advantages in the use of a dynamical model rather than a purely statistical approach such as the LDA. The first one is methodological: in fact, in the context of a purely statistical approach, one has to make assumptions about the shape of a distribution. For the LDA, in particular, one has to specify the frequency and severity distributions before their parameters can be fitted. Also, in the case in which the correlations are modeled through copulae, the functional form of the copula is usually specified a priori. On the other hand, a dynamical model only makes assumptions about the mechanisms underlying the generation of losses and from those assumptions is able to derive the loss distributions. This also means that the basic features of the loss distributions cannot be inserted “by han” as in the LDA, but they must emerge from the mechanisms that generate the losses instead. The second advantage is that a dynamical model may account for the different-time correlations. As mentioned previously, much effort has been devoted to including the correlations among different couples in the framework of the LDA. However, since both frequency and severity distributions do not depend on time, it is not possible to deal with the different-time correlations. Let us provide an example to show why, in the context of OR, different-time correlations cannot be neglected. Let us suppose, for example, that a failure occurs in the process of machinery servicing at time because of a damage in the transaction control system that is repaired only later at the time . As a consequence, some transactions may fail or be wrongly authorized and generate losses in other processes in the whole time interval . As it will be shown throughout the paper, the third advantage is that, in contrast with static approaches, a dynamical model offers a natural and solid framework to forecast future operational losses.

The model proposed by Kühn and Neu (2003); Anand and Kühn (2007) is the first attempt to introduce a dynamical model in the context of OR. It can be considered a mixed model in the sense that, while using a dynamical model to derive the frequency distribution, it still uses a static severity distribution. As a consequence, all the aforementioned benefits of adopting a dynamical model are limited to the frequency distribution. The model that we are proposing is completely dynamical in the sense that the relevant variable is the amount of loss registered in each couple at a certain time, avoiding the description in terms of frequency and severity. Indeed, frequency and severity distributions are simply a statistical tool for calculating the loss distributions and, while effective in a static approach, it is difficult to claim that the mechanisms generating losses directly involve them. The equation of motion includes two different mechanisms accounting for the generation of operational losses: the spontaneous generation via a random noise and the generation resulting from the interaction between different couples. The possibility that the bank will invest some money to avoid the occurrence of losses is also taken into account.

The aim of this paper is to set a new viable framework for a dynamical approach to OR. This means that we will try to build a basic dynamical model and find out how its crucial advantages over the purely statistical approaches can be exploited. In particular, it will be shown how the parameters of the model can be estimated from a database of operational losses or assessed by domain experts so that the model can be conveniently tailored to a specific bank. Since the main reason to study a dynamical approach is to try to understand whether, at least in principle, it is able to forecast future operational losses, we test the forecasting power of the model by choosing a realistic set of parameters. As we have already noted, using a dynamical approach implies that one cannot easily impose specific features on the loss distributions a priori: in particular, one cannot rely on the severity distribution to induce heavy tails in the loss distribution, as is the case in Kühn and Neu (2003). After the preliminary exploration of the potential of the model that we perform in this paper, the next step should be to establish some solid connections between the regions of the parameter space and the desirable features of the loss distributions. We will merely hint at the important role played by the random noise, like some recent papers (Bardoscia and Bellotti, 2010; Bardoscia, 2010) suggest.

We conclude with a final remark regarding the degrees of freedom of the model. It is very unlikely that the actual dynamics that causes the occurrence of operational losses in a bank directly involves the 56 couples indicated by Basel II: this would imply that the relevant variables for the dynamics of production of operational losses were the same for all the banks, regardless of their organizational structure. For this reason we prefer to introduce a further level of abstraction and just call processes the degrees of freedom of our model, implicitly assuming that they strongly vary from bank to bank and, therefore, that they should be carefully identified by a pool of experts in the internal structure of the bank.

2 Explaining the model

In the proposed model we associate with each process a positive real valued variable representing the amount of monetary loss incurred at the time in the -th process. There is one strong motivation to force to assume only positive values: our aim is to learn (at least) some of the parameters of the model from a database of historical operational losses collected by a bank whose entries are all positive, i.e. the quantities that are meant to be observed are intrinsically positive. We point out that negative values of could be interpreted as temporary reserves of money put aside to automatically lower the future losses; forcing to assume only positive values we are excluding this possibility. The evolution of the variables is governed by the following discrete time equation of motion:

| (1) |

where is the number of processes and the ramp function:

ensures that remains positive for all the time steps. From eq. 1 we see that the positive terms in the argument of the ramp function aim to generate a loss, while the negative terms aim to avoid the occurrence of a loss. is the number of nonzero losses occurred in the -th process in the time interval :

| (2) |

where is the Heaviside function and is an integer, so that ranges from 0 to . Eq. 2 also contains a clear definition of the parameter . In fact depends on , meaning that is the maximum delay up to which the losses incurred in the -th process may influence the losses in the -th process. In this sense the parameter is interpreted as the maximum correlation time between the losses incurred in the -th and the -th process; however it should be kept in mind that those correlation times (and thus ) are not symmetrical in general.

Let us now comment the role of each term in the argument of the ramp function in eq. 1. The sum term in eq. 1 accounts for the potential generation of losses due to the interactions with other processes: if , then the -th process is influenced by the -th process and, in particular, if , each loss occurred in the time interval (counted by ) generates a loss of amount in the -th process at the time . As an example, let us suppose that and that , ; from Eqs. 1 and 2 we see that the sum term for the first process may assume the values depending on the number of nonzero losses that occur in the second process in the time interval . This means that each loss that occurs in the second process in this time interval generates a potential loss of amount in the first process. As previously explained, is the maximum correlation time between the losses incurred in the second and in the first process. The losses incurred in the second process before the time have no influence on what may happen to the first process at the time . Obviously this is also true for the losses occurred after the time , i.e. in the future with respect to the time . If the condition , does not hold, the effect of the other processes must be summed to the effect of the second process. Also the are not symmetrical in general: in fact the effect on the -th process of a loss incurred in the -th process is clearly different from the effect on the -th process of a loss incurred in the -th process.

In this context, the parameters play a crucial role in accounting for different-time correlations and extending the model proposed in Anand and Kühn (2007), where the status of the -th process depends only on the value of the variables at the time . If the length of the time step of the model is larger than the maximum correlation time among the losses incurred in two different processes, eq. 1 provides a much more realistic description than the equation of motion proposed in Anand and Kühn (2007). In such a context the length of a time step is the typical time scale of the mechanisms responsible for generating the losses and, as it will be shown in section 3, it is linked to the temporal resolution of the database of operational losses that is used to estimate the parameters of the model.

can have two very different interpretations depending on its sign. If , it can be interpreted as the investment the bank makes to avoid the occurrence of losses in the -th process: the greater the value of , the less likely it is that a potential loss due to the other terms becomes an actual loss. Since does not depend on time, the amount of money (per unit of time) the bank chooses to invest on each process is fixed a priori rather than dynamically tuned. If , it can interpreted as pathological tendency of the -th process to produce a loss of amount at each time step.

is a -correlated random noise that accounts for spontaneously generated losses, i.e. losses not caused by the interactions with other processes. Because of its interpretation, its probability density function should have a subset of as support. We have chosen the exponential distribution:

| (3) |

where can be interpreted as the inverse of the mean value of the spontaneous losses that can be produced in the -th process. The exponential distribution has the property that about of its random extractions are lower than its mean value. This means that only few of the extraction will be able to exceed the threshold (unless it is chosen to be less than the mean value). This behavior seems to capture the intuitive picture of a spontaneous loss, which is something that is rarely expected to happen.

We point out that in the context of OR the crucial quantity is the cumulative loss up to the time :

| (4) |

which can be taken as an indicator of how much money the bank has to put aside to face OR over a time horizon . Let us note that such a time horizon is expressed here in units of time steps, meaning that, supposing that one is interested in the distribution of the cumulative losses registered in one year, in eq. 4 is equal to one year divided by the length of a time step.

3 Parameters estimation

We will show how the parameters and can be learned from a database of historical operational losses that keeps track of the amount of each loss together with the time at which and the process in which each loss occurred. In this context, we interpret the database as a realization of the eq. 1 for a number of time steps consistent with the database at our disposal: is identified with the time at which the oldest loss in the database occurred and with the time at which the newest loss occurred. The length of a time step of the model is therefore the inverse of the temporal resolution of the database of operational losses from which the parameters are estimated. Since there is no risk of ambiguity in this section, we will use the notation to refer to the amount of the loss registered at the time step in the -th process in the database at our disposal.

In order to estimate we look at those events such that , ; for such events eq. 1 reads:

| (5) |

and the probability that is:

| (6) |

In order to estimate the left hand side of eq. 11 one would need a sample of values of , which is not our case, since a database of operational losses provides a unique value for the amount of the loss in the -th process and at the time step . On the other hand, since the distribution of the noise does not depend on time, the right-hand side of eq. 11 also does not depend on time and it is reasonable to make the following identification:

| (7) |

where the left-hand side has the meaning of a frequentist estimate from the database at our disposal:

| (8) |

i.e. the number of times such that and , divided by the number of times such that times such that , . Dropping the dependence on time from the left-hand side of eq. 7 can be interpreted as the assumption that a single trajectory of the system contains all the information needed to perform a reliable estimation of . Inverting eq. 7 we have:

| (9) |

We note that the values of estimated with such a procedure are necessarily smaller than zero.

The estimation of is analogous to the estimation of , but it is based on a different class of events. Let us look at those events such that and for and ; for such events eq. 1 reads:

| (10) |

and the probability that is given by:

| (11) |

Making a similar identification to that of eq. 7 we have:

| (12) |

where, once again, the left-hand side is a frequentist estimate from the database at our disposal:

| (13) |

and eq. 12 can be inverted to obtain:

| (14) |

We note from eq. 14 that, depending on the class of events found on the database at our disposal, there may be up to different estimates of : one for each possible value of . The problem of dealing with multiple estimates of should be faced depending on the use one has for the value of the parameter . In sections 4 and 6 we describe two different strategies for collapsing the multiple estimates of into a single value. Let us note that eqs. 7 and 12 can be easily generalized for every distribution of , since is simple the cumulative function calculated in . However, the passage from eqs. 7 and 12 to eqs. 9 and 14 is possible only if such a cumulative function is invertible. In all the other cases eqs. 7 and 12 must be solved numerically to obtain the estimates of and .

With regard to the estimation of , a trivial possibility is to invert eq. 9, provided that the value of is known. Let us recall that can be interpreted as the money invested by the bank on the -th process to keep it working properly and, therefore, it is plausible that some kind of knowledge about its value is available. Nevertheless, may also be unknown, since, in general, it is the threshold that a potential loss in the -th process has to overcome to become an actual loss. The value of may be assessed independently from only if some information regarding the spontaneous losses in the -th process is available. Sometimes one may lack the knowledge regarding the interactions of a particular process with the others, but have a rather precise idea regarding the distribution of spontaneous losses instead. In those cases, we see from eq. 3 that is the inverse of the mean of the spontaneous losses in the -th process or it can obtained from any quantile of order of the distribution of spontaneous losses of the -th process: .

Furthermore cannot be learned directly from a database of operational losses and should be assessed from domain experts instead. Recalling the definition of given in section 2, the question that should be answered in order to assess its value is: “which is the maximum delay up to which the losses occurred in the -th process may influence the losses in the -th process?”.

Let us for a moment comment on the total number of parameters of the model that must be estimated or assessed. There are couplings , maximum correlation times since they both depend on two indexes, supports and inverse means of the noise since they depend only on one index, so that the total number of parameters is . In the context of the LDA, supposing that the frequencies and severities of the all processes are independent, one has that the total number of parameters is , where and are the number of parameters of the chosen frequency and severity distribution, respectively. Typically = 1, 2 (for Poisson and negative binomial distributions) and = 2 (for lognormal, gamma or Weibull distributions); obviously becomes larger if the severity tails are fitted with the extreme value theory. However, one should consider that the proposed approach takes the correlations among the different processes into account. For this reason it is fair to compare it with the LDA using couplae to capture the correlations. In this case (apart from the trivial cases of comonotone or anti-conomotone copulae) one has to use one copula for each couple of processes, resulting in additional parameters, where is the number of parameters of each copula. Typically = 1 (for Gaussian, Clayton, Gumbel or Frank copula). The total number of parameters of the LDA with copulas is then , which is of the same order in as the proposed approach.

4 Validation

In order to validate the proposed procedure for estimating the parameters we propose the following steps:

-

1.

set the parameters , , and of the model to realistic values. Let eq. 1 evolve for time steps and consider the obtained values of to be a database of operational losses.

-

2.

Estimate the parameters and using the procedure proposed in section 3 and compare the obtained values with the ones set out in point 1.

-

3.

Simulate eq. 1 a large number of times using the estimated parameters, so that a sample of trajectories of the system is obtained. Since there may be more than one estimate for each , the values of their estimates should be sampled among the available ones for each simulated trajectory.

-

4.

Compare calculated from the database generated in point 1 with the average of the same quantity calculated from the sample of trajectories generated in point 3. There are two reasons not to use and its average: on one hand, the single realization of eq. 1 strongly depends on the extractions of the noise and thus is not directly comparable to another realization; on the other hand, as previously mentioned, the quantity of interest in OR is not the amount of money lost at a certain time step, but rather the total amount of money lost up to that certain time, which is precisely the meaning of .

It is possible to look not only at the sample average of , but also at the full distribution of the values of . In particular, it is possible to estimate the VaR at a given time step by numerically calculating some percentile of the sampled distribution at the desired time step. The forecasting power of the model can be tested by repeating points from 2 to 5 using only a fraction of the database generated in point 1 to estimate the parameters and , but still simulating eq. 1 in point 3 for time steps. In this way we are effectively ignoring the information contained in the neglected fraction of the original database and making a forecast relative to those time steps.

5 Chosen parameters

We simulate for time steps a system composed by processes whose parameters are set up so that they can mimic the interactions between the following realistic processes:

-

1.

machine failure,

-

2.

human error,

-

3.

internal fraud,

-

4.

failed transaction of type I,

-

5.

failed transaction of type II.

Before we start to justify the chosen parameters, let us make a couple of observations. The value of may be chosen to fix the unit of measurement of . In fact, rescaling all the terms in eq. 1 by a factor , we obtain:

| (15) |

which is precisely the same equation as eq. 1, but with rescaled losses and parameters. This means that, since we are free to choose the unit of measure for , it is perfectly legitimate to fix . However, since we want to model the effort made by the bank to avoid losses, as we already pointed out, its value must be negative, thus:

Rather than directly specifying the value of , it is possible to specify the probability that a loss occurs in a noninteracting process. should be easier to assess, since it does not carry any information about the interactions. It is proportional to the number of times that a loss would occur in a given process if the process is left “all alone”, with no interaction with the other processes. Dropping the interaction term from eq. 1 and calculating one obtains:

| (16) |

that links to . The chosen values for are:

which are consistent with the fact that the probability that a human error spontaneously produces a loss is much higher (5 times) than the same probability for a machinery fault. is equally low, assuming that a loss generated by a spontaneous internal fraud is as rare as a spontaneous loss generated by a machinery failure, while intermediate values are chosen for failed transactions.

The are all equal to zero, apart from:

which accounts for the possibility that a human error causes a machine failure,

which accounts for the possibility that the act of committing a fraud leads to committing fraud again,

which accounts for the possibility that some transactions may fail because some funds have been subtracted by a fraud. The two types of transaction failures are distinguished by the fact that they may be also influenced by different processes, that is, type I by a human error and type II by a machine failure:

but in both cases the consequences are minor with respect to those deriving from a fund subtraction due to an internal fraud.

From eq. 1 we see that the only relevant values of are those relative to the values of which are different from zero. In all those cases we set , which takes into account the possibility of different-time correlations.

We conclude this section by pointing out that eq. 1 requires a number of time steps in the initial condition which is equal to . We set for , i.e. all process are perfectly working at the beginning, without losses.

6 Results

Using the parameters described in section 5 we have followed the validation protocol and estimated the parameters using the whole database generated at point 1. We repeated the procedure using only the first three-quarters of its time steps to test the forecasting power of the model. Using the full database we find the following relative errors on the estimated parameters:

while, using only the first three-quarters of its time steps, we find:

where the relative error on has been calculated using the mean of the available estimates. We can immediately note that the errors on the estimated parameters are comparable in the two cases and, in some cases, are lower when only the first three quarters of the available time steps are used. This means that the information contained in last quarter of time steps is redundant and that the first three-quarters of the available time steps can provide all the information needed to perform a reliable estimation of the parameters. This is a very general consideration indeed: every time we want to make a forecast about some quantity we are assuming that our knowledge about its dynamics is complete, i.e. all the relevant information is contained in the past (already observed) evolution.

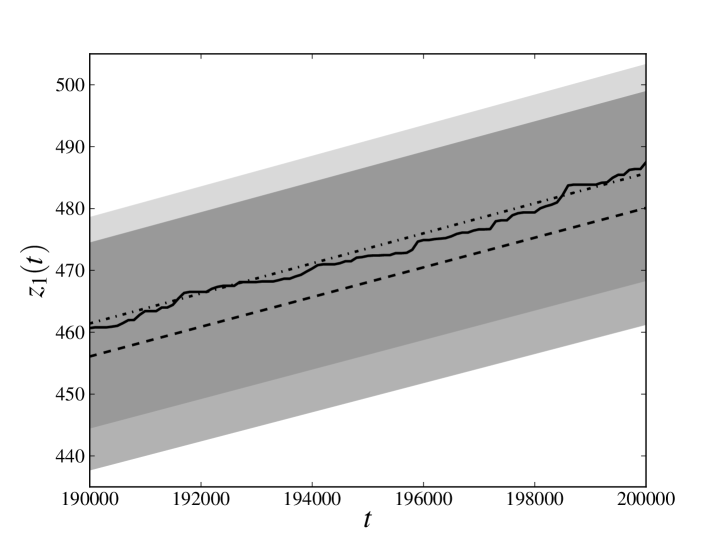

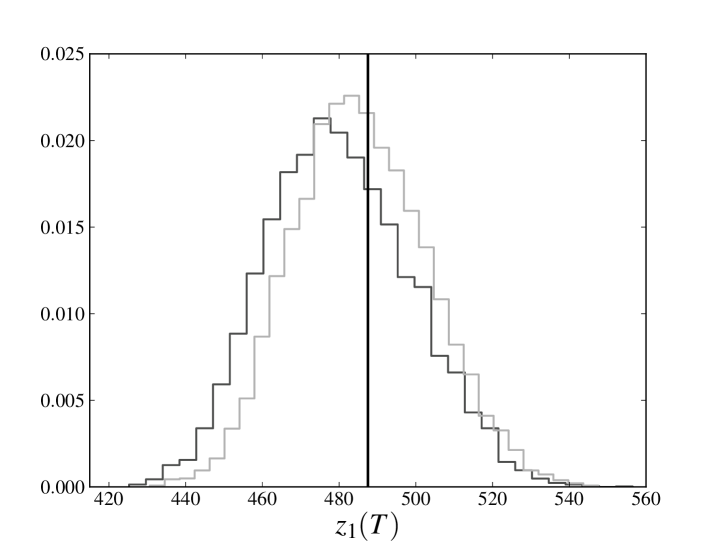

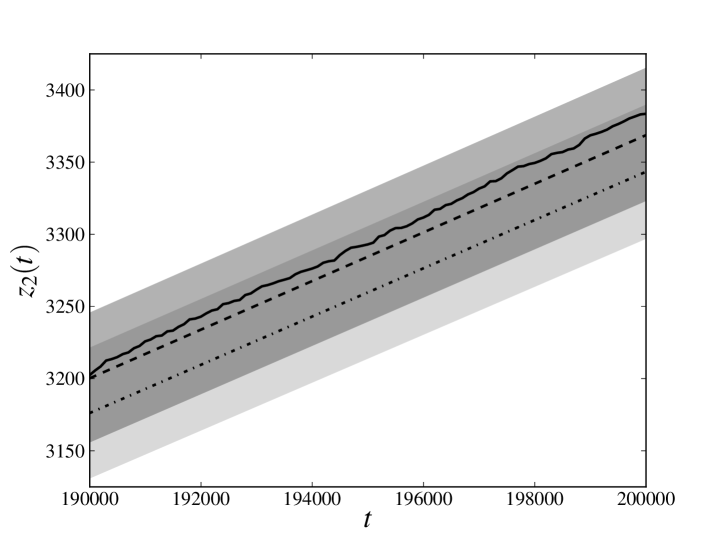

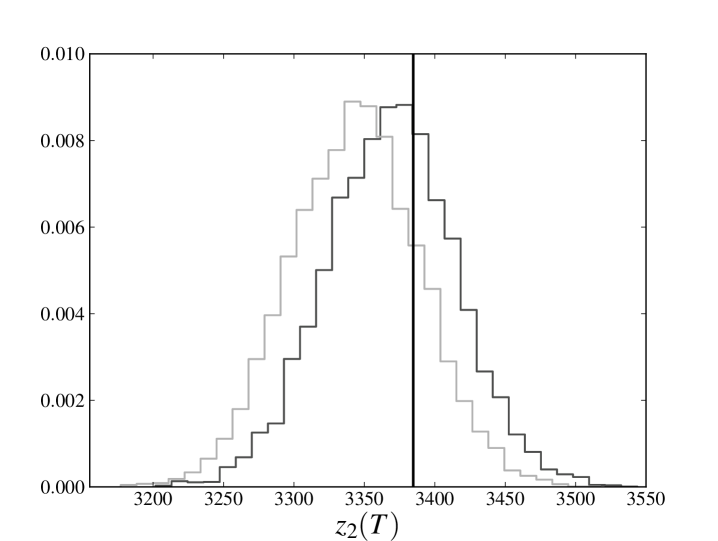

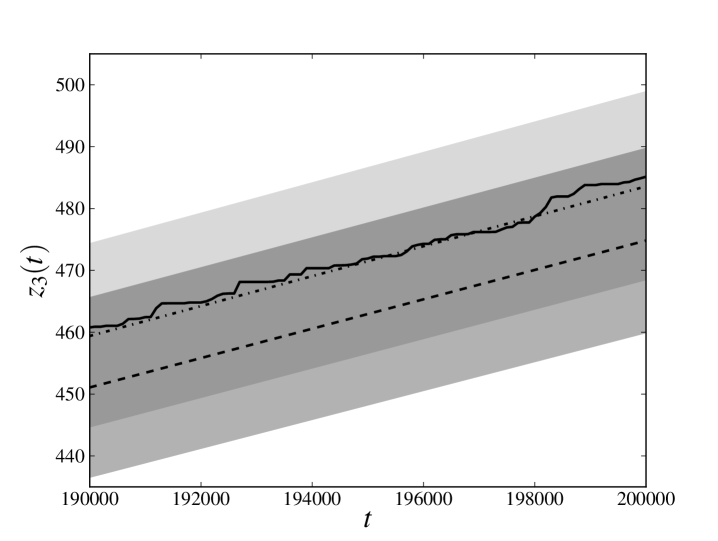

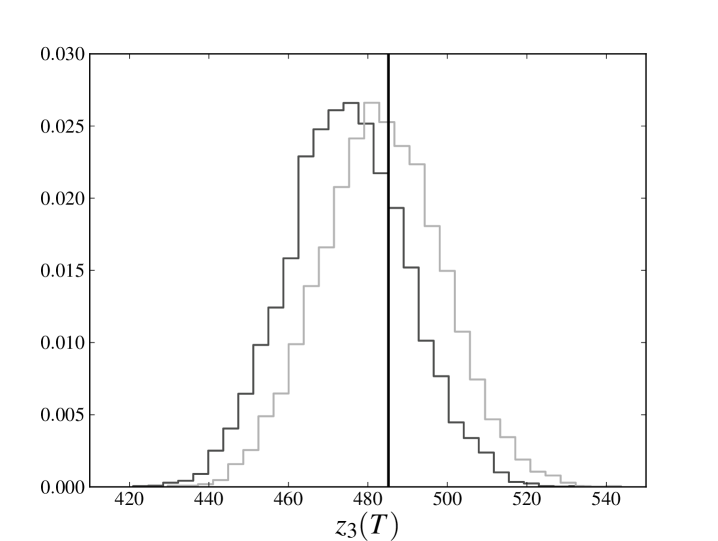

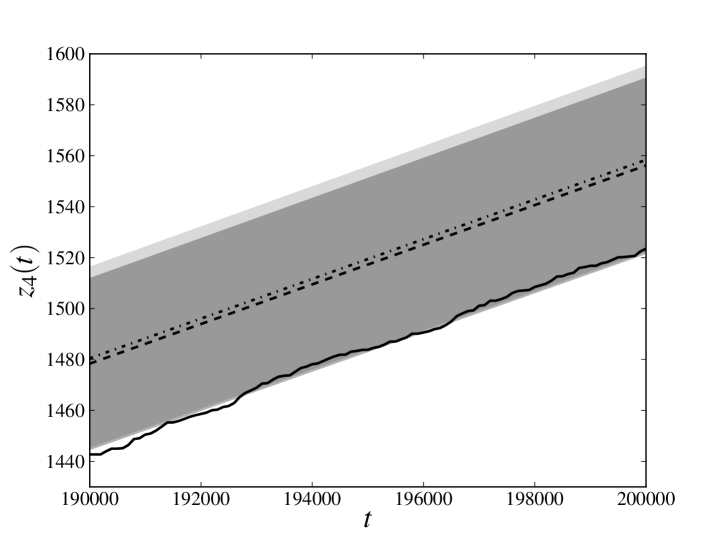

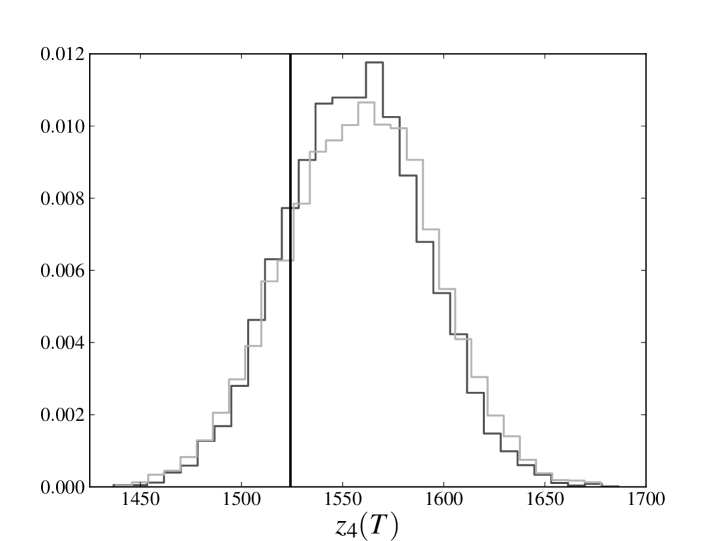

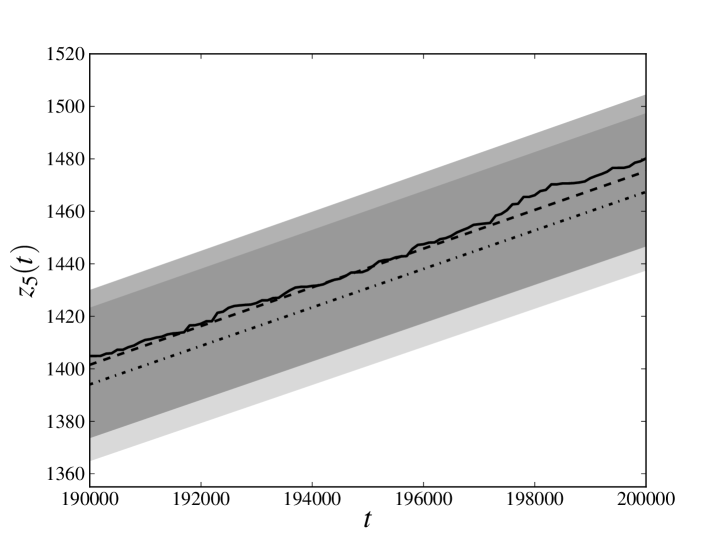

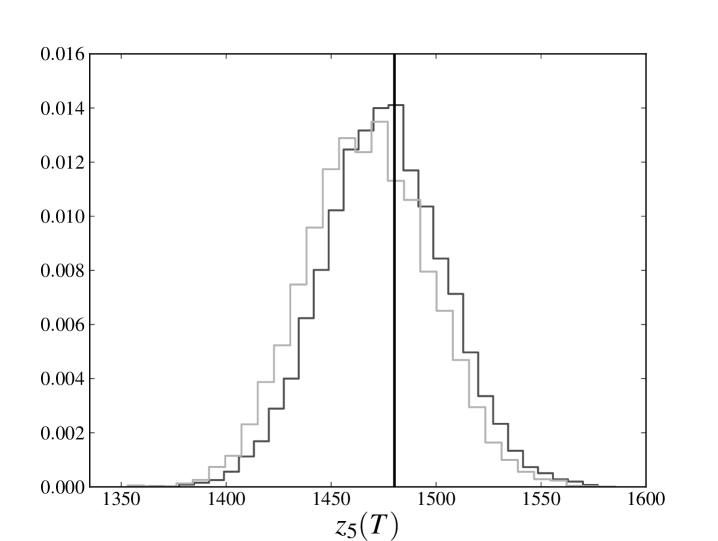

In the left panels of figs. 1 and 2 we compare (only the last 10000 time steps, for the sake of readability) calculated for the database generated at point 1 with the average of the same quantity for the trajectories sampled at point 3. The solid line denotes relative to the original trajectory, the dashed line denotes the average of obtained estimating the parameters from the whole database and the dash-dotted line denotes the average of obtained estimating the parameters from only the first three-quarters of the available time steps. The darker transparent region spans a standard deviation around the average of obtained estimating the parameters from the whole database. The lighter transparent region has the same meaning for the average of obtained estimating the parameters only from the first three-quarters of the available time steps. We see that, in all the cases, the original trajectory is reproduced with an error which is less than (or, for the -th process, equal to) one standard deviation, meaning that the cumulative loss in the last part of the database has been reliably forecast.

In the right panels of figs. 1 and 2 we compare the full distribution of of the sampled evolutions at point 3 with the original trajectory. The solid line denotes of the original trajectory, the darker histogram refers to the distribution of obtained estimating the parameters from the whole database, while lighter histogram refers to the distribution of obtained estimating the parameters only from the first three-quarters of the available time steps. Again we see that peaks of both distributions are very close and almost coincident with the value of the original trajectory.

We note that the observed average of is, by a good approximation, linear and that the distribution of does not seem to exhibit heavy tails. Such a behavior is also present in the process 2, which is not influenced by the losses incurred in any other process and whose losses can only have been spontaneously generated by the noise term. This observation encourages us to explore models in which the distribution of the noise has a different shape and heavy tails. However, it should be also kept in mind that deviations from the behavior shown here may depend not only on the distribution of the noise, but also on the chosen parameters. As already pointed out in section 1, a further exploration of the parameter space is certainly needed to understand if this peculiarity depends on the particular choice of parameters that has been made.

7 Conclusions

In this paper we have proposed a new dynamical model for OR. To the best of our knowledge for the first time the loss distribution is derived from an entirely dynamical approach. In a previous paper (Kühn and Neu, 2003) such an approach was limited to the frequency distribution. The use of a dynamical approach has a great methodological advantage over static approaches. For example, the LDA uses historical data to fit the yearly loss distribution and, exploiting this distribution to estimate the capital charge for the next year, the method implicitly assumes that the distribution will not change from one year to the next. On the other hand, a dynamical approach provides a natural framework for forecasting the distribution of the future losses on which the capital charge requirements should be based, making the much weaker assumption that only the basic mechanisms of loss production do not change from year to year. Indeed, also this assumption may be partially relaxed by estimating the parameters only from the most recent part of a database of operational losses or by forcing them to values assessed by a domain experts. However, we point out that, since the meaning of the parameters of the model is unconventional in the context of OR, such an assessment must be carried out with extreme care, so that the experts can be completely aware of the role played by each parameter in the model. Another crucial feature of the proposed approach that is absent in most of the AMAs is that it can take into account different-time correlations among the processes by means of the interaction term in the equation of motion.

Let us remark that the current implementation adheres to many of the AMA guidelines: i) some parameters of the model are estimated from a database of internal operational losses, ii) the remaining parameters have to be assessed by domain experts, iii) the processes can be considered to be, or can be linked to, the 56 (business lines, event types) couples, iv) the VaR of the cumulative loss distribution at every time step can be calculated with the desired level of confidence; the calculation of the VaR over the time horizon of one year can be performed once the fictional time scale the model is linked to some real time scale, which may be the time resolution of the available database of operational losses. It is worth noting that the proposed model does not require massive investments for its implementation. In fact, only a reliable monitoring of the internal losses and the assessments of internal experts are needed. Both of these things are requirements that every AMA-oriented bank should meet in any case. From a practical point of view the main steps required to implement the proposed approach are the following: i) the processes should be identified, since (as hinted in 1) they may strongly depend on the specific bank; ii) the losses incurred in the processes have to be monitored for a sufficient amount of time, so that a reliable database of operational losses can be built and iii) the parameters that cannot be estimated from the database must be assessed by domain experts.

The current limitations of the model pave the way for future research directions. A recent paper (Bardoscia and Bellotti, 2010) has focused on the case in which no causal loops among the processes exist, showing that, together with and , even the parameter can be learned from a database of operational losses in that case. We point out that the absence of causal loops is an hypothesis that is often accepted. In particular this is the crucial hypothesis of all the approaches based on bayesian networks (Cowell et al., 2007). In this case the loss distribution has been analytically derived as well, establishing a deep connection between the properties of the noise in the equation of motion and the shape of the loss distribution. We have already discussed the importance of investigating the case in which the distribution of the noise has heavy tails. Bardoscia (2010) deals with such distributions, generalizing the results obtained in Bardoscia and Bellotti (2010) and proving that, at least in the case in which there are no causal loops, the distribution of the cumulative loss is heavy tailed if and only if the distribution of the noise is heavy tailed. Further research should be certainly devoted to investigating the more general case in which causal loops are present.

Acknowledgments

M. B. would like to thank Maria Valentina Carlucci for the countless suggestions and fruitful discussions.

References

- Basel Committee (2005) Basel Committee on Banking Supervision (2005). International convergence of capital measurement and capital standards. Bank for International Settlements Press & Communications.

- Mc Neil et al. (2005) McNeil A. J., Frey R., Embrechts P. (2005). Quantitative Risk Management. Princeton University Press, Princeton.

- Cruz (2002) Cruz M. G. (2002). Modeling, Measuring and Hedging Operational Risk. Whiley & Sons, London.

- Gourier et al. (2009) Gourier E., Farkas W., Abbate D. (2009). Operational risk quantification using extreme value theory and copulas: from theory to practice. Journal of Operational Risk 4-3, 3.

- Neil et al. (2005) Neil M., Fenton N., Tailor M. (2005). Using Bayesian Networks to Model Expected and Unexpected Operational Losses. Risk Analysis 25-4, 963.

- Cowell et al. (2007) Cowell R. G., Verral R. J., Yoon M. K. (2007). Modeling Operational Risk with Bayesian Networks. Journal of Risk and Insurance 74-4, 795.

- Cornalba and Giudici (2004) Cornalba C., Giudici P. (2004). Statistical models for operational risk management. Physica A 338, 166.

- Kühn and Neu (2003) Kühn R., Neu P. (2003). Functional correlation approach to operational risk in banking organizations. Physica A 332, 650.

- Anand and Kühn (2007) Anand K., Kühn R. (2007). Phase transitions in operational risk. Physical Review E 75 016111.

- Bardoscia and Bellotti (2010) Bardoscia M., Bellotti R. (2010). A Dynamical Model for Forecasting Operational Losses. Available on arXiv:1007.0026 [q-fin.RM].

- Bardoscia (2010) Bardoscia M. (2010). Heavy tails in operational risk: a dynamical approach, in preparation.