email: {luojieting,baiseliao}@zju.edu.cn
Utrecht University, Utrecht, the Netherlands

email: J.J.C.Meyer@uu.nl

A Formal Framework for Reasoning about Agents’ Independence in Self-organizing Multi-agent Systems

Abstract

Self-organization is a process where a stable pattern is formed by the cooperative behavior between parts of an initially disordered system without external control or influence. It has been introduced to multi-agent systems as an internal control process or mechanism to solve difficult problems spontaneously. However, because a self-organizing multi-agent system has autonomous agents and local interactions between them, it is difficult to predict the behavior of the system from the behavior of the local agents we design. This paper proposes a logic-based framework of self-organizing multi-agent systems, where agents interact with each other by following their prescribed local rules. The dependence relation between coalitions of agents regarding their contributions to the global behavior of the system is reasoned about from the structural and semantic perspectives. We show that the computational complexity of verifying such a self-organizing multi-agent system is in exponential time. We then combine our framework with graph theory to decompose a system into different coalitions located in different layers, which allows us to verify agents’ full contributions more efficiently. The resulting information about agents’ full contributions allows us to understand the complex link between local agent behavior and system level behavior in a self-organizing multi-agent system. Finally, we show how we can use our framework to model a constraint satisfaction problem.

Keywords:

Self-organization, logic, Multi-agent Systems, Graph Theory, Verification

1 Introduction

In modern society, artificial intelligence has been applied in many industries such as health care, retail and traffic. Since nature presents us with elegant ways of solving problems, biological insights have inspired several techniques and methods for solving complex engineering problems. One example is the adoption of self-organization from complex systems. Self-organization is a process where a stable pattern is formed by the cooperative behavior between parts of an initially disordered system without external control or influence. It has been introduced to multi-agent systems as an internal control process or mechanism to solve difficult problems spontaneously, especially when the system operates in an open environment, where no perfect a priori design can be guaranteed [28][25][27]. A typical example of a self-organization mechanism is ant colony optimization [13], where ants collaborate to find the optimal solution by laying down pheromone trails. In a wireless mobile sensor network, robots with sensors can deploy themselves to achieve optimal sensing coverage even when the system designer is not aware of the robots’ interests or of the environment in which they operate [18].

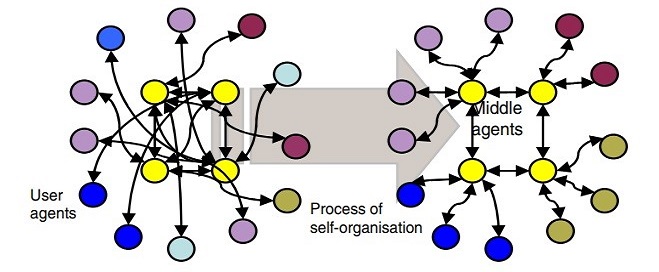

However, building a self-organizing system is highly challenging [17][32]. The traditional development of a multi-agent system is a top-down process that proceeds from the specification of the goal the system needs to achieve to the development of specific agents. In this way, the goal of the system is guaranteed to be achieved if the specific agents are implemented successfully. In the hierarchical structure in Figure 1 (left), the global objective is achieved by modules E and F; module E is refined by agents A and B, and module F is refined by agents B, C and D. However, such an approach cannot be applied to the development of self-organizing multi-agent systems. Because a self-organizing multi-agent system has autonomous agents and local interactions between them, its development is usually a bottom-up process that starts from defining local components and then examines the global behavior. The distributive structure in Figure 1 (right) represents a self-organizing multi-agent system, where agents A, B, C and D interact with each other. As a result, it is difficult to predict the behavior of the system from the specification of the autonomous agents and their local interactions, and a self-organizing multi-agent system is usually evaluated through implementation. In other words, the complex link between local agent behavior and system-level behavior makes implementation the usual way of evaluating correctness. There are methodologies for developing self-organizing multi-agent systems (such as ADELFE [3][4]), but they do not explain this complex link between local agent behavior and system-level behavior. The Self-Adaptive and Self-Organizing Systems (SASO) community also highlights that it is still challenging to investigate how micro-level behavior leads to desirable macro-level outcomes.
What if we could understand the complex link between micro-level agent behavior and macro-level system behavior in a self-organizing multi-agent system? An approach that helps us understand how global system behavior emerges from agents’ local interactions would facilitate the development of self-organizing multi-agent systems. For example, if we know that a coalition of agents brings about a property independently and we want to change that property, we only have to reconfigure the behavior of that coalition rather than the behavior of agents outside it. The independence relation between coalitions of agents is therefore a crucial issue to investigate in self-organizing multi-agent systems.

In theoretical computer science, logic has been used for proving the correctness of systems [11][10]. Instead of implementing the system with respect to a specification, we can verify whether the system specification fulfills the global objective by checking logical formulas. This provides a way of evaluating a self-organizing multi-agent system other than implementation. In this paper, we propose a logic-based framework of self-organizing multi-agent systems, where agents interact with each other by following their prescribed local rules. Based on the local rules, we define a structural property called independent component: a coalition of agents that does not get input from agents outside the coalition. Our semantics, together with the structure derived from communication between agents, allows us not only to verify the behavior of the system but also to reason, from two perspectives, about the independence relation between coalitions of agents regarding their contributions to the global system behavior. Moreover, we propose a layered approach to decompose a self-organizing multi-agent system into different coalitions, which allows us to check agents’ full contributions more efficiently. The resulting information about agents’ full contributions allows us to understand the complex link between local agent behavior and system-level behavior in a self-organizing multi-agent system. Finally, we show how our framework can be used to model a constraint satisfaction problem that is solved with a self-organization-based approach.

The rest of the paper is organized as follows: Section 2 introduces the abstract framework to represent a self-organizing multi-agent system, proposing the notion of independent components; Section 3 provides the semantics of our framework to reason about agents’ independence in terms of their contributions to the global behavior of the system; the model-checking problem is investigated in Section 4; Section 5 proposes a layered approach to decompose a self-organizing multi-agent system; in Section 6 we show how to use our framework to model constraint satisfaction problems; finally, related work and conclusion are provided in Sections 7 and 8 respectively.

2 Abstract Framework

In this section, we propose the model of this paper, a concurrent game structure extended with local rules, and define the structural property of independent components, whose behavior is independent of the behavior of the agents outside the components.

2.1 Self-organizing Multi-agent Systems

The semantic structure of this paper is the concurrent game structure (CGS). It is basically a model where agents simultaneously choose actions that collectively bring the system from the current state to a successor state. Compared to other Kripke models of transition systems, each transition in a CGS is labeled with the collective actions and the agents who perform those actions. Moreover, we treat actions as first-class entities instead of using choices that are identified by their possible outcomes. Formally,

Definition 2.1

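A concurrent game structure is a tuple $S = \langle k, Q, \Pi, \pi, ACT, d, \delta \rangle$ such that:

- $k \geq 1$ is a natural number of agents, and the set of all agents is $\Sigma = \{1, \ldots, k\}$; we use $A \subseteq \Sigma$ to denote a coalition of agents;
- $Q$ is a finite set of states;
- $\Pi$ is a finite set of propositions;
- $\pi$ is a labeling function which maps each state $q \in Q$ to the subset of propositions which are true at $q$; thus, for each $q \in Q$ we have $\pi(q) \subseteq \Pi$;
- $ACT$ is a finite set of actions;
- for each agent $a \in \Sigma$ and state $q \in Q$, $d_a(q) \subseteq ACT$ is the non-empty set of actions available to agent $a$ in $q$; $D(q) = d_1(q) \times \cdots \times d_k(q)$ is the set of joint actions in $q$; given a state $q \in Q$, an action vector is a tuple $\langle \alpha_1, \ldots, \alpha_k \rangle$ such that $\alpha_a \in d_a(q)$ for each $a \in \Sigma$;
- $\delta$ is a function which maps each state $q \in Q$ and joint action $\langle \alpha_1, \ldots, \alpha_k \rangle \in D(q)$ to the state that results from $q$ if each agent $a$ adopts the action $\alpha_a$ in the action vector; thus, for each $q \in Q$ and each $\langle \alpha_1, \ldots, \alpha_k \rangle \in D(q)$ we have $\delta(q, \alpha_1, \ldots, \alpha_k) \in Q$.

Note that the model is deterministic: the same joint action adopted in the same state always results in the same successor state. A computation over $S$ is an infinite sequence $\lambda = q_0, q_1, q_2, \ldots$ of states such that for all positions $i \geq 0$, there is a joint action $\langle \alpha_1, \ldots, \alpha_k \rangle \in D(q_i)$ such that $\delta(q_i, \alpha_1, \ldots, \alpha_k) = q_{i+1}$. For a computation $\lambda$ and a position $i \geq 0$, we use $\lambda[i]$ to denote the $i$th state of $\lambda$. More elaboration of concurrent game structures can be found in [2].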
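To make Definition 2.1 and the notion of a computation concrete, here is a minimal Python sketch under our own assumptions: states, agents and actions are plain strings, the transition function is a dictionary keyed by (state, joint action), and a joint action lists the agents' actions in a fixed agent order. The names `CGS`, `joint_actions`, `step` and `run` are hypothetical and not part of the framework.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, FrozenSet, List, Tuple

State = str
Agent = str
Action = str
Joint = Tuple[Action, ...]


@dataclass
class CGS:
    """Hypothetical encoding of a concurrent game structure (Definition 2.1)."""
    agents: Tuple[Agent, ...]                             # agents 1..k in a fixed order
    states: FrozenSet[State]                              # Q
    label: Dict[State, FrozenSet[str]]                    # pi: propositions true at q
    avail: Dict[Tuple[Agent, State], FrozenSet[Action]]   # d_a(q), assumed non-empty
    delta: Dict[Tuple[State, Joint], State]               # deterministic transitions

    def joint_actions(self, q: State) -> List[Joint]:
        """D(q): every combination of the agents' available actions in q."""
        return list(product(*(sorted(self.avail[(a, q)]) for a in self.agents)))

    def step(self, q: State, joint: Joint) -> State:
        """The same state and joint action always give the same successor."""
        return self.delta[(q, joint)]


def run(cgs: CGS, q0: State, choose: Callable[[State], Joint], n: int) -> List[State]:
    """Return the first n + 1 states of the computation induced by `choose`."""
    path = [q0]
    for _ in range(n):
        path.append(cgs.step(path[-1], choose(path[-1])))
    return path


# Toy usage (hypothetical): move to "t" when the agents agree, otherwise to "s".
AGENTS = ("a", "b")
STATES = frozenset({"s", "t"})
cgs = CGS(
    agents=AGENTS,
    states=STATES,
    label={"s": frozenset(), "t": frozenset({"p"})},
    avail={(ag, q): frozenset({"x", "y"}) for ag in AGENTS for q in STATES},
    delta={(q, j): ("t" if j[0] == j[1] else "s")
           for q in STATES for j in product(["x", "y"], repeat=2)},
)
prefix = run(cgs, "s", lambda q: ("x", "x") if q == "s" else ("x", "y"), 3)
print(prefix)  # ['s', 't', 's', 't']
```

Because `delta` is a plain dictionary, determinism holds by construction; a missing key raises `KeyError`, which in this encoding signals a joint action outside $D(q)$.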

Self-organization has long been introduced into multi-agent systems to solve various problems [32][16]. It is a mechanism or process that enables a system to spontaneously accomplish a difficult task through the cooperative behavior between agents [12]. In particular, agents in a self-organizing multi-agent system have a local view of the system, and the system reaches a desired state spontaneously without being guided by any external entity. In this paper, we argue that the cooperative behavior is guided by prescribed local rules that agents are supposed to follow, with communication between agents as a prerequisite. Therefore, we define a self-organizing multi-agent system as a concurrent game structure together with a set of local rules for agents to follow. For example, in ant colony optimization algorithms, ants are required to record their positions and the quality of their solutions (lay down pheromones) so that in later simulation iterations more ants locate better solutions. Before defining such local rules, we first define what to communicate, which is given by an internal function.

Definition 2.2 (Internal Functions)

Given a concurrent game structure , the internal function of an agent is a function that maps a state to a propositional formula over .

The internal function returns the information that the participating agents themselves provide at a given state, and it may differ from agent to agent. Depending on the application, the internal function may be interpreted differently. For example, vehicles in busy traffic are required to communicate their urgencies, and robot sensors in a self-deploying sensing network are required to communicate their sensing areas. Here we assume that agents are capable of processing their local rules with the communicated information. A local rule is defined based on agents’ communication as follows:

Definition 2.3 (Abstract Local Rules)

Given a concurrent game structure , an abstract local rule for an agent is a tuple consisting of a function that maps a state to a subset of agents, that is, , and a function that maps to an action available in state to agent , that is, . We denote the set of all the abstract local rules as and a subset of abstract local rules as that are designed for coalition of agents .

An abstract local rule consists of two parts: the first part states the agents with whom agent is supposed to communicate in state , and the second part states the action that agent is supposed to take given the result of communicating with the agents in about their internals. By contrast, in [2] and [19] a rule (or a norm) is defined as a mapping that explicitly prescribes what agents need to do in a given state, which requires system designers to have complete information about the system, including agents’ internals, so that the desired state as well as the legal computations can be identified. A local rule in this paper is instead defined based on agents’ communication. Different participating agents might have different internal functions, so the communication results, and thus the actions they are required to take, may differ. Hence, the system allows agents to find the desired state and how to get there in a self-organizing way. We see local rules not only as constraints but also as guidance on agents’ behavior: an agent does not know what to do if it does not communicate with other agents. Therefore, we exclude the case where agents get no constraint from their respective local rules. Notation denotes a set of computations and is the set of computations starting from state where agents in coalition follow their respective local rules in . A computation is in if and only if for all positions there is a move vector such that and for all it is the case that . Because function returns exactly one action that agent is allowed to take in any state, there is only one computation in the set of computations , which will be denoted as without the curly brackets in the rest of the paper. Now we are ready to define a self-organizing multi-agent system. Formally,
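The membership condition for rule-following computations can be illustrated with a minimal sketch that checks whether a finite prefix of a computation, together with the move vectors that produced it, is consistent with coalition A following its local rules. It assumes that each agent's local rule has already been evaluated into a hypothetical function `rule_action(agent, state)`, so the communication step of the rule is hidden inside that function; checking a finite prefix only approximates membership of an infinite computation.

```python
from typing import Callable, FrozenSet, Sequence, Tuple

State = str
Agent = str
Action = str
Joint = Tuple[Action, ...]


def follows_rules(
    path: Sequence[State],
    joints: Sequence[Joint],
    agents: Sequence[Agent],
    coalition: FrozenSet[Agent],
    delta: Callable[[State, Joint], State],
    rule_action: Callable[[Agent, State], Action],
) -> bool:
    """Check a finite prefix against the rule-following condition.

    joints[i] is the move vector played in path[i]. Every member of
    `coalition` must play the action returned by its (hypothetical)
    rule_action; agents outside the coalition may play anything.
    """
    if len(joints) != len(path) - 1:
        return False
    for i, joint in enumerate(joints):
        if delta(path[i], joint) != path[i + 1]:
            return False  # not a transition of the structure
        for idx, agent in enumerate(agents):
            if agent in coalition and joint[idx] != rule_action(agent, path[i]):
                return False  # a coalition member deviated from its rule
    return True


# Toy usage (hypothetical): a counter modulo 3 that advances only when both
# agents play "inc"; every agent's local rule is to play "inc".
def counter_delta(q: State, joint: Joint) -> State:
    return str((int(q) + 1) % 3) if joint == ("inc", "inc") else q


def always_inc(agent: Agent, q: State) -> Action:
    return "inc"


AGENTS = ("a", "b")
print(follows_rules(["0", "1", "2"], [("inc", "inc")] * 2, AGENTS,
                    frozenset({"a", "b"}), counter_delta, always_inc))  # True
print(follows_rules(["0", "0"], [("inc", "stay")], AGENTS,
                    frozenset({"a"}), counter_delta, always_inc))       # True
print(follows_rules(["0", "0"], [("inc", "stay")], AGENTS,
                    frozenset({"a", "b"}), counter_delta, always_inc))  # False
```

When the coalition is the set of all agents, each position admits exactly one rule-compliant move vector, which mirrors the observation that following all local rules leaves a single computation.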

Definition 2.4 (Self-organizing Multi-agent Systems)

A self-organizing multi-agent system (SOMAS) is a tuple , where is a concurrent game structure and is a set of local rules for agents in the system to follow.

We use the example from [29] to illustrate the above definitions.

Example 1

We consider a CGS scenario, shown in Fig. 2, where two trains, each controlled by an agent, go through a tunnel from opposite sides. The tunnel has room for only one train, and each agent can either wait or go. Starting from state , if the agents choose to go simultaneously, the trains crash, which is state ; if one agent goes and the other waits, they can both successfully go through the tunnel without crashing, which is .

Local rules and are prescribed for the agents to follow: both agents communicate with each other about their urgency and in state ; the more urgent one goes through the tunnel first and the other has to wait for it; after that, the agent who waited can go. We formalize as follows. In state , and ,

In state and , and , and

Given the above local rules, if is more urgent than or as urgent as w.r.t. and , the desired state is reached along computation ; if is less urgent than w.r.t. and , the desired state is reached along computation . As we can see, no legal computation is prescribed by the system designers, because it depends on the urgencies provided by the agents themselves. Instead, the agents find out by themselves how to get to the desired state by following their local rules. Certainly, the agents could cooperate to cross the tunnel successfully without communication, but that would require an external entity who knows the actions available to each train and the game structure to make a plan for them, which is not allowed in a self-organizing multi-agent system. In a self-organizing multi-agent system, neither train knows the actions available to the other, but each can follow its local rule and act on the communication result instead of listening to an external entity.
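The two-train example can be simulated with the minimal sketch below. The state names (`start`, `a_passed`, `b_passed`, `both_passed`, `crash`), the numeric urgencies and the exact transitions are our own assumptions about Fig. 2, chosen to match the description above: ties are resolved in favour of train a, and once one train has passed, the waiting train goes.

```python
from typing import Dict, FrozenSet, List, Tuple

AGENTS = ("a", "b")


def delta(q: str, joint: Tuple[str, str]) -> str:
    """Hypothetical deterministic transitions; joint = (action of a, action of b)."""
    if q == "start":
        return {("go", "go"): "crash", ("go", "wait"): "a_passed",
                ("wait", "go"): "b_passed", ("wait", "wait"): "start"}[joint]
    if q == "a_passed":  # a is through, only b's action matters
        return "both_passed" if joint[1] == "go" else q
    if q == "b_passed":  # b is through, only a's action matters
        return "both_passed" if joint[0] == "go" else q
    return q  # "crash" and "both_passed" are absorbing


def local_rule(agent: str, q: str, urgency: Dict[str, int]) -> Tuple[FrozenSet[str], str]:
    """Return (agents consulted in q, prescribed action) for one train."""
    if q == "start":
        consulted = frozenset(AGENTS)  # both trains exchange urgencies
        info = {x: urgency[x] for x in consulted}
        first = "a" if info["a"] >= info["b"] else "b"  # ties favour a
        return consulted, ("go" if agent == first else "wait")
    consulted = frozenset({agent})  # only the train's own information
    goes = {"a_passed": "b", "b_passed": "a"}.get(q)
    return consulted, ("go" if agent == goes else "wait")


def computation(urgency: Dict[str, int], n: int = 3) -> List[str]:
    """Prefix of the unique computation in which both trains follow their rules."""
    path = ["start"]
    for _ in range(n):
        q = path[-1]
        joint = tuple(local_rule(x, q, urgency)[1] for x in AGENTS)
        path.append(delta(q, joint))
    return path


print(computation({"a": 2, "b": 1}))  # ['start', 'a_passed', 'both_passed', 'both_passed']
print(computation({"a": 1, "b": 3}))  # ['start', 'b_passed', 'both_passed', 'both_passed']
```

The urgencies are supplied by the trains themselves, so neither computation is written down by the designer; it emerges from the rules and the communicated values.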

2.2 Full Contribution

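Similar to ATL [2], our language ATL- is interpreted over a concurrent game structure that has the same propositions and agents. It extends classical propositional logic with temporal cooperation modalities and path quantifiers. A formula of the form $\langle\langle A \rangle\rangle \phi$ means that coalition of agents $A$ will bring about the subformula $\phi$ by following their respective local rules in $N_A$, no matter what the agents in $\Sigma \setminus A$ do, where $\phi$ is a temporal formula of the form $\bigcirc \varphi$, $\square \varphi$ or $\varphi_1 \, \mathcal{U} \, \varphi_2$ (where $\varphi$, $\varphi_1$, $\varphi_2$ are again formulas in our language). Formally, the grammar of our language is defined below, where $p \in \Pi$ and $A \subseteq \Sigma$:

$$\varphi ::= p \mid \neg \varphi \mid \varphi_1 \wedge \varphi_2 \mid \langle\langle A \rangle\rangle \bigcirc \varphi \mid \langle\langle A \rangle\rangle \square \varphi \mid \langle\langle A \rangle\rangle \varphi_1 \, \mathcal{U} \, \varphi_2$$

Given a self-organizing multi-agent system $\mathcal{M} = (S, N)$, where $S$ is a concurrent game structure and $N$ is a set of local rules, and a state $q$, we define the semantics with respect to the satisfaction relation $\models$ inductively as follows, where $out(q, N_A)$ is the set of computations starting from $q$ in which the agents in $A$ follow their local rules in $N_A$, and $\lambda[i]$ is the $i$th state of computation $\lambda$:

- $\mathcal{M}, q \models p$ iff $p \in \pi(q)$;
- $\mathcal{M}, q \models \neg \varphi$ iff $\mathcal{M}, q \not\models \varphi$;
- $\mathcal{M}, q \models \varphi_1 \wedge \varphi_2$ iff $\mathcal{M}, q \models \varphi_1$ and $\mathcal{M}, q \models \varphi_2$;
- $\mathcal{M}, q \models \langle\langle A \rangle\rangle \bigcirc \varphi$ iff for all $\lambda \in out(q, N_A)$, we have $\mathcal{M}, \lambda[1] \models \varphi$;
- $\mathcal{M}, q \models \langle\langle A \rangle\rangle \square \varphi$ iff for all $\lambda \in out(q, N_A)$ and all positions $i \geq 0$ it holds that $\mathcal{M}, \lambda[i] \models \varphi$;
- $\mathcal{M}, q \models \langle\langle A \rangle\rangle \varphi_1 \, \mathcal{U} \, \varphi_2$ iff for all $\lambda \in out(q, N_A)$ there exists a position $i \geq 0$ such that $\mathcal{M}, \lambda[i] \models \varphi_2$ and for all positions $0 \leq j < i$ it holds that $\mathcal{M}, \lambda[j] \models \varphi_1$.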

Dually, we write for . Importantly, when we say a coalition of agents ensures a temporal formula by following their respective local rules, we mean that the agents in the coalition ensure the temporal formula if they take the actions that their local rules return, no matter whether the agents outside the coalition take the actions they are allowed to take or not. This follows merely from our semantics; agents’ dependence relation in terms of communication does not play a role here.

Proposition 2.1

Given an SOMAS and a coalition , for any coalition , it holds that

Proof

Because , computation set . Thus, if , meaning that holds in all , then will also hold in all . Therefore, .

This means that if a coalition ensures a temporal formula, then any coalition containing it also ensures that temporal formula. Notice that can be the whole agent set .

Example 2

According to the local rules in the two-train example, the more urgent train goes through the tunnel first and the other has to wait for it. A single train cannot bring about passing through the tunnel without a crash by following its local rule, which can be expressed as:

Instead, both trains have to cooperate to bring about the result. Thus, the two agents together can bring about passing through the tunnel without a crash by following their local rules, which can be expressed as:

As mentioned in the introduction, because a self-organizing multi-agent system has autonomous agents and local interactions between them, it is unclear how the local components lead to the global behavior of the system, which is why such systems are usually evaluated through implementation. One possible way to understand the complex link between local agent behavior and system-level behavior is to divide the system into components, each of which contributes to the global behavior of the system and is independent of agents outside it. The principle is that, when studying the behavior of one component, we do not need to consider influences from agents outside the component. The independence between components in terms of their contributions to the global behavior of the system allows us to understand the complex link. With this idea, we first define the notion of independent components over a self-organizing multi-agent system.

Definition 2.5 (Independent Components)

Given an SOMAS , a coalition of agents is an independent component w.r.t. a state iff for all and its abstract local rule it is the case that ; a coalition of agents is an independent component w.r.t. a set of computations iff for all and is an independent component w.r.t. state .

An independent component w.r.t. state is a coalition of agents that only gets input information from agents inside coalition in state . In other words, an independent component might output information to agents in , but never gets input from agents in .
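The state-wise condition of Definition 2.5 amounts to a subset test for every member of the coalition. The minimal sketch below assumes a hypothetical function `consults(agent, q)` standing for the first component of the agent's local rule (the agents it communicates with in state q), and applies it to a hypothetical encoding of the two-train communication pattern, with our own state names `start` and `a_passed`.

```python
from typing import Callable, FrozenSet

Consults = Callable[[str, str], FrozenSet[str]]


def is_independent_component(coalition: FrozenSet[str], q: str, consults: Consults) -> bool:
    """Definition 2.5 at one state: every member consults only members.

    consults(agent, q) is a hypothetical stand-in for the first component of
    the agent's local rule, i.e. the agents it communicates with in state q.
    """
    return all(consults(agent, q) <= coalition for agent in coalition)


def train_consults(agent: str, q: str) -> FrozenSet[str]:
    # Hypothetical two-train communication: both trains exchange urgencies in
    # "start"; in every other state each train only uses its own information.
    return frozenset({"a", "b"}) if q == "start" else frozenset({agent})


print(is_independent_component(frozenset({"a"}), "start", train_consults))       # False
print(is_independent_component(frozenset({"a", "b"}), "start", train_consults))  # True
print(is_independent_component(frozenset({"b"}), "a_passed", train_consults))    # True
```

Under this encoding the empty coalition passes vacuously, since it has no members that could receive outside input.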

Proposition 2.2

Given an SOMAS and two coalitions and where , if both and are independent components, then is also an independent component.

Proof

Because is an independent component and , agents in do not get input from agents in . For the same reason, agents in do not get input from agents in . Thus, agents in do not get input from agents in . Therefore, is also an independent component.

Example 3

In the two-train example, since both trains need to communicate with each other in state , as their local rules require, neither nor is an independent component w.r.t. state . Because the system consists of only two trains, the two trains together form an independent component w.r.t. state . Because in states and each train only uses its own urgency to go ahead, is an independent component w.r.t. state and is an independent component w.r.t. state .

Using the notion of independent components and our language, we then propose the notions of semantic independence, structural independence and full contribution to characterize the independence between agents from different perspectives.

Definition 2.6 (Semantic Independence, Structural Independence and Full Contribution)

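Given an SOMAS, a coalition of agents $A$ and a state $q$,

- $A$ is semantically independent with respect to a temporal formula $\phi$ from $q$ iff $A$ ensures $\phi$ from $q$ by following its local rules, no matter what the other agents do;
- $A$ is structurally independent from $q$ iff $A$ is an independent component w.r.t. the set of computations starting from $q$ in which the agents in $A$ follow their local rules;
- $A$ has full contribution to $\phi$ in $q$ iff $A$ is the minimal (w.r.t. set-inclusion) coalition that is both semantically independent with respect to $\phi$ and structurally independent from $q$.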

The notion of full contribution captures the property of coalition in terms of independence from two different perspectives: semantically, coalition ensures by following its local rules no matter what other agents do; structurally, coalition does not communicate with other agents when following its local rules, no matter what other agents do. Notice that when we say coalition has full contribution to in , coalition must be the minimal coalition that is both semantically independent with respect to and structurally independent from state . This is because there might exist multiple coalitions that are both semantically independent with respect to and structurally independent from state , and the set of all agents is typically one of them. In other words, coalition has full contribution to because every proper subset of coalition is either semantically dependent with respect to or structurally dependent. The following example illustrates why we need both semantic independence and structural independence to characterize the full contribution of a coalition of agents.

Example 4

Consider the transition system in Fig. 3 (top). is interpreted as an action vector where agent performs action and agent does whatever he can. Local rules are prescribed for agents and : in state , needs to communicate with for some valuable information and is supposed to do based on the communication result. As we can see from the structure, brings about no matter what does, which means that coalition is semantically independent. However, since needs to communicate with in state , coalition is not structurally independent.

Consider the transition system in Fig. 3 (bottom). is interpreted as an action vector where agent performs action and agent deviates from action . Similarly for . Local rules are prescribed for agents and : in state , the agents do not need to communicate with each other and are supposed to do . As we can see from the structure, and together can bring about by following their local rules, but neither nor can achieve that alone, which means that neither coalition nor is semantically independent. But since they do not need to communicate with each other, each of them is structurally independent.
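Definition 2.6 can be read as a search over coalitions, and the two structures of Example 4 can be replayed with the minimal sketch below. It assumes that semantic and structural independence are already available as Boolean predicates on coalitions, and it checks minimality w.r.t. set-inclusion by testing every proper subset, including the empty coalition. The predicates are hand-written, hypothetical encodings of Fig. 3 rather than values computed from a model.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Iterator

Coalition = FrozenSet[str]
Predicate = Callable[[Coalition], bool]


def proper_subsets(coalition: Coalition) -> Iterator[Coalition]:
    """All proper subsets of the coalition, including the empty one."""
    members = sorted(coalition)
    for size in range(len(members)):
        for combo in combinations(members, size):
            yield frozenset(combo)


def has_full_contribution(coalition: Coalition, sem: Predicate, struct: Predicate) -> bool:
    """Both independences hold for the coalition and for none of its proper subsets."""
    if not (sem(coalition) and struct(coalition)):
        return False
    return not any(sem(sub) and struct(sub) for sub in proper_subsets(coalition))


# Example 4 (top), hypothetical encoding: a alone ensures the goal, but a consults b.
def sem_top(A: Coalition) -> bool:
    return "a" in A


def struct_top(A: Coalition) -> bool:
    return "a" not in A or "b" in A  # b consults nobody


# Example 4 (bottom), hypothetical encoding: only a and b together ensure the goal.
def sem_bottom(A: Coalition) -> bool:
    return {"a", "b"} <= A


def struct_bottom(A: Coalition) -> bool:
    return True  # nobody communicates


print(has_full_contribution(frozenset({"a"}), sem_top, struct_top))             # False
print(has_full_contribution(frozenset({"a", "b"}), sem_top, struct_top))        # True
print(has_full_contribution(frozenset({"a"}), sem_bottom, struct_bottom))       # False
print(has_full_contribution(frozenset({"a", "b"}), sem_bottom, struct_bottom))  # True
```

The loop visits up to 2^|A| - 1 proper subsets, which corresponds to the subset enumeration used in the proof of Proposition 3.3.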

As for the two-train example, neither train alone, i.e., neither nor as a coalition, has full contribution to passing through the tunnel without a crash, for the following reasons. A single train cannot ensure passing through the tunnel without a crash, so no single train is semantically independent. Moreover, both trains follow their local rules to communicate with each other in state , so no single train is an independent component w.r.t. state , and thus no single train is structurally independent.

The two trains together have full contribution to passing through the tunnel without a crash: both agents together can bring about this result by following the local rules; the coalition of the two trains is obviously an independent component w.r.t. , which means that it is structurally independent; and it is obviously the minimal coalition that is both semantically independent w.r.t. the result of no crash and structurally independent from state .

Proposition 2.3

Given an SOMAS , a state and a temporal formula , there do not exist two different coalitions and such that and both and have full contribution to in .

Proof

Suppose there exist two different coalitions and that have full contribution to , which means that both and are the minimal (w.r.t. set-inclusion) coalitions that are both semantically independent with respect to and structurally independent from . Because and , we have . Because and both and are independent components, is also an independent component. Therefore, is both semantically independent with respect to and structurally independent from . If , is not the minimal (w.r.t. set-inclusion) coalition that is both semantically independent with respect to and structurally independent from . If , which means that , then , not , is the minimal (w.r.t. set-inclusion) coalition that is both semantically independent with respect to and structurally independent from . Contradiction!

The above proposition is consistent with the following intuition: when coalition semantically ensures by following its local rules but is not structurally independent from , meaning that agents in need to communicate with agents outside to ensure , we enlarge the coalition by including the agents with whom the agents in communicate. The resulting coalition is the minimal (w.r.t. set-inclusion) coalition that is both semantically independent with respect to and structurally independent from , and it is unique.

3 Model Checking

Our logic-based framework provides another approach to verifying a self-organizing multi-agent system. If we only care whether the system will bring about a property, we only need to check whether an ATL- formula for the whole set of agents is satisfied in a certain state; if we want to know whether a coalition of agents brings about a property independently, we need to check whether the coalition has full contribution to a temporal formula in a certain state. In this section, we investigate how difficult it is to answer these two model-checking problems. We first consider the model-checking problem for ATL-. To answer it, we extend our concurrent game structure by adding new propositions to states that indicate, for each local rule , whether or not it is followed by the corresponding agent. For this purpose, we define the extended game structure as follows:

- . In other words, a state of the form corresponds to the game structure being in state at the beginning of a computation, and a state of the form corresponds to being in state during a computation whose previous state was .
- For each agent , there is a new proposition followed; that is .
- For each state of the form , we have ; for each state , we have
- For each player and each state , we have ;
- For each state and each move vector , we have .

This is how we transform into , and each computation in corresponds to a computation in . The new propositions allow us to identify the computations that follow the local rules. Using , we encode agents’ following of local rules as propositions in the states. Therefore, evaluating formulas of the form over states of can be reduced to evaluating ATL∗ formulas over states of . In classic ATL [2], a formula of the form means there exists a set of strategies, one for each player in , to bring about , which can be interpreted as agents’ capacity of bringing about a property and differs from the semantics of in this paper. Namely, given a SOMAS , a state and a set of agents , a formula holds iff the state of the extended game structure satisfies the following ATL∗ formula:

As we can see, even though coalition of agents has the capacity to bring about , formula does not necessarily hold, because coalition has to follow its local rules to achieve it. To see why, consider the two-train example.

Example 5

Suppose we change the local rules to be the following:

When both trains have the same urgency, following their local rules to wait results in deadlock instead of passing through the tunnel, which can be expressed as

However, both trains can clearly cooperate to pass through the tunnel without a crash, which can be expressed as

Although checking an ATL- formula reduces to checking a logically equivalent ATL∗ formula, it can still be done efficiently, with complexity bounds much lower than those for general ATL∗ model checking.

Proposition 3.1

The model-checking problem for ATL- can be solved in time for a self-organizing multi-agent system with states, a coalition of agents and a formula of length .

Proof

We adopt the proof strategy from [2]. We first construct the extended game structure , where the obedience/violation of local rules is encoded in the structure. To verify whether a temporal formula can be enforced by a coalition of agents following its local rules in a self-organizing multi-agent system at state , we need to check formula , which can be reduced to evaluating an ATL∗ formula for and :

We then construct a 2-player turn-based synchronous game , where player 1 controls all the actions of coalition (called A-moves), leading to an auxiliary state, after which player 2 controls all the actions of coalition (called B-moves), leading to the next state of the original transition. Such a game can be interpreted as an AND-OR graph, for which certain invariance and reachability problems can be solved in linear time. Because following the local rules is a necessary condition for coalition to win the game, we can remove all outgoing A-moves in from a state where holds, together with their outgoing transitions, which can be done in time polynomial in the number of states and the number of agents in . If the original game structure has states, then the turn-based synchronous structure has states. We then perform standard model checking for in , which can be done in time polynomial in the length of . Therefore, checking formula is in the complexity of .
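The core of the rule-restricted check can also be sketched directly on the original structure rather than on the turn-based game used in the proof: pin the coalition's moves to the actions returned by its local rules, let the other agents range over all available actions, and compute a least fixpoint backwards from the goal states. This is a swapped-in naive fixpoint (each pass rescans all states), not the linear-time AND-OR-graph algorithm of [2]. The two-train encoding, including the state names and the fixed outcome of the urgency comparison (train a at least as urgent as b), is hypothetical.

```python
from itertools import product
from typing import Callable, FrozenSet, Iterable, Sequence, Set, Tuple

State = str
Agent = str
Joint = Tuple[str, ...]


def forced_until(
    states: Iterable[State],
    agents: Sequence[Agent],
    avail: Callable[[Agent, State], Iterable[str]],
    delta: Callable[[State, Joint], State],
    coalition: FrozenSet[Agent],
    rule_action: Callable[[Agent, State], str],
    phi1: Callable[[State], bool],
    phi2: Callable[[State], bool],
) -> Set[State]:
    """States where <<A>> phi1 U phi2 holds when A follows its local rules."""
    states = list(states)

    def successors(q: State) -> Set[State]:
        # Coalition members are pinned to their rule action; others are free.
        choices = [[rule_action(a, q)] if a in coalition else sorted(avail(a, q))
                   for a in agents]
        return {delta(q, joint) for joint in product(*choices)}

    win = {q for q in states if phi2(q)}
    changed = True
    while changed:  # least fixpoint: states are only ever added
        changed = False
        for q in states:
            if q not in win and phi1(q) and successors(q) <= win:
                win.add(q)
                changed = True
    return win


# Hypothetical two-train encoding where train a is at least as urgent as b.
TRAIN_STATES = ["start", "a_passed", "b_passed", "both_passed", "crash"]


def train_delta(q: State, joint: Joint) -> State:
    if q == "start":
        return {("go", "go"): "crash", ("go", "wait"): "a_passed",
                ("wait", "go"): "b_passed", ("wait", "wait"): "start"}[joint]
    if q == "a_passed":
        return "both_passed" if joint[1] == "go" else q
    if q == "b_passed":
        return "both_passed" if joint[0] == "go" else q
    return q


def train_rule(agent: Agent, q: State) -> str:
    goes = {"start": "a", "a_passed": "b", "b_passed": "a"}.get(q)
    return "go" if agent == goes else "wait"


def check(coalition: FrozenSet[Agent]) -> bool:
    win = forced_until(TRAIN_STATES, ("a", "b"), lambda a, q: ("go", "wait"),
                       train_delta, coalition, train_rule,
                       phi1=lambda q: q != "crash", phi2=lambda q: q == "both_passed")
    return "start" in win


print(check(frozenset({"a", "b"})))  # True
print(check(frozenset({"a"})))       # False
print(check(frozenset({"b"})))       # False
```

The result for `{"a"}` matches Example 2: train a following its rule cannot rule out train b also going, and hence a crash, whereas the two trains following their rules together guarantee that both pass.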

We then measure the complexity of verifying a self-organizing multi-agent system, namely checking whether a coalition of agents has full contribution to a temporal formula in a certain state. A coalition has full contribution to a temporal formula in a state if and only if it is the minimal (w.r.t. set-inclusion) coalition that is both semantically independent with respect to that temporal formula and structurally independent from that state. To verify structural independence, we can encode agents’ communication in the structure, as we did for the obedience/violation of local rules. Namely, given a coalition , we define the extended game structure as follows:

- For each agent , there is a new proposition ; that is .
- .

The new propositions allow us to identify the states where agents in only get input from agents inside the coalition. We then construct the extended game structure , in which the obedience or violation of local rules is encoded. Therefore, evaluating whether a coalition of agents is an independent component with respect to can again be reduced to evaluating standard ATL formulas over states of .
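To make the role of the new propositions concrete, the following sketch labels each state with the members of a coalition whose proposition holds there, that is, the members that get input only from inside the coalition. The per-state input relation (a dictionary from each agent to the set of agents it gets input from) is an assumed representation; the paper encodes this information in the extended game structure instead.

```python
def label_states(inputs_at, coalition):
    """Label each state with the coalition members that, in that state,
    get input only from agents inside the coalition.

    inputs_at: dict state -> dict agent -> set of agents it gets input from
               (an assumed representation, for illustration only).
    """
    coalition = frozenset(coalition)
    labels = {}
    for state, inputs in inputs_at.items():
        labels[state] = {a for a in coalition
                         if inputs.get(a, set()) <= coalition}
    return labels


def fully_internal_states(inputs_at, coalition):
    """States in which every coalition member gets input only from inside."""
    coalition = frozenset(coalition)
    return {s for s, members in label_states(inputs_at, coalition).items()
            if members == coalition}
```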

Proposition 3.2

The model-checking problem for structural independence can be solved in time for a self-organizing multi-agent system with states and a coalition of agents .

Proof

Given a SOMAS , a state and a set of agents , is an independent component with respect to iff the state of the extended game structure satisfies the following ATL∗ formula:

As we have to check for each agent in , checking the above formula is in the complexity of .

With the results of Propositions 3.1 and 3.2, we can measure the complexity of verifying whether a coalition of agents has full contribution to a temporal formula in a certain state.

Proposition 3.3

The model-checking problem for verifying the full contribution of a coalition of agents can be solved in time for a self-organizing multi-agent system with states, a coalition of agents and a formula of length .

Proof

Given a SOMAS , a state , a set of agents and a temporal formula with the length of , we need to follow Definition 2.6 to verify whether coalition has full contribution to in . Since we know that the model-checking problem for ATL- can be solved in time , and that the model-checking problem for structural independence can be solved in time , checking semantic and structural independence can be solved in time . Moreover, we need to ensure that is the minimal coalition that is both semantically independent and structurally independent. Hence, we have to check every subset of for its semantic independence and structural independence. Therefore, checking the full contribution of a coalition of agents in a state is in the complexity of .
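The exponential factor comes from the minimality check over all subsets of the coalition. The sketch below makes that loop explicit; `sem_indep` and `struct_indep` are hypothetical oracles standing in for the model-checking calls of Propositions 3.1 and 3.2, and treating the empty coalition as one of the proper subsets to test is our assumption.

```python
from itertools import combinations


def full_contribution_bruteforce(coalition, sem_indep, struct_indep):
    """Decide full contribution by testing the coalition and all of its
    proper subsets; up to 2**len(coalition) coalitions are examined."""
    agents = sorted(coalition)
    whole = frozenset(agents)
    # The coalition itself must be semantically and structurally independent.
    if not (sem_indep(whole) and struct_indep(whole)):
        return False
    # Minimality: no proper subset (empty set included) may pass both tests.
    for k in range(len(agents)):
        for sub in combinations(agents, k):
            part = frozenset(sub)
            if sem_indep(part) and struct_indep(part):
                return False
    return True
```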

4 Decomposing a Self-organizing Multi-agent System: a Layered Approach

In Section 2 we explored the dependence relation between agents from both structural and semantic perspectives. However, it remains unclear how agents guided by their respective local rules bring about the global behavior of the system. If we check formula and it returns true, this only shows that all the agents in the system together have full contribution to the global behavior of the system , which explains nothing about how that behavior arises. We could enumerate all possible coalitions of agents to check their contributions to the global behavior of the system, but this can be computationally expensive, since the number of coalitions grows exponentially with the number of agents. Inspired by the decomposition approach in argumentation [21], we propose to decompose the system into different coalitions based on their dependence relation such that there is a partial order among different coalitions. Since a directed graph with nodes and arrows can better represent the dependence relation, we define a notion of a dependence graph w.r.t. a set of computations. Formally,

Definition 4.1 (Dependence Graph)

Given an SOMAS , a dependence graph w.r.t. a set of computations is a directed graph where and there exists and such that . Typically, given a state and a coalition of agents , when is a dependence graph w.r.t. a set of computations , we will denote it as .

In words, a dependence graph w.r.t. a set of computations consists of a set of nodes , which are the agents in , and a set of arrows , each of which indicates that one agent gets input from another agent in some state along some computation in . This graph allows us to analyze the dependence relation between agents, and between coalitions of agents, with respect to a set of computations.
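A dependence graph can be assembled directly from this description. In the sketch below, computations are assumed to be given as finite lists of states (for instance, a prefix long enough to cover every state the computation visits), and `inputs(state)` is a hypothetical function returning, for each agent, the set of agents it gets input from in that state. An arrow (b, a) records that a gets input from b, so the parents of a node are the agents it gets input from.

```python
def dependence_graph(agents, computations, inputs):
    """Return (nodes, arrows) where (sender, receiver) is an arrow whenever
    the receiver gets input from the sender in some state of some computation.

    computations: iterable of finite lists of states (assumed encoding)
    inputs(state): hypothetical; dict receiver -> set of senders in that state
    """
    arrows = set()
    for computation in computations:
        for state in computation:
            for receiver, senders in inputs(state).items():
                for sender in senders:
                    if sender != receiver:
                        arrows.add((sender, receiver))
    return set(agents), arrows
```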

Proposition 4.1

Given an SOMAS , a coalition is an independent component w.r.t. a set of computations iff does not get input from agents outside of in the corresponding dependence graph .

Proof

Coalition is an independent component w.r.t. a set of computations iff for all and , is an independent component w.r.t. state , iff for all and and it is the case that , which means that for all and and , does not get input from agents in , iff each gets no input from agents outside of in the corresponding dependence graph .

Hence, we can check whether a coalition of agents is an independent component w.r.t. simply by checking its corresponding dependence graph.
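Proposition 4.1 turns independence into a purely graph-theoretic test: no arrow may enter the coalition from outside. A minimal sketch, with the dependence graph given as a set of (sender, receiver) pairs (an assumed encoding):

```python
def is_independent_component(coalition, arrows):
    """True iff no arrow (sender, receiver) has its sender outside the
    coalition and its receiver inside it."""
    members = frozenset(coalition)
    return not any(s not in members and r in members for s, r in arrows)
```

Because restricting to fewer computations can only remove arrows, a coalition that passes this test for a set of computations also passes it for any subset of that set, which is the content of Proposition 4.2.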

Proposition 4.2

Given an SOMAS and two sets of computations , if a coalition of agents is an independent component w.r.t. a set of computations , then is also an independent component w.r.t. any set of computations where .

Proof

Let be the dependence graph of and be the dependence graph of . Because , is a spanning subgraph of . Therefore, if does not get input from other agents in , will also not get input from other agents in , which means that if is an independent component w.r.t. , then is also an independent component w.r.t. .

In particular, given an SOMAS , if a coalition of agents is an independent component w.r.t. , then is also an independent component w.r.t. ; if is not an independent component w.r.t. , then is also not an independent component w.r.t. . In this section, we will use a more complicated example to illustrate our definitions.

Example 6

A multi-agent system consists of several agents, each of which has a specific capacity that is not common knowledge among agents. A complicated task is delegated to the agents, and the agents have to cooperate to finish the task in a self-organizing way. The local rule for each agent is as follows: each agent works on one part of the task based on its capacity; once its part is finished, it passes the rest of the task via wireless signals to other agents who can continue, until the whole task is finished. We will use our framework to study the contributions of agents and how they cooperate to finish the whole task. Given agents’ capacities, the whole task is finished along the computation . The dependence graph w.r.t. is shown in Fig. 4 (left); it consists of 5 agents and the communication between them regarding the task information.

A dependence graph clearly illustrates the dependence relation between agents. When there are cycles in a dependence graph, we can shrink them down to single nodes using the theory of strongly connected components and condensation graphs from graph theory. Because this is not the main concern of this paper, we omit the process of transforming a dependence graph into a condensation graph and assume that the dependence graph under consideration is a directed acyclic graph. As mentioned before, we want to decompose the system such that there is a partial order of dependence among coalitions of agents. How to decompose the system based on the dependence graph is the problem we now need to solve. Similar to the solution in [21], we use a layering decomposition approach in this paper. We first propose the notion of layer. Formally,

Definition 4.2 (Layer of an Agent)

Given a dependence graph w.r.t. a set of computations , for all , the layer of is a function , and is defined as:

- if has no parent in , then ;
- otherwise, ,

We use to denote the highest layer.

The above definition determines the layer of an agent in a given dependence graph in two cases. If has no parent in the dependence graph , then is located in the lowest layer, since it does not depend on the behavior of any other agent. If has at least one parent, then is located in the layer above all of its parents. A self-organizing multi-agent system can be decomposed into a number of layers. Formally,

Definition 4.3 (Decomposition of an SOMAS)

Given an SOMAS , a decomposition of w.r.t. a set of computations , denoted as , is a tuple

where ().
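Definitions 4.2 and 4.3 can be computed with a memoized traversal. The sketch below assumes an acyclic dependence graph given as nodes plus (sender, receiver) arrows, and reads the layer of an agent that has parents as one above the highest layer among its parents, following the description of an agent being located in the layer above all of its parents; it raises an error if it meets a cycle.

```python
def layers(nodes, arrows):
    """Map each agent to its layer: 0 if it has no parent, otherwise one above
    the highest layer among its parents (parents = agents it gets input from)."""
    parents = {n: set() for n in nodes}
    for sender, receiver in arrows:
        parents[receiver].add(sender)
    memo, visiting = {}, set()

    def layer(a):
        if a in memo:
            return memo[a]
        if a in visiting:
            raise ValueError("dependence graph has a cycle; condense it first")
        visiting.add(a)
        memo[a] = 0 if not parents[a] else 1 + max(layer(p) for p in parents[a])
        visiting.discard(a)
        return memo[a]

    return {a: layer(a) for a in nodes}


def decompose(nodes, arrows):
    """Return the tuple (L0, L1, ..., Lmax) of layers as frozensets."""
    layer_of = layers(nodes, arrows)
    top = max(layer_of.values(), default=-1)
    return tuple(frozenset(a for a in layer_of if layer_of[a] == i)
                 for i in range(top + 1))
```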

Using this decomposition approach, agents in the same layer are independent of each other, and agents in a given layer depend only on agents located in lower layers. This is expressed by the following proposition:

Proposition 4.3

Given an SOMAS and a decomposition , for any ():

- if , then and do not get input from each other;
- if gets input from , then .

Proof

If , agents and are on the same level. For located on , if , it has no parent, thus it does not get input from ; if , the parents of are located below , thus cannot reach , meaning that does not get input from . Similarly, does not get input from . If gets input from , then there exists an arrow coming from to . According to our decomposition approach, is located below . Hence, .
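Proposition 4.3 amounts to saying that every arrow points strictly upward through the layers. The sketch below checks that condition for a given layer assignment and, because the condition guarantees that all parents of an agent lie in lower layers, uses it to build each agent's ancestor closure bottom-up in a single pass over the layers; each such closure is a coalition that gets no input from outside itself. The dictionary and tuple encodings are assumptions made for illustration.

```python
def arrows_respect_layers(layer_of, arrows):
    """True iff every arrow (sender, receiver) goes from a strictly lower layer
    to a strictly higher one, which covers both items of Proposition 4.3."""
    return all(layer_of[s] < layer_of[r] for s, r in arrows)


def closures_by_layer(decomposition, arrows):
    """Map each agent to the coalition made of the agent and all its ancestors.

    decomposition: tuple (L0, L1, ...) of sets of agents.
    Processing layers bottom-up works because parents always lie lower.
    """
    parents = {}
    for s, r in arrows:
        parents.setdefault(r, set()).add(s)
    closure = {}
    for layer in decomposition:
        for a in layer:
            acc = {a}
            for p in parents.get(a, ()):
                acc |= closure[p]  # already computed, since p is in a lower layer
            closure[a] = frozenset(acc)
    return closure
```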

Thus, we organize the system so that there is a partial order of dependence among agents located in different layers. This layered structure helps us to efficiently find, from the dependence graph, coalitions of agents that do not get input from other agents. Starting from , any coalition located in does not get input from other agents. We then go to , where each agent combined with its parents, which are located in lower layers, does not get input from other agents. After that, we move up layer by layer until we reach ; at higher layers, an agent must be combined with all of its ancestors, not only its parents, to obtain such a coalition. We have the following theorem to check whether a coalition of agents has full contribution to a temporal formula in a state.

Theorem 4.1

Given an SOMAS , a coalition of agents fully contributes to a temporal formula in a state iff satisfies the following conditions:

- does not get input from agents in in the dependence graph ;
- ;
- for any , if it does not get input from agents in in , then .

Proof

For the first condition, coalition does not get input from agents in in the dependence graph if and only if coalition is an independent component w.r.t. , which means the coalition is structurally independent. The second condition ensures that is semantically independent w.r.t. . For the third condition, for any subset of , if it gets input from agents in in , it is not structurally independent; if it does not get input from agents in in and , it is structurally independent but not semantically independent. Thus, any is either not structurally independent or not semantically independent, which means that is the minimal (w.r.t. set-inclusion) coalition that is both semantically independent with respect to and structurally independent from . Therefore, fully contributes to a temporal formula in a state .

It indicates how to verify whether a coalition of agents has full contribution to in state : structurally, we need to check whether does not get input from agents outside in the dependence graph of ; semantically, we need to check whether ensures by following local rules; for any of its proper subsets , if has been confirmed to be structurally independent w.r.t. , then we need to check whether it is not semantically independent w.r.t. . In other words, for any proper subsets of , we need to ensure it is either not structurally independent or not semantically independent w.r.t. from state .
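The three conditions of Theorem 4.1 translate into the following sketch. The dependence graph is a set of (sender, receiver) arrows, and `ensures` is a hypothetical oracle standing in for the model-checking call that decides whether a coalition enforces the temporal formula by following its local rules. Thanks to short-circuit evaluation, the oracle is only consulted for subsets that already passed the cheap structural test on the graph. Treating the empty coalition as a proper subset to be tested is an assumption.

```python
from itertools import combinations


def fully_contributes(coalition, arrows, ensures):
    """Check the three conditions of Theorem 4.1 for `coalition`."""
    A = frozenset(coalition)

    def structurally_independent(B):
        # No arrow enters B from an agent outside B.
        return not any(s not in B and r in B for s, r in arrows)

    # Conditions 1 and 2: A is structurally and semantically independent.
    if not (structurally_independent(A) and ensures(A)):
        return False
    # Condition 3: no proper subset is both structurally and semantically independent.
    for k in range(len(A)):
        for sub in combinations(sorted(A), k):
            B = frozenset(sub)
            if structurally_independent(B) and ensures(B):
                return False
    return True
```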

Given a finite set of temporal formulas tformulas that we would like to verify, we need a procedure that examines the contribution of agents to the global system behavior efficiently rather than checking arbitrary coalitions. The results from Proposition 4.3 and Theorem 4.1 can be used to design such a procedure. According to Proposition 4.2, if a coalition of agents is not an independent component w.r.t. , then it is also not an independent component w.r.t. . Thus, we investigate the dependence graph of so that we can rule out the coalitions that are not independent components in . Given a computation and its corresponding dependence graph , we first find all the coalitions of agents that do not get input from other agents according to the result of Proposition 4.3, ordering them by set inclusion. From the first coalition to the last coalition in this order, we follow Theorem 4.1 to check whether it has full contribution to any temporal property in tformulas. In this part, after we confirm that a coalition of agents is structurally and semantically independent w.r.t. a temporal formula , we check whether , which does not get input from other agents in , also brings about . If not, then is the minimal (w.r.t. set-inclusion) coalition that is both semantically independent with respect to and structurally independent from . We provide the following pseudocode to illustrate the process.

When it is finished, we collect the information about agents’ full contributions in a set, which allows us to understand how different coalitions of agents contribute to the global system behavior.
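The procedure can be sketched in Python as follows; this is an illustrative reconstruction under stated assumptions, not the authors' pseudocode. Candidate coalitions are the non-empty independent components of the dependence graph, generated as unions of per-agent ancestor closures (a coalition gets no input from outside exactly when it contains the ancestors of its members). They are visited by increasing size, which is a linear extension of set inclusion, and `ensures(C, phi)` is a hypothetical model-checking oracle. A coalition is recorded for a formula only if it enforces the formula and no smaller coalition already recorded for that formula is contained in it, which implements the minimality check described above.

```python
def independent_components(nodes, arrows):
    """All non-empty coalitions that get no input from outside themselves."""
    parents = {n: set() for n in nodes}
    for s, r in arrows:
        parents[r].add(s)

    def closure(a):
        # The agent together with all of its ancestors.
        seen, todo = set(), [a]
        while todo:
            x = todo.pop()
            if x not in seen:
                seen.add(x)
                todo.extend(parents[x])
        return frozenset(seen)

    basic = {closure(a) for a in nodes}
    comps, frontier = set(basic), set(basic)
    while frontier:
        # Unions of independent components are again independent components.
        new = {c | b for c in frontier for b in basic} - comps
        comps |= new
        frontier = new
    return comps


def full_contributions(nodes, arrows, formulas, ensures):
    """Collect (coalition, formula) pairs of full contributions."""
    result = set()
    for C in sorted(independent_components(nodes, arrows), key=len):
        for phi in formulas:
            if ensures(C, phi) and not any(B < C for B, psi in result if psi == phi):
                result.add((C, phi))
    return result
```

The returned set of (coalition, formula) pairs plays the role of the set introduced in Definition 4.4. Enumerating unions can itself be exponential when the graph has many independent agents, so the savings depend on how constrained the dependence graph is.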

Definition 4.4 (Agents’ Independence in Terms of Full Contributions)

Given an SOMAS and a state , agents’ independence in terms of their full contributions in is a set containing the information of agents’ full contributions, namely Coalition has full contribution to in state . .

Example 7

A decomposition of the dependence graph in Fig. 4 (right) is as follows. According to Definition 4.2, , , , and . Therefore, the system is decomposed into three layers: , where , and . Based on the decomposition, from to , we can find multiple independent components, namely , , , , , , and . We then examine one by one whether they fully contribute to any temporal formula , which saves considerable effort compared with checking all possible coalitions without using the dependence graph. Starting from , we check whether agent does not get input from other agents in the dependence graph , and whether it ensures by following its local rule. If both checks succeed, then fully contributes to . We perform the same examination for coalition . For coalition , after confirming that it is both semantically independent with respect to and structurally independent from , we still need to check that neither coalition nor brings about , to ensure the minimality requirement. The verification is finished when we are done with . Suppose we have the following result: , each element of which indicates that a coalition has full contribution to a part of the task. Therefore, contains the information on how different coalitions of agents finish the whole task.

5 Modeling Constraint Satisfaction Problems

In multi-agent systems, it is common for agents to have personal goals and constraints. From the perspective of the system, we would like to find a solution that balances the satisfaction of the goals and constraints among agents. In mathematics, such problems are called constraint satisfaction problems. Constraint satisfaction problems are NP-complete in general, which means that solving them in a centralized way becomes increasingly expensive as the size of the problem grows. For this reason, researchers have started to look at agents themselves and the cooperation among them: agents can negotiate so as to reach a global equilibrium. Algorithms based on self-organization have been developed to solve various constraint satisfaction problems, such as task/resource allocation [22] and relation adaptation [31]. Formally, a constraint satisfaction problem is defined as a triple , where

- is a set of variables;
- is a set of domains;
- is a set of constraints.

Each variable can take on the values in its nonempty domain . Every constraint is a pair , where is a subset of n variables and is a relation on . An evaluation of the variables is a function from a subset of variables to values in the corresponding domains. An evaluation is said to satisfy a constraint if the values assigned to the variables satisfy the relation . A solution to the constraint satisfaction problem is an evaluation that includes all the variables and does not violate any of the constraints.
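These definitions map directly onto a small checker. In this sketch, a constraint is a pair (scope, relation) with the relation given extensionally as a set of allowed value tuples, which is one common encoding among several; the function names are our own, and treating a constraint whose scope is not fully assigned as unsatisfied is an assumed convention for partial evaluations.

```python
def satisfies(evaluation, constraint):
    """An evaluation satisfies (scope, relation) if every variable in the scope
    is assigned and the tuple of assigned values belongs to the relation."""
    scope, relation = constraint
    if any(v not in evaluation for v in scope):
        return False  # convention: constraints on unassigned variables are unsatisfied
    return tuple(evaluation[v] for v in scope) in relation


def is_solution(variables, domains, constraints, evaluation):
    """A solution assigns every variable a value from its domain and
    violates none of the constraints."""
    if set(evaluation) != set(variables):
        return False
    if any(evaluation[v] not in domains[v] for v in variables):
        return False
    return all(satisfies(evaluation, c) for c in constraints)
```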

Using our framework of self-organizing multi-agent systems to model a constraint satisfaction problem, we can view a complete evaluation that includes all the variables as a particular state in the system, and a self-organization-based algorithm as a set of local rules we design for agents to follow. The goal of the system is to converge to a stable state where the values of do not violate any constraints, which is a feasible solution to the problem. Notice that set consists of multiple constraints. An evaluation that satisfies constraints always satisfies constraints if . Next, we will use a concrete example to illustrate how we use our framework to model a constraint satisfaction problem.

Multi-agent systems can be used to support information exchange within user communities where each user agent represents a user’s interests. But how does a user agent make contact with other user agents with common interests? We could use a peer-to-peer network, but this could flood the network with queries, risking overloading the system. A decentralized solution based on middle agents can self-organize to form communities among agents with common interests [28]. User agents represent users, and each user agent registers with one or more middle agents. User agents (requesters) send queries about their users’ interests to middle agents. Given these queries, each middle agent then searches the information it holds about other user agents registered with it. If it can respond to a query based on this information, then the search is completed. Otherwise, the middle agent communicates with other middle agents in order to try to obtain the information. Once this has been done, the middle agent relays the results of the search to the requester. After that, the middle agent checks whether both the requester and the provider are within its group. If not, the middle agent of the requester transfers the provider to its group, so that both user agents are in the same group. As a consequence, users with common interests form a community. The querying behavior of user agents builds up a profile of their interests, which is updated by successive queries. This self-organizing solution solves a constraint satisfaction problem in which the middle agents with which user agents register correspond to the set of variables , the possible middle agents with which user agents can register correspond to the domains of the variables , and user agents’ interests in other user agents correspond to the constraints .
Compared with solving the problem centrally, which may run into computational issues as the number of users increases, the above solution is a highly scalable process that operates efficiently with a large number of users. Since user agents broadcast their queries in the system when the solution is implemented, the system designer does not need to be aware of user agents’ interests beforehand.

We now use our framework to model the example. The system has a set of user agents and a set of middle agents ; each middle agent can register , disregister a user agent or do nothing. Function returns the static interest of each user agent . Each state is labeled with propositions, each of which is of the form , meaning that user agent registers with middle agent . We assume that middle agents are aware of where user agents are registered, but not of the interests of user agents before user agents broadcast their queries in the system. The system moves from one state to another once a middle agent registers or disregisters a user agent. The goal of the system is to converge to a state where all user agents are in their own user communities. As described in Fig. 5, a user community consists of user agent(s) and middle agent(s); every user agent in the community registers with the same middle agent as the user agents it is interested in. In order to formalize this property, we extend our semantics as follows: given and ,

- iff for all and there exists such that .
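Under our reading of the extended clause (for every user agent and every user agent it is interested in, some middle agent has both of them registered), the property can be checked on a state's valuation as follows. Representing the valuation as a set of (user agent, middle agent) pairs and the interest function as a dictionary are assumptions made for illustration.

```python
def is_community_state(registrations, interests):
    """registrations: set of (user, middle) pairs true in the state.
    interests: dict user -> set of users it is interested in.
    True iff every user shares at least one middle agent with each user
    it is interested in."""
    middles_of = {}
    for user, middle in registrations:
        middles_of.setdefault(user, set()).add(middle)
    # A non-empty intersection means some middle agent hosts both users.
    return all(middles_of.get(u, set()) & middles_of.get(v, set())
               for u, wanted in interests.items() for v in wanted)
```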

The local rule for each agent is prescribed as follows: if a user agent has interest in another user agent while they do not register with the same middle agent, then the middle agent of has to disregister and send the information about to the middle agent of so that it can register . Suppose that at the starting state user agents randomly register with different middle agents and each has interest in some other user agents (see Table 1). Starting from , asks a question about . After hearing the query about , disregisters and sends information about to , which moves the system from state to state . After getting the information about from , registers , which moves the system from state to state . In state , both and register with the same middle agent . Later on, asks a question about . Because and register with the same middle agent , the query does not change the system. Then asks a question about . As with , disregisters after hearing the query, and registers after getting the information about from . Then asks a question about , which does not trigger any changes to the system. Based on the local rules, the dependence graph between user agents and middle agents in terms of their communication along the computation is shown in Fig. 6. Triggered by the queries, all agents behave according to their local rules, and the system transits from to . See Fig. 7 for the state transitions of the system and the valuation of each state. Because in both and , which have common interests, register with , and both and , which have common interests, register with , state satisfies the desired property. Starting from a disordered state , the system finally converges to state , where each user agent registers with the same middle agent as the user agents it is interested in, forming two user communities and . How does the system reach this desired state? What are the agents’ contributions to forming these two communities? Here is the analysis.

| User Agent | Interest | Registered with |
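The local rule can be simulated to watch the convergence described above. The sketch below assumes that each user agent is registered with exactly one middle agent and that queries arrive in a fixed order; the agent names and data are hypothetical, chosen only to mirror the shape of the walkthrough (two queries that trigger a move and two that do not). Each triggered move yields two transitions, one for disregistering the provider and one for registering it with the requester's middle agent.

```python
def run_queries(registrations, queries):
    """registrations: set of (user, middle) pairs; each user is assumed to be
    registered with exactly one middle agent.
    queries: ordered list of (requester, provider) pairs.
    Returns the trace of states (frozensets), one entry per transition."""
    state = set(registrations)
    trace = [frozenset(state)]
    for requester, provider in queries:
        m_req = next(m for u, m in state if u == requester)
        m_prov = next(m for u, m in state if u == provider)
        if m_req == m_prov:
            continue  # already registered together: the query changes nothing
        state.discard((provider, m_prov))  # provider's middle agent disregisters it
        trace.append(frozenset(state))
        state.add((provider, m_req))  # requester's middle agent registers it
        trace.append(frozenset(state))
    return trace


# Hypothetical data: two pairs of users with mutual interests.
start = {("u1", "m1"), ("u2", "m2"), ("u3", "m1"), ("u4", "m2")}
queries = [("u1", "u2"), ("u2", "u1"), ("u4", "u3"), ("u3", "u4")]
trace = run_queries(start, queries)
```

On this data the run makes four transitions and ends with u1 and u2 registered with m1 and u3 and u4 registered with m2, i.e. two communities.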

From Fig. 6, we can decompose the system into four layers, namely all the user agents as layer 0, as layer 1, as layer 2 and as layer 3. We can see that there are many independent components w.r.t. computation . Because the contributions of user agents are not encoded in the system, we do not discuss the independent components that are formed only by user agents. Given a set of temporal formulas , we verify which coalition of agents fully contributes to any of them. First, coalition has full contribution to . This is because no agent in the coalition gets input from agents outside the coalition, and together they form the community of , which can be expressed by

and it clearly cannot be achieved without any one of them, which satisfies the minimality condition. Second, consider community . From Fig. 6, we can see that coalition is not an independent component, as it gets input from middle agent . Finally, the whole system fully contributes to forming the two communities and .

Such information regarding agents’ full contributions allows us to understand how user agents’ interests are satisfied and how user communities are formed, which facilitates the development of the system. For example, since coalition has full contribution to , the community will not change even if we change the interests of and , or if does not register .

6 Related Work

In order to understand the complex link between local agent behavior and system level behavior, we need to study the independence between local agent behaviors in terms of ensuring properties. Coalition-related logics approach this from different perspectives. For instance, alternating-time temporal logic (ATL) and game theory can be used to check whether a coalition of agents can enforce a state property regardless of what other agents do [19]. [1] study whether a normative system remains effective even though some agents do not comply with it. [30] identifies two types of system properties that are unchangeable by restricting the joint behavior of a coalition. Beyond the semantic dimension, we have proposed the notion of independent components: coalitions of agents which only get input from agents in the coalition. This is a structural dimension that represents a coalition of agents whose behavior is independent of the behavior of agents outside the coalition.

If we see local rules as norms that are used to regulate the behavior of the system, self-organizing multi-agent systems are actually a category of normative multi-agent systems. In preventative control systems [26], norms are represented as hard constraints where violations are impossible, but this does not respect agents’ autonomy. Soft constraints are used in detective control systems, where norms can be violated but agents are motivated to follow the norms through sanctions or punishments [5]. This is indeed more flexible than setting hard constraints in the system. However, agents coming from an open environment have their own personal situations, such as knowledge and preferences, which might not be known to the designer of the system. In such a case, the system designer cannot identify which outcome is desired, and thus it is hard to identify legal computations to get there [7][20]. The norms that we consider in this paper are designed based on agents’ communication. As the norm prescribes, agents are supposed to communicate with each other about their own situations, and what agents need to do depends on the results of this communication. Norms of this type are in fact widely used in multi-agent systems because they allow agents to regulate the system by themselves [28][27][22].

The logic we use in this paper is inspired by ATL [2]. ATL is usually used to reason about the strategies of participating agents [6][15]: formula is read as coalition has a strategy, or can collaborate, to bring about formula , which can be seen as the capacity of the participating agents to ensure a result no matter what other agents outside of the coalition do. A strategy is a function that takes a state (imperfect recall) or a sequence of states (perfect recall) as an argument and outputs an action, and it can be seen as a plan. In order to make a plan for a coalition of agents to ensure a property, there must be an external party that is aware of the available actions of each agent and of the game structure. In [2], the authors use a game between a protagonist and an antagonist to gain more intuition about ATL formulas. The ATL formula is satisfied at state iff the protagonist has a winning strategy to achieve in this game, which means that the protagonist is aware of the available actions of each agent and of the game, so that he can make a strategy (or a plan) for coalition to win the game. However, in a self-organizing multi-agent system, agents only have a local view of the system, which in this paper consists of the state they are in, their own internal functions, the actions available to them and the local rules they need to follow, but not the internal functions of other agents or the actions available to other agents. Hence, there is no external party to centrally make a plan for agents to follow. The logic in this paper is used to reason about the local rules of the participating agents: formula is read as coalition brings about formula by following their local rules, no matter what other agents outside the coalition do. The combination of logic and graph theory is new in multi-agent systems research, but it has appeared in the area of argumentation.
An abstract argumentation framework can be represented as a directed graph (called a defeat graph) where nodes represent arguments and arcs represent attacks between them [14]. [21] use strongly connected components in graph theory to decompose an abstract argumentation framework for efficient computation of argumentation extensions. The decomposition approach in this paper is inspired by that, while we use it not for efficient computation but for system verification.

While we use modal logic to understand the complex link between local agent behavior and system level behavior, one might consider causal reasoning a plausible alternative. Causal reasoning is the process of identifying the relationship between a cause and its effect [23]. It has indeed been used to identify the causal relation between macro- and micro-variables [9][8]. For self-organizing multi-agent systems, the local rules that agents follow are the cause, and the global property they contribute to is the effect. However, it can be difficult to identify the causal relation because of the interactions between agents. In such cases, causal reasoning can still be combined with our graph-based layered approach to decompose the system, which is similar to the idea proposed by Judea Pearl and Thomas Verma of combining logic and directed graphs for causal reasoning [24].

7 Conclusion

Self-organization has been introduced to multi-agent systems as an internal control process or mechanism to solve difficult problems spontaneously. However, because a self-organizing multi-agent system has autonomous agents and local interactions between them, it is difficult to deductively predict the global behavior of the system from the behavior of the agents we design, which makes implementation the usual way to evaluate a self-organizing multi-agent system. Therefore, we believe that understanding how agents bring about the behavior of the system, in the sense of which coalition independently contributes to which system property, will facilitate the development of self-organizing multi-agent systems. In this paper, we propose a logic-based framework for self-organizing multi-agent systems, where abstract local rules are defined such that agents make their next moves based on their communication with other agents. Such a framework allows us to verify properties of the system without implementing it. A structural property called independent components is introduced to represent a coalition of agents which do not get input from agents outside the coalition. The dependence relation between coalitions of agents regarding their contributions to the global behavior of the system is reasoned about from the structural and semantic perspectives. We then show that model-checking a formula in our language remains close to the complexity of model-checking standard ATL, while model-checking whether a coalition of agents has full contribution to a temporal property is in exponential time. Moreover, we combine our framework with graph theory to decompose a system into different coalitions located in different layers. The resulting information about agents’ full contributions allows us to understand the complex link between local agent behavior and system level behavior in a self-organizing multi-agent system.
We finally show how we can use our framework to model a constraint satisfaction problem, where a solution based on self-organization is used. This study has some limitations: for example, we only consider communication as the interaction between agents, which does not capture all types of interaction in the system. Moreover, the dependence graph with respect to a computation is determined by agents’ communication required by local rules. Thus, the internal function of each agent plays an important role in the global system behavior. In the future, it will be interesting to investigate the robustness of self-organizing multi-agent systems to changes in internal functions, which can happen when the system is deployed in an open environment where agents can join or leave the system as they want.

References

- [1] Thomas Ågotnes, Wiebe Van der Hoek, and Michael Wooldridge, ‘Robust normative systems and a logic of norm compliance’, Logic Journal of IGPL, 18(1), 4–30, (2010).

- [2] Rajeev Alur, Thomas A Henzinger, and Orna Kupferman, ‘Alternating-time temporal logic’, Journal of the ACM (JACM), 49(5), 672–713, (2002).

- [3] Carole Bernon, Valérie Camps, Marie-Pierre Gleizes, and Gauthier Picard, ‘Tools for self-organizing applications engineering’, in International Workshop on Engineering Self-Organising Applications, pp. 283–298. Springer, (2003).

- [4] Carole Bernon, Marie-Pierre Gleizes, Sylvain Peyruqueou, and Gauthier Picard, ‘ADELFE: a methodology for adaptive multi-agent systems engineering’, in International Workshop on Engineering Societies in the Agents World, pp. 156–169. Springer, (2002).

- [5] Ronen I Brafman and Moshe Tennenholtz, ‘On partially controlled multi-agent systems’, Journal of Artificial Intelligence Research, 4, 477–507, (1996).

- [6] Nils Bulling, Modelling and Verifying Abilities of Rational Agents, Papierflieger-Verlag, 2010.

- [7] Nils Bulling and Mehdi Dastani, ‘Norm-based mechanism design’, Artificial Intelligence, 239, 97–142, (2016).

- [8] Krzysztof Chalupka, Automated Macro-scale Causal Hypothesis Formation Based on Micro-scale Observation, Ph.D. dissertation, California Institute of Technology, 2017.

- [9] Krzysztof Chalupka, Tobias Bischoff, Pietro Perona, and Frederick Eberhardt, ‘Unsupervised discovery of El Niño using causal feature learning on microlevel climate data’, in Proceedings of the Thirty-Second Conference on Uncertainty in Artificial Intelligence, pp. 72–81, (2016).

- [10] Edmund M. Clarke, E Allen Emerson, and A Prasad Sistla, ‘Automatic verification of finite-state concurrent systems using temporal logic specifications’, ACM Transactions on Programming Languages and Systems (TOPLAS), 8(2), 244–263, (1986).

- [11] Edmund M Clarke Jr, Orna Grumberg, Daniel Kroening, Doron Peled, and Helmut Veith, Model Checking, MIT Press, 2018.

- [12] Giovanna Di Marzo Serugendo, Marie-Pierre Gleizes, and Anthony Karageorgos, ‘Self-organization in multi-agent systems’, Knowledge Engineering Review, 20(2), 165–189, (2005).

- [13] Marco Dorigo, Mauro Birattari, and Thomas Stützle, ‘Ant colony optimization’, IEEE Computational Intelligence Magazine, 1(4), 28–39, (2006).

- [14] Phan Minh Dung, ‘On the acceptability of arguments and its fundamental role in nonmonotonic reasoning, logic programming and n-person games’, Artificial Intelligence, 77(2), 321–357, (1995).

- [15] Valentin Goranko, Antti Kuusisto, and Raine Rönnholm, ‘Game-theoretic semantics for alternating-time temporal logic’, ACM Transactions on Computational Logic (TOCL), 19(3), 1–38, (2018).

- [16] VI Gorodetskii, ‘Self-organization and multiagent systems: I. Models of multiagent self-organization’, Journal of Computer and Systems Sciences International, 51(2), 256–281, (2012).

- [17] VI Gorodetskii, ‘Self-organization and multiagent systems: II. Applications and the development technology’, Journal of Computer and Systems Sciences International, 51(3), 391–409, (2012).

- [18] Abdelkader Khelil and Rachid Beghdad, ‘ESA: an efficient self-deployment algorithm for coverage in wireless sensor networks’, Procedia Computer Science, 98, 40–47, (2016).

- [19] Max Knobbout and Mehdi Dastani, ‘Reasoning under compliance assumptions in normative multiagent systems’, in Proceedings of the 11th International Conference on Autonomous Agents and Multiagent Systems-Volume 1, pp. 331–340. International Foundation for Autonomous Agents and Multiagent Systems, (2012).

- [20] Max Knobbout, Mehdi Dastani, and John-Jules Ch Meyer, ‘Formal frameworks for verifying normative multi-agent systems’, in Theory and Practice of Formal Methods, 294–308, Springer, (2016).

- [21] B. Liao, Efficient Computation of Argumentation Semantics, Intelligent systems series, Elsevier Science, 2013.

- [22] Kathryn Sarah Macarthur, Ruben Stranders, Sarvapali Ramchurn, and Nicholas Jennings, ‘A distributed anytime algorithm for dynamic task allocation in multi-agent systems’, in Twenty-Fifth AAAI Conference on Artificial Intelligence, (2011).

- [23] Judea Pearl, Causality, Cambridge University Press, 2009.

- [24] Judea Pearl and Thomas Verma, The logic of representing dependencies by directed graphs, University of California (Los Angeles). Computer Science Department, 1987.

- [25] Gauthier Picard, Carole Bernon, and Marie-Pierre Gleizes, ‘ETTO: emergent timetabling by cooperative self-organization’, in International Workshop on Engineering Self-Organising Applications, pp. 31–45. Springer, (2005).

- [26] Yoav Shoham, ‘Agent-oriented programming’, Artificial Intelligence, 60(1), 51–92, (1993).

- [27] Gabriele Valentini, Heiko Hamann, and Marco Dorigo, ‘Self-organized collective decision making: The weighted voter model’, in Proceedings of the 2014 international conference on Autonomous agents and multi-agent systems, pp. 45–52. International Foundation for Autonomous Agents and Multiagent Systems, (2014).

- [28] Fang Wang, ‘Self-organising communities formed by middle agents’, in Proceedings of the first international joint conference on Autonomous agents and multiagent systems: part 3, pp. 1333–1339. ACM, (2002).

- [29] Michael Wooldridge and Wiebe Van Der Hoek, ‘On obligations and normative ability: Towards a logical analysis of the social contract’, Journal of Applied Logic, 3(3-4), 396–420, (2005).

- [30] Jun Wu, Chongjun Wang, and Junyuan Xie, ‘A framework for coalitional normative systems’, in The 10th International Conference on Autonomous Agents and Multiagent Systems-Volume 1, pp. 259–266, (2011).

- [31] Dayong Ye, Minjie Zhang, and Danny Sutanto, ‘Self-organization in an agent network: A mechanism and a potential application’, Decision Support Systems, 53(3), 406–417, (2012).

- [32] Dayong Ye, Minjie Zhang, and Athanasios V Vasilakos, ‘A survey of self-organization mechanisms in multiagent systems’, IEEE Transactions on Systems, Man, and Cybernetics: Systems, 47(3), 441–461, (2016).