A fully adaptive multilevel stochastic collocation strategy for solving elliptic PDEs with random data

Abstract

We propose and analyse a fully adaptive strategy for solving elliptic PDEs with random data in this work. A hierarchical sequence of adaptive mesh refinements for the spatial approximation is combined with adaptive anisotropic sparse Smolyak grids in the stochastic space in such a way as to minimize the computational cost. The novel aspect of our strategy is that the hierarchy of spatial approximations is sample dependent so that the computational effort at each collocation point can be optimised individually. We outline a rigorous analysis for the convergence and computational complexity of the adaptive multilevel algorithm and we provide optimal choices for error tolerances at each level. Two numerical examples demonstrate the reliability of the error control and the significant decrease in the complexity that arises when compared to single level algorithms and multilevel algorithms that employ adaptivity solely in the spatial discretisation or in the collocation procedure.

Keywords. multilevel methods, hierarchical methods,

adaptivity, stochastic collocation, PDEs with random data, sparse grids,

uncertainty quantification, high-dimensional approximation.

AMS subject classifications. 65C20, 65C30, 65N35, 65M75

1 Introduction

A central task in computational science and engineering is the efficient numerical treatment of partial differential equations (PDEs) with uncertain input data (coefficients, source term, geometry, etc). This has been a very active area of research in recent years with a host of competing ideas. Our focus here is on elliptic PDEs with random input data in a standard (pointwise) Hilbert space setting: Find such that

| (1) |

where is a parameter-dependent inner product in a Hilbert space and is a parameter-dependent bounded linear functional. In general, the solution will be represented as where , , is the deterministic, bounded (physical) domain and is a (stochastic) parameter space of finite dimension (finite noise assumption). The component parameters will be associated with independent random variables that have a joint probability density function such that .

The most commonly used approach to solve (1) is the Monte Carlo (MC) method based on random samples . It has a dimension-independent convergence rate and leads to a natural decoupling of the stochastic and spatial dependency. While MC methods are well suited to adaptive spatial refinement, they are not able to exploit any smoothness or special structure in the parameter dependence. The standard, single-level stochastic collocation method (see, for example, [2, 31, 32, 37]) for (1) is similar to the MC method in that it involves independent, finite-dimensional spatial approximations at a set of deterministic sampling points in , so as to construct an interpolant

| (2) |

in the polynomial space with basis functions . The coefficients are determined by the interpolating condition , for . The quality of the interpolation process depends on the accuracy of the spatial approximations and on the number of collocation points , which typically, grows rapidly with increasing stochastic dimension .

Two concepts in the efficient treatment of (1) that have shown a lot of promise and are (to some extent) complementary are (i) adaptive sparse-grid interpolation methods that are able to exploit smoothness with respect to the uncertain parameters and (ii) multilevel approaches that aim at reducing the computational cost through a hierarchy of spatial approximations. Adaptive sparse-grid methods, where the set of sample points is adaptively generated, can be traced back to Gerstner & Griebel [23] and have been extensively tested in collocation form; see [1, 11, 25, 30, 33]. We note that parametric adaptivity has also been explored in a Galerkin framework; see [7, 9, 13, 16, 17], and that there are a number of recent papers aimed at proving dimension-independent convergence; see [3, 8, 29, 40, 41].

The multilevel Monte Carlo (MLMC) method was originally proposed as an abstract variance reduction technique by Heinrich [26], and independently for stochastic differential equations in mathematical finance by Giles [24]. In the context of uncertainty quantification it was developed by Barth et al. [4] and Cliffe et al. [12] and extended to stochastic collocation sampling methods soon thereafter by Teckentrup et al. [36]. The methodology in this paper can be viewed as an extension of this body of work.

Problems where the PDE solution develops singularities (or at least steep gradients) in random locations in the spatial domain are a challenging feature in many real-world applications. Spatial adaptivity driven by a posteriori error estimates has been investigated within single-level stochastic collocation in [33] and has been considered in a MLMC framework in [18]. To date, however, MC-based methods [14, 19, 27, 39] are the only methods where the spatial approximation is locally refined at each parametric sample point. In what follows, we will combine a pointwise adaptive mesh refinement for the approximation of the spatial approximations with an adaptive (anisotropic) sparse Smolyak grid, in order to improve the efficiency of the multilevel method to reach a user-prescribed tolerance for the accuracy of the multilevel interpolant, as well as for the computation of associated quantities of interest. By rigorous control of both error components, the fully adaptive algorithm proposed herein is able to exploit smoothness and structure in the parameter dependence while also exploiting fully the computational gains due to the spatially adapted hierarchy of spatial approximations at each collocation point. Under certain assumptions on the efficiency of the two-component adaptive schemes, the complexity of the new multilevel algorithm can be estimated rigorously. Two specifically chosen numerical examples will be looked at in the final section to demonstrate its efficiency.

2 Methodology

Following the paper of Teckentrup et al. [36], we consider steady-state diffusion problems with uncertain parameters. Thus, we will assume that and that the variational formulation (1) admits a unique solution in the weighted Bochner space

| (3) |

with corresponding norm

| (4) |

The structure of our adaptive algorithm is identical to that introduced in [36]. The distinctive aspects are discussed in this section.

2.1 Adaptive spatial approximation

We will focus on finite element approximation in the spatial domain using classical adaptive methodology. That is, we execute the loop

| (5) |

until the estimate of the error in the second step is less than a prescribed tolerance. Next, we let be a decreasing sequence of tolerances with

| (6) |

Then, for each fixed parameter , we run our adaptive strategy to compute approximate spatial solutions on a sequence of nested subspaces

| (7) |

This is the point of departure from the previous work [36], wherein the sequence of approximation spaces could be chosen in a general way, but in a manner that was fixed for all collocation points .

Starting from the pointwise error estimate

| (8) |

and supposing measurability of the discrete spaces and bounded second moments of , we directly get the following error bound

| (9) |

with a constant

| (10) |

that does not depend on and . Adaptive algorithms proposed by Dörfler [15] and Kreuzer[28] converge for fixed and . The constant in (10) is related to the effectivity of the a posteriori error estimation strategy. Values close to one can be obtained using hierarchical error estimators (see, for example, Bespalov et al. [10]) or using gradient recovery techniques in the asymptotic regime.

2.2 Adaptive stochastic interpolation

Let us assume and denote by a sequence of interpolation operators

| (11) |

with points from the -dimensional space . We construct each of these operators by a hierarchical sequence of one-dimensional Lagrange interpolation operators with the anisotropic Smolyak algorithm, which was introduced by Gerstner & Griebel [23]. The method is dimension adaptive, using the individual surplus spaces in the multi-dimensional hierarchy as natural error indicators.

Let be a second sequence of tolerances, a priori not necessarily decreasing. Under suitable regularity assumptions for the uncertain data (see e.g. Babuška et al. [2, Lem. 3.1, 3.2]), we can assume that there exist numbers , , and a constant not depending on such that

| (12) |

where, for simplicity, we set .

Since (8) implies , which is decreasing as , we can expect that for higher it suffices to use less accurate interpolation operators, i.e., smaller numbers , to achieve a required accuracy. Indeed this is the main motivation to set up a multilevel interpolation approximation. Note that in this way, the tolerances are strongly linked to the spatial tolerances . We will make this connection more precise and give suitable values for the sequence of tolerances in the next section.

2.3 An adaptive multilevel strategy

Given the sequences and , we define the multilevel interpolation approximation in the usual way by

| (13) |

Observe that the most accurate interpolation operator is used on the coarsest spatial approximation whereas the least accurate interpolation operator is applied to the difference of the finest spatial approximations . The close relationship between the spatial and stochastic approximations at index is clearly visible in (13). We note that the use of adaptive interpolation operators is also mentioned in [36]. The key difference is that the hierarchy in [36] is based on the number of collocation points rather than the tolerances employed here.

To show the convergence of the multilevel approximation to the true solution , we split the error into the sum of a spatial discretization error and a stochastic interpolation error using the triangle inequality

| (14) |

Due to (9) the first term on the right hand side of (14) is bounded by . The aim is now to choose the tolerances in an appropriate way to reach the same accuracy for the second term. From (12), we estimate the stochastic interpolation error as follows:

| (15) |

To obtain an accuracy of the same size as the spatial discretization error, a first choice for the tolerances would be to simply demand , for all , and conclude that

| (16) |

so that the adaptive multilevel method converges for . However, the values for can be optimized by minimizing the computational costs while keeping the desired accuracy.

At this point it is appropriate to consider the computational cost, , of the multilevel stochastic collocation estimator required to achieve an accuracy . Thus, in order to quantify the contributions from the spatial discretization and the stochastic collocation, we will need to make two assumptions to link the cost with the error bounds in (9) and (12). Let denote an upper bound for the cost to solve the deterministic PDE at sample point with accuracy . Then, we assume that for all ,

as well as the special case

Here, the constants , are independent of , , and the rates . Note that we could also consider the exact cost per sample and introduce a sample dependent constant in (A1), but that would complicate the subsequent analysis.

Assumption (A1) usually holds for first-order adaptive spatial discretization methods with , when coupled with optimal linear solvers such as multigrid. The factors on the right-hand side in (A2) reflect best the convergence of the sparse grid approximations in (12) with respect to the total number of collocation points, see Nobile et al. [31, 32] or [36, Theorem 5.5]. To estimate the difference , we use the fact that with a constant close to . It follows from , which is the basis for the very good performance of hierarchical error estimators. We will absorb into in the sequel.

The rate depends in general on the dimension . Theoretical results for the anisotropic classical Smolyak algorithm are given in [31, Thm. 3.8]. However, it has recently been shown in [40, 41] that under certain smoothness assumptions can be independent of .

An upper bound for the total computational cost of the approximation can then be defined as

| (17) |

with . In a first step, we will consider a general sequence without defining a decay rate a priori. Following the argument in [36], with a priori estimates for the spatial error replaced by tolerances, then leads to an estimate of -cost and identifies a set of optimal tolerances in (12).

Theorem 2.1.

Let be a decreasing sequence of spatial tolerances satisfying (6). Suppose assumptions (A1) and (A2) hold. Then, for any , there exist an integer and a sequence of tolerances in (12) such that

| (18) |

and

| (19) |

with and

| (20) | ||||

| (21) |

where for ease of notation we set . The optimal choice for the tolerances is then given by

| (22) |

The utility of (22) is that near-optimal tolerances can be readily computed if estimates of the constants and the rates in (A1) and (A2) are available.

Proof: As in the convergence analysis above, we split the error and make sure that both the spatial discretization error and the stochastic interpolation error are bounded by . First, we choose an appropriate and such that . This is, of course, always possible and fixes the number as a function of . Next we determine the set so that the computational cost in (17) is minimized subject to the requirement that the stochastic interpolation error is bounded by . Using assumptions (A1) and (A2), this reads

| (23) |

Application of the Lagrange multiplier method with all treated as continuous variables as in Giles [24] gives the optimal choice for the number of samples

| (24) |

with and defined in (20) and (21). To ensure that is an integer, we round up to the next integer. The –cost of the multilevel approximation can then be estimated as follows

with .

The optimal tolerances can be directly determined from assumption (A2). Thus with defined above we get

| (25) |

Note that with these values , which gives the desired accuracy in (15).

Observe that the function as well as the second term in (19) still depend on , because is a function of . In this way, the choice of the tolerances has an influence on the rate , which could be further optimized. However, in the following we will restrict our attention to a typical geometric design with with a positive reduction factor . The overall cost can then be estimated using a standard construction, leading to the following result.

Theorem 2.2.

Let the sequence of spatial tolerances in (6) be defined by with a reduction factor . Suppose assumptions (A1) and (A2) hold. Then, for any , there exists an integer such that

| (26) |

and

| (27) |

In typical applications, it is usually more natural to consider a functional of the solution instead of the solution itself. Thus, suppose a (possibly nonlinear) functional with is given. In this case, we define the following single-level and multi-level stochastic collocation approximations:

| (28) | |||||

| (29) |

with . As in (9) and (12), we can ensure that for the adaptive error control of the expected values, for all we have

| (30) | |||||

| (31) |

In practice, the following analogue of Theorem 2.2 holds for the expected value of the error of the multilevel approximation of functionals.

Proposition 2.1.

Let the sequence of spatial tolerances in (6) be defined by with a reduction factor . Suppose assumptions (A1) and (A2) hold with convergence rates and . Then, for any , there exists an integer such that

| (32) |

and

| (33) |

Proof: see the discussion of Proposition 4.6 in [36].

Note that in analogy to strong and weak convergence of solutions of stochastic differential equations, one would expect the error in mean associated with approximating a functional to decrease at a faster rate than the error in norm associated with approximating the entire solution . Thus, we anticipate that . Moreover, the convergence rate of the error in the functional is in general larger than the convergence rate of the error in the -norm, which leads to a smaller value in assumption (A1). Specifically, in the case of -regularity in space and using an optimal linear solver, such as multigrid, we anticipate that .

We conclude this section with a complete algorithmic description of our adaptive multilevel stochastic collocation method. Table 1 illustrates the main steps. The strategy is self-adaptive in nature. Thus, once the tolerances and are set, the algorithm delivers an approximate functional with accuracy close to the user-prescribed tolerance . An analogous algorithm can be defined to deliver the numerical solution close to a user-prescribed tolerance . A priori, to obtain optimal results, the reliability of the estimation for the adaptive spatial discretization and the adaptive Smolyak algorithm needs to be studied in order to provide values for the constants and . More specifically, while the spatial tolerances can be freely chosen (by fixing the number of levels and the reduction factor ), the optimal choice of the tolerances in (22) requires estimates of the parameters and . A discussion of how to effect this in a preprocessing step is given in the next section. A crucial point to note is that, even without this information, the adaptive anisotropic Smolyak algorithm will automatically detect the importance of various directions in the parameter space .

| Algorithm: Adaptive Multilevel Stochastic Collocation Method | |

|---|---|

| 1. | Given and , estimate , , , and . |

| 2. | Set coarsest spatial tolerance: . |

| 3. | Set other spatial tolerances: , . |

| 4. | Compute with tolerances and . |

| 5. | Set stochastic tolerances: |

| , , | |

| with and defined in (20) and (21), respectively. | |

| 6. | Coarsest level (reusing samples from Step 4): |

| Compute with tolerances and . | |

| 7. | Multilevel differences (reusing samples from level ): |

| Compute with tolerances , and . | |

| 8. | Compute . |

An important point already mentioned in [36] is that the optimal rounded values for the number of samples, , will not be used by the algorithm, because they do not necessarily correspond to an adaptive sparse grid level. However, for each level , the tolerance can be ensured by choosing slightly larger, resulting in a slight inefficiency of the sparse grid approximation. Note that, in practice, the same behaviour is observed for adaptive spatial discretizations. In any case, no restart is needed; cf. [36, Section 6.3].

3 Numerical examples

First, we provide general information on the adaptive components used. Then, numerical results are presented for two isotropic diffusion problems with uncertain source term and uncertain geometry, respectively. All calculations have been done with Matlab version R2017a on a Latitude with an i5-7300U Intel processor running at 2.7 GHz.

For the spatial approximation considered in the two examples, we use the adaptive piecewise linear finite element method implemented by Funken, Praetorius and Wissgott in the Matlab package p1afem.222The p1afem software package can be downloaded from the author’s webpage [20]. Using Matlab built-in functions and vectorization for an efficient realization, the code performs with almost linear complexity in terms of degrees of freedom with respect to the runtime. A general description of the underlying ideas can be found in [22] and the technical report [21] provides detailed documentation. The code is easy to modify. In order to control the accuracy of solution functionals, the dual weighted residual method (DWRM) introduced by Becker & Rannacher [6] is adopted. In what follows, we will give a short summary of the underlying principles that are relevant for our implementation.

Let be fixed. For ease of presentation we write and consider the variational problem (1) with . We also suppose the solution functional takes the specific form

| (34) |

as discussed in [36, Example 5.13]. Let be the finite element solution computed on the (adaptive) mesh . Then the DWRM provides a representation of the error in the solution functional in the form

| (35) |

by simply neglecting the higher-order term. Letting be the exact solution of the linearised dual problem

| (36) |

and letting be the finite element approximation of the dual solution on the same mesh, we have, using Galerkin orthogonality,

| (37) | ||||

| (38) |

In practice, when solving the dual problem we compute an approximation of its error in the hierarchical surplus space of quadratic finite elements. Hierarchical error estimators using this approach are implemented in p1afem for the primal solution .

Putting this all together, we obtain

| (39) |

We use as refinement indicators and mark elements for refinement using the standard Dörfler criterion from [15], which determines the minimal set such that . We typically set a value of in our calculations. Refinement by newest vertex bisection is applied to guarantee nested finite element spaces and the optimal convergence of the adaptive finite element method, see [28]. The adaptive process is terminated when the absolute value of is less than a prescribed tolerance.

Turning to the adaptive anisotropic Smolyak algorithm, the main idea for the construction of the sparse grid interpolation operators in (11) is to use the hierarchical decomposition

| (40) |

with multi-indices , , and univariate polynomial interpolation operators , which use collocation points to construct a polynomial interpolant in of degree at most . The operators are often referred to as hierarchical surplus operators. The function has to satisfy , , and . We set for all and use the nested sequence of univariate Clenshaw–Curtis nodes with if . In (40), is then the number of all explored quadrature points in determined by . To get good approximation properties, the index set should satisfy the downward closed set property, i.e.,

| (41) |

As usual, we ensure that to also recover constant functions.

The hierarchical structure in (40) allows to interpret updates that are derived by adding further differences , i.e., enhancing the index set by an admissible index that satisfies (41), as error indicators for already computed approximations. There are several adaptive strategies available. One could explore the whole margin of defined by

| (42) |

Generally, this approach is computationally challenging and yields a fast increase of quadrature points. Instead, as suggested by Gerstner & Griebel [23], the margin is reduced to the set

| (43) |

In each step, the adaptive Smolyak algorithm computes the profits for all – reusing already computed profits – and replaces the index in with the highest profit, say , by its admissible neighbours taken from the set . These neighbours are then explored next. The algorithm stops if the absolute value of the highest profit is less than a prescribed tolerance. This adaptive strategy is implemented in Matlab in the Sparse Grid Kit333This package can be downloaded from the CSQI website [34]. and its numerical performance is discussed in the review paper [5].

We adopt this refinement strategy with one minor deviation in our numerical experiments: namely, at the final iteration step, instead of adding the new profits to the interpolant, the previous approximate value is returned. In that way, the last highest profit can be considered as a more realistic error indicator. This adaptation allows a better understanding of the convergence behaviour of the multilevel approach. In practical calculations one would almost certainly use the final value.

3.1 Uncertain source and boundary conditions: ,

We will refer to this example as the one peak test problem. It was introduced by Kornhuber & Youett [27, Sect. 5.1] in order to assess the efficiency of adaptive multilevel Monte Carlo methods. The challenge is to solve the Poisson equation in a unit square domain with Dirichlet boundary data on (points in are represented by ). The source term and boundary data are uncertain and are parameterised by , representing the image of a pair of independent random variables with . In the isotropic case studied in [27], the source term and boundary data are chosen so that the uncertain PDE problem has a specific pathwise solution given by

where the scaling factor is chosen to be large—so as to generate a highly localised Gaussian profile centered at the uncertain spatial location . The value of that we will take in this study is . (The other values discussed in [27] are and .)

In this work, the test problem is made anisotropic by scaling the solution in the first coordinate direction by a linear function so that the takes values in the interval . The corresponding pathwise solution is then given by

The goal is to approximate the following quantity of interest (QoI)

| (44) |

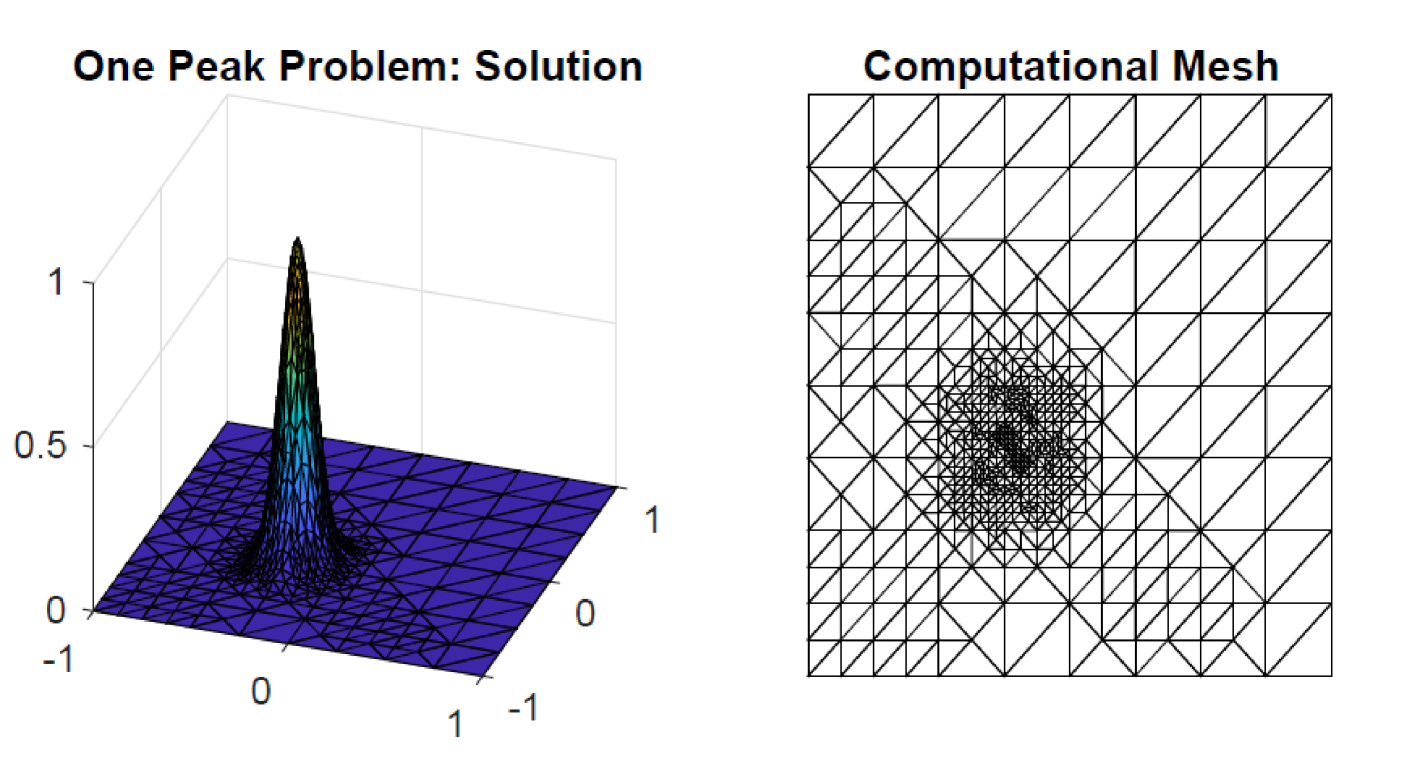

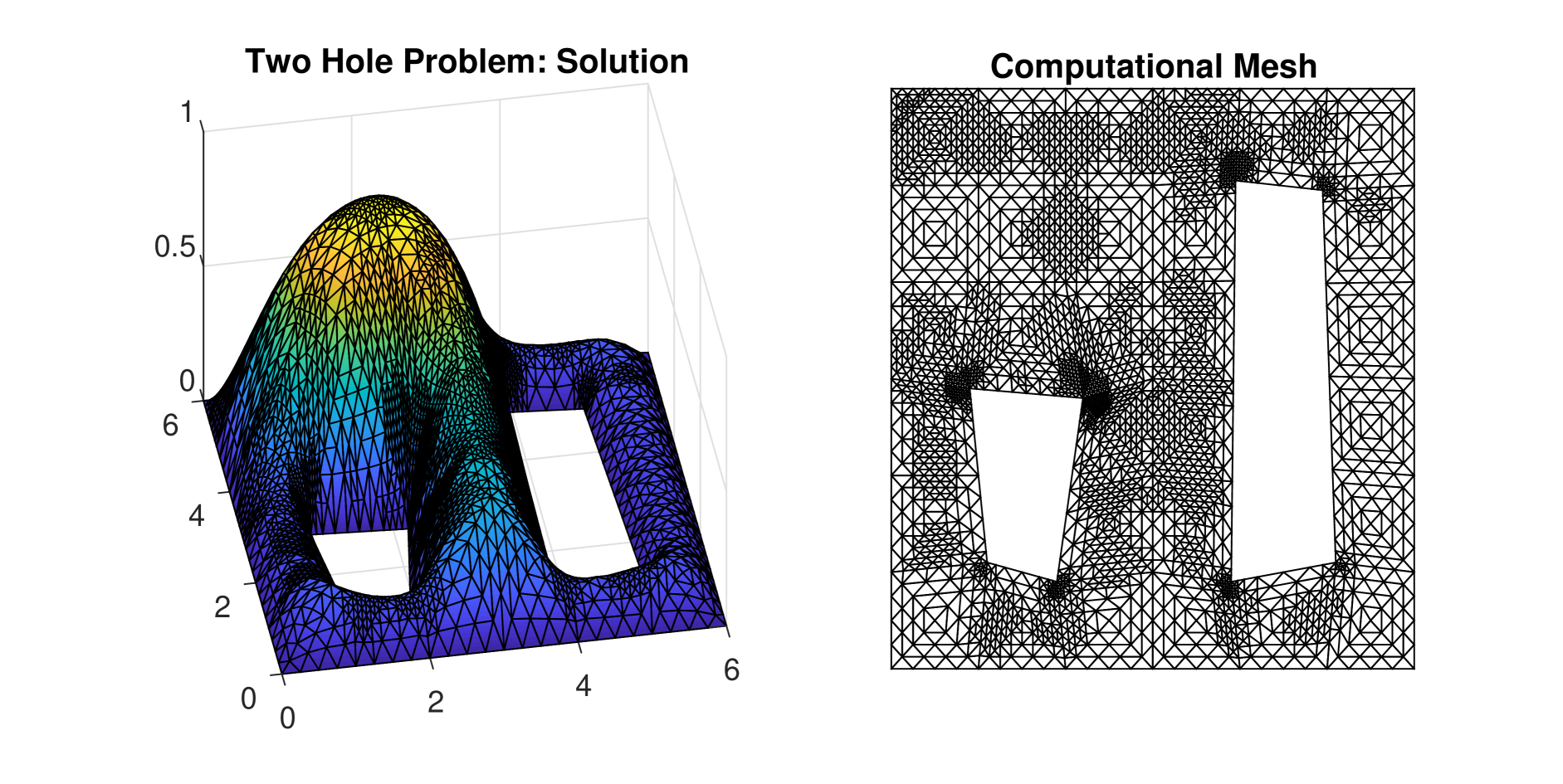

In our experiments we solve the simplified problem with on . (This has no impact on accuracy for the error tolerances that we will considered; see the discussion in the appendix). The initial mesh with vertices is generated by taking three uniform refinement steps, starting from two triangles with common edge from to . For the specific parameter choice and spatial tolerance , the adaptive algorithm terminated after 5 steps giving the numerical solution and corresponding mesh shown in Fig. 1.

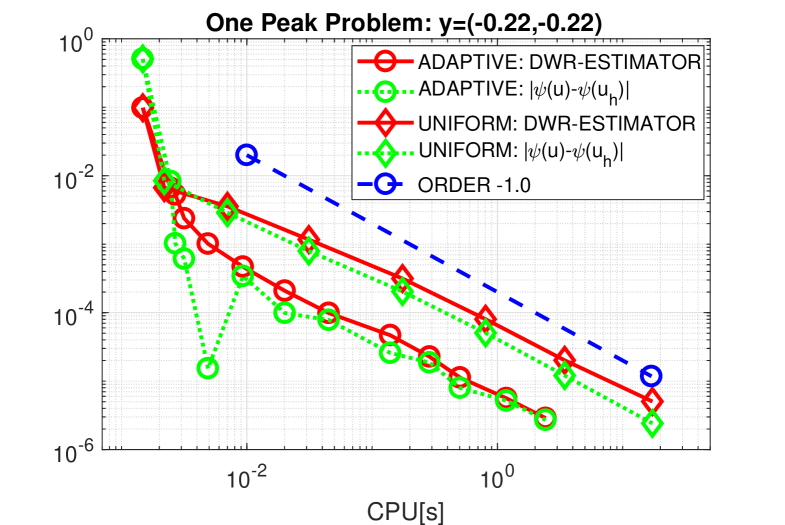

Running the adaptive algorithm with a tighter tolerance of generated a concentrated mesh with points after 13 refinement steps. The left part of Fig. 2 shows the efficiency of the locally adaptive procedure in comparison with a uniform refinement strategy. For smaller tolerances, the gain of efficiency in terms of overall cpu time is a factor . Comparing with a reference value , generated by running the adaptive algorithm with a very small tolerance, we see that the effectivity index, i.e., the ratio between estimator and true error, tends asymptotically to for both uniform and adaptive refinement.

The very good quality of the spatial error estimation process is still maintained if we sample over the whole parameter space using an isotropic sparse grid of collocation points – generated using Sparse Grid Kit by doubling of points in each stochastic dimension. In this case we have a reference value of , generated analytically using an asymptotic argument—details are given in the appendix. The error estimates for adaptive meshes associated with different spatial error tolerances are plotted in the right part of Fig. 2. Observe that the estimators deliver upper bounds for the numerical errors and the tolerances are always satisfied. As expected from the theory, the convergence rates for and in terms of computing time are both close to . So we have in (30) and in assumption (A1) in Section 2.

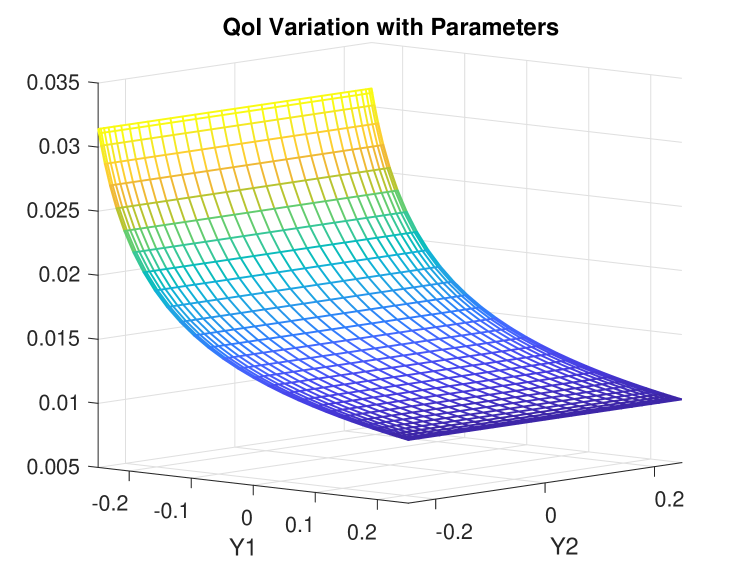

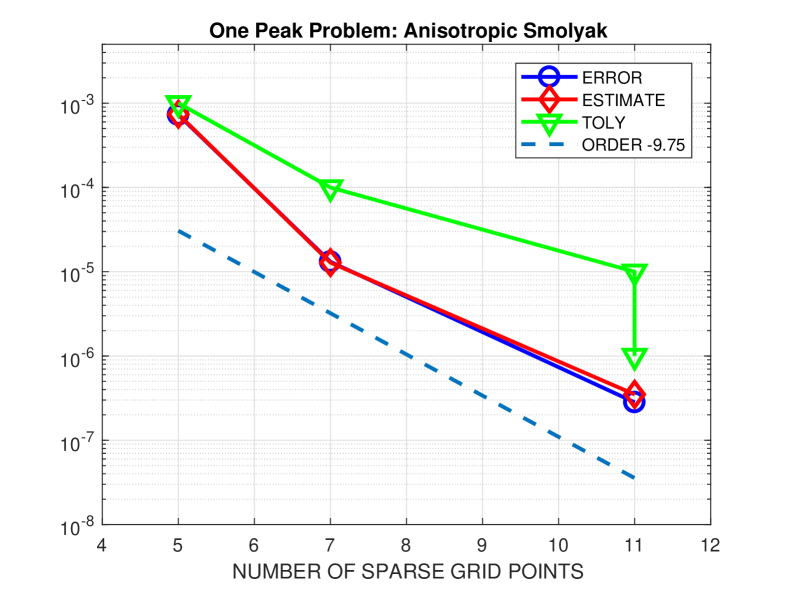

Next we consider the convergence behaviour and the quality of the error estimates for the adaptive anisotropic Smolyak algorithm. A reference solution generated by solving the problem with a small spatial error tolerance on a tensor-product grid of Clenshaw–Curtis points is shown in the left part of Fig. 3. Looking at the solution structure, it is evident that the QoI has inherent structure that can be exploited by an adaptive strategy: more specifically, very few sample points are needed to accurately compute it ! The right part of Fig. 3 shows the results for a decreasing sequence of stochastic tolerances , where we have fixed the spatial tolerance . The corresponding numbers of collocation points are , and . Looking at these more closely we find that all the quadrature rules generated by the adaptive procedure are one-dimensional rules in the direction matching the variation in the reference. Other features of note are that the prescribed tolerances are always satisfied and that the errors (with respect to the reference solution) are nicely estimated by the error indicators. A least-squares fit gives an averaged value of for the convergence order so that assumption (A2) in Section 2 is satisfied. An approximate value can be computed with a much lower spatial tolerance and collocation points in a few seconds.

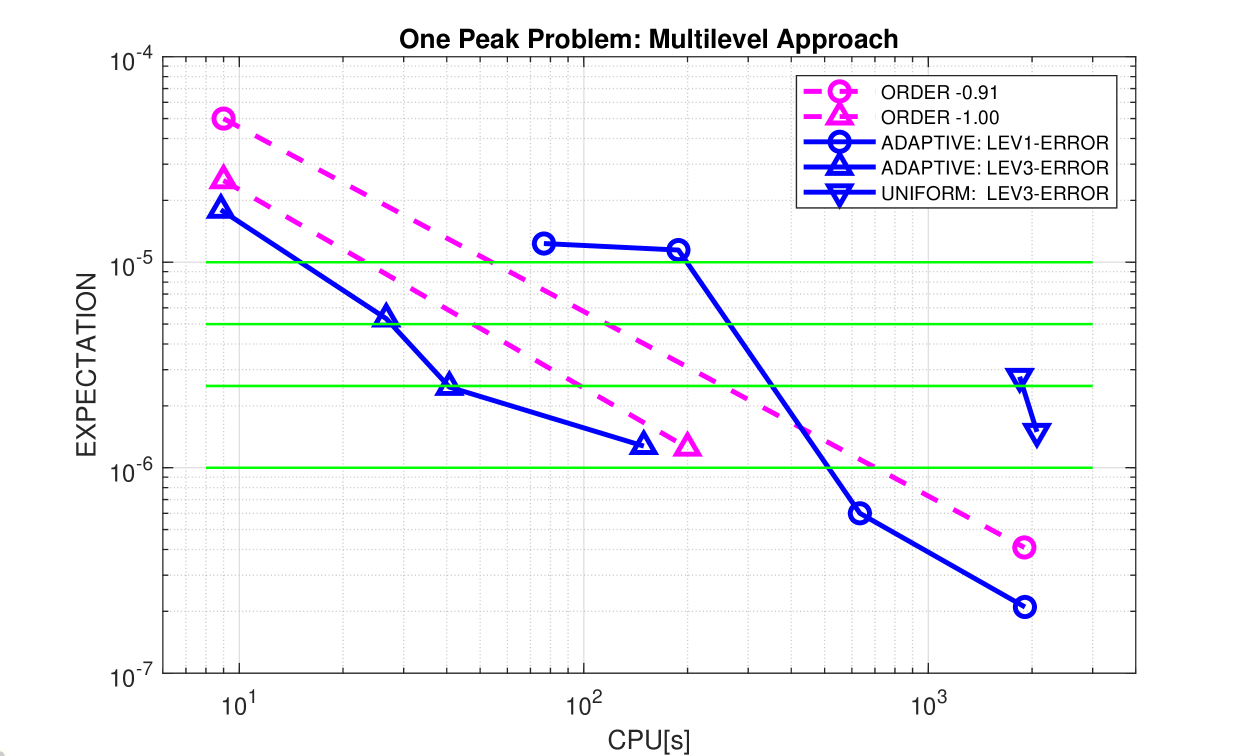

Having estimated the parameters in the second step of the algorithm in Table 1, we now run the adaptive multilevel approach with overall accuracy requirements of and . To illustrate the performance gains, we simply set (so that three levels are used) and assign a spatial error reduction factor of . The spatial tolerances are then given by with . To calculate, in a first step, a sufficiently accurate approximation for the tolerance at reasonable cost, we apply the anisotropic Smolyak algorithm with . Note that these samples can be reused later in the first level of the multilevel scheme. Eventually, the stochastic tolerances are derived from (22) with , and .

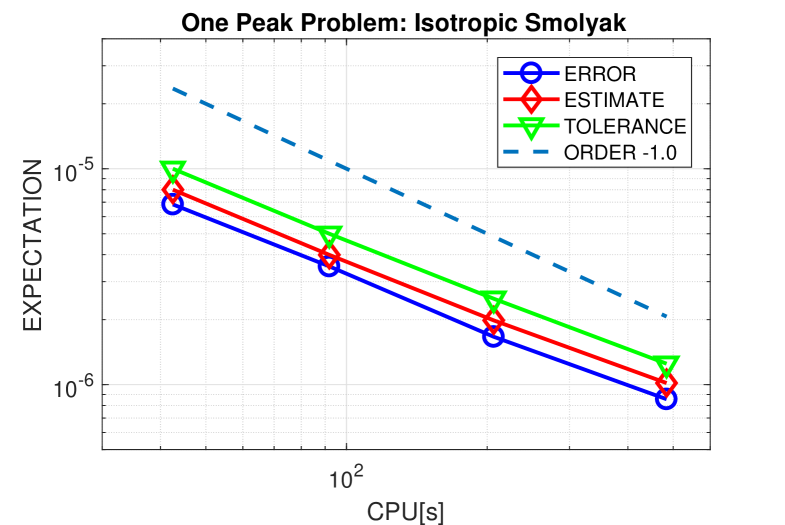

Results for the three-level and single-level approach are summarised in Fig. 4. For adaptive spatial meshes, we observe that the errors of the expected values are very close to the prescribed tolerances. This is not always the case for the values computed by the single-level approach. The three-level approach performs reliably and outperforms the single-level version clearly. The orders of convergence, and , predicted by Theorem 2.1 for the accuracy in terms of computational complexity are also visible. Already for coarse tolerances, the three-level approach with uniform spatial meshes shown plotted with inverted blue triangles) is not competitive. For higher tolerances, the calculation exceeded memory requirements (more than FE degrees of freedom).

These CPU timings are impressive. We have also solved the one peak test problem using an efficient adaptive stochastic Galerkin (SG) approximation strategy. While the linear algebra associated with the Galerkin formulation is decoupled in this case, the computational overhead of evaluating the right-hand-side vector is a big limiting factor in terms of the relative efficiency. Using SG the quantity

where

and

must be computed in every element in the current subdivision and for every parametric function in the active index set. This is an extremely demanding quadrature problem!

We have also tested an adaptive multilevel Monte Carlo method (see, [12, 27]) on this problem. For the lowest tolerance, , and an average over independent realizations, the three-level algorithm achieves an accuracy of in sec. The numbers of averaged samples for each level are , , and . Obviously, the slow Monte Carlo convergence rate of is prohibitive for higher tolerances here.

3.2 Uncertain geometry: ,

In our second example, we again consider a two-dimensional Poisson problem, but now with geometry of the computational domain being uncertain. We will refer to this example as the two hole test problem. The uncertain domain is defined by

| (45) |

where the holes and are taken as quadrilaterals with the uncertain vertices

| (46) |

Here, the random vector consists of sixteen uniformly distributed random variables , . We set

| (47) |

to represent different strength of uncertainty and hence anisotropy in the stochastic space. We impose a constant volume force and fix the component by homogeneous Dirichlet boundary conditions on the whole boundary , including with stochastically varying positions in space. Our goal is then to study the effect of this uncertainty on the expectation of the overall displacement calculated by

| (48) |

This allows an assessment of the desired averaged load capacity of the component, which takes into account uncertainties in the manufacturing process.

Applying a parameter-dependent map, each domain can be mapped to the fixed nominal domain with . Such a domain mapping approach was introduced by Xiu & Tartakovsky [38] and allows us to reformulate the problem in the form (1) with parameter-dependent coefficients on . The well established theory for elliptic partial differential equations with random input data can then be applied without modifications to show the well-posedness of the setting with random domains.

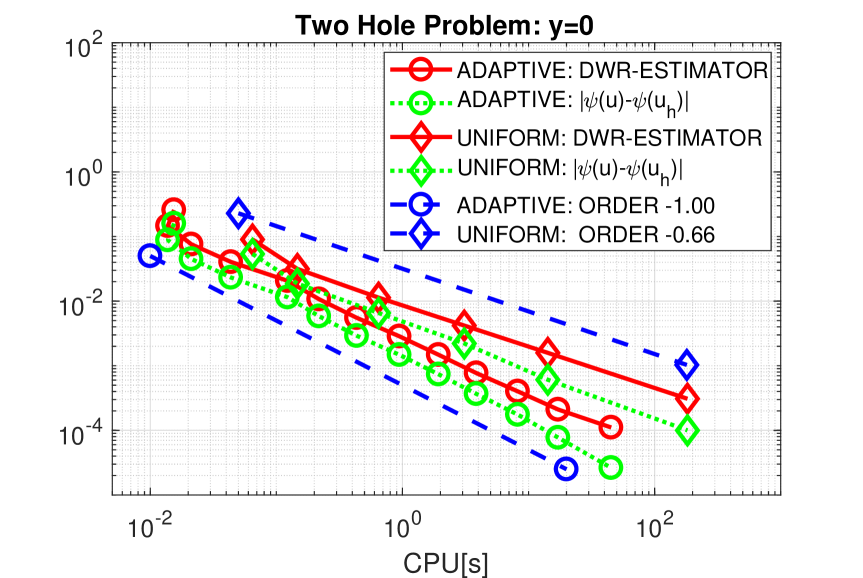

All calculations start with an initial criss-cross structured mesh consisting of triangles, which are adjusted to the random holes. In Fig. 5, the numerical solution and its corresponding adaptive mesh for a spatial tolerance and the random vector are shown. The adaptive algorithm refines the mesh at the eight reentrant corners due to the fact that the exact solution contains a loss of regularity there. Exemplarily, we study the convergence rates of adaptive and uniform refinements for the nominal domain , where is contained in . While the correctly adapted grids still recover the optimal order for the approximation of in terms of CPU time, the use of a sequence of uniform meshes sees the order drop down to the theoretical value , as can be seen in the left part of Fig. 6. The DWR-estimators perform quite well and deliver accurate upper bounds of the error. So we again set in (30) and use for adaptive meshes and for uniform meshes in assumption (A1). Note that the latter choice takes into account that due to the larger interior angle at the random holes for some parameter values , the regularity of the solution, and thus also the spatial convergence rate, is slightly lower.

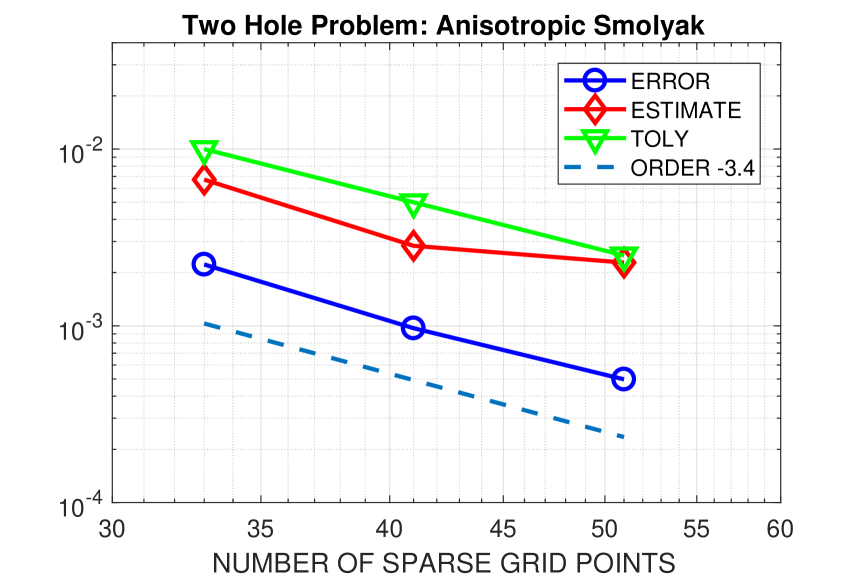

In order to estimate the parameter , we apply the anisotropic Smolyak algorithm and calculate samples at reasonable costs with tolerances . The value of for is taken as the reference value, leading to an approximate convergence order of . From Fig. 6, we detect that the errors are significantly smaller than the prescribed tolerances, as it was also the case in the first test example. Therefore, we again choose and set in assumption (A2). For later comparison, we compute a reference value with and . The required number of collocation points is and the vector of maximum polynomial degree is .

For the adaptive multilevel approach, we use again three levels and set the reduction factor to . The spatial tolerances are then computed from , , with the six overall accuracy requirements . Following the algorithm given in Table 1, we first compute with and then derive the stochastic tolerances from (22) with , , and . The corresponding values are given in Table 2. For the multilevel approach with uniform spatial meshes, we use to determine the stochastic tolerances.

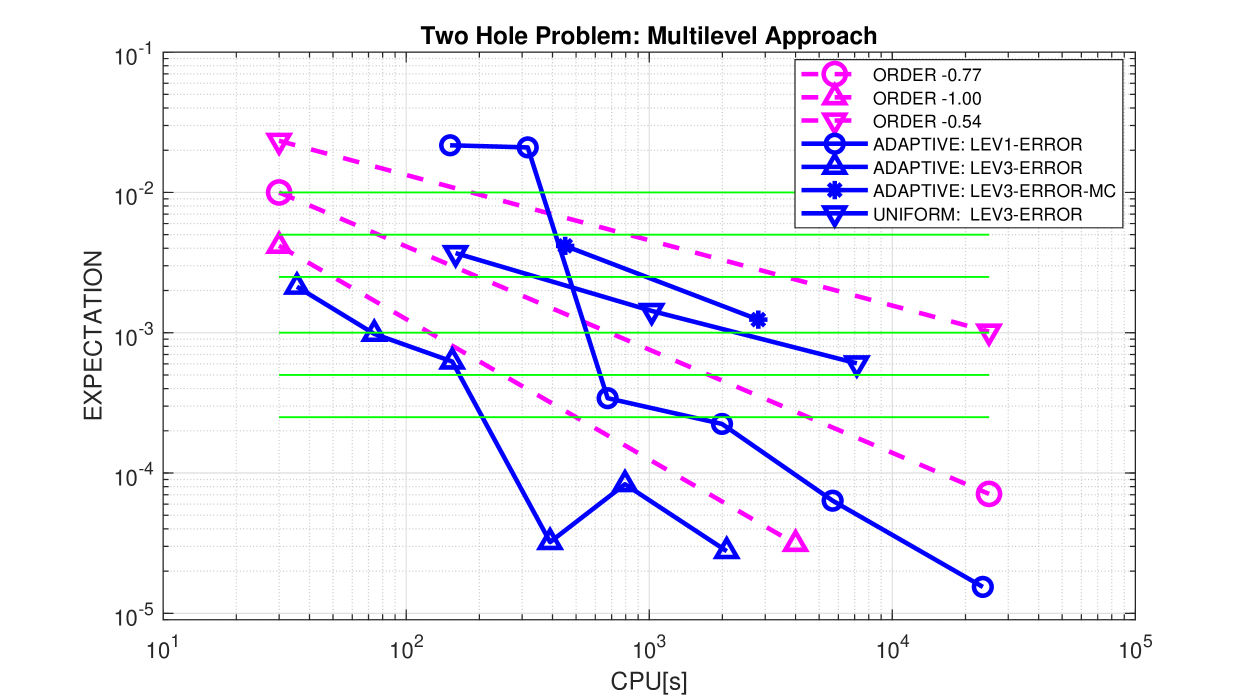

In Fig. 7, errors for the expected value versus computing time are plotted for the three-level approach with adaptive and uniform spatial meshes. For comparison, we also show results for the single-level approach with adaptive spatial meshes, computed with and . In all cases, except the first two for the single-level method, the overall tolerances are satisfied. The schemes work remarkably reliably. However, the achieved accuracy is often significantly better than the prescribed one – never more than a factor though. One possible reason could be that cancellation effects are typically overlooked if the overall error is split into two parts, which are then individually controlled.

The multilevel method with adaptive spatial meshes outperforms the single-level approach. The number of collocation points taken by the anisotropic Smolyak algorithm are listed in Table 2. We observe that the number of samples for the differences keeps constant, showing that the increasing samples in the zeroth level always catch enough information to eventually reach the tolerance. The corresponding numbers of collocation points for the single-level method are . Note that the computing time also includes the effort for the estimation process, which is not visible in these numbers; see also the discussion for our realization of the adaptive anisotropic Smolyak algorithm above. It is also clear that the averaged constant is too optimistic for the first runs with , which leads to the algorithm taking only one collocation point. The approximate orders of convergence for both methods, and , predict the observed asymptotic rates for the computing times quite well. However, the actual estimates can only serve as rough indicators for the achieved accuracy.

The multilevel method with uniform spatial meshes performs better than the single-level one for the first two tolerances, but becomes quickly inefficient for higher tolerances. Due to the larger value , it needs significantly more samples for coarser meshes: and , compared to the multilevel approach with adaptive spatial meshes, see Table 2. remains the same. The observed convergence order is close to the predicted value . Also for this example, it becomes obvious that uniform meshes cannot compete with adaptive meshes for higher tolerances.

This conclusion is also valid for tests with adaptive multilevel Monte Carlo. Results for tolerances are shown in Fig. 7 for three levels. The numbers of optimized samples are , , , respectively. All values are calculated from an average over 5 independent realizations. Although the variance reduction is quite high, leading to surprisingly small numbers , the fast increasing numbers for are still too challenging, especially for higher tolerances.

4 Concluding remarks

Adaptive methods have the potential to drastically reduce the number of degrees of freedom and to realise optimal complexity in terms of computing time, even in cases where the exact solutions have spatial singularities. As well as providing a posteriori error estimates, adaptive methods usually outperform methods based on uniform mesh refinement. When coupled with multilevel stochastic algorithms, they can increase the computational efficiency in the sampling process when the stochastic dimension increases and hence provide a general tool to further delay the curse of dimensionality.

State-of-the-art adaptive methods implemented in the open-source Matlab packages p1afem and Sparse Grid Kit have been used in off-the-shelf fashion in this study. The numerical results for the adaptive multilevel collocation method demonstrate the reliability of the error control and a significant decrease in complexity compared to uniform spatial refinement methods and single-level stochastic sampling methods. While these advantages are already known for the methods involved, this study shows that the adaptive components can be combined to give an efficient algorithm for solving PDEs with random data to arbitrary levels of accuracy.

Appendix.

The pathwise solution of the one peak problem is given by

with . The quantity of interest is given by

The specific choice taken in the numerical experiments leads to two simplifications.

-

(S1)

The Dirichlet boundary condition ( satisfying (A.1) on ) may be replaced without significant loss of accuracy by the numerical approximation

Justification: By direct computation, the maximum value of on the boundary occurs for , when the standard deviation of the Gaussian peak is minimised (thus and for all values ). For all such values of the nearest point on the boundary is , and the shortest distance to the boundary is so that the maximum value of on the boundary is given by .

-

(S2)

Thus, we may readily compute a reference value (accurate to more than 10 digits):

Justification: the integral in (A.2) is separable, thus

The key point is that the inner integrals in the above expression are Gaussians with small variance. In both cases the integrand is close to zero at the end points and . Thus, in both cases the integral can be extended by zero to the range (this could be made rigorous using probabilistic arguments) to give the following reference value approximation

Acknowledgements. The first author is supported by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) within the collaborative research center TRR154 “Mathematical modeling, simulation and optimisation using the example of gas networks” (Project-ID 239904186, TRR154/2-2018, TP B01), the Graduate School of Excellence Computational Engineering (DFG GSC233), and the Graduate School of Excellence Energy Science and Engineering (DFG GSC1070). The second author was supported by the UK Engineering and Physical Sciences Research Council (EPSRC) under grant number EP/K031368/1. The third author was funded by a Romberg visiting scholarship at the University of Heidelberg in 2019. The authors would also like to thank the Isaac Newton Institute for Mathematical Sciences, Cambridge, for support and hospitality during the programme “Uncertainty quantification for complex systems: theory and applications” (Jan–Jul 2018), where the work on this paper was initiated.

References

- [1] R. C. Almeida and J. T. Oden. Solution verification, goal-oriented adaptive methods for stochastic advection-diffusion problems. Comput. Methods Appl. Mech. Engrg., 199:2472–2486, 2010.

- [2] I. Babuška, F. Nobile, and R. Tempone. A stochastic collocation method for elliptic partial differential equations with random input data. SIAM J. Numer. Anal., 45:1005–1034, 2007.

- [3] M. Bachmayr, A. Cohen, D. Dũng, and C. Schwab. Fully discrete approximation of parametric and stochastic elliptic PDEs. SIAM J. Numer. Anal., 55:2151–2186, 2017.

- [4] A. Barth, C. Schwab, and N. Zollinger. Multi-level Monte Carlo finite element method for elliptic PDEs with stochastic coefficients. Numer. Math., 119:123–161, 2011.

- [5] J. Beck, F. Nobile, L. Tamellini, and R. Tempone. Stochastic spectral Galerkin and collocation methods for PDEs with random coefficients: a numerical comparison. In J.S. Hesthaven and E.M. Ronquist, editors, Spectral and High Order Methods for PDEs, volume 76 of Lecture Notes Comput. Sci. Engrg., pages 43–62. Springer, 2011.

- [6] R. Becker and R. Rannacher. An optimal control approach to a posteriori error estimation in finite element methods. Acta Numerica, 10:1–102, 2001.

- [7] A. Bespalov, C. Powell, and D. Silvester. Energy norm a posteriori error estimation for parametric operator equations. SIAM J. Sci. Comput., 36:A339–A363, 2014.

- [8] A. Bespalov, D. Praetorius, L. Rocchi, and M. Ruggeri. Goal-oriented error estimation and adaptivity for elliptic PDEs with parametric or uncertain inputs. Comp. Methods Appl. Mech. Engrg., 345:951–982, 2019.

- [9] A. Bespalov and D. Silvester. Efficient adaptive stochastic Galerkin methods for parametric operator equations. SIAM J. Sci. Comput., 38:A2118–A2140, 2016.

- [10] Alex Bespalov, Leonardo Rocchi, and David Silvester. T-IFISS: a toolbox for adaptive FEM computation. arXiv Preprint 1908.05618, 2019. https://arxiv.org/abs/1908.05618.

- [11] A. Chkifa, A. Cohen, and C. Schwab. High-dimensional adaptive sparse polynomial interpolation and applications to parametric PDEs. Found. Comput. Math., 14:601–633, 2014.

- [12] K.A. Cliffe, M.B. Giles, R. Scheichl, and A.L. Teckentrup. Multilevel Monte Carlo methods and applications to elliptic PDEs with random coefficients. Comput. Visual. Sci., 14:3–15, 2011.

- [13] A. Cohen, A. Chkifa, C. Schwab, and R. Devore. Sparse adaptive Taylor approximation algorithms for parametric and stochastic PDE’s. ESAIM–Math. Model. Num., 47(1):253–280, 2013.

- [14] G. Detommaso, T. Dodwell, and R. Scheichl. Continuous level Monte Carlo and sample-adaptive model hierarchies. SIAM/ASA J. Uncertain., 7:93–116, 2019.

- [15] W. Dörfler. A convergent adaptive algorithm for Poisson’s equation. SIAM J. Numer. Anal., 33:1106–1124, 1996.

- [16] M. Eigel, C. Gittelsson, C. Schwab, and E. Zander. Adaptive stochastic Galerkin FEM. Comput. Methods Appl. Mech. Engrg., 270:247 – 269, 2014.

- [17] M. Eigel, C. Gittelsson, C. Schwab, and E. Zander. A convergent adaptive stochastic Galerkin finite element method with quasi-optimal spatial meshes. ESAIM–Math. Model. Num., 49(5):1367–1398, 2015.

- [18] M. Eigel, Ch. Merdon, and J. Neumann. An adaptive Monte Carlo method with stochastic bounds for quantities of interest with uncertain data. SIAM/ASA J. Uncertain., 4:1219–1245, 2016.

- [19] D. Elfverson, F. Hellman, and A. Målqvist. A multilevel Monte Carlo method for computing failure probablities. SIAM/ASA J. Uncertain., 4:312–330, 2016.

-

[20]

S. Funken, D. Praetorius, and P. Wissgott.

Efficient implementation of adaptive P1-FEM in Matlab, software

available at

http://www.asc.tuwien.ac.at/~praetorius/matlab. - [21] S. Funken, D. Praetorius, and P. Wissgott. Efficient implementation of adaptive P1-FEM in Matlab (extended preprint). ASC Report 19/2008, Vienna University of Technology, 2008.

- [22] S. Funken, D. Praetorius, and P. Wissgott. Efficient implementation of adaptive P1-FEM in Matlab. Comput. Meth. Appl. Math., 11:460–490, 2011.

- [23] T. Gerstner and M. Griebel. Dimension-adaptive tensor-product quadrature. Computing, 71:65–87, 2003.

- [24] M. Giles. Multilevel Monte Carlo path simulation. Operations Res., 56:607–617, 2008.

- [25] D. Guignard and F. Nobile. A posteriori error estimation for the stochastic collocation finite element method. SIAM J. Numer. Anal., 56(5):3121–3143, 2018.

- [26] S. Heinrich. Multilevel Monte Carlo methods. In S. Margenov, J. Waniewski, and P. Yalamov, editors, Large-Scale Scientific Computing, volume 2179 of Lecture Notes Comput. Sci., pages 58–67. Springer, 2001.

- [27] R. Kornhuber and E. Youett. Adaptive multilevel Monte Carlo methods for stochastic variational inequalities. SIAM J. Numer. Anal., 56:1987–2007, 2018.

- [28] C. Kreuzer and K.G. Siebert. Decay rates of adaptive finite elements with Dörfler marking. Numer. Math., 1147:679–716, 2011.

- [29] F. Nobile, L. Tamellini, and R. Tempone. Convergence of quasi-optimal sparse-grid approximation of Hilbert-space-valued functions: application to random elliptic PDEs. Numer. Math., 134:343–388, 2016.

- [30] F. Nobile, L. Tamellini, F. Tesei, and R. Tempone. An adaptive sparse grid algorithm for elliptic PDEs with lognormal diffusion coefficient. In J. Garcke and D. Pflüger, editors, Sparse Grids and Applications—Stuttgart 2014, pages 191–220. Springer, 2016.

- [31] F. Nobile, R. Tempone, and C. Webster. An anisotropic sparse grid stochastic collocation method for partial differential equations with random input data. SIAM J. Numer. Anal., 46:2411–2442, 2008.

- [32] F. Nobile, R. Tempone, and C. Webster. A sparse grid stochastic collocation method for partial differential equations with random input data. SIAM J. Numer. Anal., 46:2309–2345, 2008.

- [33] B. Schieche and J. Lang. Adjoint error estimation for stochastic collocation methods. In J. Garcke and D. Pflüger, editors, Sparse Grids and Applications—Munich 2012, volume 97 of Lecture Notes Comput. Sci. Engrg., pages 271–294. Springer, 2014.

-

[34]

L. Tamellini, F. Nobile, D. Guignard, F. Tesei, and B. Sprungk.

Sparse grid matlab kit, version 17-5, software available at

https://csqi.epfl.ch. - [35] A.L. Teckentrup. Multilevel Monte Carlo Methods and Uncertainty Quantication. PhD thesis, University of Bath, 2013.

- [36] A.L. Teckentrup, P. Jantsch, C.G. Webster, and M. Gunzburger. A multilevel stochastic collocation method for partial differential equations with random input data. SIAM/ASA J. Uncertain., 3:1046–1074, 2015.

- [37] D. Xiu and J. S. Hesthaven. High-order collocation methods for differential equations with random inputs. SIAM J. Sci. Comput., 27:1118–1139, 2005.

- [38] D. Xiu and D. Tartakovsky. Numerical methods for differential equations in random domains. SIAM J. Sci. Comput., 28:1167–1185, 2007.

- [39] E. Youett. Adaptive Multilevel Monte Carlo Methods for Random Elliptic Problems. PhD thesis, Freie Universität Berlin, 2018.

- [40] J. Zech. Sparse-Grid Approximation of High-Dimensional Parametric PDEs. PhD thesis, ETH Zürich, 2018.

- [41] J. Zech, D. Dũng, and C. Schwab. Multilevel approximation of parametric and stochastic pdes. SAM Research Report 2018–05, ETH, Zurich, 2018. https://www.sam.math.ethz.ch/sam_reports/reports_final/reports2018/2018-05.pdf.