A General Approach to Fully Linearize the Power Amplifiers in mMIMO with Less Complexity

Abstract

A radio frequency (RF) power amplifier (PA) plays an important role to amplify the message signal at higher power to transmit it to a distant receiver. Due to a typical nonlinear behavior of the PA at high power transmission, a digital predistortion (DPD), exploiting the preinversion of the nonlinearity, is used to linearize the PA. However, in a massive MIMO (mMIMO) transmitter, a single DPD is not sufficient to fully linearize the hundreds of PAs. Further, for the full linearization, assigning a separate DPD to each PA is complex and not economical. In this work, we address these challenges via the proposed low-complexity DPD (LC-DPD) scheme. Initially, we describe the fully-featured DPD (FF-DPD) scheme to linearize the multiple PAs and examine its complexity. Thereafter, using it, we derive the LC-DPD scheme that can adaptively linearize the PAs as per the requirement. The coefficients in the two schemes are learned using the algorithms that adopt indirect learning architecture based recursive prediction error method (ILA-RPEM) due to its adaptive and free from matrix inversion operations. Furthermore, for the LC-DPD structure, we have proposed three algorithms based on correlation of its common coefficients with the distinct coefficients. Lastly, the performance of the algorithms are quantified using the obtained numerical results.

Index Terms:

Digital predistortion, massive MIMO, direct learning architecture, indirect learning architecture, recursive prediction error method.I Introduction

In the wireless transmitters, the radio frequency (RF) power amplifiers (PAs) are used to amplify the modulated signals for distant transmissions. However, the in-band and out-of-band nonlinear distortions occur to the signals amplified near to saturation region of the PAs [1]. This can be reduced by employing some backoff to the peak power of the signals. But, it reduces the efficiency of the PAs. Therefore, the preprocessing like digital predistortion (DPD) over the transmit signals before the PAs are required to linearize the resultant signals towards the saturation region. Since a decade, many works have focused on the linearization of multiple power amplifiers in the transmitters like massive MIMO (mMIMO) transmitters. But, they have focused on the linearization in a particular direction of beamforming instead of linearizing all the PAs. Because, the linearization of each PA requires separate DPD block along with the driving RF chain. Thus, due to high complexity, it is not suitable for an economical mMIMO transmitter. To deal with it, in this work, we have proposed a most general approach to fully linearize all the PAs with less complexity. Also, we have discoursed in detail about the fundamentals behind the challenges and the procedure to tackle it.

I-A Related Works

The preprocessing using DPD has an inverse property to the nonlinear PA to mitigate the nonlinearties in the desired transmit signal [2]. From the state-of-the-art, mostly the linear parametric models have been used for the the DPD [3]. One of the methods to identify the DPD coefficients is least square (LS) due to its fast convergence [4, 5, 6]. But, despite mathematical simplicity, its computational complexity is high due to engagement of inverse operations of the matrices of large sizes that correspond to the estimation of large number of DPD coefficients. However, many works have proposed the algorithms to reduce the complexity for the identification of the DPD based on LS method [7, 8, 9, 10]. For example, the size of the matrix is reduced by normalization of the DPD basis functions (BFs) followed by their pruning [7]. Also, based on stationary random process, the time varying matrix associated with the DPD coefficients is replaced by a constant covariance matrix [8]. Further, in an iterative algorithm based on LS, the samples of the DPD coefficients (or the size of the matrix) can be reduced by considering the correlation in the observation errors between two iterations [9]. Besides, the matrix size can also be reduced using the eigenvalue decomposition and principal component analysis (PCA) that decreases the order of the memory polynomial model of the DPD [11, 10]. In eigenvalue decomposition, the number of DPD coefficients can be reduced by considering only the dominant eigenvectors. Whereas, in PCA, the reduction is achieved by converting the correlated BFs of the DPD into uncorrelated BFs.

Although, the above techniques help in reduction of the size of the matrices, but, for time varying and highly nonlinear PAs, still, the required number of DPD coefficients is large. Thus, it leads to an undesirable large matrix operations. Therefore, the recursive based algorithms like least mean square (LMS) [12], recursive least squares (RLS) [13, 14], and recursive prediction error method (RPEM) [15] are computationally more reliable at the cost of their slow convergence to the desired optimal value of the variables. Using LMS, the DPD adjusts its coefficients to minimize the mean square error (MSE) between the PA output and the desired signal. The coefficients are updated using stochastic gradient decent method that minimizes the instantaneous error in each iteration. However, LMS is quite unstable and it is very sensitive in the step size for the update [16]. In conventional LS estimation, a batch of input and output data samples of the PA are used to update the DPD coefficients. But, in RLS, using a set of equations, the LS estimation is represented recursively and the coefficients are updated accordingly for the obtained new data sample of the input and output. To discount the influence of older samples, it uses an exponential weighing known as forgetting factor. The chosen value of the forgetting factor gives a trade-off between the precision and convergence and its low value provides high fluctuation to noise. Therefore, the forgetting factor is improved further in RPEM by considering its variation with time [17]. In the existing works, mostly, these adaptive algorithms are applied to two types of DPD learning architectures: (i) direct learning architecture (DLA) [18, 19] and (ii) indirect learning architecture (ILA) [13, 14]. DLA has better performance in the presence of noise at the output the PA, but, ILA is more effective in the convergence rate [20]. Therefore, ILA is widely used for the identification of the DPD. Also, in our proposed work, we have considered RPEM algorithm in an ILA architecture for the DPD identification. Next, we describe the state-of-the-art for the linearization of the multi-antenna transmitters.

In the multi-antenna systems like MIMO or mMIMO, although the PAs in the transmitters are of same type, but, in practice, they have different nonlinearties due to their sensitivity to process, supply voltage, and temperature (PVT) [21, 22]. Therefore, a single DPD is not capable to linearize all the PAs [23] and ideally, each PA requires a separate DPD [24]. But, the ideal case provides undesirably high complexity in hardware implementation as well as in processing and even not feasible for a mMIMO transmitter where hundreds of PAs need to be linearized. Subsequently, instead of linerizing all PAs, a resultant single PA can be linearized whose output is the sum of the outputs of the PAs [25, 26]. However, it addresses the average nonlinerites of the PAs, thus, none of them is fully linearized. On the other hand, instead of sum, the beam-oriented (BO) output of the PAs in a given direction can be linearized using a single DPD [27, 28, 29]. As it addresses the nonlinearity of the BO output in the desired direction (main lobe), again, the PAs are not fully linearized. Thereby, they are not able to linearize the outputs in other directions that gives the nonlinear sidelobes and typically, their power level is only dB lesser than the linear main lobe [30]. This can be improved by frequently updating the DPD for different directions. Also, the number of DPD coefficients per update can be reduced using the pruning algorithms [31]. However, the frequent update is not reliable for online operations and it leads to high computational complexity. Moreover, at a time, the DPD is identified for a particular BO direction and the PAs are not fully linearized that still gives the comparable nonlinear sidelobes to the main lobe. If we assume the similar distribution of nonlinearites over the PAs, the side lobes can be reduced by optimally adjusting the amplitude of the phase shifters in the BO output [32]. However, in general, it cannot provide the full linearization of the PAs. The performance towards the full linearization can be improved by including extra tuning box to each PA. The tuning boxes compensate the nonlinear differences between the PAs such that the resultant nonlinearity is same for each PA, thereafter, using a single DPD, the PAs are fully linearized [33, 34, 35]. Nonetheless, for the compensation of the differences, each tuning box is modeled using a polynomial model which needs to be identified using a learning algorithm. Therefore, its complexity is approximately similar to incorporate separate DPD for each PA. Different from only DPD operations, the sidelobes in the BO output can be linearized more reliably using the two layer of operations: the DPD training followed by the post-weighting coefficients optimization that are multipled by the DPD output signal and distributed at the respective PAs’ input [36, 37]. In a simplified analysis, different post-weighting coefficients are assigned for each PA, but, in a branch of a PA, same post-weighting coefficient are multiplied to the BFs of the DPD. Thus, due to less degree of freedom per branch, it is less reliable in post-weighting linearization of the PAs [36]. Also, to distribute different signals to the branches of the PAs, separate RF-chain is needed for each PA that gives a high complexity in a mMIMO transmitter. Later, in our proposed work [37], we adopted an adaptive post-weighting architecture that increases the degree of freedom (DOF) per branch as well as reduces the number of RF-chains requirement. But, still, due to optimization of post-weighting coefficients for discrete range of directions, the PAs are not fully linearized.

I-B Motivation and Key Contribution

As described earlier, the PAs in a multi-antenna transmitter can be fully linearized using identification of a separate DPD to each PA [24]. But, it leads to high complexity in the structure and in the computation to learn the coefficients. Also, for the distribution of the predistorted signals, it requires a separate RF-chain to each PA. Based on it, we propose a most general approach using a low-complexity DPD (LC-DPD) structure which approximates seperate DPD identification requirement as well as the reduction of the number of RF-chains as per the requirement in the mMIMO transmitters. The key contribution of this work is four-fold as follows. (i) First, we deduce the reduction in the number of coefficients for a given type of PAs in a subarray from the measurement data and the obtained numerical result of a system setting. Then, we propose a fully-featured DPD (FF-DPD) scheme to fully linearize the PAs in a subarray and describe its complexity in terms of number of multipliers, adders, and RF chains. (ii) Using the FF-DPD structure, we derive the less complex and non-trivial LC-DPD structure. The number of coefficients in it is reduced based on a geometric sequence and corresponding coefficients are represented in a block vector form. Based on the geometric sequence, we derive the expression of the number of multipliers, adders, and RF chains which are significantly reduced; thus, reduces the complexity. (iii) Next, for the training of the coefficients for the two schemes, we propose four algorithms based on indirect learning architecture based recursive prediction error method (ILA-RPEM): one for the FF-DPD scheme and three for the LC-DPD. Apart from the structural complexity of the FF-DPD, we also describe the computational complexity of its training. The performance of the three algorithms for the LC-DPD is determined based on the correlation of its common coefficients to the distinct coefficients in the structure. It is also shown that the complexities of the three algorithms are less than the algorithm for FF-DPD. Further, for various operations in the four algorithms, we define the four operators and describe their properties. (iv) Lastly, we obtain the numerical results in terms of power spectral density (PSD) and error vector magnitude (EVM) using the algorithms for the two schemes and obtain the various insights by comparing their performances.

II Structures for Full Linearization

In this section, first, we describe the ideal structure for the predistortion to fully linearize the multiple PAs. Thereafter, we derive a low-complexity structure that approximates the full linearization.

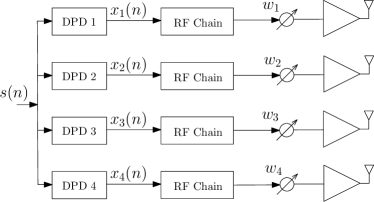

If we consider a subarray of PAs in a mMIMO transmitter as shown in Fig. 1 (where ), ideally, separate DPDs are applied to the respective PAs for the full linearization. As each DPD output signal is different, therefore, a separate RF-chain is employed. Thereafter, the following signals are phase shifted using the analog phase shifters (analog beamforming weights), ; to get the BO output from the PAs in a specific direction. Based on general memory polynomial (GMP) [3], the th DPD output to the input message can be expressed as:

| (1) |

where is the coefficient for the BF, of th power and th delay. Eq. (1) represents the most general model where the memory length and the order of the polynomial depends on the th PA. The outputs111For convenience, the time marker index of the signals are omitted. of the DPDs in the subarray are represented by a vector . Further, is multiplied by the beamforming weight and inputted to the respective th nonlinear PA. Output of the PA can be expressed as:

| (2) |

where represents the nonlinear function for the th PA. For the PAs in the subarray, the output vector can be expressed as: . Nevertheless, the implementation of the general architecture in Fig. 1 to completely linearize all the PAs is highly complex. Because, the different set of BFs with their coefficients for each of the DPDs require many delays, multipliers, and adders. Further, the computational complexity of the iterative/learning algoirthm to identify the coefficients for each of the DPDs is undesirably high. Also, the number of RF chains is same as the number of PAs, in the subarray which is not economical for a mMIMO transmitter.

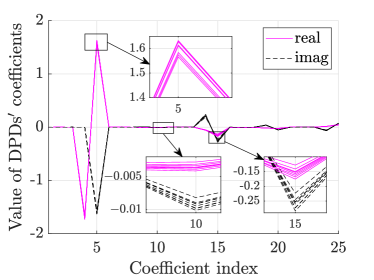

In order to simplify the structure, first, we analyze the values of the identified DPDs’ coefficients for the given PAs in a subarray. In Fig. 2, we have plotted the real and imaginary values of the identified coefficients of DPDs to fully linearize the respective traveling-wave tube (TWT) PAs based on Saleh model [38] having different AM/AM and AM/PM nonlinearities as described in Section V. Here, and ; . The DPDs are trained using the adaptive ILA-RPEM algorithm which is described in detail in the following sections. From the figure, it can be observed that out of BFs, the coefficients of some BFs with indices in the set are non-zero. Further, the index set of the BFs of non-zero coefficients is same for all PAs, because, the PAs are of same type222Note that in the supplementary file of [39], from the measurement of outputs of 16 HMC943APM5E PA ICs for the input signal at GHz, all the PAs nonlinerties are identified using the coefficients of same BFs. Therefore, they can be linearized using the DPDs’ coefficients of same BFs.. Also, the deviation in the values of a coefficient for different PAs is higher for higher value of the coefficient than the coefficients of lower values. For example, the mean deviations for the indices and are and for real part and and for the imaginary part, respectively. Thus, the coefficients with higher values dominate in the linarization of the PAs. Based on these observations, next, we reduce the number of coefficients in the proposed two DPD schemes.

II-A Fully-Featured DPD (FF-DPD)

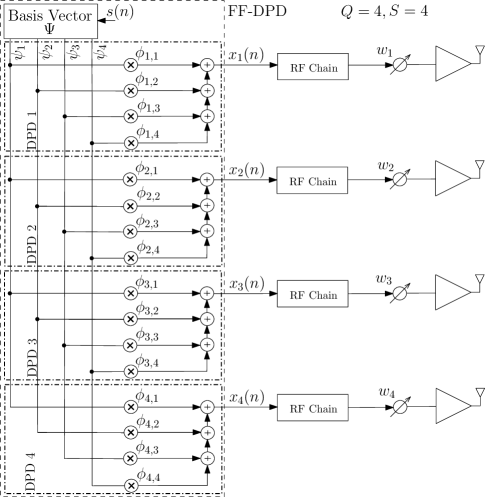

As described earlier in this section, the predistortion signals for a given type of PAs can be obtained using the same set of BFs based on GMP (cf. Fig. 2). Thus, in FF-DPD, we consider the same set of BFs of order and memory length for all the PAs in the subarray. Therefore, the th output from the FF-DPD can be obtained again using (1) after substituting and . The total number of BFs in the set for and is . However, as observed in Fig. 2, out of BFs, some BFs have nonzero DPD coefficients for a given type of PAs. Therefore, in general, we represent BFs as a vector with their respective nonzero coefficient vector for the th PA as , where is the th BF333BF is a function of , is given by for . and is its nonzero coefficient for . Using and , in (1) can be expressed in matrix form as:

| (3) |

Using , the output of the th PA is obtained using the same process as in (2) where is multiplied by the phase shifter and inputted to the PA to get , thus, output vector is obtained. Moreover, the coefficient vectors for the PAs in the subarray can be expressed in a block vector as: . From Fig. 3(a), coefficients are multiplied by each BF. Thus, for BFs, the FF-DPD structure has coefficients. Besides, the number of multipliers, in the structure is same as the number of coefficients, i.e., . Further, using the structure of FF-DPD in Fig. 3(a), the number of adders, can be determined as follows444In this work, the numbers of adders for the different structures are determined for the assumption that an adder has two inputs and one output.. Each predistorted output (; ) is determined though the sum of the multiplications of the coefficients by respective BFs. Therefore, in generation of each output, the number of adders is , i.e., one less than the number of coefficients. Thus, for outputs, the total number of adders, . Moreover, the number of RF chains, is same as the number of PAs, i.e., . For instance, Fig. 3(a) depicts the FF-DPD for and . It has , , and . If we compare Fig. 3(a) with Fig. 1, the ideal structure for the linearization of the subarray is same as the structure of FF-DPD except the same set of BFs has been used for all PAs in the later. Thus, the complexity of FF-DPD is still high in terms of multipliers, adders, and the RF chains. These complexities can be reduced using LC-DPD which is described next.

II-B Low-Complexity DPD (LC-DPD)

Using LC-DPD, we reduce the complexity to fully linearize the PAs as follows. As described earlier (cf. Fig. 2), coefficients of a dominant BF in the generation of the predistorted signals for different PAs has higher deviation in its value. Therefore, to reduce the number of coefficients in LC-DPD, the BFs in the vector are arranged in decreasing order of their dominance. Then, more coefficients are multiplied by a higher dominant BFs than the lower dominant BFs to generate the predistorted signals. Therefore, different from FF-DPD, in LC-DPD, the number of coefficients are multiplied adaptively by the BFs according to their dominance. Therefore, we decrease the number of coefficients based on a geometric series as follows.

| (6) |

where , ; and the cases are: Case I: and Case II: . According to the sequence in (6), the BFs are divided into groups, where each of the BFs in the th group are multiplied by coefficients; thus, the total coefficients in the th group is . Further, over the groups, the number multiplied coefficients per BF decreases as a geometric sequence with a common ratio . The sequence in Case II is same as in Case I except each BF in the last group is multiplied by one coefficient in Case II, because, and the number of coefficient per BF cannot be a fraction. Now, using this sequence, we define the coefficient vector of the LC-DPD as below.

Definition 1.

For the sequence of the number of coefficients multiplied by different BFs as expressed in (6), the coefficient vector for the LC-DPD can be represented as:

| (7a) | |||

| (7b) |

Example: For LC-DPD in Fig. 3(b) where and , the BFs in the vector are divided into groups and each group contains two BFs. For and , the sequence of number of coefficients is: ; thus, the total number of coefficients is . Here, each of the BF in the first group is assigned by coefficients, while for the second group, it is as depicted in the figure. Further, for the LC-DPD coefficient vector , the Case I in (7a) is satisfied, thus, using (7), , and , the coefficient vector .

Furthermore, the total number of coefficients (or multipliers) in , the number of adders, and the number of RF chains in the structure of LC-DPD can be determined using Lemma 1 as described below.

Lemma 1.

Proof:

The expression of in (8c) is trivial which is the sum of the terms of the sequences in (6) for the two cases. After substituting the condition of the special case: ; in (8c), is taken common, thus, it becomes a geometric series with common ratio . After simplifying, we get as in (8f). The number of adders, in the LC-DPD structure can be determined as follows. As described in the structure of FF-DPD, for generation of a predistorted output, if the coefficients to the respective BFs are completely different, then, the number of adder used in the generation is , i.e., one less than the number of coefficients. It can also be observed for the output of the LC-DPD in Fig. 3(b). But, for the output , the coefficients for BFs and are different, while the coefficients for and are equal to the respective coefficients for . We find that for the generation of , the number of adders is which is equal to the number of different coefficients. Based on it, the total number of adders, is obtained as in (9) which is equal to the total number of coefficients (or multipliers) subtracted by the number of predistorted outputs which uses completely different set of coefficients, given by: . For Case II in (6), and only one output is generated by completed different set of coefficients. So, represents the number of different sets of coefficients, thus, in (9) is for both the cases. Moreover, the number of RF chains, depends on the number of coefficients multiplied by each of the BF in the first group, i.e., as expressed in (9). For instance, in Fig. 3(b), each of and are assigned by coefficients, thus, . ∎

If we compare the LC-DPD to FF-DPD in Fig. 3, the number of multipliers, adders, and RF chains in LC-DPD are reduced by the factors, , , and , respectively.

III Training Based on ILA-RPEM: Part I

Now, we describe the ILA-RPEM based learning for FF-DPD and LC-DPD schemes to fully linearize a subarray of PAs. In Part I, we focus on the learning using FF-DPD structure where first, we describe the learning for FF-DPD coefficients, then, the learning for LC-DPD coefficients is realized by utilizing the structure of FF-DPD. Whereas, in Part II, the learning completely exploits the structure of LC-DPD.

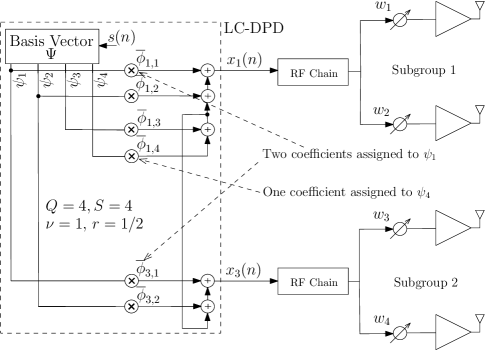

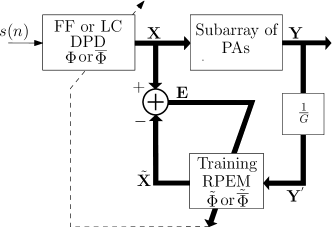

Fig. 4 represents a general ILA architecture to linearize a subarray of PAs using RPEM algorithm. Here, the message is inputted to the FF-DPD (or LC-DPD) block with coefficient vector (or ) which generates a vector of predistorted signals using (3). Thereafter, these signals are inputted to the respective PAs to get the output vector using (2). To minimize the nonlinearties in , in the feedback loop, first, is scaled by the inverse gain of the PAs to get , where . Then, it is inputted to the training block. Based on RPEM, the block estimates the FF-DPD (or LC-DPD) coefficient vector (or ), where is defined similar to as in Section II-A for FF-DPD structure, whereas, is defined as in (7) for LC-DPD structure. Thereafter, it is copied to FF-DPD (or LC-DPD) block, i.e., (or ) and again generates followed by . The process repeats until (or ) converges. Thereafter, the FF-DPD (or LC-DPD) is trained to fully linearize the PAs.

III-A Linearization of a PA using ILA-RPEM

We study the processing behind the linearization of th PA of the subarray using the ILA-RPEM in Fig. 4 as follows. Using the input , the predistorted output followed by the PA output are obtained using (3) and (2), respectively. To capture the inverse of the nonlinear behavior of the PA, the scaled output of the PA is inputted to the RPEM based learning block which generates the postdistorter signal similar to (3) as:

| (10) |

where is the th vector of the block vector and is the vector of BFs defined using same polynomial terms as in , only the difference is that instead of , is the input to the BFs in . Thus, the th element of the vector is: . Now, the goal of the RPEM algorithm is to minimize the difference between the postdistorted signal in (10) and the predistorted signal in (3) iteratively by optimizing . At the end of each iteration, is copied to , i.e., and using it, the algorithm tries to capture the inverse of the nonlinear characteristics of the PA. Thus, using the estimated value at the end of the convergence, the FF-DPD generates the optimal predistored signal to linearize the th PA.

Now, we describe the process to minimize the difference in each iteration. The cost function based on the average of the power content of over the long horizon is defined as:

| (11) |

From [40], in (11) can be minimized using the negative gradient of in . It can be obtained as:

| (12) |

Using the gradient in (12), the training block performs the below computations in (13) based on RPEM to get the trained coefficients for the th PA in the th iteration [40].

| (13a) | ||||

| (13b) | ||||

| (13c) | ||||

| (13d) | ||||

| (13e) | ||||

Here, in (13a), is computed. Thereafter, the forgetting factor is determined recursively in (13b) using its value in the previous iteration and the rate of growth . The initial value and grows exponentially to with iterations. Using , BF vector , and the covariance matrix , the scalar is computed in (13c). Initial value , where is the identity matrix and is a constant. Finally, matrix is obtained in (13d) followed by is determined in (13e) recursively at the end of the iteration. Moreover, RPEM is free from complex matrix inverse operations like in a LS estimation as is scalar. Now, using the above study for the linearization of th PA, we realize the full linearization of PAs of a subarray.

III-B FF-DPD to Linearize a Subarray using ILA-RPEM

As described earlier, the structure of FF-DPD in Fig. 3(a) is similar to assigning individual DPD in Fig. 1 to each of the PAs. Its complexity is reduced only due to utilizing same set of BFs for a given type of PAs. Therefore, using FF-DPD, the full linearization for PAs through ILA-RPEM is same as the parallel linearization of each of the PAs using the same process as for the th PA (cf. previous paragraph). For the parallel operations, the different parameters are arranged in the matrix form as follows. We define, , , , , , and . Here, is diagonal matrix constructed using the input scalar or matrix elements.

In Algorithm 1, the values are assigned to the independent parameters , , and . Then, the initial values of the covariance matrix and the forgetting factor are computed in Steps 3 and 4. Thereafter, the calculations from Step 9 to Step 15 are repeated until the coefficient vector converges. Lastly, we obtain the optimal coefficient vector in Step 18 which is copied to the FF-DPD, i.e., .

Performance

If we examine Step 13 of Algorithm 1, is iteratively estimated using the correlation matrix . Therefore, the coefficients in the vector of the block vector are correlated with each other to provide optimal predistorted signal to the linearize the th PA. As each PA is provided a separate predistorted signal, hence, FF-DPD gives the best performance.

Complexity

As the matrix multiplication dominates in the complexity of an algorithm [41], therefore, we consider Steps 11, 12, and 13 to determine the computational complexity of Algorithm 1 in an iteration. In Step 11, the matrices , have the sizes and , respectively. The computational complexity of is . Now, the matrix has the size which is further multiplied by with complexity . Thus, the total complexity of Step 11 is . Similarly, the complexities of Steps 12 and 13 are and , respectively555Note that the algorithm is still free from the matrix inverse operations, because, although, and are the matrices, but, they are diagonal matrices and their inverses are only the inverse of their diagonal elements.. In these operations, the highest order term is , therefore, per iteration, the computational complexity of the algorithm is .

Lemma 2.

Using property of diagonal matrix multiplication, complexity of Algorithm 1 is reduced by a factor of .

Proof:

As the direct multiplication of diagonal matrices is not computationally efficient due to redundant multiplication of entries. For example, the two diagonal matrices of size complies the following multiplication, , where and ; are the scalars. If we consider the conventional matrix multiplication, total number of multiplications is , but, according to the diagonal matrix multiplication, only multiplications are required of their diagonal entries. Thus, there are redundant multiplications in the former method. The same multiplication property is applied if and are matrices provided their sizes should satisfy the multiplication . If we employ it in the matrix multiplication of Step 11, there are respective diagonal elements multiplications. Further, in each diagonal multiplication, for example, in th, the matrix multiplication is with total number of multiplications . Thus, total multiplication is . Similarly, the computational complexities of Steps 12 and 13 are and , respectively. Thus, per iteration, the computational complexity is which is times less than the conventional method. ∎

III-C Realization of Learning for LC-DPD using FF-DPD

To learn the LC-DPD coefficient vector , we exploit the learning process for FF-DPD. In this regard, vector is converted into FF-DPD coefficient vector and vice versa. To realize the conversions mathematically, we define two operators and .

Definition 2 (A Linear Operator ).

The function that transforms the shape of the coefficient vector into the shape of as expressed in (14a) is a linear operator as defined in (14b).

| (14a) | ||||

| (14b) | ||||

where , for , , and . Here, indicates that after performing the operation in (14a), the th element of vector is assigned to the th element of vector . Furthermore, the operator has the following two properties.

-

(i)

In each row vector of the matrix , only one element is and the remaining elements are .

-

(ii)

The sum of the elements in the th column vector of the operator depicts the repetition of th element of vector in the vector . Also, if the th element of lies in the th vector of the block vector , then the number of repetition of th coefficient of in is which is same as the sum of the elements of the th column vector of .

Example: From Fig. 3(b), the block vector , where , and . Here, the parameters for LC-DPD are: , , , , , and . To reshape into the shape of as for FF-DPD in Fig. 3(a), we use the operator as given below, where for .

| (19) |

In (19), satisfies the property (i) as each of its row vector contains one element as and remaining are . Next, to verify the property (ii), for instance, the sum of the elements of the th column vector is which entails that th coefficient of repeats times in . It can also be determined using the expression where as the 5th column vector lies in the 2nd group and it gives after substitution of the value of the parameters. Besides, the block vector is obtained using the computation in (14a), where , , , and .

Definition 3 (A Linear Operator ).

The function that transforms the shape of the coefficient vector into the shape of as expressed in (20a), where some of the elements of are the average of some of elements of , is a linear operator as defined in (20b).

| (20a) | ||||

| (20b) | ||||

| (20e) | ||||

where , , , , , and . The value of the matrix elements is determined using (20e) based on the relationship between the elements of the vectors and . Besides, the operator has the following two properties.

-

(i)

In each row vector of the matrix , the sum of the elements is .

-

(ii)

Each column vector of has only one nonzero element which takes the value .

Example: If we consider Fig. 3 for this example, from Fig. 3(a), the block vector for FF-DPD is expressed as , where for and . To transform into the shape of as for the LC-DPD in Fig. 3(b), we use the operator as given below, where , and .

| (23) |

In (23), satisfies the property (i) as sum of the elements in each of its row vector is . Further, in each column vector, only one element is nonzero and its value is . For instance, in the second column vector, the third element is nonzero and for it, parameter . So, . Thus, property (ii) is also satisfied by . Moreover, after performing the operation in (20b), the relationship between the elements of and can be expressed as: , , , , , and .

Now, using Algorithm 2, we train the coefficient vector of LC-DPD as follows. Apart from the parameters, , , , and , the values of the operators, and are also inputted to the algorithm. As we realize the training of by exploiting the training of , the steps of Algorithm 2 are same as of Algorithm 1 except the Steps 4, 8, and 9. In Step 4, using operator , is converted into to compute other initial values of the parameters for the FF-DPD structure. While Steps 8 and 9 enforce the learning of FF-DPD coefficients in to incorporate the repetitive characteristics of LC-DPD coefficients in in each iteration. The forth process in Step 8 takes the average of some coefficients of which has to be repeated in the LC-DPD structure and is assigned to a coefficient of (cf. example of Definition 3). In the back process in Step 9, again, this coefficient in is repeated in (cf. example of Definition 2). Thus, it enforces the repetitive coefficients in to have equal value in each iteration as the learning is based on FF-DPD. Finally, after the convergence, it returns the estimated LC-DPD coefficient vector .

Performance and Complexity

As in Algorithm 2, the LC-DPD coefficient vector is trained by exploiting the training of the FF-DPD coefficient vector where using the operator , the common coefficients in is obtained by taking the average of some of the coefficients in (cf. Example of Definition 3). However, the obtained common coefficients after the average loose the correlation with the distinct coefficients. Therefore, using it, the generated predistorted signals are not optimal as in FF-DPD to linearize the PAs; thus, its performance is low. Further, the complexity of the algorithm is described as follows. Although, the operators, and are represented as the matrices in (14b) and (20b) to analyze the operations mathematically, but, in practice, they have only assignment and average operations whose complexities are negligible compared to the matrix multiplications. Therefore, the dominant operations in Algorithm 2 are same as Algorithm 1, thus, its complexity per iteration is . To enhance the performance and to reduce the complexity, we propose the improved algorithms in next section.

IV Training Based on ILA-RPEM: Part II

For reducing the complexity of the algorithm, we need to train LC-DPD coefficient vector by only exploiting the structure of LC-DPD instead of enforcing its training using the FF-DPD. Because, the length of vector as given by (8) is less than . Thus, the training only using the length of vector reduces the sizes of the matrices, and in the dominant matrix multiplications. Based on it, we propose two algorithms.

In order to train by completely exploiting the LC-DPD structure, first, we need to represent in a suitable form, i.e., in another block vector where each vector in it consists the coefficients that are multiplied by the BFs to generate a predistorted signal that is distributed to a subgroup of PAs666In LC-DPD assisted subarray, the PAs of the subarray are divided into subgroups, where each subgroup consists number of PAs. Thus, LC-DPD structure generates predistorted signals to distribute them to respective subgroups..

Definition 4 (Reshape of as ).

To generate the predistorted signals using the LC-DPD coefficient vector , it can be reshaped as the block vector , given by:

| (24) |

where is the th vector in . Here, the grouping of the vectors is based on their lengths, i.e., the vectors in a group have same length. The total number of groups is which is equal to the number of vectors in (cf. (7)). In the th group, the number of vectors is and each of the vector is of length as shown in (24). Besides, . Further, and are given by:

| (25a) | ||||

| (25b) | ||||

where , and for ; otherwise, .

Example: Again, we consider the instance of LC-DPD structure with , , , and in Fig. 3(b) to reshape to . From (7), where , , and . Using (24), its reshape as to generate the predistored signals is given as: . Here, number of groups, and substituting the parameters values in (25), we get , , and . Thus, and .

Corollary 1.

As is the reshape of , therefore, the elements in the former vector is same as in the later, thus, the length of the two vectors is equal. The length of can be obtained by , hence, from the length of in (8c), .

The above corollary can be proved by substituting in (25) followed by simplifying , it gives in (8c). Furthermore, to reshape into , we define a linear operator as below.

Definition 5 (A Linear Operator ).

Example: Again, we consider the example for Fig. 3(b) to reshape into , where , , , and . For it, the operator is given by:

| (29) |

Corollary 2.

The operator is always a square matrix as it is used to reshape the vector into the vector using the same elements. Also, the column vectors in are unit vectors and they are orthogonal to each other. Therefore, they form a orthonormal basis in the space . Furthermore, the inverse of is its transpose, i.e., [42]. Hence, from (26a), using , can be reshaped back to as: .

Now, we utilize the operator to train the coefficient vector in Algorithm 3. In the algorithm based on ILA-RPEM, the sizes of different matrices and vectors used in the training are determined according to the shape of the vector in (24). They are defined as: the forgetting vector , , , and . To determine the parameter , first, using the outputs of the PAs, we obtain the block vector , where . From , the block vector for LC-DPD can be obtained using the operator as: . Here, and can be represented similar to in (1) for , where is replaced by . Further, using operator , can be reshaped into as: . Now, using the vectors in the block vector , can be expressed as777This procedure is used to compute from in Steps 7 and 18 of Algorithm 3.: . Now the process in the Algorithm 3 can be described as follows. The inputs to the algorithm are same as in Algorithm 2 along with the operator . In the first two steps, correlation matrix and are initialized. Using Steps 5 to 8, the initial values of and the postdistorted signal vector are determined. Thereafter, similar to Algorithms 1 and 2, the iterative steps are followed to get the the converged value of . In the loop, the operators, and represent the Kronecker and Hadamard products, respectively.

Performance and Complexity

In Algorithm 3, the block vector is reshaped as the block vector to correlate the coefficients in its vector using the correlation matrix while the training (cf. Step 16 of Algorithm 3). However, the common coefficients in it are correlated with the distinct coefficients in the first vectors of the block vector (cf. (24)). For example, in Fig. 3(b), the coefficients, , are commonly shared with the remaining coefficients , and , to generate the predistorted signals and , respectively. But, using Algorithm 3, , are only correlated with , , thus, the predistorted signal is better to linearize st subgroup of PAs, whereas, performs less to linearize the nd subgroup. Therefore, the predistorted signals generated using the coefficients in first vectors in are optimal to linearize the respective subgroups of PAs. But, still, this algorithm provides a low performance for the remaining PAs. Therefore, next, we propose an algorithm that establishes the correlation of the common coefficients with the all distinct coefficients. Besides, the complexity of Algorithm 3 in an iteration can be determined using the dominant matrix multiplications in Steps 14, 15, and 16. As the matrices, , , , and are diagonal, the respective complexities of the three steps are: , , and . Based on the dominant term, the complexity of Algorithm 3 is . As , the complexity of Algorithm 3 is lesser than Algorithms 1 and 2. Next, to correlate the common coefficients in LC-DPD structure with all the remaining, first, we define a sequence of linear operators as below.

Definition 6 (A Sequence of Linear Operators for the Back and Forth Operations).

To establish the correlation of the common coefficients to set of distinct coefficients in the LC-DPD structure, a sequence of operators is defined in (30a), where the th operator in the sequence is given by (30b) and its th matrix element is expressed in (30c).

| (30a) | ||||

| (30b) | ||||

| (30c) | ||||

| (30d) | ||||

| (30e) | ||||

where , for ; otherwise for . , for , , , and . Again, like in (26b), the sum of the elements in each of the row or column vector of is . Further, from Corollary 2, . Besides, the common coefficients are with the th set of distinct coefficients in the vector which is obtained using the th operator in (30d). Here, first, is multiplied by , then, using a truncation function , the first elements of the vector is truncated to get . Its reverse operation, i.e., the conversion of to can be performed using (30e), where updates the first elements of by merging them with of length .

Example: For the instance of the LC-DPD structure with , , , and in Fig. 3(b), , thus, from (30a), . As described earlier, the coefficients, , are commonly shared with , and , . From (30d), we can find the vectors, and from using the operators and as given by (30b) which are:

| (35) |

Also, using this example, we can realize (30e).

Now, use of the sequence of operators, is described in Algorithm 4 to correlate the common coefficients with the remaining distinct coefficients. Apart from the input parameters in Algorithm 2, and the number (defined later) are inputted in Algorithm 4. Then, it initializes the correlation matrix and the forgetting matrix for . In Steps 5, 6, and 7, similar to earlier algorithms, it determines the initial values of and . Thereafter, three nested loops are initialized. In Steps 13 and 14 of the innermost loop, the algorithm determines and using (30d) in the th iteration. Steps 15 to 22 follow the process to update in the current iteration. Then, is converted back to using (30e). This process repeats for iterations to correlate the common coefficients to the th set of distinct coefficients. Thereafter, increases by unity to establish the correlation of the common coefficients with next set of distinct coefficients. Thus, the two inner loops repeat until and at this point, the algorithm completes the one cycle to correlate the common coefficients with all sets of distinct coefficients. The outermost loop repeats this cycle until converges.

Performance and Complexity

Algorithm 4 performs better than Algorithms 2 and 3, because, the common coefficients establish the correlation with all distinct coefficients in the vector . Therefore, using it, the generated predistorted signal vector gives the better linearization of the PAs in the subarray. Moreover, for the fair comparison of this algorithm with the other earlier algorithms in terms of computational complexity, we assign . The complexity of a correlation cycle depends on the dominant matrix multiplications in Steps 20, 21, and 22. For the correlation of the common coefficients with the distinct set of coefficients, the complexities of the three steps are: , , and . Thus, considering the dominant term, the complexity is . Taking inside the big O, the complexity can be approximated as: . As , but, , hence, the complexity of Algorithm 4 is greater than Algorithms 3, but, it is lesser than that of Algorithms 1 and 2.

V Numerical Results

V-A Evaluation Environment

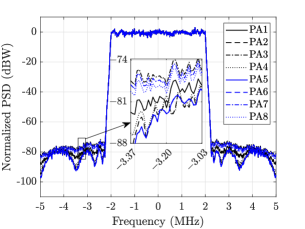

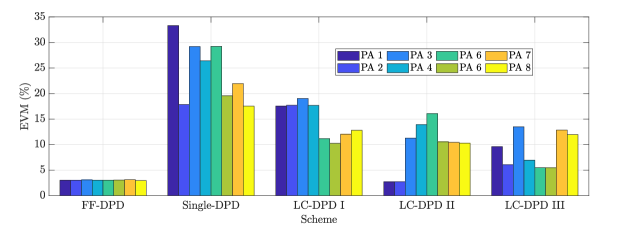

To evaluate the performance of the proposed analysis, we consider a subarray of PAs. The PAs follow Saleh model as in [43]. The parameters, and for the AM/AM distortion and and for the AM/PM distortion are given by: , , , and , where , , and are uniformly distributed over for . The GMP used for a DPD has the order and each order has the memory length, . But, as described using Fig. 2, the BFs with indices in the set have the nonzero coefficients; thus . Further, for the LC-DPD scheme, the BFs are arranged in decreasing order of their dominance. The arrangement is represented using the indices of the BFs as: . Moreover, for the LC-DPD structure, the geometric sequence in (6) has the following parameters’ values: , , , and . The bandwidth of the input signal is MHz. To get the insights on the linearization using the obtained results, the in-band average powers of the power spectral density (PSD) of the input signal and the output of the PAs, ; , are normalized to dB. Moreover, for the algorithms based on ILA-RPEM, the input parameters are set as: , , and . The linearization of the PAs is determined using the error vector magnitudes (EVMs) of their outputs with respect to the reference message signal . It is computed as: ; . The simulation for the proposed analysis is performed using MATLAB/Simulink.

V-B Performance Comparison

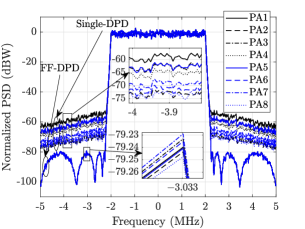

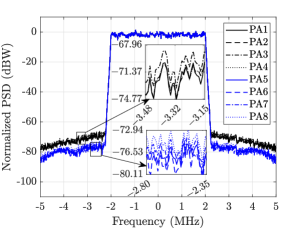

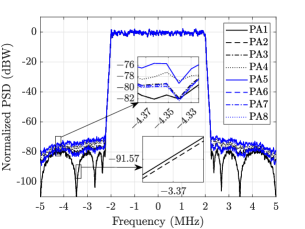

In Fig. 5, the different DPD schemes are compared in terms of linearization of the PAs in the subarray. It can be observed in Fig. 5(a) that FF-DPD gives the best performance to linearize all the PAs, whereas, single-DPD has the least performance. Because, in FF-DPD, each PA has a separate DPD to linearize itself, but, in single-DPD, all the PAs are linearized using a single DPD. Also, from the bar plot in Fig. 5(e), all the PAs have almost same EVMs and their values are around . Whereas, for single-DPD, the EVMs values of the PAs are different and the maximum value goes upto . If we compare the three LC-DPD schemes in Figs. 5(b), 5(c), and 5(d), the linearization performance of LC-DPD I is least, because, LC-DPD coefficients in it are determined using the structure of FF-DPD where correlation of the common coefficients are least to the distinct coefficients. Therefore, although, it is giving the better performance than single-DPD, but, none of the PAs is linearized properly. The average EVMs for the first four and the next four PAs are and , respectively. In LC-DPD II scheme, the structure of LC-DPD is completely exploits, but, the common coefficients are correlated with distinct coefficients for the first two PAs. Therefore, the linearization of these PAs are same as FF-DPD with average EVM equal to , while the linerization of the remaining PAs is less and their average EVM value is . In LC-DPD III, the common coefficients are partially correlated with each of the distinct coefficients, therefore, its overall performance is better than the previous two schemes. The average EVM values of LC-DPD I, LC-DPD II, and LC-DPD III schemes are , , and , respectively.

VI Conclusion

In this work, we have proposed two schemes, FF-DPD and LC-DPD to fully linearize the PAs in a subarray of a mMIMO transmitter. Although, FF-DPD provides the best performance but it has high complexity. Using the structure of FF-DPD, we derive a less complex LC-DPD. For the two schemes, four algorithms based on ILA-RPEM are described and their performances and complexities are investigated. From the obtained results we find that FF-DPD almost linearizes the PAs fully with on average EVM. The computational complexities of the three algorithms for LC-DPD is less complex, but, their performances in EVM are less than the algorithm for FF-DPD. Furthermore, among the three algorithms for LC-DPD, the third algorithm (LC-DPD III) provides the better performance as it better correlates the common coefficients to the distinct coefficients of the scheme.

References

- [1] J. Kenney and A. Leke, “Design considerations for multicarrier CDMA base station power amplifiers,” Microw. J., vol. 42, no. 2, pp. 76–83, Feb. 1999.

- [2] A. Katz, J. Wood, and D. Chokola, “The evolution of PA linearization: From classic feedforward and feedback through analog and digital predistortion,” IEEE Microw. Mag., vol. 17, no. 2, pp. 32–40, Feb. 2016.

- [3] D. R. Morgan, Z. Ma, J. Kim, M. G. Zierdt, and J. Pastalan, “A generalized memory polynomial model for digital predistortion of RF power amplifiers,” IEEE Trans. Signal Process., vol. 54, no. 10, pp. 3852–3860, Oct. 2006.

- [4] A. N. D’Andrea, V. Lottici, and R. Reggiannini, “RF power amplifier linearization through amplitude and phase predistortion,” IEEE Trans. Commun., vol. 44, no. 11, pp. 1477–1484, Nov. 1996.

- [5] Y. Liu, W. Pan, S. Shao, and Y. Tang, “A general digital predistortion architecture using constrained feedback bandwidth for wideband power amplifiers,” IEEE Trans. Microw. Theory Tech., vol. 63, no. 5, pp. 1544–1555, Feb. 2015.

- [6] Z. Wang, W. Chen, G. Su, F. M. Ghannouchi, Z. Feng, and Y. Liu, “Low feedback sampling rate digital predistortion for wideband wireless transmitters,” IEEE Trans. Microw. Theory Tech., vol. 64, no. 11, pp. 3528–3539, Nov. 2016.

- [7] S. Zhang, W. Chen, F. M. Ghannouchi, and Y. Chen, “An iterative pruning of 2-D digital predistortion model based on normalized polynomial terms,” in Proc. IEEE MTT-S Int. Microw. Symp. Digest, Seattle, WA, USA, Jun. 2013, pp. 1–4.

- [8] Z. Wang, W. Chen, G. Su, F. M. Ghannouchi, Z. Feng, and Y. Liu, “Low computational complexity digital predistortion based on direct learning with covariance matrix,” IEEE Trans. Microw. Theory Tech., vol. 65, no. 11, pp. 4274–4284, Nov. 2017.

- [9] L. Guan and A. Zhu, “Optimized low-complexity implementation of least squares based model extraction for digital predistortion of RF power amplifiers,” IEEE Trans. Microw. Theory Tech., vol. 60, no. 3, pp. 594–603, Mar. 2012.

- [10] P. L. Gilabert, G. Montoro, D. López, N. Bartzoudis, E. Bertran, M. Payaro, and A. Hourtane, “Order reduction of wideband digital predistorters using principal component analysis,” in Proc. IEEE MTT-S Int. Microw. Symp. Digest, Seattle, WA, USA, Jun. 2013, pp. 1–7.

- [11] R. N. Braithwaite, “Wide bandwidth adaptive digital predistortion of power amplifiers using reduced order memory correction,” in Proc. IEEE MTT-S Int. Microw. Symp. Digest, Atlanta, GA, USA, Jun. 2008, pp. 1517–1520.

- [12] J. Swaminathan, P. Kumar, and M. Vinoth, “Performance analysis of LMS filter in linearization of different memoryless non linear power amplifier models,” in Proc. Int. Conf. Advances Computing, Commun. Control, Berlin, Heidelberg, Jan. 2013, pp. 459–464.

- [13] P. M. Suryasarman and A. Springer, “A comparative analysis of adaptive digital predistortion algorithms for multiple antenna transmitters,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 62, no. 5, pp. 1412–1420, May 2015.

- [14] D. R. Morgan, Z. Ma, and L. Ding, “Reducing measurement noise effects in digital predistortion of RF power amplifiers,” in Proc. IEEE ICC, vol. 4, Anchorage, AK, USA, May 2003, pp. 2436–2439.

- [15] L. Gan and E. Abd-Elrady, “Digital predistortion of memory polynomial systems using direct and indirect learning architectures,” in Proc. Int. Conf. IASTED, vol. 654, p. 802.

- [16] B. Mohr, W. Li, and S. Heinen, “Analysis of digital predistortion architectures for direct digital-to-RF transmitter systems,” in Proc. IEEE Int. Midwest Symp. Circuits Syst., Boise, ID, USA, Aug. 2012, pp. 650–653.

- [17] T. Söderström and P. Stoica, System identification. Prentice-Hall International, 1989.

- [18] D. Zhou and V. E. DeBrunner, “Novel adaptive nonlinear predistorters based on the direct learning algorithm,” IEEE Trans. Signal Process., vol. 55, no. 1, pp. 120–133, Jan. 2006.

- [19] H. Paaso and A. Mammela, “Comparison of direct learning and indirect learning predistortion architectures,” in Proc. IEEE Int. Symp. Wireless Commun. Syst., Reykjavik, Iceland, Oct. 2008, pp. 309–313.

- [20] J. Chani-Cahuana, P. N. Landin, C. Fager, and T. Eriksson, “Iterative learning control for RF power amplifier linearization,” IEEE Trans. Microw. Theory Tech., vol. 64, no. 9, pp. 2778–2789, Sep. 2016.

- [21] H. Chauhan, V. Kvartenko, and M. Onabajo, “A tuning technique for temperature and process variation compensation of power amplifiers with digital predistortion,” in Proc. IEEE North Atlantic Test Workshop, Providence, RI, USA, May 2016, pp. 38–45.

- [22] E. Jarvinen, S. Kalajo, and M. Matilainen, “Bias circuits for GaAs HBT power amplifiers,” in Proc. IEEE MTT-S Int. Microw. Symps. Digest, vol. 1, Phoenix, AZ, USA, May 2001, pp. 507–510.

- [23] E. Ng, Y. Beltagy, P. Mitran, and S. Boumaiza, “Single-input single-output digital predistortion of power amplifier arrays in millimeter wave RF beamforming transmitters,” in Proc. IEEE Int. Microw. Symp.-IMS, Philadelphia, PA, USA, Jun. 2018, pp. 481–484.

- [24] K. Hausmair, P. N. Landin, U. Gustavsson, C. Fager, and T. Eriksson, “Digital predistortion for multi-antenna transmitters affected by antenna crosstalk,” IEEE Trans. Microw. Theory Tech., vol. 66, no. 3, pp. 1524–1535, Mar. 2018.

- [25] S. Choi and E.-R. Jeong, “Digital predistortion based on combined feedback in MIMO transmitters,” IEEE Commun. Lett., vol. 16, no. 10, pp. 1572–1575, Oct. 2012.

- [26] Q. Luo, X.-W. Zhu, C. Yu, and W. Hong, “Single-receiver over-the-air digital predistortion for massive MIMO transmitters with antenna crosstalk,” IEEE Trans. Microw. Theory Tech., vol. 68, no. 1, pp. 301–315, Jan. 2019.

- [27] X. Liu, Q. Zhang, W. Chen, H. Feng, L. Chen, F. M. Ghannouchi, and Z. Feng, “Beam-oriented digital predistortion for 5G massive MIMO hybrid beamforming transmitters,” IEEE Trans. Microw. Theory Tech., vol. 66, no. 7, pp. 3419–3432, Jul. 2018.

- [28] C. Tarver, A. Balatsoukas-Stimming, C. Studer, and J. R. Cavallaro, “Ofdm-based beam-oriented digital predistortion for massive MIMO,” in Proc. IEEE Int. Symp. Circuits Syst., Daegu, Korea, May 2021, pp. 1–5.

- [29] X. Liu, W. Chen, L. Chen, F. M. Ghannouchi, and Z. Feng, “Power scalable beam-oriented digital predistortion for compact hybrid massive MIMO transmitters,” IEEE Trans. Circuits Syst. I, Reg. Papers, vol. 67, no. 12, pp. 4994–5006, Aug. 2020.

- [30] C. Yu, J. Jing, H. Shao, Z. H. Jiang, P. Yan, X.-W. Zhu, W. Hong, and A. Zhu, “Full-angle digital predistortion of 5G millimeter-wave massive MIMO transmitters,” IEEE Trans. Microw. Theory Tech., vol. 67, no. 7, pp. 2847–2860, Jun. 2019.

- [31] A. Brihuega, M. Abdelaziz, L. Anttila, M. Turunen, M. Allén, T. Eriksson, and M. Valkama, “Piecewise digital predistortion for mmWave active antenna arrays: Algorithms and measurements,” IEEE Trans. Microw. Theory Tech., vol. 68, no. 9, pp. 4000–4017, Sep. 2020.

- [32] N. Tervo, B. Khan, J. P. Aikio, O. Kursu, M. Jokinen, M. E. Leinonen, M. Sonkki, T. Rahkonen, and A. Pärssinen, “Combined sidelobe reduction and omnidirectional linearization of phased array by using tapered power amplifier biasing and digital predistortion,” IEEE Trans. Microw. Theory Tech., vol. 69, no. 9, pp. 4284–4299, Sep. 2021.

- [33] C. Yu, J. Jing, H. Shao, Z. H. Jiang, P. Yan, X.-W. Zhu, W. Hong, and A. Zhu, “Full-angle digital predistortion of 5G millimeter-wave massive MIMO transmitters,” IEEE Trans. Microw. Theory Tech., vol. 67, no. 7, pp. 2847–2860, Jul. 2019.

- [34] P. Diao, L. Zhang, L. Tao, Y. Zhang, Y. Yi, H. Liu, and D. Zhao, “Full-angle digital predistortion technique for 5G millimeter-wave integrated phased array,” in Proc. IEEE MTT-S Int. Wireless Symp., vol. 1, Harbin, China, Aug. 2022, pp. 1–3.

- [35] J. Zhao, P. Liu, L. Zhai, and F. Yang, “A novel digital predistortion based on flexible characteristic detection for 5G massive MIMO transmitters,” IEEE Microw. Wireless Compon. Lett., vol. 32, no. 4, pp. 363–366, Apr. 2021.

- [36] J. Yan, H. Wang, and J. Shen, “Novel post-weighting digital predistortion structures for hybrid beamforming systems,” IEEE Commun. Lett., vol. 25, no. 12, pp. 3980–3984, Dec. 2021.

- [37] G. Prasad and H. Johansson, “A low-complexity post-weighting predistorter in a mMIMO transmitter under crosstalk,” arXiv preprint arXiv:2304.05795, 2023.

- [38] A. A. Saleh, “Frequency-independent and frequency-dependent nonlinear models of TWT amplifiers,” IEEE Trans. Commun., vol. 29, no. 11, pp. 1715–1720, Nov. 1981.

- [39] A. Brihuega, L. Anttila, M. Abdelaziz, T. Eriksson, F. Tufvesson, and M. Valkama, “Digital predistortion for multiuser hybrid MIMO at mmwaves,” IEEE Trans. Signal Process., vol. 68, pp. 3603 – 3618, May 2020.

- [40] L. Ljung and T. Söderström, Theory and practice of recursive identification. MIT press, 1983.

- [41] Y. Li, S.-L. Hu, J. Wang, and Z.-H. Huang, “An introduction to the computational complexity of matrix multiplication,” Journal of the Operations Research Society of China, vol. 8, pp. 29–43, Dec. 2020.

- [42] G. Strang, “Linear algebra and its applications 4th ed.” 2012.

- [43] C. Liu, W. Feng, Y. Chen, C.-X. Wang, and N. Ge, “Optimal beamforming for hybrid satellite terrestrial networks with nonlinear PA and imperfect CSIT,” IEEE Wireless Commun. Lett., vol. 9, no. 3, pp. 276–280, Mar. 2019.