A GNN-Guided Predict-and-Search Framework for Mixed-Integer Linear Programming

Abstract

Mixed-integer linear programming (MILP) is widely employed for modeling combinatorial optimization problems. In practice, similar MILP instances with only coefficient variations are routinely solved, and machine learning (ML) algorithms are capable of capturing common patterns across these MILP instances. In this work, we combine ML with optimization and propose a novel predict-and-search framework for efficiently identifying high-quality feasible solutions. Specifically, we first utilize graph neural networks to predict the marginal probability of each variable, and then search for the best feasible solution within a properly defined ball around the predicted solution. We conduct extensive experiments on public datasets, and computational results demonstrate that our proposed framework achieves and performance improvements to MILP solvers SCIP and Gurobi on primal gaps, respectively.

1 Introduction

Mixed-integer linear programming is one of the most widely used techniques for modeling combinatorial optimization problems, such as production planning (Pochet & Wolsey, 2006; Chen, 2010), resource allocation (Liu & Fan, 2018; Watson & Woodruff, 2011), and transportation management (Luathep et al., 2011; Schöbel, 2001). In real-world settings, MILP models from the same application share similar patterns and characteristics, and such models are repeatedly solved without making uses of those similarities. ML algorithms are well-known for its capability of recognizing patterns (Khalil et al., 2022), and hence they are helpful for building optimization algorithms. Recent works have shown the great potential of utilizing learning techniques to address MILP problems. The work of (Bengio et al., 2021) categorized ML efforts for optimization as (i) end-to-end learning (Vinyals et al., 2015; Bello* et al., 2017; Khalil et al., 2022), (ii) learning to configuring algorithms (Bischl et al., 2016; Kruber et al., 2017; pmlr-v176-gasse22a) and (iii) learning alongside optimization (Gasse et al., 2019; Khalil et al., 2016; Gupta et al., 2020). In this work, for the sake of interest, we focus on the end-to-end approach. While such an approach learns to quickly identify high-quality solutions, it generally faces the following two challenges:

-

(I)

high sample collection cost. The supervised learning task for predicting solutions is to map from the instance-wise information to a high-dimensional vector. Such a learning task becomes computationally expensive since it necessitates collecting a considerable amount of optimal solutions (see, e.g., Kabir et al. (2009)).

- (II)

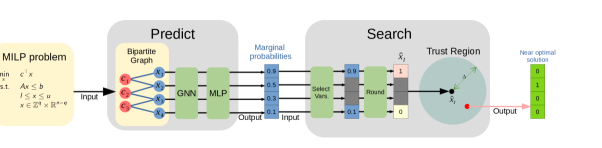

We propose a novel predict-and-search framework to address the aforementioned challenges. In principle, end-to-end approaches for MILP problems require the collection of abundant optimal solutions. However, collecting so many training samples is computationally prohibitive since obtaining optimal solutions is excessively time-consuming. We consider to approximate the distribution by properly weighing high-quality solutions with their objective values. This reduces the cost of sample collections by avoiding gathering optimal solutions as mentioned in challenge (I). Regarding challenge (II), we implement a trust region inspired algorithm that searches for near-optimal solutions within the intersection of the original feasible region and a properly defined ball centered at a prediction point. The overall framework is outlined in Figure 1.

The commonly used end-to-end approaches usually fix variables directly based on information from the prediction step, such as in Nair et al. (2020) and Yoon (2022). However, such approaches could lead to sub-optimal solutions or even infeasible sub-problems. Rather than forcing variables to be fixed, our search module looks for high-quality solutions within a subset of the original feasible region, which allows better feasibility while maintaining optimality.

The distinct contributions of our work can be summarized as follows.

-

•

We propose a novel predict-and-search framework that first trains a GNN to predict a marginal probability of each variable and then constructs a trust region to search for high-quality feasible solutions.

-

•

We demonstrate the ability of our proposed framework to provide equivalently good or better solutions than fixing-based end-to-end approaches.

-

•

We conduct comprehensive computational studies on several public benchmark datasets and the computational results show that our proposed framework achieves and smaller primal gaps than state-of-the-art general-purpose optimization solvers SCIP and Gurobi, respectively.

We make our code publicly available at https://github.com/sribdcn/Predict-and-Search_MILP_method.

2 Preliminaries

Given a vector and an index set , let denote a subvector that corresponds to the index set .

2.1 Mixed-integer linear programming

MILP techniques are used to model combinatorial optimization problems, and an MILP instance can be formulated as: , where denotes the feasible region. There are variables, with , , being their objective coefficients, lower and upper bounds, respectively. Without loss of generality, the first variables are discrete. denotes the coefficient matrix while represents the right-hand-side vector. For convenience, let denote an MILP instance. For the sake of interest, we consider each discrete variable to be binary, i.e. for . Furthermore, incorporating continuous variables into consideration will not invalidate our proposed methodology, hence in what follows we consider a pure binary integer programming problem.

2.2 Node Bipartite Graph

Gasse et al. (2019) proposed a bipartite graph representation for MILP problems. Specifically, let denote a bipartite graph, where denotes the set of variable nodes and constraint nodes, and represents the set of edges that only connect between nodes of different types. Variable nodes and constraint nodes are individually associated with the instance information e.g. degrees and coefficients. The adjacency matrix is a matrix that represents the connectivity of , as defined below:

2.3 Graph Neural Networks

Let denote the set of neighbors of node . We construct a -layer GNN as follows:

where function aggregates the feature information over the set of neighboring nodes and function combines the nodes’ hidden features from iteration with the aggregated neighborhood features, and denotes the hidden state of node in the layer. Initially, is the output of an embedding function . Note that the bipartite graph associated with an MILP instance does not include edges between variable nodes as well as constraint nodes.

2.4 Trust Region Method

The trust region method is designed for solving non-linear optimization problems as follows:

| (1) |

where denotes a set of feasible solutions. The main idea is to convert the originally difficult optimization problem into a series of simple local search problems. Specifically, it iteratively searches for trial steps within the neighborhood of the current iterate point (Yuan, 2015). The trial step is obtained by solving the following trust region problem:

| (2) | ||||

where is an approximation of the objective function , denotes a shifted of the set , is a norm of , and denotes the radius of the trust region. After solving the problem in the iteration, is updated to or accordingly. Then, new and are selected along with a new approximation .

3 Proposed Framework

The supervised learning task in end-to-end approaches trains a model to map the instance-wise information to a high-dimensional vector. Ding et al. (2020) first utilizes a GNN to learn the values of “stable” variables that stay unchanged across collected solutions, and then searches for optimal solutions based on learned information. However, such a group of variables does not necessarily exist for many combinatorial optimization problems. Another approach is to learn solution distributions rather than directly learning solution mapping; Li et al. (2018) predicts a set of probability maps for variables, and then utilizes such maps to conduct tree search for generating a large number of candidate solutions. Their work designed ML-enhanced problem-specific tree search algorithm and achieved encouraging results, however, it is not applicable to general problems. Nair et al. (2020) proposed a more general approach that first learns the conditional distribution in the solution space via a GNN and then attempts to fix a part of discrete variables, producing a smaller MILP problem that is computationally cheap to solve. However, fixing many discrete variables might lead to an infeasible MILP model.

To alleviate these issues, we adopt the trust region method and design a novel approach that searches for near-optimal solutions within a properly defined region. Specifically, we propose a predict-and-search framework that: (i) utilizes a trained GNN model to predict marginal probabilities of all binary variables in a given MILP instance; (ii) searches for near-optimal solutions within the trust region based on the prediction.

3.1 Predict

In this section, our goal is to train a graph neural network using supervised learning to predict the conditional distribution for MILP instances. To this end, we introduce the conditional distribution learning. On this basis, we present the training label in the form of a vector, i.e. marginal probabilities.

3.1.1 Distribution learning

A probability distribution learning model outputs the conditional distribution on the entire solution space of an MILP instance . A higher conditional probability is expected when the corresponding solution is more likely to be optimal. Nair et al. (2020) proposed a method to construct the conditional distribution with objective values via energy functions, and for a solution , the conditional probability can be calculated by:

| (3) |

This implies that an infeasible solution is associated with a probability of , while the optimal solution has the highest probability value. It’s worth noting that each instance corresponds to one distribution.

For the collected dataset , denotes the set of feasible solutions to instance . The probability of each solution in the dataset can be calculated by Equation (3). In general, the distance between two distributions can be measured by Kullback-Leibler divergence. Thus, the loss function for the supervised learning task is defined as:

| (4) |

denotes the prediction from the GNN denoted as with learnable parameters . The conditional distribution of an MILP problem can be approximated by a part of the entire solution space. Consequently, the number of samples to be collected for training is significantly reduced.

3.1.2 weight-based sampling

To better investigate the learning target and align labels with outputs, we propose to represent the label in a vector form. With the learning task specified in Equation (4), a new challenge arises: acquiring solutions through high-dimensional sampling is computationally prohibitive. A common technique is to decompose the high-dimensional distribution into lower-dimensional ones. Given an instance , let denote the element of a solution vector . In Nair et al. (2020), it is assumed that the variables are independent of each other, i.e.,

| (5) |

With this assumption, the high-dimensional sampling problem is decomposed into -dimensional sampling problems for each according to their probabilities . Since , we only need for to represent the conditional probability . Then the conditional distribution mapping outputs a dimension vector . Hence, the prediction of the GNN model can be represented as , where for .

Let denote the set of indices in with their component being .

| (6) |

where is normalized by . Given an MILP instance , we can calculate a corresponding learning target in the form of vector, i.e, , where each component is obtained by applying Equation (6). This equation calculates the marginal probability, where the weight is if the variable holds a value of in the corresponding solution and otherwise. We define such a learning target in the form of a vector as marginal probabilities. Solutions of higher quality will be associated with larger weighting coefficients and hence contribute more to the loss function.

For the loss function shown in Equation (4), based on the assumption in Equation (5) and the calculation of probabilities in Equation (4), we have:

This indicates that the multi-dimensional distribution learning loss becomes a summation of each component’s probability learning loss. Thus, with Equation (5), the distribution learning is converted to a marginal probabilities learning.

3.2 Search

With marginal probabilities as inputs, we adopted a trust region like method to carry out a search algorithm. In this section, we first introduce our observation that the distance between the solution obtained from a rounded learning target and the optimal solution can be very small. Hence, we adopt a trust region like method to address aforementioned challenge (II) and present a proposition to manifest the superiority of our framework. The complete framework is shown in Algorithm 1.

3.2.1 Observation

A variable’s marginal probability is closer to if this variable takes a value of in the optimal solution, and otherwise. Given an MILP instance and its learning target , we set the partial solution size parameter to represent the numbers of ’s and ’s in a partial solution. Let denote the set of indices of the smallest components of , and denote the set of indices of the largest components of . If , we get a partial solution by:

| (7) |

Let denote an optimal solution of , empirically, we found is close to as discussed in Appendix D. Explicitly, we measure the distance by norm, and there still exists a small , such that while is a large number. We speculate that, since the optimal solution has the largest weight as shown in equation (6), it is closer to the learning target than all other solutions. As a result, we hypothesize that similar phenomena can be observed with a slightly larger and the same when obtaining the set of indices based on prediction .

With this observation, it is reasonable to accelerate the solving process for MILP problems by fixing variables in the partial solution. Specifically, the sub-problem of an instance using the fixing strategy with the partial solution can be formulated as:

| (8) |

where . However, once , the fixing strategy may lead to suboptimal solutions or even infeasible sub-problems, which can also be observed in Appendix C.

3.2.2 Search within a neighborhood

Based on the observations and analysis above, we design a more practicable method. Inspired by the trust region method, we use the partial solution as a starting point to establish a trust region and search for a trial step. The trial step is then applied to the starting point to generate a new solution.

Specifically, given instance , we acquire the set via the prediction from a trained GNN, and get a partial solution by equation (7) to construct the trust region problem for a trial step similar to problem (1), (2). At last, output the point updated by trial step. Such a trust region problem is equivalent to following:

| (9) |

where denotes a neighborhood constraint. In order to reduce computational costs, we solve this problem only once to find a near-optimal solution. We show that our proposed method always outperforms fixing-based methods in Proposition 1.

Proposition 1.

Proof.

Note that , it is obvious

i.e. .

This proposition demonstrates the advantage of our proposed search strategy over fixing strategy; that is, when fixing strategies may lead to suboptimal solutions or even infeasible sub-problems as a consequence of inappropriate fixing, applying such a trust region search approach can always add flexibility to the sub-problem. Computational studies also provide empirical evidence in support of this proposition. Algorithm 1 presents the details of our proposed search algorithm. It takes the prediction as input, and acquires a partial solution and neighborhood constraints. A complete solution is then attained by solving the modified instance with neighborhood constraints appended. The solving process is denoted by , i.e. utilizing an MILP solver to address instance .

Parameter: Size , radius of the neighborhood:

Input: Instance , Probability prediction

Output: Solution

4 Computational Studies

We conducted extensive experiments on four public datasets with fixed testing environments to ensure fair comparisons.

Benchmark Problems Four MILP benchmark datasets are considered in our computational studies. Two of them come from the NeurIPS ML4CO 2021 competition pmlr-v176-gasse22a, including the Balanced Item Placement (denoted by IP) dataset and the Workload Appointment (denoted by WA) dataset (pmlr-v176-gasse22a). We generated the remaining two datasets: Independent Set (IS) and Combinatorial Auction (CA) using Ecole library (Prouvost et al., 2020) as Gasse et al. (2019) did. Note that the other two datasets from Gasse et al. (2019), Set Covering and Capacitated Facility Location, are not chosen since they can be easily solved by state-of-the-art MILP solvers, such as Gurobi and SCIP. Details of our selected datasets can be found in the Appendix F.

Graph neural networks A single layer perceptron embeds feature vectors so they have the same dimension of 64, and the layer normalization Ba et al. (2016) is applied for a better performance of the network. Then we adopted half-convolution layers from Gasse et al. (2019) to conduct information aggregation between nodes.

Finally, marginal probabilities are obtained by feeding the aggregated variable nodes into a 2-layer perceptron followed by a sigmoid activation function.

Training protocol Each dataset contains instances, including instances in the training set, instances in the validation set, and instances in the test set. All numerical results are reported for the test set. Our model is constructed with the PyTorch framework, and the training process runs on GPUs. The loss function is specified in Equation (4) and (5) with a batch size of 8. The ADAM optimizer Kingma & Ba (2014) is used for optimizing the loss function with a start learning rate of 0.003.

Evaluation metrics For each instance, we run an algorithm of interest and report its incumbent solution’s objective value as OBJ. Then we run single-thread Gurobi for seconds and denote as BKS the objective to the incumbent solution returned. BKS is updated if it is worse than OBJ. We define the absolute and relative primal gaps as: and , respectively and utilize them as performance metrics. Clearly, a smaller primal gap indicates a stronger performance.

Evaluation Configurations All evaluations are performed under the same configuration. The evaluation machine has two Intel(R) Xeon(R) Gold 5117 CPUs @ 2.00GHz, 256GB ram and two Nvidia V100 GPUs. SCIP 8.0.1 Bestuzheva et al. (2021), Gurobi 9.5.2 Gurobi Optimization, LLC (2022) and PyTorch 1.10.2 Paszke et al. (2019) are utilized in our experiments. The emphasis for Gurobi and SCIP is set to focus on finding better primal solutions. The time limit for running each experiment is set to seconds since a tail-off of solution qualities was often observed after that.

Data collection Details will be provided in the Appendix B.

5 Results and Discussion

To investigate the benefits of applying our proposed predict-and-search framework, we conducted comprehensive computational studies that: (i) compare our framework with SCIP and Gurobi; (ii) compare our framework with Neural Diving framework (Nair et al., 2020). Other numerical experiments including comparing against a modified version of confidence threshold neural diving (Yoon, 2022) along with implementation details can be found in the Appendix D.

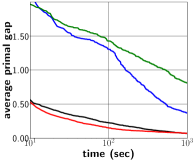

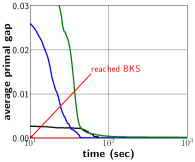

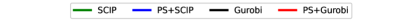

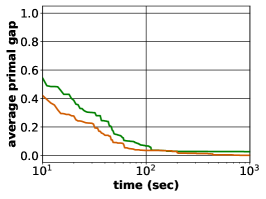

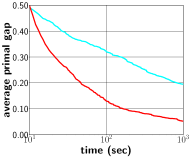

5.1 Comparing against state-of-the-art solvers

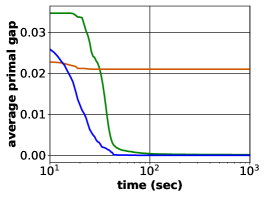

In what follows, we utilize SCIP (Gurobi) as an MILP solver in our proposed framework and denote our approach as PS+SCIP (PS+Gurobi). Figure 2 exhibits the progress of average as the solving process proceeds. In Figure 2a, we notice a significant performance improvement of our framework (blue) upon default SCIP (green), and such an improvement is also observed for WA, CA and IS datasets as shown in 2b, 2c and 2d. Compared with default Gurobi (black), our approach(red) still performs better, specially in the IS dataset. We remark that, in Figure 2d, PS+Gurobi obtained optimal solutions to IS problems within seconds. The performance comparison in Figure 2 also indicates that our proposed framework can bring noticable performance improvement upon MILP solvers, regardless of the solver choice.

In Table 1, we present objective values averaged across instances at the time limit of seconds. Column “” provides the absolute primal gap at seconds, and column “gain” presents the improvement of our method compared over an MILP solver. For instance, Table 1 shows that BKS for IP dataset is , and the absolute primal gaps of SCIP and PS+SCIP are and respectively. Then gain is computed as . According to Table 1, we can claim that our proposed framework outperforms SCIP and Gurobi with average improvements of and , respectively. We notice that the improvement for Gurobi is not as significant as for SCIP since Gurobi is significantly more capable in terms of identifying high-quality solutions than SCIP.

| dataset | BKS | SCIP | PS+SCIP | Gurobi | PS+Gurobi | ||||||

| OBJ | OBJ | OBJ | OBJ | ||||||||

| IP | 12.02 | 19.43 | 7.41 | 15.46 | 3.44 | 53.6% | 12.65 | 0.63 | 12.71 | 0.69 | -9.5% |

| WA | 700.94 | 704.23 | 3.29 | 702.35 | 1.41 | 57.1% | 701.24 | 0.30 | 701.22 | 0.28 | 6.7% |

| IS | 685.04 | 684.94 | 0.10 | 685.03 | 0.01 | 90.0% | 685.04 | 0.00 | 685.04 | 0.00 | 0.00 |

| CA | 23,680.01 | 23,671.66 | 8.35 | 23,671.95 | 8.06 | 3.5% | 23,635.07 | 44.94 | 23,654.47 | 25.54 | 43.2% |

| avg. | 51.1% | 9.9% | |||||||||

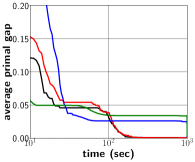

5.2 Comparing against Neural Diving

Another interesting comparison should be conducted against the state-of-the art ML method for optimization: the Neural Diving framework with Selective Net. However, since detailed settings and codes of the original work Nair et al. (2020) are not publicly available, reproducing the exact same results is impractical. To our best effort, a training protocol with fine parameter tuning and an evaluation process are established following algorithms provided in Nair et al. (2020). Three of six tested benchmark datasets used in Nair et al. (2020) are publicly available: Corlat, Neural Network Verification, and MipLib. Most Corlat instances can be solved by SCIP within a few seconds; MipLib contains instances with integer variables rather than binary variables, which is out of the scope of this work. Hence, Neural Network Verification (denoted as NNV) is chosen as the benchmark dataset for the comparison study. It is noteworthy that, empirically, turning on the presolve option in SCIP (Bestuzheva et al., 2021) causes false assertion of feasibility on many NNV instances. Hence, in our experiments on the NNV dataset, the presolve option is turned off, which potentially hurts the performances of both SCIP itself and frameworks implemented with SCIP.

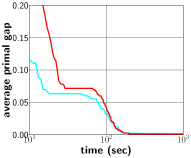

Under such circumstances, the best performance obtained is exhibited in Figure 3a. Clearly, the Neural Diving framework achieves significant improvement over default SCIP.

With such an implementation, we can start to compare our proposed framework against the Neural Diving approach. Due to the limitation of computational power, we are not able to find suitable settings of parameters to train Neural Diving framework on WA and CA datasets. Hence we conducted experiments only on IP and IS datasets. As shown in Figure 3b and 3c, our predict-and-search framework produced at least three times smaller average relative primal gaps. An interesting observation is that Neural Diving framework failed to surpass SCIP on IS dataset where the optimality is hard to achieve, while our framework outperformed both SCIP and the implemented Neural Diving method.

6 Conclusions

We propose a predict-and-search framework for tackling difficult MILP problems that are routinely solved. A GNN model was trained under a supervised learning setting to map from bipartite graph representations of MILP problems to marginal probabilities. We then design a trust region based algorithm to search for high-quality feasible solutions with the guidance of such a mapping. Both theoretical and empirical supports are provided to illustrate the superiority of this framework over fixing-based strategies. With extensive computational studies on publicly available MILP datasets, we demonstrate the effectiveness of our proposed framework in quickly identifying high-quality feasible solutions. Overall, our proposed framework achieved and better primal gaps comparing to SCIP and Gurobi, respectively.

Acknowledgments

This work is supported by the National Key R&D Program of China under grant 2022YFA1003900; Huawei; Hetao Shenzhen-Hong Kong Science and Technology Innovation Cooperation Zone Project (No.HZQSWS-KCCYB-2022046); University Development Fund UDF01001491 from The Chinese University of Hong Kong, Shenzhen; Guangdong Key Lab on the Mathematical Foundation of Artificial Intelligence, Department of Science and Technology of Guangdong Province.

References

- Ba et al. (2016) Jimmy Lei Ba, Jamie Ryan Kiros, and Geoffrey E Hinton. Layer normalization. arXiv preprint arXiv:1607.06450, 2016.

- Bello* et al. (2017) Irwan Bello*, Hieu Pham*, Quoc V. Le, Mohammad Norouzi, and Samy Bengio. Neural combinatorial optimization with reinforcement learning, 2017. URL https://openreview.net/forum?id=rJY3vK9eg.

- Bengio et al. (2021) Yoshua Bengio, Andrea Lodi, and Antoine Prouvost. Machine learning for combinatorial optimization: a methodological tour d’horizon. European Journal of Operational Research, 290(2):405–421, 2021.

- Bestuzheva et al. (2021) Ksenia Bestuzheva, Mathieu Besançon, Wei-Kun Chen, Antonia Chmiela, Tim Donkiewicz, Jasper van Doornmalen, Leon Eifler, Oliver Gaul, Gerald Gamrath, Ambros Gleixner, Leona Gottwald, Christoph Graczyk, Katrin Halbig, Alexander Hoen, Christopher Hojny, Rolf van der Hulst, Thorsten Koch, Marco Lübbecke, Stephen J. Maher, Frederic Matter, Erik Mühmer, Benjamin Müller, Marc E. Pfetsch, Daniel Rehfeldt, Steffan Schlein, Franziska Schlösser, Felipe Serrano, Yuji Shinano, Boro Sofranac, Mark Turner, Stefan Vigerske, Fabian Wegscheider, Philipp Wellner, Dieter Weninger, and Jakob Witzig. The SCIP Optimization Suite 8.0. ZIB-Report 21-41, Zuse Institute Berlin, December 2021. URL http://nbn-resolving.de/urn:nbn:de:0297-zib-85309.

- Bischl et al. (2016) Bernd Bischl, Pascal Kerschke, Lars Kotthoff, Marius Lindauer, Yuri Malitsky, Alexandre Fréchette, Holger Hoos, Frank Hutter, Kevin Leyton-Brown, Kevin Tierney, et al. Aslib: A benchmark library for algorithm selection. Artificial Intelligence, 237:41–58, 2016.

- Chen (2010) Zhi-Long Chen. Integrated production and outbound distribution scheduling: review and extensions. Operations research, 58(1):130–148, 2010.

- Ding et al. (2020) Jian-Ya Ding, Chao Zhang, Lei Shen, Shengyin Li, Bing Wang, Yinghui Xu, and Le Song. Accelerating primal solution findings for mixed integer programs based on solution prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, pp. 1452–1459, 2020.

- Gasse et al. (2019) Maxime Gasse, Didier Chételat, Nicola Ferroni, Laurent Charlin, and Andrea Lodi. Exact combinatorial optimization with graph convolutional neural networks. Advances in Neural Information Processing Systems, 32, 2019.

- Gasse et al. (2022) Maxime Gasse, Simon Bowly, Quentin Cappart, Jonas Charfreitag, Laurent Charlin, Didier Chételat, Antonia Chmiela, Justin Dumouchelle, Ambros Gleixner, Aleksandr M Kazachkov, et al. The machine learning for combinatorial optimization competition (ml4co): Results and insights. In NeurIPS 2021 Competitions and Demonstrations Track, pp. 220–231. PMLR, 2022.

- Gupta et al. (2020) Prateek Gupta, Maxime Gasse, Elias Khalil, Pawan Mudigonda, Andrea Lodi, and Yoshua Bengio. Hybrid models for learning to branch. Advances in neural information processing systems, 33:18087–18097, 2020.

- Gurobi Optimization, LLC (2022) Gurobi Optimization, LLC. Gurobi Optimizer Reference Manual, 2022. URL https://www.gurobi.com.

- Kabir et al. (2009) Humayun Kabir, Ying Wang, Ming Yu, and Qi-Jun Zhang. High-dimensional neural-network technique and applications to microwave filter modeling. IEEE Transactions on Microwave Theory and Techniques, 58(1):145–156, 2009.

- Khalil et al. (2016) Elias Khalil, Pierre Le Bodic, Le Song, George Nemhauser, and Bistra Dilkina. Learning to branch in mixed integer programming. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 30, 2016.

- Khalil et al. (2022) Elias B Khalil, Christopher Morris, and Andrea Lodi. Mip-gnn: A data-driven framework for guiding combinatorial solvers. Update, 2:x3, 2022.

- Kingma & Ba (2014) Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Kruber et al. (2017) Markus Kruber, Marco E Lübbecke, and Axel Parmentier. Learning when to use a decomposition. In International conference on AI and OR techniques in constraint programming for combinatorial optimization problems, pp. 202–210. Springer, 2017.

- Li et al. (2018) Zhuwen Li, Qifeng Chen, and Vladlen Koltun. Combinatorial optimization with graph convolutional networks and guided tree search. Advances in neural information processing systems, 31, 2018.

- Liu & Fan (2018) Li Liu and Qi Fan. Resource allocation optimization based on mixed integer linear programming in the multi-cloudlet environment. IEEE Access, 6:24533–24542, 2018.

- Luathep et al. (2011) Paramet Luathep, Agachai Sumalee, William HK Lam, Zhi-Chun Li, and Hong K Lo. Global optimization method for mixed transportation network design problem: a mixed-integer linear programming approach. Transportation Research Part B: Methodological, 45(5):808–827, 2011.

- Nair et al. (2020) Vinod Nair, Sergey Bartunov, Felix Gimeno, Ingrid von Glehn, Pawel Lichocki, Ivan Lobov, Brendan O’Donoghue, Nicolas Sonnerat, Christian Tjandraatmadja, Pengming Wang, Ravichandra Addanki, Tharindi Hapuarachchi, Thomas Keck, James Keeling, Pushmeet Kohli, Ira Ktena, Yujia Li, Oriol Vinyals, and Yori Zwols. Solving mixed integer programs using neural networks, 2020.

- Paszke et al. (2019) Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. Pytorch: An imperative style, high-performance deep learning library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett (eds.), Advances in Neural Information Processing Systems 32, pp. 8024–8035. Curran Associates, Inc., 2019.

- Pochet & Wolsey (2006) Yves Pochet and Laurence A Wolsey. Production planning by mixed integer programming, volume 149. Springer, 2006.

- Prouvost et al. (2020) Antoine Prouvost, Justin Dumouchelle, Lara Scavuzzo, Maxime Gasse, Didier Chételat, and Andrea Lodi. Ecole: A gym-like library for machine learning in combinatorial optimization solvers. In Learning Meets Combinatorial Algorithms at NeurIPS2020, 2020. URL https://openreview.net/forum?id=IVc9hqgibyB.

- Schöbel (2001) Anita Schöbel. A model for the delay management problem based on mixed-integer-programming. Electronic notes in theoretical computer science, 50(1):1–10, 2001.

- Vinyals et al. (2015) Oriol Vinyals, Meire Fortunato, and Navdeep Jaitly. Pointer networks. In C. Cortes, N. Lawrence, D. Lee, M. Sugiyama, and R. Garnett (eds.), Advances in Neural Information Processing Systems, volume 28. Curran Associates, Inc., 2015. URL https://proceedings.neurips.cc/paper/2015/file/29921001f2f04bd3baee84a12e98098f-Paper.pdf.

- Watson & Woodruff (2011) Jean-Paul Watson and David L Woodruff. Progressive hedging innovations for a class of stochastic mixed-integer resource allocation problems. Computational Management Science, 8(4):355–370, 2011.

- Yoon (2022) Taehyun Yoon. Confidence threshold neural diving, 2022.

- Yuan (2015) Ya-xiang Yuan. Recent advances in trust region algorithms. Mathematical Programming, 151(1):249–281, 2015.

A Half convolution

We use two interleaved half-convolutions Gasse et al. (2019) to replace the graph convolution. After that, the GNN we used can be formulated as:

where , and are 2-layer perceptrons with ReLU activation functions. That is, a half-convolution is performed to promote each constraint node aggregating information from its relevant variable nodes; after that, another one is performed on each variable node to aggregate information from its relevant constraint nodes and variable nodes.

B Data collection

The training process requires bipartite graph representations of MILP problems as the input and marginal probabilities of variables as the label. We first extract the bipartite graph by embedding variables and constraints as respective feature vectors (see Appendix E). Then, we run a single-thread Gurobi with a time limit of 3,600 seconds on training sets to collect feasible solutions along with their objective values. These solutions are thereafter weighted via energy functions as in Equation (3) to obtain marginal probabilities.

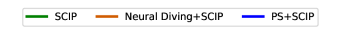

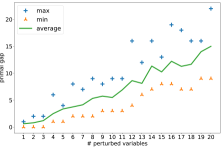

C Comparing objective values in different partial solutions

We also scrutinize why fixing strategy could fail. Variables in the optimal solution are randomly perturbed to simulate a real-world setting that a prediction is very likely to be inaccurate. Figure 4 exhibited a trend that, as we perturb more variables, the absolute primal gap (green) increases, and the percentage of infeasible sub-problems (red) increases. The absolute gaps shown in Figure 4a indicate large performance drawbacks given that the optimal objective value is . Convincingly, we conclude that fixing approaches presumably produce sub-optimal solutions. Besides, as shown in Figure 4b, randomly perturbing one variable can result in of infeasible sub-problems. That is, fixing strategy could lead to infeasible sub-problems even if relatively accurate predictions are provided.

D Comparing against a modified version of confidence threshold neural diving

In this part, we demonstrate our advantage over a fixing strategy by conducting numerical experiments. In Yoon (2022)’s work, thresholds are chosen to determine how many variables to fix. We modify this method so that the number of variables becomes a tunable hyper-parameter, which makes the procedure more controllable and flexible. We directly use the learning target to define confidence scores, and we obtain partial solutions by setting different size parameters as stated in Section 3.2.1. norm is utilized for measuring such distances. We selected , , , , and as size parameters and obtained their corresponding distances as , , , , and . We observed that such distances can be small if only a small portion of variables are taken into consideration. However, as we enlarge the number of involved variables, the distance increases dramatically. Hence, the predict-and-search approach can involve larger set of variables than fixing strategy does while still retaining the optimality.

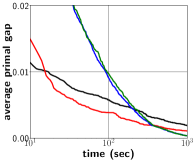

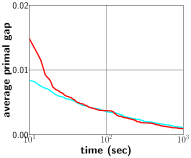

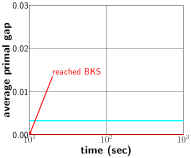

To support this argument, we compare the performance of our approach with that of a fixing strategy. For simplicity, we denote the modified version of confidence threshold neural diving as Fixing in Figures. Figure 5 shows the average relative primal gap achieved by different approaches, where both approaches use a fixed number of selected variables. One can spot the dominance of our approach over the modified version of confidence threshold neural diving from Figure 5a; this implies that such a fixing strategy only leads to mediocre solutions, while the search method (red) achieves solutions with better qualities. In Figure 5d, both approaches are capable of finding optimal solutions instantly, but the ones obtained by fixing method is far from optimal solutions to original problems. We notice that in Figure 5b and 5c, our framework struggles to find good solution in the early solving phases, but produces equivalent or better solutions within seconds comparing to the fixing method. A possible reason is that our solution results in larger feasible regions comparing to the fixing strategy. Conclusively, our framework always outperforms the fixing strategy.

E Feature descriptions for variable nodes, constraint nodes and edges

To encode MILP problems, we propose a set of features extracted from constraints, variables, and edges. This set of features is relatively light-weighted and generalized; each feature is obtained either directly from the original MILP model or by conducting simple calculations. Moreover, such extractions do not require pre-solving the MILP instance, which would save a significant amount of time for large and difficult MILP problems.

| # features. | name | description | |

| Variable | 1 | obj | normalized coefficient of variables in the objective function |

| 1 | v_coeff | average coefficient of the variable in all constraints | |

| 1 | Nv_coeff | degree of variable node in the bipartite representation | |

| 1 | max_coeff | maximum value among all coefficients of the variable | |

| 1 | min_coeff | minimum value among all coefficients of the variable | |

| 1 | int | binary representation to show if the variable is an | |

| integer variable | |||

| 12 | pos_emb | binary encoding of the order of appearance for | |

| each variable among all variables. | |||

| Constraint | 1 | c_coeff | average of all coefficients in the constraint |

| 1 | Nc_coeff | degree of constraint nodes in the bipartite representation | |

| 1 | rhs | right-hand-side value of the constraint | |

| 1 | sense | the sense of the constraint | |

| Edge | 1 | coeff | coefficient of variables in constraints |

F Sizes of benchmark problems

Table 3 exhibits dimensions of the largest instance of each tested benchmark dataset. The numbers of constraints, variables, and binaries are presented.

| dataset | # constr. | # var. | # binary var. |

| IP | 195 | 1,083 | 1,050 |

| WA | 64,480 | 61,000 | 1,000 |

| IS | 600 | 1,500 | 1,500 |

| CA | 6,396 | 1,500 | 1,500 |

G Parametric settings for experiments

For experiments where our predict-and-search framework is compared with SCIP, Gurobi, and fixing-based strategy, the settings for fixing-parameter and the neighborhood parameter are listed in Table 4. Based on the performance of the underlying solver, various settings of are used to carry out experiments for each benchmark dataset shown in Table 4. The radius of the search area is chosen respectively for different implementations (PS+SCIP, PS+Gurobi, and Fixing+SCIP) of our framework as shown in Table 4.

| dataset | PS+SCIP | PS+Gurobi | Fixing+SCIP | |||

| , | , | , | ||||

| IP | 400, 5 | 1 | 400, 5 | 10 | 400, 5 | 0 |

| WA | 0, 500 | 5 | 0, 500 | 10 | 0, 500 | 0 |

| IS | 300, 300 | 15 | 300, 300 | 20 | 300, 300 | 0 |

| CA | 400, 0 | 10 | 600, 0 | 1 | 600, 0 | 0 |