A graph discretization of vector Laplace operator

Abstract

In this paper, we study the graph-theoretic analogues of the vector Laplacian (or Helmholtz operator) and the vector Laplace equation. We determine the graph matrix representation of the vector Laplacian and obtain the dimension of the solution space of the vector Laplace equation on graphs.

AMS classification: 35J05, 05C50

Keywords: Laplace operator; Vector Laplacian; Helmholtz operator; Graph; Hodge Laplacian.

1 Introduction

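In mathematics and physics, Laplace's equation is one of the most famous differential equations, named after Pierre-Simon de Laplace, who first presented it and investigated its properties. It is usually written as

$$\Delta f = 0,$$

where $\Delta$ is the Laplace operator (or Laplacian) acting on a scalar function $f$. For this reason, the Laplacian is also referred to as the scalar Laplacian. In the real space $\mathbb{R}^3$ with rectangular coordinates,

$$\Delta f = \operatorname{div}(\operatorname{grad} f) = \frac{\partial^2 f}{\partial x^2} + \frac{\partial^2 f}{\partial y^2} + \frac{\partial^2 f}{\partial z^2},$$

where $\operatorname{div}$ and $\operatorname{grad}$ are respectively the divergence and gradient operators. Recall that

$$\operatorname{grad} f = \left(\frac{\partial f}{\partial x}, \frac{\partial f}{\partial y}, \frac{\partial f}{\partial z}\right), \qquad \operatorname{div} \mathbf{F} = \frac{\partial F_x}{\partial x} + \frac{\partial F_y}{\partial y} + \frac{\partial F_z}{\partial z},$$

where $\mathbf{F} = (F_x, F_y, F_z)$ is a vector field.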

In the setting of graph theory, the scalar Laplacian gives the celebrated Laplacian matrix of a graph $G$ (see, e.g., [8, Lemma 5.6]), defined by

$$L(G) = D(G) - A(G),$$

where $D(G) = \operatorname{diag}(d_1, \dots, d_n)$ is the diagonal degree matrix with $d_i$ being the degree of vertex $v_i$, and $A(G) = (a_{ij})$ is the adjacency matrix of $G$, in which $a_{ij} = 1$ if $v_i$ and $v_j$ are adjacent and $a_{ij} = 0$ otherwise. As is well known, the graph Laplacian has been studied extensively in spectral graph theory and has influenced many areas. For good survey articles on the graph Laplacian, the reader is referred to [10, 11].
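As a small illustration of the definition of the Laplacian matrix as degree matrix minus adjacency matrix, the following sketch builds it for a toy graph. The function name `laplacian_matrix` and the example graph (a triangle with one pendent vertex) are assumptions made for illustration only.

```python
def laplacian_matrix(n, edges):
    """Return L = D - A for a simple undirected graph on vertices 0..n-1."""
    L = [[0] * n for _ in range(n)]
    for u, v in edges:
        L[u][u] += 1  # each edge adds 1 to the degree of both endpoints
        L[v][v] += 1
        L[u][v] -= 1  # minus the adjacency entry
        L[v][u] -= 1
    return L

# Hypothetical example: triangle 0-1-2 with a pendent vertex 3 attached to 2.
edges = [(0, 1), (1, 2), (0, 2), (2, 3)]
L = laplacian_matrix(4, edges)
for row in L:
    print(row)
```

Every row of the resulting matrix sums to zero, which reflects the fact that constant functions lie in the kernel of the scalar graph Laplacian.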

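We here focus on the vector Laplacian. In $\mathbb{R}^3$, the curl of a vector field $\mathbf{F} = (F_x, F_y, F_z)$ is defined to be

$$\operatorname{curl} \mathbf{F} = \left(\frac{\partial F_z}{\partial y} - \frac{\partial F_y}{\partial z},\ \frac{\partial F_x}{\partial z} - \frac{\partial F_z}{\partial x},\ \frac{\partial F_y}{\partial x} - \frac{\partial F_x}{\partial y}\right).$$

Then the vector Laplace equation is defined as

$$\Delta \mathbf{F} = (\Delta F_x, \Delta F_y, \Delta F_z) = 0. \tag{1}$$

A straightforward calculation shows that

$$\Delta \mathbf{F} = \operatorname{grad}(\operatorname{div} \mathbf{F}) - \operatorname{curl}(\operatorname{curl} \mathbf{F}), \tag{2}$$

which is called the vector Laplace operator (or vector Laplacian [12]) or Helmholtz operator [6].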

The literature shows that applying the Laplace operator to a vector function dates back to 1911 [3, p. 133]. However, the difference between the scalar and vector Laplacians went unrecognized for a long period, which hampered progress in this direction. The meaning of the vector Laplacian was not clear until 1953, when Moon and Spencer [12] developed a general equation for the vector Laplacian in any orthogonal curvilinear coordinate system. We remark that the scalar and vector Laplacians are special cases of Hodge Laplacians, named after Hodge theory on graphs [8], and that the Laplace equation and the vector Laplace equation are respectively special cases (with $k = 0$) of the Helmholtz equations

$$\Delta u + k^2 u = 0,$$

in which $u$ is a scalar function or a vector field.

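For our purposes, we consider the adjoint operators of $\operatorname{grad}$ and $\operatorname{curl}$, denoted by $\operatorname{grad}^*$ and $\operatorname{curl}^*$ respectively. Since $\operatorname{grad}^* = -\operatorname{div}$ and $\operatorname{curl}^* = \operatorname{curl}$ [16, p. 207], an equivalent expression of (2) is

$$\Delta \mathbf{F} = -\left(\operatorname{grad}\operatorname{grad}^* \mathbf{F} + \operatorname{curl}^*\operatorname{curl}\, \mathbf{F}\right). \tag{3}$$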

In this paper, we focus on the graph-theoretic analogues of the vector Laplacian and the vector Laplace equation. In Section 2 we determine the graph matrix representation of the vector Laplacian, the Helmholtzian matrix, which paves the way for establishing a spectral graph theory based on this new matrix. In Section 3 we identify the dimension of the solution space of the vector Laplace equation on graphs. As a corollary, the number of triangles in a graph is also obtained. In Section 4 we give some remarks and point out some potential applications of the results obtained in this paper.

2 Vector Laplacian on Graphs

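Let $G = (V, E)$ be an undirected simple graph with vertex set $V = V(G)$ and edge set $E = E(G)$, where its order is $n = |V|$ and its size is $m = |E|$. Let $T = T(G)$ be the set of triangles in $G$. We consider real-valued functions $f: V \to \mathbb{R}$ on the vertex set $V$. Moreover, we require the real-valued functions on $E$ and $T$ to be alternating. By an alternating function on $E$, we mean a function of the form $X: V \times V \to \mathbb{R}$, where

$$X(i,j) = -X(j,i) \ \text{ for all } \{i,j\} \in E, \qquad X(i,j) = 0 \ \text{ for all } \{i,j\} \notin E.$$

An alternating function on $T$ is one of the form $\Phi: V \times V \times V \to \mathbb{R}$, where

$$\Phi(i,j,k) = \Phi(j,k,i) = \Phi(k,i,j) = -\Phi(j,i,k) = -\Phi(i,k,j) = -\Phi(k,j,i) \ \text{ for all } \{i,j,k\} \in T,$$

and $\Phi(i,j,k) = 0$ for all $\{i,j,k\} \notin T$.

From the topological point of view, the functions $f$, $X$ and $\Phi$ are called 0-, 1- and 2-cochains, which are discrete analogues of differential forms on manifolds [17]. Let the Hilbert spaces of 0-, 1- and 2-cochains be respectively $L^2(V)$, $L^2_\wedge(E)$ and $L^2_\wedge(T)$, with $\wedge$ indicating alternating and with standard $L^2$-inner products defined by

$$\langle f, g \rangle_V = \sum_{i \in V} f(i) g(i), \qquad \langle X, Y \rangle_E = \sum_{i<j} X(i,j) Y(i,j), \qquad \langle \Phi, \Psi \rangle_T = \sum_{i<j<k} \Phi(i,j,k) \Psi(i,j,k).$$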

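We describe the graph-theoretic analogues of $\operatorname{grad}$, $\operatorname{curl}$ and $\operatorname{div}$ in multivariate calculus [8]. The gradient is the linear operator $\operatorname{grad}: L^2(V) \to L^2_\wedge(E)$ defined by

$$(\operatorname{grad} f)(i,j) = f(j) - f(i)$$

for all $\{i,j\} \in E$ and zero otherwise. The curl is the linear operator $\operatorname{curl}: L^2_\wedge(E) \to L^2_\wedge(T)$ defined by

$$(\operatorname{curl} X)(i,j,k) = X(i,j) + X(j,k) + X(k,i)$$

for all $\{i,j,k\} \in T$ and zero otherwise. The divergence is the linear operator $\operatorname{div}: L^2_\wedge(E) \to L^2(V)$ defined by

$$(\operatorname{div} X)(i) = \sum_{j:\, \{i,j\} \in E} X(i,j)$$

for all $i \in V$. Then the graph-theoretic analogue of the vector Laplacian, called the graph Helmholtzian, is defined by $\Delta_1 = -\operatorname{grad}\operatorname{div} + \operatorname{curl}^*\operatorname{curl}$ [8, p. 692]. From [8, Lemma 5.4] it follows that $\Delta_1$ can be expressed as

$$\Delta_1 = \operatorname{grad}\operatorname{grad}^* + \operatorname{curl}^*\operatorname{curl}. \tag{4}$$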

Note that the Helmholtz Decomposition Theorem for the clique complex of a graph is closely related to the kernel of the graph Helmholtzian [6, Theorem 2]. In addition, the graph Helmholtzian is a special case of the Hodge Laplacians, a higher-order generalization of the graph Laplacian, due to Lim [8].
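The graph operators can be made concrete with a short sketch. It assumes the unweighted formulas in the style of [8]: $(\operatorname{grad} f)(i,j) = f(j) - f(i)$, $(\operatorname{curl} X)(i,j,k) = X(i,j) + X(j,k) + X(k,i)$ and $(\operatorname{div} X)(i) = \sum_j X(i,j)$. The helper names (`make_graph`, `ev`) and the data layout (an edge function stored as a dictionary on pairs $i<j$) are illustrative choices, not taken from the paper.

```python
from itertools import combinations

def make_graph(n, edges):
    """Return the sorted edge list (i < j) and triangle list (i < j < k)."""
    E = sorted(tuple(sorted(e)) for e in edges)
    edge_set = set(E)
    T = [t for t in combinations(range(n), 3)
         if all(p in edge_set for p in combinations(t, 2))]
    return E, T

def ev(X, i, j):
    """Evaluate an alternating edge function X at the ordered pair (i, j)."""
    if (i, j) in X:
        return X[(i, j)]
    if (j, i) in X:
        return -X[(j, i)]
    return 0  # zero on non-edges

def grad(f, E):
    return {(i, j): f[j] - f[i] for (i, j) in E}

def curl(X, T):
    return {(i, j, k): ev(X, i, j) + ev(X, j, k) + ev(X, k, i) for (i, j, k) in T}

def div(X, n):
    return [sum(ev(X, i, j) for j in range(n)) for i in range(n)]

# Hypothetical example: triangle 0-1-2 with a pendent vertex 3 attached to 2.
E, T = make_graph(4, [(0, 1), (1, 2), (0, 2), (2, 3)])
f = [1, 4, 9, 16]
print(curl(grad(f, E), T))   # curl of a gradient vanishes on every triangle
print(div(grad(f, E), 4))    # equals -(D - A) f for the graph Laplacian D - A
```

Under these conventions the two printed results illustrate the discrete identities $\operatorname{curl}\operatorname{grad} = 0$ and $-\operatorname{div}\operatorname{grad} = D - A$.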

Table 1. Symbols and their diagrams.

We now derive a graph matrix representation of the graph Helmholtzian. Given arbitrary orientations to the edges and triangles of , the tail and the head of an oriented edge are respectively marked by and . Set . If there is a directed edge from to , then we write . If two vertices and are adjacent, then we write , and otherwise. Therefore, implies either or . If a vertex satisfies , then we write . Furthermore, set if , and if . For two edges and , let if . Put if , if , and if . Denote by if either or , and denote by if either or . Set if and are in the same triangle. For an edge and a triangle , write if is an edge of . Furthermore, if the orientation of is coincident with that of then we write , and otherwise. To make the symbols clearer, we collect them in Table 1.

For an edge $e$, the triangle degree of $e$, denoted by $d_T(e)$, is the number of triangles containing $e$, that is,

$$d_T(e) = |\{t \in T : e \text{ is an edge of } t\}|.$$

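The edge-vertex incidence matrix $B_1 = (b_{e,v})$ and the triangle-edge incidence matrix $B_2 = (b_{t,e})$, with rows indexed by $E$ and $T$ respectively, are defined by

$$b_{e,v} = \begin{cases} 1 & \text{if } v \text{ is the head of } e, \\ -1 & \text{if } v \text{ is the tail of } e, \\ 0 & \text{otherwise}, \end{cases} \qquad b_{t,e} = \begin{cases} 1 & \text{if } e \text{ is an edge of } t \text{ and their orientations coincide}, \\ -1 & \text{if } e \text{ is an edge of } t \text{ and their orientations are opposite}, \\ 0 & \text{otherwise}, \end{cases} \tag{5}$$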

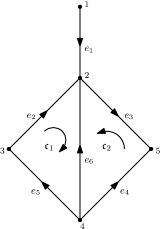

whose examples are given in Fig. 1.
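The incidence matrices can be computed directly from an oriented edge list. The sketch below is only illustrative and rests on assumed conventions: rows of the edge-vertex matrix are indexed by edges with $-1$ at the tail and $+1$ at the head, each triangle $\{i<j<k\}$ is oriented cyclically as $i \to j \to k \to i$, and the names `incidence_matrices` and `matmul` are hypothetical.

```python
from itertools import combinations

def incidence_matrices(n, edges):
    """edges: list of oriented pairs (tail, head) on vertices 0..n-1."""
    E = list(edges)
    und = {frozenset(e) for e in E}
    T = [t for t in combinations(range(n), 3)
         if all(frozenset(p) in und for p in combinations(t, 2))]
    # Edge-vertex incidence: -1 at the tail, +1 at the head.
    B1 = [[0] * n for _ in E]
    for r, (tail, head) in enumerate(E):
        B1[r][tail] = -1
        B1[r][head] = 1
    # Triangle-edge incidence: +1 if the edge follows i -> j -> k -> i, else -1.
    B2 = [[0] * len(E) for _ in T]
    for r, (i, j, k) in enumerate(T):
        cyc = {(i, j), (j, k), (k, i)}
        for c, (tail, head) in enumerate(E):
            if (tail, head) in cyc:
                B2[r][c] = 1
            elif (head, tail) in cyc:
                B2[r][c] = -1
    return B1, B2, T

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

# Hypothetical example: triangle 0-1-2 with a pendent vertex 3 attached to 2.
B1, B2, T = incidence_matrices(4, [(0, 1), (1, 2), (0, 2), (2, 3)])
print(B2)               # one row, for the single triangle (0, 1, 2)
print(matmul(B2, B1))   # the zero matrix: curl composed with grad vanishes
```

The vanishing product of the two matrices is the matrix form of $\operatorname{curl}\operatorname{grad} = 0$ and holds for any choice of orientations.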

The lemma below indicates the relations among the operators and matrices mentioned above.

Lemma 2.1.

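The operator $\operatorname{grad}$ gives the edge-vertex incidence matrix $B_1$ and the operator $\operatorname{curl}$ gives the triangle-edge incidence matrix $B_2$.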

Proof.

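Assign $E$ and $T$ arbitrary orientations. For any $v \in V$, let $f_v$ be the function such that $f_v(u) = 1$ if $u = v$ and $f_v(u) = 0$ otherwise. For any oriented edge $e = (i,j)$, let $X_e$ denote the function such that

$$X_e(i,j) = -X_e(j,i) = 1$$

and $X_e = 0$ otherwise. For any oriented triangle $t = (i,j,k)$, set $\Phi_t$ to be the function such that

$$\Phi_t(i,j,k) = \Phi_t(j,k,i) = \Phi_t(k,i,j) = -\Phi_t(j,i,k) = -\Phi_t(i,k,j) = -\Phi_t(k,j,i) = 1$$

and $\Phi_t = 0$ otherwise. Clearly, $\{f_v\}_{v \in V}$, $\{X_e\}_{e \in E}$ and $\{\Phi_t\}_{t \in T}$ are orthonormal bases of $L^2(V)$, $L^2_\wedge(E)$ and $L^2_\wedge(T)$, respectively. Assume that $\operatorname{grad}$ gives the matrix $M_1$ and $\operatorname{curl}$ gives the matrix $M_2$ under these bases. It suffices to show that $M_1 = B_1$ and $M_2 = B_2$, since the adjoint of an operator gives the matrix $M^\top$ if the operator gives the matrix $M$.

Note that, for $e = (i,j)$, the $(e,v)$-th entry of $M_1$ is

$$\langle \operatorname{grad} f_v, X_e \rangle_E = (\operatorname{grad} f_v)(i,j) = f_v(j) - f_v(i),$$

which yields that this entry is $1$ if $v$ is the head of $e$, $-1$ if $v$ is the tail of $e$ and $0$ otherwise, and therefore $M_1 = B_1$.

Similarly, the $(t,e)$-th entry of $M_2$ is

$$\langle \operatorname{curl} X_e, \Phi_t \rangle_T = (\operatorname{curl} X_e)(i,j,k) = X_e(i,j) + X_e(j,k) + X_e(k,i),$$

where $t = (i,j,k)$. Hence, if $e$ is an edge of $t$ whose orientation coincides with that of $t$, then exactly one summand equals $1$ and thus the entry is $1$; if $e$ is an edge of $t$ with the opposite orientation, then exactly one summand equals $-1$ and thus the entry is $-1$; if $e$ is not an edge of $t$, then the entry is $0$. Thereby, $M_2 = B_2$. ∎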

Theorem 2.2.

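Let $G$ be a graph with orientations on its edge set $E$ and triangle set $T$. The graph Helmholtzian gives the square matrix $H(G) = (h_{e,e'})$ indexed by the edge set of $G$ with

$$h_{e,e'} = \begin{cases} d_T(e) + 2 & \text{if } e = e', \\ 1 & \text{if } e \neq e' \text{ lie in no common triangle and share a vertex that is the head of both or the tail of both}, \\ -1 & \text{if } e \neq e' \text{ lie in no common triangle and share a vertex that is the head of one and the tail of the other}, \\ 0 & \text{otherwise}, \end{cases}$$

where $d_T(e)$ denotes the number of triangles containing $e$.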

Proof.

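By Lemma 2.1 and (4), the graph Helmholtzian gives the graph matrix

$$H(G) = B_1 B_1^\top + B_2^\top B_2, \tag{6}$$

where $B_1$ and $B_2$ are the edge-vertex and triangle-edge incidence matrices.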

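By direct calculation from (6), writing $b_{e,v}$ and $b_{t,e}$ for the entries of the edge-vertex and triangle-edge incidence matrices, the $(e,e')$-th entry of $H(G)$ is

$$h_{e,e'} = \sum_{v \in V} b_{e,v} b_{e',v} + \sum_{t \in T} b_{t,e} b_{t,e'}.$$

If $e = e'$, then

$$\sum_{v \in V} b_{e,v}^2 = 2, \qquad \sum_{t \in T} b_{t,e}^2 = d_T(e),$$

which results in $h_{e,e} = d_T(e) + 2$. If $e$ and $e'$ have no common vertex, then they have no common triangle either, so

$$b_{e,v} b_{e',v} = 0 \ \text{ for all } v \in V, \qquad b_{t,e} b_{t,e'} = 0 \ \text{ for all } t \in T,$$

which shows $h_{e,e'} = 0$. It remains to consider the case where $e \neq e'$ share exactly one vertex $u$; then the first sum reduces to $b_{e,u} b_{e',u}$, and $e$ and $e'$ lie in at most one common triangle. If $u$ is the head of both edges or the tail of both edges and $e, e'$ lie in no common triangle, then

$$h_{e,e'} = b_{e,u} b_{e',u} + 0 = 1,$$

indicating $h_{e,e'} = 1$. If $u$ is the head of one edge and the tail of the other and $e, e'$ lie in no common triangle, then

$$h_{e,e'} = b_{e,u} b_{e',u} + 0 = -1,$$

which yields $h_{e,e'} = -1$. If $u$ is the head of both edges or the tail of both edges and $e, e'$ lie in a common triangle $t$, then exactly one of $e, e'$ agrees with the orientation of $t$, so

$$h_{e,e'} = b_{e,u} b_{e',u} + b_{t,e} b_{t,e'} = 1 - 1 = 0,$$

which gives $h_{e,e'} = 0$. If $u$ is the head of one edge and the tail of the other and $e, e'$ lie in a common triangle $t$, then $e$ and $e'$ traverse the boundary of $t$ in the same direction, so

$$h_{e,e'} = b_{e,u} b_{e',u} + b_{t,e} b_{t,e'} = -1 + 1 = 0,$$

implying $h_{e,e'} = 0$.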

The proof is completed. ∎
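The entry pattern of the Helmholtzian matrix can be checked numerically on a small example. The sketch below assembles the matrix as $B_1 B_1^\top + B_2^\top B_2$, where $B_1$ is the edge-vertex incidence matrix (rows indexed by edges) and $B_2$ the triangle-edge incidence matrix. The orientation conventions, the function name `helmholtzian` and the example graph are assumptions for illustration.

```python
from itertools import combinations

def helmholtzian(n, edges):
    """edges: oriented pairs (tail, head). Returns (H, T) with H = B1 B1^T + B2^T B2."""
    E = list(edges)
    und = {frozenset(e) for e in E}
    T = [t for t in combinations(range(n), 3)
         if all(frozenset(p) in und for p in combinations(t, 2))]
    # Edge-vertex incidence: +1 at the head, -1 at the tail.
    B1 = [[(v == head) - (v == tail) for v in range(n)] for (tail, head) in E]

    def sign(tri, e):
        # Triangle {i<j<k} is oriented i -> j -> k -> i (an assumed convention).
        i, j, k = tri
        cyc = {(i, j), (j, k), (k, i)}
        return (e in cyc) - (e[::-1] in cyc)

    B2 = [[sign(tri, e) for e in E] for tri in T]
    m = len(E)
    H = [[sum(B1[a][v] * B1[b][v] for v in range(n))
          + sum(row[a] * row[b] for row in B2)
          for b in range(m)] for a in range(m)]
    return H, T

# Hypothetical example: triangle 0-1-2 with a pendent vertex 3 attached to 2.
H, T = helmholtzian(4, [(0, 1), (1, 2), (0, 2), (2, 3)])
for row in H:
    print(row)
```

In this example the three triangle edges have diagonal entry $3$ (two endpoints plus one triangle), the pendent edge has diagonal entry $2$, pairs of edges in the common triangle give $0$, and the pendent edge gives $-1$ against the two triangle edges whose head is its tail.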

3 Vector Laplace Equation on Graphs

Clearly, the graph-theoretic analogue of the vector Laplace equation (1) is

$$H(G)\, x = 0, \tag{7}$$

where $H(G)$ is the Helmholtzian matrix of $G$.

Hereafter, we determine the dimension of the solution space of (7). Since $H(G)$ is diagonalizable, this dimension is equal to the algebraic multiplicity of the eigenvalue $0$, which is called the nullity of the graph $G$. Denote by $\eta_M(G)$ the nullity of a graph $G$ with respect to a graph matrix $M$. For the adjacency matrix $A$, Collatz and Sinogowitz [4] first posed the problem of characterizing all graphs with $\eta_A(G) > 0$. This question has a strong chemical background, because $\eta_A(G) = 0$ is a necessary condition for a so-called conjugated molecule to be chemically stable, where $G$ is the graph representing the carbon-atom skeleton of this molecule. For the Laplacian matrix $L$, it is well known that the nullity of a graph is exactly the number of its connected components.

We next determine the nullity $\eta_H(G)$ of the Helmholtzian matrix in terms of the order, the size and the number of triangles of $G$.

Lemma 3.1.

Proof.

Given an arbitrary orientation to and , by (6) we get that

if and only if and , which is equivalent to

Thereby, . ∎
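The nullity can be computed exactly on small graphs. The following sketch relies on two standard facts rather than on the paper's own statements: the kernel of $B_1 B_1^\top + B_2^\top B_2$ is the intersection of the kernels of $B_1^\top$ and $B_2$, and since $B_2 B_1 = 0$ its dimension equals $m - \operatorname{rank}(B_1) - \operatorname{rank}(B_2)$, where $B_1$ and $B_2$ are the edge-vertex and triangle-edge incidence matrices. Function names and orientation conventions are assumptions for illustration.

```python
from fractions import Fraction
from itertools import combinations

def rank(M):
    """Rank of an integer matrix by exact Gaussian elimination over the rationals."""
    A = [[Fraction(x) for x in row] for row in M]
    r = 0
    for c in range(len(A[0]) if A else 0):
        pivot = next((i for i in range(r, len(A)) if A[i][c] != 0), None)
        if pivot is None:
            continue
        A[r], A[pivot] = A[pivot], A[r]
        for i in range(r + 1, len(A)):
            q = A[i][c] / A[r][c]
            A[i] = [x - q * y for x, y in zip(A[i], A[r])]
        r += 1
    return r

def helmholtz_nullity(n, edges):
    """Return (m - rank(H), m - rank(B1) - rank(B2)) for oriented edges (tail, head)."""
    E = list(edges)
    m = len(E)
    und = {frozenset(e) for e in E}
    T = [t for t in combinations(range(n), 3)
         if all(frozenset(p) in und for p in combinations(t, 2))]
    B1 = [[(v == head) - (v == tail) for v in range(n)] for (tail, head) in E]

    def sign(tri, e):
        i, j, k = tri  # triangle oriented i -> j -> k -> i (assumed convention)
        cyc = {(i, j), (j, k), (k, i)}
        return (e in cyc) - (e[::-1] in cyc)

    B2 = [[sign(t, e) for e in E] for t in T]
    H = [[sum(B1[a][v] * B1[b][v] for v in range(n))
          + sum(row[a] * row[b] for row in B2)
          for b in range(m)] for a in range(m)]
    return m - rank(H), m - rank(B1) - rank(B2)

# Hypothetical examples: a 4-cycle, a triangle and a path on four vertices.
print(helmholtz_nullity(4, [(0, 1), (1, 2), (2, 3), (3, 0)]))
print(helmholtz_nullity(3, [(0, 1), (1, 2), (0, 2)]))
print(helmholtz_nullity(4, [(0, 1), (1, 2), (2, 3)]))
```

The two returned values agree in each case. The 4-cycle has a one-dimensional solution space (a circulation around the cycle), while the triangle and the path have only the trivial solution, so their Helmholtzian matrices are invertible.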

Note that and , the number of triangles in . Thus we get the following result immediately.

Corollary 3.2.

Let be a graph with arbitrary orientations on and . Then

Lemma 3.3.

If has a pendent vertex and , then .

Proof.

Given arbitrary orientations to and , we get , and . Hence, the result follows from Corollary 3.2. ∎

For a vertex of a graph , let denote the set of the neighbours of . If such that , the contraction of is the replacement of and with a single vertex whose incident edges are the edges other than that are incident to or , and the resulting graph is denoted by .

Lemma 3.4.

Let be a graph with edge such that . If , then

Proof.

Give orientations to and such that and are respectively the tail and the head of any edge incident to it. Clearly, and . It suffices to show that by Corollary 3.2. For any vertex , let . Denote by , , , and the vertex in that replaces and in . Then

If the -th row of could be represented by the other rows, then so could the summation of the -th row and the -th row of , and thus

Hence, . If the -th row cannot be represented by the other rows, then

and we still have . ∎

The following result follows from the above lemma.

Corollary 3.5.

Let be a graph with a cut-edge . Then .

The next result follows from the fact that each edge of a tree is a cut-edge, by applying Corollary 3.5 repeatedly.

Corollary 3.6.

Let $G$ be a tree. Then the nullity of its Helmholtzian matrix is $0$, and so this matrix is invertible.

Our main result in this section is as follows.

Theorem 3.7.

Let be a graph with components. Then

Proof.

For , we consider each component of by induction on . If , then , because is connected. Therefore, . Assume that the statement is true for . Assume that . Taking an edge belonging to some triangle, let be the graph obtained from by replacing with an directed path . Clearly, and . Along with Lemma 3.4 we get

| (8) |

By inductive assumption,

| (9) |

Substituting , and into (9), by (8) we arrive at

resulting in . In consequence,

This completes the proof. ∎

The following results follow immediately from the above theorem.

Proposition 3.8.

Under the conditions of Theorem 3.7,

-

(i)

;

-

(ii)

.

4 Further remarks

In this article we have studied graph-theoretic analogues of the vector Laplacian and the vector Laplace equation. In Theorem 2.2, we give the graph matrix representation of the vector Laplacian, which is called the Helmholtzian matrix of a graph. Historically, it is the first graph matrix indexed by the edge set of a graph, in contrast to the other graph matrices, which are indexed by the vertex set. On the other hand, the graph Helmholtzian has been applied to statistical ranking [6] and to random walks on simplicial complexes [15]. Just as the graph (normalized) Laplacian is used to study the structural and dynamical properties of ordinary networks [1, 2], we look forward to applications of the graph Helmholtzian to simplicial networks, since the Helmholtz operator is a special case of the Hodge Laplacians based upon the clique complex of a graph. Hence, a spectral theory based on the graph Helmholtzian is to be expected [9].

In Proposition 3.8(i), we obtain the dimension of the solution space of a discrete analogue of a PDE, the vector Laplace equation, which has a natural counterpart on graphs. Moreover, the kernel $\ker(\Delta_1)$ of the graph Helmholtzian is involved in the Helmholtz Decomposition Theorem, a special case of the Hodge decomposition, which holds in general for any simplicial complex of any dimension [6, Theorem 2]:

Helmholtz Decomposition Theorem [6, Theorem 2]. Let $G$ be a graph and $K_G$ be its clique complex. The space of edge flows on $G$, i.e. $L^2_\wedge(E)$, admits an orthogonal decomposition

$$L^2_\wedge(E) = \operatorname{im}(\operatorname{grad}) \oplus \ker(\Delta_1) \oplus \operatorname{im}(\operatorname{curl}^*).$$

See [8, Section 6.3] for more applications of the Hodge Laplacian and Hodge decomposition on graphs to other fields.

Finally, the number of triangles in a graph or network is a key metric for extracting insights in a wide range of graph applications; see, e.g., [5, 13, 14, 18] for more details. The significance of triangle counting is highlighted by the GraphChallenge competition [7], and Proposition 3.8(ii) gives an expression for this number.

Acknowledgments

Jianfeng Wang expresses his sincere thanks to Prof. Lek-Heng Lim for his kind suggestion. Jianfeng Wang is supported by National Natural Science Foundation of China (No. 12371353) and Special Fund for Taishan Scholars Project. Lu Lu is supported by National Natural Science Foundation of China (No. 11671344).

References

- [1] F. Chung, Spectral Graph Theory, The American Mathematical Society, 1997.

- [2] F. Chung and L.Y. Lu, Complex Graphs and Networks, The American Mathematical Society, 2006.

- [3] J.G. Coffin, Vector Analysis: An Introduction to Vector-methods and Their Various Applications to Physics and Mathematics, J. Wiley & Sons, 1911.

- [4] L. Collatz and U. Sinogowitz, Spektren endlicher Grafen, Abh. Math. Semin. Univ. Hamb., 21 (1957), 63–77.

- [5] M.A. Hasan and V. Dave, Triangle Counting in Large Networks: A Review, Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 8(2):e1226, 2018.

- [6] X. Jiang, L.-H. Lim, Y. Yao and Y. Ye, Statistical ranking and combinatorial Hodge theory, Math. Program., 127 (2011) 203–244.

- [7] J. Kepner, Graph Challenge, https://graphchallenge.mit.edu. Accessed: 2020, April 08.

- [8] L.-H. Lim, Hodge Laplacians on Graphs, SIAM Rev. 62 (3) (2020) 685–715.

- [9] L. Lu, Y.T. Shi, J.F. Wang, Y. Wang, An introduction to graphs spectra of Hodge 1-Laplacians, submitted.

- [10] R. Merris, Laplacian matrices of graphs: a survey, Linear Algebra Appl. 197–198 (1994) 143–176.

- [11] B. Mohar, The Laplacian spectrum of graphs, Graph Theory Combin. Appl. 2 (1991) 871–898.

- [12] P. Moon and D.E. Spencer, The meaning of the vector Laplacian, J. Franklin Inst., 256 (6) (1953), 551–558.

- [13] S. Pandey, Z. Wang, S. Zhong, C. Tian, B. Zheng, X. Li, L. Li, A. Hoisie, C. Ding and D. Li et al, TRUST: Triangle Counting Reloaded on GPUs, IEEE Trans. Par. Dis. Sys., 32 (2021), 2646–2660.

- [14] M. Rezvani, W. Liang, C. Liu and J.X. Yu, Efficient Detection of Overlapping Communities Using Asymmetric Triangle Cuts, IEEE Trans. Know. Data Engin., 30 (2018), 2093–2105.

- [15] M.T. Schaub, A.R. Benson, P. Horn, G. Lippner and A. Jadbabaie, Random Walks on Simplicial Complexes and the Normalized Hodge 1-Laplacian, SIAM Rev., 62 (2020), pp. 353–391.

- [16] G. Strang, Introduction to Applied Mathematics. Wellesley-Cambridge Press, 1986.

- [17] F.W. Warner, Foundations of Differentiable Manifolds and Lie Groups, Grad. Texts in Math. 94, Springer-Verlag, New York, 1983.

- [18] H. Zhang, Y. Zhu, L. Qin, H. Cheng and J.X. Yu, Efficient triangle listing for billion-scale graphs, 2016 IEEE Inter. Conf. Big Data, pp. 813–822.

PS: In their longer manuscript [9], the last two authors of this paper, Yongtang Shi and Yi Wang, have considered the spectral properties of the graph Helmholtzian, including the irreducibility, the interlacing theorem, the graphs with few Helmholtzian eigenvalues, the coefficients of the Helmholtzian polynomial, the relations between the Helmholtzian and Laplacian spectra, the Helmholtzian spectral radii and their limit points, the least Helmholtzian eigenvalue, the product graphs and the Helmholtzian integral graphs. On the other hand, Jianfeng Wang and his student Zhen Chen have recently determined the Helmholtzian eigenvalues of threshold graphs.