A holistic approach to computing first-arrival traveltimes using neural networks

Abstract

Since the original algorithm by John Vidale in 1988 to numerically solve the isotropic eikonal equation, there has been tremendous progress on the topic addressing an array of challenges, including improvement of the solution accuracy, incorporation of surface topography, adding more accurate physics by accounting for anisotropy/attenuation in the medium, and speeding up computations using multiple CPUs and GPUs. Despite these advances, there is no mechanism in these algorithms to carry information gained by solving one problem to the next. Moreover, these approaches may breakdown for certain complex forms of the eikonal equation, requiring simplification of the equations to estimate approximate solutions. Therefore, we seek an alternate approach to address the challenge in a holistic manner, i.e., a method that not only makes it simpler to incorporate topography, allows accounting for any level of complexity in physics, benefiting from computational speedup due to the availability of multiple CPUs or GPUs, but also able to transfer knowledge gained from solving one problem to the next. We develop an algorithm based on the emerging paradigm of physics-informed neural network to solve various forms of the eikonal equation. We show how transfer learning and surrogate modeling can be used to speed up computations by utilizing information gained from prior solutions. We also propose a two-stage optimization scheme to expedite the training process in the presence of sharper heterogeneity in the velocity model and recommend using a locally adaptive activation function for faster convergence. Furthermore, we demonstrate how the proposed approach makes it simpler to incorporate additional physics and other features in contrast to conventional methods that took years and often decades to make these advances. Such an approach not only makes the implementation of eikonal solvers much simpler but also puts us on a much faster path to progress. The method paves the pathway to solving complex forms of the eikonal equation that have remained unsolved using conventional algorithms or solved using some approximation techniques at best; thereby, creating new possibilities for advancement in the field of numerical eikonal solvers.

keywords:

Eikonal equation , anisotropy , traveltimes , neural networks , scientific machine learning1 Introduction

The eikonal equation is a nonlinear partial differential equation (PDE) obtained from the first term of the Wentzel-Kramers-Brillouin expansion of the wave equation and represents a class of Hamilton-Jacobi equations [1]. It finds applications in multiple domains of science and engineering, including image processing [2], robotic path planning and navigation [3], computer graphics [4], and semi-conductor manufacturing [5]. In seismology, it is used to compute first-arrival traveltimes, which are necessary for the success of a wide range of seismic processing and imaging tools including statics and moveout correction [6], traveltime tomography for initial velocity model building [7, 8], microseismic source localization [9], and ray-based migration [10]. Ray tracing and finite-difference based solutions of the eikonal equation are the most popular approaches for computing traveltimes.

Ray tracing methods compute traveltimes along the characteristics of the eikonal equation by solving a system of ordinary differential equations [11]. The approach is generally efficient for a sparse source-receiver geometry, but the computational cost increases dramatically with the increase in the number of source-receiver pairs. Moreover, for practical applications such as imaging and velocity model building, traveltime solutions need to be interpolated onto a regular grid. This requirement not only adds to the computational cost of the method but also poses a challenge, particularly in complex media where rays may diverge from one another, leading to large spatial gaps between rays, creating regions known as shadow zones [12]. Additionally, in strongly varying velocity models, multiple ray-paths may connect a source-receiver pair, making it easy to miss the path with the minimum traveltime. Therefore, the numerical solution of the eikonal equation has been a topic of continued research interest over the years.

Vidale [13] led the development of numerical eikonal solvers by proposing an expanding box strategy to compute first-arrival traveltimes in heterogeneous media. Subsequently, the method was improved and extended to three dimensions [12], to incorporate anisotropy [14, 15], and to high-order accurate solutions [16]. The instability of the expanding box method due to turning rays led to the development of the expanding wavefront scheme [17]. This was further improved to obtain maximum energy traveltimes [18], and to incorporate anisotropy in the model [19].

Another algorithm that became popular during the late 1990s was the fast marching method [20]. The popularity of the method was due to its accuracy, stability, and efficiency properties. The fast marching method saw great interest and development in the subsequent period. This included extension of the method to improve traveltime accuracy [21, 22, 23], incorporating anisotropy [24, 25, 26], parallelization for computational speedup using multiple CPUs [27], and even acceleration using GPUs [28].

Despite its success, the fast marching method was overtaken in popularity by the fast sweeping method [29] since the mid-2000s. This was mainly due to the flexibility and robustness of the fast sweeping method to various forms of the eikonal equation. Numerous advances to the fast sweeping method have since been proposed to improve the accuracy of the method [30, 31], to incorporate anisotropy [32, 33, 34, 35], to account for attenuation [36], to tackle surface topography [37], and parallelization for computational speedup [38, 39].

Several other hybrid strategies have also been proposed to solve the eikonal equation. For a detailed review of these methods, we refer the interested reader to [40].

In light of these developments, it is beyond doubt that there has been tremendous progress since the original eikonal solver by Vidale [13]. This huge and growing body of literature, spanning over three decades, on the numerical solution of the eikonal equation, required significant research efforts to address an array of challenges, including improvement of the solution accuracy, incorporation of surface topography, adding more accurate physics by accounting for anisotropy/attenuation in the medium, and speeding up computations by using multiple CPUs and GPUs. Therefore, we seek an alternate approach that could address these challenges in a holistic manner – a method that makes it simpler to incorporate topography, allow accounting for more accurate physics, and benefit from computational speedup due to the availability of multiple CPUs or GPUs. Such an approach would not only make the implementation of eikonal solvers much simpler but also put us on a much faster path to progress in solving complex forms of the eikonal equation.

Furthermore, a major drawback of the conventional eikonal solvers is that there is no mechanism to utilize the information gained by solving one problem to the next. Therefore, the same amount of computational effort is needed even for a small perturbation in the source position and/or the velocity model. This can lead to a computational bottleneck, particularly for imaging/inversion applications that require repeated computations, often with thousands of source positions and multiple updated velocity models. Therefore, a method that could use information gained from one solution to the next to speed up computations can potentially remedy this situation. With these objectives in mind, we look into the machine learning literature for inspiration.

Having shown remarkable success across multiple research domains [41], machine learning has recently shown promise in tackling problems in scientific computing. The idea to use an artificial neural network for solving PDEs has been around since the 1990s [42]. However, due to recent advances in the theory of deep learning coupled with a massive increase in computational power and efficient graph-based implementation of new algorithms and automatic differentiation, we are witnessing a resurgence of interest in using neural networks to approximate solutions of PDEs.

Recently, Raissi et al. [43] developed a deep learning framework for the solution and discovery of PDEs. The so-called physics-informed neural network (PINN) leverages the capabilities of deep neural networks (DNNs) as universal function approximators. Contrary to the conventional deep learning approaches, PINNs restrict the space of admissible solutions by enforcing the validity of the underlying PDE governing the actual physics of the problem. This is achieved by using a simple feed-forward neural network leveraging automatic differentiation (AD) to compute the differential variables in the PDE. It is worth noting that PINNs do not require a labeled data to learn the mapping between inputs and outputs, rather learning is facilitated through the loss function formed by the underlying PDE. PINNs have already demonstrated success in solving forward and inverse problems in geophysics [44, 45, 46, 47, 48]. Unlike classical discretization-based methods, PINNs are the only unified framework that can be used readily both for data-driven and PDE-based forward and inverse solution of Eikonal equations by incorporating different terms in the loss function.

In this chapter, we present a neural network approach to solve various forms of the eikonal equation. We use the PINN framework, where the governing equation is incorporated into the loss function of the neural network. We also show how the proposed method addresses the highlighted challenges compared to conventional algorithms. Specifically, we show that by simply updating the loss function of the neural network, we can account for more accurate physics in the traveltime solution. Moreover, since the proposed method is mesh-free, we will observe that to incorporate topography, no special treatment is needed as opposed to conventional finite-difference methods. In addition, the use of computational graphs allows us to run the same piece of code on different platforms (CPUs, GPUs) and architectures (desktops, clusters) without worrying about the implementation details. Most importantly, the proposed method allows us to use information gained while solving for a particular source position and velocity model to speed up computations for perturbations in the velocity model and/or source position. We demonstrate this aspect through the use of machine learning techniques like transfer learning and surrogate modeling.

The rest of the chapter is organized as follows: We begin by presenting the theoretical foundations of the proposed method and discuss how it can be used to solve more complex forms of the eikonal equation. Next, we test the method on a diverse set of 2D and 3D benchmark synthetic models and compare its performance with the popular fast sweeping method. Finally, we conclude the chapter by discussing the strengths of the method and identifying future research opportunities.

2 Theory

In this section, we describe how neural networks can be used to compute traveltime solutions for eikonal equations corresponding to isotropic and anisotropic media. We do so by first introducing the different forms of the eikonal equation and the concept of factorization. Next, we outline the general mechanism of a feed-forward neural network followed by its capability as a function approximator. This is followed by a brief overview of the concept of automatic differentiation, which is used to compute the derivative of the networks’ output with respect to the inputs. Finally, putting these concepts together, we will present the proposed algorithm for solving various forms of the eikonal equation.

2.1 Eikonal equations

The eikonal equation is a non-linear first-order PDE that is, for an isotropic medium, given as:

| (1) |

subject to a point-source boundary condition as:

| (2) |

where is the traveltime from the source point to a point in the computational domain, and is the phase velocity of the isotropic medium. Since the curvature of the wavefront near the point-source is extremely large, previous studies [31, 49] have shown that it is better to solve the factored eikonal equation instead of equation (1). The idea is to factor the unknown traveltime into two multiplicative factors, where one of the factors is specified analytically to capture the source-singularity such that the other factor is gently varying in the source neighborhood. Therefore, we factor into two multiplicative functions:

| (3) |

where is the known function and is the unknown function. Plugging this into equation (1), we get the factored eikonal equation for an isotropic model as:

| (4) |

subject to the updated point-source condition:

| (5) |

The known factor is the traveltime solution in a homogeneous isotropic model given as:

| (6) |

where is taken to be the velocity at the point-source location.

Equation (4) is the factored eikonal equation for an isotropic medium; however, sedimentary rocks exhibit at least some degree of anisotropy due to a number of factors including thin layering and preferential alignment of grains cracks [50]. This results in the velocity being a function of the wave propagation direction, making the isotropic approximation of the Earth invalid. Therefore, traveltime computation algorithms must honor the anisotropic nature of the Earth for accurate subsurface imaging and other applications. Thus, we consider a realistic approximation of the subsurface anisotropy known as the tilted transverse isotropy (TTI) case. The factored eikonal equation for a TTI medium is considerably more complex than the isotropic case and is given, under the acoustic assumption, as [49]:

|

|

(7) |

where is the velocity along the symmetry axis, and are the anisotropy parameters, and is the tilt angle that the symmetry axis makes with the vertical. The point-source condition is the same as the one given in equation (5). Again is the unknown function we solve equation (7) for, whereas is the known function which may be taken as the solution of a homogeneous, tilted elliptically isotropic medium, given as [32]:

| (8) |

with

| (9) | ||||

In the above expressions, and are the velocity along the symmetry axis and the anisotropy parameter, respectively, at the point-source location. Similarly, is the tilt angle taken at the source-point.

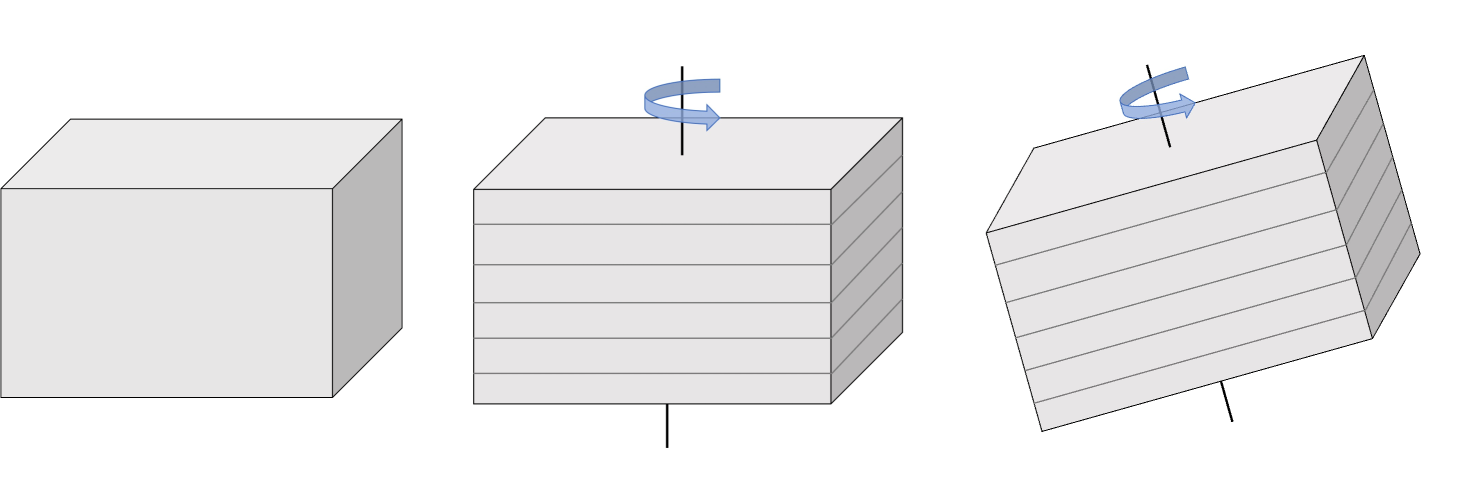

It is worth highlighting that the isotropic and TTI cases represent mathematical approximations of the subsurface. An isotropic model considers the velocity to be invariant with respect to the direction of propagation, which is a crude representation of the Earth’s crust. The simplest practical anisotropic symmetry system is axisymmetric anisotropy, commonly known as transverse isotropy (TI). A TI medium with a vertical axis of symmetry (VTI) is a good approximation for horizontally layering shale formation or thin-layering sediments. The factored eikonal equation for VTI media can be obtained by setting in equation (7). For more complex geology, such as sediments near the flanks of salt domes and fold-and-thrust belts like the Canadian foothills, a TTI model represents the best approximation. Figure 1 illustrates these approximations graphically.

The reason for considering eikonal equations corresponding to different media is to highlight, in comparison with the conventional methods, how easy it is to adapt the proposed method to solve a relatively more complex eikonal equation (more on this in Section 2.4).

2.2 Approximation property of neural networks

A feed-forward neural network, also known as a multi-layer perceptron, is a set of neurons organized in layers in which evaluations are performed sequentially through the layers. It can be seen as a computational graph with an input layer, an output layer, and an arbitrary number of hidden layers. In a fully connected neural network, neurons in adjacent layers are connected with each other, but neurons within a single layer share no connections. It is called a feed-forward neural network because information flows from the input through each successive layer to the output. Moreover, there are no feedback or recursive loops in a feed-forward neural network.

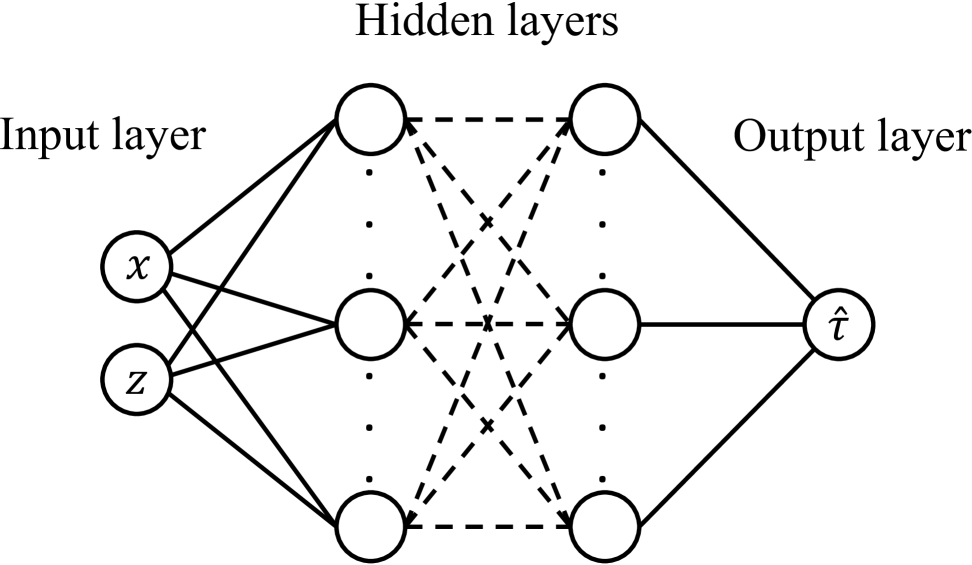

Neural networks are well-known for their strong representational power. A neural network with neurons in the input layer and neurons in the output layer can be used to represent a function . In fact, it has been shown that a neural network with a finite number of neurons in the hidden layer can be used to represent any bounded continuous function to the desired accuracy. This is also known as the universal approximation theorem [51, 52]. In addition, it was later shown that by using a deep network with multiple hidden layers and a nonlinear activation function, the total number of neurons needed to represent a given function could be significantly reduced [53]. Therefore, our goal here is to train a DNN that could represent the mapping between the spatial coordinates , as inputs to the network, and the unknown traveltime function representing the output of the DNN. Figure 2 illustrates this idea pictorially showing a neural network with input neurons for the spatial coordinates that are passed through the hidden layers to the output layer for predicting the traveltime factor at the inputted spatial location.

We formulate here considering a 2D case for simplicity of illustration. In a 3D model, one would need a neural network with three input neurons, one for each spatial dimension. It must also be noted that while DNNs are, in theory, capable of representing very complex functions, finding the actual parameters (weights and biases) needed to solve a given PDE can be very challenging.

2.3 Automatic differentiation

Solving a PDE using neural networks requires a mechanism to accurately compute derivatives of the network’s output(s) with respect to the input(s). There are multiple ways to compute derivatives including hand-coded analytical derivatives, symbolic differentiation, numerical approximation, and automatic differentiation (AD) [54]. While manually working out the derivatives is exact, it is often time consuming to code and it is error-prone. Symbolic differentiation, while also exact, may result in exponentially large expressions and, therefore, can be prohibitively slow and memory intensive. Numerical differentiation, on the other hand, is easy to implement but can be highly inaccurate due to round-off errors. Contrary to these approaches, AD uses exact expressions with floating-point values instead of symbolic strings and it involves no approximation errors. This results in an accurate evaluation of derivatives at machine precision. Therefore, we evaluate the partial derivatives of the unknown traveltime factor with respect to the inputs using AD.

2.4 Solving eikonal equations

We begin by considering how the different pieces of the puzzle outlined in the previous subsections can be combined to solve eikonal equations. First, we illustrate this using the factored isotropic eikonal equation (4) and then demonstrate how simple it is under the proposed framework to solve a more complex eikonal equation, such as the factored TTI eikonal equation (7).

To solve equation (4), we leverage the capabilities of neural networks as function approximators and define a loss function that minimizes the residual of the underlying PDE for a chosen set of training (collocation) points. This is achieved using the following components:

-

i.

a DNN approximation of the unknown traveltime field variable ,

-

ii.

a differentiation algorithm, i.e., AD in this case, to evaluate partial derivatives of with respect to the spatial coordinates ,

-

iii.

a loss function incorporating the underlying eikonal equation, sampled on a collocation grid, and

-

iv.

an optimizer to minimize the loss function by updating the neural network parameters.

To illustrate the idea, let us consider a two-dimensional domain . A point-source is located at coordinates , where . The unknown traveltime factor is approximated using a DNN, , such that:

| (10) |

where are network inputs, is the network output, and represents the set of all trainable parameters of the network with as the total number of parameters.

The loss function is given by the mean-squared error norm as

| (11) |

where represents the residual of the factored isotropic eikonal equation (4), given by

| (12) |

The first term on the right side of equation (11) imposes validity of the factored eikonal equation (4) on a given set of training points , where is the number of training samples. The second term forces the solution to be positive by penalizing negative solutions using the Heaviside function . The last term enforces the boundary condition by imposing the solution to be unity at the source point . The set of network parameters that minimizes the loss function (11) on this set of training points, , is then identified by solving the optimization problem:

| (13) |

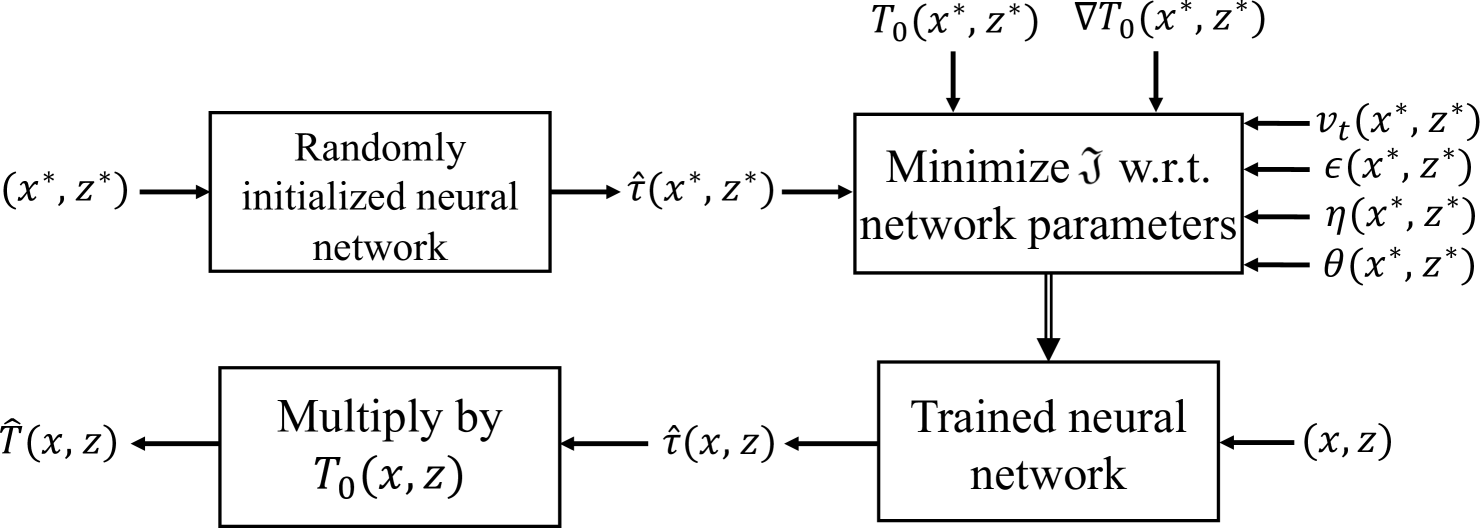

Once the DNN is trained, we evaluate the network on a set of regular grid-points in the computational domain to obtain the unknown traveltime field. The final traveltime solution is obtained by multiplying it with the known traveltime part, i.e.,

| (14) |

This yields traveltimes corresponding to an isotropic approximation of the Earth. However, it is well-known that the subsurface is anisotropic in nature. Therefore, a significant amount of research effort has been spent over the years on extending numerical eikonal solvers to anisotropic media. The complication in numerically solving the eikonal equation arises due to anellipticity of the wavefront [57] resulting in high-order nonlinear terms in the eikonal equation. These high-order terms dramatically increase the complexity in solving the anisotropic eikonal equation and, therefore, have been a topic of immense research interest. On the contrary, the proposed neural network formulation allows solving for the anisotropic eikonal equation by simply replacing the residual in equation (11) with the one corresponding to the anisotropic eikonal equation. Therefore, to solve the factored TTI eikonal equation (7), we would, instead of equation (12), use the following:

|

|

(15) |

This is a highly desirable feature of this approach because eikonal equations corresponding to models with even lower symmetry than TTI can be easily solved by simply using a different residual term. By contrast, conventional algorithms such as fast marching or fast sweeping methods would require significant effort to incorporate such changes, thereby resulting in much slower scientific progress.

A workflow summarizing the proposed solver for the factored TTI eikonal equation is shown in Figure 3.

3 Numerical Tests

In this section, we test the neural network based eikonal solver and compare its performance with the first-order fast sweeping method, which is routinely used in geophysical (and other) applications for traveltime computations. We consider several isotropic and anisotropic 2D/3D models for these tests and also include a model with topography to demonstrate the flexibility of the proposed method.

For each of the examples presented below, we use a neural network having 20 hidden layers with 20 neurons in each layer and minimize the neural network’s loss function using full-batch optimization. A locally adaptive inverse tangent activation function is used for all hidden layers except the final layer, which uses a linear activation function. Locally adaptive activation functions have been shown to achieve superior optimization performance and convergence speed over base methods. The introduction of a scalable parameter in the activation function for each neuron changes the slope of the activation function and, therefore, alters the loss landscape of the neural network for improved performance. For more information on locally adaptive activation functions, we refer the interested reader to [58].

We choose the afore-mentioned configuration of the neural network based on some initial tests and keep it fixed for the entire study to minimize the need for hyper-parameter tuning for each new velocity model.

The following examples are prepared and trained using the neural network library, SciANN [59], a Keras/Tensorflow API that is designed and optimized for physics-informed deep learning. SciANN leverages the latest advancements of Tensorflow while keeping the interface close to the mathematical description of the problem.

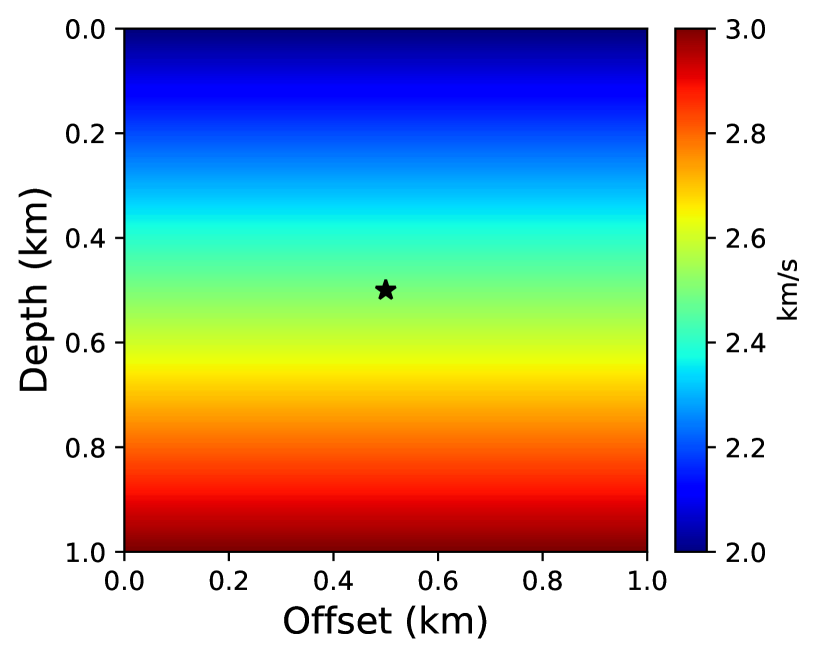

Example 1: An isotropic model with constant vertically varying gradient

First, we consider a vertically varying km2 isotropic model. The velocity at zero depth is 2 km/s and it increases linearly with a gradient of 1 s-1 to 3 km/s at a depth of 1 km. We compute traveltime solutions using the neural network and first-order fast sweeping method by considering a point-source located at . We compare their performance with a reference solution computed analytically [60]. The velocity model is shown in Figure 4 and is discretized on a 101101 grid with a 10 m grid interval along both axes.

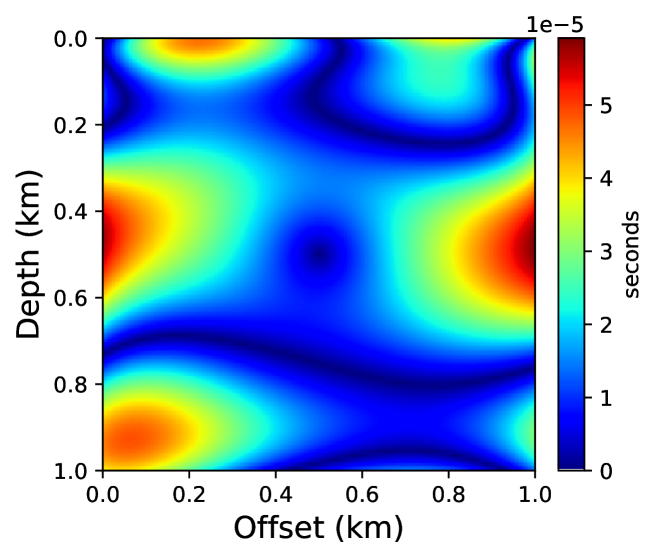

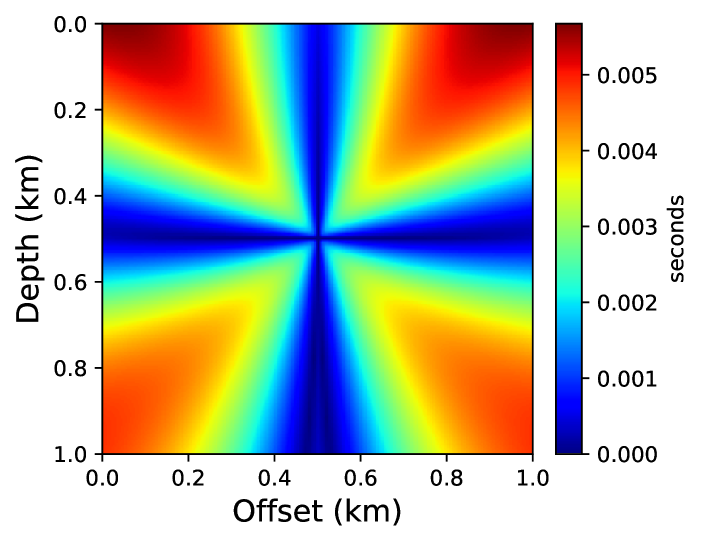

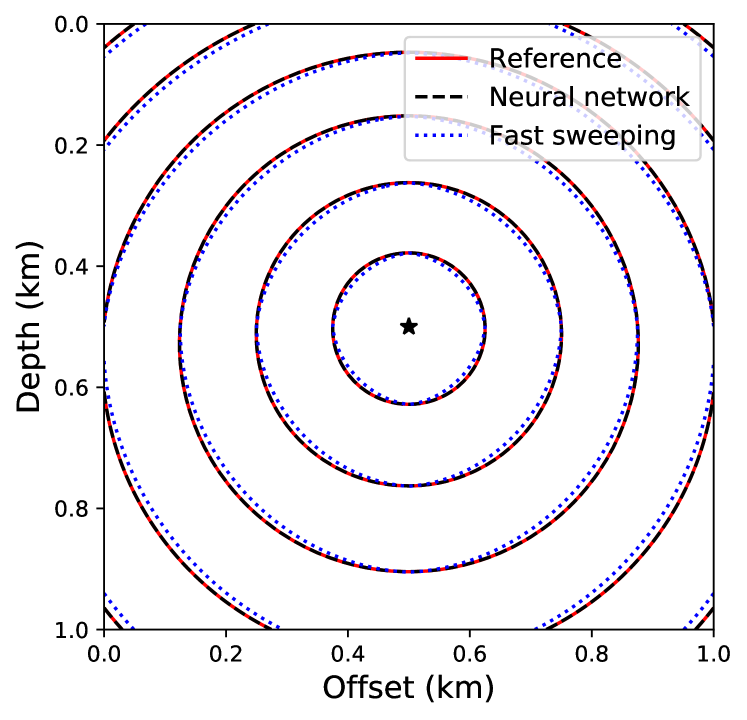

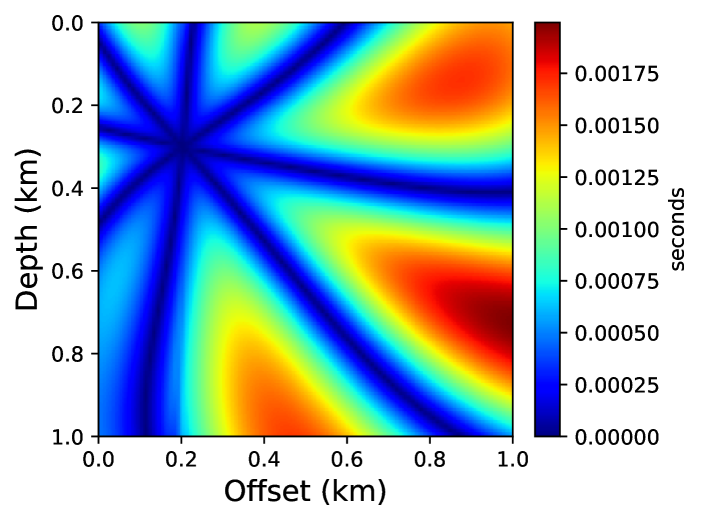

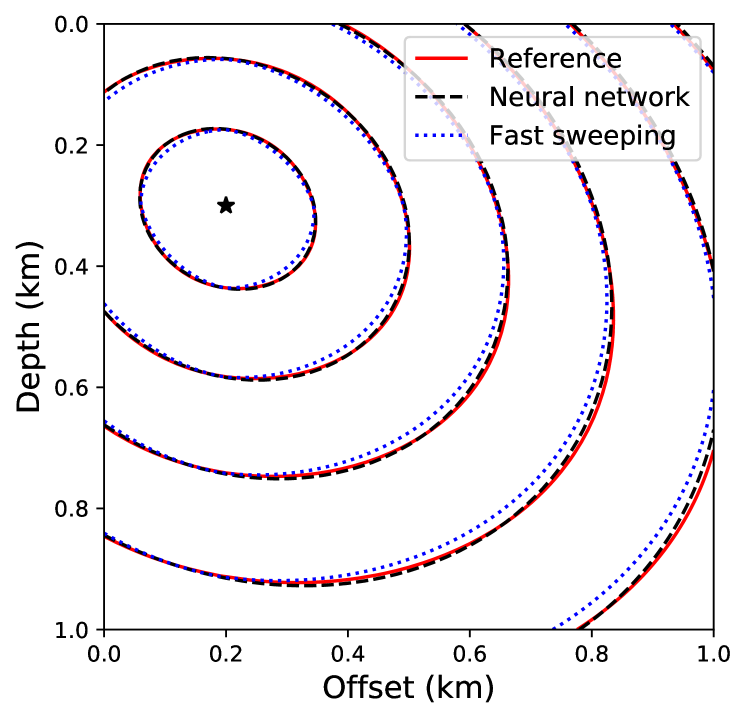

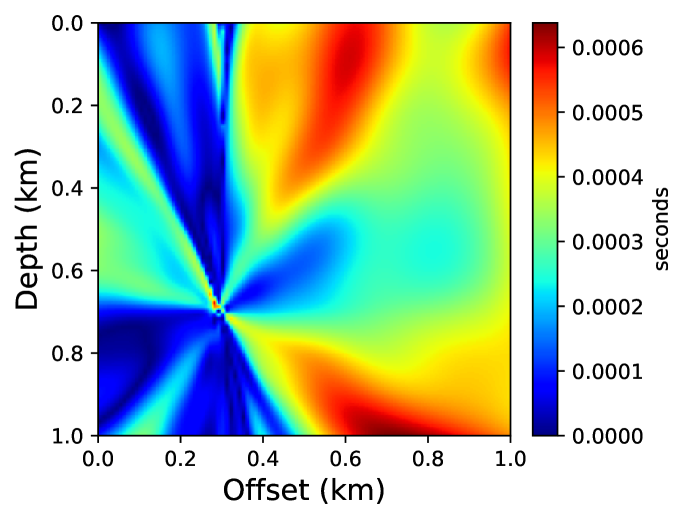

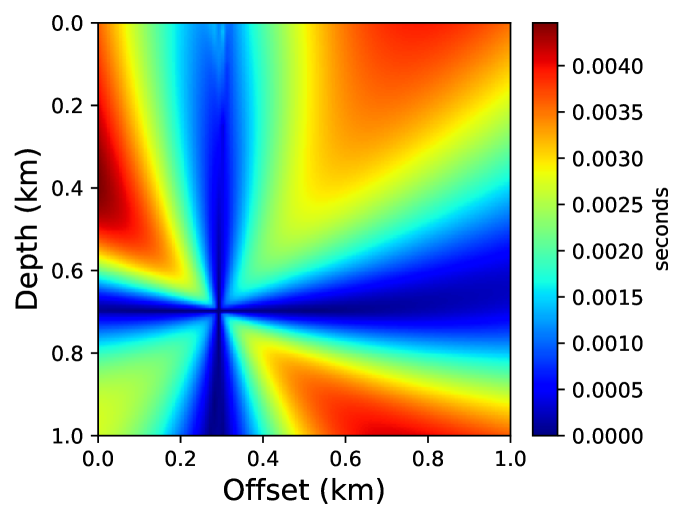

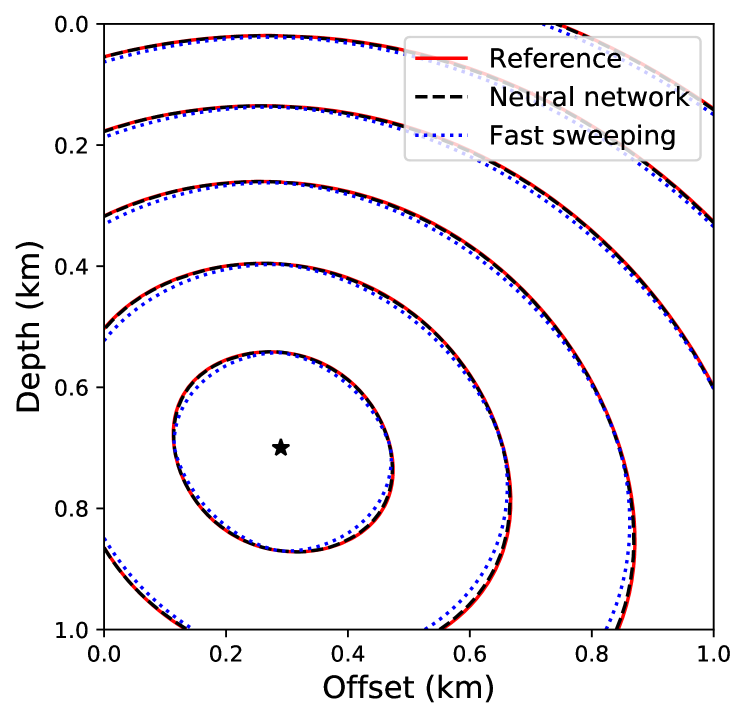

For training the neural network, we begin with randomly initialized parameters and train on 50% of the total grid points selected randomly and use the Adam optimizer [61] with 10,000 epochs. Once the network is trained, we evaluate the trained network on the regularly sampled (101101) grid to obtain the unknown traveltime field , which is then multiplied with the corresponding factored traveltime field to obtain the final traveltime solution. We compare the accuracy of the neural network solution and the first-order fast sweeping solution, computed on the same regular grid in Figure 5. We observe significantly better accuracy for the neural network based solution despite using only half of the total grid points for training. In Figure 6, we confirm this observation by plotting the corresponding traveltime contours.

Example 2: Smoothly varying TTI model

Next, we train the neural network to solve the TTI eikonal equation. Compared to the fast sweeping method that requires significant modifications to the isotropic eikonal solver, the neural network based approach requires only an update to the loss function by incorporating the appropriate residual based on the TTI eikonal equation. For the velocity parameter , we consider a linear velocity model with a vertical gradient of 1.5 and a horizontal gradient of 0.5 as shown in Figure 7. We use homogeneous models for the anisotropy parameters with =0.2 and = 0.083. We also consider a homogeneous tilt angle of = 30∘. These models are also discretized on a 101101 grid with 10 m grid interval along both axes.

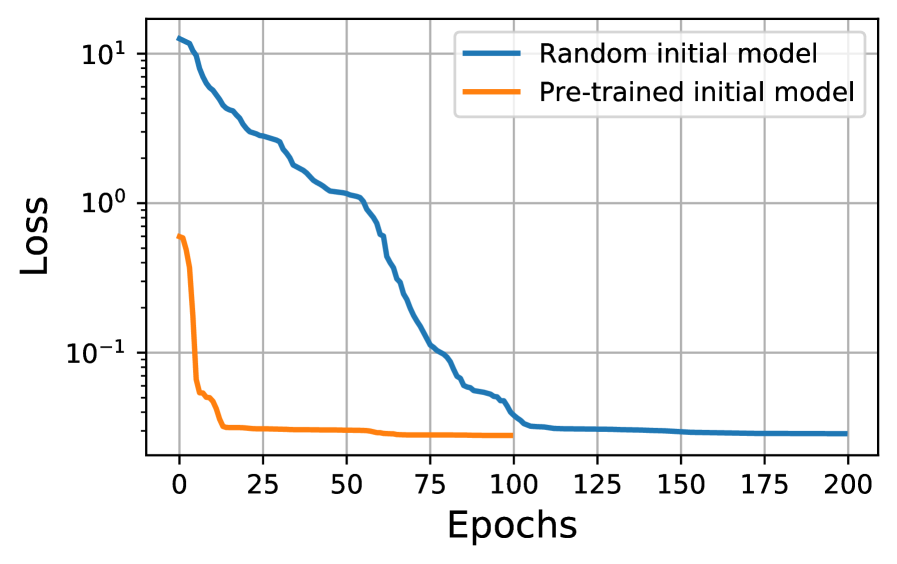

Instead of training the network from scratch, we use transfer learning, which is a machine learning technique that relies on storing knowledge gained while solving one problem and applying it to a different but related problem. Starting with a pre-trained network from example 1, we fine-tune the neural network parameters for the TTI model using 50% of the total grid points, selected randomly, using the L-BFGS-B solver [62] for 100 epochs. Starting with a pre-trained network allows us to use the L-BFGS-B method for faster convergence as opposed to starting with the Adam optimizer and then switching to L-BFGS-B as suggested by previous studies [43]. For comparison, we also train a neural network from scratch and the convergence history, shown in Figure 8, confirms that the solution converges much faster when using transfer learning.

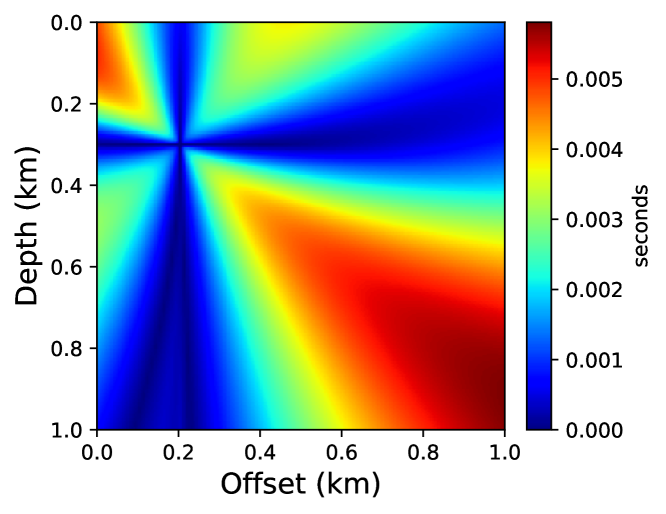

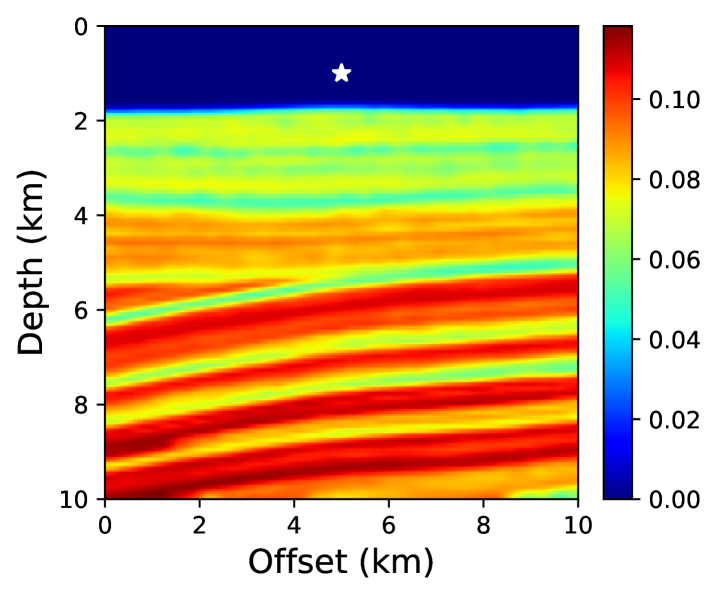

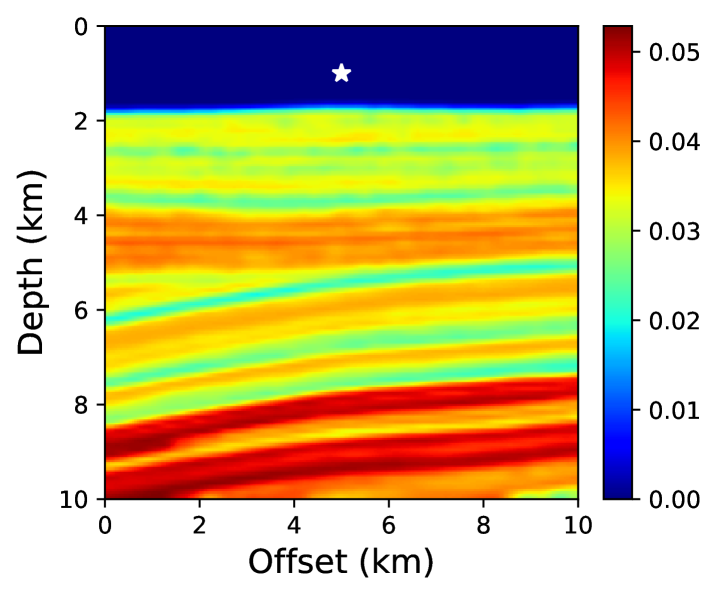

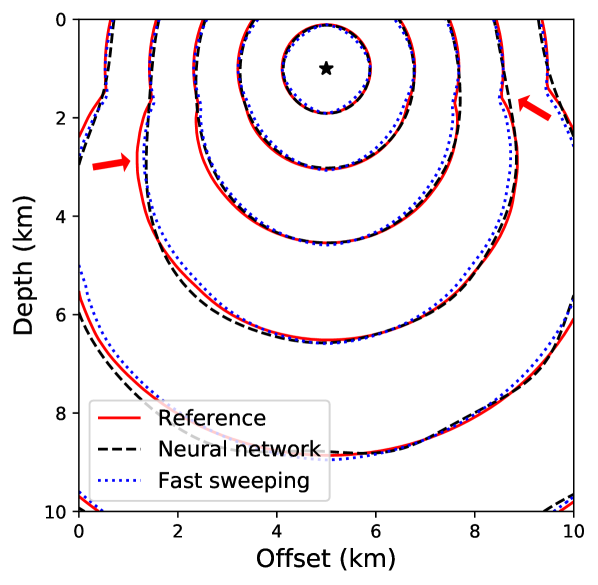

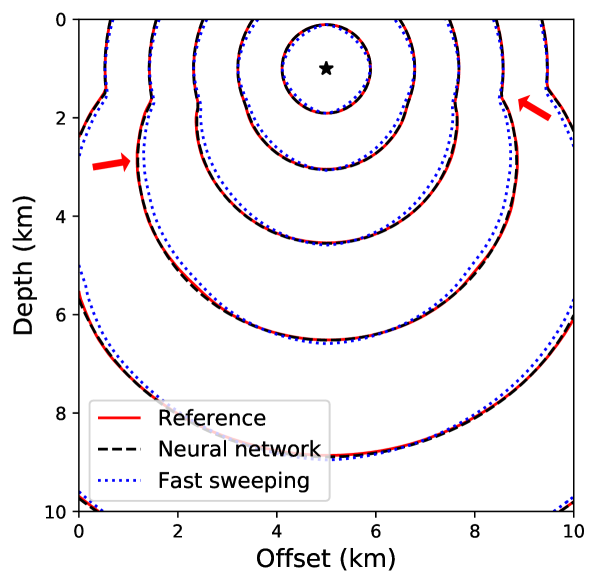

Figure 9 compares absolute traveltime errors computed using the neural network and the first-order fast sweeping method using the iterative solver of Waheed et al. [33]. The reference solution is obtained using a high-order fast sweeping method on a finer grid. We observe that, despite using transfer learning, the accuracy of the neural network solution is considerably better than the fast sweeping method. We confirm this observation visually by comparing the corresponding traveltime contours in Figure 10. One can also observe the effect of the additional anisotropy parameters and the tilt angle on the traveltime contours here. By comparing the shapes of the contours in Figure 6 and 10, it is obvious that the wave propagation speed varies with the direction of propagation. A faster propagation is observed orthogonal to the symmetry direction, given by the tilt angle, compared to the propagation along the symmetry axis.

It is worth noting that while the complexity of the fast sweeping solvers and their computational cost increases dramatically when switching from an isotropic to a TTI model, for the neural network both cases require similar complexity and computational cost. Therefore, the proposed method is particularly suited to model complex physics involving media with anisotropy, attenuation, etc.

One of the main challenges in seismic imaging and inversion is the need for repeated traveltime computations for thousands of source locations and multiple updated velocity models. Unfortunately, conventional techniques do not allow the transfer of information from one solution to the next and, therefore, the same amount of computational effort is needed for even small perturbations in the source location and/or the velocity model. We noted above how transfer learning can be used to speed up convergence for a new velocity model and source position. This could be further extended by adding source location as input to the network and training a surrogate model.

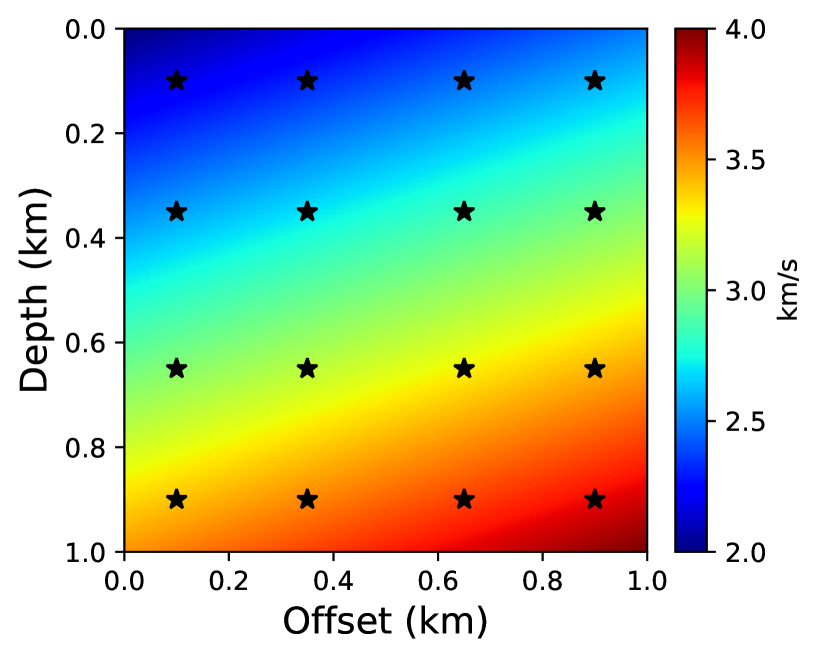

To do so, we train a neural network on solutions computed for 16 sources located at regular intervals in the considered TTI model as shown in Figure 11. Through the training process, the network learns the mapping between a given source location and the corresponding traveltime field. Once the surrogate model is trained, traveltime fields for additional source locations can be computed instantly by using a single evaluation of the trained network. This is similar to obtaining an analytic solver as no further training is needed for computing traveltimes corresponding to new source locations. This feature is particularly advantageous for large 3D models that need thousands of such computations.

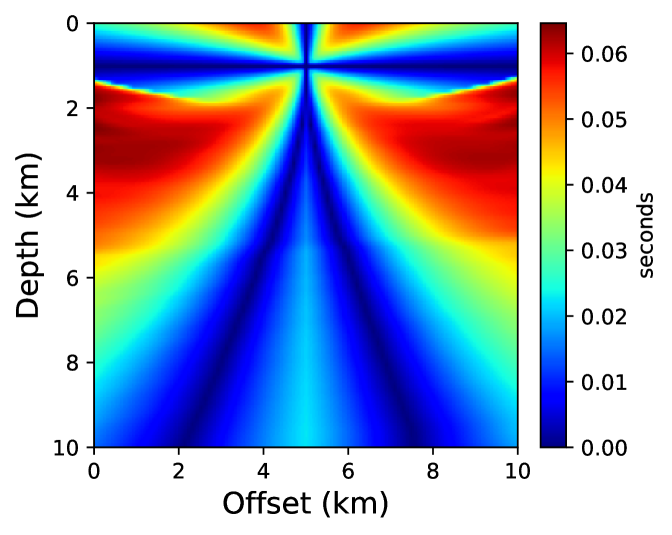

After training the surrogate model, we test its performance by computing the traveltime field corresponding to a randomly chosen source location. Figure 12 compares the absolute traveltime errors for the solution predicted by the surrogate model and the fast sweeping TTI solver. We observe that even without any additional training for this new source position, we obtain remarkably high accuracy compared to the fast sweeping method. This is also confirmed by visually comparing the corresponding traveltime contours in Figure 13.

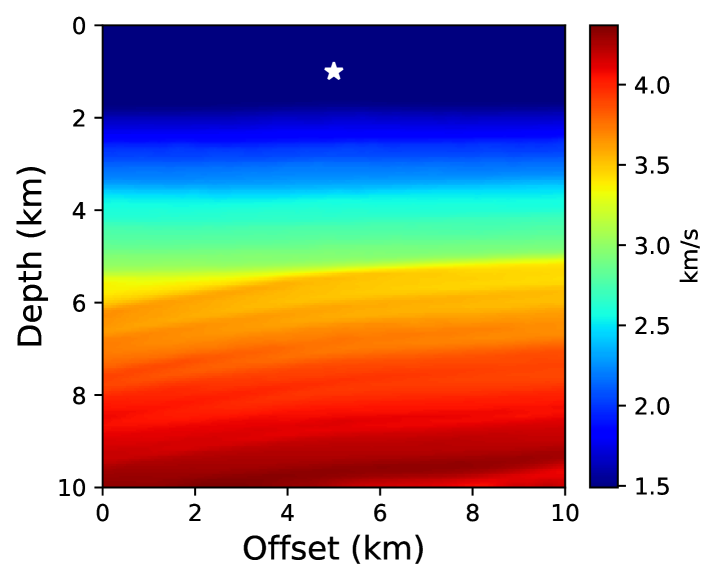

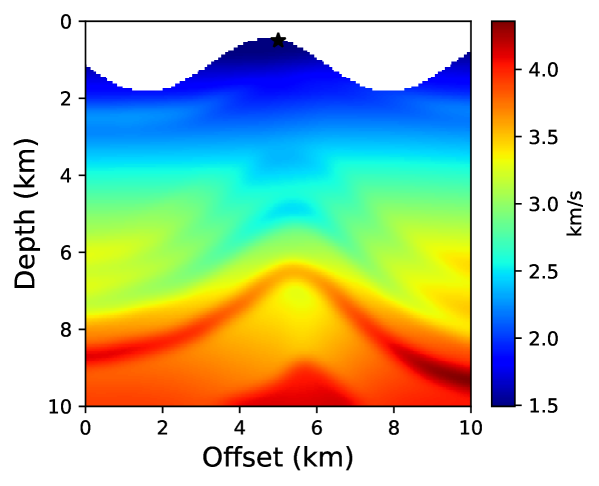

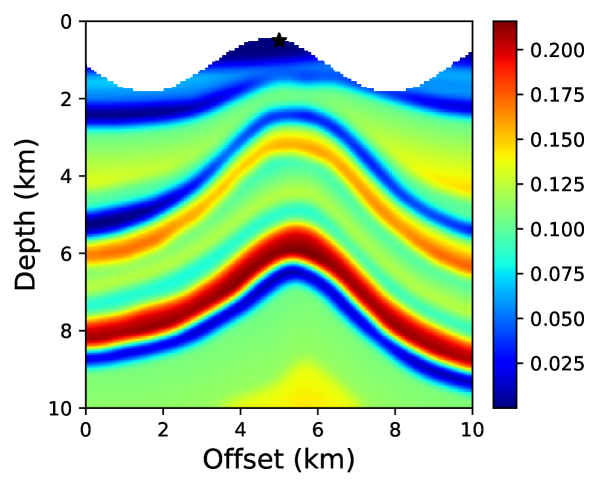

Example 3: VTI SEAM model

Next, we test the performance of the proposed method on a portion of the VTI SEAM model, shown in Figure 14. The model parameters are extracted from the 3D SEG Advanced Modeling (SEAM) Phase I subsalt earth model [63]. This is a particularly interesting example due to sharper variations in the velocity model and the anisotropy parameters. The model is discretized on a 101101 grid with a grid spacing of 100 m along both axes. Based on our recent efforts in using the neural network solver [47, 44], we observe that the convergence of the neural network approach slows down considerably in the presence of sharp variations in the velocity model. We have already seen above that using a pre-trained neural network yields faster convergence by allowing the use of the second-order optimization method (L-BFGS-B) directly. Therefore, in this example, we use the pre-trained network obtained from the TTI eikonal solver in example 2.

For further speedup, we propose a two-stage training scheme to obtain an accurate traveltime solution. In the first stage, when the neural network is learning a smooth representation of the underlying function, we use only a small percentage of grid points for training. In this case, we use only 1% of the total grid points chosen randomly for the first 200 epochs and then switch to using 50% of the total grid points in stage 2 for another 1000 epochs to update the learned function in better approximating sharp features in the resulting traveltime field. Again, since we start training with a pre-trained network, we use the L-BFGS-B optimizer for faster convergence.

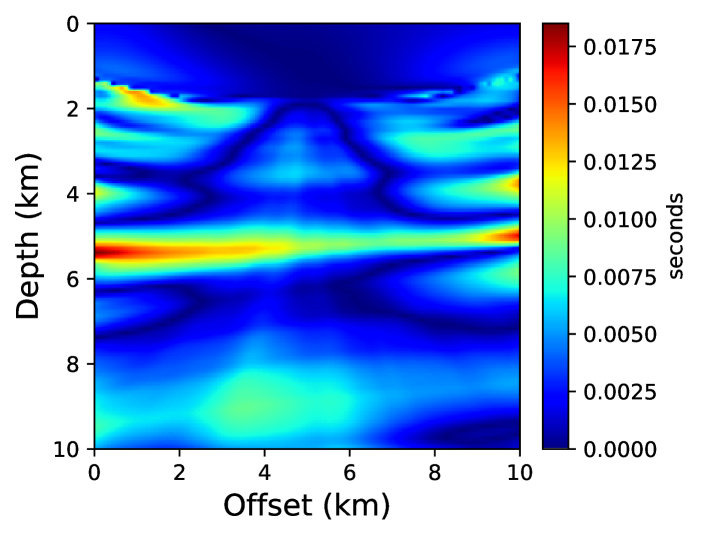

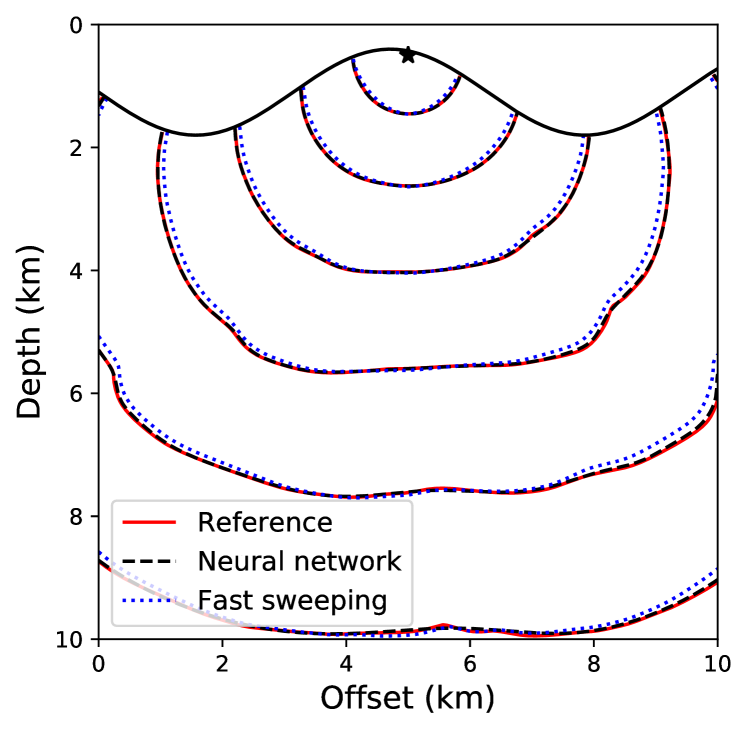

Figure 15 shows traveltime contours for a point-source located at (5 km, 1 km) for the reference solution, the neural network solution, and the first-order fast sweeping solution. In Figure 15, we show the neural network solution at the end of stage 1. It can be seen that the solution is quite smooth and misses sharp features visible in the reference solution. In Figure 15, we observe that the neural network solution captures these features as additional training points are added in the second stage of training. Therefore, using a small number of training points in stage 1 reduces the training cost without compromising on solution accuracy. By comparing the absolute traveltime errors in Figure 16, we observe that the neural network solution after stage 2 is considerably more accurate than the first-order fast sweeping method, even for a realistic VTI model.

Example 4: BP TTI model

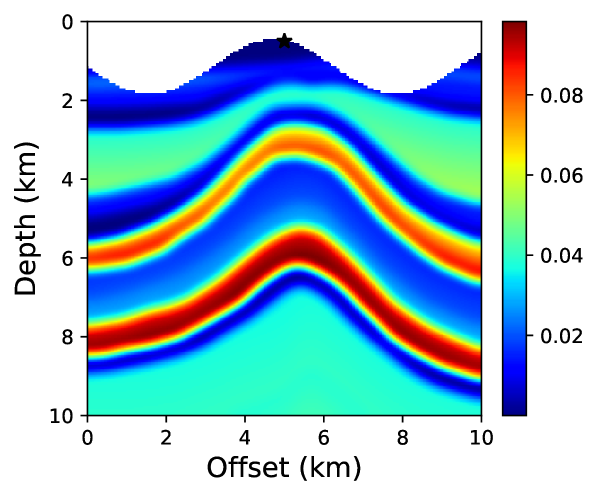

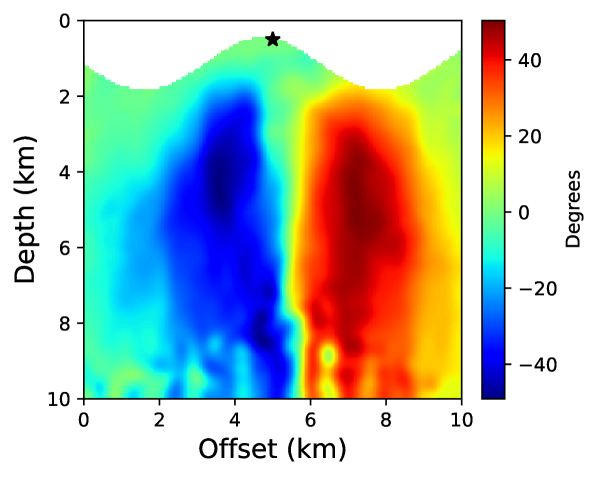

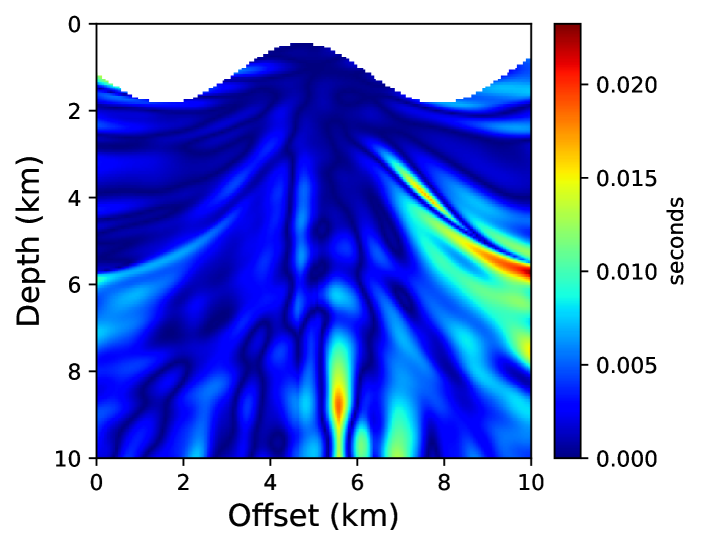

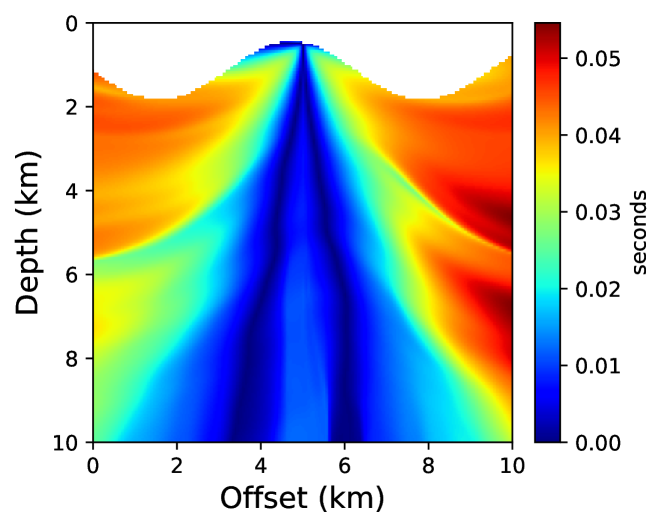

We have already seen how the neural network based approach is flexible in incorporating complex physics compared to conventional techniques. In this example, we will see how incorporating irregular topography is straightforward using the proposed approach. The free-surface encountered in land seismic surveys is often non-flat and requires taking this into account for accurate traveltime computation. One approach to tackle this is to transform from Cartesian to curvilinear coordinate system to mathematically flatten the free-surface and solve the resulting topography-dependent eikonal equation [37]. This adds additional computational cost and may result in instabilities when topography varies sharply. On the contrary, the neural network approach outlined here is mesh-free and doesn’t require any modification to the algorithm. We demonstrate this through a test on a portion of the BP TTI model, shown in Figure 17. The model was developed by Shah [64] and publicly provided courtesy of the BP Exploration Operating Company. The considered portion of the model is discretized on a 161161 grid using a grid spacing of 6.25 m along both axes. Points above the considered topography layer are then removed from the model.

For a point-source located at (5 km, 0.5 km), we compare the neural network and fast sweeping traveltime solutions. For training the neural network, we take into account about 50% of the grid points below the topography and once the network is trained, we evaluate the solution on the regular grid points that fall below the topography. We start training using pre-trained parameters from example 2 and train for 10,000 epochs using an L-BFGS-B optimizer. Figure 18 shows absolute traveltime errors for the two cases, indicating that the neural network solution is again considerably more accurate than fast sweeping. We confirm this observation visually through traveltime contours plotted in Figure 19.

Example 5: 3D TTI model

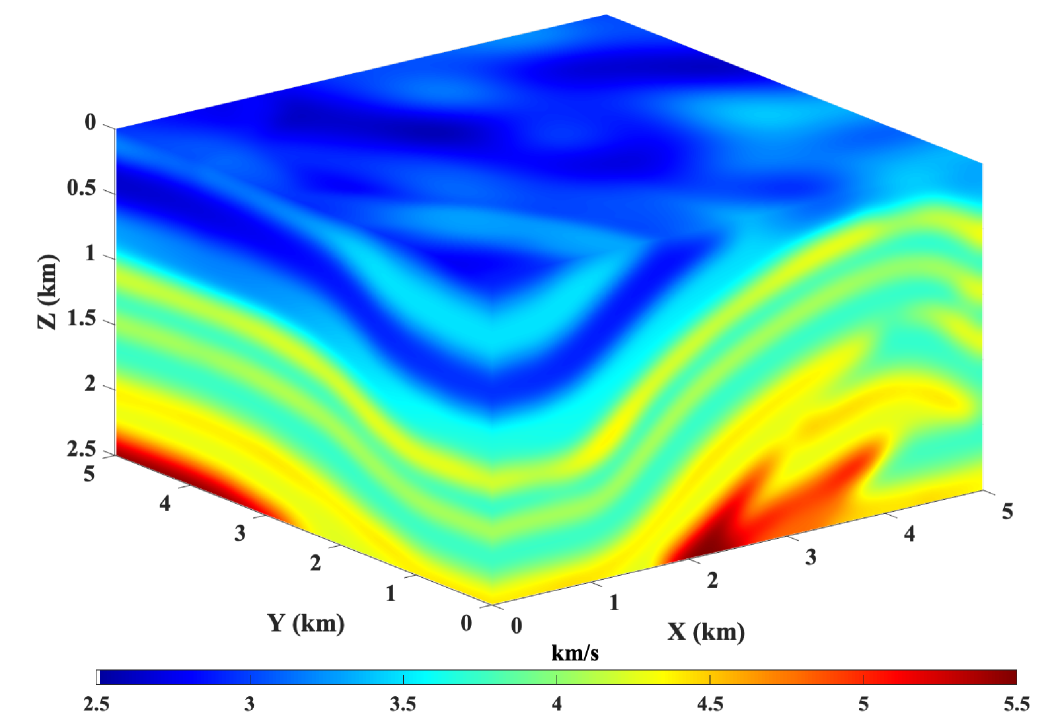

Finally, we show an example of extending the proposed method to a 3D TTI model. The model for the velocity parameter is shown in Figure 20. A homogeneous model is used for the anisotropy parameters with =0.2 and = 0.1. We also consider a homogeneous tilt angle of 30∘. The model is discretized on a 10110151 grid with a grid spacing of 50 m along each axis. The workflow for obtaining the neural network solution is essentially the same. In this case, the neural network takes three input parameters corresponding to the spatial axes and outputs the corresponding unknown traveltime field . Similar to before, the neural network output is multiplied by the known traveltime factor to obtain the final traveltime solution.

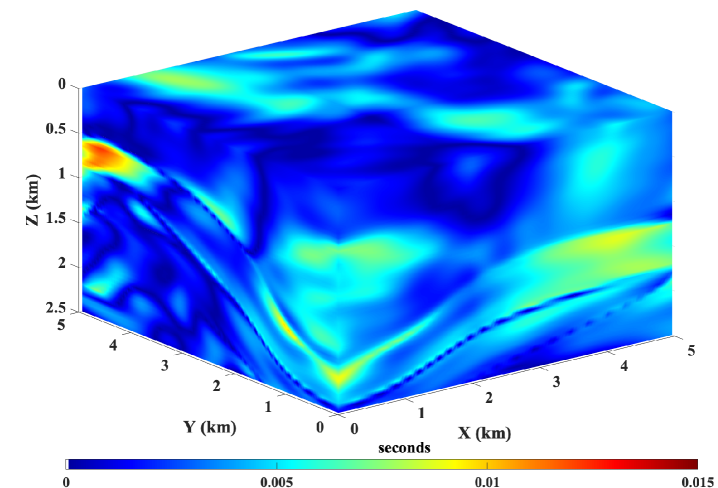

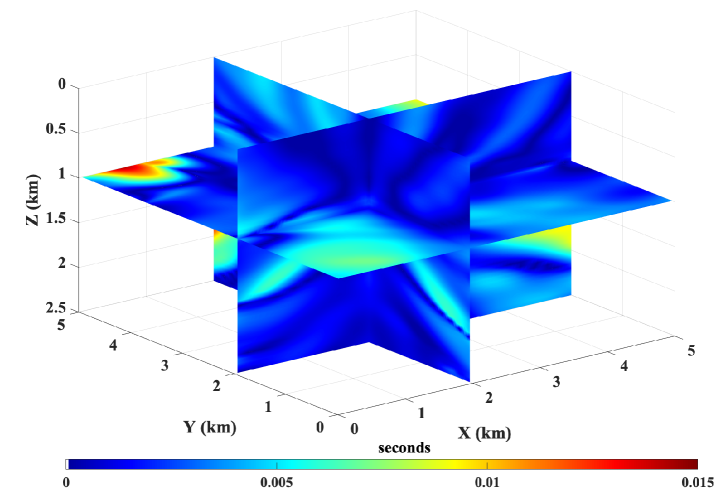

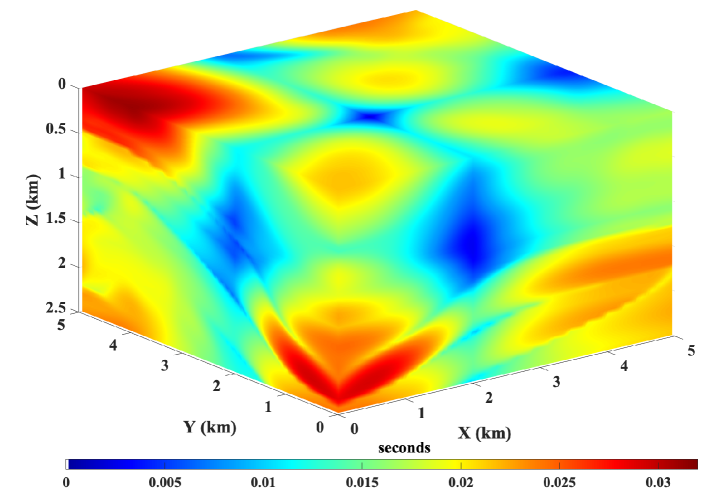

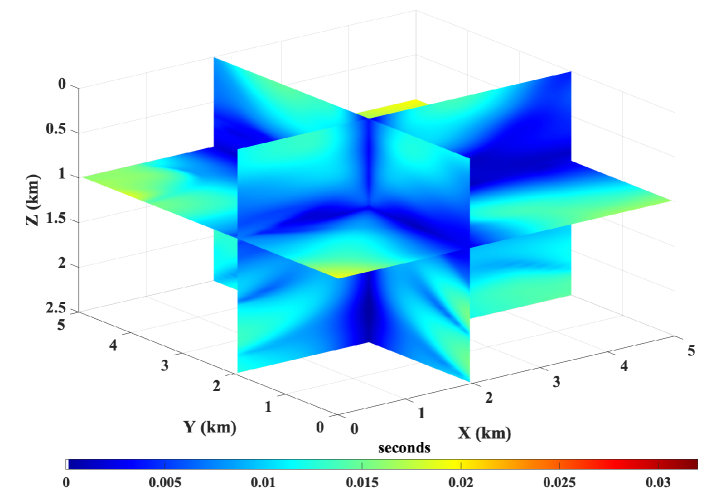

Starting with a pre-trained neural network on a smoothly varying TTI model, we train the network using 50% of the total grid points chosen randomly. We use 20,000 L-BFGS-B epochs during the training process. For a point-source located at =(2 km, 2 km, 1 km), we compare the accuracy of the neural network solution and the first-order fast sweeping solution in Figure 21. We observe that the proposed method is capable of computing accurate traveltimes for 3D TTI models as well without requiring any major alteration to the underlying algorithm.

4 Discussion and conclusions

We proposed a neural network approach to computing first-arrival traveltimes based on the framework of physics-informed neural networks. By leveraging the capabilities of neural networks as universal function approximators, we define a loss function to minimize the residual of the governing eikonal equation at a chosen set of training points. This is achieved with a simple feed-forward neural network using automatic differentiation.

We demonstrated the flexibility of the proposed framework in incorporating anisotropy in the model simply by updating the loss function of the neural network according to the underlying PDE. Since the method is mesh-free, we also saw how easy it is to incorporate non-flat topography into the solver compared to conventional methods. Another attractive feature, due to this mesh-free nature of the algorithm, is that sources and receivers do not have to lie on a regular grid as required by conventional finite-difference techniques. We also showed that by using machine learning techniques like transfer learning and surrogate modeling, we could transfer information gained by solving one problem to the next – a key feature missing in our conventional numerical algorithms. This would be key in achieving computational efficiency beyond conventional methods on models of practical interest.

Furthermore, the neural network based eikonal solver uses Tensorflow at the backend, which allows for easy deployment of computations across a variety of platforms (CPUs, GPUs) and architectures (desktops, clusters). On the contrary, significant effort is needed in adapting conventional algorithms to benefit from different computational platforms or architectures.

In short, the approach tackles many problems associated with obtaining fast and accurate traveltimes, that required decades of research, in a holistic manner. In fact, it opens new possibilities in solving complex forms of the eikonal equation that have remained unsolved by conventional algorithms or solved through some approximation techniques at best. Our recent tests have demonstrated success in solving such equations using neural networks without approximations. This includes solutions to the qSV eikonal equation for anisotropic media [65] and the attenuating VTI eikonal equation [66].

It is also worth emphasizing that the actual computational advantage of the proposed method, compared to conventional numerical solvers, depends on many factors including the network architecture, optimization hyper-parameters, and sampling techniques. If the initialization of the network and the optimizer learning rate are chosen carefully, the training can be completed quite efficiently. Furthermore, the activation function used, the adaptive weighting of the loss function terms, and the availability of second-order optimization techniques can accelerate the training significantly. Therefore, a detailed study is needed to quantify the computational gains afforded by the proposed neural network-based solver compared to conventional algorithms by considering the afore-mentioned factors. A rudimentary analysis performed in [44] indicates that once the surrogate model is obtained, the PINN eikonal solver is computationally faster by more than an order of magnitude than the fast sweeping method. Since the computational complexity of solving the anisotropic eikonal equation using conventional methods increases dramatically compared to the isotropic case [33], the proposed framework is computationally more attractive for traveltime modeling in anisotropic media.

Nevertheless, there are a few challenges associated with the method that requires further research. Chief among them is the slow convergence of the solution in the presence of sharp heterogeneity in the velocity and/or anisotropy models. We proposed a two-stage optimization process in this chapter that alleviates part of the problem by using only a small fraction of the training points during the initial training phase. Since at this stage, the network is learning a smooth representation of the underlying function, we could save some computational cost by using a small number of training points initially. We also used a locally adaptive activation function that has been shown to achieve faster convergence. Other possible solutions may include an adaptive sampling of the velocity model by using denser sampling of training points around parts of the model with large velocity gradients. Another challenge concerns the optimal choice of the neural networks’ hyper-parameters. In this study, we alleviated the problem by choosing them through some quick initial tests and keeping them fixed for all the test examples. Recent advances in the field of meta-learning may, potentially, enable automated selection of these parameters in the future.

References

- Crandall and Lions [1983] M. G. Crandall, P.-L. Lions, Viscosity solutions of Hamilton-Jacobi equations, Transactions of the American mathematical society 277 (1983) 1–42.

- Alvino et al. [2007] C. Alvino, G. Unal, G. Slabaugh, B. Peny, T. Fang, Efficient segmentation based on eikonal and diffusion equations, International Journal of Computer Mathematics 84 (2007) 1309–1324.

- Garrido et al. [2016] S. Garrido, D. Álvarez, L. Moreno, Path planning for mars rovers using the fast marching method, in: Robot 2015: Second Iberian Robotics Conference, Springer, 2016, pp. 93–105.

- Raviv et al. [2011] D. Raviv, A. M. Bronstein, M. M. Bronstein, R. Kimmel, N. Sochen, Affine-invariant geodesic geometry of deformable 3D shapes, Computers & Graphics 35 (2011) 692–697.

- Helmsen et al. [1996] J. J. Helmsen, E. G. Puckett, P. Colella, M. Dorr, Two new methods for simulating photolithography development in 3D, in: Optical Microlithography IX, volume 2726, International Society for Optics and Photonics, 1996, pp. 253–261.

- Lawton [1989] D. C. Lawton, Computation of refraction static corrections using first-break traveltime differences, Geophysics 54 (1989) 1289–1296.

- Hole and Zelt [1995] J. Hole, B. Zelt, 3-D finite-difference reflection traveltimes, Geophysical Journal International 121 (1995) 427–434.

- Taillandier et al. [2009] C. Taillandier, M. Noble, H. Chauris, H. Calandra, First-arrival traveltime tomography based on the adjoint-state method, Geophysics 74 (2009) WCB1–WCB10.

- Grechka et al. [2015] V. Grechka, A. De La Pena, E. Schisselé-Rebel, E. Auger, P.-F. Roux, Relative location of microseismicity, Geophysics 80 (2015) WC1–WC9.

- Lambare et al. [2003] G. Lambare, S. Operto, P. Podvin, P. Thierry, 3D ray+ born migration/inversion—Part 1: Theory, Geophysics 68 (2003) 1348–1356.

- Cerveny [2001] V. Cerveny, Seismic ray theory, Cambridge University Press, 2001.

- Vidale [1990] J. E. Vidale, Finite-difference calculation of traveltimes in three dimensions, Geophysics 55 (1990) 521–526.

- Vidale [1988] J. Vidale, Finite-difference calculation of travel times, Bulletin of the Seismological Society of America 78 (1988) 2062–2076.

- Dellinger [1991] J. Dellinger, Anisotropic finite-difference traveltimes, in: SEG Technical Program Expanded Abstracts 1991, Society of Exploration Geophysicists, 1991, pp. 1530–1533.

- Dellinger and Symes [1997] J. Dellinger, W. Symes, Anisotropic finite-difference traveltimes using a Hamilton-Jacobi solver, in: SEG Technical Program Expanded Abstracts 1997, Society of Exploration Geophysicists, 1997, pp. 1786–1789.

- Kim and Cook [1999] S. Kim, R. Cook, 3-D traveltime computation using second-order ENO scheme, Geophysics 64 (1999) 1867–1876.

- Podvin and Lecomte [1991] P. Podvin, I. Lecomte, Finite difference computation of traveltimes in very contrasted velocity models: a massively parallel approach and its associated tools, Geophysical Journal International 105 (1991) 271–284.

- Nichols [1996] D. E. Nichols, Maximum energy traveltimes calculated in the seismic frequency band, Geophysics 61 (1996) 253–263.

- Wang et al. [2006] Y. Wang, T. Nemeth, R. T. Langan, An expanding-wavefront method for solving the eikonal equations in general anisotropic media, Geophysics 71 (2006) T129–T135.

- Sethian and Popovici [1999] J. A. Sethian, A. M. Popovici, 3-D traveltime computation using the fast marching method, Geophysics 64 (1999) 516–523.

- Rickett and Fomel [1999] J. Rickett, S. Fomel, A second-order fast marching eikonal solver, Stanford Exploration Project Report 100 (1999) 287–293.

- Alkhalifah and Fomel [2001] T. Alkhalifah, S. Fomel, Implementing the fast marching eikonal solver: spherical versus cartesian coordinates, Geophysical Prospecting 49 (2001) 165–178.

- Popovici and Sethian [2002] A. M. Popovici, J. A. Sethian, 3-D imaging using higher order fast marching traveltimes, Geophysics 67 (2002) 604–609.

- Sethian and Vladimirsky [2003] J. A. Sethian, A. Vladimirsky, Ordered upwind methods for static Hamilton–Jacobi equations: Theory and algorithms, SIAM Journal on Numerical Analysis 41 (2003) 325–363.

- Cristiani [2009] E. Cristiani, A fast marching method for Hamilton-Jacobi equations modeling monotone front propagations, Journal of Scientific Computing 39 (2009) 189–205.

- bin Waheed et al. [2015] U. bin Waheed, T. Alkhalifah, H. Wang, Efficient traveltime solutions of the acoustic TI eikonal equation, Journal of Computational Physics 282 (2015) 62–76.

- Breuß et al. [2011] M. Breuß, E. Cristiani, P. Gwosdek, O. Vogel, An adaptive domain-decomposition technique for parallelization of the fast marching method, Applied Mathematics and Computation 218 (2011) 32–44.

- Monsegny et al. [2018] J. Monsegny, J. Monsalve, K. León, M. Duarte, S. Becerra, W. Agudelo, H. Arguello, Fast marching method in seismic ray tracing on parallel GPU devices, in: Latin American High Performance Computing Conference, Springer, 2018, pp. 101–111.

- Zhao [2005] H. Zhao, A fast sweeping method for eikonal equations, Mathematics of computation 74 (2005) 603–627.

- Zhang et al. [2006] Y.-T. Zhang, H.-K. Zhao, J. Qian, High order fast sweeping methods for static hamilton–jacobi equations, Journal of Scientific Computing 29 (2006) 25–56.

- Fomel et al. [2009] S. Fomel, S. Luo, H. Zhao, Fast sweeping method for the factored eikonal equation, Journal of Computational Physics 228 (2009) 6440–6455.

- Luo and Qian [2012] S. Luo, J. Qian, Fast sweeping methods for factored anisotropic eikonal equations: multiplicative and additive factors, Journal of Scientific Computing 52 (2012) 360–382.

- Waheed et al. [2015] U. B. Waheed, C. E. Yarman, G. Flagg, An iterative, fast-sweeping-based eikonal solver for 3D tilted anisotropic media, Geophysics 80 (2015) C49–C58.

- Han et al. [2017] S. Han, W. Zhang, J. Zhang, Calculating qP-wave traveltimes in 2-D TTI media by high-order fast sweeping methods with a numerical quartic equation solver, Geophysical Journal International 210 (2017) 1560–1569.

- Le Bouteiller et al. [2019] P. Le Bouteiller, M. Benjemaa, L. Métivier, J. Virieux, A discontinuous galerkin fast-sweeping eikonal solver for fast and accurate traveltime computation in 3D tilted anisotropic media, Geophysics 84 (2019) C107–C118.

- Hao et al. [2018] Q. Hao, U. Waheed, T. Alkhalifah, A fast sweeping scheme for P-wave traveltimes in attenuating VTI media, in: 80th EAGE Conference and Exhibition 2018, volume 2018, European Association of Geoscientists & Engineers, 2018, pp. 1–5.

- Lan and Zhang [2013] H. Lan, Z. Zhang, A high-order fast-sweeping scheme for calculating first-arrival travel times with an irregular surface, Bulletin of the Seismological Society of America 103 (2013) 2070–2082.

- Zhao [2007] H. Zhao, Parallel implementations of the fast sweeping method, Journal of Computational Mathematics (2007) 421–429.

- Detrixhe et al. [2013] M. Detrixhe, F. Gibou, C. Min, A parallel fast sweeping method for the eikonal equation, Journal of Computational Physics 237 (2013) 46–55.

- Gómez et al. [2019] J. V. Gómez, D. Álvarez, S. Garrido, L. Moreno, Fast methods for eikonal equations: an experimental survey, IEEE Access 7 (2019) 39005–39029.

- Jordan and Mitchell [2015] M. I. Jordan, T. M. Mitchell, Machine learning: Trends, perspectives, and prospects, Science 349 (2015) 255–260.

- Lagaris et al. [1998] I. E. Lagaris, A. Likas, D. I. Fotiadis, Artificial neural networks for solving ordinary and partial differential equations, IEEE transactions on neural networks 9 (1998) 987–1000.

- Raissi et al. [2019] M. Raissi, P. Perdikaris, G. E. Karniadakis, Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations, Journal of Computational Physics 378 (2019) 686–707.

- Waheed et al. [2020] U. Waheed, E. Haghighat, T. Alkhalifah, C. Song, Q. Hao, Eikonal solution using physics-informed neural networks, arXiv preprint arXiv:2007.08330 (2020).

- Smith et al. [2020] J. D. Smith, K. Azizzadenesheli, Z. E. Ross, Eikonet: Solving the eikonal equation with deep neural networks, IEEE Transactions on Geoscience and Remote Sensing (2020).

- Moseley et al. [2020] B. Moseley, A. Markham, T. Nissen-Meyer, Solving the wave equation with physics-informed deep learning, arXiv preprint arXiv:2006.11894 (2020).

- Song et al. [2021] C. Song, T. Alkhalifah, U. Waheed, Solving the frequency-domain acoustic vti wave equation using physics-informed neural networks, Geophysical Journal International (2021).

- Waheed et al. [2021] U. b. Waheed, T. Alkhalifah, E. Haghighat, C. Song, J. Virieux, PINNtomo: Seismic tomography using physics-informed neural networks, arXiv preprint arXiv:2104.01588 (2021).

- Waheed and Alkhalifah [2017] U. Waheed, T. Alkhalifah, A fast sweeping algorithm for accurate solution of the tilted transversely isotropic eikonal equation using factorization, Geophysics 82 (2017) WB1–WB8.

- Thomsen [1986] L. Thomsen, Weak elastic anisotropy, Geophysics 51 (1986) 1954–1966.

- Cybenko [1989] G. Cybenko, Approximation by superpositions of a sigmoidal function, Mathematics of control, signals and systems 2 (1989) 303–314.

- Hornik et al. [1989] K. Hornik, M. Stinchcombe, H. White, Multilayer feedforward networks are universal approximators, Neural networks 2 (1989) 359–366.

- Lu et al. [2017] Z. Lu, H. Pu, F. Wang, Z. Hu, L. Wang, The expressive power of neural networks: A view from the width, in: Advances in neural information processing systems, 2017, pp. 6231–6239.

- Baydin et al. [2017] A. G. Baydin, B. A. Pearlmutter, A. A. Radul, J. M. Siskind, Automatic differentiation in machine learning: a survey, The Journal of Machine Learning Research 18 (2017) 5595–5637.

- Abadi et al. [2015] M. Abadi, A. Agarwal, P. Barham, E. Brevdo, Z. Chen, C. Citro, G. S. Corrado, A. Davis, J. Dean, M. Devin, S. Ghemawat, I. Goodfellow, A. Harp, G. Irving, M. Isard, Y. Jia, R. Jozefowicz, L. Kaiser, M. Kudlur, J. Levenberg, D. Mané, R. Monga, S. Moore, D. Murray, C. Olah, M. Schuster, J. Shlens, B. Steiner, I. Sutskever, K. Talwar, P. Tucker, V. Vanhoucke, V. Vasudevan, F. Viégas, O. Vinyals, P. Warden, M. Wattenberg, M. Wicke, Y. Yu, X. Zheng, TensorFlow: Large-scale machine learning on heterogeneous systems, 2015. URL: https://www.tensorflow.org/, software available from tensorflow.org.

- Paszke et al. [2017] A. Paszke, S. Gross, S. Chintala, G. Chanan, E. Yang, Z. DeVito, Z. Lin, A. Desmaison, L. Antiga, A. Lerer, Automatic differentiation in PyTorch, in: Proceedings of Neural Information Processing Systems, 2017.

- Alkhalifah [2000] T. Alkhalifah, An acoustic wave equation for anisotropic media, Geophysics 65 (2000) 1239–1250.

- Jagtap et al. [2020] A. D. Jagtap, K. Kawaguchi, G. Em Karniadakis, Locally adaptive activation functions with slope recovery for deep and physics-informed neural networks, Proceedings of the Royal Society A 476 (2020) 20200334.

- Haghighat and Juanes [2021] E. Haghighat, R. Juanes, Sciann: A Keras/Tensorflow wrapper for scientific computations and physics-informed deep learning using artificial neural networks, Computer Methods in Applied Mechanics and Engineering 373 (2021) 113552.

- Slotnick [1959] M. Slotnick, Lessons in seismic computing, Soc. Expl. Geophys 268 (1959).

- Kingma and Ba [2014] D. P. Kingma, J. Ba, Adam: A method for stochastic optimization, arXiv preprint arXiv:1412.6980 (2014).

- Zhu et al. [1997] C. Zhu, R. H. Byrd, P. Lu, J. Nocedal, Algorithm 778: L-BFGS-B: Fortran subroutines for large-scale bound-constrained optimization, ACM Transactions on Mathematical Software (TOMS) 23 (1997) 550–560.

- Fehler and Keliher [2011] M. Fehler, P. J. Keliher, SEAM Phase 1: Challenges of subsalt imaging in tertiary basins, with emphasis on deepwater Gulf of Mexico, Society of Exploration Geophysicists, 2011.

- Shah [2007] H. Shah, The 2007 BP anisotropic velocity-analysis benchmark, in: 70th Anuual EAGE meeting, workshop, 2007.

- Waheed et al. [2021] U. Waheed, T. Alkhalifah, B. Li, E. Haghighat, A. Stovas, J. Virieux, Traveltime computation for qSV waves in TI media using physics-informed neural networks, in: 82nd EAGE Conference and Exhibition, Submitted, 2021.

- Taufik et al. [2021] M. H. Taufik, U. Waheed, Q. Hao, T. Alkhalifah, The eikonal solution for attenuating VTI media using physics-informed neural networks, in: 82nd EAGE Conference and Exhibition, Submitted, 2021.