A Hybrid SFANC-FxNLMS Algorithm for Active Noise Control based on Deep Learning

Abstract

The selective fixed-filter active noise control (SFANC) method, which selects the best pre-trained control filter for each type of noise, achieves a fast response time. However, it may suffer from large steady-state errors because of inaccurate filter selection and a lack of adaptability. In comparison, the filtered-X normalized least-mean-square (FxNLMS) algorithm obtains lower steady-state errors through adaptive optimization, but its slow convergence degrades the attenuation of dynamic noise. Therefore, this paper proposes a hybrid SFANC-FxNLMS approach that overcomes the adaptive algorithm’s slow convergence and provides a better noise reduction level than the SFANC method. A lightweight one-dimensional convolutional neural network (1D CNN) is designed to automatically select the most suitable pre-trained control filter for each frame of the primary noise. Meanwhile, the FxNLMS algorithm continues to update the coefficients of the chosen pre-trained control filter at the sampling rate. Owing to the effective combination of the two algorithms, experimental results show that the hybrid SFANC-FxNLMS algorithm achieves a rapid response time, a low noise reduction error, and a high degree of robustness.

Index Terms:

Active noise control, selective fixed-filter ANC, deep learning, hearables.

I Introduction

Active noise control (ANC) generates an anti-noise of equal amplitude and opposite phase to suppress unwanted noise [1, 2, 3, 4, 5, 6, 7]. Owing to its compact size and high efficiency in attenuating low-frequency noise, ANC has been widely used in industrial operations and consumer products such as headphones [8, 9, 10, 11, 12, 13, 14]. Traditional ANC systems typically employ adaptive algorithms to attenuate noise [15, 16, 17]. The filtered-X normalized least-mean-square (FxNLMS) algorithm, an adaptive ANC algorithm, achieves low noise reduction errors through adaptive optimization and does not require prior knowledge of the input data [18, 19, 20]. However, because least mean square (LMS) based algorithms inherently converge slowly and track poorly [21], FxNLMS is less capable of dealing with rapidly varying or non-stationary noise. Its slow response to such noise may affect customers’ perception of the noise reduction effect [22].

In industrial applications and mature ANC products, fixed-filter ANC methods [23, 24] are adopted to tackle the problems of adaptive algorithms: the control filter coefficients are fixed rather than adaptively updated, which avoids slow convergence and instability. However, the coefficients of a fixed filter are pre-trained for a specific noise type, so the noise reduction performance degrades for other types of noise [25]. Therefore, to select flexibly among different pre-trained control filters in response to different noise types, Shi et al. [26] proposed a selective fixed-filter active noise control (SFANC) method based on frequency band matching. Nevertheless, several critical parameters of the method can only be determined through trial and error. Also, its filter-selection module consumes extra computation on the real-time processor [27].

Therefore, automatic learning of the SFANC algorithm’s critical parameters is a significant issue whose solution would promote its use in real-world scenarios and industrial products. Deep learning techniques, particularly convolutional neural networks (CNNs), have achieved significant success in classification [28, 29, 30, 31] and appear to be a promising approach for improving SFANC. By replacing the filter-selection module with a CNN model, the SFANC algorithm can automatically learn its parameters from noise datasets and select the best control filter for different noise types without extra human effort [32]. Additionally, a CNN model implemented on a co-processor decouples the computational load from the real-time noise controller [32]. Although its superiority in response speed has been demonstrated, the CNN-based SFANC algorithm is limited by occasional wrong noise classifications and the lack of adaptive optimization, which may result in large steady-state errors.

As discussed above, it is difficult to obtain consistently satisfactory noise reduction performance for various noises using either SFANC or FxNLMS alone. The two algorithms can, however, be combined to overcome their individual limitations. Therefore, a hybrid SFANC-FxNLMS algorithm is proposed in this paper. In the proposed algorithm, a lightweight one-dimensional CNN (1D CNN) implemented on a co-processor automatically selects the most suitable control filter for different noise types. For each frame of the noise waveform, the control filter coefficients are decided by the 1D CNN and continue to be updated adaptively via the FxNLMS algorithm at the sampling rate. Simulation results show that the proposed algorithm not only achieves faster noise reduction responses and lower steady-state errors but also exhibits good tracking and robustness. Thus, it can be used for attenuating dynamic noises such as traffic noise and urban noise.

The remainder of this paper is organized as follows: Section II introduces the hybrid SFANC-FxNLMS algorithm in detail. Section III presents a series of numerical simulations to evaluate the effectiveness of the proposed algorithm. Finally, the conclusion is given in Section IV.

II Hybrid SFANC-FxNLMS Algorithm

The overall framework of the hybrid SFANC-FxNLMS algorithm is presented in Fig. 1. Although learning acoustic models directly from raw waveform data is challenging [33], the proposed ANC system takes the raw waveform as input rather than a frequency spectrogram.

II-A A concise explanation of SFANC

An ANC process can be abstracted as a first-order Markov chain [34, 35], as shown in Fig. 2, where represents the optimal control filter to deal with the disturbance . If we assume that the optimal control filter has discrete states as , the control filter selection process can be considered as determining the best control filter from a pre-trained filter set . Hence, the SFANC strategy can be summarized as follows:

| (1) |

where operator returns the input value for minimum output; , , and represent the linear convolution, the reference signal, and the impulse response of the secondary path, respectively. The reference signal is assumed to be the same as the primary noise.
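As a rough illustration of the selection rule in (1), the sketch below scores each pre-trained filter by the mean squared residual it leaves after the secondary path and returns the index of the smallest one. The function names, the toy secondary path, and the random filter bank are assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def residual_power(w, x, d, s):
    """Mean squared residual left by the fixed control filter w."""
    y = np.convolve(x, w)[: len(x)]       # control signal from the reference
    anti = np.convolve(y, s)[: len(x)]    # anti-noise after the secondary path
    return float(np.mean((d - anti) ** 2))

def select_filter(filter_bank, x, d, s):
    """Index of the pre-trained filter with the smallest residual power."""
    return int(np.argmin([residual_power(w, x, d, s) for w in filter_bank]))

# Toy data (all values hypothetical): the disturbance is built from filter 2,
# so the exhaustive search should return index 2.
rng = np.random.default_rng(0)
x = rng.standard_normal(2000)                         # reference signal
s = np.array([0.0, 0.9, 0.3])                         # toy secondary path
filter_bank = [0.3 * rng.standard_normal(8) for _ in range(4)]
d = np.convolve(np.convolve(x, filter_bank[2])[: len(x)], s)[: len(x)]

best = select_filter(filter_bank, x, d, s)
print(best)
```

Such an exhaustive search needs the disturbance and is only practical offline; the classifier formulation that follows aims to predict the same index from the reference signal alone.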

In practice, is typically regarded as the linear combination of . Thus, (1) is equivalent to

| (2) |

which refers to the process of selecting a control filter from the pre-trained filter set that maximizes its posterior probability in the presence of reference signal . Moreover, according to Bayes’ theorem [36], the posterior probability in (2) can be replaced with a conditional probability as

| (3) |

which predicts the most suitable control filter straight from the reference signal .

To achieve a satisfactory noise reduction for the SFANC algorithm, we should find a classifier model to approximate from the pre-recorded sampling set . The denotes the parameters of the classifier and can be obtained through maximum likelihood estimation (MLE) [37] as

| (4) |

Hence, we can utilize the deep learning approach to extract this classifier model from the training set .

II-B CNN-based SFANC algorithm

Motivated by the work [32], this paper develops a 1D CNN capable of dealing with noise waveforms end-to-end. The input of the network is the normalized noise waveform with a 1-second duration. The min-max operation is defined as:

| (5) |

where and obtain the maximum and minimum value of the input vector . It aims to rescale the input range into and retain the signal’s negative part that contains phase information.
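The normalization step can be sketched as follows. The exact form of (5) is not recoverable from the text here, so the code assumes the waveform is divided by the difference between its maximum and minimum, which preserves the sign of every sample; the function name `min_max_normalize` is hypothetical.

```python
import numpy as np

def min_max_normalize(x):
    """Scale a waveform by (max - min) without shifting it.

    Assumed form of the min-max operation: x / (max(x) - min(x)).
    Because no offset is subtracted, negative samples stay negative.
    """
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    if span == 0.0:               # constant frame: nothing to scale
        return np.zeros_like(x)
    return x / span

frame = np.array([-0.5, 0.25, 1.5, -1.0])
scaled = min_max_normalize(frame)
print(scaled)
```

Under this assumption, a frame that contains both positive and negative samples always lands inside a range symmetric around zero, whereas the more common `(x - min) / (max - min)` variant would discard the sign.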

II-B1 Pre-training control filters

The primary and secondary paths used in the training stage of the control filters are band-pass filters with a frequency range of 20 Hz to 7,980 Hz. Broadband noises with the frequency ranges shown in Fig. 3 are used to pre-train the control filters. The filtered reference least mean square (FxLMS) algorithm is adopted to obtain the optimal control filters for these broadband noises due to its low computational complexity [38]. Subsequently, these pre-trained control filters are saved in the control filter database.

II-B2 The Proposed 1D CNN

The detailed architecture of the 1D CNN is illustrated in Fig. 4. Every residual block in the network is composed of two convolutional layers followed by batch normalization [39] and ReLU non-linearity [40]. Note that a shortcut connection adds the input to the output in each residual block, since residual architectures have been shown to be easier to optimize [41]. Additionally, the network uses a broad receptive field (RF) in the first convolutional layer and narrow RFs in the remaining convolutional layers to fully exploit both global and local information.
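To make the residual block concrete, the following NumPy sketch runs the forward pass of one block. The kernel width, channel count, inference-mode batch normalization, and the placement of the final ReLU after the shortcut addition are all assumptions chosen for illustration; the actual layer configuration is the one given in Fig. 4.

```python
import numpy as np

def conv1d_same(x, kernels):
    """Cross-correlate x (C_in, T) with kernels (C_out, C_in, K), K odd, zero padded."""
    c_out, c_in, width = kernels.shape
    pad = width // 2
    xp = np.pad(x, ((0, 0), (pad, pad)))
    out = np.zeros((c_out, x.shape[1]))
    for o in range(c_out):
        for i in range(c_in):
            out[o] += np.correlate(xp[i], kernels[o, i], mode="valid")
    return out

def batch_norm(x, mean, var, gamma, beta, eps=1e-5):
    """Inference-mode batch normalization applied per channel."""
    scale = gamma / np.sqrt(var + eps)
    return (x - mean[:, None]) * scale[:, None] + beta[:, None]

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, k1, k2, bn1, bn2):
    """Two conv layers with batch norm and ReLU, plus a shortcut connection."""
    h = relu(batch_norm(conv1d_same(x, k1), *bn1))
    h = batch_norm(conv1d_same(h, k2), *bn2)
    return relu(x + h)            # shortcut: add the block input to its output

# Hypothetical sizes: 4 channels, 32 time steps, kernel width 3.
channels, length = 4, 32
rng = np.random.default_rng(0)
x = rng.standard_normal((channels, length))
k1 = 0.1 * rng.standard_normal((channels, channels, 3))
k2 = 0.1 * rng.standard_normal((channels, channels, 3))
identity_bn = (np.zeros(channels), np.ones(channels),
               np.ones(channels), np.zeros(channels))  # mean, var, gamma, beta
out = residual_block(x, k1, k2, identity_bn, identity_bn)
print(out.shape)
```

With all kernels set to zero the block reduces to `relu(x)`, which shows how the shortcut lets the input pass through when the convolutional branch contributes nothing.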

To train the 1D CNN model, we randomly generated broadband noise tracks with various frequency bands, amplitudes, and background noise levels. Each track has a duration of 1 second. These noise tracks are taken as primary noises to generate disturbances. The class label of a noise track is the index of the control filter that achieves the best noise reduction performance on the corresponding disturbance.
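One possible way to synthesize such tracks is to zero the FFT bins of white noise outside a randomly chosen band. The sketch below does this; the sampling rate, the band limits, and the amplitude and background-noise ranges are placeholder values assumed for illustration, and the helper names are hypothetical.

```python
import numpy as np

def band_limited_noise(fs, f_low, f_high, duration=1.0, rng=None):
    """White Gaussian noise restricted to [f_low, f_high] Hz by zeroing FFT bins."""
    rng = np.random.default_rng() if rng is None else rng
    n = int(round(fs * duration))
    spectrum = np.fft.rfft(rng.standard_normal(n))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectrum[(freqs < f_low) | (freqs > f_high)] = 0.0
    return np.fft.irfft(spectrum, n=n)

def random_track(fs, rng):
    """One 1-second track with a random band, amplitude, and background level."""
    f_low = rng.uniform(20.0, fs / 2 - 200.0)
    f_high = rng.uniform(f_low + 100.0, fs / 2)      # at least 100 Hz wide
    track = band_limited_noise(fs, f_low, f_high, rng=rng)
    track *= rng.uniform(0.1, 1.0) / np.max(np.abs(track))             # random peak amplitude
    track += rng.uniform(0.0, 0.05) * rng.standard_normal(track.size)  # background noise
    return track, (f_low, f_high)

fs = 16000                       # assumed sampling rate in Hz
rng = np.random.default_rng(0)
track, band = random_track(fs, rng)
clean = band_limited_noise(fs, 500.0, 2000.0, rng=rng)
print(track.shape, band)
```

Each generated track would then be labeled with the index of the pre-trained filter that attenuates its disturbance best, as described above.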

II-C The FxNLMS algorithm

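The FxNLMS algorithm is a conventional adaptive ANC algorithm [42], where the error signal $e(n)$ is obtained from

$$e(n) = d(n) - s(n) * \left[\mathbf{w}^{\mathrm{T}}(n)\,\mathbf{x}(n)\right], \tag{6}$$

where $\mathbf{w}(n)$, $(\cdot)^{\mathrm{T}}$, and $*$ represent the control filter, the transpose operation, and linear convolution, respectively, while $d(n)$, $\mathbf{x}(n)$, and $s(n)$ denote the disturbance, the reference signal vector, and the impulse response of the secondary path. The weight update equation of the control filter is given by

$$\mathbf{w}(n+1) = \mathbf{w}(n) + \frac{\mu\, e(n)\, \mathbf{x}'(n)}{\|\mathbf{x}'(n)\|_2^2 + \epsilon}, \qquad \mathbf{x}'(n) = \hat{s}(n) * \mathbf{x}(n), \tag{7}$$

in which $\mu$ denotes the step size and $\mathbf{x}'(n)$ is the filtered reference signal generated by passing the reference signal through the estimated secondary path $\hat{s}(n)$. Additionally, $\epsilon$ is a small constant that avoids division by zero in the denominator.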
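A minimal single-channel sketch of this sample-by-sample procedure is given below, assuming the textbook FxNLMS form: the error is the disturbance minus the control output filtered by the secondary path, and the weight step is the error times the filtered reference, divided by the filtered-reference energy plus a small constant. The toy paths, filter length, and step size are hypothetical and chosen only so that an exact solution exists.

```python
import numpy as np

def push(buf, value):
    """Shift a history buffer by one sample and store the newest value first."""
    buf[1:] = buf[:-1].copy()
    buf[0] = value

def fxnlms(x, d, s, s_hat, n_taps, mu, eps=1e-8, w0=None):
    """Run FxNLMS over the whole signal; return final weights and error signal."""
    w = np.zeros(n_taps) if w0 is None else np.array(w0, dtype=float)
    x_buf = np.zeros(n_taps)        # reference history
    fx_buf = np.zeros(n_taps)       # filtered-reference history
    xs_buf = np.zeros(len(s_hat))   # reference history feeding s_hat
    y_buf = np.zeros(len(s))        # control-output history feeding s
    e = np.zeros(len(x))
    for n in range(len(x)):
        push(x_buf, x[n])
        push(xs_buf, x[n])
        push(fx_buf, s_hat @ xs_buf)         # filtered reference sample
        push(y_buf, w @ x_buf)               # control filter output
        e[n] = d[n] - s @ y_buf              # residual error at the error microphone
        w = w + mu * e[n] * fx_buf / (fx_buf @ fx_buf + eps)   # normalized update
    return w, e

# Toy experiment (hypothetical values): d is built so that w_true cancels it exactly.
rng = np.random.default_rng(0)
x = rng.standard_normal(4000)
s = np.array([0.0, 0.9, 0.3])
w_true = 0.3 * rng.standard_normal(8)
d = np.convolve(np.convolve(x, w_true)[: len(x)], s)[: len(x)]

w, e = fxnlms(x, d, s, s_hat=s, n_taps=8, mu=0.1)
print(np.mean(e[:500] ** 2), np.mean(e[-500:] ** 2))
```

The optional `w0` argument lets the adaptation start from a pre-trained filter instead of zeros, which is the hook the hybrid scheme relies on.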

II-D Hybrid SFANC-FxNLMS Algorithm

As illustrated in Fig. 1, the primary noise is sampled at a rate of Hz, while each data frame is made up of samples ( second duration data). Throughout the control process, the co-processor (e.g., a mobile phone) utilizes the pre-trained 1D CNN to output the index of the most appropriate control filter for each frame and sends it to the real-time controller. When the received filter index differs from the current index, the real-time controller will swap the control filter. Simultaneously, the real-time controller continues to update the control filter’s coefficients via (7) at each sample. Notably, the co-processor operates at the frame rate, whereas the real-time controller performs at the sampling rate.

With the assistance of SFANC, the hybrid SFANC-FxNLMS algorithm responds faster to different noises. Meanwhile, the FxNLMS algorithm continues to update the control filter’s coefficients, which helps to achieve lower noise reduction errors.
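The interplay between the two rates can be sketched as a single loop in which a frame-level classifier occasionally swaps the filter while FxNLMS keeps adapting at every sample. In the sketch below, `classify` is a hypothetical stand-in for the trained 1D CNN (here it always returns the correct index), and the frame length, step size, and toy paths are assumed values.

```python
import numpy as np

def push(buf, value):
    """Shift a history buffer by one sample and store the newest value first."""
    buf[1:] = buf[:-1].copy()
    buf[0] = value

def hybrid_sfanc_fxnlms(x, d, s, s_hat, filter_bank, classify, frame_len, mu, eps=1e-8):
    """Frame-rate filter selection combined with sample-rate FxNLMS updates."""
    n_taps = len(filter_bank[0])
    w = np.zeros(n_taps)               # control filter before any selection
    current = None                     # index of the filter selected last
    x_buf, fx_buf = np.zeros(n_taps), np.zeros(n_taps)
    xs_buf, y_buf = np.zeros(len(s_hat)), np.zeros(len(s))
    e = np.zeros(len(x))
    for n in range(len(x)):
        # Frame rate: after each complete frame the classifier returns an index,
        # and the controller swaps filters only when the index has changed.
        if n > 0 and n % frame_len == 0:
            idx = classify(x[n - frame_len:n])
            if idx != current:
                w, current = np.array(filter_bank[idx], dtype=float), idx
        # Sample rate: one FxNLMS step on the current filter.
        push(x_buf, x[n])
        push(xs_buf, x[n])
        push(fx_buf, s_hat @ xs_buf)
        push(y_buf, w @ x_buf)
        e[n] = d[n] - s @ y_buf
        w = w + mu * e[n] * fx_buf / (fx_buf @ fx_buf + eps)
    return e

# Toy run (hypothetical values): filter 1 of the bank cancels d exactly.
rng = np.random.default_rng(0)
x = rng.standard_normal(1500)
s = np.array([0.0, 0.9, 0.3])
filter_bank = [0.3 * rng.standard_normal(8) for _ in range(3)]
d = np.convolve(np.convolve(x, filter_bank[1])[: len(x)], s)[: len(x)]

frame_len = 500
e = hybrid_sfanc_fxnlms(x, d, s, s, filter_bank, classify=lambda frame: 1,
                        frame_len=frame_len, mu=0.01)
first = np.mean(e[:frame_len] ** 2)
second = np.mean(e[frame_len + 10:2 * frame_len] ** 2)
print(first, second)
```

During the first frame only the slowly adapting FxNLMS branch is active; once the first index arrives, the residual drops sharply because adaptation restarts from a filter that is already close to the optimum.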

Table I: Comparison of the proposed 1D CNN with the 1D networks from [33].

| Network | Test Accuracy (%) | #Layers | #Parameters |
|---|---|---|---|
| Proposed Network | 99.35 | 6 | 0.21M |
| M3 Network | 99.15 | 3 | 0.22M |
| M5 Network | 99.10 | 5 | 0.56M |
| M11 Network | 99.15 | 11 | 1.79M |
| M18 Network | 98.50 | 18 | 3.69M |
| M34-res Network | 98.35 | 34 | 3.99M |

III Experiments

In the experiments, we trained the 1D CNN model on a synthesized noise dataset and then evaluated the hybrid SFANC-FxNLMS algorithm on real recorded noises. The primary path and secondary path are chosen as band-pass filters. Moreover, the step size of the FxNLMS algorithm is set to , and the control filter length is taps.

III-A Training and testing of 1D CNN

The synthetic noise dataset was subdivided into three subsets: noise tracks for training, noise tracks for validation, and noise tracks for testing. During training, the Adam algorithm [43] was used for optimization. The number of training epochs was set to . To avoid exploding or vanishing gradients, Glorot initialization [44] was used. To avoid overfitting due to the scarcity of training data, the 1D network was built as a lightweight network with all weights subjected to regularization with a coefficient of .

The proposed 1D CNN is compared with several 1D networks proposed in [33] that operate on raw acoustic waveforms: M3, M5, M11, M18, and M34-res. Table I summarizes the comparison results. The number of network layers counts both convolutional layers and fully connected layers. Among these networks, the proposed 1D network obtains the highest classification accuracy of on the test dataset, which suggests that it can reliably select suitable pre-trained control filters for different noises. The proposed 1D network is also lightweight, using the fewest parameters among the compared networks.

III-B Real-world noise cancellation

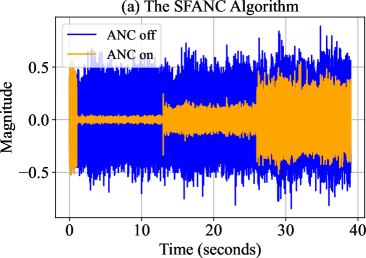

This section applies the SFANC, FxNLMS, and hybrid SFANC-FxNLMS algorithms to attenuate real recorded noises. The real-world noises are not included in the training datasets. We created two distinct noises by cascading and mixing an aircraft noise with a frequency range of 50 Hz to 14,000 Hz and a traffic noise with a frequency range of 40 Hz to 1,400 Hz.

III-B1 Cascaded noise attenuation

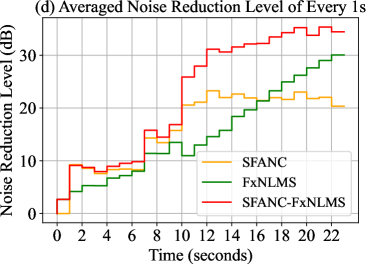

The varying noise in this simulation is obtained by cascading aircraft noise and traffic noise. The noise reduction results of the different ANC methods on the cascaded noise are shown in Fig. 5.

From the results, we can observe that the hybrid SFANC-FxNLMS algorithm tracks and responds quickly to different parts of the varying noise. Throughout the noise reduction process, the hybrid SFANC-FxNLMS algorithm consistently outperforms the SFANC and FxNLMS algorithms. In particular, during s-s, the averaged noise reduction level achieved by the hybrid SFANC-FxNLMS algorithm is 6 dB and 15 dB higher than that of SFANC and FxNLMS, respectively. Notably, this interval is the transition period from one noise to the other. Therefore, even when the frequency band and amplitude of the noise change, the hybrid SFANC-FxNLMS algorithm can still attenuate it.
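The text does not spell out how the averaged noise reduction level is computed. A common choice, assumed here, is the ratio of disturbance power to residual error power in dB, evaluated over consecutive time windows; the function names and the synthetic signals below are hypothetical.

```python
import numpy as np

def noise_reduction_db(d, e, eps=1e-12):
    """Ratio of disturbance power to residual error power, in dB."""
    d, e = np.asarray(d, dtype=float), np.asarray(e, dtype=float)
    return 10.0 * np.log10((np.sum(d ** 2) + eps) / (np.sum(e ** 2) + eps))

def windowed_noise_reduction(d, e, window):
    """Noise reduction level for consecutive non-overlapping windows."""
    n_windows = len(d) // window
    return np.array([
        noise_reduction_db(d[i * window:(i + 1) * window],
                           e[i * window:(i + 1) * window])
        for i in range(n_windows)
    ])

# Synthetic check: no attenuation in the first window, a tenfold amplitude
# reduction (20 dB) in the second.
d = np.ones(400)
e = np.concatenate([np.ones(200), 0.1 * np.ones(200)])
levels = windowed_noise_reduction(d, e, window=200)
print(levels)
```

Comparing such per-window levels between algorithms gives differences of the kind quoted above for the transition interval.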

III-B2 Mixed noise attenuation

In addition to cascading, we added the aircraft noise and traffic noise together to get a mixed noise. Fig. 6 depicts the performance of various ANC algorithms on the mixed noise. The SFANC algorithm responds to mixed noise much faster than the FxNLMS algorithm, as illustrated in the figure. For example, the SFANC method achieves a noise reduction level of about dB after s, whereas the FxNLMS algorithm takes more than s to achieve the same level. However, after convergence during s-s, the FxNLMS algorithm achieves a higher noise reduction level than the SFANC method.

It is also noticeable that the SFANC algorithm cannot deal with new noise during the first s, since it only updates the control filter index after the first s. However, owing to its combination with FxNLMS, the hybrid SFANC-FxNLMS algorithm can slightly attenuate the noise during the first s. Furthermore, the averaged noise reduction level of the hybrid SFANC-FxNLMS algorithm is about dB higher than that of the SFANC and FxNLMS algorithms in s-s. Therefore, the results on the mixed noise also confirm the superiority of the hybrid SFANC-FxNLMS algorithm over the SFANC and FxNLMS algorithms.

III-C Noise reduction performance on the varying primary path

In this section, the robustness of the different ANC algorithms is examined when the primary path changes every seconds. The impulse response of the primary path is mixed with different levels of white noise to obtain additional signal-to-noise ratios (SNRs) of dB and dB, respectively, as shown in Fig. 7. The primary noise is the traffic noise.
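One way to realize such a perturbed primary path is to add white Gaussian noise to the impulse response, scaled so that the ratio of impulse-response energy to noise energy matches a target SNR. The sketch below assumes that definition of SNR; the impulse response and the 20 dB target are placeholder values, and `perturb_path` is a hypothetical helper.

```python
import numpy as np

def perturb_path(p, snr_db, rng):
    """Add white Gaussian noise to impulse response p at the requested SNR in dB."""
    p = np.asarray(p, dtype=float)
    noise = rng.standard_normal(p.shape)
    target_noise_power = np.sum(p ** 2) / 10.0 ** (snr_db / 10.0)
    noise *= np.sqrt(target_noise_power / np.sum(noise ** 2))   # exact energy scaling
    return p + noise

rng = np.random.default_rng(0)
p = np.exp(-0.05 * np.arange(64)) * rng.standard_normal(64)   # toy primary path
p_noisy = perturb_path(p, snr_db=20.0, rng=rng)
realized = 10.0 * np.log10(np.sum(p ** 2) / np.sum((p_noisy - p) ** 2))
print(realized)
```

Switching between the clean and perturbed responses at fixed intervals then emulates a primary path that varies over time while the noise type stays the same.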

According to Fig. 7, in the initial period, the SFANC and hybrid SFANC-FxNLMS algorithms achieve better noise reduction performance than the FxNLMS algorithm. However, when the SNR of the primary path varies, SFANC cannot perform as well as the FxNLMS and hybrid SFANC-FxNLMS algorithms. Since the primary noise type remains the same, the selected pre-trained control filter stays fixed in the SFANC algorithm and may not suit the varying primary path. The results indicate that the hybrid SFANC-FxNLMS algorithm is sufficiently robust to deal with the varying primary path.

IV Conclusion

The proposed hybrid SFANC-FxNLMS algorithm effectively combines SFANC and FxNLMS to achieve faster noise reduction responses and lower steady-state errors. A lightweight 1D CNN operating at the frame rate automatically selects the control filter coefficients, while the FxNLMS algorithm continues to update the coefficients adaptively at the sampling rate. Simulation results validate the good tracking capability and robustness of the hybrid SFANC-FxNLMS algorithm when dealing with varying noises and primary paths in dynamic noise control environments. The hybrid scheme could be further explored as a way of combining other fixed-filter and adaptive ANC algorithms.

References

- [1] C. N. Hansen, Understanding active noise cancellation. CRC Press, 2002.

- [2] S. Elliot and P. Nelson, “Active noise control,” Noise News International, vol. 2, no. 2, pp. 75–98, 1994.

- [3] S. M. Kuo and D. R. Morgan, “Active noise control: a tutorial review,” Proceedings of the IEEE, vol. 87, no. 6, pp. 943–973, 1999.

- [4] Y. Kajikawa, W.-S. Gan, and S. M. Kuo, “Recent advances on active noise control: open issues and innovative applications,” APSIPA Transactions on Signal and Information Processing, vol. 1, 2012.

- [5] X. Qiu, An introduction to virtual sound barriers. CRC Press, 2019.

- [6] J. Zhang, “Active noise control over spatial regions,” Ph.D. dissertation, The Australian National University (Australia), 2019.

- [7] D. Chen, L. Cheng, D. Yao, J. Li, and Y. Yan, “A secondary path-decoupled active noise control algorithm based on deep learning,” IEEE Signal Processing Letters, vol. 29, pp. 234–238, 2021.

- [8] S. Liebich, J. Fabry, P. Jax, and P. Vary, “Signal processing challenges for active noise cancellation headphones,” in Speech Communication; 13th ITG-Symposium. VDE, 2018, pp. 1–5.

- [9] P. Rivera Benois, P. Nowak, and U. Zölzer, “Evaluation of a decoupled feedforward-feedback hybrid structure for active noise control headphones in a multi-source environment,” in INTER-NOISE and NOISE-CON Congress and Conference Proceedings, vol. 255, no. 4. Institute of Noise Control Engineering, 2017, pp. 3691–3699.

- [10] S. M. Kuo and D. R. Morgan, “Active noise control: a tutorial review,” Proceedings of the IEEE, vol. 87, no. 6, pp. 943–973, 1999.

- [11] C.-Y. Chang, A. Siswanto, C.-Y. Ho, T.-K. Yeh, Y.-R. Chen, and S. M. Kuo, “Listening in a noisy environment: Integration of active noise control in audio products,” IEEE Consumer Electronics Magazine, vol. 5, no. 4, pp. 34–43, 2016.

- [12] S. Liebich, C. Anemüller, P. Vary, P. Jax, D. Rüschen, and S. Leonhardt, “Active noise cancellation in headphones by digital robust feedback control,” in 2016 24th European Signal Processing Conference (EUSIPCO). IEEE, 2016, pp. 1843–1847.

- [13] X. Shen, D. Shi, and W.-S. Gan, “A wireless reference active noise control headphone using coherence based selection technique,” in ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2021, pp. 7983–7987.

- [14] K. Coker and C. Shi, “A survey on virtual bass enhancement for active noise cancelling headphones,” in 2019 International Conference on Control, Automation and Information Sciences (ICCAIS). IEEE, 2019, pp. 1–5.

- [15] R. M. Reddy, I. M. Panahi, and R. Briggs, “Hybrid FxRLS-FxNLMS adaptive algorithm for active noise control in fMRI application,” IEEE Transactions on Control Systems Technology, vol. 19, no. 2, pp. 474–480, 2010.

- [16] J. Guo, F. Yang, and J. Yang, “Convergence analysis of the conventional filtered-x affine projection algorithm for active noise control,” Signal Processing, vol. 170, p. 107437, 2020.

- [17] F. Yang, Y. Cao, M. Wu, F. Albu, and J. Yang, “Frequency-domain filtered-x LMS algorithms for active noise control: A review and new insights,” Applied Sciences, vol. 8, no. 11, p. 2313, 2018.

- [18] J. C. Burgess, “Active adaptive sound control in a duct: A computer simulation,” The Journal of the Acoustical Society of America, vol. 70, no. 3, pp. 715–726, 1981.

- [19] D. Shi, W.-S. Gan, B. Lam, S. Wen, and X. Shen, “Active noise control based on the momentum multichannel normalized filtered-x least mean square algorithm,” in INTER-NOISE and NOISE-CON Congress and Conference Proceedings, vol. 261, no. 6. Institute of Noise Control Engineering, 2020, pp. 709–719.

- [20] L. Lu, K.-L. Yin, R. C. de Lamare, Z. Zheng, Y. Yu, X. Yang, and B. Chen, “A survey on active noise control techniques – Part I: Linear systems,” arXiv preprint arXiv:2110.00531, 2021.

- [21] Y. Tsao, S.-H. Fang, and Y. Shiao, “Acoustic echo cancellation using a vector-space-based adaptive filtering algorithm,” IEEE Signal Processing Letters, vol. 22, no. 3, pp. 351–355, 2014.

- [22] R. Ranjan and W.-S. Gan, “Natural listening over headphones in augmented reality using adaptive filtering techniques,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 23, no. 11, pp. 1988–2002, 2015.

- [23] R. Ranjan, T. Murao, B. Lam, and W.-S. Gan, “Selective active noise control system for open windows using sound classification,” in INTER-NOISE and NOISE-CON Congress and Conference Proceedings, vol. 253, no. 6. Institute of Noise Control Engineering, 2016, pp. 1921–1931.

- [24] C. Shi, R. Xie, N. Jiang, H. Li, and Y. Kajikawa, “Selective virtual sensing technique for multi-channel feedforward active noise control systems,” in ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2019, pp. 8489–8493.

- [25] S. Wen, W.-S. Gan, and D. Shi, “Using empirical wavelet transform to speed up selective filtered active noise control system,” The Journal of the Acoustical Society of America, vol. 147, no. 5, pp. 3490–3501, 2020.

- [26] D. Shi, W.-S. Gan, B. Lam, and S. Wen, “Feedforward selective fixed-filter active noise control: Algorithm and implementation,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 28, pp. 1479–1492, 2020.

- [27] D. Shi, B. Lam, and W.-S. Gan, “A novel selective active noise control algorithm to overcome practical implementation issue,” in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2018, pp. 1130–1134.

- [28] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” Nature, vol. 521, no. 7553, pp. 436–444, 2015.

- [29] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich, “Going deeper with convolutions,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 1–9.

- [30] J. Sikora, R. Wagnerová, L. Landryová, J. Šíma, and S. Wrona, “Influence of environmental noise on quality control of HVAC devices based on convolutional neural network,” Applied Sciences, vol. 11, no. 16, p. 7484, 2021.

- [31] H. Zhang and D. Wang, “Deep ANC: A deep learning approach to active noise control,” Neural Networks, vol. 141, pp. 1–10, 2021.

- [32] D. Shi, B. Lam, K. Ooi, X. Shen, and W.-S. Gan, “Selective fixed-filter active noise control based on convolutional neural network,” Signal Processing, vol. 190, p. 108317, 2022.

- [33] W. Dai, C. Dai, S. Qu, J. Li, and S. Das, “Very deep convolutional neural networks for raw waveforms,” in 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2017, pp. 421–425.

- [34] P. A. Lopes and M. Piedade, “The Kalman filter in active noise control,” in INTER-NOISE and NOISE-CON Congress and Conference Proceedings, vol. 1999, no. 5. Institute of Noise Control Engineering, 1999, pp. 1111–1124.

- [35] P. A. Lopes and M. S. Piedade, “A Kalman filter approach to active noise control,” in 2000 10th European Signal Processing Conference. IEEE, 2000, pp. 1–4.

- [36] S. M. Kay, Fundamentals of statistical signal processing: estimation theory. Prentice-Hall, Inc., 1993.

- [37] S. van Ophem and A. P. Berkhoff, “Multi-channel Kalman filters for active noise control,” The Journal of the Acoustical Society of America, vol. 133, no. 4, pp. 2105–2115, 2013.

- [38] F. Yang, J. Guo, and J. Yang, “Stochastic analysis of the filtered-x LMS algorithm for active noise control,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 28, pp. 2252–2266, 2020.

- [39] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in International conference on machine learning. PMLR, 2015, pp. 448–456.

- [40] M. D. Zeiler, M. Ranzato, R. Monga, M. Mao, K. Yang, Q. V. Le, P. Nguyen, A. Senior, V. Vanhoucke, J. Dean et al., “On rectified linear units for speech processing,” in 2013 IEEE International Conference on Acoustics, Speech and Signal Processing. IEEE, 2013, pp. 3517–3521.

- [41] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [42] S. C. Douglas and T.-Y. Meng, “An optimum NLMS algorithm: Performance improvement over LMS,” in Acoustics, Speech, and Signal Processing, IEEE International Conference on. IEEE Computer Society, 1991, pp. 2125–2126.

- [43] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [44] X. Glorot and Y. Bengio, “Understanding the difficulty of training deep feedforward neural networks,” in Proceedings of the thirteenth international conference on artificial intelligence and statistics. JMLR Workshop and Conference Proceedings, 2010, pp. 249–256.