A knowledge-based data-driven (KBDD) framework for all-day identification of cloud types using satellite remote sensing

Abstract

Cloud types, as a category of meteorological data, are of particular significance for evaluating changes in rainfall, heatwaves, water resources, floods and droughts, food security and vegetation cover, as well as land use. To make effective use of high-resolution geostationary observations, a knowledge-based data-driven (KBDD) framework for all-day identification of cloud types based on spectral information from Himawari-8/9 satellite sensors is designed, and a novel, simple and efficient network, named CldNet, is proposed. Compared with widely used semantic segmentation networks, including SegNet, PSPNet, DeepLabV3+, UNet, and ResUnet, our proposed model CldNet achieves a state-of-the-art accuracy of 80.89±2.18% in identifying cloud types, a relative improvement of 32%, 46%, 22%, 2%, and 39%, respectively. With the assistance of auxiliary information (e.g., satellite zenith/azimuth angle, solar zenith/azimuth angle), the accuracy on the test dataset is 82.23±2.14% for CldNet-W, which uses visible and near-infrared bands, and 73.21±2.02% for CldNet-O, which does not. Meanwhile, CldNet has only 0.46M parameters in total, making it easy to deploy on edge devices. More importantly, the trained CldNet can, without any fine-tuning, predict cloud types at higher spatial resolution from satellite spectral data of higher spatial resolution, which indicates that CldNet possesses a strong generalization ability. In aggregate, the KBDD framework using CldNet is a highly effective cloud-type identification system capable of providing a high-fidelity, all-day, spatiotemporal cloud-type database for many climate assessment fields.

keywords:

Cloud types, All-day identification, Knowledge-based data-driven framework, Edge deployment, Generalization ability

1 Introduction

Clouds are an important component of the Earth’s ecosystem, affecting hydrological cycles, energy balance, terrestrial ecosystems, air quality, and even food security [Narenpitak et al., 2017, Watanabe et al., 2018, Bühl et al., 2019, Eytan et al., 2020, Goldblatt et al., 2021, Cesana & Del Genio, 2021, Hieronymus et al., 2022]. Clouds at different levels affect the underlying surface differently. For example, deep convection clouds bring flood-inducing precipitation extremes that endanger life and cause economic losses [Furtado et al., 2017], whereas long periods without precipitation cause drought [Hartick et al., 2022], leading to reduced or even failed crop harvests. A process-oriented climate model assessment was carried out by Kaps et al. [2023] based on cloud types derived from satellites. In the context of global climate change in particular [Jørgensen et al., 2022, Zhang et al., 2023], in-depth research on clouds is urgently needed.

Many studies have been conducted on cloud detection, especially the application of satellite remote sensing data in cloud mask identification [Li et al., 2007, Shang et al., 2017, Skakun et al., 2022, Qiu et al., 2019, Sun et al., 2017, Li et al., 2019, Sedano et al., 2011, Poulsen et al., 2020, Joshi et al., 2019]. Foga et al. [2017] applied CFMask, the C language version of the function of mask (Fmask) algorithm, to detect clouds with Landsat products, and found that this algorithm performed best overall compared to other algorithms. In order to reduce the mismatch between clouds and cloud shadows, and to improve the accuracy of cloud shadow detection in areas with large gradients, mountainous Fmask (MFmask) was developed by Qiu et al. [2017]. Because Fmask cannot distinguish whether clouds are thick or thin, Ghasemian & Akhoondzadeh [2018] proposed a random forest algorithm with feature-level or decision-level fusion to do so, combining the visible and infrared spectra and the texture features provided by Landsat-8. Random forest, as a machine learning method, can identify clouds without setting and tuning thresholds. Drawing on the ability of machine learning to simplify tedious procedures, Li et al. [2015] used subblock cloud images with brightness characteristics as learning samples for a support vector machine classifier to recognize clouds. An XGBoost-based retrieval was proposed to improve the accuracy of cloud detection over different underlying surfaces in the East Asian region, and was compared with the Japan Aerospace Exploration Agency (JAXA) AHI cloud product [Yang et al., 2022].

With advancements in computer hardware, many data-driven technologies have been widely used in the field of cloud detection [Segal-Rozenhaimer et al., 2020a, Li et al., 2020, Wu et al., 2022, Kanu et al., 2020, Mateo-García et al., 2020]. Li et al. [2022] combined the generative adversarial network (GAN) and a physics-based cloud distortion model (CDM) to construct a hybrid model, GAN-CDM, to detect clouds over different underlying surfaces, including ice/snow, barren, water, urban, wetland, and forest. The GAN-CDM model not only requires very few patch-level labels during training, but also has good transferability across different optical satellite sensors. RS-Net, based on the UNet structure, was applied to Landsat-8 by Jeppesen et al. [2019]; it is suitable for production environments due to its ability to execute quickly. Similarly, Wieland et al. [2019] used a modified UNet to segment clouds in remote sensing images obtained from multiple sensors (Landsat TM, OLI, ETM+, and Sentinel-2). In order to make the model training more accurate, cloud mask labels of the training data were obtained from images of ground-based sky cameras in the studies of Dev et al. [2019] and Skakun et al. [2021]. However, the characteristics of clouds have not been fully explored in cloud detection algorithms.

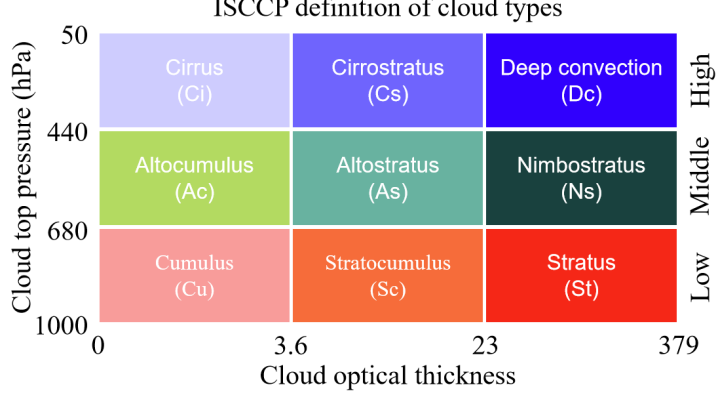

In order to better understand the characteristics of clouds [Wang et al., 2016, 2019, Teng et al., 2020, Ding et al., 2022, Khatri et al., 2018], they are categorized into distinct cloud types based on different standards. The most well-known standard is that of the International Satellite Cloud Climatology Project (ISCCP). According to cloud top pressure and cloud optical thickness, clouds are divided into cirrus (Ci), cirrostratus (Cs), deep convection (Dc), altocumulus (Ac), altostratus (As), nimbostratus (Ns), cumulus (Cu), stratocumulus (Sc), and stratus (St) [Rossow & Schiffer, 1999, Wang & Sassen, 2001]. Among them, Ci, Cs, and Dc belong to high-level clouds; Ac, As, and Ns belong to mid-level clouds; and Cu, Sc, and St belong to low-level clouds. In early work, Jun & Fengxian [1990] clustered satellite spectral signals to obtain different cloud types. Segal-Rozenhaimer et al. [2020b] developed a cloud detection algorithm based on convolutional neural networks, which simplifies the tedious process of previous threshold methods. Yu et al. [2021] used the random forest method to divide clouds into multi-layer clouds and eight types of single-layer clouds based on the satellite FengYun-4A. Zhang et al. [2019] found that the use of visible channels significantly improves the ability of random forest to identify cloud types. Purbantoro et al. [2019] used the split window method to classify clouds based on the brightness temperature (BT) of channel 13 and the brightness temperature difference (BTD) between channel 13 and channel 15 derived from the satellite Himawari-8. Wang et al. [2021] developed VectNet, and conducted pixel-level cloud-type classification in the region (117°E - 129.8°E, 29.2°N - 42°N) using remote sensing data from 16 channels of the satellite Himawari-8.

Although the above studies have achieved good results in identifying cloud types from satellite remote sensing data, substantial work remains for further exploration. For example, methods based on threshold judgment rely largely on professional knowledge and experience [Yang et al., 2022]; many studies have focused on the recognition of cloud masks [Tana et al., 2023, Wang et al., 2022a, Caraballo-Vega et al., 2023], but there is relatively little in-depth research on cloud types using deep learning [Larosa et al., 2023, Zhao et al., 2023]; and cloud-type recognition is mostly carried out in daytime areas [Huang et al., 2022], while cloud-type recognition in nighttime areas remains to be investigated. Considering the aforementioned problems, the main contributions of this study are as follows:

- A knowledge-based data-driven (KBDD) framework for identifying cloud types based on spectral information from Himawari-8/9 satellite sensors is designed.
- In order to simplify the tedious process of threshold setting, a novel, simple and efficient network, named CldNet, is proposed. Meanwhile, the widely used networks SegNet [Badrinarayanan et al., 2017], PSPNet [Zhao et al., 2017], DeepLabV3+ [Chen et al., 2018], UNet [Ronneberger et al., 2015], and ResUnet [Zhang et al., 2018] for pixel-level classification are used to compare with CldNet.
- Our proposed KBDD framework is capable of achieving all-day identification of cloud types over the entire satellite observation region, regardless of whether some areas of the region are daytime or nighttime.
- The trained model CldNet is applied directly to higher resolution satellite spectral input data without any fine-tuning, resulting in higher resolution cloud-type distributions ( to ).

The main purpose of this paper is to develop a knowledge-based data-driven (KBDD) framework for all-day identification of cloud types based on spectral information from Himawari-8/9 satellite sensors. Meanwhile, a novel, simple and efficient network, named CldNet, is proposed. More importantly, a highly effective cloud-type identification system capable of providing a high-fidelity, all-day, spatiotemporal cloud-type database for many climate assessment fields is established. In section 2, the study area and data processing are introduced in detail. Section 3 describes the KBDD framework, the specific architecture of CldNet and the quantitative evaluation metrics of cloud-type classification. The performance of CldNet, SegNet, PSPNet, DeepLabV3+, UNet, ResUnet, and UNetS is shown in section 4. The generalization ability of CldNet, the limitations of the current study and the prospects for future research are discussed in depth in section 5. Finally, some important conclusions and directions for future work are presented.

2 Data

2.1 Remote sensing data of the satellites Himawari-8/9

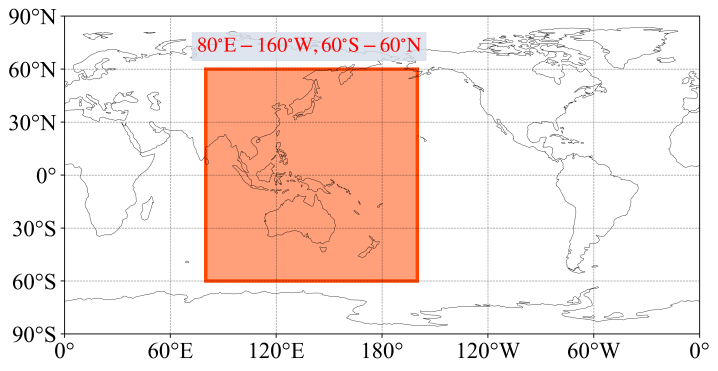

The satellites Himawari-8/9 belong to the Himawari 3rd generation programme [Bessho et al., 2016, Kurihara et al., 2016, Yang et al., 2020], whose main mission is operational meteorology and additional mission is environmental applications. The Himawari-8/9 satellites were launched on October 7, 2014 and November 2, 2016, respectively. The satellite Himawari-8 replaced the satellite MTSAT-2 as an operational satellite on July 7, 2015, and the satellite Himawari-9 replaced the satellite Himawari-8 as the primary satellite on December 13, 2022. The scope of the satellites Himawari-8/9 is shown in Fig. 1, whose coverage area is from 80°E to 160°W, and from 60°N to 60°S. Satellite spectral channels provide albedo from B01 to B06 and brightness temperature (BT) from B07 to B16, whose specific information is shown in Table 1 [Huang et al., 2017, Taniguchi et al., 2022]. In this study, Himawari L1 gridded data within the study area were downloaded through the Japan Aerospace Exploration Agency (JAXA) Himawari Monitor P-Tree System (available at https://www.eorc.jaxa.jp/ptree/index.html).

| Channel | Central wavelength | Bandwidth | SNR or NET specified input | Resolution | Primary application | Value range |
|---|---|---|---|---|---|---|
| B01 | 455 nm | 50 nm | 300 albedo (%) | 1.0 km | Aerosol | [0, 100] |
| B02 | 510 nm | 20 nm | 300 albedo (%) | 1.0 km | Aerosol | [0, 100] |
| B03 | 645 nm | 30 nm | 300 albedo (%) | 0.5 km | Low cloud, Fog | [0, 100] |
| B04 | 860 nm | 20 nm | 300 albedo (%) | 1.0 km | Vegetation, Aerosol | [0, 100] |
| B05 | 1610 nm | 20 nm | 300 albedo (%) | 2.0 km | Cloud phase, SO2 | [0, 100] |
| B06 | 2260 nm | 20 nm | 300 albedo (%) | 2.0 km | Particle size | [0, 100] |
| B07 | 3.85 µm | 0.22 µm | 0.16 BT (K) | 2.0 km | Low cloud, Fog, Forest fire | [220, 335] |
| B08 | 6.25 µm | 0.37 µm | 0.40 BT (K) | 2.0 km | Upper level moisture | [200, 260] |
| B09 | 6.95 µm | 0.12 µm | 0.10 BT (K) | 2.0 km | Mid-upper level moisture | [200, 270] |
| B10 | 7.35 µm | 0.17 µm | 0.32 BT (K) | 2.0 km | Mid-level moisture | [200, 275] |
| B11 | 8.60 µm | 0.32 µm | 0.10 BT (K) | 2.0 km | Cloud phase, SO2 | [200, 320] |
| B12 | 9.63 µm | 0.18 µm | 0.10 BT (K) | 2.0 km | Ozone content | [210, 295] |
| B13 | 10.45 µm | 0.30 µm | 0.10 BT (K) | 2.0 km | Cloud imagery, Information of cloud top | [200, 330] |
| B14 | 11.20 µm | 0.20 µm | 0.10 BT (K) | 2.0 km | Cloud imagery, Sea surface temperature | [200, 330] |
| B15 | 12.35 µm | 0.30 µm | 0.10 BT (K) | 2.0 km | Cloud imagery, Sea surface temperature | [200, 320] |
| B16 | 13.30 µm | 0.20 µm | 0.30 BT (K) | 2.0 km | Cloud top height | [200, 295] |

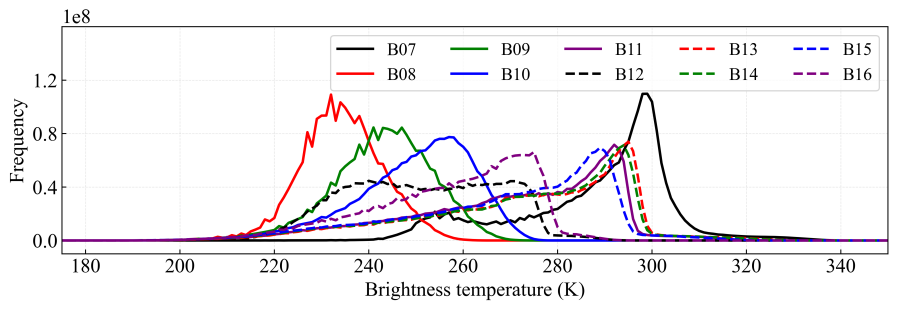

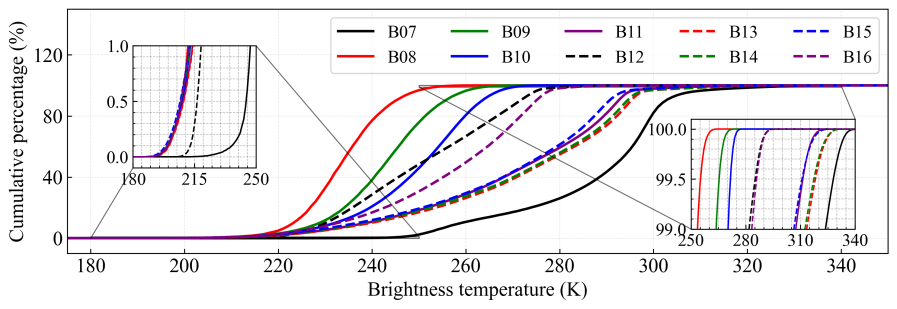

The remote sensing data of the spatial resolution at 03:00 (UTC+0) were downloaded every 3 d from January 1, 2020 to December 31, 2022. The value range of albedo from B01 to B06 is [0, 100]%, but the value range of BT from B07 to B16 needs to be determined statistically. The BT values corresponding to different channels of each pixel in the downloaded remote sensing images are counted. The frequency and cumulative percentage curves of BT from B07 to B16 are plotted in Fig. 2(a) and 2(b), respectively. In Fig. 2(a), the BT value of the peak frequency of B08 is the smallest, while the BT value of the peak frequency of B07 is the largest. The frequency curves for B11, B13, B14, and B15 have the same trend; those for B08, B09, and B10 are relatively consistent with a normal distribution; and the BT values for B12 are evenly distributed between 235K and 275K. In order to accurately determine the range of BT values for each channel, the cumulative percentage of BT for each channel is calculated in Fig. 2(b). The images at the 0% and 100% positions of the cumulative percentage curve are magnified and observed to determine the range of BT values for each channel. The range of BT values for each channel is determined and recorded in Table 1, which covers 99.9% of its own data. Based on the range of spectral channel values, the model inputs can be reasonably normalized.
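To illustrate how the value ranges in Table 1 could be used to normalize model inputs, a minimal sketch is given here. The text states only that the inputs are "reasonably normalized", so the min-max scaling with clipping and the names `CHANNEL_RANGES` and `normalize` are assumptions made for illustration, not the authors' stated procedure.

```python
# Value ranges copied from Table 1: albedo in percent for B01-B06,
# brightness temperature in kelvin for B07-B16.
CHANNEL_RANGES = {
    "B01": (0.0, 100.0), "B02": (0.0, 100.0), "B03": (0.0, 100.0),
    "B04": (0.0, 100.0), "B05": (0.0, 100.0), "B06": (0.0, 100.0),
    "B07": (220.0, 335.0), "B08": (200.0, 260.0), "B09": (200.0, 270.0),
    "B10": (200.0, 275.0), "B11": (200.0, 320.0), "B12": (210.0, 295.0),
    "B13": (200.0, 330.0), "B14": (200.0, 330.0), "B15": (200.0, 320.0),
    "B16": (200.0, 295.0),
}


def normalize(channel: str, value: float) -> float:
    """Min-max scale a channel value to [0, 1] (assumed scheme).

    Values outside the tabulated range (the remaining ~0.1% of the data)
    are clipped to the range limits before scaling.
    """
    low, high = CHANNEL_RANGES[channel]
    clipped = min(max(value, low), high)
    return (clipped - low) / (high - low)


if __name__ == "__main__":
    print(normalize("B13", 265.0))  # midpoint of [200, 330]
    print(normalize("B08", 300.0))  # above the range, clipped to the upper limit
```

Clipping is one simple way to handle the small fraction of pixels outside the tabulated ranges; other choices (for example, leaving them unclipped) would also be consistent with the description.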

2.2 Cloud types

The International Satellite Cloud Climatology Project (ISCCP) definition of cloud types is presented in Fig. 3, which is detailed at https://isccp.giss.nasa.gov. Under cloudy skies, cloud types are classified as cirrus (Ci), cirrostratus (Cs), deep convection (Dc), altocumulus (Ac), altostratus (As), nimbostratus (Ns), cumulus (Cu), stratocumulus (Sc), and stratus (St), depending on cloud optical thickness and cloud top pressure. Otherwise, the sky is clear (Cl). The JAXA Himawari Monitor P-Tree System provides Himawari L2 gridded data cloud types with spatial resolution and temporal resolution 10 min [Letu et al., 2019, Lai et al., 2019, Letu et al., 2020].

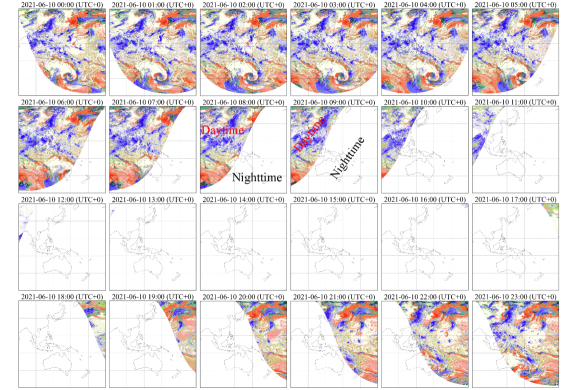

In Fig. 4, the hourly evolution of the overall cloud-type distributions on June 10, 2021 is shown. Cloud types are available only in the daytime region, while those in the nighttime region are unknown. Wang et al. [2022b] pointed out that the physical algorithms [Nakajima & Nakajima, 1995, Ishida & Nakajima, 2009] used by JAXA’s cloud-type product involve visible (VIS) B01–B03, near-infrared (NIR) B04–B06 and infrared (IR) B07–B16 bands, and thus cloud-type retrieval is limited to the daytime region only. Here, the cloud-type distributions at 03:00 (UTC+0) every 3 d from January 1, 2020 to December 31, 2022 are chosen as classification labels for this research. Accordingly, the Himawari L1 gridded data with the same resolution at the corresponding times were downloaded, as described in section 2.1.

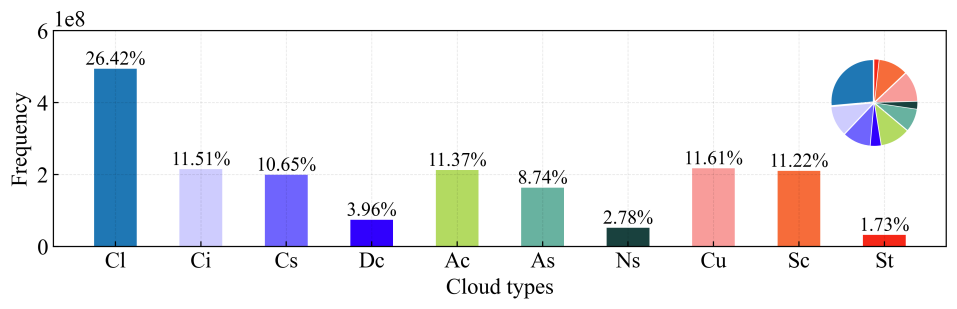

The frequency of each cloud type is calculated, as shown in Fig. 5. The frequency of clear sky (Cl) in the entire satellite observation area is the highest, while the frequency of St is the lowest. Cl accounts for 26.42%, St accounts for 1.73%, and their ratio is approximately 15:1. The proportion of all types of clouds is 73.58%.

3 Methodology

3.1 Knowledge-based data-driven framework

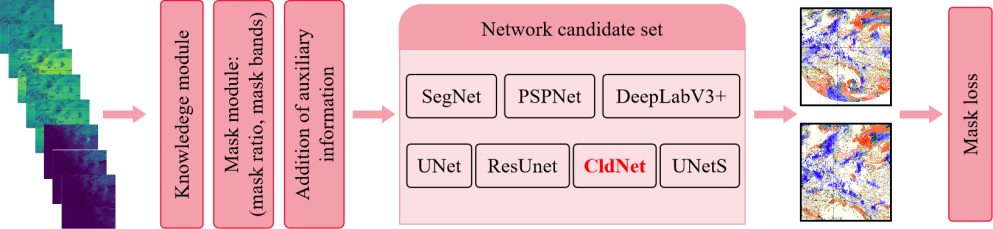

In order to achieve all-day identification of cloud types from the spectral information of Himawari-8/9 satellite sensors, a knowledge-based data-driven (KBDD) framework is proposed, whose specific architecture is depicted in Fig. 6. The KBDD framework mainly consists of a knowledge module, a mask module, the addition of auxiliary information, a network candidate set, and a mask loss.

The main function of the knowledge module is to reorganize spectral information from Himawari-8/9 satellite sensors based on existing research knowledge. Previous research has shown that cloud types are related not only to single-channel observations, but also to the differences between two channels. Here, it should be noted that satellite spectral channels B01 to B06 are characterized by albedo, while satellite spectral channels B07 to B16 are characterized by brightness temperature. Since albedo and brightness temperature are distinct variables with different units, differences are taken only between two channels of the same physical quantity. The knowledge module therefore outputs the satellite spectral information of B01–B16, the differences between the pairwise combinations of B01–B06, and the differences between the pairwise combinations of B07–B16.
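The reorganization performed by the knowledge module can be sketched for a single pixel as follows. This is a minimal illustration, assuming unordered channel pairs and a fixed subtraction order (earlier channel minus later channel); the function names are hypothetical. Under these assumptions the module yields 16 single-channel features, 15 albedo differences and 45 brightness temperature differences, i.e. 76 features per pixel.

```python
from itertools import combinations

ALBEDO_BANDS = [f"B{i:02d}" for i in range(1, 7)]   # B01..B06, albedo
BT_BANDS = [f"B{i:02d}" for i in range(7, 17)]      # B07..B16, brightness temperature


def feature_names():
    """Names of the reorganized features, in output order."""
    names = ALBEDO_BANDS + BT_BANDS
    # Differences are formed only within a group, so both operands share a unit.
    names += [f"{a}-{b}" for a, b in combinations(ALBEDO_BANDS, 2)]
    names += [f"{a}-{b}" for a, b in combinations(BT_BANDS, 2)]
    return names


def reorganize(pixel):
    """Build the feature vector for one pixel.

    `pixel` maps a channel name (e.g. "B07") to its value.
    """
    features = [pixel[band] for band in ALBEDO_BANDS + BT_BANDS]
    features += [pixel[a] - pixel[b] for a, b in combinations(ALBEDO_BANDS, 2)]
    features += [pixel[a] - pixel[b] for a, b in combinations(BT_BANDS, 2)]
    return features


if __name__ == "__main__":
    print(len(feature_names()))  # 16 + 15 + 45 = 76
```

Whether the differences are computed before or after normalization is not specified in the text; the sketch is agnostic to that choice.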

The mask module provides a convenient way to mask certain spectral channels and increases the flexibility of the framework, which can be trained with or without VIS and NIR data without changing the model structure. When the VIS and NIR channels are not used, the mask module sets the satellite spectral information of B01–B06 and the differences between the pairwise combinations of B01–B06 to zero through the parameters mask ratio and mask bands.
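The masking behaviour described above can be sketched as follows. This is an illustrative sketch only: features are assumed to be named either by a single channel ("B01") or by a channel pair ("B01-B02"), the function name `apply_band_mask` is hypothetical, and the mask ratio parameter is not modelled because its exact semantics are not given in the text.

```python
def apply_band_mask(names, values, mask_bands):
    """Zero every feature that involves a masked band.

    names      -- feature names such as "B01" or "B01-B02" (assumed convention)
    values     -- feature values in the same order as `names`
    mask_bands -- channel names to mask, e.g. B01..B06 for night-capable training
    """
    masked = set(mask_bands)
    out = []
    for name, value in zip(names, values):
        bands = name.split("-")
        out.append(0.0 if any(band in masked for band in bands) else value)
    return out


if __name__ == "__main__":
    vis_nir = [f"B{i:02d}" for i in range(1, 7)]
    names = ["B01", "B07", "B01-B02", "B07-B08"]
    values = [35.0, 285.0, 4.0, 52.0]
    # The output keeps the same length, so the network input shape is unchanged.
    print(apply_band_mask(names, values, vis_nir))
```

Because the masked features are zeroed rather than removed, the same network architecture can be trained with or without the VIS and NIR inputs.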

Auxiliary information is added to improve the performance of the framework. It includes the satellite zenith angle (SAZ), satellite azimuth angle (SAA), solar zenith angle (SOZ), solar azimuth angle (SOA), longitude, latitude, altitude, and underlying surface attributes (land or water). The auxiliary information and the reorganized satellite spectral information are merged through concatenation. The merged data are input into the network candidate set to obtain the probability of each cloud type. Because cloud-type references do not exist in the nighttime area, the mask loss is computed only over the labeled pixels of the target image, excluding unlabeled pixels.

3.2 SegNet, PSPNet, DeepLabV3+, UNet and ResUnet

The networks SegNet, PSPNet, DeepLabV3+, UNet and ResUnet, which are among the most commonly used semantic segmentation networks, are adopted as baselines in this study. SegNet was proposed by Badrinarayanan et al. [2017], and its network structure is a convolutional encoder-decoder. It mainly uses 2D convolution, 2D max pooling, and 2D max unpooling (upsampling). The pooling indices required by each 2D max unpooling layer come from the output of the corresponding 2D max pooling layer. The numbers of input and output features of the 2D convolutions depend on the input and output data, respectively. The number of output features of the last convolution equals the number of cloud-type categories. Applying softmax followed by argmax yields the cloud type of each pixel.

PSPNet was proposed by Zhao et al. [2017], and ResNet50 [He et al., 2016] is used as the feature map extractor for PSPNet in this study. The size of the feature map is reduced to 1/4 of the original input size to conserve memory. Feature maps at different levels are generated by the pyramid pooling module, and all feature maps are concatenated to predict cloud types.

DeepLabV3+ was proposed by Chen et al. [2018], and its feature map extractor is the same as that of PSPNet. The encoder mainly uses four convolutional kernels with different dilation parameters and one global average pooling. In the decoder, the low-level features obtained by the feature map extractor and the features obtained by the encoder are concatenated to predict cloud types.

Ronneberger et al. [2015] designed UNet. UNet is widely used in the field of imaging [Waldner & Diakogiannis, 2020, Yu et al., 2023, Yoo et al., 2022, Amini Amirkolaee et al., 2022, Pang et al., 2023], and it has significant similarities with SegNet. The main difference is that UNet concatenates the feature maps corresponding to the downsampling process during the upsampling process, and ultimately predicts cloud types. Zhang et al. [2018] developed ResUnet, which is built with residual units and has similar architecture to that of UNet.

3.3 CldNet

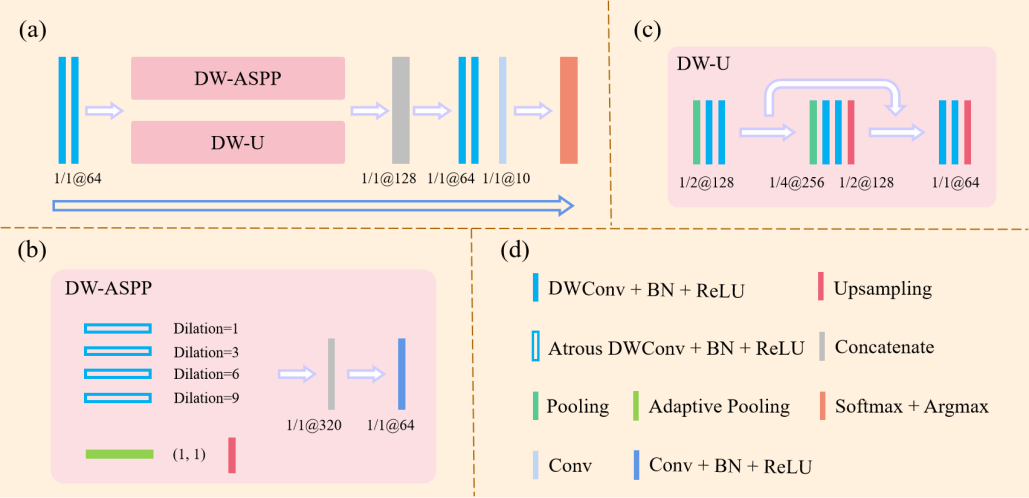

In this study, a novel, simple and effective deep learning-based network for cloud-type classification, named CldNet, is proposed and illustrated in Fig. 7. CldNet uses depthwise separable convolution (DWConv), which factorizes a standard convolution into a depthwise convolution [Chollet, 2017] followed by a pointwise convolution [Hua et al., 2018]. Atrous DWConv differs from DWConv only in that its depthwise convolution is dilated. As shown in Fig. 7, CldNet mainly consists of a DW-ASPP module and a DW-U module, which are inspired by the atrous spatial pyramid pooling (ASPP) module of DeepLabV3+ and by UNet, respectively. The DW-ASPP module uses four atrous depthwise separable convolutions with different dilations to capture useful multi-scale spatial context, which enlarges the receptive field of the feature map. The DW-U module extracts feature maps at different levels. Fusing the feature maps extracted by the two modules helps to mine the inherent relationships between the model input data and cloud types and thus to achieve cloud-type recognition. More importantly, both modules of CldNet have few parameters, which saves memory and makes the network easier to deploy on edge devices.
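The parameter saving of depthwise separable convolution can be made concrete with a short calculation. The sketch below counts weights for a standard convolution and for a depthwise-plus-pointwise factorization, and computes the effective kernel extent of a dilated (atrous) kernel. The channel widths and dilation used in the example are hypothetical and are not taken from CldNet.

```python
def conv_params(c_in, c_out, k, bias=False):
    """Weights of a standard k x k convolution."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def dwconv_params(c_in, c_out, k, bias=False):
    """Weights of a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = c_in * k * k + (c_in if bias else 0)   # one k x k filter per input channel
    pointwise = c_in * c_out + (c_out if bias else 0)  # mixes channels, no spatial extent
    return depthwise + pointwise


def effective_kernel(k, dilation):
    """Spatial extent covered by a k x k kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)


if __name__ == "__main__":
    c_in, c_out, k = 64, 64, 3                 # hypothetical layer widths
    print(conv_params(c_in, c_out, k))         # 36864
    print(dwconv_params(c_in, c_out, k))       # 4672
    # Dilation enlarges the receptive field without adding parameters.
    print(effective_kernel(k, dilation=6))     # 13
```

For this hypothetical layer the factorized form needs roughly one eighth of the weights, which is the mechanism behind the small total parameter count reported for CldNet.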

3.4 Loss function

The probability distribution of the cloud-type prediction for each pixel is expected to be consistent with that of the cloud-type reference. Cross entropy measures the difference between two probability distributions. In multi-class classification problems in deep learning, cross entropy can be replaced by the negative log-likelihood to calculate the model loss. The loss is used to update the model parameters through backpropagation so that the model achieves optimal performance. The negative log-likelihood loss (NLLLoss) is computed by Eq. 1 and Eq. 2, and the label of the cloud type is calculated by Eq. 3.

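$$\mathrm{Loss} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} p_{i,c}\,\log \hat{p}_{i,c} \tag{1}$$

$$\hat{p}_{i,c} = \frac{\exp(x_{i,c})}{\sum_{k=1}^{C}\exp(x_{i,k})} \tag{2}$$

$$\hat{y}_{i} = \operatorname{argmax}(\hat{p}_{i}) \tag{3}$$

where $N$ is the number of samples per batch; $C$ is the number of cloud-type labels; $p$ and $y$ are the probability and label of the reference cloud type, respectively; $\hat{p}$ and $\hat{y}$ are the probability and label of the model’s predicted results, respectively; $x$ is the output result of the network structure; and the function $\operatorname{argmax}$ returns the index of the maximum value among all elements of the input vector.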
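A minimal sketch of the negative log-likelihood loss restricted to labeled pixels (the mask loss of section 3.1) is given below. It assumes that the reference is a single class index per pixel, so the cross entropy reduces to the negative log-probability of the reference class, and that unlabeled nighttime pixels carry a sentinel label; the sentinel value `-1` and the function names are hypothetical.

```python
import math


def log_softmax(logits):
    """Numerically stable log of the softmax probabilities."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def masked_nll_loss(logits_per_pixel, labels, ignore_label=-1):
    """Mean negative log-likelihood over labeled pixels only.

    Pixels whose label equals `ignore_label` (assumed sentinel for pixels
    without a cloud-type reference) do not contribute to the loss.
    """
    total, count = 0.0, 0
    for logits, label in zip(logits_per_pixel, labels):
        if label == ignore_label:
            continue
        total -= log_softmax(logits)[label]
        count += 1
    return total / count if count else 0.0


def predict(logits):
    """Predicted class index: argmax of the network output for one pixel."""
    return max(range(len(logits)), key=lambda c: logits[c])


if __name__ == "__main__":
    logits = [[0.0, 0.0], [5.0, -5.0]]
    labels = [0, -1]  # the second pixel has no reference and is skipped
    print(masked_nll_loss(logits, labels))  # log(2), from the first pixel only
    print(predict([0.1, 2.0, -1.0]))        # 1
```

Since softmax is monotonic, taking the argmax of the raw network output gives the same label as taking it over the probabilities.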

3.5 Experimental setup

This section describes the experimental setup in detail. Satellite spectral data and cloud-type data from 2020 and 2021 are used as the training dataset, and those from 2022 are used as the test dataset. During the training process, the training dataset is randomly divided into five parts. Four of these parts are used for training, that is, for updating the parameters of the model. The remaining part is used for validation, whose purpose is to prevent overfitting through early stopping [Dietterich, 1995]: training stops when the loss on the validation dataset has not decreased for 10 consecutive epochs. The test dataset is used to evaluate the performance of the trained model.
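The data split and the early-stopping rule described above can be sketched as follows. This is an illustration under stated assumptions: a single random split (the text does not say whether the split is repeated), a strict improvement criterion for the validation loss, and hypothetical function names and seed.

```python
import random


def split_train_validation(samples, n_parts=5, seed=0):
    """Randomly hold out one of `n_parts` equal parts for validation."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    part = len(items) // n_parts
    return items[part:], items[:part]  # (training, validation)


def early_stopping_epoch(val_losses, patience=10):
    """Return the 1-based epoch at which training stops, or None.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    stale = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None


if __name__ == "__main__":
    train, val = split_train_validation(range(10))
    print(len(train), len(val))  # 8 2
    # Loss improves for three epochs, then plateaus for ten.
    print(early_stopping_epoch([1.0, 0.9, 0.8] + [0.85] * 10))  # 13
```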

All models in this study are trained on a single NVIDIA A100 GPU. In each training batch, a single remote sensing image is segmented into slices and input into the model, and its dimension is . The batch size is 25. The Adam optimization algorithm is used, and its learning rate (lr) is controlled through a multi-step schedule: lr = 0.01 up to epoch 5, 0.001 from epoch 5 to 10, 0.0001 from epoch 10 to 20, 0.00001 from epoch 20 to 30, and 0.000001 beyond epoch 30.
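The multi-step learning rate schedule can be written as a small lookup function. The treatment of the boundary epochs (5, 10, 20 and 30) is not recoverable from the text, so the sketch assumes that each upper boundary is inclusive; the function name is hypothetical.

```python
def learning_rate(epoch: int) -> float:
    """Learning rate for a given epoch under the multi-step schedule.

    Assumption: each interval includes its upper boundary epoch.
    """
    if epoch <= 5:
        return 1e-2
    if epoch <= 10:
        return 1e-3
    if epoch <= 20:
        return 1e-4
    if epoch <= 30:
        return 1e-5
    return 1e-6


if __name__ == "__main__":
    for epoch in (1, 7, 15, 25, 40):
        print(epoch, learning_rate(epoch))
```

Each step reduces the learning rate by a factor of 10, with progressively longer intervals between steps.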

3.6 Quantitative evaluation metrics

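The recognition of nine cloud types and clear sky is a multi-class classification problem. Accuracy is a commonly used indicator for evaluating the classification performance of the model. $\mathrm{Accuracy}$ measures the ratio of correctly classified predictions to the total number, as follows:

$$\mathrm{Accuracy} = \frac{1}{N}\sum_{i=1}^{N}\mathbb{1}\left(\hat{y}_{i} = y_{i}\right) \tag{4}$$

In order to understand the model’s ability to distinguish between clouds and clear skies, the indicator accuracy (N/Y for cloud) is defined as follows, where cloudy pixels of any type are treated as positive and clear-sky pixels as negative:

$$\mathrm{Accuracy\ (N/Y\ for\ cloud)} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

$\mathrm{Precision}$ focuses on evaluating the proportion of true positive data among all predicted positive data. $\mathrm{Recall}$ focuses on evaluating how much data has been successfully predicted as positive among all positive data. For $\mathrm{Precision}$ and $\mathrm{Recall}$, each class $c$ needs to calculate its precision and recall separately. $F1$ is a comprehensive indicator of both $\mathrm{Precision}$ and $\mathrm{Recall}$, as follows:

$$\mathrm{Precision}_{c} = \frac{TP_{c}}{TP_{c} + FP_{c}} \tag{6}$$

$$\mathrm{Recall}_{c} = \frac{TP_{c}}{TP_{c} + FN_{c}} \tag{7}$$

$$F1_{c} = \frac{2 \cdot \mathrm{Precision}_{c} \cdot \mathrm{Recall}_{c}}{\mathrm{Precision}_{c} + \mathrm{Recall}_{c}} \tag{8}$$

$F1_{\mathrm{macro}}$ directly adds up the $F1$ of different classes to calculate the average, which treats each class equally.

$$F1_{\mathrm{macro}} = \frac{1}{C}\sum_{c=1}^{C} F1_{c} \tag{9}$$

$F1_{\mathrm{weighted}}$ is the sum of the $F1$ of each class multiplied by its weight, and this method takes class imbalance into account.

$$F1_{\mathrm{weighted}} = \sum_{c=1}^{C} w_{c}\, F1_{c} \tag{10}$$

$F1_{\mathrm{micro}}$ adds the TP, FP, and FN of each class first, and then calculates precision, recall, and $F1$ as in binary classification.

$$\mathrm{Precision}_{\mathrm{micro}} = \frac{\sum_{c=1}^{C} TP_{c}}{\sum_{c=1}^{C} TP_{c} + \sum_{c=1}^{C} FP_{c}} \tag{11}$$

$$\mathrm{Recall}_{\mathrm{micro}} = \frac{\sum_{c=1}^{C} TP_{c}}{\sum_{c=1}^{C} TP_{c} + \sum_{c=1}^{C} FN_{c}} \tag{12}$$

$$F1_{\mathrm{micro}} = \frac{2 \cdot \mathrm{Precision}_{\mathrm{micro}} \cdot \mathrm{Recall}_{\mathrm{micro}}}{\mathrm{Precision}_{\mathrm{micro}} + \mathrm{Recall}_{\mathrm{micro}}} \tag{13}$$

where TP is true positive; FN is false negative; FP is false positive; and TN is true negative. True and false refer to the correctness of the test result: true means that the test result is correct, and false means that it is incorrect. Positive and negative refer to the test results of the sample: positive means that the intended target is detected, and negative means that it is not detected. $N$ is the total number of samples in a single image; $y_{i}$ and $\hat{y}_{i}$ are the reference and predicted labels of sample $i$; $C$ is the number of cloud-type labels; and $w_{c}$ is the true distribution proportion of class $c$.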
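The metrics of this section can be computed from per-class counts of TP, FP and FN. The following sketch is a minimal standard-library illustration; the function names and the choice of index 0 for clear sky are assumptions. For single-label multi-class problems every misclassified pixel counts once as an FP and once as an FN, so the micro-averaged F1 equals the overall accuracy.

```python
def per_class_counts(y_true, y_pred, n_classes):
    """True positives, false positives and false negatives for each class."""
    tp, fp, fn = [0] * n_classes, [0] * n_classes, [0] * n_classes
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # reference class gains a false negative
    return tp, fp, fn


def safe_div(a, b):
    return a / b if b else 0.0


def f1_score(precision, recall):
    return safe_div(2 * precision * recall, precision + recall)


def evaluate(y_true, y_pred, n_classes):
    """Accuracy plus macro-, weighted- and micro-averaged F1."""
    tp, fp, fn = per_class_counts(y_true, y_pred, n_classes)
    n = len(y_true)
    f1 = []
    for c in range(n_classes):
        precision = safe_div(tp[c], tp[c] + fp[c])
        recall = safe_div(tp[c], tp[c] + fn[c])
        f1.append(f1_score(precision, recall))
    weights = [(tp[c] + fn[c]) / n for c in range(n_classes)]  # true class proportions
    micro_p = safe_div(sum(tp), sum(tp) + sum(fp))
    micro_r = safe_div(sum(tp), sum(tp) + sum(fn))
    return {
        "accuracy": sum(tp) / n,
        "f1_macro": sum(f1) / n_classes,
        "f1_weighted": sum(w * s for w, s in zip(weights, f1)),
        "f1_micro": f1_score(micro_p, micro_r),
    }


def cloud_binary_accuracy(y_true, y_pred, clear_label=0):
    """Accuracy (N/Y for cloud): cloudy versus clear, ignoring the cloud type."""
    hits = sum((t == clear_label) == (p == clear_label) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


if __name__ == "__main__":
    reference = [0, 0, 1, 1, 2, 2]
    predicted = [0, 1, 1, 1, 2, 0]
    print(evaluate(reference, predicted, n_classes=3))
    print(cloud_binary_accuracy(reference, predicted))
```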

4 Results

4.1 Performance of different models

4.1.1 Comparison of all models

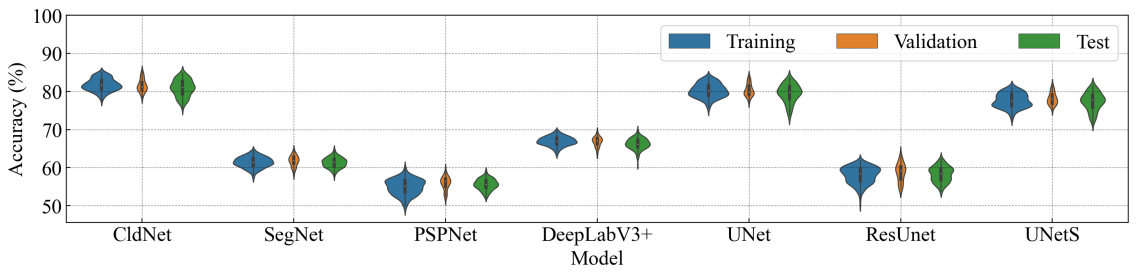

The networks with different structures, including CldNet, SegNet, PSPNet, DeepLabV3+, UNet and ResUnet, are trained on the training dataset. The accuracy of each batch under each epoch is recorded, and Fig. 8 compares the accuracy of the different models at the converged epoch. For each model, the accuracy in the three stages of training, validation, and test is very close, indicating that the models possess good stability and robustness. Our proposed model CldNet is state-of-the-art in identifying cloud types, achieving average accuracies of 81.76±1.64%, 81.58±1.63%, and 80.89±2.18% during the training, validation, and test stages, respectively. PSPNet performs worst, achieving average accuracies of 54.95±2.06%, 55.92±1.70%, and 55.58±1.43% during the training, validation, and test stages, respectively. The test accuracy of CldNet is only approximately 1.4 percentage points higher than that of UNet, but the total parameters (0.46M) of CldNet are far fewer than those (31.09M) of UNet. When the number of features in the UNet backbone structure is reduced to a quarter of its original number, the resulting network is denoted UNetS. The total parameters of UNetS are reduced to 1.96M, and it has an accuracy of 77.37±2.34% on the test set. The accuracy of CldNet is approximately 3.5 percentage points higher than that of UNetS. The mean, standard deviation, minimum, median, and maximum of accuracy for all models during the training, validation, and test stages are recorded in Table A.1.

The overall accuracy trend of all models on the test dataset is shown in Fig. 9, where DOY refers to the day of the year. Basically, all models maintain the same ranking of test accuracy on any given day (CldNet > UNet > UNetS > DeepLabV3+ > SegNet > ResUnet > PSPNet). The average accuracies of the cloud-type distributions for CldNet, SegNet, PSPNet, DeepLabV3+, UNet, ResUnet, and UNetS are 80.89±2.18%, 61.30±1.32%, 55.58±1.43%, 66.25±1.45%, 79.50±2.37%, 58.27±2.00%, and 77.37±2.34%, respectively. Relative to SegNet, PSPNet, DeepLabV3+, UNet, ResUnet and UNetS, the accuracy of our proposed model CldNet is higher by 32%, 46%, 22%, 2%, 39% and 5%, respectively.
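The reported improvements are relative gains over each baseline's mean test accuracy rather than differences in percentage points, as the following short calculation shows. The accuracy values are the means quoted above; the function and variable names are illustrative.

```python
# Mean test accuracies (%) as reported in the text.
TEST_ACCURACY = {
    "CldNet": 80.89,
    "SegNet": 61.30,
    "PSPNet": 55.58,
    "DeepLabV3+": 66.25,
    "UNet": 79.50,
    "ResUnet": 58.27,
    "UNetS": 77.37,
}


def relative_gain_percent(ours: float, baseline: float) -> float:
    """Relative improvement of `ours` over `baseline`, in percent."""
    return 100.0 * (ours - baseline) / baseline


gains = {
    name: round(relative_gain_percent(TEST_ACCURACY["CldNet"], accuracy))
    for name, accuracy in TEST_ACCURACY.items()
    if name != "CldNet"
}

ranking = sorted(TEST_ACCURACY, key=TEST_ACCURACY.get, reverse=True)

if __name__ == "__main__":
    print(gains)    # {'SegNet': 32, 'PSPNet': 46, 'DeepLabV3+': 22, 'UNet': 2, 'ResUnet': 39, 'UNetS': 5}
    print(ranking)  # CldNet first, PSPNet last
```

For example, the gain over UNet is (80.89 − 79.50) / 79.50 ≈ 1.7%, which rounds to the reported 2%, while the absolute difference is about 1.4 percentage points.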

Starting from December 2022, the spectral information released by JAXA comes from the Himawari-9 sensors, while the spectral information before December 2022 comes from the Himawari-8 sensors. The trained parameters of the model are based on the spectral data from the Himawari-8 sensor. Although the sensors of both Himawari-8 and Himawari-9 are Advanced Himawari Imagers, Fig. 9 shows that the accuracy of the trained model still decreases when it is applied to spectral data from the Himawari-9 sensor. In 2022, March, April, and May belong to spring; June, July, and August belong to summer; September, October, and November belong to autumn; December, January, and February belong to winter. For CldNet, the accuracy for spring, summer, autumn, January and February of winter, and December of winter is 80.17±2.36%, 83.26±1.08%, 80.36±1.13%, 80.81±1.20%, and 77.62±0.61%, respectively. This result indicates that the model identifies cloud types most accurately in summer.

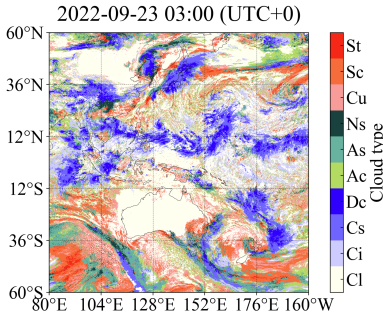

The satellite observation area is divided into 30 × 30 sub-areas, and the cloud-type prediction error density of each sub-area is defined as the proportion of pixels with cloud-type prediction errors in that sub-area to the total pixels. The cloud-type prediction error density distributions of CldNet, SegNet, PSPNet, DeepLabV3+, UNet, ResUnet and UNetS at 2022-09-23 03:00 (UTC+0) are shown in Fig. 10(a)–10(g). The lighter the color in the figure, the higher the prediction accuracy of the model. The areas marked with circles in the figure are those where the model’s cloud-type prediction ability is poor. In terms of the overall cloud-type prediction error density distributions, our proposed model CldNet is significantly better than SegNet, PSPNet, DeepLabV3+, and ResUnet. The differences between CldNet and UNet in terms of the cloud-type prediction error density are shown in Fig. 10(h), where a negative value indicates that CldNet is better than UNet, while a positive value indicates that UNet is better than CldNet. Most areas are negative, indicating that CldNet is better than UNet. Among all the models, our proposed model CldNet is state-of-the-art (SOTA) in cloud-type recognition.
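The error density defined above can be sketched for a small grid as follows. This is a simplified illustration in which images are plain nested lists, every pixel is assumed to carry a reference label, and the sub-area counts are parameters; the function names are hypothetical. The difference map subtracts the second model's density from the first, so negative cells mean that the first model makes fewer errors there.

```python
def error_density(reference, predicted, n_rows, n_cols):
    """Fraction of misclassified pixels in each of n_rows x n_cols sub-areas."""
    height, width = len(reference), len(reference[0])
    errors = [[0] * n_cols for _ in range(n_rows)]
    totals = [[0] * n_cols for _ in range(n_rows)]
    for i in range(height):
        r = i * n_rows // height          # sub-area row of pixel row i
        for j in range(width):
            c = j * n_cols // width       # sub-area column of pixel column j
            totals[r][c] += 1
            errors[r][c] += reference[i][j] != predicted[i][j]
    return [
        [errors[r][c] / totals[r][c] if totals[r][c] else 0.0 for c in range(n_cols)]
        for r in range(n_rows)
    ]


def density_difference(density_a, density_b):
    """Cell-wise difference a - b; negative values favour model a."""
    return [[a - b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(density_a, density_b)]


if __name__ == "__main__":
    reference = [[0] * 4 for _ in range(4)]
    model_a = [[1, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
    model_b = [[1, 0, 0, 0], [0, 0, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]]
    dens_a = error_density(reference, model_a, 2, 2)
    dens_b = error_density(reference, model_b, 2, 2)
    print(dens_a)                               # [[0.25, 0.0], [0.0, 0.0]]
    print(density_difference(dens_a, dens_b))   # [[0.0, 0.0], [0.0, -1.0]]
```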

4.1.2 Performance of CldNet across cloud types and clear sky

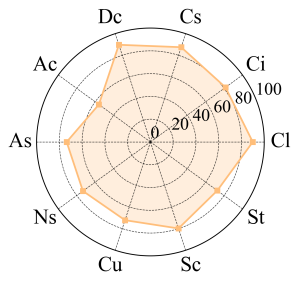

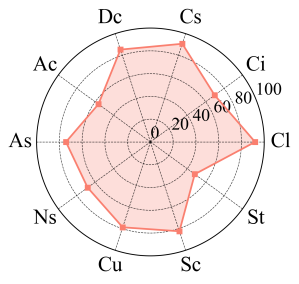

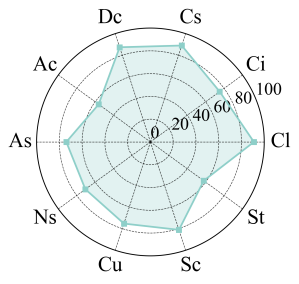

For a better assessment, detailed statistics of each cloud type prediction at 2022-09-23 03:00 (UTC+0) are provided. Classification indicators of all models, including precision, recall, and -score are computed and recorded in Table A.2. The results show that CldNet performs best, and PSPNet performs worst. The classification indicators obtained by CldNet for each cloud type are presented in Fig. 11. Meanwhile, the number of pixels for each cloud type obtained by CldNet at 2022-09-23 03:00 (UTC+0) is summarized in Table A.3. From Table A.3, cloud types Ci and Cu are mistakenly identified as Ac due to their cloud optical thickness belonging to the same category and cloud top height of Ac being between Ci and Cu. Therefore, the precision of Ac is relatively low in Fig. 11(a). In Fig. 11(b), recall of St is lowest because a large number of cloud type St pixels are mistakenly identified as As, Ns, and Sc.

The $F_1$-score is calculated from precision and recall, and the model performs poorly on cloud types Ac and St. For multi-classification tasks, accuracy/micro-$F_1$, macro-$F_1$, weighted-$F_1$, and accuracy (N/Y for cloud) at 2022-09-23 03:00 (UTC+0) are computed and recorded in Table A.4. The purpose of accuracy (N/Y for cloud) is to measure the ability of the model to distinguish between clear and cloudy skies. The accuracies (N/Y for cloud) of CldNet, SegNet, PSPNet, DeepLabV3+, UNet, ResUnet, and UNetS are 95.27%, 89.70%, 88.33%, 91.23%, 95.00%, 87.32%, and 94.58%, respectively. Across all of the metrics, CldNet maintains SOTA performance among all of the models.
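As an illustration of how these indicators relate to one another, the sketch below derives them from the CldNet confusion matrix of Table A.3 (rows are reference classes, columns are predicted classes). The function and variable names are hypothetical, and the code assumes that the first class (Cl) is clear sky and that every class occurs at least once in both reference and prediction.

```python
import numpy as np

CLASSES = ["Cl", "Ci", "Cs", "Dc", "Ac", "As", "Ns", "Cu", "Sc", "St"]

# Confusion matrix copied from Table A.3 (rows: reference, columns: prediction).
CM = np.array([
    [1292447,  33454,    381,     83,  56481,    664,    78,  21029,    743,    34],
    [  59786, 482596,   5715,      0, 134868,   5302,     0,   2955,    165,     0],
    [     30,  22777, 558228,  14000,   1726,  18853,   210,      0,      0,     0],
    [     22,      0,  26457, 184882,      0,   2559,  2916,      0,      0,     0],
    [  49754,  47880,    669,      0, 367854,  21679,     3, 151048,  12743,     0],
    [    461,   4689,  45733,   1316,  17942, 383128, 15806,   3314,  43423,   555],
    [    417,      0,    918,   5811,     11,  23972, 84935,      2,   4702,  3810],
    [  32946,    388,      0,      0,  74181,    975,     0, 507022,  28015,     1],
    [   1255,      0,      0,      0,   4209,  60139,  2701,  16655, 430180,  6601],
    [    119,      0,      0,      0,      0,   3383,  9222,      0,  18710, 29192],
], dtype=np.int64)

def classification_metrics(cm):
    """Per-class and aggregate indicators from a square confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    support = cm.sum(axis=1)        # reference pixels per class
    predicted = cm.sum(axis=0)      # predicted pixels per class
    total = cm.sum()

    precision = tp / predicted
    recall = tp / support
    f1 = 2 * precision * recall / (precision + recall)

    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        # For single-label multi-class problems, accuracy equals micro-F1.
        "accuracy": tp.sum() / total,
        "macro_f1": f1.mean(),
        "weighted_f1": (f1 * support).sum() / total,
        # Clear vs. cloudy: index 0 is assumed to be clear sky, all others cloudy.
        "binary_accuracy": (cm[0, 0] + cm[1:, 1:].sum()) / total,
    }

if __name__ == "__main__":
    m = classification_metrics(CM)
    for name, r in zip(CLASSES, m["recall"]):
        print(f"{name}: recall = {r:.5f}")
    for key in ("accuracy", "macro_f1", "weighted_f1", "binary_accuracy"):
        print(f"{key} = {m[key]:.5f}")
```

Run on this matrix, the aggregate values should come out close to the CldNet row of Table A.4, which is a quick consistency check between the two tables.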

4.2 The impact of auxiliary information and satellite spectral channels

In the previous section, CldNet was found to perform best among all models, and it is therefore chosen for a more in-depth study. To improve the accuracy of the model, auxiliary information is added. The auxiliary information includes satellite zenith angle (SAZ), satellite azimuth angle (SAA), solar zenith angle (SOZ), solar azimuth angle (SOA), longitude, latitude, altitude, and underlying surface attributes (land or water). The impact of the auxiliary information on CldNet is explored in Table 2, and it is found that CldNet-W, which adds SAZ, SAA, SOZ and SOA to the model inputs, performs best. Compared with CldNet, the test accuracy of CldNet-W improves by approximately 1.35 percentage points. This indicates that the auxiliary information can enhance the ability of the model to identify cloud types.

| Auxiliary information added (of SAZ, SAA, SOZ, SOA, longitude, latitude, altitude, underlying surface) | Training accuracy (%) | Validation accuracy (%) | Test accuracy (%) |
|---|---|---|---|
| None (CldNet) | 81.76±1.64 | 81.58±1.63 | 80.89±2.18 |
| SAZ, SAA, SOZ, SOA (CldNet-W) | 82.86±1.71 | 82.96±1.60 | 82.23±2.14 |
| 2 of the 8 inputs (✓ ✓) | 82.10±1.69 | 81.97±1.61 | 81.01±2.70 |
| 2 of the 8 inputs (✓ ✓) | 82.42±1.65 | 82.35±1.58 | 81.68±2.02 |
| 4 of the 8 inputs (✓ ✓ ✓ ✓) | 82.31±1.64 | 82.20±1.57 | 81.49±2.13 |
| 3 of the 8 inputs (✓ ✓ ✓) | 82.10±1.62 | 81.88±1.53 | 81.22±1.99 |
| 1 of the 8 inputs (✓) | 81.73±1.61 | 81.63±1.58 | 80.96±2.09 |
| All 8 inputs | 82.56±1.77 | 82.70±1.75 | 82.01±2.28 |

An important aim of this study is to extrapolate cloud types to nighttime areas by training model parameters on daytime label data. VIS and NIR channels are available during the day but not at night. When the model does not use VIS and NIR information, the mask module sets the input data of those channels to zero. CldNet-O is built on CldNet-W: its mask module, configured through the mask ratio and mask bands, sets the model input data involving VIS and NIR channels to zero.
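A minimal sketch of such a masking step is given below. It is not the authors' implementation. Three things are assumptions: the channel layout (AHI bands B01 to B16 stacked in order along the channel axis, so that the visible bands B01 to B03 and near-infrared bands B04 to B06 occupy indices 0 to 5), the reading of the mask ratio as the per-sample probability of applying the mask, and the function name `mask_bands`.

```python
import numpy as np

# Assumed layout: B01-B03 (VIS) and B04-B06 (NIR) are the first six channels.
VIS_NIR_INDICES = [0, 1, 2, 3, 4, 5]

def mask_bands(x, band_indices=VIS_NIR_INDICES, mask_ratio=1.0, rng=None):
    """Return a copy of x with the selected bands set to zero.

    x:            array of shape (batch, channels, height, width).
    band_indices: channels to zero out (the "mask bands").
    mask_ratio:   probability that a given sample is masked (assumed meaning);
                  1.0 masks every sample, as needed for nighttime inference.
    """
    rng = np.random.default_rng() if rng is None else rng
    out = np.array(x, dtype=float, copy=True)
    masked_samples = np.flatnonzero(rng.random(out.shape[0]) < mask_ratio)
    # np.ix_ builds an open mesh so that every (sample, band) pair is selected.
    out[np.ix_(masked_samples, band_indices)] = 0.0
    return out
```

Under these assumptions, calling `mask_bands(batch, mask_ratio=1.0)` mimics the nighttime setting in which no VIS or NIR information reaches the network, while the infrared channels and any auxiliary channels pass through unchanged.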

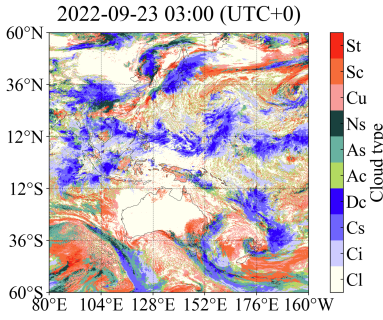

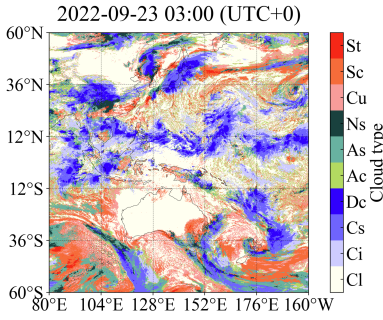

The overall cloud-type distributions predicted by the trained CldNet-W and CldNet-O at 2022-09-23 03:00 (UTC+0) are shown in Fig. 12, and the corresponding indicators are recorded in Table A.2 and Table A.4. In Fig. 12(c), CldNet-W performs better than CldNet-O in cloud-type identification for most regions. However, because CldNet-O does not use VIS and NIR channels, it can extrapolate cloud types to the nighttime region. Compared with CldNet-W, the accuracy/micro-$F_1$ and accuracy (N/Y for cloud) of CldNet-O decrease by 7.34 and 0.45 percentage points, respectively. Thus, removing VIS and NIR has little effect on the distinction between clear and cloudy skies but a significant impact on the identification of cloud types, a result also confirmed by Zhang et al. [2019]. To construct a high-fidelity, all-day, spatiotemporal cloud-type database over the entire satellite observation area, it is vitally important that CldNet-W and CldNet-O can be deployed online for daytime and nighttime areas, respectively.

4.3 All-day recognition of cloud types

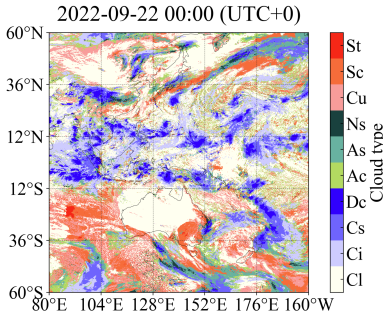

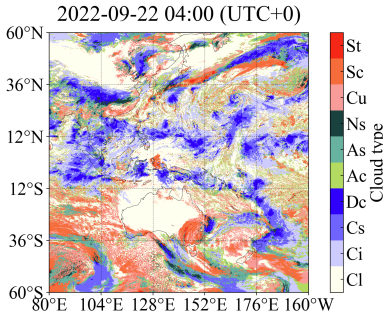

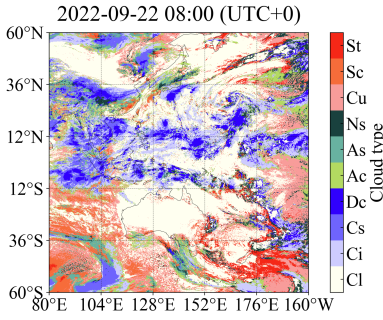

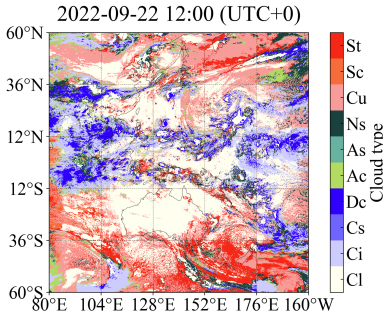

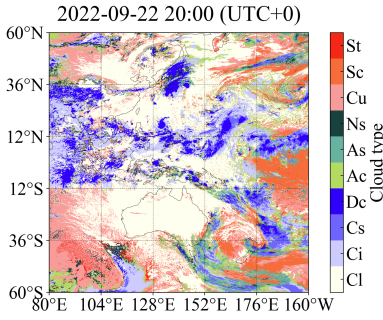

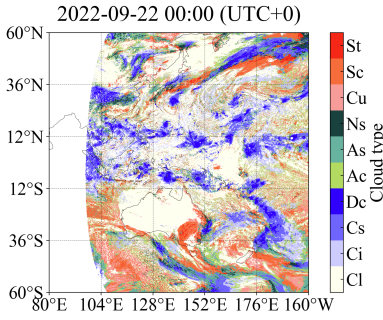

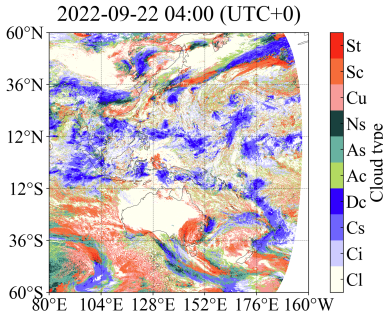

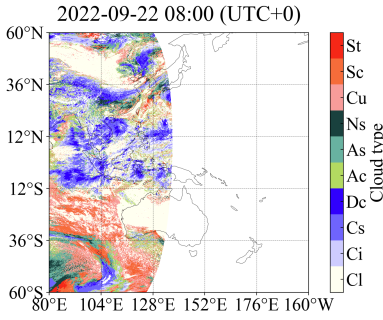

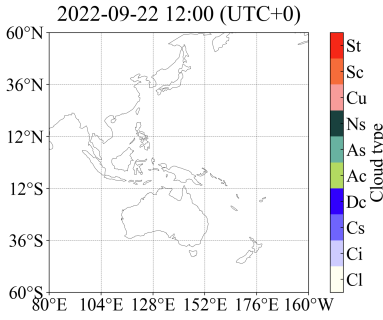

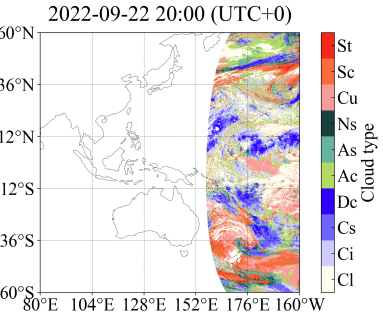

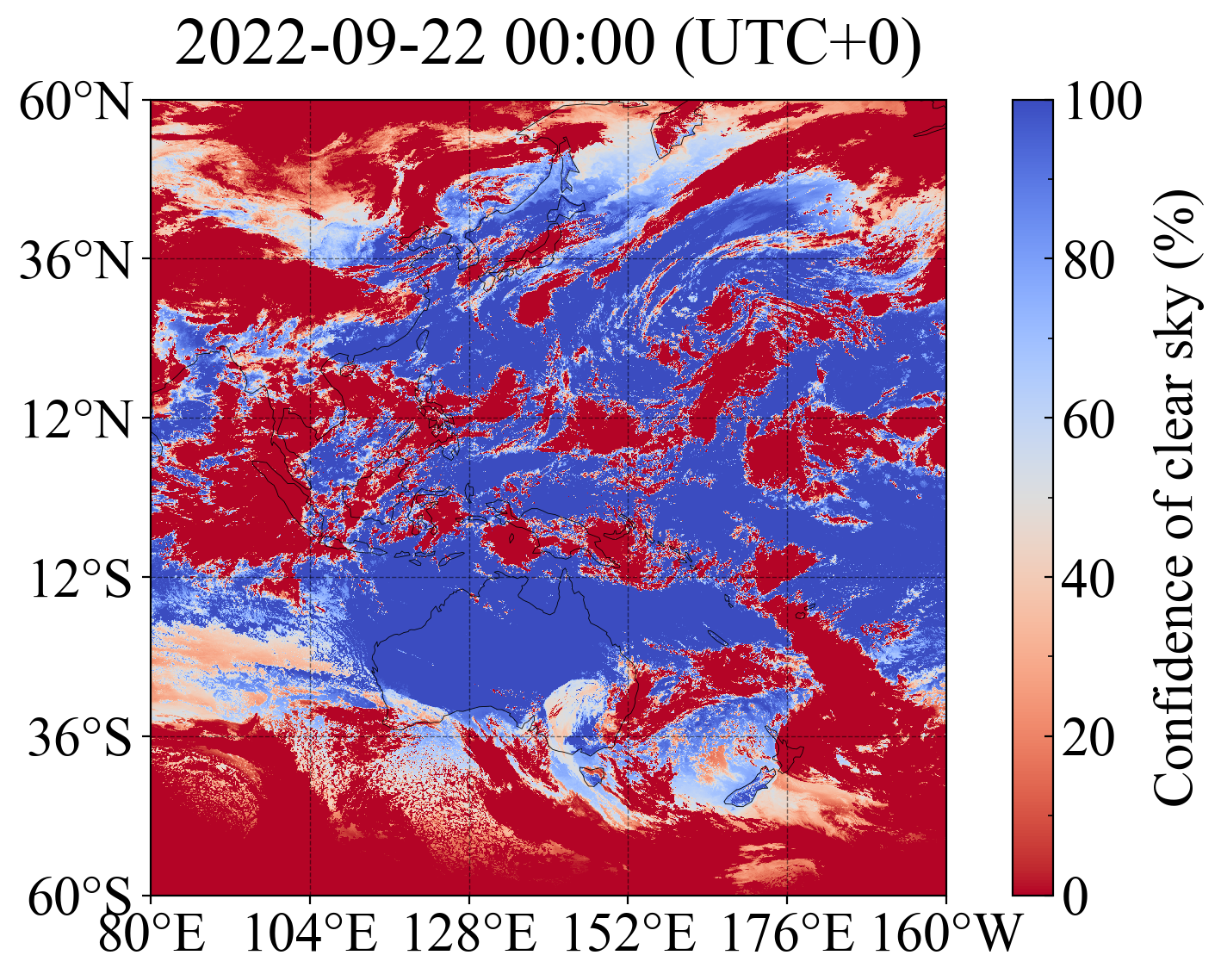

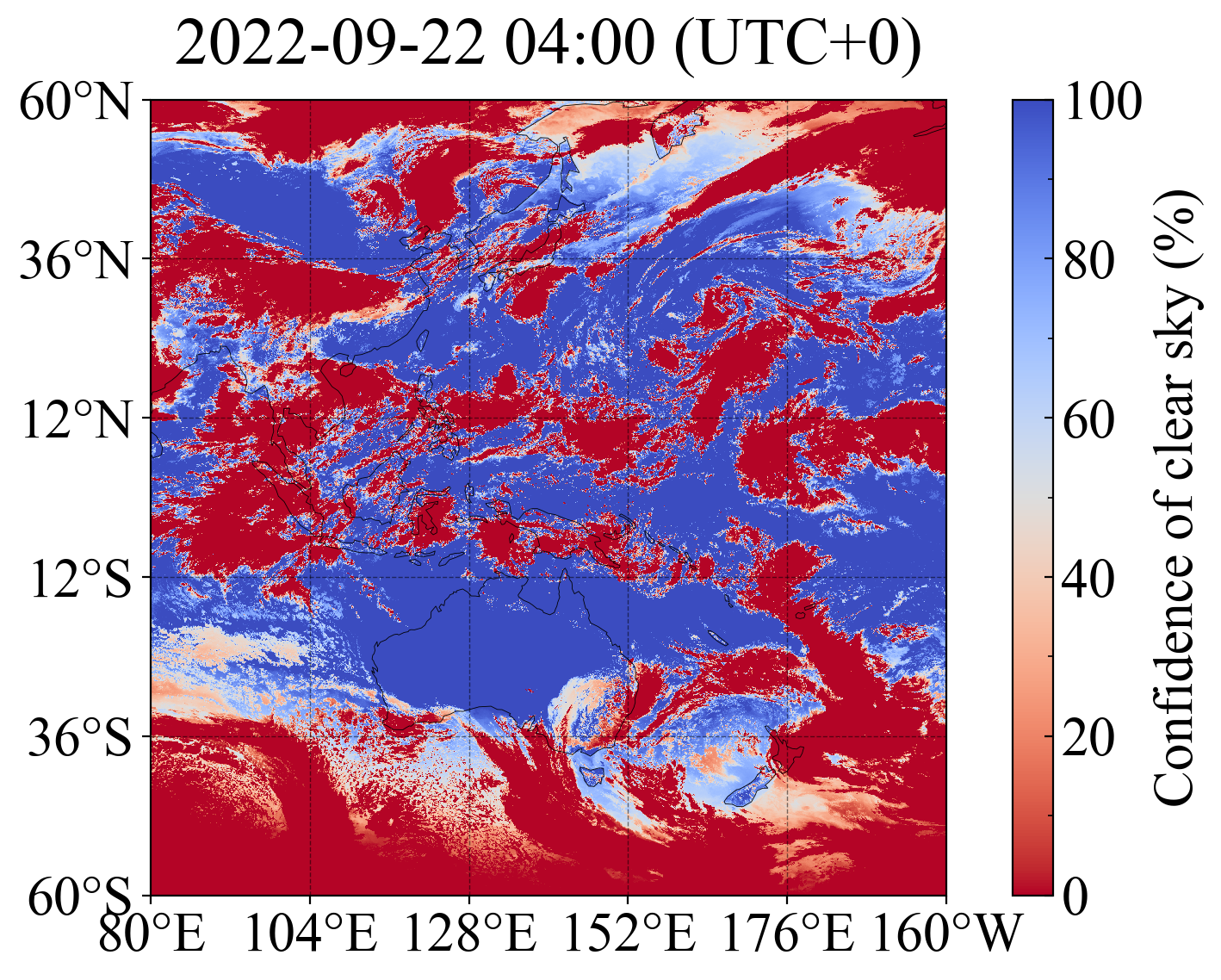

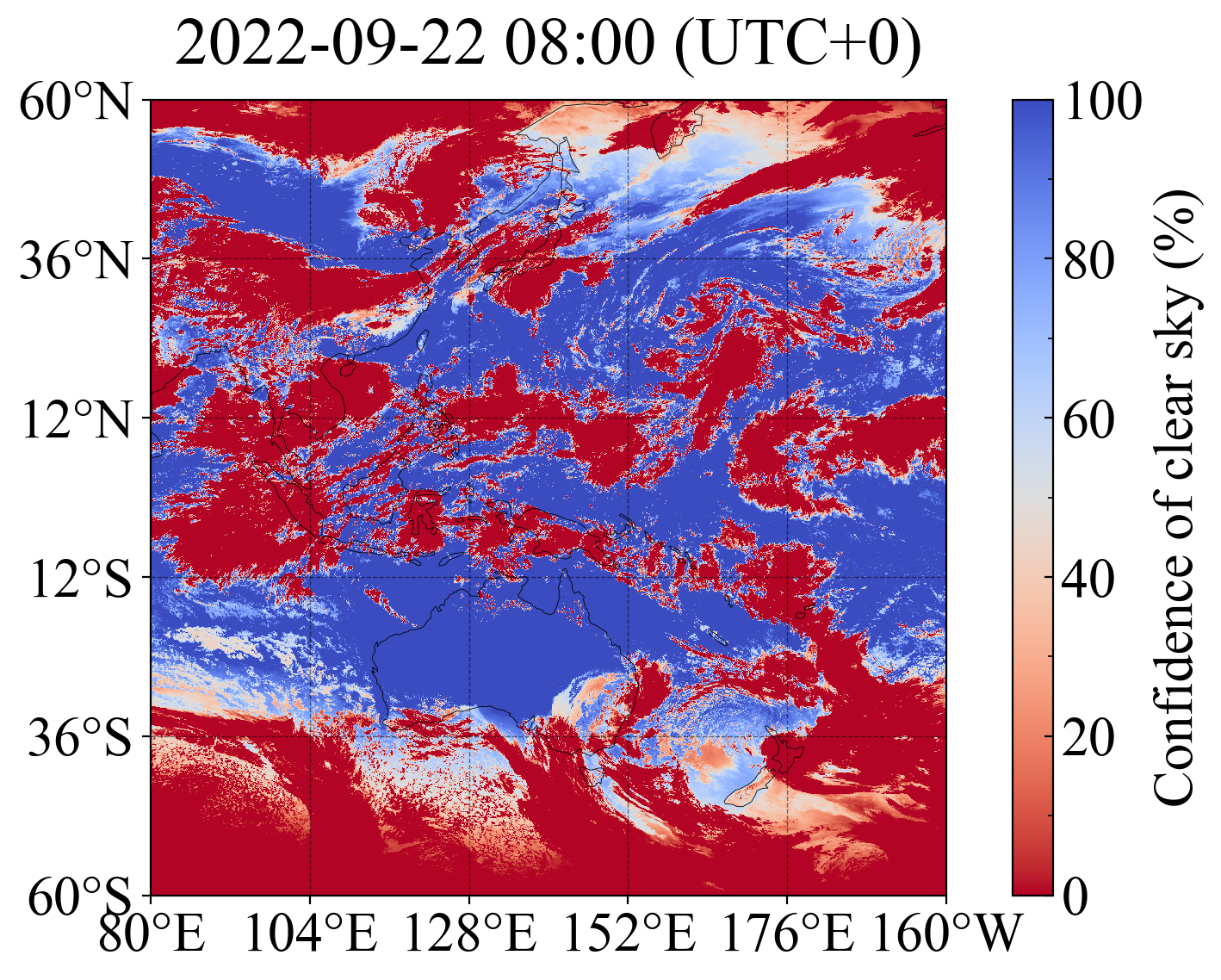

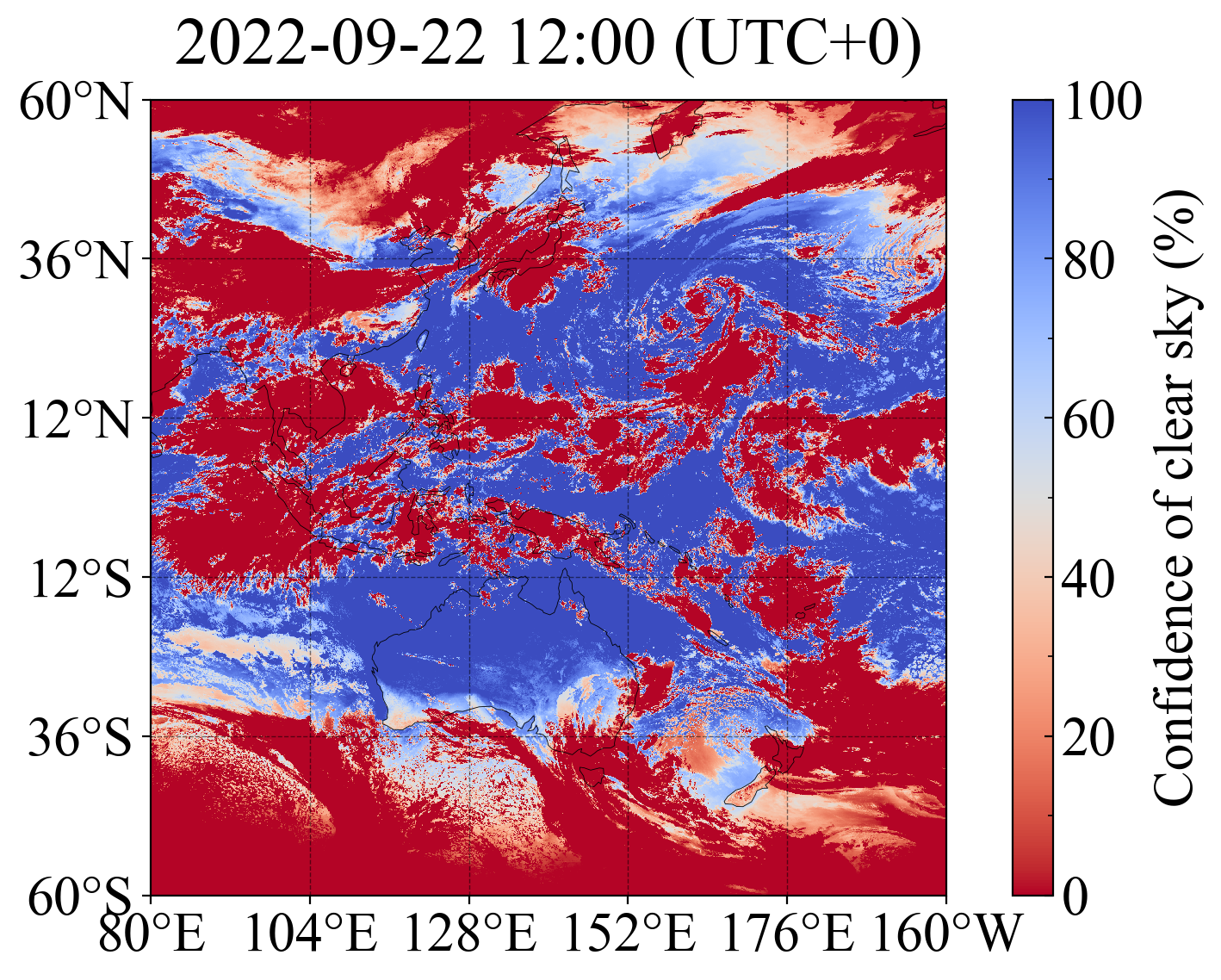

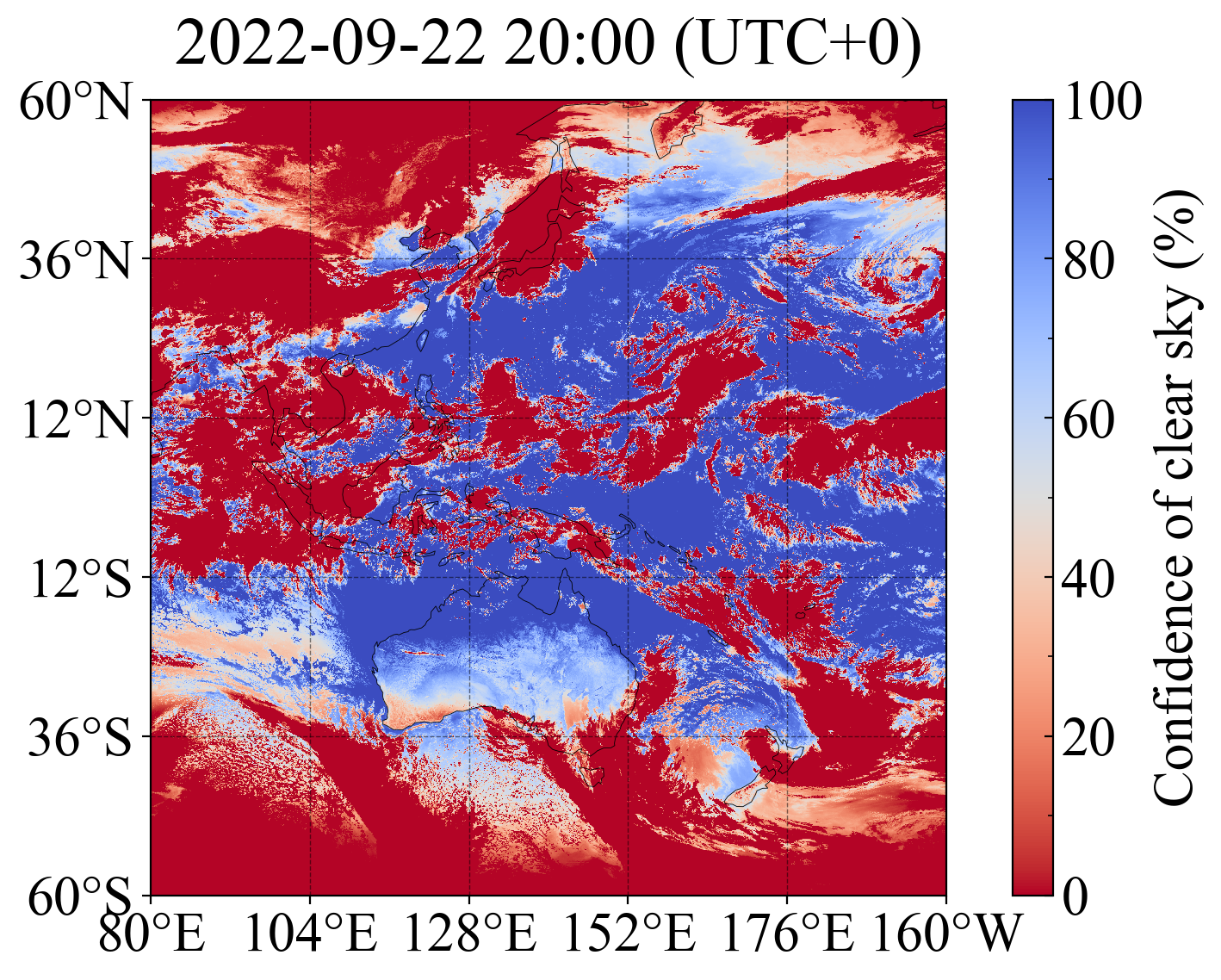

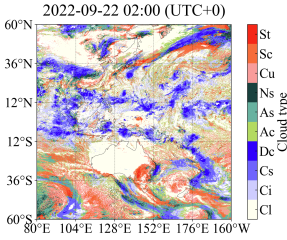

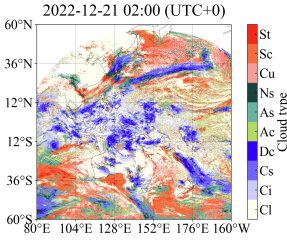

The overall distributions of cloud types every 4 h during 2022-09-22 for CldNet-O and the reference are shown in Fig. 13. Unlike the reference in Fig. 13(b), CldNet-O in Fig. 13(a) also provides cloud-type distributions in the nighttime area. To corroborate the predicted results in the nighttime area, a method [Shang et al., 2017] for identifying clear and cloudy sky over land based on the BT of the Himawari-8/9 spectral channel B14 is adopted. Here, the confidence of clear sky for each pixel in the Himawari-8/9 image is computed following that method. The overall distributions of the confidence of clear sky every 4 h during 2022-09-22 are shown in Fig. 14.

The cloud cover over Australia (the black box in Fig. 13) is enlarged and compared with the confidence of clear sky at 2022-09-22 16:00 (UTC+0) in Fig. 15. The confidence of clear sky and cloud cover in the region R01 in Fig. 15 exhibit a very similar distribution, which verifies the model’s ability to capture cloud patterns. The cloud cover in the region R02 in Fig. 15(a) shows a long strip distribution, which is supported by the confidence of clear sky in Fig. 15(b). The cloud cover and confidence of clear sky in the region R03 are generally consistent. Overall, the results indicate that CldNet-O can capture subtle features of cloud distribution.

5 Discussion

5.1 Generalization ability

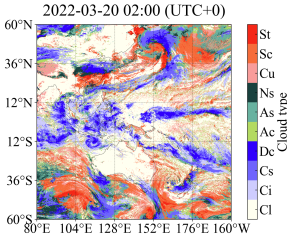

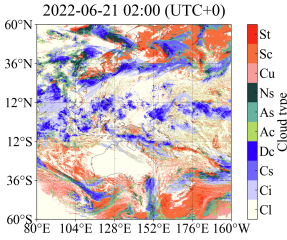

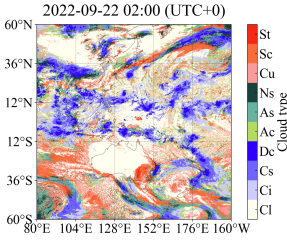

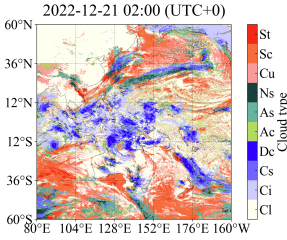

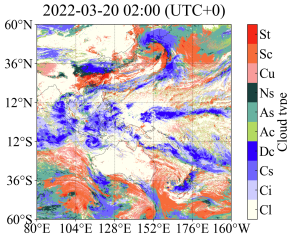

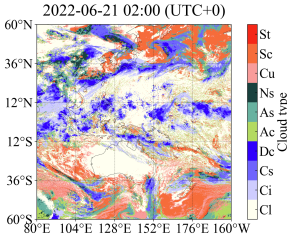

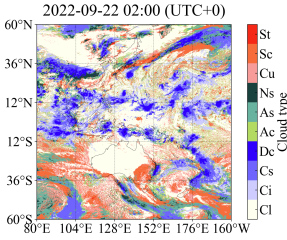

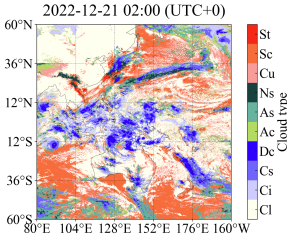

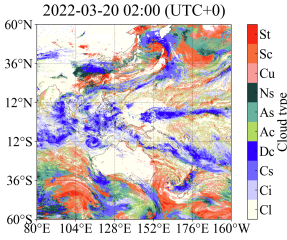

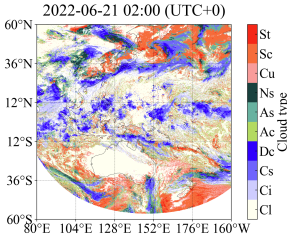

In this section, we directly apply the previously trained models, i.e., the trained CldNet-W and CldNet-O, without any fine-tuning to higher-resolution satellite spectral data from the Himawari-8/9 satellite sensors to obtain cloud-type distributions at the same higher resolution. Four time points, 2022-03-20 2:00 (UTC+0), 2022-06-21 2:00 (UTC+0), 2022-09-22 2:00 (UTC+0), and 2022-12-21 2:00 (UTC+0), are selected for cloud-type prediction. The higher-resolution cloud-type distributions predicted by the trained CldNet-W and CldNet-O are shown in Fig. 16(a) and 16(b), respectively. JAXA's P-Tree system only provides the cloud-type product at a lower spatial resolution, which is used as the reference in Fig. 16(c). Judging from the overall distribution of cloud types in Fig. 16, the trained CldNet-W and CldNet-O achieve good performance.

To quantitatively evaluate the generalization ability of CldNet-W and CldNet-O, two methods, Low2High and High2Low, are used to calculate the cloud-type classification indicators, because the prediction and the reference differ in resolution. In Low2High, the reference is first interpolated from the lower to the higher resolution and then compared with the prediction to calculate the classification indicators. In High2Low, the prediction is first resampled by nearest-neighbor interpolation from the higher to the lower resolution and then compared with the reference to calculate the classification indicators.
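The two comparison routes can be sketched as follows. This is a simplified illustration rather than the authors' code: the function names are hypothetical, nearest-neighbor resampling is assumed for both directions (the text specifies it only for High2Low, but it keeps class labels intact), and both grids are assumed to cover the same area.

```python
import numpy as np

def nearest_resample(grid, out_shape):
    """Resample a 2-D label map to out_shape by nearest-neighbor lookup.

    Works for non-integer scale factors by mapping each output cell center
    to the input cell that contains it.
    """
    grid = np.asarray(grid)
    rows = np.floor((np.arange(out_shape[0]) + 0.5) * grid.shape[0] / out_shape[0]).astype(int)
    cols = np.floor((np.arange(out_shape[1]) + 0.5) * grid.shape[1] / out_shape[1]).astype(int)
    rows = np.clip(rows, 0, grid.shape[0] - 1)
    cols = np.clip(cols, 0, grid.shape[1] - 1)
    return grid[np.ix_(rows, cols)]

def low2high_accuracy(pred_high, ref_low):
    """Low2High: bring the reference up to the prediction grid, then compare."""
    pred_high = np.asarray(pred_high)
    ref_high = nearest_resample(ref_low, pred_high.shape)
    return float((pred_high == ref_high).mean())

def high2low_accuracy(pred_high, ref_low):
    """High2Low: bring the prediction down to the reference grid, then compare."""
    ref_low = np.asarray(ref_low)
    pred_low = nearest_resample(pred_high, ref_low.shape)
    return float((pred_low == ref_low).mean())
```

In this sketch High2Low compares only one sampled high-resolution pixel per reference cell, whereas Low2High compares every high-resolution pixel against a replicated reference label, so the two routes can give different scores for the same prediction.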

The results of the cloud-type classification indicators are recorded in Table 3. The indicators obtained by High2Low are better than those obtained by Low2High. For accuracy (N/Y for cloud), the results of CldNet-W and CldNet-O are all above 87%, which indicates that the models distinguish clear and cloudy skies very well. Overall, the trained models CldNet-W and CldNet-O achieve good results when directly applied to high-resolution satellite spectral information to predict high-resolution cloud-type distributions, in terms of accuracy/micro-$F_1$, macro-$F_1$, weighted-$F_1$, and accuracy (N/Y for cloud). This also demonstrates the good generalization ability of the models on higher-resolution input data.

| Model | Time | Accuracy/micro-$F_1$ | macro-$F_1$ | weighted-$F_1$ | Accuracy (N/Y for cloud) |

|---|---|---|---|---|---|

| CldNet-W | 2022-03-20 2:00 (UTC+0) | 0.71157 - 0.74954 | 0.68238 - 0.72400 | 0.70533 - 0.74367 | 0.91217 - 0.92118 |

| CldNet-W | 2022-06-21 2:00 (UTC+0) | 0.74295 - 0.79098 | 0.70851 - 0.76545 | 0.73599 - 0.78507 | 0.90991 - 0.92289 |

| CldNet-W | 2022-09-22 2:00 (UTC+0) | 0.72115 - 0.76174 | 0.68462 - 0.73283 | 0.71372 - 0.75503 | 0.91207 - 0.92252 |

| CldNet-W | 2022-12-21 2:00 (UTC+0) | 0.69669 - 0.74468 | 0.66100 - 0.71047 | 0.69160 - 0.73984 | 0.91068 - 0.92309 |

| CldNet-O | 2022-03-20 2:00 (UTC+0) | 0.66161 - 0.68174 | 0.57814 - 0.59656 | 0.64959 - 0.66994 | 0.91331 - 0.92004 |

| CldNet-O | 2022-06-21 2:00 (UTC+0) | 0.69331 - 0.71657 | 0.58604 - 0.60743 | 0.68059 - 0.70425 | 0.90689 - 0.91522 |

| CldNet-O | 2022-09-22 2:00 (UTC+0) | 0.66723 - 0.69030 | 0.57313 - 0.59417 | 0.65461 - 0.67831 | 0.90940 - 0.91745 |

| CldNet-O | 2022-12-21 2:00 (UTC+0) | 0.62770 - 0.64746 | 0.53697 - 0.55409 | 0.60352 - 0.62373 | 0.87654 - 0.88287 |

5.2 Limitation and future research

One limitation of this study is that the satellite Himawari-8 was replaced by Himawari-9 in December 2022. Therefore, the training data come from Himawari-8, while the test data come partly from Himawari-9. Although both satellites carry the Advanced Himawari Imager, the spectral data distributions of the two satellites may differ slightly. The experimental results in Section 4.1.1 indicate that directly applying the model trained on Himawari-8 spectral data to Himawari-9 spectral data reduces the accuracy of cloud-type recognition. To ensure accuracy, a separate set of model parameters should be trained on the spectral data from each satellite. Another limitation is that the labels used for model training are daytime cloud-type labels. To enhance the credibility of the model, the model parameters still need to be trained using other data containing nighttime cloud-type labels.

In future research, we will take measures to address the limitations mentioned above and strive to expand the application scope of this model from regional to global scale. To achieve global cloud-type coverage, the proposed model will be applied to multiple geostationary observation satellites, such as Meteosat, GOES-W/E, FengYun, and Himawari. The global distribution of cloud types is crucial in global climate change and environmental assessment research.

6 Conclusions

In this study, a knowledge-based data-driven (KBDD) framework for all-day identification of cloud types based on spectral information from Himawari-8/9 satellite sensors is designed. The KBDD framework mainly consists of a knowledge module, a mask module, the addition of auxiliary information, a network candidate set, and a mask loss. Meanwhile, a novel, simple, and efficient network, named CldNet, is proposed, which mainly consists of a DW-ASPP module and a DW-U module inspired by the ASPP module of DeepLabV3+ and by UNet, respectively.

Our proposed model, CldNet, achieves an accuracy of 80.89±2.18% on the test dataset. Compared with other commonly used segmentation networks, including SegNet (61.30±1.32%), PSPNet (55.58±1.43%), DeepLabV3+ (66.25±1.45%), UNet (79.50±2.37%), ResUnet (58.27±2.00%) and UNetS (77.37±2.34%), CldNet is state-of-the-art in cloud-type recognition. Meanwhile, the addition of the auxiliary information, including SAZ, SAA, SOZ and SOA, improves the accuracy of CldNet by approximately 1.35 percentage points.

By setting the input data involving VIS and NIR to zero in the mask module, the trained CldNet-O is capable of cloud-type prediction over nighttime areas. More importantly, the trained models CldNet-W and CldNet-O are applied directly, without any fine-tuning, to higher-resolution satellite spectral data from the Himawari-8/9 satellite sensors to obtain cloud-type distributions at the same higher resolution, achieving accuracies of about 70–79% and 63–72%, respectively (Table 3). Furthermore, these higher-resolution cloud-type distributions are similar to the lower-resolution ones provided by JAXA's P-Tree system. This demonstrates that our framework has strong generalization ability for high-resolution model input data.

The KBDD framework using CldNet is a highly effective cloud-type identification system capable of providing a high-fidelity, all-day, spatiotemporal cloud-type database for many climate assessment fields. In practice, CldNet-W and CldNet-O can be deployed for daytime and nighttime areas, respectively. Meanwhile, the total parameters of CldNet-W/CldNet-O are only 0.46M, making them easy to deploy online on edge devices. In the long term, we will explore extending the KBDD framework to the global scale with additional cloud-property prediction capabilities, and even embedded physical models, to improve accuracy.

CRediT authorship contribution statement

Longfeng Nie: formal analysis, data curation, methodology, software, visualization, writing - original draft. Yuntian Chen: formal analysis, writing - review and editing, funding acquisition. Mengge Du: writing - review and editing. Changqi Sun: writing - review and editing. Dongxiao Zhang: supervision, writing - review and editing, funding acquisition.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Data availability

The specific network structure settings for all models are available on the GitHub page https://github.com/rsai0/PMD/tree/main/CldNetV1_0_0. Further updates will follow.

Acknowledgements

This work was supported and partially funded by the National Center for Applied Mathematics Shenzhen (NCAMS), the National Natural Science Foundation of China (Grant No. 62106116), China Meteorological Administration Climate Change Special Program (CMA-CCSP) under Grant QBZ202316, the Major Key Project of PCL (Grant No. PCL2022A05), and High Performance Computing Center at Eastern Institute of Technology, Ningbo.

Appendix A

| Model | Stage | Mean (%) | Std (%) | Min (%) | Median (%) | Max (%) |

|---|---|---|---|---|---|---|

| CldNet | Test | 80.89 | 2.18 | 75.60 | 81.10 | 84.91 |

| CldNet | Training | 81.76 | 1.64 | 77.37 | 81.64 | 85.05 |

| CldNet | Validation | 81.58 | 1.63 | 78.42 | 81.21 | 85.14 |

| SegNet | Test | 61.30 | 1.32 | 57.71 | 61.40 | 64.77 |

| SegNet | Training | 61.42 | 1.42 | 57.22 | 61.49 | 64.56 |

| SegNet | Validation | 61.74 | 1.41 | 58.25 | 61.87 | 64.57 |

| PSPNet | Test | 55.58 | 1.43 | 52.17 | 55.64 | 58.95 |

| PSPNet | Training | 54.95 | 2.06 | 48.99 | 55.17 | 60.10 |

| PSPNet | Validation | 55.92 | 1.70 | 51.87 | 56.37 | 59.32 |

| DeepLabV3+ | Test | 66.25 | 1.45 | 60.74 | 66.23 | 69.80 |

| DeepLabV3+ | Training | 66.93 | 1.23 | 63.30 | 66.92 | 69.65 |

| DeepLabV3+ | Validation | 66.83 | 1.23 | 63.53 | 67.06 | 69.35 |

| UNet | Test | 79.50 | 2.37 | 73.12 | 79.80 | 83.70 |

| UNet | Training | 80.23 | 1.85 | 74.98 | 80.18 | 83.97 |

| UNet | Validation | 80.37 | 1.60 | 77.31 | 79.84 | 83.75 |

| ResUnet | Test | 58.27 | 2.00 | 53.77 | 58.47 | 62.39 |

| ResUnet | Training | 58.04 | 2.18 | 50.11 | 58.38 | 61.88 |

| ResUnet | Validation | 58.77 | 2.36 | 54.40 | 59.15 | 63.29 |

| UNetS | Test | 77.37 | 2.34 | 71.53 | 77.70 | 81.67 |

| UNetS | Training | 77.64 | 1.89 | 72.40 | 77.53 | 81.41 |

| UNetS | Validation | 78.13 | 1.57 | 75.26 | 77.98 | 81.73 |

| UNetS-W | Test | 79.08 | 2.23 | 73.83 | 79.35 | 83.09 |

| UNetS-W | Training | 79.51 | 1.92 | 73.86 | 79.34 | 83.24 |

| UNetS-W | Validation | 79.95 | 1.46 | 76.79 | 79.61 | 83.04 |

| UNetS-O | Test | 69.99 | 1.75 | 64.73 | 70.15 | 73.51 |

| UNetS-O | Training | 70.11 | 1.43 | 66.77 | 70.11 | 73.20 |

| UNetS-O | Validation | 70.55 | 1.33 | 67.89 | 70.63 | 73.42 |

| CldNet-W | Test | 82.23 | 2.14 | 77.23 | 82.38 | 86.17 |

| CldNet-W | Training | 82.86 | 1.71 | 77.87 | 82.71 | 86.36 |

| CldNet-W | Validation | 82.96 | 1.60 | 79.50 | 82.44 | 86.58 |

| CldNet-O | Test | 73.21 | 2.02 | 67.11 | 73.53 | 76.83 |

| CldNet-O | Training | 74.04 | 1.34 | 70.63 | 74.07 | 76.90 |

| CldNet-O | Validation | 73.98 | 1.35 | 71.21 | 74.07 | 76.66 |

| Model | Indicator | Cl | Ci | Cs | Dc | Ac | As | Ns | Cu | Sc | St |

|---|---|---|---|---|---|---|---|---|---|---|---|

| CldNet | Precision | 0.89926 | 0.81549 | 0.87483 | 0.89708 | 0.55967 | 0.73586 | 0.73301 | 0.72223 | 0.79858 | 0.72630 |

| CldNet | Recall | 0.91963 | 0.69801 | 0.90647 | 0.85264 | 0.56451 | 0.74197 | 0.68178 | 0.78788 | 0.82451 | 0.48151 |

| CldNet | $F_1$-score | 0.90933 | 0.75219 | 0.89037 | 0.87430 | 0.56208 | 0.73890 | 0.70647 | 0.75363 | 0.81134 | 0.57910 |

| SegNet | Precision | 0.75953 | 0.65885 | 0.68310 | 0.70178 | 0.37352 | 0.48296 | 0.44760 | 0.49174 | 0.51699 | 0.30031 |

| SegNet | Recall | 0.87888 | 0.52666 | 0.77155 | 0.57951 | 0.23953 | 0.50415 | 0.27200 | 0.52409 | 0.66293 | 0.03409 |

| SegNet | $F_1$-score | 0.81486 | 0.58538 | 0.72464 | 0.63481 | 0.29188 | 0.49333 | 0.33837 | 0.50740 | 0.58093 | 0.06124 |

| PSPNet | Precision | 0.77093 | 0.49427 | 0.55745 | 0.54970 | 0.30614 | 0.43938 | 0.49505 | 0.46337 | 0.49045 | 0.54712 |

| PSPNet | Recall | 0.77889 | 0.54580 | 0.73306 | 0.72357 | 0.25343 | 0.37933 | 0.32664 | 0.33915 | 0.58362 | 0.09041 |

| PSPNet | $F_1$-score | 0.77489 | 0.51876 | 0.63331 | 0.62476 | 0.27730 | 0.40715 | 0.39359 | 0.39165 | 0.53299 | 0.15517 |

| DeepLabV3+ | Precision | 0.80689 | 0.70137 | 0.77049 | 0.80571 | 0.42516 | 0.60434 | 0.60918 | 0.52389 | 0.63459 | 0.57543 |

| DeepLabV3+ | Recall | 0.86784 | 0.58361 | 0.81049 | 0.71387 | 0.37366 | 0.65713 | 0.44484 | 0.59872 | 0.65803 | 0.18535 |

| DeepLabV3+ | $F_1$-score | 0.83626 | 0.63709 | 0.78999 | 0.75701 | 0.39775 | 0.62963 | 0.51420 | 0.55881 | 0.64610 | 0.28039 |

| UNet | Precision | 0.89000 | 0.82380 | 0.85197 | 0.88591 | 0.55346 | 0.69083 | 0.70279 | 0.71192 | 0.78000 | 0.66344 |

| UNet | Recall | 0.91971 | 0.66758 | 0.89256 | 0.78625 | 0.54091 | 0.73454 | 0.59482 | 0.78987 | 0.82110 | 0.47234 |

| UNet | $F_1$-score | 0.90461 | 0.73751 | 0.87180 | 0.83311 | 0.54712 | 0.71201 | 0.64431 | 0.74887 | 0.80002 | 0.55181 |

| ResUnet | Precision | 0.70701 | 0.60504 | 0.64891 | 0.71789 | 0.35650 | 0.42708 | 0.56189 | 0.48591 | 0.51580 | 0.08512 |

| ResUnet | Recall | 0.86831 | 0.55085 | 0.78377 | 0.51449 | 0.13640 | 0.44127 | 0.23545 | 0.52007 | 0.66036 | 0.00203 |

| ResUnet | $F_1$-score | 0.77940 | 0.57668 | 0.71000 | 0.59940 | 0.19731 | 0.43406 | 0.33185 | 0.50241 | 0.57920 | 0.00396 |

| UNetS | Precision | 0.87848 | 0.80943 | 0.83723 | 0.84560 | 0.52165 | 0.67380 | 0.66010 | 0.68090 | 0.74660 | 0.63135 |

| UNetS | Recall | 0.91656 | 0.65693 | 0.87540 | 0.78989 | 0.49330 | 0.69061 | 0.56905 | 0.76106 | 0.80982 | 0.37454 |

| UNetS | $F_1$-score | 0.89711 | 0.72525 | 0.85589 | 0.81680 | 0.50708 | 0.68210 | 0.61120 | 0.71875 | 0.77693 | 0.47016 |

| UNetS-W | Precision | 0.86887 | 0.82598 | 0.88479 | 0.87900 | 0.56838 | 0.74024 | 0.68192 | 0.71039 | 0.74202 | 0.54179 |

| UNetS-W | Recall | 0.91796 | 0.66130 | 0.91032 | 0.93918 | 0.48786 | 0.67082 | 0.76216 | 0.77122 | 0.84260 | 0.77500 |

| UNetS-W | $F_1$-score | 0.89274 | 0.73452 | 0.89737 | 0.90810 | 0.52505 | 0.70382 | 0.71981 | 0.73955 | 0.78912 | 0.63774 |

| UNetS-O | Precision | 0.86115 | 0.75843 | 0.73966 | 0.68304 | 0.49476 | 0.57628 | 0.57680 | 0.61483 | 0.63152 | 0.40416 |

| UNetS-O | Recall | 0.91666 | 0.57067 | 0.81494 | 0.61837 | 0.39027 | 0.67186 | 0.31917 | 0.69160 | 0.77872 | 0.03141 |

| UNetS-O | $F_1$-score | 0.88804 | 0.65129 | 0.77548 | 0.64910 | 0.43635 | 0.62041 | 0.41094 | 0.65096 | 0.69744 | 0.05828 |

| CldNet-W | Precision | 0.89334 | 0.83895 | 0.91096 | 0.90900 | 0.57737 | 0.77264 | 0.69976 | 0.74225 | 0.80456 | 0.64512 |

| CldNet-W | Recall | 0.92354 | 0.68315 | 0.92700 | 0.95484 | 0.56018 | 0.75058 | 0.82726 | 0.78538 | 0.84481 | 0.72012 |

| CldNet-W | $F_1$-score | 0.90819 | 0.75307 | 0.91891 | 0.93135 | 0.56865 | 0.76145 | 0.75819 | 0.76321 | 0.82419 | 0.68056 |

| CldNet-O | Precision | 0.88089 | 0.79055 | 0.78105 | 0.69935 | 0.54131 | 0.62346 | 0.53390 | 0.67256 | 0.68904 | 0.40466 |

| CldNet-O | Recall | 0.92033 | 0.61353 | 0.83083 | 0.75633 | 0.45802 | 0.69151 | 0.52409 | 0.71505 | 0.77811 | 0.22495 |

| CldNet-O | $F_1$-score | 0.90018 | 0.69088 | 0.80517 | 0.72673 | 0.49620 | 0.65572 | 0.52895 | 0.69316 | 0.73087 | 0.28916 |

| Reference \ Prediction | Cl | Ci | Cs | Dc | Ac | As | Ns | Cu | Sc | St | Recall |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Cl | 1292447 | 33454 | 381 | 83 | 56481 | 664 | 78 | 21029 | 743 | 34 | 0.91963 |
| Ci | 59786 | 482596 | 5715 | 0 | 134868 | 5302 | 0 | 2955 | 165 | 0 | 0.69801 |
| Cs | 30 | 22777 | 558228 | 14000 | 1726 | 18853 | 210 | 0 | 0 | 0 | 0.90647 |
| Dc | 22 | 0 | 26457 | 184882 | 0 | 2559 | 2916 | 0 | 0 | 0 | 0.85264 |
| Ac | 49754 | 47880 | 669 | 0 | 367854 | 21679 | 3 | 151048 | 12743 | 0 | 0.56451 |
| As | 461 | 4689 | 45733 | 1316 | 17942 | 383128 | 15806 | 3314 | 43423 | 555 | 0.74197 |
| Ns | 417 | 0 | 918 | 5811 | 11 | 23972 | 84935 | 2 | 4702 | 3810 | 0.68178 |
| Cu | 32946 | 388 | 0 | 0 | 74181 | 975 | 0 | 507022 | 28015 | 1 | 0.78788 |
| Sc | 1255 | 0 | 0 | 0 | 4209 | 60139 | 2701 | 16655 | 430180 | 6601 | 0.82451 |
| St | 119 | 0 | 0 | 0 | 0 | 3383 | 9222 | 0 | 18710 | 29192 | 0.48151 |
| Precision | 0.89371 | 0.81867 | 0.87425 | 0.89895 | 0.55957 | 0.71936 | 0.75734 | 0.71921 | 0.80165 | 0.72324 | |

| Model | Accuracy/micro-$F_1$ | macro-$F_1$ | weighted-$F_1$ | Accuracy (N/Y for cloud) |

|---|---|---|---|---|

| CldNet | 0.79305 | 0.75777 | 0.79207 | 0.95269 |

| SegNet | 0.61227 | 0.50328 | 0.59734 | 0.89697 |

| PSPNet | 0.55256 | 0.47096 | 0.54198 | 0.88326 |

| DeepLabV3+ | 0.67093 | 0.60472 | 0.66603 | 0.91233 |

| UNet | 0.77928 | 0.73512 | 0.77754 | 0.94996 |

| ResUnet | 0.59120 | 0.47143 | 0.56555 | 0.87320 |

| UNetS | 0.75930 | 0.70613 | 0.75655 | 0.94577 |

| UNetS-W | 0.78079 | 0.75478 | 0.77710 | 0.94310 |

| UNetS-O | 0.69991 | 0.58383 | 0.68996 | 0.94037 |

| CldNet-W | 0.80649 | 0.78678 | 0.80498 | 0.95183 |

| CldNet-O | 0.73310 | 0.65170 | 0.72853 | 0.94735 |

References

- Amini Amirkolaee et al. [2022] Amini Amirkolaee, H., Arefi, H., Ahmadlou, M., & Raikwar, V. (2022). DTM extraction from DSM using a multi-scale DTM fusion strategy based on deep learning. Remote Sens. Environ., 274, 113014. doi:10.1016/j.rse.2022.113014.

- Badrinarayanan et al. [2017] Badrinarayanan, V., Kendall, A., & Cipolla, R. (2017). SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell., 39, 2481–2495. doi:10.1109/TPAMI.2016.2644615.

- Bessho et al. [2016] Bessho, K., Date, K., Hayashi, M., Ikeda, A., Imai, T., Inoue, H., Kumagai, Y., Miyakawa, T., Murata, H., Ohno, T., Okuyama, A., Oyama, R., Sasaki, Y., Shimazu, Y., Shimoji, K., Sumida, Y., Suzuki, M., Taniguchi, H., Tsuchiyama, H., Uesawa, D., Yokota, H., & Yoshida, R. (2016). An introduction to Himawari-8/9 - Japan’s new-generation geostationary meteorological satellites. J. Meteorolog. Soc. Jpn., 94, 151–183. doi:10.2151/jmsj.2016-009.

- Bühl et al. [2019] Bühl, J., Seifert, P., Engelmann, R., & Ansmann, A. (2019). Impact of vertical air motions on ice formation rate in mixed-phase cloud layers. npj Clim. Atmos. Sci., 2, 36. doi:10.1038/s41612-019-0092-6.

- Caraballo-Vega et al. [2023] Caraballo-Vega, J., Carroll, M., Neigh, C., Wooten, M., Lee, B., Weis, A., Aronne, M., Alemu, W., & Williams, Z. (2023). Optimizing WorldView-2, -3 cloud masking using machine learning approaches. Remote Sens. Environ., 284, 113332. doi:10.1016/j.rse.2022.113332.

- Cesana & Del Genio [2021] Cesana, G. V., & Del Genio, A. D. (2021). Observational constraint on cloud feedbacks suggests moderate climate sensitivity. Nat. Clim. Change, 11, 213–218. doi:10.1038/s41558-020-00970-y.

- Chen et al. [2018] Chen, L., Zhu, Y., Papandreou, G., Schroff, F., & Adam, H. (2018). Encoder-decoder with atrous separable convolution for semantic image segmentation. CoRR, abs/1802.02611. arXiv:1802.02611.

- Chollet [2017] Chollet, F. (2017). Xception: Deep learning with depthwise separable convolutions. In 2017 IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR) (pp. 1800–1807). doi:10.1109/CVPR.2017.195.

- Dev et al. [2019] Dev, S., Nautiyal, A., Lee, Y. H., & Winkler, S. (2019). CloudSegNet: A deep network for nychthemeron cloud image segmentation. IEEE Geosci. Remote Sens. Lett., 16, 1814–1818. doi:10.1109/LGRS.2019.2912140.

- Dietterich [1995] Dietterich, T. (1995). Overfitting and undercomputing in machine learning. ACM Comput. Surv., 27, 326–327. doi:10.1145/212094.212114.

- Ding et al. [2022] Ding, L., Corizzo, R., Bellinger, C., Ching, N., Login, S., Yepez-Lopez, R., Gong, J., & Wu, D. L. (2022). Imbalanced multi-layer cloud classification with Advanced Baseline Imager (ABI) and CloudSat/CALIPSO data. In 2022 IEEE Int. Conf. Big Data (pp. 5902–5909). doi:10.1109/BigData55660.2022.10020783.

- Eytan et al. [2020] Eytan, E., Koren, I., Altaratz, O., Kostinski, A. B., & Ronen, A. (2020). Longwave radiative effect of the cloud twilight zone. Nat. Geosci., 13, 669–673. doi:10.1038/s41561-020-0636-8.

- Foga et al. [2017] Foga, S., Scaramuzza, P. L., Guo, S., Zhu, Z., Dilley, R. D., Beckmann, T., Schmidt, G. L., Dwyer, J. L., Joseph Hughes, M., & Laue, B. (2017). Cloud detection algorithm comparison and validation for operational Landsat data products. Remote Sens. Environ., 194, 379–390. doi:10.1016/j.rse.2017.03.026.

- Furtado et al. [2017] Furtado, K., Field, P. R., Luo, Y., Liu, X., Guo, Z., Zhou, T., Shipway, B. J., Hill, A. A., & Wilkinson, J. M. (2017). Cloud microphysical factors affecting simulations of deep convection during the presummer rainy season in southern China. J. Geophys. Res.: Atmos., 123, 10,477–10,505. doi:10.1029/2017JD028192.

- Ghasemian & Akhoondzadeh [2018] Ghasemian, N., & Akhoondzadeh, M. (2018). Introducing two Random Forest based methods for cloud detection in remote sensing images. Adv. Space Res., 62, 288–303. doi:10.1016/j.asr.2018.04.030.

- Goldblatt et al. [2021] Goldblatt, C., McDonald, V. L., & McCusker, K. E. (2021). Earth’s long-term climate stabilized by clouds. Nat. Geosci., 14, 143–150. doi:10.1038/s41561-021-00691-7.

- Hartick et al. [2022] Hartick, C., Furusho-Percot, C., Clark, M. P., & Kollet, S. (2022). An interannual drought feedback loop affects the surface energy balance and cloud properties. Geophys. Res. Lett., 49, e2022GL100924. doi:10.1029/2022GL100924.

- He et al. [2016] He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In 2016 IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR) (pp. 770–778). doi:10.1109/CVPR.2016.90.

- Hieronymus et al. [2022] Hieronymus, M., Baumgartner, M., Miltenberger, A., & Brinkmann, A. (2022). Algorithmic differentiation for sensitivity analysis in cloud microphysics. J. Adv. Model. Earth Syst., 14, e2021MS002849. doi:10.1029/2021MS002849.

- Hua et al. [2018] Hua, B.-S., Tran, M.-K., & Yeung, S.-K. (2018). Pointwise convolutional neural networks. In 2018 IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR) (pp. 984–993). doi:10.1109/CVPR.2018.00109.

- Huang et al. [2017] Huang, Y., Meng, Z., Li, J., Li, W., Bai, L., Zhang, M., & Wang, X. (2017). Distribution and variability of satellite-derived signals of isolated convection initiation events over central eastern China. J. Geophys. Res.: Atmos., 122, 11,357–11,373. doi:10.1002/2017JD026946.

- Huang et al. [2022] Huang, Y., Wang, Z., Jiang, X., Wu, M., Zhang, C., & Guo, J. (2022). PointShift: Point-wise shift MLP for pixel-level cloud type classification in meteorological satellite imagery. In 2022 IEEE Int. Geosci. Remote Sens. Symp. (pp. 607–610). doi:10.1109/IGARSS46834.2022.9883178.

- Ishida & Nakajima [2009] Ishida, H., & Nakajima, T. Y. (2009). Development of an unbiased cloud detection algorithm for a spaceborne multispectral imager. J. Geophys. Res.: Atmos., 114, D07206. doi:10.1029/2008JD010710.

- Jeppesen et al. [2019] Jeppesen, J. H., Jacobsen, R. H., Inceoglu, F., & Toftegaard, T. S. (2019). A cloud detection algorithm for satellite imagery based on deep learning. Remote Sens. Environ., 229, 247–259. doi:10.1016/j.rse.2019.03.039.

- Joshi et al. [2019] Joshi, P. P., Wynne, R. H., & Thomas, V. A. (2019). Cloud detection algorithm using SVM with SWIR2 and tasseled cap applied to Landsat 8. Int. J. Appl. Earth Obs. Geoinf., 82, 101898. doi:10.1016/j.jag.2019.101898.

- Jun & Fengxian [1990] Jun, L., & Fengxian, Z. (1990). Computer identification of multispectral satellite cloud imagery. Adv. Atmos. Sci., 7, 366–375. doi:10.1007/BF03179768.

- Jørgensen et al. [2022] Jørgensen, L. B., Ørsted, M., Malte, H., Wang, T., & Overgaard, J. (2022). Extreme escalation of heat failure rates in ectotherms with global warming. Nature, 611, 93–98. doi:10.1038/s41586-022-05334-4.

- Kanu et al. [2020] Kanu, S., Khoja, R., Lal, S., Raghavendra, B., & CS, A. (2020). CloudX-net: A robust encoder-decoder architecture for cloud detection from satellite remote sensing images. Remote Sens. Appl.: Soc. Environ., 20, 100417. doi:10.1016/j.rsase.2020.100417.

- Kaps et al. [2023] Kaps, A., Lauer, A., Camps-Valls, G., Gentine, P., Gómez-Chova, L., & Eyring, V. (2023). Machine-learned cloud classes from satellite data for process-oriented climate model evaluation. IEEE Trans. Geosci. Remote Sens., 61, 1--15. doi:10.1109/TGRS.2023.3237008.

- Khatri et al. [2018] Khatri, P., Iwabuchi, H., & Saito, M. (2018). Vertical profiles of ice cloud microphysical properties and their impacts on cloud retrieval using thermal infrared measurements. J. Geophys. Res.: Atmos., 123, 5301--5319. doi:10.1029/2017JD028165.

- Kurihara et al. [2016] Kurihara, Y., Murakami, H., & Kachi, M. (2016). Sea surface temperature from the new Japanese geostationary meteorological Himawari-8 satellite. Geophys. Res. Lett., 43, 1234--1240. doi:10.1002/2015GL067159.

- Lai et al. [2019] Lai, R., Teng, S., Yi, B., Letu, H., Min, M., Tang, S., & Liu, C. (2019). Comparison of cloud properties from Himawari-8 and Fengyun-4A geostationary satellite radiometers with MODIS cloud retrievals. Remote Sens., 11. doi:10.3390/rs11141703.

- Larosa et al. [2023] Larosa, S., Cimini, D., Gallucci, D., Di Paola, F., Nilo, S. T., Ricciardelli, E., Ripepi, E., & Romano, F. (2023). A cloud detection neural network approach for the next generation microwave sounder aboard EPS MetOp-SG A1. Remote Sens., 15. doi:10.3390/rs15071798.

- Letu et al. [2019] Letu, H., Nagao, T. M., Nakajima, T. Y., Riedi, J., Ishimoto, H., Baran, A. J., Shang, H., Sekiguchi, M., & Kikuchi, M. (2019). Ice cloud properties from Himawari-8/AHI next-generation geostationary satellite: Capability of the AHI to monitor the DC cloud generation process. IEEE Trans. Geosci. Remote Sens., 57, 3229--3239. doi:10.1109/TGRS.2018.2882803.

- Letu et al. [2020] Letu, H., Yang, K., Nakajima, T. Y., Ishimoto, H., Nagao, T. M., Riedi, J., Baran, A. J., Ma, R., Wang, T., Shang, H., Khatri, P., Chen, L., Shi, C., & Shi, J. (2020). High-resolution retrieval of cloud microphysical properties and surface solar radiation using Himawari-8/AHI next-generation geostationary satellite. Remote Sens. Environ., 239, 111583. doi:10.1016/j.rse.2019.111583.

- Li et al. [2019] Li, C., Ma, J., Yang, P., & Li, Z. (2019). Detection of cloud cover using dynamic thresholds and radiative transfer models from the polarization satellite image. J. Quant. Spectrosc. Radiat. Transfer, 222-223, 196--214. doi:10.1016/j.jqsrt.2018.10.026.

- Li et al. [2022] Li, J., Wu, Z., Sheng, Q., Wang, B., Hu, Z., Zheng, S., Camps-Valls, G., & Molinier, M. (2022). A hybrid generative adversarial network for weakly-supervised cloud detection in multispectral images. Remote Sens. Environ., 280, 113197. doi:10.1016/j.rse.2022.113197.

- Li et al. [2015] Li, P., Dong, L., Xiao, H., & Xu, M. (2015). A cloud image detection method based on SVM vector machine. Neurocomputing, 169, 34--42. doi:10.1016/j.neucom.2014.09.102.

- Li et al. [2020] Li, Y., Chen, W., Zhang, Y., Tao, C., Xiao, R., & Tan, Y. (2020). Accurate cloud detection in high-resolution remote sensing imagery by weakly supervised deep learning. Remote Sens. Environ., 250, 112045. doi:10.1016/j.rse.2020.112045.

- Li et al. [2007] Li, Z., Li, J., Menzel, W. P., Schmit, T. J., & Ackerman, S. A. (2007). Comparison between current and future environmental satellite imagers on cloud classification using MODIS. Remote Sens. Environ., 108, 311--326. doi:10.1016/j.rse.2006.11.023.

- Mateo-García et al. [2020] Mateo-García, G., Laparra, V., López-Puigdollers, D., & Gómez-Chova, L. (2020). Transferring deep learning models for cloud detection between Landsat-8 and Proba-V. ISPRS J. Photogramm. Remote Sens., 160, 1--17. doi:10.1016/j.isprsjprs.2019.11.024.

- Nakajima & Nakajima [1995] Nakajima, T. Y., & Nakajima, T. (1995). Wide-area determination of cloud microphysical properties from NOAA AVHRR measurements for FIRE and ASTEX regions. J. Atmos. Sci., 52, 4043--4059. doi:10.1175/1520-0469(1995)052<4043:WADOCM>2.0.CO;2.

- Narenpitak et al. [2017] Narenpitak, P., Bretherton, C. S., & Khairoutdinov, M. F. (2017). Cloud and circulation feedbacks in a near-global aquaplanet cloud-resolving model. J. Adv. Model. Earth Syst., 9, 1069--1090. doi:10.1002/2016MS000872.

- Pang et al. [2023] Pang, S., Sun, L., Tian, Y., Ma, Y., & Wei, J. (2023). Convolutional neural network-driven improvements in global cloud detection for Landsat 8 and transfer learning on Sentinel-2 imagery. Remote Sens., 15. doi:10.3390/rs15061706.

- Poulsen et al. [2020] Poulsen, C., Egede, U., Robbins, D., Sandeford, B., Tazi, K., & Zhu, T. (2020). Evaluation and comparison of a machine learning cloud identification algorithm for the SLSTR in polar regions. Remote Sens. Environ., 248, 111999. doi:10.1016/j.rse.2020.111999.

- Purbantoro et al. [2019] Purbantoro, B., Aminuddin, J., Manago, N., Toyoshima, K., Lagrosas, N., Sri Sumantyo, J. T., & Kuze, H. (2019). Evaluation of cloud type classification based on split window algorithm using Himawari-8 satellite data. In 2019 IEEE Int. Geosci. Remote Sens. Symp. (pp. 170--173). doi:10.1109/IGARSS.2019.8898451.

- Qiu et al. [2017] Qiu, S., He, B., Zhu, Z., Liao, Z., & Quan, X. (2017). Improving Fmask cloud and cloud shadow detection in mountainous area for Landsats 4-8 images. Remote Sens. Environ., 199, 107--119. doi:10.1016/j.rse.2017.07.002.

- Qiu et al. [2019] Qiu, S., Zhu, Z., & He, B. (2019). Fmask 4.0: Improved cloud and cloud shadow detection in Landsats 4-8 and Sentinel-2 imagery. Remote Sens. Environ., 231, 111205. doi:10.1016/j.rse.2019.05.024.

- Ronneberger et al. [2015] Ronneberger, O., Fischer, P., & Brox, T. (2015). U-Net: Convolutional networks for biomedical image segmentation. CoRR, abs/1505.04597. arXiv:1505.04597.

- Rossow & Schiffer [1999] Rossow, W. B., & Schiffer, R. A. (1999). Advances in understanding clouds from ISCCP. Bull. Amer. Meteor. Soc., 80, 2261--2288. doi:10.1175/1520-0477(1999)080<2261:AIUCFI>2.0.CO;2.

- Sedano et al. [2011] Sedano, F., Kempeneers, P., Strobl, P., Kucera, J., Vogt, P., Seebach, L., & San-Miguel-Ayanz, J. (2011). A cloud mask methodology for high resolution remote sensing data combining information from high and medium resolution optical sensors. ISPRS J. Photogramm. Remote Sens., 66, 588--596. doi:10.1016/j.isprsjprs.2011.03.005.

- Segal-Rozenhaimer et al. [2020a] Segal-Rozenhaimer, M., Li, A., Das, K., & Chirayath, V. (2020a). Cloud detection algorithm for multi-modal satellite imagery using convolutional neural-networks (CNN). Remote Sens. Environ., 237, 111446. doi:10.1016/j.rse.2019.111446.

- Segal-Rozenhaimer et al. [2020b] Segal-Rozenhaimer, M., Li, A., Das, K., & Chirayath, V. (2020b). Cloud detection algorithm for multi-modal satellite imagery using convolutional neural-networks (CNN). Remote Sens. Environ., 237, 111446. doi:10.1016/j.rse.2019.111446.

- Shang et al. [2017] Shang, H., Chen, L., Letu, H., Zhao, M., Li, S., & Bao, S. (2017). Development of a daytime cloud and haze detection algorithm for Himawari-8 satellite measurements over central and eastern China. J. Geophys. Res.: Atmos., 122, 3528--3543. doi:10.1002/2016JD025659.

- Skakun et al. [2021] Skakun, S., Vermote, E. F., Artigas, A. E. S., Rountree, W. H., & Roger, J.-C. (2021). An experimental sky-image-derived cloud validation dataset for Sentinel-2 and Landsat 8 satellites over NASA GSFC. Int. J. Appl. Earth Obs. Geoinf., 95, 102253. doi:10.1016/j.jag.2020.102253.

- Skakun et al. [2022] Skakun, S., Wevers, J., Brockmann, C., Doxani, G., Aleksandrov, M., Batič, M., Frantz, D., Gascon, F., Gómez-Chova, L., Hagolle, O., López-Puigdollers, D., Louis, J., Lubej, M., Mateo-García, G., Osman, J., Peressutti, D., Pflug, B., Puc, J., Richter, R., Roger, J.-C., Scaramuzza, P., Vermote, E., Vesel, N., Zupanc, A., & Žust, L. (2022). Cloud mask intercomparison eXercise (CMIX): An evaluation of cloud masking algorithms for Landsat 8 and Sentinel-2. Remote Sens. Environ., 274, 112990. doi:10.1016/j.rse.2022.112990.

- Sun et al. [2017] Sun, L., Mi, X., Wei, J., Wang, J., Tian, X., Yu, H., & Gan, P. (2017). A cloud detection algorithm-generating method for remote sensing data at visible to short-wave infrared wavelengths. ISPRS J. Photogramm. Remote Sens., 124, 70--88. doi:10.1016/j.isprsjprs.2016.12.005.

- Tana et al. [2023] Tana, G., Ri, X., Shi, C., Ma, R., Letu, H., Xu, J., & Shi, J. (2023). Retrieval of cloud microphysical properties from Himawari-8/AHI infrared channels and its application in surface shortwave downward radiation estimation in the sun glint region. Remote Sens. Environ., 290, 113548. doi:10.1016/j.rse.2023.113548.

- Taniguchi et al. [2022] Taniguchi, D., Yamazaki, K., & Uno, S. (2022). The great dimming of Betelgeuse seen by the Himawari-8 meteorological satellite. Nature Astronomy, 6, 930--935. doi:10.1038/s41550-022-01680-5.

- Teng et al. [2020] Teng, S., Liu, C., Zhang, Z., Wang, Y., Sohn, B.-J., & Yung, Y. L. (2020). Retrieval of ice-over-water cloud microphysical and optical properties using passive radiometers. Geophys. Res. Lett., 47, e2020GL088941. doi:10.1029/2020GL088941.

- Waldner & Diakogiannis [2020] Waldner, F., & Diakogiannis, F. I. (2020). Deep learning on edge: Extracting field boundaries from satellite images with a convolutional neural network. Remote Sens. Environ., 245, 111741. doi:10.1016/j.rse.2020.111741.

- Wang et al. [2022a] Wang, Q., Zhou, C., Zhuge, X., Liu, C., Weng, F., & Wang, M. (2022a). Retrieval of cloud properties from thermal infrared radiometry using convolutional neural network. Remote Sens. Environ., 278, 113079. doi:10.1016/j.rse.2022.113079.

- Wang et al. [2016] Wang, T., Fetzer, E. J., Wong, S., Kahn, B. H., & Yue, Q. (2016). Validation of MODIS cloud mask and multilayer flag using CloudSat-CALIPSO cloud profiles and a cross-reference of their cloud classifications. J. Geophys. Res.: Atmos., 121, 11,620--11,635. doi:10.1002/2016JD025239.

- Wang et al. [2022b] Wang, X., Iwabuchi, H., & Yamashita, T. (2022b). Cloud identification and property retrieval from Himawari-8 infrared measurements via a deep neural network. Remote Sens. Environ., 275, 113026. doi:10.1016/j.rse.2022.113026.

- Wang et al. [2019] Wang, Y., Yang, P., Hioki, S., King, M. D., Baum, B. A., Di Girolamo, L., & Fu, D. (2019). Ice cloud optical thickness, effective radius, and ice water path inferred from fused MISR and MODIS measurements based on a pixel-level optimal ice particle roughness model. J. Geophys. Res.: Atmos., 124, 12126--12140. doi:10.1029/2019JD030457.

- Wang et al. [2021] Wang, Z., Kong, X., Cui, Z., Wu, M., Zhang, C., Gong, M., & Liu, T. (2021). VecNet: A spectral and multi-scale spatial fusion deep network for pixel-level cloud type classification in Himawari-8 imagery. In 2021 IEEE Int. Geosci. Remote Sens. Symp. (pp. 4083--4086). doi:10.1109/IGARSS47720.2021.9554737.

- Wang & Sassen [2001] Wang, Z., & Sassen, K. (2001). Cloud type and macrophysical property retrieval using multiple remote sensors. J. Appl. Meteorol., 40, 1665--1682. doi:10.1175/1520-0450(2001)040<1665:CTAMPR>2.0.CO;2.

- Watanabe et al. [2018] Watanabe, M., Kamae, Y., Shiogama, H., DeAngelis, A. M., & Suzuki, K. (2018). Low clouds link equilibrium climate sensitivity to hydrological sensitivity. Nat. Clim. Change, 8, 901--906. doi:10.1038/s41558-018-0272-0.

- Wieland et al. [2019] Wieland, M., Li, Y., & Martinis, S. (2019). Multi-sensor cloud and cloud shadow segmentation with a convolutional neural network. Remote Sens. Environ., 230, 111203. doi:10.1016/j.rse.2019.05.022.

- Wu et al. [2022] Wu, K., Xu, Z., Lyu, X., & Ren, P. (2022). Cloud detection with boundary nets. ISPRS J. Photogramm. Remote Sens., 186, 218--231. doi:10.1016/j.isprsjprs.2022.02.010.

- Yang et al. [2020] Yang, X., Zhao, C., Luo, N., Zhao, W., Shi, W., & Yan, X. (2020). Evaluation and comparison of Himawari-8 L2 V1.0, V2.1 and MODIS C6.1 aerosol products over Asia and the oceania regions. Atmos. Environ., 220, 117068. doi:10.1016/j.atmosenv.2019.117068.

- Yang et al. [2022] Yang, Y., Sun, W., Chi, Y., Yan, X., Fan, H., Yang, X., Ma, Z., Wang, Q., & Zhao, C. (2022). Machine learning-based retrieval of day and night cloud macrophysical parameters over East Asia using Himawari-8 data. Remote Sens. Environ., 273, 112971. doi:10.1016/j.rse.2022.112971.

- Yoo et al. [2022] Yoo, S., Lee, J., Gholami Farkoushi, M., Lee, E., & Sohn, H.-G. (2022). Automatic generation of land use maps using aerial orthoimages and building floor data with a Conv-Depth Block (CDB) ResU-Net architecture. Int. J. Appl. Earth Obs. Geoinf., 107, 102678. doi:10.1016/j.jag.2022.102678.

- Yu et al. [2023] Yu, J., He, X., Yang, P., Motagh, M., Xu, J., & Xiong, J. (2023). Coastal aquaculture extraction using GF-3 fully polarimetric SAR imagery: A framework integrating UNet++ with marker-controlled watershed segmentation. Remote Sens., 15, 2246. doi:10.3390/rs15092246.

- Yu et al. [2021] Yu, Z., Ma, S., Han, D., Li, G., Gao, D., & Yan, W. (2021). A cloud classification method based on random forest for FY-4A. Int. J. Remote Sens., 42, 3353--3379. doi:10.1080/01431161.2020.1871098.

- Zhang et al. [2019] Zhang, C., Zhuge, X., & Yu, F. (2019). Development of a high spatiotemporal resolution cloud-type classification approach using Himawari-8 and CloudSat. Int. J. Remote Sens., 40, 6464--6481. doi:10.1080/01431161.2019.1594438.

- Zhang et al. [2023] Zhang, Q., Shen, Z., Pokhrel, Y., Farinotti, D., Singh, V. P., Xu, C.-Y., Wu, W., & Wang, G. (2023). Oceanic climate changes threaten the sustainability of Asia’s water tower. Nature, 615, 87--93. doi:10.1038/s41586-022-05643-8.

- Zhang et al. [2018] Zhang, Z., Liu, Q., & Wang, Y. (2018). Road extraction by deep residual U-Net. IEEE Geosci. Remote Sens. Lett., 15, 749--753. doi:10.1109/LGRS.2018.2802944.

- Zhao et al. [2017] Zhao, H., Shi, J., Qi, X., Wang, X., & Jia, J. (2017). Pyramid scene parsing network. In 2017 IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR) (pp. 6230--6239). doi:10.1109/CVPR.2017.660.

- Zhao et al. [2023] Zhao, Z., Zhang, F., Wu, Q., Li, Z., Tong, X., Li, J., & Han, W. (2023). Cloud identification and properties retrieval of the Fengyun-4A satellite using a ResUnet model. IEEE Trans. Geosci. Remote Sens., 61, 1--18. doi:10.1109/TGRS.2023.3252023.