A Maximum Entropy Principle in Deep Thermalization and in Hilbert-Space Ergodicity

Abstract

We report universal statistical properties displayed by ensembles of pure states that naturally emerge in quantum many-body systems. Specifically, two classes of state ensembles are considered: those formed by i) the temporal trajectory of a quantum state under unitary evolution or ii) the quantum states of small subsystems obtained by partial, local projective measurements performed on their complements. These cases respectively exemplify the phenomena of “Hilbert-space ergodicity” and “deep thermalization.” In both cases, the resultant ensembles are defined by a simple principle: the distributions of pure states have maximum entropy, subject to constraints such as energy conservation, and effective constraints imposed by thermalization. We present and numerically verify quantifiable signatures of this principle by deriving explicit formulae for all statistical moments of the ensembles; proving the necessary and sufficient conditions for such universality under widely accepted assumptions; and describing their measurable consequences in experiments. We further discuss information-theoretic implications of the universality: our ensembles have maximal information content while being maximally difficult to interrogate, establishing that generic quantum state ensembles that occur in nature hide (scramble) information as strongly as possible. Our results generalize the notions of Hilbert-space ergodicity to time-independent Hamiltonian dynamics and deep thermalization from infinite to finite effective temperature. Our work presents new perspectives for characterizing and understanding universal behaviors of quantum dynamics using statistical and information-theoretic tools.

I Introduction

The second law of thermodynamics is a foundational concept in statistical physics with far-reaching implications across many areas of study, from quantum gravity to information theory and computation [1, 2, 3, 4, 5]. At its core is the idea of a maximum entropy principle [6, 7, 8, 9, 10]: a macroscopic system in equilibrium is described by the state with maximum entropy, subject to physical constraints such as global energy and particle number conservation. It is remarkable that such a simple principle enables highly accurate predictions about a large system without knowledge of the vast majority of its degrees of freedom.

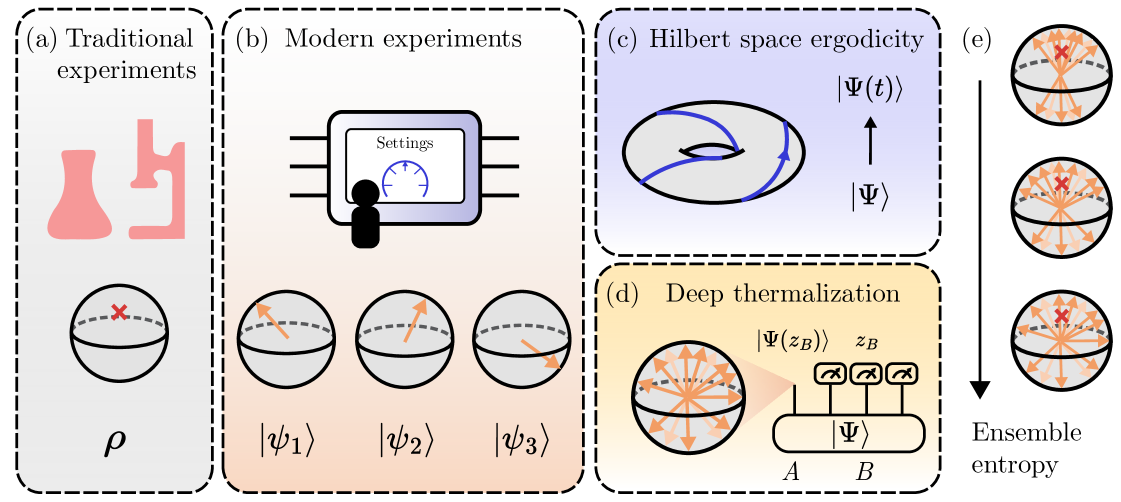

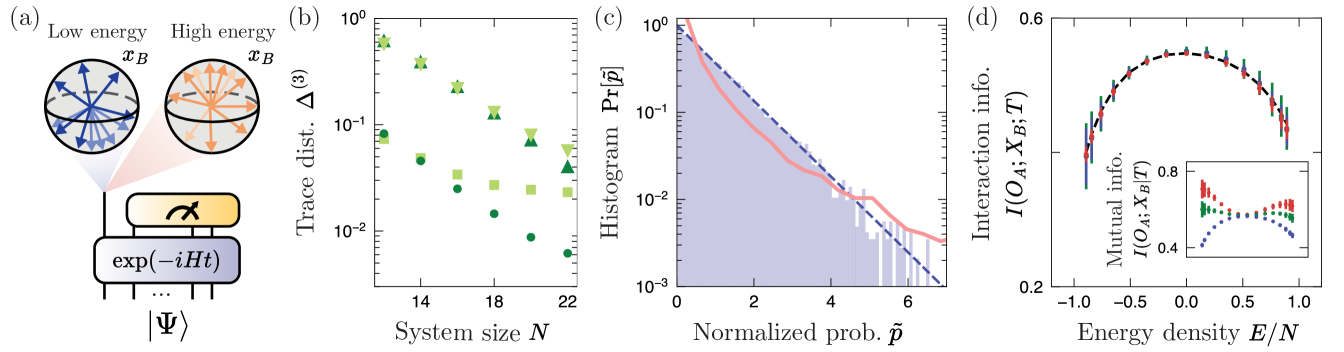

In quantum systems, the maximum entropy principle [11, 12] is often justified by one of the following two conditions relevant to many conventional experiments [Fig. 1(a)]. First, one only has access to local or spatially averaged observables [13, 14, 15, 16]. Second, one can only measure observables averaged over long time intervals or at late, equilibrium times without fine-grained temporal resolution [17, 15, 16]. In both cases, measurement outcomes become largely agnostic to microscopic details of the initial state or system dynamics and are well described by thermal density matrices. It is this inability to obtain detailed, microscopic information from traditional experiments that renders quantum states effectively described by a probabilistic ensemble, and ultimately empowers the maximum entropy principle to make accurate and practical predictions of accessible observables.

Modern quantum experiments challenge such traditional settings [Fig. 1(b)]. Quantum devices built using atomic or superconducting qubit platforms have realized and probed diverse phenomena ranging from non-equilibrium dynamics [18, 19, 20, 21, 22] to exotic phases of matter [23, 24, 25, 26, 27]. These experiments have the capability to fully interrogate the entire quantum system, enabling the measurement of arbitrary, non-local multi-particle correlation functions at the fundamental quantum projection limit. They are also able to deterministically evolve quantum many-body states with a high repetition rate, enabling the study of quantum dynamics with an unprecedented level of accuracy and detail. Existing maximum entropy principles were not designed to capture such microscopic measurement outcomes, which are non-local and time-resolved. Therefore, we set out to develop a new approach that may provide an effective statistical model for such data. Here, we report the discovery of a generalized maximum entropy principle for quantum many-body systems that is well aligned with modern experimental capabilities.

We specifically study two settings, the temporal and projected ensembles. The former considers the trajectory of quantum states under time evolution, while the latter considers ensembles of states generated by partial projective measurements performed on the system [Fig. 1(c,d)]. In both settings, the average states, or first moments, of the ensembles are described by density matrices which have been extensively studied in the literature [17, 13, 14, 12, 15, 16]. Here, certain universal phenomena apply, such as the fact that the reduced density matrices of small subsystems are well described by thermal Gibbs states [14, 15], or dephasing in the energy-eigenbasis, which leads to the so-called diagonal ensemble as an effective description of equilibrium properties [12, 17, 13]. However, the temporal and projected ensembles of generic systems have not been studied beyond their first moments. Our results extend this universality to higher moments, finding that the first moment uniquely fixes the higher moments.

We find a new type of maximum entropy principle that describes ensembles of states in the above settings [Fig. 1(e)]. The entropy in question is an ensemble entropy, which quantifies how the states in an ensemble are spread out over the relevant Hilbert space. This is distinct from the conventional von Neumann entropy, which only depends on the average state (density matrix) of the ensemble. In broad strokes, our main finding is that in many physical situations, the ensembles of pure states maximize the ensemble entropy up to physical constraints such as energy conservation or local thermalization, thus establishing a new maximum entropy principle. Special cases of our ensembles of states have been discussed in the literature under the names of “deep thermalization” [28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38] and “Hilbert space ergodicity” [39, 40] respectively, in particular at infinite effective temperature. Here we present a unified principle that holds under general conditions.

Ultimately, our work is a theory of fluctuations: the variations in observables over states in an ensemble, which go fundamentally beyond ensemble-averaged observables that are typically studied. In companion experimental work [41], we use insights gained from this work to predict and verify universal forms of fluctuations in a variety of quantities, interpolating from global to local, and in closed as well as open system dynamics.

Our findings have several implications. First, we confirm that Hamiltonian dynamics uniformly explores its available Hilbert space. Specifically, the temporal trajectory of a many-body quantum state satisfies the maximum entropy principle under the constraint of energy conservation. For sufficiently long evolution, the trajectory of the state is constrained only by the populations of the energy eigenstates: all other degrees of freedom are randomly distributed with maximum entropy. This improves our understanding of thermalization as a dynamical phenomenon [42, 43] and its relationship to static notions of thermal equilibrium [44, 45]. While thermalization has traditionally concerned the dynamics of local quantities (e.g. how such quantities reach their equilibrium values), here we study the dynamics of the global state, confirming and formalizing our intuition about ergodicity in Hilbert space [46, 39]. We identify necessary and sufficient conditions for Hilbert-space ergodicity and find, curiously, that just as in classical dynamics, a system can be ergodic without being chaotic.

Second, we generalize notions of deep thermalization [28, 29, 30] away from infinite temperature. Deep thermalization refers to the observation that in generic many-body dynamics, not only does the density matrix of a local subsystem reach thermal equilibrium, so do the higher moments of the projected ensemble. Additionally, it has been pointed out that the higher moments may take longer than lower moments to converge, implying the possibility of sustained thermalization (in non-local quantities) even when local observables have converged [30, 31, 36]. The equilibrium values of such higher moments have considerable structure. At infinite effective temperature, the projected ensemble [28, 29] has been found to approach the Haar ensemble [47, 48], a paradigmatic uniform random ensemble of quantum states. We extend this phenomenology to finite temperature, identifying finite-temperature generalizations of the Haar ensemble with maximum entropy which describe our ensembles of states. Our result should be taken as complementary to, but distinct from, conventional theories of quantum thermalization such as the eigenstate thermalization hypothesis (ETH) [12, 15]. It does not rely on the ETH, but when used in conjunction with it, implies that all higher moments of the projected ensemble reach thermal equilibrium with a universal, Gibbs-like structure.

Third, our approach predicts and, where relevant, confirms the existence of a universality in natural many-body states, revealed in their higher moments. Not only do mean quantities captured by density matrices exhibit universal thermal behavior, so do higher-order moments such as the variance and skewness, as dictated by our maximum entropy principle. Higher-order quantities beyond local observables have received recent attention in non-equilibrium dynamics [49, 50], and our results suggest the broader applicability of higher moments: the maximum entropy principle holds not only at the level of density matrices [51, 52], but may also hold at the level of many-body wavefunctions [53, 54, 55]. This possibility has recently been investigated for energy eigenstates in Ref. [55]. Our results support such an approach based on the maximum entropy principle, and suggest that it may be more widely applicable; here we primarily apply it to time-evolved many-body states.

Finally, we draw further connections between thermalization and quantum information theory. Ensembles of quantum states are natural objects for quantum communication: fundamental results such as the Holevo bound investigate the use of such ensembles to transmit information [56]. Our results indicate that ensembles of states that result from many-body dynamics are maximally difficult to compress, to distinguish, and to use for information transmission, providing a new perspective on the nature of information scrambling and hiding in many-body dynamics [57, 58, 59, 60, 61].

The maximum entropy principle has repeatedly exceeded expectations in its ability to describe complex, interacting systems [6, 7, 8, 9, 10]. Most results have so far been restricted to classical or single-particle quantum physics. Our work represents a first step toward adapting this principle to the modern setting of many-body physics. While we firmly establish this principle only in certain settings, our results suggest that it may apply more generally. Indeed, Ref. [62] recently applied this principle to full-counting statistics in many-body systems with a symmetry. These developments hint at broader applications of the maximum entropy principle in many-body physics.

II Summary of results

| Ensemble | Statistical description | Maximum entropy principle | Measurable signature | Information-theoretic properties |
|---|---|---|---|---|
| Temporal ensemble | Random phase ensemble (Sec. IV) | Maximum entropy with fixed energy populations | Porter-Thomas (PT) dist. in outcome probabilities (Thm. 3) | Constant mutual information [Eq. (43)] |
| Projected ensemble: energy non-revealing basis | Scrooge ensemble (Sec. V) | Maximum entropy with fixed first moment | PT dist. in joint probabilities [Eq. (56)] | Minimal mutual information [Eq. (61)] |
| Projected ensemble: general measurement basis | Generalized Scrooge ensemble (Sec. VI) | Collection of maximum-entropy ensembles with fixed first moments | PT dist. in normalized probabilities [Eq. (72)] | Minimal interaction information [Eq. (76)] |

In this work, we study ensembles of quantum states. These ensembles are collections of pure quantum states $|\psi_z\rangle$, labeled by an index $z$, with an associated probability distribution $p(z)$, that is, $\mathcal{E} = \{p(z), |\psi_z\rangle\}_z$. Quantum state ensembles can be discrete (i.e. the index $z$ takes discrete values, such as a discrete set of measurement outcomes) or continuous ($z$ takes continuous values, such as time). In situations where the label is not important, we simply write $\mathcal{E} = \{p(\psi), |\psi\rangle\}$.

Quantum state ensembles have traditionally been studied in quantum information for tasks such as quantum communication [63]. However, until recently they have not been necessary in the context of many-body physics. Under traditional measurements, observations made on an ensemble of states only depend on the first moment $\rho^{(1)} = \mathbb{E}_z\big[|\psi_z\rangle\langle\psi_z|\big]$, where $\mathbb{E}_z$ denotes the average over $z$ with weights $p(z)$. This is because, without the ability to determine the index $z$, measurements of an observable $O$ yield its expected value averaged over the ensemble, i.e. $\mathbb{E}_z\langle\psi_z|O|\psi_z\rangle = \mathrm{Tr}\big(O\rho^{(1)}\big)$. Therefore, in this setting, one can only operate at the level of mixed states [17, 13, 14, 12, 15, 16].

Modern experiments challenge this assumption and may involve full knowledge of the ensemble $\mathcal{E}$. In modern quantum devices, one can determine the index $z$ in a variety of settings. For example, in the ensembles we study, $z$ will be the evolution time $t$, which can be precisely controlled, or the outcome of a projective measurement, which is recorded by the experimenter. When the index can be determined, we can study a wider range of properties, such as the fluctuations of the expectation values $\langle\psi_z|O|\psi_z\rangle$. The simplest such quantity is the variance $\mathbb{E}_z\big[\langle\psi_z|O|\psi_z\rangle^2\big] - \big(\mathbb{E}_z\langle\psi_z|O|\psi_z\rangle\big)^2$. This quantity depends on more than the first moment $\rho^{(1)}$. Specifically, it depends on the second moment $\rho^{(2)}$, since the first term is equal to $\mathrm{Tr}\big[(O\otimes O)\,\rho^{(2)}\big]$. In general, the statistical properties of quantum state ensembles can be systematically characterized through their moments

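$$\rho^{(k)} = \mathbb{E}_z\big[(|\psi_z\rangle\langle\psi_z|)^{\otimes k}\big] = \int dz\, p(z)\,(|\psi_z\rangle\langle\psi_z|)^{\otimes k} \tag{1}$$

for positive integer $k$. (For discrete ensembles, the integral reduces to a sum over all states in the ensemble; see Sec. III.)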
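As a minimal numerical sketch of Eq. (1) for a discrete ensemble, the snippet below builds $\rho^{(1)}$ and $\rho^{(2)}$ explicitly and checks that the variance of $\langle\psi_z|O|\psi_z\rangle$ equals $\mathrm{Tr}[(O\otimes O)\rho^{(2)}] - \mathrm{Tr}(O\rho^{(1)})^2$. The ensemble (random states with random weights), the observable (a random Hermitian matrix), and the helper name `kth_moment` are hypothetical choices made only for illustration.

```python
import numpy as np

def kth_moment(probs, states, k):
    """Discrete version of Eq. (1): sum_z p(z) (|psi_z><psi_z|)^{(x)k}."""
    dim = states.shape[1]
    moment = np.zeros((dim**k, dim**k), dtype=complex)
    for p, psi in zip(probs, states):
        proj = np.outer(psi, psi.conj())
        proj_k = proj
        for _ in range(k - 1):
            proj_k = np.kron(proj_k, proj)
        moment += p * proj_k
    return moment

rng = np.random.default_rng(0)
dim, n_states = 3, 50

# Hypothetical ensemble: random normalized states with random probabilities.
states = rng.normal(size=(n_states, dim)) + 1j * rng.normal(size=(n_states, dim))
states /= np.linalg.norm(states, axis=1, keepdims=True)
probs = rng.random(n_states)
probs /= probs.sum()

# Hypothetical observable: a random Hermitian matrix.
a = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
obs = (a + a.conj().T) / 2

# Direct route: variance of <psi_z|O|psi_z> over the ensemble.
expvals = np.real(np.einsum("zi,ij,zj->z", states.conj(), obs, states))
var_direct = probs @ expvals**2 - (probs @ expvals) ** 2

# Moment route: the same variance from rho^(1) and rho^(2).
rho1 = kth_moment(probs, states, 1)
rho2 = kth_moment(probs, states, 2)
var_moments = np.real(np.trace(np.kron(obs, obs) @ rho2) - np.trace(obs @ rho1) ** 2)
print(var_direct, var_moments)
```

The explicit Kronecker products scale as $d^{2k}$ in memory, so this is only meant for very small examples.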

Recent work has illustrated that rich physical phenomena are encoded in the higher moments of state ensembles. As a concrete but non-exhaustive example, higher-moment quantities are necessary to detect the measurement-induced phase transition in monitored quantum circuits [64, 65, 66, 67]. In this setting, an ensemble of pure states is generated by the different outcomes of mid-circuit measurements. The phase transition is observed in quantities such as the bipartite entanglement entropy averaged over states in the ensemble. Their average $k$-th Rényi entropies can be computed from the $k$-th moment of the ensemble through the quantity $\mathbb{E}_z\,\mathrm{Tr}\big(\rho_{A,z}^{k}\big) = \mathrm{Tr}\big[(C_A\otimes\mathbb{1}_B)\,\rho^{(k)}\big]$, where $A$ and $B$ are a bipartition of the system, $\rho_{A,z} = \mathrm{Tr}_B|\psi_z\rangle\langle\psi_z|$, and $C_A$ cyclically permutes the $k$ copies of $A$ while acting trivially on $B$.
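For $k=2$, the cyclic permutation $C_A$ is simply the SWAP of the two copies of $A$. The sketch below, which assumes a small hypothetical ensemble of random states on $A\otimes B$, checks numerically that the average purity $\mathbb{E}_z\mathrm{Tr}(\rho_{A,z}^2)$ obtained state by state matches the contraction of $\rho^{(2)}$ with $\mathrm{SWAP}_A\otimes\mathbb{1}_B$.

```python
import numpy as np

rng = np.random.default_rng(1)
d_a, d_b, n_states = 2, 3, 40
dim = d_a * d_b

# Hypothetical ensemble: uniformly weighted random states on A (x) B.
states = rng.normal(size=(n_states, dim)) + 1j * rng.normal(size=(n_states, dim))
states /= np.linalg.norm(states, axis=1, keepdims=True)
probs = np.full(n_states, 1.0 / n_states)

# Direct route: average purity Tr(rho_A^2) of the reduced states.
purities = []
for psi in states:
    m = psi.reshape(d_a, d_b)   # m[a, b] = <a, b|psi>
    rho_a = m @ m.conj().T      # partial trace over B
    purities.append(np.real(np.trace(rho_a @ rho_a)))
avg_purity_direct = probs @ np.array(purities)

# Moment route: second moment on the ordered space (A1 B1)(A2 B2) ...
rho2 = sum(p * np.kron(np.outer(psi, psi.conj()), np.outer(psi, psi.conj()))
           for p, psi in zip(probs, states))
# ... contracted with SWAP_A (x) 1_B, i.e. exchanging the two copies of A.
# Row indices (a1, b1, a2, b2), column indices (a1', b1', a2', b2').
r = rho2.reshape([d_a, d_b] * 4)
avg_purity_moment = np.real(np.einsum("jkilikjl->", r))
print(avg_purity_direct, avg_purity_moment)
```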

Without reference to a specific observable, the fluctuations of quantum state ensembles can be studied by examining how the states are distributed in Hilbert space. We quantify this spread through an ensemble entropy, which is the Shannon entropy of the probability distribution $p(\psi)$. For continuous ensembles, this requires the choice of a reference distribution, in our case the Haar ensemble. Thus, for continuous quantum state ensembles, the ensemble entropy is given by the (negative) Kullback-Leibler (KL) divergence between $p(\psi)$ and the Haar ensemble

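$$S(\mathcal{E}) = -D_{\mathrm{KL}}\big(p\,\|\,p_{\mathrm{Haar}}\big) = -\int d\psi\; p(\psi)\,\log\frac{p(\psi)}{p_{\mathrm{Haar}}(\psi)} \tag{2}$$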

We find that the ensembles we study are statistically described by universal continuous state ensembles (even when the ensembles are finite, such as the projected ensemble, we treat them as finite samples from an underlying continuous ensemble) with maximum ensemble entropy, subject to constraints imposed by energy conservation or effective constraints imposed by thermalization. We note that the ensemble entropy differs from the traditionally considered von Neumann entropy, which only depends on the average state $\rho^{(1)}$. Instead, $S(\mathcal{E})$ depends on the distribution of the individual states $|\psi\rangle$, which is determined by all moments $\rho^{(k)}$, and has distinct information-theoretic properties (Fig. 2). We also remark that while similar notions have been studied in the past, e.g. in Refs. [53, 54], to our knowledge this work is the first to explicitly link this entropy measure to ensembles of states obtained from dynamics.

In the following, we demonstrate this result by focusing on two specific settings —temporal and projected ensembles— and their relationship. Finally, we discuss implications of our results, summarized in Table 1.

II.1 Temporal ensembles are random phase ensembles

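The first ensemble of states we study is the temporal ensemble, consisting of quantum states generated by time evolution of an initial state $|\psi_0\rangle$ under a Hamiltonian $H$ [Fig. 3(a)], with probabilities uniform on the time interval $[0, T]$:

$$\mathcal{E}_T = \big\{|\psi(t)\rangle = e^{-iHt}|\psi_0\rangle\big\}_{t\in[0,T]}, \qquad t\sim\mathrm{Unif}[0,T]. \tag{3}$$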

This ensemble consists of the trajectory of a quantum state as it evolves under $H$. The statistical properties of the trajectory quantify its degree of ergodicity in Hilbert space, as our results will make clear.

Conventionally, quantum ergodicity is formulated in terms of the energy eigenstates of a system [68, 69, 70]. While this applies to time-independent Hamiltonian evolution or Floquet dynamics, it is not applicable to quantum dynamics in general. Furthermore, the notion of ergodicity is fundamentally dynamical in nature, and its definition in terms of static quantities such as energy eigenstates illustrates a tension in our understanding of quantum ergodicity. Recent works [39, 40] challenge this formulation. Specifically, they show that the ergodicity of certain time-dependent Hamiltonian dynamics cannot be formulated using conventional approaches based on the statistical properties of (quasi)-energy eigenstates, but instead can be characterized by the statistical properties of their trajectories. The central idea of Refs. [39, 40] is to test if a time-evolved state uniformly visits all ambient points in the Hilbert space, a property dubbed (complete) Hilbert-space ergodicity.

Our work generalizes the notion of Hilbert-space ergodicity [39] to systems with energy conservation. The presence of energy conservation explicitly disallows ergodicity over all of Hilbert space. Nevertheless, we find that many-body dynamics under time-independent Hamiltonian evolution is still Hilbert-space ergodic, in the sense that the evolved states uniformly explore their available Hilbert space, which is constrained by energy conservation. To understand the effects of energy conservation, consider the simple case of a single qubit initially polarized in the $x$-direction, subject to a magnetic field along the $z$-axis. Over time, the qubit periodically traces out an equatorial circle on the Bloch sphere, instead of uniformly covering the sphere. In contrast, quantum dynamics without such conservation laws, specifically a quasiperiodically driven system, can uniformly explore Hilbert space [39].
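The single-qubit example can be checked in a few lines. This sketch assumes $H = (\omega/2)\sigma^z$ with $\hbar = 1$ and $\omega = 1$, choices made only for illustration. Because the energy populations $|\langle E_j|\psi(t)\rangle|^2 = 1/2$ are conserved, the Bloch vector stays on the equator, $(\cos\omega t, \sin\omega t, 0)$.

```python
import numpy as np

sx = np.array([[0, 1], [1, 0]], dtype=complex)
sy = np.array([[0, -1j], [1j, 0]])
sz = np.array([[1, 0], [0, -1]], dtype=complex)

omega = 1.0                                           # assumed field strength (hbar = 1)
energies = np.array([omega / 2, -omega / 2])          # H = (omega/2) sigma_z is diagonal
psi0 = np.array([1, 1], dtype=complex) / np.sqrt(2)   # polarized along +x

times = np.linspace(0, 20, 401)
bloch = []
for t in times:
    psi_t = np.exp(-1j * energies * t) * psi0         # e^{-iHt} in the energy eigenbasis
    bloch.append([np.real(psi_t.conj() @ s @ psi_t) for s in (sx, sy, sz)])
bloch = np.array(bloch)

# Energy populations of the final state: unchanged from the initial 1/2, 1/2.
populations = np.abs(psi_t) ** 2
```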

As in classical dynamics [71], we find that a quantum many-body system may be Hilbert-space ergodic without being quantum chaotic (i.e. while being integrable). We show that Hilbert-space ergodicity holds as long as the system satisfies the no-resonance conditions (Definition 3), colloquially the absence of higher-order resonances in the spectrum. This condition is widely assumed to hold in chaotic systems [69, 17, 72, 13], and we numerically observe that it also holds in certain integrable systems, such as the XXZ model, but not in others, such as the transverse-field Ising model.
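A rough numerical check of these conditions can be sketched as follows, assuming the standard form of the $k$-th no-resonance condition: $E_{a_1}+\dots+E_{a_k} = E_{b_1}+\dots+E_{b_k}$ only if $(b_1,\dots,b_k)$ is a permutation of $(a_1,\dots,a_k)$. Equivalently, every multiset of $k$ level indices must give a distinct energy sum. The function name `satisfies_no_resonance` and the tolerance are hypothetical; a finite tolerance can only detect resonances up to numerical precision.

```python
import itertools
import numpy as np

def satisfies_no_resonance(energies, k, tol=1e-9):
    """Check the k-th no-resonance condition numerically (up to tolerance tol).

    Every multiset {a_1, ..., a_k} of level indices must give a distinct sum
    E_{a_1} + ... + E_{a_k}; a coincidence between different multisets is a resonance.
    """
    sums = sorted(
        sum(energies[i] for i in combo)
        for combo in itertools.combinations_with_replacement(range(len(energies)), k)
    )
    return all(b - a > tol for a, b in zip(sums, sums[1:]))

# Toy example: an equally spaced spectrum has resonances such as E_0 + E_2 = 2 E_1.
ladder = np.array([0.0, 1.0, 2.0])

# Generic example: the spectrum of a random real symmetric matrix.
rng = np.random.default_rng(2)
m = rng.normal(size=(6, 6))
generic = np.linalg.eigvalsh((m + m.T) / 2)

print(satisfies_no_resonance(ladder, 2), satisfies_no_resonance(generic, 2))
```

The $k=1$ condition reduces to a non-degenerate spectrum, which the ladder satisfies even though it fails at $k=2$.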

To prove our claim of Hilbert-space ergodicity, we show that in the infinite-interval limit, the temporal ensemble is equal to the random phase ensemble [46, 73]. This is the ensemble of states $|\psi\rangle = \sum_j \sqrt{p_j}\,e^{i\phi_j}|j\rangle$ with fixed amplitudes $\sqrt{p_j}$ in some basis $\{|j\rangle\}$, here the energy eigenbasis $\{|E_j\rangle\}$, and with complex phases $\phi_j$ drawn independently from the uniform distribution on $[0, 2\pi)$. In a time-evolved state, the amplitudes are fixed by the initial state and Hamiltonian, and the phases are the only degrees of freedom.

Theorem 1 (informal): The infinite-time temporal ensemble obtained by evolving an initial state $|\psi_0\rangle$ is equal to a random phase ensemble if the Hamiltonian satisfies all $k$-th no-resonance conditions. Conversely, for almost every initial state, if the infinite-time temporal ensemble is equal to a random phase ensemble, the Hamiltonian satisfies all $k$-th no-resonance conditions.
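To make Theorem 1 concrete, the sketch below compares a fourth-order statistic, $\mathbb{E}|\langle\phi|\psi\rangle|^4$ for a fixed probe state $|\phi\rangle$, between a long-time temporal ensemble (estimated by sampling times uniformly) and the random phase ensemble. For the latter, averaging over independent phases gives exactly $2(\sum_j w_j)^2 - \sum_j w_j^2$ with $w_j = |\langle E_j|\phi\rangle|^2 p_j$. A random Hermitian matrix stands in for a generic Hamiltonian; this, the dimension, $T$, and the sample count are illustrative assumptions, not choices from the text.

```python
import numpy as np

rng = np.random.default_rng(3)
dim = 8

def random_state(rng, dim):
    """Random normalized complex vector."""
    v = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    return v / np.linalg.norm(v)

# Stand-in for a generic Hamiltonian: only its spectrum is needed, because
# all states below are written in its energy eigenbasis.
m = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
energies = np.linalg.eigvalsh((m + m.conj().T) / 2)

psi0 = random_state(rng, dim)  # initial state, components <E_j|psi0>
phi = random_state(rng, dim)   # fixed probe state, components <E_j|phi>

# Temporal ensemble: draw t uniformly from [0, T] and evolve psi0.
T, n_samples = 1e6, 200_000
t = rng.uniform(0, T, size=n_samples)
psi_t = psi0[None, :] * np.exp(-1j * np.outer(t, energies))
temporal = np.mean(np.abs(psi_t @ phi.conj()) ** 4)

# Random phase ensemble with populations p_j = |<E_j|psi0>|^2 (exact average).
w = np.abs(phi) ** 2 * np.abs(psi0) ** 2
random_phase = 2 * w.sum() ** 2 - (w**2).sum()

# For comparison only: the Haar value 2 / (d (d + 1)) ignores the fixed populations.
haar = 2 / (dim * (dim + 1))
print(temporal, random_phase, haar)
```

Agreement between the first two numbers relies on the spectrum having no resonances; repeating the experiment with an equally spaced spectrum would generally break it.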

The above Theorem is somewhat of a folklore result [72, 70, 46], often stated without proof. Here we provide a full statement and proof, for several reasons. Firstly, Theorem 1 has a natural interpretation in terms of a maximum entropy principle. The random phase ensemble has maximum ensemble entropy [Eq. (2)] on this restricted Hilbert space, and in Sec. IV.2, we quantify the rate of convergence of the temporal ensemble to the random phase ensemble with increasing interval . Next, this leads to a novel type of universality in the many-body setting, which we outline below.

Unlike the above example of a two-level system, the exponentially larger Hilbert space of many-body systems leads to behavior much more akin to ergodicity in the full Hilbert space. Specifically, we show that the trajectory appears pseudorandom in the following sense: in the limit of large Hilbert-space dimension $D$, the temporal ensemble is statistically equal to the Haar ensemble, deformed as follows:

$$|\psi(t)\rangle \;\overset{\text{stat.}}{\approx}\; \sqrt{D\,\rho_{\mathrm{d}}}\;|\psi_{\mathrm{Haar}}\rangle, \tag{4}$$

where $\rho_{\mathrm{d}} = \sum_j |\langle E_j|\psi_0\rangle|^2\,|E_j\rangle\langle E_j|$ is the diagonal ensemble. By “statistically equal,” we mean that Eq. (4) does not hold at the level of wavefunctions, but the moments of the two ensembles are very close. To show this, we develop a simple analytic expression for the moments of the temporal ensemble that holds in the limit $D\to\infty$ (Sec. IV.1). One consequence of this pseudorandomness is our finding that the measurement probabilities, upon appropriate rescaling, universally follow the Porter-Thomas distribution (below, and Sec. IV.3), a hallmark of random quantum states that had previously been observed in quantum dynamics without conservation laws [74]. We discuss information-theoretic aspects of this result and also prove an analog of the ergodic theorem from the theory of dynamical systems [75], which relates statistics defined over time to statistics over configuration space (discussed below and in Sec. IV.5).
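The mechanism behind the Porter-Thomas signature can be illustrated with a short Monte Carlo sketch. For a random phase state, $\langle z|\psi\rangle = \sum_j \langle z|E_j\rangle\sqrt{p_j}\,e^{i\phi_j}$ is a sum of many terms with independent phases, so it is approximately complex Gaussian, and $|\langle z|\psi\rangle|^2$ divided by its mean (the diagonal-ensemble probability $\langle z|\rho_{\mathrm{d}}|z\rangle$) is approximately exponentially distributed. We assume here that the rescaling divides by this diagonal-ensemble probability; the random amplitudes and overlaps are hypothetical stand-ins. The exact second moment of the rescaled probability, $2 - \sum_j w_j^2/(\sum_j w_j)^2$, approaches the Porter-Thomas value 2 when no single level dominates.

```python
import numpy as np

rng = np.random.default_rng(4)
dim, n_samples = 64, 50_000

def random_state(rng, dim):
    v = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    return v / np.linalg.norm(v)

# Hypothetical setup, all vectors written in the energy eigenbasis:
amps = np.abs(random_state(rng, dim))  # fixed amplitudes sqrt(p_j) of the initial state
z = random_state(rng, dim)             # overlaps <E_j|z> for a fixed measurement outcome z

# Random phase ensemble: <z|psi> = sum_j conj(<E_j|z>) sqrt(p_j) e^{i phi_j}.
phases = rng.uniform(0, 2 * np.pi, size=(n_samples, dim))
prob = np.abs((amps * np.exp(1j * phases)) @ z.conj()) ** 2

# Rescale by the diagonal-ensemble probability <z|rho_d|z> = sum_j |<E_j|z>|^2 p_j.
w = np.abs(z) ** 2 * amps**2
ratio = prob / w.sum()

# Porter-Thomas (exponential) law: E[ratio] = 1 and E[ratio^2] = 2.
exact_second = 2 - (w**2).sum() / w.sum() ** 2
print(ratio.mean(), (ratio**2).mean(), exact_second)
```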

II.2 Projected ensembles are (generalized) Scrooge ensembles

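Not only do temporal ensembles satisfy the maximum entropy principle, so do projected ensembles. Projected ensembles, introduced in a modern context by Refs. [28, 29] (and previously studied in Ref. [76]), are ensembles of states obtained from a single, larger many-body state $|\Psi\rangle$. To construct them, we partition the system into two parts $A$ and $B$, such that the Hilbert space factorizes (for simplicity of discussion) as $\mathcal{H} = \mathcal{H}_A\otimes\mathcal{H}_B$. We then measure $B$ in a basis $\{|z_B\rangle\}$. Each measurement outcome $z_B$ projects $A$ into a distinct pure state [Fig. 1(d)], defined as

$$|\psi(z_B)\rangle = \frac{\big(\mathbb{1}_A\otimes\langle z_B|\big)\,|\Psi\rangle}{\sqrt{p(z_B)}}, \tag{5}$$

where the normalization constant $p(z_B)$ is the probability of obtaining the outcome $z_B$, $p(z_B) = \langle\Psi|\big(\mathbb{1}_A\otimes|z_B\rangle\langle z_B|\big)|\Psi\rangle$. This defines the projected ensemble:

$$\mathcal{E}_{\mathrm{proj}} = \big\{p(z_B),\,|\psi(z_B)\rangle\big\}_{z_B}. \tag{6}$$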
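Equations (5) and (6) translate directly into a few array operations. The sketch below assumes a hypothetical random global state on $A\otimes B$ and a measurement of $B$ in the computational basis. It also checks a property used later: the first moment of the projected ensemble equals the reduced density matrix $\rho_A = \mathrm{Tr}_B|\Psi\rangle\langle\Psi|$.

```python
import numpy as np

rng = np.random.default_rng(5)
d_a, d_b = 2, 8

# Hypothetical global state |Psi> on A (x) B, stored as a matrix Psi[a, b].
psi = rng.normal(size=(d_a, d_b)) + 1j * rng.normal(size=(d_a, d_b))
psi /= np.linalg.norm(psi)

# Measure B in the computational basis {|z_B>}: (1_A (x) <z_B|)|Psi> is column z_B.
unnormalized = psi.T                                # shape (d_b, d_a), one row per outcome
probs = np.sum(np.abs(unnormalized) ** 2, axis=1)   # p(z_B)
projected = unnormalized / np.sqrt(probs)[:, None]  # |psi(z_B)>, Eq. (5)

# First moment of the projected ensemble vs the reduced density matrix rho_A.
first_moment = sum(p * np.outer(v, v.conj()) for p, v in zip(probs, projected))
rho_a = psi @ psi.conj().T
print(np.max(np.abs(first_moment - rho_a)))
```

Measuring in a different basis of $B$ would only require applying the corresponding unitary to the $B$ index before this step.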

For concreteness, we primarily study global states obtained from time evolution by a generic, ergodic many-body Hamiltonian $H$, which rapidly reach thermal equilibrium at a temperature set by the energy of the initial state [51, 52]. We will also study eigenstates of $H$ that exhibit similar thermal properties.

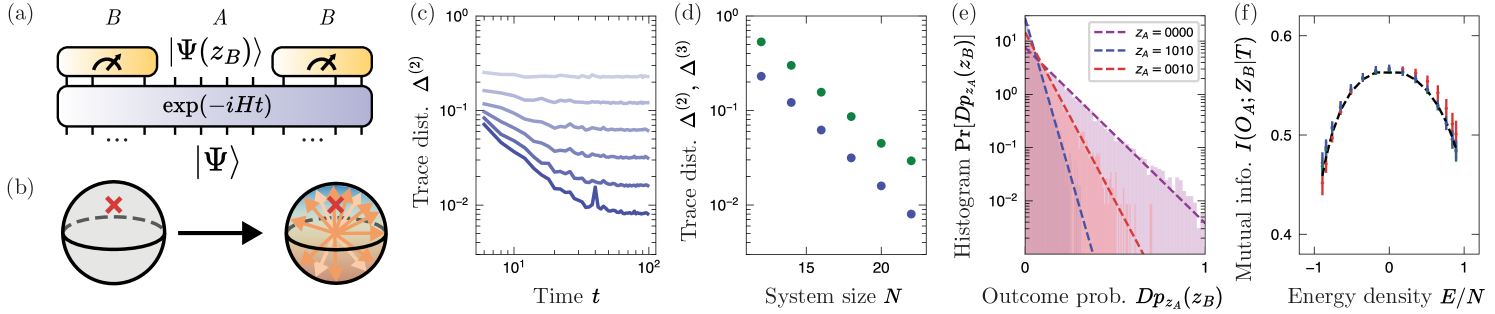

In Refs. [28, 29], it was found that when the state is obtained from time-evolution under a chaotic many-body Hamiltonian at infinite effective temperature, the projected ensemble of small subsystems is very well-approximated by the Haar ensemble. This phenomenon is known as deep thermalization [30]. This has since been rigorously shown in a variety of settings, including deep random circuits [29, 36], dual-unitary models [77, 30, 31, 32, 78], free fermion models [33], or Hamiltonian dynamics with certain assumptions [34]. Various aspects including the rate of deep thermalization [30, 31, 78, 36], the effect of symmetries [35, 37], and the presence of magic in the global state [38] have been investigated. The majority of what we know so far is restricted to the setting of infinite temperature dynamics. In this work, we set out to study the projected ensembles formed in finite temperature dynamics.

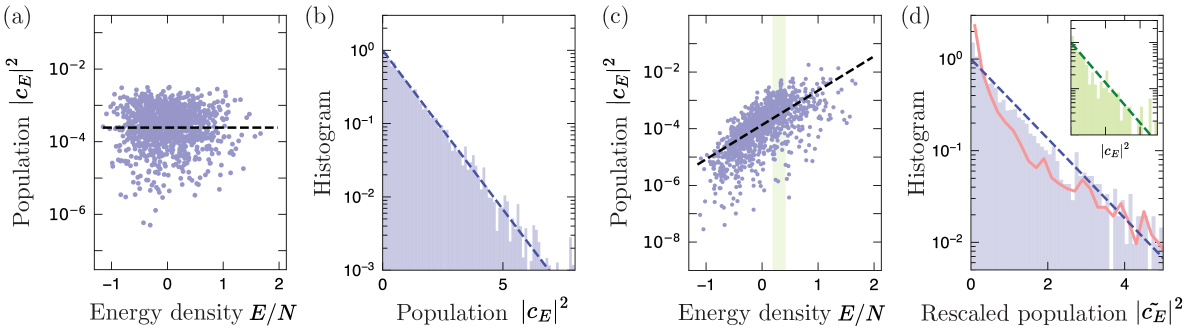

At finite temperature, projected ensembles are not close to the Haar ensemble. To see this, note that the first moment of the projected ensemble is precisely equal to the reduced density matrix (RDM) $\rho_A = \mathrm{Tr}_B|\Psi\rangle\langle\Psi|$. In turn, only at infinite temperature is the RDM equal to the first moment of the Haar ensemble, the maximally mixed state $\mathbb{1}_A/d_A$, where $d_A$ is the dimension of $\mathcal{H}_A$. This follows from a standard result in quantum thermalization [69]: the reduced density matrix is well described by a Gibbs state, i.e. $\rho_A \approx e^{-\beta H_A}/\mathrm{Tr}\,e^{-\beta H_A}$, where $H_A$ is the Hamiltonian truncated to the subsystem $A$, and $\beta$ is an effective inverse temperature. It has remained an open question how to describe these projected ensembles at finite temperature.

In this work, we find that a maximum entropy principle still applies: under an “energy non-revealing” condition on the measurement basis, which we discuss below, the projected ensembles are described by the ensembles that have maximum entropy over all ensembles with a given first moment. These ensembles have been previously studied and were termed Scrooge ensembles [79, 80, 81] for their unique information-theoretic properties. (As a technical point, Scrooge ensembles only have maximum entropy when they are regarded as ensembles of unnormalized states [80]; see Sec. III.) Each density matrix $\rho$ uniquely defines a Scrooge ensemble $\mathcal{E}_{\mathrm{Scr}}(\rho)$, and our result is that at finite temperatures, the projected ensemble is the Scrooge ensemble corresponding to the appropriate thermal RDM.

Claim 1 (Corollary of Theorem 4 below, informal): The projected ensemble is statistically described by the Scrooge ensemble, if the measurement basis is energy non-revealing.

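The Scrooge ensemble may be understood essentially as a deformation of the Haar ensemble such that its first moment equals $\rho$ [79] [Fig. 4(b)]. Specifically, we can form the Scrooge ensemble by first sampling Haar-random states $|\psi\rangle$, then deforming them by a given density matrix $\rho$, i.e.

$$|\tilde\psi\rangle = \sqrt{d\,\rho}\;|\psi\rangle, \tag{7}$$

where $d$ is the dimension of the Hilbert space on which $\rho$ acts. The probability of each state is further weighted by its squared norm $\langle\tilde\psi|\tilde\psi\rangle$, leading to the distribution of states

$$\mathcal{E}_{\mathrm{Scr}}(\rho) = \left\{\langle\tilde\psi|\tilde\psi\rangle\,p_{\mathrm{Haar}}(\psi),\; \frac{|\tilde\psi\rangle}{\sqrt{\langle\tilde\psi|\tilde\psi\rangle}}\right\}. \tag{8}$$

Because $\mathbb{E}_{\mathrm{Haar}}\big[|\psi\rangle\langle\psi|\big] = \mathbb{1}/d$, the first moment of this ensemble is $\sqrt{d\rho}\,(\mathbb{1}/d)\,\sqrt{d\rho} = \rho$, as required. In Sec. V.3, we give closed-form expressions for the resultant probability distribution function $p_{\mathrm{Scr}}(\psi)$, as well as its $k$-th moments.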
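The construction of Eqs. (7) and (8) suggests a simple sampler: draw Haar-random states, apply $\sqrt{d\rho}$, normalize, and keep $\langle\tilde\psi|\tilde\psi\rangle$ as an importance weight. The sketch below, assuming an arbitrary diagonal $\rho$ chosen only for illustration, verifies numerically that the weighted first moment reproduces $\rho$. It is a Monte Carlo illustration of the definition, not the closed-form treatment of Sec. V.3.

```python
import numpy as np

rng = np.random.default_rng(6)
d, n_samples = 3, 200_000

# Hypothetical target first moment: a thermal-like diagonal density matrix.
rho = np.diag([0.6, 0.3, 0.1]).astype(complex)
evals, evecs = np.linalg.eigh(rho)
sqrt_d_rho = evecs @ np.diag(np.sqrt(d * evals)) @ evecs.conj().T  # sqrt(d * rho)

# Haar-random states: normalized complex Gaussian vectors (one per row).
haar = rng.normal(size=(n_samples, d)) + 1j * rng.normal(size=(n_samples, d))
haar /= np.linalg.norm(haar, axis=1, keepdims=True)

# Eq. (7): deform each Haar state; Eq. (8): weight by the squared norm, then normalize.
tilde = haar @ sqrt_d_rho.T                   # row i is sqrt(d rho) |psi_i>
weights = np.sum(np.abs(tilde) ** 2, axis=1)  # <tilde psi|tilde psi>, Haar mean equals 1
states = tilde / np.sqrt(weights)[:, None]

# Weighted first moment of the sampled Scrooge ensemble; it should reproduce rho.
first_moment = (weights[:, None] * states).T @ states.conj() / weights.sum()
print(np.round(first_moment.real, 3))
```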

Scrooge ensembles do not always describe the projected ensemble. They only do so in the special case when the measurement basis is uncorrelated with the energy, as defined precisely in Sec. VI.4. Under these circumstances, we can treat the projected states as independent, identically distributed samples from a single distribution. However, this is not always the case: when $|z_B\rangle$ is correlated with the energy of subsystem $B$, it is also correlated with the projected state $|\psi(z_B)\rangle$, such that the energies of $|z_B\rangle$ and $|\psi(z_B)\rangle$ are anticorrelated. Under these general conditions, we introduce the generalized Scrooge ensemble to describe the projected ensemble (Section VI). Each projected state should be treated as a sample from a different Scrooge ensemble for each $z_B$, i.e. $|\psi(z_B)\rangle \sim \mathcal{E}_{\mathrm{Scr}}\big(\tilde\rho(z_B)\big)$, where the first moment is not the RDM $\rho_A$ but a $z_B$-dependent mixed state $\tilde\rho(z_B)$, which we may determine from the time average of the projected state $|\psi(z_B)\rangle$. Therefore, the generalized Scrooge ensemble is more accurately a collection of ensembles, where the projected ensemble comprises exactly one sample from each ensemble; we nevertheless abuse notation and refer to it as an ensemble. This is our second key result.

Theorem 4 (informal): At sufficiently long times $t$, the projected ensemble generated by a time-evolved state $|\psi(t)\rangle$ is approximately equal to the generalized Scrooge ensemble, which depends on the measurement basis $\{|z_B\rangle\}$, Hamiltonian $H$, and initial state $|\psi_0\rangle$, if the Hamiltonian satisfies all $k$-th no-resonance conditions. The converse direction holds for almost every initial state $|\psi_0\rangle$. (While our proof requires times that are exponential in system size, we find from numerical experiments that times growing only weakly, at most polynomially, with system size suffice.)

When the measurement basis is uncorrelated with the global energy, the generalized Scrooge ensemble reduces to the Scrooge ensemble (Claim 1). Specifically, this happens when the mixed state $\tilde\rho(z_B)$ is independent of $z_B$ (up to a rescaling), giving a concrete definition for “energy non-revealing” bases.

We prove this result using our knowledge of the temporal ensemble. We show that the temporal trajectory of the projected state is a Scrooge ensemble (here, because of the subsystem projection, there are no hard constraints on the amplitudes, as there were in the case of the global state). We then utilize our ergodic theorem (Sec. IV.5) to translate our results for a distribution over time to a distribution over the outcomes .

While Theorem 4 concerns the time-evolved states $|\psi(t)\rangle$, we numerically observe that the generalized Scrooge ensemble also describes the projected ensembles obtained from energy eigenstates, suggesting that our maximum entropy principle holds for a broader class of many-body states.

In projected ensembles, the maximum entropy principle sheds light on the nature of correlations between system and bath. Specifically, the projected ensemble reveals correlations between the subsystem $A$ and its complement $B$, which we quantify in terms of the mutual information. We find that the nontrivial correlations between them are minimal and basis independent. Here, nontrivial correlations mean any amount of correlation (mutual information) beyond the thermal correlations that remain after infinite-time averaging. In other words, any meaningful correlations between $A$ and $B$ originate only from energy conservation, and from other symmetries if they exist. Below and in Secs. V.8 and VI.3, we discuss consequences of these correlations for tasks such as communication and compression.

II.3 Implications and discussion

Below, we summarize several broader points that we found applicable to both the temporal and projected ensemble.

Ergodic theorem — Remarkably, the temporal and projected ensembles are closely related. We prove that a version of the ergodic theorem holds in many-body dynamics. This enables us to translate statements about distributions over time (temporal ensemble) into statements about distributions over measurement outcomes, at fixed, typical points in time. In particular, we use this to prove Theorem 4, a statement about the projected ensembles at typical points in time.

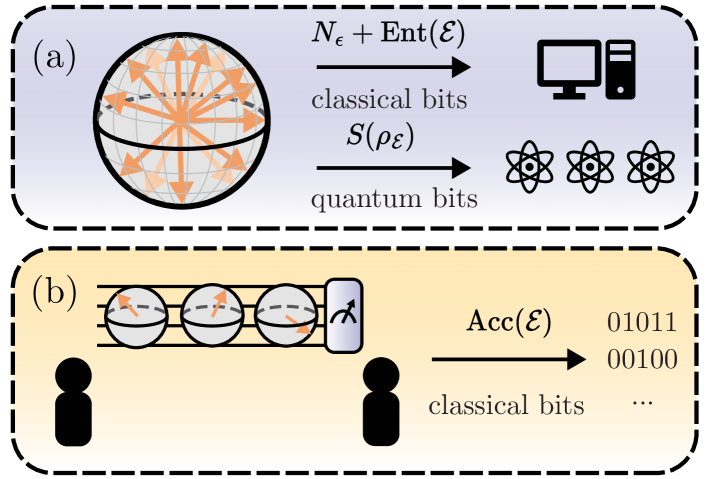

Operational meaning of ensemble entropy — In this work, we use the ensemble entropy to define our maximum entropy principle. We also identify its operational interpretation: the ensemble entropy quantifies the information required to classically represent an ensemble of states. Our maximally entropic ensembles are therefore the most difficult to compress, formalizing our intuition that the states generated by natural, chaotic many-body dynamics lack overall structure that can be exploited for compression.

Specifically, the ensemble entropy quantifies the number of classical bits required to store an ensemble of states up to numerical precision [Fig. 2(a)]. This should be contrasted with the minimum number of qubits required to store the ensemble in quantum memory, which was established by Schumacher to depend only on the first moment of the ensemble through the von Neumann entropy [82].

Information-theoretic consequences — Not only are our ensembles of states maximally difficult to store classically, they are also maximally difficult to distinguish by measurement, as quantified by their accessible information [79, 63]. The accessible information quantifies the maximum rate at which classical information can be transmitted by sending states from the ensemble over a quantum channel (in bits of information per channel use) [Fig. 2(b)]. It is upper-bounded by the well-known Holevo bound [56]. Over all ensembles with a given first moment, it is lower-bounded by the subentropy [Eq. (61)], as shown by Jozsa, Robb, and Wootters [79], who found that the Scrooge ensemble achieves this lower bound. They named it the “Scrooge ensemble” because it is uniquely “stingy” in the amount of information revealed under measurement. Therefore, the projected ensemble not only has maximum ensemble entropy, it also minimizes the amount of information that can be extracted. These information-theoretic properties are directly measurable [Fig. 4(f) and Fig. 5(d)] but have not yet been observed in experiment.
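For a density matrix with eigenvalues $\lambda_k$, the subentropy of Jozsa, Robb, and Wootters can be written as $Q(\rho) = -\sum_k \big(\prod_{l\neq k}\frac{\lambda_k}{\lambda_k-\lambda_l}\big)\lambda_k\ln\lambda_k$, valid for pairwise distinct eigenvalues. The sketch below implements this formula directly (the function names are hypothetical) and checks it against two known facts: $Q \le S$ (the von Neumann entropy), and the maximally mixed value $Q(\mathbb{1}/d) = \ln d - \sum_{k=2}^{d} 1/k$, which approaches $1-\gamma$ nats as $d\to\infty$. Because the formula is singular for degenerate spectra, the maximally mixed case is approached through a slightly split spectrum.

```python
import numpy as np

def subentropy(eigenvalues):
    """Subentropy Q (in nats) for pairwise distinct eigenvalues.

    Q = -sum_k [prod_{l != k} lam_k / (lam_k - lam_l)] lam_k ln(lam_k),
    with the convention 0 ln 0 = 0.
    """
    lam = np.asarray(eigenvalues, dtype=float)
    q = 0.0
    for k, lk in enumerate(lam):
        if lk <= 0:
            continue
        others = np.delete(lam, k)
        q -= np.prod(lk / (lk - others)) * lk * np.log(lk)
    return q

def von_neumann(eigenvalues):
    """Von Neumann entropy (in nats) from the eigenvalues."""
    lam = np.asarray(eigenvalues, dtype=float)
    lam = lam[lam > 0]
    return -np.sum(lam * np.log(lam))

# Nearly maximally mixed qutrit: close to ln 3 - 1/2 - 1/3.
near_mixed = [1 / 3 + 1e-3, 1 / 3, 1 / 3 - 1e-3]
print(subentropy(near_mixed), np.log(3) - 1 / 2 - 1 / 3)
```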

In the case of temporal ensembles, the accessible information quantifies the amount of information that can be gained about the evolution time of the state. We find that it takes on a universal value, $(1-\gamma)/\ln 2 \approx 0.61$ bits, where $\gamma$ is the Euler-Mascheroni constant [Fig. 3(d)].

Measurable signature: universal Porter-Thomas distribution — Our maximum entropy principle selects distributions of states that are “closest” to the Haar ensemble while satisfying certain constraints. The relationship between our ensembles of states and the Haar ensemble gives rise to a measurable signature of our maximum entropy principle: the Porter-Thomas (PT) distribution [83]. Originally identified in nuclear physics, the PT distribution has received renewed attention in many-body physics. It is the distribution of overlaps $p = |\langle z|\psi\rangle|^2$ between Haar-random states $|\psi\rangle$ and a fixed (but arbitrary) state $|z\rangle$, i.e. the distribution of the probability of measuring $|z\rangle$ in a Haar-random state [84]. The PT distribution is not only a measurable signature of Haar-random states; we find that it is also present in many natural many-body states. In companion work [41], we experimentally and theoretically investigate the emergence of the Porter-Thomas distribution in global quantities, its relationship to local quantities, and how it is modified in the presence of noise, using our results to learn about the noise in an experiment.

II.4 Organization of paper

The rest of the paper is organized as follows. In Section III, we provide an overview of the central object of our work — ensembles of pure states and their information-theoretic and measurable properties. We then proceed to study our two ensembles of states: the temporal ensemble (Section IV) and the projected ensemble in a special and a general setting (Sections V and VI). Finally, in Section VII we discuss the PT distribution in the properties of many-body eigenstates.

III Ensembles of pure states

Ensembles of pure states generated by quantum many-body dynamics are the central objects of study in this work. In this section, we provide an overview of ensembles of pure states and their properties. After defining normalized and unnormalized ensembles of states, we introduce two quintessential ensembles: the Haar and Gaussian ensembles, which we use as the reference distributions for our ensemble entropy. We then discuss the quantities we use to analyze our ensembles of states: the $k$-th statistical moments, ensemble entropy, mutual information, and PT distribution.

Ensembles of pure states are collections of pure states in a Hilbert space of dimension $D$, weighted by a probability distribution.

Definition 1.

An ensemble of pure states $\mathcal{E}$ is a collection of states $|\psi_j\rangle$ weighted by probabilities $p_j$:

$$\mathcal{E} = \{p_j, |\psi_j\rangle\}_j. \tag{9}$$

When the $p_j$'s are omitted, we take the states to be uniformly distributed over the indices $j$. [Footnote 5: We ignore subtleties about the global phase of the states $|\psi_j\rangle$, and assume that the states are gauge-fixed to, for example, have a real positive first entry in some basis.]

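Our distributions may be continuous or discrete. In the continuous case, the distribution is a measure $P(\psi)\,d\psi$ over normalized wavefunctions, which we can specify in terms of the components $\psi_i = \langle i|\psi\rangle$, $i = 1, \dots, D$, subject to the constraint $\sum_i |\psi_i|^2 = 1$:

$$\mathcal{E} = \left\{P(\psi)\,\delta\big(\langle\psi|\psi\rangle - 1\big)\,d\psi,\ |\psi\rangle\right\}, \tag{10}$$

where $d\psi = \prod_{i=1}^{D} d\,\mathrm{Re}\,\psi_i\; d\,\mathrm{Im}\,\psi_i$ is the Euclidean measure on $\mathbb{C}^D$.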

Unnormalized ensembles of states — We will also find it useful to define distributions of unnormalized states. In this case, the distribution is simply a measure $P(\tilde\psi)\,d\tilde\psi$ over $D$-dimensional complex vectors, defined in terms of their components $\tilde\psi_i$. For consistency, we use tildes throughout this work to denote unnormalized states $|\tilde\psi\rangle$.

An unnormalized ensemble can be mapped to a normalized one by normalizing each state, $|\psi\rangle = |\tilde\psi\rangle/\sqrt{\langle\tilde\psi|\tilde\psi\rangle}$. For each normalized state $|\psi\rangle$, the resulting measure integrates $P(\tilde\psi)$ over all unnormalized vectors $|\tilde\psi\rangle = r|\psi\rangle$ with $r > 0$, i.e. it averages over the distribution of the norm. Therefore, the unnormalized ensemble contains more information than the normalized one: specifically, information about fluctuations of the norm $\langle\tilde\psi|\tilde\psi\rangle$.

III.1 Gaussian and Haar random ensembles of states

The ensemble entropy, which quantifies our maximum entropy principle, is defined with reference to the Haar (or Gaussian) ensemble. These ensembles are unique in being invariant under arbitrary unitary transformations and have been the subject of considerable study as paradigmatic distributions of random quantum states [48].

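Random unnormalized states: the Gaussian ensemble — The Gaussian ensemble is a probability distribution over unnormalized states, where each component $\tilde\psi_i$ is an independent complex Gaussian random variable with zero mean and variance $\mathbb{E}|\tilde\psi_i|^2 = 1/D$:

$$P_{\rm G}(\tilde\psi) = \prod_{i=1}^{D}\frac{D}{\pi}\,e^{-D|\tilde\psi_i|^2} = \left(\frac{D}{\pi}\right)^{D} e^{-D\langle\tilde\psi|\tilde\psi\rangle}. \tag{11}$$

The normalization above is chosen so that the average squared norm is $\mathbb{E}\langle\tilde\psi|\tilde\psi\rangle = 1$.

Random normalized states: the Haar ensemble — The Haar ensemble is the unique distribution of normalized states that is invariant under any unitary transformation $|\psi\rangle \mapsto U|\psi\rangle$. It is naturally related to the Gaussian ensemble: one can sample from the Haar ensemble by sampling states from the Gaussian ensemble, then normalizing them. This induces the measure

$$P_{\rm Haar}(\psi) = \frac{(D-1)!}{\pi^{D}}, \quad\text{i.e.}\quad \mathcal{E}_{\rm Haar} = \left\{\frac{(D-1)!}{\pi^{D}}\,\delta\big(\langle\psi|\psi\rangle - 1\big)\,d\psi,\ |\psi\rangle\right\}. \tag{12}$$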

The Haar ensemble has been widely studied in quantum information science and utilized for a myriad of applications, see e.g. Ref. [48]. In particular, the moments of the Haar ensemble play a key role, which we now discuss.

III.2 Statistical $k$-th moments

An ensemble of states contains a large amount of information, beyond that of its density matrix. In this work, we systematically study and quantify ensembles of states by their higher-order moments.

Higher order quantities have received less attention in many-body physics because they have been inaccessible to traditional experiments, requiring a large number of controlled repetitions in order to access higher order statistical properties. Modern quantum devices address these limitations with both high repetition rates and a high degree of controllability [28, 85].

In this work, we find that not only do the average properties (first moments) thermally equilibrate, so too do the higher moments of our natural ensembles of states. We first define the $k$-th moments.

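The $k$-th moment of an ensemble $\mathcal{E}$ is, for discrete ensembles,

$$\rho^{(k)} = \sum_j p_j \left(|\psi_j\rangle\langle\psi_j|\right)^{\otimes k}, \tag{13}$$

for continuous ensembles,

$$\rho^{(k)} = \int d\psi\, \delta\big(\langle\psi|\psi\rangle - 1\big)\,P(\psi)\left(|\psi\rangle\langle\psi|\right)^{\otimes k}, \tag{14}$$

and for unnormalized ensembles (denoting the moments with tildes),

$$\tilde\rho^{(k)} = \int d\tilde\psi\, P(\tilde\psi)\left(|\tilde\psi\rangle\langle\tilde\psi|\right)^{\otimes k}. \tag{15}$$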

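The $k$-th moment of an ensemble can be used to describe the expectation value of any linear operator $O$ which acts on $k$ copies of the Hilbert space. The ensemble average of $\langle\psi|^{\otimes k} O |\psi\rangle^{\otimes k}$ can be obtained from the $k$-th moment: $\mathbb{E}_{\psi\sim\mathcal{E}}\big[\langle\psi|^{\otimes k} O |\psi\rangle^{\otimes k}\big] = \mathrm{Tr}\big[O\,\rho^{(k)}\big]$. For example, $\mathrm{Tr}\big[A^{\otimes k}\rho^{(k)}\big]$ describes the $k$-th moment of the expectation values $\langle\psi|A|\psi\rangle$. Here, we use $\mathbb{E}_{\psi\sim\mathcal{E}}$ to denote the ensemble average.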

The -th moment can be thought of as a generalization of the density matrix. Namely, the first moment is the conventional density matrix, and describes the average state of the ensemble. All higher moments are likewise positive semi-definite operators and, for ensembles of normalized states, have unit trace.

Moments of Haar ensemble — The higher moments of the Gaussian and Haar ensembles are particularly simple. The moments of the Haar ensemble have the analytical expression [48]:

$$\rho^{(k)}_{\rm Haar} = \frac{\sum_{\pi\in S_k}\hat\pi}{D(D+1)\cdots(D+k-1)}, \tag{16}$$

with a similar expression, $\tilde\rho^{(k)}_{\rm G} = D^{-k}\sum_{\pi\in S_k}\hat\pi$, for the moments of the Gaussian ensemble. Here, $\hat\pi$ is the operator that permutes the $k$ copies according to the permutation $\pi\in S_k$, i.e. $\hat\pi\,|i_1\rangle\otimes\cdots\otimes|i_k\rangle = |i_{\pi^{-1}(1)}\rangle\otimes\cdots\otimes|i_{\pi^{-1}(k)}\rangle$. This result follows from Schur-Weyl duality [86]. Its simplicity enables the design of useful quantum algorithms such as classical shadow tomography [87, 88], as well as analytical solutions of models of quantum dynamics such as random quantum circuits [64]. We will find that the $k$-th moments of our ensembles of states have a structure similar to the Haar $k$-th moments, enabling phenomena such as the emergence of the Porter-Thomas distribution.
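As a numerical sanity check of the Haar moment formula, the sketch below (assuming NumPy; all function names are hypothetical) builds the permutation operators explicitly, forms the exact Haar moment for $D = 3$, $k = 2$, and compares it with a Monte Carlo estimate obtained by normalizing complex Gaussian vectors, the sampling route described in Section III.1.

```python
import itertools

import numpy as np


def permutation_operator(perm, d):
    """Matrix on (C^d)^{⊗k} that moves tensor factor a to slot perm[a].
    Summing over all permutations makes the pi vs pi^{-1} convention irrelevant."""
    k = len(perm)
    shape = (d,) * k
    op = np.zeros((d**k, d**k))
    for idx in itertools.product(range(d), repeat=k):
        out = [0] * k
        for a, target in enumerate(perm):
            out[target] = idx[a]
        op[np.ravel_multi_index(tuple(out), shape), np.ravel_multi_index(idx, shape)] = 1.0
    return op


def haar_moment_exact(d, k):
    """Sum of all permutation operators divided by d(d+1)...(d+k-1)."""
    total = sum(permutation_operator(p, d) for p in itertools.permutations(range(k)))
    return total / np.prod([d + j for j in range(k)])


def haar_moment_sampled(d, k, n_samples, rng):
    """Monte Carlo estimate of the Haar k-th moment from normalized Gaussian vectors."""
    acc = np.zeros((d**k, d**k), dtype=complex)
    for _ in range(n_samples):
        v = rng.normal(size=d) + 1j * rng.normal(size=d)
        v /= np.linalg.norm(v)
        vk = v
        for _ in range(k - 1):
            vk = np.kron(vk, v)
        acc += np.outer(vk, vk.conj())
    return acc / n_samples


rng = np.random.default_rng(0)
exact = haar_moment_exact(3, 2)
sampled = haar_moment_sampled(3, 2, 20000, rng)
# Trace distance (half the trace norm) between exact and sampled second moments.
dist = 0.5 * np.abs(np.linalg.eigvalsh(exact - sampled)).sum()
print(np.trace(exact), dist)
```

The sampled moment approaches the exact one at the usual Monte Carlo rate, so the printed distance shrinks roughly as the inverse square root of the number of samples.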

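Trace distance of higher moments — Higher moments also enable the systematic and quantitative comparison of two ensembles of states $\mathcal{E}$ and $\mathcal{E}'$. Specifically, we compare their $k$-th moments through their trace distance,

$$\Delta^{(k)} = \frac{1}{2}\left\|\rho^{(k)}_{\mathcal{E}} - \rho^{(k)}_{\mathcal{E}'}\right\|_1, \tag{17}$$

where the trace (or nuclear) norm $\|A\|_1$ of a matrix is the sum of its singular values; for Hermitian matrices such as differences of moments, this is the sum of the absolute values of its eigenvalues. In particular, the trace distance sets an upper bound on how well any $k$-copy observable can distinguish two ensembles of states [63].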
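To make the trace distance concrete, the following minimal sketch (NumPy assumed; helper names are hypothetical) compares the uniform ensemble of the two computational basis states of a qubit with the Haar ensemble. Both have first moment $I/2$, so $\Delta^{(1)} = 0$, yet their second moments differ; with the standard factor of $1/2$ included, a direct calculation gives $\Delta^{(2)} = 1/3$. This illustrates why an ensemble carries information beyond its density matrix.

```python
import numpy as np


def trace_distance(a, b):
    """Half the trace norm of a - b, for Hermitian a and b."""
    return 0.5 * np.abs(np.linalg.eigvalsh(a - b)).sum()


def kth_moment(states, probs, k):
    """sum_j p_j (|psi_j><psi_j|)^{⊗k} for a discrete ensemble."""
    dim = len(states[0]) ** k
    rho = np.zeros((dim, dim), dtype=complex)
    for psi, p in zip(states, probs):
        vk = psi
        for _ in range(k - 1):
            vk = np.kron(vk, psi)
        rho += p * np.outer(vk, vk.conj())
    return rho


d = 2
basis_ensemble = [np.array([1, 0], dtype=complex), np.array([0, 1], dtype=complex)]
probs = [0.5, 0.5]

# Haar moments for d = 2: k = 1 gives I/2, k = 2 gives (I + SWAP) / (d (d + 1)).
swap = np.eye(4)[[0, 2, 1, 3]]
haar_1 = np.eye(2) / 2
haar_2 = (np.eye(4) + swap) / (d * (d + 1))

delta_1 = trace_distance(kth_moment(basis_ensemble, probs, 1), haar_1)
delta_2 = trace_distance(kth_moment(basis_ensemble, probs, 2), haar_2)
print(delta_1, delta_2)
```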

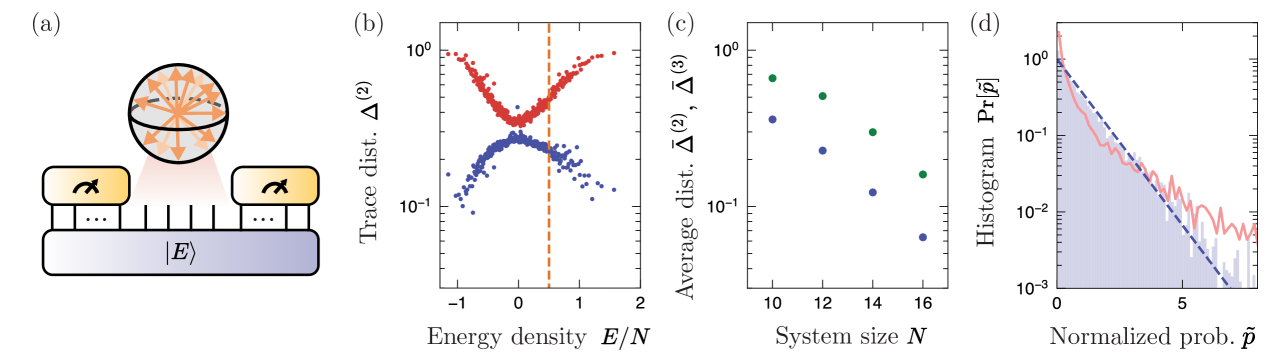

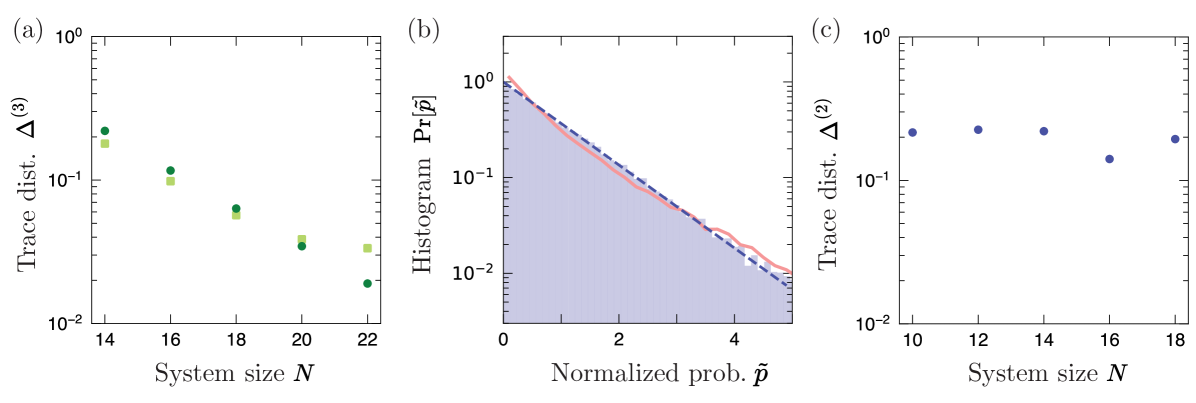

Refs. [28, 29] found that with increasing system size, projected ensembles of infinite effective temperature states (either obtained from quench dynamics of simple initial states or chosen from high-energy eigenstates) have $k$-th moments closer to the Haar moments (in other words, projected ensembles form better approximate $k$-designs). Analogously, we find that the $k$-th moments of the projected and (generalized) Scrooge ensembles converge in trace distance with increasing system size [Fig. 4(b) and Fig. 5(b)].

III.3 Ensemble entropy

The central claim of our work is that natural many-body dynamics generates ensembles of states with maximum entropy. The entropy in question, the ensemble entropy, differs from the conventional von Neumann entropy $S_{\rm vN}(\rho^{(1)}) = -\mathrm{Tr}[\rho^{(1)}\log\rho^{(1)}]$. The latter is not suitable as an entropy of an ensemble of states, since it depends only on the first moment $\rho^{(1)}$, and there are many ensembles of states which share the same average state $\rho^{(1)}$. Instead, our ensemble entropy is the Shannon entropy of the distribution of states over Hilbert space. For continuous distributions, this entropy must be defined relative to a reference distribution.

Definition 2.

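The ensemble entropy is the negative of the Kullback-Leibler (KL) divergence between $P$ and the reference measure. For normalized ensembles, the reference distribution is the Haar measure [Eq. (2)]

$$S_{\rm ens}(\mathcal{E}) = -D_{\rm KL}(P\,\|\,P_{\rm Haar}) = -\int d\psi\,\delta\big(\langle\psi|\psi\rangle - 1\big)\,P(\psi)\log\frac{P(\psi)}{P_{\rm Haar}(\psi)}, \tag{18}$$

while for unnormalized ensembles, the reference distribution is the Gaussian measure

$$\tilde S_{\rm ens}(\tilde{\mathcal{E}}) = -D_{\rm KL}(P\,\|\,P_{\rm G}) = -\int d\tilde\psi\,P(\tilde\psi)\log\frac{P(\tilde\psi)}{P_{\rm G}(\tilde\psi)}. \tag{19}$$

The ensemble entropy is non-positive, and vanishes only when the two distributions are equal. See Refs. [53, 54] for similar attempts to quantify the entropy of state ensembles.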

Connection to von Neumann entropy — While the ensemble entropy may seem an unconventional notion of entropy, it can in fact be related to the von Neumann entropy of higher moments of the ensemble. For finite ensembles $\mathcal{E} = \{p_j, |\psi_j\rangle\}$, the quantity

$$S^{(k)} = -\mathrm{Tr}\left[\rho^{(k)}\log\rho^{(k)}\right] \tag{20}$$

converges, as $k\to\infty$, to the Shannon entropy $H(\{p_j\}) = -\sum_j p_j\log p_j$, which in turn converges to the ensemble entropy (plus a divergent constant) when the discrete ensemble is appropriately selected (see below). This follows from the fact that with increasing number of copies $k$, two distinct states become increasingly distinguishable (orthogonal), since their overlap $|\langle\psi_i|\psi_j\rangle|^k$ quickly vanishes when $|\langle\psi_i|\psi_j\rangle| < 1$. Therefore, for any discrete ensemble, the quantities $p_j$ and $|\psi_j\rangle^{\otimes k}$ converge to the eigenvalues and eigenvectors of $\rho^{(k)}$, respectively. A similar argument can be made for continuous distributions, and we obtain $S^{(k)} \to S_{\rm ens} + c_k$, with a constant $c_k$ that diverges as $k\to\infty$.
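The convergence of $S^{(k)}$ to the Shannon entropy can be checked on a small example. In this sketch (NumPy assumed; the three qubit states $|0\rangle$, $|+\rangle$, $|{+i}\rangle$ and their weights are arbitrary illustrative choices), we use the fact that the nonzero spectrum of $\rho^{(k)} = \sum_j p_j(|\psi_j\rangle\langle\psi_j|)^{\otimes k}$ equals that of the Gram matrix $G_{ij} = \sqrt{p_ip_j}\,\langle\psi_i|\psi_j\rangle^k$, so no $2^k\times 2^k$ matrix is ever built.

```python
import numpy as np


def moment_entropy_bits(states, probs, k):
    """von Neumann entropy (bits) of rho^(k) = sum_j p_j (|psi_j><psi_j|)^{⊗k},
    computed from the Gram matrix G_ij = sqrt(p_i p_j) <psi_i|psi_j>^k,
    which shares the nonzero spectrum of rho^(k)."""
    p = np.asarray(probs, dtype=float)
    psi = np.asarray(states, dtype=complex)
    overlaps = psi.conj() @ psi.T                 # overlaps[i, j] = <psi_i|psi_j>
    gram = np.sqrt(np.outer(p, p)) * overlaps**k
    lam = np.linalg.eigvalsh(gram)
    lam = lam[lam > 1e-15]                        # drop numerical zeros
    return float(-(lam * np.log2(lam)).sum())


s = 1 / np.sqrt(2)
states = [[1, 0], [s, s], [s, 1j * s]]            # |0>, |+>, |+i>
probs = [0.5, 0.3, 0.2]
shannon = float(-(np.array(probs) * np.log2(probs)).sum())

for k in (1, 2, 5, 60):
    print(k, moment_entropy_bits(states, probs, k), shannon)
```

At $k = 1$ the entropy is capped at one bit (a single qubit), while for large $k$ the copies become effectively orthogonal and $S^{(k)}$ reaches $H(\{p_j\}) \approx 1.49$ bits.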

Ensemble entropy and information compression — The ensemble entropy has a simple operational meaning: it quantifies the minimum number of bits required to store the states in the ensemble in classical memory, up to a fixed precision $\epsilon$. If storing a single such state requires $b$ classical bits, we find that storing a large number $N$ of states drawn from the ensemble requires $N(b + S_{\rm ens})$ classical bits (note that $S_{\rm ens} \le 0$), as illustrated in Fig. 2(a). Our maximum entropy principle indicates that the required storage for many-body states generated by time evolution is maximal.

To see this, note that storing states up to accuracy $\epsilon$ amounts to partitioning the Hilbert space with a uniform $\epsilon$-net, i.e. a discrete set of points which are a distance $\epsilon$ apart [3]. To store the states in the ensemble, each state in the continuous Hilbert space is assigned to one of these discrete points. Storing these states thus amounts to storing these labels, which may either be stored directly or be compressed according to how often each label appears. In the former case, each label requires $b = \log_2 M$ bits, if there are $M$ points in the net. In the latter, the optimal compression rate is $b - D_{\rm KL}(q\,\|\,u)$ bits per label, where $D_{\rm KL}(q\,\|\,u)$ is the relative entropy between the discrete label probabilities $q$ on this $\epsilon$-net and the uniform distribution $u$ [3]. In the limit of small $\epsilon$, the discrete and continuous distributions converge; therefore the amount of compression achieved per state equals $-S_{\rm ens}$, and the overall number of bits required to store $N$ states is $N(b + S_{\rm ens})$.
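The compression argument can be illustrated with a one-dimensional toy model. In the sketch below (NumPy assumed), a hypothetical density $P(\theta) \propto 1 + 0.8\cos\theta$ on a circle stands in for a distribution over Hilbert space, and the uniform distribution on the circle plays the role of the Haar measure. Cutting the circle into $M = 2^b$ equal cells (the analogue of the $\epsilon$-net), the optimally compressed label costs the Shannon entropy of the cell probabilities, and the savings $b - H(q)$ approach the continuous KL divergence, i.e. $-S_{\rm ens}$, as the cells shrink.

```python
import numpy as np


def density(theta, a=0.8):
    """Hypothetical toy density on the circle: P(theta) = (1 + a cos theta) / (2 pi)."""
    return (1 + a * np.cos(theta)) / (2 * np.pi)


def bits_per_label(n_cells, sub=200):
    """Shannon entropy (bits) of the cell labels when [0, 2 pi) is cut into
    n_cells equal cells: the optimal compressed size per stored sample."""
    width = 2 * np.pi / n_cells
    offsets = (np.arange(sub) + 0.5) * width / sub            # midpoints inside a cell
    grid = np.arange(n_cells)[:, None] * width + offsets[None, :]
    q = density(grid).mean(axis=1) * width                    # probability of each cell
    q /= q.sum()
    return float(-(q * np.log2(q)).sum())


def kl_to_uniform_bits(n=200000):
    """Continuous KL divergence D(P || uniform) in bits, by the midpoint rule."""
    theta = (np.arange(n) + 0.5) * 2 * np.pi / n
    ratio = 2 * np.pi * density(theta)                        # P(theta) / (1 / 2 pi)
    return float(np.mean(ratio * np.log2(ratio)))


kl = kl_to_uniform_bits()
savings = {b: b - bits_per_label(2**b) for b in (4, 6, 10)}   # bits saved per sample
print(savings, kl)
```

Storing $N$ samples then costs about $N(b - D_{\rm KL}) = N(b + S_{\rm ens})$ bits in this toy setting.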

Finally, we note that this task differs from the task of storing an ensemble of states in quantum memory, i.e. compressing the states into a smaller number of qubits. This can be done by Schumacher compression [82], with a compression rate given by the von Neumann entropy of the first moment, $S_{\rm vN}(\rho^{(1)})$. In contrast, the task of classical approximate storage depends on all moments of the ensemble.

III.4 Mutual information

In addition to the ensemble entropy, we study another information-theoretic property: the mutual information, which quantifies the information-theoretic correlations between two variables. We find that not only is the ensemble entropy maximized in our ensembles, the mutual information is also minimized. These two quantities are a priori unrelated, and lead to distinct operational consequences.

The mutual information between random variables $X$ and $Y$ is defined as

$$I(X;Y) = H(X) - H(X|Y) = \sum_{x,y} p(x,y)\log_2\frac{p(x,y)}{p(x)\,p(y)}. \tag{21}$$

This can be interpreted as the information gain (reduction in entropy) about the variable $X$ from measurements of the variable $Y$. In this work, we take the logarithm to base 2, which quantifies the mutual information in units of bits.

The mutual information is relevant in several information-theoretic tasks, including quantifying the maximum rate of information transmission through a noisy classical channel [79], where the variables $X$ and $Y$ are the channel input and output. Below, we focus on the significance of the mutual information for a quantum communication task.
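For concreteness, the following sketch (NumPy assumed; the function name is hypothetical) evaluates the mutual information directly from a joint probability table, and checks it on a binary symmetric channel with a uniform input bit and flip probability $f$, for which the textbook result is $1 - h_2(f)$ bits, with $h_2$ the binary entropy.

```python
import numpy as np


def mutual_information_bits(joint):
    """I(X;Y) in bits from a joint probability table joint[x, y]."""
    joint = np.asarray(joint, dtype=float)
    px = joint.sum(axis=1, keepdims=True)      # marginal of X, shape (n, 1)
    py = joint.sum(axis=0, keepdims=True)      # marginal of Y, shape (1, m)
    mask = joint > 0                           # convention: 0 log 0 = 0
    ratio = joint[mask] / (px * py)[mask]
    return float((joint[mask] * np.log2(ratio)).sum())


# Binary symmetric channel with flip probability f and a uniform input bit.
f = 0.1
bsc = np.array([[0.5 * (1 - f), 0.5 * f], [0.5 * f, 0.5 * (1 - f)]])
h2 = -(f * np.log2(f) + (1 - f) * np.log2(1 - f))       # binary entropy of f

print(mutual_information_bits(bsc), 1 - h2)
print(mutual_information_bits(np.full((2, 2), 0.25)))   # independent bits: 0
print(mutual_information_bits(np.eye(2) / 2))           # perfectly correlated: 1 bit
```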

Holevo studied the use of a noiseless quantum channel to transmit classical information [56]. Given an ensemble of (possibly mixed) states $\{p_j, \rho_j\}$, Holevo considered a task where one party (Alice) transmits a classical message to another (Bob) by sending a sequence of states $\rho_{j_1}, \rho_{j_2}, \dots$, with each symbol $j$ occurring with probability $p_j$. Bob decodes this message using measurements on the received states. When the quantum states are not orthogonal, the symbols cannot be unambiguously determined; the maximum classical transmission rate is therefore given by the accessible information

$$I_{\rm acc}(\mathcal{E}) = \max_{\{|b\rangle\}} I(J;B), \tag{22}$$

the mutual information between the transmitted label $J$ and the measurement outcome $B$, maximized over complete measurement bases $\{|b\rangle\}$.

Holevo provided an upper bound for the accessible information: the Holevo-$\chi$ quantity [56]. For an ensemble of pure states, the Holevo-$\chi$ quantity equals the von Neumann entropy $S_{\rm vN}(\rho^{(1)})$. For a given first moment $\rho^{(1)}$, the Holevo bound is saturated when the ensemble of states is formed from the eigenbasis of $\rho^{(1)}$, i.e. $\mathcal{E} = \{\lambda_i, |\lambda_i\rangle\}$, where $\rho^{(1)} = \sum_i \lambda_i|\lambda_i\rangle\langle\lambda_i|$.

Jozsa, Robb, and Wootters [79] subsequently established a lower bound for the accessible information, dubbed the subentropy $Q(\rho^{(1)})$. That is, for any ensemble of pure states with a given first moment $\rho^{(1)}$, the accessible information is bounded by

$$Q(\rho^{(1)}) \le I_{\rm acc}(\mathcal{E}) \le S_{\rm vN}(\rho^{(1)}). \tag{23}$$

Like the von Neumann entropy, the subentropy depends only on the first moment $\rho^{(1)}$. For any ensemble $\mathcal{E}$, the subentropy is defined as the mutual information averaged over all complete projective measurement bases $\{|b\rangle\}$. This is equivalent to averaging over unitaries which rotate the measurement basis, and yields the expression [79, 89]

$$Q(\rho) = -\sum_{i=1}^{D}\frac{\lambda_i^{D}}{\prod_{j\neq i}(\lambda_i - \lambda_j)}\log_2\lambda_i, \tag{24}$$

where $\lambda_i$ are the eigenvalues of $\rho$ (assumed distinct; degenerate spectra follow by taking limits). More recently, the subentropy has received attention in studies of the dynamics of information in many-body systems [61].
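The subentropy formula is easy to evaluate numerically. The sketch below (NumPy assumed; function names hypothetical) requires strictly positive, distinct eigenvalues, as the formula does, and checks the ordering $0 \le Q \le S_{\rm vN}$ on an example spectrum. It also checks the nearly maximally mixed qubit against the closed form $Q(I/D) = \log_2 D - \log_2(e)\sum_{j=2}^{D} 1/j$ due to Jozsa, Robb, and Wootters, which for $D = 2$ gives about $0.279$ bits.

```python
import numpy as np


def subentropy_bits(eigenvalues):
    """Subentropy Q(rho); assumes strictly positive, distinct eigenvalues."""
    lam = np.asarray(eigenvalues, dtype=float)
    d = len(lam)
    q = 0.0
    for i in range(d):
        denom = np.prod(lam[i] - np.delete(lam, i))
        q -= lam[i] ** d / denom * np.log2(lam[i])
    return float(q)


def von_neumann_bits(eigenvalues):
    """von Neumann entropy in bits: the Holevo upper bound for pure-state ensembles."""
    lam = np.asarray(eigenvalues, dtype=float)
    lam = lam[lam > 0]
    return float(-(lam * np.log2(lam)).sum())


spectrum = [0.5, 0.3, 0.2]
print(subentropy_bits(spectrum), von_neumann_bits(spectrum))   # Q <= I_acc <= S

# Nearly maximally mixed qubit versus the closed form 1 - log2(e) / 2.
eps = 1e-6
print(subentropy_bits([0.5 + eps, 0.5 - eps]), 1 - np.log2(np.e) / 2)
```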

Jozsa, Robb, and Wootters established that the Scrooge ensemble saturates this lower bound (Section V, [79]). Scrooge ensembles are therefore the ensembles with minimum accessible information (that is, the most information-“stingy” ensembles), hence their name.

Since (generalized) Scrooge ensembles describe projected ensembles, our projected ensembles have minimal accessible information. That is, in natural many-body systems, it is maximally hard to infer the measurement outcome on one subsystem from measurements on the other.

III.5 Porter-Thomas distribution

Finally, we turn to a measurable signature of our maximum entropy principle, the Porter-Thomas (PT) distribution. Originally studied in nuclear physics [83], the PT distribution has received renewed attention due to its presence in Haar-random many-body states, approximated by the output of deep random quantum circuits [84].

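Concretely, a positive random variable $p$ follows the Porter-Thomas distribution with mean $\mu$ [i.e. $\mathbb{E}[p] = \mu$] if its probability density function is

$$P_{\rm PT}(p) = \frac{1}{\mu}\,e^{-p/\mu}. \tag{25}$$

In particular, the overlap $p = |\langle z|\psi\rangle|^2$ of states $|\psi\rangle$ drawn from the Haar random distribution with any fixed state $|z\rangle$ approximately follows a PT distribution with $\mu = 1/D$ [74]:

$$P(p) = (D-1)(1-p)^{D-2} \approx D\,e^{-Dp}, \tag{26}$$

where the approximation holds for large $D$.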
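A short Monte Carlo sketch (NumPy assumed; $D = 32$ and the sample size are arbitrary choices) illustrates the Haar PT distribution. Haar states are sampled by normalizing complex Gaussian vectors, and the rescaled overlaps $x = D\,|\langle 0|\psi\rangle|^2$ are compared with the exact Haar moments $\mathbb{E}[x^k] = D^k\,k!/[D(D+1)\cdots(D+k-1)]$, which follow from the Haar moment formula with $O = (|0\rangle\langle 0|)^{\otimes k}$ and approach the PT value $k!$ for large $D$.

```python
import math

import numpy as np

rng = np.random.default_rng(1)
d, n_samples = 32, 50000

# Haar-random states, sampled by normalizing complex Gaussian vectors.
v = rng.normal(size=(n_samples, d)) + 1j * rng.normal(size=(n_samples, d))
v /= np.linalg.norm(v, axis=1, keepdims=True)

# Overlap with the fixed state |z> = |0>, rescaled by D so the PT mean is 1.
x = d * np.abs(v[:, 0]) ** 2

moments = {}
for k in (1, 2, 3):
    # Exact Haar value E[(D p)^k] = D^k k! / (D (D+1) ... (D+k-1)); PT predicts k!.
    exact = d**k * math.factorial(k) / math.prod(range(d, d + k))
    moments[k] = (float(np.mean(x**k)), exact)
    print(k, moments[k], math.factorial(k))
```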

Notably, this is a statement about the probability distribution of probabilities $p$. While relatively unknown in physical settings, this object has received attention in the statistics literature under several names, including the fingerprint and the histogram of the histogram [90, 91]. The fingerprint is sufficient to describe the properties of a distribution that do not depend on its labels, most notably its Shannon entropy.

The probability-of-probabilities can be studied in modern quantum experiments. For example, the PT distribution expected from Haar-random states has been predicted and subsequently experimentally verified in deep random quantum circuits [84, 74] and many-body states at infinite temperature [29]. Even though the ensembles we study in this work are not the Haar ensemble, we find that the maximum entropy principle leads to the same PT distribution, when observables are appropriately normalized. In companion work, we experimentally and theoretically study this object in detail [41].

IV Temporal ensembles

The most natural setting of quantum dynamics is time evolution under a time-independent Hamiltonian. It is an important endeavor to understand the phenomena that can arise in this setting. Despite the fact that it is simple to state, relatively few tools are available to analyze the dynamics under an interacting many-body Hamiltonian, and one must often turn to more analytically tractable models of quantum dynamics such as random quantum circuits [64].

In this section, we show universal statistical properties of the temporal ensemble, the time trace of a state evolving under Hamiltonian dynamics. Intuitively, Hamiltonian dynamics conserves energy, so its trajectory in Hilbert space is constrained. In fact, the Schrödinger equation conserves the population $p_E = |\langle E|\psi(t)\rangle|^2$ of each energy eigenstate $|E\rangle$, where $|\psi(t)\rangle = e^{-iHt}|\psi_0\rangle$ for an initial state $|\psi_0\rangle$. This imposes a total of $D$ (the Hilbert space dimension) constraints, and the only remaining degrees of freedom are the phases $e^{i\phi_E}$ of the amplitudes $\langle E|\psi(t)\rangle$. One might conjecture that these phases are uniformly and independently random, satisfying the maximum entropy principle.

Indeed, this expectation is correct: the temporal ensemble is (statistically) described by the random phase ensemble, the ensemble of states with fixed magnitudes and independent, uniformly random phases in the energy eigenbasis. This has been a folklore result in several works, see e.g. Refs. [72, 70, 46]; however, to our knowledge it has not been presented with the same level of rigor as in this work. Here, we rigorously state our result with a necessary and sufficient condition, the $k$-th no-resonance conditions, and discuss its corollaries.

The first moments of the temporal ensemble are heavily used in the quantum thermalization literature. Seminal works in quantum chaos and thermalization introduced the diagonal ensemble to characterize observables at thermal equilibrium [51, 52]. However, this only captures the average values of observables and does not address higher moments such as their variance over time. Previous work either bounds [13, 17] or predicts the dependence of fluctuations on the total Hilbert space dimension [69, 92, 93], but here we provide approximate formulae for all moments. We apply our result on the temporal ensemble to a novel class of observables, global projective measurements, discovering universal behavior in their higher moments and information-theoretic properties.

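We first formalize the above intuitive expectation and present a sketch of our proof. We define the temporal ensemble as the set of states explored by a fixed initial state $|\psi_0\rangle$ time-evolving under a Hamiltonian $H$ over a time interval of length $T$,

$$\mathcal{E}_T = \left\{ |\psi(t)\rangle = e^{-iHt}|\psi_0\rangle \,:\, t \in [0, T] \right\}, \tag{27}$$

with $t$ uniformly distributed over the interval. When $T \to \infty$, we equate this to the random phase ensemble, the distribution of states with fixed magnitudes $|\langle E|\psi\rangle| = \sqrt{p_E}$ in a given basis $\{|E\rangle\}$, with i.i.d. uniformly random phases $\phi_E \in [0, 2\pi)$.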

This ensemble was studied in Ref. [46], which characterizes properties such as the entanglement of its typical states. Ref. [73] develops a graphical calculus to perform the $k$-copy averages we discuss in this work, although we will not need its full generality here (see also Refs. [94, 95]).

Our result, which equates the temporal ensemble (in the limit ) with the random phase ensemble, relies on the following assumption:

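Definition 3 ($k$-th no-resonance condition). A Hamiltonian $H$ with eigenvalues $\{E_i\}$ satisfies the $k$-th no-resonance condition if, for all index lists $(i_1, \dots, i_k)$ and $(j_1, \dots, j_k)$,

$$E_{i_1} + \cdots + E_{i_k} = E_{j_1} + \cdots + E_{j_k} \tag{28}$$

implies $(j_1, \dots, j_k) = (i_{\pi(1)}, \dots, i_{\pi(k)})$ for some $\pi \in S_k$.

In other words, the two sets of indices must be related by some permutation $\pi$. It is easy to see that the $k$-th no-resonance condition implies the $k'$-th no-resonance conditions for all $k' < k$. The no-resonance conditions are considered generically satisfied and are used in works such as Refs. [69, 17, 72, 13]: it is believed that if the condition does not hold in a given ergodic system, any small perturbation will generically break any $k$-th resonances [96]. We note recent work [98] that studies near-violations of the $k$-th no-resonance condition, concluding that they are generically small and bounding their effects on certain quantities.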

For $k = 1$, the no-resonance condition simply states that the energy eigenspectrum has no degeneracies. However, this may not be satisfied in generic Hamiltonians: the presence of non-Abelian symmetries ensures that there are degenerate multiplets of states. Strictly speaking, this violates all no-resonance conditions. However, for the purposes of the temporal ensemble, we may disregard these degeneracies: the projection of the initial state onto each degenerate eigenspace singles out one state in that eigenspace, and the dynamics never leaves the span of these states. Our conclusions below hold as long as the spectrum of $H$, with degeneracies removed, satisfies the $k$-th no-resonance conditions [94]. We call this the no-resonance condition modulo degeneracies.

With the above definitions, we are ready to state our result equating the temporal and random phase ensembles.

Theorem 1.

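Given an initial state $|\psi_0\rangle$ and a Hamiltonian $H$, the infinite-time temporal ensemble is equal to the random phase ensemble:

$$\lim_{T\to\infty}\mathcal{E}_T = \mathcal{E}_{\rm RP} = \left\{ \sum_E \sqrt{p_E}\,e^{i\phi_E}|E\rangle \,:\, \phi_E \overset{\text{i.i.d.}}{\sim} \mathrm{Unif}[0, 2\pi) \right\}, \tag{29}$$

with fixed energy populations $p_E = |\langle E|\psi_0\rangle|^2$, if $H$ satisfies all $k$-th no-resonance conditions modulo degeneracies. The converse holds as long as $|\psi_0\rangle$ has non-zero population on the eigenspace of every distinct eigenvalue $E$, i.e. $p_E > 0$, which holds for almost every $|\psi_0\rangle$. [Footnote 6: If there is a symmetry and $|\psi_0\rangle$ is supported on every eigenstate of a single symmetry sector, and only there, we may conclude that the $k$-th no-resonance conditions modulo degeneracies hold for the eigenvalues in that sector.]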

Proof sketch. Here we sketch the idea of the proof, deferring its details to Appendix A. The general idea is to evaluate the $k$-th moment $\rho^{(k)}_T$ of the temporal ensemble and show that, as $T\to\infty$, it matches the $k$-th moment of the random phase ensemble. The evaluation of $\rho^{(k)}_T$ is possible for an infinite interval based on the no-resonance condition Eq. (28), and the corresponding moments of the random phase ensemble can also be explicitly evaluated. We note that having matching moments does not always imply that two ensembles are equal to one another. Under certain conditions, such as the (complex) Carleman condition [99], this implication does hold. The evaluated moments satisfy the complex Carleman condition, completing our proof. The converse direction can be easily shown by noting that if the $k$-th no-resonance condition is not satisfied, the infinite-time moment will have additional terms that are not present in the random phase ensemble (as long as all populations $p_E$ are non-zero), and hence the temporal and random phase ensembles will differ. ∎
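A small numerical check of Theorem 1 can be made on the statistics of a single outcome probability. The sketch below (NumPy assumed) uses a hypothetical random Hermitian matrix of dimension 6, whose spectrum generically satisfies the no-resonance conditions, and compares long-time averages of $p_z(t) = |\langle z|\psi(t)\rangle|^2$ with the exact random phase values. Writing $c_E = \langle z|E\rangle\langle E|\psi_0\rangle$, random phases give $\mathbb{E}[p_z] = \sum_E |c_E|^2$ and $\mathbb{E}[p_z^2] = 2\big(\sum_E |c_E|^2\big)^2 - \sum_E |c_E|^4$. Random times in a long window stand in for the $T\to\infty$ limit, so agreement is only up to finite-time and sampling errors.

```python
import numpy as np

rng = np.random.default_rng(2)
d = 6

# Hypothetical GUE-like Hamiltonian; generic spectra satisfy the no-resonance conditions.
a = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
h = (a + a.conj().T) / 2
energies, vecs = np.linalg.eigh(h)

psi0 = rng.normal(size=d) + 1j * rng.normal(size=d)
psi0 /= np.linalg.norm(psi0)

# p_z(t) = |sum_E c_E exp(-i E t)|^2 with c_E = <z|E><E|psi0> and |z> = |0>.
c = vecs[0, :] * (vecs.conj().T @ psi0)

# Temporal ensemble: sample times uniformly from a long window [0, T].
times = rng.uniform(0.0, 1e6, size=200000)
p_t = np.abs(np.exp(-1j * np.outer(times, energies)) @ c) ** 2

# Random phase ensemble, evaluated exactly: E[p] and E[p^2].
m1 = np.sum(np.abs(c) ** 2)
m2 = 2 * m1**2 - np.sum(np.abs(c) ** 4)

print(p_t.mean(), m1)
print((p_t**2).mean(), m2)
```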

Ensemble entropy — The ensemble entropy diverges (to $-\infty$) for any distribution of states with fixed magnitudes, since the constrained space is a lower-dimensional manifold of the full Hilbert space. This can be intuitively understood from the following property of the KL divergence $D_{\rm KL}(P\,\|\,P_{\rm Haar})$. Assume we are given samples from $P$ and promised that the underlying ensemble is either $P$ or the Haar ensemble. The KL divergence quantifies how many samples one needs to correctly conclude that the ensemble is $P$ and not the Haar ensemble [3]. In our case, we can rule out the Haar ensemble even with a single wavefunction $|\psi\rangle$ from the temporal ensemble. This is because the state has the fixed amplitudes $|\langle E|\psi\rangle|^2 = p_E$, an event that occurs with probability zero in the Haar ensemble. Therefore, given complete knowledge of the state $|\psi\rangle$, the constraints $\{p_E\}$, and the promise that the underlying ensemble is either $P$ or the Haar ensemble, this discrimination task can be completed with a single sample, and hence the KL divergence is infinite. While artificial in practice, this operational meaning of the KL divergence accounts for its divergence in the temporal ensemble.

However, the maximum entropy principle still holds in a certain sense: we can separate the entropy into contributions from the phase and magnitude degrees of freedom; the contribution from the phase is maximized by independent, uniformly distributed phases (Appendix C). We find a constant, non-divergent contribution

| (30) | ||||

We interpret this expression as proportional to the volume of the constrained space. Remarkably, this expression will reappear in the ensemble entropy of the projected ensemble (Section V.7).

IV.1 Asymptotic product form

In this work, we shall be interested in the moments of the random phase ensemble. Here, we provide a simplified, approximate form of the $k$-th moments, which enables their further analysis.

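Theorem 2 (Asymptotic product form). The $k$-th moment of the random phase ensemble with populations $\{p_E\}$ takes, up to correction terms, the product form

$$\rho^{(k)}_{\infty} \approx \rho_d^{\otimes k}\sum_{\pi\in S_k}\hat\pi, \tag{31}$$

where $\rho_d = \sum_E p_E|E\rangle\langle E|$ is the first moment (the diagonal ensemble).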

Proof sketch: We provide the full proof of Theorem 2 in Appendix D and only state the key idea, which is the following: the $k$-th moment of the temporal ensemble can be written as a sum over all lists $\vec{E} = (E_1, \dots, E_k)$ of labels of energy eigenstates and over all distinct permutations of $\vec{E}$ (Theorem 1). Crucially, $\vec{E}$ is allowed to have repeated elements. Only if no elements are repeated will the number of distinct permutations of $\vec{E}$ be $k!$. Using the same counting also for lists with repeated elements, the expression for the moments simplifies considerably:

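$$\rho^{(k)}_{\infty} \approx \sum_{E_1,\dots,E_k}\,\sum_{\pi\in S_k} p_{E_1}\cdots p_{E_k}\,|E_1,\dots,E_k\rangle\langle E_{\pi(1)},\dots,E_{\pi(k)}| \tag{32}$$

$$= \rho_d^{\otimes k}\sum_{\pi\in S_k}\hat\pi, \qquad \rho_d = \sum_E p_E|E\rangle\langle E|. \tag{33}$$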

Eq. (32) is not exact because it over-counts permutations when $\vec{E}$ contains repeated elements: there are additional correction terms to compensate for this over-counting. A careful analysis bounds the trace norm of all correction terms, with a $k$-dependent prefactor (Appendix D). ∎
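For $k = 2$ the over-counting can be made completely explicit. Averaging over random phases, $\langle ab|\rho^{(2)}|cd\rangle = p_ap_b$ whenever $(c, d)$ is a rearrangement of $(a, b)$, whereas the product form counts the two rearrangements separately even when $a = b$. The correction is therefore $-\sum_E p_E^2|EE\rangle\langle EE|$, with trace norm $\sum_E p_E^2 = \mathrm{Tr}[\rho_d^2]$. The sketch below (NumPy assumed; the thermal-like populations are a hypothetical choice) verifies this and shows the correction shrinking as the number of populated levels grows.

```python
import numpy as np


def swap_operator(d):
    """SWAP on C^d ⊗ C^d, with |a b> stored at index a*d + b (np.kron ordering)."""
    s = np.zeros((d * d, d * d))
    for a in range(d):
        for b in range(d):
            s[b * d + a, a * d + b] = 1.0
    return s


def random_phase_second_moment(p):
    """Exact rho^(2) of the random phase ensemble in the energy basis:
    <ab|rho|cd> = p_a p_b if (c, d) is a rearrangement of (a, b), else 0."""
    d = len(p)
    rho = np.zeros((d * d, d * d))
    for a in range(d):
        for b in range(d):
            rho[a * d + b, a * d + b] += p[a] * p[b]
            if a != b:
                rho[a * d + b, b * d + a] += p[a] * p[b]
    return rho


def product_form(p):
    """Asymptotic product form for k = 2: rho_d^{⊗2} (1 + SWAP)."""
    d = len(p)
    rho_d = np.diag(p)
    return np.kron(rho_d, rho_d) @ (np.eye(d * d) + swap_operator(d))


results = []
for d in (4, 8, 16):
    # Hypothetical thermal-like populations p_E ∝ exp(-E / 2) on an evenly spaced spectrum.
    p = np.exp(-np.linspace(0.0, 4.0, d) / 2)
    p /= p.sum()
    exact = random_phase_second_moment(p)
    correction = product_form(p) - exact
    trace_norm = np.abs(np.linalg.eigvalsh(correction)).sum()
    results.append((trace_norm, np.sum(p**2), np.trace(exact)))
    print(d, trace_norm, np.sum(p**2))
```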

Theorem 2 explicitly demonstrates how the higher order moments of the temporal ensemble depend on the first moment . Specifically, the moments of the temporal ensemble have a structural resemblance to the moments of the Haar ensemble [Eq. (16)], up to a correction which is typically exponentially small, formalizing our intuition of maximally entropic ensembles as “distorted” Haar ensembles. This asymptotic behavior will also be common to projected ensembles.

IV.2 Finite-time temporal ensembles

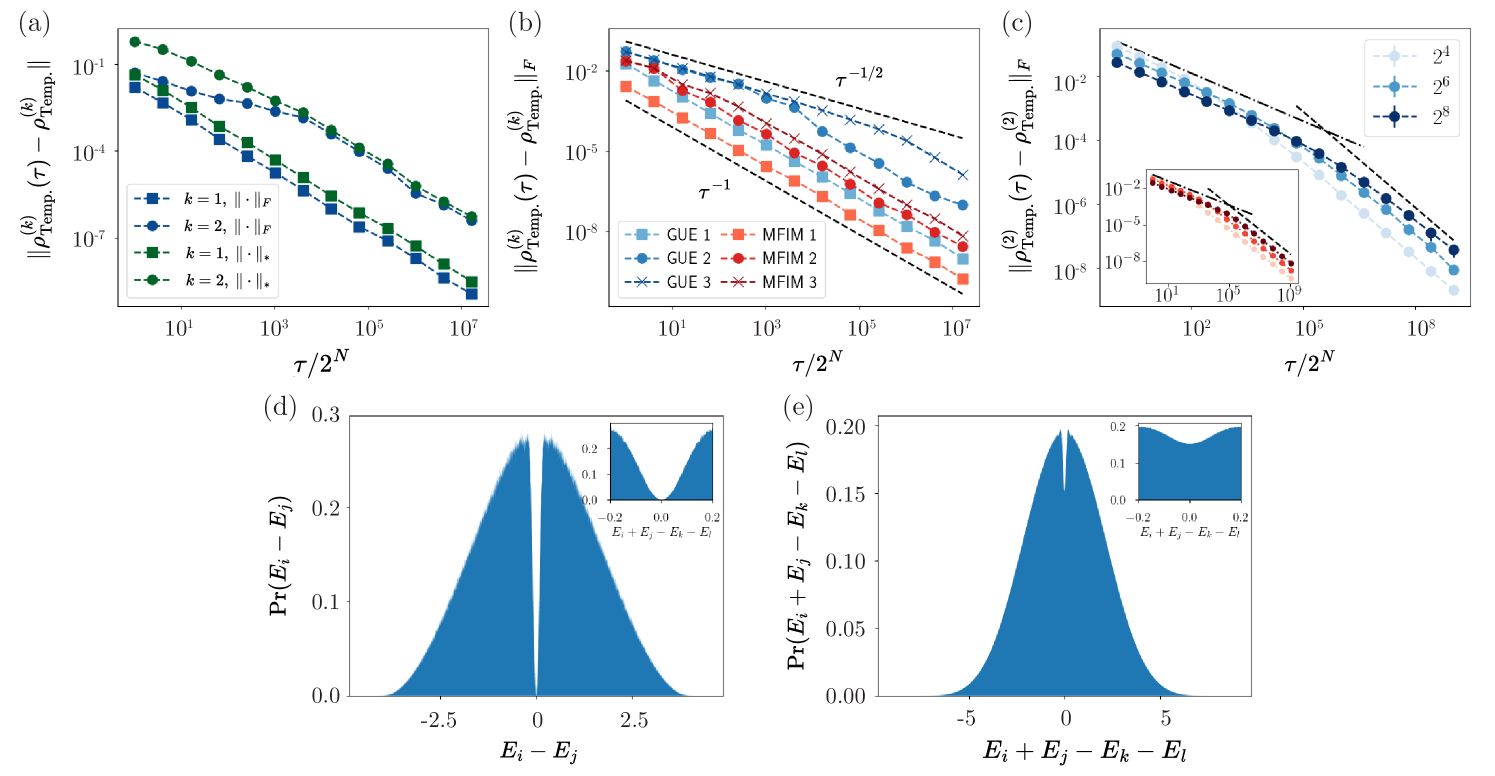

Theorem 1 concerns temporal ensembles over infinite time intervals. Here, we investigate how temporal ensembles over finite time intervals converge to their infinite-interval values. We analytically study the convergence rate in random-matrix models and present numerical evidence that the same convergence rate also holds for realistic Hamiltonians. Specifically, we study the convergence of the finite-time $k$-th moment $\rho^{(k)}_T$ to its infinite-time limit $\rho^{(k)}_\infty$. This has the expression:

| (34) | |||

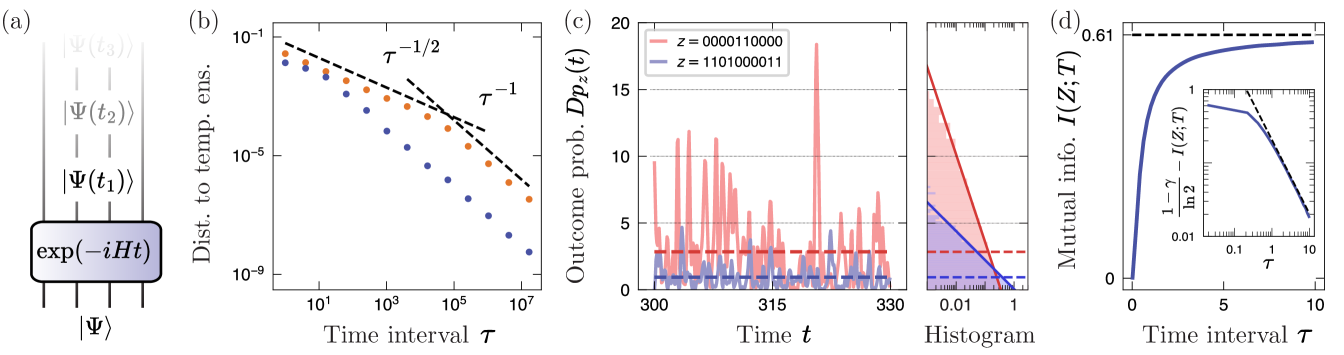

We consider the convergence both in terms of the trace norm and the Frobenius norm, with the latter being analytically easier to study. For the first moment, i.e. $k = 1$, we numerically observe that the distances in Frobenius and trace norm both decay as $1/T$ (see App. F). This holds for a generic chaotic model, the mixed field Ising model (the main model we study in this work, Sec. IV.4), as well as for Hamiltonians sampled at random from the Gaussian Unitary Ensemble (GUE). We attribute this asymptotic scaling to the asymptotic behavior of the function in Eq. (34). In Appendix F, we support these numerical findings by analytical calculations in random matrix theory, showing that the average of the squared Frobenius norm decays as $1/T^2$. This suggests that the average Frobenius norm decays as $1/T$, consistent with our numerical observations.
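The origin of the $1/T$ decay for $k = 1$ can be seen in a few lines. Assuming the averaging window is $[0, T]$ (a convention chosen here for illustration), the off-diagonal entries of $\rho^{(1)}_T - \rho^{(1)}_\infty$ in the energy basis are $c_Ec_{E'}^*\,(1 - e^{-i\omega T})/(i\omega T)$ with $\omega = E - E'$, whose magnitude is at most $2|c_Ec_{E'}|/(|\omega| T)$. The sketch below (NumPy assumed; the GUE sample and initial state are hypothetical) evaluates the exact Frobenius distance and this $1/T$ envelope. It illustrates the envelope only and is not the random-matrix calculation of Appendix F.

```python
import numpy as np

rng = np.random.default_rng(3)
d = 32
a = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
h = (a + a.conj().T) / (2 * np.sqrt(d))          # hypothetical GUE sample, O(1) bandwidth
energies, vecs = np.linalg.eigh(h)

psi0 = np.zeros(d, dtype=complex)
psi0[0] = 1.0
p = np.abs(vecs.conj().T @ psi0) ** 2            # populations p_E = |<E|psi0>|^2

omega = energies[:, None] - energies[None, :]    # E - E'
off = ~np.eye(d, dtype=bool)
weights = np.outer(p, p)[off]                    # |c_E c_E'^*|^2 = p_E p_E'


def frobenius_distance(T):
    """||rho_T - rho_inf||_F for rho_T averaged uniformly over t in [0, T] (assumed window)."""
    x = omega[off] * T
    f = (1 - np.exp(-1j * x)) / (1j * x)         # (1/T) * integral_0^T exp(-i omega t) dt
    return float(np.sqrt(np.sum(weights * np.abs(f) ** 2)))


def envelope(T):
    """Upper bound (2 / T) * sqrt(sum_{E != E'} p_E p_E' / omega^2), from |sin| <= 1."""
    return float(2 / T * np.sqrt(np.sum(weights / omega[off] ** 2)))


Ts = [1e1, 1e2, 1e3, 1e4]
dists = [frobenius_distance(T) for T in Ts]
bounds = [envelope(T) for T in Ts]
for T, dist, bound in zip(Ts, dists, bounds):
    print(T, dist, bound)
```

The envelope depends on the smallest gaps through $\sum p_Ep_{E'}/\omega^2$, which is why the asymptotic $1/T$ regime only becomes visible once $T$ exceeds the inverse of the relevant small gaps.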

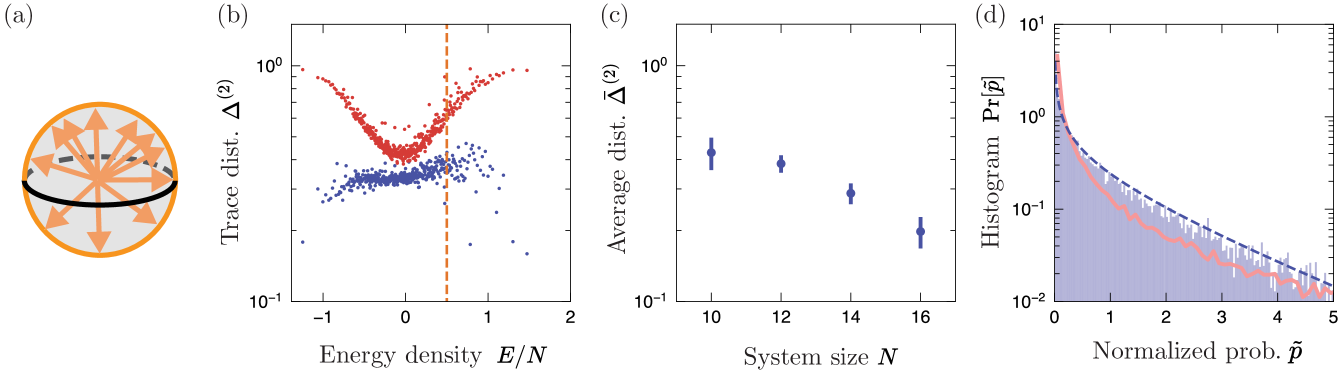

Interestingly, for higher-order moments, $k \ge 2$, we observe different behavior. For the mixed field Ising model as well as individual samples from the GUE, the Frobenius distance decays at intermediate times as $1/\sqrt{T}$ before a crossover time, after which it decays as $1/T$ [Fig. 3(b)]. This crossover happens at times which are exponentially long in system size, which we attribute to the presence of a minimum distance between energy gaps for each individual Hamiltonian. While this minimum distance is exponentially small in system size, it is finite and nonzero for finite-size systems. Only at times much longer than its inverse does the asymptotic behavior become apparent.

Notably, we find that for the first moment, $k = 1$, the GUE ensemble mean closely resembles the behavior of individual instances and decays as $1/T$. In contrast, for higher moments, $k \ge 2$, the behavior of individual samples and the sample mean differ: with an increasing number of samples, the crossover is less pronounced. We relate these findings to statistical properties of the eigenvalues of GUE Hamiltonians. It is well known that the eigenvalues exhibit level repulsion, i.e. the probability of finding two distinct eigenvalues close to each other vanishes as their separation goes to zero. In contrast, as suggested by Ref. [98] and the analytical and numerical results presented in Appendix F, we find that the gaps between eigenvalues exhibit only weak repulsion among themselves, leading to a crossover timescale that grows with the size of the sample.

IV.3 Porter-Thomas distribution as measurable signature of temporal ensembles

An immediate consequence of Theorem 2 is the emergence of the Porter-Thomas distribution (Sec. III.5) in the probability $p_z(t) = |\langle z|\psi(t)\rangle|^2$ of measuring a given outcome $z$, as a function of time. This result was originally presented in Ref. [94]. For clarity, we denote this distribution as $P_z(p)$ [Footnote 7: The subscript $z$ indicates that the measurement outcome is being fixed and the distribution is taken over time.], which can be experimentally estimated by repeated measurements of the state $|\psi(t)\rangle$ at many times $t$.

Unlike local observables $O$, whose expectation values quickly equilibrate in time ($\langle\psi(t)|O|\psi(t)\rangle$ has exponentially small relative fluctuations about its equilibrium value [17]), global observables such as the projector $|z\rangle\langle z|$ never equilibrate. Theorem 3 indicates that their relative fluctuations follow a universal, exponential distribution. We utilized this result in Ref. [94] to propose a protocol for many-body benchmarking in analog quantum simulators. Here, we use the PT distribution as a signature of our maximum entropy principle; it will be a common signature among the temporal ensemble, the projected ensemble, and beyond.

Theorem 3.

Given an initial state $|\psi_0\rangle$, a Hamiltonian $H$ which satisfies the $k$-th no-resonance condition, and a fixed state $|z\rangle$, the distribution $P_z(p)$ of the outcome probability $p_z(t)$ over time is approximately a Porter-Thomas (or exponential) distribution with mean $\bar{p}_z = \langle z|\rho_d|z\rangle$. Specifically, the $k$-th moments satisfy

$$\mathbb{E}_t\left[p_z(t)^k\right] \approx k!\,\bar{p}_z^{\,k}, \tag{35}$$

up to corrections controlled by $\beta$, the inverse effective dimension, which captures the effective size of the Hilbert space explored during quench evolution that is accessible by measurements in the basis containing $|z\rangle$. Our notation associates $\beta$ with the temperature of the initial state, which to a large extent controls the size of the Hilbert space explored.

Proof.

The $k$-th moment of $p_z(t)$ can be obtained from the infinite-time $k$-th moment $\rho^{(k)}_\infty$ of the temporal ensemble, $\mathbb{E}_t[p_z(t)^k] = \mathrm{Tr}\big[(|z\rangle\langle z|)^{\otimes k}\rho^{(k)}_\infty\big]$, which by Theorem 2 gives the desired $k!\,\bar{p}_z^{\,k}$, since every permutation operator $\hat\pi$ acts trivially on $|z\rangle^{\otimes k}$. We conclude that $p_z(t)$ follows a Porter-Thomas distribution [Footnote 8: More accurately, we bound the distance between the $k$-th moments of $P_z(p)$ and those of the Porter-Thomas distribution. We do not currently have a means to directly bound the distance between the distributions themselves, such as the total variation distance.], with a mean value equal to $\bar{p}_z$. This is a simple illustration of how the first moment uniquely determines all moments of the distribution $P_z(p)$. This relation is only approximate because of the correction terms in Theorem 2. We are able to (loosely) bound the effects of these correction terms on the $k$-th moments of $P_z(p)$ in terms of the inverse effective dimension. This is not a straightforward application of the bound provided in Theorem 2, which is too weak for our purposes. We refer the reader to Ref. [94] for a detailed proof, or to Appendix I for a very similar proof. ∎

IV.4 Numerical model

We verify our theoretical predictions with exact numerical simulations of interacting quantum many-body systems. As an illustration, we work with a paradigmatic model of many-body quantum chaos, the one-dimensional mixed-field Ising model (MFIM) with open boundary conditions:

$$H = J\sum_{i=1}^{N-1} Z_i Z_{i+1} + \sum_{i=1}^{N}\left(h_x X_i + h_z Z_i\right), \tag{36}$$

where $X_i$ and $Z_i$ are Pauli matrices on site $i$ of an $N$-site chain. We use the parameter values established in Ref. [100]. Unless otherwise stated, we use this model in this and subsequent sections for our numerical simulations.
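For readers who want to reproduce this kind of simulation on small chains, the following is a minimal dense-matrix sketch. It assumes the standard MFIM form $H = J\sum_i Z_iZ_{i+1} + \sum_i (h_x X_i + h_z Z_i)$ with open boundaries; the helper names are hypothetical, and the couplings are left as arguments rather than filled in with the values of Ref. [100].

```python
import numpy as np

X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])

def site_operator(op, site, n):
    """Embed a 2x2 operator on `site` of an n-qubit chain (site 0 = leftmost)."""
    out = np.array([[1.0]])
    for j in range(n):
        out = np.kron(out, op if j == site else np.eye(2))
    return out

def mfim_hamiltonian(n, J, hx, hz):
    """Dense MFIM Hamiltonian with open boundary conditions (assumed form)."""
    H = np.zeros((2 ** n, 2 ** n))
    for i in range(n - 1):                  # nearest-neighbour ZZ bonds, no wrap-around
        H += J * site_operator(Z, i, n) @ site_operator(Z, i + 1, n)
    for i in range(n):                      # transverse (X) and longitudinal (Z) fields
        H += hx * site_operator(X, i, n) + hz * site_operator(Z, i, n)
    return H
```

For example, `mfim_hamiltonian(4, J=1.0, hx=0.5, hz=0.3)` returns a real symmetric 16×16 matrix whose diagonal entry for $|0000\rangle$ is $3J + 4h_z = 4.2$. Dense storage grows as $4^N$, so larger chains call for sparse matrices.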

For both the projected and the temporal ensembles, we study the time evolution of the initial state

| (37) |

where the parameter is used to tune the state from infinite temperature () to positive or negative temperature. Unless otherwise stated, we present data for , which has energy density , far from the center of the spectrum.

Here, we illustrate the content of Theorem 3 in Fig. 3(c), where we plot the probabilities $p_z(t)$ for two choices of $z$; they exhibit large fluctuations over time about different average values $\bar{p}_z$. The histograms of $p_z(t)$ agree well with the PT distributions even over a relatively short time interval, confirming our analysis.

IV.5 Ergodicity

Theorem 3 is a statement about the distribution of $p_z(t)$ over time $t$, with the outcome $z$ held constant. We can make an analogous statement about the distribution over $z$, holding $t$ constant: at a fixed, late time $t$, the normalized probabilities $p_z(t)/\bar{p}_z$ are approximately distributed according to the PT distribution with mean 1.

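$$\Pr_{z\sim\bar{p}}\!\left[\frac{p_z(t)}{\bar{p}_z}\in[x,\,x+dx]\right]\approx e^{-x}\,dx, \tag{38}$$

where the outcome $z$ is sampled with probability $\bar{p}_z$.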

This was originally derived in Ref. [94] by showing that, for a typical late time $t$, the weighted $k$-th moment satisfies:

$$\sum_z \bar{p}_z\left(\frac{p_z(t)}{\bar{p}_z}\right)^{k}\approx k!. \tag{39}$$

We interpret this equivalence of distributions over time and over outcomes as a kind of “ergodicity,” in the sense of the ergodic theorem in the study of classical dynamical systems [75], which states that in an ergodic system, the average over time is equal to the “spatial” average over configurations. Such an equivalence will be essential to our characterization of projected ensembles in Section VI.
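The ergodicity statement can be probed in the same hypothetical setting as a quick check: at a single late time, the weighted moments $\sum_z \bar{p}_z\,(p_z(t)/\bar{p}_z)^k$ over outcomes $z$ should approach $k!$. This sketch again assumes a GOE stand-in Hamiltonian and a random initial state, and `weighted_outcome_moments` is a hypothetical name. The $k=1$ value equals 1 exactly, since it reduces to the normalization $\sum_z p_z(t) = 1$.

```python
import numpy as np

def weighted_outcome_moments(dim=1024, t=1e4, k_max=3, seed=1):
    """Weighted moments sum_z pbar_z (p_z(t)/pbar_z)^k at one late time t.

    Hypothetical helper; a GOE matrix stands in for H and a random state for |Psi_0>.
    """
    rng = np.random.default_rng(seed)
    a = rng.normal(size=(dim, dim))
    energies, vecs = np.linalg.eigh((a + a.T) / 2)
    psi0 = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    psi0 /= np.linalg.norm(psi0)
    c = vecs.conj().T @ psi0                                      # c_E = <E|Psi_0>
    p_t = np.abs(vecs @ (np.exp(-1j * energies * t) * c)) ** 2    # p_z(t) for all z
    pbar = (np.abs(vecs) ** 2) @ (np.abs(c) ** 2)                 # time-averaged pbar_z
    x = p_t / pbar                                                # normalized probabilities
    return [float(np.sum(pbar * x ** k)) for k in range(1, k_max + 1)]

print(weighted_outcome_moments())   # expected to be near [1, 2, 6] = [1!, 2!, 3!]
```

Because only one time slice is used, the higher moments carry sampling noise of order $1/\sqrt{\text{dim}}$.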

IV.6 Mutual information: building a (bad) “clock”

The temporal ensemble has unique information-theoretic properties. In particular, we find near-universal behavior in the mutual information $I(Z;\mathcal{T})$ between the random variables $Z$ and $\mathcal{T}$, which represent the measurement outcomes $z$ and the evolution times $t$, respectively.

To illustrate the operational meaning of the mutual information, consider the following task. We are given several copies of the time-evolved state $|\Psi(t)\rangle = e^{-iHt}|\Psi_0\rangle$. We know the initial state of the system and its Hamiltonian, but not the duration $t$ of the time evolution, which is assumed to be uniformly distributed over some large interval of length $T$. Given multiple single-copy measurements performed in an optimal basis, how much information about $t$ can we learn?

Before performing a detailed analysis, we can make a prediction based on our maximum entropy principle: since the temporal ensemble is maximally entropic, we expect the amount of information to be small in whichever basis the measurements are performed. Indeed, our results imply that (near) optimality is achieved by any generic measurement basis, including the conventional configuration basis $\{|z\rangle\}$. Furthermore, the information gain per measurement takes the universal value of approximately 0.6099 bits, independent of the Hamiltonian, initial state, measurement basis, and system size.

To formally define the mutual information $I(Z;\mathcal{T})$, we need the following definitions. We let the time $t$ be uniformly distributed over an interval of (long but arbitrary) length $T$. We then define a joint probability distribution over measurement outcomes $z$ and times $t$ by $P(z,t) = p_z(t)/T$, which is normalized: $\sum_z \int dt\, P(z,t) = 1$. We shall also need the marginal distributions $P(z) = \int dt\, P(z,t) \approx \bar{p}_z$ and $P(t) = 1/T$, and the conditional distribution $P(z|t) = P(z,t)/P(t) = p_z(t)$.

Then, the mutual information can be computed as

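$$
\begin{align}
I(Z;\mathcal{T}) &= \sum_z \int dt\, P(z,t)\,\log_2\frac{P(z,t)}{P(z)\,P(t)} \tag{40}\\
&\approx \frac{1}{T}\int dt\,\sum_z p_z(t)\,\log_2\frac{p_z(t)}{\bar{p}_z} \tag{41}\\
&\approx \sum_z \bar{p}_z\int_0^\infty dx\,e^{-x}\,x\log_2 x \tag{42}\\
&= \frac{1-\gamma}{\ln 2}\approx 0.6099\ \text{bits}, \tag{43}
\end{align}
$$

where in Eq. (42) we have used Theorem 3 to replace the average over time with an integral over $x = p_z(t)/\bar{p}_z$, which follows a PT distribution, i.e., has probability density $e^{-x}$. Equation (43) then follows from $\sum_z \bar{p}_z = 1$ and $\int_0^\infty dx\,e^{-x}\,x\ln x = \Gamma'(2) = 1-\gamma$, where $\gamma \approx 0.5772$ is the Euler-Mascheroni constant.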
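The universal constant can be checked numerically. The sketch below, built around the hypothetical helper `pt_information_bits`, integrates $\int_0^\infty e^{-x}\,x\log_2 x\,dx$ with the trapezoidal rule (truncating the exponentially small tail) and compares the result with the closed form $(1-\gamma)/\ln 2$, where $\gamma$ is the Euler-Mascheroni constant.

```python
import numpy as np

def pt_information_bits(x_max=60.0, n=600_001):
    """Trapezoidal estimate of int_0^inf e^{-x} x log2(x) dx, truncated at x_max."""
    x = np.linspace(0.0, x_max, n)
    f = np.zeros_like(x)
    pos = x > 0                                    # use the limit x log x -> 0 at x = 0
    f[pos] = np.exp(-x[pos]) * x[pos] * np.log2(x[pos])
    h = x[1] - x[0]
    return h * (f.sum() - 0.5 * (f[0] + f[-1]))    # trapezoidal rule on a uniform grid

closed_form = (1 - np.euler_gamma) / np.log(2)
print(pt_information_bits(), closed_form)          # both approximately 0.60995
```

A plain uniform grid is adequate here because the integrand vanishes at $x=0$ and its only irregularity there is a mild logarithm.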

It is remarkable that this universal value does not depend on system size and, in particular, does not vanish for any choice of parameters. This implies that, although ergodic dynamics hides information about time as strongly as possible, a finite amount of information (0.6099 bits) cannot be concealed, regardless of system size. In other words, a certain level of temporal fluctuations in observables is inevitable in the ergodic dynamics of generic pure states, which places a lower bound on the ability of unitary dynamics to hide temporal information. We also note that estimating the evolution duration $t$ is equivalent to estimating an overall scaling factor $\lambda$ of a many-body Hamiltonian evolved for a fixed time $t_0$, since $e^{-iHt} = e^{-i(\lambda H)t_0}$ with $\lambda = t/t_0$; hence this “clock” may equivalently be regarded as a sensor for the overall Hamiltonian strength.

IV.6.1 Finite-time mutual information

We note that the mutual information discussed above should be distinguished from more conventional metrics for clocks, such as sensitivity. The sensitivity at very small intervals (i.e., in the limit of many measurements) depends instead on the energy uncertainty $\Delta E$, which is not universal. The mutual information instead describes how quickly a large interval can be refined by measurements.

Our universal result above is valid in the limit of large time intervals $T$, over which $p_z(t)$ follows the approximate PT distribution. For shorter intervals (but still at sufficiently late times), the mutual information takes a smaller value, because $p_z(t)$ has a nonzero correlation time. This is illustrated in Fig. 3(d). In fact, the mutual information approaches its asymptotic value as a power law:

| (44) |

where $\Delta E$ is the energy uncertainty of the initial state. This is illustrated in the inset of Fig. 3(d).

We present the full derivation in Appendix G. In brief, we obtain this result by estimating the autocorrelation time of $p_z(t)$ using approximations of the many-body spectrum. We find that the correlation time is inversely proportional to the energy uncertainty $\Delta E$ of the initial state $|\Psi_0\rangle$. Sampling $p_z(t)$ only at integer multiples of the correlation time yields an effective number $\sim T\,\Delta E$ of time points at which $p_z(t)$ takes distinct values. This gives a mutual information that approaches its asymptotic value of 0.6099 bits as a power law in $T\,\Delta E$. The coefficient follows from a detailed calculation. This result complements Section IV.2, providing a convergence timescale for finite-time temporal ensembles from the perspective of the mutual information. We leave to future work the analysis of the ultimate sensitivity at very small intervals $T$, where Eq. (44) breaks down.

Our results in this section describe universal statistical features of the trajectory of a state under chaotic many-body dynamics. They confirm the intuitive folklore heuristic that chaotic many-body dynamics resembles random unitary dynamics: we find signatures of Haar-random states, such as the PT distribution, in time-evolved many-body states. We attribute this quasi-randomness to our maximum entropy principle. As a consequence, these temporal trajectories can be used for protocols such as benchmarking and sensing, with a performance that is universal and independent of details such as the Hamiltonian, initial state, and measurement basis.

V Projected Ensembles

Next, we turn to the projected ensemble, the second setting of our maximum entropy principle. When a many-body state is bipartitioned into a small subsystem $A$ and its complement $B$, the large subsystem $B$ acts like a “thermal bath” for the smaller subsystem $A$. This observation is central to the emergence of thermal equilibration in a closed quantum system. Nevertheless, the extent to which $B$ truly acts as a bath is not fully characterized, owing to the complexity of the overall quantum many-body state. Projected ensembles offer a lens into the nature of the correlations between subsystem and bath. Without information about the bath, subsystem $A$ is described by its reduced density matrix $\rho_A = \mathrm{Tr}_B(|\Psi\rangle\langle\Psi|)$. To go further, one must obtain information about the bath. In projected ensembles, this is done by projecting the bath onto definite states $|z_B\rangle$, typically by performing measurements in a product basis. This in turn projects $A$ into a pure state $|\Psi(z_B)\rangle$. Taken over all measurement outcomes, a single wavefunction $|\Psi\rangle$ generates a large ensemble of states $\{|\Psi(z_B)\rangle\}$. By studying the statistical properties of this ensemble, as well as any correlations between $|\Psi(z_B)\rangle$ and $z_B$, the projected ensemble construction provides a new way to study system-bath correlations.

Our maximum entropy principle states the following: there are correlations between $|\Psi(z_B)\rangle$ and $z_B$ that are “thermal,” in the sense that they arise from (and only from) energy conservation. On top of these thermal correlations, there are random fluctuations. In Section VI, we find that these fluctuations are universal and follow a maximum entropy principle.

In this section, we study a special case: in certain measurement bases, the correlations between $|\Psi(z_B)\rangle$ and $z_B$ vanish. Nevertheless, the effects of energy conservation remain: the mixture of all projected states equals $\rho_A$, which is, up to subleading corrections, a thermal Gibbs state with temperature set by the bath [69]. Therefore, although the projected states are effectively independent and identically distributed random samples, their underlying distribution is not Haar-random but has first moment $\rho_A$. In this section, we find that this distribution has maximum entropy under this first-moment constraint; it has been previously studied under the name “Scrooge ensemble” [79, 80, 76].

Explicitly, the projected ensemble is defined as the set of states obtained by projectively measuring a pure state $|\Psi\rangle$ on a large subsystem $B$ [Fig. 4(a)]. Specifically, subsystem $B$ is measured, giving a random outcome $z_B$, which occurs with probability $p(z_B)$:

$$p(z_B) = \langle\Psi|\left(\mathbb{1}_A\otimes|z_B\rangle\langle z_B|\right)|\Psi\rangle. \tag{45}$$

This measurement outcome projects the initial state onto a state in the Hilbert space of the remaining subsystem $A$, denoted $|\Psi(z_B)\rangle$:

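$$|\Psi(z_B)\rangle = \frac{\left(\mathbb{1}_A\otimes\langle z_B|\right)|\Psi\rangle}{\sqrt{p(z_B)}}. \tag{46}$$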

The projected ensemble is the set of states

$$\left\{\,p(z_B),\ |\Psi(z_B)\rangle\,\right\}_{z_B}. \tag{47}$$
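Computationally, the construction of the projected ensemble amounts to reshaping the global wavefunction into a matrix indexed by basis states of $A$ and $B$. The sketch below assumes qubits, a computational-basis measurement of $B$, and that $A$ consists of the first `n_a` qubits in the tensor-product ordering; `projected_ensemble` and `first_moment` are hypothetical helpers. The weighted mixture of projected states reproduces $\rho_A$ exactly.

```python
import numpy as np

def projected_ensemble(psi, n_a, n_b):
    """Return (p(z_B), |Psi(z_B)>) for a computational-basis measurement of B.

    Assumes psi is a normalized vector on n_a + n_b qubits with A = first n_a qubits.
    """
    amps = psi.reshape(2 ** n_a, 2 ** n_b)              # amps[a, z_B] = (<a| x <z_B|)|Psi>
    probs = np.sum(np.abs(amps) ** 2, axis=0)           # p(z_B)
    keep = probs > 1e-15                                # drop outcomes that never occur
    states = (amps[:, keep] / np.sqrt(probs[keep])).T   # rows are |Psi(z_B)>
    return probs[keep], states

def first_moment(probs, states):
    """sum_z p(z) |Psi(z)><Psi(z)|, which equals rho_A = Tr_B |Psi><Psi|."""
    return np.einsum("z,zi,zj->ij", probs, states, states.conj())
```

For a random six-qubit state, `projected_ensemble(psi, 2, 4)` yields 16 normalized two-qubit states whose first moment equals `amps @ amps.conj().T`, the reduced density matrix of $A$.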

Although this object was first introduced in other contexts (e.g., Ref. [76]), the projected ensemble was later studied by some of the authors in Refs. [28, 29] as a means of quantifying the randomness of a single many-body state, as opposed to an ensemble of states.

V.1 Results at infinite temperature: approximate $k$-designs

In Ref. [29], it was found that a wide class of many-body states induce projected ensembles that are statistically close to the Haar ensemble. This closeness was measured by the trace distance between the $k$-th moments of the projected ensemble and of the Haar ensemble.

Specifically, it was proven that the projected ensembles are approximate $k$-designs when the global states are typical states drawn from the Haar ensemble or from a state $k'$-design with sufficiently large $k'$. This was also demonstrated numerically for natural many-body states close to infinite temperature, i.e., with energy expectation values near the center of the spectrum, obtained either from (a) the time evolution of an initial state or from (b) the eigenstates of a chaotic many-body Hamiltonian. Subsequent work has rigorously established the emergence of the projected $k$-design in specific settings, such as dual-unitary models [77, 30, 31, 32, 78], free-fermion models [33], or under assumptions on the reduced density matrix [34]. Further work has studied the effects of symmetry [35, 37] and of quantum magic in the global state [38] on the projected ensemble, as well as deep thermalization timescales [30, 31, 36].
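To make the notion of an approximate $k$-design concrete, the sketch below computes the trace distance between the $k$-th moment $\sum_{z_B} p(z_B)\,(|\Psi(z_B)\rangle\langle\Psi(z_B)|)^{\otimes k}$ and the Haar moment $\Pi_{\text{sym}}^{(k)}/\binom{d+k-1}{k}$, where $\Pi_{\text{sym}}^{(k)}$ is the projector onto the symmetric subspace. It assumes qubits, a computational-basis measurement of $B$, and Haar-random or product global states as test cases; all function names are hypothetical.

```python
import itertools
import math
import numpy as np

def ensemble_moment(probs, states, k):
    """k-th moment sum_z p(z) (|psi_z><psi_z|)^{(x)k}."""
    vecs = states
    for _ in range(k - 1):                                    # rows become psi_z^{(x)k}
        vecs = np.einsum("zi,zj->zij", vecs, states).reshape(len(states), -1)
    return (vecs * probs[:, None]).T @ vecs.conj()

def haar_moment(d, k):
    """Pi_sym^(k) / binom(d+k-1, k), built by averaging tensor-factor permutations."""
    dim = d ** k
    eye = np.eye(dim).reshape([d] * k + [dim])
    proj = sum(np.transpose(eye, list(perm) + [k]).reshape(dim, dim)
               for perm in itertools.permutations(range(k)))
    return proj / math.factorial(k) / math.comb(d + k - 1, k)

def trace_distance(a, b):
    return 0.5 * np.sum(np.abs(np.linalg.eigvalsh(a - b)))

def projected_design_distance(psi, n_a, n_b, k):
    amps = psi.reshape(2 ** n_a, 2 ** n_b)                    # A = first n_a qubits
    probs = np.sum(np.abs(amps) ** 2, axis=0)
    keep = probs > 1e-15
    states = (amps[:, keep] / np.sqrt(probs[keep])).T
    return trace_distance(ensemble_moment(probs[keep], states, k),
                          haar_moment(2 ** n_a, k))
```

For a Haar-random 13-qubit state with a single-qubit $A$, the $k=2$ distance should be small, whereas for the product state $|0\rangle^{\otimes 13}$ the projected ensemble collapses to the single state $|0\rangle$ and the distance is exactly $2/3$.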

In the above studies, it was important that the initial state be at infinite temperature. This is because the first moment of the projected ensemble is the reduced density matrix $\rho_A$. For thermal states, $\rho_A$ is close to a Gibbs state [69]; only at infinite temperature does it equal the first moment of the Haar ensemble, the maximally mixed state $\mathbb{1}/d_A$, where $d_A$ is the Hilbert-space dimension of $A$. Therefore, away from infinite temperature, the projected ensembles of such states cannot equal the Haar ensemble. Which ensemble describes the projected ensemble at finite temperature has remained an open question.

V.2 Projected ensembles at finite temperature: Scrooge ensemble

Our main result in this section is that, under appropriate conditions, the projected ensemble of a finite-temperature state is described by the Scrooge ensemble [79, 80, 76]. As introduced in Ref. [79], every density matrix $\rho$ has a corresponding Scrooge ensemble [Figure 4(b)]. It is most easily understood as a “$\rho$-distortion” of the Haar ensemble: each Haar-random state $|\psi\rangle$ is mapped to $\sqrt{\rho}\,|\psi\rangle/\|\sqrt{\rho}\,|\psi\rangle\|$, and its probability is reweighted by the factor $d\,\langle\psi|\rho|\psi\rangle$, where $d$ is the dimension of the Hilbert space on which $\rho$ acts. A state therefore has a higher probability when it has a larger overlap with the principal axes of $\rho$. We can sample from the Scrooge ensemble by “distorting” wavefunctions sampled from the Haar ensemble in this way, as described in Section II. We can also describe the Scrooge ensemble in terms of its distribution function [80]:

| (48) | |||

where is the eigensystem of , and . In the context of the projected ensemble, the first moment is the reduced density matrix of .
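The $\rho$-distortion of the Haar ensemble translates directly into a sampler: draw Haar-random states $|\psi\rangle$, map them to $\sqrt{\rho}\,|\psi\rangle/\|\sqrt{\rho}\,|\psi\rangle\|$, and weight them by $\langle\psi|\rho|\psi\rangle$. In the sketch below (hypothetical helper names), the weights are normalized over the finite sample, i.e., self-normalized importance weights, which is an implementation choice. The weighted first moment should reproduce $\rho$, since $d\,\mathbb{E}_{\text{Haar}}\big[\sqrt{\rho}\,|\psi\rangle\langle\psi|\sqrt{\rho}\big] = \rho$.

```python
import numpy as np

def haar_states(d, n, rng):
    """n Haar-random pure states in dimension d (rows), via normalized complex Gaussians."""
    g = rng.normal(size=(n, d)) + 1j * rng.normal(size=(n, d))
    return g / np.linalg.norm(g, axis=1, keepdims=True)

def scrooge_samples(rho, n, rng):
    """Weighted samples (weights, states) from the Scrooge ensemble of rho."""
    d = rho.shape[0]
    evals, evecs = np.linalg.eigh(rho)
    sqrt_rho = (evecs * np.sqrt(np.clip(evals, 0.0, None))) @ evecs.conj().T
    psi = haar_states(d, n, rng)
    phi = psi @ sqrt_rho.T                        # rows are sqrt(rho)|psi>
    overlap = np.sum(np.abs(phi) ** 2, axis=1)    # <psi|rho|psi>
    states = phi / np.sqrt(overlap)[:, None]      # rho-distorted, normalized states
    weights = overlap / overlap.sum()             # reweighting by <psi|rho|psi>
    return weights, states

rng = np.random.default_rng(0)
rho = np.diag([0.8, 0.2])
w, s = scrooge_samples(rho, 200_000, rng)
first = np.einsum("z,zi,zj->ij", w, s, s.conj())
print(np.round(first.real, 3))                    # close to rho
```

Resampling indices with `rng.choice(len(w), p=w)` would instead give unweighted Scrooge samples, at the cost of extra sampling noise.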

In the ideal limit in which the measurement basis is a Haar-random basis on $B$, Ref. [76] proves that the resulting projected ensemble is the Scrooge ensemble of $\rho_A$.
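This statement can be illustrated numerically. The sketch below, whose helper names are hypothetical, builds a global state with Schmidt coefficients $(0.8, 0.2)$ across a single-qubit $A$ and a ten-qubit $B$, measures $B$ in a Haar-random basis, and compares the second moment of the resulting projected ensemble with Monte Carlo estimates of the Scrooge and Haar second moments. With $\rho_A = \mathrm{diag}(0.8, 0.2)$, the projected ensemble should lie much closer to the Scrooge ensemble than to the Haar ensemble, up to finite-size and sampling errors.

```python
import numpy as np

def haar_unitary(dim, rng):
    """Haar-random unitary via QR of a complex Gaussian matrix with phase fix."""
    g = (rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(g)
    return q * (np.diag(r) / np.abs(np.diag(r)))

def second_moment(weights, states):
    v = np.einsum("zi,zj->zij", states, states).reshape(len(states), -1)
    return (v * weights[:, None]).T @ v.conj()

def trace_distance(a, b):
    return 0.5 * np.sum(np.abs(np.linalg.eigvalsh(a - b)))

rng = np.random.default_rng(2)
lam = np.array([0.8, 0.2])                   # Schmidt coefficients = spectrum of rho_A
d_b = 2 ** 10
psi = np.zeros((2, d_b), dtype=complex)      # psi[a, b]; A = one qubit, B = ten qubits
psi[0, 0], psi[1, 1] = np.sqrt(lam)          # |Psi> = sum_j sqrt(lam_j) |j>_A |j>_B

# Projected ensemble for a measurement of B in the Haar-random basis {U|z>}.
amps = psi @ haar_unitary(d_b, rng).conj()   # amps[a, z] = (<a| x <z|U^dagger)|Psi>
probs = np.sum(np.abs(amps) ** 2, axis=0)
proj_states = (amps / np.sqrt(probs)).T
m_proj = second_moment(probs, proj_states)

# Monte Carlo Scrooge and Haar ensembles for rho = diag(lam).
g = rng.normal(size=(200_000, 2)) + 1j * rng.normal(size=(200_000, 2))
haar = g / np.linalg.norm(g, axis=1, keepdims=True)
phi = haar * np.sqrt(lam)                    # sqrt(rho)|psi> for diagonal rho
overlap = np.sum(np.abs(phi) ** 2, axis=1)
m_scr = second_moment(overlap / overlap.sum(), phi / np.sqrt(overlap)[:, None])
m_haar = second_moment(np.full(len(haar), 1 / len(haar)), haar)

print(trace_distance(m_proj, m_scr), trace_distance(m_proj, m_haar))
```

Ten qubits in $B$ keep the dense Haar-random unitary affordable; the agreement with the Scrooge moment should improve as $B$ grows.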