A Model-based Semi-supervised Clustering Methodology

Abstract

We consider an extension of model-based clustering to the semi-supervised case, where some of the data are pre-labeled. We provide a derivation of the Bayesian Information Criterion (BIC) approximation to the Bayes factor in this setting. We then use the BIC to the select number of clusters and the variables useful for clustering. We demonstrate the efficacy of this adaptation of the model-based clustering paradigm through two simulation examples and a fly larvae behavioral dataset in which lines of neurons are clustered into behavioral groups.

Keywords: BIC, Machine learning, Behavioral data, Model selection, GMM, Mclust

1 Introduction

Clustering is the art of partitioning data into distinct and scientifically relevant classes by assigning labels to observations. Clustering is typically performed in an unsupervised setting, where none of the observed data initially have labels. In semi-supervised learning, some of the data have labels, but others do not; the goal is to classify the unlabeled data by assigning them to the same classes as the labeled data. In the gap between these two settings, there is semi-supervised clustering, in which some of the data have labels, and there may not be labeled data from every class. Furthermore, the total number of classes may be unknown. Just as in semi-supervised learning, leveraging the known labels allows for a more informed clustering.

There are many strategies for solving clustering problems. Some clustering procedures are based on heuristics that lack a principled justification, and many of the basic questions of clustering (e.g., how many classes there are) are often left to the intuition of the practitioner. Fraley and Raftery [9] review model-based clustering, which recasts the task of clustering as a model selection problem, which is well-studied in the field of statistics. The main assumption in model-based clustering is that the data are drawn from one of distributions, where each distribution represents a cluster. Model selection can be accomplished by computing approximate Bayes factors for competing models with different numbers and/or types of components.

Much of the recent work in model-based clustering has centered on variable selection. Raftery and Dean [18] proposed a greedy algorithm for model-based clustering in the unsupervised setting; it used the BIC to choose the number of clusters and the features to consider while clustering by proposing linear relationships between variables that influence clustering and those independent of clustering. Murphy et al. [15] adapted Raftery and Dean’s algorithm to semi-supervised learning. Maugis et al. [13, 12] consider many more scenarios of dependency structures between the clustering variables and the remaining variables than in [18]. Taking a different approach, Witten et al. [22] offer a framework for variable selection in clustering using sparse k-means or sparse hierarchical algorithms by modifying the corresponding objective function to include penalty constraints. In their simulations, they outperform the methods of [18] considerably.

The BIC is generally denoted as

where is the maximum of the likelihood, is the number of parameters estimated, and is the number of data used to estimate those parameters. Alternative information criteria generally differ by having different values for the penalty term. For example, the sample size adjusted BIC [5] is defined as

Because one may wonder of the efficacy of such a deviation from the BIC. This question is particularly salient when considering that the standard derivation of the BIC as the approximation to the integrated loglikelihood is only accurate asymptotically (so that o(1) terms are negligible). In this paper, we will present such a modified BIC that is asymptotically equivalent to the standard BIC and thoroughly explore an analytical example of how such a modification could be useful.

In this paper, we quickly review model based clustering in Section 2. We state our derivation of a modified BIC to the semi-supervised case in Section 3 that is asymptotically equivalent to the standard BIC. We follow this with a detailed discussion of equivalent information criteria, analytical calculations for a toy example, and simulations for a semi-supervised clustering example. Next, we apply our methods to the fly larvae behavioral dataset in Section LABEL:fly. Finally, we conclude the paper with a discussion of the results and extensions to our method.

2 Review of Model Based Clustering

Assume that the data are distributed i.i.d. according to a mixture model,

where are the parameters of the component of the mixture and is the total number of components. It can be shown that this is equivalent to the following generative process: for each

-

(1)

draw from

-

(2)

draw from .

If we consider each of the components as representing a single cluster, then the problem of clustering the data is that of unveiling each latent from the generative process. The expectation-maximization (EM) algorithm can be used to find the maximum likelihood estimates for the parameters of the model and the posterior probabilities of cluster membership for each (cf. [8]).

With this formulation, we have a fully probabilistic clustering scheme. We can set the cluster of the observation to the maximum a posteriori estimate:

Note that the posterior probability can give us a measure of confidence about the classification.

Here, we have used as a general density function, but it should be noted that the multivariate Gaussian distribution is the most common choice of distribution. We will eventually use an information criterion, such as the Bayesian Information Criterion (BIC), to assess the relative quality of different clusterings. Then, the number of clusters can be chosen using the BIC, which rewards model fit and penalizes model complexity.

By considering different constraints to the covariance matrices in the Gaussian components, we can reduce the number of parameters estimated, thus lowering the influence of the penalty term in the BIC. This allows for simpler clusters to be chosen over complex clusters. Celeux and Govaert [6] consider a spectral decomposition of the component’s covariance matrix,

where represents the largest eigenvalue, is a diagonal matrix whose largest entry is , and is a matrix of the corresponding eigenvectors. The interpretation of each term as it relates to cluster is as follows: represents the volume, the orientation, and the shape. By forcing one or more of these terms to be the same across all clusters, we can reduce the number of parameters to be estimated from in the unconstrained case () to in the most constrained case (). We can also force additional constraints, such and/or , leading to the simplest model: with only parameters to estimate.

3 Methodology

The main theoretical contribution of this paper is to derive an adjustment to the BIC for the semi-supervised clustering setting followed by an exploration about equivalent information criteria. We will set forth a formalization of a more general setting, derive the BIC in this broader setting, and then apply our result to the special case of semi-supervised clustering. The modified BIC then represents a principled measure by which to choose the number of clusters and the variables for clustering when performing semi-supervised clustering.

Consider independent random variables

where and for and

Collect all of the into set .

Model , while more general than strictly necessary, encompasses the semi-supervised case. Consider the first group of random variables as those for which we do not have labels and each of the other groups as those whose labels we know. Then, for a proposed total number of clusters , we assume

where is a multivariate normal pdf with mean and covariance matrix , and . Also, for each ,

where the double subscript is to account for possible relabeling. It follows that

where

Then, for each , is the same as except that the mixing coefficients are constrained such that and all other .

With this model, we can derive the Expectation step (E-step) and Maximization (M-step) of the EM algorithm. Note that semi-supervised EM is not new; indeed, similar calculations can be found in [14] Section 2.19 and [19].

Here, we generalize the BIC to the semi-supervised case with the aim of not overly penalizing for supervised data. The modified BIC approximation to the integrated likelihood of model is

where is the maximum likelihood estimate (MLE).

We provide the derivation in the appendix. Compare the above result to the classical BIC approximation:

The derivation of the BIC (adjusted and normal) involves an O(1) term, which is dropped at the end. Due to this, the difference between the two BICs is a smaller penalty in the semi-supervised case (i.e. vs. , which is a difference). This can be interpreted as not penalizing more complicated cluster structures for the supervised data. We will discuss the merit of such a deviation in Section 4.

It is known that finite mixture models do not meet the regularity conditions for the BIC approximation used to approximate the integrated likelihood. However, [9] comment that it is still an appropriate and effective approximation in the paradigm of model-based clustering. Thus, we will not be too remiss in considering Theorem LABEL:BIC as applicable when performing semi-supervised clustering. Because the model is appropriate for the semi-supervised case, we can use the modified BIC as a way to calculate Bayes factors for different number of total clusters , subsets of variables to be included in the clustering, and parameterizations of . The largest BIC value will therefore correspond to our chosen model for clustering. We will call the semi-supervised clustering algorithm using the BIC from Theorem LABEL:BIC to make the aforementioned selections ssClust henceforth.

4 o(1) equivalent Information Criteria

Here, we comment on the use of different penalty terms. Suppose that we define an adjusted BIC, say as a function of as

When does using such an adjustment matter? Consider two models, say and , in competition for selection. Fix an . Suppose that

-

(i)

-

(ii)

and

-

(iii)

In this case, we see that under the original definition of the BIC, we would choose over , and with the modified definition, we would do the opposite.

In order to analyze this under some assumptions, define two statistical tests:

and

When do and differ?

-

Case 1:

and For this is impossible.

-

Case 2:

and We have two subcases.

-

(a)

Suppose is the true model. We would be correct in choosing over .

-

(b)

Suppose is the true model. Note that in this case, we would make a mistake by choosing over .

-

(a)

We would like to analyze the probabilities in case 2. Preferably, (2.a) is much more probable than (2.b). Unfortunately, under (2.a) the distribution of is only known for certain cases. For example, with local alternatives of the form is known to have noncentral chi square distribution with and the appropriate non-centrality parameter. Since this result requires rather quixotic assumptions to hold, we will not theoretically bound the probabilities for more general cases; instead, we will defer our analysis to a specific example.

We can say more about (2.b) with some assumptions.

Proposition 4.1.

Suppose the models are nested (so that is a submodel of ). Define Under , and with probability

| (1) |

where is the distribution function of an distribution with

Proof.

We can do some algebra on and to obtain equivalent condition that

If the models are nested (so that is a submodel of ), then the numerator of converges in law to a distribution with by Wilk’s Theorem. In this case, we note that by Slutsky’s Theorem, asymptotically has the distribution of an F-statistic with

Hence, under the nested models assumption, the probability that and hold when they should not (i.e. choose when is the truth) is given by equation (1). ∎

4.1 Illustrative Example

We now present an analysis for specific null and alternative models where we can explicitly calculate the probabilities of cases (2.a) and (2.b). The purpose is to demonstrate that for finite , the choice of penalty term is nontrivial.

Let with . Consider the nested models:

-

•

-

•

where is the dimensional identity matrix.

Let

Then, and Suppose that according to whether or not is the true model.

Fix a realization of the data Let and be with the coordinates after set to 0. Twice the maximized loglikelihood of the data under is (up to constants)

Under it is

since . Thus we find that

-

2a)

Under ,

where noncentral Hence, for any

Therefore,

-

2b)

Under

(2) where . Thus,

Hence,

Clearly, we can vary and to influence these probabilities. We will now do so to show how (a) some penalty is better than none; (b) the AIC penalty can result in mistakes; and (c) the optimal penalty depends on and .

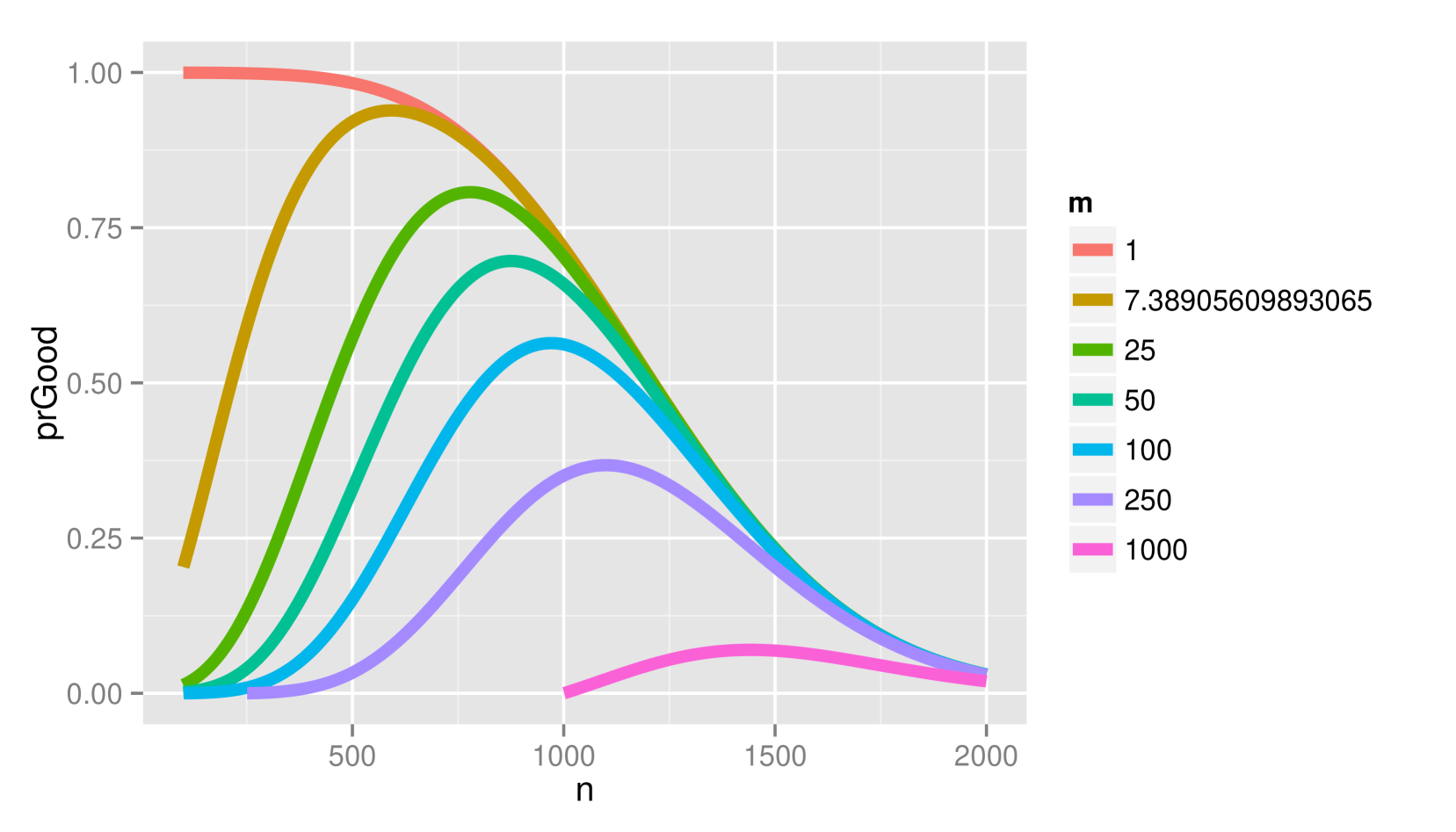

Figure 1 depicts the probability of (2.a) and (2.b) when and vary for fixed and under the alternative (a) and null (b) hypotheses. Lower penalties than the BIC would use generally result in better decisions under . However, there is approximately a chance of error under when the BIC would be correct with the penalty of that of the AIC.

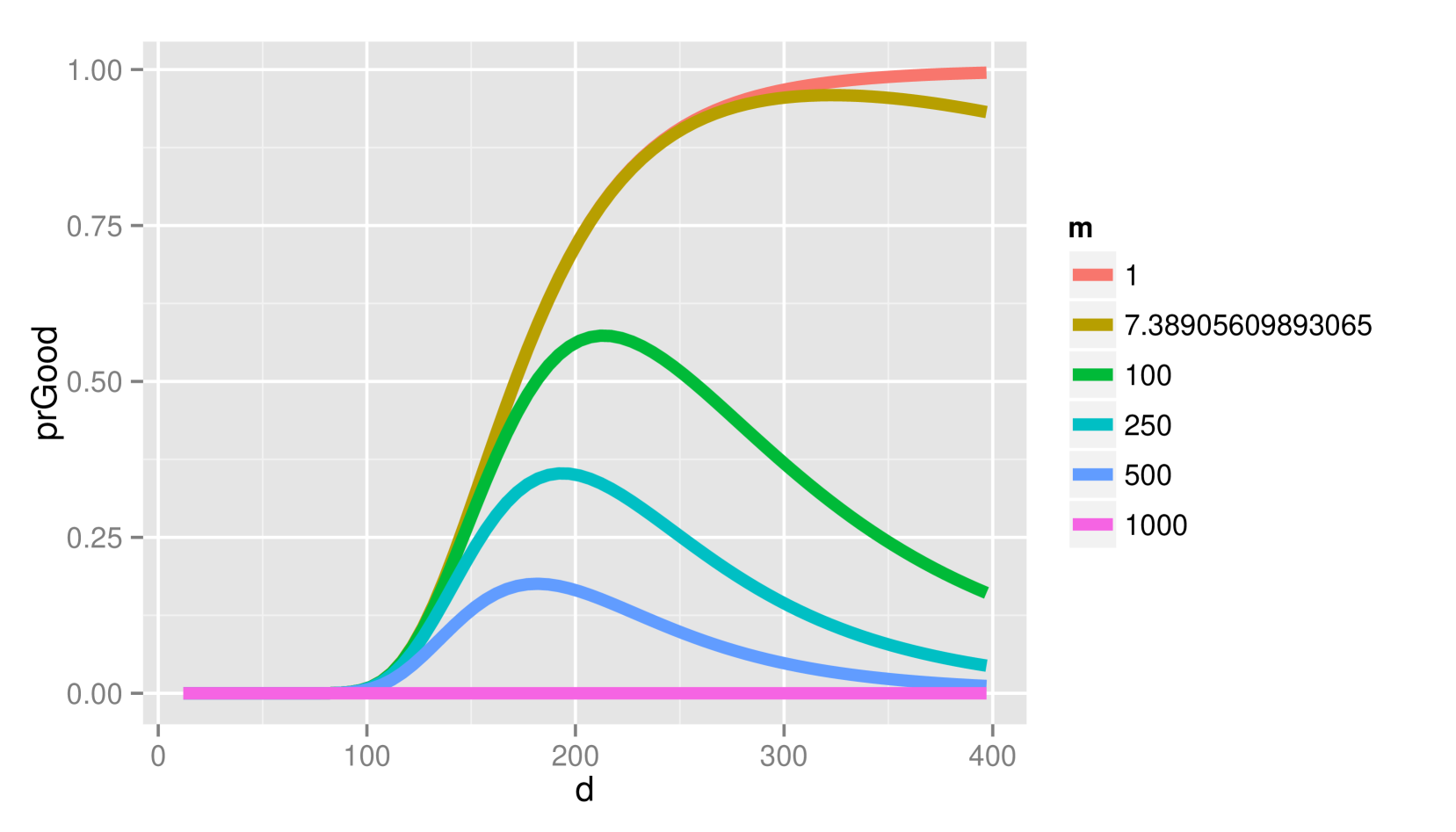

Figure 2 depicts the probability of (2.a) and (2.b) when and vary for fixed and under the alternative (a) and null (b) hypotheses. Lower penalties than the BIC would use generally result in better decisions under without sacrificing making too many new mistakes under .

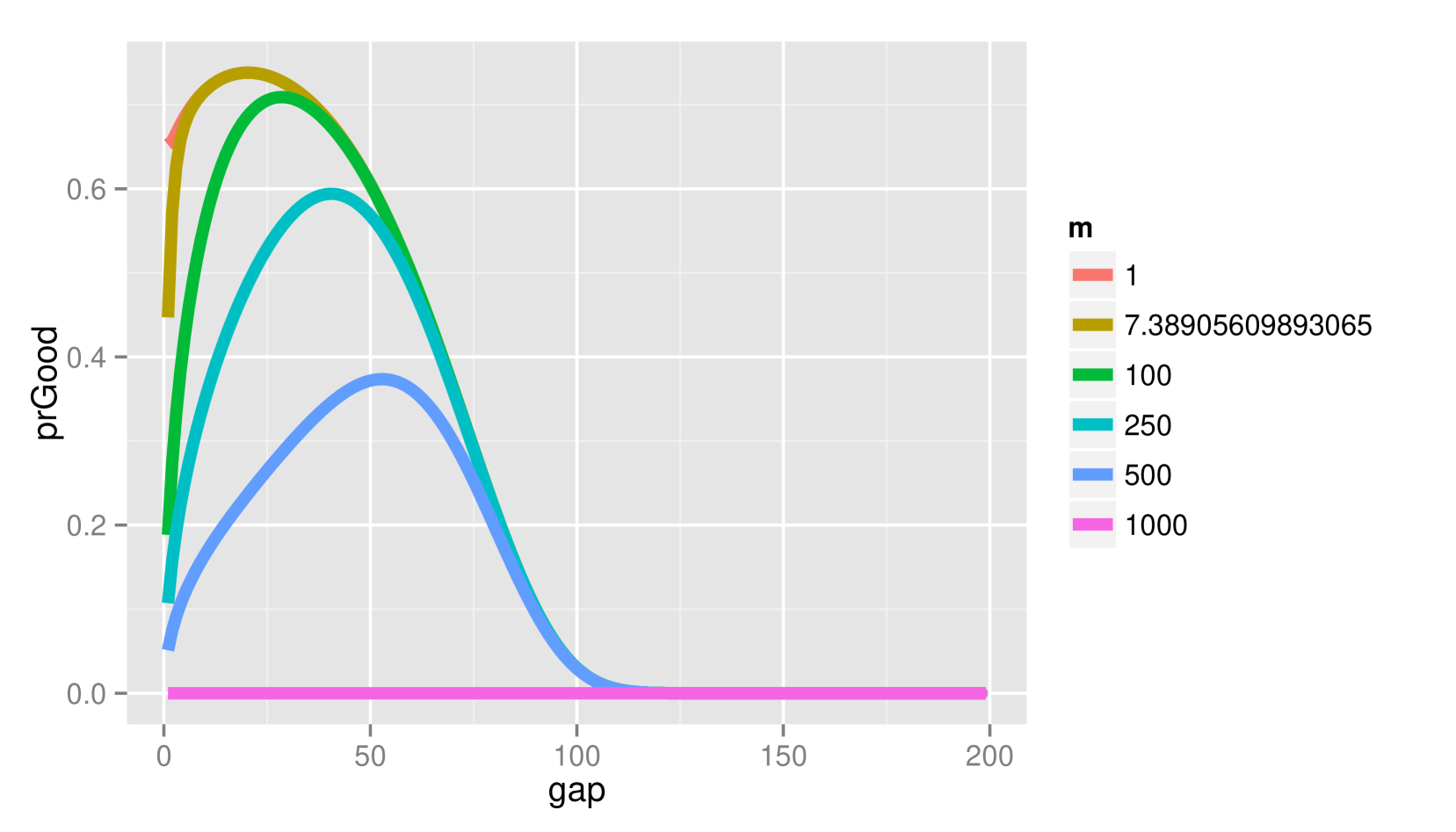

Figure 3 depicts the probability of (2.a) and (2.b) when and vary for fixed and under the alternative (a) and null (b) hypotheses. Lower penalties than the BIC would use generally result in better decisions under and not too much worse decisions under . However, there is approximately a larger chance of error under when the BIC would be correct with the AIC penalty for smaller .

This example may seem overly simplistic. Indeed, it does not involve semi-supervised clustering at all. However, it does demonstrate the complexities of penalization in model selection with finite samples.

4.2 Simulation Study

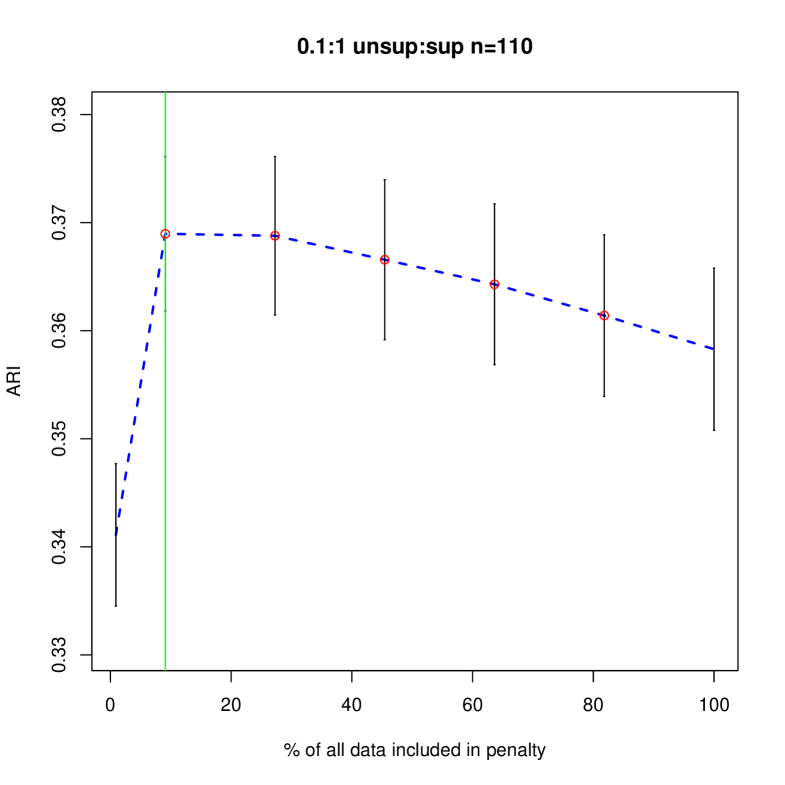

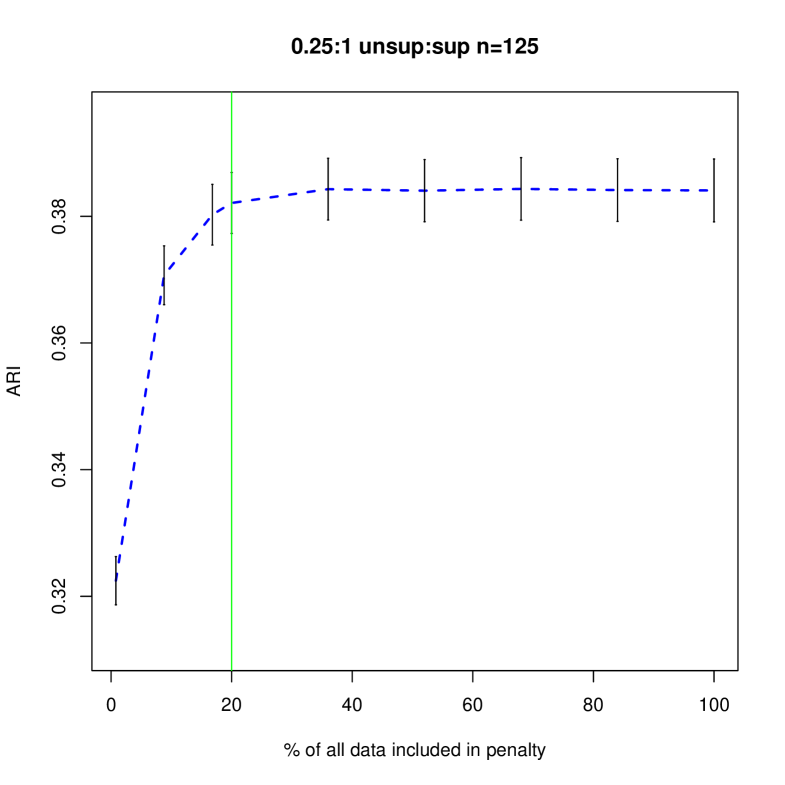

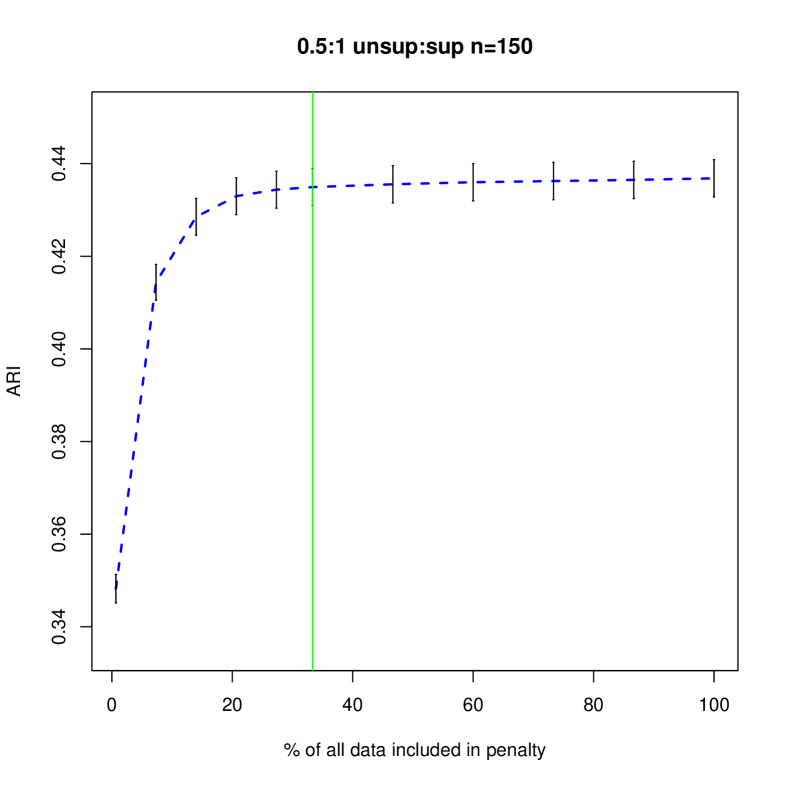

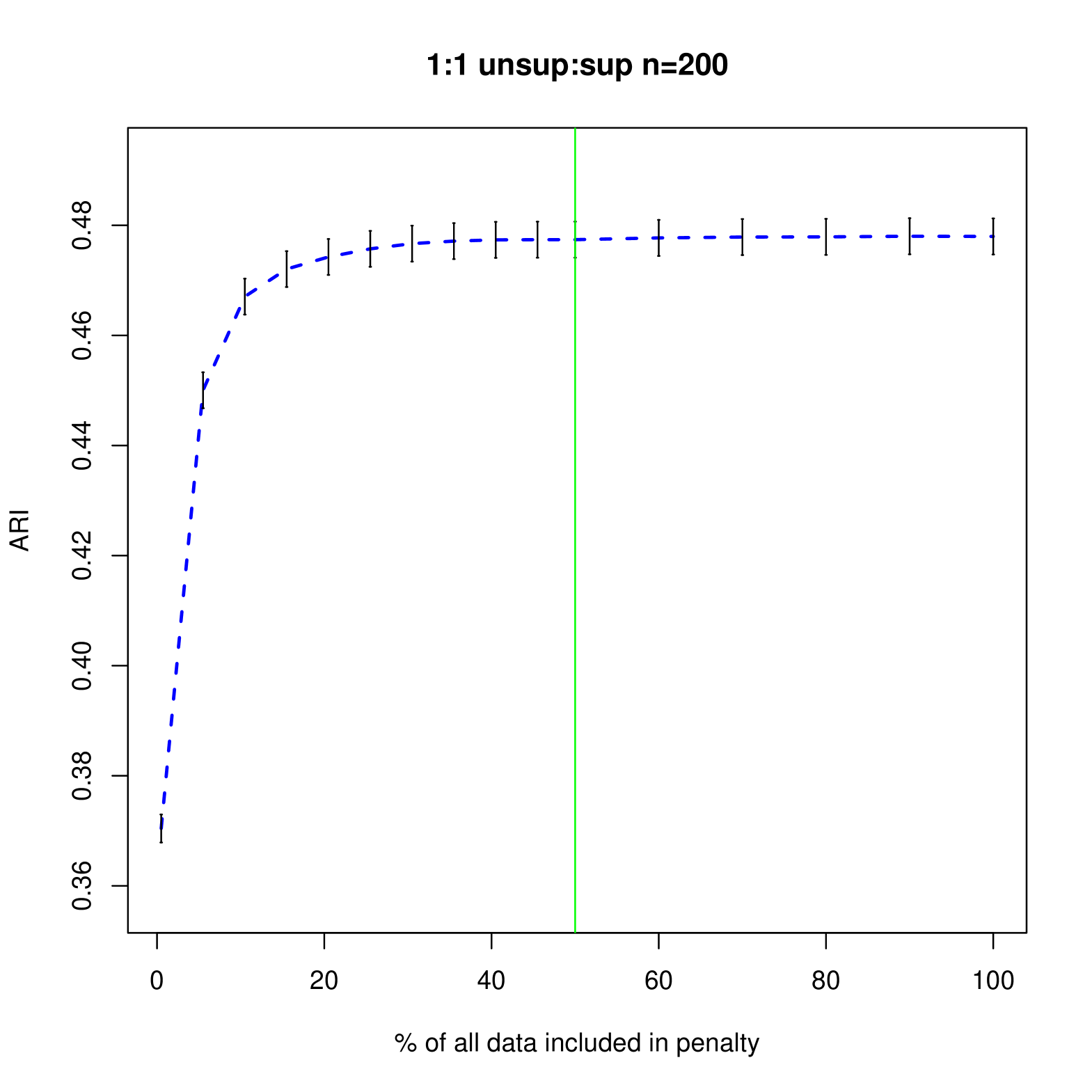

We would like to demonstrate the impact of the previously discussed model selection complexities from the illustrative example on the inference task of semi-supervised clustering. To that end, consider the following procedure for constructing a dataset , semi-supervising it, and clustering it using model-based clustering with different penalties. We will then compare the resulting ARI scores.

Let

Also, let

and

Consider the following procedure for generating one Monte Carlo Replicate.

-

1.

Define the pdf of each datum as being from a mixture model where with generated using the onion method of [11]

-

2.

Draw supervised from the first two components.

-

3.

Draw unsupervised from the full mixture model.

-

4.

Cluster using Gaussians with various constraints on the proposed covariance matrices.

-

5.

Choose the models for clustering based on different values of in the penalty term where

-

6.

Calculate resulting ARIs using the chosen models..

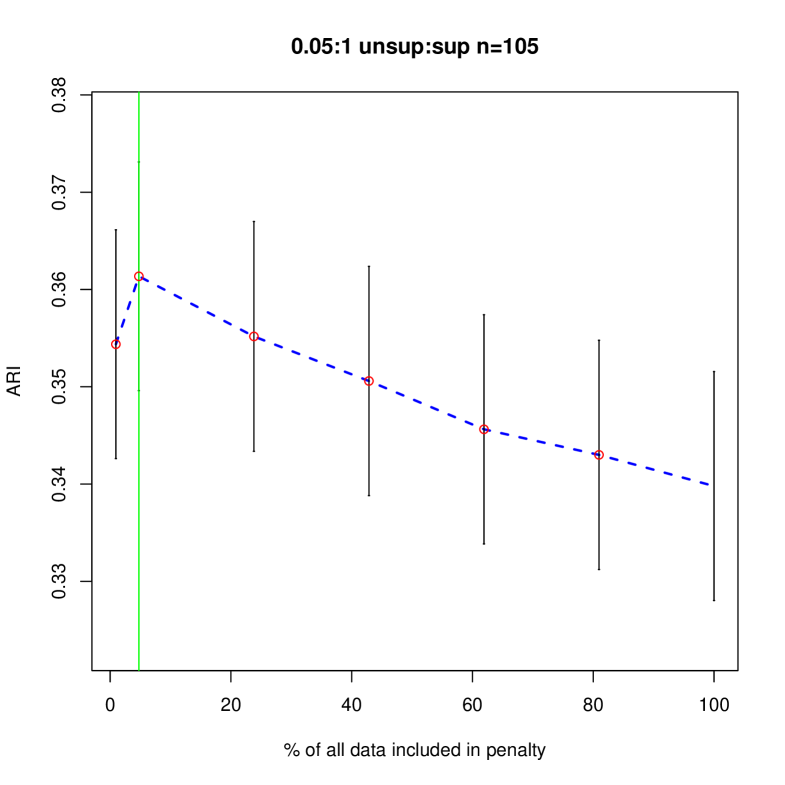

Figures 4 and 5 shows how different penalties can affect the overall clustering performance on the unlabeled data. Generally, we see that with more unsupervised data, there are less differences between the different choices of penalty terms in the interval which makes sense given the asymptotic results. In this particular example, less penalization allowed us to detect the third component sooner, improving the ARI values in the resulting clustering. Thus, we have shown that in an example closer to our problem of interest, the restricted penalization derived in the previous section can be efficacious as compared to the standard penalty. Additionally, as grows, the differences between the information criteria that are dependent on to the same order becomes negligible even for relatively small values of .

5 Identifying Fly Behaviotypes

In one of the motivating applications for this work, classes of neurons in Drosopholia larvae are controlled using optogenetics (cf. [1] regarding optogenetics). In [20], they observe the reactions of the affected larvae to stimuli in high-throughput behavioral assays. The goal is to determine which classes of neurons cause similar changes in behavior when deactivated.

We initially collected data on larvae grouped into dishes. By changing the optogenetic procedure, known lines are created, pbd1, 38a1, 61d0, ppk1, 11f0, pbd2, 38a2, pbd3, ppk2, iav1, and 20c0. Of these lines, we discarded the larvae in pbd3 and ppk2 because they had less than 40 larvae each. Further, we discarded all larvae with an unknown line. After curating the data, we now have larvae. Each larva is observed while responding to various stimuli. Vogelstein et al. citevogelstein2014discovery expand on the methods of [16] and [17] and describe how the observations are embedded into , where here . We use the method presented in [24] to select the elbow of the scree plot to further reduce the data to dimensions. We did not perform any additional feature selection.

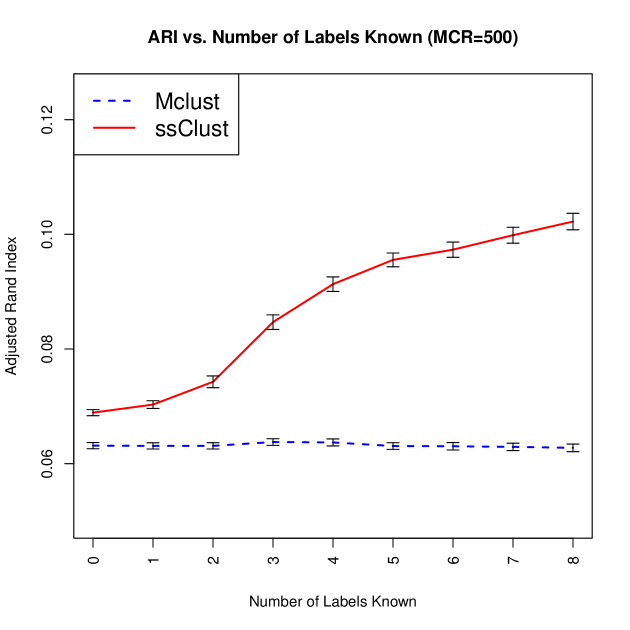

For each Monte Carlo replicate, we use a small subset of the data where the line was known (101 randomly chosen animals from each of the 9 remaining lines) along with pre-labeled data randomly chosen from the 101 animals in each line. We wrote an R package entitled ssClust to perform semi-supervised GMM with similar options to the popular Mclust software, which is an R package for GMM [10]. We cluster the points using both ssClust and Mclust. Then, we compute the ARI against the line type for both methods.

We observe that the initialization strategy of using ss-k-means++ instead of hierarchical clustering results in a significant improvement to the ARI even with no supervision. We find that a single animal per line significantly improves the clustering results, as expected (cf. Figure 6). Further, we can see that there are diminishing returns on additional supervision starting at supervised examples per line.

5.1 Differentiating Lines

Vogelstein et al. [20] posed and answered questions of the form, “Is line X different than line Y (in terms of behaviotypes)?” As an illustrative example, we will perform a similar analysis comparing the ppk1 and pbd1 lines but will additionally incorporate some supervision. Here, our interpretation of the clusters will shift from lines to behaviotypes. Our proposed procedure for distinguishing between lines is as follows:

-

(1)

Sample animals from each line

-

(2)

Label animals from each line according to a labeling strategy (see below)

-

(3)

Cluster the animals using ssClust and Mclust into between 2 and 12 clusters using the parameterizations EEE, VVV, VII, and EII.

-

(4)

Collect the results and construct empirical probability of cluster membership for each line

-

(5)

Compute the Hellinger distance between the two lines to be compared and store this as statistic

-

(6)

Simulate the distribution of under the null hypothesis that the lines are the same by permuting the labels and computing the Hellinger distance for for some large integer .

-

(7)

Return an empirical p-value based on steps (5) and (6).

In item (2) we did not specify a labeling strategy in detail. We propose a strategy that is reasonably realistic to execute for our particular dataset. Vogelstein et al. [20] used a hierarchical clustering scheme in which the first few layers were visually identifiable by watching the worms. Thus, by using their labels from an early layer (layer 2, with 4 clusters total), we have a plausible level of supervision for a human to have performed. Specifically, we sample at random a label from the true labels among a line with weights proportional to the counts of each label in that line. Using that label, we sample 3 worms of that label in that line to be the supervised examples. Next, for the other line, we sample a different label, and 3 examples with that true label.

We see that based on all three labeling strategies that both ssClust and Mclust are able to corroborate the results from [20] even with small amounts of data: ppk1 and pbd1 are statistically different (p-value for both for 500 MC replicates).

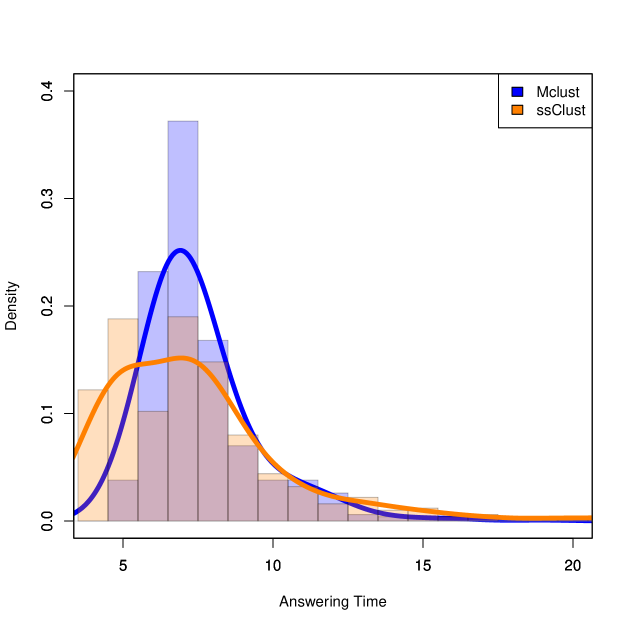

We now show that ssClust can answer these questions “sooner” than Mclust. That is, the p-value () will be below the significance level with fewer unsupervised examples. To quantify this concept, we introduce the “answering time” for algorithm A:

where here we use the notation

to be the labeled and unlabeled data.

The p-value constraint bears some explanation; it says that we require a significant p-value for all datasets at least as large as with unsupervised examples per line. Note that here we assume our datasets are nested and that they all use the same supervised examples. Since the answering time will be dependent on the datasets used, we perform a Monte Carlo simulation with 500 random sequences of datasets and report the answering times for both ssClust and Mclust (cf. Figure 7). The median answering time for ssClust is significantly lower than for Mclust (p-value = 4.7e-12 for paired Wilcoxon signed-rank test).

6 Conclusion

Previously explored approaches to semi-supervised clustering include a modified K-means, which can be seen as our algorithm constrained to spherical and identical covariance matrices without the adjusted BIC for model selection (cf. [2]). Others have used latent variables representing clusters and cluster-dependent distributions to give a probabilistic approach to a slightly different problem, where instead of labels being known, only pairwise constraints in the form of must-link and cannot-link are given (cf. [3]). [21] applied an appropriately modified K-means to this problem. Finally, [7] and [23] offer a different approach to semi-supervised clustering involves training a measure to account for constraints or labels.

The main contribution of this paper over previous works is presenting a probabilistic framework for semi-supervised clustering and deriving an information criterion consistent with the framework to allow selection of number of clusters and/or clustering variables. With the corrected BIC, we found that in the simulated examples and fly larvae dataset our method outperformed the most comparable method, Mclust. In the fly dataset, ssClust was able to recover lines better and yielded a lower answering time more often for the question of whether two particular lines were different, behaviorally. This indicates that incorporating even a small amount of information can help guide the clustering process.

Future areas of research will involve handling the more nuanced must-link and cannot-link constraints, which are flexible enough to encompass the labels-known problem we have explored in this paper.

7 Appendix

A Derivation of the BIC for the Semi-supervised Model

Assume the data are distributed according to a member of model described in Section 3.

Consider the integrated likelihood:

| (3) |

where is a probability, pmf, or pdf where appropriate. Let

the log of the posterior likelihood. Suppose that the posterior mode exists, say .

A second order Taylor expansion about gives

By the first order optimality necessary conditions, we know Note faster than as . By ignoring the last term, we will approximate with the truncated Taylor expansion:

Recalling (3), we may approximate using a saddle point approximation:

Recognize the integral as proportional to the density of a multivariate Guassian with mean and covariance . Let Then, we have

| (4) |

where is number of free parameters in . If the conditions in Proposition 3.4.3 in [4] hold, we have for sufficiently large, where is the Fisher information matrix.

Taking of both sides, (4) becomes

Now, we must calculate . Define Observe

Henceforth, we will use to denote . We will assume that is positive definite, so that it can be written as

for some non-singular matrix . Let denote the -dimensional identity matrix. For notational purposes, let

Observe

where . It should be noted that is a positive semi-definite matrix, as it is a transform of a sum of positive semi-definite matrices, so that Sylvester’s Theorem states that has the same inertia as a positive semi-definite matrix.

Next, we would like to describe the growth of . For any eigenvalue of , say , we have by Weyl’s theorem

Observe

which is independent of . Let Then, we have

The only term growing with above is the ratio of the supervised data over the unsupervised data. In general, it is usually much more expensive to obtain additional supervised data than unsupervised; thus, we find it reasonable to posit that in (i.e. is ). In this case, is by continuity and our bounds.

Hence,

| (5) | |||||

| (6) |

When the posterior mode is nearly or is equal to the MLE, as is the case when the prior on is uniform and is finite (cf. Bickel and Doksum pp. 114), substitute , the MLE, for , the posterior mode. Then, we have

Note that the terms of order less than get washed out in the limit as . If we drop them, we have our derivation of the adjusted BIC. ∎

References

- opt [2011] Method of the year 2010. Nat Meth, 8(1):1–1, 01 2011. URL http://dx.doi.org/10.1038/nmeth.f.321.

- Basu et al. [2002] Sugato Basu, Arindam Banerjee, and Raymond Mooney. Semi-supervised clustering by seeding. In Proceedings of 19th International Conference on Machine Learning. Citeseer, 2002.

- Basu et al. [2004] Sugato Basu, Mikhail Bilenko, and Raymond J Mooney. A probabilistic framework for semi-supervised clustering. In Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining, pages 59–68. ACM, 2004.

- Bickel and Doksum [2001] Peter J. Bickel and Kjell A. Doksum. Mathematical Statistics, volume I. Prentice-Hall, Inc., 2001.

- Boekee and Buss [1981] DE Boekee and HH Buss. Order estimation of autoregressive models. In Proceedings of the 4th Aachen colloquium: Theory and application of signal processing, pages 126–130, 1981.

- Celeux and Govaert [1995] Gilles Celeux and Gérard Govaert. Gaussian parsimonious clustering models. Pattern recognition, 28(5):781–793, 1995.

- Cohn et al. [2003] David Cohn, Rich Caruana, and Andrew McCallum. Semi-supervised clustering with user feedback. Constrained Clustering: Advances in Algorithms, Theory, and Applications, 4(1):17–32, 2003.

- Dempster et al. [1977] Arthur P Dempster, Nan M Laird, and Donald B Rubin. Maximum likelihood from incomplete data via the em algorithm. Journal of the Royal Statistical Society. Series B (Methodological), pages 1–38, 1977.

- Fraley and Raftery [2002] Chris Fraley and Adrian E Raftery. Model-based clustering, discriminant analysis, and density estimation. Journal of the American Statistical Association, 97(458):611–631, 2002.

- Fraley et al. [2012] Chris Fraley, Adrian E. Raftery, T. Brendan Murphy, and Luca Scrucca. mclust version 4 for r: Normal mixture modeling for model-based clustering, classification, and density estimation. Technical Report 597, University of Washington, Department of Statistics, June 2012.

- Joe [2006] Harry Joe. Generating random correlation matrices based on partial correlations. Journal of Multivariate Analysis, 97(10):2177–2189, 2006.

- Maugis et al. [2009a] Cathy Maugis, Gilles Celeux, and M-L Martin-Magniette. Variable selection in model-based clustering: A general variable role modeling. Computational Statistics & Data Analysis, 53(11):3872–3882, 2009a.

- Maugis et al. [2009b] Cathy Maugis, Gilles Celeux, and Marie-Laure Martin-Magniette. Variable selection for clustering with gaussian mixture models. Biometrics, 65(3):701–709, 2009b.

- McLachlan and Peel [2004] Geoffrey McLachlan and David Peel. Finite mixture models. John Wiley & Sons, New York, 2004.

- Murphy et al. [2010] Thomas Brendan Murphy, Nema Dean, and Adrian E Raftery. Variable selection and updating in model-based discriminant analysis for high dimensional data with food authenticity applications. The annals of applied statistics, 4(1):396, 2010.

- Priebe [2001] Carey E. Priebe. Olfactory classification via interpoint distance analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, 23(4):404–413, 2001.

- Priebe et al. [2004] Carey E Priebe, David J Marchette, and Dennis M Healy Jr. Integrated sensing and processing decision trees. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 26(6):699–708, 2004.

- Raftery and Dean [2006] Adrian E Raftery and Nema Dean. Variable selection for model-based clustering. Journal of the American Statistical Association, 101(473):168–178, 2006.

- Shental et al. [2003] Noam Shental, Aharon Bar-Hillel, Tomer Hertz, and Daphna Weinshall. Computing gaussian mixture models with em using side-information. In Proc. of workshop on the continuum from labeled to unlabeled data in machine learning and data mining, 2003.

- Vogelstein et al. [2014] Joshua T Vogelstein, Youngser Park, Tomoko Ohyama, Rex A Kerr, James W Truman, Carey E Priebe, and Marta Zlatic. Discovery of brainwide neural-behavioral maps via multiscale unsupervised structure learning. Science, 344(6182):386–392, 2014.

- Wagstaff et al. [2001] Kiri Wagstaff, Claire Cardie, Seth Rogers, Stefan Schrödl, et al. Constrained k-means clustering with background knowledge. In Proceedings of the Eighteenth International Conference on Machine Learning, volume 1, pages 577–584, 2001.

- Witten and Tibshirani [2010] Daniela M Witten and Robert Tibshirani. A framework for feature selection in clustering. Journal of the American Statistical Association, 105(490), 2010.

- Xing et al. [2002] Eric P Xing, Michael I Jordan, Stuart Russell, and Andrew Y Ng. Distance metric learning with application to clustering with side-information. In Advances in neural information processing systems, pages 505–512, 2002.

- Zhu and Ghodsi [2006] Mu Zhu and Ali Ghodsi. Automatic dimensionality selection from the scree plot via the use of profile likelihood. Computational Statistics & Data Analysis, 51(2):918–930, 2006.