A Multi-Stage Goal-Driven Network for Pedestrian Trajectory Prediction

††thanks: This research was funded by Fujian NSF (2022J011112) and the Research Project of Fashu Foundation (MFK23001).

Abstract

Pedestrian trajectory prediction plays a pivotal role in ensuring the safety and efficiency of various applications, including autonomous vehicles and traffic management systems. This paper proposes a novel method for pedestrian trajectory prediction, called multi-stage goal-driven network (MGNet). Diverging from prior approaches relying on stepwise recursive prediction and the singular forecasting of a long-term goal, MGNet directs trajectory generation by forecasting intermediate stage goals, thereby reducing prediction errors. The network comprises three main components: a conditional variational autoencoder (CVAE), an attention module, and a multi-stage goal evaluator. Trajectories are encoded using conditional variational autoencoders to acquire knowledge about the approximate distribution of pedestrians’ future trajectories, and combined with an attention mechanism to capture the temporal dependency between trajectory sequences. The pivotal module is the multi-stage goal evaluator, which utilizes the encoded feature vectors to predict intermediate goals, effectively minimizing cumulative errors in the recursive inference process. The effectiveness of MGNet is demonstrated through comprehensive experiments on the JAAD and PIE datasets. Comparative evaluations against state-of-the-art algorithms reveal significant performance improvements achieved by our proposed method.

Index Terms:

trajectory prediction, autonomous driving, attention mechanism, goal drivenI Introduction

With the rapid advancement of autonomous driving technologies, the research and application of pedestrian trajectory prediction have increasingly attracted widespread attention. Pedestrians are an important part of the urban transportation system, and predicting their behavior patterns and trajectories has a crucial impact on traffic flow analysis, autonomous driving, intelligent monitoring, and urban planning[1, 2, 3, 4]. In interactive environments, the comprehension and prediction of pedestrian trajectories play a pivotal role in ensuring the safe navigation of autonomous driving systems. For instance, the precision of predicting pedestrians’ future trajectories is imperative for self-driving cars to avert collisions and uphold road safety. There is also a need to strategize secure and socially-aware paths while issuing alerts for unusual movements. Moreover, in intelligent monitoring, predicting pedestrian trajectories proves beneficial for detecting abnormal behaviors and potential safety risks. Real-time analysis of pedestrian trajectories enables prompt detection of safety hazards, including situations such as pedestrians in non-traffic areas or abnormal gatherings of pedestrian groups. Subsequently, appropriate measures can be implemented to prevent accidents.

In recent years, the swift advancement of deep learning technology, notably with the introduction of recurrent neural networks (RNN) and long short-term memory networks (LSTM), has substantially elevated the accuracy of pedestrian trajectory prediction. This progress has spawned a large amount of related research, leveraging a series of technologies such as attention mechanisms [5, 6, 7, 8], graph neural networks [9, 10, 11], generative models [12, 13, 14], and goal-driven networks [15, 16, 17, 18, 19] to better capture complex spatio-temporal relationships and understand pedestrian movement patterns, thereby achieving more accurate predictions of pedestrians’ future trajectories.

Although significant research has been conducted in recent years, the majority of studies have focused on aerial views or fixed cameras in video surveillance, rather than cameras installed on autonomous vehicles. The primary distinction is that the latter must account for ego-motion, thereby complicating pedestrian trajectory prediction from an egocentric perspective due to the influence of ego-motion captured by in-vehicle cameras. Most of the work [20, 21, 22, 23, 24] based on the egocentric perspective utilizes additional information such as ego-motion, semantic intention, image features or social interaction to some extent. However, in recent years, certain goal-driven models [25, 26] solely utilize observed trajectories as input and direct the generation of future trajectories by predicting either a long-term goal or step-by-step goals. Their performance surpasses that of many methods requiring additional features. Thus, we initiated a study on enhancing trajectory prediction performance through a goal-driven approach without the reliance on additional features. Diverging from existing goal-driven models, which typically estimate only the final destination or employ stepwise recursion, we introduce a multi-stage goal-driven network (MGNet). We posit that predicting stage goals can more effectively steer the forward recursive reasoning of the trajectory, consequently reducing cumulative errors in the reasoning process and enhancing long-term trajectory prediction. In real-life scenarios, pedestrians often need to plan a series of goals to guide their travel direction when aiming to reach a destination. This multi-stage goal is more detailed than a single goal and more accurately represents the pedestrian’s movement intention. Based on this assumption, we employ a conditional variational autoencoder (CVAE) augmented with an attention mechanism as the encoder. The CVAE learns the distribution of future trajectories conditioned on observed past trajectories through stochastic latent variables, while the attention mechanism captures complex temporal dependencies to better predict multi-stage goals. Then experiments show that estimating multi-stage goals provides superior guidance for predicting future trajectories.

The primary contributions of this work can be summarized into the following four parts:

-

•

We propose a novel pedestrian trajectory prediction network (MGNet) designed to mitigate errors in recursive prediction by anticipating multiple staged goals.

-

•

We employ the attention mechanism to integrate the trajectory distribution features acquired by CVAE, enhancing the prediction of staged goals in the future trajectory and consequently achieving further performance improvement.

-

•

We crafted a multi-stage goal evaluator characterized by a double-layer structure. This evaluator utilizes the goal features from the upper layer to inform the output of subsequent layers of goals.

- •

The remainder of the paper is structured as follows: Section II offers a thorough review of related works. Section III delineates the essential components of the proposed methods. Section IV outlines the experimental details and furnishes both qualitative and quantitative comparisons. Finally, Section V summarizes the findings and engages in a discussion of future works.

II Related Work

II-A Trajectory Prediction in Dynamic Video Scenes

The egocentric camera perspective is frequently considered the most natural viewpoint for observing the surrounding environment of an ego-vehicle. However, it poses additional challenges due to its limited field of view and ego-motion. Several studies have addressed these challenges by transforming the perspective into a bird’s-eye view using 3D sensors [28, 29, 30, 31, 32, 33]. While this method is feasible, it is susceptible to measurement errors and multimodal data processing issues, especially with LiDAR and stereo sensors.

Therefore, there are also many studies conducted directly under the egocentric view. Bhattacharyya et al. [34] employed Bayesian Long Short-Term Memory (LSTM) networks to model observation uncertainty and integrated them with ego-motion to predict the distribution of potential future positions. Yagi et al. [35] utilized information such as pose, locations, scales, and past ego-motion to predict the future trajectory of a person. Chandra et al. [22] models the interrelationships between nearby heterogeneous objects to predict trajectories. Yao et al. [36] proposed a novel multi-stream RNN encoder-decoder model that independently captures both object location and appearance. Makansi et al. [21] estimate a reachability prior for objects based on the semantic map and project this information into the future. Diverging from the aforementioned approaches, we abstain from employing additional features such as self-motion, semantic intent, and image characteristics. Instead, we exclusively utilize observed past trajectories as input to accomplish pedestrian trajectory prediction from an egocentric perspective.

II-B Attention-based Methods for Trajectory Prediction

In recent years, more and more studies have shown the effectiveness of attention mechanisms in trajectory prediction. Huang et al. [5] integrated a Graph Attention Network (GAT) with Long Short-Term Memory (LSTM) to model pedestrian motion. Sadeghian et al. [6] combine attention mechanisms with social interactions and physical information between pedestrians to generate future trajectories. Yu et al. [7] proposed a spatio-temporal graph transformer framework that exclusively employs the attention mechanism to address trajectory prediction. chiara et al. [8] introduce a straightforward yet effective attention-based recurrent architecture designed to handle temporal dependencies. nayakanti et al. [37] proposed an attention-based scene encoder-decoder capable of fusing one or more modalities across temporal and spatial dimensions. In our work, we integrate the attention mechanism with the generative model CVAE to enhance the generation of stage goals by capturing more intricate temporal dependencies.

II-C Goal-Driven Methods for Trajectory Prediction

Several works leverage goal prediction to improve prediction accuracy. Mangalam et al.[16] assist in long-range multi-modal trajectory prediction by inferring distant trajectory endpoints. Rhinehart et al. [38] introduce a generative multi-agent forecasting method capable of conditioning on agent goals and modeling the relationships between individual agent goals. Zhao et al. [39] predict an agent’s potential future goal states by encoding its interactions with the environment and other agents, and then generate a trajectory state sequence conditioned on the goal. Yao et al. [25] employs a bidirectional decoder on the predicted goal to enhance long-term trajectory prediction. Wang et al. [26] predict a series of stepwise goals at various temporal scales and integrate them into both encoders and decoders for trajectory prediction. Building upon the existing work, we have extended beyond the prediction of a single long-term goal or stepwise goals to guide trajectory generation. We have designed a multi-stage goal evaluator to predict multiple stage goals within future trajectories. By leveraging stage goal features, our aim is to reduce cumulative errors in recursive inference, thereby generating more accurate trajectories.

III Proposed Method

III-A Problem Formulation

As shown in Fig. 1, the purpose of trajectory prediction is to anticipate the future position sequence of the target in a scene based on the observed past position sequence. At time step t, we use to represent the past trajectories of n pedestrians. Where is the set of observation positions of pedestrian i in the past frames. For , it represents the position and size of a bounding box, where denotes the center coordinates, and represents the width and height of the bounding box, respectively. Given , a predictor can be employed to forecast the future trajectories for all n pedestrians, where represents the position of pedestrian i over the next frames. The definition of is similar to and denotes a bounding box. We aim to design a model such that the predicted future trajectory closely aligns with the actual future trajectory . The definitions of and are similar. Furthermore, we use to denote a series of stage goals for n pedestrians output by the multi-stage goal evaluator. Then, represents the stage goal position of pedestrian i in the upcoming frames, where denotes the division of the trajectory for the next frames into stage goals.

III-B Overview

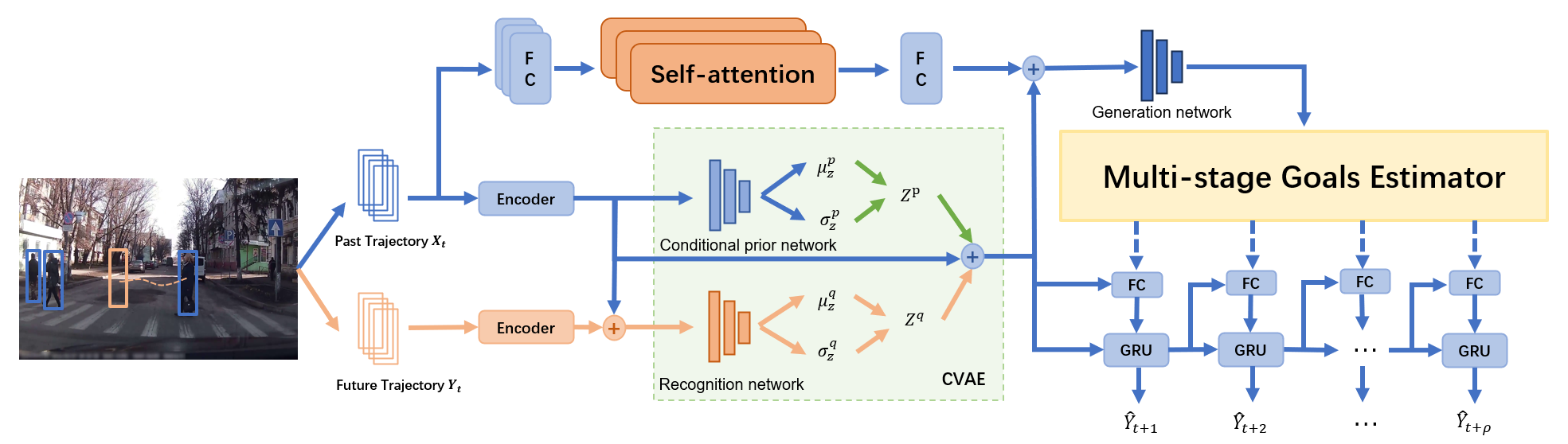

The model we propose is primarily structured around three key modules: the conditional variational autoencoder, the attention module, and the multi-stage goal evaluator. The encoding part of the model can be roughly divided into two sections. In the first part, to capture more intricate temporal dependencies, we utilize the parallel self-attention mechanism in Transformers to encode the past trajectory , resulting in the feature vector . In the second part, the past trajectory needs to be encoded through a Gated Recurrent Unit (GRU) encoder to obtain the encoded feature vector . During the training phase, the ground truth goal is encoded by another GRU encoder and concatenated with ht to generate the feature vector . Subsequently, the feature vectors and are respectively input into the conditional prior network and recognition network within the CVAE module to learn the distribution of future trajectories. The resulting output features are concatenated with the feature vectors and , and fed into the generation network and the forward recursive inference. The generation network then derives the feature vectors necessary for multi-stage goal evaluation. Finally, in the decoding stage, the multi-stage goal evaluator outputs hidden features for several stages of goals and incrementally incorporates them into the forward recursive inference process, resulting in the final future trajectory. The overall architecture of the proposed model is illustrated in Fig. 2.

III-C Temporal Attention

In recent years, Transformer[40] has achieved great success in time series data prediction. It pioneered self-attention, which can assign different attention weights to different positions in the input sequence, greatly improving the accuracy of time series data prediction. At the same time, multiple self-attention heads are also introduced to learn multiple sets of different attention weights, which enhances the model’s ability to model input sequences. In our work, we use the encoding part of Transformer. Given a past observed trajectory sequence , three different vectors are obtained through linear transformation: (query), (key) and (value). The attention weights are then calculated using these three vectors with the following formula:

| (1) |

where is the dimension of , used as the normalization factor. Based on the aforementioned formula, the multi-head attention (k heads) conducts multiple parallel attention calculations on distinct segments of the input sequence, its formula is expressed as:

| (2) | |||

| (3) |

where is a fully connected layer that merges the outputs of attention heads. The final embedding is generated by a fully connected layers.

III-D Conditional Variational Autoencoder

We also used a conditional variational autoencoder(CVAE) to encode the pedestrian’s past trajectory sequence to derive the latent variable , so as to learn and generate an approximate distribution of future trajectories. With reference to [14, 16, 25], Our CVAE consists of the following modules: 1) Recognition network , responsible for capturing the correlation between variable and the actual trajectory y. 2) Conditional prior network , tasked with modeling the latent variable based on past observed trajectories. 3) Generation network , responsible for encoding input features and generating multi-stage goals. Here, denote the parameters of corresponding networks. The three mentioned networks consist of three-layer multi-layer perceptrons. Different from previous work, we augment the generation network by incorporating latent features encoded by multi-head self-attention, so that it can learn richer temporal dependence information.

During the training stage, the past trajectory and the ground truth future trajectory are initially encoded using distinct gated-recurrent unit encoders to obtain the feature vectors and , respectively. To capture dependence information between past trajectories and ground truth future trajectories, we input the feature vectors and into the recognition network to predict the distribution mean and standard deviation of future trajectories. The conditional prior network assumes that only is utilized to predict the distribution mean and standard deviation , without knowledge of the ground truth future trajectory. Next, we sample from , combine it with and , and ultimately utilize the generation network to generate the hidden features required by the multi-stage goal evaluator. During the testing phase, the ground truth future trajectories are not available. So unlike the training phase, is sampled from to generate .

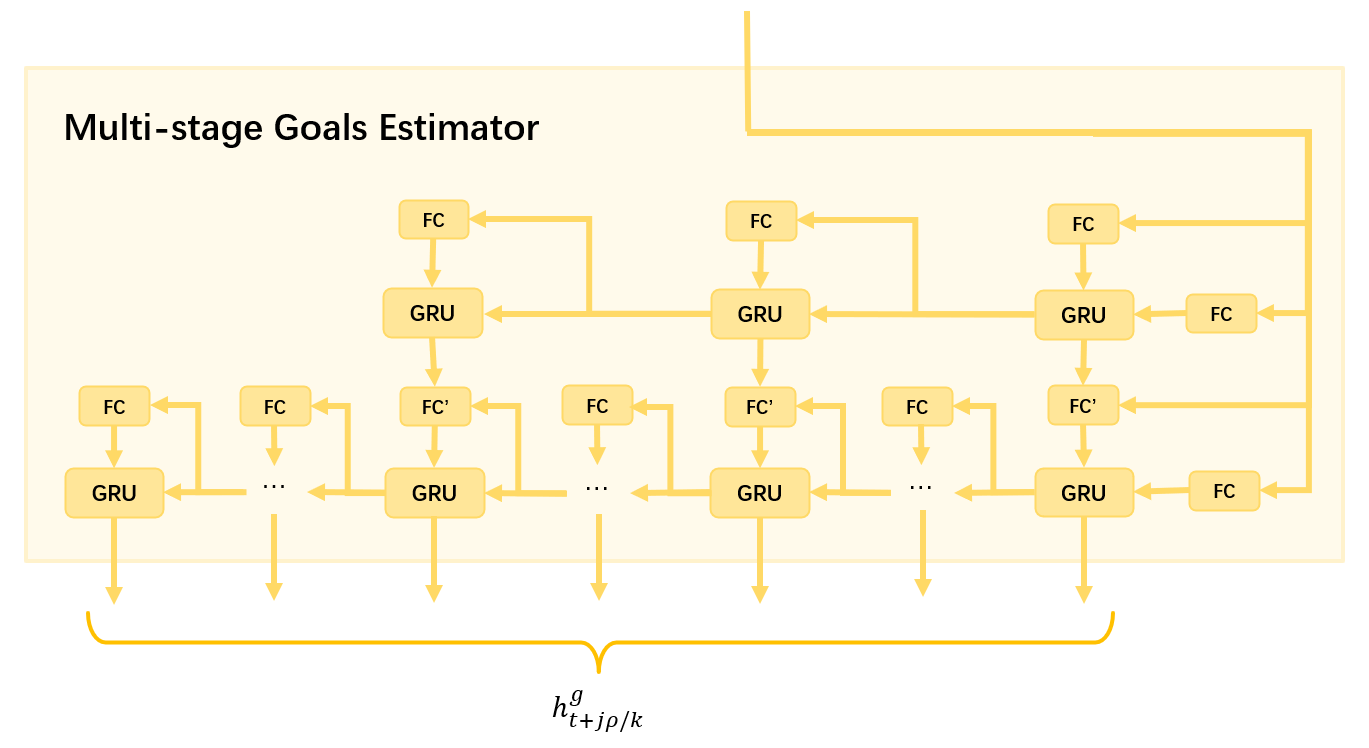

III-E Multi-Stage Goal Estimator

As shown in Fig. 3, the structure diagram of the multi-stage goal evaluator. It employs a double-layer architecture, proceeding from coarse to fine in a top-down manner. The features of upper-layer predicted stage goals guide the generation of lower-layer stage goals. Specifically, the evaluation device comprises a double-layer reverse RNN. Each layer is initialized with the feature vector as input and initialization, and generates the hidden feature of several stages of goals from time to . In the first layer, the entire trajectory is divided into three stages for prediction, while in the second layer, further refinement divides it into more stages. The specific number of segments can be adjusted based on the final model’s effectiveness, with subsequent experimental sections discussing this matter accordingly. At the same time step, the coarse-grained goal features of the upper layer are concatenated with the hidden features of the GRU in the lower layer to predict the fine-grained goal feature , which is then used as the output of the evaluator. Hence, we can define the output of the multi-stage target evaluator as:

| (4) |

Where denotes the index of the stage goal, signifies the number of time steps to be predicted, and represents dividing the future trajectory into stage goals. represents the entire multi-goal evaluator. Meanwhile, to compute the stage goal loss, it is fed into a fully connected layer to generate the stage goal .

During the final decoding stage, the stage target output by the evaluator at the same time step is concatenated with the hidden state of forward recursive inference to predict the final trajectory waypoint at that time. It’s worth noting that due to the variable number of stage target features output by the multi-objective evaluator, the connection between the evaluator and forward recursive reasoning in Fig. 2 is represented by a dotted line.

| Method | JAAD | PIE | ||||

|---|---|---|---|---|---|---|

| MSE | MSE | |||||

| ( 0.5 / 1.0 / 1.5s ) | (1.5s) | (1.5s) | ( 0.5 / 1.0 / 1.5s ) | (1.5s) | (1.5s) | |

| Linear [20] | 223 / 857 / 2303 | 1565 | 6111 | 123 / 477 / 1365 | 950 | 3983 |

| LSTM [20] | 289 / 569 / 1558 | 1473 | 5766 | 172 / 330 / 911 | 837 | 3352 |

| B-LSTM [34] | 159 / 539 / 1535 | 1447 | 5615 | 101 / 296 / 855 | 811 | 3259 |

| FOL-X [36] | 147 / 484 / 1374 | 1290 | 4924 | 47 / 183 / 584 | 546 | 2303 |

| PIEtraj [20] | 110 / 399 / 1248 | 1183 | 4780 | 58 / 200 / 636 | 596 | 2477 |

| PIEfull [20] | - | - | - | - / - / 559 | 520 | 2162 |

| BiTraP-D [25] | 93 / 378 / 1206 | 1105 | 4565 | 41 / 161 / 511 | 481 | 1949 |

| MGNet | 87 / 353 / 1132 | 1079 | 4452 | 37 / 145 / 474 | 445 | 1906 |

III-F Loss Functions

The model’s overall loss function comprises three components: trajectory prediction loss, goals loss, and The KL-divergence (KLD) loss. The trajectory prediction loss quantifies the error between the predicted trajectory by the model and the ground truth future trajectory . The goals loss measures the error between the staged goals predicted by the multi-stage goal estimator and the corresponding real goals . For the aforementioned two losses, we utilize the L2 norm for calculation. The formulas for the two losses are expressed as follows:

| (5) |

| (6) |

The KLD loss captures the difference between the distribution output by the recognition network of the CVAE module and the distribution output by the prior network in the same module. The total losses, including KLD losses, are as follows:

| (7) |

| Goal | JAAD | PIE | ||||

|---|---|---|---|---|---|---|

| MSE | MSE | |||||

| ( 0.5 / 1.0 / 1.5s ) | (1.5s) | (1.5s) | ( 0.5 / 1.0 / 1.5s ) | (1.5s) | (1.5s) | |

| 1 | 92 / 376 / 1212 | 1147 | 4683 | 45 / 164 / 525 | 493 | 2190 |

| 3 | 90 / 365 / 1157 | 1101 | 4537 | 39 / 150 / 490 | 460 | 1973 |

| 9 | 90 / 368 / 1179 | 1132 | 4638 | 37 / 145 / 474 | 445 | 1906 |

| 15 | 87 / 353 / 1132 | 1079 | 4452 | 40 / 150 / 484 | 454 | 1975 |

| 45 | 89 / 359 / 1159 | 1106 | 4565 | 41 / 156 / 503 | 473 | 2054 |

IV Experiments

IV-A Datasets

For our experiments, we assess the effectiveness of our framework by conducting evaluations on the JAAD[27] and PIE[20] datasets, specifically for the prediction of pedestrian trajectories from ego-centric perspectives. JAAD includes 2,800 pedestrian trajectories captured from dash cameras and annotated at 30Hz. PIE comprises 1,842 pedestrian trajectories, also annotated at 30Hz, featuring longer trajectories and more comprehensive annotations, including semantic intention, ego-motion, and neighboring objects. Following [20], we divided the datasets into training, testing, and validation sets with ratios of 50%, 40%, and 10%, respectively. Additionally, we use an observation length of 0.5 seconds to predict future trajectories of lengths 0.5, 1.0, and 1.5 seconds.

IV-B Implementation Details

We conducted experiments on a desktop server running Ubuntu 20.04 OS equipped with a 4.00GHz Intel Core i9-9900KS CPU, 64GB RAM, and a single NVIDIA GeForce RTX 3090 GPU. In our model architecture, Gated Recurrent Units (GRUs) serve as the backbone for both the encoder and decoder, each configured with a hidden size of 256. For the attention module, We utilize an embedding dimension of size 32 for spatial coordinates and employ a single transformer encoder layer with 8 multi-head attention heads. We utilize the Adam optimizer with an initial learning rate of 0.001, dynamically adjusted based on the validation loss. We use the ReLU as the activation function, and to address overfitting, we implement batch normalization and dropout in our model. The optimization is performed end-to-end with a batch size of 128, and the training process concludes after 100 epochs.

IV-C Evaluation Metrics

In this paper, we mainly utilize the mean squared error (MSE) between the upper left and lower right corners of the bounding box to evaluate the performance of our proposed approach. The specific evaluation formula is as follows:

| (8) |

where n is the number of predicted samples, is the ground truth, and is the predicted value of the model.

In addition, we also calculated the center mean squared error () and the center final mean squared error () for result evaluation. can measure the accuracy of the entire trajectory, while only measures the accuracy of the endpoints of the trajectory. The calculation formulas for both are similar to MSE, but note that their calculations are based on the bounding box centroid. All metrics are measured in pixels.

| BL | AT | ES | MSE | ||

|---|---|---|---|---|---|

| ( 0.5 / 1.0 / 1.5s ) | (1.5s) | (1.5s) | |||

| ✓ | 94 / 383 / 1231 | 1190 | 4734 | ||

| ✓ | ✓ | 92 / 376 / 1212 | 1147 | 4683 | |

| ✓ | ✓ | 89 / 362 / 1164 | 1113 | 4573 | |

| ✓ | ✓ | ✓ | 87 / 353 / 1132 | 1079 | 4452 |

IV-D Results

Quantitative Comparison. To ensure the reliability of the experimental results, we derived the model’s output from the average of three experiments. As depicted in Table I, we conducted a comparative analysis of our model against the current state-of-the-art algorithms on the JAAD and PIE datasets, achieving superior results across various indicators. Particularly noteworthy, compared to the BiTraP model, MGNet demonstrated an average performance increase of 6.3 and 8.9 under the MSE indicator on the JAAD and PIE datasets, respectively. These results underscore the pivotal role of guiding trajectory generation through predicting multi-stage goals in enhancing prediction accuracy.

Exploration Study We examine the impact on results by adjusting the number of stage goal features output by the multi-stage goal estimator, with the results presented in Table II. In the table, the output one goal signifies that the model does not utilize a multi-stage goal estimator and solely predicts a long-term goal to guide trajectory generation. The output of three goals represents a single-layer structure used in the multi-stage goal estimator, segmenting the future trajectory into three stages to guide trajectory generation. Experimental data indicates that on the JAAD dataset, the best results are achieved when 15 goal features are output, whereas on the PIE dataset, optimal results are obtained with 9 goal features. This emphasizes that the optimal number of output stage goal features is dataset-dependent and requires further adjustment. Additionally, it is observed that when the multi-stage goal evaluator is not used, that is, when only one goal feature is output, the results are the least favorable, providing further evidence of the efficacy of the multi-stage goal evaluator.

Ablation Study. In the JAAD dataset, we systematically remove various components from the model to assess their impact on the experimental results. The findings are presented in Table III. ”BL” in the table denotes the baseline, which solely utilizes CVAE encoding and outputs a single long-term goal to guide forward recursive inference for trajectory prediction. ”AT” represents the attention module, while ”ES” stands for the multi-stage goal evaluator. It is evident from the table that incorporating either the attention module or the multi-stage goal evaluator positively influences the results, with the multi-stage goal evaluator demonstrating the most significant improvement. Ultimately, the optimal results are achieved by combining the attention module and the multi-stage goal evaluator.

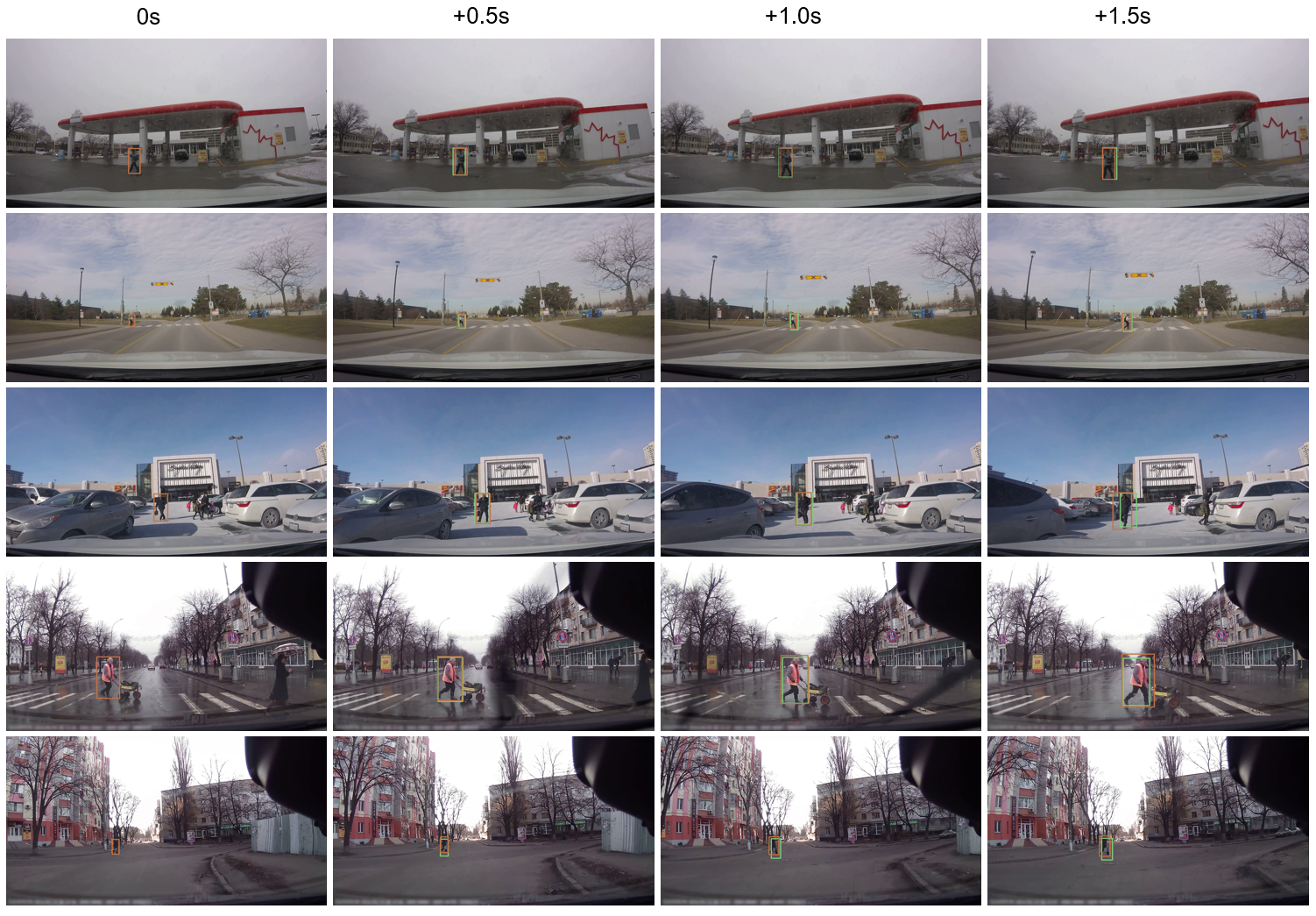

Qualitative Results. As shown in Fig. 4, we visualize the prediction bounding boxes and ground truth at several key time nodes (0.5s, 1.0s, 1.5s) to qualitatively demonstrate the prediction performance. The samples are extracted from the JAAD dataset, covering five representative scenes in daily life: 1) gas station, 2) main road in town, 3) parking lot, 4) crosswalk, and 5) intersection. In the depicted figure, our predicted bounding boxes closely align with the ground truth. Specifically, within the next 1.0 second, the predicted bounding boxes exhibits nearly identical location and size to the ground truth. At the subsequent 1.5 second, although the position and size of the predicted bounding boxes deviate from the actual situation, the overall position remains roughly consistent.

V Conclusion

In this work, we propose a new goal-driven network model (MGNet) for pedestrian trajectory prediction. Leveraging the generative model CVAE and an attention module, the model encodes trajectories and subsequently generates multiple stage goals through a multi-stage goal evaluator to guide future trajectory generation. Unlike most existing goal-driven models that exclusively estimate the final destination or distant goals, our approach posits that predicted stage goals can more effectively guide the forward recursive inference of the trajectory, thus reducing cumulative errors in the inference process. Experimental results substantiate the effectiveness of the proposed model compared to state-of-the-art methods. In future work, we aim to explore the construction of a trajectory memory model to enhance the guidance of stage goal generation and further improve model performance.

References

- [1] R. Okuda, Y. Kajiwara, and K. Terashima, “A survey of technical trend of adas and autonomous driving,” in Technical Papers of 2014 International Symposium on VLSI Design, Automation and Test. IEEE, 2014, pp. 1–4.

- [2] S. Coşar, G. Donatiello, V. Bogorny, C. Garate, L. O. Alvares, and F. Brémond, “Toward abnormal trajectory and event detection in video surveillance,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 27, no. 3, pp. 683–695, 2016.

- [3] E. Yurtsever, J. Lambert, A. Carballo, and K. Takeda, “A survey of autonomous driving: Common practices and emerging technologies,” IEEE access, vol. 8, pp. 58 443–58 469, 2020.

- [4] B. I. Sighencea, R. I. Stanciu, and C. D. Căleanu, “A review of deep learning-based methods for pedestrian trajectory prediction,” Sensors, vol. 21, no. 22, p. 7543, 2021.

- [5] Y. Huang, H. Bi, Z. Li, T. Mao, and Z. Wang, “Stgat: Modeling spatial-temporal interactions for human trajectory prediction,” in Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 6272–6281.

- [6] A. Sadeghian, V. Kosaraju, A. Sadeghian, N. Hirose, H. Rezatofighi, and S. Savarese, “Sophie: An attentive gan for predicting paths compliant to social and physical constraints,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 1349–1358.

- [7] C. Yu, X. Ma, J. Ren, H. Zhao, and S. Yi, “Spatio-temporal graph transformer networks for pedestrian trajectory prediction,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XII 16. Springer, 2020, pp. 507–523.

- [8] L. F. Chiara, P. Coscia, S. Das, S. Calderara, R. Cucchiara, and L. Ballan, “Goal-driven self-attentive recurrent networks for trajectory prediction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 2518–2527.

- [9] J. Sun, Q. Jiang, and C. Lu, “Recursive social behavior graph for trajectory prediction,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 660–669.

- [10] A. Mohamed, K. Qian, M. Elhoseiny, and C. Claudel, “Social-stgcnn: A social spatio-temporal graph convolutional neural network for human trajectory prediction,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 14 424–14 432.

- [11] L. Shi, L. Wang, C. Long, S. Zhou, M. Zhou, Z. Niu, and G. Hua, “Sgcn: Sparse graph convolution network for pedestrian trajectory prediction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 8994–9003.

- [12] A. Gupta, J. Johnson, L. Fei-Fei, S. Savarese, and A. Alahi, “Social gan: Socially acceptable trajectories with generative adversarial networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 2255–2264.

- [13] R. Liang, Y. Li, X. Li, Y. Tang, J. Zhou, and W. Zou, “Temporal pyramid network for pedestrian trajectory prediction with multi-supervision,” in Proceedings of the AAAI conference on artificial intelligence, vol. 35, no. 3, 2021, pp. 2029–2037.

- [14] N. Lee, W. Choi, P. Vernaza, C. B. Choy, P. H. Torr, and M. Chandraker, “Desire: Distant future prediction in dynamic scenes with interacting agents,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 336–345.

- [15] S. V. Albrecht, C. Brewitt, J. Wilhelm, B. Gyevnar, F. Eiras, M. Dobre, and S. Ramamoorthy, “Interpretable goal-based prediction and planning for autonomous driving,” in 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 1043–1049.

- [16] K. Mangalam, H. Girase, S. Agarwal, K.-H. Lee, E. Adeli, J. Malik, and A. Gaidon, “It is not the journey but the destination: Endpoint conditioned trajectory prediction,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part II 16. Springer, 2020, pp. 759–776.

- [17] N. Deo and M. M. Trivedi, “Trajectory forecasts in unknown environments conditioned on grid-based plans,” arXiv preprint arXiv:2001.00735, 2020.

- [18] P. Dendorfer, A. Osep, and L. Leal-Taixé, “Goal-gan: Multimodal trajectory prediction based on goal position estimation,” in Proceedings of the Asian Conference on Computer Vision, 2020.

- [19] K. Mangalam, Y. An, H. Girase, and J. Malik, “From goals, waypoints & paths to long term human trajectory forecasting,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 15 233–15 242.

- [20] A. Rasouli, I. Kotseruba, T. Kunic, and J. K. Tsotsos, “Pie: A large-scale dataset and models for pedestrian intention estimation and trajectory prediction,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 6262–6271.

- [21] O. Makansi, O. Cicek, K. Buchicchio, and T. Brox, “Multimodal future localization and emergence prediction for objects in egocentric view with a reachability prior,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 4354–4363.

- [22] R. Chandra, U. Bhattacharya, A. Bera, and D. Manocha, “Traphic: Trajectory prediction in dense and heterogeneous traffic using weighted interactions,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 8483–8492.

- [23] L. Neumann and A. Vedaldi, “Pedestrian and ego-vehicle trajectory prediction from monocular camera,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 10 204–10 212.

- [24] M. Huynh and G. Alaghband, “Online adaptive temporal memory with certainty estimation for human trajectory prediction,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023, pp. 940–949.

- [25] Y. Yao, E. Atkins, M. Johnson-Roberson, R. Vasudevan, and X. Du, “Bitrap: Bi-directional pedestrian trajectory prediction with multi-modal goal estimation,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 1463–1470, 2021.

- [26] C. Wang, Y. Wang, M. Xu, and D. J. Crandall, “Stepwise goal-driven networks for trajectory prediction,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 2716–2723, 2022.

- [27] I. Kotseruba, A. Rasouli, and J. K. Tsotsos, “Joint attention in autonomous driving (jaad),” arXiv preprint arXiv:1609.04741, 2016.

- [28] Y. Chai, B. Sapp, M. Bansal, and D. Anguelov, “Multipath: Multiple probabilistic anchor trajectory hypotheses for behavior prediction,” arXiv preprint arXiv:1910.05449, 2019.

- [29] J. Gao, C. Sun, H. Zhao, Y. Shen, D. Anguelov, C. Li, and C. Schmid, “Vectornet: Encoding hd maps and agent dynamics from vectorized representation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 11 525–11 533.

- [30] T. Salzmann, B. Ivanovic, P. Chakravarty, and M. Pavone, “Trajectron++: Dynamically-feasible trajectory forecasting with heterogeneous data,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XVIII 16. Springer, 2020, pp. 683–700.

- [31] H. Song, D. Luan, W. Ding, M. Y. Wang, and Q. Chen, “Learning to predict vehicle trajectories with model-based planning,” in Conference on Robot Learning. PMLR, 2022, pp. 1035–1045.

- [32] Z. Zhou, L. Ye, J. Wang, K. Wu, and K. Lu, “Hivt: Hierarchical vector transformer for multi-agent motion prediction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 8823–8833.

- [33] G. Aydemir, A. K. Akan, and F. Güney, “Adapt: Efficient multi-agent trajectory prediction with adaptation,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 8295–8305.

- [34] A. Bhattacharyya, M. Fritz, and B. Schiele, “Long-term on-board prediction of people in traffic scenes under uncertainty,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 4194–4202.

- [35] T. Yagi, K. Mangalam, R. Yonetani, and Y. Sato, “Future person localization in first-person videos,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 7593–7602.

- [36] Y. Yao, M. Xu, C. Choi, D. J. Crandall, E. M. Atkins, and B. Dariush, “Egocentric vision-based future vehicle localization for intelligent driving assistance systems,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 9711–9717.

- [37] N. Nayakanti, R. Al-Rfou, A. Zhou, K. Goel, K. S. Refaat, and B. Sapp, “Wayformer: Motion forecasting via simple & efficient attention networks,” in 2023 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2023, pp. 2980–2987.

- [38] N. Rhinehart, R. McAllister, K. Kitani, and S. Levine, “Precog: Prediction conditioned on goals in visual multi-agent settings,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 2821–2830.

- [39] H. Zhao, J. Gao, T. Lan, C. Sun, B. Sapp, B. Varadarajan, Y. Shen, Y. Shen, Y. Chai, C. Schmid et al., “Tnt: Target-driven trajectory prediction,” in Conference on Robot Learning. PMLR, 2021, pp. 895–904.

- [40] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.