A New Perspective on Privacy Protection in Federated Learning with Granular-Ball Computing

Abstract

Federated Learning (FL) facilitates collaborative model training while prioritizing privacy by avoiding direct data sharing. However, most existing articles attempt to address challenges within the model’s internal parameters and corresponding outputs, while neglecting to solve them at the input level. To address this gap, we propose a novel framework called Granular-Ball Federated Learning (GrBFL) for image classification. GrBFL diverges from traditional methods that rely on the finest-grained input data. Instead, it segments images into multiple regions with optimal coarse granularity, which are then reconstructed into a graph structure. We designed a two-dimensional binary search segmentation algorithm based on variance constraints for GrBFL, which effectively removes redundant information while preserving key representative features. Extensive theoretical analysis and experiments demonstrate that GrBFL not only safeguards privacy and enhances efficiency but also maintains robust utility, consistently outperforming other state-of-the-art FL methods. The code is available at https://github.com/AIGNLAI/GrBFL.

1 Introduction

Federated Learning (FL) is a distributed machine learning approach to solve the data silo problem, which aims to train a global model without the need to centralize the original dataset. The important problem of FL to be solved now is the trade-off between privacy, efficiency, and utility Zhang et al. (2022), i.e., how to protect privacy without significantly compromising utility and efficiency. However, existing FL methods often focus on improving model performance from the perspective of models and features, with few methods considering whether changing the input data of FL can enhance the model’s overall performance.

Significant challenges persist in FL concerning privacy, efficiency, and utility. FL models are vulnerable to various attacks Park et al. (2023); Liu et al. (2024); Rodríguez-Barroso et al. (2023); Al Mallah et al. (2023), such as those targeting the central server, local devices, or initiated by any participant within the FL workflow. Additionally, the distributed nature of training data across numerous clients, coupled with often unreliable and slow network connections, makes communication efficiency crucial Bao et al. (2023). In each learning round, clients independently compute model updates based on their local data and send these updates to the central server, which aggregates them to generate a new global model.

While many studies have addressed issues related to privacy, efficiency, and utility, the "No Free Lunch" (NFL) Zhang et al. (2022, 2023) principle highlights the lack of a solution that simultaneously improves all three aspects. We approached this problem from the perspective of input data, theoretically analyzing and experimentally validating reconstruction attacks. Our findings indicate that as the information content of input data decreases, the amount of information an attacker can reconstruct also diminishes. An analysis of the expected generalization error suggests that, provided a suitable model is selected and redundant information is removed, the model’s classification performance can be maintained. Furthermore, the reduction in information content significantly enhances FL efficiency. Therefore, reconstructing input data and eliminating redundant information presents an effective solution for improving FL.

Building on these findings, we realized that the concept of granular balls can be effectively applied to enhance privacy in FL by reconstructing input data rather than directly using the finest-grained data. Motivated by this insight, we proposed the Granular-Ball Federated Learning (GrBFL) framework and developed a two-dimensional binary search segmentation algorithm based on variance constraints. This framework is designed to detect gradient variations within images, extract representative information, and reconstruct image data into graph structures during the input stage. GrBFL not only ensures that crucial information is preserved with minimal impact on classification accuracy but also enhances privacy by preventing attackers from fully reconstructing the original data due to the loss of some redundant information. Additionally, GrBFL improves the efficiency of FL.

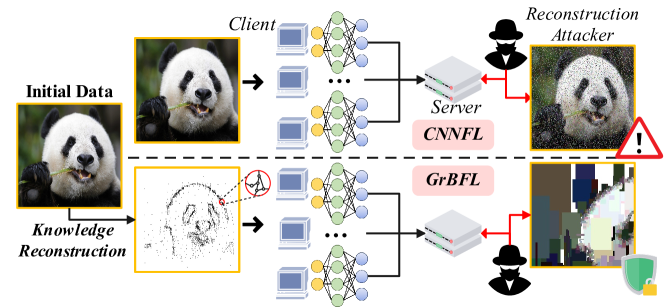

As shown in Figure 1, the traditional approach is to transform an image into a matrix and input each pixel to CNN, whereas, in granular-ball input, an image can be represented as a graph after granular-ball computing, where each node represents a structural block in the image and each edge represents the association between two nodes. Local models are trained in each client by GNN and then uploaded to the server for aggregation into global models. The node features contain information about the location and size of the granular rectangles, so the attacker needs to know not only the sample gradient and model parameters but also the meaning of the node features. We simulate an attacker performing a reconstruction attack, showing that the reconstructed image of GrBFL is less similar to the original data than CNNFL. Our model clearly provides better privacy protection and masks partial information of the input image.

In summary, the contributions of this paper are as follows:

-

We rigorously analyze the privacy, efficiency, and utility of FL from the perspective of input and validate our conclusions through experiments. To our knowledge, this is the first method to address the issues of FL from the input perspective.

-

We propose a novel GrBFL framework based on granular ball generation, which comprises two key components: (1) Knowledge reconstruction based on granular ball computing, selecting representative information through gradient analysis and reconstructing it into graph structures. (2) Joint aggregation based on graph inputs, introducing a proximal term to address instability issues in graph-based federated learning. Additionally, we designed a two-dimensional binary search segmentation algorithm based on variance constraints to facilitate knowledge reconstruction within this framework.

-

We explored the design of efficiency and comprehensive metrics in federated learning, introducing Communication Efficiency (CE) and Privacy-Efficiency-Utility-Measure (PEUM) to evaluate the effectiveness of our approach. Extensive experiments demonstrate that our proposed framework significantly enhances efficiency and effectively protects privacy, without compromising practicality.

2 Related Work

2.1 Federated learning

Federated learning (FL) is an emerging collaborative training paradigm that enables participants to train models while preserving data privacy jointly Yang et al. (2020); Gong et al. (2020). As FL continues to evolve, the importance of privacy protection has become increasingly prominent. Effectively safeguarding participants’ data privacy has become a central research focus, leading to continuous exploration and refinement of privacy-preserving techniques in existing methods Zhang et al. (2023).

Currently, privacy protection methods in FL primarily include differential privacy Le Ny and Pappas (2013); Chen et al. (2024); Jiang et al. (2024), secure multi-party computation Bonawitz et al. (2017); Bell et al. (2020), and homomorphic encryption Aono et al. (2017); Xu et al. (2020). These methods introduce innovations from the perspectives of models and features, enhancing privacy protection capabilities. However, few methods consider privacy protection from the perspective of input. Our research is the first to address this gap and propose a methodological approach in this area.

Moreover, performance and efficiency are equally crucial in FL Zhang et al. (2022, 2023). Although, due to the "No-Free-Lunch" principle, achieving significant improvements in all three aspects—privacy, performance, and efficiency—simultaneously is challenging, striving for a balance among them is essential. While previous works have made progress in specific areas, such as FedProx Li et al. (2020a) and FedOPT Ahmed et al. (2024) mitigating performance degradation due to heterogeneity and Scaffold Karimireddy et al. (2020) and FedNova Wang et al. (2020) improving efficiency and speed, these advancements remain incomplete. Our proposed framework aims to enhance efficiency while minimizing the loss in accuracy, thereby providing models with stronger generalization capabilities.

2.2 Granular Ball Computing

Granular Ball Computing (GBC) is a novel approach within the domain of multi-granularity computing, widely applied in machine learning and data mining. Unlike traditional methods that rely on the finest granularity of input data, such as pixel-level inputs for neural networks, GBC aligns more closely with the way the human brain processes information. Chen’s experiments demonstrated that in image recognition, the human brain prioritizes large-scale contour information over fine-grained details Chen (1982). This suggests that traditional machine learning models, which use the finest granularity inputs, are not only more susceptible to noise but also less interpretable, and misaligned with human cognitive processes.

Wang first introduced the concept of large-scale cognitive rules into granular computing, leading to the development of multi-granularity cognitive computing Wang (2017). Building on this, Xia et al. proposed Granular Ball Computing, where different sizes of hyperspheres represent varying levels of granularity: large granular balls for coarse granularity and small balls for fine granularity Xia et al. (2020, 2022). Recent advancements have further refined this approach. Shuyin et al. (2023) introduced an enhanced granular ball method for handling non-Euclidean spaces and capturing fine-grained boundary details. By using graph nodes to represent rectangular image regions instead of individual pixels, this method significantly reduces the amount of input data while preserving essential information. This not only enhances efficiency by minimizing redundant data processing but also strengthens privacy protection.

This deeply prompts us to consider the potential of GBC in enhancing privacy protection within FL. By focusing on coarse-grained and abstract representations rather than fine-grained data points, GBC naturally reduces the exposure of sensitive information. This characteristic presents a critical advantage in privacy-sensitive contexts such as FL.

3 Understanding Input in Federated Learning

Existing FL methods mostly focus on improving models and features, with few methods addressing the input level of the model. In this section, we will analyze the relationship between input and privacy, efficiency, and utility in FL from both theoretical and experimental perspectives, yielding practical results.

3.1 Problem Definition

FL is a machine-learning scenario with clients (denoted as ), and a central server (denoted as ). Each client has its private dataset . The goal of the entire system is to minimize the sum of the loss functions on all clients:

| (1) |

where, is the total number of samples on all clients.

3.2 Input and Privacy

A common attack in FL is the data reconstruction attack Zhu et al. (2019), which reconstructs the original training data using the model’s weights and gradients with the L-BFGS algorithm, posing a significant data security risk.

The loss function is the optimization objective for the reconstruction attack. This loss function is typically used to measure the difference between the original data and the reconstructed sample and can be a norm or other forms of divergence measures. We use to represent the network during the attack process. Consider the impact of a small change in on the loss function :

| (2) |

where represents the change in the loss function and represents the Jacobian matrix of the loss function with respect to .

During the iteration process, is updated as follows:

| (3) |

where is an approximation of the Hessian matrix, is the gradient of the current inferred data , and is estimated using the changes in parameters and gradients over the past few iterations. This approximation better reflects the local curvature information of the current parameters.

We want the loss function to decrease. Thus, we need:

| (4) |

The L-BFGS algorithm updates parameters by estimating the inverse of the Hessian matrix. At each iteration, it approximates the inverse matrix of the Hessian at the current parameter value . Therefore, the direction of is influenced by the inverse of the Hessian matrix. As the input data decreases, the magnitude of becomes smaller. This means that the influence of in the algorithm weakens, and parameter updates are more influenced by the inverse of the Hessian matrix. The L-BFGS algorithm can more accurately estimate the direction of parameter updates, leading to faster convergence to the minimum of the loss function.

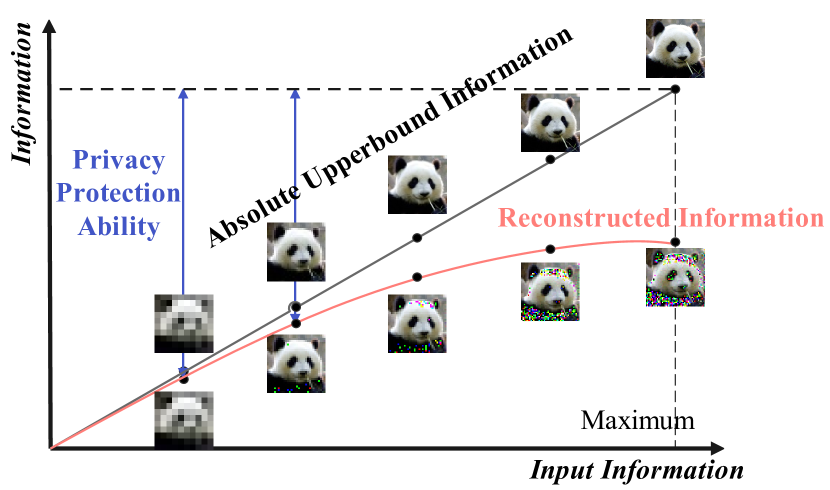

Figure 2 illustrates the trend of the information of data reconstructed by the model as the input information changes, which also validates the theory we proposed above. When input data decreases, the reconstruction attacker can better restore the data, but the absolute information content of the obtained data will not exceed that of the original image. Therefore, considering FL’s privacy protection from the input perspective is feasible.

3.3 Input and Utility & Efficiency

We can decompose the expected generalization error of FL, analyzing the relationship between input and utility. For a given model and data , the expected generalization error of FL can be expressed as:

| (5) | ||||

In Equation 5, we decompose the generalization error into model bias, variance, and data noise. Model bias depends on the model’s fitting capability and the proportion of effective information. As the amount of ineffective data decreases, both variance and data noise decrease, while model bias remains unchanged. Conversely, when the amount of effective data decreases, variance and data noise also decrease, but model bias increases. This suggests that it is feasible to retain only the effective information from the input data and reconstruct it, as long as the model’s fitting capability is preserved, thereby maintaining the utility of FL.

From an efficiency perspective, for a fixed model and algorithm, when the information content of the input data decreases, the total computation required by the model reduces, thereby enhancing the efficiency of FL.

4 Methodology

4.1 Overview

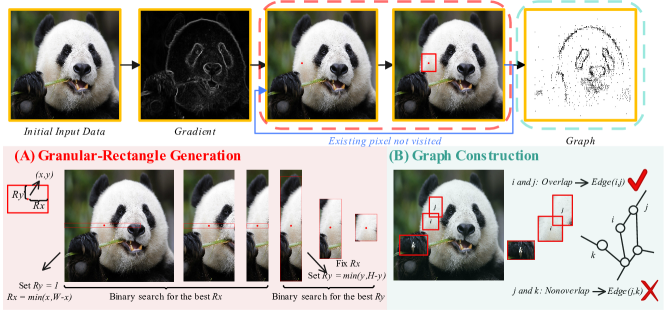

Our proposed framework comprises two main components: granular-ball-based knowledge reconstruction and graph input-based aggregation with variance constraints. For the knowledge reconstruction, we developed a two-dimensional binary search algorithm based on variance constraints. Figure 3 illustrates the knowledge reconstruction process of this algorithm. For the input data, we first compute the gradient map using the Sobel operator. Next, we iteratively select the point with the smallest gradient that has not yet been chosen to generate a granular-rectangle, continuing this process until all points have been processed. During the generation of each granular-rectangle, we first fix its height and determine the appropriate length using a bisection method based on purity constraints. Then, with the length fixed, we similarly determine the appropriate height. When no further granular-rectangle can be generated, we reconstruct all the granular information into a graph: each granularity becomes a node, and an edge is formed between any two granular-rectangle that share overlapping pixels. In this way, the image is reconstructed into a graph. We then apply an appropriate graph neural network for federated learning, and perform model aggregation based on variance constraints, before finally uploading the aggregated results to the server.

4.2 Granular-Ball Based Knowledge Reconstruction

Equation 4 and Equation 5 inspired us to consider how to balance privacy, efficiency, and utility in FL from the input perspective. From the perspectives of utility and efficiency, the performance of a model often depends on the amount of information in the data itself rather than the form in which the data is stored. This insight led us to consider privacy protection from the standpoint of data storage forms. Simply adding noise or blurring the original images can interfere with machine classification accuracy, but reconstructed images by such methods are often still recognizable to humans.

As shown in Figure 2, with very little information, the model cannot effectively learn the features of a panda, but for humans, it is still recognizable. Therefore, we need to consider filtering and reconstructing the input data to ensure that the reconstructed images are difficult for both humans and machines to recognize. The granular computing methods discussed in Xia et al. (2019) and Shuyin et al. (2023) focus on extracting and integrating input information, which is then fed into the model at a coarser granularity. This approach aligns with our concept of reconstructing input information to achieve privacy protection in federated learning. Building on this, we have designed an algorithm for the GrBFL framework.

To achieve image segmentation from fine to coarse granularity, a straightforward approach is to group adjacent similar pixels into the same granular-ball. This allows us to retain the original image structure while removing redundant information. It is important to note that the term "granular-ball" here does not literally refer to a spherical shape, as the image itself is two-dimensional. Therefore, using a rectangle is more appropriate for modeling regions within the image. We first compute the gradient map of the image using operators such as Sobel. For each pixel, the greater the gradient, the fewer similar pixels are typically found in its vicinity. Therefore, we sort the pixels in ascending order of their gradient values and construct granular-rectangle for each pixel accordingly. Purity is a classical metric for assessing the quality of granular-ball generation, which we extend to the context of image granular-rectangles. In image processing, the purity of a granular-rectangle is defined as the ratio of normal pixels to the total number of pixels within the granular-rectangle. Here, normal pixels refer to those whose gray-scale values differ from the granule’s center pixel by no more than a specified threshold. The calculation of purity is shown in Equation 6.

| (6) |

where is the ID of granular-rectangle, is the centre, is the other pixel points within the granular-rectangle, is the defined threshold, and are the length and width of the granular-rectangle, is the granular-rectangle, is gray-scale value.

Identifying the largest granular rectangle that satisfies the purity constraint is a critical challenge that requires careful consideration. Evaluating each pixel individually can lead to prohibitively long processing times, especially when dealing with large-scale image data. However, it is noteworthy that the purity function demonstrates global monotonicity: as the granular rectangle expands in any direction, its purity consistently decreases. By leveraging this property, a binary search strategy can be employed, starting with a larger size and progressively narrowing down, to efficiently determine the optimal dimensions of the granular rectangle that satisfy the purity constraint. This approach significantly reduces the algorithm’s complexity to a logarithmic scale.

After obtaining all the granular rectangles, we construct a graph structure by abstracting the center of each granular rectangle as a node in the graph. Additionally, we consider the pairwise overlapping relationships between granular rectangles. If two granular rectangles overlap, an edge is added between the corresponding nodes; otherwise, no edge is present between the nodes. This graph construction method preserves the original structural information while also encapsulating the relationships between granular rectangles. Formally, the reconstructed graph is defined as:

Definition 1.

(Granular-Rectangle Graph) The Granular-Rectangle Graph can be defined as an undirected graph , where is the set of granular-rectangle. Each granular-rectangle is defined as , where and represent the coordinates of the granular-rectangle’s center, and represent the dimensions of the granular-rectangle, and , , , and denote the mean, variance, maximum, and minimum pixel values within the granular-rectangle, respectively. The edge set is defined such that an edge exists if and only if and .

In the FL scenario, each client processes the input image data as described above, resulting in a series of graph data. The GrBFL framework then inputs these graph data into the graph neural network for training. After a certain number of training rounds, the models from each client will be aggregated. The specific process of model aggregation will be detailed in the following section.

4.3 Graph Input-Based Aggregations

Through extensive experiments, we found that, compared to traditional graph datasets like Cora, the datasets generated from granular-ball computing exacerbate the differences between client models in FL. This increased disparity poses challenges for methods that rely on directly weighted averaging of model parameters and gradients, such as slower convergence rates. As shown in Table 1, various FL aggregation strategies were evaluated on our dataset, with the results indicating that the simple FedProx Li et al. (2020a) aggregation strategy performed better. The proximal term introduced in FedProx effectively mitigated the instability of graph neural networks when processing granular-ball inputs. The FedProx method is an evolution of the FedAvg McMahan et al. (2017) algorithm, which reduces discrepancies in model parameter adjustments by incorporating a proximal term into the loss function of the client models. This not only accelerates the convergence of the global model but also alleviates the instability encountered by graph neural networks in the context of granular-ball inputs.

| Method | FedAVG | SCAFFOLD | FedNova | Fedprox |

| Acc | 92.33% | 90.58% | 90.08% | 96.25% |

The FedProx algorithm locally introduces a proximal term to limit parameter updates, i.e. becomes , thus minimizing the following loss function:

| (7) |

Following aggregation under the FedProx mechanism, the server transmits the graph neural network model back to the client, thereby concluding a round of FL.

4.4 Complexity analysis.

The time complexity of our method for processing gradients and sorting is , where and represent the length and width of the image, respectively. When solving for the granular-rectangle size using a binary search algorithm, the time complexity is , where is the number of regions and . Constructing the granular-rectangle graph has a time complexity of . This method is highly efficient for large-scale image processing. Testing shows that for common 224x224 image data, our method completes data reconstruction in just , thereby ensuring model performance.

5 Experiment

5.1 Experiment Setup

Datasets. We conducts a detailed analysis on three classic image datasets: MNIST, CIFAR-10, and CIFAR-100. The varying difficulty levels of these datasets allow for a comprehensive evaluation of the model’s overall performance.

Baselines. To highlight the strong performance of GrBFL in terms of privacy protection, we compared it with several methods under the CNNFL framework. Specifically, we compared GrBFL with the differential privacy Le Ny and Pappas (2013) and the LotteryFL Li et al. (2020b) within the CNNFL framework. The former achieves privacy protection from a feature perspective, while the latter does so from a model perspective.

Implementation. The code for this study was implemented using PyTorch, ensuring that all FL models were evaluated in a consistent computational environment. Within the GrBFL framework, we explored the applicability of this approach across different graph neural network models, including GCN, GAT, GIN, and GraphSAGE. The entire training process was conducted on an NVIDIA RTX-3090 GPU, with specific training parameters and details provided in the supplementary materials.

5.2 Evaluation Metrics

To conduct a thorough evaluation of the model within the context of FL, which includes the aspects of privacy, efficiency, utility, and their comprehensive considerations, we carefully define the following metrics.

Privacy. A key challenge in FL privacy protection is that attackers may infer information about the training data through model parameters and gradients. In this experiment, we assume a scenario where an attacker has successfully obtained model parameters and gradient data related to specific samples from a client. Additionally, we assume that the attacker has comprehensive knowledge of the node feature semantics in the granular computing domain. With this information, the attacker might ultimately reconstruct an image. To evaluate the level of privacy protection achieved under these specific conditions, we introduce a privacy score, denoted as , which serves as a quantitative metric. The formula for the privacy score is defined in Equation 8.

| (8) |

where refers to the actual image, refers to the image reconstructed by the attacker, and is mean square error.

Efficiency. We use model communication efficiency (CE) to evaluate the efficiency of different models. In the evaluation of communication efficiency within the FL framework, we meticulously recorded the duration and traffic metrics of communications. Using this data, we calculated the average communication time, which serves as a quantitative indicator of the model’s communication efficiency throughout the entire FL cycle. The formal definition of communication efficiency (CE) can be found in Equation 9.

| (9) |

where, denotes the sigmoid function, which is a crucial component in calculating communication efficiency. The variables time and traffic represent the duration of communication and the volume of data communicated, respectively. Specifically, traffic is quantified as the product of the number of model parameters and the communication frequency. Additionally, is a scaling factor used to uniformly map the metric values to a range between 0 and 1.

Utility. We selected accuracy, a widely recognized multi-class classification metric, as the primary tool for evaluating model utility. Accuracy is used to assess the performance of the aggregated FL model across all test samples, reflecting the aggregation level and representational capability of the individual client models.

Comprehensive measurement. The evaluation of federated learning (FL) is not limited to a single aspect such as privacy, efficiency, practicality, or isolation. Considering the diversity of these dimensions, we have developed a comprehensive evaluation metric called PEUM (Privacy-Efficiency-Utility Metric) to integrate these three key dimensions and assess the overall effectiveness of the methods considered. The composite score of the model is represented as the harmonic mean of accuracy, communication efficiency, and privacy score. Formally, this is expressed by Equation 10.

| (10) |

5.3 Main Results

The GrBFL framework demonstrates exceptional efficacy in privacy protection.

To rigorously evaluate the privacy protection capabilities of the GrBFL and CNNFL frameworks, this study conducted reconstruction attack experiments under the GCN and ResNet models. The experiments were performed across three datasets. For each dataset, a set of 100 random training samples was systematically extracted and subjected to 300 iterations to compute . The attack model for CNNFL can be referenced in Zhu et al. (2019). The attack model for GrBFL is similar to that of CNNFL. In this case, the attacker can access the weights of the graph neural network and the gradients of the data, using continual optimization to reconstruct the original graph data. We assume that the attacker understands the semantics of node features. However, since node features only contain coarse pixel information, the attacker can only determine the position and size of each granule rectangle but cannot precisely obtain the specific pixel values.

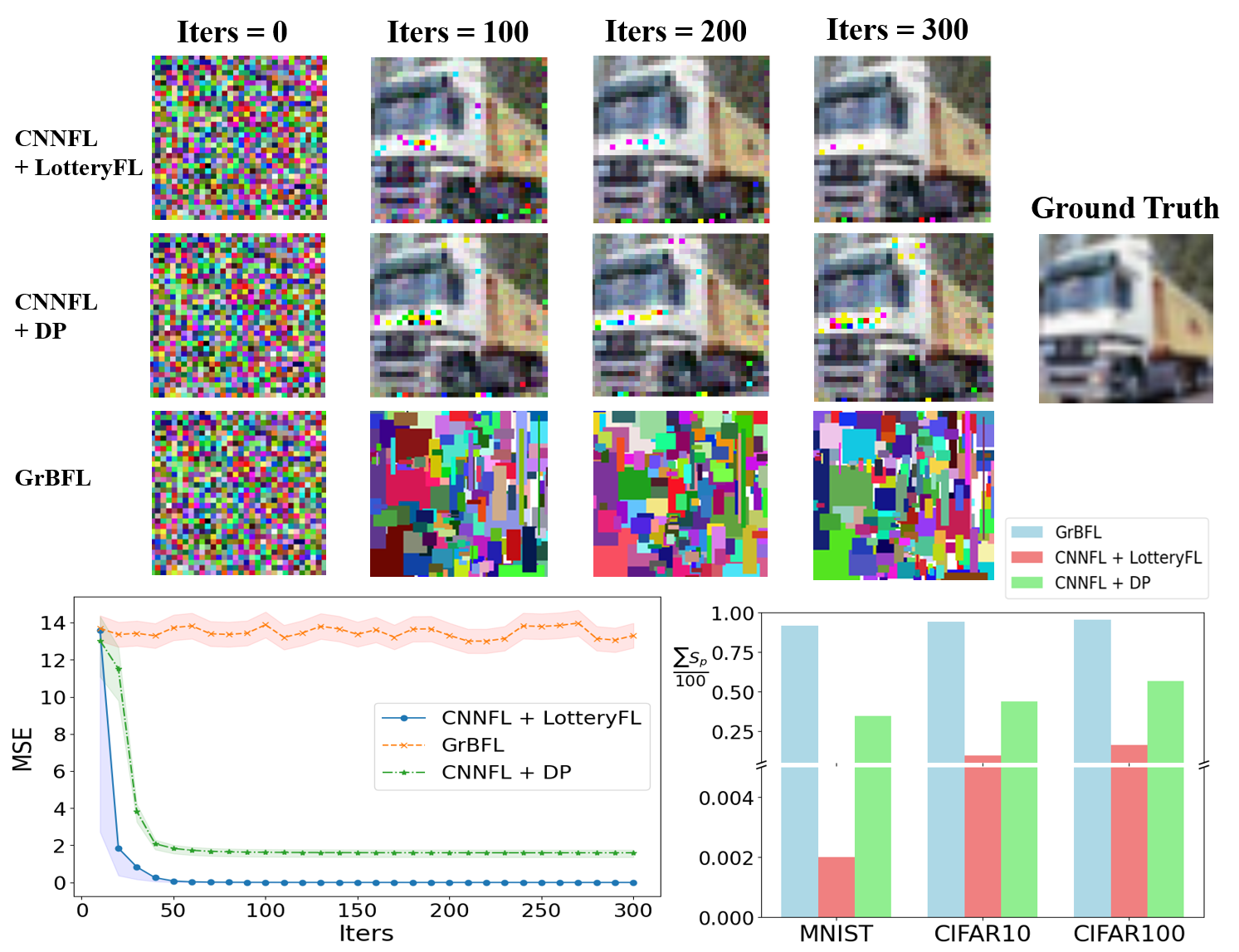

Figure 4 illustrates the results of our privacy protection experiments. The upper section compares the differential privacy performance of GrBFL, LotteryFL, and Differential Privacy (DP) across different numbers of iterations. It can be observed that, although LotteryFL and DP provide some degree of privacy protection, reconstruction of the data remains possible as the number of iterations increases. In contrast, GrBFL demonstrates significant effectiveness in privacy protection. Even with a surge in the number of iterations, GrBFL’s inherent information loss mechanism effectively hinders the attacker’s ability to accurately reconstruct the training data.

Figure 4 quantitatively displays the results of the privacy protection experiments. The line chart in the lower left quadrant shows the trajectory of mean squared error (MSE) loss between reconstructed images and the original images for different scenarios, indicating that GrBFL maintains a significant divergence in data recovery, thereby validating its effectiveness in privacy protection. The bar chart in the lower right section presents the average results for 100 random samples from three datasets, demonstrating that GrBFL exhibits strong privacy protection capabilities across all datasets.

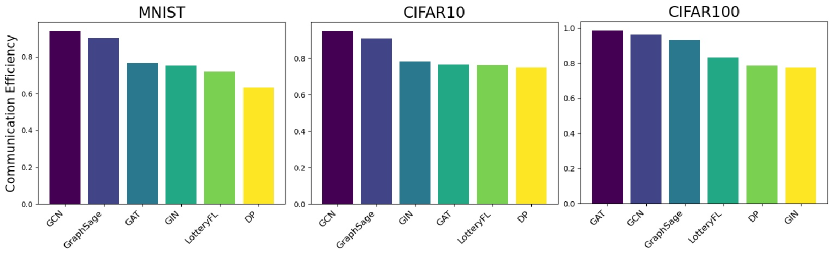

GrBFL achieves superior communication efficiency. Figure 5 provides a visualization of communication efficiency. The experimental results demonstrate that GrBFL performs exceptionally well in terms of communication efficiency. Specifically, in the three experimental datasets, when the parameter is set to , the model efficiency score for GrBFL is significantly higher compared to FL methods based on the CNNFL framework.

GrBFL performs well in the comprehensive evaluation. We conducted a comprehensive assessment of each model using the metrics defined in Equation 10, with the results shown in Table 2. Although privacy protection methods from both feature and model perspectives achieve some degree of privacy protection, their overall scores are not balanced. This validates the rationale of considering knowledge reconstruction from the input perspective, as this approach effectively protects privacy without excessively compromising efficiency and utility, and even achieves better overall performance.

| Framework | Methods | MNIST | CIFAR10 | CIFAR100 |

| GAT | 0.29 0.02 | 0.24 0.05 | 0.16 0.07 | |

| GCN | 0.32 0.02 | 0.25 0.03 | 0.14 0.04 | |

| GIN | 0.29 0.01 | 0.22 0.06 | 0.12 0.04 | |

| GrBFL (Ours) | GraphSage | 0.31 0.00 | 0.22 0.02 | 0.12 0.02 |

| DP | 0.27 0.04 | 0.21 0.05 | 0.11 0.04 | |

| CNNFL | LotteryFL | 0.22 0.03 | 0.22 0.01 | 0.12 0.03 |

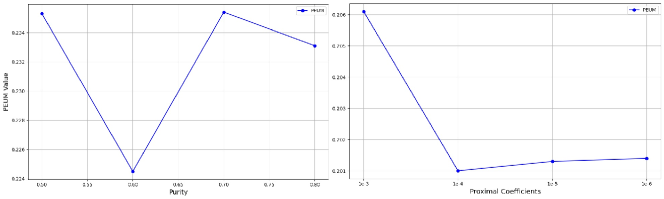

Parameter Sensitivity Study. We conducted a parameter sensitivity analysis on the purity of the constructed granular-receptacle and the proximal term coefficient in the client model’s loss function. The results in Figure 6 indicate that our method exhibits strong robustness, with the impact of parameter variations not exceeding 0.1.

Additionally, we conducted experiments on membership inference attacks. The detailed results of additional experiment results, along with the details of experiments mentioned earlier, can be found in the supplementary materials.

6 Conclusion

In this paper, we theoretically analyzed the feasibility of achieving a balance between privacy, efficiency, and utility in FL from the input perspective. Based on the concept of granular-ball computation, we proposed a new FL framework called GrBFL. We converted images into graphs through adaptive granular ball computation and then input them into a graph FL model for classification. Additionally, we designed two metrics to evaluate the effectiveness of the proposed FL paradigm. Experimental results validated the correctness of this input perspective approach. In future work, we will continue to investigate the impact of inputs in FL and develop more algorithms for GrBFL.

References

- Zhang et al. (2022) Xiaojin Zhang, Hanlin Gu, Lixin Fan, Kai Chen, and Qiang Yang. No free lunch theorem for security and utility in federated learning. ACM Transactions on Intelligent Systems and Technology, 14(1):1–35, 2022.

- Park et al. (2023) Sungwon Park, Sungwon Han, Fangzhao Wu, Sundong Kim, Bin Zhu, Xing Xie, and Meeyoung Cha. Feddefender: Client-side attack-tolerant federated learning. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, pages 1850–1861, 2023.

- Liu et al. (2024) Yang Liu, Yan Kang, Tianyuan Zou, Yanhong Pu, Yuanqin He, Xiaozhou Ye, Ye Ouyang, Ya-Qin Zhang, and Qiang Yang. Vertical federated learning: Concepts, advances, and challenges. IEEE Transactions on Knowledge and Data Engineering, 2024.

- Rodríguez-Barroso et al. (2023) Nuria Rodríguez-Barroso, Daniel Jiménez-López, M Victoria Luzón, Francisco Herrera, and Eugenio Martínez-Cámara. Survey on federated learning threats: Concepts, taxonomy on attacks and defences, experimental study and challenges. Information Fusion, 90:148–173, 2023.

- Al Mallah et al. (2023) Ranwa Al Mallah, David Lopez, Godwin Badu-Marfo, and Bilal Farooq. Untargeted poisoning attack detection in federated learning via behavior attestation. IEEE Access, 2023.

- Bao et al. (2023) Ergute Bao, Dawei Gao, Xiaokui Xiao, and Yaliang Li. Communication efficient and differentially private logistic regression under the distributed setting. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, pages 69–79, 2023.

- Zhang et al. (2023) Xiaojin Zhang, Yan Kang, Kai Chen, Lixin Fan, and Qiang Yang. Trading off privacy, utility, and efficiency in federated learning. ACM Transactions on Intelligent Systems and Technology, 14(6):1–32, 2023.

- Yang et al. (2020) Qiang Yang, Lixin Fan, and Han Yu. Federated Learning: Privacy and Incentive, volume 12500. Springer Nature, 2020.

- Gong et al. (2020) Maoguo Gong, Yu Xie, Ke Pan, Kaiyuan Feng, and Alex Kai Qin. A survey on differentially private machine learning. IEEE Computational Intelligence Magazine, 15(2):49–64, 2020.

- Le Ny and Pappas (2013) Jerome Le Ny and George J Pappas. Differentially private filtering. IEEE Transactions on Automatic Control, 59(2):341–354, 2013.

- Chen et al. (2024) Lin Chen, Xiaofeng Ding, Zhifeng Bao, Pan Zhou, and Hai Jin. Differentially private federated learning on non-iid data: Convergence analysis and adaptive optimization. IEEE Transactions on Knowledge and Data Engineering, 2024.

- Jiang et al. (2024) Yangfan Jiang, Xinjian Luo, Yuncheng Wu, Xiaochen Zhu, Xiaokui Xiao, and Beng Chin Ooi. On data distribution leakage in cross-silo federated learning. IEEE Transactions on Knowledge and Data Engineering, 2024.

- Bonawitz et al. (2017) Keith Bonawitz, Vladimir Ivanov, Ben Kreuter, Antonio Marcedone, H Brendan McMahan, Sarvar Patel, Daniel Ramage, Aaron Segal, and Karn Seth. Practical secure aggregation for privacy-preserving machine learning. In proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, pages 1175–1191, 2017.

- Bell et al. (2020) James Henry Bell, Kallista A Bonawitz, Adrià Gascón, Tancrède Lepoint, and Mariana Raykova. Secure single-server aggregation with (poly) logarithmic overhead. In Proceedings of the 2020 ACM SIGSAC Conference on Computer and Communications Security, pages 1253–1269, 2020.

- Aono et al. (2017) Yoshinori Aono, Takuya Hayashi, Lihua Wang, Shiho Moriai, et al. Privacy-preserving deep learning via additively homomorphic encryption. IEEE Transactions on Information Forensics and Security, 13(5):1333–1345, 2017.

- Xu et al. (2020) Guowen Xu, Hongwei Li, Yun Zhang, Shengmin Xu, Jianting Ning, and Robert H Deng. Privacy-preserving federated deep learning with irregular users. IEEE Transactions on Dependable and Secure Computing, 19(2):1364–1381, 2020.

- Li et al. (2020a) Tian Li, Anit Kumar Sahu, Manzil Zaheer, Maziar Sanjabi, Ameet Talwalkar, and Virginia Smith. Federated optimization in heterogeneous networks. Proceedings of Machine Learning and Systems, 2:429–450, 2020a.

- Ahmed et al. (2024) Syed Thouheed Ahmed, V Vinoth Kumar, TR Mahesh, LV Narasimha Prasad, AK Velmurugan, V Muthukumaran, and VR Niveditha. Fedopt: federated learning-based heterogeneous resource recommendation and optimization for edge computing. Soft Computing, pages 1–12, 2024.

- Karimireddy et al. (2020) Sai Praneeth Karimireddy, Satyen Kale, Mehryar Mohri, Sashank Reddi, Sebastian Stich, and Ananda Theertha Suresh. Scaffold: Stochastic controlled averaging for federated learning. In International Conference on Machine Learning, pages 5132–5143. PMLR, 2020.

- Wang et al. (2020) Jianyu Wang, Qinghua Liu, Hao Liang, Gauri Joshi, and H Vincent Poor. Tackling the objective inconsistency problem in heterogeneous federated optimization. Advances in Neural Information Processing Systems, 33:7611–7623, 2020.

- Chen (1982) Lin Chen. Topological structure in visual perception. Science, 218(4573):699–700, 1982.

- Wang (2017) Guoyin Wang. Dgcc: data-driven granular cognitive computing. Granular Computing, 2(4):343–355, 2017.

- Xia et al. (2020) Shuyin Xia, Daowan Peng, Deyu Meng, Changqing Zhang, Guoyin Wang, Elisabeth Giem, Wei Wei, and Zizhong Chen. A fast adaptive k-means with no bounds. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- Xia et al. (2022) Shuyin Xia, Xiaochuan Dai, Guoyin Wang, Xinbo Gao, and Elisabeth Giem. An efficient and adaptive granular-ball generation method in classification problem. IEEE Transactions on Neural Networks and Learning Systems, 2022.

- Shuyin et al. (2023) Xia Shuyin, Dai Dawei, Yang Long, Zhany Li, Lan Danf, Wang Guoy, et al. Graph-based representation for image based on granular-ball. arXiv preprint arXiv:2303.02388, 2023.

- Zhu et al. (2019) Ligeng Zhu, Zhijian Liu, and Song Han. Deep leakage from gradients. Advances in neural information processing systems, 32, 2019.

- Xia et al. (2019) Shuyin Xia, Yunsheng Liu, Xin Ding, Guoyin Wang, Hong Yu, and Yuoguo Luo. Granular ball computing classifiers for efficient, scalable and robust learning. Information Sciences, 483:136–152, 2019.

- McMahan et al. (2017) Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. Communication-efficient learning of deep networks from decentralized data. In Artificial Intelligence and Statistics, pages 1273–1282. PMLR, 2017.

- Li et al. (2020b) Ang Li, Jingwei Sun, Binghui Wang, Lin Duan, Sicheng Li, Yiran Chen, and Hai Li. Lotteryfl: Personalized and communication-efficient federated learning with lottery ticket hypothesis on non-iid datasets. arXiv preprint arXiv:2008.03371, 2020b.