A New Perspective on the Effects of Spectrum in Graph Neural Networks

Abstract

Many improvements on GNNs can be viewed as operations on the spectrum of the underlying graph matrix, which motivates us to directly study the characteristics of the spectrum and their effects on GNN performance. By generalizing most existing GNN architectures, we show that the correlation issue caused by the spectrum is an obstacle both to leveraging more powerful graph filters and to developing deep architectures, and therefore restricts GNNs’ performance. Inspired by this, we propose a correlation-free architecture that naturally removes the correlation issue among different channels, making it possible to use more sophisticated filters within each channel. The final correlation-free architecture with more powerful filters consistently boosts the performance of learning graph representations. Code is available at https://github.com/qslim/gnn-spectrum.

1 Introduction

Although graph neural networks (GNNs) are developing rapidly in both theory and applications, a general understanding of the effects of the graph’s spectrum in GNNs is still lacking. Many improvements can ultimately be unified as different operations on the spectrum of the underlying graph, yet their effectiveness is interpreted through several well-accepted but isolated concepts: (Wu et al., 2019; Zhu et al., 2021; Klicpera et al., 2019a, b; Chien et al., 2021; Balcilar et al., 2021) explain it from the perspective of simulating low/high-pass filters; (Ming Chen et al., 2020; Xu et al., 2018; Liu et al., 2020; Li et al., 2018) interpret it as a way of alleviating the oversmoothing phenomenon in deep architectures; (Cai et al., 2021) adopts the concept of normalization from neural networks and applies it to graph data. Since these improvements all operate indirectly on the spectrum, we are motivated to study the potential connections between GNN performance and the characteristics of the graph’s spectrum. Such a connection would provide a deeper and more general insight into these seemingly unrelated improvements associated with the graph’s spectrum (low/high-pass filters, oversmoothing, graph normalization, etc.), and would further identify potential issues in existing architectures. To this end, we first consider a simple correlation metric, the cosine similarity among signals, and study its relation to the graph’s spectrum in the graph convolution operation. This provides a new perspective: in existing GNN architectures, the distribution of eigenvalues of the underlying graph matrix controls the cosine similarity among signals. An ill-posed spectrum easily makes signals over-correlated, which is evidence of information loss.

Compared with oversmoothing studies (Li et al., 2018; Oono & Suzuki, 2020; Rong et al., 2019; Huang et al., 2020), the correlation analysis associated with the graph’s spectrum further indicates that the correlation issue is essentially caused by the graph’s spectrum. In other words, for graph topologies with an unsmooth spectrum, the issue can appear even with a shallow architecture, and a deep model makes the spectrum even less smooth and thereby exacerbates the issue. Meanwhile, the correlation analysis also provides a unified interpretation of the effectiveness of various existing improvements associated with the graph’s spectrum, since they all implicitly impose some constraints on the spectrum to alleviate the correlation issue. However, these improvements are trade-offs between alleviating the correlation issue and applying more powerful graph filters: since a filter implementation is directly reflected in the spectrum, a filter that is more appropriate for the relevant signal patterns may correspond to an ill-posed spectrum, which in turn yields no performance improvement. Hence, in general GNN architectures, the correlation issue is an obstacle to applying more powerful filters. Indeed, although one can in theory approximate more sophisticated graph filters by increasing the order of the polynomial (Shuman et al., 2013), popular models rely on simple filters, e.g. low-pass filters (Kipf & Welling, 2017; Wu et al., 2019), or on fixed filter coefficients (Klicpera et al., 2019a, b) as the practically applicable choice.

Given the above, the key solution is to decouple the correlation issue from the filter design, which results in our correlation-free architecture. In contrast to existing approaches, it allows us to focus on exploring more sophisticated filters without concern for the correlation issue. With this guarantee, we can improve the approximation abilities of polynomial filters to better approximate the desired, more complex filters (Hammond et al., 2011; Defferrard et al., 2016). However, we also find that this cannot be achieved by simply increasing the number of polynomial bases, as the basis characteristics implicitly restrict the number of available bases in the resulting polynomial filter. For this reason, the commonly used (normalized) adjacency or Laplacian matrix, whose spectrum serves as the basis, cannot effectively utilize high-order bases. To address this issue, we propose new graph matrix representations, which are capable of leveraging more bases and learnable filter coefficients to better respond to more complex signal patterns. The resulting model significantly boosts performance on learning graph representations. Although there are extensive studies on polynomial filters with both fixed and learnable coefficients (Defferrard et al., 2016; Levie et al., 2019; Chien et al., 2021; He et al., 2021), to the best of our knowledge, they all focus on the coefficient design and use the (normalized) adjacency or Laplacian matrix as the basis, which distinguishes our work from them. Our contributions are summarized as follows:

- We show that general GNN architectures suffer from the correlation issue and also quantify this issue with spectral smoothness;

- We propose the correlation-free architecture that decouples the correlation issue from graph convolution;

- We show that the spectral characteristics also hinder the approximation abilities of polynomial filters and address it by altering the graph’s spectrum.

2 Preliminaries

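Let $\mathcal{G}=(\mathcal{V},\mathcal{E})$ be an undirected graph with node set $\mathcal{V}$ and edge set $\mathcal{E}$. We denote by $n=|\mathcal{V}|$ the number of nodes, by $A\in\mathbb{R}^{n\times n}$ the adjacency matrix, and by $H\in\mathbb{R}^{n\times d}$ the node feature matrix, where $d$ is the feature dimensionality. $h\in\mathbb{R}^{n}$ is a graph signal that corresponds to one dimension of $H$.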

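Spectral Graph Convolution (Hammond et al., 2011; Defferrard et al., 2016). The definition of spectral graph convolution relies on the Fourier transform on the graph domain. For a signal $h$ and graph Laplacian $L=U\Lambda U^{\top}$, we have the Fourier transform $\hat{h}=U^{\top}h$ and the inverse transform $h=U\hat{h}$. Then, the graph convolution of a signal $h$ with a filter $g_{\theta}$ is

$$g_{\theta}\star h = U\,g_{\theta}(\Lambda)\,U^{\top}h, \tag{1}$$

where $g_{\theta}(\Lambda)$ denotes a diagonal matrix in which the diagonal corresponds to spectral filter coefficients. To avoid eigendecomposition and ensure scalability, $g_{\theta}(\Lambda)$ is approximated by a truncated expansion in terms of Chebyshev polynomials $T_k$ up to the $K$-th order (Hammond et al., 2011), which is also a polynomial of $\Lambda$,

$$g_{\theta}(\Lambda)\approx\sum_{k=0}^{K}\theta_k\,T_k(\tilde{\Lambda}), \tag{2}$$

where $\tilde{\Lambda}=\frac{2}{\lambda_{\max}}\Lambda-I$ and $\lambda_{\max}$ is the largest eigenvalue of $L$. Now the convolution in Eq. 1 is

$$g_{\theta}\star h\approx\sum_{k=0}^{K}\theta_k\,T_k(\tilde{L})\,h,\qquad \tilde{L}=\frac{2}{\lambda_{\max}}L-I. \tag{3}$$

Note that this expression is $K$-localized since it is a $K$-order polynomial in the Laplacian, i.e., it depends only on nodes that are at most $K$ hops away from the central node.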
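As a rough illustration of the Chebyshev approximation and its locality, the sketch below applies a polynomial filter through the three-term recurrence $T_k(x)=2xT_{k-1}(x)-T_{k-2}(x)$ and checks that an impulse on one node of a path graph only reaches nodes within $K$ hops. The function name `chebyshev_filter`, the path graph, the coefficient values, and the common shortcut $\lambda_{\max}\approx 2$ for the normalized Laplacian are assumptions made for this example, not details taken from the paper.

```python
import numpy as np

def chebyshev_filter(L_tilde, h, theta):
    """Apply sum_k theta[k] * T_k(L_tilde) @ h using the Chebyshev recurrence."""
    t_prev = h                                   # T_0(L~) h = h
    out = theta[0] * t_prev
    if len(theta) == 1:
        return out
    t_curr = L_tilde @ h                         # T_1(L~) h = L~ h
    out = out + theta[1] * t_curr
    for k in range(2, len(theta)):
        t_next = 2.0 * (L_tilde @ t_curr) - t_prev   # T_k = 2 L~ T_{k-1} - T_{k-2}
        out = out + theta[k] * t_next
        t_prev, t_curr = t_curr, t_next
    return out

# Path graph on 6 nodes: 0 - 1 - 2 - 3 - 4 - 5
n = 6
A = np.zeros((n, n))
for i in range(n - 1):
    A[i, i + 1] = A[i + 1, i] = 1.0
deg = A.sum(axis=1)
D_inv_sqrt = np.diag(deg ** -0.5)
L = np.eye(n) - D_inv_sqrt @ A @ D_inv_sqrt      # symmetric normalized Laplacian
L_tilde = L - np.eye(n)                          # 2L/lambda_max - I with lambda_max = 2 (assumed)

h = np.zeros(n)
h[0] = 1.0                                       # impulse on node 0
theta = np.array([0.5, 0.3, 0.2])                # K = 2, arbitrary coefficients
out = chebyshev_filter(L_tilde, h, theta)
print(np.round(out, 4))
```

With $K=2$, the output is nonzero only on nodes 0, 1 and 2, which is the $K$-hop neighbourhood of the impulse.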

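Graph Convolutional Network (GCN) (Kipf & Welling, 2017). GCN is derived from first-order Chebyshev polynomials with several approximations. The authors further introduce the renormalization trick $\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}$ with $\tilde{A}=A+I$ and $\tilde{D}_{ii}=\sum_{j}\tilde{A}_{ij}$. Also, GCN can be generalized to multiple input channels and a layer-wise model:

$$H^{(l+1)}=\sigma\!\left(\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}H^{(l)}W^{(l)}\right), \tag{4}$$

where $W^{(l)}$ is a learnable matrix and $\sigma$ is a nonlinear function.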
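A minimal NumPy sketch of one such layer may help fix ideas. It builds the renormalized adjacency matrix of Kipf & Welling and applies a single propagation step with ReLU; the helper names, the toy graph, and the random weights are illustrative assumptions.

```python
import numpy as np

def renormalized_adjacency(A):
    """Return D~^{-1/2} (A + I) D~^{-1/2}, the renormalization trick of GCN."""
    A_tilde = A + np.eye(A.shape[0])
    d_tilde = A_tilde.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(d_tilde)
    return A_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(A_hat, H, W):
    """One GCN layer with ReLU as the nonlinearity."""
    return np.maximum(A_hat @ H @ W, 0.0)

# Triangle with one pendant node: edges (0,1), (0,2), (1,2), (2,3)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
A_hat = renormalized_adjacency(A)

rng = np.random.default_rng(0)
H0 = rng.normal(size=(4, 3))     # 4 nodes, 3 input channels
W0 = rng.normal(size=(3, 2))     # maps 3 channels to 2
H1 = gcn_layer(A_hat, H0, W0)

eigvals = np.linalg.eigvalsh(A_hat)
print(H1.shape, eigvals.min(), eigvals.max())
```

The printed eigenvalue range shows the bounded spectrum of the renormalized matrix: the largest eigenvalue is 1 and the smallest stays above -1 because of the self-loops.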

Graph Diffusion Convolution (GDC) (Klicpera et al., 2019b). A generalized graph diffusion is given by the diffusion matrix:

$$\sum_{k=0}^{\infty}\theta_k\,T^{k}, \tag{5}$$

with the weight coefficients $\theta_k$ and the generalized transition matrix $T$. $T$ can be $T_{rw}=AD^{-1}$, $T_{sym}=D^{-1/2}AD^{-1/2}$, or others as long as they are convergent, where $D$ is the diagonal degree matrix. GDC can be viewed as a generalization of the original definition of spectral graph convolution, which also applies polynomial filters but not necessarily of the Laplacian.
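To make the diffusion series concrete, the following sketch truncates it with Personalized PageRank coefficients $\theta_k=\alpha(1-\alpha)^k$ (the PPR choice described in the GDC paper) and compares the result with the closed form $\alpha\,(I-(1-\alpha)T)^{-1}$ of the infinite sum. The graph, the teleport probability `alpha`, and the truncation order `K` are arbitrary values picked for illustration.

```python
import numpy as np

def truncated_diffusion(T, theta):
    """Return sum_k theta[k] * T^k for a finite list of coefficients."""
    S = np.zeros_like(T)
    T_power = np.eye(T.shape[0])             # T^0
    for coeff in theta:
        S += coeff * T_power
        T_power = T_power @ T
    return S

# Small undirected graph: a 4-cycle 0-1-2-3-0 with the chord (1, 3)
A = np.array([[0, 1, 0, 1],
              [1, 0, 1, 1],
              [0, 1, 0, 1],
              [1, 1, 1, 0]], dtype=float)
deg = A.sum(axis=1)
T_sym = A / np.sqrt(np.outer(deg, deg))      # D^{-1/2} A D^{-1/2}

alpha = 0.15                                  # teleport probability (illustrative)
K = 200                                       # truncation order (illustrative)
theta_ppr = alpha * (1.0 - alpha) ** np.arange(K + 1)
S_truncated = truncated_diffusion(T_sym, theta_ppr)

# Closed form of the infinite PPR series
S_closed = alpha * np.linalg.inv(np.eye(4) - (1.0 - alpha) * T_sym)
print(np.abs(S_truncated - S_closed).max())
```

The series converges here because the eigenvalues of $T_{sym}$ lie in $[-1, 1]$ and are damped by the factor $(1-\alpha)^k$.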

3 Revisiting Existing GNN Architectures

| | GCN | SGC | APPNP | GCNII | GDC | SSGC | GPR | ChebyNet | CayleyNet | BernNet |
|---|---|---|---|---|---|---|---|---|---|---|
| Poly-basis | General | General | Residual | Residual | General | General | General | Chebyshev | Cayley | Bernstein |
| Poly-coefficient | Fixed | Fixed | Fixed | Fixed | Fixed | Fixed | Learnable | Fixed | Learnable | Learnable |

We first generalize existing spectral graph convolution as follows

| (6) |

where is the graph matrix, e.g. adjacency or Laplacian matrix and their normalized forms. is the polynomial of graph matrices with coefficients for a -order polynomial. is the feature transformation neural network with the learnable parameters . In SGC (Wu et al., 2019), GDC (Klicpera et al., 2019b), SSGC (Zhu & Koniusz, 2020), and GPR (Chien et al., 2021), is implemented as the general polynomial, i.e. . Their differences are identified by the coefficients . For example, SGC corresponds to a very simple form with and . By removing the nonlinear layer in GCNII (Ming Chen et al., 2020), APPNP (Klicpera et al., 2019a) and GCNII share the similar graph convolution layer as

where and is the input node features. By deriving its closed form, we reformulate it with Eq. 6 as . In ChebyNet (Defferrard et al., 2016), CayleyNet (Levie et al., 2019) and BernNet (He et al., 2021), the polynomial corresponds to Chebyshev, Cayley and Bernstein polynomials respectively. GPR, CayleyNet and BernNet apply learnable coefficients, which are learned as the coefficients of the general, Cayley and Bernstein bases respectively. Therefore, with our formulation in Eq. 6, general graph convolutions differ mainly in the choice of polynomial, as summarized in Tab. 1.¹

¹ Here, we follow the naming convention in GCNII called initial residual connection. GCN and GCNII interlace nonlinear computations over layers, making it difficult to reformulate all layers with Eq. 6. But one can represent them with the recursive form as . For example, in GCN, we have and with .
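The step from a residual propagation rule to a polynomial can be checked numerically. The sketch below assumes the APPNP-style recursion $Z^{(k+1)}=(1-\alpha)SZ^{(k)}+\alpha X$ with $Z^{(0)}=X$ (this concrete form, and the names `S`, `X`, `alpha`, `gamma`, are assumptions for the example) and verifies that $K$ steps equal $\sum_{k=0}^{K}\gamma_k S^kX$ with $\gamma_k=\alpha(1-\alpha)^k$ for $k<K$ and $\gamma_K=(1-\alpha)^K$.

```python
import numpy as np

def residual_propagation(S, X, alpha, K):
    """Run K steps of Z <- (1 - alpha) * S @ Z + alpha * X, starting from Z = X."""
    Z = X.copy()
    for _ in range(K):
        Z = (1.0 - alpha) * (S @ Z) + alpha * X
    return Z

def residual_polynomial_coefficients(alpha, K):
    """Coefficients gamma_0..gamma_K so that the recursion equals sum_k gamma_k S^k X."""
    gamma = alpha * (1.0 - alpha) ** np.arange(K + 1)
    gamma[K] = (1.0 - alpha) ** K            # the highest-order term carries no factor alpha
    return gamma

def apply_polynomial(S, X, gamma):
    """Evaluate sum_k gamma[k] * S^k @ X without forming matrix powers."""
    out = np.zeros_like(X)
    SkX = X.copy()
    for g in gamma:
        out += g * SkX
        SkX = S @ SkX
    return out

A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
A_tilde = A + np.eye(4)
d = A_tilde.sum(axis=1)
S = A_tilde / np.sqrt(np.outer(d, d))        # renormalized adjacency (illustrative choice)

rng = np.random.default_rng(1)
X = rng.normal(size=(4, 3))
alpha, K = 0.1, 10
gamma = residual_polynomial_coefficients(alpha, K)
Z_rec = residual_propagation(S, X, alpha, K)
Z_poly = apply_polynomial(S, X, gamma)
print(np.abs(Z_rec - Z_poly).max())
```

The coefficients are fixed by `alpha` and sum to 1, which matches the "Residual" basis with "Fixed" coefficients listed for APPNP and GCNII in Tab. 1.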

3.1 Correlation Analysis in the Lens of Graph’s Spectrum

Based on the generalized formulation of Eq. 6, we conduct correlation analysis on existing graph convolution in the perspective of the graph’s spectrum. We denote for simplicity. denotes one channel in . Then the convolution on is represented as . The cosine similarity between and the -th eigenvector of is

| (7) |

is the weight of on when representing with the set of orthonormal bases . The cosine similarity between and is

| (8) |

The detailed derivations of Eq. 7 and Eq. 8 are given in Appendix A.

Eq. 8 builds the connection between the cosine similarity and the spectrum of the underlying graph matrix. We say the spectrum is smooth if all eigenvalues have similar magnitudes. Comparing Eq. 7 and Eq. 8 shows that graph convolution with an unsmooth spectrum, i.e., dissimilar eigenvalues, makes signals more correlated (a higher cosine similarity) with the eigenvectors corresponding to larger-magnitude eigenvalues and closer to orthogonal (a lower cosine similarity) to the eigenvectors corresponding to smaller-magnitude eigenvalues. When the spectrum contains a zero eigenvalue, signals lose all information in the direction of the corresponding eigenvectors. In a deep architecture, this problem is further exacerbated:
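The relation between cosine similarity and spectrum can be checked on a small example. In the sketch below, `S` is a symmetric graph matrix with eigenpairs `(lam[i], P[:, i])` and `alpha = P.T @ h` are the weights of a signal `h` on the eigenvectors; these names are assumptions for the example. Under that notation the cosine similarity of `h` with the $i$-th eigenvector is $\alpha_i/\sqrt{\sum_j\alpha_j^2}$, and after one convolution it becomes $\alpha_i\lambda_i/\sqrt{\sum_j\alpha_j^2\lambda_j^2}$, so components on large-magnitude eigenvalues are amplified.

```python
import numpy as np

def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# Star graph (node 0 is the hub) with self-loops and symmetric normalization
A = np.array([[0, 1, 1, 1],
              [1, 0, 0, 0],
              [1, 0, 0, 0],
              [1, 0, 0, 0]], dtype=float)
A_tilde = A + np.eye(4)
d = A_tilde.sum(axis=1)
S = A_tilde / np.sqrt(np.outer(d, d))

lam, P = np.linalg.eigh(S)           # ascending eigenvalues, orthonormal eigenvectors in columns
rng = np.random.default_rng(0)
h = rng.normal(size=4)
alpha = P.T @ h                      # weights of h on each eigenvector

before = np.array([cosine(h, P[:, i]) for i in range(4)])
after = np.array([cosine(S @ h, P[:, i]) for i in range(4)])

# Closed forms in terms of the weights and the eigenvalues
before_formula = alpha / np.linalg.norm(alpha)
after_formula = alpha * lam / np.linalg.norm(alpha * lam)
print(np.round(lam, 3))
print(np.round(before, 3), np.round(after, 3))
```

For this graph the eigenvalues are -0.25, 0.5, 0.5 and 1, so one convolution already tilts the signal toward the eigenvector of eigenvalue 1.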

Proposition 3.1.

Assume is a symmetric matrix with real-valued entries. are real eigenvalues, and are corresponding eigenvectors. Then, for any given , we have

(i) and for ;

(ii) If , , and the convergence speed is decided by .

We prove Proposition 3.1 in Appendix B. Proposition 3.1 shows that a deeper architecture violates the spectrum’s smoothness, which therefore makes the input signals more correlated to each other.² Finally, , and the information within signals would be washed out. Note that none of the above analysis imposes any constraint on the underlying graph, such as connectivity.

² Here, nonlinearity is not involved in the propagation step. This meets the case of the decoupling structure where a multi-layer GNN is split into independent propagation and prediction steps (Liu et al., 2020; Wu et al., 2019; Klicpera et al., 2019a; Zhu & Koniusz, 2020; Zhang et al., 2021). The propagation involving nonlinearity remains unexplored due to its high complexity, except for one case of ReLU as nonlinearity (Oono & Suzuki, 2020). Most convergence analyses (such as over-smoothing) only study the simplified linear case (Cai et al., 2021; Liu et al., 2020; Wu et al., 2019; Klicpera et al., 2019a; Zhao & Akoglu, 2020; Xu et al., 2018; Ming Chen et al., 2020; Zhu & Koniusz, 2020; Klicpera et al., 2019b; Chien et al., 2021).
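The effect of depth in the linear propagation setting can be observed directly. The sketch below repeatedly applies a symmetric graph matrix `S` to two initially orthogonal signals and tracks (a) the absolute cosine similarity of the first signal with the eigenvector of the largest-magnitude eigenvalue and (b) the absolute cosine similarity between the two signals. The graph, the signals, and the depth range are illustrative assumptions; the sketch is in the spirit of Proposition 3.1 rather than a reproduction of its exact statement.

```python
import numpy as np

def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# Star graph (node 0 is the hub) with self-loops and symmetric normalization
A = np.array([[0, 1, 1, 1],
              [1, 0, 0, 0],
              [1, 0, 0, 0],
              [1, 0, 0, 0]], dtype=float)
A_tilde = A + np.eye(4)
deg = A_tilde.sum(axis=1)
S = A_tilde / np.sqrt(np.outer(deg, deg))

lam, P = np.linalg.eigh(S)
p_top = P[:, np.argmax(np.abs(lam))]      # eigenvector of the largest-magnitude eigenvalue

h1 = np.array([1.0, 0.0, 0.0, 0.0])       # two orthogonal input signals
h2 = np.array([0.0, 1.0, 2.0, 3.0])

to_top, between = [], []
x1, x2 = h1.copy(), h2.copy()
for depth in range(21):
    to_top.append(abs(cosine(x1, p_top)))
    between.append(abs(cosine(x1, x2)))
    x1, x2 = S @ x1, S @ x2               # one more linear propagation step

print([round(v, 4) for v in to_top[::5]])
print([round(v, 4) for v in between[::5]])
```

The alignment with the dominant eigenvector never decreases with depth, and the two signals, orthogonal at depth 0, become almost perfectly correlated after a few propagation steps.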

Revisiting oversmoothing via the lens of correlation issue. In the well-known oversmoothing analysis, the convergence is considered as where each row of only depends on the degree of the corresponding node, provided that the graph is and (Xu et al., 2018; Liu et al., 2020; Zhao & Akoglu, 2020; Chien et al., 2021). Our analysis generalizes this result. In our analysis, the convergence of the cosine similarity among signals does not limit a graph to be or , as is required in the oversmoothing analysis by analogy with the stationary distribution of a Markov chain, and does not even require the model to be deep: it is essentially caused by a bad distribution of eigenvalues, while a deep architecture exacerbates it. Interestingly, from this perspective, the correlation problem relates to specific topologies, since different topologies correspond to different spectra. There exist topologies that inherently have bad distributions of eigenvalues, and they suffer from the problem even with a shallow architecture. Also, by taking the symmetry into consideration, Proposition 3.1(i) shows that the convergence of cosine similarity with respect to is also . Whereas existing results only discuss the theoretical infinite-depth case, this provides more concrete evidence for the practical finite-depth case that a deeper architecture can be more harmful than a shallow one.

Revisiting graph filters via the lens of correlation issue. The graph filter is approximated by a polynomial in the theory of spectral graph convolution (Hammond et al., 2011; Defferrard et al., 2016). Although one can theoretically approximate any desired graph filter by increasing the order of the polynomial (Shuman et al., 2013), most GNNs cannot gain improvements by enlarging the order. Instead, the simple low-pass filter studied by many improvements on spectral graph convolution is the practically effective choice (Shuman et al., 2013; Wu et al., 2019; NT & Maehara, 2019; Muhammet et al., 2020; Klicpera et al., 2019b). Although recent studies involve high-pass filters to better process high-frequency signals, a low-pass filter is always required in graph convolution (Zhu & Koniusz, 2020; Zhu et al., 2021; Balcilar et al., 2021; Bo et al., 2021; Gao et al., 2021). This can be explained from the perspective of correlation analysis. As we have shown, graph convolution is sensitive to the spectrum. A filter that responds better to the relevant signal patterns may result in an unsmooth spectrum, making different channels correlated with each other after convolution. In contrast, although a low-pass filter has limited expressiveness, it corresponds to a smoother spectrum, which alleviates the correlation issue.

4 Correlation-free Architecture

The correlation analysis via the lens of the graph’s spectrum shows that in general GNN architectures, the spectrum leads to the correlation issue and therefore acts as an obstacle to developing deep architectures as well as leveraging more expressive graph filters. To overcome this issue, a natural idea is to assign the graph convolution in different channels of with different spectra, which can be viewed as a generalization of Eq. 6 as follows

| (9) |

Both and are the feature transformation neural networks with the learnable parameters and respectively. is the -th polynomial with the learnable coefficients . is the -th channel of . We denote for simplicity. Then the convolution operation on in Eq. 9 is

| (10) |

with the filter . We denote . Then,

| (11) |

where . If for any , i.e., the algebraic multiplicity of all eigenvalues is 1, is a Vandermonde matrix with . serve as a set of bases, where each filter is a linear combination of . Hence, a larger helps to better approximate the desired filter. When , is a full-rank matrix and is sufficient to represent any desired filter with proper assignments of . Note that is much smaller in real-world graph-level tasks than that in node-level tasks, making more tractable.
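The Vandermonde argument can be illustrated numerically. In the sketch below, the eigenvalues `lam` of a graph matrix with distinct eigenvalues define a Vandermonde matrix `V` whose column `k` is the basis `lam**k`; solving `V @ gamma = target` then yields polynomial coefficients that realize an arbitrary response on the spectrum. The path graph and the band-pass-like target are hypothetical choices made only for this example.

```python
import numpy as np

# Path graph on 5 nodes: its adjacency matrix has 5 distinct eigenvalues
n = 5
A = np.zeros((n, n))
for i in range(n - 1):
    A[i, i + 1] = A[i + 1, i] = 1.0
lam, P = np.linalg.eigh(A)

# Vandermonde matrix: column k holds the k-th basis lam**k evaluated on the spectrum
V = np.vander(lam, N=n, increasing=True)
print(np.linalg.matrix_rank(V))

# An arbitrary target response on the spectrum (hypothetical band-pass shape)
target = np.exp(-4.0 * (lam - 0.5) ** 2)
gamma = np.linalg.solve(V, target)            # polynomial coefficients

# The polynomial in A realizes exactly that response in the eigenbasis
poly_A = sum(g * np.linalg.matrix_power(A, k) for k, g in enumerate(gamma))
response = np.diag(P.T @ poly_A @ P)
print(np.round(target, 4), np.round(response, 4))
```

With as many bases as distinct eigenvalues the system is square and full-rank, so the target is matched exactly; with fewer bases one would fit it in the least-squares sense instead.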

Consider the columns of the Vandermonde matrix as bases. When increasing the order (i.e., applying more bases), the powers of eigenvalues with magnitude below 1 diminish and the powers of eigenvalues with magnitude above 1 diverge. To balance the diminishing and divergence problems when applying a larger order, we need to carefully keep the spectrum close to 1 or -1. General approaches have a bounded spectrum.³ Although there is then no concern about divergence, the powers of eigenvalues, especially of those with small magnitude, tend to 0 as the order increases, making the higher-order bases ineffective under practical limited precision.

³ General approaches use the (symmetrically) normalized , i.e. , to guarantee its spectrum is bounded by (Kipf & Welling, 2017; Klicpera et al., 2019b) or the (symmetrically) normalized , i.e. to ensure the boundary [0, 2] and then rescale it to (He et al., 2021).
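A few numbers make the diminishing and divergence problems tangible. The sketch below evaluates the monomial basis `lam**k` for some sample eigenvalue magnitudes and orders, and shows that in single precision a small eigenvalue raised to a high power underflows to exactly zero. The sample values are arbitrary.

```python
import numpy as np

orders = np.arange(0, 41, 10)                 # polynomial orders 0, 10, 20, 30, 40
for lam in (0.3, 0.9, 1.0, 1.1):
    basis = lam ** orders                     # values of the basis lam**k at this eigenvalue
    print(lam, ["%.2e" % b for b in basis])

small_power = 0.3 ** 40                       # far below typical feature magnitudes
large_power = 1.1 ** 40                       # grows without bound as the order increases

# In float32, a small eigenvalue raised to a high power underflows to exactly zero
tiny = np.float32(0.05) ** np.float32(40)
print(small_power, large_power, tiny)
```

Eigenvalues with magnitude near 1 are the only ones whose high-order bases stay on a usable scale, which is the motivation for pulling the spectrum toward 1 or -1.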

On the other hand, general approaches rarely learn the coefficients of polynomial filters in a completely free manner (Klicpera et al., 2019b; He et al., 2021). Specially designed coefficients that explicitly modify the spectrum, e.g. Personalized PageRank (PPR) and the heat kernel (Klicpera et al., 2019b), or coefficients learned under constraints, e.g. Chebyshev (Defferrard et al., 2016), Cayley (Levie et al., 2019), and Bernstein (He et al., 2021) polynomials, act as the practically applicable filters. This is probably because the polynomial filter relies on sophisticated coefficients to maintain spectral properties; learning them from scratch easily falls into an ill-posed filter (He et al., 2021). However, modifying the filter bases relaxes the requirement on the coefficients, making them more suitable for learning from scratch.

Finally, although the new architecture in Eq. 9 decouples the correlation issue from developing more powerful filters, general filter bases are less qualified for approximating more complex filters. Hence, we still need to explore more effective filter bases to replace existing ones. To this end, we will introduce two different improvements on filter bases in the following sections whose effectiveness will serve as a verification of our analysis.
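The difference between a shared filter and independent per-channel filters can be sketched in a few lines. The code below precomputes the propagated bases $S^kX$ once and then combines them either with one coefficient vector for all channels or with one coefficient vector per channel. The function names, the random graph, and the random coefficients are assumptions for illustration; in a trained model the coefficients would be learnable parameters and the surrounding feature transformations are omitted here.

```python
import numpy as np

def propagate_bases(S, X, K):
    """Stack [X, S X, ..., S^K X] into an array of shape (K + 1, n, d)."""
    bases = [X]
    for _ in range(K):
        bases.append(S @ bases[-1])
    return np.stack(bases)

def shared_filter(bases, gamma):
    """One coefficient vector gamma of shape (K + 1,) for all channels."""
    return np.einsum("k,knd->nd", gamma, bases)

def independent_filter(bases, Gamma):
    """One coefficient vector per channel: Gamma has shape (K + 1, d)."""
    return np.einsum("kd,knd->nd", Gamma, bases)

rng = np.random.default_rng(0)
n, d, K = 6, 4, 3
A = np.triu((rng.random((n, n)) < 0.5).astype(float), k=1)
A = A + A.T                                   # random undirected graph
A_tilde = A + np.eye(n)
deg = A_tilde.sum(axis=1)
S = A_tilde / np.sqrt(np.outer(deg, deg))

X = rng.normal(size=(n, d))                   # stands in for the transformed features
bases = propagate_bases(S, X, K)

gamma = rng.normal(size=K + 1)
Gamma = rng.normal(size=(K + 1, d))
H_shared = shared_filter(bases, gamma)
H_indep = independent_filter(bases, Gamma)
print(H_shared.shape, H_indep.shape)
```

When every column of `Gamma` equals `gamma`, the independent filter reduces to the shared one, so the shared design is a special case of the per-channel design.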

4.1 Spectral Optimization on Filter Basis

One can directly apply a smoothing function on the spectrum of , which helps to narrow the range of eigenvalues close to 1 or -1. There can be various approaches to this end, and in this paper, we propose the following eigendecomposition-based method for a symmetric matrix⁴

⁴ Although the computation of requires eigendecomposition, is always a symmetric matrix and the eigendecomposition on it is much faster than on a general matrix.

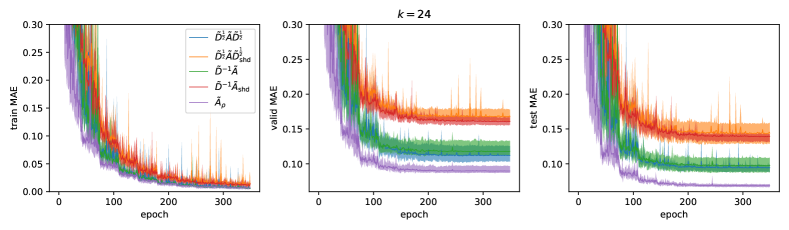

| (12) |

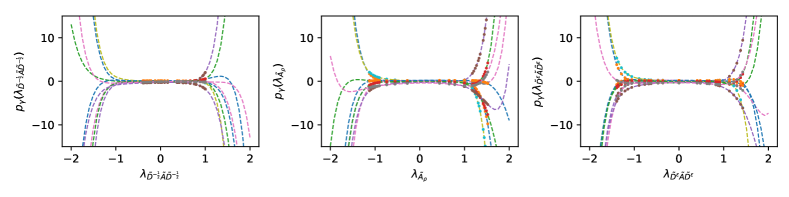

where . , . serves as the polynomial bases in Eq. 10. Unlike general spectral approaches, is not required to be a bounded spectrum. It can leverage more bases while alleviating both the diminishing and divergence problems by controlling in a small range. Therefore, can be considered as a basis-augmentation technique as shown in Fig. 1.

There can be other transformations on the spectrum, e.g., , which have a similar effect to . Note that the injectivity of also influences the approximation ability, which is discussed in more detail in Appendix C.
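The general mechanism of transforming a spectrum through eigendecomposition can be sketched as follows. The smoothing function used here, a signed power $\mathrm{sign}(\lambda)\,|\lambda|^{\epsilon}$ with $0<\epsilon<1$, is a stand-in chosen for illustration and is not claimed to be the function of Eq. 12; the helper names and the graph are likewise assumptions.

```python
import numpy as np

def transform_spectrum(S, f):
    """Return P diag(f(lam)) P^T for a symmetric matrix S = P diag(lam) P^T."""
    lam, P = np.linalg.eigh(S)
    return (P * f(lam)) @ P.T

def signed_power(lam, eps=0.25):
    """Stand-in smoothing function: keeps the sign, pulls |lam| toward 1 for 0 < eps < 1."""
    return np.sign(lam) * np.abs(lam) ** eps

# Triangle 0-1-2 with a tail 2-3-4 (unnormalized adjacency, so the spectrum is not bounded by 1)
A = np.array([[0, 1, 1, 0, 0],
              [1, 0, 1, 0, 0],
              [1, 1, 0, 1, 0],
              [0, 0, 1, 0, 1],
              [0, 0, 0, 1, 0]], dtype=float)

lam_before = np.linalg.eigvalsh(A)
S_smooth = transform_spectrum(A, signed_power)
lam_after = np.linalg.eigvalsh(S_smooth)
print(np.round(lam_before, 3))
print(np.round(lam_after, 3))
```

The eigenvectors are unchanged and only the eigenvalues move, so the transformed matrix can be used as a drop-in polynomial basis whose magnitudes sit closer to 1.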

4.2 Generalized Normalization on Filter Basis

Eq. 12 directly operates on the spectrum, which can achieve an accurate control on the range of the spectrum but requires eigendecomposition. To avoid eigendecomposition, we alternatively study the effects of graph normalization on the spectrum. We generalize the normalized adjacency matrix as follows

| (13) |

where is the normalization coefficient and is the shift coefficient. Widely-used corresponds to and .

Proposition 4.1.

Let be the spectrum of and be the spectrum of , then for any , we have

where and are the minimum and maximum degrees of nodes in the graph.

We prove Proposition 4.1 in Appendix D. Proposition 4.1 extends the results in (Spielman, 2007), showing that the normalization has a scaling effect on the spectrum: a smaller is likely to lead to a smaller , while a larger is likely to lead to a larger . When , the upper and lower bounds coincide with .

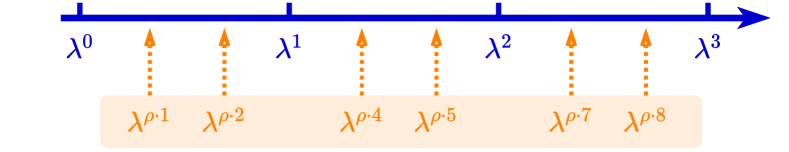

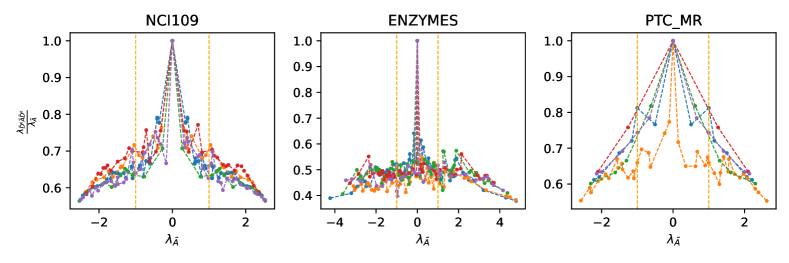

To further investigate the effects of the normalization on the spectrum, we fix and empirically evaluate as shown in Fig. 2. When fixing , shrinks the spectrum of with different degrees on different eigenvalues. For eigenvalues with small magnitudes (in the middle area of the spectrum), it has a small shrinking effect, while for eigenvalues with large magnitudes, it has a relatively large shrinking effect. Hence, can be used as a spectral smoothing method. Also, different results in different shrinking effects, which is consistent with the results in Proposition 4.1. Widely-used with the spectrum bounded by may not be a good choice because of the diminishing problem. Intuitively, to utilize more bases, we should narrow the range of the spectrum close to 1 (or -1) to avoid both the diminishing and divergence problems in higher-order bases. The appropriate range varies across datasets, and we should carefully balance and .
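The shrinking effect of normalization on the spectrum can be explored with a small experiment. The sketch below assumes a parametrization of the form $(D+\eta I)^{\epsilon}(A+\eta I)(D+\eta I)^{\epsilon}$, where $\epsilon$ plays the role of the normalization coefficient and $\eta$ of the shift coefficient, so that $\epsilon=-1/2$, $\eta=1$ gives the matrix used by GCN. This form, the function name, and the graph are assumptions made for the example and should be checked against Eq. 13.

```python
import numpy as np

def generalized_normalization(A, eps, eta):
    """Assumed form (D + eta I)^eps (A + eta I) (D + eta I)^eps."""
    shifted_deg = A.sum(axis=1) + eta
    scale = shifted_deg ** eps
    return (A + eta * np.eye(A.shape[0])) * scale[:, None] * scale[None, :]

# Triangle 0-1-2 with a tail 2-3-4
A = np.array([[0, 1, 1, 0, 0],
              [1, 0, 1, 0, 0],
              [1, 1, 0, 1, 0],
              [0, 0, 1, 0, 1],
              [0, 0, 0, 1, 0]], dtype=float)

spectra = {}
for eps in (-0.5, -0.4, -0.3, 0.0):
    spectra[eps] = np.linalg.eigvalsh(generalized_normalization(A, eps, eta=1.0))
    print(eps, np.round(spectra[eps], 3))
```

Under this assumed form, `eps = 0` leaves the shifted adjacency spectrum untouched, `eps = -0.5` bounds it by 1, and intermediate values shrink it less, which gives a knob for placing the spectrum range without an eigendecomposition at training time.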

5 Related Work

Many improvements on GNNs can be unified into spectral smoothing operations, e.g. low-pass filters (Wu et al., 2019; Zhu et al., 2021; Klicpera et al., 2019a, b; Chien et al., 2021; Balcilar et al., 2021), alleviating oversmoothing (Ming Chen et al., 2020; Xu et al., 2018; Liu et al., 2020; Li et al., 2018), graph normalization (Cai et al., 2021), etc. Our analysis of the relation between the correlation issue and the spectrum of the underlying graph matrix provides a unified interpretation of their effectiveness.

ChebyNet (Defferrard et al., 2016), CayleyNet (Levie et al., 2019), APPNP (Klicpera et al., 2019a), SSGC (Zhu & Koniusz, 2020), GPR (Chien et al., 2021), BernNet (He et al., 2021), etc. explore various polynomial filters and use the normalized adjacency or Laplacian matrix as the basis. We improve the approximation ability of polynomial filters by altering the spectrum of the filter bases. The resulting bases allow leveraging more bases to approximate more sophisticated filters and are more suitable for learning coefficients from scratch.

We note that the concurrent work (Jin et al., 2022) has also pointed out the overcorrelation issue in the infinite-depth case, without further discussion of the reason (e.g. the graph’s spectrum) behind this phenomenon. In contrast, we show that correlation is inherently caused by the spectrum of the underlying graph filter, and also quantify this effect with spectral smoothness. This allows us to analyze the correlation across all layers instead of only at the theoretical infinite depth.

6 Experiments

We conduct experiments on TUDatasets (Yanardag & Vishwanathan, 2015; Kersting et al., 2016) and OGB (Hu et al., 2020), which involve graph classification tasks, and on ZINC (Dwivedi et al., 2020), which involves graph regression tasks. We then evaluate the effects of our proposed graph convolution architecture and the two filter bases.

6.1 Results

| dataset | NCI1 | NCI109 | ENZYMES | PTC_MR |
|---|---|---|---|---|
| GK | 62.49±0.27 | 62.35±0.3 | 32.70±1.20 | 55.65±0.5 |
| RW | - | - | 24.16±1.64 | 55.91±0.3 |
| PK | 82.54±0.5 | - | - | 59.5±2.4 |
| FGSD | 79.80 | 78.84 | - | 62.8 |
| AWE | - | - | 35.77±5.93 | - |
| DGCNN | 74.44±0.47 | - | 51.0±7.29 | 58.59±2.5 |
| PSCN | 74.44±0.5 | - | - | 62.29±5.7 |
| DCNN | 56.61±1.04 | - | - | - |
| ECC | 76.82 | 75.03 | 45.67 | - |
| DGK | 80.31±0.46 | 80.32±0.3 | 53.43±0.91 | 60.08±2.6 |
| GraphSage | 76.0±1.8 | - | 58.2±6.0 | - |
| CapsGNN | 78.35±1.55 | - | 54.67±5.67 | - |
| DiffPool | 76.9±1.9 | - | 62.53 | - |
| GIN | 82.7±1.7 | - | - | 64.6±7.0 |
| k-GNN | 76.2 | - | - | 60.9 |
| Spec-GN | 84.79±1.63 | 83.62±0.75 | 72.50±5.79 | 68.05±6.41 |
| Norm-GN | 84.87±1.68 | 83.50±1.27 | 73.33±7.96 | 67.76±4.52 |

Settings. We use the default dataset splits for OGB and ZINC. For TUDatasets, we follow the standard 10-fold cross-validation protocol and splits from (Zhang et al., 2018) and report our results following the protocol described in (Xu et al., 2019; Ying et al., 2018). Following all baselines on the leaderboard of ZINC, we keep the number of parameters around 500K. The baseline models include: GK (Shervashidze et al., 2009), RW (Vishwanathan et al., 2010), PK (Neumann et al., 2016), FGSD (Verma & Zhang, 2017), AWE (Ivanov & Burnaev, 2018), DGCNN (Zhang et al., 2018), PSCN (Niepert et al., 2016), DCNN (Atwood & Towsley, 2016), ECC (Simonovsky & Komodakis, 2017), DGK (Yanardag & Vishwanathan, 2015), CapsGNN (Xinyi & Chen, 2019), DiffPool (Ying et al., 2018), GIN (Xu et al., 2019), k-GNN (Morris et al., 2019), GraphSage (Hamilton et al., 2017), GAT (Veličković et al., 2018), GatedGCN-PE (Bresson & Laurent, 2017), MPNN (sum) (Gilmer et al., 2017), DeeperG (Li et al., 2020), PNA (Corso et al., 2020), DGN (Beani et al., 2021), GSN (Bouritsas et al., 2020), GINE-VN (Brossard et al., 2020), GINE-APPNP (Brossard et al., 2020), PHC-GNN (Le et al., 2021), SAN (Kreuzer et al., 2021), Graphormer (Ying et al., 2021). Spec-GN denotes the proposed graph convolution in Eq. 9 with the filter basis smoothed by the spectral transformation in Eq. 12. Norm-GN denotes the proposed graph convolution in Eq. 9 with the filter basis smoothed by the graph normalization in Eq. 13.

| method | ZINC MAE (#params) | MolPCBA AP (#params) |
|---|---|---|
| GCN | 0.367±0.011 (505k) | 24.24±0.34 (2.02m) |
| GIN | 0.526±0.051 (510k) | 27.03±0.23 (3.37m) |
| GAT | 0.384±0.007 (531k) | - |
| GraphSage | 0.398±0.002 (505k) | - |
| GatedGCN-PE | 0.214±0.006 (505k) | - |
| MPNN | 0.145±0.007 (481k) | - |
| DeeperG | - | 28.42±0.43 (5.55m) |
| PNA | 0.142±0.010 (387k) | 28.38±0.35 (6.55m) |
| DGN | 0.168±0.003 (NA) | 28.85±0.30 (6.73m) |
| GSN | 0.101±0.010 (523k) | - |
| GINE-VN | - | 29.17±0.15 (6.15m) |
| GINE-APPNP | - | 29.79±0.30 (6.15m) |
| PHC-GNN | - | 29.47±0.26 (1.69m) |
| SAN | 0.139±0.006 (509k) | - |
| Graphormer | 0.122±0.006 (489k) | - |
| Spec-GN | 0.0698±0.002 (503k) | 29.65±0.28 (1.74m) |
| Norm-GN | 0.0709±0.002 (500k) | 29.51±0.33 (1.74m) |

Results. Tab. 2 and 3 summarize the performance of our approaches compared with baselines on TUDatasets, ZINC and MolPCBA. For TUDatasets, we report the results of each model from its original paper by default. When the results are not given in the original paper, we report the best testing results given in (Zhang et al., 2018; Ivanov & Burnaev, 2018; Xinyi & Chen, 2019). For ZINC and MolPCBA, we report the results from their public leaderboards. TUDatasets involves small-scale datasets: NCI1 and NCI109 contain around 4K graphs, while ENZYMES and PTC_MR contain fewer than 1K graphs. General GNNs easily overfit on such small-scale data, and some traditional kernel-based methods therefore even perform better. However, Spec-GN and Norm-GN achieve higher classification accuracies by a large margin on these datasets. The results on TUDatasets show that although Spec-GN and Norm-GN realize more expressive filters, this does not lead to overfitting when learning graph representations. Recently, Transformer-based models have become popular for learning graph representations, and they significantly improve the results on large-scale molecular datasets. On ZINC, Spec-GN and Norm-GN outperform these Transformer-based models by a large margin, and on MolPCBA, they are competitive with SOTA results.

| Architecture (shd / idp) | Basis | test MAE | valid MAE |
|---|---|---|---|
| ✓ | ✓ | 0.1415±0.00748 | 0.1568±0.00729 |
| ✓ | ✓ | 0.1439±0.00900 | 0.1569±0.00739 |
| ✓ | ✓ | 0.1061±0.01018 | 0.1294±0.01454 |
| ✓ | ✓ | 0.1133±0.01711 | 0.1316±0.02057 |
| ✓ | ✓ | 0.0944±0.00379 | 0.1100±0.00787 |
| ✓ | ✓ | 0.0982±0.00417 | 0.1172±0.00666 |
| ✓ | ✓ | 0.0698±0.00200 | 0.0884±0.00319 |
| ✓ | ✓ | 0.0709±0.00176 | 0.0929±0.00445 |

6.2 Ablation Studies

We perform ablation studies on the proposed architecture and the filter bases (by setting in Eq. 12) and on ZINC. We use “idp” and “shd” to respectively represent the correlation-free architecture (also known as independent filter architecture) in Eq. 9 and the general shared filter architecture in Eq. 6. Both architectures learn the filter coefficients from scratch.

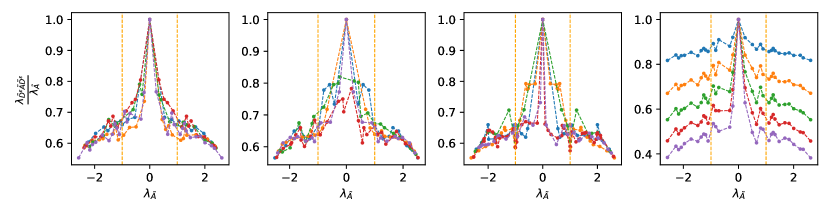

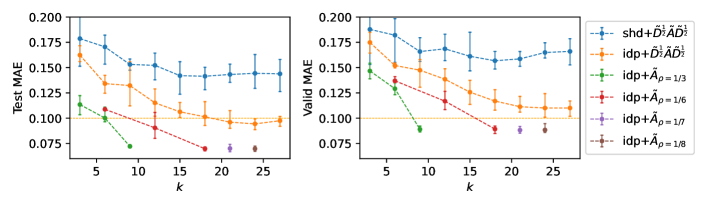

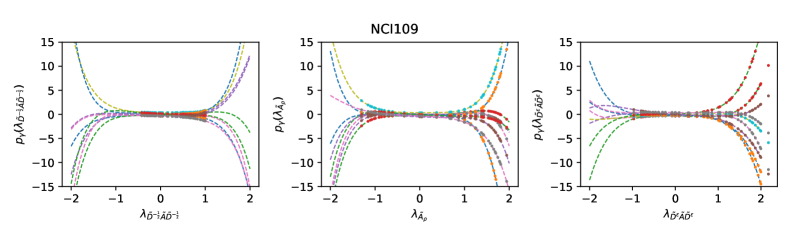

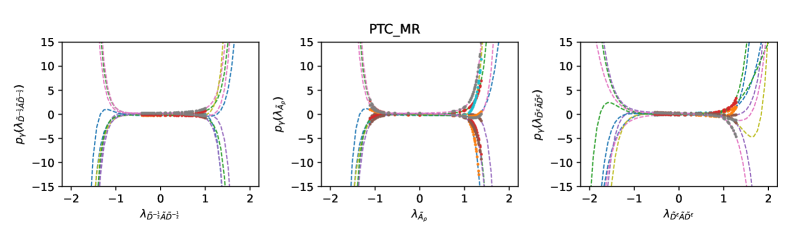

Correlation-free architecture and different filter bases. In Fig. 3, we visualize the learned filters in the correlation-free on three bases, i.e. , and . The visualizations show that each channel indeed learns a different filter on all three bases. has the bounded spectrum that is slightly close to due to the involvement of self-loop. The filters learn a similar response on all range which corresponds to different frequencies in frequency domain. and have the spectrum close to or while the filters learn diverse responses on these areas, which corresponds to more complex patterns on different frequencies. Tab. 4 shows that the correlation-free always outperforms the shared filter by a large margin on all tested bases. Both and have the bounded spectrum and they have similar performance. and narrow the range of the spectrum close to or through completely different strategies, but they have similar performance that is much better than and . This validates our analysis on the filter basis. Meanwhile, achieves more accurate control on the spectrum, and correspondingly, it slightly outperforms .

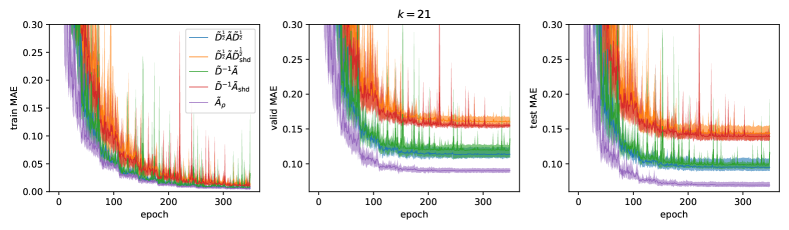

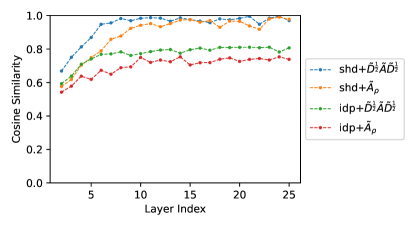

Do more bases gain improvements? In Fig. 4, we systematically evaluate the effects of the number of bases on learning graph representations, including and our with . The shared filter case, i.e. shd cannot well leverage more bases (a larger ) as the MAE stops decreasing at which is also reported by several baselines in Tab. 3. In contrast, both correlation-free cases idp and idp outperform the shared filter case by a large margin and they continuously gain improvements when increasing . The MAE of idp stops decreasing at the test MAE close to 0.09 and the valid MAE close to 0.11. By replacing with , the best test MAE is below 0.07, and the best valid MAE is close to 0.088. The bases in are controlled by both and . We use the tuple to denote a combination of and . By fixing , the curves corresponding to and show that increasing gains improvements. By fixing the upper bound of to be 1, involves 3 more bases than and outperforms . The same results are also reflected in the comparison of and . For the comparison of and , both settings achieve the lowest MAE and the difference is less obvious.

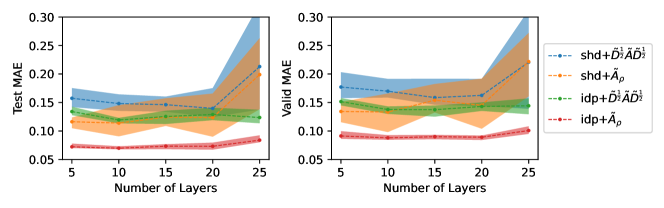

The effects of model depth. Fig. 5 shows the performance comparison between the correlation-free and shared filter architectures as depth increases. Each architecture is tested with the default basis and our proposed . We set the same number of bases in all resulting models, and each model is tested with the number of layers (depth) equal to . The results show that the correlation-free architecture preserves its performance as depth increases. The shared filter cases are quite unstable and their performance drops dramatically when depth . Also, across all depths, the correlation-free architecture almost always outperforms the shared filter and has low variance among different runs. In Appendix G, we also test cosine similarities of different layers in a deep model.

Stability. We also found that the correlation-free architecture is more stable across runs than the shared filter case, as reflected in the standard deviations in Tab. 4. This is probably because different channels may exhibit different patterns, which interfere with each other in the shared filter case, whereas the correlation-free architecture avoids this problem. Also, the results of and are more stable than and in different runs. For and , the difference between the best and the worst runs can be more than 0.02, while for and , this difference is less than 0.01. More results are given in Appendix H. The instability of and is probably because, when filter coefficients are learned from scratch without any constraints, it is difficult to maintain spectral properties, so the model easily falls into an ill-posed filter (He et al., 2021). In contrast, and , which inherently have a smoother spectrum, alleviate this problem and are therefore more appropriate for learning coefficients from scratch.

7 Conclusion

We study the effects of spectrum in GNNs. We show that in existing architectures, an unsmooth spectrum results in the correlation issue, which is an obstacle to developing deep models as well as to applying more powerful graph filters. Based on this observation, we propose the correlation-free architecture, which decouples the correlation issue from filter design. Then, we show that the spectral characteristics also hinder the approximation abilities of polynomial filters and address this by altering the graph’s spectrum. Our extensive experiments show the significant performance gain of the correlation-free architecture with powerful filters.

Acknowledgments

This work is supported in part by the National Key Research and Development Program of China (no. 2021ZD0112400), and also in part by the National Natural Science Foundation of China under grants U1811463 and 62072069.

References

- Atwood & Towsley (2016) Atwood, J. and Towsley, D. Diffusion-convolutional neural networks. In Advances in Neural Information Processing Systems, pp. 1993–2001, 2016.

- Balcilar et al. (2021) Balcilar, M., Renton, G., Héroux, P., Gaüzère, B., Adam, S., and Honeine, P. Analyzing the expressive power of graph neural networks in a spectral perspective. In International Conference on Learning Representations, 2021. URL https://openreview.net/forum?id=-qh0M9XWxnv.

- Beani et al. (2021) Beani, D., Passaro, S., Létourneau, V., Hamilton, W., Corso, G., and Liò, P. Directional graph networks. In International Conference on Machine Learning, pp. 748–758. PMLR, 2021.

- Bo et al. (2021) Bo, D., Wang, X., Shi, C., and Shen, H. Beyond low-frequency information in graph convolutional networks. In AAAI. AAAI Press, 2021.

- Bouritsas et al. (2020) Bouritsas, G., Frasca, F., Zafeiriou, S., and Bronstein, M. M. Improving graph neural network expressivity via subgraph isomorphism counting. arXiv preprint arXiv:2006.09252, 2020.

- Bresson & Laurent (2017) Bresson, X. and Laurent, T. Residual gated graph convnets. arXiv preprint arXiv:1711.07553, 2017.

- Brossard et al. (2020) Brossard, R., Frigo, O., and Dehaene, D. Graph convolutions that can finally model local structure. arXiv preprint arXiv:2011.15069, 2020.

- Cai et al. (2021) Cai, T., Luo, S., Xu, K., He, D., Liu, T.-Y., and Wang, L. Graphnorm: A principled approach to accelerating graph neural network training. In International Conference on Machine Learning, 2021.

- Chien et al. (2021) Chien, E., Peng, J., Li, P., and Milenkovic, O. Adaptive universal generalized pagerank graph neural network. In International Conference on Learning Representations, 2021. URL https://openreview.net/forum?id=n6jl7fLxrP.

- Corso et al. (2020) Corso, G., Cavalleri, L., Beaini, D., Liò, P., and Veličković, P. Principal neighbourhood aggregation for graph nets. In Advances in Neural Information Processing Systems, 2020.

- Defferrard et al. (2016) Defferrard, M., Bresson, X., and Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. In Advances in neural information processing systems, pp. 3844–3852, 2016.

- Dwivedi et al. (2020) Dwivedi, V. P., Joshi, C. K., Laurent, T., Bengio, Y., and Bresson, X. Benchmarking graph neural networks. arXiv preprint arXiv:2003.00982, 2020.

- Gao et al. (2021) Gao, X., Dai, W., Li, C., Zou, J., Xiong, H., and Frossard, P. Message passing in graph convolution networks via adaptive filter banks. arXiv preprint arXiv:2106.09910, 2021.

- Gilmer et al. (2017) Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O., and Dahl, G. E. Neural message passing for quantum chemistry. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pp. 1263–1272. JMLR. org, 2017.

- Hamilton et al. (2017) Hamilton, W., Ying, Z., and Leskovec, J. Inductive representation learning on large graphs. In Advances in Neural Information Processing Systems, pp. 1024–1034, 2017.

- Hammond et al. (2011) Hammond, D. K., Vandergheynst, P., and Gribonval, R. Wavelets on graphs via spectral graph theory. Applied and Computational Harmonic Analysis, 30(2):129–150, 2011.

- He et al. (2021) He, M., Wei, Z., Huang, Z., and Xu, H. Bernnet: Learning arbitrary graph spectral filters via bernstein approximation. In NeurIPS, 2021.

- Hu et al. (2020) Hu, W., Fey, M., Zitnik, M., Dong, Y., Ren, H., Liu, B., Catasta, M., and Leskovec, J. Open graph benchmark: Datasets for machine learning on graphs. arXiv preprint arXiv:2005.00687, 2020.

- Huang et al. (2020) Huang, W., Rong, Y., Xu, T., Sun, F., and Huang, J. Tackling over-smoothing for general graph convolutional networks. arXiv preprint arXiv:2008.09864, 2020.

- Ivanov & Burnaev (2018) Ivanov, S. and Burnaev, E. Anonymous walk embeddings. In Dy, J. and Krause, A. (eds.), Proceedings of the 35th International Conference on Machine Learning, volume 80 of Proceedings of Machine Learning Research, pp. 2191–2200, Stockholmsmässan, Stockholm Sweden, 10–15 Jul 2018. PMLR. URL http://proceedings.mlr.press/v80/ivanov18a.html.

- Jin et al. (2022) Jin, W., Liu, X., Ma, Y., Aggarwal, C., and Tang, J. Towards feature overcorrelation in deeper graph neural networks, 2022. URL https://openreview.net/forum?id=Mi9xQBeZxY5.

- Kersting et al. (2016) Kersting, K., Kriege, N. M., Morris, C., Mutzel, P., and Neumann, M. Benchmark data sets for graph kernels, 2016. http://graphkernels.cs.tu-dortmund.de.

- Kipf & Welling (2017) Kipf, T. N. and Welling, M. Semi-supervised classification with graph convolutional networks. In International Conference on Learning Representations (ICLR), 2017.

- Klicpera et al. (2019a) Klicpera, J., Bojchevski, A., and Günnemann, S. Predict then propagate: Graph neural networks meet personalized pagerank. In International Conference on Learning Representations (ICLR), 2019a.

- Klicpera et al. (2019b) Klicpera, J., Weißenberger, S., and Günnemann, S. Diffusion improves graph learning. Advances in Neural Information Processing Systems, 32:13354–13366, 2019b.

- Kreuzer et al. (2021) Kreuzer, D., Beaini, D., Hamilton, W., Létourneau, V., and Tossou, P. Rethinking graph transformers with spectral attention. arXiv preprint arXiv:2106.03893, 2021.

- Le et al. (2021) Le, T., Bertolini, M., Noé, F., and Clevert, D.-A. Parameterized hypercomplex graph neural networks for graph classification. arXiv preprint arXiv:2103.16584, 2021.

- Levie et al. (2019) Levie, R., Monti, F., Bresson, X., and Bronstein, M. M. Cayleynets: Graph convolutional neural networks with complex rational spectral filters. IEEE Transactions on Signal Processing, 67(1):97–109, 2019. doi: 10.1109/TSP.2018.2879624.

- Li et al. (2020) Li, G., Xiong, C., Thabet, A., and Ghanem, B. Deepergcn: All you need to train deeper gcns. arXiv preprint arXiv:2006.07739, 2020.

- Li et al. (2018) Li, Q., Han, Z., and Wu, X.-M. Deeper insights into graph convolutional networks for semi-supervised learning. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- Liu et al. (2020) Liu, M., Gao, H., and Ji, S. Towards deeper graph neural networks. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pp. 338–348, 2020.

- Ming Chen et al. (2020) Chen, M., Wei, Z., Huang, Z., Ding, B., and Li, Y. Simple and deep graph convolutional networks. In International Conference on Machine Learning, 2020.

- Morris et al. (2019) Morris, C., Ritzert, M., Fey, M., Hamilton, W. L., Lenssen, J. E., Rattan, G., and Grohe, M. Weisfeiler and leman go neural: Higher-order graph neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, pp. 4602–4609, 2019.

- Muhammet et al. (2020) Balcilar, M., Renton, G., Héroux, P., Gaüzère, B., Adam, S., and Honeine, P. When spectral domain meets spatial domain in graph neural networks. In Thirty-seventh International Conference on Machine Learning (ICML 2020)-Workshop on Graph Representation Learning and Beyond (GRL+ 2020), 2020.

- Murphy et al. (2019) Murphy, R. L., Srinivasan, B., Rao, V., and Ribeiro, B. Janossy pooling: Learning deep permutation-invariant functions for variable-size inputs. In International Conference on Learning Representations, 2019. URL https://openreview.net/forum?id=BJluy2RcFm.

- Neumann et al. (2016) Neumann, M., Garnett, R., Bauckhage, C., and Kersting, K. Propagation kernels: efficient graph kernels from propagated information. Machine Learning, 102(2):209–245, 2016.

- Niepert et al. (2016) Niepert, M., Ahmed, M., and Kutzkov, K. Learning convolutional neural networks for graphs. In International Conference on Machine Learning, pp. 2014–2023, 2016.

- NT & Maehara (2019) NT, H. and Maehara, T. Revisiting graph neural networks: All we have is low-pass filters, 2019.

- Oono & Suzuki (2020) Oono, K. and Suzuki, T. Graph neural networks exponentially lose expressive power for node classification. In International Conference on Learning Representations, 2020. URL https://openreview.net/forum?id=S1ldO2EFPr.

- Rong et al. (2019) Rong, Y., Huang, W., Xu, T., and Huang, J. Dropedge: Towards deep graph convolutional networks on node classification. In International Conference on Learning Representations, 2019.

- Shervashidze et al. (2009) Shervashidze, N., Vishwanathan, S., Petri, T., Mehlhorn, K., and Borgwardt, K. Efficient graphlet kernels for large graph comparison. In Artificial Intelligence and Statistics, pp. 488–495, 2009.

- Shuman et al. (2013) Shuman, D. I., Narang, S. K., Frossard, P., Ortega, A., and Vandergheynst, P. The emerging field of signal processing on graphs: Extending high-dimensional data analysis to networks and other irregular domains. IEEE signal processing magazine, 30(3):83–98, 2013.

- Simonovsky & Komodakis (2017) Simonovsky, M. and Komodakis, N. Dynamic edge-conditioned filters in convolutional neural networks on graphs. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 3693–3702, 2017.

- Spielman (2007) Spielman, D. A. Spectral graph theory and its applications. In 48th Annual IEEE Symposium on Foundations of Computer Science (FOCS’07), pp. 29–38, 2007. doi: 10.1109/FOCS.2007.56.

- Veličković et al. (2018) Veličković, P., Cucurull, G., Casanova, A., Romero, A., Liò, P., and Bengio, Y. Graph Attention Networks. International Conference on Learning Representations, 2018. URL https://openreview.net/forum?id=rJXMpikCZ.

- Verma & Zhang (2017) Verma, S. and Zhang, Z.-L. Hunt for the unique, stable, sparse and fast feature learning on graphs. In Advances in Neural Information Processing Systems, pp. 88–98, 2017.

- Vishwanathan et al. (2010) Vishwanathan, S. V. N., Schraudolph, N. N., Kondor, R., and Borgwardt, K. M. Graph kernels. Journal of Machine Learning Research, 11(Apr):1201–1242, 2010.

- Wu et al. (2019) Wu, F., Souza, A., Zhang, T., Fifty, C., Yu, T., and Weinberger, K. Simplifying graph convolutional networks. In Proceedings of the 36th International Conference on Machine Learning, pp. 6861–6871. PMLR, 2019.

- Xinyi & Chen (2019) Xinyi, Z. and Chen, L. Capsule graph neural network. In International Conference on Learning Representations, 2019. URL https://openreview.net/forum?id=Byl8BnRcYm.

- Xu et al. (2018) Xu, K., Li, C., Tian, Y., Sonobe, T., Kawarabayashi, K.-i., and Jegelka, S. Representation learning on graphs with jumping knowledge networks. In International Conference on Machine Learning, pp. 5453–5462. PMLR, 2018.

- Xu et al. (2019) Xu, K., Hu, W., Leskovec, J., and Jegelka, S. How powerful are graph neural networks? In International Conference on Learning Representations, 2019. URL https://openreview.net/forum?id=ryGs6iA5Km.

- Yanardag & Vishwanathan (2015) Yanardag, P. and Vishwanathan, S. Deep graph kernels. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 1365–1374. ACM, 2015.

- Ying et al. (2021) Ying, C., Cai, T., Luo, S., Zheng, S., Ke, G., He, D., Shen, Y., and Liu, T.-Y. Do transformers really perform bad for graph representation? arXiv preprint arXiv:2106.05234, 2021.

- Ying et al. (2018) Ying, Z., You, J., Morris, C., Ren, X., Hamilton, W., and Leskovec, J. Hierarchical graph representation learning with differentiable pooling. In Advances in Neural Information Processing Systems, pp. 4800–4810, 2018.

- Zaheer et al. (2017) Zaheer, M., Kottur, S., Ravanbakhsh, S., Poczos, B., Salakhutdinov, R. R., and Smola, A. J. Deep sets. In Guyon, I., Luxburg, U. V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., and Garnett, R. (eds.), Advances in Neural Information Processing Systems, volume 30. Curran Associates, Inc., 2017. URL https://proceedings.neurips.cc/paper/2017/file/f22e4747da1aa27e363d86d40ff442fe-Paper.pdf.

- Zhang et al. (2018) Zhang, M., Cui, Z., Neumann, M., and Chen, Y. An end-to-end deep learning architecture for graph classification. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- Zhang et al. (2021) Zhang, S., Liu, L., Gao, S., He, D., Fang, X., Li, W., Huang, Z., Su, W., and Wang, W. Litegem: Lite geometry enhanced molecular representation learning for quantum property prediction, 2021.

- Zhao & Akoglu (2020) Zhao, L. and Akoglu, L. Pairnorm: Tackling oversmoothing in gnns. In International Conference on Learning Representations, 2020. URL https://openreview.net/forum?id=rkecl1rtwB.

- Zhu & Koniusz (2020) Zhu, H. and Koniusz, P. Simple spectral graph convolution. In International Conference on Learning Representations, 2020.

- Zhu et al. (2021) Zhu, M., Wang, X., Shi, C., Ji, H., and Cui, P. Interpreting and unifying graph neural networks with an optimization framework. In Proceedings of the Web Conference 2021, pp. 1215–1226, 2021.

Appendix A Derivations of Eq. 7 and Eq. 8

Since is a symmetric matrix, assume the eigendecomposition with and .

Appendix B Proof of Proposition 3.1

Proof.

(ii) Since monotonously increases with respect to and has the upper bound 1, must be convergent.

As , we have and the convergence speed is decided by . Therefore .

Then,

∎

Appendix C More Discussions of Spectral Optimization on Filter Basis

We use to denote the eigenspace of associated with such that .

Proposition C.1.

Given a symmetric matrix with where , and can be any eigenbasis of ,

let , where is an entry-wise function applied on . Then we have

(i) ;

(ii) Meanwhile, if is injective, and is injective.

Proof.

Let . is equivalent to . For any , the geometric multiplicity of any is equal to its algebraic multiplicity, and . and . Similarly, for any , . Note that for any . Hence . As a result, for any .

If is injective, for any . Thus .

We use to denote the generalisation of the set of all eigenvalues of (also known as the spectrum of ). Let and . Suppose . To prove , we discuss two cases.

Case 1:

Then . The characteristic polynomials of and are different. Therefore, .

Case 2:

Then . We prove the equivalent proposition ””. If , . For any with geometric multiplicity , we can find the corresponding eigenvectors according to . Similarly, we can find the corresponding eigenvectors according to . Note that the eigen-decomposition is unique in terms of eigenspaces. Thus, . Therefore, for any , (As given in Proposition C.1). Correspondingly, .

∎

Proposition C.1 shows that the eigenspace of involves the eigenspace of . Therefore, is invariant to the choice of eigenbasis, i.e., for any eigenbases and of . Hence, is unique to for a given . Consistently, we denote the mapping .

When is injective, and share the same algebraic multiplicity. Otherwise, has a larger algebraic multiplicity on the corresponding eigenvalues, which may weaken the approximation ability based on the understanding of Vandermonde matrix. Also, the injectivity of serves as a guarantee that the transformation is reversible with no information loss.
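The Vandermonde argument can be made concrete with a small NumPy sketch. It assumes a degree-`K` polynomial filter whose response on the eigenvalues is the product of a Vandermonde matrix with the coefficient vector; the eigenvalues below are made-up toy values, and the helper name is hypothetical.

```python
import numpy as np

def vandermonde_rank(eigenvalues, K):
    """Rank of the n x (K+1) Vandermonde matrix with rows [1, lam, ..., lam^K]."""
    lam = np.asarray(eigenvalues, dtype=float)
    V = np.vander(lam, N=K + 1, increasing=True)
    return np.linalg.matrix_rank(V)

K = 3
distinct = [0.0, 0.5, 1.0, 1.5]  # four distinct eigenvalues
repeated = [0.0, 0.5, 0.5, 1.5]  # a non-injective map has merged two of them

rank_distinct = vandermonde_rank(distinct, K)
rank_repeated = vandermonde_rank(repeated, K)
```

With four distinct eigenvalues the matrix has full rank, so a cubic polynomial can fit any target response on them. Once two eigenvalues coincide, two rows become identical and the rank drops, which means the filter can no longer assign different responses to the merged eigenvalues.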

is also equivariant to graph isomorphism. For any two graphs and with matrix representations and (e.g., adjacency matrix, Laplacian matrix, etc.), and are isomorphic if and only if there exists a permutation matrix such that . We denote . Then

Claim 1.

is equivariant to graph isomorphism, i.e. .

Proof.

∎
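Claim 1 can be checked numerically with a minimal sketch. It assumes that the mapping applies an entry-wise function `f` to the eigenvalues of a symmetric matrix and that isomorphic graphs are related by a permutation matrix `P` through `P S P^T`; the helper `spectral_map` and the choice `f = np.tanh` (an injective function) are illustrative assumptions.

```python
import numpy as np

def spectral_map(S, f):
    """Apply f entry-wise to the eigenvalues of the symmetric matrix S."""
    lam, U = np.linalg.eigh(S)
    # (U * f(lam)) scales column i of U by f(lam_i), i.e. U diag(f(lam)).
    return (U * f(lam)) @ U.T

rng = np.random.default_rng(0)
n = 5
A = rng.random((n, n))
S = (A + A.T) / 2                  # toy symmetric graph matrix
P = np.eye(n)[rng.permutation(n)]  # random permutation matrix
f = np.tanh                        # injective entry-wise function

lhs = spectral_map(P @ S @ P.T, f)  # transform the permuted graph
rhs = P @ spectral_map(S, f) @ P.T  # permute the transformed graph
equivariant = np.allclose(lhs, rhs)
```

Both sides agree up to floating-point error because the eigenvalues of `P S P^T` equal those of `S`, and its eigenvectors are the rows-permuted eigenvectors of `S`.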

Appendix D Proof of Proposition 4.1

Proof.

Let . According to Courant-Fischer theorem,

Let . As the change of variables is non-singular, this is equivalent to

Therefore,

Similarly, we can prove . ∎

Appendix E Visualizations of the Effects of the Normalization on the Spectrum

Appendix F Visualizations of the Learned Filters

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/01e80162-db43-4085-86c5-326313c79f2e/x7.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/01e80162-db43-4085-86c5-326313c79f2e/x8.png)

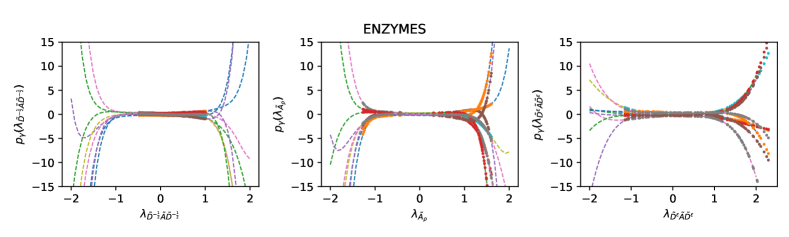

Appendix G The Correlation Issue of Deep Models

We test the absolute value of cosine similarities in different layers for a model with depth 25. For each graph, we compute the mean over all pairs of hidden signals. The final visualized results in Fig. 8 are the mean over all graphs within a randomly selected batch. To be consistent with the definition of spectral graph convolution as well as our correlation analysis, the test runs do not utilize edge features of ZINC.

The results show that on both bases, the cosine of the shared filter case converges to 1, while the correlation-free converges to for and for . (We also found that it easily leads to a large cosine similarity on ZINC, which is mainly because graphs are small such that , where is the number of nodes and is the number of hidden features.) These results do show that general GNNs suffer from the correlation issue as depth increases, while our correlation-free architecture enjoys a relatively stable performance.
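The measurement described in this appendix can be sketched as follows. The code treats each column of a hidden matrix `H` as one signal and averages the absolute cosine similarity over all column pairs. The toy propagation loop is a simplifying assumption: it uses a 5-node cycle graph with self-loops and purely linear propagation without learned weights or nonlinearities, so it only illustrates the trend of the shared case and is not the experimental setup used on ZINC.

```python
import numpy as np

def mean_abs_cosine(H, eps=1e-12):
    """Mean |cosine similarity| over all pairs of distinct columns of H (n x d)."""
    norms = np.linalg.norm(H, axis=0, keepdims=True)
    Hn = H / np.maximum(norms, eps)  # unit-normalize every signal
    C = np.abs(Hn.T @ Hn)            # d x d matrix of |cos|
    iu = np.triu_indices(H.shape[1], k=1)
    return C[iu].mean()

# Toy graph (hypothetical): 5-node cycle with self-loops, symmetrically normalized.
n, d, depth = 5, 3, 25
A = np.zeros((n, n))
for i in range(n):
    A[i, (i + 1) % n] = A[(i + 1) % n, i] = 1.0
A_hat = A + np.eye(n)
deg = A_hat.sum(axis=1)
S = A_hat / np.sqrt(np.outer(deg, deg))

rng = np.random.default_rng(0)
H = rng.standard_normal((n, d))

sims = []
for _ in range(depth):
    sims.append(mean_abs_cosine(H))
    H = S @ H  # every channel is propagated with the same S
```

In this toy setting every signal is pulled toward the dominant eigenvector of `S`, so the recorded similarities approach 1 as the number of propagation steps grows, which mirrors the behaviour reported above for the shared filter case.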

Appendix H More Results

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/01e80162-db43-4085-86c5-326313c79f2e/x13.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/01e80162-db43-4085-86c5-326313c79f2e/x14.png)