A new point of NP-hardness for 2-to-1 Label Cover

Abstract

We show that given a satisfiable instance of the 2-to-1 Label Cover problem, it is NP-hard to find a $(\frac{23}{24}+\epsilon)$-satisfying assignment.

1 Introduction

Over the past decade, a significant amount of progress has been made in the field of hardness of approximation via results based on the conjectured hardness of certain forms of the Label Cover problem. The Unique Games Conjecture (UGC) of Khot [Kho02] states that it is NP-hard to distinguish between nearly satisfiable and almost completely unsatisfiable instances of Unique, or 1-to-1, Label Cover. Using the UGC as a starting point, we now have optimal inapproximability results for Vertex Cover [KR03], Max-Cut [KKMO07], and many other basic constraint satisfaction problems (CSPs). Indeed, assuming the UGC we have essentially optimal inapproximability results for all CSPs [Rag08]. In short, modulo the understanding of Unique Label Cover itself, we have an excellent understanding of the (in)approximability of a wide range of problems.

Where the UGC’s explanatory powers falter is in pinning down the approximability of satisfiable CSPs, that is, the task of finding a good assignment to a CSP when guaranteed that the CSP is fully satisfiable. For example, we know from the work of Håstad [Hås01] that given a fully satisfiable 3Sat instance, it is NP-hard to satisfy a $\frac78+\epsilon$ fraction of the clauses for any $\epsilon > 0$. However, given a fully satisfiable 1-to-1 Label Cover instance, it is completely trivial to find a fully satisfying assignment. Thus the UGC cannot be used as the starting point for hardness results for satisfiable CSPs. Because of this, Khot additionally posed his $d$-to-1 Conjectures:

Conjecture 1.1 ([Kho02]).

For every integer $d \geq 2$ and every $\epsilon > 0$, there is a label set size $q$ such that it is NP-hard to $(1, \epsilon)$-decide the $d$-to-1 Label Cover problem.

Here by $(c,s)$-deciding a CSP we mean the task of determining whether an instance is at least $c$-satisfiable or less than $s$-satisfiable. It is well known (from the Parallel Repetition Theorem [FK94, Raz95]) that the conjecture is true if $d$ is allowed to depend on $\epsilon$. The strength of this conjecture, therefore, is that it is stated for each fixed $d$ greater than 1.

The $d$-to-1 Conjectures have been used to resolve the approximability of several basic “satisfiable CSP” problems. The first result along these lines was due to Dinur, Mossel, and Regev [DMR09], who showed that the 2-to-1 Conjecture implies that it is NP-hard to $C$-color a 4-colorable graph for any constant $C$. (They also showed hardness for 3-colorable graphs via another Unique Games variant.) O’Donnell and Wu [OW09] showed that the $d$-to-1 Conjecture, for any fixed $d$, implies that it is NP-hard to $(1, \frac58+\epsilon)$-approximate instances of a certain 3-bit predicate, the “Not Two” predicate. This is an optimal result among all 3-bit predicates, since Zwick [Zwi98] showed that every satisfiable 3-bit CSP instance can be efficiently $(1, \frac58)$-approximated. In another example, Guruswami and Sinop [GS09] have shown that the 2-to-1 Conjecture implies that given a $q$-colorable graph, it is NP-hard to find a $q$-coloring in which less than a $\frac1q - O(\frac{\ln q}{q^2})$ fraction of the edges are monochromatic. This result would be tight up to the $O(\cdot)$ by an algorithm of Frieze and Jerrum [FJ97]. It is therefore clear that settling the $d$-to-1 Conjectures, especially in the most basic case of $d = 2$, is an important open problem.

Regarding the hardness of the -to- Label Cover problem, the only evidence we have is a family of integrality gaps for the canonical SDP relaxation of the problem, in [GKO+10]. Regarding algorithms for the problem, an important recent line of work beginning in [ABS10] (see also [BRS11, GS11, Ste10]) has sought subexponential-time algorithms for Unique Label Cover and related problems. In particular, Steurer [Ste10] has shown that for any constant and label set size, there is an -time algorithm which, given a satisfiable -to- Label Cover instance, finds an assignment satisfying an -fraction of the constraints. E.g., there is a -time algorithm which -approximates -to- Label Cover, where is a certain universal constant.

In light of this, it is interesting not only to seek NP-hardness results for certain approximation thresholds, but to additionally seek evidence that nearly full exponential time is required for these thresholds. This can be done by assuming the Exponential Time Hypothesis (ETH) [IP01] and by reducing from the Moshkovitz–Raz Theorem [MR10], which gives a near-linear size reduction from 3Sat to the standard Label Cover problem with subconstant soundness. In this work, we show reductions from 3Sat to the problem of $(1, s+\epsilon)$-approximating several CSPs, for certain values of $s$ and for all $\epsilon > 0$. In fact, though we omit it in our theorem statements, it can be checked that all of the reductions in this paper are quasilinear in size for , for some .

1.1 Our results

In this paper, we focus on proving NP-hardness for the 2-to-1 Label Cover problem. To the best of our knowledge, no explicit NP-hardness factor has previously been stated in the literature. However, it is “folklore” that one can obtain an explicit one for label set sizes 3 & 6 by performing the “constraint-variable” reduction on an NP-hardness result for 3-coloring (more precisely, Max-3-Colorable-Subgraph). The best known hardness for 3-coloring is due to Guruswami and Sinop [GS09], who showed a factor $\frac{32}{33}$-hardness via a somewhat involved gadget reduction from the 3-query adaptive PCP result of [GLST98]. This yields NP-hardness of $(1, \frac{65}{66}+\epsilon)$-approximating 2-to-1 Label Cover with label set sizes 3 & 6. It is not known how to take advantage of larger label set sizes. On the other hand, for label set sizes 2 & 4 it is known that satisfying assignments to satisfiable 2-to-1 Label Cover instances can be found in polynomial time.

The main result of our paper gives an improved hardness result:

Theorem 1.2.

For all $\epsilon > 0$, $(1, \frac{23}{24}+\epsilon)$-deciding the 2-to-1 Label Cover problem with label set sizes 3 & 6 is NP-hard.

By duplicating labels, this result also holds for label set sizes $3k$ & $6k$ for any integer $k \geq 1$.

Let us describe the high-level idea behind our result. The folklore constraint-variable reduction from 3-coloring to 2-to-1 Label Cover would work just as well if we started from “3-coloring with literals” instead. By this we mean the CSP with domain $\mathbb{Z}_3$ and constraints of the form “$x_i - x_j \neq b$” for constants $b \in \mathbb{Z}_3$. Starting from this CSP (which we call 2NLin($\mathbb{Z}_3$)) has two benefits: first, it is at least as hard as 3-coloring and hence could yield a stronger hardness result; second, it is a bit more “symmetrical” for the purposes of designing reductions. We obtain the following hardness result for 2NLin($\mathbb{Z}_3$).

Theorem 1.3.

For all $\epsilon > 0$, it is NP-hard to $(1, \frac{11}{12}+\epsilon)$-decide the 2NLin problem.

As 3-coloring is a special case of 2NLin, [GS09] also shows that $(1, \frac{32}{33}+\epsilon)$-deciding 2NLin is NP-hard for all $\epsilon > 0$, and to our knowledge this was previously the only hardness known for 2NLin. The best current algorithm achieves an approximation ratio of (and does not need the instance to be satisfiable) [GW04]. To prove Theorem 1.3, we proceed by designing an appropriate “function-in-the-middle” dictator test, as in the recent framework of [OW12]. Although the [OW12] framework gives a direct translation of certain types of function-in-the-middle tests into hardness results, we cannot employ it in a black-box fashion. Among other reasons, [OW12] assumes that the test has “built-in noise”, but we cannot afford this, as we need our test to have perfect completeness.

Thus, we need a different proof to derive a hardness result from this function-in-the-middle test. We first accomplished this by an analysis similar to the Fourier-based proof of hardness given in Appendix F of [OW12]. Just as that proof “reveals” that the function-in-the-middle test can be equivalently thought of as Håstad’s 3Lin test composed with the 3Lin-to-2Lin gadget of [TSSW00], our proof for the function-in-the-middle test revealed it to be the composition of a function test for a certain four-variable CSP with a gadget. We call this four-variable CSP 4-Not-All-There, or 4NAT for short. Because it is a 4-CSP, we are able to prove the following NP-hardness of approximation result for it using a classic, Håstad-style Fourier-analytic proof.

Theorem 1.4.

For all $\epsilon > 0$, it is NP-hard to $(1, \frac23+\epsilon)$-decide the 4NAT problem.

Thus, the final form in which we present our Theorem 1.2 is as a reduction from Label Cover to 4NAT using a function test (yielding Theorem 1.4), followed by a 4NAT-to-2NLin gadget (yielding Theorem 1.3), followed by the constraint-variable reduction to 2-to-1 Label Cover. Indeed, all of the technology needed to carry out this proof has been in place for over a decade, but without the function-in-the-middle framework of [OW12] it seems unlikely that we would have pinpointed the 4NAT predicate as a good starting point.

1.2 Organization

We leave to Section 2 most of the definitions, including those of the CSPs we use. The heart of the paper is in Section 3, where we give both the and 4NAT function tests, explain how one is derived from the other, and then perform the Fourier analysis for the 4NAT test. The actual hardness proof for 4NAT is presented in Section 4, and it follows mostly the techniques put in place by Håstad in [Hås01].

2 Preliminaries

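We primarily work with strings $x \in \mathbb{Z}_3^N$ for some integer $N$. We write $x_i$ to denote the $i$th coordinate of $x$. Oftentimes, our strings are “blocked” into $n$ “blocks” of size $d$, so that $N = dn$. In this case, we write $x[i]$ for the $i$th block of $x$, and $x[i]_j$ for the $j$th coordinate of this block. Define the function $\pi: [dn] \to [n]$ such that $\pi(i) = j$ if $i$ falls in the $j$th block of size $d$ (e.g., $\pi(i) = 1$ for $i \in \{1, \dots, d\}$, $\pi(i) = 2$ for $i \in \{d+1, \dots, 2d\}$, and so on).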

2.1 Definitions of problems

An instance $\mathcal{I}$ of a constraint satisfaction problem (CSP) is a set of variables $V$, a set of labels $L$, and a weighted list of constraints on these variables. We assume that the weights of the constraints are nonnegative and sum to 1. The weights therefore induce a probability distribution on the constraints. Given an assignment $F: V \to L$ to the variables, the value of $F$ is the probability that $F$ satisfies a constraint drawn from this probability distribution. The optimum of $\mathcal{I}$ is the highest value of any assignment. We say that $\mathcal{I}$ is $c$-satisfiable if its optimum is at least $c$. If it is 1-satisfiable we simply call it satisfiable.

We define a CSP $P$ to be a set of CSP instances. Typically, these instances will have similar constraints. We will study the problem of $(c,s)$-deciding $P$. This is the problem of determining whether an instance of $P$ is at least $c$-satisfiable or less than $s$-satisfiable. Related is the problem of $(c,s)$-approximating $P$, in which one is given a $c$-satisfiable instance of $P$ and asked to find an assignment of value at least $s$. It is easy to see that $(c,s)$-deciding $P$ is at least as easy as $(c,s)$-approximating $P$. Thus, as all our hardness results are for $(c,s)$-deciding CSPs, we also prove hardness for $(c,s)$-approximating these CSPs.

We now state the three CSPs that are the focus of our paper.

2NLin($\mathbb{Z}_3$):

In this CSP the label set is $\mathbb{Z}_3$ and the constraints are of the form
$$x_i - x_j \neq b \pmod 3, \qquad b \in \mathbb{Z}_3.$$

The special case when each right-hand side $b$ is 0 is the 3-coloring problem. We often drop the ($\mathbb{Z}_3$) from this notation and simply write 2NLin. The reader may think of the ‘N’ in 2NLin as standing for ‘N’on-linear, although we prefer to think of it as standing for ‘N’early-linear. The reason is that when generalizing to moduli , the techniques in this paper generalize to constraints of the form “” rather than “”. For the ternary version of this constraint, “”, it is folklore (Venkatesan Guruswami and Subhash Khot, personal communications) that a simple modification of Håstad’s work [Hås01] yields NP-hardness of -approximation.

4-Not-All-There:

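For the 4-Not-All-There problem, denoted 4NAT, we define $\mathrm{4NAT}: \mathbb{Z}_3^4 \to \{0,1\}$ to have output 1 if and only if at least one of the elements of $\mathbb{Z}_3$ is not present among the four inputs. The 4NAT CSP has label set $\mathbb{Z}_3$ and constraints of the form $\mathrm{4NAT}(x_{i_1}+b_1, x_{i_2}+b_2, x_{i_3}+b_3, x_{i_4}+b_4)$, where the $b_k$’s are constants in $\mathbb{Z}_3$.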

We additionally define the “Two Pairs” predicate $\mathrm{TwoPair}: \mathbb{Z}_3^4 \to \{0,1\}$, which has output 1 if and only if its input contains two distinct elements of $\mathbb{Z}_3$, each appearing twice. Note that an input which satisfies TwoPair also satisfies 4NAT.
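As a quick sanity check of these two definitions (our own illustration, not part of the original argument), the following Python sketch enumerates all $3^4 = 81$ inputs. The helper names `four_nat` and `two_pair` are hypothetical. It confirms that 45 inputs (a $\frac59$ fraction) satisfy 4NAT, that 18 inputs satisfy TwoPair, and that every TwoPair input also satisfies 4NAT.

```python
from itertools import product

def four_nat(t):
    """4NAT: 1 iff some element of Z_3 is missing from the 4-tuple t."""
    return int(len(set(t)) < 3)

def two_pair(t):
    """TwoPair: 1 iff t uses exactly two distinct values, each exactly twice."""
    vals = set(t)
    return int(len(vals) == 2 and all(t.count(v) == 2 for v in vals))

inputs = list(product(range(3), repeat=4))
nat_count = sum(four_nat(t) for t in inputs)   # satisfying inputs of 4NAT
pair_count = sum(two_pair(t) for t in inputs)  # satisfying inputs of TwoPair
# TwoPair implies 4NAT on every input
implies = all(four_nat(t) for t in inputs if two_pair(t))
print(nat_count, pair_count, implies)  # 45 18 True
```

The count 45/81 = 5/9 is the probability that a uniformly random input satisfies 4NAT.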

$d$-to-1 Label Cover:

An instance of the $d$-to-1 Label Cover problem is a bipartite graph $G = (U \cup V, E)$, a label set size $n$, and a $d$-to-1 map $\pi_e: [dn] \to [n]$ for each edge $e \in E$. The elements of $U$ are labeled from the set $[dn]$, and the elements of $V$ are labeled from the set $[n]$. A labeling $F$ satisfies an edge $e = (u,v)$ if $\pi_e(F(u)) = F(v)$. Of particular interest is the $d = 2$ case, i.e., 2-to-1 Label Cover.

Label Cover serves as the starting point for most NP-hardness of approximation results. We use the following theorem of Moshkovitz and Raz:

Theorem 2.1 ([MR10]).

For any there exists such that the problem of deciding a 3Sat instance of size can be Karp-reduced in time to the problem of -deciding -to-1 Label Cover instance of size with label set size .

2.2 Gadgets

A typical way of relating two separate CSPs is by constructing a gadget reduction which translates from one to the other. A gadget reduction from $P_1$ to $P_2$ is one which maps any $P_1$ constraint into a weighted set of $P_2$ constraints. The $P_2$ constraints are over the same set of variables as the $P_1$ constraint, plus some new, auxiliary variables (these auxiliary variables are not shared between constraints of $P_1$). We require that for every assignment which satisfies the $P_1$ constraint, there is a way to label the auxiliary variables to fully satisfy the $P_2$ constraints. Furthermore, there is some parameter $0 < s < 1$ such that for every assignment which does not satisfy the $P_1$ constraint, the optimum labeling to the auxiliary variables will satisfy exactly an $s$ fraction of the $P_2$ constraints. Such a gadget reduction we call a $(1,s)$-gadget-reduction from $P_1$ to $P_2$. The following proposition is well-known:

Proposition 2.2.

Suppose it is NP-hard to $(c,s)$-decide $P_1$. If there exists a $(1,s')$-gadget-reduction from $P_1$ to $P_2$, then it is NP-hard to $(c + (1-c)s',\ s + (1-s)s')$-decide $P_2$.

We note that the notation -gadget-reduction is similar to a piece of notation employed by [TSSW00], but the two have different (though related) definitions.

2.3 Fourier analysis on $\mathbb{Z}_3^n$

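Let $\omega = e^{2\pi i/3}$ and set $U_3 = \{1, \omega, \omega^2\}$. For $\alpha \in \mathbb{Z}_3^n$, consider the Fourier character $\chi_\alpha: \mathbb{Z}_3^n \to U_3$ defined as $\chi_\alpha(x) = \omega^{\alpha \cdot x}$, where $\alpha \cdot x = \sum_i \alpha_i x_i$. Then it is easy to see that $\mathbf{E}_x[\chi_\alpha(x)\overline{\chi_\beta(x)}] = \mathbf{1}[\alpha = \beta]$, where here and throughout $x$ has the uniform probability distribution on $\mathbb{Z}_3^n$ unless otherwise specified. As a result, the Fourier characters form an orthonormal basis for the set of functions $f: \mathbb{Z}_3^n \to \mathbb{C}$ under the inner product $\langle f, g\rangle = \mathbf{E}_x[f(x)\overline{g(x)}]$; i.e.,
$$f(x) = \sum_{\alpha \in \mathbb{Z}_3^n} \hat f(\alpha)\,\chi_\alpha(x),$$

where the $\hat f(\alpha)$’s are complex numbers defined as $\hat f(\alpha) = \langle f, \chi_\alpha\rangle$. For $\alpha \in \mathbb{Z}_3^n$, we use the notation $\mathrm{supp}(\alpha)$ to denote the set $\{i : \alpha_i \neq 0\}$ and $|\alpha|$ to denote the number of nonzero coordinates in $\alpha$. When $d$ is clear from context and $\beta \in \mathbb{Z}_3^{dn}$, define $\pi_3(\beta) \in \mathbb{Z}_3^n$ so that $\pi_3(\beta)_i = \sum_{j \in \pi^{-1}(i)} \beta_j$ (recall the notation $\pi$ from the beginning of this section).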

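We have Parseval’s identity: for every $f: \mathbb{Z}_3^n \to U_3$ it holds that $\sum_{\alpha} |\hat f(\alpha)|^2 = \mathbf{E}_x[|f(x)|^2] = 1$. Note that this implies that $|\hat f(\alpha)| \leq 1$ for all $\alpha$, as otherwise the sum would be greater than 1. A function $f: \mathbb{Z}_3^n \to \mathbb{Z}_3$ is said to be folded if for every $x \in \mathbb{Z}_3^n$ and $c \in \mathbb{Z}_3$, it holds that $f(x + c\mathbf{1}) = f(x) + c$, where $\mathbf{1} = (1, 1, \dots, 1)$. When we identify $f$ with $\omega^{f}$, this reads $f(x + c\mathbf{1}) = \omega^c f(x)$.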

Proposition 2.3.

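Let $f: \mathbb{Z}_3^n \to U_3$ be folded. Then $\hat f(\alpha) = 0$ whenever $\sum_i \alpha_i \not\equiv 1 \pmod 3$. In particular, $\hat f(0) = 0$.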

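Proof.

Fix $\alpha \in \mathbb{Z}_3^n$. Since $x + \mathbf{1}$ is uniformly distributed whenever $x$ is, and $f(x + \mathbf{1}) = \omega f(x)$,
$$\hat f(\alpha) = \mathbf{E}_x\big[f(x+\mathbf{1})\,\overline{\chi_\alpha(x+\mathbf{1})}\big] = \omega \cdot \omega^{-\sum_i \alpha_i}\,\mathbf{E}_x\big[f(x)\,\overline{\chi_\alpha(x)}\big] = \omega^{1 - \sum_i \alpha_i}\,\hat f(\alpha).$$

So if $\hat f(\alpha) \neq 0$, then $\omega^{1 - \sum_i \alpha_i}$ must be 1, i.e., $\sum_i \alpha_i \equiv 1 \pmod 3$. Taking $\alpha = 0$ gives $\hat f(0) = 0$, as promised. ∎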
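A small numerical illustration of Proposition 2.3, written as a Python sketch under our own conventions: `random_folded` builds a random folded $f: \mathbb{Z}_3^n \to \mathbb{Z}_3$ by choosing $f$ freely on the points whose first coordinate is 0 and extending via $f(x + c\mathbf{1}) = f(x) + c$, and `fourier` computes the coefficients of $\omega^f$ by brute force. Both helper names, the seed, and $n = 3$ are hypothetical choices. It checks that every coefficient with $\sum_i \alpha_i \not\equiv 1 \pmod 3$ vanishes (so in particular $\hat f(0) = 0$) and that Parseval’s identity holds.

```python
import cmath
import random
from itertools import product

OMEGA = cmath.exp(2j * cmath.pi / 3)  # primitive cube root of unity

def random_folded(n, rng):
    """Random folded f: Z_3^n -> Z_3, i.e. f(x + c*1) = f(x) + c (mod 3).
    Each orbit {x, x+1, x+2} has exactly one point with x[0] == 0; choose f freely there."""
    f = {}
    for x in product(range(3), repeat=n):
        if x[0] == 0:
            v = rng.randrange(3)
            for c in range(3):
                f[tuple((xi + c) % 3 for xi in x)] = (v + c) % 3
    return f

def fourier(f, n):
    """hat f(alpha) = E_x[omega^{f(x)} * conj(chi_alpha(x))], chi_alpha(x) = omega^{alpha . x}."""
    pts = list(product(range(3), repeat=n))
    return {
        a: sum(OMEGA ** ((f[x] - sum(ai * xi for ai, xi in zip(a, x))) % 3) for x in pts) / len(pts)
        for a in pts
    }

rng = random.Random(0)
n = 3
f = random_folded(n, rng)
fh = fourier(f, n)
# Largest coefficient among alpha with sum(alpha) != 1 (mod 3); should be ~0.
max_bad = max(abs(v) for a, v in fh.items() if sum(a) % 3 != 1)
parseval = sum(abs(v) ** 2 for v in fh.values())
print(max_bad < 1e-9, abs(parseval - 1) < 1e-9)  # True True
```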

3 2-to-1 hardness

In this section, we give our hardness result for 2-to-1 Label Cover, following the proof outline described at the end of Section 1.1.

Theorem 1.2 (restated).

For all $\epsilon > 0$, it is NP-hard to $(1, \frac{23}{24}+\epsilon)$-decide the 2-to-1 Label Cover problem.

First, we state a pair of simple gadget reductions:

Lemma 3.1.

There is a $(1, \frac34)$-gadget-reduction from 4NAT to 2NLin.

Lemma 3.2.

There is a $(1, \frac12)$-gadget-reduction from 2NLin to 2-to-1 Label Cover.

Together with Proposition 2.2, these imply the following corollary:

Corollary 3.3.

There is a $(1, \frac78)$-gadget-reduction from 4NAT to 2-to-1 Label Cover. Thus, if it is NP-hard to $(1, s)$-decide the 4NAT problem, then it is NP-hard to $(1, \frac{7+s}{8})$-decide the 2-to-1 Label Cover problem.
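The arithmetic behind this corollary and the resulting chain of soundness values can be checked with exact fractions. The Python sketch below is our own illustration: `apply_gadget` (a hypothetical name) applies Proposition 2.2 as the map $(c, s) \mapsto (c + (1-c)s', s + (1-s)s')$, using gadget soundness $\frac34$ for 4NAT to 2NLin (three of four equations) and $\frac12$ for 2NLin to 2-to-1 Label Cover (one of two constraints), and starting from 4NAT soundness $\frac23$ with the $\epsilon$ terms dropped.

```python
from fractions import Fraction

def apply_gadget(c, s, s_gadget):
    # Proposition 2.2: a (1, s_gadget)-gadget maps (c, s) to these parameters.
    return c + (1 - c) * s_gadget, s + (1 - s) * s_gadget

s_4nat_to_2nlin = Fraction(3, 4)  # an unsatisfied 4NAT constraint keeps 3 of 4 equations
s_2nlin_to_lc = Fraction(1, 2)    # an unsatisfied 2NLin constraint keeps 1 of 2 edges
# Composite gadget: 3 satisfied equations give 2 edges each, the violated one gives 1.
s_composite = (3 * 2 + 1) / Fraction(8)

c, s = Fraction(1), Fraction(2, 3)            # 4NAT soundness, epsilon dropped
c1, s1 = apply_gadget(c, s, s_4nat_to_2nlin)  # 2NLin
c2, s2 = apply_gadget(c1, s1, s_2nlin_to_lc)  # 2-to-1 Label Cover
print(s_composite, s1, s2)  # 7/8 11/12 23/24
```

Applying the composite $(1, \frac78)$-gadget directly to $(1, \frac23)$ gives the same final value $\frac{23}{24}$.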

The gadget reduction from 4NAT to 2NLin relies on the simple fact that if $a_1, a_2, a_3, a_4 \in \mathbb{Z}_3$ satisfy the 4NAT predicate, then there is some element of $\mathbb{Z}_3$ that none of them equal.

Proof of Lemma 3.1.

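A 4NAT constraint on the variables $x_1, x_2, x_3, x_4$ is of the form
$$\mathrm{4NAT}(x_1 + b_1,\, x_2 + b_2,\, x_3 + b_3,\, x_4 + b_4),$$

where the $b_i$’s are all constants in $\mathbb{Z}_3$. To create the 2NLin instance, introduce the auxiliary variable $y$ and add the four 2NLin equations
$$y - x_i \neq b_i, \qquad i = 1, 2, 3, 4. \tag{1}$$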

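If $x$ is an assignment which satisfies the 4NAT constraint, then there is some $c \in \mathbb{Z}_3$ such that $x_i + b_i \neq c$ for all $i$. Assigning $c$ to $y$ satisfies all four equations (1). On the other hand, if $x$ doesn’t satisfy the 4NAT constraint, then $\{x_1 + b_1, \dots, x_4 + b_4\} = \mathbb{Z}_3$, so no assignment to $y$ satisfies all four equations. However, in this case one value appears twice among the four values $x_i + b_i$ and the other two appear once each; assigning to $y$ a value that appears once satisfies three of the equations. This gives a $(1, \frac34)$-gadget-reduction from 4NAT to 2NLin, which proves the lemma. ∎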
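To double-check the case analysis in this proof, the following Python sketch (our own check; helper names are hypothetical) brute-forces every assignment $x \in \mathbb{Z}_3^4$ and every constant vector $b \in \mathbb{Z}_3^4$, and compares the best number of satisfied equations $y - x_i \neq b_i$ over $y \in \mathbb{Z}_3$ with the claimed value: 4 when $\mathrm{4NAT}(x_1 + b_1, \dots, x_4 + b_4)$ holds and 3 otherwise.

```python
from itertools import product

def four_nat(t):
    return len(set(t)) < 3

def best_aux(xs, bs):
    """Max over y in Z_3 of the number of equations y - x_i != b_i (mod 3) satisfied."""
    return max(sum((y - x - b) % 3 != 0 for x, b in zip(xs, bs)) for y in range(3))

ok = all(
    best_aux(xs, bs) == (4 if four_nat(tuple((x + b) % 3 for x, b in zip(xs, bs))) else 3)
    for xs in product(range(3), repeat=4)
    for bs in product(range(3), repeat=4)
)
print(ok)  # True
```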

The reduction from 2NLin to 2-to-1 Label Cover is the well-known constraint-variable reduction, and uses the fact that in the equation $x_1 - x_2 \neq b$, for any assignment to $x_1$ there are two valid assignments to $x_2$, and vice versa.

Proof of Lemma 3.2.

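A 2NLin constraint on the variables $x_1, x_2$ is of the form
$$x_1 - x_2 \neq b$$

for some $b \in \mathbb{Z}_3$. To create the 2-to-1 Label Cover instance, introduce the variable $y$, which will be labeled by one of the six possible functions $F: \{1,2\} \to \mathbb{Z}_3$ which satisfy $F(1) - F(2) \neq b$. Finally, introduce the 2-to-1 constraints $y(1) = x_1$ and $y(2) = x_2$, where $y(k)$ denotes the value at $k$ of the function labeling $y$.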

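If $x$ is an assignment which satisfies the 2NLin constraint, then we label $y$ with the function $F$ given by $F(1) = x_1$ and $F(2) = x_2$ (a valid label, since $x_1 - x_2 \neq b$). In this case,
$$y(1) = F(1) = x_1 \quad\text{and}\quad y(2) = F(2) = x_2.$$

Thus, both equations are satisfied. On the other hand, if $x$ does not satisfy the 2NLin constraint, then any $F$ which labels $y$ disagrees with $x$ on at least one of $x_1$ or $x_2$, since $(x_1, x_2)$ itself is not a valid label. It is easy to see, though, that $F$ can be selected to satisfy one of the two equations (e.g., take $F(1) = x_1$ and let $F(2)$ be either of the two values different from $x_1 - b$). This gives a $(1, \frac12)$-gadget-reduction from 2NLin to 2-to-1 Label Cover, which proves the lemma. ∎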
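A brute-force check of this gadget, as a Python sketch of our own (hypothetical helper names): for every $b$ there are exactly six valid labels, each constraint $y(k) = x_k$ is 2-to-1, and the best label satisfies both constraints when $x_1 - x_2 \neq b$ and exactly one of them otherwise.

```python
from itertools import product

def labels(b):
    """The six labels F = (F(1), F(2)) in Z_3^2 with F(1) - F(2) != b (mod 3)."""
    return [(a1, a2) for a1, a2 in product(range(3), repeat=2) if (a1 - a2 - b) % 3 != 0]

def best_edges(x1, x2, b):
    """Max number of the constraints y(1) = x1, y(2) = x2 that a single label satisfies."""
    return max((f1 == x1) + (f2 == x2) for f1, f2 in labels(b))

# (constraint satisfied?, best number of satisfied 2-to-1 constraints) for every x1, x2, b
results = {(x1, x2, b): ((x1 - x2 - b) % 3 != 0, best_edges(x1, x2, b))
           for x1, x2, b in product(range(3), repeat=3)}
gadget_ok = all(best == (2 if sat else 1) for sat, best in results.values())
# Each constraint y(k) = x_k is 2-to-1: every value of x_k is consistent with 2 labels.
two_to_one = all(sum(lab[k] == v for lab in labels(b)) == 2
                 for b in range(3) for k in (0, 1) for v in range(3))
print(gadget_ok, two_to_one)  # True True
```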

3.1 A pair of tests

Now that we have shown that 2NLin hardness results translate into 2-to-1 Label Cover hardness results, we present our 2NLin function test. Even though we don’t directly use it, it helps explain how we were led to consider the 4NAT CSP. Furthermore, the Fourier analysis that we eventually use for the 4NAT Test could instead be performed directly on the 2NLin Test without any direct reference to the 4NAT predicate. The test is:

2NLin Test

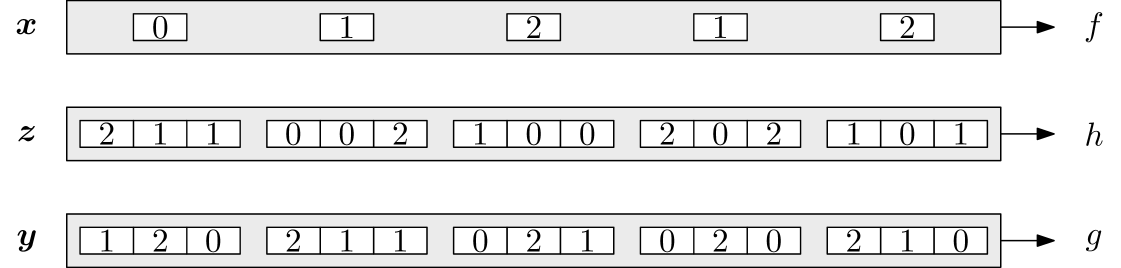

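Given folded functions $f: \mathbb{Z}_3^{dn} \to \mathbb{Z}_3$, $g: \mathbb{Z}_3^n \to \mathbb{Z}_3$:

- Let $x \in \mathbb{Z}_3^n$ and $y \in \mathbb{Z}_3^{dn}$ be independent and uniformly random.
- For each $i \in [dn]$, select $z_i$ independently and uniformly from the elements of $\mathbb{Z}_3 \setminus \{x_{\pi(i)}, y_i\}$.
- With probability $\frac14$, test $g(x) \neq f(z)$; with probability $\frac34$, test $f(y) \neq f(z)$.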

Above is an illustration of the test. We remark that for any given block $i \in [n]$, $z[i]$ determines $x_i$ (with very high probability when $d$ is large), because as soon as $z[i]$ contains two distinct elements of $\mathbb{Z}_3$, $x_i$ must be the third element of $\mathbb{Z}_3$. Notice also that in every column of indices, the input to $f$ in the query $f(z)$ always differs from the inputs to both $g(x)$ and $f(y)$, since $z_i \notin \{x_{\pi(i)}, y_i\}$. Thus, “matching dictator” assignments pass the test with probability 1. (This is the case in which $f(y) = y_i$ and $g(x) = x_{\pi(i)}$ for some $i \in [dn]$.) On the other hand, if $f$ and $g$ are “nonmatching dictators”, then they succeed with probability only $\frac{11}{12}$. This turns out to be essentially optimal among functions $f$ and $g$ without “matching influential coordinates/blocks”. We will obtain the following theorem:
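The dictator calculations above can be reproduced exactly by looking at a single column. The Python sketch below is our own check; it assumes the test weights $\frac14$ (for $g(x) \neq f(z)$) and $\frac34$ (for $f(y) \neq f(z)$), and for nonmatching dictators it models $g$’s value as a uniform element of $\mathbb{Z}_3$ independent of $f$’s column, since $g$ then reads a coordinate of $x$ outside block $\pi(i)$. Function names are hypothetical.

```python
from fractions import Fraction
from itertools import product

def column_law():
    """Joint law of (x, y, z) in one column: x, y uniform, z uniform on Z_3 minus {x, y}."""
    law = {}
    for x, y in product(range(3), repeat=2):
        zs = [z for z in range(3) if z not in (x, y)]
        for z in zs:
            law[(x, y, z)] = Fraction(1, 9 * len(zs))
    return law

def pass_prob(matching, w_g=Fraction(1, 4), w_f=Fraction(3, 4)):
    """Pass probability of the 2NLin Test for dictators f(y) = y_i and g(x) = x_j.
    If matching (j = pi(i)), g sees this column's x; otherwise g's value is an
    independent uniform element of Z_3."""
    total = Fraction(0)
    for (x, y, z), p in column_law().items():
        g_values = [x] if matching else [0, 1, 2]
        for gx in g_values:
            q = p / len(g_values)
            total += q * (w_g * (gx != z) + w_f * (y != z))
    return total

print(pass_prob(True), pass_prob(False))  # 1 11/12
```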

Theorem 1.3 restated.

For all $\epsilon > 0$, it is NP-hard to $(1, \frac{11}{12}+\epsilon)$-decide the 2NLin problem.

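Before proving this, let us further discuss the 2NLin test. Given $x$, $y$, and $z$ from the 2NLin test, consider the following method of generating two additional strings $y', y'' \in \mathbb{Z}_3^{dn}$ which represent $z$’s “uncertainty” about $y$. For $i \in [dn]$, if $y_i = x_{\pi(i)}$, then set both $y'_i$ and $y''_i$ to the lone element of $\mathbb{Z}_3 \setminus \{x_{\pi(i)}, z_i\}$. Otherwise, set one of $y'_i$ or $y''_i$ to $x_{\pi(i)}$, and the other one to $y_i$, choosing which one uniformly at random. It can be checked that $\mathrm{TwoPair}(x_{\pi(i)}, y_i, y'_i, y''_i) = 1$, a more stringent requirement than satisfying 4NAT. In fact, the marginal distribution on these four variables is a uniformly random assignment that satisfies the TwoPair predicate. Conditioned on $x$ and $z$, the distribution on $y'$ and on $y''$ is identical to the distribution on $y$. To see this, first note that by construction, neither $y'_i$ nor $y''_i$ ever equals $z_i$ (and neither does $y_i$). Further, because these coordinates are distributed as uniformly random satisfying assignments to TwoPair, $\Pr[y'_i = x_{\pi(i)}] = \Pr[y''_i = x_{\pi(i)}] = \frac13$, which matches the corresponding probability for $y_i$. Thus, as $y$, $y'$, and $y''$ are distributed identically, we may rewrite the test’s success probability as:

$$\Pr[\text{pass}] = \tfrac14 \Pr[g(x) \neq f(z)] + \tfrac34 \Pr[f(y) \neq f(z)] = \tfrac14\,\mathbf{E}\big[\mathbf{1}[g(x) \neq f(z)] + \mathbf{1}[f(y) \neq f(z)] + \mathbf{1}[f(y') \neq f(z)] + \mathbf{1}[f(y'') \neq f(z)]\big] \leq \tfrac34 + \tfrac14 \Pr\big[\mathrm{4NAT}(g(x), f(y), f(y'), f(y''))\big].$$

This is because if 4NAT fails to hold on the tuple $(g(x), f(y), f(y'), f(y''))$, then $f(z)$ can disagree with at most 3 of them.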
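The claims about $y'$ and $y''$ can be verified by exact enumeration over a single coordinate. The Python sketch below (our own illustration; function names are hypothetical) builds the joint law of $(x_{\pi(i)}, y_i, z_i, y'_i, y''_i)$ following the construction above, with the choice in the second case made uniformly at random, and checks two things: $(x_{\pi(i)}, y_i, y'_i, y''_i)$ is uniform over the 18 TwoPair tuples, and conditioned on $(x_{\pi(i)}, z_i)$ the three values $y_i$, $y'_i$, $y''_i$ have the same distribution.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

def joint_law():
    """Law of (x, y, z, y', y'') in one coordinate, following the construction:
    x, y uniform; z uniform on Z_3 minus {x, y}; then y', y'' as in the text."""
    law = defaultdict(Fraction)
    for x, y in product(range(3), repeat=2):
        zs = [z for z in range(3) if z not in (x, y)]
        for z in zs:
            p = Fraction(1, 9 * len(zs))
            if y == x:
                (c,) = [v for v in range(3) if v not in (x, z)]  # the lone element
                law[(x, y, z, c, c)] += p
            else:
                law[(x, y, z, x, y)] += p / 2  # y' = x, y'' = y
                law[(x, y, z, y, x)] += p / 2  # y' = y, y'' = x
    return law

def is_two_pair(t):
    return sorted(t.count(v) for v in set(t)) == [2, 2]

def conditional(law, x, z, idx):
    """Distribution of tuple position idx (1 = y, 3 = y', 4 = y'') given (x, z)."""
    num = defaultdict(Fraction)
    for t, p in law.items():
        if t[0] == x and t[2] == z:
            num[t[idx]] += p
    total = sum(num.values())
    return {v: p / total for v, p in num.items()}

law = joint_law()
four = defaultdict(Fraction)
for (x, y, z, y1, y2), p in law.items():
    four[(x, y, y1, y2)] += p

uniform_two_pair = (len(four) == 18 and all(is_two_pair(t) for t in four)
                    and set(four.values()) == {Fraction(1, 18)})
same_law = all(conditional(law, x, z, 1) == conditional(law, x, z, 3) == conditional(law, x, z, 4)
               for x, z in product(range(3), repeat=2) if x != z)
print(uniform_two_pair, same_law)  # True True
```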

At this point, we have removed $z$ from the test analysis and have uncovered what appears to be a hidden 4NAT test inside the 2NLin Test: simply generate four strings $x$, $y$, $y'$, and $y''$ as described earlier, and test $\mathrm{4NAT}(g(x), f(y), f(y'), f(y''))$. With some renaming of variables, this is exactly what our 4NAT Test does:

4NAT Test

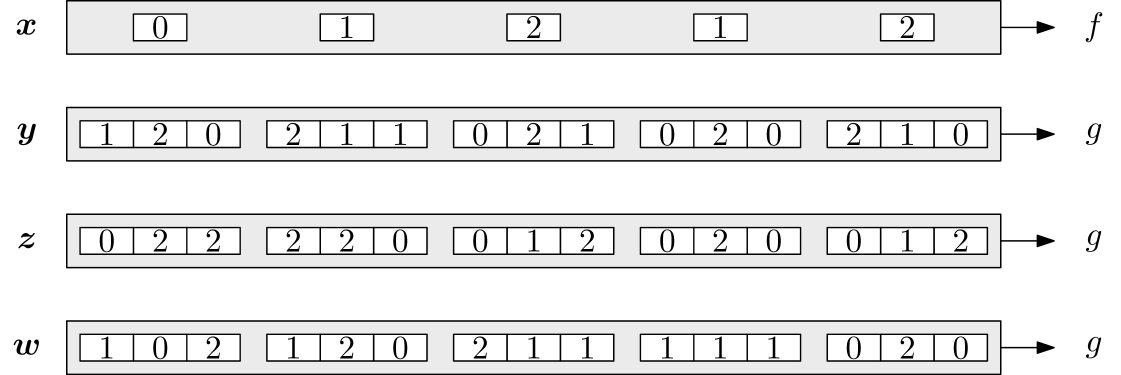

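Given folded functions $f: \mathbb{Z}_3^{dn} \to \mathbb{Z}_3$, $g: \mathbb{Z}_3^n \to \mathbb{Z}_3$:

- Let $x \in \mathbb{Z}_3^n$ be uniformly random.
- Select $y, y', y'' \in \mathbb{Z}_3^{dn}$ as follows: for each $i \in [dn]$, select $(y_i, y'_i, y''_i)$ uniformly at random from the elements of $\mathbb{Z}_3^3$ satisfying $\mathrm{TwoPair}(x_{\pi(i)}, y_i, y'_i, y''_i)$.
- Test $\mathrm{4NAT}(g(x), f(y), f(y'), f(y''))$.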

Above is an illustration of this test. In this illustration, the strings $y'$ and $y''$ were derived from the strings in Figure 1 using the process detailed above. Note that each column is missing one of the elements of $\mathbb{Z}_3$, and that each column satisfies the TwoPair predicate. Because satisfying TwoPair implies satisfying 4NAT, matching dictators pass this test with probability 1. On the other hand, it can be seen that nonmatching dictators pass the test with probability $\frac23$. In the next section we show that this is optimal among functions $f$ and $g$ without “matching influential coordinates/blocks”.
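As with the 2NLin Test, the two dictator pass probabilities can be computed exactly on one column. In this Python sketch (our own check, hypothetical names), $(x_{\pi(i)}, y_i, y'_i, y''_i)$ is uniform over the 18 TwoPair tuples; for nonmatching dictators, $g$’s value is modeled as an independent uniform element of $\mathbb{Z}_3$. The $\frac23$ arises because $(y_i, y'_i, y''_i)$ always contains exactly two distinct values, and 4NAT fails only when $g$’s value is the third one.

```python
from fractions import Fraction
from itertools import product

def two_pair(t):
    return sorted(t.count(v) for v in set(t)) == [2, 2]

def four_nat(t):
    return len(set(t)) < 3

def pass_prob(matching):
    """4NAT Test with dictators f(y) = y_i and g(x) = x_j, computed on one column.
    (x_{pi(i)}, y_i, y'_i, y''_i) is uniform over TwoPair tuples; for nonmatching
    dictators g's value is an independent uniform element of Z_3."""
    tuples = [t for t in product(range(3), repeat=4) if two_pair(t)]
    total = Fraction(0)
    for x, y0, y1, y2 in tuples:
        g_values = [x] if matching else [0, 1, 2]
        for gx in g_values:
            accept = int(four_nat((gx, y0, y1, y2)))
            total += Fraction(accept, len(tuples) * len(g_values))
    return total

print(pass_prob(True), pass_prob(False))  # 1 2/3
```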

(As one additional remark, our 2NLin Test is basically the composition of the 4NAT Test with the gadget from Lemma 3.1. In this test, if we instead performed the test $g(x) \neq f(z)$ with probability $\frac13$ and the test $f(y) \neq f(z)$ with probability $\frac23$, then the resulting test would basically be the composition of a 3NLin test with a suitable 3NLin-to-2NLin gadget.)

3.2 Analysis of 4NAT Test

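Let $f: \mathbb{Z}_3^{dn} \to \mathbb{Z}_3$ and $g: \mathbb{Z}_3^n \to \mathbb{Z}_3$ be folded, and let $\omega$ and $U_3$ be as in Section 2.3. In what follows, we identify $f$ and $g$ with the functions $\omega^{f}$ and $\omega^{g}$, respectively, whose range is $U_3$ rather than $\mathbb{Z}_3$. Write $\Pr[\text{pass}]$ for the probability that $f$ and $g$ pass the 4NAT Test. The remainder of this section is devoted to the proof of the following lemma: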

Lemma 3.4.

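Let $f: \mathbb{Z}_3^{dn} \to U_3$ and $g: \mathbb{Z}_3^n \to U_3$ be folded. Then
$$\Pr[\text{pass}] \leq \frac23 + \frac23 \sum_{\beta \in \mathbb{Z}_3^{dn}} |\hat g(\pi_3(\beta))|\,|\hat f(\beta)|^2\,2^{-|\beta|}.$$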

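The first step is to “arithmetize” the 4NAT predicate. It is not hard to verify that for $a_1, a_2, a_3, a_4 \in \mathbb{Z}_3$,
$$\mathrm{4NAT}(a_1, a_2, a_3, a_4) = \frac59 + \frac29 \sum_{k < l} \mathrm{Re}\big(\omega^{a_k - a_l}\big) - \frac29 \sum_{k < l < m} \mathrm{Re}\big(\omega^{a_k + a_l + a_m}\big),$$

where the first sum is over the 6 pairs and the second over the 4 triples of indices.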
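The arithmetization $\mathrm{4NAT}(a_1,\dots,a_4) = \frac59 + \frac29\sum_{k<l}\mathrm{Re}(\omega^{a_k - a_l}) - \frac29\sum_{k<l<m}\mathrm{Re}(\omega^{a_k+a_l+a_m})$ can be checked exhaustively over all 81 inputs. The Python sketch below (our own, with hypothetical helper names) compares the right-hand side, computed in floating point, with the 0/1 value of 4NAT.

```python
import cmath
from itertools import combinations, product

OMEGA = cmath.exp(2j * cmath.pi / 3)

def four_nat(t):
    return 1 if len(set(t)) < 3 else 0

def arithmetized(t):
    """5/9 + (2/9) * sum over pairs of Re w^(a_k - a_l) - (2/9) * sum over triples of Re w^(a_k + a_l + a_m)."""
    pairs = sum((OMEGA ** ((s - u) % 3)).real for s, u in combinations(t, 2))
    triples = sum((OMEGA ** (sum(tr) % 3)).real for tr in combinations(t, 3))
    return 5 / 9 + 2 / 9 * pairs - 2 / 9 * triples

max_err = max(abs(arithmetized(t) - four_nat(t)) for t in product(range(3), repeat=4))
print(max_err < 1e-12)  # True
```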

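Using the symmetry between $y$, $y'$, and $y''$, we deduce
$$\Pr[\text{pass}] = \frac59 + \frac23\,\mathrm{Re}\,\mathbf{E}\big[g(x)\overline{f(y)}\big] + \frac23\,\mathrm{Re}\,\mathbf{E}\big[f(y)\overline{f(y')}\big] - \frac23\,\mathrm{Re}\,\mathbf{E}\big[g(x)f(y)f(y')\big] - \frac29\,\mathrm{Re}\,\mathbf{E}\big[f(y)f(y')f(y'')\big]. \tag{2}$$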

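In the second term in the RHS of (2) we in fact have $\mathbf{E}[g(x)\overline{f(y)}] = 0$. This is because $x$ and $y$ are independent, and hence $\mathbf{E}[g(x)\overline{f(y)}] = \mathbf{E}[g(x)]\,\overline{\mathbf{E}[f(y)]} = \hat g(0)\,\overline{\hat f(0)} = 0$ since $f$ and $g$ are folded (Proposition 2.3). Regarding the third term of the RHS in (2), this also turns out to be 0 by virtue of $f$ being folded. This can be proven using a Fourier-analytic argument; we present here an alternate combinatorial argument: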

Lemma 3.5.

$\mathbf{E}[f(y)\overline{f(y')}] = 0$.

Proof.

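Fix any value for $x$. Consider the function $\sigma: \mathbb{Z}_3^{dn} \times \mathbb{Z}_3^{dn} \to \mathbb{Z}_3^{dn} \times \mathbb{Z}_3^{dn}$ defined as $\sigma(a, b) = (a + \mathbf{1}, b - \mathbf{1})$, where all arithmetic is performed modulo 3. Note that $\sigma$ has order 3, meaning that $\sigma^3(a,b) = (a,b)$ while $\sigma(a,b) \neq (a,b)$. This allows us to group values for $y$ and $y'$ into sets of size three as follows: put $(y, y')$ into the set $S(y,y') = \{(y,y'), \sigma(y,y'), \sigma^2(y,y')\}$. Because $\sigma$ is invertible and of order 3, each pair is a member of only one set: $S(y,y') = S(\sigma(y,y')) = S(\sigma^2(y,y'))$.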

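Conditioned on $x$, if $(y, y')$ is in the support of the test, then every element of $S(y, y')$ is also in the support of the test. This is because the strings which are in the support of the test are exactly the strings $y$ and $y'$ for which the set $\{x_{\pi(i)}, y_i, y'_i\}$ is of size 2, for all $i \in [dn]$. These strings, in turn, are exactly those for which $x_{\pi(i)} + y_i + y'_i \neq 0$ for all $i$, since three elements of $\mathbb{Z}_3$ sum to 0 exactly when they are all equal or all distinct. But if $x_{\pi(i)} + y_i + y'_i \neq 0$, then
$$x_{\pi(i)} + (y_i + 1) + (y'_i - 1) = x_{\pi(i)} + y_i + y'_i \neq 0.$$

This shows that $\sigma(y, y')$ is in the support of the test, conditioned on $x$. As $\sigma^2 = \sigma \circ \sigma$, the same holds for $\sigma^2(y, y')$.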

When conditioned on $x$, each pair $(y, y')$ in the support of the test occurs with equal probability. To see this, first note that $y$ is independent of $x$. In other words, any value for $y$ is equally likely, regardless of $x$. Then, conditioned on $x$ and $y$, there are exactly two possibilities for each coordinate of $y'$, both of which occur with probability $\frac12$. Thus, the event $(y, y') = (a, b)$ occurs with the same probability, no matter the values of $a$ or $b$ within the support.

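Consider an arbitrary set $S = \{(a,b), \sigma(a,b), \sigma^2(a,b)\}$. Conditioned on $(y, y')$ falling in $S$, the value of $(y, y')$ is a uniformly random element of this set. This means that $(y, y')$ is equally likely to be $(a, b)$, $(a + \mathbf{1}, b - \mathbf{1})$, or $(a + 2\cdot\mathbf{1}, b - 2\cdot\mathbf{1})$. By the folding of $f$, $f(y)\overline{f(y')}$ is therefore equally likely to be one of $f(a)\overline{f(b)}$, $\omega^2 f(a)\overline{f(b)}$, or $\omega f(a)\overline{f(b)}$. As this happens for any choice of the set $S$, $f(y)\overline{f(y')}$ is uniform on $U_3$, even when conditioned on $x$. Thus, $\mathbf{E}[f(y)\overline{f(y')}] = 0$, as desired. ∎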
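As a numerical companion to Lemma 3.5, the Python sketch below computes $\mathbf{E}[f(y)\overline{f(y')}]$ exactly, by enumeration, for a randomly generated folded $f$ on a small instance ($n = 1$ block of size $d = 3$). Under the 4NAT Test, conditioned on $x$, each pair $(y_j, y'_j)$ is independently uniform over the six pairs with $x_{\pi(j)} + y_j + y'_j \neq 0$, and the enumeration uses exactly this. The instance size, seed, 0-indexed block map, and helper names are our own assumptions.

```python
import cmath
import random
from itertools import product

OMEGA = cmath.exp(2j * cmath.pi / 3)

def random_folded(m, rng):
    """Random folded f: Z_3^m -> U_3, i.e. f(x + c*1) = omega^c * f(x)."""
    f = {}
    for x in product(range(3), repeat=m):
        if x[0] == 0:  # one representative per orbit {x, x+1, x+2}
            v = rng.randrange(3)
            for c in range(3):
                f[tuple((xi + c) % 3 for xi in x)] = OMEGA ** ((v + c) % 3)
    return f

def lemma_3_5_expectation(f, n, d):
    """Exact E[f(y) * conj(f(y'))] under the 4NAT Test, with 0-indexed pi(j) = j // d."""
    total = 0
    for x in product(range(3), repeat=n):
        per_coord = [[(a, b) for a in range(3) for b in range(3)
                      if (x[j // d] + a + b) % 3 != 0] for j in range(n * d)]
        for pairs in product(*per_coord):
            y = tuple(a for a, _ in pairs)
            y_prime = tuple(b for _, b in pairs)
            total += f[y] * f[y_prime].conjugate()
    return total / (3 ** n * 6 ** (n * d))

n, d = 1, 3
f = random_folded(n * d, random.Random(1))
print(abs(lemma_3_5_expectation(f, n, d)) < 1e-9)  # True
```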

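Equation (2) has now been reduced to
$$\Pr[\text{pass}] = \frac59 - \frac23\,\mathrm{Re}\,\mathbf{E}\big[g(x)f(y)f(y')\big] - \frac29\,\mathrm{Re}\,\mathbf{E}\big[f(y)f(y')f(y'')\big]. \tag{3}$$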

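As $f(y)f(y')f(y'')$ is always in $U_3$, $\mathrm{Re}[f(y)f(y')f(y'')]$ is always at least $-\frac12$. Therefore,
$$\Pr[\text{pass}] \leq \frac59 + \frac19 - \frac23\,\mathrm{Re}\,\mathbf{E}\big[g(x)f(y)f(y')\big] = \frac23 - \frac23\,\mathrm{Re}\,\mathbf{E}\big[g(x)f(y)f(y')\big]. \tag{4}$$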

It remains to handle the $\mathbf{E}[g(x)f(y)f(y')]$ term, which is the subject of our next lemma. This is done through a standard argument in the style of Håstad [Hås01].

Lemma 3.6.

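$\mathbf{E}[g(x)f(y)f(y')] = \sum_{\beta \in \mathbb{Z}_3^{dn}} \hat g(\pi_3(\beta))\,\hat f(\beta)^2 \left(-\tfrac12\right)^{|\beta|}$.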

Proof.

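Begin by expanding out $g$ and $f$:
$$\mathbf{E}[g(x)f(y)f(y')] = \sum_{\alpha \in \mathbb{Z}_3^n}\ \sum_{\beta, \gamma \in \mathbb{Z}_3^{dn}} \hat g(\alpha)\,\hat f(\beta)\,\hat f(\gamma)\,\mathbf{E}\big[\chi_\alpha(x)\chi_\beta(y)\chi_\gamma(y')\big]. \tag{5}$$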

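We focus on the products of the Fourier characters:
$$\mathbf{E}\big[\chi_\alpha(x)\chi_\beta(y)\chi_\gamma(y')\big] = \mathbf{E}\Big[\omega^{\sum_{i \in [n]} \alpha_i x_i + \sum_{j \in [dn]} (\beta_j y_j + \gamma_j y'_j)}\Big]. \tag{6}$$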

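We can attend to each block separately:
$$\mathbf{E}\big[\chi_\alpha(x)\chi_\beta(y)\chi_\gamma(y')\big] = \prod_{i \in [n]} \mathbf{E}\Big[\omega^{\alpha_i x_i} \prod_{j \in \pi^{-1}(i)} \omega^{\beta_j y_j + \gamma_j y'_j}\Big]. \tag{7}$$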

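Now, consider the expectation $\mathbf{E}[\omega^{\beta_j y_j + \gamma_j y'_j} \mid x_{\pi(j)}]$. Writing $x = x_{\pi(j)}$, the distribution on the values for $(y_j, y'_j)$ is uniform on the six possibilities $(x, x+1)$, $(x, x+2)$, $(x+1, x)$, $(x+2, x)$, $(x+1, x+1)$, and $(x+2, x+2)$. We claim that this expectation is nonzero if and only if $\beta_j = \gamma_j$. If, on the other hand, $\beta_j \neq \gamma_j$, then either exactly one of $\beta_j$ or $\gamma_j$ is zero, or neither is zero and $\gamma_j = -\beta_j$. In the first case, the expectation is either $\mathbf{E}[\omega^{\beta_j y_j}]$ or $\mathbf{E}[\omega^{\gamma_j y'_j}]$ for a nonzero $\beta_j$ or a nonzero $\gamma_j$, respectively. Both of these expectations are zero, as both $y_j$ and $y'_j$ are uniform on $\mathbb{Z}_3$. In the second case,
$$\mathbf{E}\big[\omega^{\beta_j y_j + \gamma_j y'_j}\big] = \mathbf{E}\big[\omega^{\beta_j (y_j - y'_j)}\big],$$

which is zero, because $\beta_j$ is nonzero, and $y_j - y'_j$ is uniformly distributed on $\mathbb{Z}_3$.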

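Thus, if Equation (6) is nonzero, then $\beta_j = \gamma_j$ for every $j \in [dn]$, i.e., $\beta = \gamma$. This means that $\mathbf{E}[\omega^{\beta_j y_j + \gamma_j y'_j} \mid x_{\pi(j)}] = \mathbf{E}[\omega^{\beta_j (y_j + y'_j)} \mid x_{\pi(j)}]$. When $\beta_j = 0$, this is clearly 1. Otherwise, as $y_j + y'_j$ is either $1 - x_{\pi(j)}$ or $2 - x_{\pi(j)}$, each with probability half, this is equal to
$$\omega^{-\beta_j x_{\pi(j)}} \cdot \frac{\omega^{\beta_j} + \omega^{2\beta_j}}{2} = -\frac12\,\omega^{-\beta_j x_{\pi(j)}}.$$

In summary, when $\beta_j = \gamma_j$, $\mathbf{E}[\omega^{\beta_j y_j + \gamma_j y'_j} \mid x_{\pi(j)}] = \left(-\frac12\right)^{\mathbf{1}[\beta_j \neq 0]}\,\omega^{-\beta_j x_{\pi(j)}}$.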
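The per-coordinate formula can be confirmed for all $\beta_j, \gamma_j, x_{\pi(j)} \in \mathbb{Z}_3$ by direct computation. The Python sketch below (hypothetical names, our own check) compares the exact conditional expectation with the prediction: 1 if $\beta_j = \gamma_j = 0$, $-\frac12\omega^{-\beta_j x_{\pi(j)}}$ if $\beta_j = \gamma_j \neq 0$, and 0 if $\beta_j \neq \gamma_j$.

```python
import cmath
from itertools import product

OMEGA = cmath.exp(2j * cmath.pi / 3)

def coord_expectation(beta, gamma, x):
    """E[omega^(beta*y + gamma*y') | x], (y, y') uniform on the 6 pairs with x + y + y' != 0."""
    pairs = [(y, yp) for y, yp in product(range(3), repeat=2) if (x + y + yp) % 3 != 0]
    return sum(OMEGA ** ((beta * y + gamma * yp) % 3) for y, yp in pairs) / len(pairs)

def predicted(beta, gamma, x):
    if beta != gamma:
        return 0
    if beta == 0:
        return 1
    return -0.5 * OMEGA ** ((-beta * x) % 3)

max_err = max(abs(coord_expectation(b, g, x) - predicted(b, g, x))
              for b, g, x in product(range(3), repeat=3))
print(max_err < 1e-12)  # True
```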

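We can now rewrite Equation (7), for $\beta = \gamma$, as
$$\prod_{i \in [n]} \Big(\prod_{j \in \pi^{-1}(i)} \left(-\tfrac12\right)^{\mathbf{1}[\beta_j \neq 0]}\Big)\,\mathbf{E}\Big[\omega^{(\alpha_i - \sum_{j \in \pi^{-1}(i)} \beta_j) x_i}\Big] = \left(-\tfrac12\right)^{|\beta|} \prod_{i \in [n]} \mathbf{E}\Big[\omega^{(\alpha_i - \pi_3(\beta)_i) x_i}\Big].$$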

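Note that the exponent of $\omega^{x_i}$, namely $\alpha_i - \pi_3(\beta)_i$, is zero if $\alpha_i = \pi_3(\beta)_i$, in which case the $i$th expectation is just the constant 1. This occurs for all $i$ exactly when $\alpha = \pi_3(\beta)$. If, on the other hand, $\alpha_i - \pi_3(\beta)_i$ is nonzero for some $i$, then the entire expectation is zero because $x_i$ is uniformly random from $\mathbb{Z}_3$. Thus, Equation (6) is nonzero only when $\beta = \gamma$ and $\alpha = \pi_3(\beta)$, in which case it equals
$$\left(-\tfrac12\right)^{|\beta|}.$$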

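We may therefore conclude with
$$\mathbf{E}[g(x)f(y)f(y')] = \sum_{\beta \in \mathbb{Z}_3^{dn}} \hat g(\pi_3(\beta))\,\hat f(\beta)^2 \left(-\tfrac12\right)^{|\beta|}.$$
∎

Finally, Lemma 3.4 follows by plugging Lemma 3.6 into (4): since $\mathrm{Re}(w) \geq -|w|$, $|\hat f(\beta)^2| = |\hat f(\beta)|^2$, and $|(-\frac12)^{|\beta|}| = 2^{-|\beta|}$, the right-hand side of (4) is at most $\frac23 + \frac23 \sum_\beta |\hat g(\pi_3(\beta))|\,|\hat f(\beta)|^2\,2^{-|\beta|}$.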
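Lemma 3.6 can also be sanity-checked numerically on a tiny instance. The sketch below is our own illustration: it takes random folded $f: \mathbb{Z}_3^{4} \to U_3$ and $g: \mathbb{Z}_3^{2} \to U_3$ (so $n = d = 2$), computes $\mathbf{E}[g(x)f(y)f(y')]$ by exhaustive enumeration over the test distribution, and compares it with $\sum_\beta \hat g(\pi_3(\beta))\hat f(\beta)^2(-\frac12)^{|\beta|}$, where $\pi_3(\beta)_i$ sums the $i$th block of $\beta$. Helper names, the seed, and the 0-indexed block map are hypothetical choices.

```python
import cmath
import random
from itertools import product

OMEGA = cmath.exp(2j * cmath.pi / 3)

def random_folded(m, rng):
    """Random folded f: Z_3^m -> U_3, i.e. f(x + c*1) = omega^c * f(x)."""
    f = {}
    for x in product(range(3), repeat=m):
        if x[0] == 0:
            v = rng.randrange(3)
            for c in range(3):
                f[tuple((xi + c) % 3 for xi in x)] = OMEGA ** ((v + c) % 3)
    return f

def fourier(f, m):
    """hat f(alpha) = E_x[f(x) * omega^(-alpha . x)], by brute force."""
    pts = list(product(range(3), repeat=m))
    return {a: sum(f[x] * OMEGA ** (-sum(ai * xi for ai, xi in zip(a, x)) % 3)
                   for x in pts) / len(pts)
            for a in pts}

def direct(f, g, n, d):
    """E[g(x) f(y) f(y')] by enumeration; (y_j, y'_j) uniform on pairs with
    x_{pi(j)} + y_j + y'_j != 0, using 0-indexed pi(j) = j // d."""
    total = 0
    for x in product(range(3), repeat=n):
        per_coord = [[(a, b) for a in range(3) for b in range(3)
                      if (x[j // d] + a + b) % 3 != 0] for j in range(n * d)]
        for pairs in product(*per_coord):
            y = tuple(a for a, _ in pairs)
            y_prime = tuple(b for _, b in pairs)
            total += g[x] * f[y] * f[y_prime]
    return total / (3 ** n * 6 ** (n * d))

def via_fourier(f, g, n, d):
    """Right-hand side of Lemma 3.6: sum_beta g^(pi_3(beta)) f^(beta)^2 (-1/2)^|beta|."""
    f_hat, g_hat = fourier(f, n * d), fourier(g, n)
    total = 0
    for beta, coef in f_hat.items():
        proj = tuple(sum(beta[i * d:(i + 1) * d]) % 3 for i in range(n))  # pi_3(beta)
        weight = sum(1 for b in beta if b != 0)                           # |beta|
        total += g_hat[proj] * coef ** 2 * (-0.5) ** weight
    return total

n, d = 2, 2
rng = random.Random(2)
f, g = random_folded(n * d, rng), random_folded(n, rng)
print(abs(direct(f, g, n, d) - via_fourier(f, g, n, d)) < 1e-9)  # True
```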

4 Hardness of 4NAT

In this section, we show the following theorem:

Theorem 1.4 (detailed).

For all $\epsilon > 0$, it is NP-hard to $(1, \frac23+\epsilon)$-decide the 4NAT problem. In fact, in the “yes case”, all 4NAT constraints can be satisfied by TwoPair assignments.

Combining this with Lemma 3.1 (via Proposition 2.2) yields Theorem 1.3, and combining this with Corollary 3.3 yields Theorem 1.2. It is not clear whether this gives optimal hardness assuming perfect completeness. The 4NAT predicate is satisfied by a uniformly random input with probability $\frac59$, and by the method of conditional expectations this gives a deterministic algorithm which $(1, \frac59)$-approximates the 4NAT CSP. This leaves a gap of $\frac23 - \frac59 = \frac19$ in the soundness, and to our knowledge there are no better known algorithms.

On the hardness side, consider a uniformly random satisfying assignment to the TwoPair predicate. It is easy to see that each of the four variables is assigned a uniformly random value from $\mathbb{Z}_3$, and also that the variables are pairwise independent. As any satisfying assignment to the TwoPair predicate also satisfies the 4NAT predicate, the work of Austrin and Mossel [AM09] immediately implies that $(1-\epsilon, \frac59+\epsilon)$-approximating the 4NAT problem is NP-hard under the Unique Games Conjecture. Thus, if we are willing to sacrifice a small amount in the completeness, we can improve the soundness parameter in Theorem 1.4. Whether we can improve upon the soundness without sacrificing perfect completeness is open.
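The pairwise independence claim is easy to confirm by enumeration. In this Python sketch (our own check; names are hypothetical), the uniform distribution over the 18 satisfying inputs of TwoPair is formed, and for every pair of positions the joint marginal is compared with the uniform distribution on $\mathbb{Z}_3^2$, which also implies that each single variable is uniform.

```python
from fractions import Fraction
from itertools import combinations, product

def two_pair(t):
    return sorted(t.count(v) for v in set(t)) == [2, 2]

support = [t for t in product(range(3), repeat=4) if two_pair(t)]
p = Fraction(1, len(support))  # uniform distribution over TwoPair's satisfying inputs

def marginal(positions):
    """Joint law of the variables at the given positions."""
    law = {}
    for t in support:
        key = tuple(t[k] for k in positions)
        law[key] = law.get(key, 0) + p
    return law

pairwise_uniform = all(
    marginal(pos) == {key: Fraction(1, 9) for key in product(range(3), repeat=2)}
    for pos in combinations(range(4), 2))
print(len(support), pairwise_uniform)  # 18 True
```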

We now arrive at the proof of Theorem 1.4. The proof is entirely standard, and proceeds by reduction from $d$-to-1 Label Cover. It makes use of our analysis of the 4NAT Test, which is presented in Section 3.2. One preparatory note: most of the proof concerns functions $f_u: \mathbb{Z}_3^{dn} \to \mathbb{Z}_3$ and $g_v: \mathbb{Z}_3^n \to \mathbb{Z}_3$. However, we will also be making use of Fourier analytic notions defined in Section 2.3, and this requires dealing with functions whose range is $U_3$ rather than $\mathbb{Z}_3$. Thus, we associate $f_u$ and $g_v$ with the functions $\omega^{f_u}$ and $\omega^{g_v}$, and whenever Fourier analysis is used it will actually be with respect to the latter two functions.

Proof.

Let $\mathcal{L}$ be a $d$-to-1 Label Cover instance with alphabet size $n$ and $d$-to-1 maps $\pi_e: [dn] \to [n]$ for each edge $e \in E$. We construct a 4NAT instance by replacing each vertex in $\mathcal{L}$ with its Long Code and placing constraints on adjacent Long Codes corresponding to the tests made in the 4NAT Test. Thus, each $u \in U$ is replaced by a copy of the hypercube $\mathbb{Z}_3^{dn}$ and labeled by the function $f_u: \mathbb{Z}_3^{dn} \to \mathbb{Z}_3$. Similarly, each $v \in V$ is replaced by a copy of the hypercube $\mathbb{Z}_3^n$ and labeled by the function $g_v: \mathbb{Z}_3^n \to \mathbb{Z}_3$. Finally, for each edge $e = (u,v)$, a set of 4NAT constraints is placed between $f_u$ and $g_v$ corresponding to the constraints made in the 4NAT Test, each given a weight equal to the probability the constraint is tested in the 4NAT Test multiplied by the weight of $e$ in $\mathcal{L}$. This produces a 4NAT instance whose weights sum to 1 and which is equivalent to the following test:

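- Pick an edge $e = (u, v) \in E$ at random according to its weight in $\mathcal{L}$ (uniformly, if $\mathcal{L}$ is unweighted).
- Reorder the indices of $f_u$ so that the $i$th group of $d$ indices corresponds to $\pi_e^{-1}(i)$.
- Run the 4NAT Test on $f_u$ and $g_v$. Accept iff it does.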

Completeness

If the original Label Cover instance is fully satisfiable, then there is a labeling $F$ of $U \cup V$ for which $\pi_e(F(u)) = F(v)$ for every edge $e = (u,v)$. Set each $f_u$ to the dictator assignment $f_u(y) = y_{F(u)}$ and each $g_v$ to the dictator assignment $g_v(x) = x_{F(v)}$. Let $e = (u, v) \in E$. Because $F$ satisfies the constraint $e$, $\pi_e(F(u)) = F(v)$. Thus, $f_u$ and $g_v$ correspond to “matching dictator” assignments, and above we saw that matching dictators pass the 4NAT Test with probability 1. As this applies to every edge in $\mathcal{L}$, the 4NAT instance is fully satisfiable.

Soundness

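Assume that there are functions $\{f_u\}$ and $\{g_v\}$ which satisfy at least a $\frac23 + \epsilon$ fraction of the 4NAT constraints. Then there is at least an $\frac{\epsilon}{2}$ fraction of the edges $e = (u,v)$ for which $f_u$ and $g_v$ pass the 4NAT Test with probability at least $\frac23 + \frac{\epsilon}{2}$. This is because otherwise the fraction of 4NAT constraints satisfied would be at most
$$\frac{\epsilon}{2} \cdot 1 + \left(1 - \frac{\epsilon}{2}\right)\left(\frac23 + \frac{\epsilon}{2}\right) < \frac23 + \epsilon.$$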

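Let $E'$ be the set of such edges, and consider $e = (u,v) \in E'$. Set $f = f_u$, $g = g_v$, and $\pi = \pi_e$. By Lemma 3.4,
$$\frac23 + \frac{\epsilon}{2} \leq \Pr[\text{pass}] \leq \frac23 + \frac23 \sum_{\beta \in \mathbb{Z}_3^{dn}} |\hat g(\pi_3(\beta))|\,|\hat f(\beta)|^2\,2^{-|\beta|},$$

meaning that

$$\sum_{\beta \in \mathbb{Z}_3^{dn}} |\hat f(\beta)|^2\,|\hat g(\pi_3(\beta))|\,2^{-|\beta|} \geq \frac{3\epsilon}{4}. \tag{8}$$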

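Parseval’s identity tells us that $\sum_\beta |\hat f(\beta)|^2 = 1$. The function $\beta \mapsto |\hat f(\beta)|^2$ therefore induces a probability distribution on the elements of $\mathbb{Z}_3^{dn}$. As a result, we can rewrite Equation (8) as
$$\mathop{\mathbf{E}}_{\beta \sim |\hat f|^2}\Big[|\hat g(\pi_3(\beta))|\,2^{-|\beta|}\Big] \geq \frac{3\epsilon}{4}. \tag{9}$$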

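As previously noted, $|\hat g(\alpha)|$ is at most 1 for all $\alpha$, so the expression in this expectation is never greater than 1. We can thus conclude that
$$\Pr_{\beta \sim |\hat f|^2}\Big[|\hat g(\pi_3(\beta))|\,2^{-|\beta|} \geq \frac{3\epsilon}{8}\Big] \geq \frac{3\epsilon}{8},$$

as otherwise the expectation in Equation (9) would be less than $\frac{3\epsilon}{8} + \frac{3\epsilon}{8} = \frac{3\epsilon}{4}$. Call the event in the probability $\mathcal{E}$. When $\mathcal{E}$ occurs, the following happens: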

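- $|\beta| \leq \log_2 \frac{8}{3\epsilon}$, since $|\hat g(\pi_3(\beta))| \leq 1$.
- $|\hat g(\pi_3(\beta))| \geq \frac{3\epsilon}{8}$. Furthermore, as $g$ is folded, $\hat g(0) = 0$ by Proposition 2.3, so $\pi_3(\beta) \neq 0$.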

This suggests the following randomized decoding procedure for each $u \in U$: pick an element $\beta \in \mathbb{Z}_3^{dn}$ with probability $|\hat f_u(\beta)|^2$ and choose one of its nonzero coordinates uniformly at random. Similarly, for each $v \in V$, pick an element $\alpha \in \mathbb{Z}_3^n$ with probability $|\hat g_v(\alpha)|^2$ and choose one of its nonzero coordinates uniformly at random. In both cases, nonzero coordinates are guaranteed to exist because all the $f_u$’s and $g_v$’s are folded, so that $\hat f_u(0) = \hat g_v(0) = 0$.
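A minimal Python sketch of this decoding step for a single function, under our own assumptions: the Fourier coefficients are computed by brute force (feasible only for tiny dimensions), squared coefficients below a small tolerance `tol` are treated as floating-point noise, and `decode` is a hypothetical name. For a dictator $f(y) = \omega^{y_k}$ all Fourier weight sits on the unit vector $e_k$, so the decoded label is always $k$ (0-indexed in the code).

```python
import cmath
import random
from itertools import product

OMEGA = cmath.exp(2j * cmath.pi / 3)

def fourier(f, m):
    """Brute-force Fourier coefficients of f: Z_3^m -> U_3 (tiny m only)."""
    pts = list(product(range(3), repeat=m))
    return {a: sum(f[x] * OMEGA ** (-sum(ai * xi for ai, xi in zip(a, x)) % 3)
                   for x in pts) / len(pts)
            for a in pts}

def decode(f, m, rng, tol=1e-12):
    """Sample beta with probability |f^(beta)|^2, then a uniform coordinate of supp(beta).
    Weights below tol are treated as floating-point noise; folding gives f^(0) = 0."""
    f_hat = fourier(f, m)
    betas = list(f_hat)
    weights = [abs(c) ** 2 if abs(c) ** 2 > tol else 0.0 for c in f_hat.values()]
    beta = rng.choices(betas, weights=weights, k=1)[0]
    support = [j for j, b in enumerate(beta) if b != 0]
    return rng.choice(support)

m, k = 3, 1
dictator = {x: OMEGA ** x[k] for x in product(range(3), repeat=m)}  # f(y) = omega^{y_k}
rng = random.Random(0)
print({decode(dictator, m, rng) for _ in range(20)})  # {1}
```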

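Now we analyze how well this decoding scheme performs for the edges $e = (u,v) \in E'$ (we may assume the other edges are unsatisfied). Suppose that when the elements of $\mathbb{Z}_3^{dn}$ and $\mathbb{Z}_3^n$ were randomly chosen, $u$’s element $\beta$ was in $\mathcal{E}$, and $v$’s element $\alpha$ equals $\pi_3(\beta)$. Then, as $|\beta| \leq \log_2 \frac{8}{3\epsilon}$, and each label in $\mathrm{supp}(\pi_3(\beta))$ has at least one label in $\mathrm{supp}(\beta)$ which maps to it under $\pi_e$, the probability that matching labels are drawn is at least $1/|\beta| \geq 1/\log_2\frac{8}{3\epsilon}$. Next, the probability that such a $\beta$ and $\alpha$ are drawn is
$$\sum_{\beta \in \mathcal{E}} |\hat f_u(\beta)|^2\,|\hat g_v(\pi_3(\beta))|^2 \geq \frac{3\epsilon}{8} \cdot \left(\frac{3\epsilon}{8}\right)^2 = \left(\frac{3\epsilon}{8}\right)^3.$$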

Combining these, the probability that this edge is satisfied is at least $\left(\frac{3\epsilon}{8}\right)^3 / \log_2\frac{8}{3\epsilon}$. Thus, the decoding scheme satisfies at least a
$$\frac{\epsilon}{2}\cdot\frac{(3\epsilon/8)^3}{\log_2(8/(3\epsilon))}$$

fraction of the Label Cover edges in expectation. By the probabilistic method, an assignment to the Label Cover instance must therefore exist which satisfies at least this fraction of the edges.

We now apply Theorem 2.1, setting the soundness value in that theorem equal to $\frac{\epsilon}{2}\cdot\frac{(3\epsilon/8)^3}{\log_2(8/(3\epsilon))}$, which concludes the proof. ∎

References

- [ABS10] Sanjeev Arora, Boaz Barak, and David Steurer. Subexponential algorithms for Unique Games and related problems. In Proceedings of the 51st Annual IEEE Symposium on Foundations of Computer Science, pages 563–572, 2010.

- [AM09] Per Austrin and Elchanan Mossel. Approximation resistant predicates from pairwise independence. Computational Complexity, 18(2):249–271, 2009.

- [BRS11] Boaz Barak, Prasad Raghavendra, and David Steurer. Rounding semidefinite programming hierarchies via global correlation. In Proceedings of the 52nd Annual IEEE Symposium on Foundations of Computer Science, 2011.

- [DMR09] Irit Dinur, Elchanan Mossel, and Oded Regev. Conditional hardness for approximate coloring. SIAM Journal on Computing, 39(3):843–873, 2009.

- [FJ97] Alan Frieze and Mark Jerrum. Improved approximation algorithms for MAX k-CUT and MAX BISECTION. Algorithmica, 18(1):67–81, 1997.

- [FK94] Uriel Feige and Joe Kilian. Two prover protocols: low error at affordable rates. In Proceedings of the 26th Annual ACM Symposium on Theory of Computing, pages 172–183, 1994.

- [GKO+10] Venkatesan Guruswami, Subhash Khot, Ryan O’Donnell, Preyas Popat, Madhur Tulsiani, and Yi Wu. SDP gaps for 2-to-1 and other Label-Cover variants. In Proceedings of the 37th Annual International Colloquium on Automata, Languages and Programming, pages 617–628, 2010.

- [GLST98] Venkatesan Guruswami, Daniel Lewin, Madhu Sudan, and Luca Trevisan. A tight characterization of NP with 3 query PCPs. In Proceedings of the 39th Annual IEEE Symposium on Foundations of Computer Science, pages 8–17, 1998.

- [GS09] Venkatesan Guruswami and Ali Kemal Sinop. Improved inapproximability results for Maximum k-Colorable Subgraph. In Proceedings of the 12th Annual International Workshop on Approximation Algorithms for Combinatorial Optimization Problems, pages 163–176, 2009.

- [GS11] Venkatesan Guruswami and Ali Sinop. Lasserre hierarchy, higher eigenvalues, and approximation schemes for quadratic integer programming with PSD objectives. In Proceedings of the 52nd Annual IEEE Symposium on Foundations of Computer Science, 2011.

- [GW04] Michel X. Goemans and David P. Williamson. Approximation algorithms for MAX-3-CUT and other problems via complex semidefinite programming. J. Comput. Syst. Sci., 68(2):442–470, 2004.

- [Hås01] Johan Håstad. Some optimal inapproximability results. Journal of the ACM, 48(4):798–859, 2001.

- [IP01] Russell Impagliazzo and Ramamohan Paturi. On the complexity of k-SAT. Journal of Computer and System Sciences, 62(2):367–375, 2001.

- [Kho02] Subhash Khot. On the power of unique 2-prover 1-round games. In Proceedings of the 34th Annual ACM Symposium on Theory of Computing, pages 767–775, 2002.

- [KKMO07] Subhash Khot, Guy Kindler, Elchanan Mossel, and Ryan O’Donnell. Optimal inapproximability results for Max-Cut and other 2-variable CSPs? SIAM Journal on Computing, 37(1):319–357, 2007.

- [KR03] Subhash Khot and Oded Regev. Vertex Cover might be hard to approximate to within $2-\epsilon$. In Proceedings of the 18th Annual IEEE Conference on Computational Complexity, pages 379–386, 2003.

- [MR10] Dana Moshkovitz and Ran Raz. Two-query PCP with subconstant error. Journal of the ACM, 57(5):29, 2010.

- [OW09] Ryan O’Donnell and Yi Wu. Conditional hardness for satisfiable 3-CSPs. In Proceedings of the 41st Annual ACM Symposium on Theory of Computing, pages 493–502, 2009.

- [OW12] Ryan O’Donnell and John Wright. A new point of NP-hardness for Unique-Games. In Proceedings of the 44th Annual ACM Symposium on Theory of Computing, 2012.

- [Rag08] Prasad Raghavendra. Optimal algorithms and inapproximability results for every CSP? In Proceedings of the 40th Annual ACM Symposium on Theory of Computing, pages 245–254, 2008.

- [Raz95] Ran Raz. A parallel repetition theorem. In Proceedings of the 27th Annual ACM Symposium on Theory of Computing, pages 447–456, 1995.

- [Ste10] David Steurer. Subexponential algorithms for d-to-1 two-prover games and for certifying almost perfect expansion. Available at the author’s website, 2010.

- [TSSW00] Luca Trevisan, Gregory Sorkin, Madhu Sudan, and David Williamson. Gadgets, approximation, and linear programming. SIAM Journal on Computing, 29(6):2074–2097, 2000.

- [Zwi98] Uri Zwick. Approximation algorithms for constraint satisfaction problems involving at most three variables per constraint. In Proceedings of the 9th Annual ACM-SIAM Symposium on Discrete Algorithms, pages 201–210, 1998.