Michigan State University

11email: {huding,yemingqu}@msu.edu

A Novel Geometric Approach for Outlier Recognition in High Dimension

Abstract

Outlier recognition is a fundamental problem in data analysis and has attracted a great deal of attention in the past decades. However, most existing methods still suffer from several issues such as high time and space complexities or unstable performances for different datasets. In this paper, we provide a novel algorithm for outlier recognition in high dimension based on the elegant geometric technique “core-set”. The algorithm needs only linear time and space complexities and achieves a solid theoretical quality guarantee. Another advantage over the existing methods is that our algorithm can be naturally extended to handle multi-class inliers. Our experimental results show that our algorithm outperforms existing algorithms on both random and benchmark datasets.

1 Introduction

In this big data era, we are confronted with an extremely large amount of data and it is important to develop efficient algorithmic techniques to handle the arising realistic issues. Due to its recent rapid development, deep learning [20] becomes a powerful tool for many emerging applications; meanwhile, the quality of training dataset often plays a key role and seriously affects the final learning result. For example, we can collect tremendous data (e.g., texts or images) through the internet, however, the obtained dataset often contains a significant amount of outliers. Since manually removing outliers will be very costly, it is very necessary to develop some efficient algorithms for recognizing outliers automatically in many scenarios.

Outlier recognition is a typical unsupervised learning problem and its counterpart in supervised learning is usually called anomaly detection [33]. In anomaly detection, the given training data are always positive and the task is to generate a model to depict the positive samples. Therefore, any new data can be distinguished to be positive or negative (i.e., anomaly) based on the obtained model. Several existing methods, especially for image data, include autoencoder [30] and sparse coding [27].

Unlike anomaly detection, the given data for outlier recognition are unlabeled; thus we can only model it as an optimization problem based on some reasonable assumption in practice. For instance, it is very natural to assume that the inliers (i.e., normal data) locate in some dense region while the outliers are scattered in the feature space. Actually, many well known outlier recognition methods are based on this assumption [7, 13]. However, most of the density-based methods are only for low-dimensional space and quite limited for large-scale high-dimensional data that are very common in computer vision problems (note that several high-dimensional approaches often are of heuristic natures and need strong assumptions [21, 23, 3]). Recently, [26] applied the one-class support vector machine (SVM) method [31] to high-dimensional outlier recognition. Further, [34] introduced a new unsupervised model of autoencoder inspired by the observation that inliers usually have smaller reconstruction errors than outliers.

Our main contributions. Although the aforementioned methods could efficiently solve the problem of outlier recognition to a certain extent, they still suffer from several issues such as high time and space complexities or unstable performances for different datasets. In this paper, we present a novel geometric approach for outlier recognition. Roughly speaking, we try to build an approximate minimum enclosing ball (MEB) to cover the inliers but exclude the outliers. This model is seemed to be very simple but involves a couple of computational challenges. For example, the existence of outliers makes the problem to be not only non-convex but also highly combinatorial. Also, the high dimensionality makes the problem more difficult. To tackle these challenges, we develop a randomized algorithmic framework using a popular geometric concept called “core-set”. Comparing with existing results for outlier recognition, we provide a thorough analysis on the complexities and quality guarantee. Moreover, we propose a simple greedy peeling strategy to extend our method to multi-class inliers. Finally, we test our algorithm on both random and benchmark datasets and the experimental results reveal the advantages of our approach over various existing methods.

1.1 Other Related Work

Besides the aforementioned existing results, many other methods for outlier recognition/anomaly detection were developed previously and the readers can refer to several excellent surveys [22, 8, 18].

In computational geometry, a core-set [1] is a small set of points that approximate the shape of a much larger point set, and thus can be used to significantly reduce the time complexities for many optimization problems (please refer to a recent survey [28]). In particular, a core-set can be applied to efficiently compute an approximate MEB for a set of points in high-dimensional space [5, 24]. Moreover, [4] showed that it is possible to find a core-set of size that yields a -approximate MEB, with an important advantage that the size is independent of the original size and dimensionality of the dataset. In fact, the algorithm for computing the core-set of MEB is a Frank-Wolfe style algorithm [15], which has been systematically studied by Clarkson [9].

The problem of MEB with outliers also falls under the umbrella of the topic robust shape fitting [19, 2], but most of the approaches cannot be applied to high-dimensional data. [35] studied MEB with outliers in high dimension, however, the resulting approximation is a constant that is not fit enough for the applications proposed in this paper.

Actually, our idea is inspired by a recent work about removing outliers for SVM [12], where they proposed a novel combinatorial approach called Random Gradient Descent (RGD) Tree. It is known that SVM is equivalent to finding the polytope distance from the origin to the Minkowski Difference of the given two labeled point sets. Gilbert algorithm [17, 16] is an efficient Frank-Wolfe algorithm for computing polytope distance, but a significant drawback is that the performance is too sensitive to outliers. To remedy this issue, RGD Tree accommodates the idea of randomization to Gilbert algorithm. Namely, it selects a small random sample in each step by a carefully designed strategy to overcome the adverse effect from outliers.

1.2 Preliminaries

As mentioned before, we model outlier recognition as a problem of MEB with outliers in high dimension. Here we first introduce several definitions that are used throughout the paper.

Definition 1 (Minimum Enclosing Ball (MEB))

Given a set of points in , MEB is the ball covering all the points with the smallest radius. The MEB is denoted by .

Definition 2 (MEB with Outliers)

Given a set of points in and a small parameter , MEB with outliers is to find the smallest ball that covers at least points. Namely, the task is to find a subset of having at least points such that the resulting MEB is the smallest among all the possible choices; the induced ball is denoted by .

From Definition 2 we can see that the major challenge is to determine the subset of which makes the problem a challenging combinatorial optimization. Therefore we relax our goal to its approximation as follows. For the sake of convenience, we always use to denote the optimal subset of , that is, the radius of , and to denote the radius of .

Definition 3 (Bi-criteria Approximation)

Given an instance for MEB with outliers and two small parameters , an -approximation is a ball that covers at least points and has the radius at most .

When both and are small, the bi-criteria approximation is very close to the optimal solution with only a slight violation on the number of covering points and radius.

2 Our Algorithm and Analyses

In this section, we present our method in detail. For the sake of completeness, we first briefly introduce the core-set for MEB based on the idea of [4].

The algorithm is a simple iterative procedure with an elegant analysis: initially, it selects an arbitrary point and places it into a set that is empty at the beginning; in each of the following steps, the algorithm updates the center of and adds the farthest point to ; finally, the center of induces a -approximation for MEB of the whole input point set. The selected points are also called the core-set for MEB. To ensure there is at least certain extent of improvement achieved in each iteration, [4] showed that the following two inequalities would hold if the algorithm always selects the farthest point to the temporary center of :

| (1) | ||||

| (2) |

where and are the radii of the -th and the -th iterations respectively, is the optimal radius of the MEB, and is the shifting distance of the center of .

-

(1)

Let be the current node.

-

(2)

If the height of is , becomes a leaf node. Otherwise, perform the following steps:

-

(a)

Let denote the set of points along the path from root to node , and denote the center of . We say that is the attached point of .

-

(b)

Let . Compute the point set containing the top points which have the largest distances to .

-

(c)

Take a random sample of size from , and let each point be a child node of .

-

(a)

2.1 Algorithm for MEB with Outliers

We present our -approximation algorithm for MEB with outliers in this section. Although the outlier recognition problem belongs to unsupervised learning, we can estimate the fraction of outliers in the given data before executing our algorithm. In practice, we can randomly collect a small set of samples from the given data, and manually identify the outliers and estimate the outlier ratio . Therefore, in this paper we assume that the outlier ratio is known.

To better understand our algorithm, we first illustrate the high-level idea. If taking a more careful analysis on the previously mentioned core-set construction algorithm [4], we can find that it is not necessary to select the farthest point to the center of in each step. Instead, as long as the selected point has a distance larger than , the minimal extent of improvement would always be guaranteed [10]. As a consequence, we investigate the following approach.

We denote the ball centered at point with radius as . Recall that is the subset of yielding the optimal MEB with outliers, and is the radius of (see Section 1.2). In the -th step, we add an arbitrary point from to where is the current center of . Based on the above observation, we know that a -approximation is obtained after at most steps, that is, when .

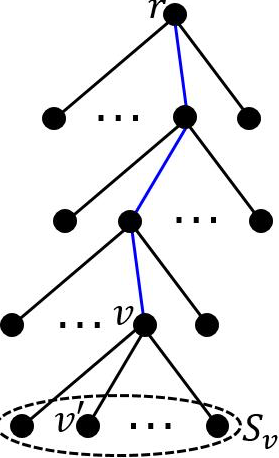

However, in order to carry out the above approach we need to solve two key issues: how to determine the value of and how to select a point belonging to . Actually, we can implicitly avoid the first issue via replacing the radius by the -th largest distance from the points of to , where is some appropriate number that will be determined in our following analysis. For the second issue, we have to take a small random sample instead of a single point from and try each of the sampled points; this operation will result in a tree structure that is similar to the RGD Tree introduced by [12] for SVM. We present the algorithm in Algorithm 1 and place the detailed parameter settings, proof of correctness, and complexity analyses in Sections 2.2 & 2.3.

2.2 Parameter Settings and Quality Guarantee

We denote the tree constructed by Algorithm 1 as . The following theorem shows the success probability of Algorithm 1.

Theorem 2.1

If we set , then with probability at least there exists at least one node of yielding an -approximation for the problem of MEB with outliers.

Before proving Theorem 2.1, we need to introduce several important lemmas.

Lemma 1

[11] Let be a set of elements, and be a subset of with size for some . If one randomly samples elements from , then with probability at least , the sample contains at least one element in for any .

Lemma 2

For each node , the set in Algorithm 1 contains at least one point from with probability .

Proof

Since and , we have

| (3) | ||||

Note that the size of is . If we apply Lemma 1 via setting and , it is easy to know that contains at least one point from with probability .

Lemma 3

With probability , there exists a leaf node such that the corresponding set .

Proof

Lemma 2 indicates that each node has a child node corresponding to a point from with probability . In addition, the probability of root belonging to is (recall that is the fraction of outliers). Note that the height of is , then with probability at least

| (4) |

there exists one leaf node satisfying .

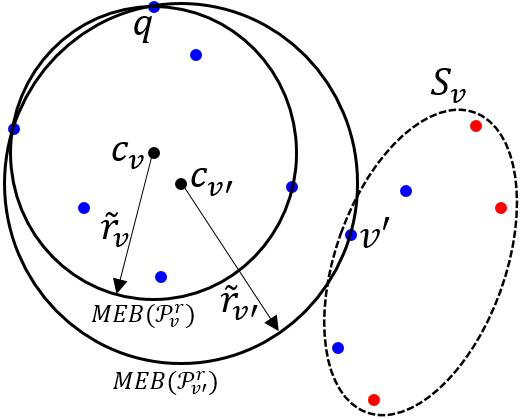

In the remaining analyses, we always assume that such a root-to-leaf path described in Lemma 3 exists and only focus on the nodes along this path. We denote where is the optimal radius of the MEB with outliers. Let be the MEB covering centered at , and the radii of and be and respectively. Readers can refer to Fig. 2. The following lemma is a key observation for MEB.

Lemma 4

[4] Given a set of points in , let and be the radius and center of respectively. Then for any point with a distance to , there exists a point such that .

The following lemma is a key for proving the quality guarantee of Algorithm 1. As mentioned in Section 2.1, the main idea follows the previous works [4, 10]. For the sake of completeness, we present the detailed proof here.

Lemma 5

For each node , at least one of the following two events happens: (1) is an -approximation; (2) its child on the path satisfies

| (5) |

Proof

If , then we are done; that is, covers points and . Otherwise, and we consider the second case.

By triangle inequality and the fact that (i.e., the point associating the node “”) lies outside , we have

| (6) |

Let . Combining the fact that , we have

| (7) |

By Lemma 4, we know that there exists one point (see Fig. 2) in satisfying . Since is also inside , . Then, we have

| (8) |

Combining (7) and (8), we obtain

| (9) |

Because and are decreasing and increasing on respectively, we let to achieve the lower bound (i.e., ). Substituting the value of to (9), we have . As a consequence, the second event happens and the proof is completed.

Now we prove Theorem 2.1 by the idea from [4]. Suppose no node in makes the first event of Lemma 5 occur. As a consequence, we obtain a series of inequalities for each pair of radii and (see (5)). For ease of analysis, we denote if the height of is in . By Inequality (5), we have

| (10) |

Combining the initial case and (10), we obtain

| (11) |

by induction [4]. Note that the equality in (11) holds only when , therefore,

| (12) |

Then, (recall that is the leaf node on the path ), which is a contradiction to our assumption . The success probability directly comes from Lemma 3. Overall, we obtain Theorem 2.1.

2.3 Complexity Analyses

We analyze the time and space complexities of Algorithm 1 in this section.

Time Complexity. For each node , we need to compute the corresponding approximate . To avoid computing the exact MEB costly, we apply the approximation algorithm proposed by [4]. See Algorithm 2 for details.

For Algorithm 2, we have the following theorem.

Theorem 2.2

[4] Let the center and radius of be and respectively, then , .

From Theorem 2.2, we know that a -approximation for MEB can be obtained when with the time complexity . Suppose the height of node is , then the complexity for computing the corresponding approximate is . Further, in order to obtain the point set , we need to find the pivot point that has the -th smallest distance to . Here we apply the PICK algorithm [6] which can find the -th smallest from a set of () numbers in linear time. Consequently, the complexity for each node at the -th layer is . Recall that there are nodes at the -th layer of . In total, the time complexity of our algorithm is

| (13) |

If we assume is a constant, the complexity is linear in and , where the hidden constant . In our experiment, we can carefully choose the parameters so as to keep the value of not too large.

Space Complexity. In our implementation, we use a queue to store the nodes in the tree. When the head of is popped, its child nodes are pushed into . In other words, we simulate breadth first search on the tree . Therefore, always keeps its size at most . Note that each node needs to store to compute its corresponding MEB, but actually we only need to record the pointers to link the points in . Therefore, the space complexity of is . Together with the space complexity of the input data, the total space complexity of our algorithm is .

2.4 Boosting

By Theorem 2.1, we know that with probability at least there exists an -approximation in the resulting tree. However, when outlier ratio is high, say , the success probability will become small. To further improve the performance of our algorithm, we introduce the following two boosting methods.

-

1.

Constructing a forest. Instead of building a single tree, we randomly initialize several root nodes and grow each root node to be a tree. Suppose the number of root nodes is . The probability that there exists an -approximation in the forest is at least which is much larger than .

-

2.

Sequentialization. First, initialize one root node and build a tree. Then select the best node in the tree and set it to be the root node for the next tree. After iteratively performing the procedure for several rounds, we can obtain a much more robust solution.

3 Experiments

From our analysis in Section 2.2, we know that Algorithm 1 results in a tree where each node has a candidate for the desired -approximation for the problem of MEB with outliers. For each candidate, we identify the nearest points to as the inliers. To determine the final solution, we select the candidate that has the smallest variance of the inliers.

3.1 Datasets and Methods to Be Compared

In our experiment, we test the algorithms on two random datasets and two benchmark image datasets. In terms of the random datasets, we generate the data points based on normal and uniform distributions under the assumption that the inliers usually locate in dense regions while the outliers are scattered in the space. The benchmark image datasets include the popular MNIST [25] and Caltech [14].

To make our experiment more convincing, we compare our algorithm with three well known methods for outlier recognition: angle-based outlier detection (ABOD) [23], one-class SVM (OCSVM) [31], and discriminative reconstructions in an autoencoder (DRAE) [34]. Specifically, ABOD distinguishes the inliers and outliers by assessing the distribution of the angles determined by each 3-tuple data points; OCSVM models the problem of outlier recognition as a soft-margin one-class SVM; DRAE applies autoencoder to separate the inliers and outliers based on their reconstruction errors.

The performances of the algorithms are measured by the commonly used score , where precision is the proportion of the correctly identified positives relative to the total number of identified positives, and recall is the proportion of the correctly identified positives relative to the total number of positives in the dataset.

3.2 Random Datasets

We validate our algorithm on the following two random datasets.

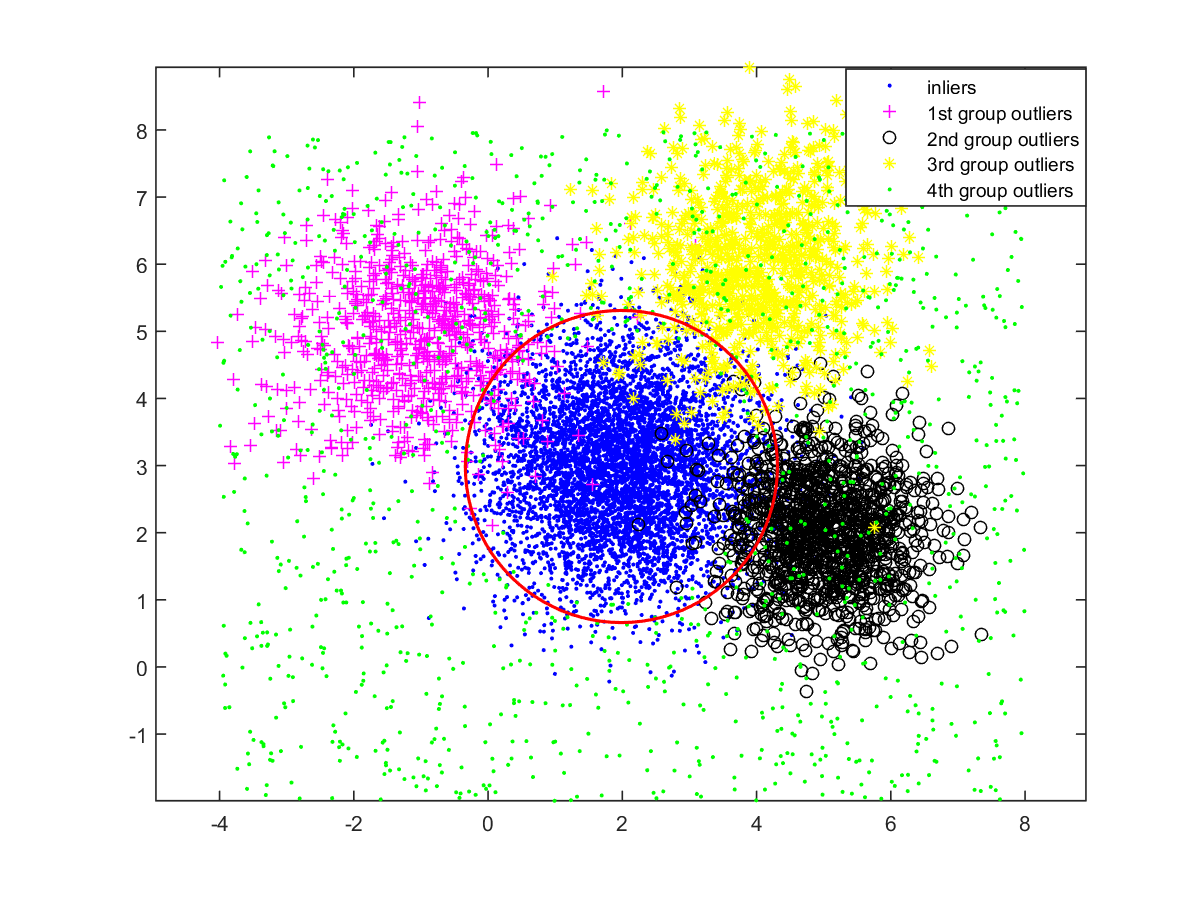

A toy example in D. To better illustrate the intuition of our algorithm, we first run it on a random dataset in 2D. We generate an instance of points with the outlier ratio . The inliers are generated by a normal distribution; the outliers consist of four groups where the first three are generated by normal distributions and the last is generated by a uniform distribution. The four groups of outliers contains , , , and points, respectively. See Fig. 3. The red circle obtained by our algorithm is the boundary to distinguish the inliers and outliers where the resulting score is . From this case, we can see that our algorithm can efficiently recognize the densest region even if the outlier ratio is high and the outliers also form some dense regions in the space.

High-Dimensional Points. We further test our algorithm and the other three methods on high-dimensional dataset. Similar to the previous 2D case, we generate points with four groups of outliers in ; the outlier ratio varies from to . The scores are displayed in Table 1, from which we can see that our algorithm significantly outperforms the other three methods for all the levels of outlier ratio.

| ABOD | |||||

|---|---|---|---|---|---|

| OCSVM | |||||

| DRAE | |||||

| Ours | 0.984 | 0.965 | 0.939 | 0.938 | 0.898 |

3.3 Benchmark Image Datasets

In this section, we evaluate all the four methods on two benchmark image datasets.

3.3.1 MNIST Dataset

MNIST contains handwritten digits ( to ) composed of both training and test datasets. For each of the 10 digits, we add the outliers by randomly selecting the images from the other digits. For each outlier ratio , we compute the average score over all the 10 digits. To map the images to a feature (Euclidean) space, we use two kinds of image features: PCA-grayscale and autoencoder feature.

| ABOD | |||||

|---|---|---|---|---|---|

| OCSVM | |||||

| DRAE | |||||

| Ours |

-

(1)

PCA-grayscale Feature. Each image in MNIST has a grayscale which is represented by a -dimensional vector. Note that the images of MNIST have massive redundancy. For example, the digits often locate in the middle of the images and all the background pixels have the value of . Therefore, we apply principle component analysis (PCA) to reduce the redundancy by trying multiple projection matrices which preserve , , and energy of the original grayscale features. These three features are denoted as PCA-, PCA- and PCA-, respectively. The results are shown in Table 2. We notice that our scores always achieve the highest by PCA-; this is due to the fact that PCA- can significantly reduce the redundancy as well as preserve the most useful information (comparing with PCA- and PCA-).

-

(2)

Autoencoder Feature. Autoencoder [29] is often adopted to extract the features of grayscale images. The autoencoder model trained in our experiment has seven symmetrical hidden layers (------), and the input layer is a -dimensional grayscale. We use the middle hidden layer as image feature. The results are shown in Table 3 and our method achieves the best for most of the cases.

Table 3: The scores of the four methods on MNIST by using autoencoder feature. ABOD OCSVM DRAE 0.933 Ours 0.885 0.831 0.770 0.694

3.3.2 Caltech Dataset

The Caltech- dataset 111http://www.vision.caltech.edu/Image_Datasets/Caltech256/ includes image sets. We choose concepts as the inliers in our experiment, which are airplane, binocular, bonsai, cup, face, ketch, laptop, motorbike, sneaker, t-shirt, and watch. We apply VGG net [32] to extract the image features, which is the -dimensional output of the second fully-connected layer. The results are shown in Table 4.

| ABOD | |||||

|---|---|---|---|---|---|

| OCSVM | |||||

| DRAE | 0.927 | 0.912 | |||

| Ours | 0.964 | 0.948 | 0.932 |

Unlike the random data, the distribution of real data in the space is much more complicated. To alleviate this problem, we try to capture the separate parts of the original VGG net feature. Similar to Section 3.3.1, we apply PCA to reduce the redundancy of VGG net feature and preserve its key parts. Three matrices are obtained to preserve , , and energy respectively. The results are shown in Table 5. We can see that our method achieves the best for all the cases, especially when using PCA- (marked by underlines). More importantly, PCA- considerably improves the results by using the original VGG net feature (see Table 4), and the dimensionality is only which results in a significant reduction on the complexities.

| ABOD | |||||

|---|---|---|---|---|---|

| OCSVM | |||||

| DRAE | |||||

| Ours |

3.4 Comparisons of Time Complexities

From Section 3.2 and Section 3.3 we know that our method achieves the robust and competitive performances in terms of accuracy. In this section, we compare the time complexities of all the four algorithms.

ABOD has the time complexity . In the experiment, we use its speed-up edition FastABOD which has the reduced time complexity where is some specified parameter.

OCSVM is formulated as a quadratic programming with the time complexity .

DRAE alternatively executes the following two steps: discriminative labeling and reconstruction learning. Suppose it runs in rounds; actually the two inner steps are also iterative procedures which both run iterations. Thus, the total time complexity of DRAE is , where is the number of the hidden layer nodes that can be generally expressed as ( is a constant); then the total time complexity becomes where is a large constant depending on , and .

When the number of points is large, FastABOD, OCSVM, and DRAE will be very time-consuming. On the contrary, our algorithm takes only linear running time (see Section 2.3) and usually runs much faster in practice. For example, our algorithm often takes less than of the time consumed by the other three methods in our experiment.

4 Extension for Multi-class Inliers

All the three compared methods in Section 3 can only handle one-class inliers. However, in many real scenarios the data could contain multiple classes of inliers. For example, a given image dataset may contain the images of “dog” and “cat”, as well as a certain fraction of outliers. So it is necessary to recognize multiple dense regions in the feature space. Fortunately, our proposed algorithm for MEB with outliers can be naturally extended for multi-class inliers. Instead of building one ball, we can perform the following greedy peeling strategy to extract multiple balls: first we can take a small random sample from the input to roughly estimate the fractions for the classes; then we iteratively run the algorithm for MEB with outliers and remove the covered points each time, until the desired number of balls are obtained. Roughly speaking, we reduce the problem of multi-class inliers to a series of the problems of one-class inliers. The extended algorithm for multi-class inliers is evaluated on two datasets, a random dataset in and Caltech-.

Random dataset. We generate three classes of inliers following different normal distributions and the outliers following uniform distribution in . For each outlier ratio , we report the three scores (with respect to the three classes of inliers) and their average in Table 6 (a).

Caltech-256. We randomly select three image sets from Caltech- as the three classes of inliers, and an extra set of mixed images from the remaining image sets as the outliers. Moreover, we point out that recognizing multi-class inliers from real image sets is much more challenging than single class; we believe that it is due to the following two reasons: (1) the multiple classes of inliers could mutually overlap in the feature space and (2) the outlier ratio with respect to each class usually is large (for example, the outlier ratio for class 1 should also take into account of the fractions of the remaining class 2 and 3, if there are 3 classes in total). We use PCA-VGG- feature in our experiment and the performance is very robust (see Table 6 (b)).

|

|

|

|

|

|||||||||||||

| AVG |

(a) Random dataset

AVG

(b) Caltech-

5 Conclusion

In this paper, we present a new approach for outlier recognition in high dimension. Most existing methods have high time and space complexities or cannot achieve a quality guaranteed solution. On the contrary, we show that our algorithm yields a nearly optimal solution with the time and space complexities linear on the input size and dimensionality. More importantly, our algorithm can be extended to efficiently solve the instances with multi-class inliers. Furthermore, our experimental results suggest that our approach outperforms several popular existing methods in terms of accuracy.

References

- [1] P. K. Agarwal, S. Har-Peled, and K. R. Varadarajan. Geometric approximation via coresets. Combinatorial and Computational Geometry, 52:1–30, 2005.

- [2] P. K. Agarwal, S. Har-Peled, and H. Yu. Robust shape fitting via peeling and grating coresets. Discrete & Computational Geometry, 39(1-3):38–58, 2008.

- [3] C. C. Aggarwal and P. S. Yu. Outlier detection for high dimensional data. ACM Sigmod Record, 30(2):37–46, 2001.

- [4] M. Badoiu and K. L. Clarkson. Smaller core-sets for balls. In Proceedings of the ACM-SIAM Symposium on Discrete Algorithms (SODA), pages 801–802, 2003.

- [5] M. Badoiu, S. Har-Peled, and P. Indyk. Approximate clustering via core-sets. In Proceedings of the ACM Symposium on Theory of Computing (STOC), pages 250–257, 2002.

- [6] M. Blum, R. W. Floyd, V. Pratt, R. L. Rivest, and R. E. Tarjan. Time bounds for selection. Journal of Computer and System Sciences, 7(4):448–461, 1973.

- [7] M. M. Breunig, H.-P. Kriegel, R. T. Ng, and J. Sander. Lof: identifying density-based local outliers. ACM Sigmod Record, 29(2):93–104, 2000.

- [8] V. Chandola, A. Banerjee, and V. Kumar. Anomaly detection: A survey. ACM Computing Surveys (CSUR), 41(3):15, 2009.

- [9] K. L. Clarkson. Coresets, sparse greedy approximation, and the frank-wolfe algorithm. ACM Transactions on Algorithms, 6(4):63, 2010.

- [10] H. Ding and J. Xu. Solving the chromatic cone clustering problem via minimum spanning sphere. In Proceedings of the International Colloquium on Automata, Languages, and Programming (ICALP), pages 773–784, 2011.

- [11] H. Ding and J. Xu. Sub-linear time hybrid approximations for least trimmed squares estimator and related problems. In Proceedings of the International Symposium on Computational geometry (SoCG), page 110, 2014.

- [12] H. Ding and J. Xu. Random gradient descent tree: A combinatorial approach for svm with outliers. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), pages 2561–2567, 2015.

- [13] M. Ester, H.-P. Kriegel, J. Sander, and X. Xu. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), pages 226–231, 1996.

- [14] L. Fei-Fei, R. Fergus, and P. Perona. Learning generative visual models from few training examples: An incremental bayesian approach tested on 101 object categories. Computer vision and Image understanding, 106(1):59–70, 2007.

- [15] M. Frank and P. Wolfe. An algorithm for quadratic programming. Naval Research Logistics Quarterly, 3(1-2):95–110, 1956.

- [16] B. Gärtner and M. Jaggi. Coresets for polytope distance. In Proceedings of the International Symposium on Computational geometry (SoCG), pages 33–42, 2009.

- [17] E. G. Gilbert. An iterative procedure for computing the minimum of a quadratic form on a convex set. SIAM Journal on Control, 4(1):61–80, 1966.

- [18] M. Gupta, J. Gao, C. Aggarwal, and J. Han. Outlier detection for temporal data. Synthesis Lectures on Data Mining and Knowledge Discovery, 5(1):1–129, 2014.

- [19] S. Har-Peled and Y. Wang. Shape fitting with outliers. SIAM Journal on Computing, 33(2):269–285, 2004.

- [20] G. E. Hinton, N. Srivastava, A. Krizhevsky, I. Sutskever, and R. R. Salakhutdinov. Improving neural networks by preventing co-adaptation of feature detectors. arXiv preprint arXiv:1207.0580, 2012.

- [21] H.-P. Kriegel, P. Kröger, E. Schubert, and A. Zimek. Outlier detection in axis-parallel subspaces of high dimensional data. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining (PAKDD), pages 831–838, 2009.

- [22] H.-P. Kriegel, P. Kröger, and A. Zimek. Outlier detection techniques. Tutorial at PAKDD, 2009.

- [23] H.-p. Kriegel, M. Schubert, and A. Zimek. Angle-based outlier detection in high-dimensional data. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), pages 444–452, 2008.

- [24] P. Kumar, J. S. B. Mitchell, and E. A. Yildirim. Approximate minimum enclosing balls in high dimensions using core-sets. ACM Journal of Experimental Algorithmics, 8, 2003.

- [25] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- [26] W. Liu, G. Hua, and J. R. Smith. Unsupervised one-class learning for automatic outlier removal. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 3826–3833, 2014.

- [27] C. Lu, J. Shi, and J. Jia. Abnormal event detection at 150 fps in matlab. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), pages 2720–2727, 2013.

- [28] J. M. Phillips. Coresets and sketches. Computing Research Repository, 2016.

- [29] D. E. Rumelhart, G. E. Hinton, and R. J. Williams. Learning representations by back-propagating errors. Cognitive Modeling, 5(3):1, 1988.

- [30] M. Sakurada and T. Yairi. Anomaly detection using autoencoders with nonlinear dimensionality reduction. In Proceedings of the Workshop on Machine Learning for Sensory Data Analysis (MLSDA), page 4, 2014.

- [31] B. Schölkopf, R. Williamson, A. Smola, J. Shawe-Taylor, and J. Platt. Support vector method for novelty detection. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), pages 582–588, 1999.

- [32] K. Simonyan and A. Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- [33] P.-N. Tan, M. Steinbach, and V. Kumar. Introduction to Data Mining. 2006.

- [34] Y. Xia, X. Cao, F. Wen, G. Hua, and J. Sun. Learning discriminative reconstructions for unsupervised outlier removal. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), pages 1511–1519, 2015.

- [35] H. Zarrabi-Zadeh and A. Mukhopadhyay. Streaming 1-center with outliers in high dimensions. In Proceedings of the Canadian Conference on Computational Geometry (CCCG), pages 83–86, 2009.