A Novel OTFS-based Massive Random Access Scheme in Cell-Free Massive MIMO Systems for High-Speed Mobility

Abstract

In the research of next-generation wireless communication technologies, orthogonal time frequency space (OTFS) modulation is emerging as a promising technique for high-speed mobile environments due to its superior efficiency and robustness in doubly-selective channels. Additionally, the cell-free architecture, which eliminates the issues associated with cell boundaries, offers broader coverage for radio access networks. By combining cell-free network architecture with OTFS modulation, the system may meet the demands of massive random access required by machine-type communication devices in high-speed scenarios. This paper explores a massive random access scheme based on OTFS modulation within a cell-free architecture. A transceiver model for uplink OTFS signals involving multiple access points (APs) is developed, where channel estimation with fractional channel parameters is approximated as a two-dimensional block sparse matrix recovery problem. Building on existing superimposed and embedded preamble schemes, a hybrid preamble strategy intended for massive random access is proposed. This scheme leverages superimposed and embedded preambles to respectively achieve rough and accurate active user equipment (UEs) detection (AUD), as well as precise channel estimation. Moreover, this study introduces a generalized approximate message passing and pattern-coupled sparse Bayesian learning with Laplacian prior (GAMP-PCSBL-La) algorithm, which effectively captures block sparse features after discrete cosine transform (DCT), delivering precise estimation results with reduced computational complexity. Simulation results demonstrate that the proposed scheme is effective and provides superior performance compared to other existing schemes.

Index Terms:

Massive random access, OTFS, cell-free massive MIMO, active UE detection, channel estimation, block sparse recovery.I Introduction

The next-generation wireless communication will delve deeper into more ubiquitous Internet of Things (IoT) scenarios in the coming decades, encompassing broader coverage areas and a significantly larger number of user equipment (UEs) [1]. Beyond human-type communication devices (HTCDs), there are numerous machine-type communication devices (MTCDs) that need to facilitate data transmission [2]. In high-speed massive machine-type communication (mMTC) scenarios, such as high-speed railways, Internet of Vehicles (IoV), unmanned aerial vehicle (UAV) communications, and high-speed integrated sensing and communication (ISAC) [3], the great number of MTCDs face constraints such as allocable resources [4]. Due to asynchronous delays and Doppler shifts caused by high-speed mobility, doubly-selective features are often exhibited by the transmission channel. Traditional coordinated access protocols, which involve multiple handshake processes, not only introduce extra delays but also incur significant signaling overhead [5]. Moreover, coordinated orthogonal resources suffer severe orthogonality degradation in doubly-selective channels, thereby reducing system performance [6]. Unlike coordinated schemes, grant-free non-orthogonal multiple access (NOMA) allows devices to transmit data without allocated resources. The receiver performs active UE detection (AUD) and channel estimation (CE) based on unique non-orthogonal preamble sequence assigned to each UE [7]. Therefore, grant-free NOMA in uncoordinated access schemes is considered one of the key technologies for mMTC [4].

Emerging machine-type wireless transmission services impose stringent demands on communication quality in high-mobility scenarios. Orthogonal frequency division multiplexing (OFDM), widely used in 4G and 5G, can eliminate inter-symbol interference caused by time dispersion using a cyclic prefix (CP), but struggles to mitigate frequency dispersion caused by Doppler shifts, leading to inter-carrier interference [8]. Hadani et al. proposed a novel two-dimensional modulation known as orthogonal time frequency space (OTFS) [9]. Compared to OFDM, OTFS has been proven to significantly improve transmission performance in doubly-selective channels with only a modest increase in system complexity [10]. Specifically, OTFS uses a two-dimensional inverse symplectic finite Fourier transform (ISFFT) to map signals from the Doppler-delay (DD) domain to the time-frequency (TF) domain. Unlike OFDM, each signal symbol in OTFS spans the entire TF domain channel, fully exploiting channel diversity and enhancing reliability [11]. Additionally, the number of reflectors is considerably smaller than the dimension of transmitted symbols, resulting in sparsity for channel parameters in the DD domain [9], which simplify the estimation of channel state information (CSI). Given these advantages, OTFS is considered a promising candidate for next-generation broadband communication modulation technology.

In addition, high-mobility communication inevitably requires wide coverage, as UEs may travel significant distances during communication intervals. Cellular network necessitates handovers for high-mobility UEs, increasing the complexity of system processing [12]. Moreover, boundary effects limit the transmission efficiency for UEs located at the cell edges [13]. Therefore, a concept named cell-free massive MIMO has been proposed to support denser and wider device coverage, significantly enhancing spectral efficiency and reliability [14]. By deploying numerous access points (APs) across the coverage area, boundary effects are eliminated in cell-free massive system [15]. Each AP is equipped with an independent signal processing unit and connected to a central processing unit (CPU) via fronthaul, providing a flexible networking [16]. Additionally, with UEs being closer to the receiving antennas, signal transmission and processing delays are significantly reduced. Mohammadi et al. theoretically demonstrated that OTFS modulation can achieve superior performance within a cell-free massive MIMO architecture [17]. However, numerous challenges still need to be addressed for massive random access in high-mobility scenarios when integrating cell-free massive MIMO.

Recent discussions on OTFS grant-free access schemes for high-mobility mainly focus on low earth orbit (LEO) satellite communication [18]. Shen et al. approximated the OTFS channel as a sparse matrix and utilized the low-complexity pattern-coupled sparse Bayesian learning (PCSBL) for AUD and sparse CE [19]. Zhou et al. designed a novel training sequences aided OTFS (TS-OTFS) transmission protocol for LEO satellite IoT communication and proposed a two-stage AUD and CE method [20]. Besides, a high-speed railway IoT active detection method combining tandem spreading multiple access (TSMA) and OTFS was proposed in [21]. By pre-estimating propagation delays, a preamble transmission method was designed in [22], allowing UEs to perform pre-compensation. However, existing research fails to address schemes for massive high-mobility MTCD access that incorporate cell-free massive MIMO systems. Moreover, the current CE methods, including the embedded [23] and superimposed [24] pilot schemes, have their limitations: the former incurs high pilot overhead, while the latter has suboptimal estimation performance. Hence, a balanced scheme is required to ensure accurate estimation while reducing overhead.

To address the aforementioned challenges, this paper investigates the AUD and CE schemes for massive random access in cell-free massive MIMO system. Firstly, we establish OTFS uplink signal model in cell-free massive MIMO system. Secondly, a hybrid preamble scheme is designed, where rough AUD is performed by superimposed preambles, and joint accurate AUD and CE are achieved based on embedded preambles. This scheme reduces the overall sparse signal dimension, allowing the system to accommodate more UEs. Finally, we propose a new block sparse matrix recovery algorithm for AUD and CE, named generalized approximate message passing and pattern-coupled sparse Bayesian learning with Laplacian prior (GAMP-PCSBL-La). Simulations demonstrate that this algorithm achieves better estimation performance compared to existing block recovery algorithms. Our contributions are summarized as follows:

-

•

We first analyze the massive random access model with OTFS modulation integrating cell-free massive MIMO in this paper. Through mathematical approximation, utilizing uniform planar array (UPA) of antennas and selecting appropriate preamble sequence embedding positions, we model the preamble signals as a two-dimensional (2-D) sparse compressed sensing model in the delay-Doppler-UE-beam domains. Then AUD and CE are transformed into a 2-D block sparse matrix recovery problem.

-

•

To address the scale constraints of high-dimensional sparse matrices in compressed sensing111The sparse recovery of compressed sensing requires meeting sparsity constraint [25], i.e. , where denotes the length of observed sequences, and are the number of nonzero and total elements of sparse sequence, respectively. is a small constant. while reducing the overhead of preambles, we propose a hybrid preamble scheme. Rough AUD is performed by superimposed preamble, followed by accurate AUD and CE based on embedded preamble. This approach reduces the sparse channel dimension for each estimation, enabling the system to support massive access for numerous UEs.

-

•

A novel GAMP-PCSBL-La algorithm is designed to recover the two-dimensional block sparse channel matrix. GAMP achieves good estimation performance while reducing computational complexity by avoiding matrix inversion [26]. PCSBL captures the block sparsity of two-dimensional matrix [27], and the Laplacian prior distribution has been proven to enhance reconstruction ability of sparse signals with discrete cosine transform (DCT) [28]. By combining these features, GAMP-PCSBL-La achieves excellent channel estimation accuracy with low computational complexity. Our simulation results further validate this conclusion.

The remainder of this paper is organized as follows. In Section II, we introduce the system model. Section III discusses the rough AUD, joint accurate AUD and CE strategies based on hybrid preamble scheme. In Section IV, we present the novel block sparse matrix recovery algorithm, GAMP-PCSBL-La. Section V provides numerical simulations and the corresponding analysis. Finally, the conclusion is given in Section VI.

Notations: Bold lower letters and bold capital letters denote vectors and matrices, respectively. Normal lower letters and capital letters represent scalar variables and constants, respectively. and are complex number set and real number set, respectively. represent a sliced matrix for with -th row to -th row and -th column to -th column, while is a sliced vector for with -th element to -th element. Especially, denotes the submatrix of with -th row to -th row. and mean the expectation and variance, respectively. denotes a Dirac delta function, and is the conjugate transpose of a matrix or vector. means -th element of matrix . and represent Kronecker product and Hadamard product, respectively. Calligraphy letters are used to denote sets. is Frobenius norm. and respecitvely represent ceiling and rounding operations.

II System Model

II-A mMTC in Cell-free Massive MIMIO System

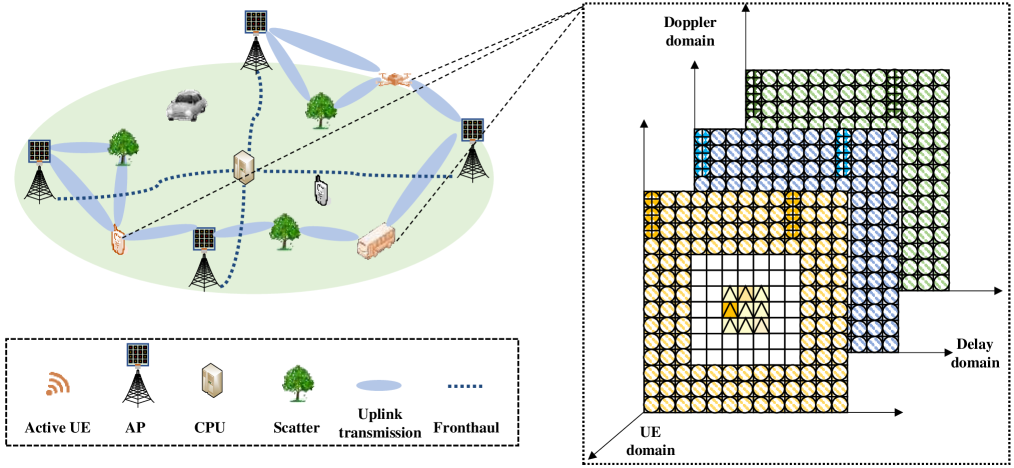

We consider a cell-free massive MIMO system, as shown in Fig. 1, comprising APs and single-antenna UEs, which are randomly distributed over a large area. Assume that each AP is connected to the CPU through fronthaul, allowing lossless data interaction. The UEs move within the area, and only a small portion of UEs transmit uplink data to APs in a specific transmission slot. These UEs are referred to as active UEs, denoted as , while the remaining UEs are silent. The channels of active UEs and APs experience doubly-selective fading. The maximum delay and Doppler shift are set to and , respectively. The signal propagation from an active UE to an AP is characterized by a finite number of paths. The uplink transmission block consists of preamble sequences and data symbols. AUD and CE are performed based on received preamble sequences.

II-B OTFS Modulation and Channel Model

Consider a typical OTFS transceiver system occupies bandwidth and time duration , where denotes the number of subcarriers with interval and denotes the number of time intervals . In DD domain, the resolutions of delay and Doppler parameters are and , respectively. For a given active UE , the modulated and power-allocated symbol is assigned to the -th grid point in DD grid. Here, and represent the indices for Doppler domain and delay domain, respectively. By applying the ISFFT to in DD domain, the zero-mean symbols are transformed into TF domain:

| (1) |

On this basis, the transmitter applies the Heisenberg transform to convert it into time-domain:

| (2) |

where represents the rectangular window function in time domain with a duration of . The delay-Doppler channel response model from UE to the -th AP is defined as:

| (3) |

Here, , and represent the gain, delay, and Doppler shift, respectively, of the -th path from UE to the -th AP. is the number of path. Consider the path loss and shadow fading, we have , with representing the large-scale fading coefficient of the channels from UE to -th AP. The corresponding received time-domain signals for -th AP is presented as:

| (4) |

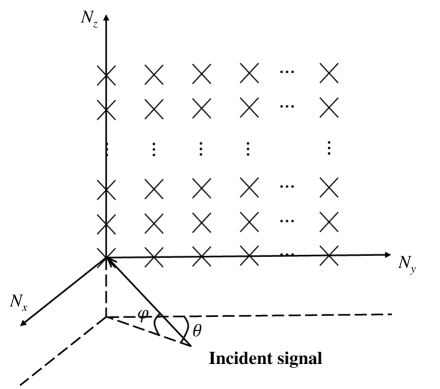

where represents the additive Gaussian noise. Each AP is equipped with a UPA, where and represent the number of antennas in the -direction and -direction, respectively. As shown in Fig. 3, let the elevation angle and azimuth angle of the -th incident signal from the -th active UE to the -th AP are denoted by and , respectively. The antenna spacing is specified as half-wavelength, with and . The response of UPA is given by:

| (5) |

where represents the spatial steering vector with dimension , expressed as:

| (6) |

The combine matrix for each AP is denoted as , where is the DFT matrix with dimension . We define beam vector , which has only one non-zero block. The local signal processing unit of AP performs Wigner transform on the time-domain received signal, yielding the received signal presented as

| (7) |

Usually, is larger than . We assume that each delay parameter is an integer multiple of the resolution, i.e.,

| (8a) | |||

| (8b) | |||

where both and are integers, and is a fraction value between -0.5 and 0.5. Using symplectic finite Fourier transform (SFFT), the received signal in the TF domain is transformed into the DD domain:

| (9) |

Additionally, we introduce a function defined as

| (10) |

Combining equations (1), (II-B), (8a), (8b) and (9), we obtain the received signal model in DD domain as , where is shown in equation (11), is defined as the neighborhood of integer Doppler parameters, and is a very small integer.

Proof:

Please refer to Appendix A in [29]. ∎

| (11) |

III Hybrid Preamble-based AUD and CE Scheme

III-A Rough Estimation

We are going to consider the signal model with both delay domain and Doppler domain dimensions are relatively small. Assuming and are both integers, where . The quantization value for the maximum delay is when is particularly small, which implies that any delay parameter is a fractional value. The quantization value for the maximum Doppler shift is . Similar to equation (11), we obtain the reception model as:

| (12) |

where , , and is defined as the neighborhood of with is a small-value integer. Due to , , and especially when is very small and is larger compared to , (a) holds approximately true.

Proof:

Please refer to Appendix B in [29]. ∎

For a concise expression, we define function:

| (13) | ||||

where represents the vector obtained by circularly shifting vector by positions. Based on the above definitions, we transform equation (III-A) into matrix form:

| (14) |

where , and

| (15e) | |||

| (15f) | |||

Here, is a matrix, where each element is given by . is the index vector that satisfies . is expressed as

| (16) |

| (17) |

is presented in equation (17), where and . Combining equations (14) and (17), is regarded as the known measurement matrix at the AP, as the observed matrix and as an unknown 2-D block sparse matrix. In a multi-user scenario, the dimension of the sparse matrix expands, allowing us to utilize this model to detect the indices of non-zero entries in the sparse matrix and thereby identify potential active UEs. Given the coarse approximations made during rough AUD, especially under conditions where is notably small, accurate estimation of channel parameters becomes challenging. Therefore, it necessitates further refinement based on initial rough detection for accurate AUD and CE. Detailed elaboration on this can be found in following subsections.

III-B Accurate Estimation

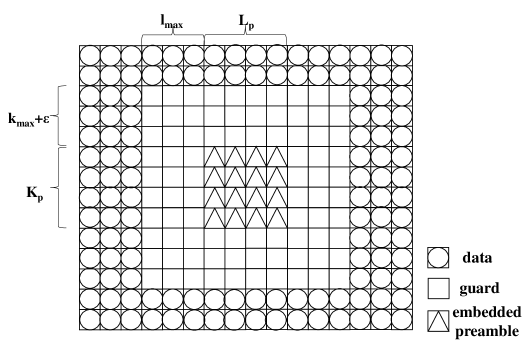

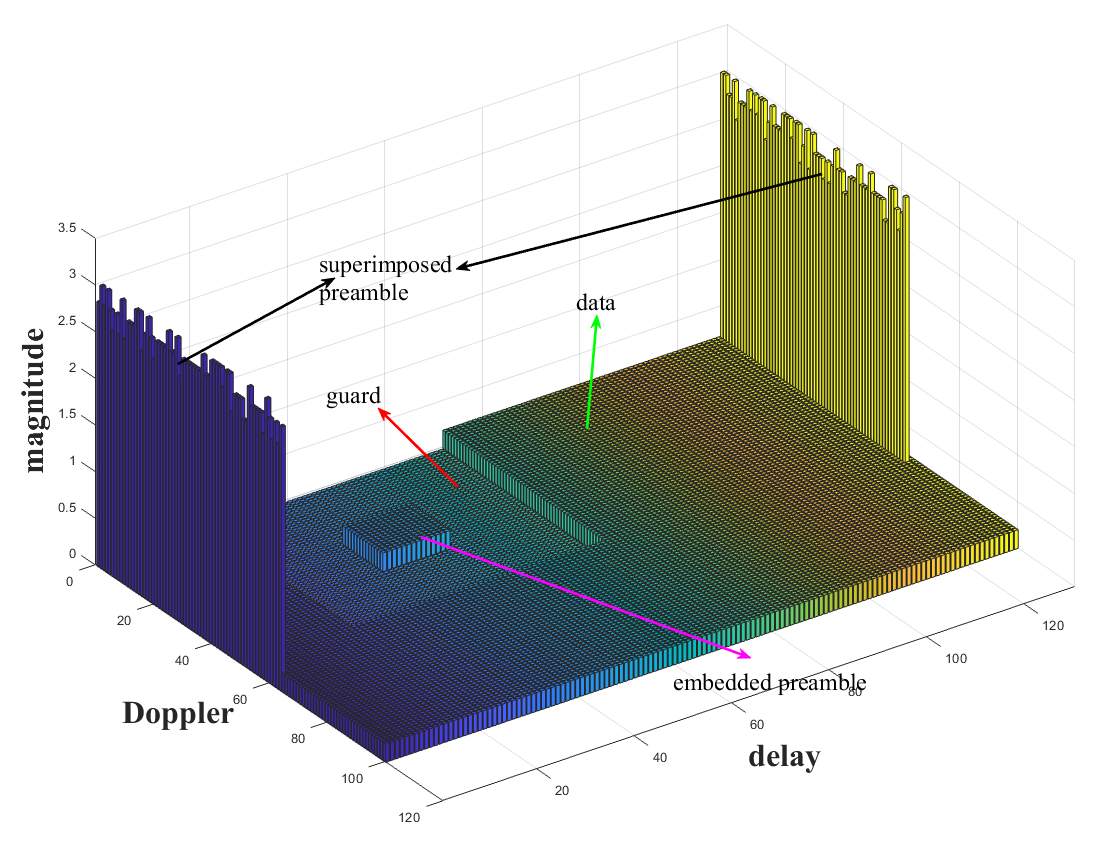

Given a sufficiently large dimensions of transmission block, the embedded preamble can be adopted for joint accurate AUD and CE. Let and . It can be observed from (11) that the -th DD domain received symbol is affected by the transmitted symbols with range of . Therefore, to avoid interference between preamble and data, a guard interval needs to be estabilshed, where symbols within this interval are set to zero, as illustrated in Fig. 4.

Assuming the starting coordinates of the preamble are , with the dimension of on the delay axis and on the Doppler axis. If we set and , for , only the case when (since ) in (11) are considerable. We denote , , and let , , where and are DD domain transmitted symbols of -th UE and received symbols of -th AP, respectively. denotes the vectorized matrix indices . Similar to (14), the received embedded preamble signal is expressed as:

| (18) |

where is described in equation (19), and

| (19) |

| (20a) | |||

| (20b) | |||

is the index vector that satisfies . is expressed as

| (21) |

| (22) |

is presented as in equation (22), where and . According to equation (18), can be considered as the known measurement matrix at the AP, as the observed matrix and as an unknown 2-D block sparse matrix. Since in accurate estimation, and , which make a higher resolution in delay and Doppler, resulting in more precise quantization. However, compared to rough estimation, the dimension of the sparse vector for accurate estimation is larger, making it more difficult to recover the sparse vector in multi-UE scenarios. Therefore, a hybrid preamble scheme is designed to achieve precise detection and estimation with lower overhead and complexity.

III-C Hybrid Preamble for Multi-UE Joint Active Detection and Channel Estimation

In the DD domain, we superimpose the superimposed preamble, denoted as preamble1 , and the block symbols , which includes the embedded preamble denoted as preamble2 , and data symbols . Different power levels are allocated to and , ensuring a significant difference in energy domain between these two types of signals. The superimposed result forms transmission block, structured as shown in the Fig. 5.

Then we have

| (23) |

where area represents the grids designated for placing preamble2 and the guard intervals. We set the preamble1 is placed at intervals of along the delay axis while being placed continuously along the Doppler axis. Since the delay dimension of preamble1 is assumed to be very small, and the Doppler dimension satisfies , there is sufficient space within DD dimension to place preamble2 and guard interval. This arrangement ensures that the received signal of preamble1 does not interfere with preamble2.

Building on this,the received signal in the TF domain can be expressed as:

| (24) |

where and represent -th AP’s received and signals in TF domain, respectively. is treated as noise. Assuming that applying ISFFT to results in a TF domain signal , and applying ISFFT to to obtain a TF domain signal , both signals pass through the same channel to arrive at the -th AP. After performing the Wigner transform, the TF domain received signals are and respectively. Based on equations (1), (9), and (22), we can derive:

| (25) |

| (26) |

| (27) |

Proof:

Please refer to Appendix C in [29]. ∎

By comparing equations (26) and (27), it is apparent that for and , define can be approximated as the received signal of through a channel with the same parameters, except that the Doppler parameter is times the original one. Therefore, the maximum Doppler quantization parameter also becomes times the original value. Based on this inference, we apply an SFFT to each column of and then perform rough AUD with the maximum Doppler quantization parameter . In the multi-UE scenario, the reception model for preamble1, as described in (14), can be written as:

| (28) |

where is the SFFT result of , and

| (29a) | |||

| (29b) | |||

After completing the rough AUD, each AP transmits the detected results, representing the set of active UEs, to CPU. The CPU merges these results to form a system-wide rough active UEs set, as , where denotes the set of active UEs detected by the -th AP. Assuming that for , we have . Similarly, for multi-UE scenario, equation (18) is rewritten as:

| (30) |

where , and

| (31a) | |||

| (31b) | |||

Since , the dimension of the sparse vector to be recovered is smaller than that of the estimated vector in a scheme that solely performs accurate AUD with the same sparsity (i.e., the same number of non-zero elements) and received signals. Therefore, a more accurate estimation can be achieved by the hybrid preamble scheme.

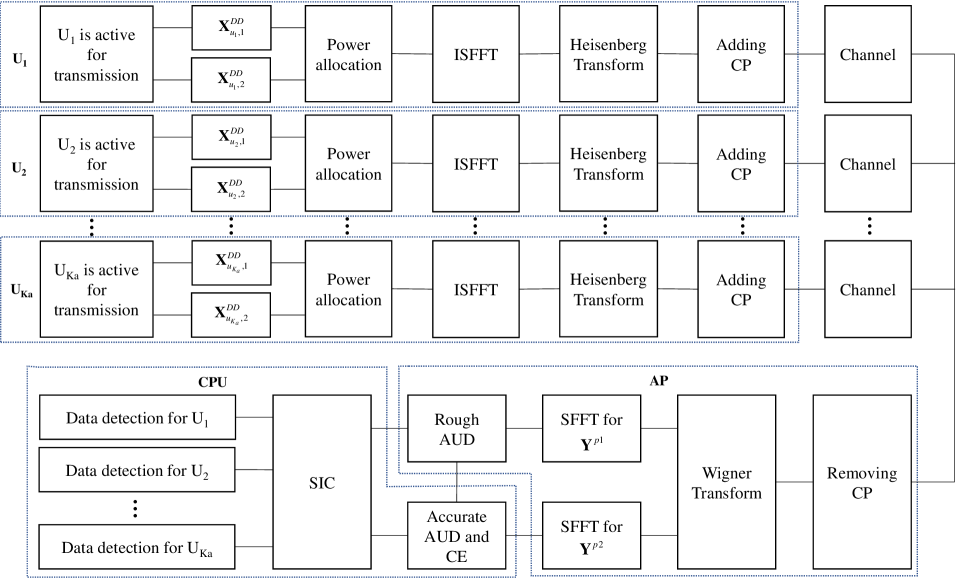

After obtaining active UEs and their corresponding channels, the influence of preamble1 on the received signal can be removed by using successive interference cancellation (SIC). Based on the residual received signal and estimated channel parameters, the data signal can be recovered using algorithms such as message passing. The system’s signal processing flow can be seen in Fig. 2. This part of data recovery is out of the scope of this paper. The hybrid preamble based AUD and CE scheme is summarized as in Algorithm 1.

IV 2-D Block Sparse Matrix Recovery

IV-A Probability Model

As elaborated in [30], the Laplacian distribution, compared to Gaussian mixture distribution, can better capture the sparsity of signals after undergoing DCT and achieve more precise estimation. Given an AWGN channel model:

| (32) |

where is the observed matrix, is the measurement matrix (its values are known at the receiver), is the block sparse matrix to be estimated, and is the additive noise matrix. Since the Laplacian distribution is defined only for real-valued random variables, we need to convert the complex form model of equation (32) into the following real equivalent model:

| (35) | |||

| (38) | |||

| (43) |

Here, and represent the operations of taking the real and imaginary parts of a complex matrix, respectively. In practical systems, the noise variance is often unpredictable. We assume that the communication between the transmitter and receiver occurs over an AWGN channel, i.e.,

| (44) |

where is -th element of matirx , and denotes the noise variance. Referencing the two-layer hierarchical probabilistic model of PCSBL [27], we introduce the hyperparameters and establish the probability distributions of as:

| (45a) | |||

| (45b) | |||

| (45c) | |||

IV-B GAMP Algorithm for Sparse Matrix Recovery

As [26] explained, given a prior distribution, the GAMP algorithm can achieve sparse signal recovery with reduced computational complexity from to . Following the sum-product and max-sum forms of the BP algorithm, the GAMP algorithm uses Gaussian and quadratic approximations to provide the minimum mean square error (MMSE) estimation and maximum a posteriori (MAP) estimation of the sparse matrix, respectively. By defining scalar estimation functions, and , the GAMP algorithm iteratively performs scalar operations at the input and output nodes to decompose the vector-valued estimation problem. Assuming that in -th iteration, the prior distribution of the sparse matrix is expressed as , with is the hyperparameter obtained in the -th iteration. For AWGN channel, the GAMP algorithm is shown from line 3 to line 13 in Algorithm 2.

For sum-product GAMP, and are defined as

| (46a) | |||

| (46b) | |||

Based on MMSE estimation, in input node, we have

| (47a) | ||||

| (47b) | ||||

where represent the approximate posterior distribution of -th element of the matrix to be estimated. In the sum-product derivation, the messages from the factor node to the variable node are approximated as:

| (48) |

As previously mentioned, the prior of is defined as:

with

Therefore, the approximate posterior distribution of can be expressed as:

| (49) | ||||

where

| (50a) | ||||

| (50b) | ||||

| (50c) | ||||

| (50d) | ||||

| (50e) | ||||

| (50f) | ||||

| (50g) | ||||

| (50k) | ||||

According to (IV-B), the posterior mean and variance of can be calculated as in (51) and (52), where is the standard -function, representing the tail probability of the normal distribution, defined as:

| (51) |

| (52) |

| (53) |

Proof:

Please refer to Appendix D in [29]. ∎

This completes the GAMP portion of Algorithm 2.

IV-C Learning Hyperparameters via EM Algorithm

After obtaining the posterior distribution of , our objective shifts to finding appropriate hyperparameters and that maximize the posterior probability of them. A direct strategy is to use the EM algorithm, where is treated as a hidden variable. In the E-step, the log-posterior mean is computed, and in the M-step, the log-posterior is maximized. The iterative process of these two steps is summarized as follows.

E Step: Given the posterior distribution of and the observed matrix , we compute the mean of the log-posterior of the hyperparameters with respect to the hidden variable in -th iteration. Let and we define function as:

| (54) |

where represents a constant that is independent of . Next, we calculate and as follow.

| (55) |

| (56) |

where represents the mean of the absolute value of , that is

| (57) |

M Step: We update the hyperparameters and by maximizing function:

| (58a) | ||||

| (58b) | ||||

First, we consider . Unlike conventional SBL, in PCSBL, the hyperparameters are interdependent, meaning that the element-wise estimation of parameters cannot be performed independently. Directly solving the result of (58a) is challenging. To address this, we refer to the derivation process in [28] and consider an alternative suboptimal solution that achieves good estimation accuracy while simplifying the computation process. Assuming is the optimal solution to (58a), the first-order derivative of R funcation with respect to equals zero at . That is, for any , , the following condition holds:

| (59) |

where

| (60) |

| (61) |

In our model, the parameters and hold true for any . Building on this, based on equation (61), satisfies the following inequality constraints:

| (62) | |||

Substituting the above results into equation (59), we obtain:

| (63) |

Then is held. Therefore, a simple suboptimal solution for equation (58a) can be given by:

| (64) |

Next, we focus on noise variance . Suppose is the optimal solution of (58b), it satisfies:

| (65) |

It is easy to obtain the expression of as:

| (66) |

Thus completes the update process for . Equations (64) and (66) serve as the output of the EM algorithm, reflected in lines 14 and 15 of Algorithm 2. With this, we have completed the entire derivation process of the GAMP-PCSBL-La algorithm. In Section VI, we validate that the proposed GAMP-PCSBL-La algorithm can accurately estimate block sparse matrix with DCT sparse properties. This algorithm is employed for AUD and CE.

V Computational Complexity Analysis

The scheme proposed in this paper consists of two main stages: rough AUD and joint accurate AUD and CE. For rough AUD, an additional SFFT for the superimposed preamble is introduced, along with the GAMP-PCSBL-La algorithm for 2-D block sparse matrix recovery. The computational complexity for rough AUD is given by , where the first two terms correspond to the SFFT, and the last term corresponds to the GAMP-PCSBL-La algorithm. Similarly, for the joint accurate AUD and CE, the GAMP-PCSBL-La algorithm for 2-D block sparse matrix recovery, applied to the embedded preamble, is used, with a computational complexity of . Additionally, the receiver needs to perform SIC for the superimposed preamble, with a computational complexity of . In summary, the overall computational complexity of the proposed scheme is . In existing schemes, the superimposed scheme has lower complexity but poorer access performance. Furthermore, as , this results in the embedded scheme potentially having higher complexity than the hybird scheme, while occupying more DD resources.

VI Simulations

To validate the effectiveness and superiority of the proposed scheme, numerical simulations are conducted. The specific simulation parameters are detailed in Table I. We consider the 3GPP vehicular models, namely extended vehicular A (EVA) with number of paths is 9 and [31]. The delay and Doppler parameters are randomly generated within the range of 0 to their respective maximum values. and are uniformly distributed within the ranges and , respectively.

| Parameters | Definition | Value |

|---|---|---|

| Doppler dimension for a block | 128 | |

| Delay dimension for a block | 512 | |

| Number of a UE’s paths | 9 | |

| Maximum velocity (km/h) | 300 | |

| Maximum path delay (s) | 2.5 | |

| Carrier frequency (GHz) | 4 | |

| Subcarrier interval (kHz) | 15 | |

| Doppler dimension for preamble1 | 64 | |

| Delay dimension for preamble1 | 4 | |

| Doppler dimension for preamble2 | 20 | |

| Delay dimension for preamble2 | 20 | |

| Large scale fading coefficient (dB) | ||

| Background noise (dBm/Hz) | -174 | |

| Transmission power (dBm) | 10 |

To evaluate the performance of the massive random access scheme, we use the detection error rate (DER) and the normalized mean squared error (NMSE) as performance metrics for AUD and CE, respectively. They are defined as follows:

| (67a) | |||

| (67b) | |||

where represents a set whose elements are in but not in . denotes the cardinality of set . A smaller DER or NMSE indicates more accurate detection and estimation results, corresponding to better AUD and CE performance.

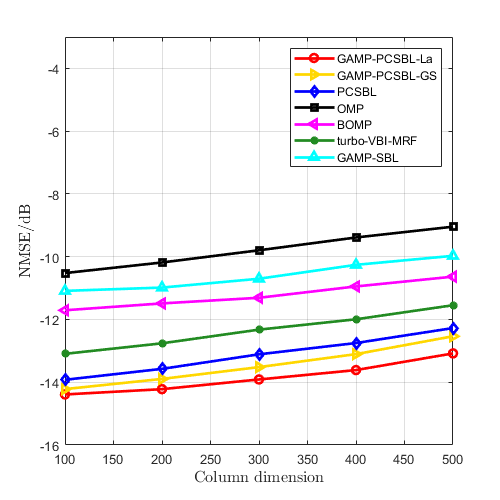

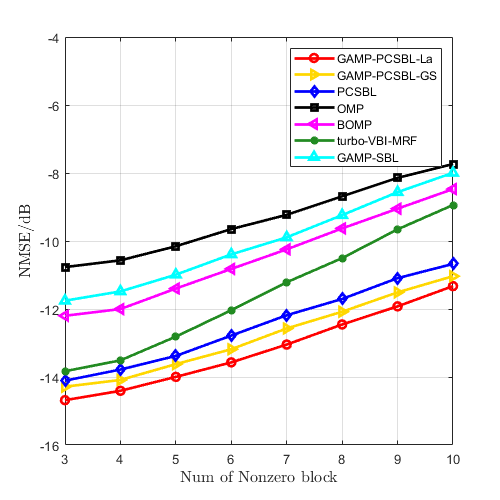

Initially, the performance of the proposed GAMP-PCSBL-La algorithm is compared with other existing algorithms for 2-D block sparse matrix recovery. we set the dimensions of the 2-D block sparse matrix to , the observation matrix to , and the sensing matrix to . A block sparse matrix was generated by randomly creating non-zero values and applying a DCT. The elements of the sensing matrix and noise matrix followed a Gaussian distribution. Compared algorithms including GAMP-PCSBL with Gaussian prior (GAMP-PCSBL-Gs) [32], PCSBL [27], orthogonal matching pursuit (OMP) [33], block OMP (BOMP) [34], turbo variational Bayesian inference with Markov random field (turbo-VBI-MRF) [35], and GAMP-SBL [36].

In Fig. 6(a), with the number of non-zero blocks fixed at 5, we compared the performance of various algorithms in recovering block sparse matrix under different signal-to-noise ratios (SNRs). The simulation curves show that as the SNR increases, the NMSE performance of all algorithms improves. In Fig. 6(b), with the SNR fixed at 12.5 dB, we analyzed the impact of varying the column dimensions of the block sparse matrix on the performance of each algorithm. It is evident that as the dimensions of the sparse matrix increase, the estimation accuracy of all algorithms gradually declines. Additionally, in Fig. 6(c), with the SNR fixed at 12.5 dB and the sparse matrix dimensions set to , we compared the performance trends of each algorithm under different numbers of non-zero blocks. This figure implies that as the number of non-zero blocks increases, the estimated accuracy decreases across all algorithms. The simulation results in Fig. 6(b) and 6(c) are consistent with the relevant conclusions of compressed sensing theory. These simulation curves also demonstrate that algorithms utilizing PCSBL outperform other algorithms in block sparse matrix recovery. Moreover, the proposed GAMP-PCSBL-La algorithm outperforms the other algorithms, showcasing its unique performance advantages in recovering block sparse matrices formed through DCT.

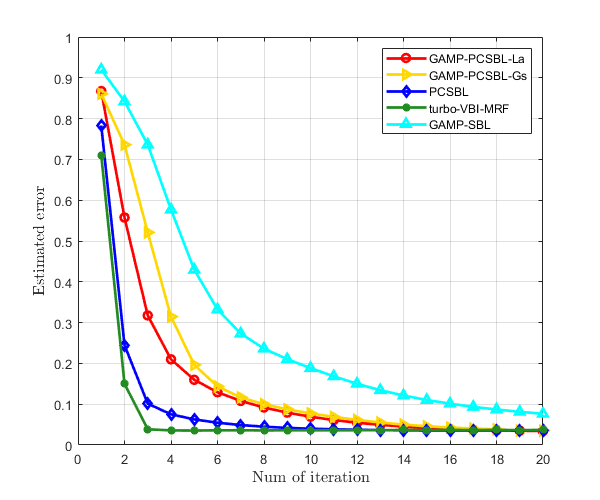

We compare the convergence trends of several iterative algorithms in Fig. 7, with the simulation settings being consistent with those in Fig. 6. The ‘estimated error’ in the figure is defined as the non-logarithmic form of NMSE, i.e., . The figure shows that the PCSBL and turbo-VBI-MRF algorithms, which are based on direct matrix inversion, converge faster than GAMP-based algorithms, reaching convergence in approximately 5 iterations. In contrast, the GAMP-based algorithms converge after about 20 iterations. This simulation result demonstrates that the proposed GAMP-PCSBL-La algorithm exhibits good convergence and reliability.

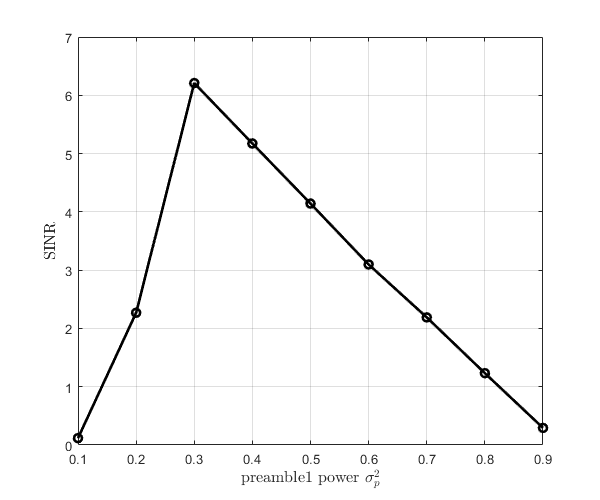

In the hybrid preamble scheme proposed in this paper, the power allocation between the two superimposed signals, and , significantly impacts the performance of the receiver. To assess this impact, we define as the power allocation ratio of , and let vary from 0.1 to 0.9 in steps of 0.1. After SIC, the received signal is expressed as , where represents the received signal of after passing through the channel . and denote interference and additive Gaussian noise, respectively. The receiver performance is evaluated based on the signal-to-interference-plus-noise ratio (SINR) under different values of :

| (68) |

The simulation results, as shown by the curves in Fig.8, indicate that as increases, the receiver performance first improves and then deteriorates, with optimal performance occurring around . At this point, the magnitude of non-zero entries of to be ten times that of . The trend of this curve aligns with the conclusion in [24], which indicates that there is an optimal power allocation ratio for the superimposed pilot signals. In the subsequent simulations, we will fix the .

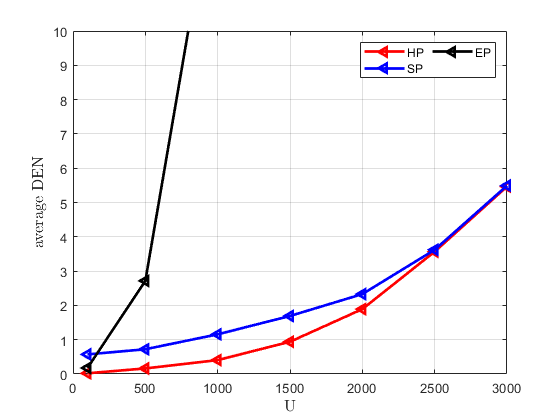

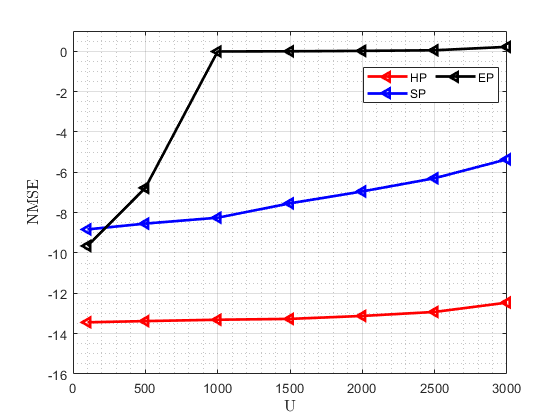

In order to demonstrate the advantages of the proposed scheme in massive random access scenarios, we compare its performance under different value of . Specifically, we fix the AP antenna array dimension at and set the number of active users to 30, while varying from 100 to 3000. Especially, we use ‘HP’, ‘SP’ and ‘EP’ to denote hybrid preamble, superimposed preamble and embedded preamble schemes, respectively. The average detection error number (DEN) instead of DER is used to characterize the AUD performance for fairness. Under the GAMP-PCSBL-La algorithm, the performance trends of the three schemes are illustrated in Fig. 9. It can be observed that when is relatively small, HP, SP, and EP all achieve satisfactory detection and estimation performance. However, as increases, the performance of EP deteriorates significantly, as the growth rate of the sensing matrix dimension in EP is much higher than in the other two schemes. Initially, HP outperforms SP in terms of active user detection, but as increases, the detection performance of both schemes begins to converge. This is because, in high- scenarios, the detection performance of HP is primarily constrained by the rough AUD stage. Nevertheless, HP consistently outperforms SP in channel estimation by at least 5dB, and this performance gap increases with . Overall, HP exhibits the best performance in massive UE scenarios.

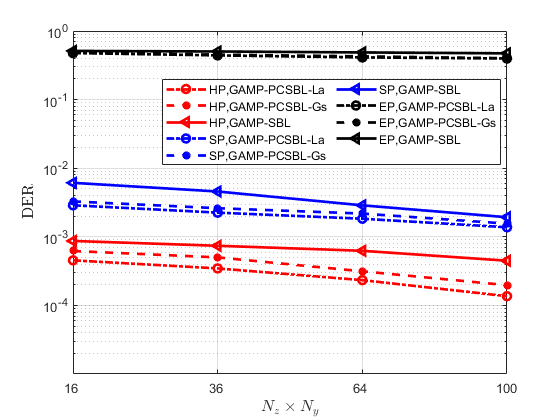

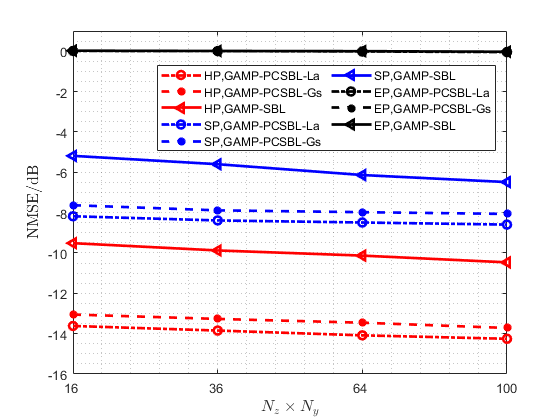

In Fig. 10, with the number of active UEs fixed at 30, we simulated the impact of antenna array dimensions on the performance of massive random access schemes. Additionally, to avoid the complex computation of large matrix inversions, we limited our comparison to low-complexity GAMP-based algorithms to evaluate their performance in massive random access, thereby verifying the superiority of the proposed scheme. The results indicate that as the number of antennas increases, both the hybrid preamble scheme and the superimposed preamble scheme exhibit improved AUD and CE performance. Due to the absence of a rough activity detection step to reduce the dimensionality of the matrix to be estimated, the embedded preamble scheme alone, with its excessively large block-sparse channel matrix, fails to achieve effective AUD and CE results. It is evident that in massive random access, the proposed hybrid preamble scheme significantly outperforms the schemes that utilize either the superimposed or embedded preamble alone. Additionally, the simulation curves demonstrate that, compared to the GAMP-PCSBL-Gs and GAMP-SBL algorithms, the proposed GAMP-PCSBL-La algorithm more effectively captures the block-sparsity caused by fractional channel parameters, resulting in superior AUD and CE performance.

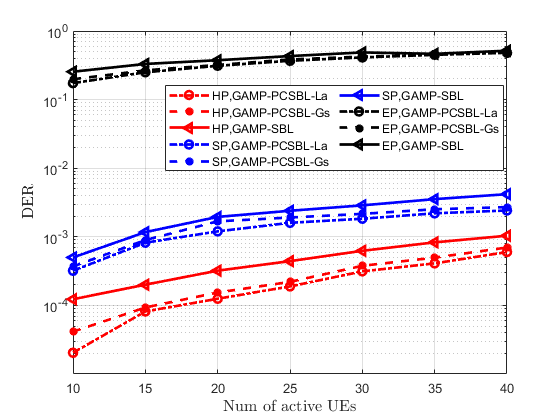

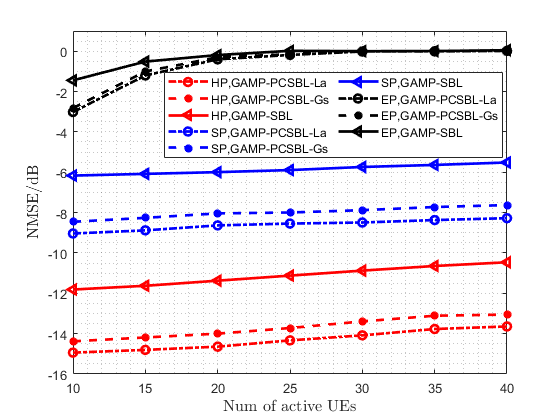

Similar conclusions can be drawn from the simulation curves in Fig. 11. With the antenna array dimension fixed at , we vary the number of active UEs from 10 to 40. The simulation results show that as the number of active UEs increases, the performance of all the massive random access schemes declines. This decline is attributed to the fact that more active UEs correspond to more non-zero elements, making the matrix less sparse. The proposed hybrid preamble scheme achieves significantly better AUD and CE performance compared to the other two preamble schemes when addressing the demands of massive random access. The larger dimension of the channel matrix to be estimated causes the embedded preamble scheme to fail when used alone. Moreover, the simulation curves in Fig. 11 demonstrate that the proposed GAMP-PCSBL-La algorithm outperforms other iterative algorithms in block-sparse matrix recovery.

VII Conclusion

This paper proposes a hybrid preamble scheme for massive machine-type random access in high-mobility scenarios within cell-free massive MIMO systems using OTFS modulation. This scheme employs a superimposed preamble for rough AUD, then performs accurate AUD and CE based on the rough detected UE set and embedded preamble. By leveraging the advantages of both preamble schemes, the proposed hybrid preamble scheme achieves more precise detection and estimation with reduced preamble overhead. Additionally, a GAMP-PCSBL-La algorithm is introduced to estimate the channel matrix, effectively capturing the block-sparse characteristics of the channel caused by fractional channel parameters, while maintaining low computational complexity. Simulation results demonstrate that the proposed hybrid preamble scheme better meets the requirements for massive random access in OTFS-modulating cell-free massive MIMO systems, and that the GAMP-PCSBL-La algorithm is particularly well-suited for this scheme.

Appendix A

By substituting equations (1) and (8a), (8b) into equation (II-B), we obtain:

| (69) |

Furthermore, substituting equation (9) into the above equation then we get:

| (70) |

We define , thus, the above equation can be expressed as:

| (71) |

Analyzing the equation (71), and using the properties of the Dirac delta function, can be written as a segment function. When , we have:

| (72) |

Here, the approximate equality retains only the integer points near the extremum to approximate , where . Similarly, when , we have

| (73) |

Combining the above derivations, we obtain equation (11).

Appendix B

In the case where is very small, the received signal in the time-frequency domain can be expressed as:

| (74) |

In our system, assuming that the delay parameter is much smaller than the duration of one symbol, we can roughly establish the approximation in the above equation. Similar to the derivation in Appendix A, we can substitute equations (1), (8a), (8b), and (9) to obtain:

Then we can derive equation (III-A) based on above results.

Appendix C

For the case where is small, the derivation of equation (27) can be found in Appendix B. For the case where is large, according to equation (II-B), we have:

Assuming the time-frequency domain symbols follow a zero-mean Gaussian distribution, according to the central limit theorem, the ratio of variances between the first and second terms on the right-hand side of the equation is , where is the expected value of the delay quantization value . Typically, delays are assumed to be uniformly randomly distributed, so and It can be considered that the first term on the right-hand side of equation dominates the numerical value. By placing the second term of equation into the noise, we obtain the equation (27).

Appendix D

According to equations (IV-B) and (50), the posterior mean of can be expressed as:

| (75) |

where

| (76) |

Then we have

| (77) |

From equations (50d) to (50g), we can get that

| (78) |

The last two terms of equation (D) can be eliminated, resulting in equation (51). We define

| (79) |

where

| (80) |

First we have

| (81) |

using the fact that

| (82) |

and to get

| (83) |

In the right-hand side of equation (83), we set and substitute the definitions of and into the left-hand side, yielding:

| (84) |

Then we get

| (85) |

With the definition of , it can be obtained that

| (86) |

| (87) |

Combining equations (50f) and (50g), and substituting (86) and (87) into (79), finally using the variance definition , we obtain the result of equation (52).

References

- [1] M. Matthaiou, O. Yurduseven, H. Q. Ngo, D. Morales-Jimenez, S. L. Cotton, and V. F. Fusco, “The road to 6G: Ten physical layer challenges for communications engineers,” IEEE Commun. Mag., vol. 59, pp. 64–69, Jan. 2021.

- [2] Y. Wu, X. Gao, S. Zhou, W. Yang, Y. Polyanskiy, and G. Caire, “Massive access for future wireless communication systems,” IEEE Wirel. Commun., vol. 27, pp. 148–156, Aug. 2020.

- [3] B. Ai, A. F. Molisch, M. Rupp, and Z.-D. Zhong, “5G key technologies for smart railways,” Proc. IEEE, vol. 108, pp. 856–893, June 2020.

- [4] M. B. Shahab, R. Abbas, M. Shirvanimoghaddam, and S. J. Johnson, “Grant-free non-orthogonal multiple access for IoT: A survey,” IEEE Commun. Surv. Tutor., vol. 22, pp. 1805–1838, May 2020.

- [5] H. Chen, R. Abbas, P. Cheng, M. Shirvanimoghaddam, W. Hardjawana, W. Bao, Y. Li, and B. Vucetic, “Ultra-reliable low latency cellular networks: Use cases, challenges and approaches,” IEEE Commun. Mag., vol. 56, pp. 119–125, Dec. 2018.

- [6] O. Kodheli, E. Lagunas, N. Maturo, S. K. Sharma, B. Shankar, J. F. M. Montoya, J. C. M. Duncan, D. Spano, S. Chatzinotas, S. Kisseleff, et al., “Satellite communications in the new space era: A survey and future challenges,” IEEE Commun. Surv. Tutor., vol. 23, pp. 70–109, Oct. 2020.

- [7] Y. Liu, S. Zhang, X. Mu, Z. Ding, R. Schober, N. Al-Dhahir, E. Hossain, and X. Shen, “Evolution of NOMA toward next generation multiple access (NGMA) for 6G,” IEEE J. Sel. Areas Commun., vol. 40, pp. 1037–1071, Apr. 2022.

- [8] W. C. Jakes and D. C. Cox, Microwave mobile communications. New York, NY, USA: Wiley-IEEE press, 1994.

- [9] R. Hadani, S. Rakib, M. Tsatsanis, A. Monk, A. J. Goldsmith, A. F. Molisch, and R. Calderbank, “Orthogonal time frequency space modulation,” in Proc. IEEE Wireless Commun. Netw. Conf (WCNC), pp. 1–6, IEEE, Mar. 2017.

- [10] A. Farhang, A. RezazadehReyhani, L. E. Doyle, and B. Farhang-Boroujeny, “Low complexity modem structure for OFDM-based orthogonal time frequency space modulation,” IEEE Wirel. Commun. Lett., vol. 7, pp. 344–347, Nov. 2017.

- [11] Z. Wei, W. Yuan, S. Li, J. Yuan, G. Bharatula, R. Hadani, and L. Hanzo, “Orthogonal time-frequency space modulation: A promising next-generation waveform,” IEEE Wirel. Commun., vol. 28, pp. 136–144, Aug. 2021.

- [12] J. Wang, C. Jiang, and L. Kuang, “High-mobility satellite-UAV communications: Challenges, solutions, and future research trends,” IEEE Commun. Mag., vol. 60, pp. 38–43, May 2022.

- [13] J. Zhang, E. Björnson, M. Matthaiou, D. W. K. Ng, H. Yang, and D. J. Love, “Prospective multiple antenna technologies for beyond 5G,” IEEE J. Sel. Areas Commun., vol. 38, pp. 1637–1660, Aug. 2020.

- [14] H. Q. Ngo, A. Ashikhmin, H. Yang, E. G. Larsson, and T. L. Marzetta, “Cell-free massive MIMO versus small cells,” IEEE Trans. Wirel. Commun., vol. 16, pp. 1834–1850, Mar. 2017.

- [15] E. Björnson and L. Sanguinetti, “Scalable cell-free massive MIMO systems,” IEEE Trans. Commun., vol. 68, pp. 4247–4261, July 2020.

- [16] D. Wang, X. You, Y. Huang, W. Xu, J. Li, P. Zhu, Y. Jiang, Y. Cao, X. Xia, Z. Zhang, et al., “Full-spectrum cell-free RAN for 6G systems: system design and experimental results,” Sci. China-Inf. Sci., vol. 66, p. 130305, Feb. 2023.

- [17] M. Mohammadi, H. Q. Ngo, and M. Matthaiou, “Cell-free massive MIMO meets OTFS modulation,” IEEE Trans. Commun., vol. 70, pp. 7728–7747, Nov. 2022.

- [18] Z. Gao, X. Zhou, J. Zhao, J. Li, C. Zhu, C. Hu, P. Xiao, S. Chatzinotas, D. W. K. Ng, and B. Ottersten, “Grant-free NOMA-OTFS paradigm: Enabling efficient ubiquitous access for LEO satellite Internet-of-Things,” IEEE Netw., vol. 37, pp. 18–26, Jan. 2023.

- [19] B. Shen, Y. Wu, J. An, C. Xing, L. Zhao, and W. Zhang, “Random access with massive MIMO-OTFS in LEO satellite communications,” IEEE J. Sel. Areas Commun., vol. 40, pp. 2865–2881, Oct. 2022.

- [20] X. Zhou, K. Ying, Z. Gao, Y. Wu, Z. Xiao, S. Chatzinotas, J. Yuan, and B. Ottersten, “Active terminal identification, channel estimation, and signal detection for grant-free NOMA-OTFS in LEO satellite Internet-of-Things,” IEEE Trans. Wirel. Commun., vol. 22, pp. 2847–2866, Apr. 2022.

- [21] Y. Ma, G. Ma, N. Wang, Z. Zhong, and B. Ai, “OTFS-TSMA for massive Internet of Things in high-speed railway,” IEEE Trans. Wirel. Commun., vol. 21, pp. 519–531, Jan. 2021.

- [22] A. K. Sinha, S. K. Mohammed, P. Raviteja, Y. Hong, and E. Viterbo, “OTFS based random access preamble transmission for high mobility scenarios,” IEEE Trans. Veh. Technol., vol. 69, pp. 15078–15094, Dec. 2020.

- [23] P. Raviteja, K. T. Phan, and Y. Hong, “Embedded pilot-aided channel estimation for OTFS in delay–Doppler channels,” IEEE Trans. Veh. Technol., vol. 68, pp. 4906–4917, May 2019.

- [24] H. B. Mishra, P. Singh, A. K. Prasad, and R. Budhiraja, “OTFS channel estimation and data detection designs with superimposed pilots,” IEEE Trans. Wirel. Commun., vol. 21, pp. 2258–2274, Apr. 2021.

- [25] E. J. Candès, J. Romberg, and T. Tao, “Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information,” IEEE Trans. Inf. Theory, vol. 52, pp. 489–509, Feb. 2006.

- [26] S. Rangan, “Generalized approximate message passing for estimation with random linear mixing,” in Proc. IEEE Int. Symp. Inf. Theory, pp. 2168–2172, IEEE, July 2011.

- [27] J. Fang, Y. Shen, H. Li, and P. Wang, “Pattern-coupled sparse Bayesian learning for recovery of block-sparse signals,” IEEE Trans. Signal Process., vol. 63, pp. 360–372, Jan. 2014.

- [28] F. Bellili, F. Sohrabi, and W. Yu, “Generalized approximate message passing for massive MIMO mmWave channel estimation with Laplacian prior,” IEEE Trans. Commun., vol. 67, pp. 3205–3219, May 2019.

- [29] Y. Hu, D. Wang, X. Xia, J. Li, P. Zhu, and X. You, “A novel massive random access in cell-free massive mimo systems for high-speed mobility with otfs modulation,” arXiv preprint arXiv:2409.01111, 2025. https://arxiv.org/abs/2409.01111.

- [30] S. D. Babacan, R. Molina, and A. K. Katsaggelos, “Bayesian compressive sensing using Laplace priors,” IEEE Trans. Image Process., vol. 19, pp. 53–63, Jan. 2009.

- [31] M. Series, “Guidelines for evaluation of radio interface technologies for IMT-Advanced,” Report ITU, vol. 638, no. 31, 2009.

- [32] J. Fang, L. Zhang, and H. Li, “Two-dimensional pattern-coupled sparse Bayesian learning via generalized approximate message passing,” IEEE Trans. Image Process., vol. 25, pp. 2920–2930, June 2016.

- [33] J. A. Tropp and A. C. Gilbert, “Signal recovery from random measurements via orthogonal matching pursuit,” IEEE Trans. Inf. Theory, vol. 53, pp. 4655–4666, Dec. 2007.

- [34] L. Lu, W. Xu, Y. Wang, and Z. Tian, “Compressive spectrum sensing using sampling-controlled block orthogonal matching pursuit,” IEEE Trans. Commun., vol. 71, pp. 1096–1111, Feb. 2022.

- [35] M. Zhang, X. Yuan, and Z.-Q. He, “Variance state propagation for structured sparse bayesian learning,” IEEE Trans. Signal Process., vol. 68, pp. 2386–2400, Mar. 2020.

- [36] X. Zhang, P. Fan, L. Hao, and X. Quan, “Generalized approximate message passing based Bayesian learning detectors for uplink grant-free NOMA,” IEEE Trans. Veh. Technol., vol. 72, pp. 15057–15061, Nov. 2023.