A Pairwise Dataset for GUI Conversion and Retrieval between Android Phones and Tablets

Abstract.

With the popularity of smartphones and tablets, users have become accustomed to using different devices for different tasks, such as using their phones to play games and tablets to watch movies. To conquer the market, one app is often available on both smartphones and tablets. However, although an app has similar graphical user interfaces (GUIs) and functionalities on phones and tablets, developers typically start from scratch when building a tablet-compatible version of their app, which drives up development costs and wastes existing design resources. Researchers are attempting to employ deep learning in automated GUI development to enhance developers’ productivity, and deep learning models rely heavily on high-quality datasets. Several publicly accessible GUI page datasets exist for phones, but none provides pairwise GUIs between phones and tablets. This poses a significant barrier to applying deep learning to automated GUI development. In this paper, we collect and release the Papt dataset, a pairwise dataset for GUI conversion and retrieval between Android phones and tablets.

1. Introduction

Mobile apps are ubiquitous in our daily life, supporting tasks such as reading, chatting, and banking. Smartphones and tablets are the two types of portable devices with the most available apps (tabletShare, 2023). To conquer the market, one app is often available on both smartphones and tablets (Majeed-Ariss et al., 2015). Because they offer comparable functionalities, the smartphone and tablet versions of the same app have highly similar Graphical User Interfaces (GUIs). Popular apps, for example, YouTube (youtube, 2023) and Spotify (spotify, 2023), often share a similar GUI design between their phone and tablet versions. A tool that automatically recommends a tablet GUI design based on an existing phone GUI could therefore significantly reduce developers’ engineering effort and accelerate the development process. On the user side, a consistent design across devices reduces the need to learn new navigation and interaction patterns (Oulasvirta et al., 2020), and comparable phone-tablet designs provide data for studying user preferences for such consistency. Therefore, automated GUI development tasks, such as the cross-platform conversion of GUI designs and GUI recommendation, are gradually gaining attention from industry and academia (Zhao et al., 2022; Li et al., 2022; Chen et al., 2020a). However, automated GUI development has reached a research bottleneck: it still lacks breakthroughs and widely recognized tools or methods. Currently, developers who need a tablet-compatible version of their app usually start from scratch, which needlessly increases costs and wastes existing design resources.

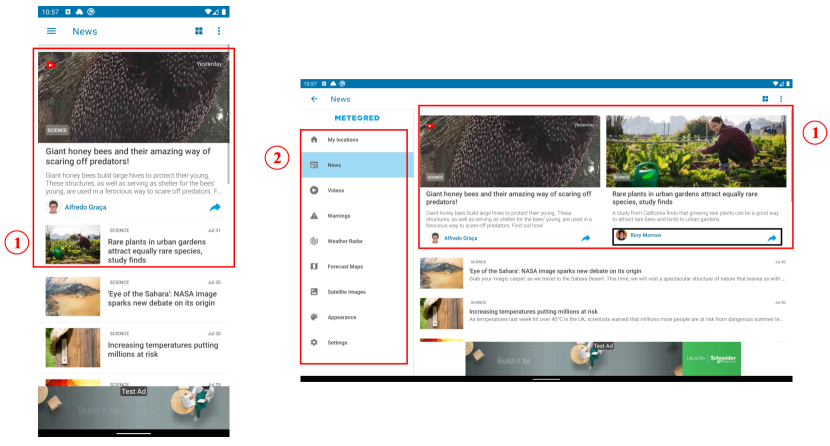

According to our observations, the growth of automated GUI development is hindered by two factors. First, as deep learning approaches, particularly generative models (Theis et al., 2015), become more widespread in automated GUI development, researchers increasingly need a pairwise, high-quality GUI dataset for training models, summarizing design rules, and so on. Current datasets, for example, Rico (Deka et al., 2017), ReDraw (Moran et al., 2019), and Clay (Li et al., 2022), only include single GUI pages with UI metadata and screenshots, and contain no corresponding GUI page pairs. They are therefore suitable only for tasks such as GUI component identification, GUI information summarization, and GUI completion. The lack of valid GUI pairs in current datasets has severely hindered the growth of automated GUI development. Second, collecting GUI pairs between phones and tablets is labor-intensive. Individual GUI pages can be automatically collected and labeled by existing GUI testing and exploration tools (Hu and Neamtiu, 2011; Memon, 2002), which speeds up collection. However, because phones and tablets differ in screen size and GUI design, it is challenging to automatically align the content of the two GUI pages. Figure 1 shows an example of a phone-tablet GUI pair from the app ’BBC News’ (bbcNews, 2023). To accommodate tablet devices, UI component group 1 in the phone GUI is converted into the parts marked 1 in the tablet GUI. These components change not only their positions and sizes, but also their layouts and types. To keep the layout consistent with the GUI components on the left of the tablet GUI, group 1 in the tablet design adds new content that is not available in the phone GUI (black box in the tablet GUI). Another UI component group, marked 2 in the tablet GUI, is absent from the phone GUI altogether. Because of the different screen sizes and design styles, one tablet GUI page may correspond to the contents of multiple phone GUI pages: the UI contents in group 2 of the tablet GUI correspond to other phone GUIs.

In response to the above challenges, we provide Papt, a PAirwise dataset for GUI conversion and retrieval between Android Phones and Tablets. It is the first pairwise GUI dataset of phones and tablets. The dataset contains 10,035 corresponding phone-tablet GUI pairs, collected from 5,593 phone-tablet app pairs. In this paper, we first describe the data source, the dataset collection process, the collection approaches, and the collection tools, and we open-source our data collection tool for related work. We then describe the format of the dataset and how to load it. This resource paper bridges the gap between smartphone GUIs and tablet GUIs. Our goal is to provide an effective benchmark for automated GUI development and to encourage more researchers to explore this field.

In summary, the contributions of this paper are the following:

- We contribute the first pairwise GUI dataset between Android phones and tablets (https://github.com/huhanGitHub/papt).
- We provide the detailed procedure of data collection and open source our data collection tool.

2. Related Work

2.1. Android GUI dataset

The research community has collected datasets to facilitate diverse deep learning-based applications in mobile app design. Rico (Deka et al., 2017) is the largest publicly available Android GUI dataset, containing 72,219 screenshots from 9,772 apps. It was built through a combination of crowdsourcing and automation and has been widely used as the primary data source for GUI modeling research. However, several weaknesses, such as noise and erroneous labels, have been identified in Rico (Deka et al., 2021; Lee et al., 2020). To tackle these issues, a series of Android GUI datasets has been curated using filtering and repair mechanisms. Enrico (Leiva et al., 2020), the first enhanced dataset drawn from Rico, targets mobile layout design categorization and consists of 1,460 high-quality screenshots produced by human revision, covering 20 GUI design topics. The VINS dataset (Bunian et al., 2021), developed specifically for detecting GUI elements, comprises 2,740 Android screenshots manually collected from various sources, including Rico and Google Play. Since cleaning both the Enrico and VINS datasets involves human effort, applying such approaches to improve existing Android GUI datasets at scale is expensive and time-consuming. To this end, CLAY (Li et al., 2022) employs deep learning models to automatically denoise Android screen layouts and builds a large-scale GUI dataset of 59,555 screenshots based on Rico. Apart from these Rico-based GUI datasets, several works (Chen et al., 2018, 2019, 2020a, 2020b; Wang et al., 2021; Hu et al., 2019, 2023a, 2023b, 2023d, 2023c; Chen et al., 2021; Huang et al., 2021) also build their own datasets for GUI-related tasks such as skeleton generation, search, and component prediction.

Despite these improvements, existing Android GUI datasets lack updates, so some of their GUI styles are outdated. More importantly, none of them provides pairwise GUI pages between different mobile devices. To fill these gaps, we introduce the first pairwise dataset, consisting of 10,035 phone-tablet GUI page pairs collected from 5,593 phone-tablet app pairs (Hu et al., 2023d), which can be used for GUI conversion, retrieval, and recommendation between Android phones and tablets.

2.2. Layout Generation

As one of the main goals of our dataset is to facilitate GUI conversion between phone and tablet apps, we apply layout generation techniques to our dataset. Here we present a succinct review of existing layout generation techniques. LayoutGAN (Li et al., 2019) is the first approach that uses a generative model (i.e., a Generative Adversarial Network) to generate layouts. In particular, it adopts self-attention layers to generate realistic layouts and proposes a novel differentiable wireframe rendering layer to enable Convolutional Neural Network (CNN)-based discrimination. LayoutVAE (Jyothi et al., 2019) is an autoregressive generative model that uses Long Short-Term Memory (Hochreiter and Schmidhuber, 1997) to consolidate information from multiple UI elements and leverages Variational Autoencoders (VAEs) (Kingma and Welling, 2013) to generate layouts. More recently, VTN (Arroyo et al., 2021) also exploits the VAE architecture, but replaces both the encoder and the decoder with Transformers (Vaswani et al., 2017). Equipped with self-attention layers, VTN can learn appropriate layout arrangements without annotations. At the same time, LayoutTransformer (Gupta et al., 2021), a purely Transformer-based framework, was proposed for layout generation. It captures co-occurrences and implicit relationships among elements in layouts and uses these features to produce layouts with bounding boxes as units. Based on our experimental results, current layout generation models have limited capacity for GUI conversion between phone and tablet layouts, which suggests a promising research direction in automated GUI development.

2.3. GUI Design Search

Another goal of our dataset is to support GUI retrieval, that is, searching for and recommending a comparable tablet GUI design given a phone GUI design. We thus employ GUI design search techniques for our dataset. Many research efforts have been made in GUI design search in recent years. Rico (Deka et al., 2017) includes a neural training approach that aims to facilitate query-by-example search: it provides a layout-encoding vector representation for each UI and offers a variety of visual representations for search engines. GUIFetch (Behrang et al., 2018) searches GUI designs by leveraging a code-search technique, retrieving the most similar GUI code based on sketches provided by users. WAE (Chen et al., 2020a) is a wireframe-based search model that uses an image autoencoder architecture to address the challenge of labeling large-scale GUI designs. According to the reported experimental results, deep learning-based approaches achieve satisfactory performance. We hope the research community will dedicate more effort to GUI retrieval between phone and tablet apps, as this area is still under-explored.

3. Dataset

In this section, we introduce the data source of this dataset in subsection 3.1, the collection approach in subsection 3.2, the collection tool in subsection 3.3, the format of a GUI pair in subsection 3.4, the statistics of the dataset in subsection 3.5, and how to access the dataset in subsection 3.6. We also illustrate the advantages of our dataset compared to other current datasets in subsection 3.7.

Table 1. Top 15 categories of the 5,593 phone-tablet app pairs.

| Category | #Count | Percentage (%) |
|---|---|---|
| Entertainment | 496 | 8.87 |
| Social | 394 | 7.04 |
| Communication | 326 | 5.83 |
| Lifestyle | 318 | 5.69 |
| Books & Reference | 286 | 5.11 |
| Education | 279 | 4.98 |
| News & Magazines | 271 | 4.85 |
| Shopping | 270 | 4.83 |
| Sports | 267 | 4.78 |
| Music & Audio | 266 | 4.76 |
| Weather | 265 | 4.73 |
| Finance | 262 | 4.68 |
| Business | 261 | 4.67 |
| Travel & Local | 255 | 4.57 |
| Medical | 254 | 4.54 |

3.1. Data Source

We first crawl 6,456 tablet apps from Google Play. Then we match them with their corresponding phone apps by app name and developer. Finally, we collect 5,593 valid phone-tablet app pairs from 22 app categories. Table 1 shows the top 15 categories of the 5,593 app pairs. The column Category gives the app category, and the columns #Count and Percentage (%) denote the number of apps in the category and its percentage of all apps, respectively. These 5,593 phone-tablet app pairs are the data source of this dataset. The three most common categories are Entertainment (8.87%), Social (7.04%), and Communication (5.83%). As shown in Table 1, the apps are spread fairly evenly across categories: most of the listed categories account for between 4% and 6% of the total. This balanced distribution supports the dataset’s generalizability and diversity.

3.2. Data Collection Procedure

We collect data in two stages: automatically pairing phone-tablet GUI pages with matching algorithms, and manually validating the collected pairs.

In this section, we first introduce the GUI data format in subsection 3.2.1. Second, we illustrate the two ways we match GUI pairs: by dynamically adjusting the resolution of the device (subsection 3.2.2) and by calculating UI similarities (subsection 3.2.3). We then develop data collection tools based on these methods and use them to collect GUI pairs automatically. After the automatic collection, three volunteers with at least one year of GUI development experience manually check the automatically collected pairs and eliminate invalid ones (subsection 3.2.4).

3.2.1. GUI Data Format

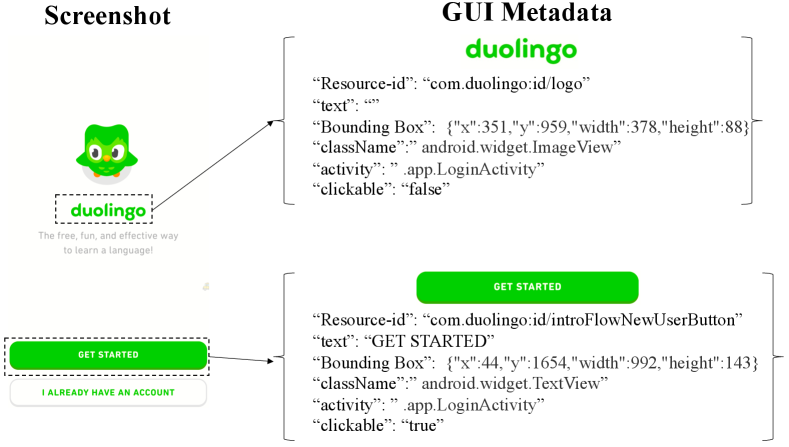

We install and run each phone-tablet app pair on a Pixel 6 phone and a Samsung Galaxy Tab S8 tablet, respectively. We use uiautomator2 (uiautomator2, 2023) to collect screenshots and GUI metadata of the running apps. Figure 2 shows an example of a collected GUI screenshot and the metadata of some UI components inside it, taken from the app ’duolingo’ (duolingo, 2023). The metadata is a document object model (DOM) tree of the current GUI, which includes the hierarchy and properties (e.g., class, bounding box, layout) of UI components, so the GUI hierarchy can be inferred from the DOM tree hierarchy in the metadata.
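The DOM-tree metadata can be flattened with Python’s standard XML parser. The sketch below assumes the dump format that uiautomator2’s `dump_hierarchy()` returns (a `<hierarchy>` root with nested `<node>` elements carrying `class`, `text`, and `bounds="[x1,y1][x2,y2]"` attributes); the helper names and the trimmed sample document are illustrative, not taken from the Papt tools.

```python
import re
import xml.etree.ElementTree as ET
from dataclasses import dataclass

# Matches bounds strings such as "[48,200][1032,290]" (negatives occur off-screen).
BOUNDS_RE = re.compile(r"\[(-?\d+),(-?\d+)\]\[(-?\d+),(-?\d+)\]")


@dataclass
class UINode:
    cls: str       # view class, e.g. android.widget.TextView
    text: str      # visible text (may be empty)
    bounds: tuple  # (left, top, right, bottom) in screen pixels
    depth: int     # nesting depth in the DOM tree (0 = top-level view)


def parse_bounds(raw):
    """Convert '[x1,y1][x2,y2]' into an (x1, y1, x2, y2) tuple of ints."""
    match = BOUNDS_RE.fullmatch(raw)
    if match is None:
        raise ValueError(f"unexpected bounds format: {raw!r}")
    return tuple(int(v) for v in match.groups())


def walk_hierarchy(xml_text):
    """Flatten a hierarchy dump into a depth-first (pre-order) list of UINode."""
    root = ET.fromstring(xml_text)
    nodes = []

    def visit(elem, depth):
        for child in elem.findall("node"):
            nodes.append(UINode(
                cls=child.get("class", ""),
                text=child.get("text", ""),
                bounds=parse_bounds(child.get("bounds", "[0,0][0,0]")),
                depth=depth,
            ))
            visit(child, depth + 1)

    visit(root, 0)
    return nodes


# Trimmed, hypothetical example of a hierarchy dump.
SAMPLE = """<hierarchy rotation="0">
  <node class="android.widget.FrameLayout" text="" bounds="[0,0][1080,2400]">
    <node class="android.widget.TextView" text="Learn Spanish" bounds="[48,200][1032,290]" />
    <node class="android.widget.ImageView" text="" bounds="[390,400][690,700]" />
  </node>
</hierarchy>"""

if __name__ == "__main__":
    for node in walk_hierarchy(SAMPLE):
        print("  " * node.depth + f"{node.cls} {node.bounds} {node.text!r}")
```

On a connected device, `xml_text` could come from `uiautomator2.connect(serial).dump_hierarchy()`; the demo uses the inline sample so that it runs without hardware.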

3.2.2. Match GUI Pairs by Dynamically Adjusting the Device Resolution

Android’s Responsive/Adaptive layouts provide an optimized user experience regardless of screen size, allowing Android apps to support phones, tablets, foldables, ChromeOS devices, portrait and landscape orientations, and resizable configurations (adpLay, 2023). Therefore, some apps define layout files for different devices and load the corresponding layout file depending on the resolution of the device on which they are installed.

These apps ship the same APK file on both phones and tablets, and we handle them first. According to Android’s official guidelines for supporting different screen sizes (supportDifScr, 2023), developers can provide alternative layouts for displays with a minimum width, measured in density-independent pixels (dp or dip), by using the smallest width screen size qualifier. For example, developers can define two layout files for MainActivity: res/layout/main_activity.xml for smartphones and res/layout-sw600dp/main_activity.xml for tablets whose smallest width is at least 600dp. The smallest width qualifier (sw600dp) specifies the smaller of the screen’s two sides, regardless of the device’s current orientation. The presence of such a layout file therefore tells us that the app’s MainActivity provides a layout optimized for tablets. Two smallest width qualifiers, sw600dp and sw720dp, specifically target the 7-inch and 10-inch tablets currently on the market (supportDifScr, 2023).
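The qualifier logic can be illustrated in a few lines. This is a simplified sketch that assumes only plain `layout` and `layout-swNdp` folders exist and ignores Android’s other resource qualifiers; the screen sizes and densities in the demo are hypothetical. It converts pixels to dp with dp = px * 160 / dpi and, like Android’s smallest-width matching, picks the folder with the largest N that does not exceed the device’s smallest width.

```python
import re

SW_DIR_RE = re.compile(r"^layout-sw(\d+)dp$")


def smallest_width_dp(width_px, height_px, density_dpi):
    """Smaller screen side in density-independent pixels (dp = px * 160 / dpi)."""
    return min(width_px, height_px) * 160 / density_dpi


def pick_layout_dir(available_dirs, sw_dp):
    """Choose the layout-swNdp folder with the largest N <= sw_dp,
    falling back to the default 'layout' folder when none qualifies."""
    best_dir, best_n = "layout", -1
    for name in available_dirs:
        match = SW_DIR_RE.match(name)
        if match:
            n = int(match.group(1))
            if best_n < n <= sw_dp:
                best_dir, best_n = name, n
    return best_dir


if __name__ == "__main__":
    dirs = ["layout", "layout-sw600dp", "layout-sw720dp"]
    # Hypothetical screens: (width_px, height_px, density_dpi).
    screens = {"phone": (1080, 2400, 420), "7-inch tablet": (600, 1024, 160),
               "tablet": (1600, 2560, 320)}
    for name, (w, h, dpi) in screens.items():
        sw = smallest_width_dp(w, h, dpi)
        print(f"{name}: sw={sw:.0f}dp -> {pick_layout_dir(dirs, sw)}")
```

With these values the phone (about 411dp) resolves to `layout`, the 600dp screen to `layout-sw600dp`, and the 800dp screen to `layout-sw720dp`.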

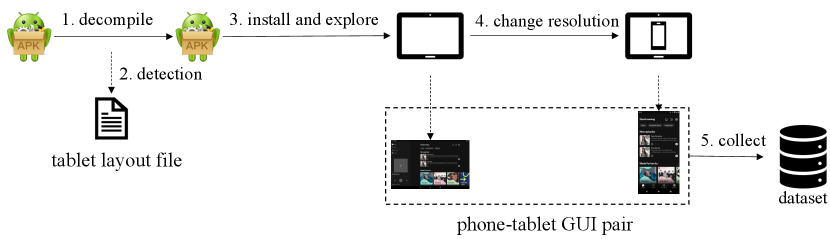

Figure 3 shows the pipeline of matching GUI pairs by dynamically adjusting the device resolution. Based on the above analysis, we first decompile the collected app pairs and then search the source files for layout files for 600dp and 720dp tablets (steps 1 & 2 in Figure 3). Of the 5,593 app pairs, 1,214 contain smallest width layout files for tablet devices and ship the same app on phones and tablets. These 1,214 pairs therefore use Responsive/Adaptive layouts to change the GUI layout dynamically based on the device on which they are installed.
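Steps 1 and 2 amount to looking for smallest-width layout folders in the decompiled resources. The sketch assumes the APK was decoded with a tool such as apktool (for example, `apktool d app.apk -o out_dir`), which writes resources under `out_dir/res`; the paper does not name its decompiler, and the function names are hypothetical.

```python
import re
import tempfile
from pathlib import Path

# Folders such as layout-sw600dp, layout-sw720dp-land or layout-sw600dp-v13.
TABLET_LAYOUT_RE = re.compile(r"^layout-sw(600|720)dp(-.+)?$")


def tablet_layout_dirs(decompiled_root):
    """Names of sw600dp/sw720dp layout folders under <root>/res that hold XML files."""
    res = Path(decompiled_root) / "res"
    if not res.is_dir():
        return []
    return sorted(
        d.name for d in res.iterdir()
        if d.is_dir() and TABLET_LAYOUT_RE.match(d.name) and any(d.glob("*.xml"))
    )


def uses_adaptive_layout(decompiled_root):
    """True if the app ships at least one tablet smallest-width layout."""
    return bool(tablet_layout_dirs(decompiled_root))


if __name__ == "__main__":
    # Build a tiny fake decompiled tree to exercise the check.
    with tempfile.TemporaryDirectory() as tmp:
        for folder in ["layout", "layout-sw600dp", "values-sw600dp"]:
            (Path(tmp) / "res" / folder).mkdir(parents=True)
            (Path(tmp) / "res" / folder / "main_activity.xml").write_text("<LinearLayout/>")
        print(tablet_layout_dirs(tmp), uses_adaptive_layout(tmp))
```

Requiring at least one XML file skips empty qualifier folders; a stricter check could also confirm that the same layout name exists in the default `layout` folder.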

For these app pairs, we use the window manager command of ADB (adb shell wm size) (adb, 2023) to dynamically adjust the device’s resolution. On a tablet device, we sequentially simulate the tablet and phone resolutions, and the GUIs captured before and after the change are automatically paired (steps 4 & 5 in Figure 3).
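A minimal sketch of this resize-and-capture sequence, assuming a device addressed by its serial through `adb -s` and a caller-supplied `capture` callable (hypothetical) that returns the screenshot and metadata of the current screen. The phone size, serial, and settle delay are illustrative values, not the ones used for Papt.

```python
import subprocess
import time


def wm_size_cmd(serial, size=None):
    """adb argv that overrides the display size (width, height) or resets it."""
    arg = "reset" if size is None else f"{size[0]}x{size[1]}"
    return ["adb", "-s", serial, "shell", "wm", "size", arg]


def capture_at_two_resolutions(serial, phone_size, capture,
                               run=subprocess.run, settle_s=2.0):
    """Capture the screen at the tablet's native size, then at a simulated
    phone size, and always restore the native size afterwards."""
    tablet_gui = capture("tablet")
    run(wm_size_cmd(serial, phone_size), check=True)
    try:
        time.sleep(settle_s)  # give the app time to re-inflate its layout
        phone_gui = capture("phone")
    finally:
        run(wm_size_cmd(serial, None), check=True)
    return phone_gui, tablet_gui


if __name__ == "__main__":
    print(wm_size_cmd("SERIAL", (1080, 2400)))
    print(wm_size_cmd("SERIAL"))
```

The `run` parameter allows the ADB calls to be replaced in tests. Whether the app actually switches to its phone layout depends on the resulting smallest width in dp, which also depends on the tablet’s density; `adb shell wm density` can override that as well if needed.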

3.2.3. Match GUI Pairs by Comparing UI Similarities

Apart from the 1,214 app pairs that share the same APK file on tablet and phone, the remaining 4,379 phone-tablet pairs have independent app files. After dynamically launching the phone and tablet apps, we match the corresponding GUI page pairs by comparing the similarity of their GUI pages.

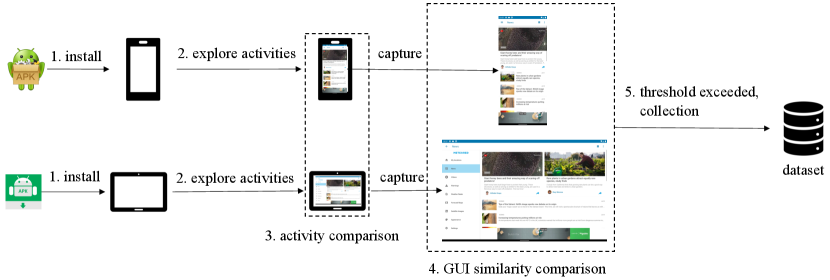

Figure 4 shows the pipeline of matching GUI pairs by comparing UI similarities. Each app has many GUI pages, so exhaustively comparing every page of the phone app with every page of the tablet app would be time-consuming. An Android activity provides the window in which the app draws its UI (activity, 2023). We therefore first find the corresponding activity pairs of an app pair and then compare GUI pages only within corresponding activity pairs. To match activity pairs, we extract the activity name of each GUI and encode it into a numerical semantic vector using a pre-trained BERT (Devlin et al., 2019) model, and we match activities whose vectors are semantically close (Step 3 in Figure 4). For example, GUIs in the activities homeActivity and mainActivity are matched because their semantic vectors are close. In current industrial apps, one activity may contain multiple fragments (fragment, 2022) and GUI pages (Machiry et al., 2013; GUI state, 2023) with different UI components and layouts, so we also compare the attributes of GUI components between phones and tablets to pair GUIs at a finer granularity (Step 4 in Figure 4). In pairing, UI components are identified by their types and properties, and components on the phone and tablet with the same types and properties are considered paired: for example, two TextViews with the same text, two ImageViews with the same image, or two Buttons with the same text. If more than half of the UI components in two GUIs are paired, the two GUIs are considered a phone-tablet GUI pair (Step 5 in Figure 4).
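Steps 3 to 5 can be approximated as follows, under several stated assumptions. `encode` stands in for a BERT sentence encoder (any text-to-vector function works; the demo uses a toy lookup table). Activity names are split into words before encoding. Activities are matched greedily one-to-one above a hypothetical cosine threshold of 0.8. Components are keyed by class name plus text, or by resource ID when there is no text, as a proxy for the image comparison. “More than half” is measured against the smaller of the two GUIs.

```python
import math
import re
from collections import Counter


def activity_words(name):
    """'com.app.HomeActivity' -> 'home': drop package and suffix, split camelCase."""
    short = re.sub(r"Activity$", "", name.rsplit(".", 1)[-1])
    words = re.findall(r"[A-Z]?[a-z0-9]+|[A-Z]+(?![a-z])", short)
    return " ".join(w.lower() for w in words)


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def match_activities(phone_acts, tablet_acts, encode, min_sim=0.8):
    """Greedy one-to-one activity matching by cosine similarity of embeddings."""
    pv = {a: encode(activity_words(a)) for a in phone_acts}
    tv = {a: encode(activity_words(a)) for a in tablet_acts}
    scored = sorted(((cosine(pv[p], tv[t]), p, t) for p in pv for t in tv), reverse=True)
    used_p, used_t, pairs = set(), set(), []
    for sim, p, t in scored:
        if sim >= min_sim and p not in used_p and t not in used_t:
            pairs.append((p, t))
            used_p.add(p)
            used_t.add(t)
    return pairs


def component_key(node):
    """node = (class_name, text, resource_id); key = short class + text or id."""
    cls, text, res_id = node
    return cls.rsplit(".", 1)[-1], text or res_id


def is_gui_pair(phone_nodes, tablet_nodes):
    """Pair components with identical keys (multiset intersection) and accept
    the two GUIs when more than half of the smaller GUI's components pair up."""
    if not phone_nodes or not tablet_nodes:
        return False
    common = Counter(map(component_key, phone_nodes)) & Counter(map(component_key, tablet_nodes))
    paired = sum(common.values())
    return paired > min(len(phone_nodes), len(tablet_nodes)) / 2


if __name__ == "__main__":
    # Toy 2-d "embeddings" standing in for BERT vectors (hypothetical values).
    toy = {"home": [1.0, 0.1], "main": [0.9, 0.2],
           "settings": [0.0, 1.0], "preferences": [0.1, 0.95]}
    print(match_activities(["com.a.homeActivity", "com.a.SettingsActivity"],
                           ["com.a.mainActivity", "com.a.PreferencesActivity"],
                           toy.__getitem__))
    phone = [("android.widget.TextView", "Top stories", ""),
             ("android.widget.ImageButton", "", "menu")]
    tablet = phone + [("android.widget.TextView", "Sport", "")]
    print(is_gui_pair(phone, tablet))
```

Greedy matching keeps the sketch short; an optimal assignment (for example, the Hungarian algorithm) would be a natural alternative when many activities have similar names.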

3.2.4. Manual GUI Pair Verification

Due to the limitations of ADB, current data collection tools cannot obtain the metadata of WebView components and some user-defined third-party UI components. Meanwhile, when Android fragments or views are covered by other UIs, we only need the metadata of the UI in the foreground, because the covered UI is not rendered on the current screen. However, existing data collection tools (adb, 2023; uiautomator2, 2023) capture information about both the visible and the covered UI, which introduces erroneous data. Therefore, three volunteers with at least one year of Android development experience perform a second round of manual validation. Volunteers check each pair against two criteria: the reliability of the data and the rationality of the pairing. They remove pairs with inaccurate metadata as well as GUI pairs that do not correspond to each other. In this process, the volunteers also manually match some phone-tablet GUI pairs.

3.3. Data Collection Tool

Based on the two collection strategies described above, we develop two distinct collection tools: the adjust-resolution collector and the similarity-matching collector.

The first tool dynamically adjusts the resolution of the current device using ADB commands. When the screen resolution changes, the running app loads the layout file designed for the new screen size (for example, the tablet layout) and changes the layout of the current GUI.

The second tool runs the two apps of an app pair concurrently on a phone and a tablet. It dynamically evaluates the similarity of the GUIs displayed on the two devices and automatically collects the matched GUI page pair when the similarity exceeds a predetermined threshold.
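The control loop of such a collector might look like the sketch below. `capture_phone`, `capture_tablet`, and `similarity` are hypothetical caller-supplied callables (for example, wrappers around `uiautomator2.connect(serial).dump_hierarchy()` and a component-overlap score), and the threshold of 0.5 is a placeholder because the paper does not state its value.

```python
import time


def collect_when_similar(capture_phone, capture_tablet, similarity,
                         threshold=0.5, max_polls=10, interval_s=1.0,
                         sleep=time.sleep):
    """Poll both devices until their current GUIs are similar enough.

    Returns the first (phone_gui, tablet_gui) snapshot whose similarity
    exceeds `threshold`, or None if none does within `max_polls` polls.
    """
    for _ in range(max_polls):
        phone_gui, tablet_gui = capture_phone(), capture_tablet()
        if similarity(phone_gui, tablet_gui) > threshold:
            return phone_gui, tablet_gui
        sleep(interval_s)
    return None


def overlap(phone_gui, tablet_gui):
    """Toy similarity: share of the phone GUI's items that also appear on the tablet."""
    phone_set = set(phone_gui)
    return len(phone_set & set(tablet_gui)) / len(phone_set) if phone_set else 0.0


if __name__ == "__main__":
    phone_frames = iter([["Home", "Search"], ["Home", "Search", "Library"]])
    tablet_frames = iter([["Settings"], ["Home", "Search", "Playlists"]])
    print(collect_when_similar(lambda: next(phone_frames), lambda: next(tablet_frames),
                               overlap, sleep=lambda s: None))
```

In a real run, something else (a person or an exploration tool) would be navigating both apps between polls; the loop only decides when the two current screens are similar enough to save.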

These two data collection tools are also included in the repository of the publicly accessible dataset. With the installation instructions provided, more researchers can utilise our tools to collect more customised GUI datasets for future research.

3.4. Format of A GUI Pair

In this section, we introduce the format of each GUI pair.

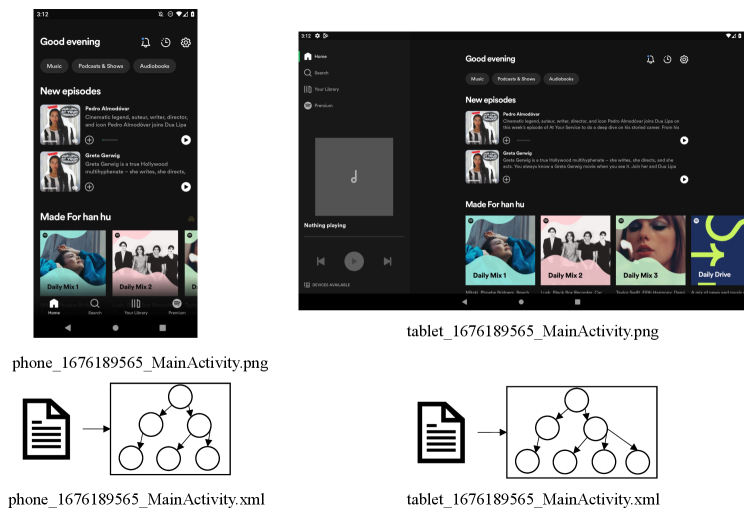

Figure 5 shows an example of pairwise GUI pages of the app ’Spotify’ in our dataset. All GUI pairs of one phone-tablet app pair are placed in the same directory. Each pair consists of four elements: a screenshot of the GUI running on the phone (phone_1676189565_MainActivity.png), the metadata corresponding to the phone screenshot (phone_1676189565_MainActivity.xml), a screenshot of the GUI running on the tablet (tablet_1676189565_MainActivity.png), and the metadata corresponding to the tablet screenshot (tablet_1676189565_MainActivity.xml). All files in the dataset are named in the format Device_Timestamp_ActivityName. As shown in Figure 5, the filename tablet_1676189565_MainActivity.xml indicates that this file was captured on the tablet with the timestamp 1676189565, that the GUI belongs to MainActivity, and that the file is a metadata file in XML format. We use timestamps and activity names to distinguish phone-tablet GUI pairs.
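Following the Device_Timestamp_ActivityName convention, a file name can be split back into its parts and complete four-file pairs can be detected. This is a minimal sketch that assumes the device prefixes are exactly `phone` and `tablet` and the extension is `.png` or `.xml`; it is not the loading script shipped with the dataset.

```python
import re
from collections import defaultdict
from typing import NamedTuple

# <device>_<timestamp>_<activity>.<png|xml>; activity names may contain "_".
NAME_RE = re.compile(r"^(phone|tablet)_(\d+)_(.+)\.(png|xml)$")


class GuiFile(NamedTuple):
    device: str     # "phone" or "tablet"
    timestamp: int  # capture timestamp shared by both sides of a pair
    activity: str   # activity name, e.g. "MainActivity"
    kind: str       # "png" (screenshot) or "xml" (metadata)


def parse_name(filename):
    """Split a dataset file name into its parts, or return None if it does not fit."""
    match = NAME_RE.match(filename)
    if match is None:
        return None
    device, ts, activity, kind = match.groups()
    return GuiFile(device, int(ts), activity, kind)


REQUIRED = {(d, k) for d in ("phone", "tablet") for k in ("png", "xml")}


def complete_pairs(filenames):
    """(timestamp, activity) keys that have all four files of a GUI pair."""
    parts = defaultdict(set)
    for name in filenames:
        f = parse_name(name)
        if f is not None:
            parts[(f.timestamp, f.activity)].add((f.device, f.kind))
    return sorted(key for key, found in parts.items() if found == REQUIRED)


if __name__ == "__main__":
    names = [f"{d}_1676189565_MainActivity.{k}" for d in ("phone", "tablet") for k in ("png", "xml")]
    print(parse_name(names[-1]))
    print(complete_pairs(names + ["phone_1676189999_SearchActivity.png"]))
```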

3.5. Statistics of the Dataset

We collect a total of 10,035 valid phone-tablet GUI pairs. For a more comprehensive presentation of our dataset, we statistically analyze the collected pairs from two perspectives: the distribution of UI view types within the dataset in subsection 3.5.1 and the distribution of GUI similarity between GUI pairs in subsection 3.5.2.

3.5.1. Distribution of UI View Types

In Android development, a UI view is the basic building block for creating user interfaces. Views are responsible for drawing and handling user interactions for a portion of the screen (AndroidView, 2023). For example, buttons, text, images, and lists are all types of views.

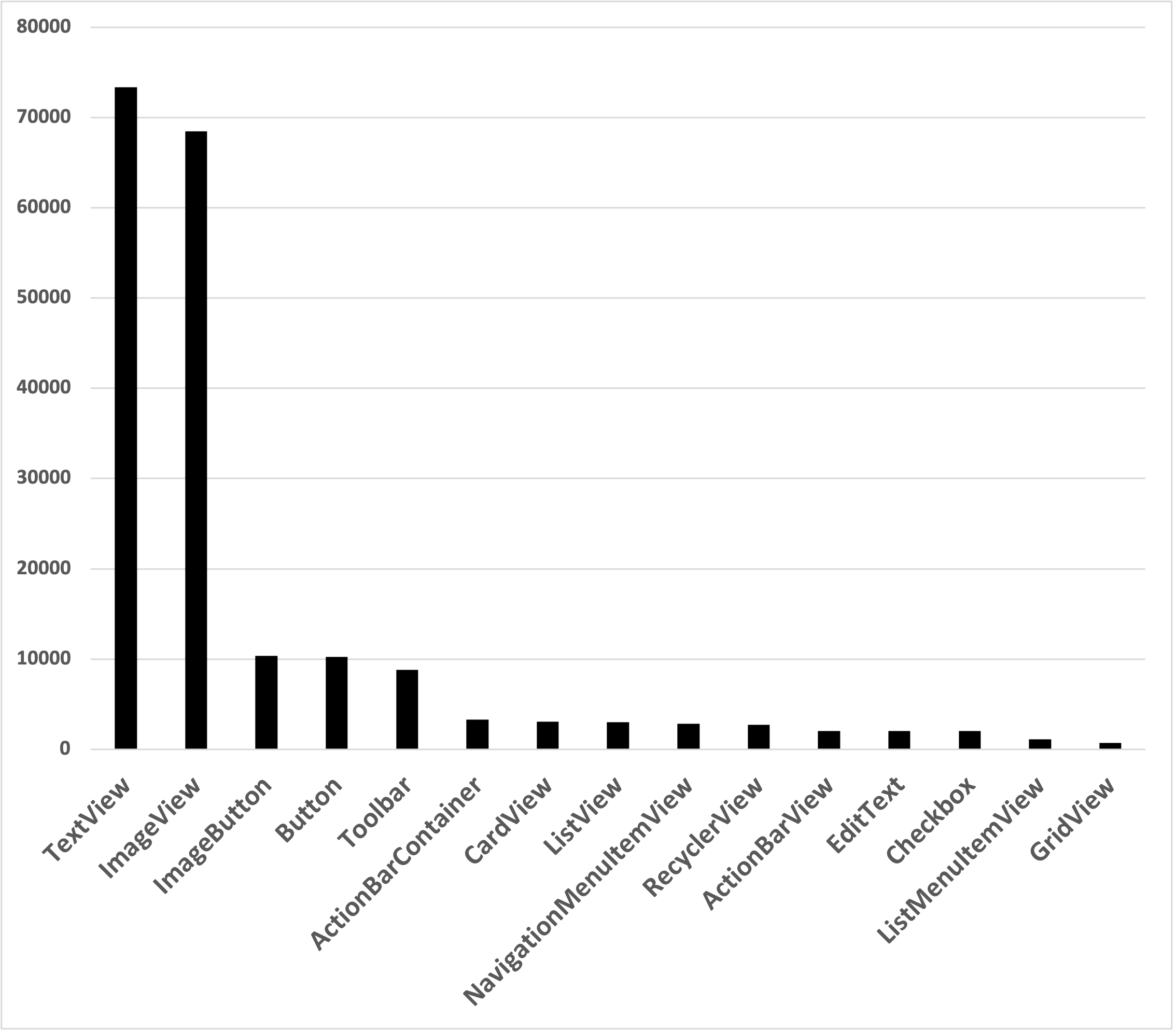

Figure 6 illustrates the distribution of UI view types in the dataset. Because the distribution is long-tailed, we only display the top 15 types. TextView and ImageView, including all their derived types such as AppCompatTextView, AppCompatCheckedTextView, and AppCompatImageView, are the most common UI view types in the dataset. Their counts (73,349 and 68,496) far exceed those of all other view types, because GUIs primarily present information through text and images. ImageButton (10,366) and Button (10,235) are the third and fourth most common view types. Users interact with GUIs mainly through clicks, and click operations rely heavily on button views, so button-related views are also common in GUI datasets.
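A distribution like the one in Figure 6 can be recomputed from the metadata files by counting each node’s `class` attribute. The sketch groups derived classes by name suffix (checking ImageButton before Button, TextView, and ImageView). This is an assumption: it groups by naming rather than by the actual Java class hierarchy, so it folds, for example, RadioButton into Button and leaves EditText separate, and the paper’s exact grouping rules may differ.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Checked in order, so AppCompatImageButton counts as ImageButton, not Button.
BASE_TYPES = ("ImageButton", "Button", "TextView", "ImageView")


def base_type(class_name):
    """Map a view class to a coarse type by name suffix (a naming heuristic)."""
    short = class_name.rsplit(".", 1)[-1]
    for base in BASE_TYPES:
        if short.endswith(base):
            return base
    return short


def count_view_types(xml_docs):
    """Count coarse view types over every <node> in the given hierarchy dumps."""
    counts = Counter()
    for doc in xml_docs:
        root = ET.fromstring(doc)
        counts.update(base_type(node.get("class", "")) for node in root.iter("node"))
    return counts


if __name__ == "__main__":
    doc = ("<hierarchy><node class='android.widget.FrameLayout'>"
           "<node class='androidx.appcompat.widget.AppCompatTextView'/>"
           "<node class='android.widget.TextView'/>"
           "<node class='androidx.appcompat.widget.AppCompatImageButton'/>"
           "</node></hierarchy>")
    print(count_view_types([doc]).most_common())
```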

3.5.2. Distribution of GUI Pair Similarity

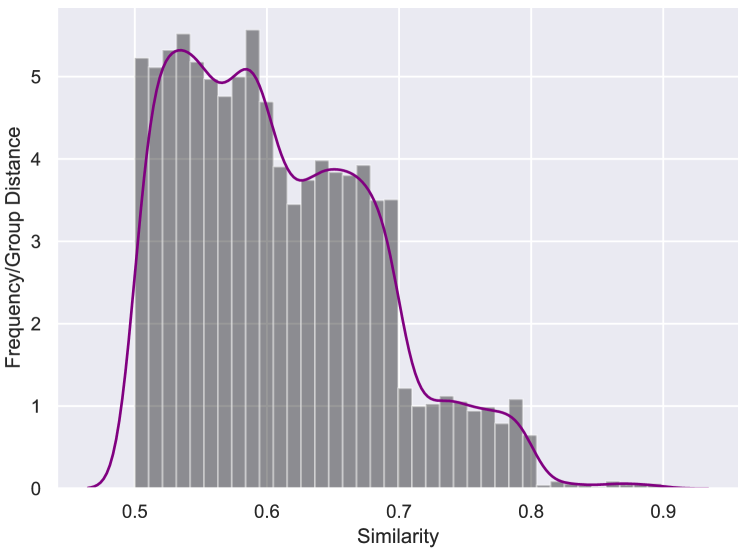

The similarity analysis between phone-tablet GUI pairs is important for downstream tasks. Given a GUI pair, we count the total numbers of GUI views in the phone GUI and in the tablet GUI, respectively, as well as the number of views that are the same in both GUIs. The similarity of their GUIs is calculated from these counts as

| (1) |

Figure 7 shows the frequency histogram of GUI similarities of the phone-tablet GUI pairs in our dataset. In most pairs, the similarity between the phone GUI and the tablet GUI is between 0.5 and 0.7. Because tablets have larger screens than phones, a phone GUI page typically contains only part of the UI views in the corresponding tablet GUI page. This suggests that downstream tasks such as GUI layout generation and search should consider filling in content that is not available in the phone GUI page.

3.6. Accessing the Dataset

The dataset is publicly available under the terms of the accompanying license agreements (https://github.com/huhanGitHub/papt). The pairs are stored in separate folders, one per app. Most app folders are named after the package name of the app’s APK, for example, air.com.myheritage.mobile, and a few are named after the app’s name, for example, Spotify. In each app folder, as described in Section 3.4, each pair contains four elements: the phone GUI screenshot, the XML file of the phone GUI metadata, the corresponding tablet GUI screenshot, and the XML file of the tablet GUI metadata. We also share a script for loading all GUI pairs in the open-source repository.
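For readers who want to iterate over the dataset without the official script, the sketch below walks the app folders and yields complete pairs. It assumes the folder and file layout described in this section and in Section 3.4; the function and dictionary key names are hypothetical.

```python
import tempfile
from pathlib import Path

DEVICES = ("phone", "tablet")
EXTENSIONS = (".png", ".xml")


def iter_pairs(dataset_root):
    """Yield one dict per complete phone-tablet GUI pair, app folder by app folder."""
    for app_dir in sorted(p for p in Path(dataset_root).iterdir() if p.is_dir()):
        groups = {}
        for f in app_dir.iterdir():
            parts = f.stem.split("_", 2)  # device, timestamp, activity name
            if (not f.is_file() or len(parts) != 3
                    or parts[0] not in DEVICES or f.suffix not in EXTENSIONS):
                continue
            device, ts, activity = parts
            groups.setdefault((ts, activity), {})[device + f.suffix] = f
        for (ts, activity), files in sorted(groups.items()):
            if len(files) == 4:  # phone.png, phone.xml, tablet.png, tablet.xml
                yield {
                    "app": app_dir.name, "timestamp": ts, "activity": activity,
                    "phone_png": files["phone.png"], "phone_xml": files["phone.xml"],
                    "tablet_png": files["tablet.png"], "tablet_xml": files["tablet.xml"],
                }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        app = Path(tmp) / "air.com.myheritage.mobile"
        app.mkdir()
        for device in DEVICES:
            for ext in EXTENSIONS:
                (app / f"{device}_1676189565_MainActivity{ext}").touch()
        for pair in iter_pairs(tmp):
            print(pair["app"], pair["activity"], pair["tablet_xml"].name)
```

Incomplete groups (for example, a phone screenshot whose tablet counterpart is missing) are skipped rather than reported; a stricter loader might log them instead.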

3.7. Compare With Available Datasets

Table 2. Comparison of Papt with existing GUI datasets.

| Dataset | GUI Platform | #GUIs | #Paired GUIs | #Data Source Apps | Latest Update | Mainly Targeted Tasks |
|---|---|---|---|---|---|---|
| Rico (Deka et al., 2017) | Phone | 72,000 | 0 | 9,700 | Sep. 2017 | UI Component Recognition, GUI completion |
| UI2code (Chen et al., 2018) | Phone | 185,277 | 0 | 5,043 | Jun. 2018 | UI Skeleton Generation |
| Gallery D.C. (Chen et al., 2019) | Phone | 68,702 | 0 | 5,043 | Nov. 2019 | UI Search |
| LabelDroid (Chen et al., 2020b) | Phone | 394,489 | 0 | 15,087 | May 2020 | UI Component Prediction |
| UI5K (Chen et al., 2020a) | Phone | 54,987 | 0 | 7,748 | Jun. 2020 | UI Search |
| Enrico (Leiva et al., 2020) | Phone | 1,460 | 0 | 9,700 | Oct. 2020 | UI Layout Design Categorization |
| VINS (Bunian et al., 2021) | Phone | 2,740 | 0 | 9,700 | May 2021 | UI Search |
| Screen2Words (Wang et al., 2021) | Phone | 22,417 | 0 | 6,269 | Oct. 2021 | UI Screen Summarization |
| Clay (Li et al., 2022) | Phone | 59,555 | 0 | 9,700 | May 2022 | UI Component Recognition, GUI completion |
| Papt | Phone, Tablet | 20,070 | 10,035 | 11,186 | Jan. 2023 | UI Component Recognition, GUI completion, GUI conversion, GUI search |

3.7.1. Application to More Tasks

Table 2 summarizes our dataset and other GUI datasets. Because our data consist of phone-tablet pairs, we must manually locate the corresponding GUI pages between phones and tablets, which results in fewer pages than comparable datasets. However, our dataset has a broader data source (including tablet GUIs), supports more tasks, and contains newer data. Notably, it is the only available GUI dataset that contains pairwise phone-tablet GUIs. Our dataset addresses a significant gap in existing automated GUI development and provides data support for applying deep learning techniques to GUI generation, search, recommendation, and other domains.

3.7.2. Data Accuracy

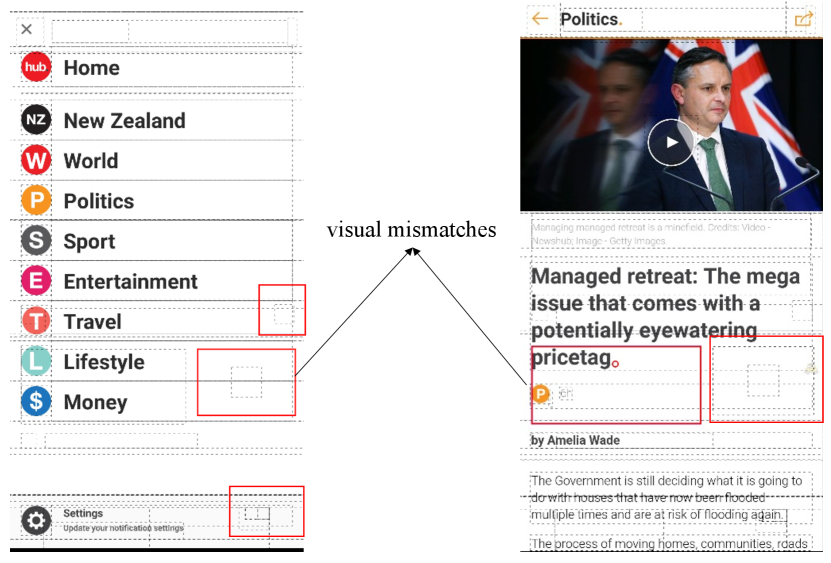

In particular, our data avoids many of the GUI visual mismatches that are frequent in current datasets such as Rico and Enrico. Due to the limitations of earlier data collection tools, some GUIs show visual mismatches between their metadata and screenshots. Figure 8 shows typical visual mismatch examples between hierarchy metadata and screenshots in current datasets. Based on the bounding box coordinates of Android views provided in the metadata, we draw the location of each view in the metadata as a black dashed box on the screenshot. We mark obvious visual mismatches, which do not correspond to any view in the rendered screenshot, with solid red boxes. In these cases, the metadata describes UI elements behind the current layer, which cannot be interacted with on the current screen, so the UI data in the metadata and the screenshot no longer correspond to each other. Too many such mismatches degrade the effectiveness of models for GUI generation and search. Our UI collection tool, uiautomator2, has optimized its GUI capture technique to prevent metadata and screenshots from containing inconsistent UI information (uiautomator2, 2023), and during manual review, our volunteers also eliminated GUI pages with mismatches. Compared with other datasets such as Rico, our data therefore contain fewer mismatches and are more accurate.

4. Conclusion

In this paper, we propose Papt, a pairwise dataset for GUI conversion and retrieval between Android phones and tablets. To the best of our knowledge, it is the first dataset that aims to bridge phone and tablet GUIs. We explain and analyze the data source of the dataset, illustrate the two approaches for collecting pairwise GUIs, and introduce our data collection tools. We use an example to show the format of our GUI pairs and present the distribution of UI view types and GUI pair similarity in our dataset. Finally, we describe how to access the dataset and compare it with existing GUI datasets.

References


- activity (2023) activity 2023. activity. https://developer.android.com/reference/android/app/Activity.

- adb (2023) adb 2023. adb. https://developer.android.com/studio/command-line/adb.

- adpLay (2023) adpLay 2023. adpLay. https://developer.android.com/jetpack/compose/layouts/adaptive.

- AndroidView (2023) AndroidView 2023. AndroidView. https://data-flair.training/blogs/android-layout-and-views/.

- Arroyo et al. (2021) Diego Martin Arroyo, Janis Postels, and Federico Tombari. 2021. Variational transformer networks for layout generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 13642–13652.

- bbcNews (2023) bbcNews 2023. bbcNews. https://www.bbc.com/news.

- Behrang et al. (2018) Farnaz Behrang, Steven P Reiss, and Alessandro Orso. 2018. GUIfetch: supporting app design and development through GUI search. In Proceedings of the 5th International Conference on Mobile Software Engineering and Systems. 236–246.

- Bunian et al. (2021) Sara Bunian, Kai Li, Chaima Jemmali, Casper Harteveld, Yun Fu, and Magy Seif Seif El-Nasr. 2021. Vins: Visual search for mobile user interface design. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems. 1–14.

- Chen et al. (2019) Chunyang Chen, Sidong Feng, Zhenchang Xing, Linda Liu, Shengdong Zhao, and Jinshui Wang. 2019. Gallery dc: Design search and knowledge discovery through auto-created gui component gallery. Proceedings of the ACM on Human-Computer Interaction 3, CSCW (2019), 1–22.

- Chen et al. (2018) Chunyang Chen, Ting Su, Guozhu Meng, Zhenchang Xing, and Yang Liu. 2018. From ui design image to gui skeleton: a neural machine translator to bootstrap mobile gui implementation. In Proceedings of the 40th International Conference on Software Engineering. 665–676.

- Chen et al. (2020a) Jieshan Chen, Chunyang Chen, Zhenchang Xing, Xin Xia, Liming Zhu, John Grundy, and Jinshui Wang. 2020a. Wireframe-based UI design search through image autoencoder. ACM Transactions on Software Engineering and Methodology (TOSEM) 29, 3 (2020), 1–31.

- Chen et al. (2020b) Jieshan Chen, Chunyang Chen, Zhenchang Xing, Xiwei Xu, Liming Zhu, Guoqiang Li, and Jinshui Wang. 2020b. Unblind your apps: Predicting natural-language labels for mobile gui components by deep learning. In Proceedings of the ACM/IEEE 42nd International Conference on Software Engineering. 322–334.

- Chen et al. (2021) Qiuyuan Chen, Xin Xia, Han Hu, David Lo, and Shanping Li. 2021. Why my code summarization model does not work: Code comment improvement with category prediction. ACM Transactions on Software Engineering and Methodology (TOSEM) 30, 2 (2021), 1–29.

- Deka et al. (2021) Biplab Deka, Bardia Doosti, Forrest Huang, Chad Franzen, Joshua Hibschman, Daniel Afergan, Yang Li, Ranjitha Kumar, Tao Dong, and Jeffrey Nichols. 2021. An Early Rico Retrospective: Three Years of Uses for a Mobile App Dataset. Artificial Intelligence for Human Computer Interaction: A Modern Approach (2021), 229–256.

- Deka et al. (2017) Biplab Deka, Zifeng Huang, Chad Franzen, Joshua Hibschman, Daniel Afergan, Yang Li, Jeffrey Nichols, and Ranjitha Kumar. 2017. Rico: A mobile app dataset for building data-driven design applications. In Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology. 845–854.

- Devlin et al. (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv:1810.04805 [cs.CL]

- duolingo (2023) duolingo 2023. duolingo. https://www.duolingo.com/.

- fragment (2022) fragment 2022. fragment. https://developer.android.com/guide/fragments.

- GUI state (2023) GUI state 2023. GUI state. https://developer.android.com/topic/libraries/architecture/saving-states.

- Gupta et al. (2021) Kamal Gupta, Justin Lazarow, Alessandro Achille, Larry S Davis, Vijay Mahadevan, and Abhinav Shrivastava. 2021. LayoutTransformer: Layout generation and completion with self-attention. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 1004–1014.

- Hochreiter and Schmidhuber (1997) Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long short-term memory. Neural Computation 9, 8 (1997), 1735–1780.

- Hu and Neamtiu (2011) Cuixiong Hu and Iulian Neamtiu. 2011. Automating GUI testing for Android applications. In Proceedings of the 6th International Workshop on Automation of Software Test. 77–83.

- Hu et al. (2019) Han Hu, Qiuyuan Chen, and Zhaoyi Liu. 2019. Code generation from supervised code embeddings. In Neural Information Processing: 26th International Conference, ICONIP 2019, Sydney, NSW, Australia, December 12–15, 2019, Proceedings, Part IV. Springer, 388–396.

- Hu et al. (2023a) Han Hu, Ruiqi Dong, John Grundy, Thai Minh Nguyen, Huaxiao Liu, and Chunyang Chen. 2023a. Automated Mapping of Adaptive App GUIs from Phones to TVs. arXiv:2307.12522 [cs.SE]

- Hu et al. (2023b) Han Hu, Yujin Huang, Qiuyuan Chen, Terry Yue Zhuo, and Chunyang Chen. 2023b. A First Look at On-device Models in iOS Apps. arXiv:2307.12328 [cs.SE]

- Hu et al. (2023c) Han Hu, Yujin Huang, Qiuyuan Chen, Terry Yue Zhuo, and Chunyang Chen. 2023c. A First Look at On-device Models in iOS Apps. ACM Transactions on Software Engineering and Methodology (2023).

- Hu et al. (2023d) Han Hu, Haolan Zhan, Yujin Huang, and Di Liu. 2023d. Pairwise GUI Dataset Construction Between Android Phones and Tablets. arXiv preprint arXiv:2310.04755 (2023).

- Huang et al. (2021) Yujin Huang, Han Hu, and Chunyang Chen. 2021. Robustness of on-device models: Adversarial attack to deep learning models on Android apps. In 2021 IEEE/ACM 43rd International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP). IEEE, 101–110.

- Jyothi et al. (2019) Akash Abdu Jyothi, Thibaut Durand, Jiawei He, Leonid Sigal, and Greg Mori. 2019. LayoutVAE: Stochastic scene layout generation from a label set. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 9895–9904.

- Kingma and Welling (2013) Diederik P Kingma and Max Welling. 2013. Auto-encoding variational Bayes. arXiv preprint arXiv:1312.6114 (2013).

- Lee et al. (2020) Chunggi Lee, Sanghoon Kim, Dongyun Han, Hongjun Yang, Young-Woo Park, Bum Chul Kwon, and Sungahn Ko. 2020. GUIComp: A GUI design assistant with real-time, multi-faceted feedback. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems. 1–13.

- Leiva et al. (2020) Luis A Leiva, Asutosh Hota, and Antti Oulasvirta. 2020. Enrico: A dataset for topic modeling of mobile UI designs. In 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services. 1–4.

- Li et al. (2022) Gang Li, Gilles Baechler, Manuel Tragut, and Yang Li. 2022. Learning to denoise raw mobile UI layouts for improving datasets at scale. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. 1–13.

- Li et al. (2019) Jianan Li, Jimei Yang, Aaron Hertzmann, Jianming Zhang, and Tingfa Xu. 2019. LayoutGAN: Generating graphic layouts with wireframe discriminators. arXiv preprint arXiv:1901.06767 (2019).

- Machiry et al. (2013) Aravind Machiry, Rohan Tahiliani, and Mayur Naik. 2013. Dynodroid: An input generation system for Android apps. In Proceedings of the 2013 9th Joint Meeting on Foundations of Software Engineering. 224–234.

- Majeed-Ariss et al. (2015) Rabiya Majeed-Ariss, Eileen Baildam, Malcolm Campbell, Alice Chieng, Debbie Fallon, Andrew Hall, Janet E McDonagh, Simon R Stones, Wendy Thomson, and Veronica Swallow. 2015. Apps and adolescents: a systematic review of adolescents’ use of mobile phone and tablet apps that support personal management of their chronic or long-term physical conditions. Journal of Medical Internet Research 17, 12 (2015), e287.

- Memon (2002) Atif M Memon. 2002. GUI testing: Pitfalls and process. Computer 35, 8 (2002), 87–88.

- Moran et al. (2019) Kevin Moran, C Bernal-Cardenas, M Curcio, R Bonett, and D Poshyvanyk. 2019. The ReDraw Dataset: A Set of Android Screenshots, GUI Metadata, and Labeled Images of GUI Components.

- Oulasvirta et al. (2020) Antti Oulasvirta, Niraj Ramesh Dayama, Morteza Shiripour, Maximilian John, and Andreas Karrenbauer. 2020. Combinatorial optimization of graphical user interface designs. Proc. IEEE 108, 3 (2020), 434–464.

- spotify (2023) spotify 2023. spotify. https://en.wikipedia.org/wiki/Spotify.

- supportDifScr (2023) supportDifScr 2023. supportDifScr. https://developer.android.com/guide/topics/large-screens/support-different-screen-sizes.

- tabletShare (2023) tabletShare 2023. tabletShare. https://gs.statcounter.com/platform-market-share/desktop-mobile-tablet.

- Theis et al. (2015) Lucas Theis, Aäron van den Oord, and Matthias Bethge. 2015. A note on the evaluation of generative models. arXiv preprint arXiv:1511.01844 (2015).

- uiautomator2 (2023) uiautomator2 2023. uiautomator2. https://github.com/openatx/uiautomator2.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. Advances in Neural Information Processing Systems 30 (2017).

- Wang et al. (2021) Bryan Wang, Gang Li, Xin Zhou, Zhourong Chen, Tovi Grossman, and Yang Li. 2021. Screen2Words: Automatic mobile UI summarization with multimodal learning. In The 34th Annual ACM Symposium on User Interface Software and Technology. 498–510.

- youtube (2023) youtube 2023. youtube. https://en.wikipedia.org/wiki/YouTube.

- Zhao et al. (2022) Yanjie Zhao, Li Li, Xiaoyu Sun, Pei Liu, and John Grundy. 2022. Code implementation recommendation for Android GUI components. In Proceedings of the ACM/IEEE 44th International Conference on Software Engineering: Companion Proceedings. 31–35.