A Pre-Allocation Design for Cost Minimization and Delay Constraint in Vehicular Offloading System

Abstract

To accommodate the exponentially increasing traffic demands of vehicle-based applications, operators are adopting offloading as a promising technique to improve quality of service (QoS), which gives rise to the application of Mobile Edge Computing (MEC). Conventional offloading paradigms focus on the tradeoff between delay and energy. However, they either fail to model delay efficiently, especially queueing delay, or underestimate the role of the MEC Server. In this paper, we propose a novel Pre-Allocation Design for vehicular Offloading (PADO). A task delay queue is constructed based on an allocate-execute separate (AES) mechanism. Given the dynamics of vehicular networks, we use Lyapunov optimization to minimize the execution cost of each vehicle while guaranteeing task delay. An MEC Server equipped with energy harvesting devices is also incorporated into the system. The transactions between vehicles and the server are determined by a Stackelberg Game framework. We conduct extensive experiments to demonstrate the properties and superiority of the proposed framework.

Index Terms:

mobile edge computing, Lyapunov Optimization, Stackelberg Game, queueing delay, energy harvesting

I Introduction

With the ever-increasing number of vehicles on the roads and the development of the automobile industry, vehicles have become a significant class of mobile devices connected to the Internet. Nowadays, vehicles support various mobile applications, such as image-aided navigation [9] and vehicular augmented reality [6]. These applications require large amounts of computation resources. The growing demand for resources and the pursuit of higher performance in advanced vehicular applications make it very challenging to run computationally intensive applications on resource-constrained vehicles.

The more rigorous execution and storage requirements placed on mobile devices call for a new paradigm, Mobile Edge Computing (MEC), which relies on the wireless network edge to acquire computational capabilities [11]. Compared with traditional local execution, offloading workload to an MEC Server not only reduces execution delay but also saves users' energy. Therefore, it is pressing to take advantage of MEC to share the intensive workload of users [4].

A number of works have studied offloading schemes in MEC systems. Cordeschi et al. [2] designed a distributed and adaptive traffic offloading scheme for cognitive cloud vehicular networks. Sasaki et al. [8] designed an infrastructure-based vehicle control system to reduce latency and balance computational load. Wang and Zhou [10] studied the computational offloading problem to optimize the total consumption cost incurred by the usage of limited computational resources. Du et al. [3] considered a cognitive vehicular network and formulated a dual-side optimization problem to minimize both vehicle-side and server-side costs.

However, because the computation resources of vehicles are limited, not all tasks can be processed at once. More likely, tasks enter a queueing stage and await execution under a first-in-first-out (FIFO) discipline. For each vehicle, multiple tasks generated at different moments coexist, forming a task backlog queue. Most of the aforementioned works either ignore the queueing delay or rely on approximate delay estimates, leading to defective and unreliable solutions.

In addition, it is worthwhile to leverage energy harvesting technology to capture green energy (e.g., solar radiation and wind) for continuously charging batteries. Unlike the designs adopted in [5, 14], in our setting the energy harvesting devices are attached to the battery of the MEC Server, which benefits from massive deployment. Renewable energy gives the MEC Server another energy option besides battery storage and the power grid. In each time slot, the server can switch among these sources to power its service while preventing the battery from overloading. Thus, cost minimization for the MEC Server is also considered in our framework.

Compared with state-of-the-art works, our proposed offloading scheme explicitly takes into account key aspects specific to vehicular networks. The contributions of our work are summarized as follows:

- We propose a novel Pre-Allocation Design for vehicular Offloading (PADO) framework, which enables each vehicle to adopt an allocate-execute separate (AES) mechanism when deciding its offloading strategy. Under the PADO framework, we formulate a delay queue that directly represents the task queueing delay. This delay queue design accommodates arbitrary delay deadlines and tasks with stratified deadline requirements.

- The execution cost minimization problem, an intractable high-dimensional Markov decision problem, is solved within a low-complexity online scheduling framework based on Lyapunov optimization. Lyapunov optimization turns the stochastic problem into a deterministic one whose solution depends only on non-stochastic variables. Both parties in the PADO framework, namely vehicles and the MEC Server, adopt this framework to balance queue stability and cost minimization.

- A one-leader, multi-follower Stackelberg Game between the MEC Server and vehicles is formulated to characterize the coupled trading behavior between players. The solution to the dual-side optimization problem provides indicators of the current vehicle’s workload. A closed-form unit task price is derived, which can be used to guide the vehicle’s offloading actions.

- We conduct extensive experiments to demonstrate the properties and superiority of our proposed framework. Simulation results verify the theorems on queue stability. Moreover, the effectiveness of the proposed algorithm is demonstrated through comparisons with three benchmark policies. The results show that the PADO framework enables vehicles to fully utilize the execution deadline and reduces execution cost.

The rest of the paper is organized as follows. We introduce the system model in Section II and formulate the optimization problems for vehicles and the MEC Server in Section III. In Section IV, we use a Stackelberg Game to determine the offloading strategy in each time slot. We evaluate our proposed offloading scheme through simulations in Section V. Section VI concludes the paper.

II System Model

II-A Mobile Edge Computing System

Typically, a mobile edge computing system involves several vehicles and roadside units. We denote as the set of vehicles, where is the number of vehicles. These vehicle users are the producers of the whole system: they stochastically generate a certain amount of tasks at the beginning of each time slot. The stochasticity is twofold, i.e., both the occurrence of a computation task and its amount are random. Because task arrivals are bursty, a vehicle either produces tasks whose quantity has a lower bound, or produces no new task at all. We thus use an i.i.d. Bernoulli distribution across vehicles to model these bursty arrivals. We define as the parameter of the distribution, i.e., the arrival rate. If indicates the occurrence of a newly arrived task in slot at vehicle , then . The numeric quantity of a computation task, denoted as , is uniformly distributed on the closed interval . Thus, in any time interval, the actual amount of newly generated tasks can be defined as

| (1) |
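The burst-arrival model can be sketched as a per-slot generator: a Bernoulli occurrence indicator multiplied by a uniformly distributed task size. This is a minimal illustration. It borrows the [10, 20]-unit size range and the 1000-bit unit from Section V. The arrival rate `p` and the function name `generate_arrivals` are hypothetical, because their values are not recoverable here.

```python
import random
from typing import List

UNIT_BITS = 1000               # one task unit = 1000 bits (Section V)
MIN_UNITS, MAX_UNITS = 10, 20  # task size range in units (Section V)

def generate_arrivals(p: float, num_slots: int, rng: random.Random) -> List[float]:
    """Per-slot arrivals in bits: Bernoulli(p) occurrence times a uniform task size."""
    arrivals = []
    for _ in range(num_slots):
        occurs = rng.random() < p                         # i.i.d. burst indicator
        size_bits = rng.uniform(MIN_UNITS, MAX_UNITS) * UNIT_BITS
        arrivals.append(size_bits if occurs else 0.0)     # no task -> zero arrival
    return arrivals

# Usage: 1000 slots for one vehicle with a hypothetical arrival rate of 0.3.
rng = random.Random(0)
a = generate_arrivals(p=0.3, num_slots=1000, rng=rng)
print(sum(x > 0 for x in a) / len(a))  # empirical arrival frequency, near 0.3
```

The fixed seed keeps runs reproducible, which helps when comparing offloading policies on identical arrival traces.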

Besides its quantity, a computation task has other attributes, the most important of which is the maximum tolerable delay. To guarantee user Quality of Service (QoS), applications with strict timeliness requirements need a response within a certain time after task generation. We denote as the time limit of vehicle ’s task in slot .

The execution of a computation task relies on physical hardware (such as GPU, memory and HDD). In practice, different tasks also have different hardware requirements. For instance, we call an application with high GPU requirements a GPU-intensive task; vehicle-based virtual reality is a representative application of this kind. Thus, to characterize a computation task, we need to specify its request for each type of resource so that the edge servers can organize their virtual machines (VMs). Without loss of generality, we denote as the total number of resource types, and is the set of hardware configurations, where is the configuration of the type- resource. We then use a tuple to characterize a computation task.

II-B Computation Model on Vehicles and Servers

We assume that each computation task can be partitioned at an arbitrary ratio into independent parts for local execution and offloading. This partitioning can be implemented with Spark [13]. We denote as the percentage of the task computed locally, and as the offloading percentage. Vehicle decides and for each task, considering the delay cost and its access to the server.

The delay cost comprises two components: the queueing delay and the service time (i.e., the execution time). As discussed above, vehicles can either execute a task locally or offload it to the remote server. For the local part, the CPU frequency scheduled for the task is denoted as , which can be realized by adjusting the chip voltage with dynamic voltage and frequency scaling (DVFS) techniques [7]. The execution time can be expressed as

| (2) |
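Eq. (2) is not recoverable from this copy. The helper below is a minimal sketch that assumes the common form: required CPU cycles divided by the allocated frequency, with the cycle count given by the local share × task size in bits × cycles per bit (Section V lists a cycles/bit parameter). The name `local_exec_time` and the example values are hypothetical.

```python
def local_exec_time(alpha: float, task_bits: float, cycles_per_bit: float,
                    freq_hz: float) -> float:
    """Seconds needed to process the locally kept share `alpha` of a task."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if freq_hz <= 0.0:
        raise ValueError("CPU frequency must be positive")
    cycles = alpha * task_bits * cycles_per_bit  # CPU cycles required locally
    return cycles / freq_hz

# Hypothetical numbers: 60% of a 15,000-bit task, 1000 cycles/bit, 1 GHz.
print(local_exec_time(0.6, 15_000, 1_000, 1e9))  # about 0.009 s
```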

Compared with the server, vehicle users have limited local resources. Therefore, tasks are often not executed immediately upon arrival but must wait in a FIFO backlog queue, which incurs queueing delay. To quantify the queueing delay, we propose a novel delay indication method.

First, we discretize the maximum tolerable delay , which is a continuous variable, using a binning method. We denote as the set of typical delay constraints, where and is the number of delay constraints. Tasks with tighter delay requirements are more sensitive to additional delay. For example, a task with a 10 s time limit is more sensitive to a 1 s increase than a task with a 1 min limit. Hence, we typically set to be a geometric sequence. We assign each task a delay index according to its as follows.

| (3) |
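Eq. (3) is not shown here, so the mapping rule below is an assumption. The sketch builds a geometric set of typical delay constraints and maps each task down to the largest constraint that does not exceed its own deadline. With this conservative rule, meeting the class constraint also meets the task's deadline, and a lower index means a tighter, higher-priority class. The names `geometric_constraints` and `delay_index` and the 10 ms base value are hypothetical.

```python
import bisect
from typing import List

def geometric_constraints(d_min: float, ratio: float, k: int) -> List[float]:
    """K typical delay constraints d_min, d_min*ratio, ..., increasing geometrically."""
    return [d_min * ratio ** i for i in range(k)]

def delay_index(deadline: float, constraints: List[float]) -> int:
    """1-based index of the largest typical constraint not exceeding `deadline`."""
    pos = bisect.bisect_right(constraints, deadline)  # constraints must be sorted
    if pos == 0:
        raise ValueError("deadline is tighter than the smallest typical constraint")
    return pos

D = geometric_constraints(0.01, 2.0, 5)   # 10, 20, 40, 80, 160 ms (hypothetical)
print(delay_index(0.05, D))                # a 50 ms task falls into class 3 (40 ms)
```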

can also be regarded as the priority index of a task: the lower is, the quicker the response should be. After discretizing into delay constraints, we formulate a delay time sequence for each , updated as

| (4) |

where is the time interval of each slot, and is an indicator function that equals 1 if and 0 otherwise. We also assume that the CPU-cycle frequency is constrained by . The queueing delay of a task generated at time slot equals the delay accumulated in the queue before the task is generated, namely . Note that allocation and execution are separate in our setting. In other words, and are assigned to a task when it arrives, while its actual execution begins only when the task reaches the head of the queue. This is why the framework is called pre-allocation.
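Because Eq. (4) is missing here, the recursion below is only a plausible sketch and is not the paper's exact update. It treats each delay class's sequence as pending local execution time that drains by one slot length per slot and grows by the pre-allocated execution time of a newly arrived task of that class (the indicator term). Under this reading, the current value is the queueing delay a new task of that class would see. The names `update_delay_queues` and `T` and the 10 ms slot length are hypothetical.

```python
from typing import Dict, Optional

def update_delay_queues(T: Dict[int, float], task_class: Optional[int],
                        exec_time: float, slot_len: float) -> Dict[int, float]:
    """One-slot update of per-class delay time sequences (assumed form of Eq. (4)).

    T[k] is the pending local execution time (seconds) ahead of a new class-k
    task, i.e. the queueing delay such a task would see if it arrived now.
    """
    new_T = {}
    for k, backlog in T.items():
        served = max(backlog - slot_len, 0.0)           # one slot of work drains
        added = exec_time if task_class == k else 0.0   # indicator 1{class == k}
        new_T[k] = served + added
    return new_T

# Usage with hypothetical values: 10 ms slots, two delay classes.
T = {1: 0.0, 2: 0.0}
T = update_delay_queues(T, task_class=1, exec_time=0.025, slot_len=0.01)
queueing_delay = T[1]   # a class-1 task arriving next slot waits this long
T = update_delay_queues(T, task_class=None, exec_time=0.0, slot_len=0.01)
print(queueing_delay, T)
```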

The other part of the task is offloaded to the MEC server. This process can be viewed as renting virtual machines from the server. Typically, the server organizes and provides its service in the form of virtual machines, each containing a fixed quantum of computation resources. These VMs work independently in a plug-and-play manner. When a task is scheduled to the server with a resource demand not exceeding the VM quantum, it can be executed immediately without any queueing or waiting. Hence, the delay of offloaded tasks can be expressed as

| (5) |

Therefore, the total delay can be expressed as

| (6) |

One advantage of offloading to the MEC server is reduced delay. We assume that the computation resources on the server can readily satisfy the deadline requirement of any task, say, , i.e.,

| (7) |

Since , we also have . According to Eq. (6), to guarantee the total delay bound, the following inequality must hold:

| (8) |
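Eq. (8) itself is not recoverable here. Since (7) lets the offloaded part finish in time, the sketch below assumes that (8) reduces to "queueing delay + local execution time ≤ deadline", with local execution time in the cycles/frequency form assumed for Eq. (2). Under these assumptions, the bound yields the smallest feasible local frequency for a given share, or the largest local share that can still meet the deadline at full speed. All names are hypothetical.

```python
from typing import Optional

def min_local_frequency(alpha: float, task_bits: float, cycles_per_bit: float,
                        queue_delay: float, deadline: float,
                        f_max: float) -> Optional[float]:
    """Smallest CPU frequency with queue_delay + local_exec_time <= deadline.

    Returns None if no frequency up to f_max works; then a smaller local
    share (more offloading) is needed.
    """
    cycles = alpha * task_bits * cycles_per_bit
    if cycles == 0.0:
        return 0.0                      # nothing executed locally
    slack = deadline - queue_delay      # time left after waiting in the queue
    if slack <= 0.0:
        return None
    f_req = cycles / slack
    return f_req if f_req <= f_max else None

def max_local_share(task_bits: float, cycles_per_bit: float, queue_delay: float,
                    deadline: float, f_max: float) -> float:
    """Largest alpha that still meets the deadline when running at f_max."""
    slack = max(deadline - queue_delay, 0.0)
    return min(1.0, slack * f_max / (task_bits * cycles_per_bit))

# Hypothetical: half of a 10,000-bit task, 1000 cycles/bit, 10 ms queue, 20 ms deadline.
print(min_local_frequency(0.5, 10_000, 1_000, 0.01, 0.02, 1e9))  # about 5e8 Hz
```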

Apart from delay, vehicles are also concerned with the energy cost of locally executed tasks. The energy consumption model is described below. For task , a fraction of the total amount is executed locally at CPU frequency , so the energy cost of its execution can be expressed as

| (9) |

where is the effective switched capacitance that depends on the chip architecture [1].
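Eq. (9) is missing from this copy. A common form consistent with the effective switched capacitance of [1] charges κf² joules per CPU cycle. The sketch assumes that form; the value κ = 1e-28 and the function name are hypothetical.

```python
def local_energy(alpha: float, task_bits: float, cycles_per_bit: float,
                 freq_hz: float, kappa: float) -> float:
    """Joules for the local share: kappa * f**2 per cycle, times the cycle count."""
    cycles = alpha * task_bits * cycles_per_bit
    return kappa * cycles * freq_hz ** 2

# Hypothetical kappa = 1e-28 and a 5e6-cycle workload.
e_slow = local_energy(0.5, 10_000, 1_000, 5e8, 1e-28)
e_fast = local_energy(0.5, 10_000, 1_000, 1e9, 1e-28)
print(e_fast / e_slow)  # about 4: doubling the frequency quadruples the energy
```

Under this model, combining it with the cycles/frequency execution time gives E = κC³/t² for C cycles finished in time t. Stretching local execution toward the deadline therefore saves energy, which is the intuition behind letting vehicles fully utilize their deadlines.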

II-C Energy Model on Server

In this paper, the MEC server is equipped with a renewable energy generator, which continuously supplies energy to the offloading system [12]. We denote the production rate as . Renewable generation benefits the server by reducing the amount of energy bought from the central power grid. Compared with grid energy, the cost of renewable energy is negligible in this scenario, since it requires no raw-material input and incurs no long-distance transmission losses. To make the best use of the harvested energy, the server is also equipped with a battery that buffers the energy. We denote the battery level in slot as .

The MEC server supplies energy for the tasks offloaded from vehicle users. For task , the offloaded amount is , processed with allocated CPU frequency . Thus, the energy consumed by the rented virtual machine for task can be expressed as

| (10) |

To avoid energy deficits, the generated renewable energy is first used to execute the offloaded tasks, and any surplus is charged into the battery. Let be the current charging rate of the battery; thus , where . Otherwise, when the instantaneous energy consumption exceeds the renewable energy generation, the supply gap is filled by the battery, the power grid, or both. We denote the amount drawn from the power grid as . Accordingly, is the amount of energy discharged from the battery in this case.

With the notations stated above, we can derive the battery dynamics on the basis of charging and discharging strategy,

| (11) |

where and represent the charging and discharging efficiencies, respectively, and denotes the amount of energy extracted from the battery, which must satisfy:

| (12) |

which means the extracted energy must not exceed the current battery storage.
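The renewable-first dispatch and the battery update can be sketched for a single slot. Eq. (11) is not recoverable here, so the placement of the efficiencies is an assumption. Charged surplus is scaled by `eta_c` on the way in, and each unit extracted from the battery delivers `eta_d` units to the load. The battery capacity limit (overload prevention) is omitted. `SlotEnergy`, `server_energy_step` and `grid_request` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class SlotEnergy:
    charged: float       # surplus renewable energy sent to the battery
    extracted: float     # energy drawn out of the battery
    grid: float          # energy bought from the power grid
    battery_next: float  # battery level at the start of the next slot

def server_energy_step(battery: float, demand: float, renewable: float,
                       grid_request: float, eta_c: float, eta_d: float) -> SlotEnergy:
    """Renewable-first energy dispatch for one slot (assumed form of Eq. (11))."""
    if renewable >= demand:
        surplus = renewable - demand                  # charge the whole surplus
        return SlotEnergy(surplus, 0.0, 0.0, battery + eta_c * surplus)
    gap = demand - renewable                          # shortfall to cover
    grid = min(max(grid_request, 0.0), gap)           # server's chosen purchase
    extracted = (gap - grid) / eta_d                  # battery covers the rest
    if extracted > battery:                           # causality, cf. Eq. (12)
        extracted = battery
        grid = gap - eta_d * battery                  # grid covers what battery cannot
    return SlotEnergy(0.0, extracted, grid, battery - extracted)

print(server_energy_step(10.0, 8.0, 5.0, 0.0, eta_c=0.9, eta_d=0.8))
```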

The task backlogs at vehicular users and the energy storage at the server make the offloading decisions for users and the energy supply policy for the server more complicated than in conventional mobile edge computing systems. These two kinds of backlog introduce temporal correlation into the workload and energy level and couple the system decisions across time slots. In other words, the decisions are no longer myopic but must trade off long-term against current profit.

III Problem Formulation

III-A Cost minimization for vehicular users

The vehicular users are motivated to minimize the time-average expected cost for executing the tasks with guaranteed delay. This problem can be formulated as below,

where denotes the overall energy consumption for local execution, and denotes the payment for VMs from the MEC server. Under a common business model, the server's service is charged by time. denotes the server’s unit price for providing CPU frequency . The expectation above is taken with respect to the potential randomness of the control policy. is the drop loss of a task: if a task is neither executed locally nor offloaded, it is deemed dropped (or deployed to the cloud), at a price of .

One challenge in solving stems from constraint , which introduces time correlation into the problem. We leverage tools from the Lyapunov optimization framework. First, we modify and transform the offline problem into an online optimization problem. To guarantee the delay of task execution, we construct a virtual queue , which evolves as follows:

| (13) |

We can define the Lyapunov function as . Then, the conditional Lyapunov drift-plus-penalty for slot is given by:

| (14) |

where is a user-determined control parameter. Since we assume that at most one task is newly generated in any time slot, with delay priority denoted as , the drift-plus-penalty can be upper-bounded as

where is a constant with respect to the variables , and .

Proof 1

See Appendix A.
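The drift-plus-penalty recipe in this subsection can be illustrated with the generic Lyapunov technique on a toy problem. This is not the paper's exact formulation, because Eq. (13) and the per-slot objective are not recoverable here. A virtual queue Z tracks excess delay beyond the deadline, and each slot picks the action that minimizes V·cost + Z·(delay − deadline). The two-action set, `dpp_choose` and `run` are hypothetical.

```python
from typing import List, Tuple

Action = Tuple[float, float]  # (cost, delay) of one candidate per-slot decision

def dpp_choose(actions: List[Action], Z: float, V: float, deadline: float) -> Action:
    """Minimize V * cost + Z * (delay - deadline); min() keeps the first action on ties."""
    return min(actions, key=lambda a: V * a[0] + Z * (a[1] - deadline))

def run(actions: List[Action], V: float, deadline: float, slots: int) -> Tuple[float, float]:
    """Simulate the virtual delay queue; return (average cost, average delay)."""
    Z = 0.0
    total_cost = total_delay = 0.0
    for _ in range(slots):
        cost, delay = dpp_choose(actions, Z, V, deadline)
        total_cost += cost
        total_delay += delay
        Z = max(Z + delay - deadline, 0.0)   # grows whenever the deadline is exceeded
    return total_cost / slots, total_delay / slots

actions = [(1.0, 3.0), (3.0, 1.0)]  # hypothetical: cheap but slow, costly but fast
print(run(actions, V=1.0, deadline=2.0, slots=1000))
print(run(actions, V=100.0, deadline=2.0, slots=1000))
```

With the larger V, the queue Z must build up further before the costly, fast action is chosen. The result is a lower average cost and a higher average delay, which is the usual cost-versus-queue tradeoff controlled by V.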

At every time slot, the vehicle decides the locally allocated CPU frequency and the proportion to offload so as to control the upper bound of the cost. To this end, the Lyapunov framework minimizes the right-hand side of the drift-plus-penalty bound. The optimization problem for vehicle can thus be formulated as

III-B Revenue Maximization for MEC Server

The MEC server provides high-quality, ultra-low-latency service for vehicles, and its objective is to profit from providing such service. However, it also pays close attention to the energy remaining in its battery. For a sustainable business model, the battery level should remain healthy and stable over time. The revenue maximization problem can be defined as

where is the unit price of purchasing electricity from power grid. So is the overall payment for extra power when the renewable energy supply is insufficient.

Nevertheless, due to the energy causality constraint (12), the decisions on are coupled across time slots. To facilitate further analysis, we first introduce an upper bound on the discharged energy, and the problem can be rewritten as follows

As will be elaborated later, the proposed solution to also satisfies constraint (12). Next, we define the perturbation parameter and the virtual energy queue, respectively, as

| (15) |

| (16) |

where is a positive control parameter. To stabilize the battery queue, we adopt a similar Lyapunov framework at the MEC server. The Lyapunov drift-plus-penalty is defined as

To minimize the RHS of the above inequality, the MEC server makes decisions on and . The revenue maximization of the MEC server can now be expressed as

Thus, in this vehicular edge computing scenario, we aim to devise a bidirectional pricing and energy management scheme for both vehicle users and the MEC server, while guaranteeing the long-term profit of all agents in the system. To this end, we propose a Stackelberg Game based algorithm in which each agent maximizes its own profit.

IV A Stackelberg Game Approach

In this section, we develop a Stackelberg Game model to analyze the offloading mechanism between vehicular users and the MEC server according to optimization problems and , respectively. First, we formally define the game played in this scenario over the slots. The game involves vehicular users and one MEC server. The bidirectional pricing scheme set by the MEC server affects the offloading decisions and local allocation of vehicles. These decisions in turn affect the server's planning through its total revenue from users. This leads to a typical instance of the Stackelberg Game, where the MEC server acts as the leader and the vehicular users are followers that respond to the leader's decision. We model this one-leader, multi-follower Stackelberg Game as follows.

Definition 1

Vehicular Offloading Stackelberg Game.

Players: vehicular users and 1 MEC server.

Strategies: Each vehicular user determines its own strategy , a combination of the task partition and the locally allocated CPU frequency, to maximize its long-term profit. The MEC server makes a decision at every slot , where is a CPU frequency allocation vector. It also decides the amount of energy to buy from the power grid.

Payoff: Vehicular users benefit from offloading by saving energy while guaranteeing the delay constraint of each task. The MEC server profits from the payments for renting virtual machines to vehicular users that offload their tasks.

As stated in Section III, vehicles and server have their own utility functions, which are also mutually correlated. So seeking the best strategy for each of them is equivalent to optimizing the utility functions of vehicle users and MEC server sequentially.
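Sequential (leader-first) optimization is backward induction: the leader anticipates each follower's best response to a price and then picks its best price. The toy below illustrates only this idea and is not the paper's pricing rule. It assumes each vehicle i maximizes (v_i − p)x − x²/2, which gives the offloaded amount x_i = max(0, v_i − p). The server grid-searches a unit price that maximizes revenue subject to its computation capacity. The valuations, capacities and function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

def follower_demand(price: float, valuations: Sequence[float]) -> float:
    """Total offloaded amount; vehicle i maximizes (v_i - p) x - x**2 / 2."""
    return sum(max(0.0, v - price) for v in valuations)   # x_i = max(0, v_i - p)

def leader_best_price(valuations: Sequence[float], capacity: float,
                      prices: Sequence[float]) -> Optional[Tuple[float, float]]:
    """Backward induction: anticipate follower demand, keep the best feasible price."""
    best: Optional[Tuple[float, float]] = None
    for p in prices:
        demand = follower_demand(p, valuations)
        if demand > capacity:           # server cannot serve this much at price p
            continue
        revenue = p * demand
        if best is None or revenue > best[1]:
            best = (p, revenue)
    return best

grid = [i / 100 for i in range(1001)]                             # prices 0.00 to 10.00
print(leader_best_price([5.0, 7.0], capacity=10.0, prices=grid))  # (3.0, 18.0)
print(leader_best_price([5.0, 7.0], capacity=4.0, prices=grid))   # (4.0, 16.0)
```

In this toy, the server with more capacity sets a lower price and earns more revenue. This direction matches the qualitative trend described for Fig. 7, although the toy model is not the one used there.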

We first address the strategy of vehicular users. Note that the variables are coupled through the constraint in . Hence, we employ the Lagrange dual method. The objective function in is convex with respect to and . The Lagrangian relaxation of is defined as

where is the Lagrange multiplier for constraint . Due to the convexity of the objective function, the minimum of can be derived from the Karush-Kuhn-Tucker (KKT) conditions as follows:

The optimization problem attains its minimum if and only if there exists a unique such that

We can derive the corresponding and as

| (17) |

| (18) |

where , and can be regarded as the unit prices of local computation, offloading to the MEC server, and dropping to the cloud, respectively. These values are derived from the KKT conditions and can be expressed as

| (19) |

| (20) |

| (21) |
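Equations (17) to (21) are not recoverable here. The sketch below only illustrates how three unit prices can steer the partition. It assumes that, once the prices are fixed, the per-slot cost is linear in the local, offloaded and dropped shares, which sum to 1. The local share is capped (e.g., by the delay bound (8)) and so is the offloaded share (e.g., by server resources). That linear program is solved by filling the cheapest option first. The names and cap values are hypothetical.

```python
from typing import Dict

def split_by_unit_price(c_local: float, c_off: float, c_drop: float,
                        alpha_max: float, beta_max: float) -> Dict[str, float]:
    """Fill the cheapest option first, respecting per-option caps; dropping is uncapped."""
    options = sorted([(c_local, "local", alpha_max),
                      (c_off, "offload", beta_max),
                      (c_drop, "drop", 1.0)])
    shares = {"local": 0.0, "offload": 0.0, "drop": 0.0}
    remaining = 1.0
    for _, name, cap in options:
        take = min(cap, remaining)   # never exceed the cap or the unassigned share
        shares[name] = take
        remaining -= take
    return shares

print(split_by_unit_price(2.0, 1.0, 5.0, alpha_max=0.6, beta_max=0.5))
# {'local': 0.5, 'offload': 0.5, 'drop': 0.0}
```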

Proof 2

See Appendix B.

With and selected by (17) and (18), the vehicle then determines the pre-allocated local CPU frequency by solving the problem in with respect to .

Once the vehicular users’ decisions are determined, the MEC server, as the leader of the game, adjusts its decision based on . Examining the server’s optimization problem, we find that can be decomposed into two subproblems

Since the objective of is linear, the purchasing strategy can be selected as

| (22) |

Consistent with intuition, the MEC server supplies task computation from its battery when storage is abundant, whereas purchases from the power grid increase when the battery runs low. We can prove that, under this strategy, the battery level stays within a fixed interval.
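Eq. (22) is not recoverable here, but the threshold behavior just described is what a linear per-slot objective produces. The sketch assumes the subproblem is linear in the grid purchase g, with coefficient V·(grid price) + (B − θ). Here B − θ is the perturbed energy queue, and every unit bought is one unit not extracted from the battery. The minimizer then sits at an endpoint of the feasible interval [max(gap − d_max, 0), gap]. The names and numbers are hypothetical, and efficiencies are ignored for simplicity.

```python
def grid_purchase(battery: float, gap: float, theta: float, V: float,
                  grid_price: float, d_max: float) -> float:
    """Threshold rule for an assumed linear subproblem (hypothetical form of Eq. (22)).

    Assumed objective over g in [max(gap - d_max, 0), gap]:
        (V * grid_price + (battery - theta)) * g + const
    """
    if gap <= 0.0:
        return 0.0                              # renewables cover the demand
    b_tilde = battery - theta                   # virtual (perturbed) energy queue
    if V * grid_price + b_tilde > 0.0:          # battery "abundant": discharge
        return max(gap - d_max, 0.0)            # grid only covers what exceeds d_max
    return gap                                  # battery "thirsty": buy the whole gap

# Hypothetical: theta = 50, V = 10, price 0.5, so discharging starts above a level of 45.
print(grid_purchase(100.0, 3.0, 50.0, 10.0, 0.5, 5.0))  # 0.0
print(grid_purchase(20.0, 3.0, 50.0, 10.0, 0.5, 5.0))   # 3.0
```

If θ ≥ V·(grid price) + d_max, the discharge branch fires only when the battery level exceeds θ − V·(grid price) ≥ d_max. At most d_max is then extracted, which never exceeds the stored energy. This is the kind of argument that connects a bounded battery interval to the causality constraint (12).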

Theorem 1

Under the given strategy in (22), the battery energy level of MEC server is confined within , .

Proof 3

See Appendix C.

Note that the proven range of implies . Hence, constraint (12) is satisfied by .

determines the CPU frequency allocation on the server and the task-specific sale price for each vehicle. Due to the complexity of the original problem, we introduce a Lagrangian dual problem . The allocation and pricing strategy can then be derived from the problem given by

where

and , is the vector of Lagrange multipliers, which must satisfy

| (23) |

The dual problem is solved using the gradient projection method, with the Lagrange multipliers updated as follows:

| (24) |
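The projected-gradient multiplier update can be illustrated on a toy resource-allocation problem. The server splits a CPU capacity among VMs, and the multiplier on the capacity constraint acts like a unit price for CPU frequency. The quadratic utility u_i·f − c·f², the step size and the function names are hypothetical and are not the paper's Eq. (24). The update itself is the standard one: move the multiplier along the constraint violation, then project onto λ ≥ 0.

```python
from typing import List, Sequence, Tuple

def best_response(u: Sequence[float], c: float, lam: float) -> List[float]:
    """Maximize u_i f - c f**2 - lam f per VM: f_i = max(0, (u_i - lam) / (2c))."""
    return [max(0.0, (ui - lam) / (2.0 * c)) for ui in u]

def dual_projected_gradient(u: Sequence[float], c: float, capacity: float,
                            step: float = 0.1, iters: int = 500) -> Tuple[float, List[float]]:
    lam = 0.0
    for _ in range(iters):
        f = best_response(u, c, lam)
        violation = sum(f) - capacity            # minus the gradient of the dual function
        lam = max(0.0, lam + step * violation)   # descent step, projected onto lam >= 0
    return lam, best_response(u, c, lam)

lam, f = dual_projected_gradient([4.0, 6.0, 8.0], c=1.0, capacity=3.0)
print(round(lam, 6), [round(x, 6) for x in f])  # 4.0 [0.0, 1.0, 2.0]
```

The lowest-utility VM is priced out at the equilibrium multiplier, and the remaining allocations exactly exhaust the capacity.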

The whole decision process is summarized in Algorithm 1, whose core idea is to optimize , and iteratively and alternately.

We have derived three different optimization objectives under different cases, i.e. , , . They are defined as below:

Because the objective functions are linear or quadratic, the solutions to all three optimization problems can be derived efficiently. The solution procedure is given in Appendix D.

V Numerical Results

In this section, we verify the theoretical results derived in Section IV and evaluate the performance of the proposed algorithm through simulations. We consider an MEC system with = 50 randomly deployed mobile vehicles. We also set , cycles/bit and GHz for all mobile vehicles. The size of each locally generated computation task is uniformly distributed within [10, 20] units, where each unit represents 1000 bits. The simulations are run over 1000 consecutive time slots with slot length ms. The control parameter is varied from to .

We compare our proposed method with the existing paradigms listed below:

i) : No offloading happens in this scenario. All the tasks are executed locally, with local CPU frequency that maximizes .

ii) : The vehicles stochastically offload part of their tasks to the MEC Server, which accepts these offloaded tasks as long as it has surplus computational resources. Otherwise, the tasks are offloaded to the cloud.

iii) : The vehicles decide whether to execute tasks locally or offload them. As a mainstream method in the preceding literature [3], this approach focuses on maintaining the stability of a task backlog queue. For a fair comparison, all other settings are the same as in our framework.

V-A Service Delay Performance

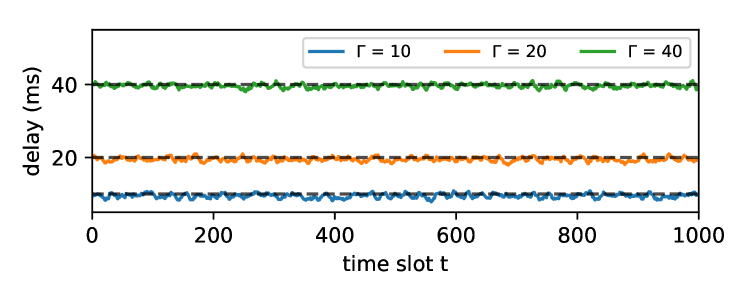

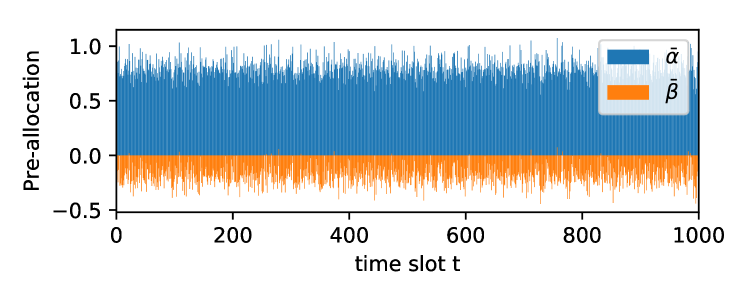

Fig. 1 shows the delay evolution for different task deadlines over the testing period. The black dashed lines indicate the delay requirement of each type of task. We observe that our method meets the average delay requirement by stabilizing the delay queue. Fig. 2 shows the corresponding pre-allocation strategy the vehicle uses to stabilize its delay queue. The results show that our framework enables the vehicles to fully utilize the preset deadline, so that the delay fluctuates close to the dashed line.

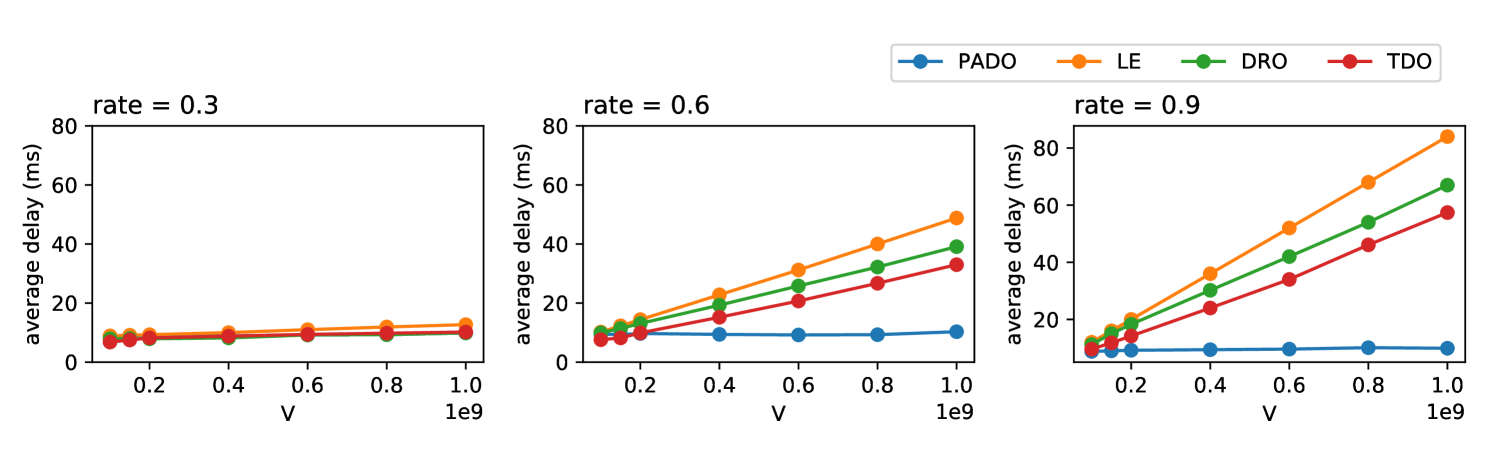

In Fig. 3, we compare the service delay of the PADO algorithm with that of the three benchmark methods under different task arrival rates, which reflect the workload intensity.

When the task arrival rate is low, all approaches can easily handle the workload with the vehicles’ local computation resources. In this case, PADO allocates its resources more judiciously while meeting the delay requirement. When the task arrival rate becomes high, the average delay of every benchmark increases linearly with V and becomes unbounded as V goes to infinity. In contrast, with our pre-allocation framework PADO, the service delay can be controlled in advance via a predefined parameter. This property holds particularly well when the control parameter is relatively small, i.e., when the vehicle attaches more importance to service delay.

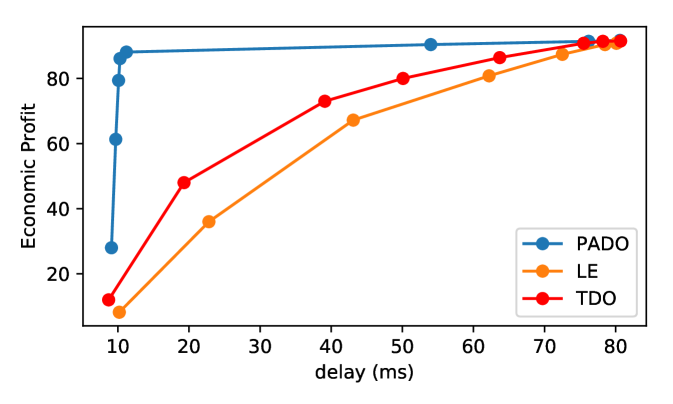

V-B Economic Profit of Vehicles

Besides the delay requirement, our framework also focuses on the economic profit of each vehicle, which here means minimizing local energy consumption, offloading payment and dropping loss. The PADO framework can thus be summarized as searching for the highest economic profit that satisfies the tasks’ delay constraints. From Fig. 4, we observe that methods based on the Lyapunov optimization framework exhibit a tradeoff between task delay and execution expenditure. For the Local Execution Strategy (LE), a low control parameter leads it to allocate more local CPU resources to reduce execution delay, so the economic profit is low. On the other hand, as grows, the economic profit rises owing to lower local energy consumption, while the delay rises correspondingly. For TDO and PADO, this process is more complex, as the vehicles can decide their offloading strategy by tuning and , and both show a tradeoff similar to that of LE. The difference between TDO and PADO is that PADO has a phase in which the delay is stabilized while the economic profit varies considerably. As stated above, vehicles in this phase fully utilize the deadline and optimize their economic profit. We also observe that the three methods converge to the same point in Fig. 4. When V is very large, more emphasis is placed on economic profit, and the most efficient way to lower expenditure is to execute tasks locally regardless of delay. However, such a setting should be avoided in real-world applications, as the resulting offloading strategy does not take advantage of the service provided by the MEC Server.

V-C Performance on MEC Server

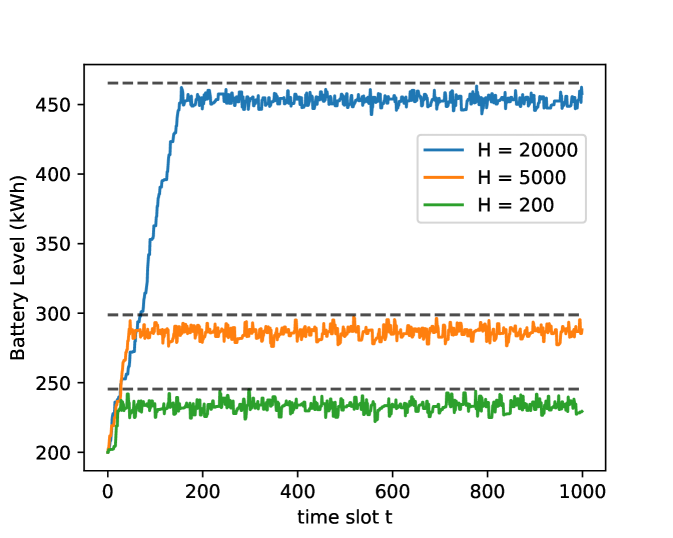

Our PADO framework also considers the performance of the service provider, namely the MEC Server. One of the main concerns of the MEC Server is battery charge/discharge management. First, Fig. 5 illustrates the evolution of the battery level under control parameter values ranging from 200 to 20000. It directly shows the stability of the battery level, which has been theoretically proven in Appendix A. The battery is initially charged with 200 kWh of energy. For different , the battery levels first increase linearly and then fluctuate within a fixed range. Throughout the time slots, the battery level never crosses the upper bound , which is represented by the black dashed lines in Fig. 5 for each value of . As becomes larger, the stable battery level also increases, implying that the battery capacity should be expanded for larger .

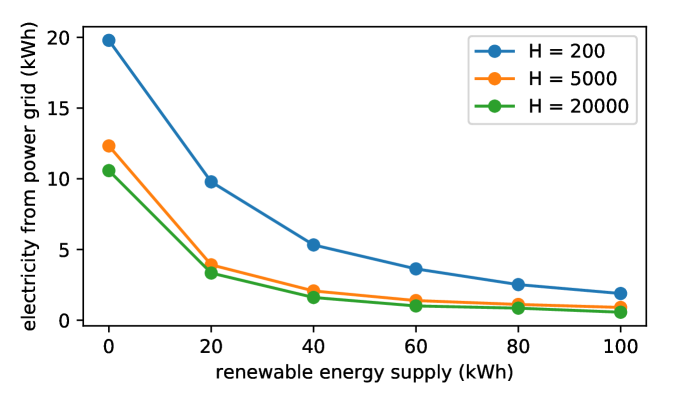

Fig. 6 shows the energy management strategy of the MEC Server. One of the main challenges of using renewable energy as a power supply is its unstable generation. Our PADO framework enables the MEC Server to switch its energy source automatically. When the renewable energy supply is low, the energy purchased from the power grid increases to maintain the battery level and provide service to vehicles. In addition, when the renewable energy supply decreases, the overall energy supply (from the power grid and the renewable source) also declines. We also compare the energy management strategy under different . When is large, the MEC Server places more weight on expenditure, so the purchased amount drops accordingly.

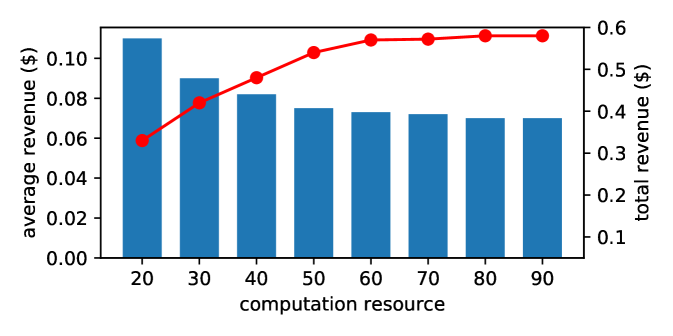

In our MEC setting, the MEC Server has limited computation resources, which means it cannot serve all offloading requests. The server may accept or reject some of them by tuning its sale price to maximize its own profit. Fig. 7 shows the relation between the amount of computation resources the MEC Server owns and its transaction price with vehicles. As the MEC Server has more computation resources, the average price (i.e., the average transaction price per unit of computation task) decreases to attract more offloading requests from vehicles. The loss of per-unit income is worthwhile for the MEC Server, since it can earn the income back by expanding its customer base. Accordingly, we also observe an increase in total revenue. The unit price stops decreasing once the MEC Server has abundant computation resources, because a further price decrease would not increase the server's overall profit.

Appendix A

We prove the constraints for by induction. First, if , the battery has abundant energy, so the server chooses to discharge energy from the battery. So . Moreover, . Hence, according to our definition of in (15). When , . Also, . Therefore, we have .

References

- [1] T. D. Burd and R. W. Brodersen. Processor design for portable systems. Journal of VLSI signal processing systems for signal, image and video technology, 13(2-3):203–221, 1996.

- [2] N. Cordeschi, D. Amendola, and E. Baccarelli. Reliable adaptive resource management for cognitive cloud vehicular networks. IEEE Transactions on Vehicular Technology, 64(6):2528–2537, 2014.

- [3] J. Du, F. R. Yu, X. Chu, J. Feng, and G. Lu. Computation offloading and resource allocation in vehicular networks based on dual-side cost minimization. IEEE Transactions on Vehicular Technology, 68(2):1079–1092, 2018.

- [4] Y. Liu and M. J. Lee. An effective dynamic programming offloading algorithm in mobile cloud computing system. In 2014 IEEE Wireless Communications and Networking Conference (WCNC), pages 1868–1873. IEEE, 2014.

- [5] Y. Mao, J. Zhang, and K. B. Letaief. Dynamic computation offloading for mobile-edge computing with energy harvesting devices. IEEE Journal on Selected Areas in Communications, 34(12):3590–3605, 2016.

- [6] H. Qiu, F. Ahmad, R. Govindan, M. Gruteser, F. Bai, and G. Kar. Augmented vehicular reality: Enabling extended vision for future vehicles. In Proceedings of the 18th International Workshop on Mobile Computing Systems and Applications, pages 67–72. ACM, 2017.

- [7] J. M. Rabaey, A. P. Chandrakasan, and B. Nikolic. Digital integrated circuits, volume 2. Prentice Hall, Englewood Cliffs, 2002.

- [8] K. Sasaki, N. Suzuki, S. Makido, and A. Nakao. Vehicle control system coordinated between cloud and mobile edge computing. In 2016 55th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), pages 1122–1127. IEEE, 2016.

- [9] A. Vu, A. Ramanandan, A. Chen, J. A. Farrell, and M. Barth. Real-time computer vision/DGPS-aided inertial navigation system for lane-level vehicle navigation. IEEE Transactions on Intelligent Transportation Systems, 13(2):899–913, 2012.

- [10] W. Wang and W. Zhou. Computational offloading with delay and capacity constraints in mobile edge. In 2017 IEEE International Conference on Communications (ICC), pages 1–6. IEEE, 2017.

- [11] Y. Xu and S. Mao. A survey of mobile cloud computing for rich media applications. IEEE Wireless Communications, 20(3):46–53, 2013.

- [12] B. Yang, J. Li, Q. Han, T. He, C. Chen, and X. Guan. Distributed control for charging multiple electric vehicles with overload limitation. IEEE Transactions on Parallel and Distributed Systems, 27(12):3441–3454, 2016.

- [13] M. Zaharia, M. Chowdhury, M. J. Franklin, S. Shenker, and I. Stoica. Spark: Cluster computing with working sets. HotCloud, 10(10-10):95, 2010.

- [14] G. Zhang, W. Zhang, Y. Cao, D. Li, and L. Wang. Energy-delay tradeoff for dynamic offloading in mobile-edge computing system with energy harvesting devices. IEEE Transactions on Industrial Informatics, 14(10):4642–4655, 2018.