A QR Algorithm for Symmetric Tensors

Abstract

We extend the celebrated QR algorithm for matrices to symmetric tensors. The algorithm, named QR algorithm for symmetric tensors (QRST), exhibits similar properties to its matrix version, and allows the derivation of a shifted implementation with faster convergence. We further show that multiple tensor eigenpairs can be found from a local permutation heuristic which is effectively a tensor similarity transform, resulting in the permuted version of QRST called PQRST. Examples demonstrate the remarkable effectiveness of the proposed schemes for finding stable and unstable eigenpairs not found by previous tensor power methods.

keywords:

Symmetric tensor, QR algorithm, shift, eigenpairAMS:

15A69,15A181 Introduction

The study of tensors has become intensive in recent years, e.g., [9, 13, 3, 8, 10, 11]. Symmetric tensors arise naturally in various engineering problems. They are especially important in the problem of blind identification of under-determined mixtures [1, 5, 2, 15]. Applications are found in areas such as speech, mobile communications, biomedical engineering and chemometrics. Although in principle, the tensor eigenproblem can be fully solved by casting it into a system of multivariate polynomial equations and solving them, this approach is only feasible for small problems and the scalability hurdle quickly defies its practicality. For larger problems, numerical iterative schemes are necessary and the higher-order power methods (HOPMs) presented in [3, 8, 10, 11] are pioneering attempts in this pursuit. Nonetheless, HOPM and its variants, e.g., SS-HOPM [10] and GEAP [11], suffer from the need of setting initial conditions and that only one stable eigenpair is computed at a time.

In the context of matrix eigenpair computation, the QR algorithm is much more powerful and widely adopted, yet its counterpart in the tensor scenario seems to be lacking. Interestingly, the existence of a tensor QR algorithm was questioned at least a decade ago [7], which we try to answer here. Specifically, the central idea of the symmetric QR algorithm is to progressively align the original matrix (tensor) onto its eigenvector basis yet preserving the particular matrix (tensor) structure, viz. symmetry, by similarity transform. In that regard, the tensor nature does not preclude itself from having a tensor QR algorithm counterpart. The main contribution of this paper is an algorithm, called the QR algorithm for Symmetric Tensors (QRST), that computes the real eigenpair(s) of a real th-order -dimensional symmetric tensor . We show that QRST also features a shifted version to accelerate convergence. Moreover, a permutation heuristic is introduced, captured in the algorithm named permuted QRST (PQRST), to scramble the tensor entries to produce possibly more distinct eigenpairs. We emphasize that the current focus is essentially a demonstration of the feasibility of QR algorithm for tensors, rather than an effort to develop efficient QRST implementations which will definitely involve further numerical work and smart shift design. We believe the latter will come about when QRST has become recognized.

This paper is organized as follows. Section 2 reviews some tensor notions and operations, and defines symmetric tensors and real eigenpairs. Section 3 proposes QRST and PQRST, together with a shift strategy and convergence analysis. Section 4 demonstrates the efficacy of (P)QRST through numerical examples, and Section 5 draws the conclusion.

2 Tensor Notations and Conventions

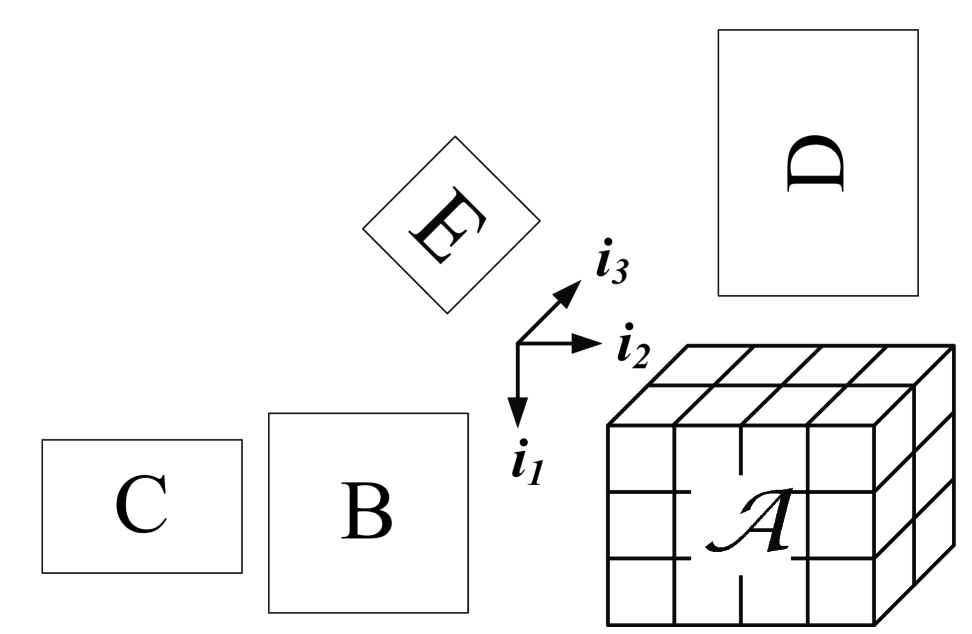

Here we present some tensor basics and notational conventions adopted throughout this work. The reader is referred to [9] for a comprehensive overview. A general th-order or -way tensor, assumed real throughout this paper, is a multidimensional array that can be perceived as an extension of the matrix format to its general th-order counterpart [9]. We use calligraphic font (e.g., ) to denote a general () tensor and normal font (e.g., ) for the specific case of a matrix (). The inner product between two tensors is defined as

| (1) |

where is the vectorization operator that stretches the tensor entries into a tall column vector. Specifically, for the index tuple , the convention is to treat as the fastest changing index while the slowest, so will arrange from top to bottom, namely, , , , , , . The norm of a tensor is often taken to be the Frobenius norm .

The -mode product of a tensor with a matrix is defined by [9]

| (2) |

and . For distinct modes in a series of tensor-matrix multiplication, the ordering of the multiplication is immaterial, namely,

| (3) |

whereas it matters for the same mode,

| (4) |

This is in fact quite easy to recognize by referring to Figure 1 where . As an example, for matrices with compatible dimensions, it can be readily shown that . Apparently, for whereby denotes the identity matrix.

2.1 Symmetric Tensors and Real Eigenpairs

In this paper we focus on symmetric tensors. In particular, a th-order symmetric tensor has all its dimensions being equal, namely, , and , where is any permutation of the indices . To facilitate notation we further introduce some shorthand specific to symmetric tensors. First, we use to denote the set of all real symmetric th-order -dimensional tensors. When a row vector is to be multiplied onto modes of (due to symmetry it can be assumed without loss of generality that all but the first modes are multiplied with ), we use the shorthand

| (5a) | ||||

| (5b) | ||||

| (5c) | ||||

| (5d) | ||||

| (5e) | ||||

This is a contraction process because the resulting tensors are progressively shrunk wherein we assume any higher order singleton dimensions () are “squeezed”, e.g., is treated as an matrix rather than its equivalent tensor. Besides contraction by a row vector, the notation generalizes to any matrix of appropriate dimensions. Suppose we have a matrix , then

| (6) |

Now, the real eigenpair of a symmetric tensor , namely, , is defined as

| (7) |

This definition is called the (real) Z-eigenpair [16] or -eigenpair [14] which is of interest in this paper, apart from other differently defined eigenpairs, e.g., [11]. It follows that

| (8) |

For , depending on whether is odd or even, we have the following result regarding eigenpairs that occur in pairs [10]:

Lemma 1.

For an even-order symmetric tensor, if is an eigenpair, then so is . For an odd-order symmetric tensor, if is an eigenpair, then so is .

Proof.

For an even , we have . For an odd , we have .∎

Subsequently, we do not call two eigenpairs distinct if they follow the relationship in Lemma 1. For even-order symmetric tensors, there exists a symmetric identity tensor counterpart such that [16, 10]

| (9) |

The constraint that is necessary so it holds for all even . Obviously, is an eigenpair for . It is also obvious that such an identity tensor does not exist for an odd since contracting with does not lead to itself. Consequently, for an even , we have similar shifting of eigenvalues when we add a multiple of to , i.e., if is an eigenpair of , then is an eigenpair of .

2.2 Similar Tensors

In this paper, we say that two symmetric tensors are similar, denoted by , if for some non-singular

| (10) |

so that the inverse transform of by will return , namely,

| (11) |

Suppose is similar to through a similarity transform by an orthogonal matrix , i.e., and . It follows that if is an eigenpair of , then is an eigenpair of where denotes the first column of . This is seen by

| (12) |

In fact, we do not need to limit ourselves to and the above result easily generalizes to any , which we present as lemma 2.

Lemma 2.

For a symmetric tensor , if is an eigenpair of , then is an eigenpair of where is the th column of the orthogonal matrix .

Proof.

The proof easily follows from (12). ∎

3 QR Algorithm for Symmetric Tensors

The conventional QR algorithm for a symmetric matrix starts with an initial symmetric matrix , and computes its QR factorization . Next, the QR product is reversed to give . And in subsequent iterations, we have , and where we define . Under some mild assumptions, will converge to a diagonal matrix holding the eigenvalues of . In the following, we present QRST which computes multiple, stable and unstable [10], eigenpairs. Moreover, with a permutation strategy to scramble tensor entries, PQRST can produce possibly even more distinct eigenpairs, rendering such tensor QR algorithm much more effective compared to tensor power methods [10, 11] which find only one stable eigenpair at a time.

3.1 QRST

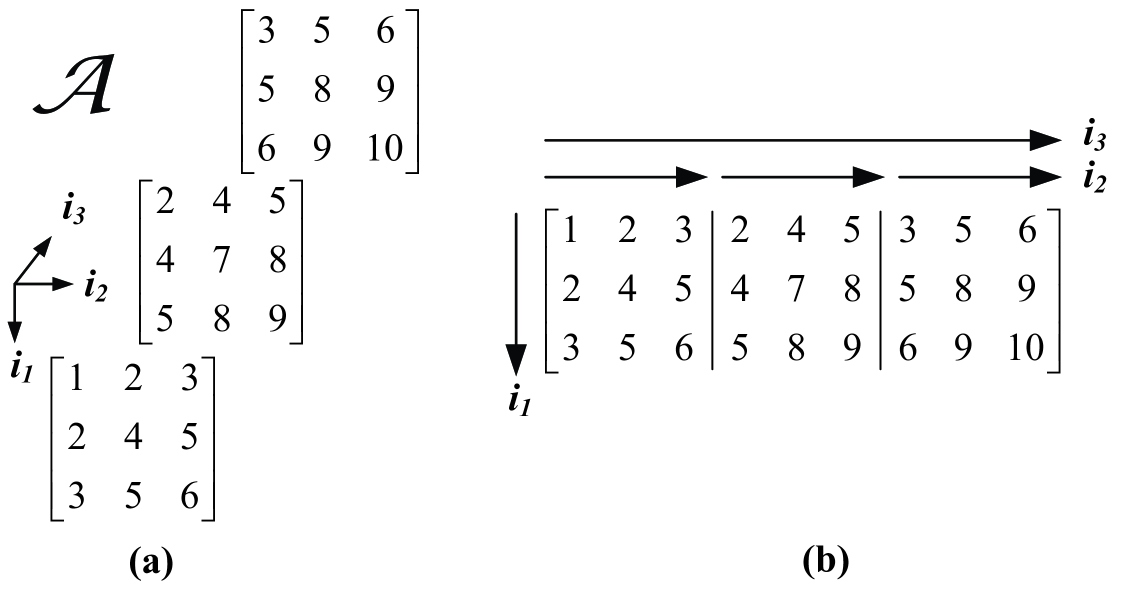

The symmetric tensor in Figure 2(a) will be used as a running example. We call it a labeling matrix as it labels each distinct entry in a symmetric tensor sequentially with a natural number. Moreover, Figure 2(b) is the matricization of this labeling matrix along the first mode [9]. As a matter of fact, matricization about any mode of gives rise to the same matrix due to symmetry.

Now we formally propose the QRST algorithm, summarized in Algorithm 3.1. The core of QRST is a two-step iteration, remarked in Algorithm 3.1 as Steps 1 and 2, for the th slice where . In the Algorithm, it should be noted that Matlab convention is used whereby it can be recognized that is a column and is a (symmetric) square matrix.

Algorithm 3.1.

(Shifted) QRST

Input: , tolerance , maximum iteration , shifts from shift scheme

Output: eigenpairs

for do # \eqparboxCOMMENTiterate through the th square slice

iteration count

while or do

Step 1: # perform QR factorization

Step 2: # similarity transform

end while

end for

if converged then

end if

Referring to Figure 2(b), if we take , and start with , the first (leftmost) “slice” of the matrix is extracted for QR factorization to get , and then all three modes of are multiplied with so the tensor symmetry is preserved. Using Algorithm 3.1 with the heuristic shift described in Section 3.2, this tensor QR algorithm converges in iterations when becomes a multiple of (i.e., all but the first entry in the column are numerically zero), resulting in

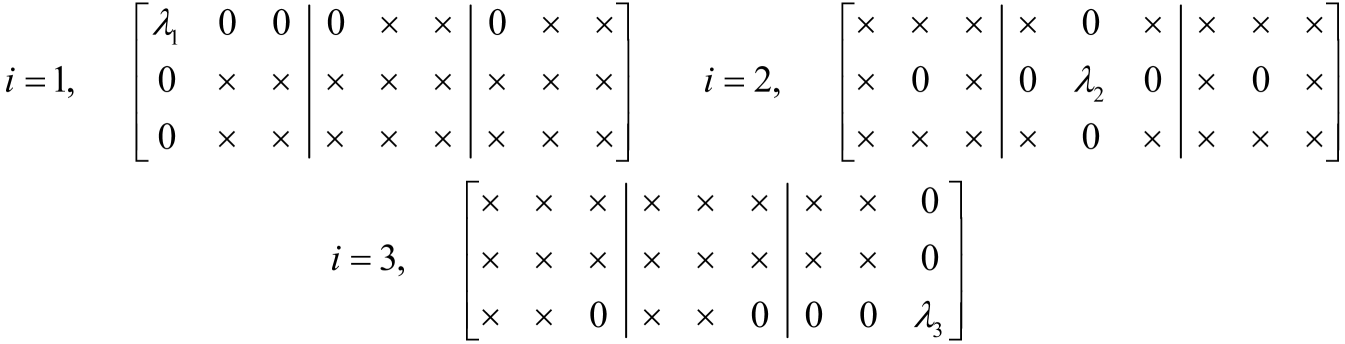

for which is obviously an eigenvector as its contraction along the and axes will extract the first column . Using (12), this corresponds to the eigenpair of the labeling tensor. In fact, for different ’s, we shoot for different converged patterns in the corresponding square slices as depicted in Figure 3, where it is obvious that for each .

Since the core computation of QRST lies in the QR factorization () and the corresponding similarity transform (), the overall complexity is therefore dominated by the similarity transform process. Note that this high complexity comes about whereby the tensor symmetry is not exploited. When a low-rank decomposition for exists that represents as a finite sum of rank- outer factors, the complexity of QRST can be largely reduced to . However, we refrain from elaborating as it is beyond our scope of verifying the idea of QRST. Such low-rank implementation will be presented separately in a sequel of this work.

3.2 Convergence and Shift Selection

We assume an initial symmetric . The vector denotes the th column of the identity matrix and we define with and etc. A tensor with higher order singleton dimensions is assumed squeezed, e.g., is regarded as an matrix rather than its equivalent tensor. Also, we use to denote the th column in and to stand for the -entry of . It will be shown that the unshifted QRST operating on the matrix slice resulting from contracting all but the first two modes by , i.e., , is in fact an orthogonal iteration. This can be better visualized by enumerating a few iterations of QRST:

| (13a) | ||||

| (13b) | ||||

| (13c) | ||||

| (13d) | ||||

| (13e) | ||||

etc. From (13), we see that in general for ,

| (14a) | ||||

| (14b) | ||||

Multiplying to both sides of (14b) we get

| (22) | |||

| (29) |

It should now become obvious that (29) is the tensor generalization of the matrix orthogonal iteration [6] (also called simultaneous iteration or subspace iteration) for finding dominant invariant eigenspace of matrices111Unlike the matrix case with a constant (static) matrix , the tensor counterpart comes with a dynamic that is updated in every pass with the th column of .. This result comes at no surprise as the matrix QR algorithm is known to be equivalent to orthogonal iteration applied to a block column vector. However, our work confirms, for the first time, this is also true also for symmetric tensors.

Now, if we set and extract the first columns on both sides of (29), we get

| (30) |

which is just the unshifted S-HOPM method [3, 8] cited as Algorithm 1 in [10]. If we start QRST with , then the first two columns of (29) proceed as

| (31a) | ||||

| (31b) | ||||

Assuming convergence at ,

| (32a) | ||||

| (32b) | ||||

from which we can easily check that due to (32a). In fact, for any , if and converge to and , respectively, then from (29), we must have

| (37) |

where is diagonal due to symmetry on the right of equality. Now if we pre-multiply onto both sides of (37) and post-multiply with , then

| (38) |

which boils down to the eigenpair when . So in effect, (shifted) QRST finds more than just the conventional eigenpairs, but actually all “local” eigenpairs of the square matrix , one of them happens to be the tensor eigenpair. This explains why in practice, (shifted) QRST can locate even unstable tensor eigenpairs as it is not restricted to the “global” convexity/concavity constraint imposed on the function that always leads to positive/negative stable eigenpairs [10].

Till now, it can be seen that except for , (shifted) QRST differs from the (S)S-HOPM algorithm presented in [10, 11], and convergence is generally NOT ensured for . However, inspired by (32b), the key for convergence starts with the convergence of the first column that reads

| (39) |

Consequently, applying results in [10, 11], convergence of (39) can be enforced locally around provided the scalar function is convex (concave), which is “localized” in the sense that the contracted is used instead of the whole . Then, for example, convexity can be achieved by adding a shift that makes positive semidefinite, namely,

| (40) |

So the shift step in Algorithm 3.1 is simply where is a small positive quantity which is set to be unity in our tests unless otherwise specified. This is analogous to the core step in SS-HOPM (Algorithm 2 of [10]). In short, the shift strategy in [10, 11] can be locally reused for QRST with the advantage that QRST may further compute the unstable eigenpairs alongside the positive/negative-stable ones, since a group of eigenpairs are generated at a time due to analogy of QRST to orthogonal iteration.

3.3 PQRST

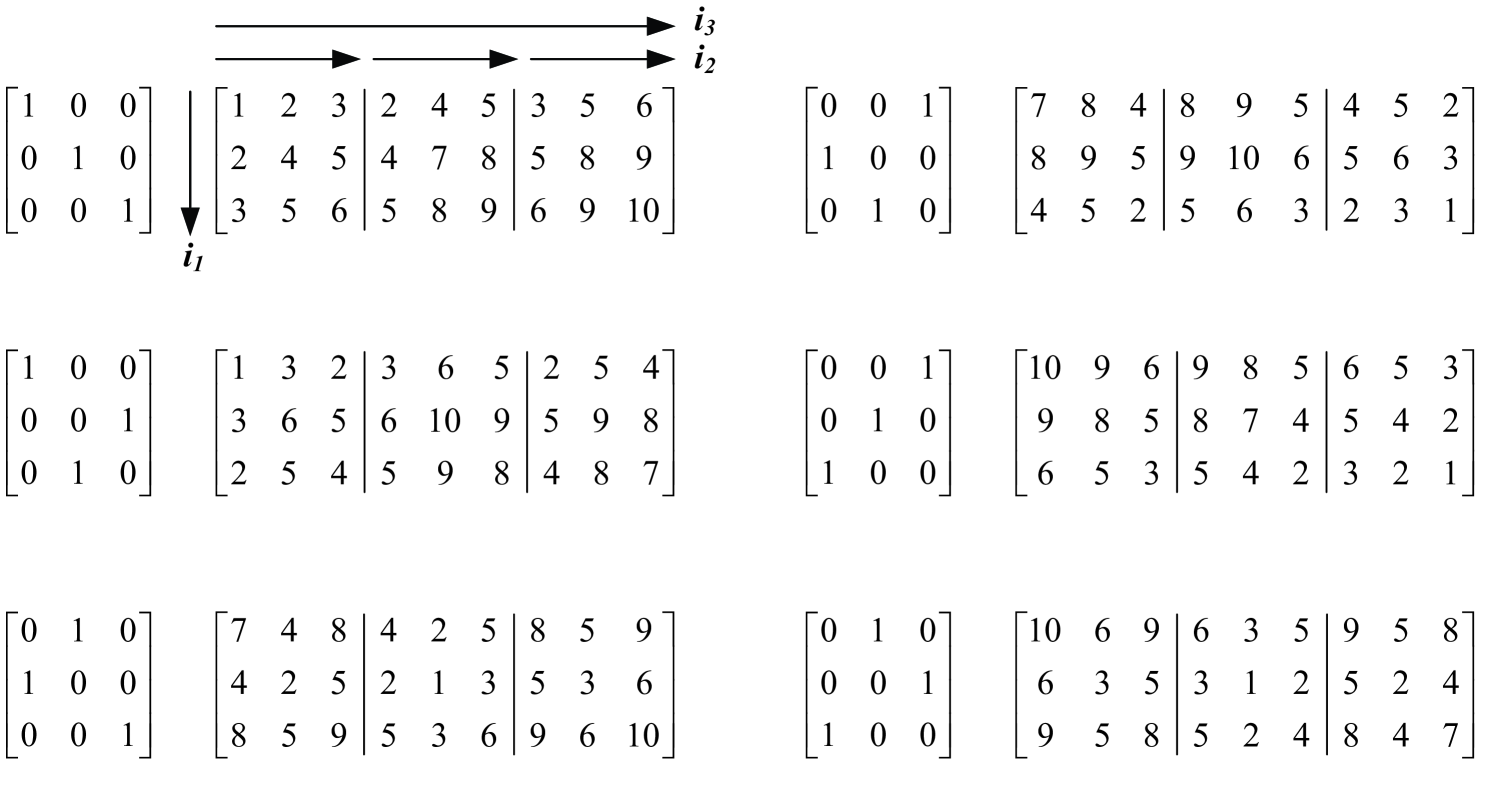

For a symmetric , QRST produces at most eigenpairs in one pass, and fewer than eigenpairs can result when QRST does not converge or when the same eigenpair is computed repeatedly. To increase the probability of generating distinct eigenpairs, and noting that eigenvectors of similar tensors are just a change of basis, we introduce the heuristic of permutation to scramble the entries in as much as possible. Figure 4 shows how the labeling tensor is permuted by the (orthogonal) permutation matrices.

As such, we can run QRST on a tensor subject to different permutations. We call this permuted QRST or PQRST, summarized in Algorithm 3.2.

Algorithm 3.2.

PQRST

Input: , preconditioning orthogonal matrices in a cell ,

Output: eigenpairs

for do

call QRST Algorithm 3.1 with and collect distinct converged eigenpairs

end for

Of course, PQRST requires running QRST times which quickly becomes impractical for large . In that case, we should limit the number of permutations by sampling across all possible permutations or use other heuristics for setting the initial pre-transformed , which is our on-going research.

4 Numerical Examples

In this section, we compare PQRST with SS-HOPM from [10], and with its adaptive version described in [12]. For all the SS-HOPM runs the shift was chosen as . We considered the iteration to have converged when . If the eigenpairs are not known in advance then we also solve the corresponding polynomial system using the numerical method described in [4]. All numerical experiments were done on a quad-core desktop computer with 16 GB memory. Each core runs at 3.3 GHz.

4.1 Example 1

As a first example we use the labeling tensor of Figure 2(a). Due to the odd order of the tensor we have that both and are eigenpairs. From solving the polynomial system we learn that there are 4 distinct eigenpairs, listed in Table 1. The stability of each of these eigenpairs was determined by checking the signs of the eigenvalues of the projected Hessian of the Lagrangian as described in [10].

| stability | ||

|---|---|---|

| 30.4557 | negatively stable | |

| 0.4961 | positively stable | |

| 0.1688 | positively stable | |

| 0.1401 | unstable |

100 runs of the SS-HOPM algorithm were performed, each with a different random initial vector. Choosing the random initial vectors from either a uniform or normal distribution had no effect whatsoever on the final result. The shift was fixed to the “conservative” choice of . All 100 runs converged to the eigenpair with the eigenvalue . The median of number of iterations is 169. One run of 169 iterations took about 0.02 seconds. Next, 100 runs of the adaptive SS-HOPM algorithm were performed with a tolerance of . When the initial guess for was a random vector sampled from a uniform distribution, then all 100 runs consistently converged to the eigenpair with a median number of 14 iterations. This clearly demonstrates the power of the adaptive shift in order to reduce the number of iterations. Choosing the initial vector from a normal distribution resulted in finding the 3 stable eigenpairs and 2 iterations not converging (Table 2). The error was computed as the average value of . Similar to the SS-HOPM algorithm, the PQRST algorithm without shifting can only find the “largest” eigenpair with a median of 13 iterations. This took about 0.082 seconds. PQRST with shift results in 18 runs that find all eigenpairs (Table 3). From the 18 runs, 8 did not converge.

| occ. | median its | error | ||

|---|---|---|---|---|

| 58 | 30.4557 | 15 | ||

| 23 | 0.4961 | 225 | ||

| 17 | 0.1688 | 701 |

| occ. | median its | error | ||

|---|---|---|---|---|

| 2 | 30.4557 | 18 | ||

| 2 | 0.4961 | 69.5 | ||

| 2 | 0.1688 | 246 | ||

| 4 | 0.1401 | 201 |

4.2 Example 2

For the second example we consider the 4th-order tensor from Example 3.5 in [10]. Solving the polynomial system shows that there are 11 distinct eigenpairs (Table 4).

| stability | ||

|---|---|---|

| 0.8893 | negatively stable | |

| 0.8169 | negatively stable | |

| 0.5105 | unstable | |

| 0.3633 | negatively stable | |

| 0.2682 | unstable | |

| 0.2628 | unstable | |

| 0.2433 | unstable | |

| 0.1735 | unstable | |

| -0.0451 | positively stable | |

| -0.5629 | positively stable | |

| -1.0954 | positively stable |

Both a positive and negative shift are used for the (adaptive) SS-HOPM runs. Tables 5 and 6 list the results of applying 200 (100 positive shifts and 100 negative shifts) SS-HOPM and adaptive SS-HOPM runs to this tensor. Using the conservative shift of and the same convergence criterion as in Example 4.1 resulted in 27 runs not converging for the SS-HOPM algorithm. Again, the main strength of the adaptive SS-HOPM is the reduction of number of iterations required to converge. A faster convergence also implies a smaller error as observed in the numerical results. The same initial random vectors were used for both the SS-HOPM and adaptive SS-HOPM runs. Observe that in the 100 adaptive SS-HOPM runs with a negative shift the eigenpair with eigenvalue was never found. The total run time for 100 iterations of one adaptive SS-HOPM algorithm took about 0.025 seconds, which means that 100 runs take about 2.5 seconds to complete.

Similarly, the PQRST algorithm without shifting does not converge in any of the permutations. PQRST however retrieves all eigenpairs except one (Table 7). The total time to run PQRST for all permutations for 100 iterations took about 0.35 seconds.

| occ. | median its | error | ||

|---|---|---|---|---|

| 2 | 0.8893 | 886 | ||

| 30 | 0.8169 | 644 | ||

| 41 | 0.3633 | 931 | ||

| 55 | -0.0451 | 680 | ||

| 27 | -0.5629 | 386 | ||

| 18 | -1.0954 | 357.5 |

| occ. | median its | error | ||

|---|---|---|---|---|

| 19 | 0.8893 | 69 | ||

| 30 | 0.8169 | 33 | ||

| 51 | 0.3633 | 26 | ||

| 78 | -0.5629 | 18 | ||

| 22 | -1.0954 | 34 |

| occ. | median its | error | ||

|---|---|---|---|---|

| 4 | 0.8893 | 96.5 | ||

| 2 | 0.8169 | 70 | ||

| 1 | 0.5105 | 33 | ||

| 4 | 0.2682 | 186.5 | ||

| 2 | 0.2628 | 46 | ||

| 2 | 0.2433 | 45.5 | ||

| 1 | 0.1735 | 45 | ||

| 6 | -0.0451 | 34 | ||

| 2 | -0.5629 | 19 | ||

| 4 | -1.0954 | 25.5 |

4.3 Example 3

For the third example we consider the 3rd-order tensor from Example 3.6 in [10]. Solving the polynomial system reveals that there are 4 stable and 3 unstable distinct eigenpairs (Table 8).

| stability | ||

|---|---|---|

| 0.8730 | negatively stable | |

| 0.4306 | positively stable | |

| 0.2294 | unstable | |

| 0.0180 | negatively stable | |

| 0.0033 | unstable | |

| 0.0018 | unstable | |

| 0.0006 | positively stable |

The results of running SS-HOPM, adaptive SS-HOPM and PQRST on this tensor exhibit very similar features as in Example 4.1. Just like in Example 4.1, the SS-HOPM algorithm can only retrieve the eigenpair with the largest eigenvalue when the initial random vector is drawn from a uniform distribution. When the initial guess is drawn from a normal distribution, SS-HOPM retrieves 2 eigenpairs in 100 runs while the adaptive SS-HOPM retrieves the 4 stable eigenpairs. (Table 9). The shifted PQRST algorithm () retrieves all 7 eigenpairs (Table 10).

| occ. | median its | error | ||

|---|---|---|---|---|

| 43 | 0.8730 | 13 | ||

| 35 | 0.4306 | 22 | ||

| 16 | 0.0180 | 43 | ||

| 6 | -0.0006 | 18.5 |

| occ. | median its | error | ||

|---|---|---|---|---|

| 1 | 0.8730 | 569 | ||

| 5 | 0.4306 | 56 | ||

| 2 | 0.2294 | 511 | ||

| 2 | 0.0180 | 873 | ||

| 2 | 0.0033 | 1328 | ||

| 2 | 0.0018 | 1177 | ||

| 2 | 0.0006 | 3929.5 |

4.4 Example 4

As a last example we consider a random symmetric tensor in . Both SS-HOPM and the adaptive SS-HOPM are able to find 4 distinct eigenpairs in 100 runs. Since the difference in the results between SS-HOPM and the adaptive SS-HOPM lies in the total number of iterations we only list the results for the adaptive SS-HOPM method in Table 11.

| occ. | median its | error | ||

|---|---|---|---|---|

| 23 | 2.4333 | 79 | ||

| 23 | 4.3544 | 25 | ||

| 33 | 3.4441 | 52 | ||

| 21 | 2.8488 | 70 |

In contrast, PQRST with shift finds 7 distinct eigenpairs. The 3 additional eigenpairs are unstable.

| occ. | median its | error | ||

|---|---|---|---|---|

| 24 | 1.3030 | 599 | ||

| 576 | 2.4333 | 75 | ||

| 216 | 4.3544 | 148 | ||

| 120 | 3.4441 | 41 | ||

| 240 | 2.8488 | 48 | ||

| 120 | 1.6652 | 773 | ||

| 132 | 1.2643 | 150 |

5 Conclusions

The QR algorithm for finding eigenpairs of a matrix has been extended to its symmetric tensor counterpart called QRST (QR algorithm for symmetric tensors). The QRST algorithm, like its matrix version, permits a shift to accelerate convergence. A preconditioned QRST algorithm, called PQRST, has further been proposed to efficiently locate multiple eigenpairs of a symmetric tensor by orienting the tensor into various directions. Numerical examples have verified the effectiveness of (P)QRST in locating stable and unstable eigenpairs not found by previous tensor power methods.

References

- [1] P. Comon and M. Rajih, Blind identification of under-determined mixtures based on the characteristic function, Signal Processing, 86 (2006), pp. 2271 – 2281. Special Section: Signal Processing in {UWB} Communications.

- [2] L. De Lathauwer, J. Castaing, and J. Cardoso, Fourth-order cumulant-based blind identification of underdetermined mixtures, Signal Processing, IEEE Transactions on, 55 (2007), pp. 2965–2973.

- [3] L. De Lathauwer, B. De Moor, and J. Vandewalle, On the best rank- and rank-(, ,…, ) approximation of higher-order tensors, SIAM Journal on Matrix Analysis and Applications, 21 (2000), pp. 1324–1342.

- [4] P. Dreesen, Back to the Roots: Polynomial System Solving Using Linear Algebra, PhD thesis, Faculty of Engineering, KU Leuven (Leuven, Belgium), 2013.

- [5] A Ferreol, L. Albera, and P. Chevalier, Fourth-order blind identification of underdetermined mixtures of sources (FOBIUM), Signal Processing, IEEE Transactions on, 53 (2005), pp. 1640–1653.

- [6] G. H. Golub and C. F. Van Loan, Matrix Computations, The Johns Hopkins University Press, 3rd ed., Oct. 1996.

- [7] M. E Kilmer and C. D. M. Martin, Decomposing a tensor, SIAM News, 37 (2004), pp. 19–20.

- [8] E. Kofidis and P. A. Regalia, On the best rank-1 approximation of higher-order supersymmetric tensors, SIAM Journal on Matrix Analysis and Applications, 23 (2002), pp. 863–884.

- [9] T. Kolda and B. Bader, Tensor decompositions and applications, SIAM Review, 51 (2009), pp. 455–500.

- [10] T. G. Kolda and J. R. Mayo, Shifted power method for computing tensor eigenpairs, SIAM Journal on Matrix Analysis and Applications, 32 (2011), pp. 1095–1124.

- [11] T. G. Kolda and J. R. Mayo, An adaptive shifted power method for computing generalized tensor eigenpairs, ArXiv e-prints, (2014).

- [12] , An Adaptive Shifted Power Method for Computing Generalized Tensor Eigenpairs, ArXiv e-prints, (2014).

- [13] J. M. Landsberg, Tensors: Geometry and Applications, American Mathematical Society, 2012.

- [14] L.-H. Lim, Singular values and eigenvalues of tensors: a variational approach, in in CAMAP2005: 1st IEEE International Workshop on Computational Advances in Multi-Sensor Adaptive Processing, 2005, pp. 129–132.

- [15] L.-H. Lim and P. Comon, Blind multilinear identification, IEEE Trans. Inf. Theory, 60 (2014), pp. 1260–1280.

- [16] L. Qi, Eigenvalues of a real supersymmetric tensor, Journal of Symbolic Computation, 40 (2005), pp. 1302–1324.