A Randomized Approach to Tight Privacy Accounting

Abstract

Bounding privacy leakage over compositions, i.e., privacy accounting, is a key challenge in differential privacy (DP). The privacy parameter ( or ) is often easy to estimate but hard to bound. In this paper, we propose a new differential privacy paradigm called estimate-verify-release (EVR), which tackles the challenges of providing a strict upper bound for the privacy parameter in DP compositions by converting an estimate of privacy parameter into a formal guarantee. The EVR paradigm first verifies whether the mechanism meets the estimated privacy guarantee, and then releases the query output based on the verification result. The core component of the EVR is privacy verification. We develop a randomized privacy verifier using Monte Carlo (MC) technique. Furthermore, we propose an MC-based DP accountant that outperforms existing DP accounting techniques in terms of accuracy and efficiency. MC-based DP verifier and accountant is applicable to an important and commonly used class of DP algorithms, including the famous DP-SGD. An empirical evaluation shows the proposed EVR paradigm improves the utility-privacy tradeoff for privacy-preserving machine learning.

1 Introduction

The concern of privacy is a major obstacle to deploying machine learning (ML) applications. In response, ML algorithms with differential privacy (DP) guarantees have been proposed and developed. For privacy-preserving ML algorithms, DP mechanisms are often repeatedly applied to private training data. For instance, when training deep learning models using DP-SGD [ACG+16], it is often necessary to execute sub-sampled Gaussian mechanisms on the private training data thousands of times.

A major challenge in machine learning with differential privacy is privacy accounting, i.e., measuring the privacy loss of the composition of DP mechanisms. A privacy accountant takes a list of mechanisms, and returns the privacy parameter ( and ) for the composition of those mechanisms. Specifically, a privacy accountant is given a target and finds the smallest achievable such that the composed mechanism is -DP (we can also fix and find ). We use to denote the smallest achievable given , which is often referred to as optimal privacy curve in the literature.

Training deep learning models with DP-SGD is essentially the adaptive composition for thousands of sub-sampled Gaussian Mechanisms. Moment Accountant (MA) is a pioneer solution for privacy loss calculation in differentially private deep learning [ACG+16]. However, MA does not provide the optimal in general [ZDW22]. This motivates the development of more advanced privacy accounting techniques that outperforms MA. Two major lines of such works are based on Fast Fourier Transform (FFT) (e.g., [GLW21] and Central Limit Theorem (CLT) [BDLS20, WGZ+22]. Both techniques can provide an estimate as well as an upper bound for though bounding the worst-case estimation error. In practice, only the upper bounds for can be used, as differential privacy is a strict guarantee.

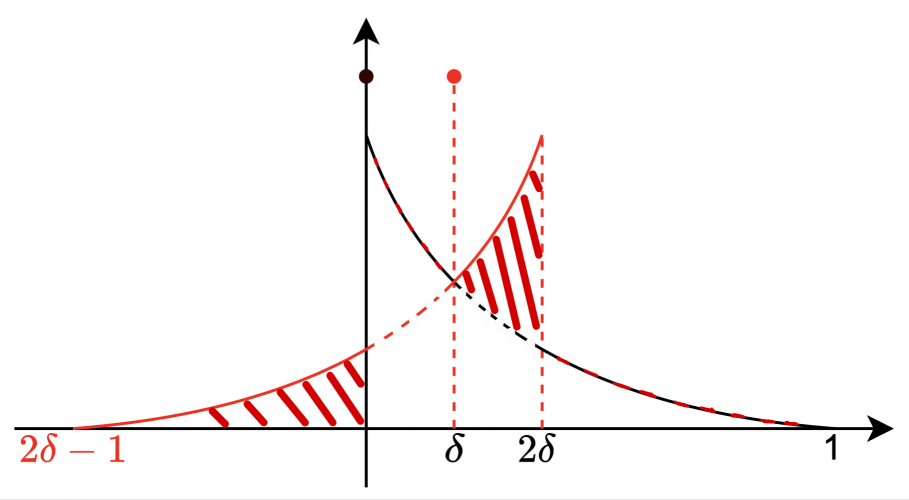

Motivation: estimates can be more accurate than upper bounds. The motivation for this paper stems from the limitations of current privacy accounting techniques in providing tight upper bounds for . Despite outperforming MA, both FFT- and CLT-based methods can provide ineffective bounds in certain regimes [GLW21, WGZ+22]. We demonstrate such limitations in Figure 1 using the composition of Gaussian mechanisms. For FFT-based technique [GLW21], we can see that although it outperforms MA for most of the regimes, the upper bounds (blue dashed curve) are worse than that of MA when due to computational limitations (as discussed in [GLW21]’s Appendix A; also see Remark 6 for a discussion of why the regime of is important). The CLT-based techniques (e.g., [WGZ+22]) also produce sub-optimal upper bounds (red dashed curve) for the entire range of . This is primarily due to the small number of mechanisms used (), which does not meet the requirements for CLT bounds to converge (similar phenomenon observed in [WGZ+22]). On the other hand, we can see that the estimates of from both FFT and CLT-based techniques, which estimate the parameters rather than providing an upper bound, are in fact very close to the ground truth (the three curves overlapped in Figure 1). However, as we mentioned earlier, these accurate estimations cannot be used in practice, as we cannot prove that they do not underestimate . The dilemma raises an important question: can we develop new techniques that allow us to use privacy parameter estimates instead of strict upper bounds in privacy accounting?111We note that this not only brings benefits for the regime where , but also for the more common regime where . See Figure 3 for an example.

This paper gives a positive answer to it. Our contributions are summarized as follows:

Estimate-Verify-Release (EVR): a DP paradigm that converts privacy parameter estimate into a formal guarantee. We develop a new DP paradigm called Estimate-Verify-Release, which augments a mechanism with a formal privacy guarantee based on its privacy parameter estimates. The basic idea of EVR is to first verify whether the mechanism satisfies the estimated DP guarantee, and release the mechanism’s output if the verification is passed. The core component of the EVR paradigm is privacy verification. A DP verifier can be randomized and imperfect, suffering from both false positives (accept an underestimation) and false negatives (reject an overestimation). We show that EVR’s privacy guarantee can be achieved when privacy verification has a low false negative rate.

A Monte Carlo-based DP Verifier. For an important and widely used class of DP algorithms including Subsampled Gaussian mechanism (the building block for DP-SGD), we develop a Monte Carlo (MC) based DP verifier for the EVR paradigm. We present various techniques that ensure the DP verifier has both a low false positive rate (for privacy guarantee) and a low false negative rate (for utility guarantee, i.e., making the EVR and the original mechanism as similar as possible).

A Monte Carlo-based DP Accountant. We further propose a new MC-based approach for DP accounting, which we call the MC accountant. It utilizes similar MC techniques as in privacy verification. We show that the MC accountant achieves several advantages over existing privacy accounting methods. In particular, we demonstrate that MC accountant is efficient for online privacy accounting, a realistic scenario for privacy practitioners where one wants to update the estimate on privacy guarantee whenever executing a new mechanism.

Figure 2 gives an overview of the proposed EVR paradigm as well as this paper’s contributions.

2 Privacy Accounting: a Mean Estimation/Bounding Problem

In this section, we review relevant concepts and introduce privacy accounting as a mean estimation/bounding problem.

Symbols and notations. We use to denote two datasets with an unspecified size over space . We call two datasets and adjacent (denoted as ) if we can construct one by adding/removing one data point from the other. We use to denote random variables. We also overload the notation and denote the density function of .

Differential privacy and its equivalent characterizations. Having established the notations, we can now proceed to formally define differential privacy.

Definition 1 (Differential Privacy [DMNS06]).

For , a randomized algorithm is -differentially private if for every pair of adjacent datasets and for every subset of possible outputs , we have .

One can alternatively define differential privacy in terms of the maximum possible divergence between the output distribution of any pair of and .

Lemma 2 ([BO13]).

A mechanism is -DP iff , where & .

is usually referred as Hockey-Stick (HS) Divergence in the literature. For every mechanism and every , there exists a smallest such that is -DP. Following the literature [ZDW22, AGA+23], we formalize such a as a function of .

Definition 3 (Optimal Privacy Curve).

The optimal privacy curve of a mechanism is the function s.t. .

Dominating Distribution Pair and Privacy Loss Random Variable (PRV). It is computationally infeasible to find by computing for all pairs of adjacent dataset and . A mainstream strategy in the literature is to find a pair of distributions that dominates all in terms of the Hockey-Stick divergence. This results in the introduction of dominating distribution pair and privacy loss random variable (PRV).

Definition 4 ([ZDW22]).

A pair of distributions is a pair of dominating distributions for under adjacent relation if for all , . If equality is achieved for all , then we say is a pair of tightly dominating distributions for . Furthermore, we call the privacy loss random variable (PRV) of associated with dominating distribution pair .

Zhu et al. [ZDW22] shows that all mechanisms have a pair of tightly dominating distributions. Hence, we can alternatively characterize the optimal privacy curve as for the tightly dominating pair , and we have if is a dominating pair that is not necessarily tight. The importance of the concept of PRV comes from the fact that we can write as an expectation over it: . Thus, one can bound by first identifying ’s dominating pair distributions as well as the associated PRV , and then computing this expectation. Such a formulation allows us to bound without enumerating over all adjacent and . For notation convenience, we denote Clearly, . If is a tightly dominating pair for , then .

Privacy Accounting as a Mean Estimation/Bounding Problem. Privacy accounting aims to estimate and bound the optimal privacy curve for adaptively composed mechanism . The adaptive composition of two mechanisms and is defined as , in which can access both the dataset and the output of . Most of the practical privacy accounting techniques are based on the concept of PRV, centered on the following result.

Lemma 5 ([ZDW22]).

Let be a pair of tightly dominating distributions for mechanism for . Then is a pair of dominating distributions for , where denotes the product distribution. Furthermore, the associated privacy loss random variable is where is the PRV associated with .

Lemma 5 suggests that privacy accounting for DP composition can be cast into a mean estimation/bounding problem where one aims to approximate or bound the expectation in (2) when . Note that while Lemma 5 does not guarantee a pair of tightly dominating distributions for the adaptive composition, it cannot be improved in general, as noted in [DRS19]. Hence, all the current privacy accounting techniques work on instead of , as Lemma 5 is tight even for non-adaptive composition. Following the prior works, in this paper, we only consider the practical scenarios where Lemma 5 is tight for the simplicity of presentation. That is, we assume unless otherwise specified.

Most of the existing privacy accounting techniques can be described as different techniques for such a mean estimation problem. Example-1: FFT-based methods. This line of works (e.g., [GLW21]) discretizes the domain of each and use Fast Fourier Transform (FFT) to speed up the approximation of . The upper bound is derived through the worst-case error bound for the approximation. Example-2: CLT-based methods. [BDLS20, WGZ+22] use CLT to approximate the distribution of as Gaussian distribution. They then use CLT’s finite-sample approximation guarantee to derive the upper bound for .

Remark 6 (The Importance of Privacy Accounting in Regime ).

The regime where is of significant importance for two reasons. (1) serves as an upper bound on the chance of severe privacy breaches, such as complete dataset exposure, necessitating a “cryptographically small” value, namely, [DR+14, Vad17]. (2) Even with the oft-used yet questionable guideline of or , datasets of modern scale, such as JFT-3B [ZKHB22] or LAION-5B [SBV+22], already comprise billions of records, thus rendering small values crucial. While we acknowledge that it requires a lot of effort to achieve a good privacy-utility tradeoff even for the current choice of , it is important to keep such a goal in mind.

3 Estimate-Verify-Release

As mentioned earlier, upper bounds for are the only valid options for privacy accounting techniques. However, as we have demonstrated in Figure 1, both FFT- and CLT-based methods can provide overly conservative upper bounds in certain regimes. On the other hand, their estimates for can be very close to the ground truth even though there is no provable guarantee. Therefore, it is highly desirable to develop new techniques that enable the use of privacy parameter estimates instead of overly conservative upper bounds in privacy accounting.

We tackle the problem by introducing a new paradigm for constructing DP mechanisms, which we call Estimate-Verify-Release (EVR). The key component of the EVR is an object called DP verifier (Section 3.1). The full EVR paradigm is then presented in Section 3.2, where the DP verifier is utilized as a building block to guarantee privacy.

3.1 Differential Privacy Verifier

We first formalize the concept of differential privacy verifier, the central element of the EVR paradigm. In informal terms, a DP verifier is an algorithm that attempts to verify whether a mechanism satisfies a specific level of differential privacy.

Definition 7 (Differential Privacy Verifier).

We say a differentially private verifier is an algorithm that takes the description of a mechanism and proposed privacy parameter as input, and returns if the algorithm believes is -DP (i.e., where is the PRV of ), and returns otherwise.

A differential privacy verifier can be imperfect, suffering from both false positives (FP) and false negatives (FN). Typically, FP rate is the likelihood for DPV to accept when . However, is still a good estimate for by being a small (e.g., <10%) underestimate. To account for this, we introduce a smoothing factor, , such that is deemed “should be rejected” only when . A similar argument can be put forth for FN cases where we also introduce a smoothing factor . This leads to relaxed notions for FP/FN rate:

Definition 8.

We say a DPV’s -relaxed false positive rate at is

We say a DPV’s -relaxed false negative rate at is

Privacy Verification with DP Accountant. For a composed mechanism , a DP verifier can be easily implemented using any existing privacy accounting techniques. That is, one can execute DP accountant to obtain an estimate or upper bound of the actual privacy parameter. If , then the proposed privacy level is rejected as it is more private than what the DP accountant tells; otherwise, the test is passed. The input description of a mechanism , in this case, can differ depending on the DP accounting method. For Moment Accountant [ACG+16], the input description is the upper bound of the moment-generating function (MGF) of the privacy loss random variable for each individual mechanism. For FFT and CLT-based methods, the input description is the cumulative distribution functions (CDF) of the dominating distribution pair of each individual .

3.2 EVR: Ensuring Estimated Privacy with DP Verifier

We now present the full paradigm of EVR. As suggested by the name, it contains three steps: (1) Estimate: A privacy parameter for is estimated, e.g., based on a privacy auditing or accounting technique. (2) Verify: A DP verifier DPV is used for validating whether mechanism satisfies -DP guarantee. (3) Release: If DP verification test is passed, we can execute as usual; otherwise, the program is terminated immediately. For practical utility, this rejection probability needs to be small when is an accurate estimation. The procedure is summarized in Algorithm 1.

Given estimated privacy parameter , we have the privacy guarantee for the EVR paradigm:

theoremprivguarantee Algorithm 1 is -DP for any if .

We defer the proof to Appendix B. The implication of this result is that, for any estimate of the privacy parameter, one can safely use it as a DPV with a bounded false positive rate would enforce differential privacy. However, this is not enough: an overly conservative DPV that satisfies 0 FP rate but rejects everything would not be useful. When is accurate, we hope the DPV can also achieve a small false negative rate so that the output distributions of EVR and are indistinguishable. We discuss the instantiation of DPV in Section 4.

4 Monte Carlo Verifier of Differential Privacy

As we can see from Section 3.2, a DP verifier (DPV) that achieves a small FP rate is the central element for the EVR framework. In the meanwhile, it is also important that DPV has a low FN rate in order to maintain the good utility of the EVR when the privacy parameter estimate is accurate. In this section, we introduce an instantiation of DPV based on the Monte Carlo technique that achieves both a low FP and FN rate, assuming the PRV is known for each individual mechanism.

Remark 9 (Mechanisms where PRV can be derived).

PRV can be derived for many commonly used DP mechanisms such as the Laplace, Gaussian, and Subsampled Gaussian Mechanism [KJH20, GLW21]. In particular, our DP verifier applies for DP-SGD, one of the most important application scenarios of privacy accounting. Moreover, the availability of PRV is also the assumption for most of the recently developed privacy accounting techniques (including FFT- and CLT-based methods). The extension beyond these commonly used mechanisms is an important future work in the field.

Remark 10 (Previous studies on the hardness of privacy verification).

Several studies [GNP20, BGG22] have shown that DP verification is an NP-hard problem. However, these works consider the setting where the input description of the DP mechanism is its corresponding randomized Boolean circuits. Some other works [GM18] show that DP verification is impossible, but this assertion is proved for the black-box setting where the verifier can only query the mechanism. Our work gets around this barrier by providing the description of the PRV of the mechanism as input to the verifier.

4.1 DPV through an MC Estimator for

Recall that most of the recently proposed DP accountants are essentially different techniques for estimating the expectation

where each is the privacy loss random variable for , and is a pair of dominating distribution for individual mechanism . In the following text, we denote the product distribution and . Recall from Lemma 5 that is a pair of dominating distributions for the composed mechanism . For notation simplicity, we denote a vector .

Monte Carlo (MC) technique is arguably one of the most natural and widely used techniques for approximating expectations. Since is an expectation in terms of the PRV , one can apply MC-based technique to estimate it. Given an MC estimator for , we construct a as shown in Algorithm 2 (instantiated by the Simple MC estimator introduced in Section 4.2). Specifically, we first obtain an estimate from an MC estimator for . The estimate passes the test if , and fails otherwise. The parameter here is an offset that allows us to conveniently controls the -relaxed false positive rate. We will discuss how to set in Section 4.4.

4.2 Constructing MC Estimator for

In this section, we first present a simple MC estimator that applies to any mechanisms where we can derive and sample from the dominating distribution pairs. Given the importance of Poisson Subsampled Gaussian mechanism for privacy-preserving machine learning, we further design a more advanced and specialized MC estimator for it based on the importance sampling technique.

Simple Monte Carlo Estimator. One can easily sample from by sampling and output . Hence, a straightforward algorithm for estimating (2) is the Simple Monte Carlo (SMC) algorithm, which directly samples from the privacy random variable . We formally define it here.

Definition 11 (Simple Monte Carlo (SMC) Estimator).

We denote as the random variable of SMC estimator for with samples, i.e., for i.i.d. sampled from .

Importance Sampling Estimator for Poisson Subsampled Gaussian (Overview). As is usually a tiny value ( or even cryptographically small), it is likely that by naive sampling from , almost all of the samples in are just 0s! That is, the i.i.d. samples from can rarely exceed . To further improve the sample efficiency, one can potentially use more advanced MC techniques such as Importance Sampling or MCMC. However, these advanced tools usually require additional distributional information about and thus need to be developed case-by-case.

Poisson Subsampled Gaussian mechanism is the main workhorse behind the DP-SGD algorithm [ACG+16]. Given its important role in privacy-preserving ML, we derive an advanced MC estimator for it based on the Importance Sampling technique. Importance Sampling (IS) is a classic method for rare event simulation [TK10]. It samples from an alternative distribution instead of the distribution of the quantity of interest, and a weighting factor is then used for correcting the difference between the two distributions. The specific design of alternative distribution is complicated and notation-heavy, and we defer the technical details to Appendix C. At a high level, we construct the alternative sampling distribution based on the exponential tilting technique and derive the optimal tilting parameter such that the corresponding IS estimator approximately achieves the smallest variance. Similar to Definition 11, we use to denote the random variable of importance sampling estimator with samples.

4.3 Bounding FP Rate

We now discuss the FP guarantee for the DPV instantiated by and we developed in the last section. Since both estimators are unbiased, by Law of Large Number, both and converge to almost surely as , which leads a DPV with perfect accuracy. Of course, cannot go to in practice. In the following, we derive the required amount of samples for ensuring that -relaxed false positive rate is smaller than for and . We use (or ) as an abbreviation for (or ), the random variable for a single draw of sampling. We state the theorem for , and the same result for can be obtained by simply replacing with . We use to denote the FP rate for DPV implemented by SMC estimator.

theoremsmcsamplecomp Suppose . DPV instantiated by has bounded -relaxed false positive rate with .

The proof is based on Bennett’s inequality and is deferred to Appendix D. This result suggests that, to improve the computational efficiency of MC-based DPV (i.e., tighten the number of required samples), it is important to tightly bound (or ), the second moment of (or ).

Bounding the Second-Moment of MC Estimators (Overview). For clarity, we defer the notation-heavy results and derivation of the upper bounds for and to Appendix E. Our high-level idea for bounding is through the RDP guarantee for the composed mechanism . This is a natural idea since converting RDP to upper bounds for – the first moment of – is a well-studied problem [Mir17, CKS20, ALC+21]. Bounding is highly technically involved.

4.4 Guaranteeing Utility

Overall picture so far. Given the proposed privacy parameter , a tolerable degree of underestimation , and an offset parameter , one can now compute the number of samples required for the MC-based DPV such that -relaxed FP rate to be based on the results from Section 4.3 and Appendix E. We have not yet discussed the selection of the hyperparameter . An appropriate is important for the utility of MC-based DPV. That is, when is not too smaller than , the probability of being rejected by DPV should stay negligible. If we set , the DPV simply rejects everything, which achieves 0 FP rate (and with ) but is not useful at all!

Formally, the utility of a DPV is quantified by the -relaxed false negative (FN) rate (Definition 8). While one may be able to bound the FN rate through concentration inequalities, a more convenient way is to pick an appropriate such that is approximately smaller than . After all, already has to be a small value for privacy guarantee. The result is stated informally in the following (holds for both and ), and the involved derivation is deferred to Appendix F.

Theorem 12 (Informal).

When , then .

Therefore, by setting , one can ensure that is also (approximately) upper bounded by . Moreover, in Appendix, we empirically show that the FP rate is actually a very conservative bound for the FN rate. Both and are selected based on the tradeoff between privacy, utility, and efficiency.

The pseudocode of privacy verification for DP-SGD is summarized in Appendix G.

5 Monte Carlo Accountant of Differential Privacy

The Monte Carlo estimators and described in Section 4.2 are used for implementing DP verifiers. One may already realize that the same estimators can also be utilized to directly implement a DP accountant which estimates . It is important to note that with the EVR paradigm, DP accountants are no longer required to derive a strict upper bound for . We refer to the technique of estimating using the MC estimators as Monte Carlo accountant.

Finding for a given . It is straightforward to implement MC accountant when we fix and compute for . In practice, privacy practitioners often want to do the inverse: finding for a given , which we denote as . Similar to the existing privacy accounting methods, we use binary search to find (see Algorithm 3). Specifically, after generating PRV samples , we simply need to find the such that . We do not need to generate new PRV samples for different we evaluate during the binary search; hence the additional binary search is computationally efficient.

Number of Samples for MC Accountant. Compared with the number of samples required for achieving the FP guarantee in Section 4.3, one may be able to use much fewer samples to obtain a decent estimate for , as the sample complexity bound derived based on concentration inequality may be conservative. Many heuristics for guiding the number of samples in MC simulation have been developed (e.g., Wald confidence interval) and can be applied to the setting of MC accountants.

Compared with FFT-based and CLT-based methods, MC accountant exhibits the following strength:

(1) Accurate estimation in all regimes. As we mentioned earlier, the state-of-the-art FFT-based method [GLW21] fails to provide meaningful bounds due to computational limitations when the true value of is small. In contrast, the simplicity of the MC accountant allows us to accurately estimate in all regimes.

(2) Short clock runtime & Easy GPU acceleration. MC-based techniques are well-suited for parallel computing and GPU acceleration due to their nature of repeated sampling. One can easily utilize PyTorch’s CUDA functionality (e.g., torch.randn(size=(k,m)).cuda()*sigma+mu) to significantly boost the computational efficiency for sampling from common distributions such as Gaussian. In Appendix H, we show that when using one NVIDIA A100 GPU, the runtime time of sampling Gaussian mixture can be improved by times compared with CPU-only scenario.

(3) Efficient online privacy accounting. When training ML models with DP-SGD or its variants, a privacy practitioner usually wants to compute a running privacy leakage for every training iteration, and pick the checkpoint with the best utility-privacy tradeoff. This involves estimating for every , where . We refer to such a scenario as online privacy accounting222Note that this is different from the scenario of privacy odometer [RRUV16], as here the privacy parameter of the next individual mechanism is not adaptively chosen.. MC accountant is especially efficient for online privacy accounting. When estimating , one can re-use the samples previously drawn from that were used for estimating privacy loss at earlier iterations.

6 Numerical Experiments

In this section, we conduct numerical experiments to illustrate (1) EVR paradigm with MC verifiers enables a tighter privacy analysis, and (2) MC accountant achieves state-of-the-art performance in privacy parameter estimation.

6.1 EVR vs Upper Bound

To illustrate the advantage of the EVR paradigm compared with directly using a strict upper bound for privacy parameters, we take the current state-of-the-art DP accountant, the FFT-based method from [GLW21] as the example.

EVR provides a tighter privacy guarantee. Recall that in Figure 1, FFT-based method provides vacuous bound when the ground-truth . Under the same hyperparameter setting, Figure 3 (a) shows the privacy bound of the EVR paradigm where the are FFT’s estimates. We use the Importance Sampling estimator for DP verification. We experiment with different values of . A higher value of leads to tighter privacy guarantee but longer runtime. For fair comparison, the EVR’s output distribution needs to be almost indistinguishable from the original mechanism. We set and set according to the heuristic from Theorem 12. This guarantees that, as long as the estimate of from FFT is not a big underestimation (i.e., as long as ), the failure probability of the EVR paradigm is negligible (). The ‘FFT-EVR’ curve in Figure 3 (a) is essentially the ‘FFT-est’ curve in Figure 1 scaled up by . As we can see, EVR provides a significantly better privacy analysis in the regime where the ‘FFT-upp’ is unmeaningful ().

EVR incurs little extra runtime. In Figure 3 (b), we plot the runtime of the Importance Sampling verifier in Figure 3 (b) for different . Note that for , the privacy curves are indistinguishable from ‘Exact’ in Figure 3 (a). The runtime of EVR is determined by the number of samples required to achieve the target -relaxed FP rate from Theorem 4.3. Smaller leads to faster DP verification. As we can see, even when , the runtime of DP verification in the EVR is minutes. This is attributable to the sample-efficient IS estimator and GPU acceleration.

EVR provides better privacy-utility tradeoff for Privacy-preserving ML with minimal time consumption. To further underscore the superiority of the EVR paradigm in practical applications, we illustrate the privacy-utility tradeoff curve when finetuning on CIFAR100 dataset with DP-SGD. As shown in Figure 4, the EVR paradigm provides a lower test error across all privacy budget compared with the traditional upper bound method. For instance, it achieves around 7% (relative) error reduction when . The runtime time required for privacy verification is less than seconds for all , which is negligible compared to the training time. We provide additional experimental results in Appendix H.

6.2 MC Accountant

We evaluate the MC Accountant proposed in Section 5. We focus on privacy accounting for the composition of Poisson Subsampled Gaussian mechanisms, the algorithm behind the famous DP-SGD algorithm [ACG+16]. The mechanism is specified by the noise magnitude and subsampling rate .

Settings. We consider two practical scenarios of privacy accounting: (1) Offline accounting which aims at estimating , and (2) Online accounting which aims at estimating for all . For space constraint, we only show the results of online accounting here, and defer the results for offline accounting to Appendix H. Metric: Relative Error. To easily and fairly evaluate the performance of privacy parameter estimation, we compute the almost exact (yet computationally expensive) privacy parameters as the ground-truth value. The ground-truth value allows us to compute the relative error of an estimate of privacy leakage. That is, if the corresponding ground-truth of an estimate is , then the relative error . Implementation. For MC accountant, we use the IS estimator described in Section 4.2. For baselines, in addition to the FFT-based and CLT-based method we mentioned earlier, we also examine AFA [ZDW22] and GDP accountant [BDLS20]. For a fair comparison, we adjust the number of samples for MC accountant so that the runtime of MC accountant and FFT is comparable. Note that we compared with the privacy parameter estimates instead of upper bounds from the baselines. Detailed settings for both MC accountant and the baselines are provided in Appendix H.

Results for Online Accounting: MC accountant is both more accurate and efficient. Figure 5 (a) shows the online accounting results for . As we can see, MC accountant outperforms all of the baselines in estimating . The sharp decrease in FFT at approximately 250 steps is due to the transition of FFT’s estimates from underestimating before this point to overestimating after. Figure 5 (b) shows that MC accountant is around 5 times faster than FFT, the baseline with the best performance in (a). This showcases the MC accountant’s efficiency and accuracy in online setting.

7 Conclusion & Limitations

This paper tackles the challenge of deriving provable privacy leakage upper bounds in privacy accounting. We present the estimate-verify-release (EVR) paradigm which enables the safe use of privacy parameter estimate. Limitations. Currently, our MC-based DP verifier and accountant require known and efficiently samplable dominating pairs and PRV for the individual mechanism. Fortunately, this applies to commonly used mechanisms such as Gaussian mechanism and DP-SGD. Generalizing MC-based DP verifier and accountant to other mechanisms is an interesting future work.

Acknowledgments

This work was supported in part by the National Science Foundation under grants CNS-2131938, CNS-1553437, CNS-1704105, the ARL’s Army Artificial Intelligence Innovation Institute (A2I2), the Office of Naval Research Young Investigator Award, the Army Research Office Young Investigator Prize, Schmidt DataX award, and Princeton E-ffiliates Award, Amazon-Virginia Tech Initiative in Efficient and Robust Machine Learning, and Princeton’s Gordon Y. S. Wu Fellowship. We are grateful to anonymous reviewers at NeurIPS for their valuable feedback.

References

- [ACG+16] Martin Abadi, Andy Chu, Ian Goodfellow, H Brendan McMahan, Ilya Mironov, Kunal Talwar, and Li Zhang. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC conference on computer and communications security, pages 308–318, 2016.

- [AGA+23] Wael Alghamdi, Juan Felipe Gomez, Shahab Asoodeh, Flavio Calmon, Oliver Kosut, and Lalitha Sankar. The saddle-point method in differential privacy. In Proceedings of the 40th International Conference on Machine Learning, pages 508–528, 2023.

- [ALC+21] Shahab Asoodeh, Jiachun Liao, Flavio P Calmon, Oliver Kosut, and Lalitha Sankar. Three variants of differential privacy: Lossless conversion and applications. IEEE Journal on Selected Areas in Information Theory, 2(1):208–222, 2021.

- [BDLS20] Zhiqi Bu, Jinshuo Dong, Qi Long, and Weijie J Su. Deep learning with gaussian differential privacy. Harvard data science review, 2020(23), 2020.

- [BDPW21] Hangbo Bao, Li Dong, Songhao Piao, and Furu Wei. Beit: Bert pre-training of image transformers. arXiv preprint arXiv:2106.08254, 2021.

- [BGG22] Mark Bun, Marco Gaboardi, and Ludmila Glinskih. The complexity of verifying boolean programs as differentially private. In 2022 IEEE 35th Computer Security Foundations Symposium (CSF), pages 396–411. IEEE, 2022.

- [BO13] Gilles Barthe and Federico Olmedo. Beyond differential privacy: Composition theorems and relational logic for f-divergences between probabilistic programs. In International Colloquium on Automata, Languages, and Programming, pages 49–60. Springer, 2013.

- [BSBV21] Benjamin Bichsel, Samuel Steffen, Ilija Bogunovic, and Martin Vechev. Dp-sniper: Black-box discovery of differential privacy violations using classifiers. In 2021 IEEE Symposium on Security and Privacy (SP), pages 391–409. IEEE, 2021.

- [CKS20] Clément L Canonne, Gautam Kamath, and Thomas Steinke. The discrete gaussian for differential privacy. Advances in Neural Information Processing Systems, 33:15676–15688, 2020.

- [DGK+22] Vadym Doroshenko, Badih Ghazi, Pritish Kamath, Ravi Kumar, and Pasin Manurangsi. Connect the dots: Tighter discrete approximations of privacy loss distributions. Proceedings on Privacy Enhancing Technologies, 4:552–570, 2022.

- [DL09] Cynthia Dwork and Jing Lei. Differential privacy and robust statistics. In Proceedings of the forty-first annual ACM symposium on Theory of computing, pages 371–380, 2009.

- [DMNS06] Cynthia Dwork, Frank McSherry, Kobbi Nissim, and Adam Smith. Calibrating noise to sensitivity in private data analysis. In Theory of cryptography conference, pages 265–284. Springer, 2006.

- [DR+14] Cynthia Dwork, Aaron Roth, et al. The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4):211–407, 2014.

- [DRS19] Jinshuo Dong, Aaron Roth, and Weijie J Su. Gaussian differential privacy. Journal of the Royal Statistical Society Series B: Statistical Methodology, 84(1):3–37, 2019.

- [DRV10] Cynthia Dwork, Guy N Rothblum, and Salil Vadhan. Boosting and differential privacy. In 2010 IEEE 51st Annual Symposium on Foundations of Computer Science, pages 51–60. IEEE, 2010.

- [GJG+22] Daniele Gorla, Louis Jalouzot, Federica Granese, Catuscia Palamidessi, and Pablo Piantanida. On the (im) possibility of estimating various notions of differential privacy. arXiv preprint arXiv:2208.14414, 2022.

- [GKKM22] Badih Ghazi, Pritish Kamath, Ravi Kumar, and Pasin Manurangsi. Faster privacy accounting via evolving discretization. In International Conference on Machine Learning, pages 7470–7483. PMLR, 2022.

- [GLW21] Sivakanth Gopi, Yin Tat Lee, and Lukas Wutschitz. Numerical composition of differential privacy. Advances in Neural Information Processing Systems, 34:11631–11642, 2021.

- [GM18] Anna C Gilbert and Audra McMillan. Property testing for differential privacy. In 2018 56th Annual Allerton Conference on Communication, Control, and Computing (Allerton), pages 249–258. IEEE, 2018.

- [GNP20] Marco Gaboardi, Kobbi Nissim, and David Purser. The complexity of verifying loop-free programs as differentially private. In 47th International Colloquium on Automata, Languages, and Programming (ICALP 2020). Schloss Dagstuhl-Leibniz-Zentrum für Informatik, 2020.

- [JUO20] Matthew Jagielski, Jonathan Ullman, and Alina Oprea. Auditing differentially private machine learning: How private is private sgd? Advances in Neural Information Processing Systems, 33:22205–22216, 2020.

- [KH21] Antti Koskela and Antti Honkela. Computing differential privacy guarantees for heterogeneous compositions using fft. CoRR, abs/2102.12412, 2021.

- [KJH20] Antti Koskela, Joonas Jälkö, and Antti Honkela. Computing tight differential privacy guarantees using fft. In International Conference on Artificial Intelligence and Statistics, pages 2560–2569. PMLR, 2020.

- [KJPH21] Antti Koskela, Joonas Jälkö, Lukas Prediger, and Antti Honkela. Tight differential privacy for discrete-valued mechanisms and for the subsampled gaussian mechanism using fft. In International Conference on Artificial Intelligence and Statistics, pages 3358–3366. PMLR, 2021.

- [KOV15] Peter Kairouz, Sewoong Oh, and Pramod Viswanath. The composition theorem for differential privacy. In International conference on machine learning, pages 1376–1385. PMLR, 2015.

- [LMF+22] Fred Lu, Joseph Munoz, Maya Fuchs, Tyler LeBlond, Elliott V Zaresky-Williams, Edward Raff, Francis Ferraro, and Brian Testa. A general framework for auditing differentially private machine learning. In Advances in Neural Information Processing Systems, 2022.

- [LO19] Xiyang Liu and Sewoong Oh. Minimax optimal estimation of approximate differential privacy on neighboring databases. Advances in neural information processing systems, 32, 2019.

- [LWMIZ22] Yun Lu, Yu Wei, Malik Magdon-Ismail, and Vassilis Zikas. Eureka: A general framework for black-box differential privacy estimators. Cryptology ePrint Archive, 2022.

- [Mir17] Ilya Mironov. Rényi differential privacy. In 2017 IEEE 30th Computer Security Foundations Symposium (CSF), pages 263–275. IEEE, 2017.

- [MSCJ22] Saeed Mahloujifar, Alexandre Sablayrolles, Graham Cormode, and Somesh Jha. Optimal membership inference bounds for adaptive composition of sampled gaussian mechanisms. arXiv preprint arXiv:2204.06106, 2022.

- [MV16] Jack Murtagh and Salil Vadhan. The complexity of computing the optimal composition of differential privacy. In Theory of Cryptography Conference, pages 157–175. Springer, 2016.

- [NHS+23] Milad Nasr, Jamie Hayes, Thomas Steinke, Borja Balle, Florian Tramèr, Matthew Jagielski, Nicholas Carlini, and Andreas Terzis. Tight auditing of differentially private machine learning. arXiv preprint arXiv:2302.07956, 2023.

- [NST+21] Milad Nasr, Shuang Songi, Abhradeep Thakurta, Nicolas Papernot, and Nicholas Carlin. Adversary instantiation: Lower bounds for differentially private machine learning. In 2021 IEEE Symposium on security and privacy (SP), pages 866–882. IEEE, 2021.

- [PTS+22] Ashwinee Panda, Xinyu Tang, Vikash Sehwag, Saeed Mahloujifar, and Prateek Mittal. Dp-raft: A differentially private recipe for accelerated fine-tuning. arXiv preprint arXiv:2212.04486, 2022.

- [RRUV16] Ryan M Rogers, Aaron Roth, Jonathan Ullman, and Salil Vadhan. Privacy odometers and filters: Pay-as-you-go composition. Advances in Neural Information Processing Systems, 29, 2016.

- [RZW23] Rachel Redberg, Yuqing Zhu, and Yu-Xiang Wang. Generalized ptr: User-friendly recipes for data-adaptive algorithms with differential privacy. In International Conference on Artificial Intelligence and Statistics, pages 3977–4005. PMLR, 2023.

- [SBV+22] Christoph Schuhmann, Romain Beaumont, Richard Vencu, Cade Gordon, Ross Wightman, Mehdi Cherti, Theo Coombes, Aarush Katta, Clayton Mullis, Mitchell Wortsman, et al. Laion-5b: An open large-scale dataset for training next generation image-text models. Advances in Neural Information Processing Systems, 35:25278–25294, 2022.

- [SNJ23] Thomas Steinke, Milad Nasr, and Matthew Jagielski. Privacy auditing with one (1) training run. arXiv preprint arXiv:2305.08846, 2023.

- [TK10] Surya T Tokdar and Robert E Kass. Importance sampling: a review. Wiley Interdisciplinary Reviews: Computational Statistics, 2(1):54–60, 2010.

- [Vad17] Salil Vadhan. The complexity of differential privacy. Tutorials on the Foundations of Cryptography: Dedicated to Oded Goldreich, pages 347–450, 2017.

- [WGZ+22] Hua Wang, Sheng Gao, Huanyu Zhang, Milan Shen, and Weijie J Su. Analytical composition of differential privacy via the edgeworth accountant. arXiv preprint arXiv:2206.04236, 2022.

- [WMW+22] Jiachen T Wang, Saeed Mahloujifar, Shouda Wang, Ruoxi Jia, and Prateek Mittal. Renyi differential privacy of propose-test-release and applications to private and robust machine learning. Advances in Neural Information Processing Systems, 35:38719–38732, 2022.

- [ZDW22] Yuqing Zhu, Jinshuo Dong, and Yu-Xiang Wang. Optimal accounting of differential privacy via characteristic function. In International Conference on Artificial Intelligence and Statistics, pages 4782–4817. PMLR, 2022.

- [ZKHB22] Xiaohua Zhai, Alexander Kolesnikov, Neil Houlsby, and Lucas Beyer. Scaling vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 12104–12113, 2022.

Appendix A Extended Related Work

In this section, we review the related works in privacy accounting, privacy verification, privacy auditing, and we also discuss the connection between our EVR paradigm and the famous Propose-Test-Release (PTR) paradigm.

Privacy Accounting.

Early privacy accounting techniques such as Advanced Composition Theorem [DRV10] only make use of the privacy parameters of the individual mechanisms, which bounds in terms of the privacy parameter for each . The optimal bound for under this condition has been derived [KOV15, MV16]. However, the computation of the optimal bound is #P-hard in general. Bounding only in terms of is often sub-optimal for many commonly used mechanisms [MV16]. This disadvantage has spurred many recent advances in privacy accounting by making use of more statistical information from the specific mechanisms to be composed [ACG+16, Mir17, KJH20, BDLS20, KH21, KJPH21, GLW21, ZDW22, GKKM22, DGK+22, WGZ+22, AGA+23]. All of these works can be described as approximating the expectation in (2) when . For instance, the line of [KJH20, BDLS20, KH21, KJPH21, GLW21, GKKM22, DGK+22] discretize the domain of each and use Fast Fourier Transform (FFT) in order to speed up the approximation of . [ZDW22] tracks the characteristic function of the privacy loss random variables for the composed mechanism and still requires discretization when the mechanisms do not have closed-form characteristic functions. The line of [BDLS20, WGZ+22] uses Central Limit Theorem (CLT) to approximate the distribution of as Gaussian distribution and uses the finite-sample bound to derive the strict upper bound for . We also note that [MSCJ22] also uses Monte Carlo approaches to calculate optimal membership inference bounds. They use a similar Simple MC estimator as the one in Section 4.2. Although their Monte Carlo approach is similar, their error analysis only works for large values of ( as they use sub-optimal concentration bounds.

Privacy Verification.

As we mentioned in Remark 10, some previous works have also studied the problem of privacy verification. Most of the works consider either “white-box setting” where the input description of the DP mechanism is its corresponding randomized Boolean circuits [GNP20, BGG22]. Some other works consider an even more stringent “black-box setting” where the verifier can only query the mechanism [GM18, LO19, BSBV21, GJG+22, LWMIZ22]. In contrast, our MC verifier is designed specifically for those mechanisms where the PRV can be derived, which includes many commonly used mechanisms such as the Subsampled Gaussian mechanism.

Privacy verification via auditing.

Several heuristics have tried to perform DP verification, forming a line of work called auditing differential privacy [JUO20, NST+21, LMF+22, NHS+23, SNJ23]. Specifically, these techniques can verify a claimed privacy parameter by computing a lower bound for the actual privacy parameter, and comparing that with the claimed privacy parameter. The input description of mechanism for DPV, in this case, is a black-box oracle , where the DPV makes multiple queries to and estimates the actual privacy leakage. Privacy auditing techniques can achieve 100% accuracy when (or -FN rate for any ), as the computed lower bound is guaranteed to be smaller than . However, when lies between and the computed lower bound, the DP verification will be wrong. Moreover, such techniques do not have a guarantee for the lower bound’s tightness.

Remark 13 (Connection between our EVR paradigm and the Propose-Test-Release (PTR) paradigm [DL09]).

PTR is a classic differential privacy paradigm introduced over a decade ago by [DL09], and is being generalized in [RZW23, WMW+22]. At a high level, PTR checks if releasing the query answer is safe with a certain amount of randomness (in a private way). If the test is passed, the query answer is released; otherwise, the program is terminated. PTR shares a similar underlying philosophy with our EVR paradigm. However, they are fundamentally different in terms of implementation. The verification step in EVR is completely independent of the dataset. In contrast, the test step in PTR measures the privacy risks for the mechanism on a specific dataset , which means that the test itself may cause additional privacy leakage. One way to think about the difference is that EVR asks “whether is private”, while PTR asks “whether is private”.

Appendix B Proofs for Privacy

*

Proof.

For any mechanism , we denote as the event that , and indicator variable . Note that event implies is -DP.

Thus, we know that

| (1) |

For notation simplicity, we also denote , and .

For any possible event ,

where in the first inequality, we use the definition of differential privacy. Therefore, is -DP. By assumption of , we reach the conclusion. ∎

Appendix C Importance Sampling via Exponential Tilting

Notation Review.

Recall that most of the recently proposed DP accountants are essentially different techniques for estimating the expectation

where each is the privacy loss random variable for , and is a pair of dominating distribution for individual mechanism . In the following text, we denote the product distribution and . Recall from Lemma 5 that is a pair of dominating distributions for the composed mechanism . For notation simplicity, we denote a vector . We slightly abuse the notation and write . Note that . When the context is clear, we omit the dominating pairs and simply write .

Dominating Distribution Pairs for Poisson Subsampled Gaussian Mechanisms.

The dominating distribution pair for Poisson Subsampled Gaussian Mechanisms is a well-known result.

Lemma 14.

For Poisson Subsampled Gaussian mechanism with sensitivity , noise variance , and subsampling rate , one dominating pair is and .

Proof.

See Appendix B of [GLW21]. ∎

That is, is just a 1-dimensional standard Gaussian distribution, and is a convex combination between standard Gaussian and a Gaussian centered at .

Remark 15 (Dominating pair supported on higher dimensional space).

The cost of our approach would not increase (in terms of the number of samples) even if the dominating pair is supported in a high dimensional space. For Monte Carlo estimate, we can see from Hoeffding’s inequality that the expected error rate of estimation is independent of the dimension of the support set of dominating distribution pairs. This means the number of samples we need to ensure a certain confidence interval is independent of the dimension. However, we should also note that although the number of samples does not change, the sampling process itself might be more costly for higher dimensional spaces.

C.1 Importance Sampling for the Composition of Poisson Subsampled Gaussian Mechanisms

Importance Sampling (IS) is a classic method for rare event simulation. It samples from an alternative distribution instead of the distribution of the quantity of interest, and a weighting factor is then used for correcting the difference between the two distributions. Specifically, we can re-write the expression for as follows:

| (2) |

where is the alternative distribution up to the user’s choice. From Equation (2), one can construct an unbiased importance sampling estimator for by sampling from . In this section, we develop a for estimating when composing identically distributed Poisson subsampled Gaussian mechanisms, which is arguably the most important DP mechanism nowadays due to its application in differentially private stochastic gradient descent.

Exponential tilting is a common way to construct alternative sampling distribution for IS. The exponential tilting of a distribution is defined as

where is the moment generating function for . Such a transformation is especially convenient for distributions from the exponential family. For example, for normal distribution , the tilted distribution is , which is easy to sample from.

Without the loss of generality, we consider Poisson Subsampled Gaussian mechanism with sensitivity 1, noise variance , and subsampling rate . Recall from Lemma 14 that the dominating pair in this case is and . For notation simplicity, we denote , and thus . Since each individual mechanism is the same, and . The exponential tilting of with parameter is . We propose the following importance sampling estimator for based on exponential tilting.

Definition 16 (Importance Sampling Estimator for Subsampled Gaussian Composition).

Let the alternative distribution

with . Given a random draw , an unbiased sample for is . We denote as the random variable of the corresponding importance sampling estimator with samples.

We defer the formal justification of the choice of to Appendix C.2. We first give the intuition for why we choose such an alternative distribution .

Intuition for the alternative distribution .

It is well-known that the variance of the importance sampling estimator is minimized when the alternative distribution

The distribution of each privacy loss random variable is light-tailed, which means that for the rare event where , it is most likely that there is only one outlier among all such that is large (which means that is also large), and all the rest of s are small. Hence, a reasonable alternative distribution can just tilt the distribution of a randomly picked , and leave the rest of distributions to stay the same. Moreover, is selected to approximately minimize the variance of (detailed in Appendix C.2). An intuitive way to see it is that , which significantly improves the probability where while also accounting for the fact that decays exponentially fast as increases.

We also empirically verify the advantage of the IS estimator over the SMC estimator. The orange curve in Figure 6 (a) shows the empirical estimate of which quantifies the variance of . Note that corresponds to the case of . As we can see, drops quickly as increases, and eventually converges. We can also see that the selected by our heuristic in Definition 16 (marked as red ‘*’) approximately corresponds to the lowest point of . This validates our theoretical justification for the selection of .

C.2 Intuition of the Heuristic of Choosing Exponential Tilting Parameter

The variance of the IS estimator proposed in Definition 16 is given by

Let . We aim to find that minimizes .

Note that

| (3) |

To simplify the notation, let .

| (4) | ||||

| (5) |

By setting and simplify the expression, we have

| (6) |

As we mentioned earlier, only when , and for such an event it is most likely that there is only one outlier among all such that , and all the rest of . Therefore, a very simple and rough, yet surprisingly effective approximation for the RHS of (6) is

| (7) |

for s.t. . This leads to an approximate solution

| (8) |

Appendix D Sample Complexity for Achieving Target False Positive Rate

To derive the sample complexity for achieving a DP verifier with a target false positive rate, we use Bennett’s inequality.

Lemma 17 (Bennett’s inequality).

Let be independent real-valued random variables with finite variance such that for some almost surely for all . Let . For any , we have

| (9) |

where for .

*

Proof.

For any s.t. , we have

| (10) |

Since , the condition in Bennett’s inequality is satisfied with . Hence, (10) can be upper bounded by

By setting , we have

∎

Appendix E Proofs for Moment Bound

E.1 Overview

As suggested by Theorem 4.3, a good upper bound for (or ) is important for the computational efficiency of MC-based DPV.

We upper bound the higher moment of through the RDP guarantee for the composed mechanism . This is a natural idea since converting RDP to upper bounds for – the first moment of – is a well-studied problem [Mir17, CKS20, ALC+21]. Recall that the RDP guarantee for is equivalent to a bound for for ’s privacy loss random variable for any .

Lemma 18 (RDP-MGF bound conversion [Mir17]).

If a mechanism is -RDP, then .

We convert an upper bound for into the following guarantee for the higher moment of .

theoremvariancebound For any , we have

The proof is deferred to Appendix E.2. The basic idea is to find the smallest constant such that . By setting , our result recovers the RDP-DP conversion from [CKS20]. By setting , we obtain the desired bound for .

Corollary 19.

.

Corollary 19 applies to any mechanisms where the RDP guarantee is available, which covers a wide range of commonly used mechanisms such as (Subsampled) Gaussian or Laplace mechanism. We also note that one may be able to further tighten the above bound similar to the optimal RDP-DP conversion in [ALC+21]. We leave this as an interesting future work.

Next, we derive the upper bound for for Poisson Subsampled Gaussian mechanism.

theoremvarboundisholder For any positive integer , and for any s.t. , we have where is an upper bound for detailed in Appendix E.3.

The proof is based on applying Hölder’s inequality to the expression of , and then bound the part where is involved: . We can bound through Theorem 18.

Figure 6 (a) shows the analytical bound from Corollary 19 and Theorem 19 compared with empirically estimated and . As we can see, the analytical bound for for relatively large is much smaller than the bound for (i.e., in the plot). Moreover, we find that the which minimizes the analytical bound (the blue ‘*’) is close to the selected by our heuristic (the red ‘*’). For computational efficiency, one may prefer to use that minimizes the analytical bound. However, the heuristically selected is still useful when one simply wants to estimate and does not require the formal, analytical guarantee for the false positive rate. We see such a scenario when we introduce the MC accountant in Section 5. We also note that such a discrepancy (and the gap between the analytical bound and empirical estimate) is due to the use of Hölder’s inequality in bounding . Further tightening the bound for is important for future work.

E.2 Moment Bound for Simple Monte Carlo Estimator

*

Proof.

where is the exponential tilting of . Define . When . When , the derivative of with respect to is

It is easy to see that the maximum of is achieved at , and we have

Overall, we have

∎

E.3 Moment Bound for Importance Sampling Estimator

In this section, we first prove two possible upper bounds for in Theorem E.3 and Theorem E.3. We then combine these two bounds in Theorem 19 via Holder’s inequality.

theoremvarboundisjs For any , we have

Proof.

| (11) |

Note that

Lemma 20.

When and , we have

Proof.

Since , we have

Hence,

Hence,

where the first inequality is due to Jensen’s inequality. ∎

theoremvarboundismax For any positive integer , we have

| (12) |

where is an upper bound for the MGF of privacy loss random variable of truncated Gaussian mixture .

Proof.

Similar to Theorem E.3, the goal is to bound

Note that

| (13) |

where .

Further note that

| (14) | ||||

| (15) |

where (14) is obtained through integration by parts.

Now, as we can see from (15), the question reduces to bound for any . It might be easier to write

and we know that

| (16) | ||||

| (17) |

as all s are i.i.d. random samples from , where is the CDF of Gaussian distribution with mean and variance .

It remains to bound the conditional probability , it may be easier to see it in this way:

where the last step is due to Chernoff bound which holds for any . Now we only need to bound the moment generating function for , where is the truncated distribution of . We note that this is equivalent to bounding the Rényi divergence for truncated Gaussian mixture distribution.

Recall that . For any that is a positive integer, we have

| (18) |

where . Note that the above expression can be efficiently computed. Denote the above results as (18). Hence

| (19) |

Now we have

Remark 21.

In practice, we can further improve the bound by moving the minimum operation inside the integral:

| (20) |

Of course, this bound will be less efficient to compute.

Theorem 19 (Generalizing Theorem E.3 and Theorem E.3 via Holder’s inequality).

For any positive integer , and for any s.t. , we have where is an upper bound for the MGF of privacy loss random variable of truncated Gaussian mixture defined in (18).

Appendix F Proofs for Utility

F.1 Overview

While one may be able to bound the false negative rate through similar techniques that we bound the false positive rate, i.e., applying the concentration inequalities, the guarantee may be loose. As a formal, strict guarantee for is not required, we provide a convenient heuristic of picking an appropriate such that is approximately smaller than .

For any mechanism such that , we have

and in the meantime, if , we have

Our main idea is to find such that

In this way, we know that is upper bounded by for the same amount of samples we discussed in Section 4.3.

Observe that (or with a not too large ) is typically a highly asymmetric distribution with a significant probability of being zero, and the probability density decreases monotonically for higher values. Under such conditions, we prove the following results:

Theorem 22 (Informal).

When , we have

The proof is deferred to Appendix F.2. Therefore, by setting , one can ensure that is also (approximately) upper bounded by . We empirically verify the effectiveness of such a heuristic by estimating the actual false negative rate. As we can see from Figure 6 (b), the dashed curve is much higher than the two solid curves, which means that the false negative rate is a very conservative bound.

Remark 23 (For the halting case).

In this section, we develop techniques for ensuring the false negative rate (i.e., the rejection probability) is around when the proposed privacy parameter is close to the true . In the experiment, we use the state-of-the-art FFT accountant to produce , which is very accurate as we can see from Figure 1. Hence, the rejection probability in the experiment is around , which means the probability of rejection is close to the probability of catastrophic failure for privacy.

If one is still concerned that the rejection probability is too large, we can further reduce the probability as follows: we run two instances of EVR paradigm simultaneously; if both of the instances are passed, we randomly pick one and release the output. If either one of them is passed, we release the passed instance. It only fails when both of the instances fail. By running two instances of the EVR paradigm in parallel, the false positive rate (i.e., the final ) will be only doubled, but the probability of rejection will be squared.

We can also introduce a variant of our EVR paradigm that better deals with the failure case: whenever we face the “rejection”, we run a different mechanism that is guaranteed to be -DP (e.g., by adjusting the subsampling rate and/or noise multiplier in DP-SGD). Moreover, we use FFT accountant to obtain a strict privacy guarantee upper bound for the original mechanism , where . We use and to denote the false negative and false positive rate of the privacy verifier used in EVR paradigm. If the original mechanism is indeed -DP, then for any subset , for this augmented EVR paradigm we have

If the original mechanism is not -DP, then we have

Hence, this augmented EVR algorithm will be , and if is around , then this extra factor will be very small. We can also adjust the privacy guarantee for such that the privacy guarantees for the two cases are the same, which can further optimize the final privacy cost.

F.2 Technical Details

In this section, we provide theoretical justification for the heuristic of setting .

For notation convenience, throughout this section, we talk about , but the same argument also applies to with a not too large , unless otherwise specified. We use to denote the density of at . Note that .

We make the following assumption about the distribution of .

Assumption 24.

.

While intuitive, this assumption is hard to analyze for the case of Subsampled Gaussian mechanism. Therefore, we instead provide a condition for which the assumption holds for Pure Gaussian mechanism.

Lemma 25.

Fix . When , Assumption 24 holds.

Proof.

The PRV for the composition of Gaussian mechanism is .

which is clearly when . ∎

We also empirically verify this assumption for Subsampled Gaussian mechanism as in Figure 7. As we can see, the selected by our heuristic (the red star) has which matches our principle of selecting . The minimizes the analytical bound (which we are going to use in practice) achieves .

Our goal is to show that for large . For notation simplicity, we denote in the remaining of the section. We also denote

Note that . We also write and to indicate the truncated distribution of on and , respectively.

We first construct an alternative random variable with the following distribution:

| (23) |

This is a valid probability due to Assumption 24, as . The distribution of is illustrated in Figure 8. Note that is a symmetric distribution with .

Similar to the notation of , we write . We show an asymmetry result for in terms of .

Lemma 26.

For any , we have

| (24) |

Proof.

Note that the above argument holds trivially when . For , we use the induction argument. Suppose we have

| (25) |

for any . That is,

| (26) |

for any .

where the two inequalities are due to the induction assumption. ∎

Now we come back and analyze by using Lemma 26.

Similarly,

Overall, we have

| (27) |

for any . Note that the above argument does not require and to be correlated. To maximize , we can sample for a given as follows: for each ,

-

1.

If , then let .

-

2.

If , then with probability , output for ; with probability output for ; with probability output .

Denote the random variable . It is not hard to see that . By Bennett’s inequality, with , we have

if we set . Let , then by setting and , we have .

To summarize, when we set with the heuristic, we have

Appendix G Pseudocode for Privacy Accounting for DP-SGD with EVR Paradigm

In this section, we outline the steps of privacy accounting for DP-SGD with our EVR paradigm. Recall from Lemma 14 that for subsampled Gaussian mechanism with sensitivity , noise variance , and subsampling rate , one dominating pair is and . Hence, for DP-SGD with iterations, the dominating pair is the product distribution and where each and follow the same distribution as and .333It can also be extended to the heterogeneous case easily.

Appendix H Experiment Settings & Additional Results

H.1 GPU Acceleration for MC Sampling

MC-based techniques are well-suited for parallel computing and GPU acceleration due to their nature of repeated sampling. One can easily utilize PyTorch’s CUDA functionality, e.g.,

| torch.randn(size=(k,m)).cuda()*sigma+mu |

, to significantly boost the computational efficiency. Figure 9 (a) shows that when using a NVIDIA A100-SXM4-80GB GPU, the execution time of sampling Gaussian mixture () can be improved by times compared with CPU-only scenario. Figure 9 (b) shows the predicted runtime for different target false positive rates for . We vary and set the target false positive rate as the smallest that is greater than , where and is positive integer. We set , and are set as the heuristics introduced in the previous sections. The runtime is predicted by the number of required samples for the given false positive rate. As we can see, even when we target at false positive rate (which means that ), the clock time is still acceptable (around 3 hours).

H.2 Experiment for Evaluating EVR Paradigm

H.2.1 Settings

For Figure 1 & Figure 3, the FFT-based method has hyperparameter being set as . For the GDP-Edgeworth accountant, we use the second-order expansion and uniform bound, following [WGZ+22].

For Figure 4 (as well as Figure 11), the BEiT [BDPW21] is first self-supervised pretrained on ImageNet-1k and then trained finetuned on ImageNet-21k, following the state-of-the-art approach in [PTS+22]. For DP-GD training, we set as 28.914, clipping norm as 1, learning rate as 2, and we train for at most 60 iterations, and we only finetune the last layer on CIFAR-100.

H.2.2 Additional Results

curve. We show additional results for a more common setting in privacy-preserving machine learning where one set a target and try to find for different , the number of individual mechanisms in the composition. We use to stress that the PRV is for the composition of mechanisms. Such a scenario can happen when one wants to find the optimal stopping iteration for training a differentially private neural network.

Figure 10 shows such a result for Poisson Subsampled Gaussian where we set subsampling rate 0.01, , and . We set for the FFT method. The estimate in this case is obtained by fixing and find the corresponding estimate for through FFT-based method [GLW21]. As we can see, the EVR paradigm achieves a much tighter privacy analysis compared with the upper bound derived by FFT-based method. The runtime of privacy verification in this case is minutes for all s.

Privacy-Utility Tradeoff. We show additional results for the privacy-utility tradeoff curve when finetuning ImageNet-pretrained BEiT on CIFAR100 dataset with DP stochastic gradient descent (DP-SGD). For DP-SGD training, we set as 5.971, clipping norm as 1, learning rate as 0.2, momentum as , batch size as 4096, and we train for at most 360 iterations (30 epochs). We only finetune the last layer on CIFAR-100.

As shown in Figure 11 (a), the EVR paradigm provides a better utility-privacy tradeoff compare with the traditional upper bound method. In Figure 11 (b), we show the runtime of DP verification when and we set according to Theorem 12 (which ensures EVR’s failure probability is negligible). The runtime is estimated on an NVIDIA A100-SXM4-80GB GPU. As we can see, it only takes a few minutes for privacy verification, which is short compared with hours of model training.

H.3 Experiment for Evaluating MC Accountant

H.3.1 Settings

Evaluation Protocol. We use to stress that the PRV is for the composition of Poisson Subsampled Gaussian mechanisms. For the offline setting, we make the following two kinds of plots: (1) the relative error in approximating (for fixed ), and (2) the relative error in (for fixed ), where is the inverse of from (2). For the online setting, we make the following two kinds of plots: (1) the relative error in approximating (for fixed ), and (2) cumulative time for privacy accounting until th iteration.

MC Accountant. We use the importance sampling technique with the tilting parameter being set according to the heuristic described in Definition 16.

Baselines. We compare MC accountant against the following state-of-the-art DP accountants with the following settings:

-

The state-of-the-art FFT-based approach [GLW21]. The setting of and is specified in the next section.

-

CLT-based GDP accountant [BDLS20].

-

GDP-Edgeworth accountant with second-order expansion and uniform bound.

-

The Analytical Fourier Accountant based on characteristic function [ZDW22], with double quadrature approximation as this is the practical method recommended in the original paper.

H.3.2 Additional Results for Online Accounting

Figure 12 and 13 show the online accounting results for and , respectively. For the setting of , we can see that the MC accountant achieves a comparable performance with a shorter runtime. For the setting of , we can see that the MC accountant achieves significantly better performance compared to the state-of-the-art FFT accountant (and again, with a shorter runtime). This showcases the MC accountant’s efficiency and accuracy in online setting.

H.3.3 Additional Results for Offline Accounting

In this experiment, we set the number of samples for MC accountant as , and the parameter for FFT-based method as . The parameters are controlled so that the MC accountant is faster than FFT-based method, as shown in Table 1. Figure 14 (a) shows the offline accounting results for when we set . As we can see, the performance of MC accountant is comparable with the state-of-the-art FFT method. In Figure 15 (a), we decreases to . Compared against baselines, MC approximations are significantly more accurate for larger , compared with the FFT accountant. Figure 14 (b) shows the offline accounting results for when we set . Similarly, MC accountant performs comparably as FFT accountant. However, when we decrease to and to (Figure 15 (b)), MC accountant significantly outperforms FFT accountant. This illustrates that MC accountant performs well in all regimes, and is especially more favorable when the true value of is tiny.

| AFA | GDP | GDP-E | FFT | MC-IS |

|---|---|---|---|---|

| 18.63 | 1.50 | 3.01 | 2.31 |