2128 \lmcsheadingLABEL:LastPageSep. 27, 2021Apr. 24, 2025

[a] [b] [a]

A robust graph-based approach to observational equivalence

Abstract.

We propose a new step-wise approach to proving observational equivalence, and in particular reasoning about fragility of observational equivalence. Our approach is based on what we call local reasoning. The local reasoning exploits the graphical concept of neighbourhood, and it extracts a new, formal, concept of robustness as a key sufficient condition of observational equivalence. Moreover, our proof methodology is capable of proving a generalised notion of observational equivalence. The generalised notion can be quantified over syntactically restricted contexts instead of all contexts, and also quantitatively constrained in terms of the number of reduction steps. The operational machinery we use is given by a hypergraph-rewriting abstract machine inspired by Girard’s Geometry of Interaction. The behaviour of language features, including function abstraction and application, is provided by hypergraph-rewriting rules. We demonstrate our proof methodology using the call-by-value lambda-calculus equipped with (higher-order) state.

1. Introduction

1.1. Context and motivation

Observational equivalence [MJ69] is an old and central question in the study of programming languages. Two executable programs are observationally equivalent when they have the same behaviour. Observational equivalence between two program fragments (aka. terms) is the smallest congruence with respect to arbitrary program contexts. By formally establishing observational equivalence, one can justify compiler optimisation, and verify and validate programs.

There are two mathematical challenges in proving observational equivalence. Firstly, universal quantification over contexts is unwieldy. This has led to various indirect approaches to observational semantics. As an extremal case, denotational semantics provides a model-theoretic route to observational equivalence. There are also hybrid approaches that employ both denotational and operational techniques, such as Kripke logical relations [Sta85] and trace semantics [JR05]. Moreover, an operational and coinductive approach exists, under the name of applicative bisimilarity [Abr90].

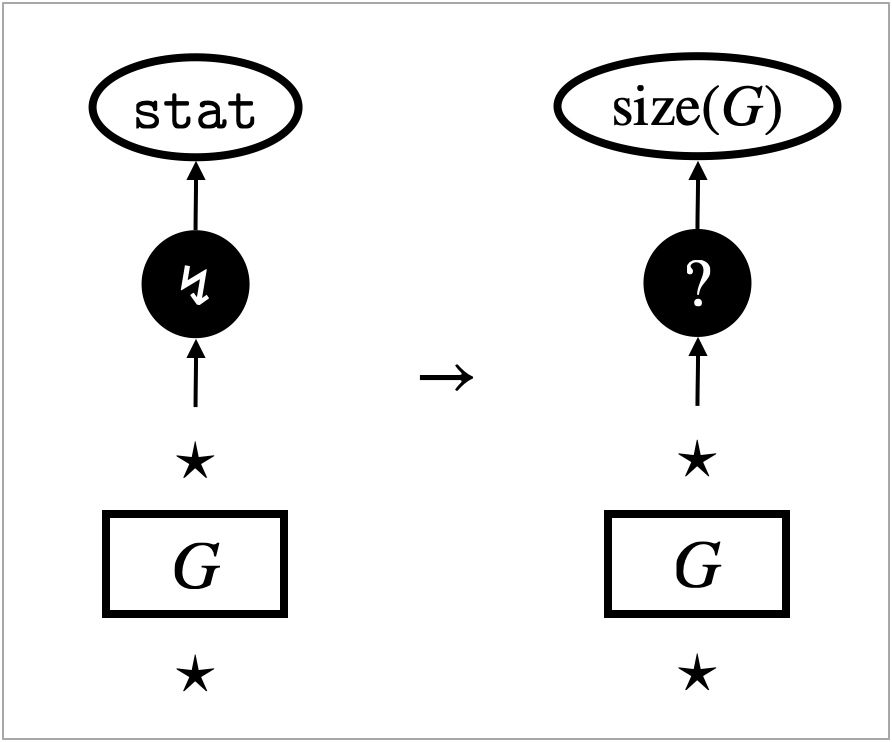

The second challenge is fragility of observational equivalence. The richer a programming language is, the more discriminating power program contexts have and hence the less observational equivalences hold in the language. For example, the beta-law is regarded as the fundamental observational equivalence in functional programming. However, it can be violated in the presence of a memory inspection feature like the one provided by the OCaml garbage-collection (Gc) module. A function that returns the size of a given program enables contexts to distinguish from , for example111See a concrete example written in OCaml, on the online platform Try It Online: https://bit.ly/3TqnGOW.

The fragility of observational equivalence extends to its proof methodologies. There have been studies of the impact that language features have on semantics and hence on proof methodologies for observational equivalence. The development of game semantics made it possible to give combinatorial, syntax-independent and orthogonal characterisations for classes of features such as state and control, e.g. the so-called “Abramsky cube” [Abr97, Ghi23], or to replace the syntactic notion of context by an abstracted adversary [GT12]. A classification [DNB12] and characterisation [DNRB10] of language features and their impact on reasoning has also been undertaken using logical relations. Applicative bisimilarity has been enriched to handle effects such as algebraic effects, local state, names and continuations [SKS07, KLS11, DGL17, SV18].

1.2. Overview and contribution

What is missing and desirable seems a general semantical framework with which one can directly analyse fragility, or robustness, of observational equivalences. To this end, we introduce a graphical abstract machine that implements focussed hypernet rewriting. We then propose a radically new approach to proving observational equivalence that is based on step-wise and local reasoning and centred around a concept of robustness. All these concepts will be rigorously defined in the paper.

The main contribution of the paper is rather conceptual, showing how the graphical concept of neighbourhood can be exploited to reason about observational equivalence, in a new and advantageous way. The technical development of the paper might seem quite elaborate, but this is because we construct a whole new methodology from scratch: namely, focussed hypernet rewriting and reasoning principles for it. These reasoning principles enable us to analyse fragility, or robustness, of observational equivalences in a formal way.

We introduce and use hypernets to represent (functional) programs with effects. Hypernets are an anonymised version of abstract syntax trees, where variables are simply represented as connections. Formally, hypernets are given by hierarchical hypergraphs. Hierarchy allows a hypergraph to be an edge label recursively. An extensive introduction to hypernets and rewriting of them can be found in the literature [GZ23].

Given a hypernet that represents a term, its evaluation is modelled by step-by-step traversal and update of the hypernet. Traversal steps implement depth-first search on the hypernet for a redex, and each update step triggers application of a rewrite rule to the hypernet. Traversal and update are interwoven strategically using a focus that is simply a dedicated edge passed around the hypernet. Importantly, updates are always triggered by a certain focus, and designed to only happen around the focus. We call this model of evaluation focussed hypernet rewriting.

There are mainly two differences compared with conventional reduction semantics. The first difference is the use of hypernets instead of terms. This makes renaming of variables irrelevant. The second difference is the use of the focus instead of evaluation contexts. In conventional reduction semantics, redexes are identified using evaluation contexts. Whenever the focus triggers an update of a hypernet, its position in the hypernet coincides with where the hole is in an evaluation context.

A new step-wise approach.

This work takes a new coinductive, step-wise, approach to proving observational equivalence. We introduce a novel variant of the weak simulation dubbed counting simulation. We demonstrate that, to prove observational equivalence, it suffices to construct a counting simulation that is closed under contexts by definition. This approach is opposite to the known coinductive approach which uses applicative bisimilarity; one first constructs an applicative bisimulation and then proves that it is a congruence, typically using Howe’s method [How96].

Local reasoning.

In combination with our new step-wise approach, focussed hypernet rewriting facilitates what we call local reasoning. Our key observation is that, to obtain the counting simulation that is closed under contexts by definition, it suffices to simply trace sub-graphs and analyse their interaction with the focus. The interaction can namely be analysed by inspecting updates that happen around the focus and how these updates can interfere with the sub-graphs of interest. The reasoning principal here is the graphical concept of neighbourhood, or graph locality.

The local reasoning is a graph counterpart of analysing interaction between (sub-)terms and contexts using the conventional reduction semantics. In fact, it is not just a counterpart but an enhancement in two directions. Firstly, sub-graphs are more expressive than sub-terms; sub-graphs can represent parts of a program that are not necessarily well-formed. Secondly, the focus can indicate which part of a context is relevant in the interaction between the context and a term, which is not easy to make explicit in the conventional semantics that uses evaluation contexts.

Robustness.

Finally, local reasoning extracts a formal concept of robustness in proving observational equivalence. Robustness is identified as the key sufficient condition that ensures two sub-graphs that we wish to equate interact with updates of a hypernet, which is triggered by the focus, in the same way; for example, if one sub-graph is duplicated (or discarded), the other is also duplicated (or discarded).

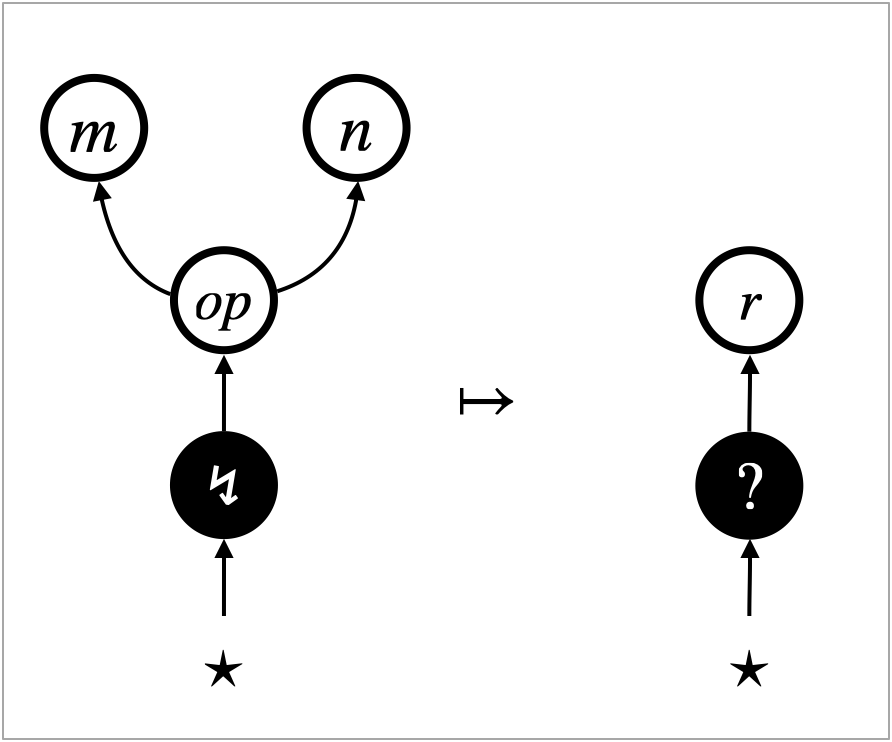

The concept of robustness helps us gain insights into fragility of observational equivalence. If robustness of two sub-graphs fails, we obtain a counterexample, which is given by a rewrite rule that interferes with the two sub-graphs in different ways. Let be the two different results of interference (i.e. is the result of updating , and is the result of updating ). There are two possibilities.

-

(1)

The sub-graphs are actually observationally equivalent. In this case, the counterexample suggests that the two different results should first be equated. The observational equivalence we wish to establish is likely to depend on the ancillary observational equivalence .

-

(2)

The observational equivalence fails too. In this case, the counterexample provides the particular computation that violates the equivalence, in terms of a rewrite rule. We can conclude that the language feature that induces the computation violates the observational equivalence.

Generalised contextual equivalence.

Using focussed hypernet rewriting, we propose a generalised notion of contextual equivalence. The notion has two parameters: a class of contexts and a preorder on natural numbers. The first parameter enables us to quantify over syntactically restricted contexts, instead of all contexts as in the standard notion. This can be used to identify a shape of contexts that respects or violates certain observational equivalences, given that not necessarily all arbitrarily generated contexts arise in program execution. The second parameter, a preorder on natural numbers, deals with numbers of steps it takes for program execution to terminate. Taking the universal relation recovers the standard notion of contextual equivalence. Another instance is contextual equivalence with respect to the greater-than-or-equal relation on natural numbers, which resembles the notion of improvement [San95, ADV20, ADV21] that is used to establish equivalence and also to compare efficiency of abstract machines. This instance of contextual equivalence is useful to establish that two programs have the same observable execution result, and also that one program terminates with fewer steps than the other.

1.3. Organisation of the paper

Section 2 provides a gentle introduction to our graph-based approach to modelling program evaluation, and reasoning about observational equivalence with the key concepts of locality and robustness. Section 3 formalises the graphs we use, namely hypernets. The rest of the paper is in two halves.

In the first half, we develop our reasoning framework, targeting the linear lambda-calculus. Although linear lambda-terms have restricted expressive power, they are simple enough to demonstrate the development throughout. Section 4 presents the hypernet representation of linear lambda-terms. Section 5 then presents our operational semantics, i.e. focussed hypernet rewriting. Section 6 formalises it as an abstract machine called universal abstract machine (UAM).

Section 7 sets the target of our proof methodology, introducing the generalised notion of contextual equivalence. Section 8 presents our main technical contributions: it formalises the concept of robustness, and presents our main technical result which is the sufficiency-of-robustness theorem (6).

In the second half, we extend our approach to the general (non-linear) lambda-calculus equipped with store. Section 9 describes how the hypernet representation can be adapted. Section 10 shows how the UAM can be extended accordingly, and presents the copying UAM. Section 11 formalises observational equivalence between lambda-terms by means of contextual equivalence between hypernets. Section 12 then demonstrates our approach by proving some example equivalences for the call-by-value lambda-calculus extended with state. The choice of the language here is pedagogical; our methodology can accommodate other effects as long as they are deterministic.

Finally, Section 13 discusses related and future work, concluding the paper. Some details of proofs are presented in Appendix.

2. A gentle introduction

2.1. Hypernets

Compilers and interpreters deal with programs mainly in the form of an abstract syntax tree (AST) rather than text. It is broadly accepted that such a data structure is easier to manipulate algorithmically. Somewhat curiously perhaps, reduction semantics (or small-step operational semantics), which is essentially a list of rules for program manipulation, is expressed using text rather than the tree form. In contrast, our graph-based semantics is expressed as algorithmic manipulations of the data structure that represents syntax.

Let us demonstrate our graphical representation, using the beta-law

| (1) |

in the linear lambda-calculus. We use colours to clarify some variable scopes. This law substitutes for the variable , and in doing so, the bound variable has to be renamed to so it does not capture the variable in .

Our first observation is that ASTs are not satisfactory to represent syntax, when it comes to define operational semantics. They contain more syntactic details than necessary, namely by representing variables using names. This makes an operation on terms like substitution a global affair. To avoid variable capturing, substitution needs to clarify the scope of each variable and appropriately rename some variables.

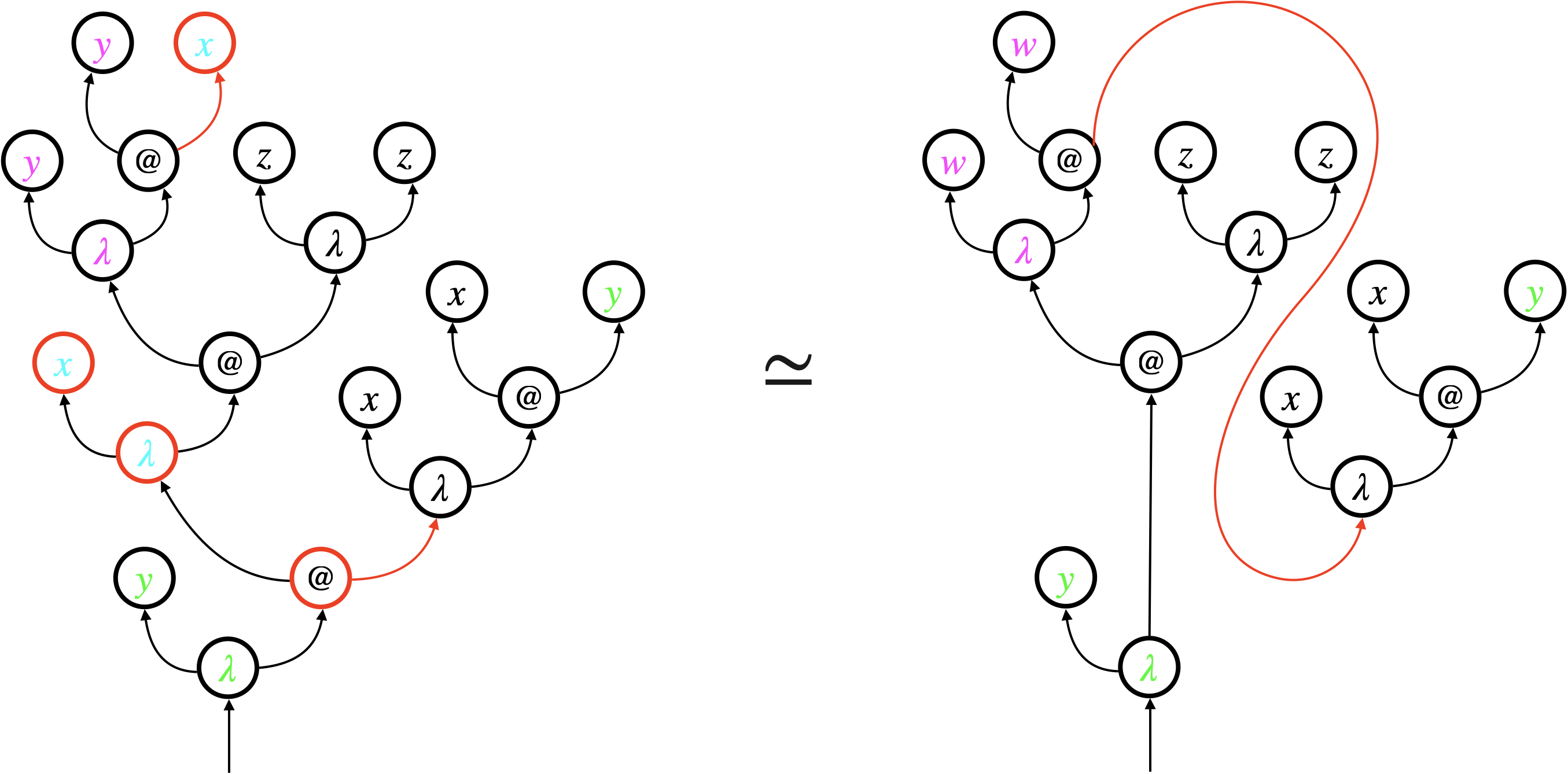

Figure 1a shows the beta-law (1) using ASTs. The scope of each variable is not obvious in the ASTs, which is why we keep using colours to distinguish variable scopes. The law deletes the four red nodes of the left AST, and connects two red arrows to represent substitution for . Additionally, all occurrences of the variable has to be renamed to .

We propose hypernets as an alternative graph representation. Hypernets, inspired by proof nets [Gir87], replace variable names with virtual connection, and hence keep variables anonymous. Binding structures and scopes are made explicit by (dashed) boxes around sub-graphs.

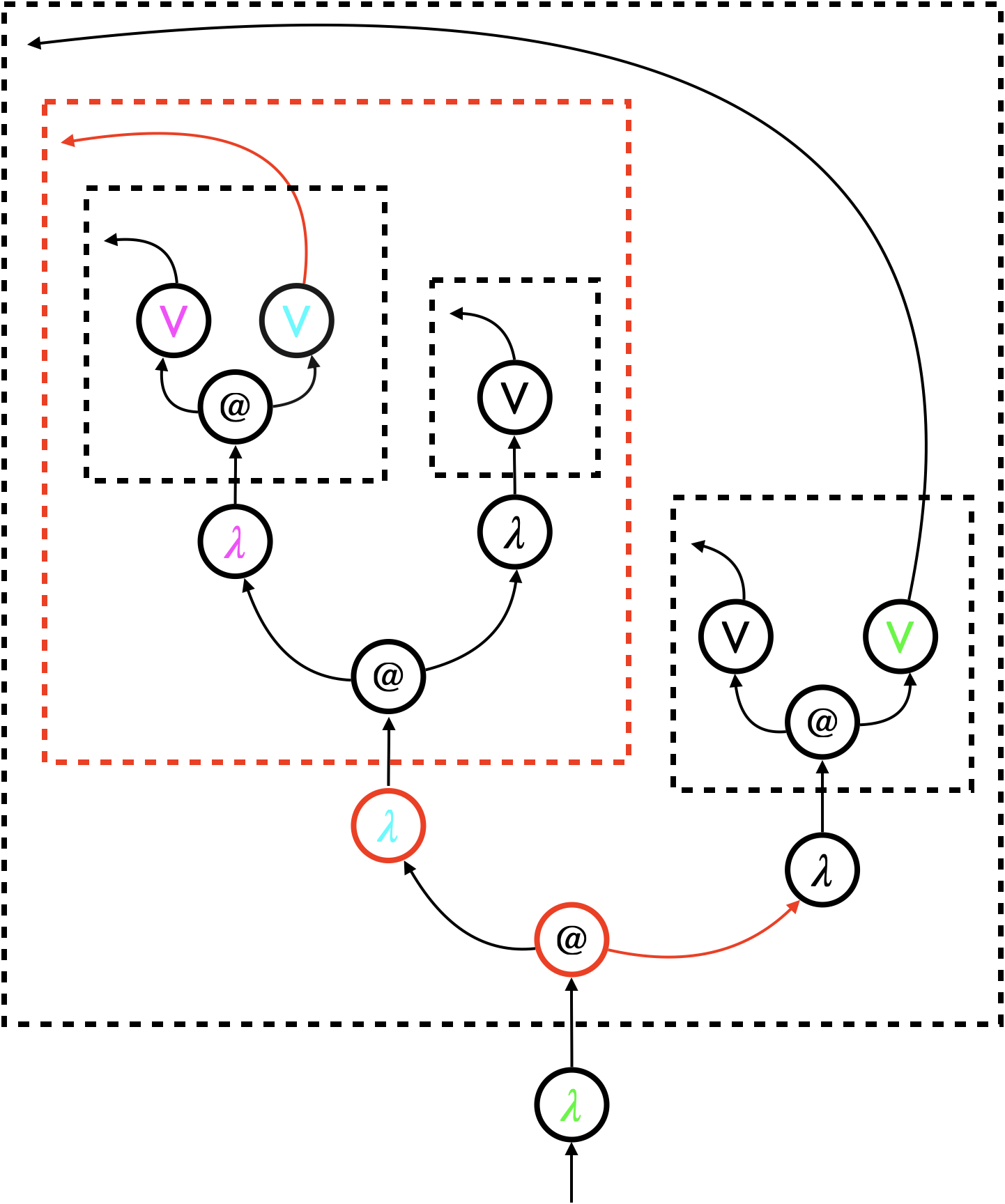

Figure 1b shows the same beta-law (1) using hypernets instead of ASTs. Each bound variable is simply represented by an arrow that points at the left edge of the associated dashed box. For example, the upper one of the two red arrows in the left hypernet represents the bound variable . It points at the left edge of the red dashed box that represents the scope of the variable. The dashed box is connected to the corresponding binder ().

The beta-law requires relatively local changes to hypernets. In Figure 1b, the two red nodes are deleted, the associated red dashed box is also deleted, and the two red arrows are connected to represent substitution for . There is no need for renaming , as it is simply represented by an anonymous arrow.

Remark 1 (Arrows representing bound variables).

In hypernets, the arrow representing a bound variable points at the left edge of the associated dashed box. In other graphical notations (e.g. proof nets [Gir87]), the bound variable would be connected to the corresponding binder (), as shown by red arrows in Figure 1c. We treat these red arrows as mere decorations, and exclude them from the formalisation of hypernets. We find that boxes suffice to delimit the scope of variables and sub-terms. Exclusion of decorations also simplifies the formalisation by reducing the number of loops in each hypernet. ∎

2.2. Focussed hypernet rewriting

The main difference between a law (an equation) and a reduction is that the former can be applied in any context, at any time, whereas the latter must be applied strategically, in a particular (evaluation) context and in a particular order. Different reduction strategies, for instance, make different programming languages out of the same calculus.

The question to be addressed here is how to define strategies for determining redexes in hypernets. Our operational semantics, i.e. focussed hypernet rewriting, combines graph traversal with update, and exploits the traversal to search for a redex.

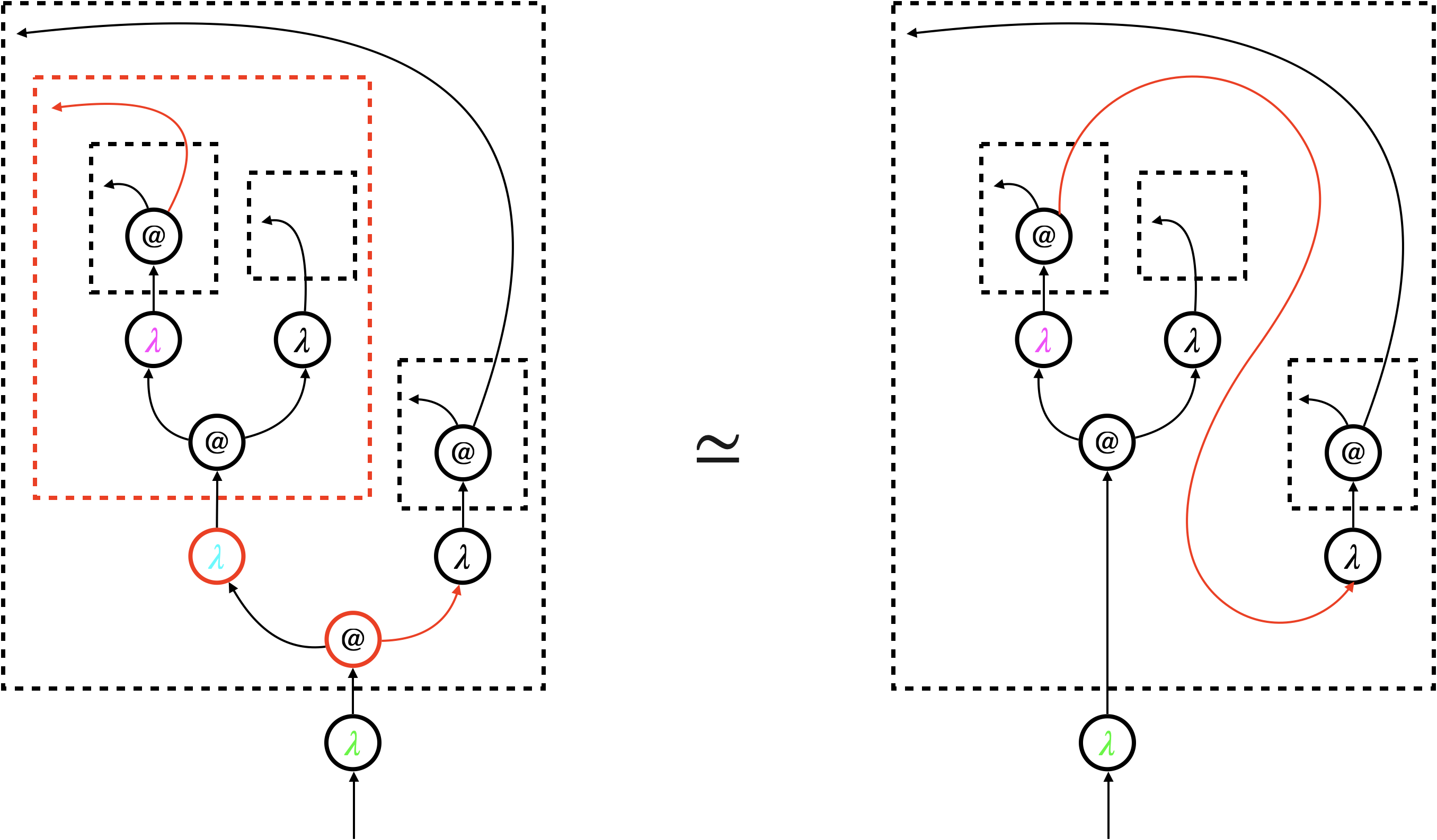

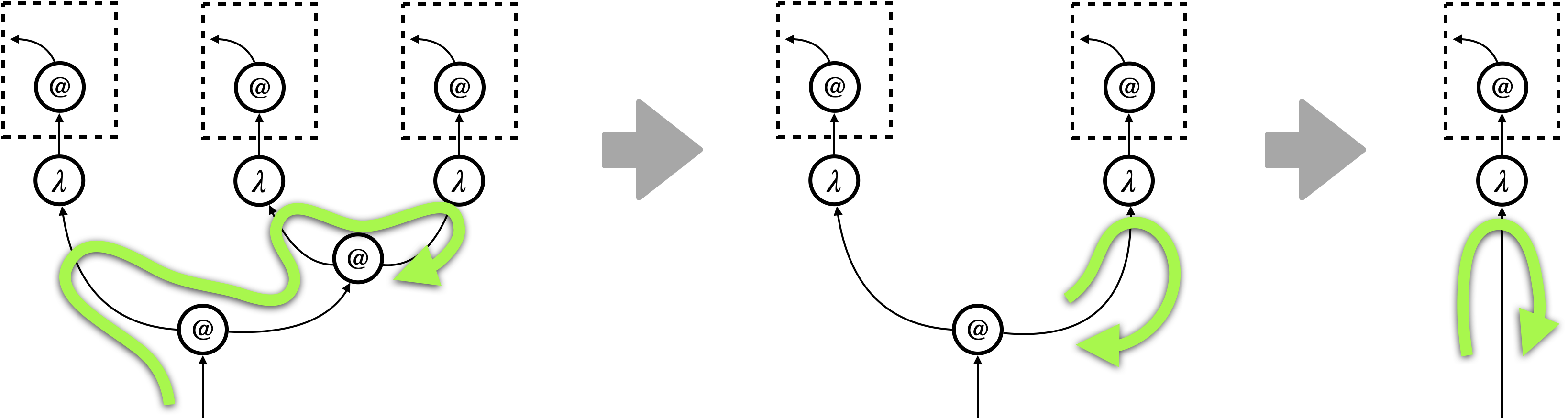

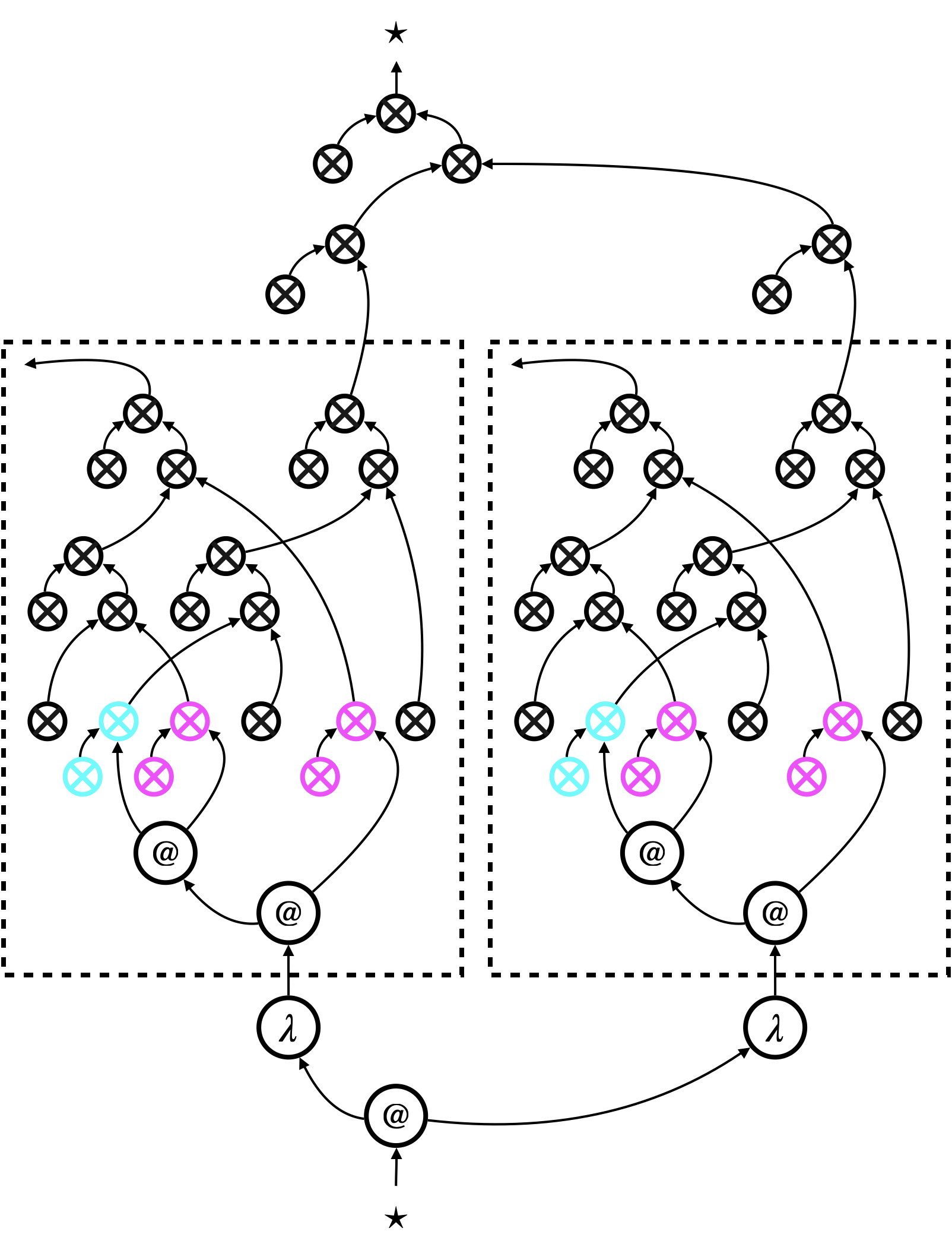

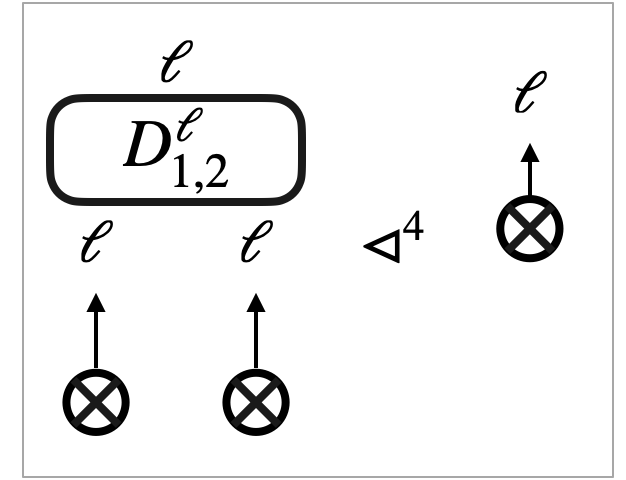

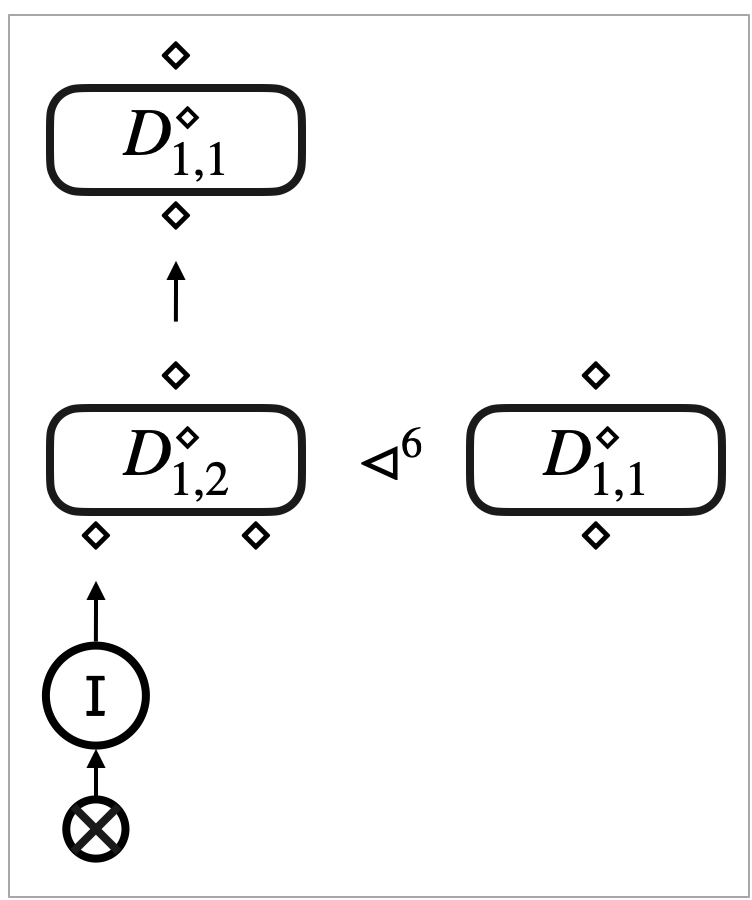

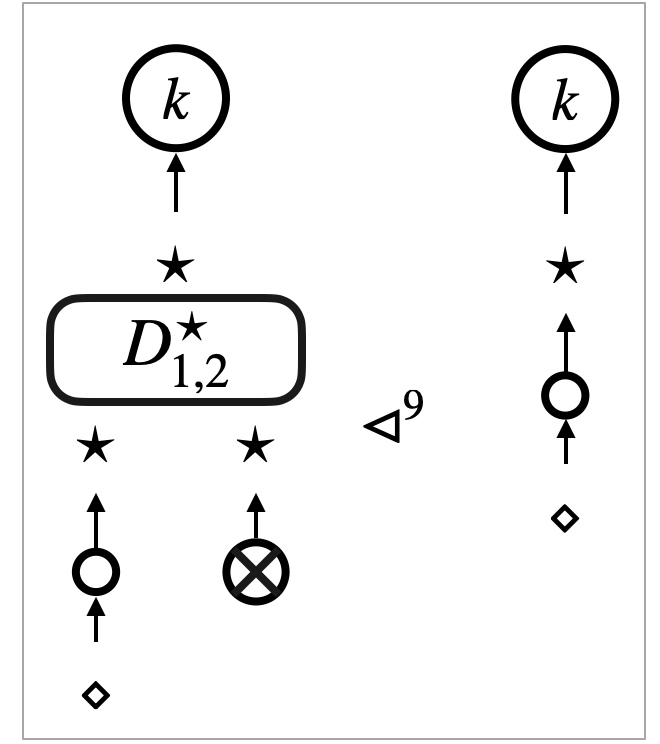

Let us illustrate focussed hypernet rewriting, using the call-by-value reduction of the linear lambda-term as shown in Figure 2. The thicker green arrows are not part of the hypernets but they show the traversal. The reduction proceeds as follows.

-

(1)

The depth-first traversal witnesses that the abstraction is a value, and that the sub-term contains two abstractions and it is ready for the beta-reduction. In the reduction, an application node () and its matching abstraction node () are deleted, and the associated dashed box is removed. The argument is then connected to the bound variable , yielding the second hypernet.

-

(2)

The traversal continues on the resultant hypernet (representing ), confirming that the result of the beta-reduction is a value. Note that the abstraction has already been inspected in the previous step, so the traversal does not repeat the inspection. It only witnesses the abstraction at this stage. The beta-reduction is then triggered, yielding the third and final hypernet representing .

-

(3)

The traversal confirms that the result of the beta-reduction is a value, and it finishes.

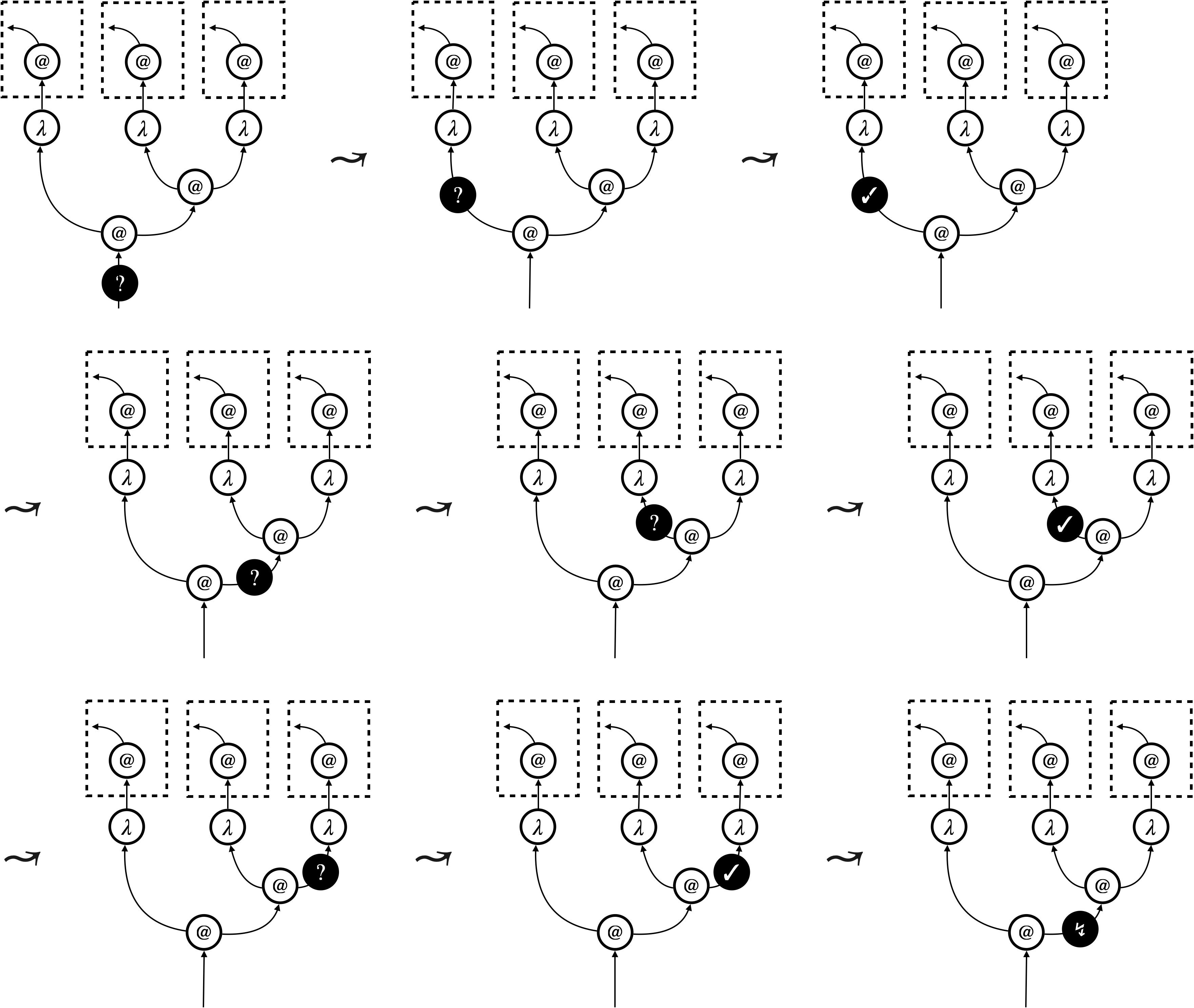

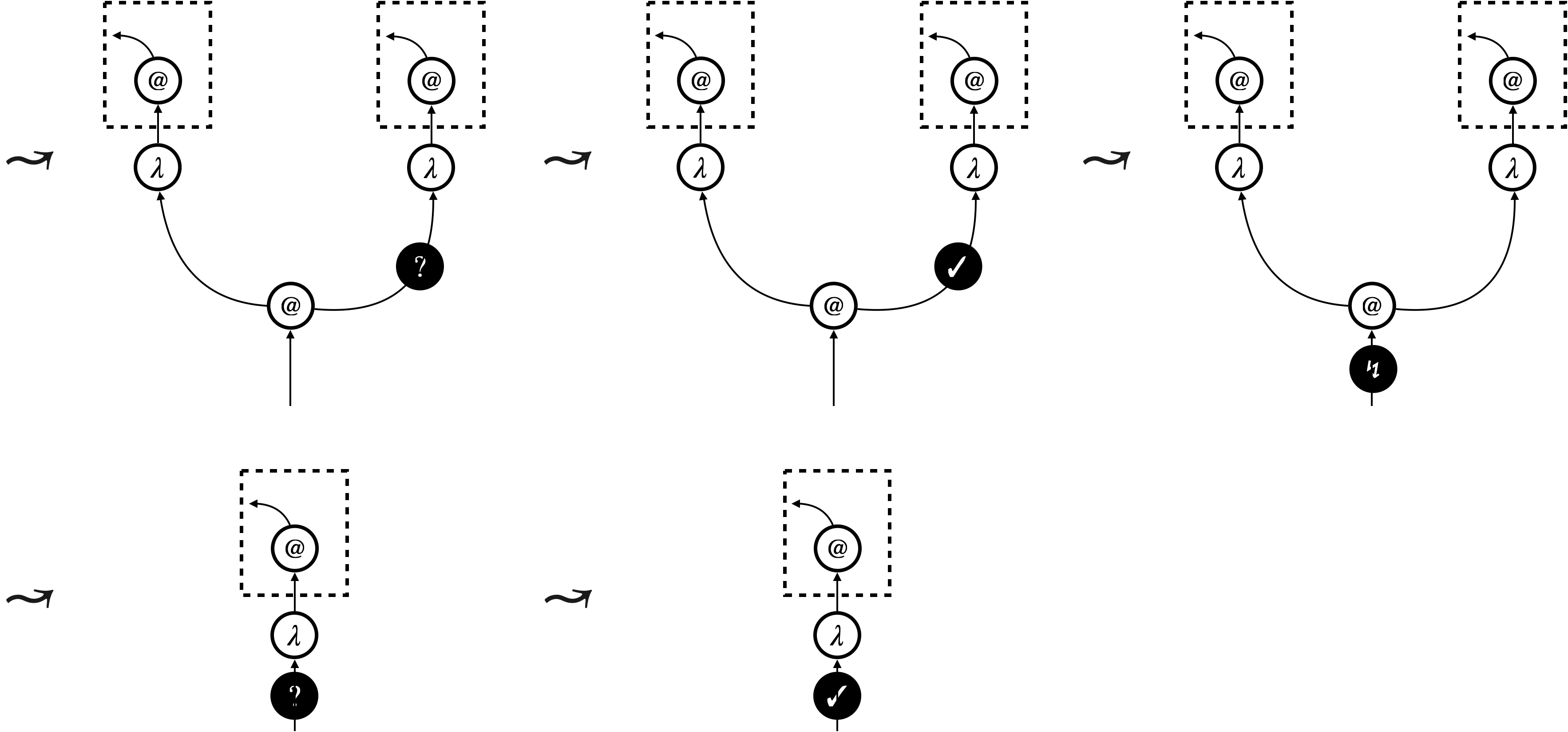

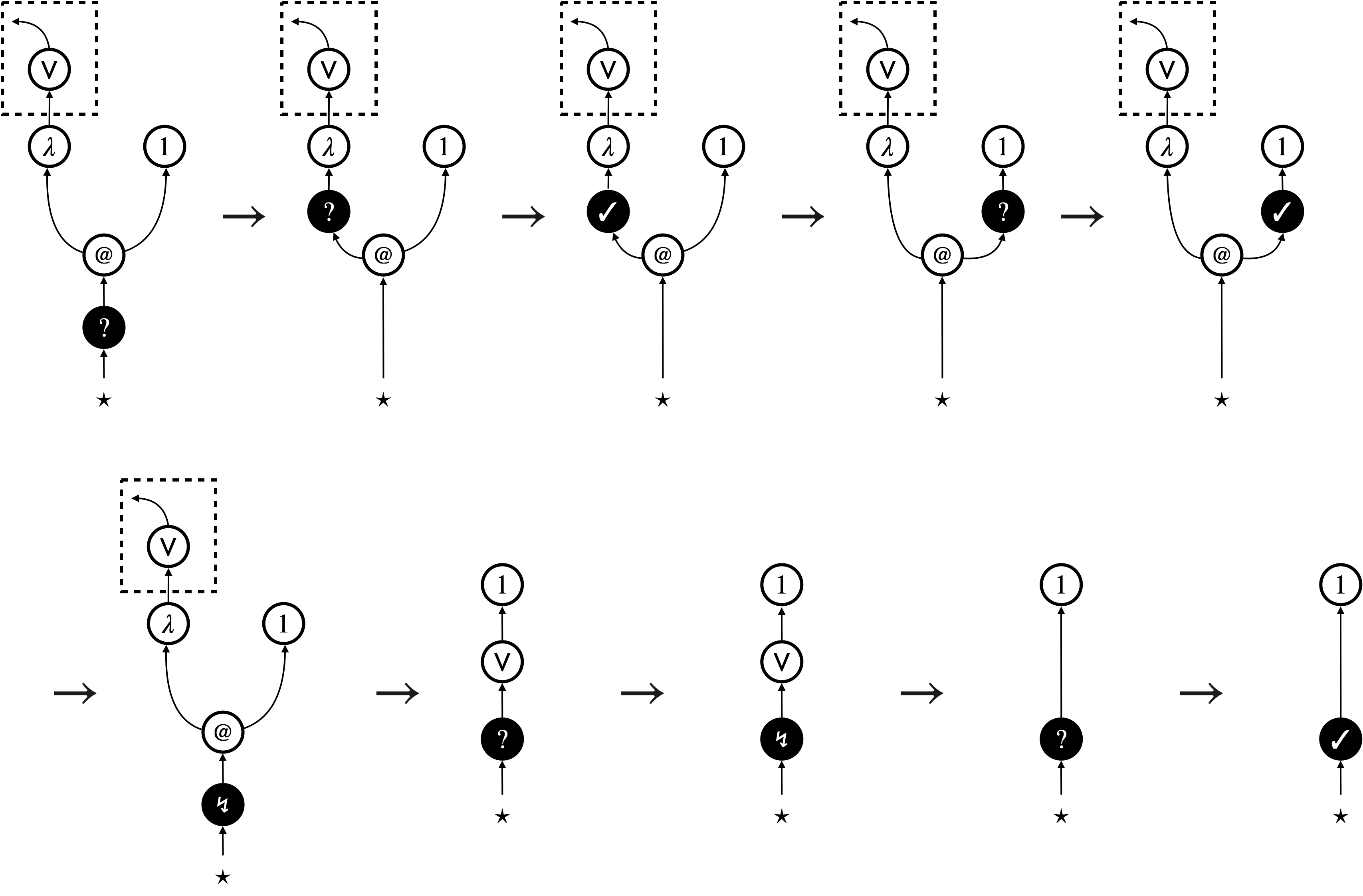

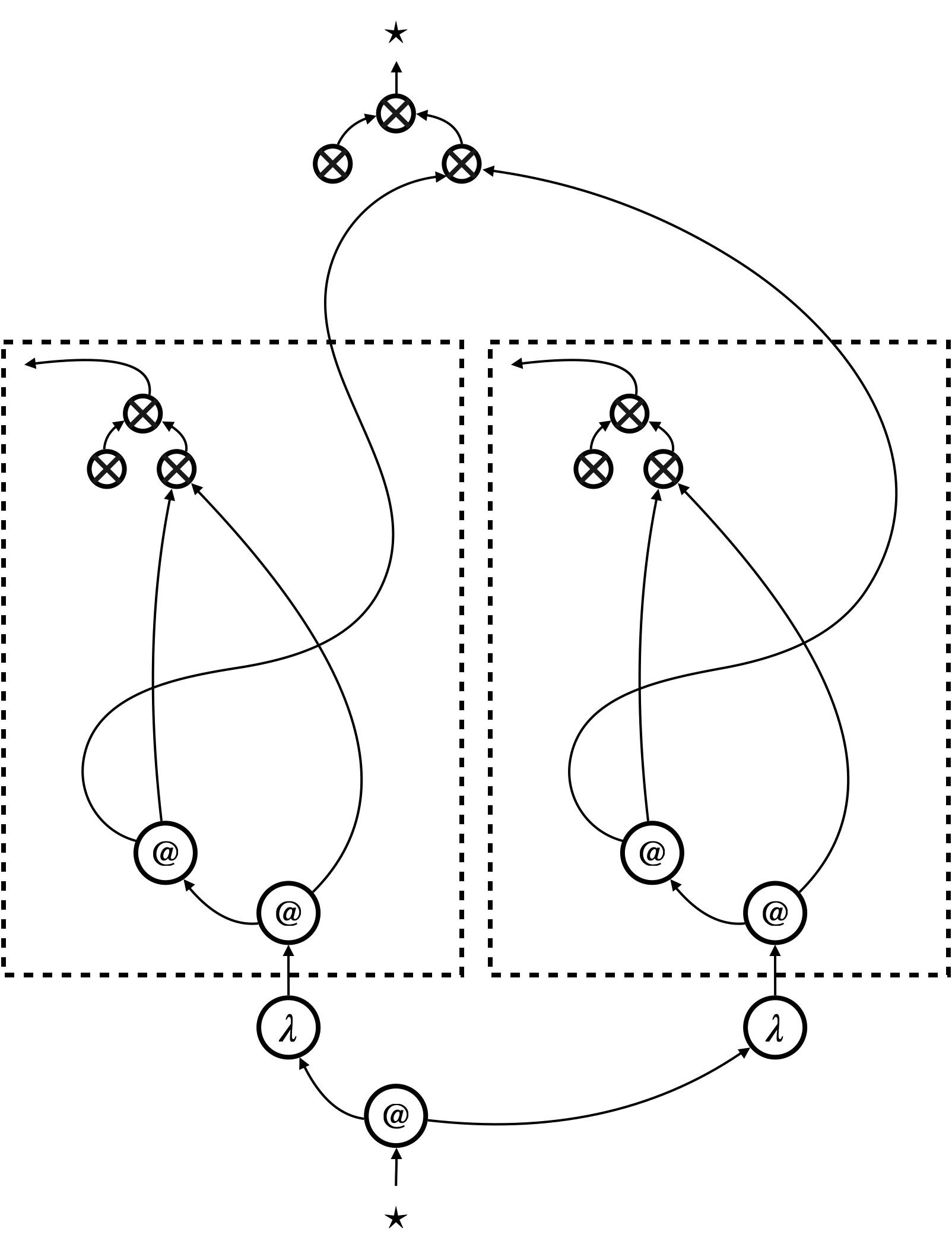

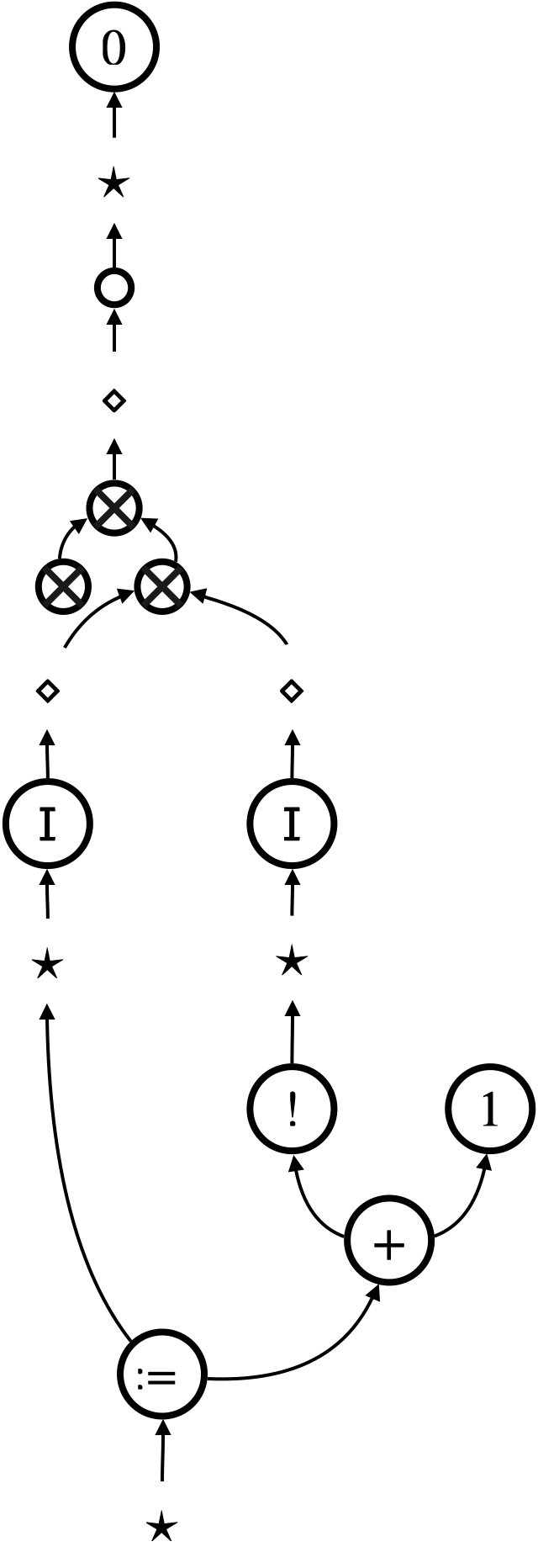

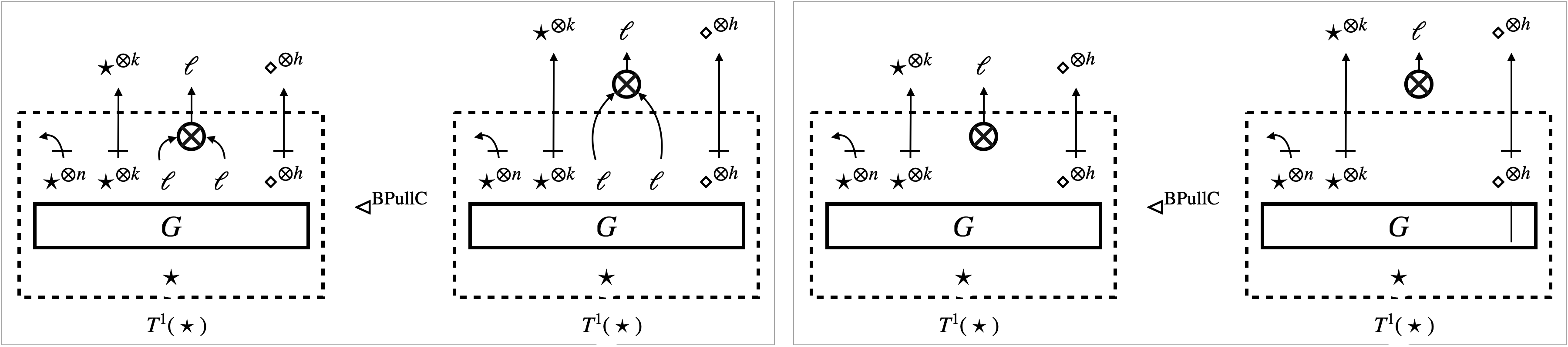

We implement focussed hypernet rewriting, in particular the graph traversal (the thick green arrows in Figure 2), using a dedicated node dubbed focus. A focus can be in three modes: searching (), backtracking (), and triggering (). The first two modes implement the depth-first traversal, and the last mode triggers update of the underlying hypernet. Figure 3 shows how focussed hypernet rewriting actually proceeds222An interpreter and visualiser can be accessed online at https://tnttodda.github.io/Spartan-Visualiser/, given the linear term . The black nodes are the focus. The first eight steps altogether implement the thick green arrow in the first part of Figure 2. At the end of these steps, the focus changes to , signalling that the hypernet is ready for the beta-reduction. What follows is an update of the hypernet, which resets the focus to the searching mode (), so the traversal continues and triggers further update.

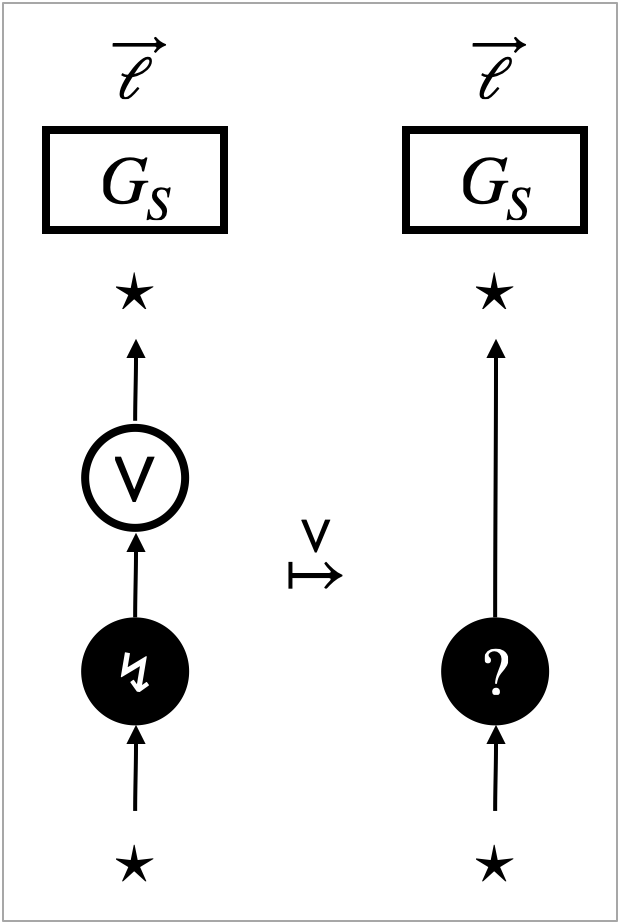

Evaluation of a program starts, when the -focus enters the hypernet representing from the bottom. Evaluation successfully finishes, when the -focus exits a hypernet from the bottom.

We will formalise focussed hypernet rewriting as an abstract machine (see [Pit00] for a comprehensive introduction). The machine has two kinds of transitions: one for the traversal, and the other for the update. It is important that the focus governs transitions; a traversal transition or an update transition is selected according to the mode of the focus. It is the focus that implements the traversal, triggers the update, and hence realises the call-by-value reduction strategy.

2.3. Step-wise local reasoning, and robustness

Finally we overview the reasoning principle that focussed hypernet rewriting enables, which leads to our main theorem, sufficiency-of-robustness theorem (6).

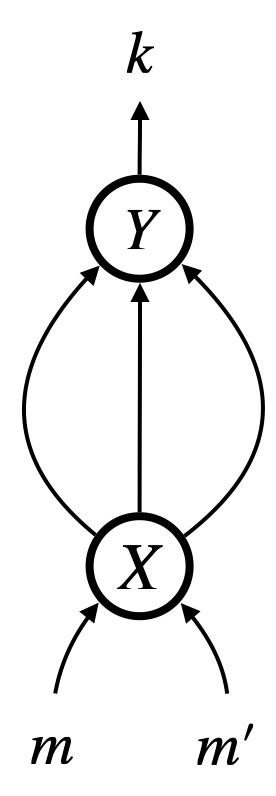

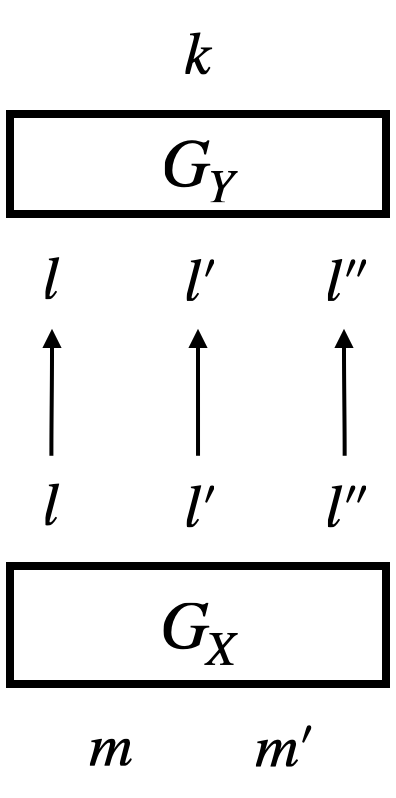

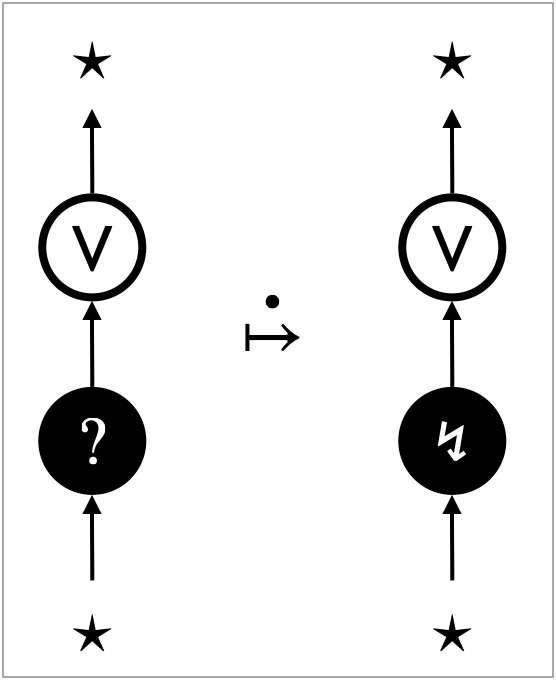

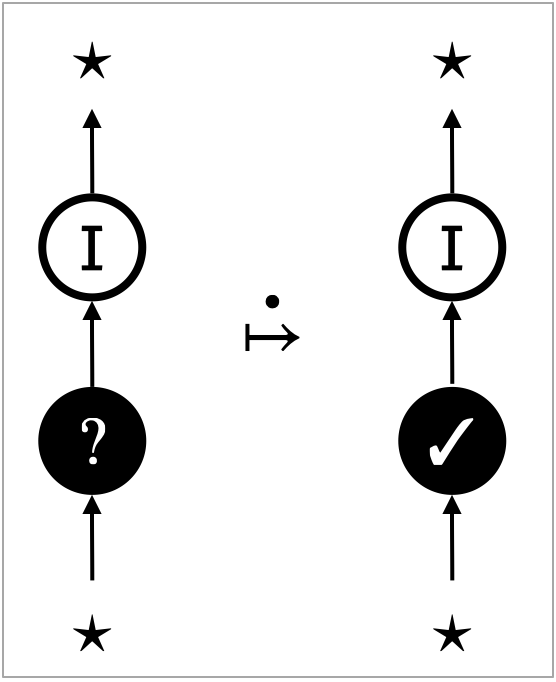

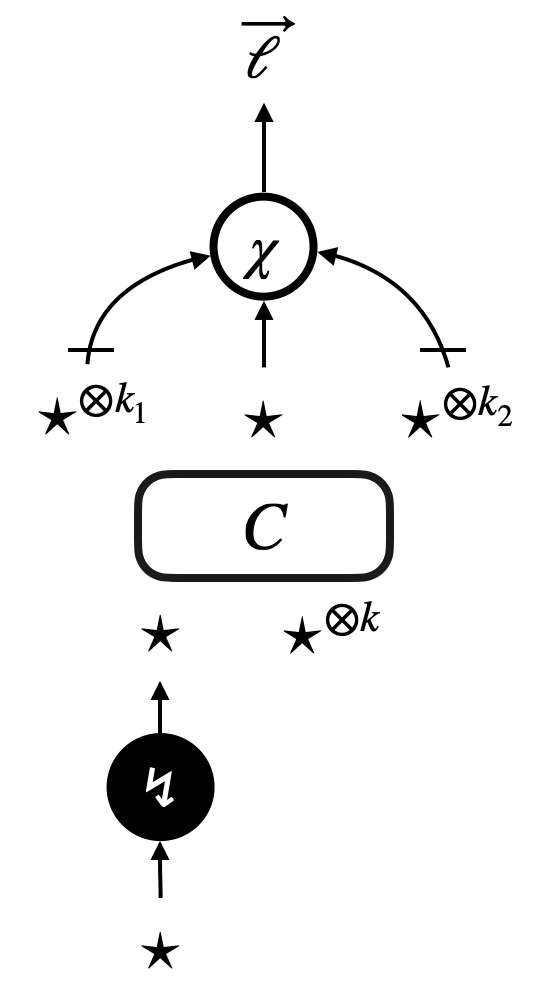

Using focussed hypernet semantics, this work takes a new coinductive, step-wise, approach to proving observational equivalence. We will introduce a new variant of weak simulation dubbed counting simulation. A counting simulation is a relation on focussed hypernets that are hypernets with a focus. We write to indicate that a hypernet contains a focus.

Our proof of an observational refinement , which is the asymmetric version of observational equivalence , proceeds as follows.

-

(1)

We start with the relation on hypernets. We call it pre-template.

-

(2)

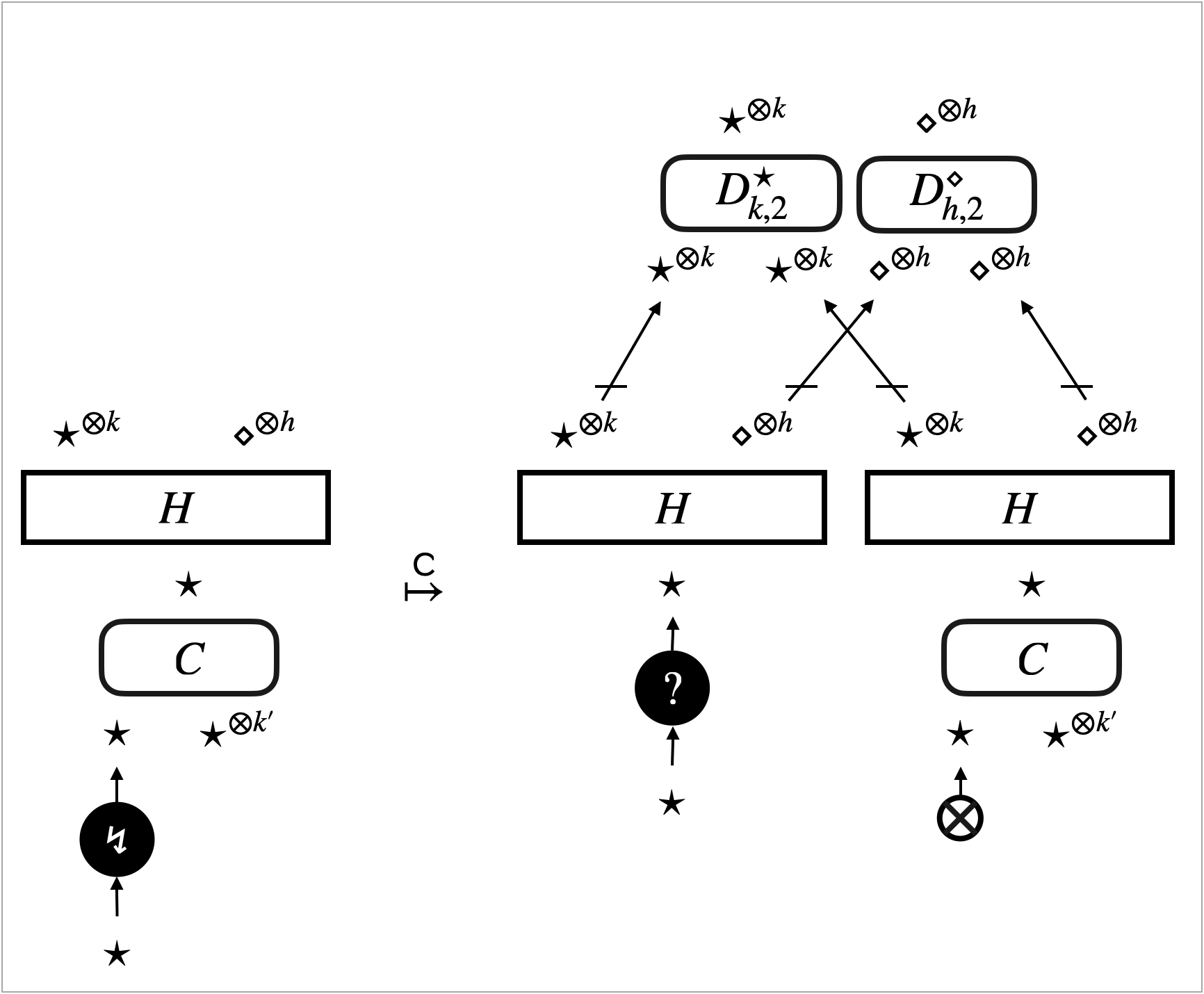

We take the contextual closure of the pre-template . It is defined by for an arbitrary focussed (multi-hole) context .

-

(3)

We show that is a counting simulation.

Once we establish the counting simulation , soundness of counting simulation asserts that the pre-template implies observational refinement .

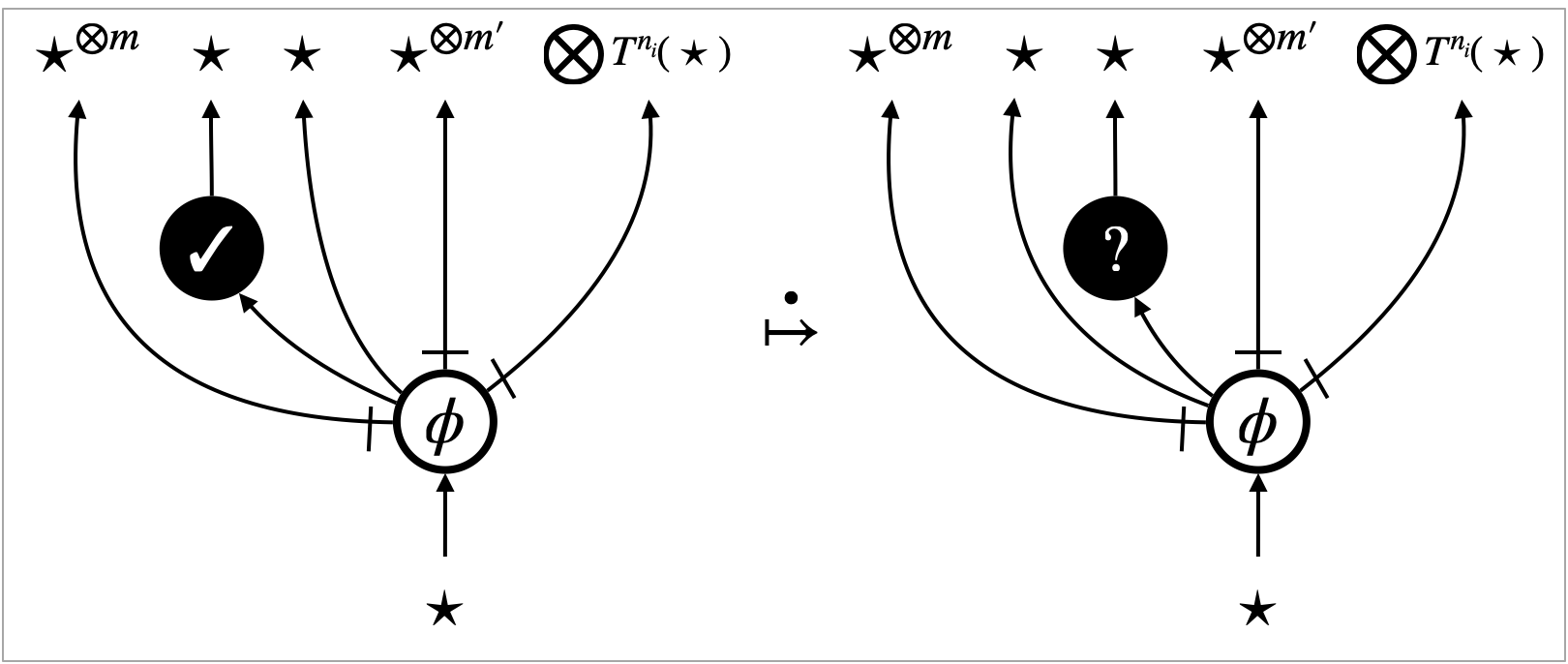

The key part of the observational refinement proof is therefore showing that is a counting simulation. Put simply, this amounts to show the following: for any and a transition , there exists a focussed context that satisfies the following.

| (2) |

Above, black parts are universally quantified, and magenta parts are existentially quantified. The arrow represents an arbitrary number of transitions . This situation (2) asserts that, after a few transitions from and , we can obtain two focussed hypernets that can be decomposed using the new context and the sub-graphs .

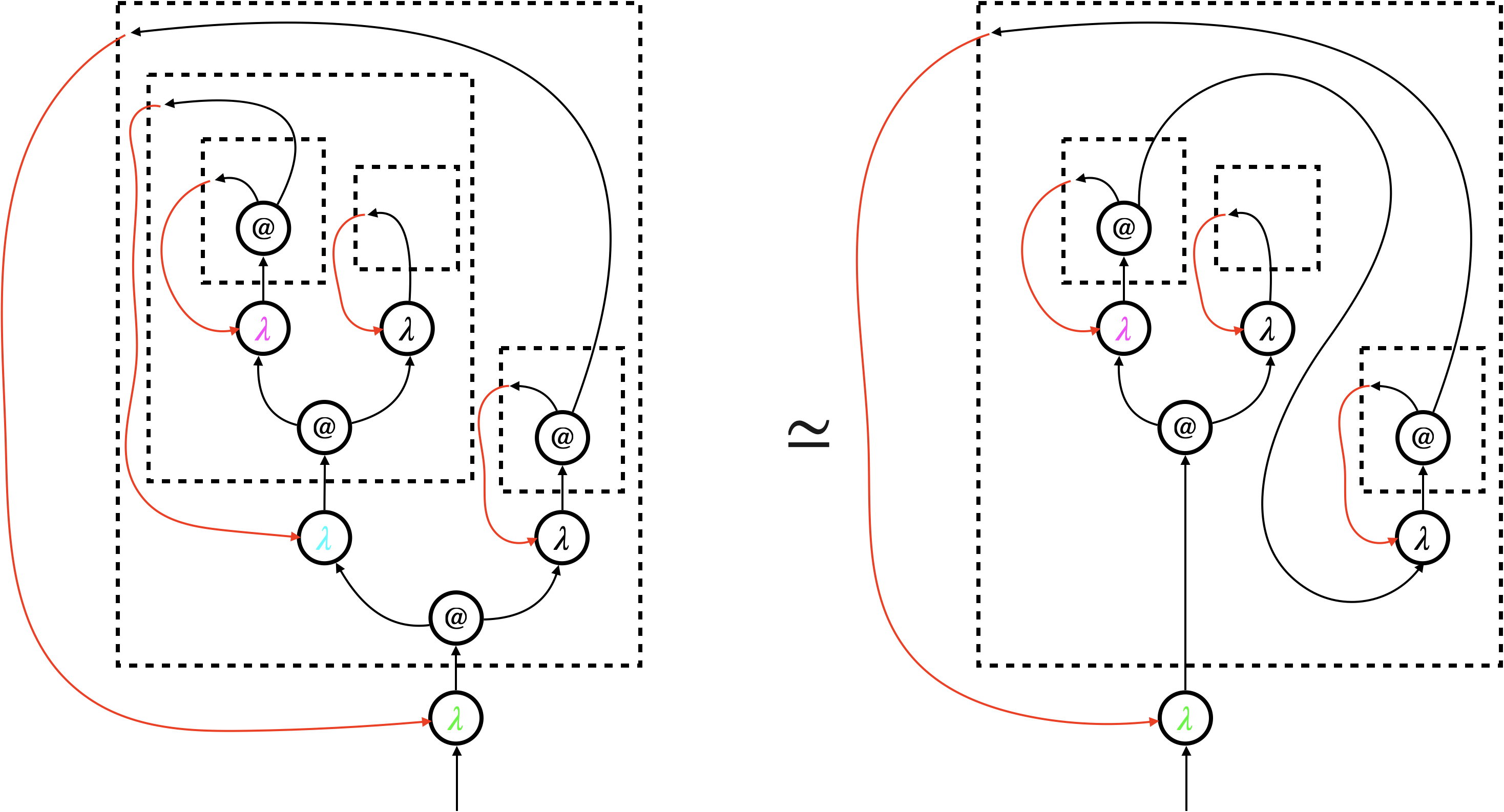

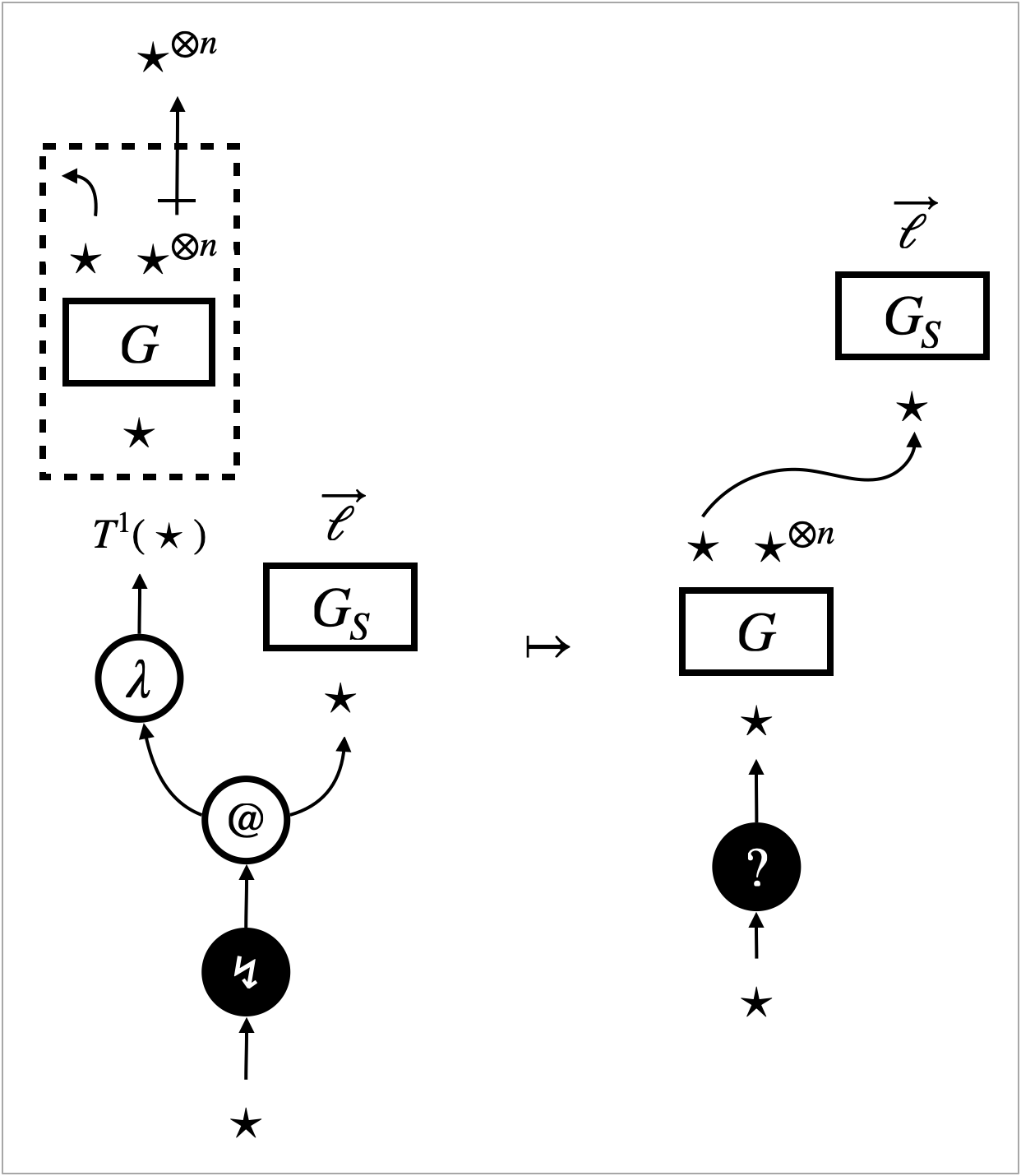

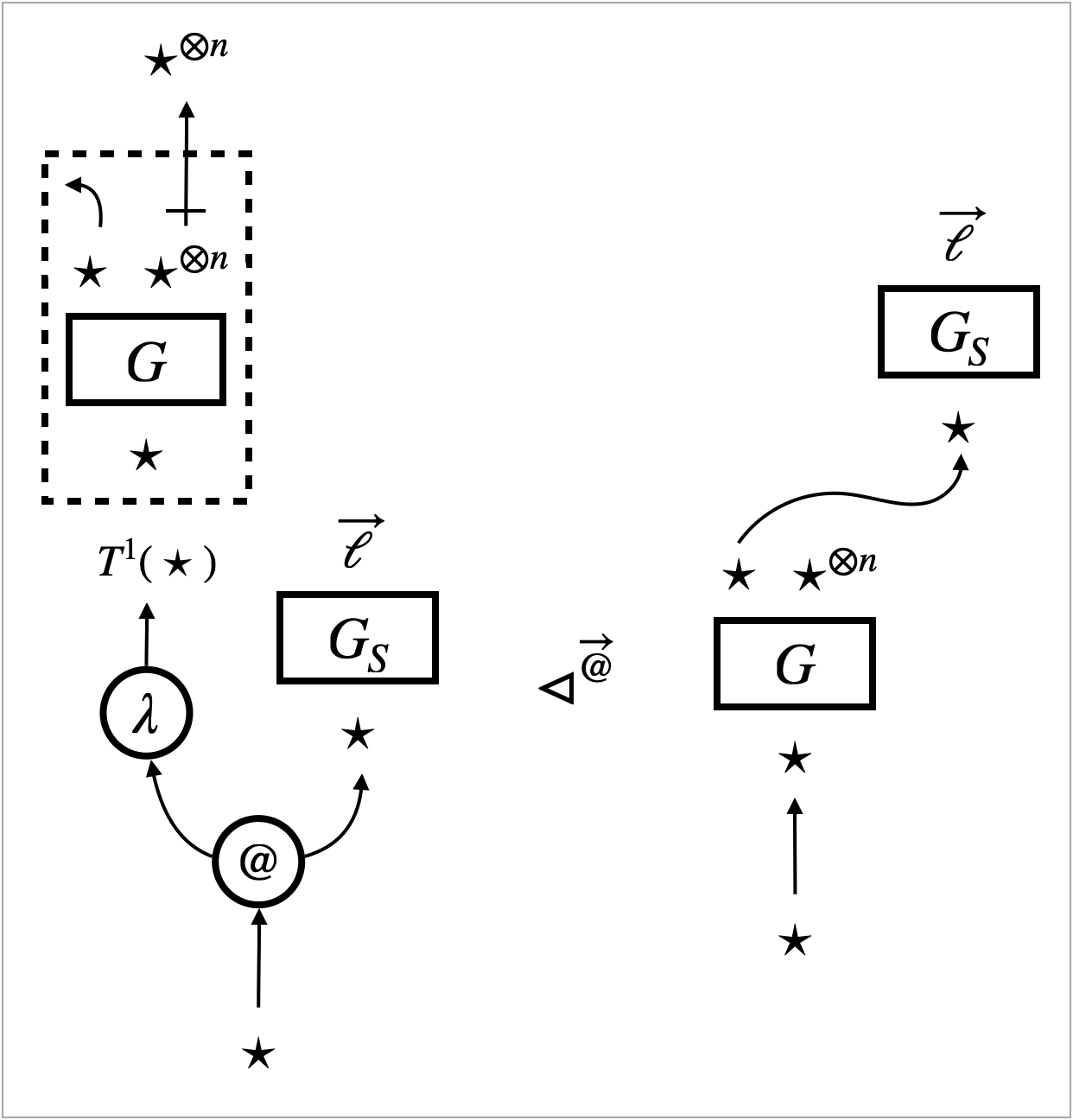

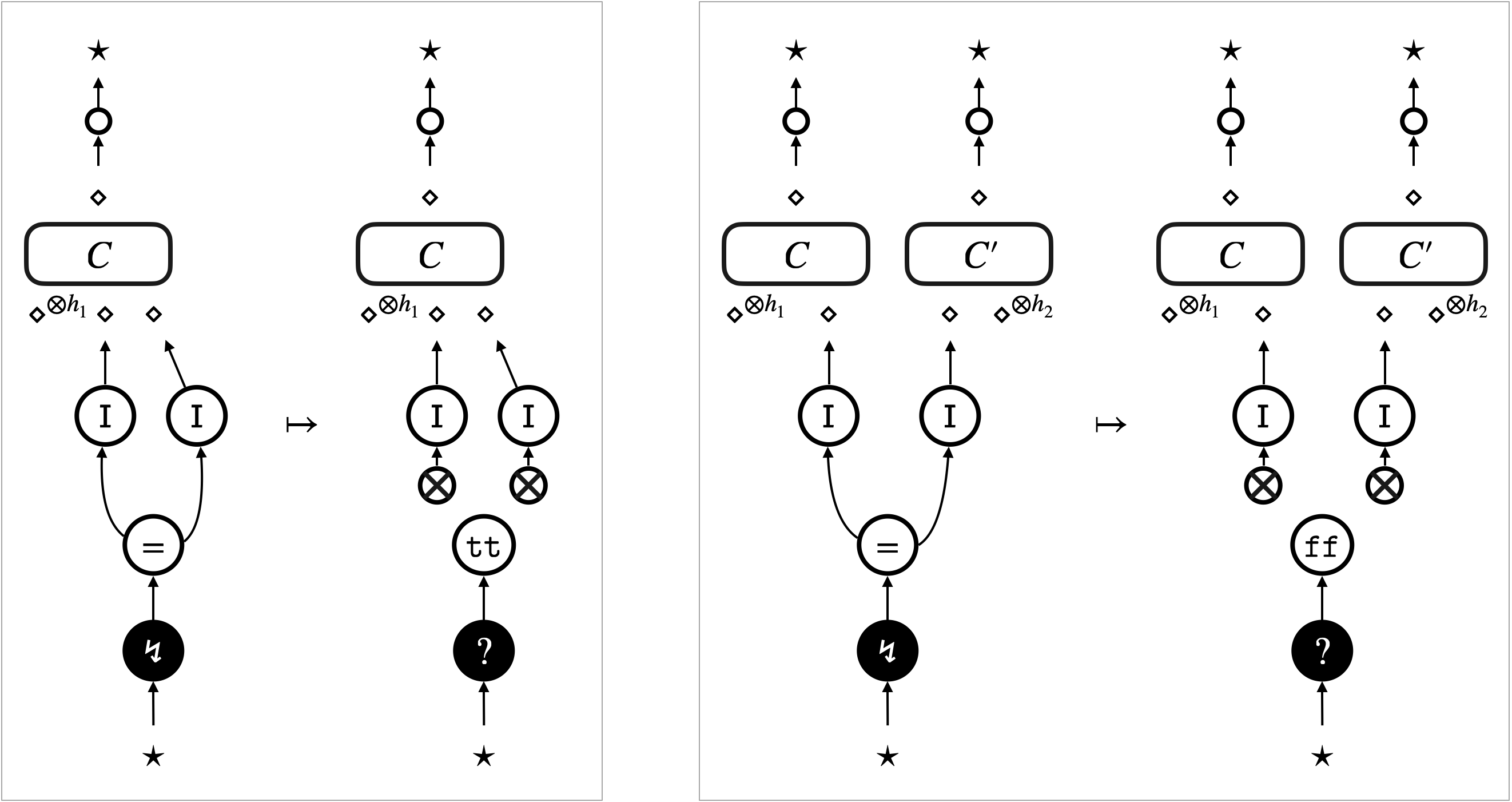

Our important observation is that (2) can be established by elementary case analysis of interaction between the sub-graphs and what happens around the focus in . This is because updates of a hypernet always happen around the -focus, and the -focus and the -focus (representing the graph traversal) move according to its neighbourhood. The analysis is hence centred around the graphical concept of neighbourhood, or graph locality. There are three possible cases of the interaction.

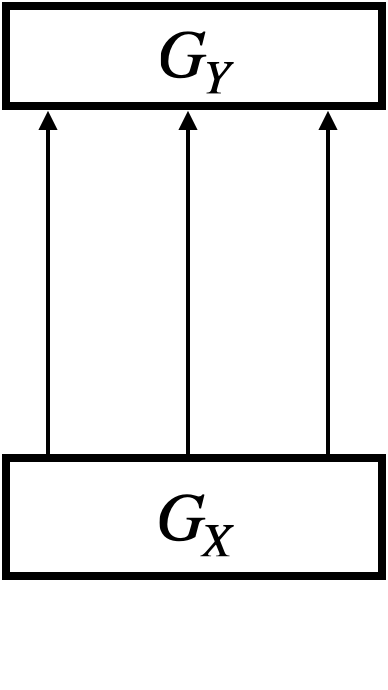

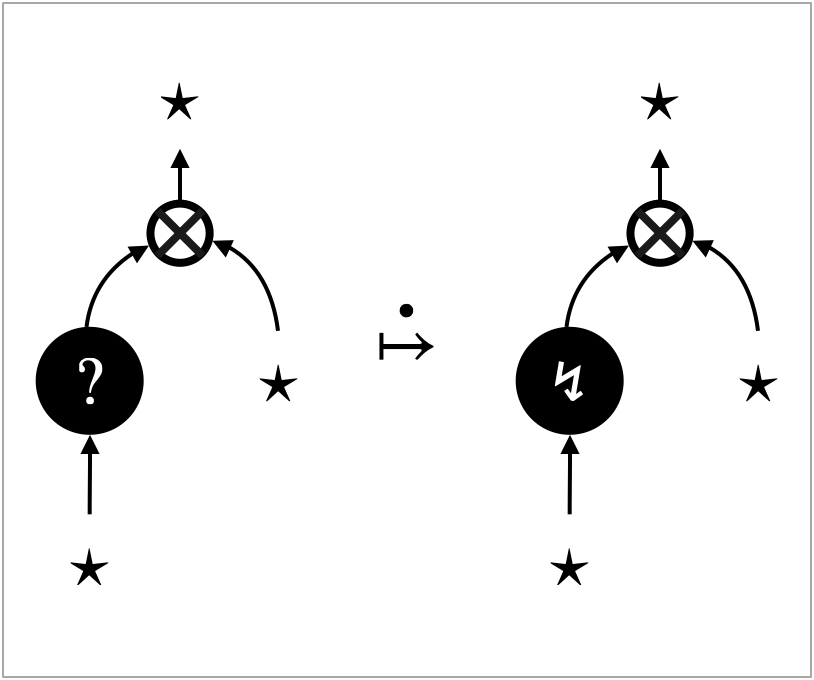

- Case (i) Move inside the context:

-

The -focus or the -focus, which implements the depth-first traversal, simply moves inside the context (see Figure 4a). Because any move of the focus is only according to its neighbourhood, the move solely depends on the context . In other words, the sub-graphs have no interaction with the focus. In this case, we can conclude that we are always in (2).

- Case (ii) Visit to the sub-graphs:

-

The -focus visits the sub-graphs (see Figure 4b). This is the case where the -focus actually interacts with ; what happens after entering of the focus depends on . We identify a sufficient condition of the pre-template , dubbed safety, for (2) to hold.

A typical example of safe pre-templates is the pre-templates that are induced by rewrite rules of hypernets. Figure 4c illustrates what happens to such a pre-template. The visit of the -focus to triggers the rewrite rule, and actually turns into .

Note that the case where the -focus visits boils down to the visit of the -focus instead, because the -focus implements backtracking of graph traversal.

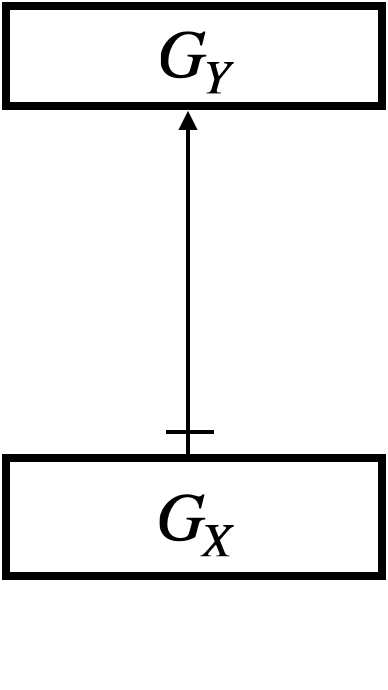

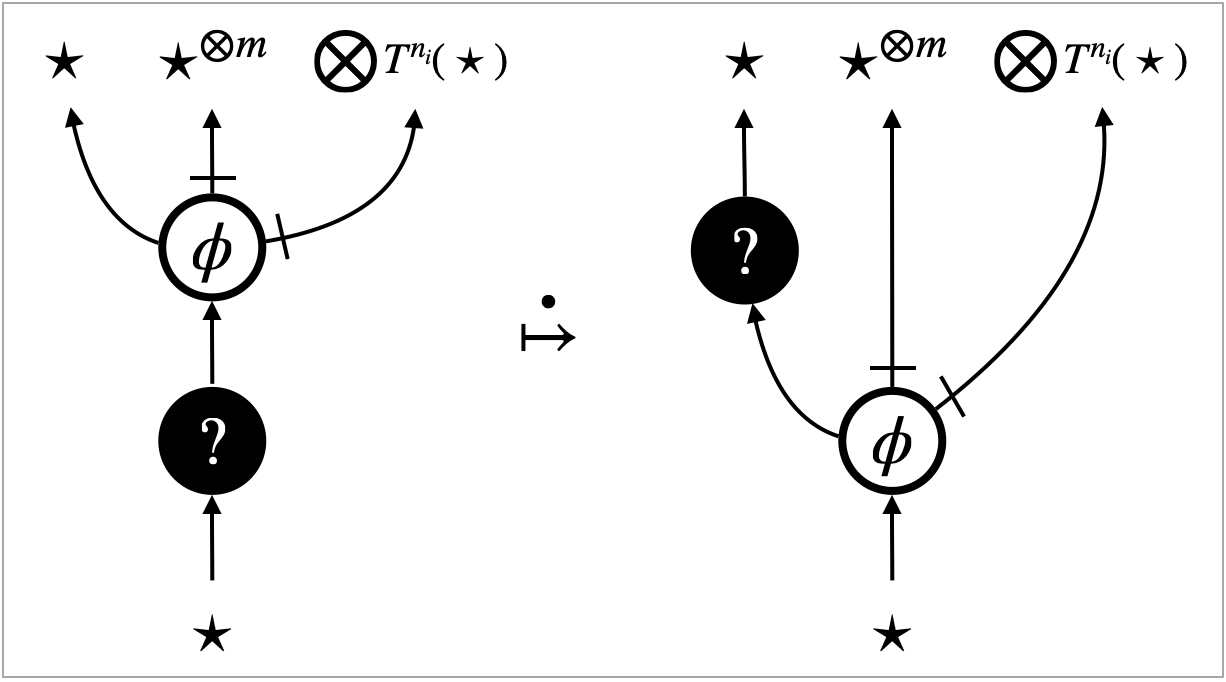

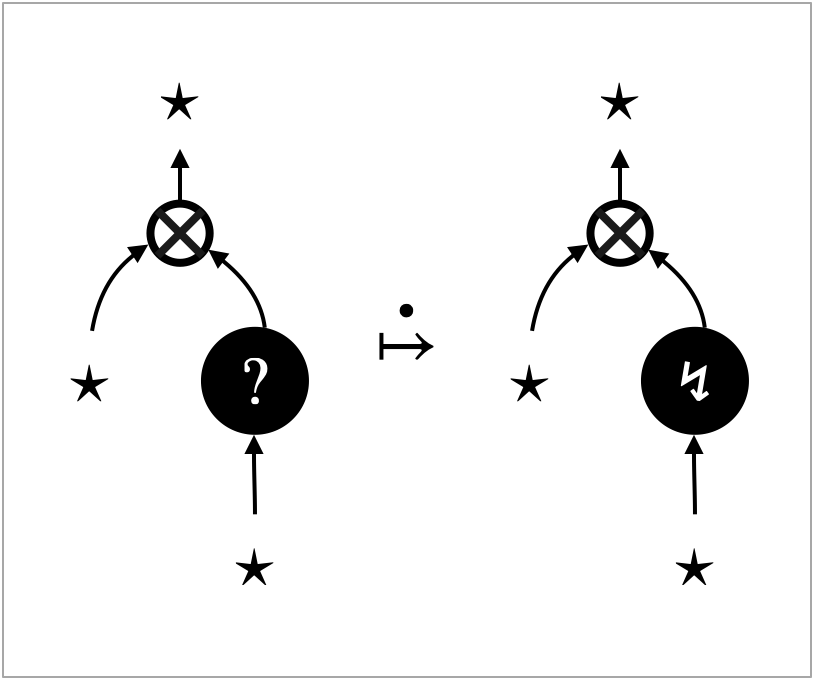

- Case (iii) Update of the hypernets:

-

The -focus triggers a rewrite rule and updates the hypernet (see Figure 4d). This is the case where the -focus interacts with ; the update may involve in a non-trivial manner. We identify a sufficient condition of the pre-template , relative to the triggered rewrite rule, dubbed robustness, for (2) to hold.

An example scenario of robustness is where the update only affects parts of the context ; see Figure 4e. In this scenario, the -focus does not really interact with . The sub-graphs are preserved, and we can take the new context with the same number of holes as that makes (2) hold.

Another example scenario of robustness is where the update duplicates (or eliminates) without breaking them. We can take the context that has more (or less) holes to make (2) hold. ∎

The above case analysis reveals sufficient conditions, namely safety and robustness, to make (2) hold and hence make the context closure a counting simulation. Combining this with soundness of counting simulation, we obtain our main theorem, sufficiency-of-robustness theorem (6). It can be informally stated as follows.

Theorem (Sufficiency-of-robustness theorem (6), informally).

A robust and safe pre-template induces observational refinement .

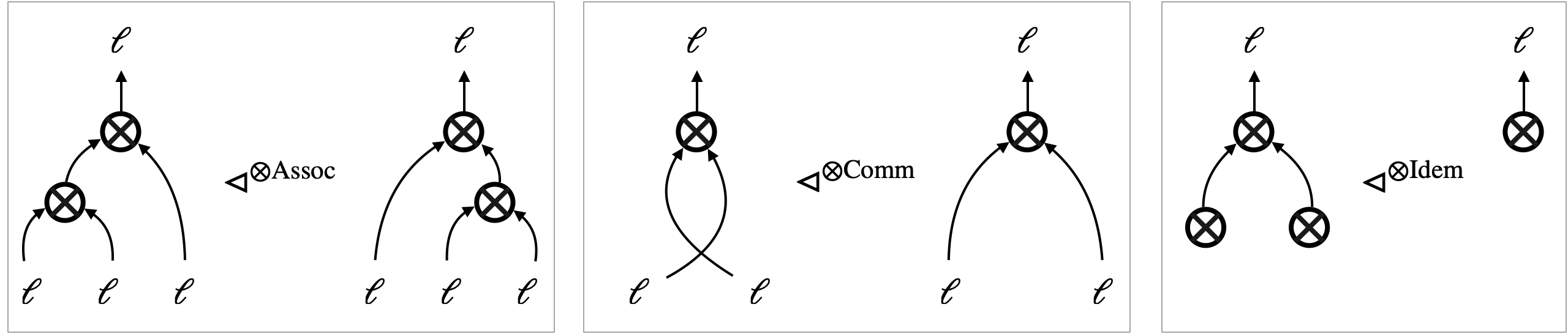

3. Preliminaries: Hypernets

We start formalising the ideas described in the previous section, by first defining hypernets. We opt for formalising hypernets as hypergraphs, following the literature [BGK+22a, BGK+22b, BGK+22c, AGSZ22] on categorically formalising string diagram rewriting using hypergraphs.

Let be the set of natural numbers. Given a set we write by the set of elements of the free monoid over . Given a function we write for the pointwise application (map) of to the elements of .

3.1. Monoidal hypergraphs and hypernets

Hypernets have a couple of distinctive features in comparison with ordinary graphs. The first feature of hypernets is that they have “dangling edges” (see Figure 1b); a hypernet has one incoming arrow with no source, and it may have outgoing arrows with no targets. To model this, we use hypergraphs—we formalise what we have been calling edges (i.e. arrows) as vertices and what we have been calling nodes (i.e. circled objects) as hyperedges (i.e. edges with arbitrary numbers of sources and targets). More specifically, we use what we call interfaced labelled monoidal hypergraphs that satisfies the following.

-

(0)

Each arrow (modelled as a vertex) and each circled object (modelled as a hyperedge) are labelled.

-

(1)

Each circled object (modelled as a hyperedge) is adjacent to distinct arrows (modelled as vertices).

-

(2)

Each arrow (modelled as a vertex) is adjacent to at most two circled objects (modelled as hyperedges).

-

(3)

The label of a circled object (modelled as a hyperedge) is always consistent with the number and labelling of its endpoints.

-

(4)

Dangling arrows are ordered, and each arrow has at least a source or a target.

[Monoidal hypergraphs] A monoidal hypergraph is a pair of finite sets, vertices and (hyper)edges along with a pair of functions , defining the source list and target list, respectively, of an edge. {defi}[Interfaced labelled monoidal hypergraphs] An interfaced labelled monoidal hypergraph consists of a monoidal hypergraph, a set of vertex labels , a set of edge labels , and labelling functions such that:

-

(1)

For any edge , its source list consists of distinct vertices, and its target list also consists of distinct vertices.

-

(2)

For any vertex there exists at most one edge such that and at most one edge such that .

-

(3)

For any edges if then , and .

-

(4)

If a vertex belongs to the target (resp. source) list of no edge we call it an input (resp. output). Inputs and outputs are respectively ordered, and no vertex is both an input and an output.

Notation 3.0 (Types of circled objects (i.e. hyperedges)).

Section 3.1 (3) makes it possible to use labels of arrows (i.e. vertices) as types for labels of circled objects (i.e. hyperedges). For each , we can associate it a type and write , where satisfy and for any such that .

Notation 3.0 (Types of interfaced labelled monoidal hypergraphs).

The concept of type can be extended to a whole interfaced labelled monoidal hypergraph . Let be the lists of inputs and outputs, respectively, of . We associate a type and write . In the syntax for lists of inputs and outputs we use to denote concatenation and define to be the empty list, and , for any label and .

In the sequel, when we say hypergraphs we always mean interfaced labelled monoidal hypergraphs.

We sometimes permute inputs and outputs of a hypergraph. Such permutation yields another hypergraph. {defi}[Interface permutation] Let be a hypergraph with an input list and an output list . Given two bijections and on sets and , respectively, we write to denote the hypergraph that is defined by the same data as except for the input list and the output list .

The second distinctive feature of hypernets is that they have dashed boxes that indicate the scope of variable bindings (see Figure 1b). We formalise these dashed boxes by introducing hierarchy to hypergraphs. The hierarchy is implemented by allowing a hypergraph to be the label of a hyperedge. As a result, informally, hypernets are nested hypergraphs, up to some finite depth, using the same sets of labels. We here present a relatively intuitive definition of hypernets; Appendix A discusses an alternative definition of hypernets333Another, slightly different, definition of hypernets is given in [AGSZ23, Section 4]. The difference is motivated by desired support of categorical graph rewriting, which requires certain properties to hold. These properties are sensitive to the definition.. {defi}[Hypernets] Given a set of vertex labels and edge labels we write for the set of hypergraphs with these labels; we also call these level-0 hypernets . We call level- hypernets the set of hypergraphs

We call hypernets the set .

Terminology 3.0 (Boxes and depth).

An edge labelled with a hypergraph is called box edge, and a hypergraph labelling a box edge is called content. Edges of a hypernet are said to be shallow. Edges of nesting hypernets of , i.e. edges of hypernets that recursively appear as edge labels, are said to be deep edges of . Shallow edges and deep edges of a hypernet are altogether referred to as edges at any depth.

3.2. Graphical conventions

A hypergraph with vertices and edges such that

is normally represented as Figure 5a. However, we find this style of representing hypergraphs awkward for understanding their structure. We will often graphically represent hypergraphs as graphs, as in Figure 5b, by (i) marking vertices with their labels and mark hyperedges with their labels circled, and (ii) connecting input vertices and output vertices with a hyperedge using arrows.

Recall that node labels are often determined in hypergraphs, thanks to typing, e.g. . We accordingly omit node labels to avoid clutter, as in Figure 5c, letting arrows connect circles directly.

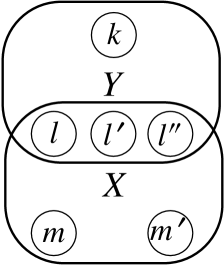

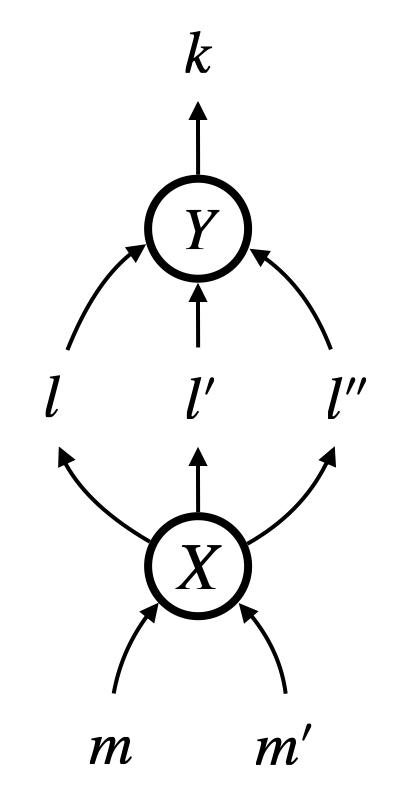

Sometimes we draw a hypergraph by connecting its sub-graphs using extra arrows. Sub-graphs are depicted as boxes. For the hypergraph , we can think of the sub-graph and the sub-graph . We may draw in three ways, as in Figure 5d–5f:

-

•

In Figure 5d, we use extra arrows connecting node labels directly, with intention that two occurrences of (or, ) are graphical representations of the same node (or ).

-

•

In Figure 5e, we omit node labels entirely, assuming that they are obvious from context.

-

•

In Figure 5f, we further replace the three extra arrows with a single arrow, given that the entire output type of matches the input type of . The single arrow comes with a dash across, which indicates that the arrow represents a bunch of parallel arrows.

The final convention is about box edges; a box edge (i.e. an edge labelled by a hypernet) is depicted by a dashed box decorated with its content (i.e. the labelling hypernet).

4. Representation of the call-by-value untyped linear lambda-calculus

In this section we introduce specific label sets that we use to represent lambda-terms as hypernets. We begin with the untyped linear lambda-calculus extended with arithmetic. This is a fairly limited language in terms of expressive power. Although simple, it is interesting enough to demonstrate our reasoning framework. We present a translation of linear lambda-terms in this section, and will adapt it to the general lambda-terms in Section 9.

Lambda-terms are defined by the BNF , where and . We assume alpha-equivalence on terms, and assume that bound variables are distinct in a term. A term is linear when each variable appears exactly once in .

First, recall that each edge label of a hypernet comes with a type where . Even though lambda-terms are untyped here, we use edges to represent term constructors, and use edge types (i.e. node labels) to distinguish thunks from terms. Namely, the term type is denoted by , and a thunk type with bound variables is denoted by .

Figure 6 shows inductive translation of linear lambda-terms to hypernets where

| (3) | ||||

| (4) |

In general, a term with free variables is translated into . Each term constructor is turned into an edge as follows.

-

•

Any variable becomes an anonymous edge . We represented a variable as a single arrow in Section 2 (see e.g. Figure 1b and Figure 3), but this means a variable would become an empty graph (i.e. a hypernet with no edge). We rather use the anonymous edge to prevent an empty graph from labelling a box edge and hence being a box content. This is for technical reasons as they simplify our development.

-

•

Abstraction becomes ; it constructs a term, taking one thunk that has one bound variable. A thunk that has one bound variable and free variables is represented by a box edge of type whose content is . Note that the box has one less output than its content. We emphasise this graphically, by bending the arrow that is connected to the leftmost of the type .

-

•

Application becomes ; it constructs a term, taking two terms as arguments. This translation is for the call-by-value evaluation strategy; both of the arguments are not thunks, and hence they will be evaluated before the application is computed.

-

•

Each natural number becomes ; it is a term, taking no arguments.

-

•

Arithmetic operations becomes and ; they construct a term, taking two terms as arguments. The translation for these operations has the same shape as that for application.

5. Focussed hypernet rewriting—the Universal Abstract Machine

In this section we present focussed hypernet rewriting. It will be formalised as an abstract machine dubbed universal abstract machine (UAM). This abstract machine is “universal” in a sense of the word similar to the way it is used in “universal algebra” rather than in “universal Turing machine”. It is a general abstract framework in which a very wide range of concrete abstract machines can be instantiated by providing operations and their behaviour.

5.1. Operations and focus

The first parameter of the UAM is given by a set of operations . Operations are classified into two: passive operations that construct evaluation results (i.e. values) and active operations that realise computation. We let range over respectively. The edge labels in (4), except for , are examples of operations:

| passive operations | active operations |

|---|---|

| for each | where |

In general, each operation has a type where . This means that each operation takes arguments and thunks, and the -th thunk has bound variables.

Given the operation set , the UAM acts on hypernets where

| (5) |

We let range over . For box edges, we impose the following type discipline: each box edge must have a type with its content having a type .

The last three elements of (5) are focuses. In the UAM, focuses are edges with the dedicated labels of type . Each label represents one of the three modes of the focus:

| label | mode |

|---|---|

| searching | |

| backtracking | |

| triggering an update |

As illustrated in Section 2.2, focussed hypernet rewriting implements program evaluation by combining (i) depth-first graph traversal and (ii) update (rewrite) of a hypernet. Focuses are the key element of this combination; they determine which action (i.e. traversal or rewrite) to be taken next, and they indicate where a redex of the rewrite is.

We refer to a hypernet that contains one focus as focussed hypernet. Given a focussed hypernet, we refer to the hypernet without the focus as underlying hypernet.

5.2. Transitions—overview

| transitions | focus | provenance | |

|---|---|---|---|

| search transitions | intrinsic | ||

| rewrite transitions | substitution transitions | ||

| behaviour | extrinsic | ||

| (compute transitions) | |||

Table 1 summarises classifications of transitions of the UAM.

The first classification is according to the focuses: search transitions for the -focus and the -focus, implementing the depth-first search of redexes, and rewrite transitions for the -focus, implementing rewrite of the underlying hypernet. Rewrite transitions are further classified into two: substitution transitions for edges labelled by , and behaviour for (active) operations.

The next classification is according to provenance: intrinsic transitions that are inherent to the UAM, and extrinsic transitions that are not. While search transitions and substitution transitions constitute intrinsic transitions, the behaviour solely provides extrinsic transitions. In fact, the behaviour is the second parameter of the UAM.

Figure 8 shows an example of the UAM execution. This evaluates the linear-term , and the execution starts with the underlying hypernet . It demonstrates all the three kinds of transitions: search transitions, substitution transitions, and the behaviour of application ().

5.3. Intrinsic transitions

Search transitions are possible for the -focus and the -focus, and they implement the depth-first search of redexes. They are specified by interaction rules depicted in Figure 9a–9e. The -focus interacts with what is connected above, and the -focus interacts with what is connected below. From the perspective of program evaluation, the interaction rules specify the left-to-right call-by-value evaluation of arguments.

- Figure 9a:

-

When the -focus encounters a variable (), it changes to the -focus. What is connected above the variable will be substituted for the variable, in a subsequent substitute transition.

- Figure 9b:

-

When the -focus encounters an operation with at least one argument, it proceeds to the first argument.

- Figure 9c:

-

After inspecting the -th argument, the -focus changes to the -focus and proceeds to the next argument.

- Figure 9d:

-

After inspecting all the arguments, the -focus finishes redex search and changes to a focus depending on the operation : to the -focus for a passive operation , and to the -focus for an active operation .

- Figure 9e:

-

When the -focus encounters an operation that takes no arguments but only thunks, it immediately finishes redex search and changes to a focus depending on the operation , like in Figure 9d.

The first kind of rewrite transitions, namely substitution transitions, implements substitution by simply removing a variable edge (). These transitions are specified by a substitution rule depicted in Figure 9f. What is connected above the variable edge () is computation bound to the variable. By removing the variable edge, the bound computation gets directly connected to the -focus, ready for redex search.

5.4. Extrinsic transitions: behaviour of operations

The second kind of rewrite transitions are for operations , in particular active operations . These transitions are extrinsic; they are given as the second parameter , called behaviour of , of the UAM.

Figure 10 shows an example of the behaviour, namely that for the following active operations:

For some of these operations, their behaviour is specified locally by rewrite rules. The rewrite transitions for the four active operations are enabled when the -focus encounters one of the operations. The -focus then changes to the -focus and resumes redex search.

- Figure 10a:

-

This rewrite rule specifies the behaviour of arithmetic (). The rule eliminates all three edges () and replace them with a new edge () such that .

- Figure 10b:

-

This rewrite rule specifies the behaviour of application (), namely the (micro-) beta-reduction. It is micro in the sense that it delays substitution. It only eliminates the constructors (), opens the box whose content is , and connects which represents a function argument to the body of the function.

- Figure 10c:

-

This is a rewrite transition, not a rewrite rule. It is for the operation that inspects memory usage. Namely, counts the number of edges in the hypernet . The transition replaces the operation edge () with the result () of counting.

6. A formal definition of the UAM

The UAM, and hence the definitions below, are all globally parameterised by the operation set and its behaviour .

6.1. Auxiliary definitions

We use the terms incoming and outgoing to characterise the incidence relation between neighbouring edges. Conventionally incidence is defined relative to nodes, but we find it helpful to extend this notion to edges. {defi}[Incoming and outgoing edges] An incoming edge of an edge has a target that is a source of the edge . An outgoing edge of the edge has a source that is a target of the edge .

The notions of path and reachability are standard. Our technical development will heavily rely on these graph-theoretic notions. Note that these are the notions that are difficult to translate back into the language of terms. {defi}[Paths and reachability]

-

(1)

A path in a hypergraph is given by a non-empty sequence of edges, where an edge is followed by an edge if the edge is an incoming edge of the edge .

-

(2)

A vertex is reachable from a vertex if holds, or there exists a path from the vertex to the vertex .

Note that, in general, the first edge (resp. the last edge) of a path may have no source (resp. target). A path is said to be from a vertex , if is a source of the first edge of the path. Similarly, a path is said to be to a vertex , if is a target of the last edge of the path. A hypergraph is itself said to be a path, if all edges of comprise a path from an input (if any) and an output (if any) and every vertex is an endpoint of an edge.

During focussed hypernet rewriting, operations are the only edges that the -focus can “leave behind”. The -focus is always at the end of an operation path. {defi}[Operation paths] A path whose edges are all labelled with operations is called operation path.

We shall introduce a few classes of hypernets below. The first is box hypernets that are simply single box edges. {defi}[Box hypernets] If a hypernet is a path of only one box edge, it is called box hypernet. The second is stable hypernets, in which a focus can never trigger a rewrite (i.e. a focus never changes to the -focus). Stable hypernets can be seen as a graph-based notion of values/normal form. For example, the hypernet that consists of an abstraction edge () only is a stable hypernet. {defi}[Stable hypernets] A stable hypernet is a hypernet , such that and each vertex is reachable from the unique input. The last is one-way hypernets, which will play an important role in local reasoning. These specify sub-graphs to which a focus enters only from the bottom (i.e. the -focus through an input), never from the top (i.e. the -focus through an output). Should the -focus enter from the top, it must have traversed upwards the sub-graph and left an operation path behind. One-way hypernets are defined by ruling out such operation paths. {defi}[One-way hypernets] A hypernet is one-way if, for any pair of an input and an output of such that and both have type , any path from to is not an operation path. For example, the underlying hypernet of the left hand side of the micro-beta rewrite rule (Figure 10b) is a one-way hypernet, if is stable. Should the -focus enters to from the top, it must be backtracking the depth-first search, and hence the -focus must have been visited from the bottom. In the presence of the micro-beta rewrite rule, such visit must result in a rewrite transition, and therefore, the backtracking of the -focus cannot be possible.

6.2. Focussed hypernets

Focussed hypernets are those that contain a focus. We impose some extra conditions as below, to ensure that the focus is outside a box and not isolated. {defi}[Focussed hypernets]

-

(1)

A focus in a hypergraph is said to be exposed if its source is an input and its target is an output, and self-acyclic if its source and its target are different vertices.

-

(2)

Focussed hypernets (typically ranged over by ) are those that contain only one focus and the focus is shallow, self-acyclic and not exposed.

Focus-free hypernets are given by , i.e. hypernets without a focus.

Notation 6.0 (Removing, replacing and attaching a focus).

-

(1)

A focussed hypernet can be turned into an underlying focus-free hypernet with the same type, by removing its unique focus and identifying the source and the target of the focus.

-

(2)

When a focussed hypernet has a -focus, then changing the focus label to another one yields a focussed hypernet denoted by .

-

(3)

Given a focus-free hypernet , a focussed hypernet with the same type can be yielded by connecting a -focus to the -th input of if the input has type . Similarly, a focussed hypernet with the same type can be yielded by connecting a -focus to the -th output of if the output has type . If it is not ambiguous, we omit the index in the notation .

The source (resp. target) of a focus is called “focus source” (resp. “focus target”) in short.

6.3. Contexts

We next formalise a notion of context, which is hypernets with holes. We use a set of hole labels, and contexts are allowed to contain an arbitrary number of holes. Hole labels are typed, and typically ranged over by . {defi}[(Simple) contexts]

-

(1)

Holed hypernets (typically ranged over by ) are given by , where the edge label set is extended by the set .

-

(2)

A holed hypernet is said to be context if each hole label appears at most once (at any depth) in .

-

(3)

A simple context is a context that contains a single hole, which is shallow.

By what we call plugging, we can replace a hole of a context with a hypernet, and obtain a new context. We here provide a description of plugging and fix a notation. A formal definition of plugging can be found in Appendix B.

Notation 6.0 (Plugging of contexts).

-

(1)

When gives a list of all and only hole labels that appear in a context , the context can also be written as . A hypernet in can be seen as a context without a hole and written as .

-

(2)

Let and be contexts, such that the hole and the latter context have the same type and . A new context can be obtained by plugging into : namely, by replacing the (possibly deep) hole edge of that has label with the context , and by identifying each input (resp. output) of with its corresponding source (resp. target) of the hole edge.

Each edge of the new context is inherited from either or , keeping the type; this implies that the new context is indeed a context with hole labels . Inputs and outputs of the new context coincide with those of the original context , and hence these two contexts have the same type.

The plugging is associative in two senses: plugging two contexts into two holes of a context yields the same result regardless of the order, i.e. is well-defined; and nested plugging yields the same result regardless of the order, i.e. .

The notions of focussed and focus-free hypernets can be naturally extended to contexts. We use the terms entering and exiting to refer to a focus that is adjacent to a hole. A focus may be both entering and exiting. {defi}[Entering/exiting focuses] In a focussed context , the focus is said to be entering if it is an incoming edge of a hole, and exiting if it is an outgoing edge of a hole.

6.4. States and transitions

We now define the UAM as a state transition system. States are hypernets that represent closed terms and hence have type . {defi}[States]

-

(1)

A state is given by a focussed hypernet of type .

-

(2)

A state is called initial if , and final if .

The following will be apparent once transitions are defined: initial states are indeed initial in the sense that no search transition results in an initial state; and final states are indeed final in the sense that no transition is possible from a final state.

Intrinsic transitions, which consists of search transitions and substitution transitions, are specified by the interaction rules and the substitution rule in Figure 9. Each intrinsic transition applies a rule outside a box and at one place. {defi}[Intrinsic transitions]

-

(1)

For each interaction rule , if there exists a focus-free simple context such that and are states, is a search transition.

-

(2)

For each substitution rule , if there exists a focus-free simple context such that and are states, is a substitution transition.

When a sequence of transitions consists of search transitions only, it is annotated by the symbol as .

Extrinsic transitions must have a specific form; namely they must be a compute transition. {defi}[Compute transitions] A transition is a compute transition if: (i) the first state has the -focus that is an incoming edge of an active operation edge; and (ii) the second state has the -focus. We can observe that substitution transitions or compute transitions are possible if and only if a state has the -focus, and they always change the focus to the -focus. We refer to substitution transitions and compute transitions altogether as rewrite transitions (cf. Table 1).

Compute transitions may be specified locally, by rewrite rules, in the same manner as the intrinsic transitions. Figure 10a & 10b shows examples of rewrite rules. We leave it entirely open what the actual rewrite associated to some operation is, by having the behaviour as parameter of the UAM as well as the operation set . This is part of the semantic flexibility of our framework. We do not specify a meta-language for encoding effects as particular transitions. Any algorithmic state transformation (e.g. the compute transition for in Figure 10c) is acceptable.

We can now define the UAM as follows. {defi}[the UAM] Given two parameters and , the universal abstract machine (UAM) is given by data such that:

-

•

is a set of states,

-

•

is a set of intrinsic transitions, and

-

•

is a set of compute transitions.

We refer to elements of as extrinsic transitions, as well as compute transitions; we use these two terms interchangeably.

An execution of the UAM starts with a focus-free hypernet that represents a closed term, e.g. a result of the translation (cf. Figure 6). It is successful if it terminates with a final state, and not if it gets stuck with a non-final state. {defi}[Execution and stuck states]

-

(1)

An execution on a focus-free hypernet is a sequence of transitions starting from the initial state .

-

(2)

A state is said to be stuck if it is not final and cannot be followed by any transition.

Recall that we have a notion of stable hypernet (Section 6.1) that is a graphical counterpart of values. An execution on any stable hypernet terminates successfully at a final state, with only search transitions (cf. 33(1) which is proved for the “non-linear” UAM).

7. Contextual equivalence on hypernets

In this section, we set the target of our proof methodology. First, we define contextual equivalence in a general manner. Next, we clarify what kind of operations our proof methodology applies to.

7.1. Generalised contextual equivalence

We propose notions of contextual refinement and equivalence that check for successful termination of execution444We opt for the very basic notion of contextual refinement that concerns termination only. Richer observation (e.g. evaluation results, output, probability, nondeterminism) would require different definitions of contextual refinement, and these are out of the scope of this paper. . Our notion of contextual refinement (and hence contextual equivalence) generalise the standard notions in two ways.

-

•

The notion of contextual refinement can be flexible in terms of a class of contexts in which it holds. Namely, contextual refinement is parameterised by a set of focus-free contexts. The standard contextual refinement can be recovered by setting to be the set of all focus-free contexts.

-

•

The notion of contextual refinement can count and compare the number of transitions. Namely, contextual refinement is parameterised by a preorder on natural numbers. The standard contextual refinement can be obtained by setting to be the total relation . Other typical examples of the preorder are the greater-than-or-equal relation and the equality . With these preorders, one can prove that two terms are contextually equivalent, and moreover, one takes a less number of transitions to terminate than the other (with ) or the two terms take exactly the same number of transitions to terminate (with ).

We require the parameter to be closed under plugging, i.e. for any contexts such that is defined, . {defi}[State refinement and equivalence] Let be a preorder on , and and be two states.

-

•

is said to refine up to , written as , if for any number and any final state such that , there exist a number and a final state such that and .

-

•

and are said to be equivalent up to , written as , if and .

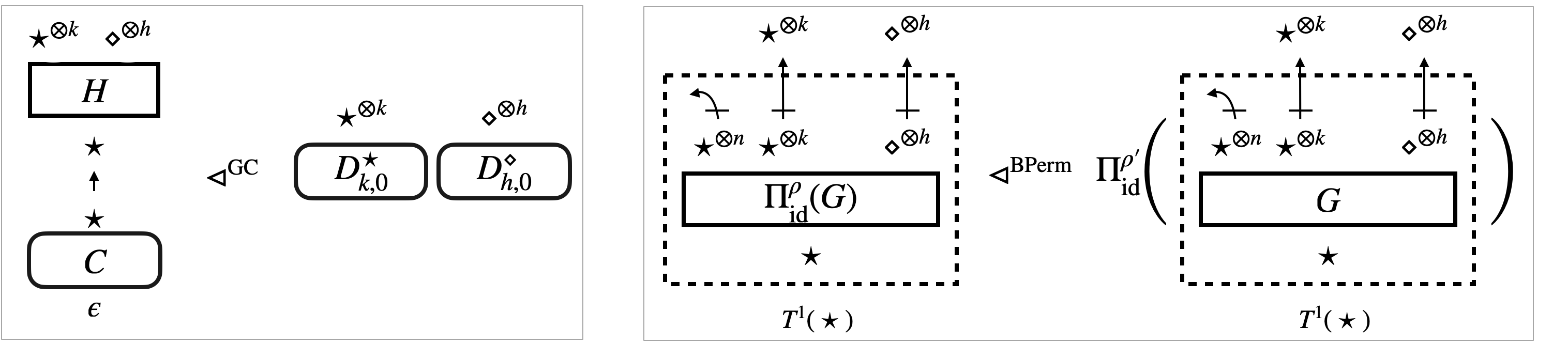

[Contextual refinement and equivalence] Let be a set of contexts that is closed under plugging, be a preorder on , and and be focus-free hypernets of the same type.

-

•

is said to contextually refine in up to , written as , if any focus-free context , such that and are states, yields refinement .

-

•

and are said to be contextually equivalent in up to , written as , if and .

In the sequel, we simply write etc., making the parameter implicit.

Because is a preorder, and are indeed preorders, and accordingly, equivalences and are indeed equivalences (35).

When the relation is the universal relation , the notions concern successful termination, and the number of transitions is irrelevant. If all compute transitions are deterministic, contextual equivalences and coincide for any (as a consequence of 36).

Because is closed under plugging, the contextual notions and indeed become congruences. Namely, for any and such that and are defined, , where .

As the parameter , we will use the set of all focus-free contexts, for the time being. We will use another set in Section 11. The standard notions of contextual refinement and equivalence can be recovered as and .

7.2. Determinism and refocusing

We will focus on operations whose behaviour makes the UAM both deterministic and refocusing in the following sense. {defi}[Determinism and refocusing]

-

(1)

A UAM is deterministic if the following holds: if two transitions and are possible, it holds that up to graph isomorphism.

-

(2)

A state is rooted if .

-

(3)

A UAM is refocusing if every transition preserves the rooted property.

In a refocusing UAM, the rooted property becomes an invariant, because any initial state is trivially rooted. The invariant ensures the following: starting the search process (i.e. a search transition) from a state with the -focus can be seen as resuming the search process , from an initial state, on the underlying hypernet . Resuming redex search after a rewrite, rather than starting from scratch, is an important aspect of abstract machines. In the case of the lambda-calculus, enabling the resumption is identified as one of the key steps (called “refocusing”) to synthesise abstract machines from reduction semantics by Danvy et al. [DMMZ12]. In our setting, it is preservation of the rooted property that justifies the resumption.

For many rewrite transitions that are specified by a local rewrite rule, it suffices to check the shape of the rewrite rule, in order to conclude that the corresponding rewrite transition preserves the rooted property. Intuitively, a rewrite rule (or the substitution rule) should satisfy the following.

-

(1)

No -focus can encounter prior to application of the rewrite rule .

-

(2)

The rewrite rule changes only edges above the -focus, and turns the focus into the -focus without moving it around.

-

(3)

If the -focus encounters , subsequent search transitions yield the -focus.

The above idea is formalised as a notion of stationary rewrite transition. If a rewrite transition is stationary in the following sense, it preserves the rooted property. {defi}[Stationary rewrite transitions] A rewrite transition is stationary if there exist a focus-free simple context , focus-free hypernets and , and a number , such that the following holds.

-

(1)

is one-way,

-

(2)

and , and

-

(3)

for any , such that is a state, there exists a state with the -focus, such that .

Lemma 2 (27).

If a rewrite transition is stationary, it preserves the rooted property, i.e. being rooted implies is also rooted.

Finally, determinism and refocusing of a UAM boil down to those of extrinsic transitions under a mild condition.

Lemma 3 (Determinism and refocusing).

-

•

A universal abstract machine is deterministic if extrinsic transitions are deterministic.

-

•

Suppose that in the substitution rule (Figure 9f) is a stable hypernet. A universal abstract machine is refocusing if extrinsic transitions preserve the rooted property.

Proof 7.1.

Intrinsic transitions and extrinsic transitions are mutually exclusive, and intrinsic transitions are all deterministic for the following reasons.

-

•

Search transitions are deterministic, because at most one interaction rule can be applied at any state.

-

•

Although two different substitution rules may be possible at a state, substitution transitions are still deterministic. Namely, if two different substitution rules and can be applied to the same state, i.e. there exist focus-free simple contexts and such that , then these two rules yield the same transition, by satisfying .

Therefore, if extrinsic transitions are deterministic, all transitions become deterministic.

Search transitions trivially preserve the rooted property. Substitution transitions also preserve the rooted property, because they are stationary, under the assumption that in Figure 9f is stable. Therefore, all transitions but extrinsic transitions already preserve the rooted property.

8. A sufficiency-of-robustness theorem

In this section, we will state a sufficiency-of-robustness theorem (6), by identifying sufficient conditions (namely safety and robustness) for contextual refinement . Throughout this section, we will use the micro-beta law, which looks like Figure 11, as a leading example. It is derived from the micro-beta rewrite rule (Figure 10b) by removing focuses, and it is the core of a graphical counterpart of the beta-law . We will introduce relevant notions and concepts (such as safety and robustness), by illustrating what it takes for the micro-beta law to hold.

8.1. Pre-templates and specimens

We begin with an observation that we are often interested in a family of contextual refinements. Syntactically, indeed, the beta-law represents a family of (concrete) contextual refinements such as . Graphically, it is the same; the micro-beta law represents a family of (concrete) laws. In the micro-beta law (Figure 11), can be arbitrary and can be any stable hypernet. The sufficiency-of-robustness theorem will therefore take a family of pairs of focus-free hypernets (i.e. a relation on focus-free hypernets), and identifies sufficient conditions for the family to imply a family of (concrete) contextual refinements.

Moreover, the relation must be well-typed, i.e. each pair must share the same type. This in particular means that, if represents a term (or a thunk), must represents a term (or resp. a thunk) with the same number of free variables. We therefore formalise as a type-indexed family of relations on focus-free hypernets, and call it pre-template. A pre-template is our candidate of (a family of) contextual refinement. {defi}[Pre-templates] A pre-template is given by a union of a type-indexed family , where is a set of types. Each is a binary relation on focus-free hypernets such that, for any where , and are focus-free hypernets with type and .

[Micro-beta pre-template ] As a leading example, we consider the micro-beta pre-template , depicted in Figure 11, derived from the micro-beta rewrite rule (Figure 10b). The pre-template additionally requires to be stable, compared to the micro-beta rewrite rule; this amounts to require to be a value in the beta-law . For each , the hypernets and have the same type , where and the sequence of types can be arbitrary as long as is stable. ∎

To directly prove that a pre-template implies contextual refinement , one would need to compare states for any focussed context . We use data dubbed specimen to provide such a pair. Sometimes we prefer relaxed comparison, between two states such that and for some binary relations on states. Such comparison can be specified by what we call quasi-specimen up to . The notion of (quasi-)specimen is relative to the set of focus-free contexts, which is one of the parameters of contextual refinement. {defi}[(Quasi-)specimens] Let be a pre-template, and and be binary relations on states.

-

(1)

A triple is a -specimen of if the following hold:

(A) , and the three sequences have the same length .

(B) for each .

(C) is a state for each . -

(2)

A pair of states is a quasi--specimen of up to , if there exists a -specimen of such that the following hold:

(A) The focuses of , and all have the same label.

(B) If and are rooted, then and are also rooted, , and . -

(3)

A -specimen is said to be single if the sequence only has one element, i.e. the context has exactly one hole edge (at any depth).

We can refer to the focus label of a -specimen and a quasi--specimen. Any -specimen gives a quasi--specimen up to .

As described in Section 2.3, the key part of proving contextual refinement for each is to show (2). With the notion of specimen in hand, (2) can be rephrased as follows:

-

•

for any -specimen of and a transition ,

-

•

there exist a -specimen and such that and the following holds.

| (6) |

To establish (6), it suffices to perform case analysis on the transition . As explained in Section 2.3, there are three possible cases. The first case is a trivial case, where the -focus or -focus moves just inside the context . In this case, we can take the updated context such that . For the other two cases, we identify sufficient conditions for (6), namely safety and robustness.

8.2. Safety

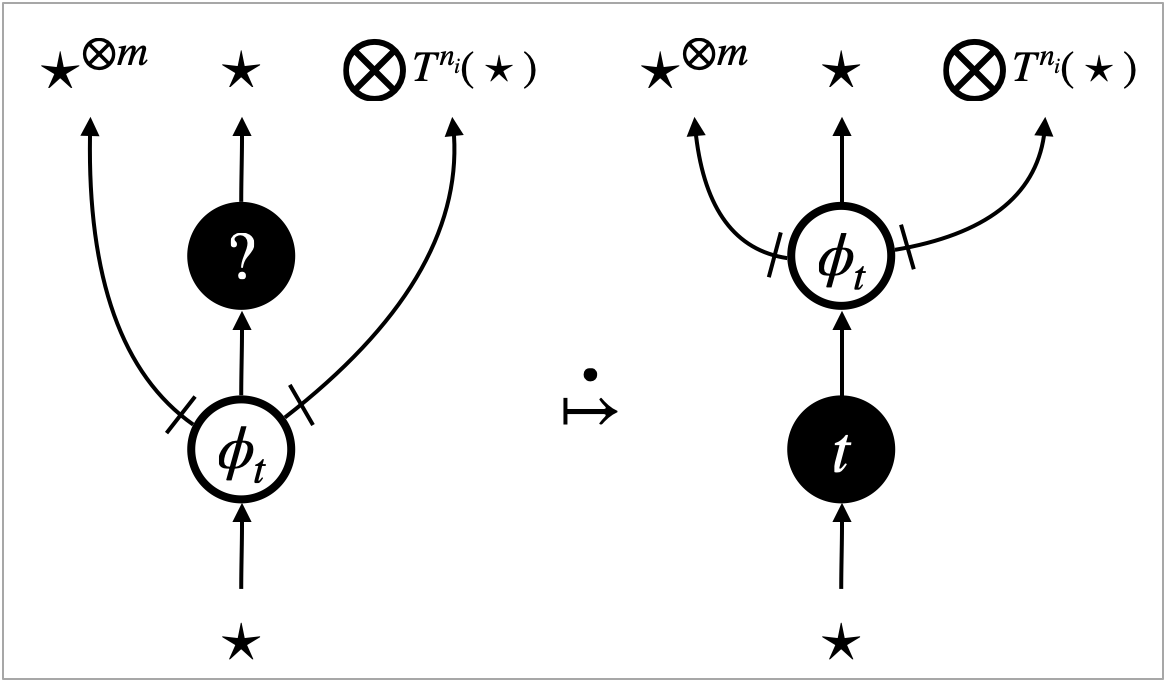

The second case of case analysis for (6) is when the -focus or the -focus encounters one of (and ); see Figure 4b. Let us look at how the micro-beta pre-template (cf. Figure 10b; mind that the focuses are dropped in the pre-template) satisfies (6) in this case. Let be the ones the focus is encountering. There are three sub-cases.

- Case (ii-1) Searching:

-

The -focus enters , i.e. we have and inside states . On , a few search transitions will be followed by a compute transition that applies the micro-beta rewrite rule, because what is connected to the right target of the application edge () (i.e. in Figure 11) is required to be stable. The result is exactly .

This means that we can take a -specimen where: is obtained by replacing the -th hole of with ; and are obtained by removing from . One observation is that the focus in is not entering (i.e. pointing at a hole), because the focus is the -focus pointing at .

- Case (ii-2) Backtracking on the box:

-

The -focus is on top of the box (i.e. in Figure 11). This case is in fact impossible in a refocusing UAM. To make states rooted, the -focus must be at the end of an operation path. However, the -focus in the state is adjacent to a box edge, and cannot be at the end of an operation path.

- Case (ii-3) Backtracking on the stable argument:

-

The -focus is on top of what is connected to the right target of the application edge () (i.e. in Figure 11). This case is also impossible, because of types. The stable hypernet has type (cf. Section 6.1), and the -focus has type . The -focus cannot be on top of .

From Case (ii-1), we extract a sufficient condition for (6), dubbed input-safety, as follows. The micro-beta pre-template falls into (II) below.

{defi}[Input-safety]

A pre-template is

-input-safe if, for any

-specimen

of such that

has the entering -focus, one of the

following holds.

(I)

There exist two stuck states and

such that

for each .

(II)

There exist a -specimen

of

and two numbers , such that

the focus of

is the -focus or the non-entering -focus, ,

, and

.

(III)

There exist a quasi--specimen

of

up to ,

whose focus is not the -focus,

and two numbers ,

such that ,

, and

.

Case (ii-2) and (ii-3) tells us that types and the rooted property prevents the -focus from visiting such that . This situation can be captured by one-way hypernets (Section 6.1), resulting in another sufficient condition dubbed output-closure. {defi}[Output-closure] A pre-template is output-closed if, for any hypernets , either or is one-way.

Input-safety and output-closure are the precise safety conditions. When a pre-template is safe, we simply call it template. {defi}[Templates] A pre-template is a -template, if it is -input-safe and also output-closed.

The micro-beta pre-template is indeed safe, and hence a template. The third parameter of input-safety is irrelevant here, and we can simply set it as the equality . Recall that is the set of all focus-free contexts using operations .

Lemma 4 (Safety of ).

Let be the set , be the set , and . The micro-beta pre-template is a -template. ∎

8.3. Robustness

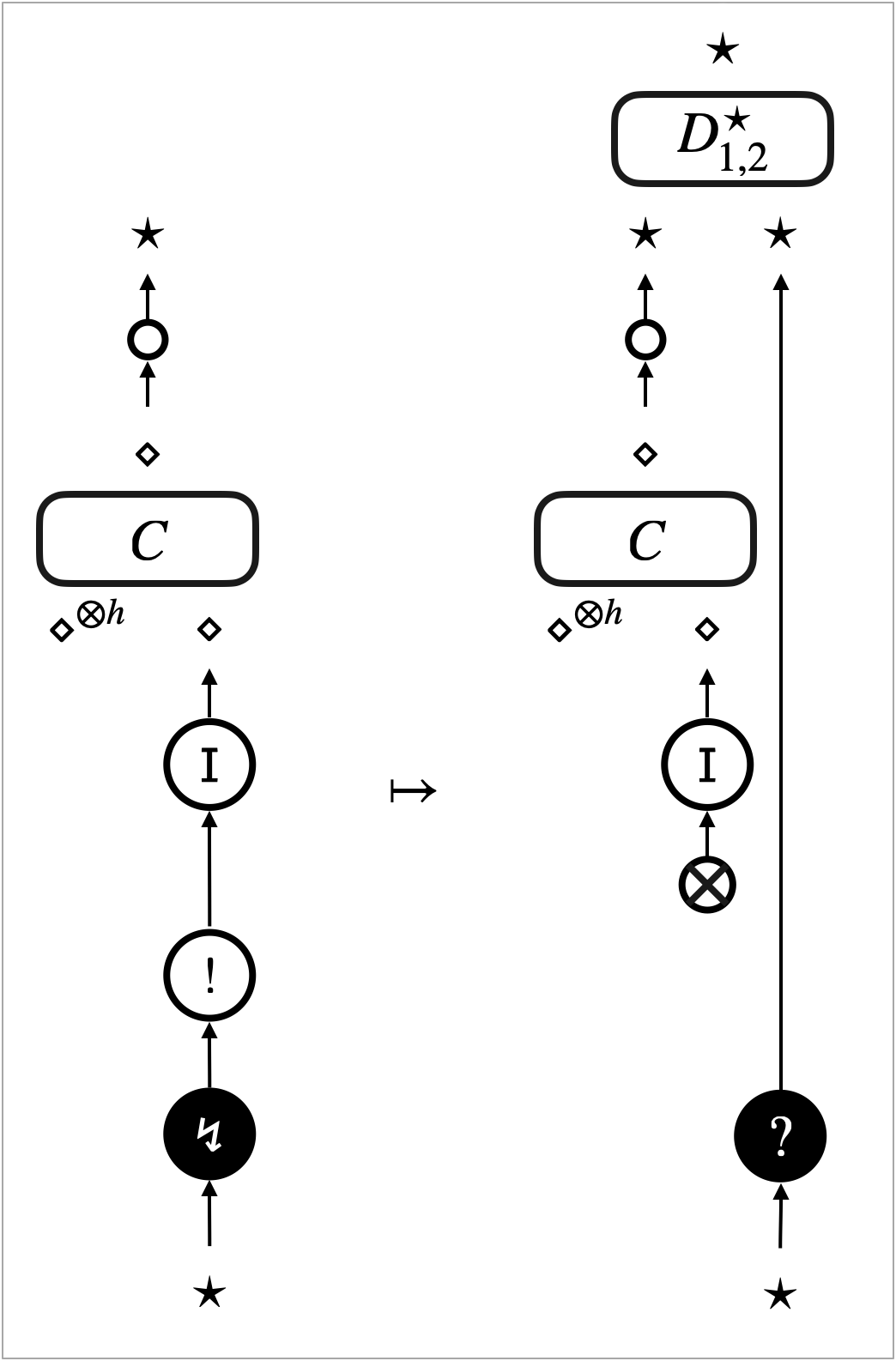

The last case of case analysis for (6) is when the -focus triggers a rewrite transition in (and hence in ). Does the micro-beta template satisfy (6) in this case? It depends on the rewrite transition . Let us consider an operation set where and . We can perform case analysis in terms of which rewrite is triggered by the -focus.

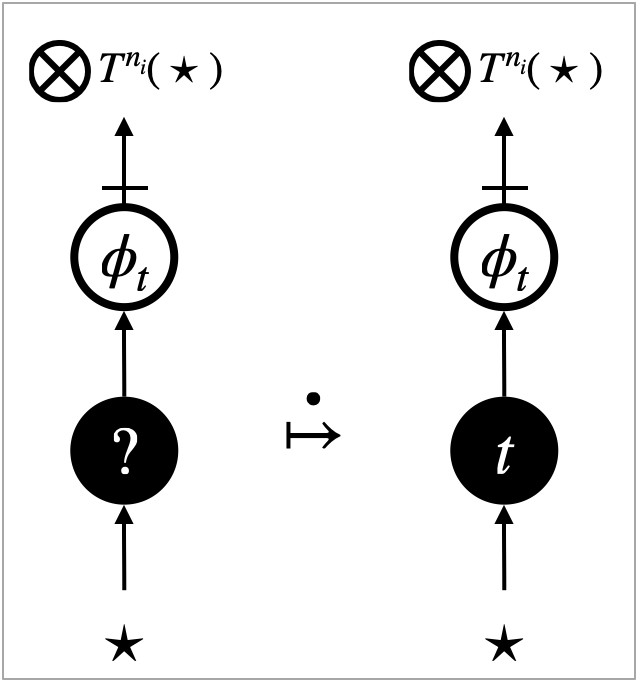

- Case (iii-1) Substitution:

-

The -focus triggers application of the substitution rule (Figure 9f). The rule simply removes a variable edge (), and the same rule can be applied to both states . Sub-graphs related by may be involved, as part of in Figure 9f, but they are kept unchanged. Therefore we can take a -specimen where has the same number of holes as .

- Case (iii-2) Arithmetic:

-

The -focus triggers application of the arithmetic rewrite rule (Figure 10a). The rewrite rule only involves three edges, and it can never involves sub-graphs related by . We can also take a -specimen where has the same number of holes as .

- Case (iii-3) Micro-beta:

-

The -focus triggers application of the micro-beta rewrite rule (Figure 10b). Sub-graphs related by may be involved in the rewrite rule, as part of the box content in Figure 10b, but they are kept unchanged. We can also take a -specimen where has the same number of holes as .

- Case (iii-4) :

-

The -focus triggers application of the rewrite rule (Figure 10c). The rewrite rule inevitably involves sub-graphs related by , and as a result, can yield different results, i.e. vs. . The same rewrite rule can be applied to states and yield , respectively, and these states can contain different natural-number edges (i.e. vs. such that ). We cannot take a specimen of to make (6) happen. ∎

We can therefore observe the following: the micro-beta template satisfies (6) relative to active operations (formalised in 5 below), and does not satisfy (6) relative to the active operation . We say the micro-beta template is robust only relative to .

Robustness, as a sufficient condition for (6), can be formalised as follows.

{defi}[Robustness]

A pre-template is

-robust relative to

a rewrite transition if, for

any -specimen

of , such that

and

the focus of

is the -focus and not entering,

one of the following holds.

(I) or

is not rooted.

(II)

There exists a stuck state such that

.

(III)

There exist a quasi--specimen

of

up to ,

whose focus is not the -focus,

and two numbers ,

such that ,

, and

.

We can now formally say that the micro-beta pre-template is robust relative to the substitution transitions and the extrinsic transitions for . The third and fourth parameters of robustness are irrelevant here555These parameters will be used in Section 12 (See Table 3)., and we can simply set them as the equality .

Lemma 5 (Robustness of ).

The micro-beta pre-template is -robust relative to the substitution transitions and the extrinsic transitions for . ∎

8.4. A sufficiency-of-robustness theorem

We have collected definitions of safety and robustness. We can finally state the sufficiency-of-robustness theorem. The theorem incorporates the so-called up-to technique: it enables us to prove contextual refinement that depends on (or, that is up to) state refinements and . Safety (Section 8.2) and robustness (Section 8.3) accordingly incorporates the up-to technique by means of quasi-specimens up-to. We cannot use arbitrary preorders in combination with the preorder , though; they must be reasonable in the following sense.

[Reasonable triples]

A triple of preorders on is

reasonable if the following hold:

(A) is closed under addition, i.e. any and satisfy

.

(B) , and .

(C) , where denotes

composition of binary relations.

Examples of a reasonable triple include:

,

,

,

,

,

,

,

,

.

The sufficiency-of-robustness theorem has two clauses (1) and (2). To prove that a pre-template implies contextual equivalence (not refinement), one can use (1) twice with respect to and , or alternatively use (1) and (2) with respect to . The alternative approach is often more economical. This is because it involves proving input-safety of for both parameters and , which typically boils down to a single proof for the smaller one of , thanks to monotonicity of input-safety with respect to .

Theorem 6 (Sufficiency-of-robustness theorem).

If an universal abstract machine is deterministic and refocusing, it satisfies the following property. For any set of contexts that is closed under plugging, any reasonable triple , and any pre-template on focus-free hypernets :

-

(1)

If is a -template and -robust relative to all rewrite transitions, then implies contextual refinement in up to , i.e. any implies .

-

(2)

If is a -template and the converse is -robust relative to all rewrite transitions, then implies contextual refinement in up to , i.e. any implies .

Proof 8.1.

This is a consequence of 40, 37 and 38 in Appendix F.

The proof is centred around a notion of counting simulation (Appendix F). We first prove that, for each robust template , its contextual closure is a counting simulation (40). We then prove soundness of counting simulation with respect to state refinement (37). We can further prove that, if implies state refinement, implies contextual refinement (38).

We can use 6 (1) to conclude that the micro-beta pre-template implies contextual refinement . Note that The micro-beta pre-template is -robust, and therefore -robust as well, because .

Proposition 7.

For the operation set , the micro-beta pre-template implies contextual refinement . ∎

The above proposition establishes contextual refinement in the absence of the operation . This is due to the observation that the micro-beta template is not robust relative to the rewrite rule of (Figure 10c). Can we say more than just failure of robustness, in the presence of ?

The answer is yes. For the specific operation set , we can actually show that the micro-beta template implies contextual refinement .

Lemma 8 (Robustness of ).

The micro-beta pre-template is -robust relative to the rewrite transitions for .

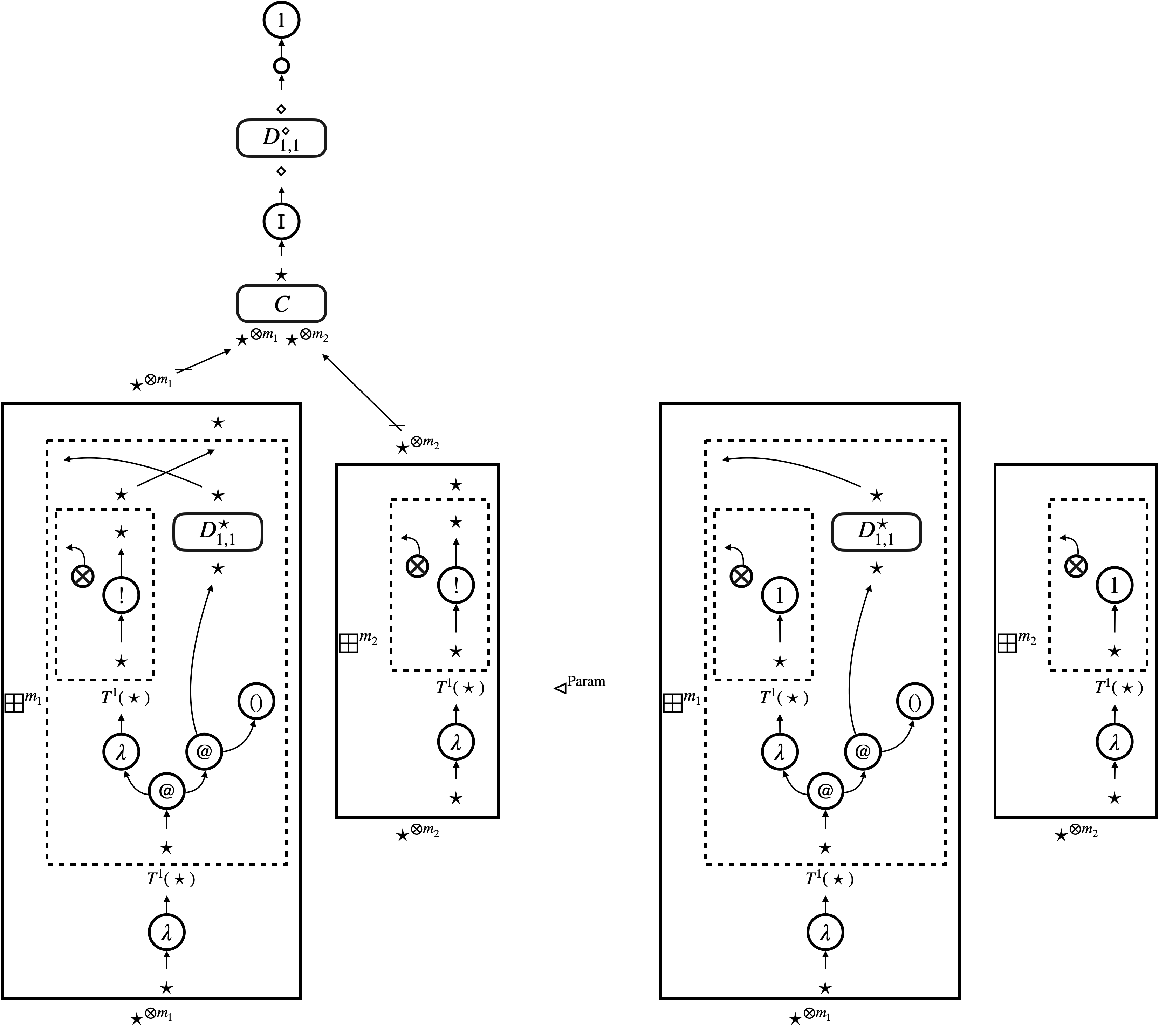

Proof 8.2.

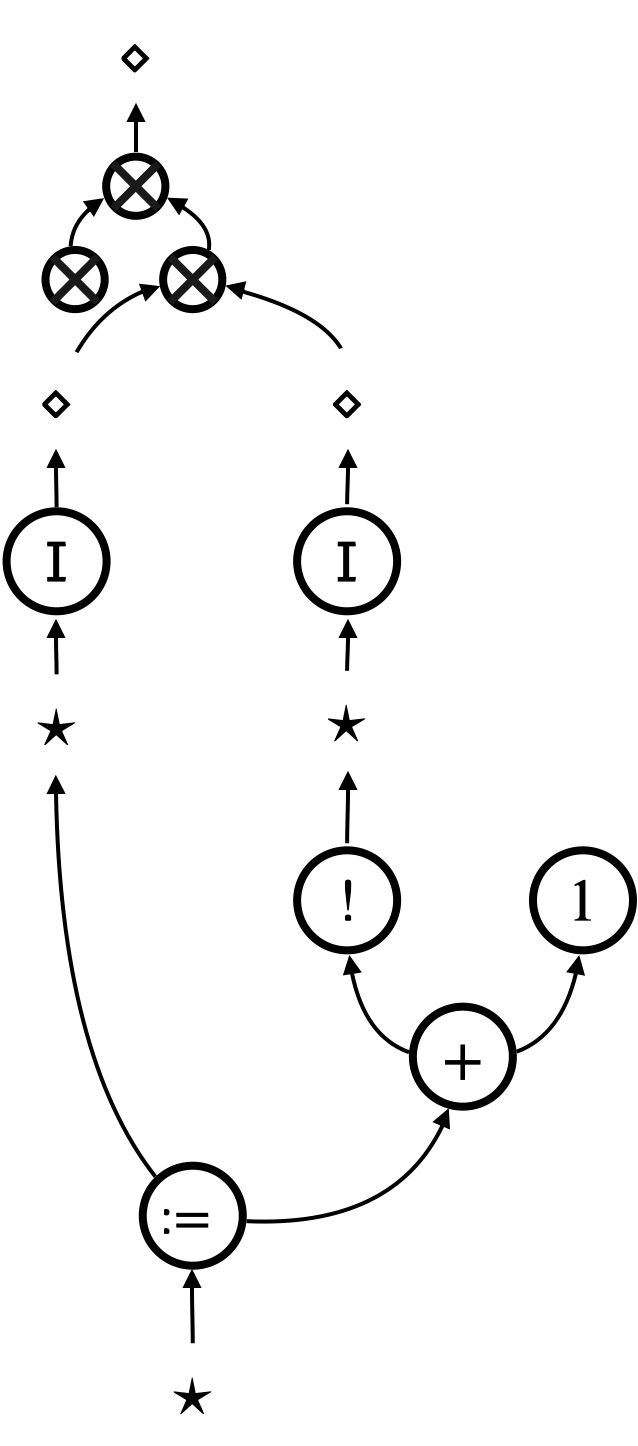

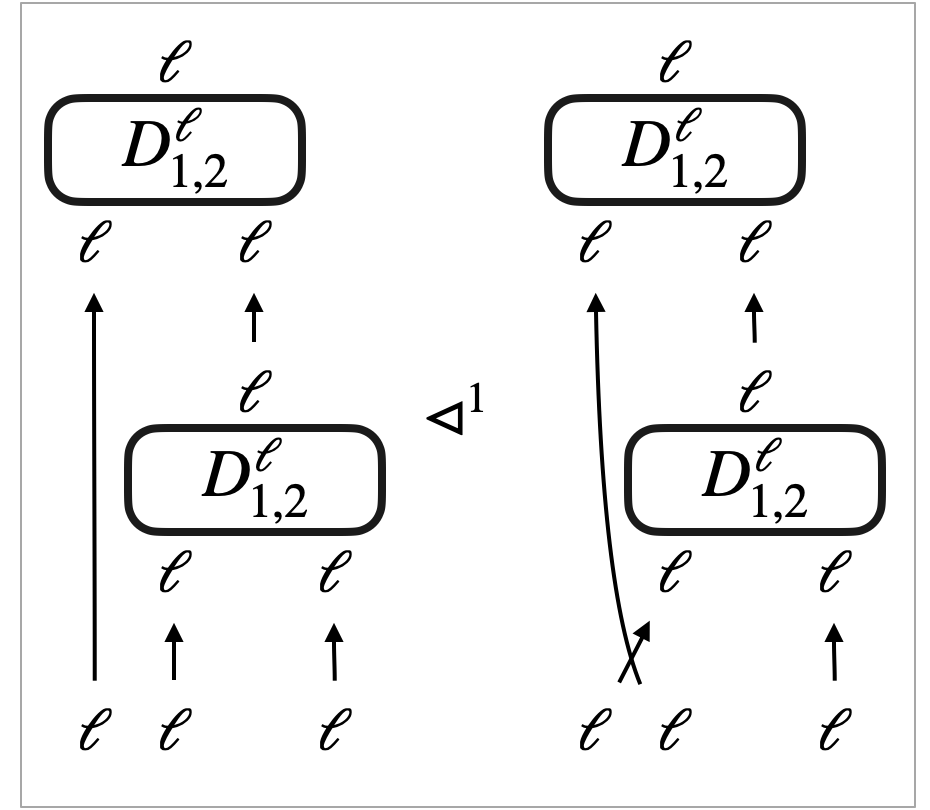

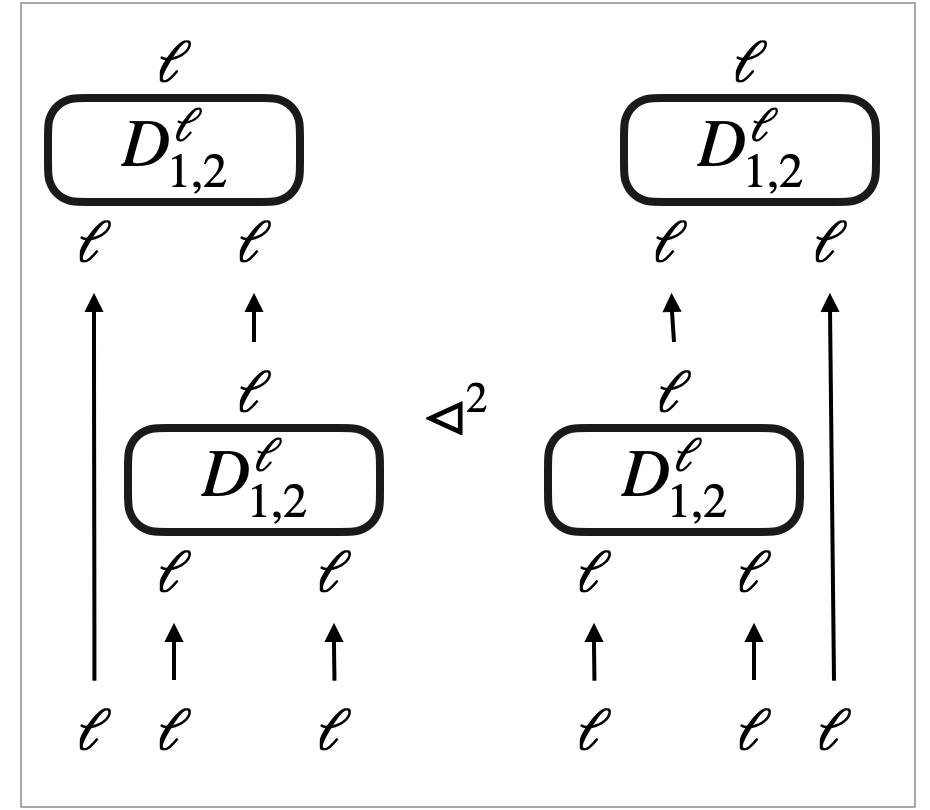

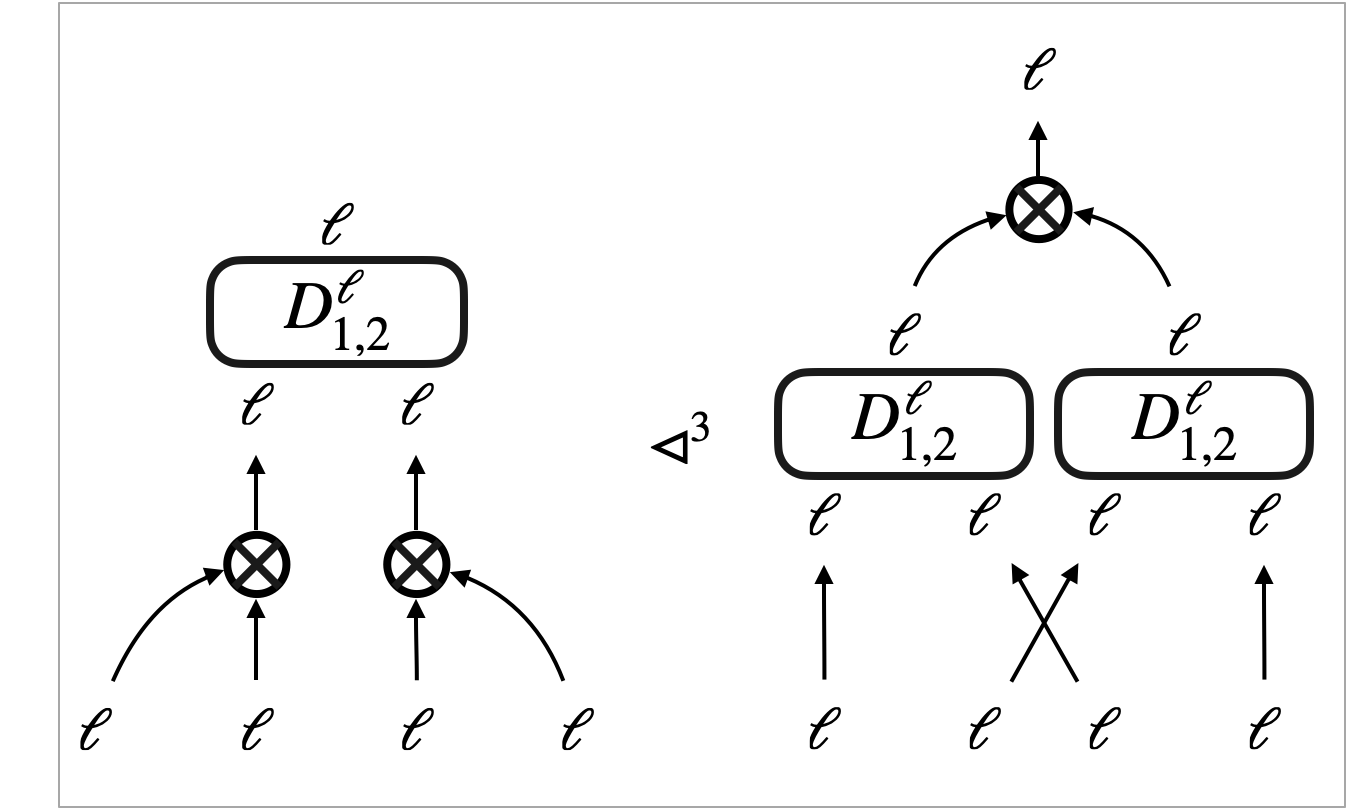

To prove robustness, we use the auxiliary pre-template , shown in Figure 12, that identifies all natural number edges. The pre-template is a -template, and it is -robust relative to the substitution transitions and the extrinsic transitions for including . Therefore, by 6 (1), it implies contextual refinement . The pre-template is symmetric, and consequently, it implies contextual equivalence .

In Case (iii-4) in Section 8.3, we could not take a specimen of that induces the states . The difference between these states is given by as well as different natural number edges; in other words, the difference is given by and .

In fact, we can take a quasi-specimen of up to , which is namely the pair . There exists a state such that: (a) thanks to the symmetric robust template , and (b) the pair is induced by a specimen of .

As a result, with the help of the symmetric robust template , the micro-beta template is robust also relative to .

In the presence of conditional branching and divergence, however, the micro-beta template would not be robust relative to . The above lemma crucially relies on robustness of the auxiliary template , which would be violated by conditional branching; in other words, the template would not be robust relative to conditional branching. Conditional branching and divergence, combined with , would enable us to construct a specific context that distinguishes the left- and the right hand sides of .

9. Representation of variable sharing and store

| Constants: | (natural numbers) | |||

| (booleans) | ||||

| (unit value) | ||||

| Unary operations: | (negation of natural numbers) | |||

| (reference creation) | ||||

| (dereferencing) | ||||

| Binary operations: | (summation/subtraction of natural numbers) | |||

| (assignment) | ||||

| (equality testing of atoms) | ||||

| Terms: | (the lambda-calculus terms) | |||

| (atoms) | ||||

| (constants, unary/binary operations) | ||||

| Formation rules: | ||||

| Syntactic sugar: | ||||

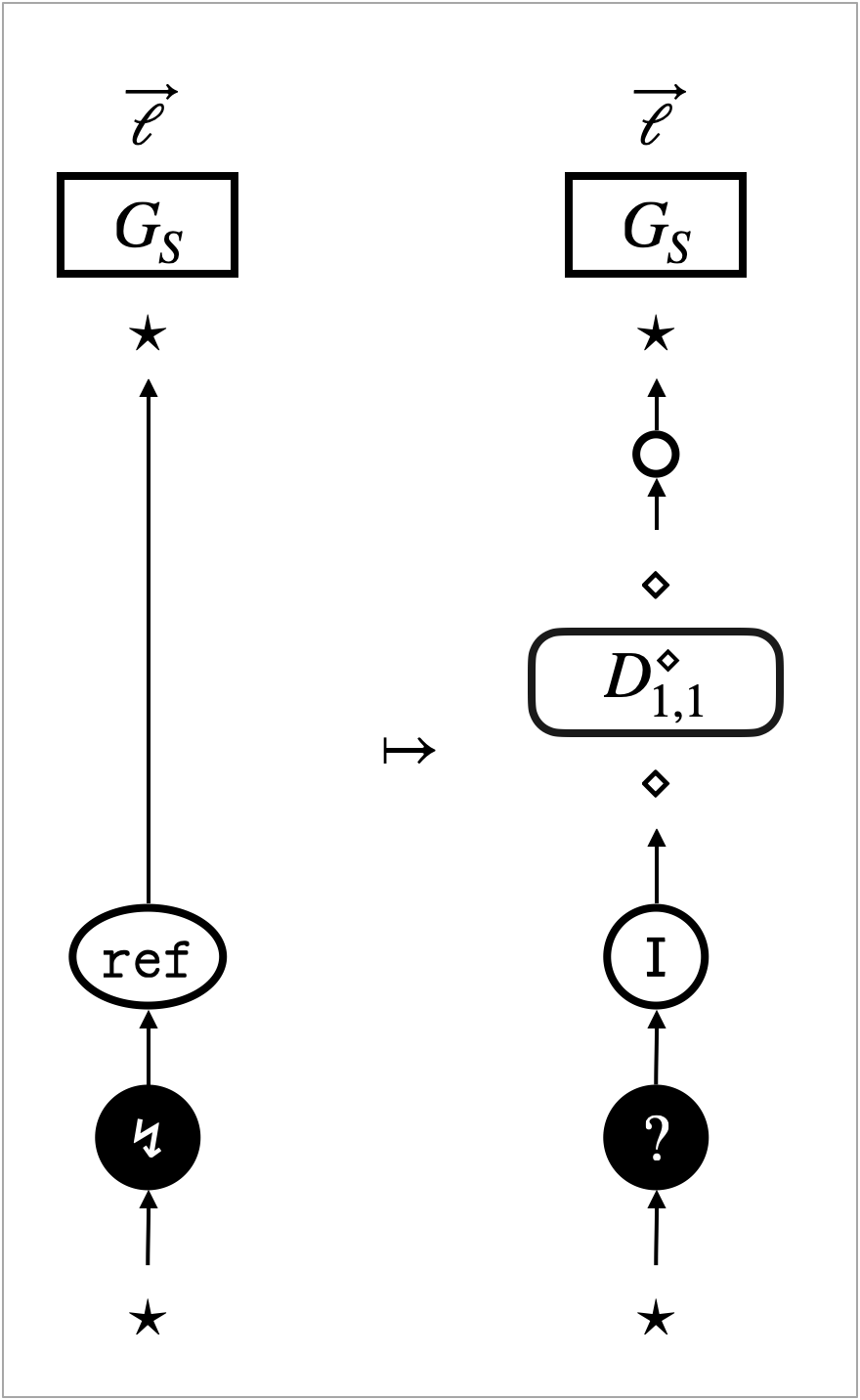

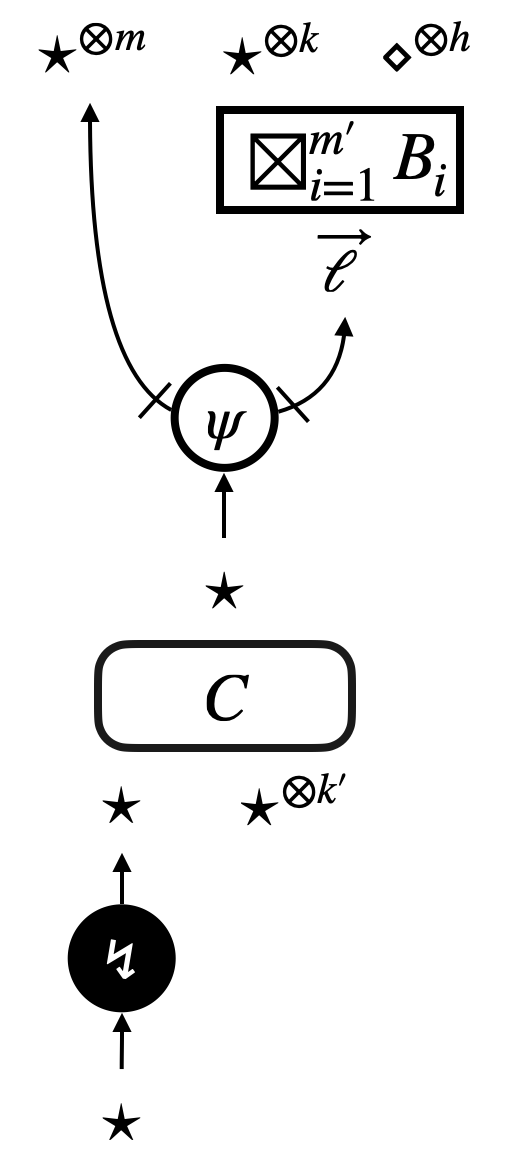

This section adapts the translation of the linear lambda-calculus, presented in Section 4, to accommodate variable sharing and (general, untyped) store. Figure 13 shows an extension of the untyped call-by-value lambda-calculus that accommodates arithmetic as well as:

-

•

atoms (or, locations of store) ,

-

•

reference-related operations: reference creation , dereferencing , assignment , equality testing of atoms ,

-

•

and their return values: booleans and the unit value .

In Figure 13, is a finite sequence of (free) variables and is a finite sequence of (free) atoms.

9.1. Variable sharing

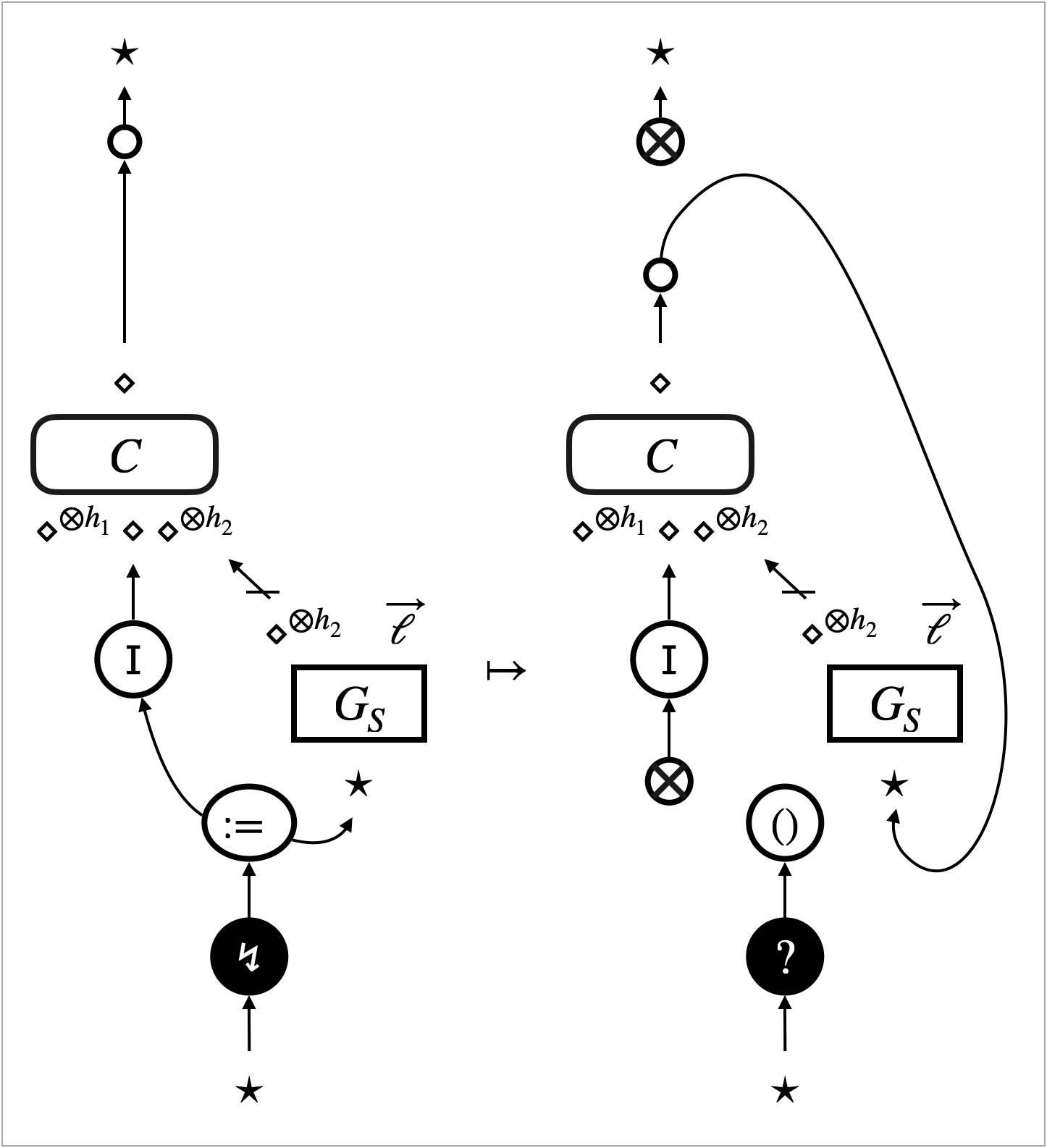

In an arbitrary term, a variable may occur many times, or it may not occur at all. To let sub-terms share and discard a variable, we introduce contraction edges and weakening edges . We can construct a binary tree with an arbitrary number of leaves using these contraction and weakening edges. We call such a tree contraction tree.

There are many ways to construct a contraction tree of leaves, but we fix a canonical666Each is canonical, in the sense that any contraction tree that contains at least one weakening and has leaves can be simplified to using the laws in Figure 21 (namely ). tree as below.

Every canonical tree contains exactly one weakening edge and contraction edges.

The translation of linear lambda-terms needs to be adapted to yield a translation of general lambda-terms. The adaptation amounts to inserting canonical trees appropriately. Additionally, we replace the edges with canonical trees , to obtain uniform translation.

We will present the exact translation in Section 9.3 (see Figure 16), and here we just show an example in Figure 14. Figure 14a shows the hypernet . Canonical trees are inserted for each term constructor, but among those, coloured canonical trees replace the edges that would represent variable occurrences in the linear translation . Figure 14b shows a possible simplification of the hypernet; such simplification is validated only by proving certain observational equivalences on contraction trees (namely, those in Figure 21).

9.2. Store and atom sharing

We next look at terms that contain references to store. An example term is that increments the value stored at atom (location) . We represent such a term, together with store (e.g. ), altogether as a single hypernet; see Figure 15. The hypernets in the figure are simplified in the same manner as Figure 14b.

To represent store and atom sharing, we begin with an observation about the difference between variable sharing and atom sharing. Atoms, like variables, may occur arbitrarily many times in a single term. However, whereas terms bound to a variable can be duplicated, each store associated to the atom should not be duplicated. For example, the atom appears twice in the term , but this does not mean the store is accordingly duplicated. Both occurrences of the atom points at the single store .

This observation leads us to introduce one new vertex label, and some edge labels, of our hypernets. Firstly, we introduce a new vertex label that represents store, in addition to the labels we have used (i.e. for terms and for thunks with bound variables). While terms and thunks can both be duplicated, store cannot be duplicated; this is the reason why we need a new vertex label.

Secondly, we introduce another version of contraction and weakening, namely and , for the type . We call these contraction and weakening as well. Contractions and weakenings for and appear the same, but they will have different behaviours (see Section 10.1).

Finally, we introduce edge labels for type conversion between and : namely, anonymous atom edges called instance , and anonymous store edges . Their roles in the hypernet representation are best understood looking at the example in Figure 15. Each occurrence of an atom is represented by the anonymous instance edge ‘’, and instances of the same atom is connected to a contraction tree of type . The contraction tree is then connected to the part of the hypernet in Figure 15b that represents the store , which consists of the store edge ‘’ and the stored value . In Figure 15b, the part of the hypernet that is typed by connects instances of an atom to its stored value.