22institutetext: Department of Oral and Maxillofacial Surgery, Houston Methodist Hospital, TX 77030, USA

33institutetext: Department of Surgery (Oral and Maxillofacial Surgery), Weill Medical College, Cornell University, NY 10065, USA 44institutetext: Oral and Craniomaxillofacial Surgery at Shanghai Ninth Hospital, Shanghai Jiaotong University College of Medicine, Shanghai 200011, China 44email: ptyap@med.unc.edu, 44email: jxia@houstonmethodist.org

A Self-Supervised Deep Framework for Reference Bony Shape Estimation in Orthognathic Surgical Planning

Abstract

Virtual orthognathic surgical planning involves simulating surgical corrections of jaw deformities on 3D facial bony shape models. Due to the lack of necessary guidance, the planning procedure is highly experience-dependent and the planning results are often suboptimal. A reference facial bony shape model representing normal anatomies can provide an objective guidance to improve planning accuracy. Therefore, we propose a self-supervised deep framework to automatically estimate reference facial bony shape models. Our framework is an end-to-end trainable network, consisting of a simulator and a corrector. In the training stage, the simulator maps jaw deformities of a patient bone to a normal bone to generate a simulated deformed bone. The corrector then restores the simulated deformed bone back to normal. In the inference stage, the trained corrector is applied to generate a patient-specific normal-looking reference bone from a real deformed bone. The proposed framework was evaluated using a clinical dataset and compared with a state-of-the-art method that is based on a supervised point-cloud network. Experimental results show that the estimated shape models given by our approach are clinically acceptable and significantly more accurate than that of the competing method.

Keywords:

Orthognathic surgical planning Shape estimation Point-cloud network.1 Introduction

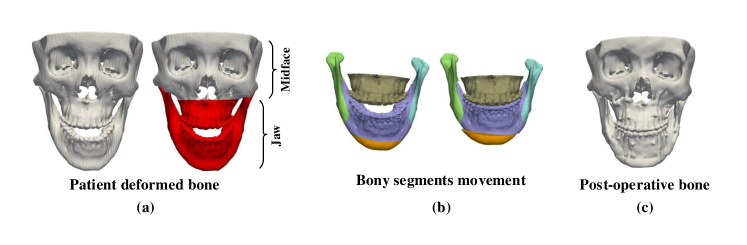

Orthognathic surgery requires a detailed surgical plan to correct jaw deformities. During computer-aided orthognathic surgical planning, a patient’s head computed tomography (CT) or cone-beam computed tomography (CBCT) scan is acquired to generate three-dimensional (3D) craniomaxillofacial (CMF) bony shape models [1]. The deformed maxilla and mandible (i.e., “jaw” in Fig. 1(a)) are then osteotomized from the 3D models into multiple bony segments (Fig. 1(b)). Under the guidance of 3D cephalometric analysis [2], a surgeon discretely moves each bony segment to a desired position, thus forming a new normal-looking CMF skeletal anatomy. A group of surgical splints are then designed and fabricated to correct the patient’s jaw according to the plan during the surgery (Fig. 1(c)). Cephalometric analysis is based on a group of specific distances and angles calculated from the anatomical landmarks on the 3D models, these measurements provide a limited guidance to the planning process, forming an “ideal” surgical plan that still heavily relies on the surgeon’s clinical experience and imagination of what the patient’s normal bone should look like. Therefore, from the surgeon’s perspective, a reference CMF bony shape model is highly desirable to accurately guide the movement of the bony segments.

Wang et al. [3] proposed to estimate patient-specific reference bony shapes based on sparse representation. Specifically, they represented a patient’s bony shape with a set of landmarks, and split them into midface landmarks and jaw landmarks. By representing the patient’s midface landmarks with a midface dictionary from normal subjects, a set of sparse coefficients were obtained, and applied to a normal jaw dictionary to estimate the patient’s normal jaw landmarks. The reference bony shape model was then generated based on the estimated landmarks. This method can work well in certain circumstances, but is heavily dependent on a small group of pre-digitized landmarks, causing the estimation performance to be sensitive to the locations of landmarks. To alleviate this problem, a recent method [4] was introduced to first simulate paired deformed-normal bony surfaces based on sparse representation, and then train a supervised point-cloud network using the simulated data to generate patient-specific reference bony surfaces. Although this network [4] outperforms the method in [3], its generalizability is limited by the extent deformity can be simulated.

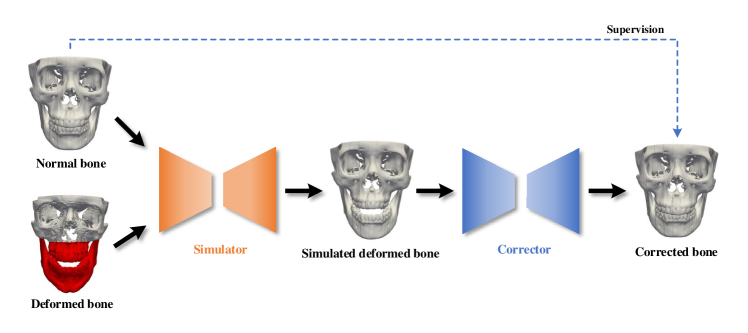

We hypothesize that a fully end-to-end trainable network is capable of estimating reference CMF bony shapes, and the network can be directly trained using random pairs of deformed-normal bones. We propose a self-supervised deep framework to first map jaw deformities of a patient bone to a normal bone to generate a simulated deformed bone with a simulator network, and then restore the simulated deformed bone back to normal with a corrector network. We train the framework using a series of bony surfaces from patients and normal subjects. The trained corrector is applied to patients to generate patient-specific reference shape models. The proposed framework was evaluated using a clinical dataset and showed superior performance over the method that is based on supervised learning [4].

2 Methods

An overview of our framework is provided in Fig. 2. Given any pair of deformed-normal bony surfaces, the simulator network takes as input the coordinates of vertices from the two surfaces and outputs the vectors that shift the vertices of the normal bony surface to generate the simulated deformed bony surface with the jaw similar to the input deformed bone and the midface same as the input normal bone. The corrector network learns a set of displacement vectors from the vertex coordinates of the simulated deformed bony surface and generates the corrected bony surface that matches the input normal bony surface.

2.0.1 Simulator Network:

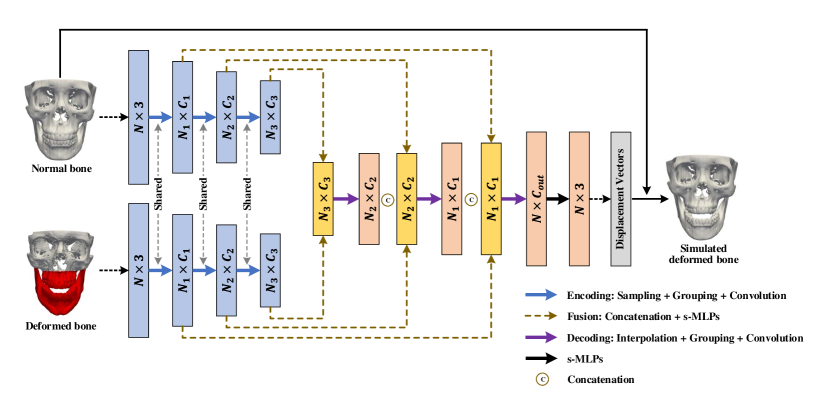

The simulator network consists of a series of encoding, fusion, and decoding layers to learn point features from the coordinates of vertices (Fig. 3). There are two encoding branches with shared weights to extract point features from the two input bony surfaces separately. Each encoding layer selects a subset of points from the input points via furthest point sampling [5]. For each sampled point, its neighboring points in a 3D ball region with radius are gathered from the input points. The point convolution is then performed over point features of the gathered points via PointConv [6], which is implemented based on shared multi-layer perceptron (s-MLP) and max-pooling [7]. Following the encoding layers, the outputs of corresponding layers from two encoding branches are fused via concatenation and s-MLPs. The fused features are then decoded by upsampling via point interpolation [5], grouping via 3D ball neighboring, and convolution via PointConv. The decoding layer increases the number of points and reduces the dimension of each feature vector. A set of cascaded fusion and decoding layers are applied to generate a output vector , which updates the positions of the vertices of the input normal bone to obtain the simulated deformed bone.

We train the simulator network using loss function

| (1) |

which encourages the jaw of the simulated deformed bone to be similar to the input deformed bone based on the relative coordinates of vertices. Specially, a set of landmarks are defined on each bony surface, the relative coordinate vector of the -th jaw vertex relative to the -th landmark on the deformed bony surface is calculated as

| (2) |

where and are respectively the coordinate vectors of the -th vertex and the -th landmark. Similarly, the relative coordinate vector for the -th jaw vertex of the simulated deformed bony surface is

| (3) |

We calculate by

| (4) |

where is the number of vertices that belong to the jaw, is the number of landmarks, and is the -norm. forces the network to fix the midface during simulation. To keep the midface unchanged, i.e., , we set the displacement vectors corresponding to midface vertices to zero in . A smooth deformation field is encouraged by calculating

| (5) |

where and are respectively the displacement vectors of and on the simulated deformed bony surface. is the set of one-ring neighboring vertices of . is the total number of vertices on the surface. Finally, a -norm regularization of the network parameters is incorporated to avoid overfitting.

2.0.2 Corrector Network:

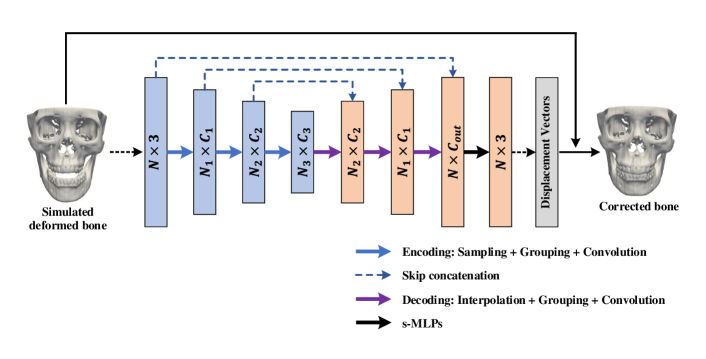

The corrector is an encoding-decoding network (Fig. 4). Like the simulator network, each encoding layer in the corrector performs sampling, grouping, and convolution operations; each decoding layer performs interpolation, grouping, and convolution. The skip connection between two corresponding layers from the encoding and decoding streams is applied to learn point features integrating local and global shape information. Following the decoding stream, the point features are processed by a set of s-MLPs to obtain a vector, which corrects the positions of vertices of the simulated deformed bone to get a corrected bone.

The loss function for the corrector network is

| (6) |

where and are the coordinate vectors of the -th vertex on the corrected and input normal bony surface, respectively, enforces -norm regularization on the network parameters.

2.0.3 Network Training and Inference:

To train the proposed networks, vertex-wise correspondences of surfaces are established by non-rigidly matching a template surface with all bony surfaces in the training set. First, a group of corresponding landmarks localized on the training surfaces are rigidly aligned. From the aligned surfaces, we then choose the surface that is closest to the average landmarks as the template, and warp it to each rest surface via non-rigid coherent point drift (CPD) matching [8]. All warped surface templates are used for network training. We reduce the number of vertices via surface simplification [9] to reduce the computation cost. The vertex coordinates are min-max normalized. The loss functions are minimized with the Adam [10] optimizer to determine the optimal network parameters. The two networks are alternatively trained in turn on each batch of samples. During the inference stage, the trained corrector is directly applied on a patient bony surface to estimate a reference bony shape model. The corrector network is invariant to the number and the order of vertices on the testing surface [7].

3 Experimental Results

3.0.1 Materials and Settings:

We used CT scans of 67 normal subjects from a previous study [11] and CT scans of 116 patients with jaw deformities to train our networks. The study was approved by our Institutional Review Board (#Pro00009723). Following the clinical routine [12], all CT data were segmented to reconstruct 3D bony shape models. 51 landmarks were localized on each bony surface by experienced oral surgeons for calculating the loss function during the training only. Following Section 2.0.3, a bony surface template from the training surfaces was selected based on the 51 landmarks to establish dense vertex correspondences. Ultimately, a total of 7772 (67 116) random pairs of deformed-normal bones were used to train the networks.

The simulator network was implemented with 4 encoding, 4 fusion, and 4 decoding layers. The corrector network was configured with 4 layers each for encoding and decoding. The numbers of points for the encoding and decoding layers were , where during training, and during testing. The radius of the 3D neighboring ball was set to and for the encoding and decoding layers, respectively. The output point features from the 4 encoding and 4 decoding layers had dimensions and , respectively. Empirically, we set , , and in the loss functions (1) and (6). The number of landmarks was set for calculating in (4). The networks were trained for 400 epochs with the learning rate of .

For testing, we acquired the data from another 24 patients with paired pre- and post-operative CT scans. The post-operative bones were used as ground truth for performance evaluation. Following the method in [4], we remeshed each post-operative bony surface according to its pre-operative bony surface to construct the vertex-wise correspondences for calculating estimation accuracy. Four evaluation metrics [4], i.e., vertex distance (VD), edge-length distance (ED), surface coverage (SC), and landmark distance (LD), were employed in the quantitative evaluation. We compared our method with DefNet [4], which was trained using 6834 paired samples simulated by sparse representation, 102 deformed bones were synthesized for each of 67 normal bones from 116 patient bones. Qualitative evaluation was performed by an experienced oral surgeon. Statistical significance of accuracy improvement was evaluated using the paired t-test.

3.0.2 Results:

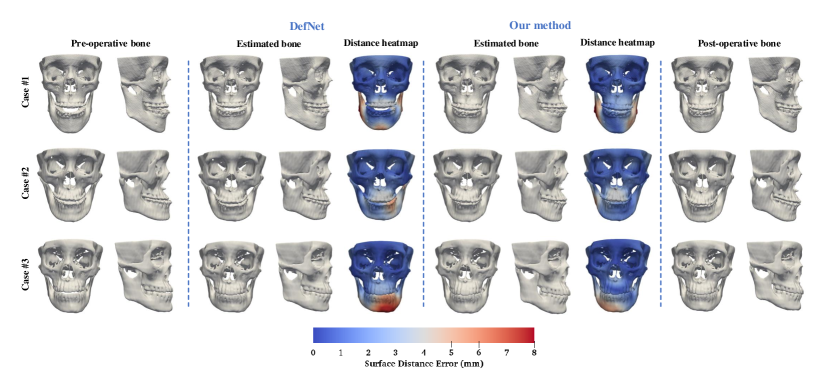

The trained framework was tested on the data of 24 patients. Figure 5 shows the estimated bony surfaces and the vertex-wise distance heatmaps for three randomly selected patients111A video illustration of the three estimation examples is available as a supporting material.. All results from our method inspected by the expert suggest that the estimated bones are clinically acceptable. For quantitative evaluation, the estimation accuracy for the jaw and the midface were calculated separately. Table 1 shows that our method is statistically significantly more accurate in estimating the normal jaw () than DefNet based on the four metrics. The accuracy in maintaining the midface is comparable between the two methods (). Overall, the proposed method outperforms DefNet in the term of accuracy.

Our method takes about 30 minutes for one training epoch, and 10 seconds for testing on each patient data, evaluated based on an NVIDIA Titan Xp GPU.

| Method | VD | ED | SC | LD | |

|---|---|---|---|---|---|

| Jaw | DefNet | 4.08 1.02 | 0.31 0.06 | 0.69 0.05 | 4.26 0.95 |

| Ours | 3.49 0.52 | 0.26 0.05 | 0.72 0.04 | 3.62 0.53 | |

| Midface | DefNet | 1.36 0.36 | 0.15 0.04 | 0.96 0.04 | 1.25 0.31 |

| Ours | 1.39 0.37 | 0.15 0.04 | 0.95 0.04 | 1.32 0.36 |

-

•

Note: A larger SC indicates better estimation performance.

4 Discussion and Conclusion

As sufficient paired deformed-normal bones are almost impossible to acquire clinically, simulating paired data is necessary for training supervised deep learning models for our task. Data simulation based on sparse representation as done in DefNet [4] is limited in mimicking deformities, and requires significant efforts from experts to confirm simulation quality. Our self-supervised deep learning framework can be trained using unpaired data. The embedded simulator network has a stronger non-linear representation ability than sparse representation, and is able to accurately synthesize a broader range of jaw deformities based on patient bones. Compared with DefNet, our framework simplifies the training process and improves prediction accuracy.

Acknowledgement

This work was supported in part by United States National Institutes of Health (NIH) grants R01 DE022676, R01 DE027251, and R01 DE021863.

References

- [1] Xia, J., et al.: Algorithm for planning a double-jaw orthognathic surgery using a computer-aided surgical simulation (CASS) protocol. Part 1: planning sequence. Int. J. Oral Maxillofacial Surg. 44(12), 1431–1440 (2015)

- [2] Xia, J., et al.: Algorithm for planning a double-jaw orthognathic surgery using a computer-aided surgical simulation (CASS) protocol. Part 2: three-dimensional cephalometry. Int. J. Oral Maxillofacial Surg. 44(12), 1441–1450 (2015)

- [3] Wang, L., et al.: Estimating patient‐specific and anatomically correct reference model for craniomaxillofacial deformity via sparse representation. Med. Phys. 42(10), 5809-5816 (2015)

- [4] Xiao, D., et al.: Estimating reference bony shape models for orthognathic surgical planning using 3D point-cloud deep learning. IEEE J. Biomed. Health Informat. (2021). \doi10.1109/JBHI.2021.3054494

- [5] Qi, C.R., et al.: PointNet++: Deep hierarchical feature learning on point sets in a metric space. In: Proc. Adv. Neural Inf. Process. Syst., pp. 5099–5108. (2017)

- [6] Wu, W., et al.: PointConv: Deep convolutional networks on 3D point clouds. In: Proc. IEEE Conf. Comput. Vis. Pattern Recognit., pp. 9621–9630. (2019)

- [7] Qi, C.R., et al.: PointNet: Deep learning on point sets for 3D classification and segmentation. In: Proc. IEEE Conf. Comput. Vis. Pattern Recognit., pp. 652–660. (2017)

- [8] Myronenko, A., Song, X.: Point set registration: Coherent point drift. IEEE Trans. Pattern Anal. Mach. Intell. 32(12), 2262–2275 (2010)

- [9] Garland, M., Heckbert, P.: Surface simplification using quadric error metrics. In: Proc. SIGGRAPH 97, pp. 209–216. (1997)

- [10] Kingma, D., Ba, J.: Adam: A method for stochastic optimization. In: Proc. Int. Conf. Learn. Representations, pp. 1–41. (2015)

- [11] Yan, J., et al.: Three-dimensional CT measurement for the craniomaxillofacial structure of normal occlusion adults in Jiangsu, Zhejiang and Shanghai Area. China J. Oral Maxillofac. Surg. 8, 2–9 (2010)

- [12] Yuan, P., et al.: Design, development and clinical validation of computer-aided surgical simulation system for streamlined orthognathic surgical planning. Int. J. Comput. Assist Radiol. Surg. 12(12), 2129–2143 (2017)