A Shape Optimization Problem Constrained with the Stokes Equations to Address Maximization of Vortices

Abstract.

We study an optimization problem that aims to determine the shape of an obstacle that is submerged in a fluid governed by the Stokes equations. The mentioned flow takes place in a channel, which motivated the imposition of a Poiseuille-like input function on one end and a do-nothing boundary condition on the other. The maximization of the vorticity is addressed by the -norm of the curl and the det-grad measure of the fluid. We impose a Tikhonov regularization in the form of a perimeter functional and a volume constraint to address the possibility of topological change. Having been able to establish the existence of an optimal shape, the first order necessary condition was formulated by utilizing the so-called rearrangement method. Finally, numerical examples are presented by utilizing a finite element method on the governing states, and a gradient descent method for the deformation of the domain. On the said gradient descent method, we use two approaches to address the volume constraint: one is by utilizing the augmented Lagrangian method; and the other one is by utilizing a class of divergence-free deformation fields.

Key words and phrases:

Shape optimization, Stokes equations, augmented Lagrangian, Rearrangment method.1991 Mathematics Subject Classification:

Primary: 49Q10,76D55, 35Q93; Secondary: 76U99John Sebastian H. Simon∗

Graduate School of Natural Science and Technology

Kanazawa University

Kanazawa 920-1192, Japan

Hirofumi Notsu

Faculty of Mathematics and Physics

Kanazawa University

Kanazawa 920-1192, Japan

Introduction

The study of fluid dynamics is among the most active areas in mathematics, physics, engineering, and most recently in information theory [13]. An interesting area in this field is the study of turbulent flows and on controlling the emergence of such phenomena [7, 30, 10].

Even though turbulence represents chaos, harnessing it in a controlled manner can be useful, for instance, in optimal mixing problems. Among these studies is [8] where an optimal stirring strategy to maximize mixing is studied, while in [24] the authors studied the best type of input functions that will provide a better mixing in the fluid.

Recently, Goto, K. et.al.[13] utilized the generation of vortices in the fluid for information processing tasks. In the said literature, the authors found out that the length of the twin-vortex affects the memory capacity in the context of physical reservoir computing.

For these reasons, the current paper is dedicated to studying a shape optimization problem intended to maximize the turbulence of a flow governed by the two-dimensional Stokes equations. Here, the shape of an obstacle submerged in a fluid – that flows through a channel – is determined to maximize vorticity. Furthermore, the vorticity is quantified in two ways: one is by the curl of the velocity of the fluid; and the other is by rewarding the unfolding of complex eigenvalues of the gradient tensor of the velocity field.

To be precise, our goal is to study the following shape optimization problem

| (1) |

where is a hold-all domain which is assumed to be a fixed bounded connected domain in , is an open bounded domain, and are vortex and perimeter functionals given by

is such that

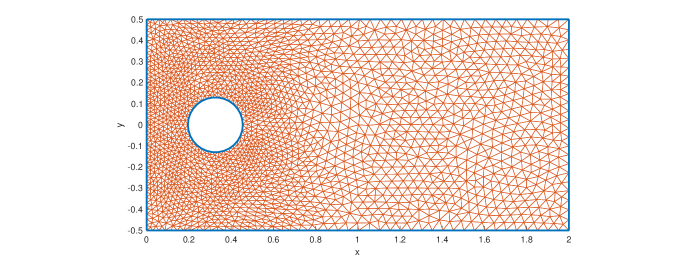

, and and are positive constants. Here, is the velocity field governed by a fluid flowing through a channel with an obstacle (see Figure 1 for reference). The flow of the fluid is reinforced by a divergence-free input function on the left end of the channel, which is an inflow boundary denoted by , whilst an outflow boundary condition is imposed to the fluid on the right end of the channel denoted by . The boundary is the free surface and is the boundary of the submerged obstacle in the fluid. The remaining boundaries of the channel are wall boundaries denoted by , upon which – together with the obstacle boundary – a no-slip boundary condition is imposed on the fluid. We refer the reader to [11, 15, 21, 25, 33] for shape optimization problems involving fluids among others.

We also point out that usually, the curl is enough to quantify the vorticity of the fluid. However, as pointed out by Kasumba, K. and Kunisch, K. in [23], the magnitude of such quantity may still be high on laminar flows. As such, on the same literature the authors proposed the second term in the vortex functional for the quantification of rotation of fluids. The impetus is that the rotational cores of fluids mostly occur near regions where the eigenvalues of are complex [4, 19, 22], and that the eigenvalues are complex when .

Convention: When we talk about a domain , we always consider it having the boundary with for , and that .

One of the challenges in this problem is the non-convexity of the functional , which leads into the possible non-existence of solutions for the shape optimization problem. Fortunately, we will be able to show later on that the functional is continuous with respect to domain variations. Furthermore, the Tikhonov regularization in the form of the perimeter functional is taken into consideration to circumvent the issue of possible topological changes of the domain.

Meanwhile, for the equality constraint , we note that the use of an augmented Lagrangian generates smoother solutions as compared to the common Lagrangian. This is due to the regularizing effect of the quadratic augmentation. As a further consequence, this quadratic term also acts as a penalizing term for the violation of the equality constraint. Aside from the augmented Lagrangian, we also propose an analogue of the -gradient method that satisfies the incompressibility condition to preserve the volume on each iterate of the gradient-descent method.

This paper is organized as follows: in the next section, the system that governs the fluid and the functional spaces to be used for the analysis will be introduced. The variational formulation of the governing system and the existence of its solution will also be shown in the next section. Section 2 is dedicated to the analysis of the existence of an optimal shape. Here, we employ the -topology of characteristic functions for convergence of deformed domains, to ensure the volume preservation.

We shall derive the necessary condition for the optimization problem in Section 3. This is done by investigating the sensitivity of the objective functional with respect to shape variations. In particular, the shape variations are induced by the velocity method [34], and the shape sensitivity is analyzed using the rearrangement method which was formalized by Ito, K., Kunisch, K., and Peichl, G. in [20]. In Section 4, we provide numerical algorithms through the help of the necessary conditions formulated in Section 3, and provide some illustrations of how these algorithms are implemented. To objectively observe the effects of the final shapes to fluid vortices, we look at the generation of twin-vortices in a dynamic nonlinear fluid flow. Finally, we draw conclusions and possible problems on the last section.

1. Preliminaries

1.1. Governing equations and necessary functional spaces

Let be open, bounded and of class , the motion of the fluid is described by its velocity and pressure which satisfies the stationary Stokes equations given by:

| (2) |

where is a constant, is an external force, is a divergence-free input function acting on the boundary , is the outward unit normal vector on , and is the -directional derivative on . The condition on corresponds to no-slip boundary condition, while the Neumann condition on is the so-called do-nothing boundary condition.

The analysis will take place in the Sobolev spaces for , and . Note that if , then and . For any domain , we define

We denote by the closure of with respect to the space .

We also consider the following bilinear forms and defined by

Furthermore, for any measurable set () we shall denote as the , or inner product.

1.2. Weak formulation and existence of solutions

Let satisfy the following properties:

| (3) |

and . The existence of the function that satisfies (3), can be easily established by utilizing for instance [12, Lemma 2.2].

By letting , we consider the variational form of the state equation (2) given by: For a given domain , find that satisfies, for any , the following equations

| (4) |

Any pair that solves the variational equation (4) is said to be a weak solution to the Stokes equation (2). The existence of the solution , is summarized below.

Theorem 1.1.

The proof of the theorem above can be done by utilizing the fact that the operator is coercive, satisfies the inf-sup condition [12], and that the right hand side of the first equation of (4) can be seen as an action of an element of .

Remark 1.

(i) The energy estimate can be extended to the hold-all domain , i.e.,

where is dependent on but not on . (ii) As expected, a more regular domain yields a more regular solution. In particular, if is of class for then the weak solution to (4) satisfies , and as a consequence of the Rellich-Kondrachov embedding theorem [9, Chapter 5, Theorem 6] the solution is in . Furthermore, if we instead assume that the outer boundary covers a convex polygonal domain while the inner boundary is of class , the same regularity of the solution is obtained when .

2. Existence of optimal shapes

2.1. Cone property and the set of admissible domains

We begin by assuming, without loss of generality, that . We define the set of admissible domains as a subset of the collection of domains inside the hold-all domain that possesses the cone property. Furthermore, we define the topology on the said admissible set by the convergence of the corresponding characteristic function of each domain in the -topology. As highlighted by Henrot and Privat in [18] and is rigorously discussed in [6] and [17], this approach helps in the preservation of volume which is among the goals in our exposition. Several authors utilized parametrizing the free-boundary (c.f. [31, 32] and the references therein) to define the set of admissible domains, however free-boundary parametrization might lead to generating domains with varying volumes.

We shall adapt the definition of the cone property as in [3]. In what follows, and denote the inner product and the norm in , respectively.

Definition 2.1.

Let , , , and such that .

(i) The cone of angle , height and axis is defined by

(ii) A set is said to satisfy the cone property if and only if for all , there exists , such that for all we have .

From this definition, the set of admissible domains as

This set of admissible domains has been established to be non-empty (see the proof of Proposition 4.1.1 in [17]), which exempts us from the futility of the analyses we will be discussing.

A sequence is said to converge to if

where the function for a set refers to the characteristic function defined by

Remark 2.

As explained by Henrot, A., et.al., [17, Proposition 2.2.1], the weak∗ convergence in implies that the convergence also holds in the space and thus is almost everywhere a characteristic function.

We shall denote the collection of characteristic functions of elements of as , i.e., , and whenever we mention we take into account the weak∗ topology in . We refer the reader to [6, Chapter 5] and [17, Chapter 2 Section 3] for a more detailed discussion on the topology of characteristic functions of finite measurable domains.

The compactness of follows from the fact that it is closed and relatively compact – as defined by Chenais, D. [3] – with respect to the topology on . One can also read upon the proof in [17, Proposition 2.4.10]. We shall not discuss the proof of such properties, nevertheless they are summarized on the lemma below.

Lemma 2.2.

The set is compact with respect to the topology on .

Another important implication of the cone property is the existence of a uniform extension operator.

Lemma 2.3 (cf. [3]).

Let and , there exists such that for all , there exists

which is linear and continuous such that .

These result will be instrumental for proving some vital properties, for example when we show that the domain-to-state map is continuous.

Remark 3.

The set of admissible domains can be identified as a collection of Lipschitzian domains. Since we only consider the hold-all domain to possess a bounded boundary we refer the reader to [17, Theorem 2.4.7] for the proof of such property.

2.2. Well-posedness of the Optimization Problem

Before showing that the optimal shape indeed exists, we take note that from Theorem 1.1 the following map is well-posed:

This implies that we can write the objective functional in the manner that it solely depends on the domain , i.e.,

where However, the mentioned well-posedness of the domain-to-state map is insufficient to prove the existence of the minimizing domain. In fact, we need the continuity of the map .

Proposition 1.

Let be a sequence that converges to . Suppose that for each , is the weak solution of the Stokes equations on the respective domain; then the extensions coverges to a state , such that is a solution to (4).

Proof.

From the uniform extension property, there exist such that

Furthermore, from Theorem 1.1

This implies uniform boundedness of in . Hence, by Rellich–Kondrachov’s theorem, there exists a subsequence of , which shall also be denoted as , and an element such that the following properties hold:

| (6) |

As usual in proving the existence of solution for minimization problems, the arguments will be based on the lower semicontinuity, and the existence of a minimizing sequence based on the boundedness below of the objective functional.

Note that the objective functional can be dissected into three different integrals, namely , , and . Thus, to establish that is bounded below, we can show that and are uniformly bounded. This implies that, since the boundary is strictly inside the bounded hold-all domain , for some constant .

Note that can be estimated by the norm of the state , i.e.,

Meanwhile, since for any , we get the following estimate,

where Young’s inequality has been employed for the last inequality.

From Proposition 1, we have established the continuity of , which also implies the continuity of the map . Thus, from the recently established estimates for and with respect to , we can infer that the respective functionals are continuous (and hence upper semicontinuous) with respect to the elements of . Furthermore, the functionals are uniformly bounded, since for each ,

Given all these facts, we can now show the existence of an optimal shape rendering our problem well-posed.

Theorem 2.4.

Let , , and satisfy (3). Then, there exists such that

Proof.

From the fact that is bounded below, there exists a sequence such that

3. Shape Sensitivity Analysis

In this section, given the previously established existence of the optimal shape, we shall discuss the necessary condition for the optimization problem. This is done by investigating the sensitivity of the objective functional with respect to shape variations. We start this section by introducing the velocity method, where we consider domain variations generated by a given velocity.

3.1. Identity perturbation

In what follows, we consider a family of autonomous deformation fields belonging to . An element generates an identity perturbation operator defined by

| (9) |

With this operator, a domain is perturbed so that for some we have a family of perturbed domains . Here, is chosen so that , where the following statement shows that is indeed dependent to the velocity field .

Lemma 3.1 (cf. [2, 14]).

Let and be the generated transformation by means of (9) and denote . Then, we have the following:

-

(i)

;

-

(ii)

there exists independent of and such that

We further mention that by the definition of , i.e., on , , and , then these boundaries are part of the perturbed domains . To be precise, we have

Additionally, a domain that has at most regularity preserves its said regularity with this transformation, this means that for , has regularity given that the initial domain is a domain. Lastly, we note that due to Remark 3.

Before we move further in this exposition, let us look at some vital properties of .

Lemma 3.2 (cf [6, 34]).

Let , then for sufficiently small , the map defined in (9) satisfies the following properties:

where .

Let us also recall Hadamard’s identity which will be indespensible for the discussion of the necessary conditions.

Lemma 3.3.

Let and suppose that , then

Proof.

See Theorem 5.2.2 and Proposition 5.4.4 of [17]. ∎

3.2. Rearrangement Method

In this part, we determine the shape derivative of the objective functional with respect to the variations generated by the transformation . This approach gets rid of the tedious process of solving first the shape derivative of the state solutions, then solving the shape derivative of the objective functional.

To start with, we consider a Hilbert space and an operator

where the equation corresponds to a variational problem in

Suppose that the free-boundary is denoted by , the said method deals with the shape optimization

subject to

| (10) |

We define the Eulerian derivative of at in the direction by

where solves the equation in . If exists for all and that defines a bounded linear functional on then we say that is shape differentiable at .

The so-called rearrangement method is given as below:

Lemma 3.4 (cf [20]).

Suppose and that the following assumptions hold:

-

(A1)

There exists an operator such that in is equivalent to

(11) with for all

-

(A2)

Let . Then satisfies

for all

-

(A3)

Let be the unique solution of (10). Then for any the solution of the following linearized equation exists:

- (A4)

Let be the solution of (10), and suppose that the adjoint equation, for all

| (12) |

has a unique solution . Then the Eulerian derivative of at in the direction exists and is given by

| (13) |

where is the mean curvature of .

Before we go further, we note that in usual practice the element is solved using the composition , and utilizes the function space parametrization (see [6, Chapter 10 Section 2.2] for example, for further details). Furthermore, assumption (A3) is originally written as the Hölder continuity of solutions of (11) with respect to the time parameter . Fortunately, Ito, K., et.al.[20], have shown that assumption (A3) implies the continuity. We cite the said result in the following lemma.

Lemma 3.5.

We applying the rearrangement method to the velocity-pressure operator defined by

where .

According to Theorem 1.1, there exists a unique such that for any

| (14) |

Notation.

Moving forward we shall use the following notations , .

Our goal is to characterize, and of course show the existence of the Eulerian derivative of the objective functional

| (15) |

where , and is the first component of the solution of (14).

From the deformation field , we let be the solution of the equation

| (16) |

By perturbing the equation (16) back to the reference domain we get the operator defined by

| (17) |

where and – by denoting the row of , the component of a vector , and by using Einstein convention over – the bilinear forms are defined as follows

Using similar arguments as in [20], it can be easily shown that and satisfy (A1) and (A4).

Furthermore, the Fréchet derivative of the velocity-pressure operator at a point can be easily obtained as

for all . Due to the linearity, we further infer that

for any . From the coercivity of , and because satisfies the inf-sup condition, then for any the variational problem

is well-posed. These imply that the operators and satisfy assumptions (A2) and (A3).

We now characterize the Eulerian derivative of by utilizing Lemma 3.4.

Theorem 3.6.

Suppose that is a domain that is of class , and let be the solution to (14). Then, the Eulerian derivative of exists and is given by

where is the unit tangential vector on , , solves the adjoint equation

| (18) |

for any ,

and the curl of a scalar valued function is defined by

Proof of Theorem 3.6 From the symmetry of the operator , the unique existence of the solution to the adjoint equation (18) can be established easily using the same arguments as in (4) – this of course uses the fact that , and hence the well-definedness of the right-hand side of (18). Thus, the existence of the Eulerian derivative of is assured.

We now characterize the derivative . We begin by solving for the expression . By pushing toward the perturbed domain and by using Lemma 3.3 yield the following

where , , , and , which were generated by using the identity for [34].

Using Green’s identities, the divergence-free property of the variables and and Green’s curl identity [26, Theorem 3.29], we obtain the following derivative

Finally, we now use (13) to determine the derivative to get

Before we write the final form of the shape derivative, we note that

and

Hence, we have the following form

Therefore, from the assumed regularity of the domain, and from the divergence theorem

Remark 5.

The resulting form of the shape derivative of coincides with the Zolesio–Hadamard Structure Theorem [6, Corollary 9.3.1], i.e., we were able to write

In this case, we shall call the shape gradient of which will be useful in the numerical implementations that we shall illustrate in the proceeding sections.

4. Numerical Realization

In this section we shall use the recently formulated necessary condition to solve the minimization problem numerically. However, the said condition does not take into account the volume constraint. With this reason, we shall resort to two approaches, one is the augmented Lagrangian method, which will be based on the implementation of Dapogny, C., et.al.[5], and the other one is by generating divergence-free velocity fields.

4.1. Augmented Lagrangian Method

By writing the shape optimization problem (1) as the equality constrained optimization

we consider minimizing the augmented Lagrangian

In this definition, corresponds to the Lagrange multiplier with respect to the equality constraint, while is a penalty parameter. For a more detailed discussion on augmented Lagrangian methods one can refer to [28, Section 17.3], and to [5] for implementation to shape optimization.

We can solve the shape gradient of at a given domain - which we shall denote as - by utilizing the shape gradient of and Lemma 3.3. This yields

where the term is evaluated as

4.2. Gradient Descent and Normal Vector Regularization

Similarly with Remark 5, one can write the shape derivative of as

This gives us an intuitive form of the velocity field as either (or for the augmented Lagrangian), which implies that

However, such choice of may lead to unwanted behavior of the deformed domain especially for iterative schemes. To circumvent this issue, several authors utilized extensions of the velocity field in the domain . In [5], the authors considered an elasticity extension with the tangential gradient for solving the field. Rabago et.al. [31] on the other hand cosidered a Neumann-type extension for solving the mentioned velocity field. In our context we shall consider two approaches based on gradient method [1]. In particular, for sufficiently small parameter , and we shall use the following gradient method: Solve for ( if ) that satisfies the variational equation

| (20) |

for all ( if ). Here is if , and if .

Note that when , (20) reduces to the usual method, while the method with is motivated by the fact that the solution satisfies the divergence-free property, and thus the preservation of volume.

Since we consider domains which are -regular, we can solve the mean curvature as [17, Proposition 5.4.8], where is any extension of in . Having this in mind, we solve for by means of the equation

| (21) |

for sufficiently small

4.3. Deformation Algorithm

Given an initial domain , we shall solve the minimization problem iteratively. In particular, we shall update an iterate by the identity perturbation operator, i.e., . Here, is solved using (20) but on instead of . On the other hand, we shall solve for using a line-search method starting at an initial guess

| (22) |

where is a fixed, sufficiently small parameter. This step size is updated whenever (or ) or if the deformed domain exhibits mesh degeneracy (see [5, Section 3.4] for more details).

As for the augmented Lagrangian , the multipliers and are iteratively updated as well. Inspired by Framework 17.3 in [28] for the -update, and by [5, Section 3.3], we use the following rule

| (23) |

where is a given target parameter and is an increase-iteration parameter. One may refer to [5, Section 3.3] for a more detailed rationale on the mentioned updates.

In summary, we have the following algorithm:

4.4. Numerical Examples

Using the algorithms discussed previously, we will now show some numerically implemented examples, which are all run using the programming software FreeFem++ [16] on a Intel Core i7 CPU 3.80 GHz with 64GB RAM.

In all these examples, we shall consider a Poiseuille-like input function given by . Furthermore, we consider the rectangular channel whose corners are , , , and . On the other hand we consider a circular initial shape for the free-boundary that is centered at and with radius (see Figure 2). For simplicity, we shall also consider a fairly viscous fluid, i.e.,

The volume constant is set as the volume of the initial domain , which is refined according to the magnitude of the state solution with the minimum mesh size and a maximum mesh size . All the governing equations – the state equation, adjoint equation, state responsible for the deformation fields, and the smooth approximation of the normal vector – are solved using the UMFPACK option. The tolerance, furthermore, is selected to be

4.4.1. Augmented Lagrangian Method

The implementation of aL-algorithm is divided into two parts. Namely, we consider when and (which we call as the curlaL-problem), and (which we call as the detgradaL-problem).

The values of the constants , , , , and are chosen so as to maintain stability of the method.

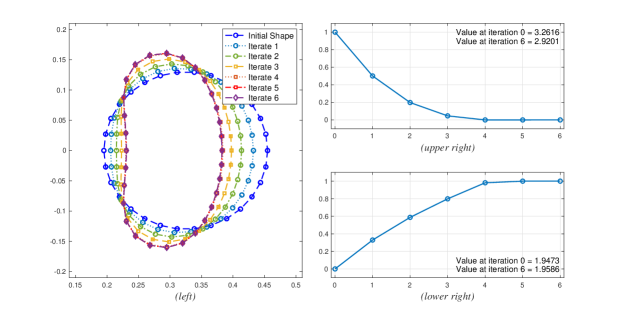

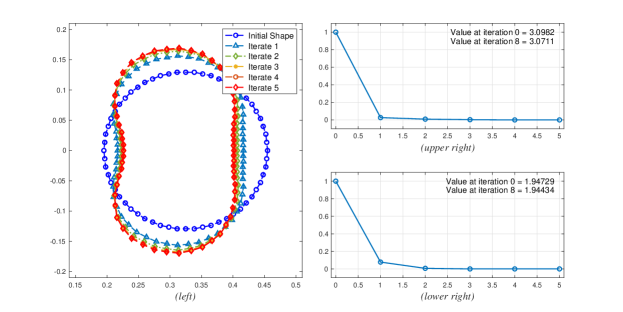

Implementation of curlaL-problem (). For this implementation, we have chosen the numerical parameters shown in Table 1.

| Parameter | Value | Parameter | Value |

|---|---|---|---|

| 6.0 | 20 | ||

| 1.05 | |||

| 10 |

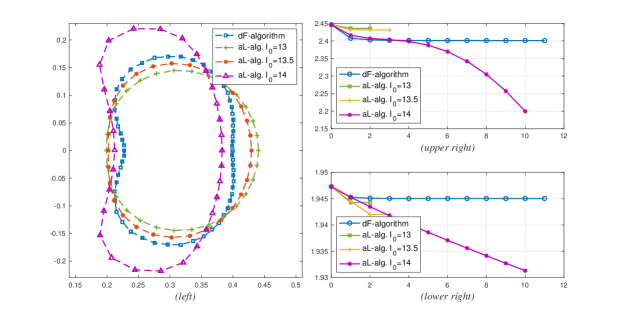

Using these parameters, the evolution of the free-boundary is shown in Figure 3(left) below. We see that the free-boundary evolves into a bean-shaped surface.

As expected, we see that the value of the objective functional decreases on each iteration (see Figure 3(upper right)). In fact, the value of the objective functional decreases by . Lastly, we see from Figure 3(lower right) that the volume increases by .

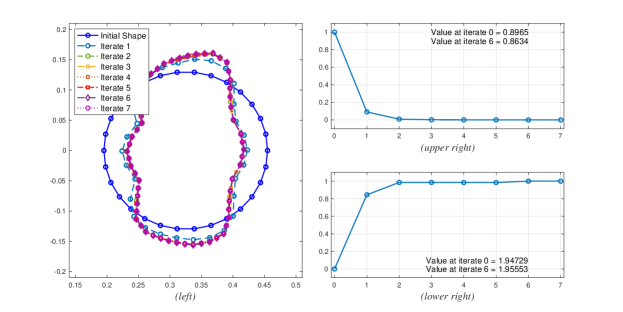

Implementation of detgradaL-problem (). In this particular scenario, we consider the parameter values shown in Table 2.

| Parameter | Value | Parameter | Value |

|---|---|---|---|

| 1.3 | .5 | ||

| 1.05 | |||

| 10 |

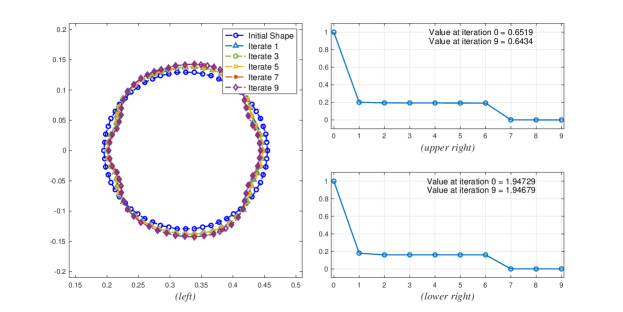

Unlike the curlaL-problem, the shape does not evolve into a bean-shaped obstacle. The evolution acts in a non-symmetrical manner (see Figure 4(left)) due to the fact that the right hand-side of the adjoint equation with the current choice of and is also non-symmetric. Even though this problem runs seven iterations, it can be observed that after two iterations, the shapes are almost identical as shown in Figure 4(left). This phenomena can also be observed on the trend of the decrease of the value of the objective functional, i.e., the values of the functional from the second iteration up to the last iteration differ minimally.

The percentage change from the initial shape to the second iterate-shape is , while from the second iterate to the final shape it is . In general, from the initial shape to the final shape the percentage difference is We also point out that the minute difference of iterate 2 and the final shape may be caused by the fact that the changes are caused by mesh adaptation used in our program.

4.4.2. Divergence-free deformation fields

Unlike the implementation of aL-algorithm, the minimization problem is solved by dF-algorithm in three parts. In particular, we shall illustrate the following modificatoins:

-

curldF-problem with parameters , and ;

-

detgraddF-problem with parameters , and ;

-

mixeddF-problem with parameters .

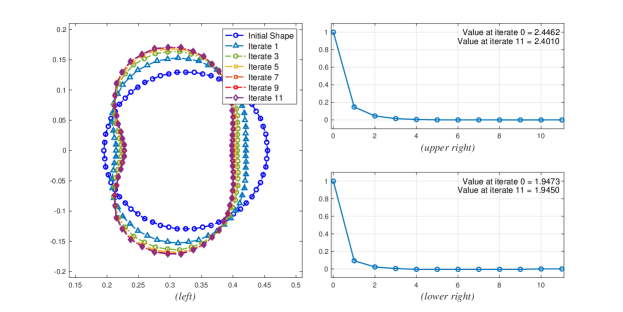

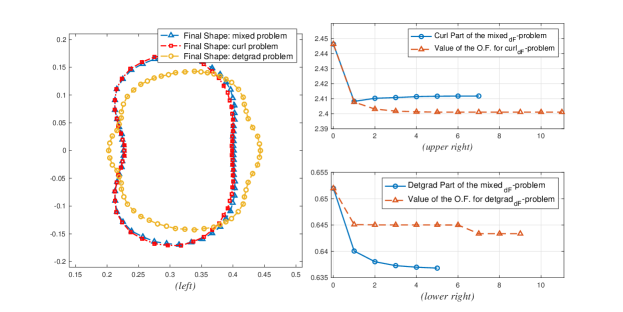

Implementation of curldF-problem (). Note that in using dF-algorithm, we are more relaxed in the choice of the parameters. In particular, we are just concerned with the parameter which we choose as .

As we can see in Figure 5(left) the method converges to a bean shaped boundary, just like the generated shape in curlaL-problem. However, in the current case, the method steers the shape to have the inner domain bounded by lose its convexity on the left side.

One can also infer from Figure 5(upper right) that the relative difference - with respect to the initial shape - of the value of the objective function at the optimal shape is , while of the volume is .

If we compare this algorithm with aL-algorithm with and , we deduce from Figure 6(lower right) that our current method preserves the volume better. We also see from Figure 6(upper right)) that the objective function values using dF-algorithm are lower than the ones from aL-algorithm except from the case , which fails horribly with volume preservation.

We also see in Figure 6(left) what we meant we we said that the inner domain bounded by loses its convexity on the right side, i.e., the curvature of the shapes generated by aL-algorithm are more pronounced compared to our current algorithm.

Remark 6.

(i.) The reason why we started the simulation of aL-algorithm with at is that if we try a smaller value of , the scheme becomes too stringent and will not generate any domain perturbation.

(ii.) One interesting feature in Figure 6 is that even after ten iterations, the objective function and volume trends for the case seem to have not converged yet. In fact, even after 20 iterations, the scheme still finds a descent direction. This highlights an issue with the balance between regularization and stability, which is an interesting venture to delve into if one is keen on studying stability of numerical methods. One may also refer to [27] for some insights on the issue of regularity and stability in general.

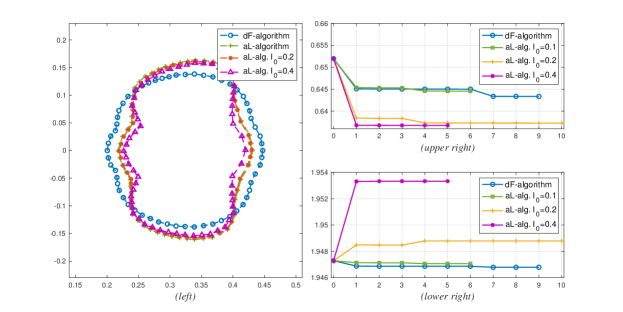

Implementation of detgraddF-problem (). Similar with our previous implementation, we only needed to choose a value for the parameter , which in this case is

As illustrated in Figure 7(left), the final perturbed domain differs minimally from the initial domain. Furthermore, we can see from Figure 8(left) that the deformation is less extensive as compared to the final shapes generated by the aL-algorithm. One can also infer from Figure 7(upper and lower right) that the value of the objective function and of the volume at the final shape differs relatively (w.r.t. the initial shape) by and , respectively. This implies that the volume preservation is significantly satisfied by the dF-algorithm, while maintaining a significant decrease on the objective function.

If we compare the current algorithm to aL-algorithm with the same value of , we see from Figure 8(lower right) that the only value of that can preserve the initial volume better is . We also see from Figure 8(upper right) that since the difference between the final value of the objective function at the final shape using dF-algorithm and aL-algorithm with is very minimal, the aL-algorithm is more preferable. The only drawback though is that aL-algorithm depends on more parameters, hence it is more subject to instabilities.

The implementations of the aL-algorithm with exhibit a significant decrease on the objective functional as compared to the dF-algorithm, however they both violate the volume constraint significantly.

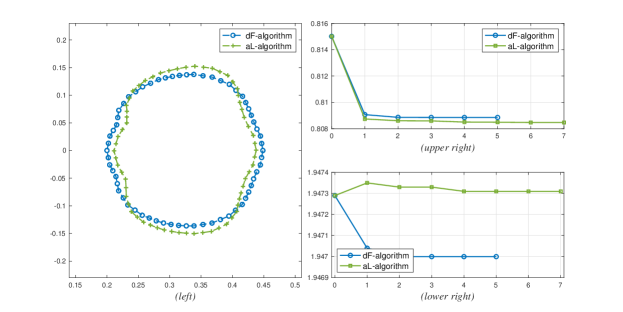

To illustrate another case where the aL-algorithm is more preferable for the minimization of the detgrad functional, Figure 9 shows an implementation where , and for the aL-algorithm.

As can be easily observed in Figure 9(right), aL-algorithm yields better result when it comes to the minimization of the objective functional and preservation of the volume. In particular, the objective functional values are much lower for aL-algorithm, and the volume difference between the initial and final shape are much closer compared to the values generated by dF-algorithm.

Implementation of mixeddF-problem (). In this implementation, we consider several configurations for reasons we shall explain later. In particular, we shall consider the value of parameters as shown in Table 3.

| Configuration | |||

|---|---|---|---|

| 1 | 6.0 | 1.0 | 1.0 |

| 2 | 7.0 | 1.0 | 2.0 |

| 3 | 8.0 | 1.0 | 3.0 |

| 4 | 9.0 | 1.0 | 4.0 |

| 5 | 10.0 | 1.0 | 5.0 |

| 6 | 11.0 | 1.0 | 6.0 |

| 7 | 12.0 | 1.0 | 7.0 |

| 8 | 13.0 | 1.0 | 8.0 |

| 9 | 14.0 | 1.0 | 9.0 |

| 10 | 15.0 | 1.0 | 10.0 |

For the first configuration, Figure 10 shows how the initial shape evolves into a bean shaped boundary similar with that of curldF-problem. Furthermore, the value of the objective functional at the final shape differs relatively from the initial shape by while the volume is preserved with a relative difference of .

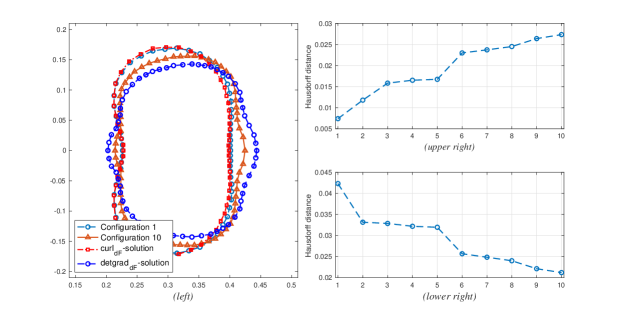

We note that in this configuration the final shape is almost the same with the final shape generated by curldF-problem, as shown in Figure 11(left).

To understand why this phenomena occurs, we note that the objective function in our configurations can be written as

where is the configuration number. We shall call the first bracket as the curl part of the mixeddf-problem while the other one the detgrad part. Evaluating the two parts numerically at the initial shape, we can see in Figure 11(right) that the value of the curl part is higher than the detgrad part, with the respective values and . This results to having the optimization problem prioritize minimizing the curl part of the mixeddF-problem, and this results into having the similar shape as that of the curldF-problem. Intriguingly though, when compared to the values of the objective functional for the curldF-problem and the detgraddF-problem, the values of the curl part on each iterate is much higher than the objective function for curldF-problem (see Figure 11(upper right)) while the values of the detgrad part is lower than the objective function for detgraddF-problem (see Figure 11(lower right)).

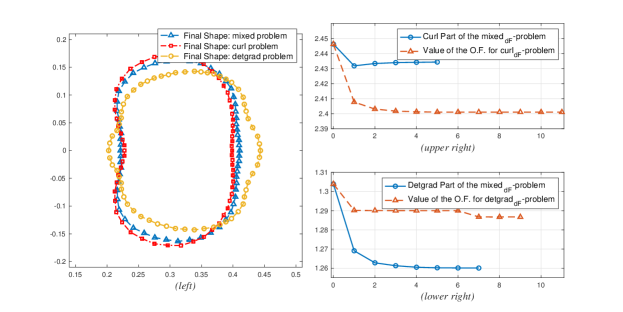

To investigate the influence of the curldF-problem and the detgraddF-problem to the solution obtained by mixeddF-problem even further, we implement configuration 2. In this example, the value of the detgrad part is higher than the one considered on the previous configuration.

As can be observed on Figure 12(left) the final shape for the mixeddF-problem is now more distinct from that of the curldF-problem. In particular, we now see a bump emerging on the right side of the final shape for mixeddF-problem. The emergence of such bump may be attributed to its presence on the final shape of detgraddF-problem. In fact, the value of the detgrad part is now higher compared to the previous configuration (see Figure 12(upper right) and (lower right)).

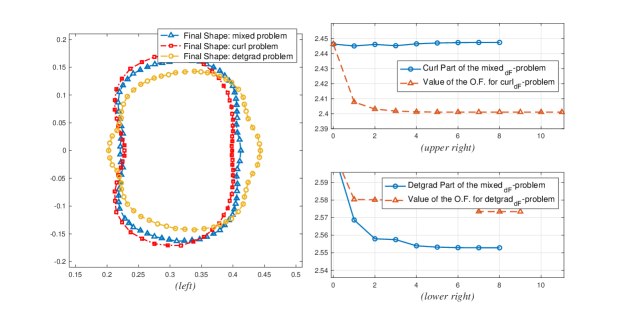

Skipping to the fourth configuration, we see that value of the detgrad part is higher than the curl part. From Figure 13(left), the final shape of the mixeddf-problem is now greatly influenced by the shape of detgraddf-problem. In particular, it can be observed that the right side of the shape generated by mixeddf-problem converges to the shape induced by the detgraddf-problem. However, we can still observe the effect of the shape by the curldf-problem on the left side.

To see the convergence more clearly we utilize Hausdorff distance. Figure 14(upper right) illustrates the increase of the Hausdorff distance between the solutions of the mixeddF-problem and the curldF-problem. Meanwhile, Figure 14(lower right) shows a decreasing trend in the Hausdorff distance between the solutions of the mixeddF-problem and the detgraddF-problem.

4.5. Effects of the Shape Solutions to Generation of Twin-Vortex

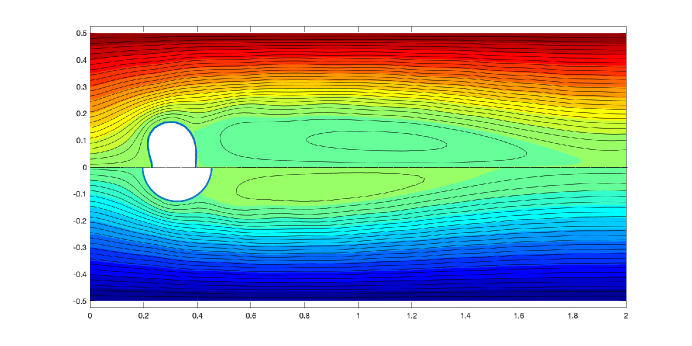

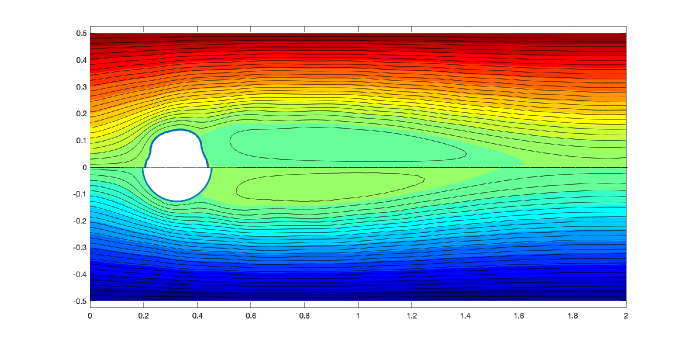

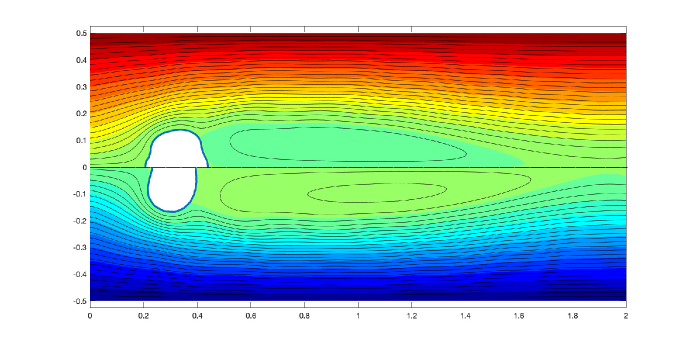

We conclude this section by briefly illustrating the effects of the final shapes to twin-vortices induced by a time-dependent Navier–Stokes equations right before the shedding of Karman vortex, and is solved using a stabilized Lagrange–Galerkin method [29]. Since similar behaviors can be observed if we use aL-algorithm or dF-algorithm, we only illustrate the cases using dF-algorithm.

Figure 15 shows the comparison of the twin-vortices using the initial domain and the domain obtained from curldF-problem. The figure shows that the solution to the curldF-problem generates a longer vortex compared to that of the initial domain.

Similarly, the solution of the detgraddF-problem generates a longer vortex as compared to the initial domain as shown in Figure 15.

Lastly, Figure 17 shows that the vortex generated using the final shape of curldf-problem is longer than that of the detgraddf-problem.

Remark 7.

As mentioned before, the generation of vortices gives a possible application of our shape optimization problem to a branch of machine learning known as physical reservoir computing. According to Goto, K., et.al.[13], the length or the long diameter of the twin-vortices has a correlation with the memory capacity - as defined in the aforementioned reference - of the physical reservoir computer. With our previous observations, we can naively conjecture that the shapes generated by the implementations above will cause a better memory capacity for the physical reservoir computer. However, since these are just visual observations, we need more experiments to verify that these shapes indeed generate a good computational ability.

5. Conclusion

We presented a minimization problem that is intended to maximize the vorticity of the fluid governed by the Stokes equations. Furthermore, we considered a Tikhonov-type regularization - in the form of the perimeter functional - and a volume constraint. The shape sensitivity analysis is carried out by utilizing the rearrangement method which gave us the necessary conditions in the form of the Zolesio–Hadamard Structure. We then implemented the necessary conditions in two ways, one is by utilizing the augmented Lagrangian, and by utilizing a new method for solving the deformation field that possesses the divergence-free condition. From these methods, we showed some numerical examples. Lastly, we illustrated how the shape solutions affect the flow, and we briefly mentioned a possible application of this problem in the field of physical reservoir computers.

We end this note by pointing out that the investigation in the stability of the numerical approaches with respect to the parameters were only scratched on the surface and can be explored with more rigor. Furthermore, the visual observations on the emergence of the twin-vortex are not enough to give a good conclusion with regards to the memory capacity of the physical reservoir computer. With this, one can investigate even further and try some more tests as carried out in [13].

References

- [1] H. Azegami. Solution of shape optimization problem and its application to product design. In Hiromichi Itou, Masato Kimura, Vladimír Chalupecký, Kohji Ohtsuka, Daisuke Tagami, and Akira Takada, editors, Mathematical Analysis of Continuum Mechanics and Industrial Applications, pages 83–98, Singapore, 2017. Springer Singapore.

- [2] J. Bacani and G. Peichl. On the first-order shape derivative of the Kohn–Vogelius cost functional of the Bernoulli problem. Abstract and Applied Analysis, 2013:1–19, 2013.

- [3] D. Chenais. On the existence of a solution in a domain identification problem. Journal of Mathematical Analysis and Applications, 52(2):189 – 219, 1975.

- [4] M. S. Chong, A. E. Perry, and B. J. Cantwell. A general classification of three‐dimensional flow fields. Physics of Fluids A: Fluid Dynamics, 2(5):765–777, 1990.

- [5] C. Dapogny, P. Frey, F. Omnès, and Y. Privat. Geometrical shape optimization in fluid mechanics using freefem++. Structural and Multidisciplinary Optimization, 58(6):2761–2788, 2018.

- [6] M. Delfour and J.-P. Zolesio. Shapes and Geometries: Metrics, Analysis, Differential Calculus, and Optimization. Society for Industrial and Applied Mathematics, 3600 Market Street, 6th Floor, Philadelphia, PA 19104-2688 USA, 2 edition, 2011.

- [7] M. Desai and K. Ito. Optimal controls of Navier–Stokes equations. SIAM Journal on Control and Optimization, 32(5):1428–1446, 1994.

- [8] M. F. Eggl and Peter J. Schmid. Mixing enhancement in binary fluids using optimised stirring strategies. Journal of Fluid Mechanics, 899:A24, 2020.

- [9] L.C. Evans. Partial Differential Equations. Graduate studies in mathematics. American Mathematical Society, 1998.

- [10] T. L. B. Flinois and T. Colonius. Optimal control of circular cylinder wakes using long control horizons. Physics of Fluids, 27(8):087105, 2015.

- [11] Z. M. Gao, Y. C. Ma, and H. W. Zhuang. Shape optimization for navier–stokes flow. Inverse Problems in Science and Engineering, 16(5):583–616, 2008.

- [12] V. Girault and P.-A. Raviart. Finite Element Methods for Navier-Stokes Equations: Theory and Algorithms, volume 5 of Springer Series in Computational Mathematics. Springer, Berlin, Heidelberg, 1986.

- [13] K. Goto, K. Nakajima, and H. Notsu. Twin vortex computer in fluid flow. New Journal of Physics, 23(6):063051, jun 2021.

- [14] J. Haslinger, K. Ito, T. Kozubek, K. Kunisch, and G. Peichl. On the shape derivative for problems of Bernoulli type. Interfaces and Free Boundaries, 11(2):231–251, 2006.

- [15] J. Haslinger, J. Málek, and J. Stebel. Shape optimization in problems governed by generalised navier-stokes equations: Existence analysis. Control and cybernetics, 34:283–303, 03 2005.

- [16] F. Hecht. New development in freefem++. J. Numer. Math., 20(3-4):251–265, 2012.

- [17] A. Henrot and M. Pierre. Shape Variation and Optimization: A Geometrical Analysis. EMS Tracts in Mathematics, 28 edition, 2014.

- [18] A. Henrot and Y. Privat. What is the optimal shape of a pipe? Archive for Rational Mechanics and Analysis, 196(1):281–302, 2010.

- [19] J. C. R. Hunt, A. A. Wray, and P. Moin. Eddies, streams, and convergence zones in turbulent flows. In Proc. 1988 Summer Program of Center for Turbulence Research Program, 1998.

- [20] Ito, K., Kunisch, K., and Peichl, G. H. Variational approach to shape derivatives. ESAIM: COCV, 14(3):517–539, 2008.

- [21] Y. Iwata, H. Azegami, T. Aoyama, and E. Katamine. Numerical solution to shape optimization problems for non-stationary navier-stokes problems. JSIAM Letters, 2:37–40, 2010.

- [22] Jinhee Jeong and Fazle Hussain. On the identification of a vortex. Journal of Fluid Mechanics, 285:69–94, 1995.

- [23] H. Kasumba and K. Kunisch. Vortex control in channel flows using translational invariant cost functionals. Computational Optimization and Applications, 52(3):691–717, 2012.

- [24] G. Mather, I. Mezić, S. Grivopoulos, U. Vaidya, and L. Petzold. Optimal control of mixing in Stokes fluid flows. Journal of Fluid Mechanics, 580:261–281, 2007.

- [25] B. Mohammadi and O. Pironneau. Applied Shape Optimization for Fluids. NUMERICAL MATHEMATICS AND SCIENTIFIC COMPUTATION. Oxford University Press, 2010.

- [26] P. Monk. Finite Element Methods for Maxwell’s Equations. Oxford University Press, 2003.

- [27] M. T. Nair, M. Hegland, and R. S. Anderssen. The trade-off between regularity and stability in tikhonov regularization. Mathematics of Computation, 66(217):193–206, 1997.

- [28] J. Nocedal and S. Wright. Numerical Optimization, volume 2 of Springer Series in Operations Research and Financial Engineering. Springer-Verlag New York, 2006.

- [29] Notsu, H. and Tabata, M. Error estimates of a stabilized lagrange-galerkin scheme for the navier-stokes equations. ESAIM: M2AN, 50(2):361–380, 2016.

- [30] M. Pošta and T. Roubíček. Optimal control of Navier–Stokes equations by Oseen approximation. Computers & Mathematics with Applications, 53(3):569–581, 2007. Recent Advances in the Mathematical Analysis of Non-Linear Phenomena.

- [31] J. F. T. Rabago and H. Azegami. An improved shape optimization formulation of the bernoulli problem by tracking the neumann data. Journal of Engineering Mathematics, 117(1):1–29, 2019.

- [32] J. F. T. Rabago and H. Azegami. A second-order shape optimization algorithm for solving the exterior Bernoulli free boundary problem using a new boundary cost functional. Computational Optimization and Applications, 2020.

- [33] S. Schmidt and V. Schulz. Shape derivatives for general objective functions and the incompressible navier-stokes equations. Control and Cybernetics, 39(3):677–713, 2010.

- [34] J. Sokolowski and J.-P. Zolesio. Introduction to Shape Optimization: Shape Sensitivity Analysis. Springer Series in Computational Mathematics. Springer-Verlag Berlin Heidelberg, 1 edition, 1992.