A SIMPLE DERIVATION OF NEWTON-COTES FORMULAS WITH REALISTIC ERRORS

Abstract

In order to approximate the integral $I(f)=\int_a^b f(x)\,dx$, where $f$ is a sufficiently smooth function, models for quadrature rules are developed using a given panel of equally spaced points. These models arise from the method of undetermined coefficients, using a Newton basis for polynomials. Although part of the final product is algebraically equivalent to the well-known closed Newton-Cotes rules, the resulting algorithms are not the classical ones.

In the basic model the simplest quadrature rule (the so-called left rectangle rule) is adopted and a correction is constructed so that the final rule is interpolatory. This correction, which depends on the divided differences of the data, may be considered a realistic correction for the left rectangle rule, in the sense that it should be close in magnitude to the true error of that rule and should also have the correct sign. The analysis of the theoretical error of the corrected rule, together with some classical properties of divided differences, suggests the inclusion of one or two new points in the given panel: one point when the panel has an even number of points, and two points otherwise. In both cases this approach enables the computation of a realistic error for the extended (or corrected) rule. The resulting output contains reliable information on the quality of both the left rectangle and the corrected approximations, provided certain conditions involving ratios of derivatives of the function are fulfilled. These simple rules are easily converted into composite ones. Numerical examples show that these quadrature rules are a useful computational alternative to the classical Newton-Cotes formulas.

Keywords: Divided differences; undetermined coefficients method; realistic error; Newton-Cotes rules.

AMS Subject Classification: 65D30, 65D32, 65-05, 41A55.

1 Introduction

The first two quadrature rules taught in any numerical analysis course belong to the family of closed Newton-Cotes rules. They are used to approximate the integral $I(f)=\int_a^b f(x)\,dx$ of a sufficiently smooth function $f$ on a finite interval $[a,b]$. These basic rules are the trapezoidal rule and Simpson’s rule. The trapezoidal rule is $T(f)=\frac{h}{2}\,[f(a)+f(b)]$, with $h=b-a$, and its theoretical error is

$$E_T(f)=I(f)-T(f)=-\frac{h^{3}}{12}\,f''(\xi), \qquad (1)$$

while Simpson’s rule is $S(f)=\frac{h}{3}\,[f(a)+4f(a+h)+f(b)]$, with $h=(b-a)/2$, and its error is

$$E_S(f)=I(f)-S(f)=-\frac{h^{5}}{90}\,f^{(4)}(\xi). \qquad (2)$$

The error formulas (1) and (2) are of existential type. Indeed, assuming that $f''$ and $f^{(4)}$ are (respectively) continuous, expressions (1) and (2) state that there exists a point $\xi$, somewhere in the interval $(a,b)$, for which the respective error has the displayed form. From a computational point of view these error expressions are of rather limited use, since in general it is quite difficult or even impossible to obtain expressions for the derivatives $f''$ or $f^{(4)}$, and consequently bounds for the error. Even when such bounds can be obtained, they generally overestimate the true error of the rule.
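A short Python sketch (our own illustration; $f=\exp$ on $[0,0.1]$ is an arbitrary choice) makes the existential character of (1) and (2) concrete. Since $f''=f^{(4)}=\exp$ is increasing, the formulas only confine each true error to the interval obtained by taking $\xi=b$ and $\xi=a$.

```python
import math


def trapezoid(f, a, b):
    """Trapezoidal rule T(f) = h/2 [f(a) + f(b)] with h = b - a."""
    h = b - a
    return h / 2 * (f(a) + f(b))


def simpson(f, a, b):
    """Simpson's rule S(f) = h/3 [f(a) + 4 f(a+h) + f(b)] with h = (b - a)/2."""
    h = (b - a) / 2
    return h / 3 * (f(a) + 4 * f(a + h) + f(b))


a, b = 0.0, 0.1
exact = math.exp(b) - math.exp(a)  # integral of exp over [a, b]

# True errors, with the convention E = I - Q.
err_trap = exact - trapezoid(math.exp, a, b)
err_simp = exact - simpson(math.exp, a, b)

# Formula (1): E_T = -h^3/12 f''(xi). Because f'' = exp is increasing,
# the error lies between the values obtained with xi = b and xi = a.
h_t = b - a
trap_bounds = (-h_t**3 / 12 * math.exp(b), -h_t**3 / 12 * math.exp(a))

# Formula (2): E_S = -h^5/90 f''''(xi), with h = (b - a)/2.
h_s = (b - a) / 2
simp_bounds = (-h_s**5 / 90 * math.exp(b), -h_s**5 / 90 * math.exp(a))

print(f"trapezoid: error {err_trap:.6e}, bounds {trap_bounds}")
print(f"Simpson:   error {err_simp:.6e}, bounds {simp_bounds}")
```

The formulas bracket the error but do not give its value; the realistic errors developed below aim at the value itself, sign included.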

Under mild assumptions on the smoothness of the integrand $f$, our aim is to determine certain quadrature rules, say , as well as approximations for their error , using only the information contained in the table of values (the panel) arising from the discretization of the problem. The algorithm to be constructed will produce the numerical value , the correction (or estimated error) , as well as the value of the interpolatory rule

| (3) |

The true error of should be much smaller than the estimated error of , that is,

| (4) |

for a sufficiently small step $h$. In such a case we say that is a realistic correction for . Unlike the usual approach, where one builds a quadrature formula (like the trapezoidal or Simpson’s rule) that is supposed to be a reasonable approximation to the exact value of the integral, here we do not care whether the approximation is eventually bad, provided that the correction has been well modeled. In that case the value will be a good approximation to the exact value of the integral . Besides the values , and , we are also interested in computing a good estimate of the true error of , in the following sense. If the true error is expressed in standard decimal form as , the approximation is said to be realistic if its decimal form has the same sign as and its first mantissa digit differs by at most one unit, that is, (the dots represent any decimal digit). Finally, the algorithm to be used will produce the values .
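The "realistic" criterion can be made concrete with a small Python helper. This is our own reading of the definition: we assume that both numbers must also share the decimal exponent $p$ in the normalized form $\pm 0.d_1d_2\ldots\times 10^{p}$ ($d_1\neq 0$); the function names are hypothetical.

```python
def leading_digit_and_exponent(x):
    """Write x = +/- 0.d1 d2 ... x 10**p (d1 != 0) and return (sign, d1, p)."""
    if x == 0:
        raise ValueError("zero has no normalized decimal form")
    # Scientific notation d1.d2... e q corresponds to 0.d1 d2... x 10**(q + 1).
    mantissa, exp10 = f"{abs(x):.12e}".split("e")
    sign = 1 if x > 0 else -1
    return sign, int(mantissa[0]), int(exp10) + 1


def is_realistic(estimate, true_error):
    """Same sign, same exponent p (our assumption) and first mantissa
    digits differing by at most one unit."""
    s1, d1, p1 = leading_digit_and_exponent(true_error)
    s2, d2, p2 = leading_digit_and_exponent(estimate)
    return s1 == s2 and p1 == p2 and abs(d1 - d2) <= 1


print(is_realistic(-0.31e-5, -0.2345e-5))  # True
print(is_realistic(-0.45e-5, -0.2345e-5))  # False: first digits 4 and 2
print(is_realistic(0.23e-5, -0.2345e-5))   # False: wrong sign
```

Formatting with a fixed number of significant digits avoids the rounding pitfalls of extracting the first digit through logarithms and floating-point division.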

In Section 1.1 we present two models for building simple quadrature rules, named model A and model B. Although both models are derived from the same method, in this work we focus mainly on model A. Definitions, notation and background material are presented in Section 2. In Proposition 2.1 the weights of the quadrature rule in model A are obtained by the method of undetermined coefficients, and the theoretical error expressions for the rules are deduced in Proposition 2.4. The main results are discussed in Section 3; in particular, Proposition 3.1 shows that a reliable computation of realistic errors depends on the behavior of a certain function involving ratios between high-order derivatives of the integrand and its first derivative.

Composite rules for model A are presented in Section 4, where numerical examples illustrate how our approach yields realistic error estimates for these rules.

1.1 Two models

In this work we consider a given panel of points , in the interval , whose nodes are equally spaced with step , , where is a sufficiently smooth function on the interval. We consider the following two models:

Model A

Using only the first node of the panel, we construct a quadrature rule and add a correction to it, so that the corrected (or extended) rule is interpolatory on the whole panel,

| (5) |

where denotes the -th divided difference and , , , are weights to be determined.

Note that is simply the so-called left rectangle rule, so can be seen as a correction to that rule.
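As a worked illustration of (5) (our own computation, writing $x_j=x_0+jh$ and $f_j=f(x_j)$), take a two-point panel. The only correction weight is $\int_{x_0}^{x_1}(x-x_0)\,dx=h^2/2$, so the left rectangle rule plus its correction is exactly the trapezoidal rule:

$$
h f_0 + \frac{h^2}{2}\, f[x_0,x_1] = h f_0 + \frac{h}{2}\,(f_1 - f_0) = \frac{h}{2}\,(f_0+f_1).
$$

For a three-point panel the weights are $\int_{x_0}^{x_2}dx=2h$, $\int_{x_0}^{x_2}(x-x_0)\,dx=2h^2$ and $\int_{x_0}^{x_2}(x-x_0)(x-x_1)\,dx=2h^3/3$. With $f[x_0,x_1]=(f_1-f_0)/h$ and $f[x_0,x_1,x_2]=(f_2-2f_1+f_0)/(2h^2)$ one recovers Simpson’s rule:

$$
2h f_0 + 2h^2 f[x_0,x_1] + \frac{2h^3}{3}\, f[x_0,x_1,x_2] = \frac{h}{3}\,(f_0 + 4f_1 + f_2).
$$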

Model B

The rule uses the first points of the panel (and is therefore not interpolatory on the whole panel), and a correction term is added to it so that the corrected (or extended) rule is interpolatory,

| (6) |

Since the interpolating polynomial of the panel is unique, the value computed for using either model is the same, and equals the value obtained by applying the simple closed Newton-Cotes rule for equally spaced points to the data. This means that the extended rules (5) and (6) are both algebraically equivalent to those simple Newton-Cotes rules. However, the algorithms associated with models (5) and (6) are not the classical ones for these rules. In particular, one can show that, for odd, the rules in model B are open Newton-Cotes formulas [3]. Therefore, the extended rule in model B can be seen as a bridge between open and closed Newton-Cotes rules.
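The algebraic equivalence can be checked with exact rational arithmetic. The Python sketch below (helper names are ours; step $h=1$) evaluates the model A rule on each unit data vector $e_i$; the resulting numbers are the weights of the same rule written in terms of the function values, that is, the closed Newton-Cotes weights.

```python
from fractions import Fraction


def newton_integral(k, upper):
    """Exact integral over [0, upper] of t (t-1) ... (t-k+1) (empty product = 1)."""
    coeffs = [Fraction(1)]  # coeffs[i] is the coefficient of t**i
    for j in range(k):  # multiply the current polynomial by (t - j)
        new = [Fraction(0)] * (len(coeffs) + 1)
        for i, c in enumerate(coeffs):
            new[i + 1] += c
            new[i] -= j * c
        coeffs = new
    return sum(c * Fraction(upper) ** (i + 1) / (i + 1) for i, c in enumerate(coeffs))


def divided_differences(xs, ys):
    """Return [f[x0], f[x0,x1], ..., f[x0,...,x_m]] computed in place."""
    coef = list(ys)
    for j in range(1, len(xs)):
        for i in range(len(xs) - 1, j - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - j])
    return coef


def model_a_as_lagrange_weights(n):
    """Apply the n-point model A rule (h = 1) to each unit data vector e_i."""
    xs = [Fraction(j) for j in range(n)]
    a = [newton_integral(k, n - 1) for k in range(n)]
    weights = []
    for i in range(n):
        ys = [Fraction(int(j == i)) for j in range(n)]
        dd = divided_differences(xs, ys)
        weights.append(sum(ak * dk for ak, dk in zip(a, dd)))
    return weights


for n in (2, 3, 5, 9):
    print(n, [str(w) for w in model_a_as_lagrange_weights(n)])
```

Up to the factor $h$, the two-, three- and five-point cases reproduce the trapezoidal weights $(1/2,1/2)$, Simpson’s $(1/3,4/3,1/3)$ and Boole’s $\frac{2}{45}(7,32,12,32,7)$, while the nine-point case already contains negative weights.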

The method of undetermined coefficients applied to a Newton basis of polynomials is used to obtain . The associated system of equations is diagonal. The same method can also be applied to obtain any hybrid model built from models A and B. For instance, a hybrid extended rule using points could be written as

In this work our study focuses mainly on model A.

2 Notation and background

Definition 2.1.

(Canonical and Newton’s basis)

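Let $\mathbb{P}_n$ be the vector space of real polynomials of degree less than or equal to $n$ ($n$ a nonnegative integer). The set $\{p_0, p_1, \ldots, p_n\}$, where

$$p_k(x) = x^k, \qquad k = 0, 1, \ldots, n, \qquad (7)$$

is the canonical basis for $\mathbb{P}_n$.

Given $n$ distinct points $x_0, x_1, \ldots, x_{n-1}$, the set $\{w_0, w_1, \ldots, w_n\}$, with

$$w_0(x) = 1, \qquad w_k(x) = (x - x_0)(x - x_1)\cdots(x - x_{k-1}), \quad k = 1, \ldots, n, \qquad (8)$$

is known as the Newton basis for $\mathbb{P}_n$.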
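The Newton basis leads directly to the Newton form of the interpolating polynomial. As a small illustration (Python, with helper names of our own choosing), the coefficients $f[x_0]$, $f[x_0,x_1]$, ... are obtained from the usual divided-difference table, and the polynomial is evaluated by nested multiplication. For a monic cubic the last coefficient is 1, since it equals the leading coefficient.

```python
from fractions import Fraction


def divided_differences(xs, ys):
    """Return [f[x0], f[x0,x1], ..., f[x0,...,x_m]] computed in place."""
    coef = list(ys)
    for j in range(1, len(xs)):
        for i in range(len(xs) - 1, j - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - j])
    return coef


def newton_eval(xs, coef, x):
    """Evaluate sum_k coef[k] * w_k(x), with w_0 = 1 and
    w_k(x) = (x - x_0)...(x - x_{k-1}), by nested multiplication."""
    result = coef[-1]
    for k in range(len(coef) - 2, -1, -1):
        result = result * (x - xs[k]) + coef[k]
    return result


def f(x):
    return x**3 - 2 * x + 1  # a sample monic cubic


xs = [Fraction(j) for j in range(4)]  # four nodes give a degree-3 interpolant
coef = divided_differences(xs, [f(x) for x in xs])
print([str(c) for c in coef])
print(newton_eval(xs, coef, Fraction(3, 2)))  # equals f(3/2) = 11/8
```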

A polynomial interpolatory quadrature rule obtained from a given panel , where for , has the form

| (9) |

where the coefficients (or weights) can be computed by requiring the quadrature rule to be exact for every polynomial of degree less than or equal to , that is, , according to the following definition.

Definition 2.2.

(Degree of exactness) ([2], p. 157)

A quadrature rule has (polynomial) degree of exactness if the rule is exact whenever is a polynomial of degree , that is

The degree of the quadrature rule is denoted by . When the rule is called interpolatory.

In particular for a -point panel the interpolating polynomial satisfies

| (10) |

where can be written in Newton’s form (see for instance [6], p. 23, or any standard text in numerical analysis)

| (11) |

and the remainder term is

| (12) |

where and , for , denotes the -th divided difference of the data , with . Therefore from (10) we obtain

| (13) |

where denotes the true error of the rule . Thus,

| (14) |

Expression (14) suggests that applying the method of undetermined coefficients with the Newton basis for polynomials should be rewarding, since the successive divided differences of the basis polynomials are trivial. In particular, the weights of the extended rule in model A are computed trivially.

Proposition 2.1.

The weights for the rule in model A are

| (15) |

Proof.

The divided differences do not depend on a particular node but on the distance between nodes. Thus for any given -point panel of constant step , we can assume without loss of generality that . Considering the Newton’s basis for polynomials , , , , where , from (5) we have

The undetermined coefficients method applied to the Newton’s basis , , leads to the conditions or, equivalently, to a diagonal system of linear equations whose matrix is the identity. The equalities in (15) can also be obtained directly from (14). ∎
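Formula (15) is easy to evaluate exactly. The Python sketch below (helper names are ours) normalizes the panel to $x_j=j$, so that the weight of $f[x_0,\ldots,x_k]$ is $h^{k+1}a_k$ with $a_k=\int_0^{n-1}t(t-1)\cdots(t-k+1)\,dt$; it then assembles the left rectangle value, the correction and the corrected rule for one panel. We assume this is the scaling behind "an appropriate power of $h$" in Table 1, but the table itself is not reproduced here.

```python
from fractions import Fraction


def newton_integral(k, upper):
    """Exact integral over [0, upper] of t (t-1) ... (t-k+1) (empty product = 1)."""
    coeffs = [Fraction(1)]  # coeffs[i] is the coefficient of t**i
    for j in range(k):  # multiply the current polynomial by (t - j)
        new = [Fraction(0)] * (len(coeffs) + 1)
        for i, c in enumerate(coeffs):
            new[i + 1] += c
            new[i] -= j * c
        coeffs = new
    return sum(c * Fraction(upper) ** (i + 1) / (i + 1) for i, c in enumerate(coeffs))


def divided_differences(xs, ys):
    """Return [f[x0], f[x0,x1], ..., f[x0,...,x_m]] computed in place."""
    coef = list(ys)
    for j in range(1, len(xs)):
        for i in range(len(xs) - 1, j - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - j])
    return coef


def model_a_weights(n):
    """a_k, k = 0..n-1: weights of the n-point model A rule in units of h**(k+1)."""
    return [newton_integral(k, n - 1) for k in range(n)]


def model_a_rule(f, x0, h, n):
    """Return (Q, E, S) on the panel x0, x0+h, ..., x0+(n-1)h:
    Q = left rectangle rule, E = correction, S = Q + E."""
    xs = [x0 + j * h for j in range(n)]
    dd = divided_differences(xs, [f(x) for x in xs])
    terms = [a * h ** (k + 1) * d for k, (a, d) in enumerate(zip(model_a_weights(n), dd))]
    return terms[0], sum(terms[1:]), terms[0] + sum(terms[1:])


for n in range(2, 6):
    print(n, [str(a) for a in model_a_weights(n)])
```

All arithmetic stays exact when `x0` and `h` are `Fraction` objects; with floats the same code returns floating-point values.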

Theoretical expressions for the error in (13) can be obtained either via the mean value theorem for integrals or by considering the so-called Peano kernel ([1], p. 285, [2], p. 176). However, we will use the method of undetermined coefficients whenever theoretical expressions for the errors and are needed.

For sufficiently smooth functions , the fundamental relationship between divided differences on a given panel and the derivatives of is given by the following well-known result.

Proposition 2.2.

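Given $k+1$ distinct nodes $x_0, x_1, \ldots, x_k$ in $[a,b]$ and $f \in C^k[a,b]$, there exists $\xi \in (\min_i x_i, \max_i x_i)$ such that

$$f[x_0, x_1, \ldots, x_k] = \frac{f^{(k)}(\xi)}{k!}. \qquad (16)$$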

Applying (16) to the canonical or Newton’s basis, we get

| (17) |
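Proposition 2.2 can be observed numerically. In the Python sketch below (our own example: $f=\exp$ and four nodes in $[0,0.3]$), the third divided difference equals $e^{\xi}/3!$, so the intermediate point can be recovered explicitly and checked to lie between the extreme nodes.

```python
import math


def divided_differences(xs, ys):
    """Return [f[x0], f[x0,x1], ..., f[x0,...,x_m]] computed in place."""
    coef = list(ys)
    for j in range(1, len(xs)):
        for i in range(len(xs) - 1, j - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - j])
    return coef


xs = [0.0, 0.1, 0.2, 0.3]  # k + 1 = 4 equally spaced nodes
dd = divided_differences(xs, [math.exp(x) for x in xs])

# For f = exp, f[x0,...,x3] = exp(xi) / 3!, so xi can be solved for directly.
xi = math.log(math.factorial(3) * dd[3])
print(f"f[x0,...,x3] = {dd[3]:.10f}, xi = {xi:.6f}")
```

For a general $f$ the point $\xi$ cannot be recovered, which is why the realistic-error approach works with the divided differences themselves.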

By construction, the rules in models A and B have degree of precision at least , according to Definition 2.2.

In this work the undetermined coefficients method enables us to obtain both the weights and theoretical error formulas. This apparently contradicts the following assertion due to Walter Gautschi ([2], p. 176): “The method of undetermined coefficients, in contrast, generates only the coefficients in the approximation and gives no clue as to the approximation error”.

Note that, by Definition 2.2, the theoretical error (1) shows that the trapezoidal rule has degree 1, and (2) shows that Simpson’s rule has degree 3. This suggests the following assumption.

Assumption 2.1.

Given a -point () panel with constant step , a sufficiently smooth function defined on the interval , and a quadrature rule (interpolatory or not) of degree , there exist a constant (depending on a certain power of ) and a point such that

| (18) |

where the derivative is not identically zero in , and is the least integer for which (18) holds.

Expression (18) is crucial for deducing formulas for the theoretical error of the rules in models A and B.

Proposition 2.3.

Proof.

For any nonnegative integer , from (18) we get . As is the least integer for which the right-hand side of (18) is nonzero, and , one has

from which (19) follows. Since, for a fixed basis, the interpolating polynomial is unique, the error of the corresponding interpolatory rule is unique as well. ∎

In Proposition 2.4 we show that depends on the parity of . We thus recover a well-known result about the precision of the Newton-Cotes rules, since is algebraically equivalent to a closed formula with nodes. Let us first prove the following lemma.

Lemma 2.1.

Consider the Newton’s polynomials

Let be an integer and and the following integrals:

Then,

(a) for even and for odd;

(b) for and for .

Proof.

(a) For it is obvious that . For any integer , let us change the integration interval into the interval and consider the bijection . For odd, we obtain

As the integrand is an odd function in , we have .

For even, we get

where the integrand is an even function, thus .

(b) The proof is analogous, so it is omitted. ∎
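Under the normalization $x_j=j$ (step 1), the symmetry argument of part (a) can be checked with exact rational arithmetic. We assume here that the integral in (a) is that of the Newton polynomial vanishing at all $n$ nodes, taken over $[x_0,x_{n-1}]$; the helper name is ours.

```python
from fractions import Fraction


def newton_integral(k, upper):
    """Exact integral over [0, upper] of t (t-1) ... (t-k+1) (empty product = 1)."""
    coeffs = [Fraction(1)]  # coeffs[i] is the coefficient of t**i
    for j in range(k):  # multiply the current polynomial by (t - j)
        new = [Fraction(0)] * (len(coeffs) + 1)
        for i, c in enumerate(coeffs):
            new[i + 1] += c
            new[i] -= j * c
        coeffs = new
    return sum(c * Fraction(upper) ** (i + 1) / (i + 1) for i, c in enumerate(coeffs))


# Integral over [0, n-1] of t (t-1) ... (t-n+1), which vanishes at all n nodes.
for n in range(2, 10):
    print(n, newton_integral(n, n - 1))
```

For odd $n$ the integrand is odd about the midpoint of the panel and the printed value is exactly zero; for even $n$ it is even about the midpoint and the value is nonzero.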

The degree of precision of the rules in models A and B, and the respective true errors, can easily be obtained using the method of undetermined coefficients, Lemma 2.1 and Proposition 2.3. Propositions 2.4, 2.5 and 2.6 below establish the theoretical errors and degrees of precision of these rules. In particular, Proposition 2.4 recovers a classical result on the theoretical error of the rule (see, for instance, [4], p. 313).

Proposition 2.4.

Consider the rule for model A or B defined on the panel , and assume that is a finite interval containing the nodes , for . Let denote the Newton polynomial of degree . The respective degree of precision and true error are the following:

(i) If is odd and , then

| (20) |

(ii) If is even and , then

| (21) |

Proof.

We can assume without loss of generality that the panel is , , (just translate the point ). For even or odd, by construction of the interpolatory rule , we have in model A or B. Taking the Newton polynomial , whose zeros are , we get for the divided differences in (5),

where has been replaced by in (5). Thus, and for any .

Proposition 2.5.

Consider the rule given by model A, and assume that , where is a finite interval containing the nodes , for . Then, there exists a point such that

| (22) |

Proposition 2.6.

Consider the rule given in model B and assume that , where is a finite interval containing the nodes , for . Let be the Newton’s polynomial of degree . The degree of precision for is and there exists a point such that

| (23) |

Proof.

Without loss of generality consider the panel , , , . By construction, via the undetermined coefficients, we have . Taking , we have , for , so . By Lemma 2.1 (b) and therefore . Thus, by Proposition 2.2, there exist such that,

To the point there corresponds a point in the interval , and so (23) holds. ∎

3 Realistic errors for model A

The properties discussed in the previous section are valid for both models A and B. However, here we only present numerical examples for the rules defined by model A. A detailed discussion of model B, with examples, will be presented elsewhere.

From (15) we obtain immediately the weights for any rule of points defined by model A. Such weights are displayed in Table 1, for . The values displayed should be multiplied by an appropriate power of as indicated in the table’s label. According to Proposition 2.4, the last column in this table contains the value for the degree of precision of the rule .

Note that, by construction, the weights in model A are positive for any . Therefore, the respective extended rule does not suffer from the drawback observed in the traditional form of the Newton-Cotes rules where, for a large number of points, the weights have mixed signs, eventually leading to loss of significance by cancellation.
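The positivity claim can be checked with exact arithmetic for moderate panel sizes. The Python sketch below (hypothetical helper name; step $h=1$, so the actual weights are these numbers times $h^{k+1}>0$) confirms that every weight $\int_{x_0}^{x_{n-1}} w_k(x)\,dx$, with $w_0=1$ and $w_k(x)=(x-x_0)\cdots(x-x_{k-1})$, $k=0,\ldots,n-1$, is positive for $n\le 15$. It is a numerical check, not a proof.

```python
from fractions import Fraction


def newton_integral(k, upper):
    """Exact integral over [0, upper] of t (t-1) ... (t-k+1) (empty product = 1)."""
    coeffs = [Fraction(1)]  # coeffs[i] is the coefficient of t**i
    for j in range(k):  # multiply the current polynomial by (t - j)
        new = [Fraction(0)] * (len(coeffs) + 1)
        for i, c in enumerate(coeffs):
            new[i + 1] += c
            new[i] -= j * c
        coeffs = new
    return sum(c * Fraction(upper) ** (i + 1) / (i + 1) for i, c in enumerate(coeffs))


# Weights a_k, k = 0..n-1, of the n-point model A rule, for 2 <= n <= 15.
all_positive = all(
    newton_integral(k, n - 1) > 0 for n in range(2, 16) for k in range(n)
)
print(all_positive)  # True
```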

Proposition 3.1 below shows that a reliable computation of realistic errors for the rule , for , depends on the behavior of a certain function involving quotients between higher-order derivatives of and its first derivative. Fortunately, in applications only crude information on the function is needed, and in practice it suffices to plot for a few different values of the step , as illustrated in the numerical examples given in this section (for some simple rules) and in Section 4 (for some composite rules).

Proposition 3.1.

Consider a panel of points and the model A for approximating , where is a sufficiently smooth function defined in the interval containing the panel nodes. Let

where . Denote by (or when is fixed) the function

| (24) |

Assuming that

| (25) |

and

| (26) |

then, for a sufficiently small step , the correction is realistic for . Furthermore, the true error of can be estimated by the following realistic errors:

(a) For odd:

| (27) |

where and .

(b) For even:

| (28) |

where .

Proof.

(a) By Proposition 2.4 (i), we have

and from Proposition 2.2 the correction can be written as

where , , , . Therefore,

Thus, using the hypothesis in (25) we obtain

that is,

| (29) |

where is a constant not depending on . Thus, by (26) we get

Therefore, for sufficiently small, , that is, is a realistic correction for . Furthermore,

| (30) |

Finally, by Proposition 2.2 and the continuity of the function , we know that

(b) The proof is analogous so it is omitted. ∎

The next proposition shows that Proposition 3.1 in the case $n=2$ leads to a rule algebraically equivalent to the trapezoidal rule, and that for $n=3$ the rule is algebraically equivalent to Simpson’s rule.
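For orientation, the parity rule behind Proposition 3.1 can be checked by hand in these two cases (our own computation, with $x_j=x_0+jh$). For $n=2$ one extra node $x_2$ is used, and the next term of the Newton expansion gives

$$
f[x_0,x_1,x_2]\int_{x_0}^{x_1}(x-x_0)(x-x_1)\,dx = -\frac{h^3}{6}\,f[x_0,x_1,x_2] = -\frac{h^3}{12}\,f''(\eta), \qquad \eta\in(x_0,x_2),
$$

which has the form of (1) with $\eta$ in place of $\xi$, since $f[x_0,x_1,x_2]=f''(\eta)/2$ by Proposition 2.2. For $n=3$ the integral of $(x-x_0)(x-x_1)(x-x_2)$ over $[x_0,x_2]$ vanishes, so two extra nodes are needed:

$$
f[x_0,\ldots,x_4]\int_{x_0}^{x_2}\prod_{j=0}^{3}(x-x_j)\,dx = -\frac{4h^5}{15}\,f[x_0,\ldots,x_4] = -\frac{h^5}{90}\,f^{(4)}(\eta), \qquad \eta\in(x_0,x_4),
$$

in agreement with the form of (2), because $f[x_0,\ldots,x_4]=f^{(4)}(\eta)/24$.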

Proposition 3.2.

Let , , and . Consider the simple extended left rectangle rule

| (31) |

and , where and . Assuming that

then is a realistic error for , for sufficiently small. A realistic approximation for the true error of is

| (32) |

Proof.

Example 3.1.

(A realistic error for )

Let . The function is not differentiable at . However for , and the result (32) still holds. The numerical results (for digits of precision) are:

The true error for is

By (32) the realistic error is

Table 2 shows that the realistic error becomes closer to the true error when one goes from the step to the step .

Proposition 3.3.

Consider the model A for ,

| (36) |

where , and . Let

| (37) |

Assuming that , if

| (38) |

and

| (39) |

then, for a sufficiently small , is a realistic correction for . A realistic approximation to the true error of is

| (40) |

where and .

Example 3.2.

(A realistic error for the rule)

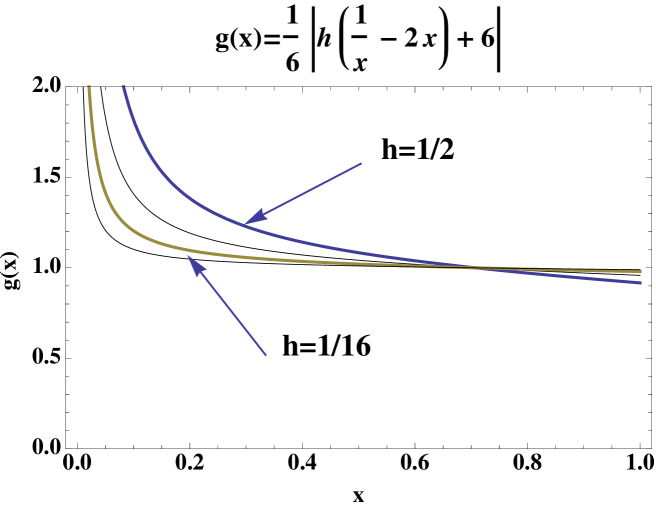

Let , with . Since , the condition (38) holds with and . Consider

Since and for any we have , for (see Figure 1), condition (39) is satisfied. Notice that gets closer to the value as decreases. Therefore one can be confident that realistic estimates (40) can be computed to approximate the true error of for a step . Table 3 displays the estimated errors and the true error for , respectively for . As expected, the computed values for have the correct sign and closely agree with the true error.

For , we have

Example 3.3.

(A realistic error for the rule)

From Table 1 we obtain the following expression for the rule ,

| (41) |

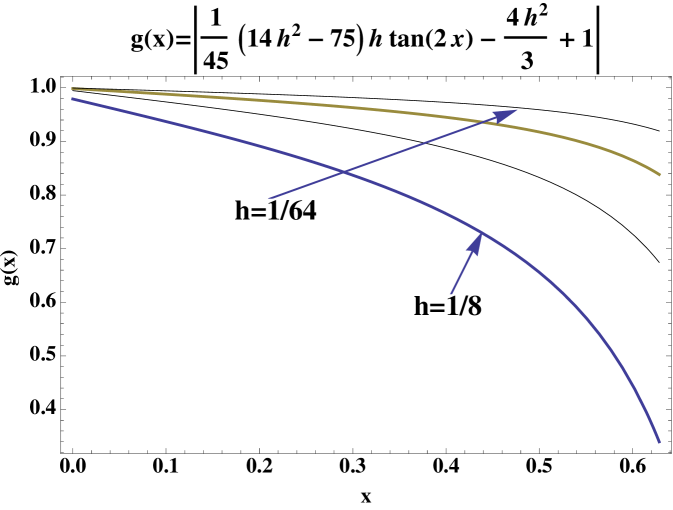

Consider and the interval . Since and , the condition (25) holds with and . Let

where the coefficients are computed using (15). The plot in Figure 2 shows that for the condition is satisfied. Therefore, since is odd, Proposition 3.1 (a) gives the following realistic estimate for the true error of :

| (42) |

where and . Table 4 displays the computed realistic errors for the steps referred to above.

For instance for , we have

and finally

4 Composite rules

The rules whose weights are given in Table 1 are applied here to obtain the so-called composite rules. The algorithm for composite rules described below is illustrated by several examples in Section 4.1. Since the best rules are those for which is even (when holds), the examples refer to , , and . Whenever the conditions of Proposition 3.1 for obtaining realistic errors are satisfied, these rules enable the computation of high-precision approximations to the integral , as well as good approximations to the true error. This justifies the name realistic error adopted in this work.

Let be given and fix a natural number . Consider the number and divide the interval into equal parts of length , denoting by the nodes, with , , and . Partitioning the set into subsets of points each, and for an offset of points, we get panels each one containing successive nodes. To each panel we apply in succession the rule and compute the respective realistic correction as well as the estimated realistic error for the rule . For the output we compute the sum of the partial results obtained for each panel as described in (43):

| (43) |

According to Proposition 2.4, for composite rules with points per panel and step , in the favorable cases (those satisfying the hypotheses behind the theory) one can expect to compute approximations with a realistic error. Analytic proofs of realistic errors for composite rules in both models A and B will be given in a forthcoming work [3].
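A minimal Python sketch of a composite model A rule with an error estimate follows. It is not the Mathematica procedure of Appendix 4.1, which is not reproduced here. We assume panels of $n$ points that share their endpoints, and we estimate each panel's error by the next Newton terms, $f[x_0,\ldots,x_n]\int w_n+f[x_0,\ldots,x_{n+1}]\int w_{n+1}$ over $[x_0,x_{n-1}]$, using one extra node to the right when $n$ is even and two when $n$ is odd (the first integral then vanishes). This requires $f$ to be defined slightly beyond $b$; all function names are ours.

```python
import math
from fractions import Fraction


def newton_integral(k, upper):
    """Exact integral over [0, upper] of t (t-1) ... (t-k+1) (empty product = 1)."""
    coeffs = [Fraction(1)]  # coeffs[i] is the coefficient of t**i
    for j in range(k):  # multiply the current polynomial by (t - j)
        new = [Fraction(0)] * (len(coeffs) + 1)
        for i, c in enumerate(coeffs):
            new[i + 1] += c
            new[i] -= j * c
        coeffs = new
    return sum(c * Fraction(upper) ** (i + 1) / (i + 1) for i, c in enumerate(coeffs))


def divided_differences(xs, ys):
    """Return [f[x0], f[x0,x1], ..., f[x0,...,x_m]] computed in place."""
    coef = list(ys)
    for j in range(1, len(xs)):
        for i in range(len(xs) - 1, j - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - j])
    return coef


def composite_model_a(f, a, b, n, panels):
    """Composite n-point model A rule on [a, b] with `panels` panels.
    Returns (S, E_est): the composite corrected rule and the summed error
    estimate. Evaluates f at up to two nodes to the right of b."""
    h = (b - a) / (panels * (n - 1))
    extra = 1 if n % 2 == 0 else 2
    # Absolute weights A_k = h**(k+1) * a_k; k = n (and n+1) give the estimate.
    weights = [float(newton_integral(k, n - 1)) * h ** (k + 1) for k in range(n + extra)]
    total, estimate = 0.0, 0.0
    for p in range(panels):
        x0 = a + p * (n - 1) * h
        xs = [x0 + i * h for i in range(n + extra)]
        dd = divided_differences(xs, [f(x) for x in xs])
        total += sum(w * d for w, d in zip(weights[:n], dd[:n]))
        estimate += sum(w * d for w, d in zip(weights[n:], dd[n:]))
    return total, estimate


s, est = composite_model_a(math.exp, 0.0, 1.0, 3, 10)
true_error = (math.e - 1.0) - s
print(f"S = {s:.12f}, estimated error = {est:.3e}, true error = {true_error:.3e}")
```

For $f=\exp$ on $[0,1]$ with ten three-point panels, the estimate has the sign of the true error and agrees with it to within a few percent, the discrepancy shrinking with the step.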

4.1 Numerical examples for composite rules

Once realistic errors have been computed within each panel of a composite rule, we can expect the total error in (43) to be realistic as well. This is the case in all the numerical examples worked out below.

Example 4.1.

Let

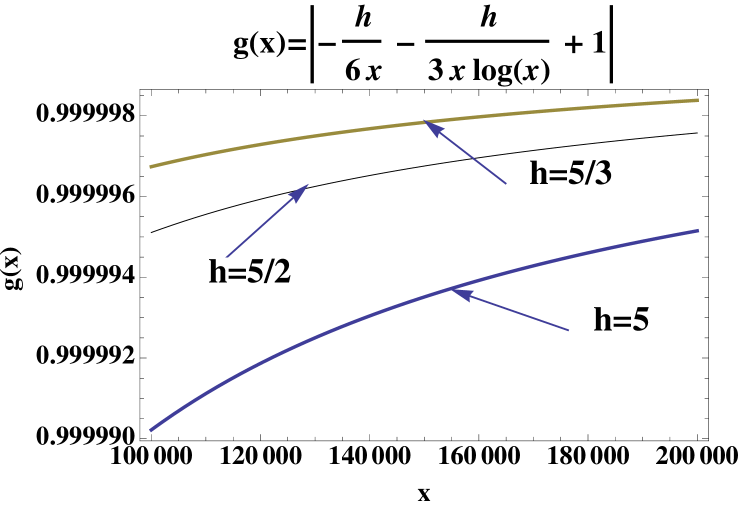

In the interval , the function belongs to the class . Since , for all , and for the quotients , with , are close to and tend to the zero function as increases. Therefore, the left-hand side of inequality (26) is very close to . That is, for the function

is such that , for sufficiently small. Hence the hypotheses of Proposition 3.1 hold and one obtains realistic errors for the rules .

The behavior of the function is illustrated for the case in Figure 3, for . Note that in this example while the chosen steps are greater than . However, realistic errors are still obtained.

Using a precision of decimal digits (or greater for ), the following values are obtained for the -point composite rule:

In Table 5 the realistic error is compared with the true error, respectively for the rules with odd from to points (the step is as tabulated).

For , the computed approximation for the integral is

where all the digits are correct. The simple rule is defined (see Table 1) as

| (44) |

The respective realistic error is (see (27))

| (45) |

Appendix 4.1 gives Mathematica code for the composite rule , for . The corresponding procedure is called , and the code includes comments explaining the algorithm. A more efficient programming style could of course have been adopted, but our goal here is simply to illustrate the algorithm described above for composite rules.

Appendix 4.1.

(Composite rule for points )

Acknowledgments

This work has been supported by Instituto de Mecânica-IDMEC/IST, Centro de Projecto Mecânico, through FCT (Portugal)/program POCTI.

References

- [1] P. J. Davis and P. Rabinowitz, Methods of Numerical Integration, Academic Press, Orlando, 1984.

- [2] W. Gautschi, Numerical Analysis, An Introduction, Birkhäuser, Boston, 1997.

- [3] M. M. Graça, Realistic errors for corrected open Newton-Cotes rules, in preparation.

- [4] E. Isaacson and H. B. Keller, Analysis of Numerical Methods, John Wiley & Sons, New York, 1966.

- [5] V. I. Krylov, Approximate Calculation of Integrals, Dover, New York, 2005.

- [6] J. F. Steffensen, Interpolation, Dover, 2nd Ed., Boston, 2006.