A study of the classification of low-dimensional data with supervised manifold learning

Abstract

Supervised manifold learning methods learn data representations by preserving the geometric structure of data while enhancing the separation between data samples from different classes. In this work, we propose a theoretical study of supervised manifold learning for classification. We consider nonlinear dimensionality reduction algorithms that yield linearly separable embeddings of training data and present generalization bounds for this type of algorithms. A necessary condition for satisfactory generalization performance is that the embedding allow the construction of a sufficiently regular interpolation function in relation with the separation margin of the embedding. We show that for supervised embeddings satisfying this condition, the classification error decays at an exponential rate with the number of training samples. Finally, we examine the separability of supervised nonlinear embeddings that aim to preserve the low-dimensional geometric structure of data based on graph representations. The proposed analysis is supported by experiments on several real data sets.

Keywords: Manifold learning, dimensionality reduction, classification, out-of-sample extensions, RBF interpolation

1 Introduction

In many data analysis problems, data samples have an intrinsically low-dimensional structure although they reside in a high-dimensional ambient space. The learning of low-dimensional structures in collections of data has been a well studied topic of the last two decades (Tenenbaum et al., 2000), (Roweis and Saul, 2000), (Belkin and Niyogi, 2003), (He and Niyogi, 2004), (Donoho and Grimes, 2003), (Zhang and Zha, 2005). Following these works, many classification methods have been proposed in the recent years to apply such manifold learning techniques to learn classifiers that are adapted to the geometric structure of low-dimensional data (Hua et al., 2012), (Yang et al., 2011), (Zhang et al., 2012), (Sugiyama, 2007), (Raducanu and Dornaika, 2012). The common approach in such works is to learn a data representation that enhances the between-class separation while preserving the intrinsic low-dimensional structure of data. While many efforts have focused on the practical aspects of learning such supervised embeddings for training data, the generalization performance of these methods as supervised classification algorithms has not been investigated much yet. In this work, we aim to study nonlinear supervised dimensionality reduction methods and present performance bounds based on the properties of the embedding and the interpolation function used for generalizing the embedding.

Several supervised manifold learning methods extend the Laplacian eigenmaps algorithm (Belkin and Niyogi, 2003), or its linear variant LPP (He and Niyogi, 2004) to the classification problem. The algorithms proposed by Hua et al. (2012), Yang et al. (2011), Zhang et al. (2012) provide a supervised extension of the LPP algorithm and learn a linear projection that preserves the proximity of neighboring samples from the same class, while increasing the distance between nearby samples from different classes. The method by Sugiyama (2007) proposes an adaptation of the Fisher metric for linear manifold learning, which is in fact shown to be equivalent to the above methods by Yang et al. (2011), Zhang et al. (2012). In (Li et al., 2013), (Cui and Fan, 2012), (Wang and Chen, 2009), some other similar Fisher-based linear manifold learning methods are proposed. In (Raducanu and Dornaika, 2012) a method relying on a similar formulation as in (Hua et al., 2012), (Yang et al., 2011), (Zhang et al., 2012) is presented, which, however, learns a nonlinear embedding. The main advantage of linear dimensionality reduction methods over nonlinear ones is that the generalization of the learnt embedding to novel (initially unavailable) samples is straightforward. However, nonlinear manifold learning algorithms are more flexible as the possible data representations they can learn belong to a wider family of functions, e.g., one can always find a nonlinear embedding to make training samples from different classes linearly separable. On the other hand, when a nonlinear embedding is used, one must also determine a suitable interpolation function to generalize the embedding to new samples, and the choice of the interpolator is critical for the classification performance.

The common effort in all of these supervised dimensionality reduction methods is to learn an embedding that increases the separation between different classes, while preserving the geometric structure of data. It is interesting to note that supervised manifold learning methods achieve separability by reducing the dimension of data, while kernel methods in traditional classifiers achieve this by increasing the dimension of data. Meanwhile, making training data linearly separable in supervised manifold learning does not mean much only by itself. Assuming that the data are sampled from a continuous distribution (hence two samples coincide with 0 probability), it is almost always possible to separate a discrete set of samples from different classes with a nonlinear embedding, e.g., even with a simple embedding such as the one mapping each sample to a vector encoding its class label. What actually matters is how the embedding generalizes to test data, i.e., where the test samples will be mapped to in the low-dimensional domain of embedding and how well the performance will be. The generalization for test data is straightforward for kernel methods, it is determined by the underlying main algorithm. However, in nonlinear supervised manifold learning, this question has rather been overlooked so far. In this work we aim to fill this gap and look into the generalization capabilities of supervised manifold learning algorithms. We study the conditions that must be satisfied by the embedding of the training samples and the interpolation function for satisfactory generalization of the classifier. We then examine the rates of convergence of supervised manifold learning algorithms that satisfy these conditions.

In Section 2, we consider arbitrary supervised manifold learning algorithms that compute a linearly separable embedding of training samples. We study the generalization capability of such algorithms for two types of out-of-sample interpolation functions. We first consider arbitrary interpolation functions that are Lipschitz-continuous on the support of each class, and then focus on out-of-sample extensions with radial basis function (RBF) kernels, which is a popular family of interpolation functions. For both types of interpolators, we derive conditions that must be satisfied by the embedding of the training samples and the regularity of the interpolation function that generalizes the embedding to test samples, when a nearest neighbor or linear classifier is used in the low-dimensional domain of embedding. These conditions enforce the Lipschitz constant of the interpolator to be sufficiently small, in comparison with the separation margin between training samples from different classes in the low-dimensional domain of embedding. The practical value of these results resides in their implications about what must really be taken into account when designing a supervised dimensionality reduction algorithm: Achieving a good separation margin does not suffice by itself; the geometric structure must also be preserved so as to ensure that a sufficiently regular interpolator can be found to generalize the embedding to the whole ambient space. We then particularly consider Gaussian RBF kernels and show the existence of an optimal value for the kernel scale by studying the condition in our main result that links the separation with the Lipschitz constant of the kernel.

Our results in Section 2 also provide bounds on the rate of convergence of the classification error of supervised embeddings. We show that the misclassification error probability decays at an exponential rate with the number of samples, provided that the interpolation function is sufficiently regular with respect to the separation margin of the embedding. These convergence rates are higher than those reported in previous results on RBF networks (Niyogi and Girosi, 1996), (Lin et al., 2014), (Hernández-Aguirre et al., 2002), and regularized least-squares regression algorithms (Caponnetto and De Vito, 2007), (Steinwart et al., 2009). The essential difference between our results and such previous works is that those assume a general setting and do not focus on a particular data model, whereas our results are rather relevant to settings where the support of each class admits some certain structure, so as to allow the existence of an interpolator that is sufficiently regular on the support of each class. Moreover, in contrast with these previous works, our bounds are independent of the ambient space dimension and vary only with the intrinsic dimensions of the class supports as they characterize the error in terms of the covering numbers of the supports.

The results in Section 2 assume an embedding that makes training samples from different classes linearly separable. Even if most nonlinear dimensionality reduction methods are observed to yield separable embeddings in practice, we aim to verify this theoretically in Section 3. In particular, we focus on the nonlinear version of the supervised Laplacian eigenmaps embeddings (Raducanu and Dornaika, 2012), (Hua et al., 2012), (Yang et al., 2011), (Zhang et al., 2012). Supervised Laplacian eigenmaps methods embed the data with the eigenvectors of the linear combination of two graph Laplacian matrices that encode the links between neighboring samples from the same class and different classes. In such a data representation, the coordinates of neighboring data samples change slowly within the same class and rapidly across different classes. We study the conditions for the linear separability of these embeddings and characterize their separation margin in terms of some graph and algorithm parameters.

In Section 4, we evaluate our results with experiments on several object and face data sets. We study the implications of the condition derived in Section 2 on the separability margin - interpolator regularity tradeoff. The experimental comparison of several supervised dimensionality reduction algorithms shows that this compromise between the separation and interpolator regularity can indeed be related to the practical classification performance of a supervised manifold learning algorithm. This suggests that, one can possibly improve the accuracy of supervised dimensionality reduction algorithms by considering more carefully the generalization capability of the embedding during the learning. We then study the variation of the classification performance with parameters such as the sample size, the RBF kernel scale, and the dimension of the embedding, in view of the generalization bounds presented in Section 2. Finally, we conclude in Section 5.

2 Performance bounds for supervised manifold learning methods

2.1 Notation and Problem Formulation

Consider a setting with data classes where the samples of each class are drawn from a probability measure in a Hilbert space such that has a bounded support . Let be a set of training samples such that each is drawn from one of the probability measures , and the samples drawn from each are independent and identically distributed. We denote the class label of by .

Let be a -dimensional embedding of , where each corresponds to . We consider supervised embeddings such that is linearly separable. Linear separability is defined as follows:

Definition 1

The data representation is linearly separable with a margin of , if for any two classes , there exists a separating hyperplane defined by , and such that

| (1) |

The above definition of separability implies the following. For any given class , there exists a set of hyperplanes , , and a set of real numbers that separate class from other classes, such that for all of class

| (2) |

and for all of class , there exists a such that

| (3) |

These hyperplanes are obtained by setting , .

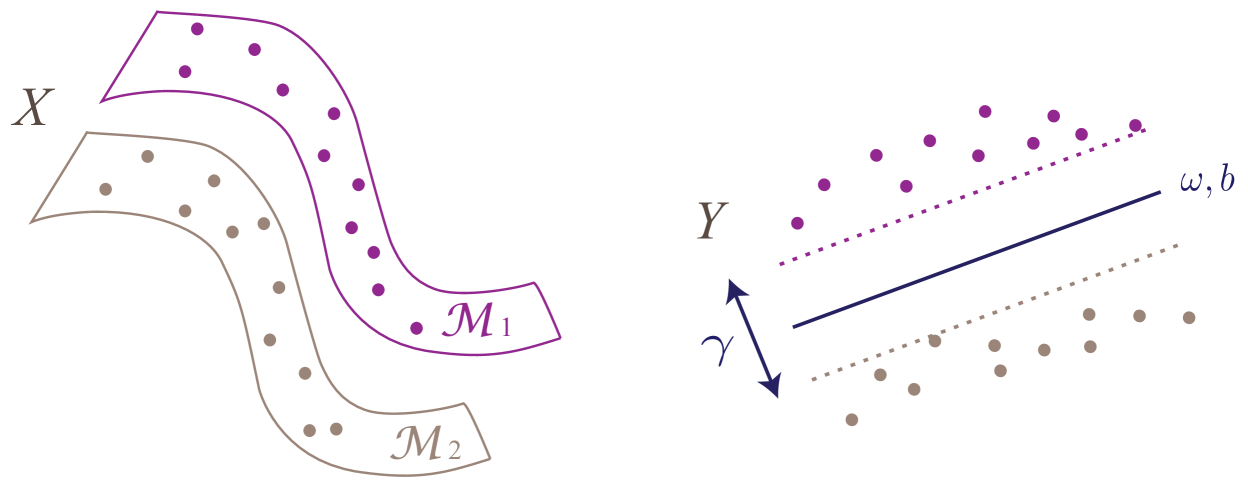

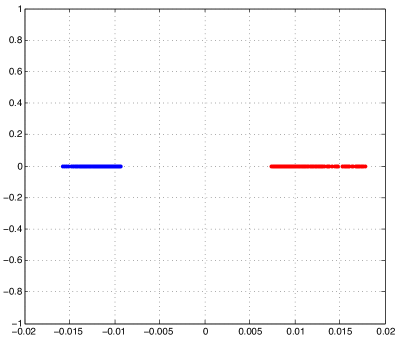

Figure 1 shows an illustration of a linearly separable embedding of data samples from two classes. Manifold learning methods typically compute a low-dimensional embedding of training data in a pointwise manner, i.e., the coordinates are computed only for the initially available training samples . However, in a classification problem, in order to estimate the class label of a new data sample of unknown class, needs to be mapped to the low-dimensional domain of embedding as well. The construction of a function that generalizes the learnt embedding to the whole space is known as the out-of-sample generalization problem. Smooth functions are commonly used for out-of-sample interpolation, e.g. as in (Qiao et al., 2013), (Peherstorfer et al., 2011).

Now let be a test sample drawn from the probability measure , hence, the true class label of is . In our study, we consider two basic classification schemes in the domain of embedding:

Linear classifier. The embeddings of the training samples are used to compute the separating hyperplanes, i.e., the classifier parameters and . Then, mapping to the low-dimensional domain as , the class label of is estimated as if there exists such that

| (4) |

Note that the existence of such an is not guaranteed in general for any , but for a given there cannot be more than one satisfying the above condition. Then is classified correctly if the estimated class label agrees with the true class label, i.e., .

Nearest neighbor classification. The test sample is assigned the class label of the closest training point in the domain of embedding, i.e., , where

In the rest of this section, we study the generalization performance of supervised dimensionality reduction methods. We first consider in Section 2.2 interpolation functions that vary regularly on each class support and we search for a lower bound on the probability of correctly classifying a new data sample in terms of the regularity of , the separation of the embedding, and the sampling density. Then in Section 2.3, we study the classification performance for a particular type of interpolation functions, namely RBF interpolators, which is one of the most popular ones (Peherstorfer et al., 2011), (Chin and Suter, 2008). We focus particularly on Gaussian RBF interpolators in Section 2.4 and derive some results regarding the existence of an optimal kernel scale parameter. Lastly, we discuss our results in comparison with previous literature in Section 2.5.

In the results in Sections 2.2-2.4, we keep a generic formulation and simply treat the supports as arbitrary bounded subsets of , each of which represents a different data class. Nevertheless, from the perspective of manifold learning, our results are of interest especially when the data is assumed to have an underlying low-dimensional structure. In Section 2.5, we study the implications of our results for the setting where are low-dimensional manifolds. We then examine how the proposed bounds vary in relation to the intrinsic dimensions of .

2.2 Out-of-sample interpolation with regular functions

Let be an out-of-sample interpolation function such that for each training sample , . Assume that is Lipschitz continuous with constant when restricted to any one of the supports ; i.e., for any and any

where denotes above the -norm if the argument is in , and the norm induced from the inner product in if the argument is in .

We will find a relation between the classification accuracy and the number of training samples via the covering number of the supports . Let denote an open ball of radius around

The covering number of a set is defined as the smallest number of open balls of radius whose union contains (Kulkarni and Posner, 1995)

We assume that the supports are totally bounded, i.e., has a finite covering number for any .

We state below a lower bound for the probability of correctly classifying a sample drawn from , in terms of the number of training samples drawn from , the separation of the embedding and the regularity of .

Theorem 2

For some with , let the training set contain at least samples drawn i.i.d. according to a probability measure such that

Let be an embedding of the training samples that is linearly separable with margin larger than , and let be an interpolation function that is Lipschitz continuous with constant on the support . Then the probability of correctly classifying a test sample drawn from independently from the training samples with the linear classifier (4) is lower bounded as

The proof of the theorem is given in Appendix A.1. Theorem 2 establishes a link between the classification performance and the separation of the embedding of the training samples. In particular, due to the condition , the increase in the separation allows a larger value for , provided that the interpolator regularity is not affected much. This reduces the covering number in return and increases the probability of correct classification. Similarly, from the condition , one can also observe that at a given separation , a smaller Lipschitz constant for the interpolation function allows the parameter to take a larger value. This reduces the covering number and therefore increases the correct classification probability. Thus, choosing a more regular interpolator at a given separation helps improve the classification performance. If the parameter is fixed, the Lipschitz constant of the interpolator is allowed to increase only proportionally to the separation margin. The condition that the interpolator must be sufficiently regular in comparison with the separation suggests that increasing the separation too much at the cost of impairing the interpolator regularity may degrade the classifier performance. In the case that the supports are low-dimensional manifolds, the covering number increases at a geometric rate with the intrinsic dimension of the manifold, since a -dimensional manifold is locally homeomorphic to . Therefore, from the condition on the number of samples, should increase at a geometric rate with .

In Theorem 2 the probability of misclassification decreases with the number of training samples at a rate of . In the rest of this section, we show that it is in fact possible to obtain an exponential convergence rate with linear and NN-classifiers under certain assumptions. We first present the following lemma.

Lemma 3

Let be a set of training samples such that each is drawn i.i.d. from one of the probability measures . Let be a test sample randomly drawn according to the probability measure of class . Let

| (5) |

be the set of samples in that are in a -neighborhood of and also drawn from the measure . Assume that contains samples. Then

| (6) |

Lemma 3 is proved in Appendix A.2. The inequality in (6) shows that as the number of training samples falling in a neighborhood of a test point increases, the probability of the deviation of from its average within the neighborhood decreases. The parameter captures the relation between the amount and the probability of deviation.

When studying the classification accuracy in the main result below, we will use the following generalized definition of the linear separation.

Definition 4

Let be a linearly separable embedding with margin such that each pair of classes are separated with the hyperplanes given by , as defined in Definition 1. We say that the linear classifier given by , has a -mean separability margin of if any choice of samples from class and samples from class , , satisfies

| (7) |

The above definition of separability is more flexible than the one in Definition 1. Clearly, an embedding that is linearly separable with margin has a -mean separability margin of for any . As in the previous section, we consider that the test sample is classified with the linear classifier (4) in the low-dimensional domain, defined with respect to the set of hyperplanes given by and as in (2) and (3).

In the following result, we show that an exponential convergence rate can be obtained with linear classifiers in supervised manifold learning. We define beforehand a parameter depending on , which gives the smallest possible measure of the -neighborhood of a point in support .

Theorem 5

Let be a set of training samples such that each is drawn i.i.d. from one of the probability measures . Let be an embedding of in that is linearly separable with a -mean separability margin larger than . For a given and , let be a Lipschitz-continuous interpolator such that

| (8) |

Consider a test sample randomly drawn according to the probability measure of class . If contains at least training samples drawn i.i.d. from such that

then the probability of correctly classifying with the linear classifier given in (4) is lower bounded as

| (9) |

Theorem 5 is proved in Appendix A.3. The theorem shows how the classification accuracy is influenced by the separation of the classes in the embedding, the smoothness of the out-of-sample interpolant, and the number of training samples drawn from the density of each class. The condition in (8) points to the tradeoff between the separation and the regularity of the interpolation function. As the Lipschitz constant of the interpolation function increases, becomes less “regular”, and a higher separation is needed to meet the condition. This is coherent with the expectation that, when becomes irregular, the classifier becomes more sensitive to the perturbations of the data, e.g., due to noise. The requirement of a higher separation is then for ensuring a larger margin in the linear classifier, which compensates for the irregularity of . From (8), it is also observed that the separation should increase with the dimension as well, and also with , whose increase improves the confidence of the bound (9). Note that the condition in (8) implies also the following: When computing an embedding, it is not advisable to increase the separation of training data unconditionally. In particular, increasing the separation too much may violate the preservation of the geometry and yield an irregular interpolator. Hence, when designing a supervised dimensionality reduction algorithm, one must pay attention to the regularity of the resulting interpolator as much as the enhancement of the separation margin.

Next, we discuss the roles of the parameters and . The term in the correct classification probability bound (9) shows that, for fixed , the confidence increases with the value of . Meanwhile, due to the numerator of the term , for a high confidence, the number of samples should also be relatively big with respect to to have a high overall confidence. Similarly, at fixed , should be made smaller to increase the confidence due to the term , which then reduces the parameter and eventually requires the number of samples to take a sufficiently large value in order to make the term small and have a high confidence. Therefore, these two parameters and behave in a similar way, and determine the relation between the number of samples and the correct classification probability, i.e., they indicate how large should be in order to have a certain confidence of correct classification.

Theorem 5 studies the setting where the class labels are estimated with a linear classifier in the domain of embedding. We also provide another result below that analyses the performance when a nearest-neighbor classifier is used in the domain of embedding.

Theorem 6

Let be a set of training samples such that each is drawn i.i.d. from one of the probability measures . Let be an embedding of in such that

hence, nearby samples from the same class are mapped to nearby points, and samples from different classes are separated by a distance of at least in the embedding.

For given and , let be a Lipschitz-continuous interpolation function such that

| (10) |

Consider a test sample randomly drawn according to the probability measure of class . If contains at least training samples drawn i.i.d. from such that

then the probability of correctly classifying with nearest-neighbor classification in is lower bounded as

| (11) |

Theorem 6 is proved in Appendix A.4. Theorem 6 is quite similar to Theorem 5 and can be interpreted similarly. Unlike in the previous result, the separability condition of the embedding is based on the pairwise distances of samples from different classes here. The condition (10) suggests that the result is useful when the parameter is sufficiently small, which requires the embedding to map nearby samples from the same class in the ambient space to nearby points.

In this section, we have characterized the regularity of the interpolation functions via their rates of variation when restricted to the supports . While the results of this section are generic in the sense that they are valid for any interpolation function with the described regularity properties, we have not examined the construction of such functions. In a practical classification problem where one uses a particular type of interpolation functions, one would also be interested in the adaptation of these results to obtain performance guarantees for the particular type of function used. Hence, in the following section we focus on a popular family of smooth functions; radial basis function (RBF) interpolators, and study the classification performance of this particular type of interpolators.

2.3 Out-of-sample interpolation with RBF interpolators

Here we consider an RBF interpolation function of the form

such that each component of is given by

where is a kernel function, are coefficients, and are kernel centers. In interpolation with RBF functions, it is common to choose the set of kernel centers as the set of available data samples. Hence, we assume that the set of kernel centers is selected to be the same as the set of training samples . We consider a setting where the coefficients are set such that , i.e., maps each training point in to its embedding previously computed with supervised manifold learning.

We consider the RBF kernel to be a Lipschitz continuous function with constant , hence, for any

Also, let be an upper bound on the coefficient magnitudes such that for all

In the following, we analyze the classification accuracy and extend the results in Section 2.2 to the case of RBF interpolators. We first give the following result, which probabilistically bounds how much the value of the interpolator at a point randomly drawn from may deviate from the average interpolator value of the training points of the same class within a neighborhood of .

Lemma 7

Let be a set of training samples such that each is drawn i.i.d. from one of the probability measures . Let be a test sample randomly drawn according to the probability measure of class . Let

| (12) |

be the set of samples in that are in a -neighborhood of and also drawn from the measure . Assume that contains samples. Then

| (13) |

The proof of Lemma 7 is given in Appendix A.5. The lemma states a result similar to the one in Lemma 3; however, is specialized to the case where is an RBF interpolator.

We are now ready to present the following main result.

Theorem 8

Let be a set of training samples such that each is drawn i.i.d. from one of the probability measures . Let be an embedding of in that is linearly separable with a -mean separability margin larger than . For a given and , let be an RBF interpolator such that

| (14) |

Consider a test sample randomly drawn according to the probability measure of class . If contains at least training samples drawn i.i.d. from such that

then the probability of correctly classifying with the linear classifier given in (4) is lower bounded as

| (15) |

The theorem is proved in Appendix A.6. The theorem bounds the classification accuracy in terms of the smoothness of the RBF interpolation function and the number of samples. The condition in (14) characterizes the compromise between the separation and the regularity of the interpolator, which depends on the Lipschitz constant of the RBF kernels and the coefficient magnitude. As the Lipschitz constant and the coefficient magnitude parameter increase (i.e., becomes less “regular”), a higher separation is required to provide a performance guarantee. When the separation margin of the embedding and the interpolator satisfy the condition in (14), the misclassification probability decays exponentially as the number of training samples increases, similarly to the results in Section 2.2.

Theorem 8 studies the misclassification probability when the class labels in the low-dimensional domain are estimated with a linear classifier. We also present below a bound on the misclassification probability when the nearest-neighbor classifier is used in the low-dimensional domain.

Theorem 9

Let be a set of training samples such that each is drawn i.i.d. from one of the probability measures . Let be an embedding of in such that

For given and , let be an RBF interpolator such that

| (16) |

Consider a test sample randomly drawn according to the probability measure of class . If contains at least training samples drawn i.i.d. from such that

then the probability of correctly classifying with nearest-neighbor classification in is lower bounded as

| (17) |

Theorem 9 is proved in Appendix A.7. While it provides the exact convergence rate as in Theorem 8, the necessary condition in (16) includes also the parameter . Hence, if the embedding maps nearby samples from the same class to nearby points, and a compromise is achieved between the separation and the interpolator regularity, the misclassification probability can be upper bounded.

2.4 Optimizing the scale of Gaussian RBF kernels

In data interpolation with RBFs, it is known that the accuracy of interpolation is quite sensitive to the choice of the shape parameter for several kernels including the Gaussian kernel (Baxter, 1992). The relation between the shape parameter and the performance of interpolation has been an important problem of interest (Piret, 2007). In this section, we focus on the Gaussian RBF kernel, which is a popular choice for RBF interpolation due to its smoothness and good spatial localization properties. We study the choice of the scale parameter of the kernel within the context of classification.

We consider the RBF kernel given by

where is the scale parameter of the Gaussian function. We focus on the condition (14) in Theorem 8

(or equivalently the condition (16) if the nearest neighbor classifier is used), which relates the interpolation function properties with the separation. In particular, for a given separation margin, this condition is satisfied more easily when the term on the left hand side of the inequality is smaller. Thus, in the following, we derive an expression for the left hand side of the above inequality by deriving the Lipschitz constant and the coefficient bound in terms of the scale parameter of the Gaussian kernel. We then study the scale parameter that minimizes .

Writing the condition in a matrix form for each dimension , we have

| (18) |

where is a matrix whose -th entry is given by , is the coefficient vector whose -th entry is , and is the data coordinate vector giving the -th dimensions of the embeddings of all samples, i.e., . Assuming that the embedding is computed with the usual scale constraint , we have . The norm of the coefficient vector can then be bounded as

| (19) |

In the rest of this section, we assume that the data are sampled from the Euclidean space, i.e., . We first use a result by Narcowich et al. (1994) in order to bound the norm of the inverse matrix. From (Narcowich et al., 1994, Theorem 4.1) we get111The result stated in (Narcowich et al., 1994, Theorem 4.1) is adapted to our study by taking the measure as so that the RBF kernel defined in (Narcowich et al., 1994, (1.1)) corresponds to a Gaussian function as . The scale of the Gaussian kernel is then given by .

| (20) |

where and are constants depending on the dimension and the minimum distance between the training points (separation radius) (Narcowich et al., 1994). As the -norm of the coefficient vector can be bounded as , from (19) one can set the parameter that upper bounds the coefficients magnitudes as

where .

Next, we derive a Lipschitz constant for the Gaussian kernel in terms of . Setting the second derivative of to zero

we get that the maximum value of is attained at . Evaluating at this value, we obtain

Now rewriting the condition (14) of the theorem, we have

where and . We thus determine the Gaussian scale parameter that minimizes

First, notice that as and , the function . Therefore, it has at least one minimum. Setting

we need to solve

| (21) |

The leading and the second-degree coefficients are positive, while the first-degree and the constant coefficients are negative in the above cubic polynomial. Then, the sum of the roots is negative and the product of the roots is positive. Therefore, there is one and only one positive root , which is the unique minimizer of .

The existence of an optimal scale parameter for the RBF kernel can be intuitively explained as follows. When takes too small values, the support of the RBF function concentrated around the training points does not sufficiently cover the whole class supports . This manifests itself in (14) with the increase in the term , which indicates that the interpolation function is not sufficiently regular. This weakens the guarantee that a test sample will be interpolated sufficiently close to its neighboring training samples from the same class and mapped to the correct side of the hyperplane in the linear classifier. On the other hand, when increases too much, the stability of the linear system (18) is impaired and the coefficients increase too much. This results in an overfitting of the interpolator and, therefore, decreases the classification performance. Hence, the analysis in this section provides a theoretical justification of the common knowledge that should be set to a sufficiently large value while avoiding overfitting.

Remark: It is also interesting to observe how the optimal scale parameter changes with the number of samples . In the study (Narcowich et al., 1994), the constants and in (20) are shown to vary with the separation radius at rates and , where the separation radius is proportional to the smallest distance between two distinct training samples. Then a reasonable assumption is that the separation radius should typically decrease at rate as increases. Using this relation, we get that and should vary at rates and with . It follows that , and the parameters , of the cubic polynomial in (21) also vary with at rates , . The equation (21) in can then be rearranged as

such that the constants vary with at rates , , , . We can then inspect how the roots of this equation change with as increases. Since and dominate the other coefficients for large , three real roots will exist if is sufficiently large, two of which are negative and one is positive. The sum of the pairwise products of the roots is negative and it decays with at rate , and the product of the roots also decays with . Then at least two of the roots must decay with . Meanwhile, the sum of the three roots is and negative. This shows that one of the negative roots is , i.e., does not decay with . From the product of three roots, we then observe that the product of the two decaying roots is . However, their sum also decays at the same rate (from the sum of the pairwise products), which is possible if their dominant terms have the same rate and cancel each other. We conclude that both of the decaying roots vary at rate , one of which is the positive root and the optimal value of the scale parameter. This analysis shows that the scale parameter of the Gaussian kernel should be adapted to the number of training samples, and a smaller kernel scale must be preferred for a larger number of training samples. In fact, the relation is quite intuitive, as the average or typical distance between two samples will also decrease at rate as the number of samples increases in an -dimensional space. Then the above result simply suggests that the kernel scale should be chosen as proportional to the average distance between the training samples.

2.5 Discussion of the results in relation with previous results

In Theorems 8 and 9, we have presented a result that characterizes the performance of classification with RBF interpolation functions. In particular, we have considered a setting where an RBF interpolator is fitted to each dimension of a low-dimensional embedding where different classes are separable. Our study has several links with RBF networks or least-squares regression algorithms. In this section, we interpret our findings in relation with previously established results.

Several previous works study the performance of learning by considering a probability measure defined on , where and are two sets. The “label” set is often taken as an interval . Given a set of data pairs sampled from the distribution , the RBF network estimates a function of the form

| (22) |

The number of RBF terms may be different from the number of samples in general. The function minimizes the empirical error

The function estimated from a finite collection of data samples is often compared to the regression function (Cucker and Smale, 2002)

where is the conditional probability measure on . The regression function minimizes the expected risk as

As the probability measure is not known in practice, the estimate of is obtained from data samples. Several previous works have characterized the performance of learning by studying the approximation error (Niyogi and Girosi, 1996), (Lin et al., 2014)

| (23) |

where is the marginal probability measure on . This definition of the approximation error can be adapted to our setting as follows. In our problem the distribution of each class is assumed to have a bounded support, which is a special case of modeling the data with an overall probability distribution . If the supports are assumed to be nonintersecting, the regression function is given by

which corresponds to the class labels , where is the indicator function of the support . It is then easy to show that the approximation error can be bounded as a constant times the probability of misclassification . Hence, we can compare our misclassification probability bounds in Section 2.3 with the approximation error in other works.

The study in (Niyogi and Girosi, 1996) assumes that the regression function is an element of the Bessel potential space of a sufficiently high order and that the sum of the coefficients is bounded. It is then shown that for data sampled from , with probability greater than the approximation error in (23) can be bounded as

| (24) |

where is the number of RBF terms.

The analysis by Lin et al. (2014) considers families of RBF kernels that include the Gaussian function. Supposing that the regression function is of Sobolev class , and that the number of RBF terms is given by in terms of the number of samples , the approximation error is bounded as

| (25) |

Next, we overview the study by Hernández-Aguirre et al. (2002), which studies the performance of RBFs in a Probably Approximately Correct (PAC)-learning framework. For , a family of measurable functions from to is considered and the problem of approximating a target function known only through examples with a function in is studied. The authors use a previous result from (Vidyasagar, 1997) that relates the accuracy of empirical risk minimization to the covering number of and the number of samples. Combining this result with the bounds on covering number estimates of Lipschitz continuous functions (Kolmogorov and Tihomirov, 1961), the following result is obtained for PAC function learning with RBF neural networks with Gaussian kernel. Let the coefficients be bounded as , a common scale parameter be chosen as , and be computed under a uniform probability measure . Then if the number of samples satisfies

| (26) |

an approximation of the target function is obtained with accuracy parameter and confidence parameter :

| (27) |

In the above expression, the expectation is over the test samples, whereas the probability is over the training samples; i.e., over all possible distributions of training samples, the probability of having the average approximation error larger than is bounded. Note that, our results in Theorems 8 and 9, when translated into the above PAC-learning framework, correspond to a confidence parameter of . This is because the misclassification probability bound of a test sample is valid for any choice of the training samples, provided that the condition (14) (or the condition (16)) holds. Thus, in our result the probability running over the training samples in (27) has no counterpart. When we take , the above result does not provide a useful bound since as . By contrast, our result is valid only if the conditions (14), (16) on the interpolation function holds. It is easy to show that, assuming nonintersecting class supports , the expression is given by a constant times the probability of misclassification. The accuracy parameter can then be seen as the counterpart of the misclassification probability upper bound given on the right hand sides of (15) and (17) (the expression subtracted from 1). At fixed , the dependence of the accuracy on the kernel scale parameter is monotonic in the bound (26); decreases as increases. Therefore, this bound does not guide the selection of the scale parameter of the RBF kernel, while the discussion in Section 2.4 (confirmed by the experimental results in Section 4.2) suggests the existence of an optimal scale.

Finally, we mention some results on the learning performance of regularized least squares regression algorithms. In (Caponnetto and De Vito, 2007) optimal rates are derived for the regularized least squares method in a Reproducing Kernel Hilbert Space (RKHS) in the minimax sense. It is shown that, under some hypotheses concerning the data probability measure and the complexity of the family of learnt functions, the maximum error (yielded by the worst distribution) obtained with the regularized least squares method converges at a rate of . Next, the work in (Steinwart et al., 2009) shows that, in regularized least squares regression over a RKHS, if the eigenvalues of the kernel integral operator decay sufficiently fast, and if the -norms of regression functions can be bounded, the error of the classifier converges at a rate of up to with high probability. Steinwart et al. also examine the learning performance in relation with the exponent of the function norm in the regularization term and show that the learning rate is not affected by the choice of the exponent of the function norm.

We now overview the three bounds given in (24), (25), and (26) in terms of the dependence of the error on the number of samples. The results in (24) and (25) provide a useful bound only in the case where the number of samples is larger than the number of RBF terms , contrary to our study where we treat the case . If it is assumed that is sufficiently larger than , the result in (24) predicts a rate of decay of only in the misclassification probability. The bound in (25) improves with the Sobolev regularity of the regression function; however, the dependence of the error on the number of samples is of a similar nature to the one in (24). Considering as a misclassification error parameter in the bound in (26), the error decreases at a rate of as the number of samples increases. The analysis in (Caponnetto and De Vito, 2007) and (Steinwart et al., 2009) also provide the similar rates of convergence of . Meanwhile, our results in Theorems 8 and 9 predict an exponential decay in the misclassification probability as the number of samples increases (under the reasonable assumption that for each class ). The reason why we arrive at a more optimistic bound is the specialization of the analysis to the considered particular setting, where the support of each class is assumed to be restricted to a totally bounded region in the ambient space, as well as the assumed relations between the separation margin of the embedding and the regularity of the interpolation function.

Another difference between these previous results and ours is the dependence on the dimension. The results in (24), (25), and (26) predict an increase in the error at the respective rates of , , and with the ambient space dimension . While these results assume that the data is in an Euclidean space of dimension , our study assumes the data to be in a generic Hilbert space . The results in Theorems 5-8 involve the dimension of the low-dimensional space of embedding and does not explicitly depend on the dimension of the ambient Hilbert space (which could be infinite-dimensional). However, especially in the context of manifold learning, it is interesting to analyze the dependence of our bound on the intrinsic dimension of the class supports .

In order to put the expressions (15), (17) in a more convenient form, let us reduce one parameter by setting . Then the misclassification probability is of

We can relate the dependence of this expression on the intrinsic dimension as follows. Since the supports are assumed to be totally bounded, one can define a parameter that represents the “diameter” of , i.e., the largest distance between any two points on . Then the measure of the minimum ball of radius in is of , where is the intrinsic dimension of . Replacing this in the above expression gives the probability of misclassification as

This shows that in order to retain the correct classification guarantee, as the intrinsic dimension grows, the number of samples should increase at a geometric rate with . In supervised manifold learning problems, data sets usually have a low intrinsic dimension, therefore, this geometric rate of increase can often be tolerated. Meanwhile the dimension of the ambient space is typically high, so that performance bounds independent of the ambient space dimension are of particular interest. Note that generalization bounds in terms of the intrinsic dimension have been proposed in some previous works as well (Bickel and Li, 2007), (Kpotufe, 2011), for the local linear regression and the K-NN regression problems.

3 Separability of supervised nonlinear embeddings

In the results in Section 2, we have presented generalization bounds for classifiers based on linearly separable embeddings. One may wonder if the separability assumption is easy to satisfy when computing structure-preserving nonlinear embeddings of data. In this section, we try to answer this question by focusing on a particular family of supervised dimensionality reduction algorithms, i.e., supervised Laplacian eigenmaps embeddings, and analyze the conditions of separability. We first discuss the supervised Laplacian eigenmaps embeddings in Section 3.1 and then present results in Section 3.2 about the linearly separability of these embeddings.

3.1 Supervised Laplacian eigenmaps embeddings

Let be a set of training samples, where each belongs to one of classes. Most manifold learning algorithms rely on a graph representation of data. This graph can be a complete graph in some works, in which case an edge exists between each pair of samples. Meanwhile, in some manifold learning algorithms, in order to better capture the intrinsic geometric structure of data, each data sample is connected only to its nearest neighbors in the graph. In this case, an edge exists only between neighboring data samples.

In our analysis, we consider a weighted data graph each vertex of which represents a point . We write , or simply if the graph contains an edge between the data samples , . We denote the edge weight as . The weights are usually determined as a positive and monotonically decreasing function of the distance between and in , where the Gaussian function is a common choice. Nevertheless, we maintain a generic formulation here without making any assumption on the neighborhood or weight selection strategies.

Now let and represent two subgraphs of , which contain the edges of that are respectively within the same class and between different classes. Hence, contains an edge between samples and , if and . Similarly, contains an edge if and . We assume that all vertices of are contained in both and ; and that has exactly connected components such that the training samples in each class form a connected component222The straightforward application of common graph construction strategies, like connecting each training sample to its K-nearest neighbors or to its neighbors within a given distance, may result in several disconnected components in a single class in the graph if there is much diversity in that class. However, this difficulty can be easily overcome by introducing extra edges to bridge between graph components that are originally disconnected.. We also assume that and do not contain any isolated vertices; i.e., each data sample has at least one neighbor in both graphs.

The weight matrices and of and have entries as follows.

Let and denote the degrees of in and

and , denote the diagonal degree matrices given by , . The normalized graph Laplacian matrices and of and are then defined as

Supervised extensions of the Laplacian eigenmaps and LPP algorithms seek a -dimensional embedding of the data set , such that each is represented by a vector . Denoting the new data matrix as , the coordinates of data samples are computed by solving the problem

| (28) |

The reason behind this formulation can be explained as follows. For a graph Laplacian matrix , where and are respectively the degree and the weight matrices, defining the coordinates normalized with the vertex degrees, we have

| (29) |

where is the -th row of giving the normalized coordinates of the embedding of the data sample . Hence, the problem in (28) seeks a representation that maps nearby samples in the same class to nearby points, while mapping nearby samples from different classes to distant points. In fact, when the samples are assumed to come from a manifold , the term is the discrete equivalent of

where is a continuous function on the manifold that extends the one-dimensional coordinates to the whole manifold. Hence, the term captures the rate of change of the learnt coordinate vectors over the underlying manifold. Then, in a setting where the samples of different classes come from different manifolds , the formulation in (28) looks for a function that has a slow variation on each manifold , while having a fast variation “between” different manifolds.

The supervised learning problem in (28) has so far been studied by several authors with slight variations in their problem formulations. Raducanu and Dornaika (2012) minimize a weighted difference of the within-class and between-class similarity terms in (28) in order to learn a nonlinear embedding. Meanwhile, linear dimensionality reduction methods pose the manifold learning problem as the learning of a linear projection matrix ; therefore, solve the problem in (28) under the constraint , where and . Hua et al. (2012) formulate the problem as the minimization of the difference of the within-class and the between-class similarity terms in (28) as well. Thus, their algorithm can be seen as the linear version of the method by Raducanu and Dornaika (2012). Sugiyama (2007) proposes an adaptation of the Fisher discriminant analysis algorithm to preserve the local structures of data. Data sample pairs are weighted with respect to their affinities in the construction of the within-class and the between-class scatter matrices in Fisher discriminant analysis. Then the trace of the ratio of the between-class and the within-class scatter matrices is maximized to learn a linear embedding. Meanwhile, the within-class and the between-class local scatter matrices are closely related to the two terms in (28) as shown by Yang et al. (2011). The terms and , when evaluated under the constraint , become equal to the locally weighted within-class and between-class scatter matrices of the projected data. Cui and Fan (2012) and Wang and Chen (2009) propose to maximize the ratio of the between-class and the within-class local scatters in the learning. Yang et al. (2011) optimize the same objective function, while they construct the between-class graph only on the centers of mass of the classes. Zhang et al. (2012) similarly optimize a Fisher metric to maximize the ratio of the between- and within-class scatters; however, the total scatter is also taken into account in the objective function in order to preserve the overall manifold structure.

All of the above methods use similar formulations of the supervised manifold learning problem and give comparable results. In our study, we base our analysis on the following formal problem definition

| (30) |

which minimizes the difference of the within-class and the between-class similarity terms as in works such as (Raducanu and Dornaika, 2012) and (Hua et al., 2012). Here is the identity matrix and is a parameter adjusting the weights of the two terms. The condition is a commonly used constraint to remove the scale ambiguity of the coordinates. The solution of the problem (30) is given by the first eigenvectors of the matrix

corresponding to its smallest eigenvalues.

Our purpose in this section is then to theoretically study the linear separability of the learnt coordinates of training data, with respect to the definition of linear separability given in (1). In the following, we determine some conditions on the graph properties and the weight parameter that ensure the linear separability. We derive lower bounds on the margin and study its dependence on the model parameters. Let us give beforehand the following definitions about the graphs and .

Definition 10

The volume of the subgraph of that corresponds to the connected component containing samples from class is

We define the maximal within-class volume as

The volume of the component of containing the edges between the samples of classes and is 333In order to keep the analogy with the definition of , a factor is introduced in this expression as each edge is counted only once in the sum.

We then define the maximal pairwise between-class volume as

In a connected graph, the distance between two vertices and is the number of edges in a shortest path joining and . The diameter of the graph is then given by the maximum distance between any two vertices in the graph (Chung, 1996). We define the diameter of the connected component of corresponding to class as follows.

Definition 11

For any two vertices and such that , consider a within-class shortest path joining and , which contains samples only from class . Then the diameter of the connected component of corresponding to class is the maximum number of edges in the within-class shortest path joining any two vertices and from class .

Definition 12

The minimum edge weight within class is defined as

3.2 Separability bounds for two classes

We now present a lower bound for the linear separability of the embedding obtained by solving (30) in a setting with two classes . We first show that an embedding of dimension is sufficient to achieve linear separability for the case of two classes. We then derive a lower bound on the separation in terms of the graph parameters and the algorithm parameter .

Consider a one-dimensional embedding , where is the coordinate of the data sample in the one-dimensional space. The coordinate vector is given by the eigenvector of corresponding to its smallest eigenvalue. We begin with presenting the following result, which states that the samples from the two classes are always mapped to different halves (nonnegative or nonpositive) of the real line.

Lemma 13

The learnt embedding of dimension satisfies

for any and for any choice of the graph parameters.

Lemma 13 is proved in Appendix B.1. The lemma states that in one-dimensional embeddings of two classes, samples from different classes always have coordinates with different signs. Therefore, the hyperplane given by , separates the data as for and for (since the embedding is one dimensional, the vector is a scalar in this case). However, this does not guarantee that the data is separable with a positive margin . In the following result, we show that a positive margin exists and give a lower bound on it. In the rest of this section, we assume without loss of generality that classes and are respectively mapped to the negative and positive halves of the real axis.

Theorem 14

Defining the normalized data coordinates , let

denote the maximum and minimum coordinates that classes and are respectively mapped to with a one-dimensional embedding learnt with supervised Laplacian eigenmaps. We also define the parameters

where is the diameter of the graph corresponding to class as defined in Definition 11. Then, if the weight parameter is chosen such that , any supervised Laplacian embedding of dimension is linearly separable with a positive margin lower bounded as below:

| (31) |

The proof of Theorem 14 is given in Appendix B.2. The proof is based on a variational characterization of the eigenvector of corresponding to its smallest eigenvalue, whose elements are then bounded in terms of the parameters of the graph such as the diameters and volumes of its connected components.

Theorem 14 states that an embedding learnt with the supervised Laplacian eigenmaps method makes two classes linearly separable if the weight parameter is chosen sufficiently small. In particular, the theorem shows that, for any , a choice of the weight parameter satisfying

guarantees a separation of between classes and at . Here, we use the symbol to denote the separation in the normalized coordinates . In practice, either one of the normalized eigenvectors or the original eigenvectors can be used for embedding the data. If the original eigenvectors are used, due to the relation , we can lower bound the separation as where . Thus, for any embedding of dimension , there exists a hyperplane that results in a linear separation with a margin of at least

Next, we comment on the dependence of the separation on . The inequality in (31) shows that the lower bound on the separation has a variation of with the weight parameter . The fact that the separation decreases with the increase in seems counterintuitive at first; this parameter weights the between-class dissimilarity in the objective function. This can be explained as follows. When is high, the algorithm tries to increase the distance between neighboring samples from different classes as much as possible by moving them away from the origin (remember that different classes are mapped to the positive and the negative sides of the real line). However, since the normalized coordinate vector has to respect the equality , the total squared norm of the coordinates cannot be arbitrarily large. Due to this constraint, setting to a high value causes the algorithm to map non-neighboring samples from different classes to nearby coordinates close to the origin. This occurs since the increase in reduces the impact of the first term in the overall objective and results in an embedding with a weaker link between the samples of the same class. This causes a polarization of the data and eventually reduces the separation. Hence, the parameter should be carefully chosen and should not take too large values.

Theorem 14 characterizes the separation at in terms of the distance between the supports of the two classes. Meanwhile, it is also interesting to determine the individual distances of the supports of the two classes to the origin. In the following corollary, we present a lower bound on the distance between the coordinates of any sample and the origin.

Corollary 15

The distance between the supports of the first and the second classes and the origin in a one-dimensional embedding is lower bounded in terms of the separation between the two classes as

where

Corollary 15 is proved in Appendix B.3. The proof is based on a Lagrangian formulation of the embedding as a constrained optimization problem, which then allows us to establish a link between the separation and the individual distances of class supports to the origin. The corollary states a lower bound on the portion of the overall separation lying in the negative or the positive sides of the real line. In particular, if the vertex degrees are equal for all samples in and (which is the case, for instance, if all vertices have the same number of neighbors and a constant weight of is assigned to the edges), since , the portions of the overall separation in the positive and negative sides of the real line will be equal. Although the statement of Theorem 14 is sufficient to show the existence of separating hyperplanes with positive margins for the embeddings of two classes, we will see in Section 3.3 that the separability with a hyperplane passing through the origin as in Corollary 15 is a desirable property for the extension of these results to a multi-class setting.

3.3 Separability bounds for multiple classes

In this section, we study the separability of the embeddings of multiple classes with the supervised Laplacian eigenmaps algorithm. In particular, we focus on a setting with multiple classes that can be grouped into several categories. The classes in each category are assumed to bear a relatively high resemblance within themselves, whereas the resemblance between classes from different categories is weaker. This is a scenario that is likely to be encountered in several practical data classification problems.

In the following, we study the embeddings of multiple categorizable classes. The objective matrix defining the embedding is close to a block-diagonal matrix if the between-category similarities are relatively low. Building on this observation, we present a result that links the separability of the overall embedding to the separability of the embeddings of each individual category with the same algorithm. Especially in a setting with many classes, this simplifies the problem for multiple classes and makes it possible to deduce information for the overall separation by studying the separation of the individual categories, which is easier to analyze.

We consider data samples belonging to different classes that can be categorized into groups. For the purpose of our theoretical analysis, let us focus for a moment on the individual categories and consider the embedding of the samples in each category with the supervised Laplacian eigenmaps algorithm if the data graph was constructed only within the category . Let be the -dimensional embedding of category . Assume that is separable with margin . Then for any two classes in category , there exists a hyperplane such that

| (32) |

where is the -th row of defining the coordinates of the -th data sample in category . Note that an offset of is assumed here, i.e., the classes in each category are assumed to be separable with hyperplanes passing through the origin. While this is mainly for simplifying the analysis, the studied supervised Laplacian eigenmaps algorithm in fact computes embeddings having this property in practice (the theoretical guarantee for the two-class setting being provided in Corollary 15).

Now let denote the objective matrix defining the embedding of with supervised Laplacian eigenmaps. Also, let denote the block-diagonal objective matrix where the within-class and the between-class Laplacians and are obtained by restricting the graph edges to the ones within the categories. In other words, is obtained by removing the edges between all pairs of data samples belonging to different categories.

Let denote the component of arising from the between-category data connections. In our analysis, we will treat this component as a perturbation on the block-diagonal matrix and analyze the eigenvectors of accordingly in order to study the separability of the embedding obtained with .

We will need a condition on the separation of the eigenvalues of . Let denote the minimal separation (the smallest difference) between the eigenvalues of

| (33) |

where are the eigenvalues of for . For and a random sampling of data, the eigenvalues of are expected to be distinct444Note that the within-class and the between-class Laplacians and are normalized Laplacians; therefore, the constant vector is not an eigenvector and 0 is not a repeating eigenvalue.; therefore, one can reasonably assume the minimal eigenvalue separation to be positive. The characterization of the behavior of the minimal separation of the eigenvalues depending on the graph properties is not within the scope of this study and remains as future work.

We state below our main result about the separability of the embeddings of multiple categorizable classes.

Theorem 16

Let be the matrix representing the objective function of the supervised Laplacian eigenmaps algorithm with classes categorizable into groups. Assume that is close to a block-diagonal objective matrix containing only within-category edges such that the perturbation is bounded as

in terms of the minimal eigenvalue separation of the matrix defined in (33). Let each category have a -dimensional embedding separable with margin as in (32). We define the parameters

and

Then there exists an embedding of dimension consisting of the eigenvectors of the overall objective matrix that is separable with a margin of at least

provided that .

The proof of Theorem 16 is given in Appendix B.4. The proof is based on first analyzing the separation of the embedding corresponding to the block-diagonal component of the objective matrix, and then lower bounding the separation of the original embedding in terms of the perturbation and the separation of the eigenvalues. In brief, the theorem says that if the classes are categorizable with sufficiently low between-category edge weights, and if the individual embedding of each category makes all classes in that category linearly separable, then in the embedding computed for the overall data graph with the supervised Laplacian eigenmaps algorithm, all pairs of classes (from same and different categories) are also linearly separable. This extends the linear separability of individual categories to the separability of all classes. The margin of the overall separation decreases at a rate of as the magnitude of the non-block-diagonal component of the objective matrix increases.555From the definition of the parameters ,, and in Theorem 16, we have , , . It follows that .

The dimension of the separable embedding is given by the sum of the dimensions of the individual embeddings of the categories that ensure the linear separability within each category, hence, the dimension required for linear separability must be linearly proportional to the number of categories. In order to compute the exact value of the number of dimensions required for linear separability, one needs the knowledge of the number of dimensions that ensures the separability within each category. Nevertheless, the provided result is still interesting as the theoretical or numerical analysis of individual categories is often easier than the analysis of the whole data set, since the number of classes in a particular category is more limited.666For instance, we have theoretically shown in Section 3.2 that one dimension is sufficient for obtaining a linearly separable embedding of two classes. While we do not provide a theoretical analysis for more than two classes, we have experimentally observed that data becomes linearly separable at two dimensions when the number of classes is three or four. Note that, one can also interpret the theorem by considering each class as a different category. However, in this case the edges between samples of different classes must have sufficiently low weights for the applicability of the theorem, i.e., the non-block diagonal component of the Laplacian must be sufficiently small. The examination of the general problem of embedding data with multiple non-categorizable classes and no assumptions of the edge weights between different classes seems to be a more challenging problem and remains as a future direction to study.

4 Experimental Results

In this section, we present results on synthetical and real data sets. We compare several supervised manifold learning methods and study their performances in relation with our theoretical results.

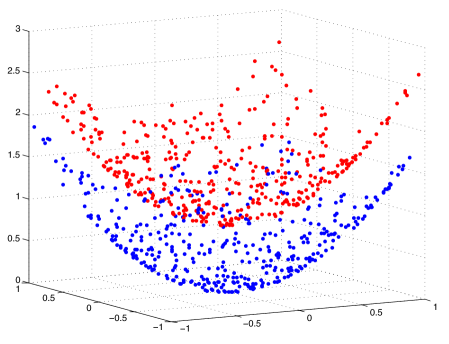

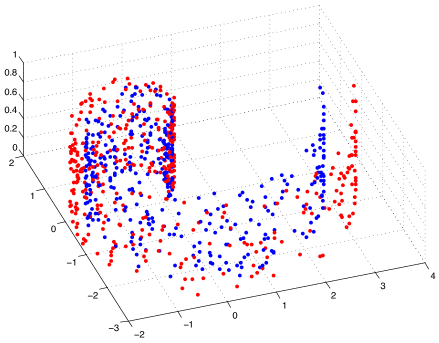

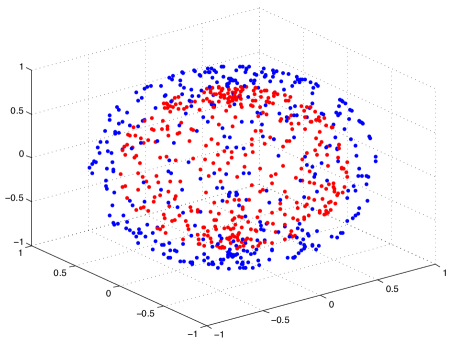

4.1 Separability of embeddings with supervised manifold learning

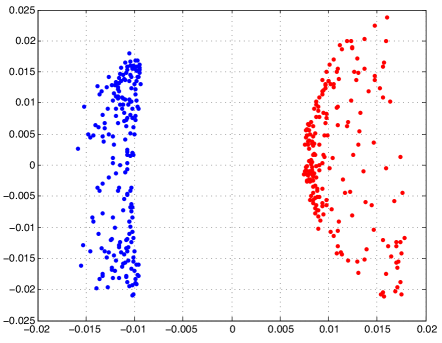

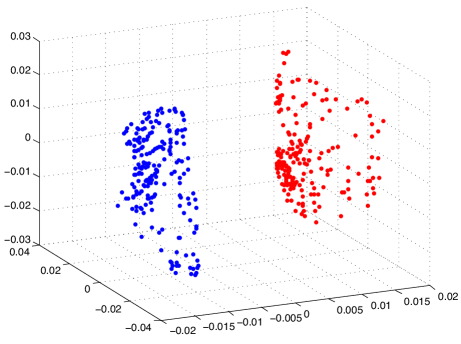

We first present results on synthetical data in order to study the embeddings obtained with supervised dimensionality reduction. We test the supervised Laplacian eigenmaps algorithm in a setting with two classes. We generate samples from two nonintersecting and linearly nonseparable surfaces in that represent two different classes. We experiment on three different types of surfaces; namely, quadratic surfaces, Swiss rolls and spheres. The data sampled from these surfaces are shown in Figure 2. We choose samples from each class. We construct the graph by connecting each sample to its -nearest neighbors from the same class, where is chosen between and . The graph is constructed similarly, where each sample is connected to its nearest neighbors from the other class. The graph weights are determined as a Gaussian function of the distance between the samples. The embeddings are then computed by minimizing the objective function in (30). The one-dimensional, two-dimensional, and three-dimensional embeddings obtained for the quadratic surface are shown in Figure 3, where the weight parameter is taken as (to have a visually clear embedding for the purpose of illustration). Similar results are obtained on the Swiss roll and the spherical surface. One can observe that the data samples that were initially linearly nonseparable become linearly separable when embedded with the supervised Laplacian eigenmaps algorithm. The two classes are mapped to different (positive or negative) sides of the real line in Figure 3(a) as predicted by Lemma 13. The separation in the 2-D and 3-D embeddings in Figure 3 is close to the separation obtained with the 1-D embedding.

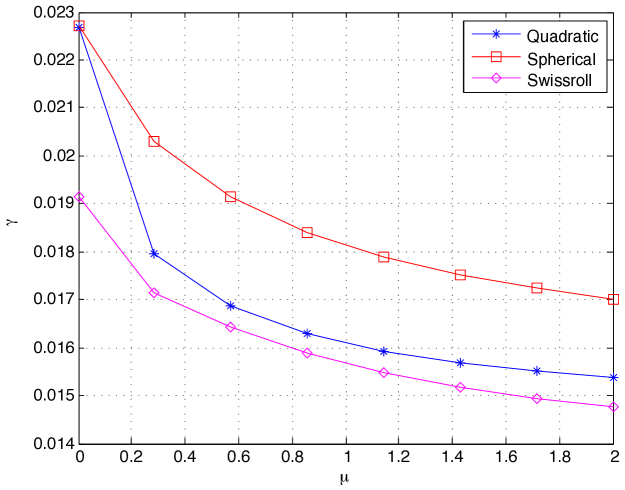

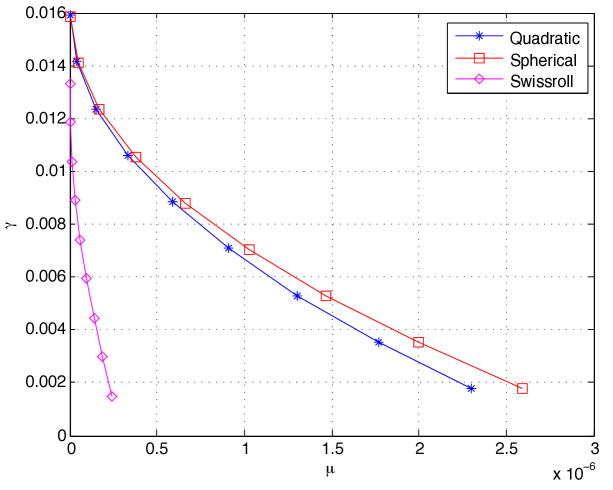

We then compute and plot the separation obtained at different values of . Figure 4(a) shows the experimental value of the separation obtained with the 1-D embedding for the three types of surfaces. Figure 4(b) shows the theoretical upper bound for in Theorem 14 that guarantees a separation of at least . Both the experimental value and the theoretical bound for the separation decrease with the increase in the parameter . This is in agreement with (31), which predicts a decrease of in the separation with respect to . The theoretical bound for the separation is seen to decrease at a relatively faster rate with for the Swiss roll data set. This is due to the particular structure of this data set with a nonuniform sampling density where the sampling is sparser away from the spiral center. The parameter then takes a small value, which consequently leads to a fast rate of decrease for the separation due to (31). Comparing Figures 4(a) and 4(b), one observes that the theoretical bounds for the separation are numerically more pessimistic than their experimental values, which is a result of the fact that our results are obtained with a worst-case analysis. Nevertheless, the theoretical bounds capture well the actual variation of the separation margin with .

4.2 Classification performance of supervised manifold learning algorithms

We now study the overall performance of classification obtained in a setting with supervised manifold learning, where the out-of-sample generalization is achieved with smooth RBF interpolators. We evaluate the theoretical results of Section 2 on several real data sets: the COIL-20 object database (Nene et al., 1996), the Yale face database (Georghiades et al., 2001), the ETH-80 object database (Leibe and Schiele, 2003), and the MNIST handwritten digit database (LeCun et al., 1998). The COIL-20, Yale face, ETH-80, and MNIST databases contain a total of 1420, 2204, 3280, and 70046 images from 20, 38, 8, and 10 image classes respectively. The images in the COIL-20, Yale and ETH-80 data sets are converted to greyscale, normalized, and downsampled to a resolution of respectively , , and pixels.

4.2.1 Comparison of supervised manifold learning to baseline classifiers

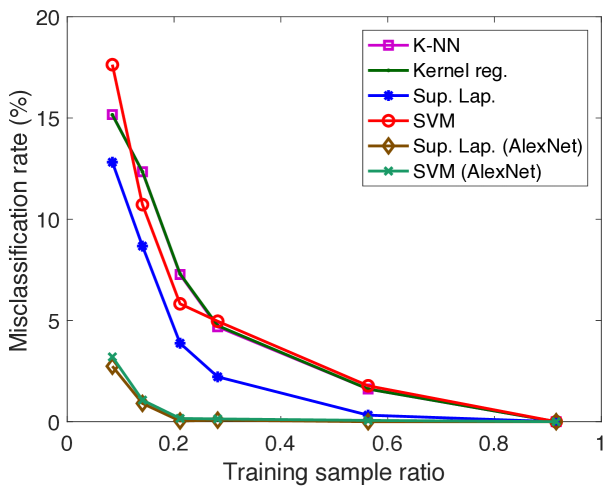

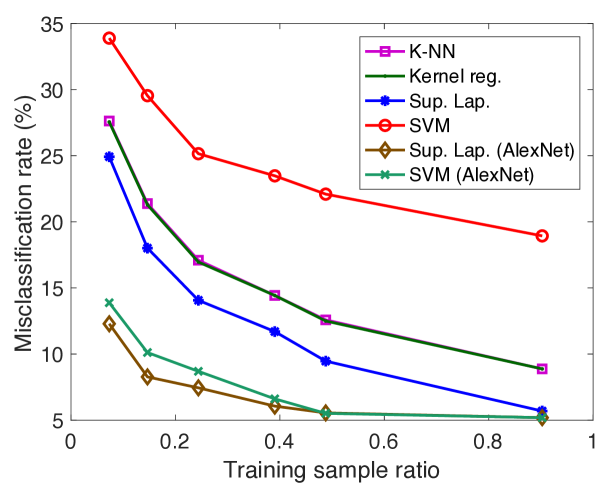

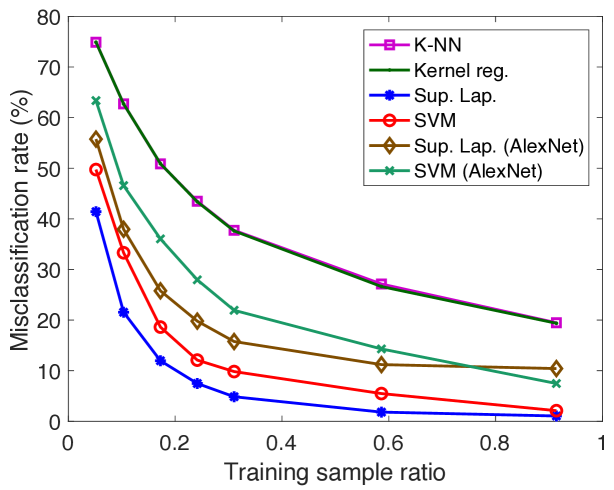

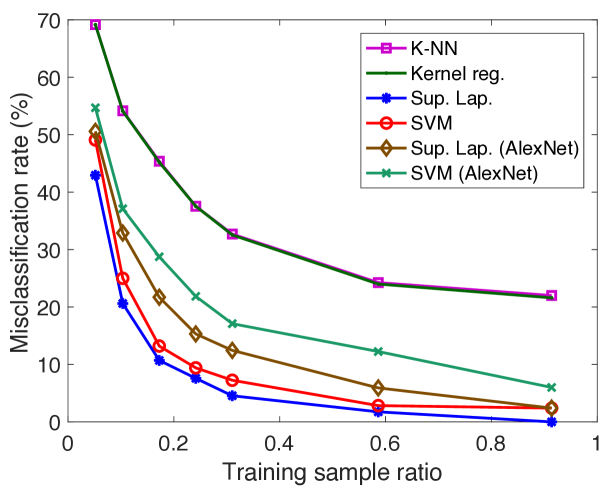

We first compare the performance of supervised manifold learning with some reference classification methods. The performances of SVM, K-NN, kernel regression, and the supervised Laplacian eigenmaps methods are evaluated and compared. Figure 5 reports the results obtained on the COIL-20 data set, the ETH-80 data set, the Yale data set, a subset of the Yale data set consisting of its first 10 classes (reduced Yale data set), and the MNIST data set. The SVM, K-NN, and kernel regression algorithms are applied in the original domain and their hyperparameters are optimized with cross-validation. In the supervised Laplacian eigenmaps method, the embedding of the training images into a low-dimensional space is computed. Then, an out-of-sample interpolator with Gaussian RBFs is constructed that maps the training samples to their embedded coordinates as described in Section 2.3. Test samples are mapped to the low-dimensional domain via the RBF interpolator and the class labels of test samples are estimated via nearest-neighbor classification in the low-dimensional domain. The supervised Laplacian eigenmaps and the SVM methods are also tested over an alternative representation of the image data sets based on deep learning. The images are provided as input to the pretrained AlexNet convolutional neural network proposed in (Krizhevsky et al., 2012), and the activation values at the second fully connected layer are used as the feature representations of the images. The feature representations of training and test images are then provided to the supervised Laplacian eigenmaps and the SVM methods. The plots in Figure 5 show the variation of the misclassification rate of test samples in percentage with the ratio of the number of training samples in the whole data set. The results are the average of 5 repetitions of the experiment with different random choices for the training and test samples.

The results in Figure 5 show that the best results are obtained with the supervised Laplacian eigenmaps algorithm in general. The performances of the algorithms improve with the number of training images as expected. In the COIL-20 and ETH-80 object data sets, the supervised Laplacian eigenmaps and the SVM algorithms yield significantly smaller error when applied to the feature representations of the images obtained with deep learning. Meanwhile, in the Yale face data set these two methods perform better on raw image intensity maps. This can be explained with the fact that the AlexNet model may be more successful in extracting useful features for object images rather than face images as it is trained on many common object and animal classes. It is interesting to compare Figures 5(c) and 5(d). While the performances of the supervised Laplacian eigenmaps and the SVM methods are closer in the reduced version of the Yale database with 10 classes, the performance gap between the supervised Laplacian eigenmaps method and the other methods is larger for the full data set with 38 classes. This can be explained with the fact that the linear separability of different classes degrades as the number of classes increases, thus causing a degradation in the performance of the classifiers in comparison. Meanwhile, the performance of the supervised Laplacian eigenmaps method is not much affected by the increase in the number of classes. The K-NN and kernel regression classifiers are seen to give almost the same performance in the plots in Figure 5. The number of neighbors is set as for the K-NN algorithm in these experiments, where it has been observed to attain its best performance; and the scale parameter of the kernel regression algorithm is optimized to get the best accuracy, which has turned out to take relatively small values. Hence the performances of these two classifiers practically correspond to that of the nearest-neighbor classifier in the original domain.

4.2.2 Variation of the error with algorithm parameters and sample size

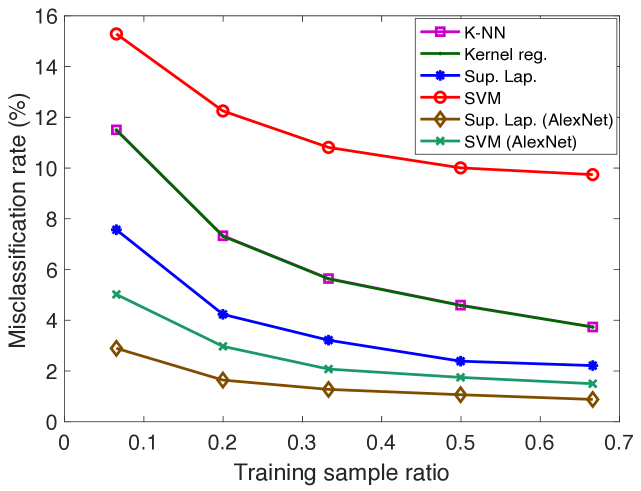

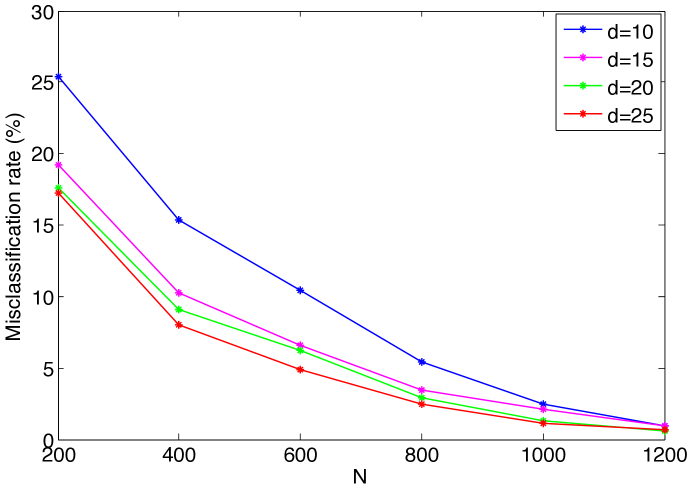

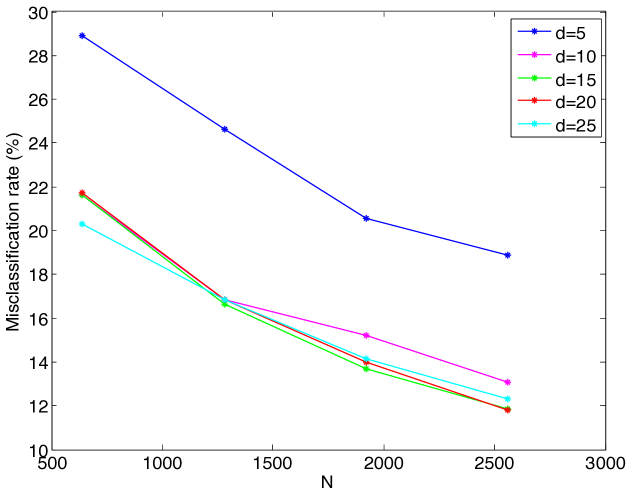

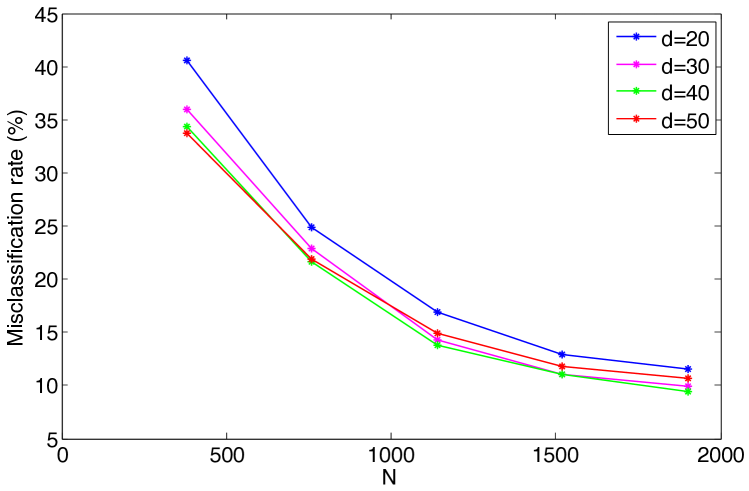

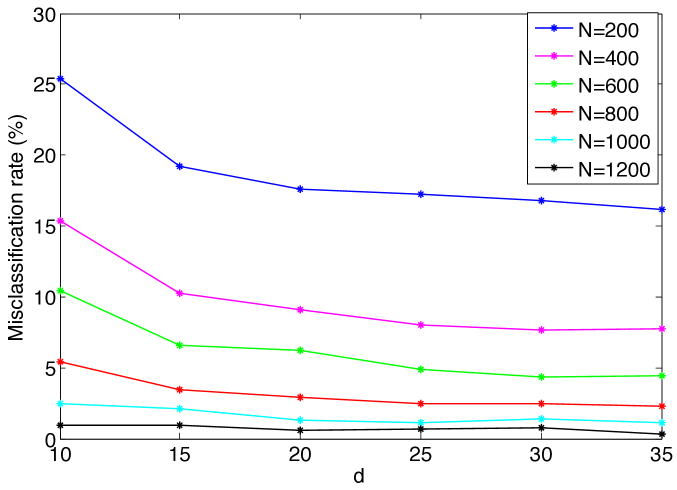

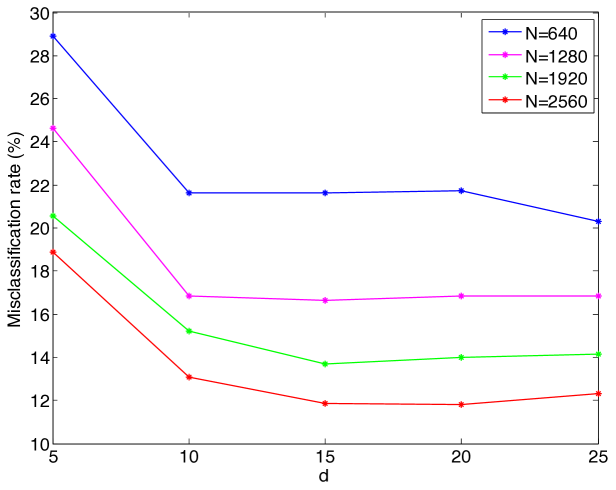

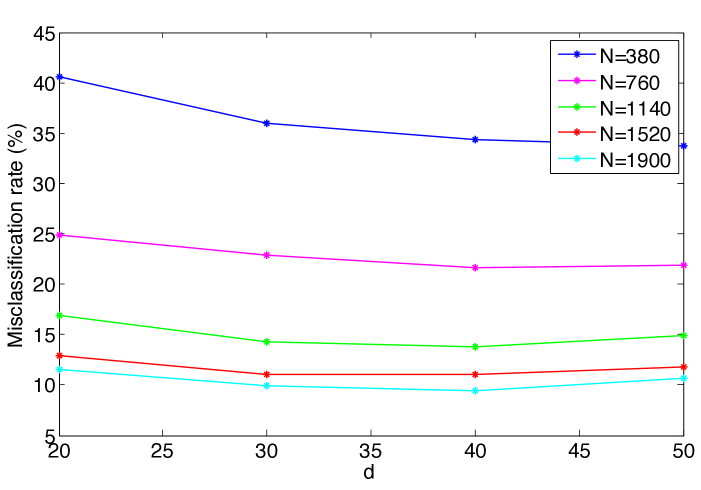

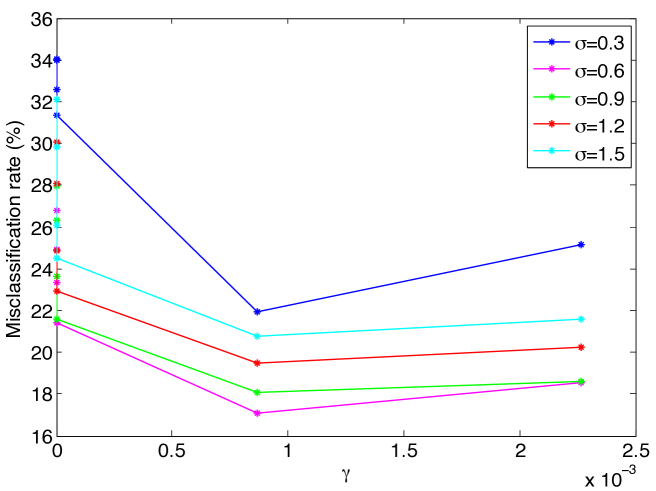

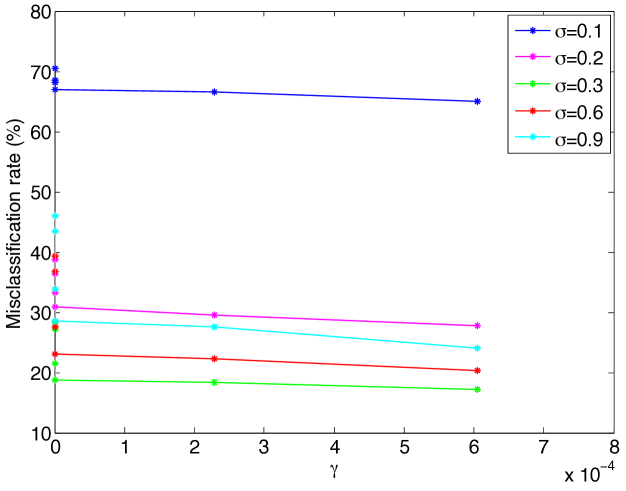

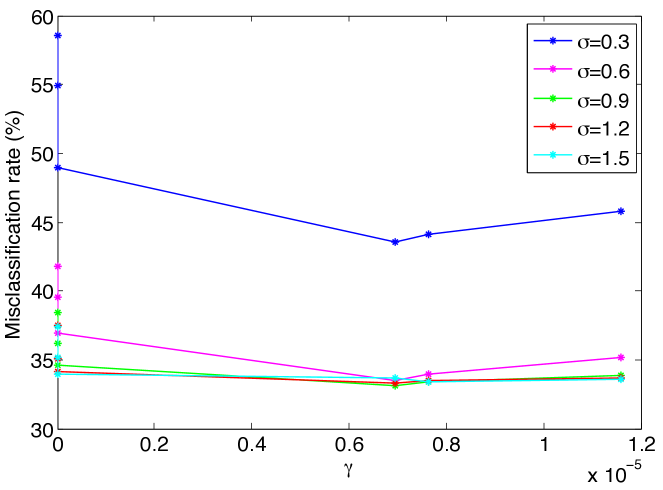

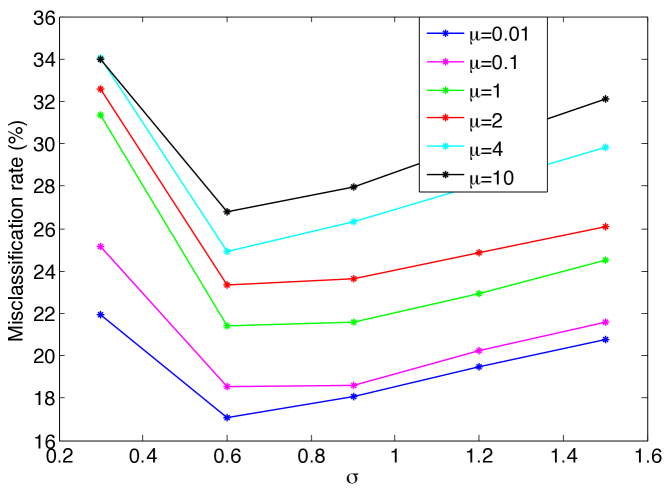

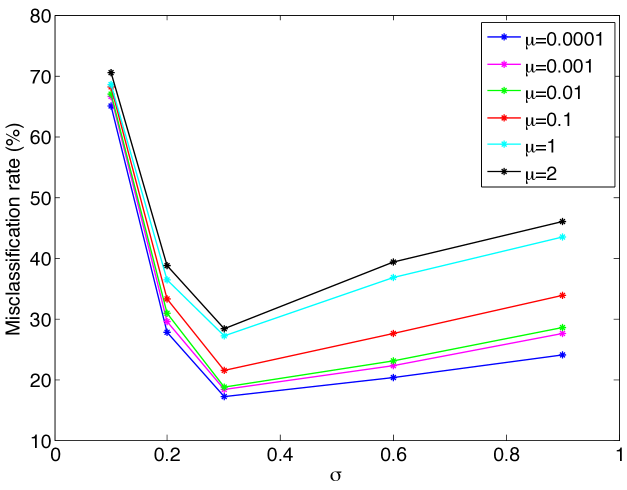

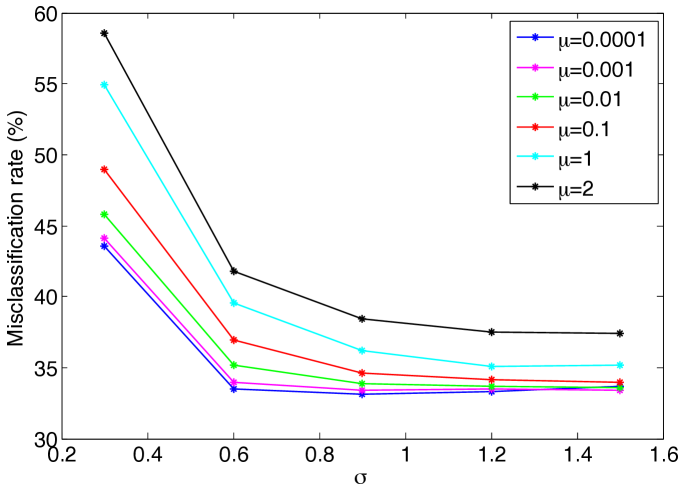

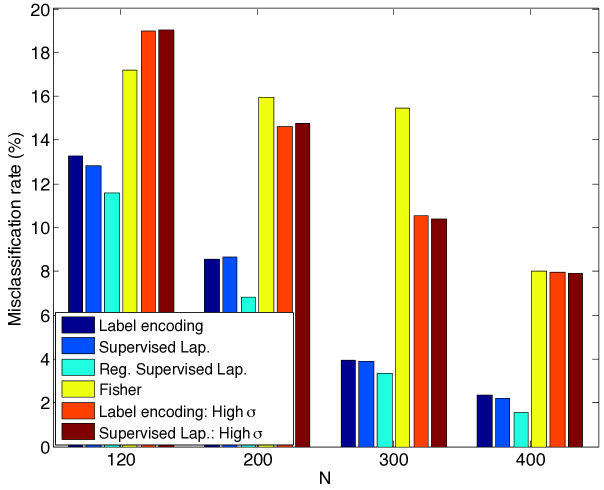

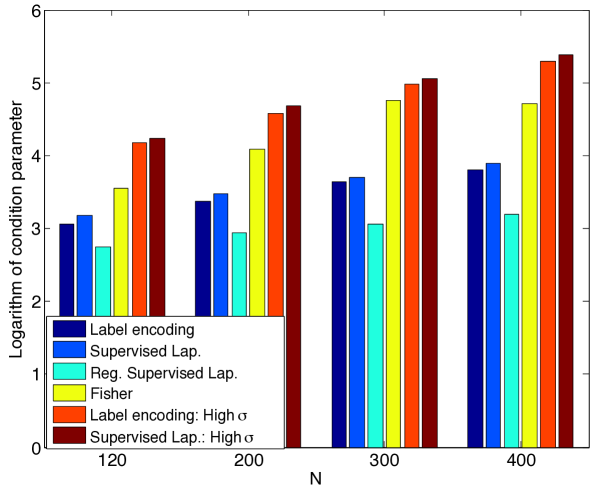

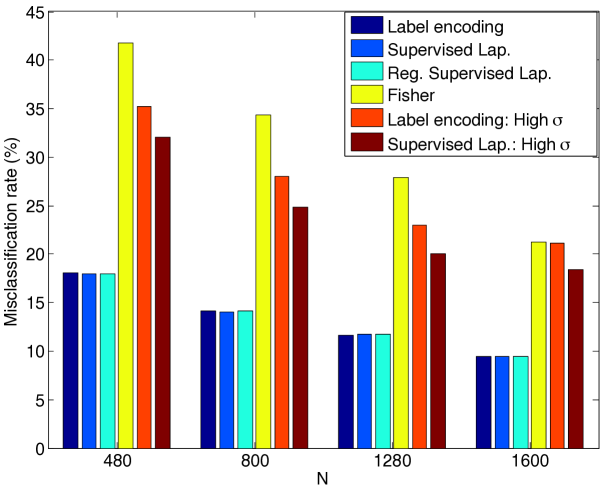

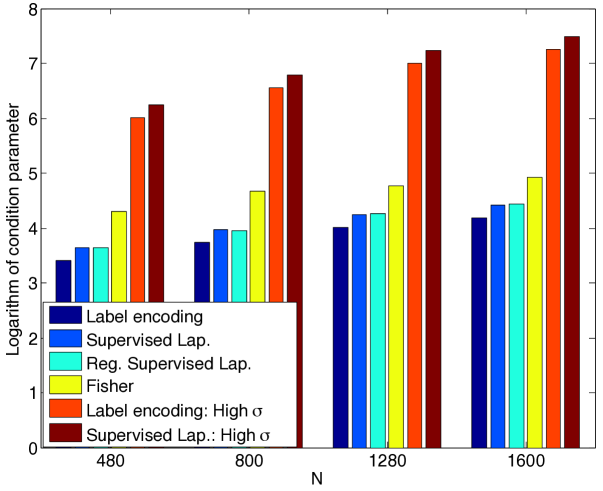

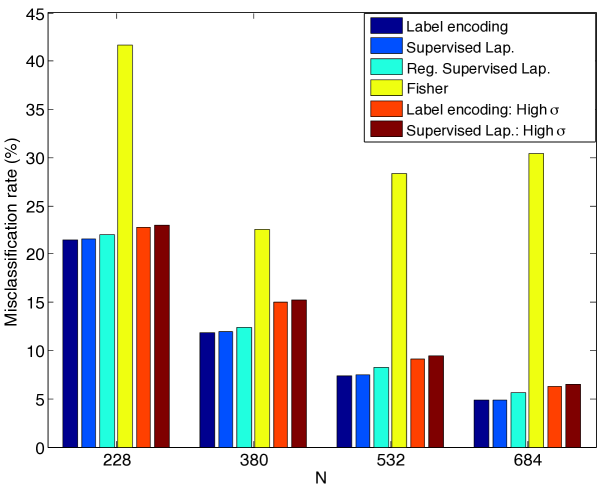

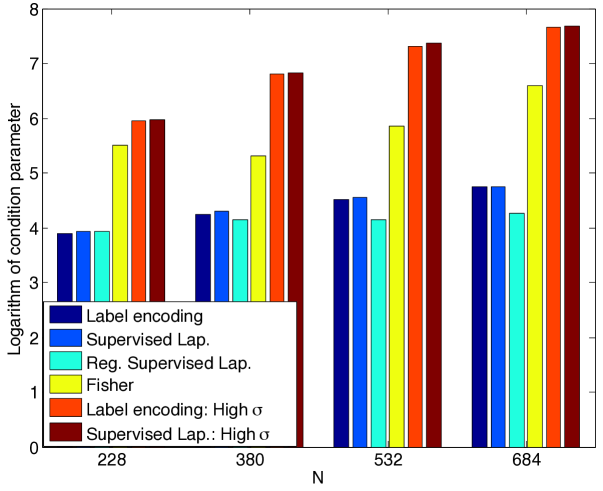

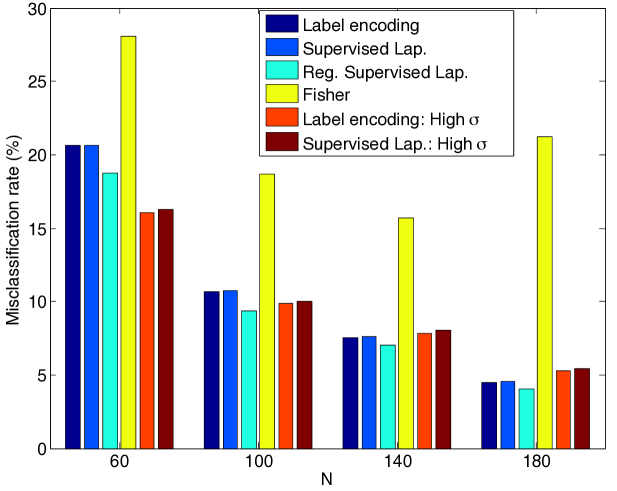

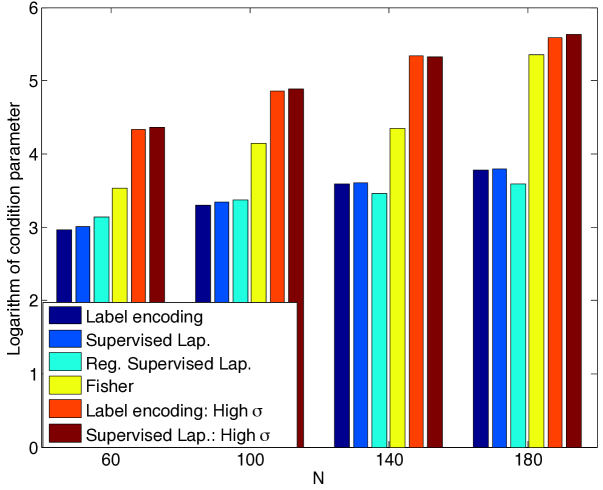

We first study the evolution of the classification error with the number of training samples. Figures 6(a)- 6(c) show the variation of the misclassification rate of test samples with respect to the total number of training samples for the COIL-20, ETH-80 and Yale data sets. Each curve in the figure shows the errors obtained at a different value of the dimension of the embedding. The decrease in the misclassification rate with the number of training samples is in agreement with the results in Section 2 as expected.

The results of Figure 6 are replotted in Figure 7, where the variation of the misclassification rate is shown with respect to the dimension of the embedding at different values. It is observed that there may exist an optimal value of the dimension that minimizes the misclassification rate. This can be interpreted in light of the conditions (14) and (16) in Theorems 8 and 9, which impose a lower bound on the separability margin in terms of the dimension of the embedding. In the supervised Laplacian eigenmaps algorithm, the first few dimensions are critical and effective for separating different classes. The decrease in the error with the increase in the dimension for small values of can be explained with the fact that the separation increases with at small , thereby satisfying the conditions (14), (16). Meanwhile, the error may stagnate or increase if the dimension increases beyond a certain value, as the separation does not necessarily increase at the same rate.