A Survey on Security Attacks and Defense Techniques for Connected and Autonomous Vehicles

Abstract

Autonomous vehicles have been transforming intelligent transportation systems. As telecommunication technology improves, autonomous vehicles are becoming connected to each other and to infrastructure, forming Connected and Autonomous Vehicles (CAVs). CAVs will help humans achieve safe, efficient, and autonomous transportation systems. However, CAVs will face significant security challenges because many of their components are vulnerable to attacks, and because CAVs communicate with one another, a successful attack on one CAV may significantly affect other CAVs and infrastructure. In this paper, we survey 184 papers published from 2000 to 2020 to understand state-of-the-art CAV attacks and defense techniques. This survey first presents a comprehensive overview of security attacks on CAVs and their corresponding countermeasures. We then discuss attack models in detail based on the targeted CAV components, access requirements, and attack motives. Finally, we identify current research challenges and trends from the perspectives of both academic research and industrial development. Based on our study of academic literature and industrial publications, we have not found any strong connection between academic research and industry's implementation on CAV-related security issues. While efforts by CAV manufacturers to secure CAVs have been reported, there is no evidence that CAVs on the market can defend against some of the novel attack models that the research community has recently found. This survey may give researchers and engineers a better understanding of the current status and trends of CAV security to guide future improvements to CAVs.

Index Terms—Connected and autonomous vehicles, Intelligent transportation system, Cybersecurity, Traffic engineering

I Introduction

Autonomous vehicles (AVs) are a fascinating and impactful application of modern technology, and they have been transforming intelligent transportation systems [1, 2]. As telecommunication technology improves, the concept of Connected Vehicles (CVs), in which vehicles communicate with one another, with road infrastructure, and with the Internet, has been realized and is often implemented together with autonomous driving [3, 4]. Many research studies in academia and industry have advanced Connected and Autonomous Vehicles (CAVs), aiming toward a safe, driverless, and efficient transportation system. These advancements have led to prominent public demonstrations of CAVs in North America, Japan, and Europe [5, 6, 7, 8]. CAVs span various levels of automation, ranging from non-automated to fully automated. In 2018, the Society of Automotive Engineers (SAE) updated its official reference describing six levels of driving automation [9]: Level 0 (no automation), Level 1 (driver assistance), Level 2 (partial automation), Level 3 (conditional automation), Level 4 (high automation), and Level 5 (full automation). In this paper, we consider automation levels 2 through 5, at which, according to the official document [9], humans are not fully involved in driving when the automated driving features are engaged (for example, they may be hands-off).

A CAV consists of many sensing components, such as laser, radar, camera, Global Positioning System (GPS), and light detection and ranging (LiDAR) [10], as well as connection mechanisms, such as cellular connection, Bluetooth, IEEE 802.11p Wireless Access in Vehicular Environments (WAVE) [11], and Wi-Fi. The sensing components enable a CAV to navigate in an environment with unknown obstacles. Using data from these sensors, a system computes a map of the surrounding environment and the vehicle's location within it, a process known as Simultaneous Localization and Mapping (SLAM) [12, 13]. Connection mechanisms improve the driving experience or enhance an autonomous driving system by providing advance knowledge and a bigger picture of the environment. Applications that use connection mechanisms include Intelligent Driver-Assistance Systems (IDAS) [14], safety features through Vehicle-to-Infrastructure communications [15], and safety features through Vehicle-to-Vehicle communications [16, 17]. While the sensing components and connection mechanisms have offered significant improvements in safety, cost, and fuel efficiency, they have also created more opportunities for cyberattacks.

Table I: Comparison of survey papers

| Survey Paper | Year Published | Reference Count | Year of Latest Reference | Focused Topic | High-level Taxonomy | Outlining Open Issues |
|---|---|---|---|---|---|---|
| Miller and Valasek [18] | 2014 | 12 | 2012 | CAVs | No | No |
| Thing and Wu [19] | 2016 | 16 | 2016 | CAVs | Yes | No |
| Haider et al. [20] | 2016 | 10 | 2015 | Global Positioning System | No | No |
| Parkinson et al. [21] | 2017 | 91 | 2016 | CAVs | No | Yes |
| Tomlinson et al. [22] | 2018 | 43 | 2018 | Controller Area Network | Yes | Yes |
| van der Heijden et al. [23] | 2018 | 126 | 2018 | Misbehavior detection in communication between CAVs | No | Yes |
| This paper | 2020 | 184 | 2020 | CAVs | Yes | Yes |

Attempts to deploy and test CAVs have been carried out in many places with the support of governments and corporations. In September 2016, the United States Department of Transportation started the Connected Vehicle Pilot Deployment Program [24, 25], providing over 45 million USD to Wyoming [26], New York City [27], and Tampa [28] to build connected vehicle programs. In the United Kingdom, the Centre for Connected and Autonomous Vehicles has invested 120 million GBP to support over 70 CAV projects, with a further 68 million GBP coming from industry contributions [29]. In China, industry officials estimated that by 2035 there will be around 8.6 million autonomous vehicles on the road, of which 5.2 million will be semi-autonomous (SAE levels 3 and 4) and 3.4 million fully autonomous (SAE level 5) [30]. In Japan, Prime Minister Shinzo Abe announced plans to deploy a fleet of thousands of autonomous vehicles to serve the 2020 Tokyo Olympics [30]. In South Korea, Hyundai Motor Group sponsored two competitions, held in 2010 and 2012, to stimulate the development of CAVs [31]. The development of CAVs is gaining significant public attention. An unfortunate side effect of this attention is that CAVs will probably become attractive targets for cyberattacks.

CAV engineers and manufacturers therefore need a systematic understanding of the cybersecurity implications of CAVs. Even though no significant cyberattack has occurred on publicly deployed CAV programs, the academic research community has discovered many potential security threats to CAVs [32]. These potential attacks would be more harmful than attacks on non-automated transportation systems because drivers may not be mentally or physically available to take over driving, and engineers and technicians may not be available immediately to recover a compromised system.

Considerable research effort has gone into identifying vulnerabilities in CAVs, recommending potential mitigation techniques, and highlighting the potential impacts of cyberattacks on CAVs and related infrastructure [33, 34, 35, 36]. Researchers have identified many vulnerabilities associated with sensors, electronic control units, and connection mechanisms. Some have even demonstrated successful cyberattacks on CAVs and CAV components that are currently being sold and operated [37, 38, 39]. Since detailed, security-focused studies of CAVs are fairly new in the literature (the majority of the technical and in-depth papers discussed in this survey were published after 2011), there is no comprehensive survey paper that uses the current literature to build a taxonomy and to identify significant gaps and challenges. For example, Miller and Valasek (2014) [18] published a survey paper on attack surfaces but did not cover defense strategies in much depth. Thing and Wu (2016) [19] proposed a taxonomy of attacks and defenses but did not point to specific examples in the literature, citing only 16 references. Other survey papers are dedicated to specific CAV components [22, 20, 23]. To our knowledge, Parkinson et al. (2017) [21] is the state-of-the-art survey paper on this topic. Parkinson et al. reviewed 89 publicly accessible publications and identified knowledge gaps in the literature. However, we found that the authors missed interesting and important papers on some attack models and defense strategies that we cover, such as those on GPS spoofing attacks [40, 41] (section III-F), defenses against LiDAR spoofing [42, 43] (section III-D), and adversarial input attacks on cameras [44, 45] (section III-G). In addition, Parkinson et al. [21] did not include any literature published after 2017.
We have tried to identify and present such technical papers, including those whose experiments were conducted not only on CAVs but also on related cyber-physical systems (e.g., unmanned aerial vehicles). In addition, our survey covers recent developments in attacks and defenses on CAVs, including three ethical hacking studies on Tesla and Baidu autonomous vehicles in 2019. A comparison of survey papers is given in Table I.

This survey paper aims to review published papers and technical reports on cybersecurity vulnerabilities and defenses of CAVs, to summarize past research efforts, to organize them into systematic groups, and to identify research gaps and challenges. We surveyed 184 papers from 2000 to 2020 about CAVs and CAV components to understand the security challenges of CAVs. The first paper related to the security of CAVs, which addressed secure software updates, was published in 2005 [46]. Since then, we have observed a generally increasing number of publications on this issue: from 2015 to 2019, we counted 8, 11, 14, 17, and 12 papers on CAV-related security issues in each respective year. We hope that our work can inform current and aspiring researchers and engineers about the security issues of CAVs as well as state-of-the-art defense and mitigation techniques, and that it can motivate other researchers to address the cybersecurity challenges facing the development of CAVs. We acknowledge that research in this area is growing rapidly and that some achievements from academia and industry may have been overlooked or not yet published. Based on the literature we reviewed, we observe that many vulnerabilities do not yet have adequately tested solutions. Given the vast investment and rapid changes in the CAV industry, some individuals and corporations may disagree with our observations in this article; we welcome any debate and criticism that contributes to the growth of the community.

The remainder of this paper is organized as follows. Section II describes the taxonomies of attacks and defenses according to the components of CAVs, which are also explained in detail. This section provides a brief overview of the attack and defense techniques that have appeared in the existing literature so that readers without technical experience can gain a high-level understanding of them. Section III discusses the attack techniques, their corresponding mitigation and defense techniques, the challenges of defense, and the gaps between attack models and defense techniques. In Section IV, we identify trends, challenges, and open issues in academic research and industry developments.

II Taxonomy of Attacks and Defenses

The purpose of this section is to provide a high-level overview of the types of attacks and defenses that have been discussed for CAVs. In this section, we attempt to classify attack models and defense strategies based on their characteristics, but do not provide technical details. Instead, technical details of attack models and defense strategies with their corresponding references are presented in section III. Figures 3 and 4, which are presented in section II to point readers to related parts in section III, may help readers navigate easily between the two sections.

II-A Taxonomy of Attacks

In this section, we discuss the CAV components whose vulnerabilities have been found in the literature and provide a high-level overview of the attack models.

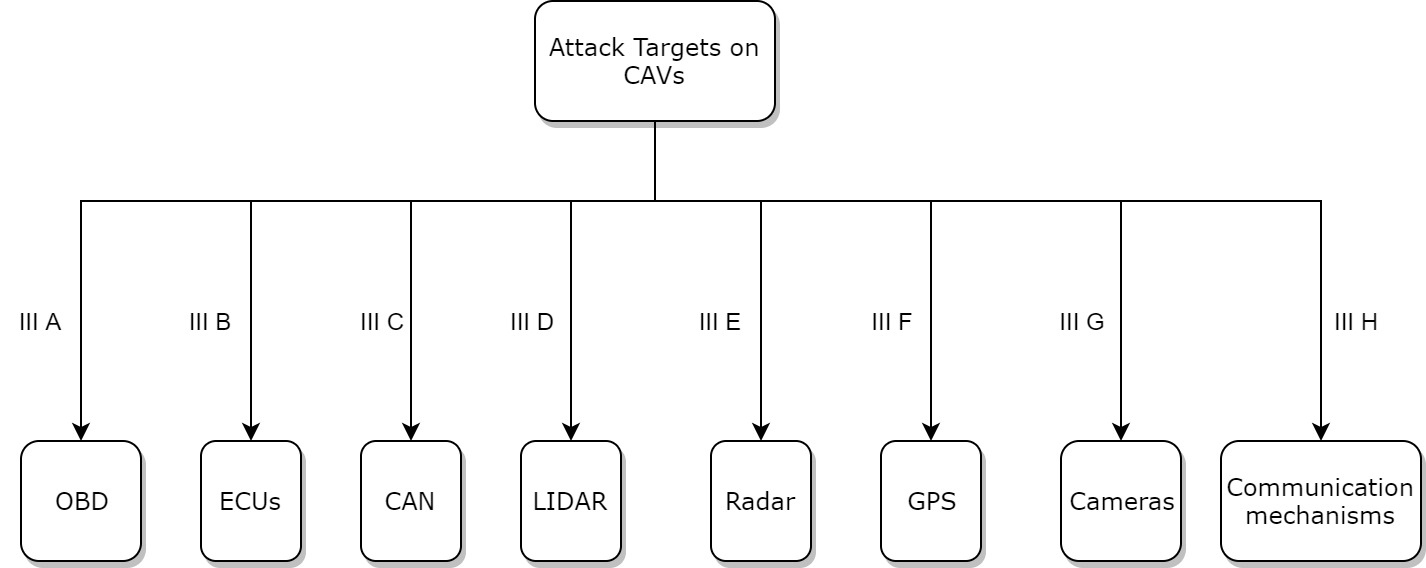

II-A1 Attack targets

As discussed in section I, a typical CAV consists of many sensor components and connection mechanisms that work together to make the vehicle function. Compromising or tampering with any of these components may destabilize a CAV and serve the attacker's goals, such as stealing information or causing property damage and bodily injury. In this subsection, we describe CAV components that have been targeted by cyber attackers in the literature. Some attack models have been demonstrated as realistic threats, while others have only been discussed theoretically. While section II classifies attacks and defenses, section III discusses attack models and defense strategies in detail, with their citations. Figure 1 summarizes the attack targets along with their corresponding subsections in section III, where readers can find detailed discussions of the attack models and mitigation techniques along with their references.

On-Board Diagnostics (OBD) port is a connection port that anyone can use to collect information about a vehicle's emissions, mileage, speed, and components. There are two OBD standards, namely OBD-I and OBD-II. OBD-I was introduced in 1987 but had many flaws, so it was replaced by OBD-II, introduced in 1996 [47]. An OBD-II port can be found in almost every modern vehicle, and CAVs are no exception. Figure 2 shows the OBD port on a Tesla Model X (SAE level 2). Modern OBD ports can provide real-time data [48]. OBD also provides a pathway to acquire data from a CAV's Electronic Control Units and possibly to modify the software embedded in those units. Many manufacturers also use OBD ports to perform firmware updates [33]. Attack models on OBD ports and their corresponding mitigation techniques are discussed in section III-A.
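To make the point about real-time data concrete, the following minimal Python sketch decodes raw OBD-II Mode 01 (current data) responses for three standard parameter IDs (PIDs): engine speed (0x0C), vehicle speed (0x0D), and coolant temperature (0x05), using the scaling formulas from the public SAE J1979 PID list. The function name `decode_mode01` and the sample frames are hypothetical, and the transport layer a real tool needs (for example, ISO 15765-4 over CAN) is omitted.

```python
"""Decode a few standard OBD-II Mode 01 responses (illustrative sketch only)."""

MODE01_RESPONSE = 0x41  # a Mode 01 request is answered with service ID 0x41


def decode_mode01(frame: bytes) -> tuple[str, float]:
    """Return (quantity, value) for a Mode 01 response payload.

    `frame` is assumed to start with the response service ID (0x41),
    followed by the PID and its data bytes A, B, ...
    """
    if len(frame) < 3 or frame[0] != MODE01_RESPONSE:
        raise ValueError("not a Mode 01 response")
    pid, data = frame[1], frame[2:]
    if pid == 0x0C:  # engine speed: ((256 * A) + B) / 4 rpm
        return "engine_rpm", (256 * data[0] + data[1]) / 4
    if pid == 0x0D:  # vehicle speed: A km/h
        return "speed_kmh", float(data[0])
    if pid == 0x05:  # engine coolant temperature: A - 40 degrees C
        return "coolant_c", float(data[0] - 40)
    raise NotImplementedError(f"PID 0x{pid:02X} is not handled in this sketch")


if __name__ == "__main__":
    print(decode_mode01(bytes([0x41, 0x0D, 0x3C])))        # vehicle speed frame
    print(decode_mode01(bytes([0x41, 0x0C, 0x1A, 0xF8])))  # engine speed frame
```

Nothing in these request/response exchanges identifies or authenticates the party asking, which is why any device plugged into the port can read such data.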

Electronic Control Units (ECUs) are embedded electronic systems that control other subsystems in a vehicle. All modern vehicles use ECUs to control vehicular functions by acquiring electronic signals from other components, processing them, and sending control signals. Some important ECUs are the Brake Control Module, the Engine Control Module, Tire-pressure Monitor Systems, and Inertial Measurement Units, which function as follows. The Brake Control Module collects data from wheel-speed sensors and the brake system and processes the data in real time to determine whether to release braking pressure [49]. The Engine Control Module controls fuel, air, and spark, and collects data from many sensors around the vehicle to ensure that all components are within their normal operating ranges [49]. Tire-pressure Monitor Systems collect data from sensors within the tires and determine whether tire pressures are at ideal levels. The United States has legally required all vehicles to be equipped with Tire-pressure Monitor Systems since 2007 [50], and the European Union issued the same regulation in 2012 [51]. Inertial Measurement Units collect data from accelerometers, magnetometers, and gyroscopes and calculate the vehicle's velocity, acceleration, angular rate, and orientation. These calculations are pivotal for CAVs because they serve as inputs for a safe automated driving system [52]. For example, a change in road gradient changes a CAV's angular rate and orientation, and the automated driving system may adjust the vehicle's speed to maintain safe operation. CAVs involve more ECUs than vehicles with little or no automation because they possess many more sensors and require many more calculations to make autonomous driving decisions. Readers may think of ECUs in CAVs as mini-computers, each carrying out a specific role and collaborating with the others to perform autonomous driving. Complex collaborations between ECUs are common [34]. Attack models and defense strategies on ECUs are discussed in section III-C. Communications between ECUs take place over Controller Area Networks, which are discussed next.
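The orientation calculation behind the road-gradient example can be sketched as follows. This minimal Python sketch estimates pitch, and hence road grade, from a single accelerometer reading, assuming the vehicle is stationary or moving at constant velocity so that gravity is the only force sensed. The axis convention (x forward, z up, nose-up pitch positive) and the function names are our assumptions. Production IMU software typically fuses gyroscope and accelerometer data (for example, with a Kalman or complementary filter), because accelerometer-only estimates are corrupted whenever the vehicle accelerates.

```python
import math

G = 9.80665  # standard gravity, m/s^2


def pitch_from_accel(ax: float, az: float) -> float:
    """Estimate pitch in degrees from a static accelerometer reading.

    At rest on a slope of angle theta, the accelerometer senses the reaction
    to gravity: ax = g * sin(theta) along the forward axis and
    az = g * cos(theta) along the vertical axis, so theta = atan2(ax, az).
    """
    return math.degrees(math.atan2(ax, az))


def grade_percent(pitch_deg: float) -> float:
    """Convert a pitch angle to road grade in percent (rise over run * 100)."""
    return 100.0 * math.tan(math.radians(pitch_deg))


if __name__ == "__main__":
    theta = math.radians(5.0)  # synthetic reading on a 5-degree uphill slope
    ax, az = G * math.sin(theta), G * math.cos(theta)
    p = pitch_from_accel(ax, az)
    print(f"pitch = {p:.2f} deg, grade = {grade_percent(p):.1f} %")
```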

Controller Area Network (CAN). ECUs are typically connected through a CAN, the central network in a vehicle that lets ECUs communicate with each other. A CAN bus is typically structured as a two-wire, half-duplex network that supports high-speed communication [53]. The greatest benefits of CANs are the small amount of wiring required and the ingenious prevention of message loss and message collision [53]. In CAVs, network packets are broadcast to all nodes on the network, and the packets contain neither an authentication field nor a source identification field [19]. Therefore, a compromised node can collect all data transferred over the network and broadcast malicious data to other nodes, making the entire CAN vulnerable to cyberattacks. Attack models and defense strategies on CANs are discussed in section III-B.
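The broadcast, unauthenticated nature of CAN described above can be illustrated with a toy model. In the Python sketch below, which is a simplification rather than a bus-accurate simulation, every frame reaches every node, receivers filter only on the identifier, and arbitration favors the lowest identifier. All class names and the identifier 0x0C4 are hypothetical. A frame forged by a compromised node is therefore indistinguishable from a legitimate one.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CanFrame:
    """Simplified classical CAN data frame.

    There is no sender or authentication field: the identifier names the
    message type, not the node that transmitted it.
    """
    can_id: int
    data: bytes

    def __post_init__(self) -> None:
        if len(self.data) > 8:
            raise ValueError("classical CAN carries at most 8 data bytes")


@dataclass
class Ecu:
    name: str
    accepted_ids: set[int]
    inbox: list[CanFrame] = field(default_factory=list)

    def receive(self, frame: CanFrame) -> None:
        # Acceptance filtering looks only at the identifier.
        if frame.can_id in self.accepted_ids:
            self.inbox.append(frame)


class Bus:
    """Broadcast medium: every frame reaches every attached node."""

    def __init__(self) -> None:
        self.nodes: list[Ecu] = []

    def attach(self, ecu: Ecu) -> None:
        self.nodes.append(ecu)

    def broadcast(self, frame: CanFrame) -> None:
        for node in self.nodes:
            node.receive(frame)

    @staticmethod
    def arbitrate(pending: list[CanFrame]) -> CanFrame:
        # Dominant (0) bits win bitwise arbitration, so the lowest identifier is sent first.
        return min(pending, key=lambda f: f.can_id)


if __name__ == "__main__":
    WHEEL_SPEED_ID = 0x0C4  # hypothetical identifier, not taken from any real vehicle
    bus = Bus()
    brake = Ecu("brake_module", {WHEEL_SPEED_ID})
    bus.attach(brake)
    bus.broadcast(CanFrame(WHEEL_SPEED_ID, bytes([50])))  # from the genuine sensor node
    bus.broadcast(CanFrame(WHEEL_SPEED_ID, bytes([0])))   # forged by a compromised node
    # The brake module accepted both frames and has no field to tell them apart.
    print(len(brake.inbox))
```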

Sensors. The following sensors are crucial to CAVs and are found in most of them. The vulnerabilities of each sensor discussed below are covered in section III.

- Light Detection And Ranging (LiDAR) sensors use light to measure the distance to surrounding objects. They emit light waves to probe the surrounding environment and make measurements based on the reflected signals [54]. The wavelength varies to suit the purpose, ranging from about 10 micrometers (infrared light) to approximately 250 nanometers (ultraviolet light) [54]. In CAVs, LiDAR is often used for obstacle detection to navigate safely through environments and is often implemented with rotating laser beams [55]. Software embedded in ECUs can use LiDAR data to determine whether there are obstacles in the environment, and autonomous emergency braking systems can use it as well [56]. Attacks and defense techniques on LiDAR are described in section III-D.

- Radio Detection and Ranging (Radar) sensors send out electromagnetic waves in the radio or microwave domain and sense the reflected signals to detect objects and measure their distance and speed. Radars are useful in many CAV applications. For example, short-range radars enable blind-spot monitoring [57], lane-keeping assistance [58], and parking aids [59], while long-range radars assist in automatic distance control [60] and brake assistance [61]. Attacks and defense techniques on radars are described in section III-E.

- Global Positioning System (GPS) is a satellite-based navigation system that is funded and owned by the United States government and operated and maintained by the United States Air Force [62]. It relies on satellites in Earth orbit that transmit radio signals, which can be received by many devices, such as smartphones and the GPS receivers in CAVs. A receiver that acquires signals from at least four satellites can compute its three-dimensional position (three suffice if its altitude is already known), because it must solve for its own clock error as well as its coordinates. Since finding a route between two locations is necessary for autonomous driving, GPS signals are critical to CAVs. GPS receivers can operate without any communication channel such as a wireless network, but data from wireless networks can often improve their accuracy [63]. Since civilian GPS signals contain no data that can directly authenticate their source, GPS receivers are vulnerable to jamming and spoofing attacks. These attacks and mitigation techniques are described in section III-F.

- Cameras (image sensors) are widely used in CAVs. Autonomous and semi-autonomous vehicles (SAE level 2 and above) rely on cameras mounted at many positions to acquire a 360-degree view around the vehicle. Cameras provide information for important autonomous tasks such as traffic sign recognition [64, 65, 66] and lane detection [67, 68]. Cameras can also replace LiDAR for object detection and distance measurement at a lower cost, but they perform poorly in conditions such as rain, fog, or snow [69]. Together with LiDAR and radars, cameras provide abundant and diverse data for autonomous driving. Attack models on cameras and mitigation techniques are described in section III-G.
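Of the sensors listed above, LiDAR and radar both infer distance from the round-trip time of an emitted pulse, and radar can additionally infer relative speed from the Doppler shift of the echo. The minimal Python sketch below shows these two textbook relations; the 77 GHz carrier is an illustrative value from the common automotive radar band, and the function names are ours. The last function hints at why spoofing works (sections III-D and III-E): an attacker who emits a fake echo with a chosen delay makes the sensor report an object at a distance of the attacker's choosing.

```python
"""Time-of-flight ranging and Doppler speed, as used by LiDAR and radar (simplified)."""

C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_range(round_trip_s: float) -> float:
    """Distance to a reflector from the round-trip time of a light or radio pulse.

    The pulse travels out and back, so the one-way distance is half of c * t.
    """
    return C * round_trip_s / 2.0


def doppler_speed(shift_hz: float, carrier_hz: float) -> float:
    """Radial closing speed from the Doppler shift of a radar echo.

    For a reflected wave, shift = 2 * v * carrier / c, so v = shift * c / (2 * carrier).
    """
    return shift_hz * C / (2.0 * carrier_hz)


def spoof_delay(fake_range_m: float) -> float:
    """Echo delay an attacker would have to emulate to fake an object at fake_range_m."""
    return 2.0 * fake_range_m / C


if __name__ == "__main__":
    print(f"LiDAR echo after 200 ns -> {tof_range(200e-9):.2f} m")
    print(f"77 GHz radar, 10 kHz shift -> {doppler_speed(10e3, 77e9):.2f} m/s")
    print(f"fake object at 5 m needs a {spoof_delay(5.0) * 1e9:.1f} ns echo delay")
```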

Connection Mechanisms in CAVs can be divided into vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) networking. V2V communications exchange data between nearby vehicles and can quickly supplement the data a CAV has already collected about its surrounding environment, leading to safer and more efficient autonomous driving. V2I communications exchange data between CAVs and road infrastructure, which provides a bigger picture of the transportation system, such as smart traffic signs (which do not require image recognition) and safety warnings over a large region [70]. V2V communications often follow the Vehicular Ad-hoc NETwork (VANET) paradigm, in which each vehicle acts as a network node and can independently interact with other nodes through a wireless connection [71]. The wireless connections used are often dedicated short-range communications (DSRC) and cellular networks [72]. A well-known example of DSRC is IEEE 802.11p Wireless Access in Vehicular Environments (WAVE) [11, 73], which Kenney (2011) [74] describes in detail. V2I communications are often achieved using cellular networks, for which Long-Term Evolution (LTE) is the current standard [72]. Attack models on V2V and V2I networks and defense techniques are discussed in section III-H.

II-A2 Classifications of Attack Models

We can categorize the attack models, described in detail in section III, by their access requirements and by their motives.

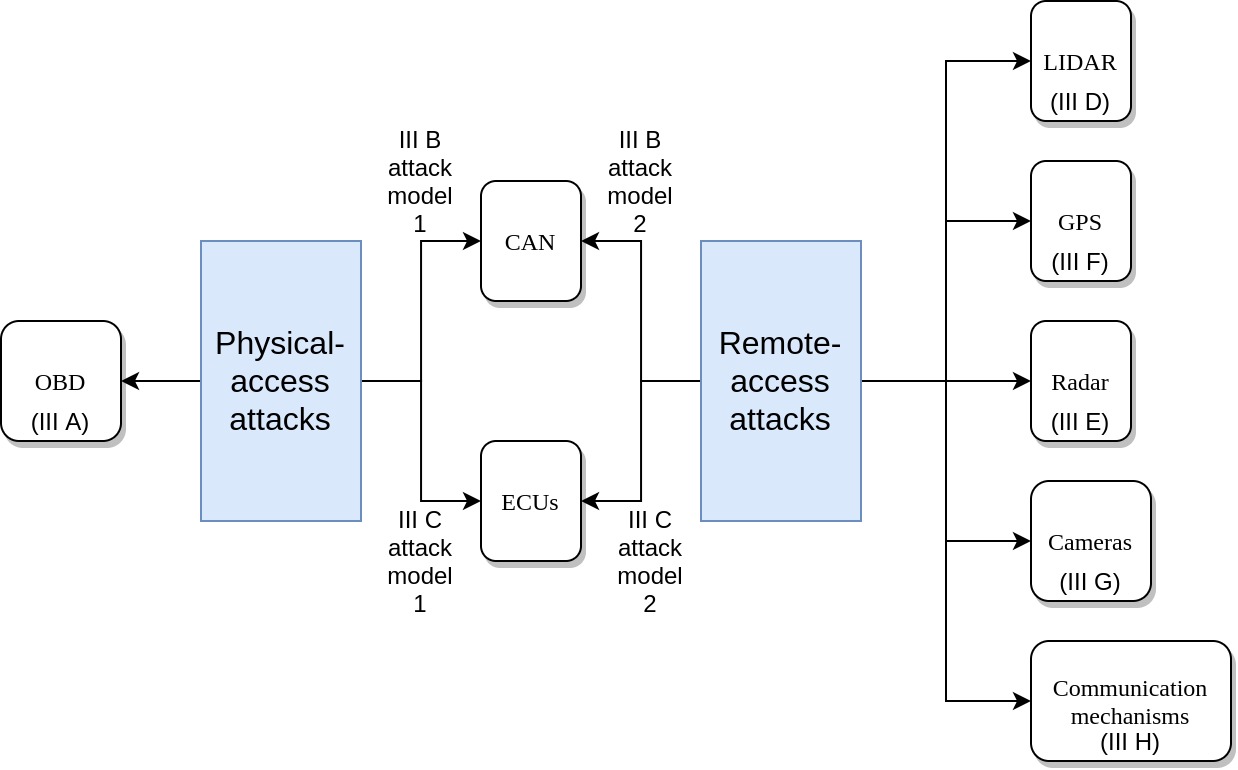

Access Requirement: attack models can be performed remotely (remote-access attacks) or can only be performed with physical access to CAV components (physical-access attacks).

- Remote-access attacks: Attackers do not need to physically modify parts of CAVs or attach instruments to them; attacks can be launched from a distance, such as from another vehicle. Three common patterns for remote-access attacks are sending counterfeit data, blocking signals, and collecting confidential data. Examples of sending counterfeit data can be found in section III-D-Attack Model 1, section III-E-Attack Model 1, section III-F-Attack Model 1, and section III-G-Attack Model 2. Examples of attacks that block signals are described in section III-D-Attack Model 2, section III-E-Attack Model 2, section III-F-Attack Model 2, and section III-G-Attack Model 1. Examples of attacks that collect confidential data can be found in section III-H-Attack Model 1. Readers may refer to these sections for more details.

- Physical-access attacks: Attackers need to physically modify components of CAVs or attach instruments to them. Examples of these attacks are reprogramming ECUs (section III-A and section III-C-Attack Model 1) and falsifying input data (section III-B-Attack Model 1). Physical-access attacks are more difficult to carry out because attackers risk being detected while tampering with CAVs.

For easier navigation through this paper, a summary of access requirements to perform attacks on the aforementioned CAV components is presented in Figure 3, annotated with the corresponding parts in section III for more details. CAN and ECUs can be targets for both remote-access and physical-access attacks.

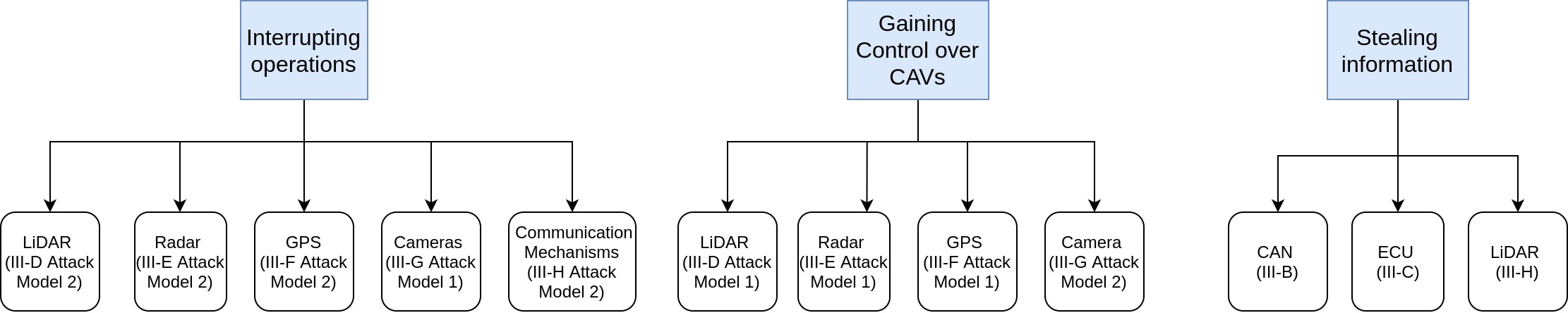

Attack Motivations: Three common attack motivations are to interrupt CAVs' operation (without gaining control), to control CAVs as the attackers wish, and to steal information.

- Interrupting operations: attackers aim to corrupt CAV components that are important for autonomous driving, making the autonomous driving mode unavailable. These attacks are analogous to Denial-of-Service attacks on networks. Examples can be found in section III-D-Attack Model 2, section III-E-Attack Model 2, section III-F-Attack Model 2, section III-G-Attack Model 1, and section III-H-Attack Model 2.

- Gaining control over CAVs: attackers gain sufficient control over CAVs to alter the vehicles' movements, for example by changing a vehicle's route, forcing emergency braking, or changing its speed. Examples of these attacks can be found in section III-D-Attack Model 1, section III-E-Attack Model 1, section III-F-Attack Model 1, and section III-G-Attack Model 2.

- Stealing information: attackers aim to collect important and/or confidential information from CAVs, which may be used for further attacks. Examples of this type of attack can be found in section III-B, section III-C, and section III-H-Attack Model 1.

For easier navigation through this paper, a summary of attack motives is presented in Figure 4, annotated with the corresponding parts in section III for more details.

Finally, for each attack model described in section III, we show its targeted CAV component, access requirement, and attack motives in Table II.

Table II: Targeted CAV component, access requirement, and attack motives for each attack model in section III

| Sub-section in section III | Targeted CAV component | Access requirement | Attack motives |
|---|---|---|---|
| III-A | OBD | Physical | Interrupting operations, Gaining control over CAVs, Stealing information |
| III-B Attack Model 1 | CAN | Physical | Interrupting operations, Gaining control over CAVs, Stealing information |
| III-B Attack Model 2 | CAN | Remote | Interrupting operations, Gaining control over CAVs, Stealing information |
| III-C Attack Model 1 | ECU | Physical | Interrupting operations, Gaining control over CAVs, Stealing information |
| III-C Attack Model 2 | ECU | Remote | Interrupting operations, Gaining control over CAVs, Stealing information |
| III-D Attack Model 1 | LiDAR | Remote | Gaining control over CAVs |
| III-D Attack Model 2 | LiDAR | Remote | Interrupting operations |
| III-E Attack Model 1 | Radar | Remote | Gaining control over CAVs |
| III-E Attack Model 2 | Radar | Remote | Interrupting operations |
| III-F Attack Model 1 | GPS | Remote | Gaining control over CAVs |
| III-F Attack Model 2 | GPS | Remote | Interrupting operations |
| III-G Attack Model 1 | Camera | Remote | Interrupting operations |
| III-G Attack Model 2 | Camera | Remote | Gaining control over CAVs |
| III-H Attack Model 1 | Connection Mechanism | Remote | Stealing information |
| III-H Attack Model 2 | Connection Mechanism | Remote | Interrupting operations |
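For readers who want to filter this taxonomy programmatically, a subset of the rows of Table II (sections III-A through III-F, for brevity) can be encoded as a small data structure and queried by access requirement and motive. This is an illustrative Python sketch; the type, constant, and function names are hypothetical.

```python
from typing import NamedTuple, Optional

INT = "Interrupting operations"
CTRL = "Gaining control over CAVs"
STEAL = "Stealing information"
ALL_MOTIVES = frozenset({INT, CTRL, STEAL})


class AttackModel(NamedTuple):
    subsection: str       # label used in section III
    target: str           # targeted CAV component
    access: str           # "Physical" or "Remote"
    motives: frozenset    # subset of the three motives above


# Rows of Table II for sections III-A to III-F.
TABLE_II = [
    AttackModel("III-A", "OBD", "Physical", ALL_MOTIVES),
    AttackModel("III-B Attack Model 1", "CAN", "Physical", ALL_MOTIVES),
    AttackModel("III-B Attack Model 2", "CAN", "Remote", ALL_MOTIVES),
    AttackModel("III-C Attack Model 1", "ECU", "Physical", ALL_MOTIVES),
    AttackModel("III-C Attack Model 2", "ECU", "Remote", ALL_MOTIVES),
    AttackModel("III-D Attack Model 1", "LiDAR", "Remote", frozenset({CTRL})),
    AttackModel("III-D Attack Model 2", "LiDAR", "Remote", frozenset({INT})),
    AttackModel("III-E Attack Model 1", "Radar", "Remote", frozenset({CTRL})),
    AttackModel("III-E Attack Model 2", "Radar", "Remote", frozenset({INT})),
    AttackModel("III-F Attack Model 1", "GPS", "Remote", frozenset({CTRL})),
    AttackModel("III-F Attack Model 2", "GPS", "Remote", frozenset({INT})),
]


def models_where(access: Optional[str] = None, motive: Optional[str] = None) -> list[str]:
    """Return subsection labels matching the given access requirement and/or motive."""
    return [
        m.subsection
        for m in TABLE_II
        if (access is None or m.access == access)
        and (motive is None or motive in m.motives)
    ]


if __name__ == "__main__":
    print(models_where(access="Physical"))
    print(models_where(access="Remote", motive=CTRL))
```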

II-B Taxonomy of Defenses

In this section, we attempt to organize the defense techniques into categories that share certain patterns. Some defense categories are generally effective against certain types of attacks, but we suggest that readers study defense techniques on a case-by-case basis. For example, attacks that prevent sensors from receiving legitimate signals can usually be mitigated by an abundance of information, obtained either by installing multiple sensors or by acquiring additional information through V2V or V2I connections, but this does not hold for GPS jamming (section III-F-Attack Model 2).

The categories for defense techniques are as follows.

- Anomaly-based Intrusion Detection Systems (IDSs) are designed to detect unauthorized access or counterfeit data. IDS methods generally look for abnormal patterns in the signals or in side-channel information. For example, Cho et al. (2016) [75] proposed the Clock-based IDS (CIDS), which measures the clock skew of ECUs (each ECU's clock runs at a slightly different rate, so the timing of its periodic messages drifts in a characteristic way) and uses this information to fingerprint the ECUs. The fingerprints are then used to detect intrusions by checking for abnormal shifts in the clock skews. IDS methods are generally applicable to attack models that rely on sending counterfeit signals, such as CAN attacks (section III-B), LiDAR spoofing (section III-D-Attack Model 1), radar spoofing (section III-E-Attack Model 1), and GPS spoofing (section III-F-Attack Model 1). However, they are not effective against adversarial image attacks on cameras (section III-G-Attack Model 2).

- The abundance of information can be achieved by obtaining information from other CAVs and infrastructure or by building redundancy into a CAV itself. This not only helps defend against cyberattacks but also increases confidence in autonomous driving. This category of defense strategy is generally effective against Denial-of-Service-type attacks, such as LiDAR jamming (section III-D-Attack Model 2), radar jamming (section III-E-Attack Model 2), and camera blinding (section III-G-Attack Model 1). For example, using multiple LiDAR sensors with different wavelengths protects a CAV from attackers who send high-power light beams to blind LiDAR sensors. However, this method is not effective against GPS jamming (section III-F-Attack Model 2). A major drawback of this defense category is that installing redundant components on CAVs is expensive.

- Encryption methods can be applied to defend against attacks that abuse components lacking authentication, such as CANs and sensor signals. Many encryption methods have been published to secure CAN and sensor signals, such as those in [76, 77, 78, 79], which are discussed in detail in section III. However, encryption is not applicable to GPS receivers because it is too expensive to modify the satellites so that they send encrypted radio signals. This makes defending against GPS jamming attacks a difficult task (section III-F-Attack Model 2).
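The clock-skew idea behind CIDS in the IDS category above can be sketched in a few lines. This is not Cho et al.'s implementation: it is a simplified Python sketch, with hypothetical function names, that estimates skew as the least-squares slope of the accumulated offset between observed and nominal arrival times of a periodic message, then flags an intrusion when that slope departs from a stored fingerprint by more than a chosen tolerance. CIDS itself, as described in [75], uses recursive least squares and a cumulative-sum test; the timestamps and the 10 ppm tolerance here are synthetic assumptions.

```python
def estimate_skew(arrivals: list[float], period: float) -> float:
    """Least-squares slope of accumulated clock offset versus elapsed time.

    A sender transmits a message every `period` seconds by its own clock. If that
    clock runs slightly fast or slow relative to the receiver's, the observed
    arrivals drift linearly away from the nominal schedule; the slope of that
    drift is the (dimensionless) clock skew used as the sender's fingerprint.
    """
    if len(arrivals) < 2:
        raise ValueError("need at least two arrival timestamps")
    t0 = arrivals[0]
    xs = [a - t0 for a in arrivals]                  # elapsed receiver time
    ys = [x - i * period for i, x in enumerate(xs)]  # accumulated offset
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def is_intrusion(arrivals: list[float], period: float,
                 fingerprint: float, tolerance: float) -> bool:
    """Flag traffic whose estimated skew departs from the stored fingerprint."""
    return abs(estimate_skew(arrivals, period) - fingerprint) > tolerance


if __name__ == "__main__":
    PERIOD = 0.01  # hypothetical 10 ms periodic message
    # Synthetic, noise-free timestamps: genuine ECU at +50 ppm, attacker node at -30 ppm.
    genuine = [i * PERIOD * (1 + 50e-6) for i in range(200)]
    forged = [i * PERIOD * (1 - 30e-6) for i in range(200)]
    fingerprint = estimate_skew(genuine, PERIOD)
    print(f"fingerprint skew = {fingerprint * 1e6:.1f} ppm")
    print("genuine flagged:", is_intrusion(genuine, PERIOD, fingerprint, 10e-6))
    print("forged flagged:", is_intrusion(forged, PERIOD, fingerprint, 10e-6))
```

Because a forged message carries the same identifier but is timed by a different oscillator, its skew differs from the genuine sender's fingerprint even when its contents look valid.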

Some defense techniques are unique and do not fall into any of these categories, which is why we suggest that readers study defense techniques on a case-by-case basis.
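As an example of the encryption category, the following Python sketch adds message authentication (rather than confidentiality) to CAN-style frames: a truncated HMAC-SHA256 tag computed over the identifier, a monotonic counter, and the payload lets a receiver reject forged and replayed frames. The 4-byte tag length, the single shared key, and the class and function names are assumptions for illustration. Deployed schemes such as AUTOSAR Secure Onboard Communication (SecOC) must also address key distribution, per-identifier freshness values, and the 8-byte payload limit of classical CAN.

```python
import hashlib
import hmac

TAG_LEN = 4  # bytes of truncated tag; an assumption to fit CAN's small payload


def make_tag(key: bytes, can_id: int, counter: int, payload: bytes) -> bytes:
    """Truncated HMAC-SHA256 over identifier, freshness counter, and payload."""
    msg = can_id.to_bytes(4, "big") + counter.to_bytes(8, "big") + payload
    return hmac.new(key, msg, hashlib.sha256).digest()[:TAG_LEN]


class AuthenticatingReceiver:
    """Accepts a frame only if its tag verifies and its counter is fresh."""

    def __init__(self, key: bytes) -> None:
        self.key = key
        self.last_counter = -1  # a real scheme would keep one counter per identifier

    def accept(self, can_id: int, counter: int, payload: bytes, tag: bytes) -> bool:
        if counter <= self.last_counter:  # replayed or stale frame
            return False
        expected = make_tag(self.key, can_id, counter, payload)
        if not hmac.compare_digest(expected, tag):  # constant-time comparison
            return False
        self.last_counter = counter
        return True


if __name__ == "__main__":
    key = bytes(16)  # placeholder key; real systems provision keys securely
    rx = AuthenticatingReceiver(key)
    tag = make_tag(key, 0x0C4, 1, b"\x32")
    print(rx.accept(0x0C4, 1, b"\x32", tag))  # genuine frame
    print(rx.accept(0x0C4, 1, b"\x32", tag))  # replay of the same frame
    print(rx.accept(0x0C4, 2, b"\x00", tag))  # forged payload with a reused tag
```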

III Existing attacks and their countermeasures

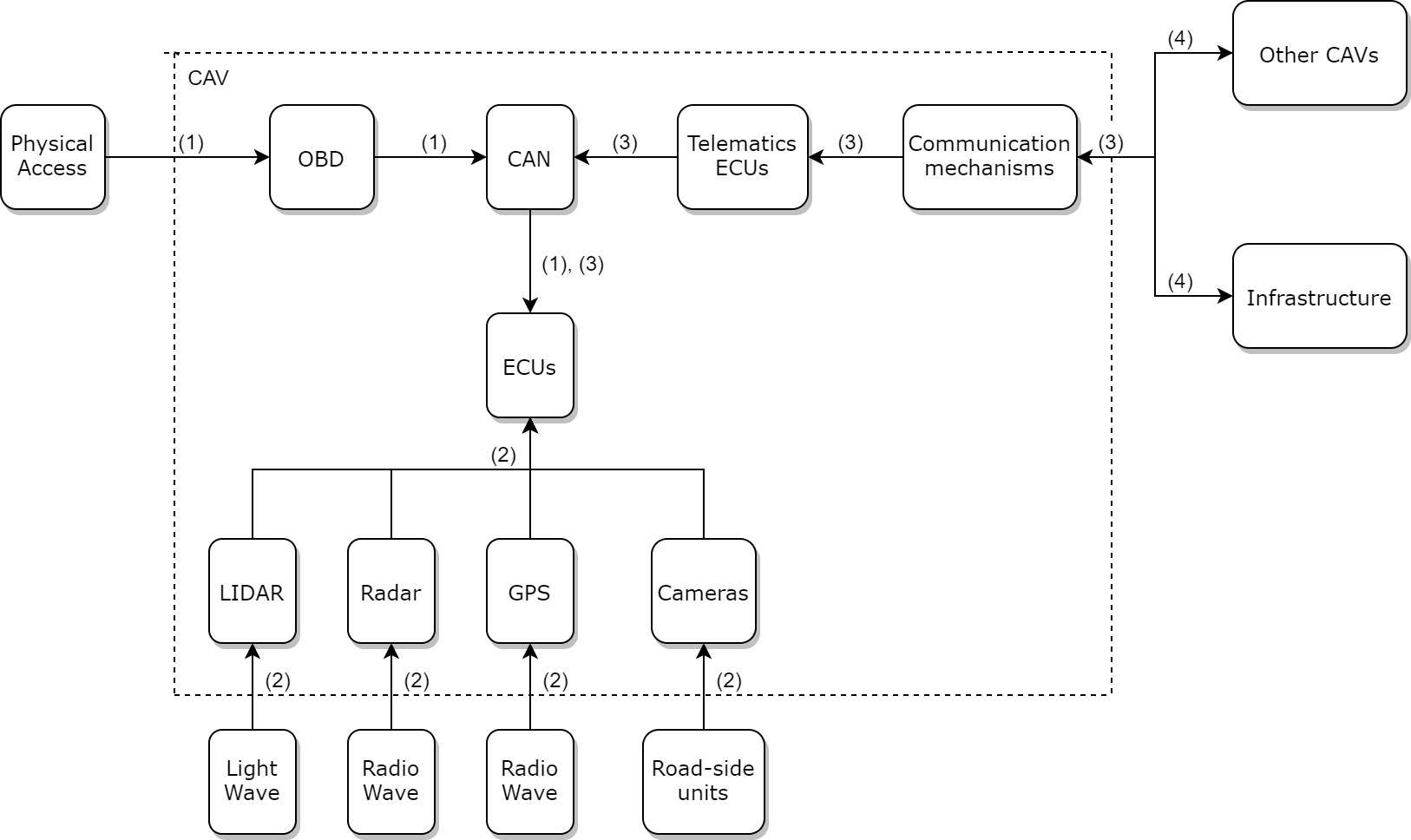

One compromised component of a CAV may allow attackers to compromise other components, other CAVs, and infrastructure, forming a sequence of attacks. Based on our reading of the literature, we have derived possible attack sequences that attackers may perform and present them in Figure 5. The attack sequences plotted in Figure 5 are:

- Sequence with label (1): Attackers gain physical access to OBD ports, which gives them access to CANs and subsequently to ECUs.

- Sequence with label (2): Attackers compromise LiDAR, radar, GPS, or cameras and send adversarial information to ECUs.

- Sequence with label (3): Attackers compromise telematics ECUs (those with access to communication channels such as VANET, Bluetooth, and DSRC) and then send adversarial information through the CAN to other ECUs.

- Sequence with label (4): Attackers send adversarial information from their own CAVs or from CAVs that they have compromised.

The possibility that attackers may compromise one CAV component after another means that in order to enhance the security of CAVs, manufacturers should enhance the security of all CAV components.
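The attack sequences labeled (1) to (4) can be read as edges in a directed graph, and a breadth-first search then lists every component an attacker could reach from a given entry point. The graph below is our simplified reading of these sequences for a single target CAV, not a reproduction of Figure 5; the node names are hypothetical labels, and "attacker or compromised CAV" stands for the external source in sequence (4).

```python
from collections import deque

# Simplified reading of attack sequences (1) to (4) for one target CAV.
ATTACK_GRAPH: dict[str, list[str]] = {
    "physical access": ["OBD port"],                # sequence (1)
    "OBD port": ["CAN"],
    "CAN": ["ECUs"],
    "LiDAR": ["ECUs"],                              # sequence (2)
    "radar": ["ECUs"],
    "GPS": ["ECUs"],
    "camera": ["ECUs"],
    "V2X channel": ["telematics ECU"],              # sequence (3): VANET, Bluetooth, DSRC
    "telematics ECU": ["CAN"],
    "attacker or compromised CAV": ["V2X channel"],  # sequence (4)
    "ECUs": [],
}


def reachable(graph: dict[str, list[str]], entry: str) -> list[str]:
    """Breadth-first search: components reachable from `entry`, in discovery order."""
    seen = {entry}
    order: list[str] = []
    queue = deque([entry])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


if __name__ == "__main__":
    for entry in ("physical access", "GPS", "attacker or compromised CAV"):
        print(entry, "->", reachable(ATTACK_GRAPH, entry))
```

Every entry point eventually reaches the ECUs, which is the graph-level reason for securing all components rather than a single one.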

Each of the following subsections describes the attack model(s) for a specific CAV component. In each subsection, we describe the attack models, the security requirements that defense techniques against them must meet, existing defense techniques in the literature and whether they meet those requirements, and the challenges of defending against the attack models. We number the attack models for easier reference from section II and use short descriptions as their names. For example, in section III-B, Attack Model 1 - CAN access through OBD is referred to as “III-B Attack Model 1” in section II; this attack model requires physical access to the OBD port.

III-A Attacks on OBD

Attack Model 1 - malicious OBD devices: The OBD port is an open gateway for many attacks on other CAV components because the port usually neither encrypts data nor controls access. Since the OBD port itself has no remote-connection capability, attackers need physical access to it to perform these attacks. Some devices that plug into the OBD port can transfer data to a computer through wired or wireless connections. Some of these devices are made by car manufacturers for diagnostics and firmware updates; examples include Honda’s HDS, Toyota’s TIS, Nissan’s Consult 3, and Ford’s VCM [33]. Others are developed by third-party companies to connect vehicles to smartphones (e.g., for self-diagnosis), such as Telia Sense [80] and AutoPi [81]. Studies have assessed the feasibility of using these third-party devices to perform a meaningful attack. Marstorp and Lindström (2017) [82] found that Telia Sense is a well-secured system, whereas Christensen and Dannberg (2019) [83] successfully performed a man-in-the-middle attack in which they intercepted data to and from the AutoPi Cloud interface. After gaining access to the OBD port, attackers can query information about the CAV, control key components (such as warning lights [84], window lifts, the airbag control system, and the horn [34]), and inject code into ECUs [85]. Koscher et al. (2011) [34] performed one such attack on a running vehicle, using a custom program named CARSHARK to compromise many of the vehicle’s components. In [86], it was shown that if attackers can trick drivers into downloading a malicious self-diagnostic application onto their smartphones, the attackers can transmit an ECU-controlling data frame through the OBD device to the vehicle’s ECUs.

Criteria for Defense Strategies: Defense may take place at the OBD level or at the CAN and ECU level. Criteria for defense strategies at the CAN and ECU level are discussed in their respective subsections (III-B and III-C). Here, we discuss the criteria for securing the OBD port. Based on our understanding of the attack model and study on the defense techniques, we suggest the following criteria.

• Authenticity of OBD devices: before being granted access to a CAV’s data, an OBD device must be shown to come from a trusted manufacturer.

• Integrity of OBD devices: it must be provable that an OBD device has not been compromised or corrupted since its creation.

• Privacy of OBD devices: any information obtained from the OBD port must be intelligible only to the device’s intended party.

• Efficiency: the authentication of OBD devices should not cause significant delay to users.

Existing Defense Strategies: Unfortunately, we have not found any significant method in the literature for securing the OBD port or detecting malicious OBD devices. Defense strategies for CANs and ECUs, however, are abundant; those that detect abnormal activity originating from OBD ports can be implemented in CANs and ECUs and are described in their respective subsections. Fowler et al. (2017) [87] proposed using hardware-in-the-loop (HIL) equipment to collect and simulate data on attacks through an OBD port. The HIL technique is not a defense layer on OBD ports, but it provides a virtual environment for further testing of attack and defense mechanisms for the OBD port.

Challenges for defending against this attack model: Because OBD ports are commonly used by car manufacturers for diagnostics and firmware updates, and by other companies for data collection purposes, it is difficult to distinguish legitimate from malicious OBD devices. No study has proposed putting a layer of defense in the OBD port and this remains a challenging problem.

III-B Attacks on CAN

Attack Model 1 - CAN access through OBD: Since CAN protocols generally do not support encrypting messages for authentication and confidentiality [88], attackers can perform three types of attack as follows.

• Eavesdropping: CAN messages can be observed from the OBD port [88].

• Replay attack and unauthorized data transmission [76]: Once attackers have observed the messages transmitted on the CAN bus, they can easily impersonate an ECU and transmit counterfeit messages through the OBD port.

• Denial of Service attack [88]: Attackers can send many high-priority messages through the OBD port, preventing the CAN from processing other messages on the bus.
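The Denial of Service attack works because CAN resolves simultaneous transmissions by bitwise arbitration on the identifier: a dominant 0 bit overrides a recessive 1 bit, so the frame with the numerically lowest identifier always wins. The following Python sketch is a simplified model of that arbitration (error handling and bit stuffing are ignored); the node names and identifiers are hypothetical.

```python
def arbitrate(contenders, id_bits=11):
    """Return the node whose frame wins CAN bus arbitration.

    contenders maps a node name to the 11-bit identifier it transmits.
    Identifier bits go out most-significant first; the bus behaves like a
    wired-AND, so a dominant 0 overrides a recessive 1, and a node that
    sends 1 while reading 0 stops transmitting.
    """
    active = dict(contenders)
    for bit in reversed(range(id_bits)):
        sent = {node: (ident >> bit) & 1 for node, ident in active.items()}
        bus_level = min(sent.values())  # any dominant 0 pulls the bus low
        active = {node: active[node] for node in active if sent[node] == bus_level}
    if len(active) != 1:
        raise ValueError("identical identifiers: arbitration is not decided by the ID")
    return next(iter(active))


legit = {"brake_ecu": 0x0A0, "engine_ecu": 0x1F0}  # hypothetical IDs
print(arbitrate(legit))                          # brake_ecu
print(arbitrate({**legit, "attacker": 0x000}))   # attacker
```

Because every legitimate frame in this toy setup carries a larger identifier, a node that transmits ID 0x000 back to back wins every arbitration round and starves the rest of the bus.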

It is important to note that all of these attacks require physical access to the OBD port.

Criteria for Defense Strategies against Attack Model 1: Based on our understanding of the attack model and study on the defense techniques, we suggest the following criteria.

• Confidentiality/privacy of CAN messages: CAN messages should be readable only by the intended receiving ECUs. A message sent through a CAN is received by all ECUs connected to that CAN, and each ECU decides whether to use it by checking the message identifier [89]. This broadcast behavior may allow attackers to infer private information such as driving behavior or the state of the vehicle.

• Authenticity of CAN messages: CAN messages should only be sent by verified ECUs connected to that CAN, to prevent unauthorized data transmission and Denial of Service attacks.

• Low requirement for computing resources: the authentication and verification of CAN messages should be efficient enough to preserve the real-time performance of the entire CAV system.

Existing Defense Strategies against Attack Model 1: Wolf et al. (2006) [90] proposed a secure CAN protocol that achieves authenticity and confidentiality using Symmetric Key Encryption and Public Key Encryption. Lin et al. (2012) [76] and Nilsson et al. (2008) [91] proposed achieving authenticity with the Message Authentication Code (MAC) method. Herrewege et al. (2011) [78] proposed an authentication protocol named CANAuth, which also uses MACs for authentication but uses an out-of-band channel to send additional authentication data in a real-time environment. Even with the out-of-band channel, Herrewege et al. acknowledge that public-key cryptography is not viable because of its large key sizes. Matsumoto et al. (2012) [88] argued that the aforementioned cryptographic methods suffer from key-management issues (e.g., leakage of secret keys) and may not be fast enough for real-time response in a moving vehicle. Halabi and Artail (2018) [79] aimed to solve these problems with lightweight symmetric encryption in which each key is generated from a CAN frame’s payload and the previous key.
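The MAC-based schemes share a common pattern: the sender appends a short tag computed over the frame and a freshness value, and receivers holding the shared key recompute and compare it. The Python sketch below is a generic illustration of that pattern, not the protocol of [76], [91], or [78]; the 4-byte truncated HMAC-SHA256 tag, the per-ID counter, and the function names are assumptions (a short tag is assumed because a classic CAN frame carries at most 8 data bytes).

```python
import hashlib
import hmac

TAG_LEN = 4  # assumed truncated-tag length in bytes


def make_tag(key: bytes, can_id: int, payload: bytes, counter: int) -> bytes:
    """Truncated HMAC-SHA256 over the CAN ID, a freshness counter, and the payload."""
    msg = can_id.to_bytes(4, "big") + counter.to_bytes(4, "big") + payload
    return hmac.new(key, msg, hashlib.sha256).digest()[:TAG_LEN]


class Receiver:
    def __init__(self, key: bytes):
        self.key = key
        self.last_counter = {}  # CAN ID -> highest counter accepted so far

    def accept(self, can_id: int, payload: bytes, counter: int, tag: bytes) -> bool:
        if counter <= self.last_counter.get(can_id, -1):
            return False  # stale or replayed frame
        expected = make_tag(self.key, can_id, payload, counter)
        if not hmac.compare_digest(expected, tag):
            return False  # forged or corrupted frame
        self.last_counter[can_id] = counter
        return True


key = bytes(16)  # placeholder; real keys need secure provisioning
rx = Receiver(key)
payload = bytes([0x10, 0x20])
tag = make_tag(key, 0x0A0, payload, counter=1)
print(rx.accept(0x0A0, payload, 1, tag))  # True: fresh and authentic
print(rx.accept(0x0A0, payload, 1, tag))  # False: replay of the same frame
```

The counter addresses the replay attack listed above, but the sketch leaves the key-management problem raised by Matsumoto et al. [88] untouched: any ECU that holds `key` can produce valid tags.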

IDS-based defense techniques are popular. Müter et al. (2011) [92] proposed calculating the entropy of CAN bus traffic during normal operation and treating significant deviations in entropy as indicators of an attack. Similarly, Miller et al. (2014) [18] proposed a small device that connects to the OBD port, collects traffic data, and detects abnormal traffic patterns with machine learning. When the device detects an attack, it interrupts the CAN bus and disables all CAN messages. Matsumoto et al. (2012) [88] proposed a solution in which every ECU monitors all messages transmitted on the CAN bus to detect unauthorized ones. For each ECU, a flag implemented within the CAN controller indicates whether the ECU is trying to send a message, and the timing of the flag switching is measured to detect unauthorized messages. Shin and Cho (2017) [93, 75] proposed a similar method that measures the intervals of periodic CAN messages and uses these measurements to detect abnormal messages. Gmiden et al. (2016) [94] argued that Matsumoto’s method requires modifications to each ECU and is therefore expensive. They instead proposed an IDS that checks the identifier of each CAN message and calculates the time elapsed since the last message with the same identifier was observed; if the new interval is significantly shorter than the previous intervals for that CAN ID, an attack alert is raised. Tyree et al. (2018) [95] proposed an IDS that uses the correlations between ECU messages to estimate the state of the vehicle. A sudden change in an ECU’s messages raises an alert that the ECU is compromised, and if an attacker compromises many ECUs, the sudden change in the estimated state of the vehicle is used to detect the attack. Many more types of IDS for CAN can be found in Tomlinson’s survey paper on this topic [22]. Beyond IDSs, Siddiqui et al. (2017) [96] proposed a hardware-based framework that implements mutual authentication and encryption over the CAN bus.
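Two of the IDS ideas above reduce to simple statistics over observed traffic: the per-ID inter-arrival check attributed to Gmiden et al. [94] and the ID-distribution entropy monitored by Müter et al. [92]. The Python sketch below is a minimal illustration under stated assumptions, not a reimplementation of either paper: the 0.5 ratio, the five-interval warm-up, and the class and function names are hypothetical choices.

```python
import math
from collections import Counter


class IntervalIDS:
    """Alert when a frame arrives much sooner than usual for its CAN ID."""

    def __init__(self, ratio=0.5, warmup=5):
        self.ratio = ratio      # assumed: alert if interval < ratio * mean interval
        self.warmup = warmup    # assumed: intervals to observe before alerting
        self.last_seen = {}     # CAN ID -> timestamp of the previous frame
        self.intervals = {}     # CAN ID -> list of accepted intervals

    def observe(self, can_id, timestamp):
        alert = False
        if can_id in self.last_seen:
            interval = timestamp - self.last_seen[can_id]
            history = self.intervals.setdefault(can_id, [])
            if len(history) >= self.warmup:
                mean = sum(history) / len(history)
                alert = interval < self.ratio * mean
            if not alert:
                history.append(interval)  # learn only from normal-looking intervals
        self.last_seen[can_id] = timestamp
        return alert


def id_entropy(can_ids):
    """Shannon entropy, in bits, of the CAN-ID distribution in one window."""
    counts = Counter(can_ids)
    total = len(can_ids)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


ids = IntervalIDS()
normal = [ids.observe(0x1A0, t * 0.010) for t in range(10)]  # 10 ms period
print(any(normal))                  # False
print(ids.observe(0x1A0, 0.0915))   # True: frame 1.5 ms after the previous one
print(id_entropy([1, 2, 3, 4]))     # 2.0
```

An injected frame for a periodic ID shortens the observed interval, which the first check catches; a flood of one ID shifts the ID distribution and hence its entropy, which a window-by-window comparison against a learned baseline would catch.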

Challenges for defending against Attack Model 1: A good defense against Attack Model 1 requires real-time response, accuracy, and little modification to the vehicle. Although many defense mechanisms have been proposed in the literature [22], most of them have been criticized, as discussed in the previous two paragraphs, so it is difficult to determine the best defense strategies to implement in an operating CAV. Comparative studies performed on moving vehicles are therefore needed to serve as recommendations for CAV manufacturers. In addition, CAN standards carry legacy constraints and may lack the capacity to accommodate the computational and communication demands of these innovative solutions.

Attack Model 2 - CAN access from telematics ECUs: One may attack a CAN by first compromising a member ECU. Some ECUs can be compromised without access to the CAN because they have other access points, such as Bluetooth and cellular connections. The compromised ECU can then send authenticated messages that can bypass the cryptographic and IDS-based defense mechanisms discussed under Attack Model 1. More details about how telematics ECUs are compromised, and studies that have implemented this attack model, are presented in subsection III-C.

Criteria for Defense Strategies against Attack Model 2: Defense strategies may be implemented on the CAN to detect abnormal ECU behaviors, or on telematics ECUs to prevent their compromise. Here, we discuss the first approach and leave the second approach for section III-C. The criteria for a defense strategy implemented on the CAN are:

• Enforce the integrity of messages sent through the CAN even when a connected ECU may be compromised (in which case any authenticity test is invalid).

• Use an efficient verification process that does not interrupt system-wide services.

Existing Defense Strategies against Attack Model 2: When attackers have compromised an ECU, they may be able to send encrypted and authenticated messages through the CAN. Therefore, the cryptographic methods discussed under Attack Model 1, such as Symmetric Key Encryption, Public Key Encryption, and MACs, may not be able to detect the attack. Some IDS-based defense techniques would not help either. For example, Matsumoto’s method [88] and Gmiden’s method [94] may fail because messages sent from the compromised ECU may not create any timing abnormality. Other IDS-based approaches, such as Müter’s [92], Miller’s [18], and Tyree’s [95], may work because they model the traffic patterns and the state of the vehicle; we speculate that they may capture enough information to detect abnormal messages from the compromised ECU. A potentially better defense strategy is to secure the telematics ECUs themselves, which is discussed in the next subsection on ECUs.

Challenges for defending against Attack Model 2: As previously discussed, there are two approaches to defending against Attack Model 2: securing telematics ECUs [77, 97] and using IDS-based algorithms to detect abnormal traffic patterns from compromised telematics ECUs [92, 18, 95]. Implementing both approaches in a CAV would increase its security against this attack model. However, it is still unclear how effective these approaches are and how difficult they are to implement.

III-C Attacks on Electronic Control Units

Attack Model 1 - ECU access through CAN: Attackers compromise ECUs through their access to the CAN. As previously discussed, after gaining access to the CAN through the OBD port or telematics ECUs, attackers may compromise other ECUs on the CAN. Examples of attack techniques include falsifying input data [34], injecting code, and reprogramming ECUs [98, 89].

Existing Defense Strategies against Attack Model 1: These have been discussed in the subsections on OBD and CAN (III-A and III-B).

Attack Model 2 - ECU access through connection mechanisms: Attackers compromise a telematics ECU through its connection mechanisms. We have found two examples of this attack model in the literature. First, Checkoway et al. (2011) [33] obtained remote code execution on a vehicle’s telematics unit through Bluetooth and through a long-range wireless connection (sections 4.3 and 4.4 of the cited paper, respectively). The authors achieved this by extracting the ECUs’ firmware and reverse-engineering it with a disassembler (a program that translates machine code into assembly language). After assessing the firmware of the ECU responsible for Bluetooth connections, the authors hypothesized that if attackers can pair their smartphones with the Bluetooth ECU, they can compromise the ECU by sending malicious code from their smartphones. For example, after reverse-engineering the operating system of the ECU that handles Bluetooth connections, Checkoway et al. found over 20 insecure calls to strcpy, one of which would allow them to copy data onto the stack and thus execute arbitrary code on the ECU. Second, Nilsson et al. (2008) [77] described another pathway for attacking telematics ECUs. Many CAV manufacturers perform firmware updates over the air (FOTA) for ECUs [99]. In FOTA, the firmware is first downloaded over a wireless network connection to a trusted station, then transferred to the vehicle, and finally transferred to the ECUs. The transfer can be secured using the protocols described in [46, 100], but the installation process is not secured. Therefore, the downloaded firmware is susceptible to adversarial modification by a time-of-check-to-time-of-use (TOCTTOU) attack, as described in [101].

The TOCTTOU attack works as follows. Suppose the check-install code expects a benign firmware update, File B. The attacker prepares a malicious File M and constructs a mass storage device that can observe read requests for File B. On the first access to File B, the device serves the legitimate File B; this first access most likely serves to calculate and compare the cryptographic hashes. After the verification succeeds, the device serves the malicious File M for the installation phase. The attack succeeds if the check code verifies the benign File B and the installer then installs the malicious File M as the ECU’s firmware update.
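The TOCTTOU sequence can be simulated in a few lines of Python. In this hedged sketch, a hypothetical `MaliciousStorage` object stands in for the attacker’s mass storage device, and SHA-256 stands in for whatever hash the check-install code uses. The fix shown (verify and install the same in-memory copy) is a standard TOCTTOU mitigation, not a countermeasure taken from [77] or [101].

```python
import hashlib
from typing import Optional


class MaliciousStorage:
    """Simulated attacker storage device: serves the benign image on the
    first read and the malicious image on every later read."""

    def __init__(self, benign: bytes, malicious: bytes):
        self.benign, self.malicious = benign, malicious
        self.reads = 0

    def read(self) -> bytes:
        self.reads += 1
        return self.benign if self.reads == 1 else self.malicious


def vulnerable_update(storage, expected_sha256: str) -> Optional[bytes]:
    # Time of check: the first read is hashed and compared...
    if hashlib.sha256(storage.read()).hexdigest() != expected_sha256:
        return None
    # ...time of use: a second read fetches the image that gets installed.
    return storage.read()


def safe_update(storage, expected_sha256: str) -> Optional[bytes]:
    # Read once, verify that in-memory copy, and install exactly that copy.
    image = storage.read()
    if hashlib.sha256(image).hexdigest() != expected_sha256:
        return None
    return image


benign, malicious = b"firmware B", b"firmware M"
digest = hashlib.sha256(benign).hexdigest()
print(vulnerable_update(MaliciousStorage(benign, malicious), digest))  # b'firmware M'
print(safe_update(MaliciousStorage(benign, malicious), digest))        # b'firmware B'
```

The vulnerability lies entirely in the gap between the two reads: once the verified bytes and the installed bytes are guaranteed to be the same object, the storage device can no longer swap files.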

Criteria for Defense Strategies against Attack Model 2: Based on our understanding of the attack model and study on the defense techniques, we suggest the following criteria.

• Robust firmware code on ECUs to prevent code injection.

• Restricted access to telematics ECUs, i.e., accepting connections only from trusted and authenticated sources.

• Robust firmware update protocols for ECUs that ensure the integrity and authenticity of firmware updates.

Existing Defense Strategies against Attack Model 2: Checkoway et al. [33] did not discuss a specific defense strategy against their attacks through Bluetooth and wireless connections, but did note that robust code and firmware update protocols for ECUs are necessary. Seshadri et al. (2006) [97] and Nilsson et al. (2008) [77] proposed protocols to secure ECU FOTA. The protocol in [77] can be summarized as follows. First, the trusted station generates a random value and combines it with fragments of the firmware update to create a chain. The chain is then hashed repeatedly, and the final hash value serves as the verification code. Next, the firmware, the random value, and the verification code are transferred to the vehicle over a secure channel, where the hashing process is repeated to validate the integrity of the firmware. In [97], the authors proposed Indisputable Code Execution (ICE), a protocol for securely executing code on a network node from a trusted station. ICE consists of three steps: checking the integrity of the firmware update code; setting up an environment in which, once the firmware update is executed, no other code is allowed to execute; and executing the firmware update within that safe environment.
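The hashing step of the protocol in [77] can be illustrated with a running hash that folds a random value and each firmware fragment into one digest. This Python sketch is one plausible reading of the summary above, not Nilsson et al.’s exact construction: SHA-256, the 64-byte fragment size, and the function names are assumptions, and the secure channel that carries the firmware, random value, and verification code is not modeled.

```python
import hashlib
import hmac
import secrets


def split(firmware, size=64):
    """Cut the firmware image into fixed-size fragments (size is an assumption)."""
    return [firmware[i:i + size] for i in range(0, len(firmware), size)]


def verification_code(fragments, nonce):
    """Chain the random value and every fragment through SHA-256;
    the final digest is the verification code."""
    digest = hashlib.sha256(nonce).digest()
    for fragment in fragments:
        digest = hashlib.sha256(digest + fragment).digest()
    return digest


def verify(received_firmware, nonce, code):
    """Vehicle side: recompute the chain and compare in constant time."""
    return hmac.compare_digest(verification_code(split(received_firmware), nonce), code)


# Trusted-station side
firmware = b"example firmware image " * 10
nonce = secrets.token_bytes(16)
code = verification_code(split(firmware), nonce)

print(verify(firmware, nonce, code))               # True
print(verify(firmware[:-1] + b"X", nonce, code))   # False: one byte changed
```

Because each step hashes the previous digest together with the next fragment, changing any byte of any fragment changes the final digest, so a single comparison checks the whole image.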

III-D Attacks on LiDAR

Attack Model 1 - LiDAR spoofing: An attacker can record legitimate signals sent from a LiDAR sensor and relay them to another LiDAR sensor of the same CAV to make real objects appear closer or farther than their actual locations. In another variation of this attack model, the attacker creates counterfeit signals that represent an object and injects them into a LiDAR sensor. Petit et al. (2015) [36] successfully demonstrated both variations at low financial cost. They performed the spoofing attack as follows. The attacker uses two transceivers, B and C. The output of B is a voltage signal that corresponds to the intensity of the pulse sent by the LiDAR device being attacked. This output is sent to C, which in turn emits a pulse to the LiDAR device. The two transceivers cost only 49.9 US dollars in total. Despite this low cost, the authors managed to make the vehicle’s ECU (the one that receives input from the LiDAR sensor) believe that it was approaching a large object and initiate emergency braking. The second variation is more difficult to perform because the attacker must send the counterfeit signals within a small time window after the LiDAR sensor sends its signal. Shin et al. (2017) [102] performed this variation on a stand-alone LiDAR sensor, Velodyne’s VLP-16. Cao et al. (2019) [38] concluded from their experiments that the machine-learning-based object detection process makes LiDAR spoofing attacks difficult. Nevertheless, the authors formulated an optimization model for generating counterfeit inputs. They performed a case study on Baidu Apollo’s software module and were able to force an emergency brake, reducing the vehicle’s speed from 43 km/h to 0 within one second. The authors reported a success rate of 75% for their attack.
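Both variations exploit time-of-flight ranging: a LiDAR sensor converts the round-trip time $\Delta t$ of a pulse into a range $d = c\,\Delta t/2$. An echo that reaches the sensor $\delta$ seconds late is therefore reported $c\,\delta/2$ farther away; a pure relay can only add delay, so making an object appear closer requires a pulse that arrives before the genuine echo, which is why timing is critical for the attacker. The Python sketch below just evaluates this arithmetic; the function names are illustrative.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def range_from_round_trip(round_trip_s):
    """Time-of-flight ranging: the pulse travels to the object and back."""
    return C * round_trip_s / 2


def apparent_range(true_range_m, added_delay_s):
    """Range reported when the echo reaches the sensor added_delay_s later than
    the genuine one (a negative value models a spoofed pulse that arrives early)."""
    true_round_trip = 2 * true_range_m / C
    return range_from_round_trip(true_round_trip + added_delay_s)


print(round(apparent_range(10.0, 100e-9), 2))   # 24.99: 100 ns of delay adds ~15 m
print(round(apparent_range(10.0, -40e-9), 2))   # 4.0: an early pulse moves the object closer
```

The scale is what matters here: each nanosecond of timing error corresponds to roughly 15 cm of range, so spoofing at meter-level precision requires nanosecond-level control of the emitted pulses.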

Criteria for Defense Strategies against Attack Model 1: Based on our understanding of the attack model and study on the defense techniques, we suggest the following criteria.

• Low cost: A trivial solution for defending against this attack model is to install redundant LiDAR devices on the vehicle, making it more difficult for attackers to spoof all devices simultaneously. However, the cost of this solution is proportional to the number of redundant LiDAR devices, so it may not be appealing to manufacturers.

• High immediacy: The solution should detect a spoofing attack quickly, which also implies that it should be computationally efficient.

• Signal filter: a solution that detects spoofing attacks may be sufficient, but one that can separate legitimate signals from adversarial signals would be even more appealing.

Existing Defense Strategies against Attack Model 1: Shin et al. [102] proposed several defense strategies: using multiple sensors with overlapping views, reducing the signal-receiving angle, transmitting pulses in random directions, and randomizing the pulses’ waveforms. However, the authors also pointed out that these strategies do not meet all of the aforementioned criteria. Using multiple sensors is expensive. Reducing the signal-receiving angle is also expensive because more LiDAR devices are needed to cover the entire space around the vehicle. Transmitting pulses in random directions is feasible and inexpensive but lacks immediacy, because the LiDAR device would have to send many unused pulses. Randomizing the pulses’ waveforms and rejecting received pulses that differ from the transmitted one is probably the most appealing solution thanks to its low cost and high immediacy; approaches of this type have been studied intensively and applied in military radars [103]. In a study on LiDAR spoofing on Unmanned Aerial Vehicles (UAVs), Davidson et al. (2016) [104] proposed using LiDAR data from previous frames to build a momentum model that aims to detect adversarial inputs. The model uses the random sample consensus (RANSAC) method and works as follows. Let $\mathcal{M} = \{\mathbf{m}_1, \dots, \mathbf{m}_n\}$ be the set of motion vectors of the features obtained from LiDAR object detection. RANSAC randomly samples $k$ features and forms a hypothesis for each of them: the hypothesis $h_j$ for vector $\mathbf{m}_j$ is that $\mathbf{m}_j$ is the ground-truth motion of the frame. All other features then vote on these $k$ hypotheses. A feature motion $\mathbf{m}_i$ votes for hypothesis $h_j$ only if the two motion vectors are similar, i.e., $\|\mathbf{m}_i - \mathbf{m}_j\| < \epsilon$ for a similarity threshold $\epsilon$. RANSAC repeats this process many times, then picks the hypothesis with the most votes as the final hypothesis for the frame and its supporting features as the ground truth of the frame.
The shortcoming of this solution, which the authors acknowledged in the paper, is that it takes time to build up the weights of the model and requires high computational power; thus, the solution lacks immediacy. In 2018, Matsumura et al. [105] proposed a mitigation technique that embeds authentication data in the light wave itself. The fingerprint is obtained by modulating the LiDAR’s laser light with information from a cryptographic device, such as an AES encryption circuit. This defense strategy is interesting because it is cost-effective to implement, and the authors claimed that attackers cannot decrease the measured distance by more than 30 cm. Notably, Cao’s study of an attack model (2019) [38] was published after Matsumura’s defense strategy (2018) [105] but did not discuss this or any other countermeasure. In 2020, Porter et al. proposed adding dynamic watermarking to LiDAR signals to validate measurements. This solution has the potential to satisfy all three aforementioned criteria.
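The voting step of the RANSAC procedure in Davidson et al.’s momentum model [104] can be sketched in Python as follows. This is a simplified illustration: it treats each feature’s motion as a 2-D vector, uses Euclidean distance with a hypothetical threshold `eps`, and omits the weights the authors build up across frames; all names and parameter values are assumptions.

```python
import math
import random


def ransac_motion(motions, k, eps, rounds=20, seed=0):
    """Pick the frame-motion hypothesis with the most votes.

    motions: per-feature 2-D motion vectors (dx, dy) between two frames.
    In each round, k features are sampled; each sampled vector is a
    hypothesis for the true frame motion, and every feature whose motion
    lies within eps of it (Euclidean distance) votes for it.
    Returns (best_hypothesis, indices_of_voters).
    """
    rng = random.Random(seed)
    best, best_voters = None, []
    for _ in range(rounds):
        for j in rng.sample(range(len(motions)), k):
            hypothesis = motions[j]
            voters = [i for i, m in enumerate(motions)
                      if math.dist(m, hypothesis) < eps]
            if len(voters) > len(best_voters):
                best, best_voters = hypothesis, voters
    return best, best_voters


# Eight consistent features plus two spoofed points that move differently.
motions = [(1.0, 0.0), (1.02, 0.01), (0.98, -0.01), (1.01, 0.0),
           (0.99, 0.02), (1.0, -0.02), (1.03, 0.0), (0.97, 0.01),
           (5.0, 5.0), (5.05, 4.95)]
best, voters = ransac_motion(motions, k=3, eps=0.1)
print(best, voters)  # voters are indices 0-7; the spoofed points 8 and 9 are rejected
```

Features that do not support the winning hypothesis (here, the two injected points) are the candidates for adversarial input. Every round recomputes votes against all features, which illustrates the computational cost the authors acknowledged.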

Challenges for defending against Attack Model 1: Cao’s attack model may be considered state-of-the-art for its newness and effectiveness. Matsumura’s countermeasure is also novel and recent [105]. It will be an interesting study to implement Matsumura’s strategy against Cao’s attack model [38]. Davidson’s proposed method for UAV [104] may also be worth an experiment on CAVs. Porter et al.’s solution shows good potential and will be interesting for the community to discuss.

Attack Model 2 - LiDAR jamming: Attackers aim to perform a Denial-of-Service attack by emitting light at the same wavelength but with higher intensity, effectively preventing the sensor from acquiring the legitimate light wave. Civilians have used this technique to avoid speeding tickets by jamming police speed guns, which are LiDAR devices [106]. In the context of CAV attacks, Stottelaar (2015) [107] successfully performed a jamming attack on a LiDAR sensor (Ibeo Lux3) and argued that such an attack on a CAV’s LiDAR sensor is possible. We have not found any other study or experiment on LiDAR jamming in the literature. Nevertheless, the attack process, described in detail in [107], is relatively straightforward and should not require much training to replicate.

Criteria for Defense Strategies against Attack Model 2: Based on our understanding of the attack model and study on the defense techniques, we suggest the following criteria.

• Low cost: The solution should not require expensive modifications to the vehicle.

• High immediacy: The solution should detect a jamming attack quickly.

• Signal filter: The solution should be able to separate legitimate signals from jamming signals.

Existing Defense Strategies against Attack Model 2: Stottelaar [107] suggested several countermeasures, such as using V2V communications to gather additional information, changing the wavelength frequently, using multiple LiDAR sensors with different wavelengths, and shortening the ping period (the time window in which a sensor waits for the signal to return). However, each of these countermeasures has disadvantages. Vehicle-to-vehicle communication may not always be available. Using multiple LiDAR devices is expensive. A shortened ping period makes a device prone to errors. Changing the wavelength frequently may not be effective against attackers who can follow a CAV for a while, as the author acknowledged. Wang et al. (2015) proposed a novel LiDAR scheme called pseudo-random modulation quantum secured LiDAR (PMQSL) [42], which is based on the random modulation technique. Random modulation is a time-domain technique commonly used to recover weak signals buried in random noise. The transmitted signal is modulated by digital pulse codes, usually consisting of on and off states. An n-th order M-sequence with elements 1 or 0 is generated by an n-stage shift register, and the laser is pulse-position modulated by an electro-optic modulator. These pulses are further randomly modulated into horizontal, diagonal, vertical, and anti-diagonal photon polarization states through polarization modulation. When there is no jamming attack, the four distances corresponding to the four measured polarizations have very small error rates. Under a jamming attack, the received polarizations show considerably larger errors, and this increase in error allows the system to determine that the LiDAR device is being jammed. The authors claimed that this scheme can efficiently detect a jamming attack; however, it cannot separate legitimate signals from jamming signals.
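The M-sequence generation step of the PMQSL scheme [42] can be illustrated with a linear-feedback shift register (LFSR). The Python sketch below is a generic Fibonacci LFSR, not the authors’ hardware: the 4-stage register and the feedback polynomial $x^4 + x^3 + 1$ are illustrative assumptions, and the pulse-position and polarization modulation are not modeled. The sharply peaked periodic autocorrelation it computes is the property that lets a receiver correlate a known code out of noise.

```python
def m_sequence(n, taps, seed=1, length=None):
    """Generate bits from an n-stage Fibonacci linear-feedback shift register.

    taps are the exponents of the feedback polynomial, e.g. (4, 3) for
    x^4 + x^3 + 1. With a primitive polynomial and a nonzero seed, the
    output is a maximum-length sequence (M-sequence) of period 2**n - 1.
    """
    if not 0 < seed < 2 ** n:
        raise ValueError("seed must be a nonzero n-bit value")
    length = 2 ** n - 1 if length is None else length
    state, out = seed, []
    for _ in range(length):
        out.append(state & 1)                # output the lowest stage
        feedback = 0
        for t in taps:
            feedback ^= (state >> (n - t)) & 1
        state = (state >> 1) | (feedback << (n - 1))
    return out


def periodic_autocorrelation(bits, shift):
    """Correlation of one period (mapped 0 -> +1, 1 -> -1) with its cyclic shift."""
    s = [1 - 2 * b for b in bits]
    p = len(s)
    return sum(s[i] * s[(i + shift) % p] for i in range(p))


seq = m_sequence(4, (4, 3))
print(seq)  # [1, 0, 0, 0, 1, 0, 0, 1, 1, 0, 1, 0, 1, 1, 1]
print([periodic_autocorrelation(seq, k) for k in range(4)])  # [15, -1, -1, -1]
```

An M-sequence of period $2^n - 1$ contains $2^{n-1}$ ones, and its periodic autocorrelation equals $2^n - 1$ at zero shift and $-1$ at every other shift; longer registers give longer, harder-to-predict codes with the same property.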

Challenges for defending against Attack Model 2: We have not found any study or experiment that demonstrates LiDAR jamming on a moving autonomous vehicle, as has been done for Attack Model 1. Such a study would be valuable because it would reveal the real effectiveness of Attack Model 2. In addition, the defense strategies proposed in [42] and [43] need to be tested on CAVs because they were not specifically developed for CAVs.

III-E Attacks on radar

Attack Model 1 - Radar spoofing: Attackers replicate and rebroadcast radar signals to inject distorted data into the sensor. A common tool for this attack is Digital Radio Frequency Memory (DRFM), an electronic method of storing radio-frequency and microwave signals using high-speed sampling and digital memory [108]. The phase of the stored signals can be modified before they are rebroadcast to the radar sensor, and the falsified signals can cause incorrect calculations of the distance to surrounding objects. Chauhan (2014) [109] experimented with this attack model on a radar device (Ettus Research USRP N210) and managed to make an object at a distance of 121 meters appear to be 15 meters away. Yan et al. (2016) [37] discussed the same attack idea but did not have the resources to implement it. Instead, they attempted to inject counterfeit signals into the radar sensor of a Tesla Model S. The attempt failed because the sensor has a low ratio of working time to idle time, which makes it difficult to inject signals in the precise time slot.

Criteria for Defense Strategies against Attack Model 1: Based on our understanding of the attack model and study on the defense techniques, we suggest the following criteria.

• Attack Detection: The solution should be able to detect a radar spoofing attack in a timely manner.

• Signal Filter: The solution should be able to filter out the attack signals and derive accurate distance measurements.

• Consistency: The solution should be able to achieve the previous two criteria under many circumstances and over a long period of time.

• Non-disruptivity: The solution should not affect other services of the vehicle.

Existing Defense Strategies against Attack Model 1: A novel approach called physical challenge-response authentication (PyCRA) (2015) [110] inspects the surrounding environment by sending randomized probing signals, called challenge signals. PyCRA shuts down the actual sensing signals at random times and assumes that attackers cannot detect the challenge signals immediately. Under that assumption, PyCRA detects malicious signals by using a chi-square test to determine whether the signal received during a shutdown period exceeds the noise level. Kapoor et al. (2018) [111] argued that PyCRA may severely affect safety-critical CAV components, such as adaptive cruise control and collision warning, because sensing is shut down at random times. Another shortcoming of PyCRA is that, after the first 30 seconds following an attack, the derived distance is continuously longer than the actual distance [111]; PyCRA therefore falls short of the Consistency criterion. Dutta et al. (2017) [112] attempted to address PyCRA’s consistency and non-disruptivity problems by introducing the Challenge Response Authentication (CRA) method. CRA applies the recursive least squares method to estimate the distance by minimizing the sum of squared errors, where each error is the difference between the predicted distance and the actual distance. According to Dutta et al., CRA may satisfy all four aforementioned criteria. However, Kapoor et al. argued that CRA may not be effective in practice because it relies on the assumption that the actual distance is known [111]. Kapoor et al. proposed a new method called Spatio-Temporal Challenge-Response (STCR). STCR follows the same idea as PyCRA, but instead of shutting down sensing signals, it transmits challenge signals in randomized directions. The reflected challenge signals are used to identify the directions from which malicious signals arrive, and these untrustworthy directions are then excluded when measuring the surrounding environment.
According to [111], STCR is able to detect attacks and measure the actual distance consistently and in a timely manner.
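PyCRA’s detection idea can be approximated in a few lines of Python. In this hedged sketch, the receiver inspects only samples taken while its own transmitter is silent; if no attacker is present, these samples are assumed to be zero-mean Gaussian noise with a known standard deviation, so their normalized energy follows a chi-square distribution with one degree of freedom per sample. The noise model, the normal approximation to the chi-square quantile (about a 0.1% false-alarm rate), the silence schedule, and all names are assumptions, not values from [110].

```python
import math
import random


def chi_square_statistic(samples, noise_std):
    """Sum of squared, noise-normalized samples (chi-square if only noise)."""
    return sum((x / noise_std) ** 2 for x in samples)


def chi_square_threshold(dof, z=3.09):
    """Normal approximation to a chi-square quantile: mean dof, variance 2*dof.
    z = 3.09 corresponds to roughly a 0.1% false-alarm rate for large dof."""
    return dof + z * math.sqrt(2 * dof)


def detect_attack(received, silent, noise_std):
    """received: samples; silent: parallel flags marking the randomly
    scheduled slots in which the sensor's own transmitter was switched off."""
    quiet = [x for x, off in zip(received, silent) if off]
    if not quiet:
        return False
    return chi_square_statistic(quiet, noise_std) > chi_square_threshold(len(quiet))


# Demo: an attacker who injects a signal continuously cannot anticipate the
# random silent slots, so energy shows up where only noise should be.
rng = random.Random(1)
silent = [rng.random() < 0.2 for _ in range(500)]
noise = [rng.gauss(0.0, 1.0) for _ in range(500)]
clean = [n + (0.0 if off else 3.0) for n, off in zip(noise, silent)]
attacked = [x + 2.0 for x in clean]
print(detect_attack(clean, silent, 1.0), detect_attack(attacked, silent, 1.0))
```

The sketch also makes Kapoor et al.’s objection concrete: every silent slot is a moment in which the genuine echo is not measured at all.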

Challenges for defending against Attack Model 1: Yan et al. [37] failed to use the DRFM technique to inject counterfeit signals into a radar sensor because the sensor has a low ratio of working time to idle time. However, their publication does not make clear whether all CAV radar sensors share this characteristic, or whether an attacker could find a way to overcome it. Further experiments are needed to answer these questions. In addition, Kapoor et al.’s defense method [111] represents the state of the art but has not been validated experimentally.

Attack Model 2 - Radar jamming: This attack model can also be carried out using DRFM, but instead of modifying the phase, attackers modify the frequency and amplitude of the stored signals before rebroadcasting them to the radar sensors. The falsified signals can make the radar sensors fail to detect the object on which the jamming device is located. We have not found any publication that experiments with this attack model on CAVs. However, the technique is widely used by manned and unmanned aerial vehicles (UAVs) to hide from radar detection [113, 114, 115]. Since these attacks have only been applied to aerial vehicles and not to CAVs, we are uncertain about their feasibility on CAVs and thus cannot propose criteria for defense strategies.

Existing Defense Strategies against Attack Model 2: As with the attack model, defense strategies have been widely studied for UAVs, but none has been discussed for CAVs. To defend against this attack model, one can attempt to separate the legitimate signals from the counterfeit signals; specific methods are described in [116, 117, 118, 43].

Challenges for defending against Attack Model 2: Further studies on both attacks and defense techniques are needed to investigate whether the attacks are feasible on CAVs and whether the defense techniques are also effective on CAVs.

III-F Attacks on GPS

Attack Model 1 - GPS Spoofing: An attacker broadcasts incorrect but realistic GPS signals to mislead the GPS receivers on CAVs; this is known as a GPS spoofing attack. In this attack model, attackers begin by broadcasting signals that are identical to the satellites' legitimate signals. They then gradually increase the power of their signals and gradually shift the position encoded in them away from the target's true location. GPS receivers are often configured to use the signals with the strongest magnitudes [119]. Therefore, once the counterfeit signal is stronger than the legitimate satellite signal, the GPS device processes the counterfeit signal instead. Tippenhauer et al. (2011) described in detail how to perform a GPS spoofing attack and the requirements for a successful attack [120]. They found that an attacker must be able to calculate the distance from himself to the victim with an error of at most 22.5 meters. Whether this condition can be met against a moving CAV is still unclear. We have not found any successful GPS spoofing attack on a CAV published in the literature; however, attacks on other means of transportation have been reported. For instance, in 2014, Psiaki et al. [121] successfully spoofed the GPS signals received by a superyacht and caused its navigation system to report counterfeit locations to the crew. When the crew attempted to correct the course, they only deviated further from the correct course. Shepard et al. (2012) [122] used a civilian GPS spoofer to create a significant timing error in a phasor measurement unit, a power-grid device that relies on GPS timing to estimate the magnitude and phase angle of electrical waveforms. Zeng et al. (2018) [123] assembled a small device from popular components for a total cost of 223 US dollars and used it to trigger fake turn-by-turn navigation that guided victims to a wrong destination without being noticed.
The authors demonstrated the attack on real cars with 40 participants and guided 38 of them to the authors' predetermined locations (a 95% success rate). Zeng et al. noted that one limitation of their study is that the attack is not effective if the driver is familiar with the area. However, this limitation may not apply to CAVs, which rely on their navigation system rather than on a driver's familiarity with the area; Zeng et al.'s attack model could therefore pose a significant threat to CAVs. Recently, Regulus Cyber Ltd. tested GPS spoofing on a Tesla Model 3 and made the car's GPS display false positions on the map, so that any attempt to find a route to a destination resulted in incorrect navigation [39]. The total equipment cost of this attack was 550 US dollars, and the report also stated that "this dangerous technology is everywhere". However, the researchers did not perform the attack while the car was in autopilot mode. Narain et al. (2019) [124] proposed a different approach for attackers. They first looked for regular patterns that exist in many cities' road networks. They then used an algorithm that exploits these patterns to identify navigation paths that are similar to the original route (assuming that the attackers know the victim's route). Finally, the identified paths can be forced onto a target CAV through spoofed GPS signals. This attack model may allow attackers to bypass some defense mechanisms, such as the Inertial Navigation System (INS), which helps GPS receivers obtain position and angle updates at a higher rate [125, 126]: because the spoofed path resembles the original one, the inconsistencies between them may be negligible, and the attack can succeed. Also in 2019, Meng et al. published an open-source GPS spoofing generator based on a software-defined receiver [127]. The authors claimed that their generator can cover all open-sky satellites while providing high-quality concealment, and can thus block all the legitimate signals.
This makes the spoofing signals closely resemble the legitimate signals. Therefore, it would be difficult to detect this attack based only on differences from surrounding GPS receivers or on signal consistency. The threat of this spoofing model to CAVs is very serious once all signals from the visible GPS satellites are spoofed [127].
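The following Python sketch illustrates why gradual drift matters. It applies a naive consistency check that compares each GPS-reported displacement with the displacement dead-reckoned by an INS, under hypothetical assumptions (a perfect, drift-free INS, 1 Hz fixes, a 2 m tolerance, and a straight eastbound trajectory). An abrupt 50 m jump is flagged, while a drift of 0.5 m per second stays below the tolerance at every step even though it accumulates a 15 m offset in 30 seconds. This is only an illustration, not the algorithm of Narain et al. [124] or any vendor's GPS/INS fusion.

```python
import numpy as np

def innovation_check(gps_positions, ins_displacements, threshold_m):
    """Flag steps where the GPS-reported displacement disagrees with the INS displacement.

    gps_positions: (N, 2) array of GPS fixes in a local metric frame (metres)
    ins_displacements: (N-1, 2) array of displacements dead-reckoned by the INS
    """
    gps_steps = np.diff(np.asarray(gps_positions, dtype=float), axis=0)
    residuals = np.linalg.norm(gps_steps - np.asarray(ins_displacements, dtype=float), axis=1)
    return residuals > threshold_m

# Hypothetical scenario: vehicle drives east at 15 m/s, one fix per second.
n = 60
truth = np.column_stack([15.0 * np.arange(n), np.zeros(n)])
ins = np.diff(truth, axis=0)  # idealised INS with no drift

# Abrupt spoof: fixes jump 50 m north at t = 30 s.
abrupt = truth.copy()
abrupt[30:, 1] += 50.0

# Gradual spoof: fixes are pulled north by 0.5 m per second from t = 30 s.
gradual = truth.copy()
gradual[30:, 1] += 0.5 * np.arange(1, n - 30 + 1)

print(innovation_check(abrupt, ins, threshold_m=2.0).any())
print(innovation_check(gradual, ins, threshold_m=2.0).any())
```

A real INS drifts over time, so the tolerance cannot be made arbitrarily tight; an attacker who keeps each step inside that tolerance can still move the reported position substantially.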

Criteria for Defense Strategies against Attack Model 1: Haider and Khalid (2016) [20] proposed the following criteria for effective defense strategies:

• Quick Implementation: the solution can be deployed quickly and easily.

• Cost Effective: the solution should be affordable whether deployed at a small or a large scale.

• Prevent Simple Attacks: the solution can detect simple attacks.

• Prevent Intermediate Attacks: the solution can detect intermediate attacks.

• No Requirement to Modify Satellite Transmitters: the solution does not require changes to the satellite transmitters.

• Validation: the solution is easy to test.

• Interoperability: the solution works on many types of machines (here we are concerned only with CAVs).

Existing Defense Strategies against Attack Model 1: In 2003, the United States Department of Energy suggested seven simple countermeasures to detect GPS spoofing attacks [128]. The seven countermeasures are:

• Monitor the absolute GPS signal strength: by recording and monitoring the average signal strength, a system may detect a GPS spoofing attack when the observed signal strengths are many orders of magnitude larger than those of normal signals from GPS satellites.

• Monitor the relative GPS signal strength: the receiver software can be programmed to record and compare signal strengths in consecutive time frames. A large change in relative signal strength would indicate a spoofing attack.

• Monitor the signal strength of each received satellite signal: the relative and absolute signal strengths are recorded and monitored individually for each GPS satellite.

• Monitor satellite identification codes and the number of satellite signals received: GPS spoofers typically transmit signals that contain tens of identification codes, whereas legitimate GPS signals in the field often come from only a few satellites. Tracking the number of satellite signals received and their identification codes may help detect a spoofing attack.

• Check the time intervals: with most GPS spoofers, the time between signals is constant, which is not the case with real satellites. Tracking the time intervals between signals may therefore help detect a spoofing attack.

• Perform a time comparison: many GPS receivers do not have an accurate clock. By comparing the time derived from the GPS signals against timing data from an independent, accurate clock, a system can check the veracity of the received GPS signals.

• Perform a sanity check: using an accelerometer and a compass, a system can independently monitor and double-check the position reported by the GPS receiver.
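Several of the countermeasures listed above can be combined into a per-epoch monitor. The Python sketch below is a hedged illustration: the `Epoch` record, the use of carrier-to-noise density (C/N0) as the signal-strength measurement, and every threshold are assumptions chosen for readability rather than values from [128], and the time-interval check is omitted because it depends on receiver-specific signal timing data.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass
class Epoch:
    cn0_dbhz: Dict[int, float]                 # C/N0 per satellite PRN (signal-strength proxy)
    gps_time_s: float                          # time decoded from the GPS signals
    local_clock_s: float                       # time from an independent, accurate local clock
    gps_pos_m: Tuple[float, float]             # GPS fix in a local metric frame
    dead_reckoned_pos_m: Tuple[float, float]   # position predicted from accelerometer + compass

# Illustrative thresholds only; real values depend on receiver, antenna and environment.
MAX_CN0_DBHZ = 55.0
MAX_CN0_JUMP_DB = 6.0
MAX_VISIBLE_SATS = 14
MAX_CLOCK_OFFSET_S = 1e-3
MAX_POS_DISAGREEMENT_M = 30.0

def doe_style_checks(prev: Epoch, cur: Epoch) -> List[str]:
    alarms = []
    # Absolute strength: genuine signals arrive weak, so unusually high C/N0 is suspicious.
    if any(v > MAX_CN0_DBHZ for v in cur.cn0_dbhz.values()):
        alarms.append("absolute_strength")
    # Relative strength, tracked per satellite between consecutive epochs.
    for prn, v in cur.cn0_dbhz.items():
        if prn in prev.cn0_dbhz and abs(v - prev.cn0_dbhz[prn]) > MAX_CN0_JUMP_DB:
            alarms.append(f"relative_strength_prn{prn}")
    # Number of satellite identification codes received.
    if len(cur.cn0_dbhz) > MAX_VISIBLE_SATS:
        alarms.append("satellite_count")
    # Time comparison against an independent clock.
    if abs(cur.gps_time_s - cur.local_clock_s) > MAX_CLOCK_OFFSET_S:
        alarms.append("clock_offset")
    # Sanity check against the dead-reckoned position.
    dx = cur.gps_pos_m[0] - cur.dead_reckoned_pos_m[0]
    dy = cur.gps_pos_m[1] - cur.dead_reckoned_pos_m[1]
    if (dx * dx + dy * dy) ** 0.5 > MAX_POS_DISAGREEMENT_M:
        alarms.append("position_sanity")
    return alarms

prev = Epoch({5: 44.0, 12: 41.0, 17: 46.0, 23: 39.0}, 100.0, 100.0, (0.0, 0.0), (0.0, 0.0))
ok = Epoch({5: 44.5, 12: 40.2, 17: 45.1, 23: 39.8}, 101.0, 101.0, (15.0, 0.0), (15.2, 0.3))
spoofed = Epoch({5: 58.0, 12: 58.0, 17: 58.0, 23: 58.0}, 101.0, 101.003, (15.0, 80.0), (15.2, 0.3))
print(doe_style_checks(prev, ok))  # prints []
print(doe_style_checks(prev, spoofed))
```

Each check is cheap on its own; as noted in the text, a careful spoofer that ramps power slowly and stays consistent with the vehicle's motion can keep every one of them quiet.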

All seven countermeasures above are simple and inexpensive to implement, may prevent simple attacks, do not require modifying satellite transmitters, and are interoperable. However, they may fall short against sophisticated attacks such as those in [127, 124].

Defense strategies against GPS spoofing attacks have also been studied extensively in the academic literature. Since GPS signals do not contain any information that can verify their integrity, a natural way to defend against Attack Model 1 is to use redundant information to verify them. One example of such a method is Receiver Autonomous Integrity Monitoring (RAIM), a technology that uses redundant signals from multiple GPS satellites to produce several GPS position fixes and compare them [129, 130]. RAIM is available only when enough satellites with adequate geometry are in view: at least five for fault detection and at least six for fault exclusion, with availability commonly analyzed against the nominal 24-satellite GPS constellation [131, 132]. RAIM statistically determines whether GPS signals are faulty or malicious by using the pseudo-range measurement residual, which is the difference between the observed measurement and the expected measurement [40]. Advanced Receiver Autonomous Integrity Monitoring (ARAIM) is a concept that extends RAIM to constellations beyond GPS, such as the GLObal NAvigation Satellite System (GLONASS), Galileo, and Compass [133, 134]. One criticism of ARAIM is that its availability is inconsistent if one or more satellites are not reachable [135, 131]. Meng et al. (2018) proposed solutions to this problem and improved ARAIM availability to up to 98.75% [135, 136]. Many other validation mechanisms exist, all of which make use of additional satellites or side-channel information. For example, O'Hanlon et al. described how to estimate the expected GPS signal strength and compare it against the observed signal strength to validate GPS signals [41]. Furthermore, a defense system can monitor GPS signals to ensure that their rate of change stays within a threshold. Montgomery proposed a defense approach based on a dual-antenna receiver that employs a receiver-autonomous angle-of-arrival spoofing countermeasure [137]. The main idea is to measure the difference in the signal's phase between multiple antennas referenced to a common oscillator: signals from genuine satellites arrive from different directions, whereas spoofed signals broadcast from a single transmitter share the same angle of arrival.
Other examples of countermeasures can be found in the survey by Haider and Khalid (2016) [20].
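The pseudo-range residual test at the core of RAIM can be sketched in a few lines of Python with NumPy. The sketch solves for position and clock bias by iterative least squares, then compares the normalized sum of squared residuals with a Chi-square threshold for n - 4 degrees of freedom. The satellite geometry, the 3 m noise level, the fixed noise vector, and the 200 m fault are hypothetical; this is a textbook snapshot fault-detection test, not the specific RAIM or ARAIM algorithms of [129, 130, 133, 134].

```python
import numpy as np

def solve_position(sat_pos, pseudoranges, iterations=10):
    """Iterative least-squares fix. Returns (state, residuals); state = [x, y, z, clock_bias] in metres."""
    state = np.zeros(4)  # start at the Earth's centre with zero clock bias
    for _ in range(iterations):
        vec = sat_pos - state[:3]                 # receiver-to-satellite vectors
        ranges = np.linalg.norm(vec, axis=1)
        predicted = ranges + state[3]
        # Jacobian of the predicted pseudo-ranges with respect to [x, y, z, clock_bias]
        H = np.hstack([-vec / ranges[:, None], np.ones((len(ranges), 1))])
        delta, *_ = np.linalg.lstsq(H, pseudoranges - predicted, rcond=None)
        state = state + delta
    # Pseudo-range residual: observed minus expected measurement at the final fix
    residuals = pseudoranges - (np.linalg.norm(sat_pos - state[:3], axis=1) + state[3])
    return state, residuals

def raim_fault_detected(residuals, sigma_m, threshold):
    """Normalised sum of squared residuals, ~Chi-square with (n - 4) dof when no fault is present."""
    stat = float(np.sum((residuals / sigma_m) ** 2))
    return stat > threshold, stat

# Hypothetical geometry: receiver on the Earth's surface, seven satellites above its horizon.
EARTH_R, ORBIT_R = 6371e3, 26560e3
receiver = np.array([EARTH_R, 0.0, 0.0])
clock_bias_m = 150.0
dirs = np.array([[1, 0, 0], [0.8, 0.6, 0], [0.8, -0.6, 0], [0.8, 0, 0.6],
                 [0.8, 0, -0.6], [0.6, 0.56, 0.57], [0.6, -0.56, -0.57]], dtype=float)
sats = ORBIT_R * dirs / np.linalg.norm(dirs, axis=1, keepdims=True)
true_ranges = np.linalg.norm(sats - receiver, axis=1)
noise = np.array([1.2, -2.0, 0.5, 2.5, -1.1, 0.8, -0.4])  # fixed, illustrative measurement noise (m)

clean = true_ranges + clock_bias_m + noise
faulty = clean.copy()
faulty[1] += 200.0  # one faulty or spoofed pseudo-range

THRESH_3DOF = 16.27  # approx. 99.9% quantile of Chi-square with 7 - 4 = 3 dof
for name, rho in (("clean", clean), ("faulty", faulty)):
    _, res = solve_position(sats, rho)
    print(name, raim_fault_detected(res, sigma_m=3.0, threshold=THRESH_3DOF))
```

Because the residual test only detects inconsistency among satellites, a spoofer that fakes all visible signals consistently, as in [127], produces small residuals and passes it; this is one reason such attacks are hard to detect.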

Challenges for defending against Attack Model 1: Even though several countermeasures have been proposed in the literature, their effectiveness against newer attack strategies, such as those in [127, 124] (2019), is unknown. The attack strategy in [127] is especially dangerous for CAVs. Finding effective countermeasures against these 2019 attack models is therefore a current research challenge.

Attack Model 2 - GPS jamming: Since the radio signals from the satellites are generally weak, jamming can be achieved by transmitting strong signals that overwhelm the GPS receiver so that the legitimate signals cannot be detected [138]. Incidents that highlight the risks of GPS jamming have been reported. In 2013, a New Jersey man was arrested for using a $100 GPS jamming device plugged into the cigarette lighter of his company truck [139]. His motive was to jam the truck's GPS signal to hide from his employer. However, the device was reported to be powerful enough to interfere with GPS signals at the nearby Newark airport. Even though GPS jamming devices are illegal for civilian use, they can easily be found on online retailers such as eBay [140]. GPS jamming is less dangerous than GPS spoofing in the sense that a spoofing attack gives attackers greater control over GPS receivers. However, a GPS jamming attack can disrupt service and is essentially a Denial-of-Service attack.
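A short link-budget calculation shows why even cheap jammers are effective: GPS signals reach the ground extremely weak. The Python sketch below assumes free-space propagation, isotropic antennas, and a hypothetical jammer with 10 mW (10 dBm) of radiated power; it uses the GPS L1 frequency (1575.42 MHz) and the specified minimum received L1 C/A power of about -128.5 dBm. These figures are illustrative and are not measurements of the device in [139].

```python
import math

GPS_L1_MHZ = 1575.42          # GPS L1 carrier frequency
GPS_L1_MIN_RX_DBM = -128.5    # specified minimum received L1 C/A power (about -158.5 dBW)

def fspl_db(distance_km: float, freq_mhz: float) -> float:
    """Free-space path loss in dB for a distance in km and a frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44

def jammer_to_signal_db(jammer_power_dbm: float, distance_km: float) -> float:
    """Jamming-to-signal ratio (dB) at the victim antenna under free-space, isotropic assumptions."""
    jammer_rx_dbm = jammer_power_dbm - fspl_db(distance_km, GPS_L1_MHZ)
    return jammer_rx_dbm - GPS_L1_MIN_RX_DBM

# Hypothetical 10 mW (10 dBm) jammer observed at several distances.
for d_km in (0.1, 1.0, 10.0):
    print(f"{d_km:>5} km: J/S = {jammer_to_signal_db(10.0, d_km):.1f} dB")
```

Under these assumptions, a 10 mW jammer 1 km away arrives roughly 42 dB (more than four orders of magnitude) stronger than the minimum specified GPS signal, and every tenfold increase in distance only reduces this margin by 20 dB. Terrain, antenna patterns, and receiver filtering change the exact numbers.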

Criteria for Defense Strategies against Attack Model 2: Based on our understanding of the attack model and study on the defense techniques, we suggest the following criteria.

• Attack Detection: The solution should be able to detect a jamming attack in a timely manner to ensure the safety of CAVs.

• Signal Filter: The solution should be able to filter out the attack signals so that the vehicle can still operate under certain attack scenarios and avoid disruption of service.

Existing Defense Strategies against Attack Model 2: Many GPS receiver modules implement anti-jamming measures that target unintentional interference from everyday electronic devices. However, Hunkeler et al. (2012) [141] have shown that these countermeasures are ineffective against intentional attacks. For example, the NEO-6 GPS receiver has an integrated anti-jamming module that provides data to assess the likelihood that a jamming attack is ongoing. Hunkeler et al. showed that the parasitic signal from the GPS jammer interfered with the NEO-6 receiver such that the receiver could not function, while the anti-jamming module reported a very low probability of a jamming attack. Several studies have demonstrated how to calculate the probability of an intentional GPS jamming attack using only information from GPS receivers [141, 142, 143]. Unfortunately, detecting GPS jamming does not prevent disruption of service, which is the main objective of a GPS jamming attack. L3Harris Technologies, Inc. developed a technology, Excelis Sentry 1000, that can detect sources of interference to support timely and effective actionable intelligence [144]. Excelis Sentry 1000 systems can be strategically placed around high-risk areas to instantly sense and triangulate the location of jamming sources [140]. However, this defense strategy may not be effective for CAVs, whose operating locations are not predictable.
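As a hedged illustration of receiver-side jamming detection, the Python sketch below flags broadband interference when most tracked satellites lose C/N0 at the same time, since partial blockage by buildings usually affects only some satellites. The rule, the 8 dB drop, and the 80% fraction are hypothetical and are not the method of Hunkeler et al. [141] or of the NEO-6 module. A complete outage such as a tunnel would also trigger this rule; practical detectors therefore combine C/N0 with other receiver data, such as the automatic gain control (AGC) level, which responds to increased in-band power during jamming.

```python
from typing import Dict

def jamming_suspected(baseline_cn0: Dict[int, float], current_cn0: Dict[int, float],
                      drop_db: float = 8.0, fraction: float = 0.8) -> bool:
    """Flag possible jamming when at least `fraction` of the baseline satellites degrade together.

    A satellite counts as degraded if its C/N0 fell by at least `drop_db`
    or if it is no longer tracked at all.
    """
    if not baseline_cn0:
        return False
    dropped = 0
    for prn, base in baseline_cn0.items():
        cur = current_cn0.get(prn)
        if cur is None or base - cur >= drop_db:
            dropped += 1
    return dropped / len(baseline_cn0) >= fraction

baseline = {5: 44.0, 12: 41.0, 17: 46.0, 23: 39.0, 25: 42.0}
urban = {5: 43.0, 12: 30.0, 17: 45.0, 23: 38.0, 25: 41.0}   # one satellite shadowed
jammed = {5: 31.0, 12: 29.0, 17: 33.0}                       # all degraded, two lost
print(jamming_suspected(baseline, urban))
print(jamming_suspected(baseline, jammed))
```

As the text notes, such a detector can only raise an alarm; it does not restore the lost positioning service.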