A Temporal Approach to Stochastic Network Calculus

Footnote 1: An early version of this paper was partially presented at MASCOTS 2009 [36].

Abstract

Stochastic network calculus is a newly developed theory for stochastic service guarantee analysis of computer networks. In the current stochastic network calculus literature, its fundamental models are based on the cumulative amount of traffic or cumulative amount of service. However, there are network scenarios where direct application of such models is difficult. This paper presents a temporal approach to stochastic network calculus. The key idea is to develop models and derive results from the time perspective. In particular, we define traffic models and service models based on the cumulative packet inter-arrival time and the cumulative packet service time, respectively. Relations among these models as well as with the existing models in the literature are established. In addition, we prove the basic properties of the proposed models, such as delay bound and backlog bound, output characterization, concatenation property and superposition property. These results form a temporal stochastic network calculus and complement the existing results.

Keywords: Stochastic network calculus, max-plus algebra, min-plus algebra, stochastic arrival curve, stochastic service curve, performance guarantee analysis, delay bound, backlog bound

1 Introduction

Stochastic network calculus is a theory dealing with queueing systems found in computer networks [8][15][20][22]. It is particularly useful for analyzing networks where service guarantees are provided stochastically. Such networks include wireless networks, multi-access networks and multimedia networks where applications can tolerate a certain level of violation of the desired performance [14].

Stochastic network calculus is based on properly defined traffic models [7][20][22][27][34][37] and service models [20][22]. In the literature, it is typical to model the arrival process by a stochastic arrival curve and the service process by a stochastic service curve. The arrival curve provides probabilistic upper bounds on the cumulative amount of arrival traffic whereas the service curve lower bounds the cumulative amount of service. In this paper, we call such models space-domain models, from which extensive results have been derived. The five most fundamental properties are [20][22]: (P.1) Service Guarantees including delay bound and backlog bound; (P.2) Output Characterization; (P.3) Concatenation Property; (P.4) Leftover Service; (P.5) Superposition Property. Examples demonstrating the necessity and applications of these basic properties can be found in [20][22].

However, there are many open challenges for stochastic network calculus, making its wide application difficult [22]. One is to analyze networks where users are served probabilistically. For example, in wireless networks, a wireless link is error-prone and consequently retransmission is often adopted to ensure reliability. In random multi-access networks, random backoff and retransmission are used to deal with contention and collision. To apply stochastic network calculus to analyze such networks, it is fundamental to find the stochastic characterization of the service time that provides successful transmissions for the user. However, direct application of existing space-domain models, which are built on the amount of cumulative service, is difficult.

This paper aims to rethink stochastic network calculus to address some of the challenges in current stochastic network calculus literature, such as the analysis of error-prone wireless channels and/or contention-based multi-access. To be specific, we present a temporal approach to stochastic network calculus. The key idea is to develop models and derive results from the time perspective. We define traffic models and service models based on the cumulative packet inter-arrival time and the cumulative packet service time, respectively. In this paper, we shall call such models time-domain models. In addition to their easy use in network scenarios discussed above, the basic properties are also investigated based on the proposed time-domain models. Moreover, relations among the proposed time-domain models as well as with the corresponding space-domain models are established, which provide a tight link between the proposed temporal stochastic network calculus approach and the existing space domain stochastic network calculus approach. This gives increased flexibility in applying stochastic network calculus in challenging network scenarios.

The structure of the paper is as follows. In Section 2 we first introduce the notation and the system specification, followed by a review of the relevant results of stochastic network calculus. Section 3 defines the network calculus models in the time-domain and explores the model transformations. Four fundamental properties are thoroughly investigated in Section 4, where the discussion also reveals the reasons for establishing the model transformations in Section 3. In Section 5, we conclude the paper and discuss open issues.

2 Network Model and Related Work

This section specifies the network system and reviews mathematical preliminaries for the analysis in the following sections. A brief overview on stochastic network calculus of particular relevance to this paper is presented as well.

In this paper, we make the following assumptions unless stated otherwise.

1. All packets have the same length.

2. A packet is considered to be received by a network element when and only when its last bit has arrived at the network element.

3. A packet can be served only when its last bit has arrived.

4. A packet is considered out of a network element when and only when its last bit has been transmitted by the network element.

5. Packets arriving at a network element are queued in the buffer and served in FIFO order. All queues are empty at time 0.

6. All network elements provide sufficient buffer space to store all incoming traffic and are lossless.

2.1 Notations and System Specification

We use , , and , to denote the packet entering the system, its arrival time to the system, its departure time from the system and its service time provided by the system, respectively, where .

1. From the temporal perspective, an arrival process counts the cumulative inter-arrival time between two arbitrary packets and is denoted by for any . Note .

2. A service process describes the cumulative service time received between two arbitrary packets and is denoted by for any . Note .

In the time-domain, the system backlog and system delay are defined below, respectively.

Definition 1.

The system backlog at time is denoted by :

| (1) |

The delay that packet experiences in the system is denoted by :

| (2) |

Moreover, the time that packet waits in queue is denoted by :

| (3) |
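To make the three quantities of Definition 1 concrete, the following Python sketch computes them from recorded arrival and departure times of a FIFO system. The names `arrivals` and `departures`, and the direct counting form used for the backlog, are assumptions introduced here for illustration; the count may differ in form from Eq.(1), although it describes the same quantity.

```python
def system_delay(arrivals, departures, n):
    """Delay of packet n: its departure time minus its arrival time."""
    return departures[n] - arrivals[n]


def waiting_time(arrivals, departures, n):
    """Time packet n waits in queue under FIFO.

    Packet n waits only if it arrives before packet n-1 has departed.
    The first packet finds an empty queue and does not wait.
    """
    if n == 0:
        return 0
    return max(0, departures[n - 1] - arrivals[n])


def backlog(arrivals, departures, t):
    """Number of packets that have arrived by time t but not yet departed."""
    return sum(1 for a, d in zip(arrivals, departures) if a <= t < d)
```

For instance, with arrival times `[0, 1, 2, 10]` and departure times `[3, 6, 9, 13]`, the second packet waits 2 time units and experiences a system delay of 5.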

The following function sets are often used in this paper.

The set of non-negative wide-sense increasing functions is denoted by , where for each function ,

and for any function , we set for all .

We denote by the set of non-negative wide-sense decreasing functions where for each function ,

and for any function , we set for all .

We denote by a subset of , where for each function , its nth-fold integration, denoted by , is bounded for any and still belongs to for any , i.e.,

For ease of exposition, we adopt the following notations in this paper:

In addition, the ceiling and floor functions are used in this paper as well.

1. The ceiling function returns the smallest integer not less than .

2. The floor function returns the largest integer not greater than .

2.2 Mathematical Basis

An essential idea of (stochastic) network calculus is to use alternate algebras, particularly min-plus algebra and max-plus algebra [5], to transform complex non-linear network systems into analytically tractable linear systems. To the best of our knowledge, the existing models and results of stochastic network calculus are mainly based on min-plus algebra, whose basic operations are suitable for characterizing the amount of cumulative traffic and service. As a result, these models focus on describing network behavior from the spatial perspective. Max-plus algebra is suitable for arithmetic operations with cumulative inter-arrival times and service times. Consequently, network modeling from the temporal perspective relies more on max-plus algebra. In the following, we review the basics of both min-plus algebra and max-plus algebra.

In min-plus algebra, the ‘addition’ operation represents infimum or minimum when it exists, and the ‘multiplication’ operation is ordinary addition (+). The min-plus convolution of functions , denoted by , is defined as

where, when it applies, ‘infimum’ should be interpreted as ‘minimum’. The min-plus deconvolution of functions , denoted by , is defined as

where, when it applies, ‘supremum’ should be interpreted as ‘maximum’.

In max-plus algebra, the ‘addition’ operation represents supremum or maximum when it exists, and the ‘multiplication’ operation is ordinary addition (+). The max-plus convolution of functions , denoted by , is defined as

where, when it applies, ‘supremum’ should be interpreted as ‘maximum’. The max-plus deconvolution of functions , denoted by , is defined as

where, when it applies, ‘infimum’ should be interpreted as ‘minimum’.

The max-plus convolution is associative and commutative [5].

1. Associativity: for any , .

2. Commutativity: for any , .
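As an illustration of the two convolutions, the sketch below evaluates them on finite sequences indexed by 0, 1, 2, ... and checks commutativity and associativity numerically. It assumes the standard forms, minimum over 0 <= s <= t of f(s) + g(t - s) for min-plus and maximum over 0 <= m <= n of f(m) + g(n - m) for max-plus; the function names and the list representation are hypothetical choices made here.

```python
def min_plus_conv(f, g):
    """Min-plus convolution: result[t] = min over 0 <= s <= t of f[s] + g[t - s]."""
    size = min(len(f), len(g))
    return [min(f[s] + g[t - s] for s in range(t + 1)) for t in range(size)]


def max_plus_conv(f, g):
    """Max-plus convolution: result[n] = max over 0 <= m <= n of f[m] + g[n - m]."""
    size = min(len(f), len(g))
    return [max(f[m] + g[n - m] for m in range(n + 1)) for n in range(size)]


# Example sequences (wide-sense increasing, starting at 0).
f = [0, 2, 5, 9]
g = [0, 1, 3, 4]
h = [0, 3, 3, 8]

# Commutativity and associativity of the max-plus convolution on these samples.
commutative = max_plus_conv(f, g) == max_plus_conv(g, f)
associative = (max_plus_conv(max_plus_conv(f, g), h)
               == max_plus_conv(f, max_plus_conv(g, h)))
```

Because entry n of either convolution depends only on entries 0 to n of its operands, truncating the sequences to a common length does not affect the computed values.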

2.3 State of The Art in Stochastic Network Calculus

The available literature on stochastic network calculus mainly focuses on modeling network behavior and analyzing network performance from the spatial perspective [6][12][15][16][20][22][23][28][29][31]. We call the corresponding models and results space-domain models and results in this paper.

In order to characterize the arrival process of a flow from the spatial perspective, let us consider the amount of traffic generated by this flow in a time interval , denoted by . In the context of stochastic network calculus, the arrival curve model is defined based on a stochastic upper bound on the cumulative amount of the arrival traffic. Here, we only review one relevant space-domain arrival curve model, the virtual-backlog-centric (v.b.c) stochastic arrival curve (SAC) [22].

Definition 2.

(v.b.c Stochastic Arrival Curve)

A flow is said to have a virtual-backlog-centric (v.b.c) stochastic arrival curve with bounding function , if for all and all , there holds

| (4) |

In stochastic network calculus, the service curve model is defined as a stochastic lower bound on the cumulative amount of service provided by the system. Two space-domain service curve models [22] are reviewed here.

Definition 3.

(Weak Stochastic Service Curve)

A network system is said to provide a weak stochastic service curve with bounding function for the arrival process , if for all and all , there holds

| (5) |

where denotes the cumulative amount of the departure traffic.

Unlike the arrival curve, it is difficult to identify the service curve from (5) because it couples the arrival process, the service curve and the departure process. Thus we need a more explicit model to directly reveal the relation between the service process and its service curve such as the following model [20].

Definition 4.

(Stochastic Strict Service Curve)

A network system is said to provide a stochastic strict service curve with bounding function , if during any period , the amount of service provided by this system satisfies, for any ,

| (6) |

Definition 4 is applied to ‘any period’ which implies both worst-case scenario and other scenarios. If we could determine a function which makes Eq.(6) hold under the worst-case scenario, then Eq.(6) automatically holds under other scenarios as well.

Based on the arrival curve and service curve models, five fundamental properties have been proved to facilitate tractable analysis. For example, they can be used to derive service guarantees including delay bound and backlog bound, characterize the behavior of traffic departing from a server, describe the service provided along a multi-node path, determine the arrival curve for the aggregate flow, and compute the service provided to each constituent flow.

1. P.1: Service Guarantees (single-node). Under the condition that the traffic arrival process has an arrival curve with bounding function and the network node provides service with a service curve model with bounding function , the stochastic delay bound and stochastic backlog bound can be derived. Particularly, the backlog bound is related to the maximal vertical distance between and ; the delay bound is related to the maximal horizontal distance between and .

2. P.2: Output Characterization. To analyze the end-to-end performance of a multi-hop path, one option is the node-by-node analysis approach. This approach requires characterizing the traffic behavior after the traffic has been served and leaves the previous node. The output process of a flow from a node can also be characterized by an arrival curve which is determined by both the arrival curve of the arrival process and the service curve of the service process.

3. P.3: Concatenation Property (multi-node). Network calculus possesses a unique property, the concatenation property, which is also used to analyze the end-to-end performance but improves the results obtained from the node-by-node analysis. The essence of the concatenation property is to represent a series of nodes in tandem as a ‘black box’ which can be treated as a single node. The service curve of this equivalent system is determined by the service curves of all individual nodes along this path.

4. P.4: Superposition Property (aggregate flow). Flow aggregation is very common in packet-switched networks. If multiple flows are aggregated into a single flow under the FIFO order, the aggregate flow also has an arrival curve which is the summation of the arrival curves of all constituent flows.

5. P.5: Leftover Service Characterization (per-flow). The leftover service characterization makes per-flow performance analysis feasible under FIFO aggregate scheduling. The crucial concept is to represent all other constituent flows as an ‘aggregate cross flow’ which can be characterized using an arrival curve. Then the service provided to the constituent flow of interest can also be described by a service curve which is determined by the service curve provided to all arrival flows and the arrival curve of the ‘aggregate cross flow’.
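The vertical and horizontal distances mentioned in P.1 can be illustrated numerically. The sketch below is a deterministic toy case on an integer time grid, not one of the stochastic results of [20][22]: the token-bucket arrival curve, the rate-latency service curve, their parameter values and all function names are assumptions introduced here. In the stochastic setting, the bounds additionally carry the bounding functions of the two curves.

```python
def vertical_deviation(alpha, beta, horizon):
    """Largest alpha(t) - beta(t) over the integer grid 0..horizon."""
    return max(alpha(t) - beta(t) for t in range(horizon + 1))


def horizontal_deviation(alpha, beta, horizon):
    """Largest shift d such that beta(t + d) first reaches alpha(t), over 0..horizon.

    The shift is searched on the integer grid, so it is rounded up
    to a whole time unit. The search assumes beta grows without bound.
    """
    worst = 0
    for t in range(horizon + 1):
        d = 0
        while beta(t + d) < alpha(t):
            d += 1
        worst = max(worst, d)
    return worst


def token_bucket(t, burst=4, rate=1):
    """Hypothetical arrival curve: burst + rate * t."""
    return burst + rate * t


def rate_latency(t, rate=2, latency=3):
    """Hypothetical service curve: rate * max(0, t - latency)."""
    return rate * max(0, t - latency)


backlog_distance = vertical_deviation(token_bucket, rate_latency, 20)
delay_distance = horizontal_deviation(token_bucket, rate_latency, 20)
```

With these assumed parameters the vertical distance is 7 and the horizontal distance is 5, which agree with the closed forms burst + rate × latency and latency + burst / service rate for this pair of curves.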

The superposition property of the v.b.c SAC [22] is reviewed here because it is relevant to the model transformations discussed later.

Theorem 1.

Consider flows with arrival processes , , respectively. If each arrival process has a v.b.c SAC with bounding function , then the aggregate arrival process has a v.b.c SAC with bounding function , where

3 Time-domain Modeling and Transformations

This section defines traffic and service models in the time-domain. Particularly, traffic models are defined based on probabilistic lower bounds on the cumulative inter-arrival time between two arbitrary packets. Service models are defined in terms of the virtual time function and probabilistic upper bounds on the cumulative service time between two arbitrary packets. Moreover, we establish the transformations among these models as well as the transformation between the time-domain model and the space-domain model.

3.1 Time-domain Traffic Models

Consider an arrival process that specifies packets arriving to a network system at time , . In order to stochastically guarantee a certain level of QoS to this arrival process, this arrival process should be constrained. By characterizing the constrained arrival traffic from the temporal perspective, we define an inter-arrival-time (i.a.t) stochastic arrival curve model.

Definition 5.

(i.a.t Stochastic Arrival Curve)

A flow is said to have an inter-arrival-time (i.a.t) stochastic arrival curve with bounding function , if for any and , there holds

| (7) |

Eq.(7) indicates that function is a probabilistic lower bound on the cumulative inter-arrival time. The violation probability that the cumulative inter-arrival time is smaller than is bounded above by function . If for all , Eq.(7) represents a time-domain deterministic arrival curve [10] which is a special case of the i.a.t SAC.

Queueing theory typically characterizes the arrival process using the probability distribution of the inter-arrival time between two consecutive customers:

Comparing with Eq.(7), we notice that Eq.(7) gives a more general probability expression of the inter-arrival time between two arbitrary packets. From this viewpoint, is a special case of Eq.(7).

Example 1.

Consider a flow of packets with fixed packet size. Suppose that packet inter-arrival times follow an exponential distribution with mean . Then, the packet arrival time has an Erlang distribution with parameter [1], where denotes the number of arrival packets. For any two packets and , their inter-arrival time satisfies, for ,

where . Thus, the flow has an i.a.t SAC .
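The Erlang probability used in Example 1 can be evaluated directly. The sketch below assumes independent exponential inter-arrival times with a given rate (the reciprocal of the mean) and takes `k` to be the number of inter-arrival intervals separating the two packets; both the parameter names and this indexing convention are assumptions made here, and the function is the plain Erlang distribution function rather than the bounding function of the example.

```python
import math


def erlang_cdf(k, rate, x):
    """P{sum of k i.i.d. exponential(rate) inter-arrival times <= x}.

    This is the probability that the cumulative inter-arrival time
    over k intervals is at most x.
    """
    if k == 0:
        # No intervals: the cumulative inter-arrival time is zero.
        return 1.0 if x >= 0 else 0.0
    if x <= 0:
        return 0.0
    lx = rate * x
    tail = sum(math.exp(-lx) * lx ** j / math.factorial(j) for j in range(k))
    return 1.0 - tail
```

For a single interval the function reduces to the exponential distribution function, and for a fixed `x` the probability shrinks as `k` grows, since more intervals make a short cumulative inter-arrival time less likely.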

The i.a.t SAC is simple but has limited applications. For example, consider a virtual single server queue (SSQ) fed with the arrival traffic which has an i.a.t SAC with bounding function . Suppose that the virtual SSQ provides a constant service time for each packet. From Eq.(3), the waiting delay of experienced in the virtual SSQ is

| (8) | |||||

| (9) |

where is the beginning of the backlogged period within which packet is transmitted. Eq.(8) is derived from the departure time given in Eq.(13). Eq.(9) is called the virtual-waiting-delay property. It is difficult to compute the virtual-waiting-delay from Eq.(7). When investigating the performance guarantees such as delay bound and backlog bound in Section 4.1, we face a similar difficulty.
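The waiting delay in a virtual SSQ with constant per-packet service time can be computed in two equivalent ways, which the following sketch compares. It assumes a constant service time `tau` and writes the waiting delay of packet n as the maximum over m <= n of (n - m) × tau minus the cumulative inter-arrival time between packets m and n; this form and the names `arrivals` and `tau` are assumptions chosen here to mirror the virtual-waiting-delay property.

```python
def waiting_by_recursion(arrivals, tau):
    """Waiting delays via the recursion W(n) = max(0, W(n-1) + tau - gap)."""
    waits = [0]
    for n in range(1, len(arrivals)):
        gap = arrivals[n] - arrivals[n - 1]
        waits.append(max(0, waits[-1] + tau - gap))
    return waits


def waiting_by_sup(arrivals, tau):
    """Waiting delays via max over m <= n of (n - m) * tau - (a(n) - a(m))."""
    return [
        max((n - m) * tau - (arrivals[n] - arrivals[m]) for m in range(n + 1))
        for n in range(len(arrivals))
    ]
```

Both functions return `[0, 2, 4, 0, 2]` for arrival times `[0, 1, 2, 10, 11]` and `tau = 3`. The maximum is attained at the packet that starts the backlogged period, which is why the second form needs to look back over all earlier packets.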

In order to deal with the difficulty of computing the virtual-waiting-delay, we define another stochastic arrival curve model based on Eq.(9).

Definition 6.

(v.w.d Stochastic Arrival Curve)

A flow is said to have a virtual-waiting-delay (v.w.d) stochastic arrival curve with bounding function , if for any and , there holds

| (10) |

Through some manipulations, Eq.(10) can be expressed as the max-plus convolution:

| (11) |

Here, can be considered as the expected time that the packet would arrive to the head-of-line (HOL) if the flow has passed through a virtual SSQ with the (deterministic) service curve . The packet is expected to arrive not earlier than the expected HOL time. Here represents the difference between the expected HOL time and the actual arrival time. The violation probability is bounded by the non-increasing function .

We use the v.w.d SAC to characterize the arrival traffic in Example 1.

Example 2.

Consider a flow that consists of packets with a fixed packet size. Suppose that all packet inter-arrival times are exponentially distributed with mean . Based on the steady-state probability mass function (PMF) of the queue-waiting time for an M/D/1 queue [33], we say that the flow has a v.w.d SAC with bounding function for . Let . We obtain the bounding function of the probability that the waiting delay exceeds

where, denotes the floor function.

The definition of the v.w.d SAC is stricter than that of the i.a.t SAC. As a result, it is not trivial to derive the v.w.d SAC for an arrival process even if it can be characterized by an i.a.t SAC. Thus, it is important to explore whether there exists some relationship between the i.a.t SAC and the v.w.d SAC.

Theorem 2.

1. If a flow has a v.w.d SAC with bounding function , then the flow has an i.a.t SAC with the same bounding function .

2. Conversely, if a flow has an i.a.t SAC with bounding function , it also has a v.w.d SAC with bounding function , where for . (Footnote 2: Note that should not be greater than .)

Remark. In the second part, while not . If the requirement on the bounding function is relaxed to , the second part may not hold in general.

Theorem 2 reveals that if an arrival process can be modeled by a v.w.d SAC , then is also the i.a.t SAC of this arrival process. On the other hand, if an arrival process can be modeled by an i.a.t SAC with the associated bounding function in , then this arrival process also has a v.w.d SAC which may be associated with a looser bounding function.

It is worth highlighting that the v.w.d SAC looks similar to the v.b.c SAC (see Definition 2) defined in the space-domain. Since these two models play an important role in performance analysis in their respective domains, we establish their relationship in the following theorem.

Theorem 3.

1. If a flow has a space-domain v.b.c SAC with bounding function , the flow has a time-domain v.w.d SAC with bounding function , where

with denoting the inverse function of , where

Specifically, if is sub-additive, .

2. Conversely, if a flow has a time-domain v.w.d SAC with bounding function , the flow has a space-domain v.b.c SAC with bounding function , where

with denoting the inverse function of , where

Specifically, if is sub-additive, . (Footnote 3: [4] clarifies that defines a meaningful constraint only if it is subadditive. If is not subadditive, it can be replaced by its subadditive closure.)

Note that in Theorem 3, the arrival curve denotes the cumulative number of arrival packets rather than the cumulative amount (in bits) of arrival traffic.

The generalized stochastically bounded burstiness (gSBB) [38] is a special case of the space-domain v.b.c SAC. A summary of some well-known traffic types belonging to gSBB is given in [22], including both Gaussian self-similar processes [2][11][25][30], such as fractional Brownian motion, and non-Gaussian self-similar processes, such as stable self-similar process [3][24], and the stochastic traffic model [7][9]. With Theorem 3, the following example shows that gSBB can be readily represented using the time-domain v.w.d SAC.

Example 3.

If an arrival process can be described by gSBB with upper rate and bounding function , i.e., for any , there holds

then the process has a v.b.c SAC with the bounding function . With Theorem 3 (1), the arrival process has a v.w.d SAC which is sub-additive and the bounding function , i.e.,

Remark. Theorem 3 allows us to readily utilize the results of gSBB traffic for time-domain models. If the traffic is more suitable for being characterized by the time-domain traffic models rather than the space-domain traffic models, then the transformation between the two domains can facilitate the analysis.

3.2 Time-domain Service Models

Queueing theory characterizes the service process of a system based on the per customer service time. Like the arrival model, time-domain service models extend to the cumulative service time.

If packet arrives to a network system after packet has departed from the system, the departure time of is the arrival time plus the service time , i.e., . If arrives to the system while is still in the system, then its departure time is . The combination of both cases gives the departure time of

| (12) |

with . Applying Eq.(12) iteratively to its right-hand side results in

| (13) |
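The two cases described above combine into a recursion in which each packet departs one service time after the later of its own arrival and the previous departure. The sketch below implements this recursion and its iterated expansion, a maximum over all possible starting packets m of the arrival time of m plus the service times of packets m through n. The list names `arrivals` and `services` are hypothetical, and the expansion is written here in the form this recursion implies rather than copied from Eq.(13).

```python
def departures_by_recursion(arrivals, services):
    """Departure times via d(n) = max(a(n), d(n-1)) + service(n)."""
    departures = []
    previous = 0  # all queues are empty at time 0
    for arrival, service in zip(arrivals, services):
        previous = max(arrival, previous) + service
        departures.append(previous)
    return departures


def departures_by_expansion(arrivals, services):
    """Departure times via max over m <= n of a(m) + sum of services m..n."""
    return [
        max(arrivals[m] + sum(services[m:n + 1]) for m in range(n + 1))
        for n in range(len(arrivals))
    ]
```

Both functions give `[2, 7, 8, 14]` for arrival times `[0, 1, 2, 10]` and service times `[2, 5, 1, 4]`. The maximizing index m is the packet that begins the backlogged period containing packet n.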

The system usually allocates a minimum service rate to an arrival flow in order to meet its QoS requirements. The guaranteed minimum service rate is related to the guaranteed maximum service time for each packet of the flow. Accordingly, the time that the packet departs from the system is bounded. Denote the guaranteed maximum service time by . The Guaranteed Rate Clock (GRC) is defined based on [17] [18]:

| (14) |

with . Applying Eq.(14) iteratively to its right-hand side yields

| (15) |

Eq.(15) is similar to Eq.(13) except that represents the guaranteed departure time while is the actual departure time. (Footnote 4: The guaranteed departure time is actually error term [17], where the error term is determined by the employed service discipline. The underlying service discipline considered throughout this paper is FIFO, under which the error term is .)

If is denoted by a function , then Eq.(15) becomes

| (16) |

which is the basis for the time-domain (deterministic) service model [10]. For systems that only provide service guarantees stochastically or applications that require only stochastic QoS guarantees, the service time may not need to be deterministically guaranteed. In this case, we extend the (deterministic) service curve into a probabilistic one.

Definition 7.

(i.d Stochastic Service Curve)

A system is said to provide an inter-departure time (i.d) stochastic service curve with bounding function , if for any , there holds

| (17) |

Note that the stochastic service curve of a service process is not unique. Therefore optimization is needed to find the SSC of a specific system.

Example 4.

Consider two nodes, the transmitter and the receiver. They communicate through an error-prone wireless link which is modeled as a slotted system. The wireless link can be considered as a stochastic server. Packets have a fixed length and are served in a FIFO manner by the transmitter. To simplify the analysis, we assume that the length of a time slot equals one packet transmission time. (Footnote 5: This means that we only count the number of time slots in this example.)

The transmitter sends packets only at the beginning of a time slot. Due to the error-prone nature of the wireless link, the probability that a packet is successfully transmitted is determined by the packet error rate (PER). Here, we assume that packet errors happen independently in every transmission with a fixed PER denoted by . The successful transmission probability of one packet is hence . If an error occurs, the unsuccessfully transmitted packet is retransmitted immediately in the next time slot. In order to guarantee 100% reliability, the packet is retransmitted until it is successfully received by the receiver.

The per-packet service time is a geometric random variable with parameter . The cumulative service time of successfully transmitting packets to is which follows the negative binomial distribution with parameter . The mean service time denoted by equals

According to the complementary cumulative distribution function (CCDF) of the negative binomial distribution, the cumulative service time between two arbitrary packets and is given by

| (18) |

for any , where is the ceiling function.

The right-hand side of Eq.(18) represents the bound on the probability that the actual cumulative service time exceeds the cumulative mean service time. Let for and denote the right-hand side of Eq.(18). From Definition 7, we know

from which, we have

Thus, we conclude that this error-prone wireless link provides an i.d SSC with the bounding function for , where

Since Eq.(18) involves only the cumulative service time and not the arrival process, it provides a method to find the i.d SSC.

Remark. Example 4 demonstrates that we can obtain the i.d SSC from analyzing the per-packet service time. However, if applying the space-domain results to this case, we need an impairment process [22] to characterize the cumulative amount of service consumed by unsuccessful transmissions. In other words, we still need to compute the cumulative slots due to failed transmissions and then convert them into the amount of service. Such conversion may introduce error or result in looser bounds, whereas the time-domain model directly computes the service time and avoids the conversion error. This simple example thus illustrates the feasibility of the time-domain service curve model.
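The cumulative service time of Example 4 can be explored numerically. The sketch below assumes independent slot failures with a fixed error rate `per` and computes the probability that `k` packets need more than `x` slots in total, using the fact that this happens exactly when fewer than `k` slots succeed among the first floor(x) slots. The function and parameter names are hypothetical, and the expression is an exact tail probability written in a form that may differ from Eq.(18).

```python
import math


def cumulative_service_ccdf(k, per, x):
    """P{k packets need more than x slots}, for k >= 1 and 0 <= per < 1.

    Each slot fails independently with probability per; a packet is
    retransmitted until one slot succeeds.
    """
    trials = math.floor(x)
    if trials < k:
        # k packets need at least k slots, so the event is certain.
        return 1.0
    success = 1.0 - per
    # More than `trials` slots are needed exactly when fewer than k
    # of the first `trials` slots are successful.
    return sum(
        math.comb(trials, j) * success ** j * per ** (trials - j)
        for j in range(k)
    )


def mean_cumulative_service(k, per):
    """Mean number of slots needed to deliver k packets."""
    return k / (1.0 - per)
```

For example, with `per = 0.2` a single packet needs more than 3 slots with probability 0.2 cubed, that is 0.008, and with `per = 0.25` three packets need 4 slots on average.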

In Section 4, we show that many results can be derived from the i.d SSC. However, without additional constraints, we have difficulty in proving the concatenation property for the i.d SSC. To address this difficulty, we introduce another service curve model in the following.

Definition 8.

(-Stochastic Service Curve)

A system is said to provide an -stochastic service curve with bounding function , if for any , there holds

| (19) |

for any small .

Note that the left-hand side of Eq.(19) represents a property that is typically hard to calculate. It means that Definition 8 is stricter than Definition 7. Thus it is important to find the relationship between the i.d SSC and the -stochastic service curve.

Theorem 4.

1. If a system provides to its arrival process an -stochastic service curve with bounding function , it provides to the arrival process an i.d SSC with the same bounding function ;

2. If a system provides to its arrival process an i.d SSC with bounding function , it provides to the arrival process an -stochastic service curve with bounding function for , where

Again, in the second part of Theorem 4, while not . If the requirement on the bounding function is relaxed to , the above relationship may not hold in general.

Definition 7 explores the relationship between the arrival process and the departure process, but it does not explicitly characterize the service process. From Eq.(17), it is not trivial to find the stochastic service curve for a specific system. Example 4 illustrates how to add some increment to the stochastic service curve . To this end, we expand Eq.(16) to

| (20) |

Without loss of generality, assume () is the beginning of the backlogged period in which packet is served. Then,

and .

We rewrite the right-hand side of Eq.(20) as

| (21) | |||||

Note that Eq.(21) holds for arbitrary . Inspired by this, we define a new service curve model.

Definition 9.

(Stochastic Strict Service Curve)

A system is said to provide a stochastic strict service curve with bounding function , if the cumulative service time between two arbitrary packets and (Footnote 6: if and are in the same backlogged period, .) satisfies for any ,

| (22) |

Eq.(21) reveals a relationship between the i.d SSC and the stochastic strict service curve. Furthermore, with Theorem 4(2), the relationship between the stochastic strict service curve and the stochastic service curve is obtained.

Theorem 5.

Consider a system providing a stochastic strict service curve with bounding function .

1. It provides an i.d SSC with the same bounding function .

2. If , it provides an -stochastic service curve with bounding function , where

Note that the second part of Theorem 5 requires the bounding function while not .

4 Fundamental Properties

In this section, we explore the four fundamental properties for time-domain models, i.e., service guarantees, output characterization, concatenation property and superposition property. Some properties can only be proved for the combination of a specific traffic model and a specific service model. This is why we have established various transformations between models in Section 3. With these transformations, we can flexibly apply the corresponding models to specific network scenarios.

4.1 Service Guarantees

Suppose that the arrival process has a v.w.d SAC and the service process has an i.d SSC. Under this condition, we derive the delay bound and backlog bound.

4.1.1 Delay Bound

The system delay significantly impacts QoS and is an important performance metric.

Theorem 6.

(System Delay Bound).

Consider a system that provides an i.d SSC with bounding function to the input which has a v.w.d SAC with bounding function . Let be the system delay of packet . For , is bounded by

| (23) |

If the arrival process and the service process are independent of each other, we obtain another system delay bound according to Lemma 6.1 [22].

Lemma 1.

(System delay bound: independent condition)

Consider a system that provides an i.d SSC with bounding function to the arrival process which has a v.w.d SAC with bounding function . Suppose that the arrival process and the service process are independent of each other. Then for , the system delay is bounded by

| (24) |

where and .

4.1.2 Backlog Bound

The system backlog represents the total number of packets in the system at time , including both the packets waiting in the buffer and the packet being served. It is determined by Eq.(1):

The following theorem provides a probabilistic bound on the system backlog for the given arrival process and service process.

Theorem 7.

(Backlog Bound)

Consider that a system provides an i.d SSC with bounding function to the arrival process which has a SAC with bounding function . The system backlog at time () is bounded by

| (25) |

for .

Let

represent the maximum horizontal distance between functions and . The probability that exceeds is bounded by

| (26) |

Remark. can be considered as the maximum system backlog in a (deterministic) virtual system, where the arrival process is and the service process is . Eq.(26) is thus a bound on this maximum system backlog.

If the arrival process and the service process are independent of each other, another backlog bound is derived according to Lemma 6.1 [22].

Lemma 2.

(Backlog Bound: independent condition)

Consider that a system provides an i.d SSC with bounding function to the arrival process which has a SAC with bounding function . Suppose that the arrival process and the service process are independent of each other. Then the system backlog at time () is bounded by:

| (27) |

for .

The probability that exceeds is bounded by

| (28) |

4.2 Output Characterization

The previous section showed how to derive the service guarantees at a single node. Performance analysis also commonly deals with end-to-end performance. An intuitive and simple approach is node-by-node analysis [19], which requires characterizing the departure process from a single node.

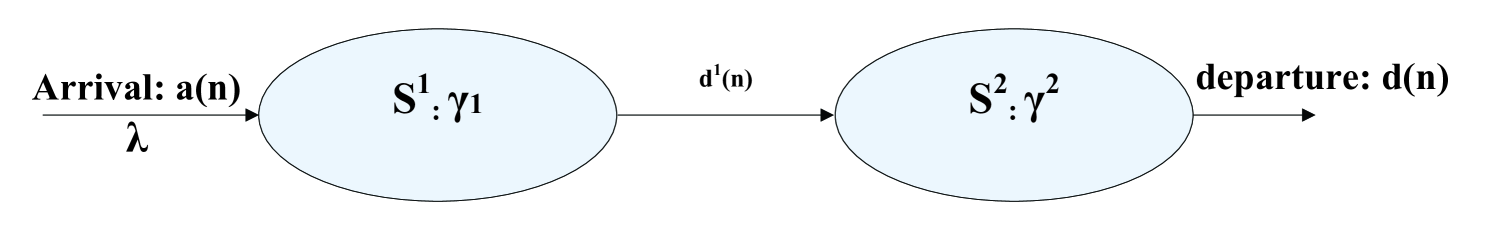

Let us consider a simple network as shown in Figure 1. The departure process of Server is the arrival process for Server .

The delay bound in Server 1 can be derived from the result of Section 4.1.1. To derive the delay bound in Server 2, we need to characterize the arrival process to Server 2, which is the departure process from Server 1. The problem is how to characterize the departure process from Server 1.

Theorem 8.

(Output Characterization)

Consider that a system provides an i.d SSC with bounding function to its arrival process which has a SAC with bounding function . The output has an i.a.t SAC with bounding function , i.e., for any , there holds

| (29) |

Remark. In Theorem 8, the initial arrival process has a SAC while the departure process has an SAC. In order to derive the service guarantees in Server 2, we need Theorem 2 (2) to transform the SAC into a SAC. This transformation loosens the bounding function, so the node-by-node analysis generates a loose end-to-end delay bound. Network calculus possesses an attractive property, the concatenation property, which is used for end-to-end performance analysis. A comparison between the node-by-node analysis and the concatenation analysis reveals that the latter yields a tighter end-to-end delay bound [21].

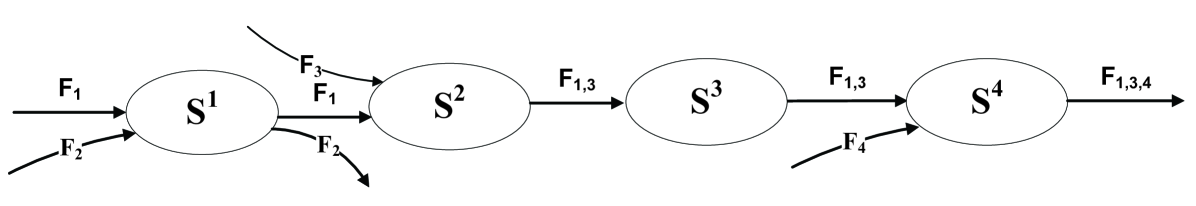

The output characterization property is, however, very useful when analyzing complicated network scenarios, such as Figure 2, where flows join or leave dynamically. In order to analyze the per-flow service guarantees, the departure process from each single node should be characterized using the arrival process to the node and the service process provided by the node.

Moreover, if the arrival process and the service process are independent of each other, the following lemma characterizes the departure process.

Lemma 3.

(Output Characterization: independent condition.)

Consider that a system provides an i.d SSC with bounding function to its arrival process which has a SAC with bounding function . The output has an i.a.t SAC with bounding function , where

| (30) |

4.3 Concatenation Property

The concatenation property aims to use an equivalent system to represent a system of multiple servers connected in tandem if each server provides a service curve to its input. Then this equivalent system can be considered as a ‘black box’ which also provides the initial input with a service curve.

In the following discussion, and denote the stochastic service curve and bounding function of the th server. For packet , the time arriving to the th server is and the time departing from the th server is . For a network of tandem servers, the initial arrival is and the final departure is .
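The notation above chains nodes by feeding each node's departure times to the next node as arrival times. A minimal sketch of that chaining on one sample path follows, assuming each node is a work-conserving FIFO single server that starts empty; the function names and the numeric service times are hypothetical.

```python
def node_departures(arrivals, service_times):
    """Departure times of one FIFO single-server node that starts empty."""
    out = []
    prev_departure = 0.0
    for a, s in zip(arrivals, service_times):
        prev_departure = max(a, prev_departure) + s
        out.append(prev_departure)
    return out

def tandem_departures(arrivals, per_node_service):
    """Final departure times after passing through all nodes in order.

    per_node_service[h][n] is the service time of packet n at node h.
    The departure process of node h is the arrival process of node h+1.
    """
    current = list(arrivals)
    for service_times in per_node_service:
        current = node_departures(current, service_times)
    return current

# Hypothetical two-node example.
arrivals = [0.0, 1.0, 2.0]
per_node_service = [
    [1.0, 1.0, 1.0],  # node 1
    [0.5, 2.0, 0.5],  # node 2
]
final = tandem_departures(arrivals, per_node_service)
end_to_end_delay = [d - a for a, d in zip(arrivals, final)]
```

The concatenation property replaces this per-node recursion with a single equivalent service description for the whole tandem, which is what allows one end-to-end bound instead of a sum of per-node bounds.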

Theorem 9.

(Concatenation Property)

Consider a flow passing through a system of nodes connected in tandem. If each node (= 1,2,…,N) provides an SSC with bounding function to its input, the system provides to the initial input an SSC with bounding function , where

with

for and , and

for and .

The proof of Theorem 9 utilizes the relationship between the SSC and the stochastic service curve. The following lemma directly describes the service characterization of a system of nodes connected in tandem, where each single node provides an stochastic service curve to its input.

Lemma 4.

Consider a flow passing through a system of nodes connected in tandem. If each node (= 1,2,…,N) provides an -stochastic service curve with bounding function to its input, i.e.,

then the system provides to the initial arrival process an SSC with bounding function :

where , , for any small .

Remark. The proof of the concatenation property reveals another reason for defining the stochastic service curve model.

4.4 Superposition Property

The superposition property can be applied for multiplexing individual flows into an aggregated flow under the FIFO aggregate scheduling. The arrival process of the aggregate flow can be characterized by a stochastic arrival curve if the arrival process of each individual flow can be stochastically characterized by a stochastic arrival curve. Then we only need to analyze the service guarantees for the aggregate flow since all constituent flows are served equally.

4.4.1 Superposition of Renewal Processes

The superposition of multiple flows is essentially an instance of a well-known research problem, the superposition of renewal processes. In queueing networks, an individual server may receive inputs from different sources. It is reasonable to assume that the arrival process to a server is a superposition of statistically independent constituent processes [26]. The individual constituent processes are typically considered as renewal processes. A renewal process is a counting process in which the times between successive events are independent and identically distributed, possibly with an arbitrary distribution [32].

The superposition of renewal processes has been widely studied since the original investigation by Cox and Smith [13]. However, the renewal property is not preserved under superposition except for Poisson sources. More precisely, the inter-arrival times in the superposition process become statistically dependent. This property cannot be captured by the renewal model [35].

In the following, we introduce how to characterize the superposition processes of multiple flows from a network calculus viewpoint.

4.4.2 Arrival Time Determination

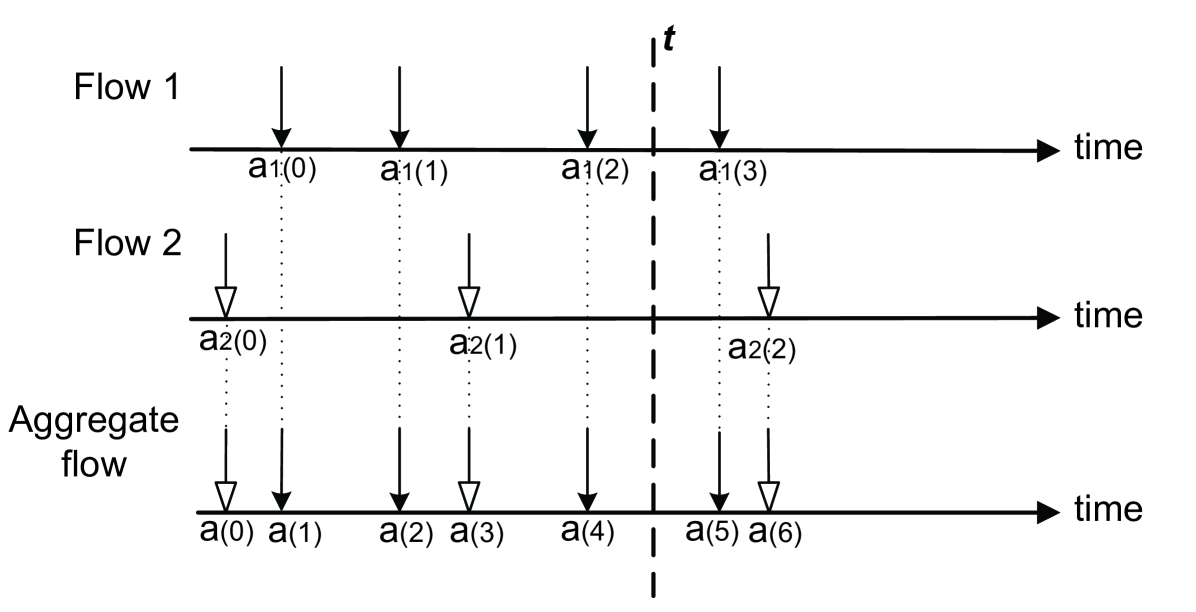

First, we only consider the superposition of two flows denoted by and . Let , and be the arrival process of , and the aggregate flow, respectively. As shown in Figure 3, and are aggregated in the FIFO manner. If two or more packets belonging to different flows arrive simultaneously, they are inserted into the FIFO queue in arbitrary order.

Figure 4 shows that the arrival process of the aggregate flow depends on the arrival processes of the two constituent flows.

Recall that denotes the th packet of the aggregate flow. The same notation is also used for constituent flows and . Thus, packet of the aggregate flow is either the th packet of flow (i.e., ) or the th packet of flow (i.e., ), where . When , no packet of flow has arrived yet. When , no packet of flow has arrived yet. By convention, we adopt for . Since takes values from to , there are combinations.

Theorem 10.

Consider that two flows and arrive to a network system and are aggregated into one flow in the FIFO manner. Let , and be the arrival process of flows , and , respectively. Then the packet arrival time of the aggregate flow is determined by

| (31) |

with

We use an example to explain the underlying concept of Theorem 10. In Figure 4, observe the arrival process of the aggregate flow at time . Packet (arrival time: ) is the last arrival packet, which is either packet or packet , depending on which packet’s arrival time is closer to time , i.e., for , the arrival time of packet is one element of the following set denoted by

i.e., . We notice that is actually the expansion of Eq.(31). According to the packet arrival times of two constituent flows shown in Figure 4, we have

which is consistent with Figure 4.
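The idea that the n-th aggregate packet is one of n+1 candidate splits between the two flows can be checked numerically. The sketch below uses a min-max characterization: for each split (m packets from the first flow, n-m from the second), it takes the later of the two arrival times, and then the earliest such time over all splits. This form is an assumption consistent with the description above and may differ in presentation from Eq.(31); the convention that the "0-th packet" arrives at time 0, the function name and the arrival times are likewise illustrative.

```python
def aggregate_arrival_time(a1, a2, n):
    """Arrival time of the n-th packet (n >= 1) of the FIFO aggregate.

    a1, a2: sorted arrival times of the two constituent flows.
    Assumed convention: the "0-th packet" of either flow arrives at time 0.
    """
    best = float("inf")
    for m in range(n + 1):          # m packets from flow 1, n - m from flow 2
        k = n - m
        if m > len(a1) or k > len(a2):
            continue                # this split needs packets not in the trace
        t1 = a1[m - 1] if m > 0 else 0.0
        t2 = a2[k - 1] if k > 0 else 0.0
        best = min(best, max(t1, t2))
    return best

# Hypothetical arrival times of two flows.
a1 = [1.0, 4.0, 6.0]
a2 = [2.0, 3.0, 7.0]
merged = sorted(a1 + a2)            # reference: direct FIFO merge
computed = [aggregate_arrival_time(a1, a2, n) for n in range(1, 7)]
```

For every n the min-max value coincides with the n-th entry of the directly merged trace, which is the consistency that the worked example above illustrates with Figure 4.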

Theorem 10 can be generalized to the aggregation of flows.

Corollary 1.

Consider that flows ,,…, arrive to a network system and are aggregated into one flow in the FIFO manner. Let , ,…, and be the arrival process of the constituent flows and the aggregate flow, respectively. Then the packet arrival time of the aggregate flow is determined by

| (32) |

with

4.4.3 Superposition Process Characterization

Eq.(31) can compute the packet arrival time of the aggregate flow. However, it remains difficult to characterize the packet inter-arrival time of the aggregate flow if the packet inter-arrival times of the two constituent flows follow general distributions. For this reason, it is difficult to directly characterize the arrival process of the aggregate flow from the temporal perspective. Alternatively, we rely on the available results of the superposition property explored in the space-domain (see Theorem 1).

In the space-domain, the traffic arrival process is characterized based on the cumulative amount of arrival traffic. In the following, we use , and to denote the cumulative number of arrival packets of the aggregate flow up to time , the cumulative number of arrival packets of up to time and the cumulative number of arrival packets of up to time , respectively. is the sum of and , from which we can find the stochastic arrival curve for the aggregate flow.
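The additivity of the cumulative packet counts, and the link back to packet arrival times, can be illustrated on a sample path. The following is a minimal sketch with hypothetical arrival times and a hypothetical helper name; it only demonstrates the counting identity, not the stochastic arrival curves themselves.

```python
from bisect import bisect_right

def cumulative_count(arrival_times, t):
    """Number of packets that have arrived up to and including time t."""
    return bisect_right(arrival_times, t)

# Hypothetical arrival times of two constituent flows.
a1 = [1.0, 4.0, 6.0]
a2 = [2.0, 3.0, 7.0]
aggregate = sorted(a1 + a2)

# Space-domain view: the aggregate count is the sum of the per-flow counts.
sample_times = [0.5, 1.0, 2.5, 3.0, 5.0, 7.0, 9.0]
counts = [(cumulative_count(a1, t), cumulative_count(a2, t),
           cumulative_count(aggregate, t)) for t in sample_times]

# Time-domain view: by the arrival time of aggregate packet n,
# at least n packets have been counted.
dual_ok = all(cumulative_count(aggregate, aggregate[n - 1]) >= n
              for n in range(1, len(aggregate) + 1))
```

Summation is simple in the space-domain, whereas the corresponding statement about inter-arrival times of the aggregate is not, which is why the superposition result is obtained by moving between the two domains.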

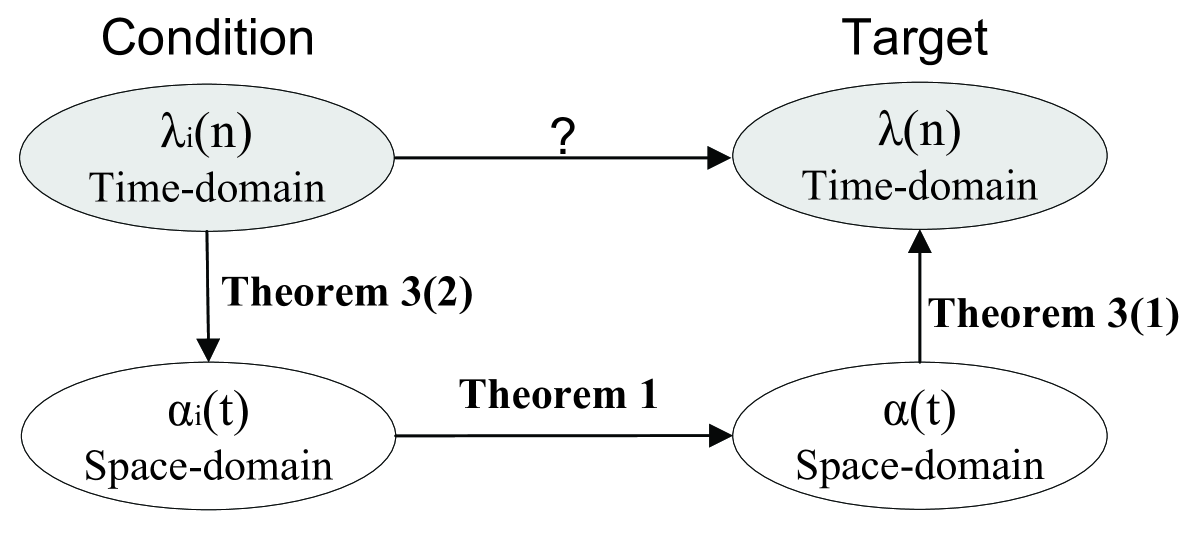

As shown in Figure 5, the condition is that the time-domain stochastic arrival curves of all constituent flows are known, and the target is to verify that the aggregate flow also has a time-domain stochastic arrival curve.

If a flow has a time-domain SAC, with Theorem 3(2), this flow has a space-domain SAC, for which the superposition property holds (refer to Theorem 1). Applying Theorem 3(1) gives rise to the SAC for the aggregate flow.

If flow has a SAC with bounding function , , from Theorem 3(2), we can verify that flow has a SAC with bounding function , where and are given in Theorem 3(2). Furthermore, according to Theorem 1, the aggregate flow has a SAC with bounding function . Finally, we apply Theorem 3(1) and can verify that the aggregate flow also has a SAC.

Theorem 11.

(Superposition property)

Consider the aggregate of flows. If the arrival process of each flow has a SAC for , i.e.,

which implies that every flow also has a SAC

with bounding function

where denotes the inverse function of :

Then the aggregate arrival process has a SAC with bounding function , where

with and denoting the inverse function of :

4.4.4 Special Case: Superposition of Poisson Processes

As we have mentioned in Section 4.4.1, the Poisson process is a special case of renewal processes because its renewal property is preserved under superposition. In addition, the superposition of multiple Poisson processes is still a Poisson process. From the temporal perspective, the inter-arrival time between two arbitrary events of a superposition of Poisson arrivals follows the Gamma distribution.

Example 5.

Consider the superposition process of two independent Poisson arrival processes. Suppose that all packets of both arrival processes have the same size. The packet inter-arrival times of two Poisson processes follow exponential distributions with mean and , respectively. Find the time-domain SAC for the superposition process.

In Example 2 the stochastic arrival curve for a Gamma process has been derived. We thus know that the superposition process has a SAC () with bounding function :

where .

Remark. The above example readily generalizes to the superposition of multiple independent Poisson processes.
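The Gamma claim of this subsection can be made concrete with a short calculation. The superposition of two independent Poisson processes with rates λ1 and λ2 is a Poisson process with rate λ1 + λ2, so the time spanned by k consecutive inter-arrival times is Gamma (Erlang) distributed with shape k and that rate. The sketch below evaluates the corresponding tail probability in closed form; the rate values and the function name are hypothetical, and the tail is only the raw distribution, not the bounding function of the SAC in Example 5.

```python
import math

def erlang_tail(k, rate, x):
    """P{X > x} for X the sum of k i.i.d. exponential times with the given rate.

    Closed form: exp(-rate*x) * sum_{j=0}^{k-1} (rate*x)^j / j!
    """
    if x <= 0:
        return 1.0
    rx = rate * x
    return math.exp(-rx) * sum(rx ** j / math.factorial(j) for j in range(k))

# Hypothetical rates of the two constituent Poisson flows (packets per unit time).
lam1, lam2 = 2.0, 3.0
rate = lam1 + lam2                       # rate of the superposition process

p_single = erlang_tail(1, rate, 0.2)     # one inter-arrival time exceeds 0.2
p_pair = erlang_tail(2, rate, 0.2)       # two consecutive ones exceed 0.2 in total
```

With k = 1 the expression reduces to the exponential tail, and for a fixed time span the tail grows with k, as expected when more inter-arrival times are accumulated.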

5 Conclusions and Open Issue

This paper presented a temporal network calculus to formulate queueing systems in communication networks where applications can tolerate a certain level of performance violation. The time-domain models make it feasible to characterize the temporal behavior of network traffic and capture the temporal nature of the network capacity perceived by individual packets.

The models are defined so as to strike a compromise between simple models and complex models. The former may not be sufficient to explore the fundamental properties whereas the latter may be too difficult to build. To resolve this dilemma, we propose a transformation method so that appropriate models can be selected for a specific scenario. Moreover, we also link the temporal network calculus and the existing space-domain network calculus results through connecting the time-domain arrival curve and the corresponding space-domain arrival curve.

The four properties investigated in the time-domain facilitate performance analysis of various network scenarios. In addition, the proof of the superposition property has given insights into the importance of model transformation. We believe that this temporal network calculus is applicable to analyzing networks where users are served probabilistically and complements the current network calculus results.

The leftover service characterization is useful for per-flow performance analysis and has been proved in the space-domain. We attempted to tackle this property under the condition that the arrival process has a deterministic time-domain arrival curve and the service process provides an SSC [36]. We will expand the investigation to this property under the general condition. One challenge is to be able to decouple the constituent flow’s arrival process from the aggregate arrival process.

References

- [1] NIST/SEMATECH e-Handbook of Statistical Methods. 2006.

- [2] R. Addie, P. Mannersalo, and I. Norros. Most probable paths and performance formulae for buffers with gaussian input traffic. European Transactions on Telecommunications, 13(3):183–196, 2002.

- [3] A. Karasaridis and D. Hatzinakos. Network heavy traffic modeling using α-stable self-similar processes. IEEE Trans. Commun., 49(7):1203–1214, July 2001.

- [4] J.-Y. L. Boudec. Application of network calculus to guaranteed service networks. IEEE Trans. Infor. Theory, 44(3):1087–1096, May 1998.

- [5] J.-Y. L. Boudec and P. Thiran. Network Calculus: A Theory of Deterministic Queueing Systems for the Internet. Springer-Verlag, 2001.

- [6] A. Burchard, J. Liebeherr, and S. D. Patek. A min-plus calculus for end-to-end statistical service guarantees. IEEE Trans. Information Theory, 52(9):4105–4114, Sept. 2006.

- [7] C.-S. Chang. Stability, queue length and delay of deterministic and stochastic queueing networks. IEEE Trans. Auto. Control, 39(5):913–931, May 1994.

- [8] C.-S. Chang. On the exponentiality of stochastic linear systems under the max-plus algebra. IEEE Trans. Automatic Control, 41(8):1182–1188, Aug. 1996.

- [9] C.-S. Chang. Performance Guarantees in Communication Networks. Springer-Verlag, 2000.

- [10] C.-S. Chang and Y. H. Lin. A general framework for deterministic service guarantees in telecommunication networks with variable length packets. IEEE/ACM Trans. Automatic Control, 46(2):210–221, Feb. 2001.

- [11] J. Choe and N. B. Shroff. A central-limit-theorem-based approach for analyzing queue behavior in high-speed networks. IEEE/ACM Trans. Networking, 6(5):659–671, Oct. 1998.

- [12] F. Ciucu, A. Burchard, and J. Liebeherr. Scaling properties of statistical end-to-end bounds in the network calculus. IEEE Trans. Information Theory, 52(6):2300–2312, June 2006.

- [13] D. R. Cox and W. L. Smith. On the superposition of renewal processes. Biometrika, 41(1-2):91–99, 1954.

- [14] D. Ferrari. Client requirements for real-time communication services. IEEE Commun. Magazine, 28(11):65–72, Nov. 1990.

- [15] M. Fidler. An end-to-end probabilistic network calculus with moment generating functions. In Proc. IEEE IWQoS, 2006.

- [16] M. Fidler. A survey of deterministic and stochastic service curve models in the network calculus. IEEE Commun. Surveys and Tutorials, 12(1):59–86, Feb. 2010.

- [17] P. Goyal, S. S. Lam, and H. M. Vin. Determining end-to-end delay bounds in heterogeneous networks. Multimedia System, 5(3):157–163, May 1997.

- [18] P. Goyal and H. M. Vin. Generalized guaranteed rate scheduling algorithms: A framework. IEEE/ACM Trans. Networking, 5(4):561–571, Aug. 1997.

- [19] Y. Jiang. Delay bounds for a network of guaranteed rate servers with FIFO aggregation. Computer Networks, 40(6):683–694.

- [20] Y. Jiang. A basic stochastic network calculus. In Proc. ACM SIGCOMM 2006, 2006.

- [21] Y. Jiang. Internet quality of service - architectures, approaches and analyses. http://www.q2s.ntnu.no/ jiang/Notes.pdf, 2006.

- [22] Y. Jiang and Y. Liu. Stochastic Network Calculus. Springer, 2008.

- [23] Y. Jiang, Q. Yin, Y. Liu, and S. Jiang. Fundamental calculus on generalized stochastically bounded bursty traffic for communication networks. Computer Networks, 53(12):2011–2021, Mar. 2009.

- [24] A. Karasaridis and D. Hatzinakos. A non-Gaussian self-similar process for broadband heavy traffic modeling. In Proc. IEEE GLOBECOM, 1998.

- [25] H. S. Kim and N. B. Shroff. Loss probability calculations and asymptotic analysis for finite buffer multiplexers. IEEE/ACM Trans. Networking, 9(6):755–768, Dec. 2001.

- [26] C. Y. T. Lam and J. P. Lehoczky. Superposition of renewal processes. Advances in Applied Probability, 23(1):64–85, March 1991.

- [27] C. Li, A. Burchard, and J. Liebeherr. A network calculus with effective bandwidth. IEEE/ACM Trans. Networking, 15(6):1442–1453, Dec. 2007.

- [28] C. Li, A. Burchard, and J. Liebeherr. A network calculus with effective bandwidth. IEEE/ACM Trans. Networking, 15(6):1442–1453, Dec. 2007.

- [29] Y. Liu, C.-K. Tham, and Y. Jiang. A calculus for stochastic QoS analysis. Performance Evaluation, 64(6):547–572, July 2007.

- [30] P. Mannersalo and I. Norros. A most probable path approach to queueing systems with general gaussian input. Computer Networks, 40(3):399–412, Oct. 2002.

- [31] S. Mao and S. S. Panwar. A survey of envelope processes and their applications in quality of service provisioning. IEEE Commun. Surveys and Tutorials, 8(3):2–19, 2006.

- [32] S. M. Ross. Introduction to Probability Models. Elsevier, 2006.

- [33] J. F. Shortle and P. H. Brill. Analytical distribution of waiting time in the M/iD/1 queue. Queueing Systems, 50(2):185–197, 2005.

- [34] D. Starobinski and M. Sidi. Stochastically bounded burstiness for communication networks. IEEE Tran. Information Theory, 46(1):206–212, Jan. 2000.

- [35] P. Torab and E. W. Kamen. On approximate renewal models for the superposition of renewal processes. In Proc. IEEE ICC, 2001.

- [36] J. Xie and Y. Jiang. Stochastic service guarantee analysis based on time-domain models. In Proc. 17th IEEE/ACM International Symposium on Modelling, Analysis and Simulation of Computer and Telecommunication Systems (MASCOTS), 2009.

- [37] O. Yaron and M. Sidi. Performance and stability of communication network via robust exponential bounds. IEEE/ACM Trans. Networking, 1(3):372–385, June 1993.

- [38] Q. Yin, Y. Jiang, S. Jiang, and P. Y. Kong. Analysis on generalized stochastically bounded bursty traffic for communication networks. In Proc. 27th IEEE Local Computer Networks, 2002.

Appendix A Proofs of theorems and lemmas

Proof of Theorem 2

Proof.

The first part follows from the fact that for any , there trivially holds

For the second part, there holds

For any ,

Based on the above steps, we have

The right-hand side of the last inequality still belongs to . The second part follows from the above inequality and the fact that a probability never exceeds . ∎

Proof of Theorem 3

Proof.

(1) From Lemma 2 [36], we know that for any , event

implies event

where

Thus, there holds

Particularly, if is sub-additive, i.e. for any and , we then have:

Hence, the first part follows.

(2) From Lemma 3 [36], we know that for any , event

implies event

where

Thus, there holds

Particularly, if is sub-additive, we have

which ends the proof. ∎

Proof of Theorem 4

Proof.

The first part follows since there always holds

by letting on the right hand side.

For the second part, there holds

Hence for any , there exists

The right-hand side of the above inequality still belongs to and never exceeds 1. The proof of the second part is completed. ∎

Proof of Theorem 6

Proof.

For any , according to the definition of , there holds

To ensure system stability, we require

| (33) |

In the proofs of the following theorems, we shall assume that Eq.(33) holds without explicitly stating it.

Proof of Theorem 7

Proof.

According to the backlog definition

we need to prove the bounding function on the violation probability, i.e., . For ease of exposition, let , then we have

Let . The above inequality is written as

Because there holds

with the same conditions as analyzing the delay, we obtain

To prove Eq.(26), we replace in event and have

The definition of implies

for any , i.e.,

Then we conclude

∎

Proof of Theorem 8

Proof.

For any two departure packets , there holds

Let . Then the above inequality is written as

where the last step is because

Adding to both sides of the above inequality results in

In addition, there holds

To ensure that the right-hand side of the above inequality is meaningful, it requires . With the same conditions as analyzing delay, we conclude

∎

Proof of Theorem 9

Proof.

We shall only prove the three-node case, from which the proof can be easily extended to the -node case. The departure of the first node is the arrival to the second node, so and . We then have

Based on the relationship between the SSC and the -stochastic service curve presented in Theorem 4(2), the following inequality holds

which completes the proof.

Note that both the max-plus convolution and the min-plus convolution are associative and commutative. ∎
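The associativity and commutativity noted at the end of the proof can be checked numerically on finite sequences. The sketch below assumes the usual discrete definition of the max-plus convolution, (f ⊗̄ g)(n) = max over 0 ≤ m ≤ n of [f(m) + g(n−m)], with functions stored as lists indexed by the packet number n = 0, 1, 2, …; the function name and the sample sequences are hypothetical.

```python
def max_plus_conv(f, g):
    """Max-plus convolution of two sequences indexed from n = 0.

    Assumed definition: (f conv g)(n) = max_{0 <= m <= n} [f(m) + g(n - m)].
    The result is truncated to the shorter input length.
    """
    n_max = min(len(f), len(g))
    return [max(f[m] + g[n - m] for m in range(n + 1)) for n in range(n_max)]

# Hypothetical non-decreasing integer sequences (e.g. per-node time curves).
f = [0, 1, 3, 4]
g = [0, 2, 2, 5]
h = [0, 1, 1, 2]

fg = max_plus_conv(f, g)
left = max_plus_conv(max_plus_conv(f, g), h)    # (f conv g) conv h
right = max_plus_conv(f, max_plus_conv(g, h))   # f conv (g conv h)
```

Because the order of grouping does not matter, the per-node curves of a tandem can be combined in any order when forming the equivalent end-to-end curve.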

Proof of Lemma 4

Proof.

We shall only prove the two-node case, from which the proof can be extended to the -node case. Keep in mind that . For the two-node case, we have

The last step holds because of Theorem 4(1). From the condition, we conclude

∎

Proof of Theorem 10

Proof.

We prove this theorem by induction.

Step (1) We start from with the given condition . If , then ; if , then .

Step (2) Assume holds for :

which has four solutions as below: