22email: frankluis@hust.edu.cn 22email: zhangyh@hfut.edu.cn

A Temporal Knowledge Graph Completion Method Based on Balanced Timestamp Distribution

Abstract

Completion through the embedding representation of the knowledge graph (KGE) has been a research hotspot in recent years. Realistic knowledge graphs are mostly related to time, while most of the existing KGE algorithms ignore the time information. A few existing methods directly or indirectly encode the time information, ignoring the balance of timestamp distribution, which greatly limits the performance of temporal knowledge graph completion (KGC). In this paper, a temporal KGC method is proposed based on the direct encoding time information framework, and a given time slice is treated as the finest granularity for balanced timestamp distribution. A large number of experiments on temporal knowledge graph datasets extracted from the real world demonstrate the effectiveness of our method.

Keywords:

Knowledge graph embedding balanced distribution of timestamps fine-grained time slices for timestamp distribution negative sampling for temporal relations.1 Introduction

The knowledge graph was proposed by Google in 2012 in order to optimize the results of search engines. Many KGs including Freebase [1], Richpedia [2] are created, then widely used in various applications, such as knowledge Q&A [3], recommendation system [4], etc.

There are two kinds of KG, static KG and dynamic KG. Most dynamic KGs are related with time, which are also called temporal knowledge graph. Static KGs have a common limitation that no matter when the facts remain unchanged. Compared with static KGs (which is represented as triples ), the temporal KGs are expanded into quadruples , and the time information can effectively constrain the geometric structure of the vector space. Static KGC ignores the time information of triples. Only a small amount of existing works have made certain explorations in the field of temporal KGC. Jiang [5] learns the time sequence between relations as a condition for the knowledge graph completion. Dasgupta [6] directly encodes time information by projecting entities and relations onto time-specific hyperplanes. However, the distribution of timestamps in the knowledge graph is unbalanced, and each fact is projected to all the timestamps included in its time span, which results in the “overlapping part timestamps” between different facts for the same completion task. The unbalanced distribution of timestamps will aggravate this problem.

For example, given a series of temporal knowledge graph completion tasks , -, -, where the end time of triples is replaced with the default maximum value due to being unknown, there will be multiple triples with the same relations and tail entities to be projected onto the same timestamp hyperplane in the time of “overlapping part”. And the training of the head entity will be indistinguishable. When calculating the score for head prediction, the parameter Dasgupta [6] feeds is the number of the timestamp hyperplane where the start time (such as 1853, 1917, 1954) of the triples is located. But it is obvious that a triple is valid for a range of timestamps. The purpose of this is to avoid the above influence as much as possible, because the timestamp of the start time is less likely to have confused triples. The prediction of the earlier triples will be more accurate, and the prediction of the later triples will be more ambiguous, because the timestamp hyperplane, in which the later triple’s start year is located, will contain more confused triples. Although Tang [7] tries to solve this problem, it does not directly model in the embeddings to distribute the timestamps evenly. Instead, it uses an external unit (design a timespan gate in GRU [25]) to solve this problem, which greatly increases the complexity of the model.

In order to distribute balanced timestamps in the process of modeling in the knowledge graph embedding, we proposed bt-HyTE (Hyperplane-based Temporally aware KG Embedding with Balanced timestamp distribution) and tr-HyTE (Hyperplane-based Temporally aware KG Embedding with Balanced timestamp distribution and Negative Sampling for Temporal Relations). Both use a certain time (such as year) as the finest granularity to count the number of facts in the time to ensure that the number of facts in each distributed timestamp is within the set number threshold. And then the two models project the head entities, tail entities and relations of each fact to the corresponding timestamp hyperplanes, and learn the embedding of the entities, relations, and the normal vectors of timestamp hyperplanes. Lastly, tr-HyTE adds negative sampling for temporal relations on the basis of bt-HyTE. The following is the main contributions of our paper:

-

We propose the method bt-HyTE to generate the knowledge graph vector space. Different from the previous methods, it directly solves the problem of unbalanced timestamp distribution in the embedding of the model. Taking a certain time width as the finest granularity, bt-HyTE sequentially accumulates the number of facts in each finest granularity unit, then sets a threshold for the number of facts to have a balanced distribution for the timestamps.

-

Following bt-HyTE, we design and add negative sampling for the temporal relations to optimize the vector space of the temporal knowledge graph, and propose tr-HyTE.

-

Through a large number of experiments on real-world datasets rich in time information, we have proved the effectiveness of bt-HyTE and tr-HyTE on temporal KGC.

The rest of this paper is organized as follows. Section 2 introduces related work, including various existing static and temporal KGC methods. The proposed method is detailed in Section 3. And the experimental details are presented in Section 4. The results are analyzed in Section 5, followed by our conclusion and future work in the final section.

2 Related Work

KGC is a downstream task of KGE. It completes the missing entities or relations by predicting.

The most popular methods are translation-based. The basic idea of the model TransE [8] proposed in 2013 is that the relation is regarded as a translation from the head to the tail, which is simple and efficient. TransH [9] is proposed to overcome the shortcomings of TransE in solving many-to-one and one-to-many relations by projecting entities on relation-specific hyperplanes. TransR [10] is proposed based on TransE and TransH designing a relation-specific space. The translation-based models also includes TransD [11] and TransF [12]. In addition, there are methods based on matrix decomposition, including RESCAL [13], DistMult [14], HolE [15], ComplEX [16] and SimplE [17]. Based on the Tucker decomposition of binary tensors, a new linear model TuckER [18] is proposed. There are some nonlinear models, such as ConvE [19] and GNNs [20] that have achieved high results at present, and ConvKB [21] and CapsE [22] have been questioned due to the work of Sun [23].

Although these models have good results in the experiments and datasets they show, they all ignore the time information of the knowledge graph. There are few explorations about the completion methods of temporal knowledge graphs. Jiang [5] provides a link prediction strategy by modeling the sequence of temporal relations, and Leblay [24] studies various methods of the interaction between time and relation through learning the embedding of them. Dasgupta [6] proposes a model directly encoding time information in the embedding, which gives us great enlightenment. But the problem of unbalanced timestamp distribution has not been wholly solved in these embedding methods. Later, TDG2E [7] proposed in 2020 took into account the evolution effect of time on the basis of the HyTE [6]. Although it has considered unbalanced distribution of timestamps, the problem is solved by designing a timespan gate in GRU [25] instead of directly embedding in the model. However, we directly and evenly distribute the timestamps in the model embedding, which not only ensures the model effectiveness but also greatly reduces the complexity of the model.

3 Method

In this section, we describe in detail the proposed method which consists of bt-HyTE and tr-HyTE. bt-HyTE directly handles the problem of unbalanced distribution of timestamps in model embedding. And tr-HyTE adds negative sampling for temporal relations on the basis of bt-HyTE. We first describe the overall architecture of our proposed method in Section 3.1. Next, we detail the proposed model in Section 3.2, Section 3.3, and Section 3.4.

3.1 Overall Architecture

Given an input knowledge graph containing time information, in which the fact can be expressed in the form of a quadruple , we aim to complete the missing entities and relations under the condition of time. Similar to the existing model, we represent the head entity as , the tail entity as , and the relation as , where , is the dimension of the embedding vector.

The overall architecture of our proposed method will consist of the following three steps:

-

Balanced distribution of the timestamps is completed by proposed balanced timestamp distribution method in the embedding of the model, which is detailed in Section 3.2.

-

Based on bt-HyTE and tr-HyTE, the entities and relations are projected onto all the time-specific hyperplanes covered by a particular triple, and then the embedding of entities and relations is learned on all the projected hyperplanes. Learning temporal knowledge graph embedding representation is described in Section 3.3.

-

To complete entity and relation, we compute the scores for each triple in the test set. And for a triple, the scores of elements in all entity set or relation set are arranged in the ascending order. And then the effectiveness of the temporal KGC is evaluated based on the evaluation metrics. See Section 3.4 for details.

3.2 Balanced Distribution Method For Timestamps

The quadruple of temporal knowledge graph indicates that the triple is valid in the period . Assuming that the timestamps are distributed in some way, a fact is valid on the timestamp spans occupied by its time range . So the rationality of the timestamp distribution will directly influence the performance of the method. For distributing timestamps, the dynamic temporal knowledge graph will be partition into static knowledge graphs , where :.

We consider dealing with timestamp distributing directly in the embedding of the model to mitigate the effects of the problems described above. We take a period of time as the the finest granularity, and collect the most fine-grained time period for each fact, in which the fact is valid. If a year is used as the most fine-grained time period, the triple is valid in every year within , where , which is included in the set of effective time spans. Then, the number of facts contained in each of the most fine-grained times is counted in the embedding, and the threshold for distributing the timestamps is set, thereby the balanced distribution of the timestamps is done. As shown in Alg. 1, we consider not only the start time and the end time of a fact, but also the process time between them, thus taking into account all the times when the fact is valid. For the series triple illustrated above, according to the number of facts of the most fine-grained time slice, the later time will accumulate more fact numbers as time advances, and then when the threshold is reached, it is able to be distributed into , by which we distribute the timestamps. With this newly formed timestamp, the prediction of missing entities or relations prior to the year will be conditionally completed.

3.3 Temporal Knowledge Graph Embedding Representation

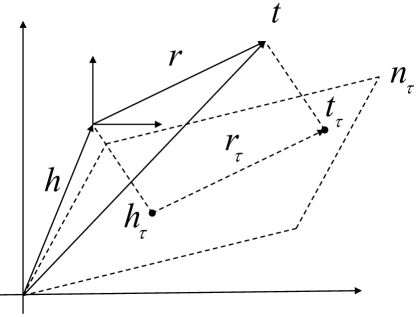

In the above temporal knowledge graph , is the set of timestamps distributed in the embedding of the model, then the set of timestamps covered by the time period is expressed as . Project entity and relation vectors onto the hyperplane of a particular timestamp under the balanced distribution of timestamps as shown in Fig. 1,

| (1) |

The score function is expressed as:

| (2) |

For a valid fact, there should be , where is a timestamp evenly distributed in the embedding of the model using Alg. 1. For a fact triple in a negative sample, should be a number much greater than 0. Here, we learn the normal vector of each timestamp hyperplane, and the embedding of projected entities and relations on each timestamp hyperplane.

We learn the model by minimizing the loss function, which is expressed as:

| (3) |

As mentioned, for a corrupted triple, the distance should be a very large positive number, which will result in is less than 0. So for a triple, when its is greater than 0, the model need to continue to learn. is the set of valid triples. is the set of negative samples, and we generate negative samples in following two ways:

-

Without negative sampling for the temporal relations. A certain number of negative sampling is carried out for the head and tail entities of valid triples without considering the influence of the timestamps, and the negative sampling strategy is applied to bt-HyTE expressed by the following formula,

-

With negative sampling for the temporal relations. A certain number of negative samples are taken for the head entities, tail entities and temporal relations of the valid triples.This negative sampling strategy is applied to tr-HyTE, and it is expressed by the following formula,

We performed link prediction, relation prediction tasks, and some fine-grained investigation to prove the effectiveness of proposed bt-HyTE and tr-HyTE. The addition of negative sampling strategy for temporal relations can greatly improve the performance of relation prediction, but it can also inhibit the performance of head and tail entity prediction to a certain extent.

3.4 Temporal Knowledge Graph Completion

The temporal KGC is entity prediction and relation prediction tasks based on the embedding of entities, relations, and times. At present, the widely accepted task of temporal KGC takes time as the condition of the traditional completion task. We also use time as the condition of entity prediction and relation prediction to further improve the performance of KGC. In this paper, the temporal KGC task is divided into the following subtasks:

-

Head prediction: for an incomplete fact , predict the head entity from the entity set at period .

-

Relation prediction: for an incomplete fact , predict the relation from the relation set at period .

-

Tail prediction: for an incomplete fact , predict the tail entity from the entity set at period .

4 Experiments

In this section, we experiment bt-HyTE and tr-HyTE based on popular datasets and compare their performance with the state-of-the-art KGC methods. Specifically, we first introduce the datasets used, the methods involved in the comparison, and the evaluation schema, and then introduce the details of the experiment.

4.1 Datasets

We use the subgraphs containing rich time information of Wikidata and YAGO knowledge graph datasets to carry out the experiment. The subjects of the experiment included bt-HyTE, tr-HyTE and some baselines.

-

Wikidata11k. This is a temporal knowledge graph extracted from Wikidata by the method proposed in HyTE [6]. It contains 27.5k triples, 10623 entities and 24 relations.

| Datasets | #Entity | #Relation | #Train | #Valid | #Test |

|---|---|---|---|---|---|

| YAGO11k | 10623 | 10 | 16.4k | 2k | 2k |

| Wikidata11k | 10623 | 24 | 23.4k | 2k | 2k |

| Datasets | lr | testfreq | inpdim | margin | l2 | neg_sample |

|---|---|---|---|---|---|---|

| YAGO11k | 0.0001 | 5 | 128 | 10 | 0 | 5 |

| Wikidata11k | 0.0001 | 5 | 128 | 10 | 0 | 5 |

4.2 Compared Methods

As following, we compare these five methods to evaluate the performance of our method:

-

TransE [8]: TransE is the pioneering work of translation-based models, which embeds entities and relations in the same vector space. It is simple but efficient.

-

TransH [9]: TransH put forward on the basis of TransE. It projects entities onto the relation-specific hyperplane, and regards the relation on the hyperplane as the translation from the head to the tail.

-

HyTE [6]: HyTE is a dynamic KGE method. Inspired by TransH’s modeling, it projects entities and relations onto the time-specific hyperplane, and regards the projected relation as the translation of the projected head to the projected tail. It has achieved remarkable results in dealing with the completion of temporal KGs.

-

bt-HyTE: On the basis of HyTE, we consider directly dealing with unbalanced timestamp distribution in the model embedding, and propose bt-HyTE.

-

tr-HyTE: On the basis of bt-HyTE, we add negative sampling for the temporal relations, and the model is named tr-HyTE, which effectively improves the performance of relation prediction.

4.3 Evaluation Schema

We use the traditional evaluation metrics Mean Rank and HIT@k in the field of KGC. The vectors embedded in the low-dimensional space are first projected onto the time-specific hyperplanes by formula (1), and then the scores are calculated and ordered by formula (2). The rankings of the missing entities are recorded, and the following metrics are calculated:

-

Mean Rank: For each triple in the test set, scores calculated are sorted from small to large, then the missing entity or relation ranking of each triple is counted. The average value of rankings of all the triples in test set is called Mean Rank. For Mean Rank, a smaller value is better.

-

HIT@k: For each triple in the test set, scores calculated are also sorted from small to large, and the proportion of triples whose missing entity or relation is ranking in front of k to the total number of the test sets is counted. For HIT@k, a larger value is better.

4.4 Experiment Details

4.4.1 Experiment Setup

For all the methods, the max epoch during training is 4000, and we adopt the optimal parameter configuration given in the corresponding method’s paper. The dimension of the embedding vector is selected from and takes 128; Margin is selected from using 10; Optimizer takes SGD; Learning rates selected from are all 0.0001; And batch size will be selected from ; Neg sample is selected from . The optimal parameter configuration of the proposed method is shown in Table 2.

4.4.2 Entity Prediction

Entity prediction includes two subtasks: head prediction and tail prediction. Like previous models, the selection of hyperparameter epoch is also dependent on the mean of Mean Rank metric of head prediction and tail prediction. For the fact , the head entity prediction is to process the case of , and the tail entity prediction is to process the case of , both of which regard time as a known condition of completion. We have experimented with baselines, bt-HyTE and tr-HyTE respectively.

4.4.3 Relation Prediction

The selection of the hyperparameter epoch of the relation prediction directly depends on the Mean Rank metric of the relation prediction. Different from the static KGE methods, the relation prediction in the temporal KGs is to process the case of . Knowing the head and tail , the relation prediction for the triple is completed under the condition of time . We also experiment on the relation prediction of bt-HyTE, tr-HyTE and some baselines.

4.4.4 Fine-grained Investigation Based On YAGO11k Dataset

Based on the YAGO11k test set with realistic representational significance and HIT@k metric, we fine-grain comparisons of completion performance between different methods. The completion strategy is as follows:

-

Relation completion: The metric referenced is HIT@1, which directly completes the number one relation and forms the valid facts.

-

Head & tail completion: The metric referenced is HIT@10. If the score of the missing entity is in the top 10, then it is defined that it can complete the missing entity and form the valid fact.

5 Results And Analysis

To verify the strengths of our method, we not only make statistics and analysis of the results based on Mean Rank and HIT@k metrics, but also have a fine-grained investigation on bt-HyTE, tr-HyTE and compared models.

5.1 Performance Analysis And Comparison

| Datasets | Wikidata11k | |||||

| Metric | MR | HIT@10(%) | HIT@1(%) | |||

| Tasks | head | tail | relation | head | tail | relation |

| TransE | 900.72 | 694.49 | 2.81 | 8.31 | 23.02 | 75.81 |

| TransH | 867.87 | 653.63 | 2.71 | 9.19 | 26.74 | 72.73 |

| HyTE | 448.28 | 308.91 | 1.55 | 28.40 | 48.58 | 81.28 |

| bt-HyTE | 448.71 | 282.90 | 1.51 | 28.79 | 50.34 | 81.52 |

| tr-HyTE | 450.21 | 318.62 | 1.30 | 30.21 | 50.49 | 86.46 |

| Datasets | YAGO11k | |||||

| Metric | MR | HIT@10(%) | HIT@1(%) | |||

| Tasks | head | tail | relation | head | tail | relation |

| TransE | 2042.04 | 447.51 | 1.47 | 1.27 | 7.95 | 78.11 |

| TransH | 1891.57 | 351.83 | 1.48 | 1.32 | 11.70 | 79.52 |

| HyTE | 1059.62 | 110.53 | 1.28 | 13.31 | 35.01 | 82.64 |

| bt-HyTE | 1040.78 | 114.07 | 1.27 | 13.46 | 37.06 | 82.45 |

| tr-HyTE | 1036.90 | 118.99 | 1.22 | 14.92 | 34.67 | 85.18 |

Our proposed models bt-HyTE and tr-HyTE are better than other compared models in performance, which may be due to the effectiveness of our contributions.

As shown in Table 4 and Table 4, the best results are bolded. bt-HyTE are qualitatively improved compared to not only the static KGC models TransE and TransH, but also the current state-of-the-art temporal knowledge graph embedding model HyTE. Based on Wikidata11k dataset, bt-HyTE is better than HyTE on MR of tail prediction (an increase of 26.01) and relation prediction (an increase of 0.04). As for HIT@k metric, bt-HyTE has an increase of 0.39% on head prediction, 1.76% on tail prediction and 0.24% on relation prediction compared with HyTE. Based on YAGO11k dataset, bt-HyTE is better than HyTE on MR of head prediction, relation prediction and HIT@k of head prediction, tail prediction. There is a slight fluctuation in other metrics, which may be due to the experimental error. It illustrates the effectiveness of balanced timestamps.

Compared with bt-HyTE, tr-HyTE has further improved performance. Based on YAGO11k dataset, tr-HyTE is better than bt-HyTE on MR of head prediction (an increase of 3.88) and relation prediction (an increase of 0.05). In HIT@k metrics, tr-HyTE has an increase of 1.46% on head prediction and 2.73% on relation prediction. Based on Wikidata11k dataset, tr-HyTE is also better than bt-HyTE on MR of relation prediction and HIT@k of head prediction, tail prediction, relation prediction. And tr-HyTE is obviously better than HyTE. It demonstrates the effectiveness of balanced timestamps again and illustrates the effectiveness of negative sampling for temporal relations.

However, we also notice that MR-based head entity prediction on the Wikidata11k dataset and MR-based tail entity prediction on the YAGO11k dataset are not very well. We attribute this to the problem that a certain year contains a very large number of facts. Although our timestamp fact number threshold has been set, the number of facts included in some of the finest-grained time slices is much more than the threshold, which obviously weakens the balance of timestamp distribution.

5.2 Fine-grained Investigation Results

| Test Quadruples | TransE | HyTE | bt-HyTE | tr-HyTE |

| ?, playsFor, France_national_football_team, [2002, 2011] | - | - | Djibril_Cissé | Djibril_Cissé |

| ?, playsFor, Bradford_City_A.F.C., [2011, 2014] | - | - | Kyel_Reid | - |

| ?, playsFor, Woking_F.C., [2003, 2004] | - | - | - | Ashley_Bayes |

| ?, hasWonPrize, Eisner_Award, [2001, 2010] | - | - | - | J._H._Williams_III |

| Test Quadruples | TransE | HyTE | bt-HyTE | tr-HyTE |

| Alex_Campbell_(golfer), wasBornIn, ?, [1876, 1876] | - | - | - | Scotland |

| Sarney_Filho, wasBornIn, ?, [1957, 1957] | - | - | São_Luís,_Maranhão | São_Luís,_Maranhão |

| Marion_Burns, wasBornIn, ?, [1907, 1907] | - | - | Los_Angeles | - |

| Brad_Mays, wasBornIn, ?, [1955, 1955] | - | - | - | St._Louis |

| Test Quadruples | TransE | HyTE | bt-HyTE | tr-HyTE |

| Tomoko_Kawase, ?, Kyoto, [1975, 1975] | diedIn, isMarriedTo | diedIn, isMarriedTo | diedIn, isMarriedTo | wasBornIn, diedIn |

| Sacha_Guitry, ?, Saint_Petersburg, [1885, 1885] | diedIn, wasBornIn | diedIn, created | diedIn, created | wasBornIn, diedIn |

| Eleanor_Summerfield, ?, London, [1921, 1921] | diedIn, wasBornIn | diedIn, wasBornIn | wasBornIn, diedIn | diedIn, wasBornIn |

| Olivia_Newton-John, ?, Cambridge, [1948, 1948] | diedIn, isMarriedTo | diedIn, wasBornIn | diedIn, wasBornIn | wasBornIn, diedIn |

Through the fine-grained investigation method described in Section 4.4, we select a certain number of specific quadruples based on the test set of the YAGO11k dataset, and prove the effectiveness of our proposed model from the fine-grained perspective.

As shown in Table 7 and Table 7, on the entity prediction task, our proposed bt-HyTE and tr-HyTE can complete the missing entities that rank top 10 ahead of the static KGC method TransE and the temporal model HyTE that ignores the balanced distribution of timestamps. As shown in Table 7, in the relation prediction task, for some specific quadruples, HyTE confuses the similar relations between “dieIn” and “wasborn” like TransE, but our proposed bt-HyTE and tr-HyTE can distinguish these relations. The performance of tr-HyTE is better than that of bt-HyTE for relation prediction, which is because of the negative sampling for temporal relations. But in our method, the number of negative samples for temporal relations should be strictly controlled to avoid affecting the entity prediction.

6 Conclusion

In this paper, we proposed a temporal KGC method bt-HyTE, and on this basis, a method of adding negative sampling for temporal relations was proposed named tr-HyTE. Based on the original method of directly encoding time information HyTE, we consider directly solving the problem of unbalanced timestamp distribution in the model embedding. The experimental results based on temporal knowledge graph datasets indicate that our proposed model is better than the state-of-the-art algorithms. In the future, we plan to further refine the time slice size used for balanced timestamp distribution, and further study the influence of this time slice size on the effectiveness of the temporal KGC methods.

References

- [1] Kurt Bollacker, Colin Evans, Praveen Paritosh, Tim Sturge, and Jamie Taylor.: Freebase: A collaboratively created graph database for structuring human knowledge. In: Proceedings of the ACM SIGMOD International Conference on Management of Data (SIGMOD) , pp. 1247–1250. (2008)

- [2] Wang M, Qi G, Wang H F, et al.: Richpedia: A Comprehensive Multi-modal Knowledge Graph. In: Joint International Semantic Technology Conference (JIST) , pp. 130–145. (2019)

- [3] Bordes A, Weston J, Usunier N, et al.: Open question answering with weakly supervised embedding models[C]. In: Joint European conference on machine learning and knowledge discovery in databases , pp. 165–180. (2014)

- [4] Palumbo E, Rizzo G, Troncy R, et al.: Knowledge graph embeddings with node2vec for item recommendation[C]. In: European Semantic Web Conference , pp. 117–120. (2018)

- [5] Jiang T, Liu T, Ge T, et al.: Encoding temporal information for time-aware link prediction[C]. In: Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing , pp. 2350–2354. (2016)

- [6] Dasgupta S S, Ray S N, Talukdar P.: Hyte: Hyperplane-based temporally aware knowledge graph embedding[C]. In: Proceedings of the 2018 conference on empirical methods in natural language processing , pp. 2001–2011. (2018)

- [7] Tang X, Yuan R, Li Q, et al.: Timespan-Aware Dynamic Knowledge Graph Embedding by Incorporating Temporal Evolution[J]. In: IEEE Access , pp. 6849–6860. (2020)

- [8] Bordes, A., Usunier, N., Garcia-Duran, A., Weston, J., Yakhnenko, O.: Translating embeddings for modeling multi-relational data. In: Neural Information Processing Systems (NIPS) , pp. 1–9. (2013)

- [9] Wang Z, Zhang J, Feng J, et al.: Knowledge graph embedding by translating on hyperplanes[C]. In: Proceedings of the AAAI Conference on Artificial Intelligence. (2014)

- [10] Lin Y, Liu Z, Sun M, et al.: Learning entity and relation embeddings for knowledge graph completion[C]. In: Proceedings of the AAAI Conference on Artificial Intelligence. (2015)

- [11] Ji G, He S, Xu L, et al.: Knowledge graph embedding via dynamic mapping matrix[C]. In: Proceedings of the 53rd annual meeting of the association for computational linguistics and the 7th international joint conference on natural language processing (volume 1: Long papers) , pp. 678–696. (2015)

- [12] Feng J, Huang M, Wang M, et al.: Knowledge graph embedding by flexible translation[C]. In: Proceedings of the Fifteenth International Conference on Principles of Knowledge Representation and Reasoning , pp. 557–560. (2016)

- [13] Nickel M, Tresp V, Kriegel H P.: A three-way model for collective learning on multi-relational data[C]. In: Icml. (2011)

- [14] Yang B, Yih W, He X, et al.: Embedding entities and relations for learning and inference in knowledge bases[J]. In: arXiv preprint arXiv:1412.6575. (2014)

- [15] Nickel M, Rosasco L, Poggio T.: Holographic embeddings of knowledge graphs[C]. In: Proceedings of the AAAI Conference on Artificial Intelligence. (2016)

- [16] Trouillon T, Welbl J, Riedel S, et al.: Complex embeddings for simple link prediction[C]. In: International Conference on Machine Learning , pp. 2071–2080. (2016)

- [17] Kazemi S M, Poole D.: Simple embedding for link prediction in knowledge graphs[J]. In: arXiv preprint arXiv:1802.04868, 2018. (2018)

- [18] Balažević I, Allen C, Hospedales T M.: OTucker: Tensor factorization for knowledge graph completion[J]. In: arXiv preprint arXiv:1901.09590. (2019)

- [19] Dettmers T, Minervini P, Stenetorp P, et al.: Convolutional 2d knowledge graph embeddings[C]. In: Proceedings of the AAAI Conference on Artificial Intelligence. (2018)

- [20] Shang C, Tang Y, Huang J, et al.: End-to-end structure-aware convolutional networks for knowledge base completion[C]. In: Proceedings of the AAAI Conference on Artificial Intelligence , pp. 3060–3067. (2019)

- [21] Nguyen D Q, Nguyen T D, Nguyen D Q, et al.: A novel embedding model for knowledge base completion based on convolutional neural network. In: In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies , pp. 327–333. (2018)

- [22] Vu T, Nguyen T D, Nguyen D Q, et al.: A capsule network-based embedding model for knowledge graph completion and search personalization. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies , pp. 2180–2189. (2019)

- [23] Sun Z, Vashishth S, Sanyal S, et al.: A re-evaluation of knowledge graph completion methods[J]. In: arXiv preprint arXiv:1911.03903. (2019)

- [24] Leblay J, Chekol M W.: Deriving validity time in knowledge graph[C]. In: Companion Proceedings of the The Web Conference 2018 , pp. 1771–1776. (2018)

- [25] Chung J, Gulcehre C, Cho K H, et al.: Empirical evaluation of gated recurrent neural networks on sequence modeling[J]. In: arXiv preprint arXiv:1412.3555. (2014)

- [26] Mahdisoltani F, Biega J, Suchanek F.: Yago3: A knowledge base from multilingual wikipedias[C]. In: 7th biennial conference on innovative data systems research. CIDR Conference. (2014)