A Unified Low-level Foundation Model for Enhancing Pathology Image Quality

Abstract

Foundation models have revolutionized computational pathology by achieving remarkable success in high-level diagnostic tasks, yet the critical challenge of low-level image enhancement remains largely unaddressed. Real-world pathology images frequently suffer from degradations such as noise, blur, and low resolution due to slide preparation artifacts, staining variability, and imaging constraints, while the reliance on physical staining introduces significant costs, delays, and inconsistency. Although existing methods target individual problems like denoising or super-resolution, their task-specific designs lack the versatility to handle the diverse low-level vision challenges encountered in practice. To bridge this gap, we propose the first unified Low-level Pathology Foundation Model (LPFM), capable of enhancing image quality in restoration tasks, including super-resolution, deblurring, and denoising, as well as facilitating image translation tasks like virtual staining (H&E and special stains), all through a single adaptable architecture.Our approach introduces a contrastive pre-trained encoder that learns transferable, stain-invariant feature representations from 190 million unlabeled pathology images, enabling robust identification of degradation patterns. A unified conditional diffusion process dynamically adapts to specific tasks via textual prompts, ensuring precise control over output quality. Trained on a curated dataset of 87,810 whole slied images (WSIs) across 34 tissue types and 5 staining protocols, LPFM demonstrates statistically significant improvements (p<0.01) over state-of-the-art methods in most tasks (56/66), achieving Peak Signal-to-Noise Ratio (PSNR) gains of 10-15% for image restoration and Structural Similarity Index Measure (SSIM) improvements of 12–18% for virtual staining. More importantly, LPFM represents a transformative advancement for digital pathology, as it not only overcomes fundamental image quality barriers but also establishes a new paradigm for stain-free, cost-effective, and standardized pathological analysis, which is crucial for enabling scalable and equitable deployment of AI-assisted pathology worldwide.

keywords:

Computational Pathology, Image Restoration, Virtual Staining, Foundation Model

1 Introduction

The advent of digital pathology has revolutionized modern medicine by transitioning traditional glass slides into high-resolution whole slide images (WSIs), enabling computerized analysis [1], enhanced collaborative diagnostics across institutions [2], and AI-assisted decision support [3]. This digital transformation began with slide scanning technologies [4] and has since evolved into an essential component of precision medicine [5, 6], allowing pathologists to examine tissue morphology at unprecedented scales [7] while facilitating large-scale collaborative research [8, 9]. However, diagnostic utility is frequently compromised by multiple degradation problems in the imaging pipeline [10, 11]. During slide preparation, tissue sections may suffer from folding [12], tearing [13], or staining inhomogeneity [14], while scanning introduces optical blur [15], noise [16], and resolution limitations [17].

These degradations collectively obscure critical cellular features including nuclear pleomorphism [18, 19], inflammatory infiltrates [20], and subtle pathological changes [21], potentially leading to diagnostic uncertainty. The limitations of physical rescanning are manifold [22, 9]: time constraints [18], prohibitive costs [23], technical irreversibility of preparation artifacts [4], and frequent biopsy exhaustion [7]. This has spurred computational approaches to enhance image quality through both restoration techniques (e.g., noise reduction [10], deblurring [24], super-resolution [5]) and virtual staining methods [25, 26]. Current solutions remain fragmented [27, 17], typically addressing either restoration or staining separately [28, 29], with denoising models potentially altering stain characteristics [13] and staining networks amplifying artifacts [30]. The field lacks unified generative frameworks capable of handling diverse low-level vision tasks [31, 32], forcing clinics to maintain incompatible specialized systems [2]. Pathology imaging particularly suffers from this fragmentation despite shared underlying challenges [33, 34].

Our work addresses this critical gap by introducing the first unified low-level generative foundation model for enhancing pathology image quality, including pathology image restoration and translation. Unlike existing task-specific models that process artifacts and stains in isolation, our approach recognizes that these challenges are fundamentally interconnected aspects of pathological image formation. This unified treatment enables synergistic improvements; for instance, stain-aware denoising and artifact-resistant stain transfer emerge naturally from the shared representation. We develop a novel pre-training paradigm using large-scale multi-tissue, multi-stain datasets, capturing both universal characteristics of pathological image degradation and stain-invariant morphological features. Furthermore, we pioneer a prompt-controlled framework that dynamically switches between low-level pathological tasks without architectural modifications. By integrating these innovations, we establish the first unified low-level pathology foundation model in computational pathology that moves beyond fragmented solutions to comprehensive image generation.

2 Results

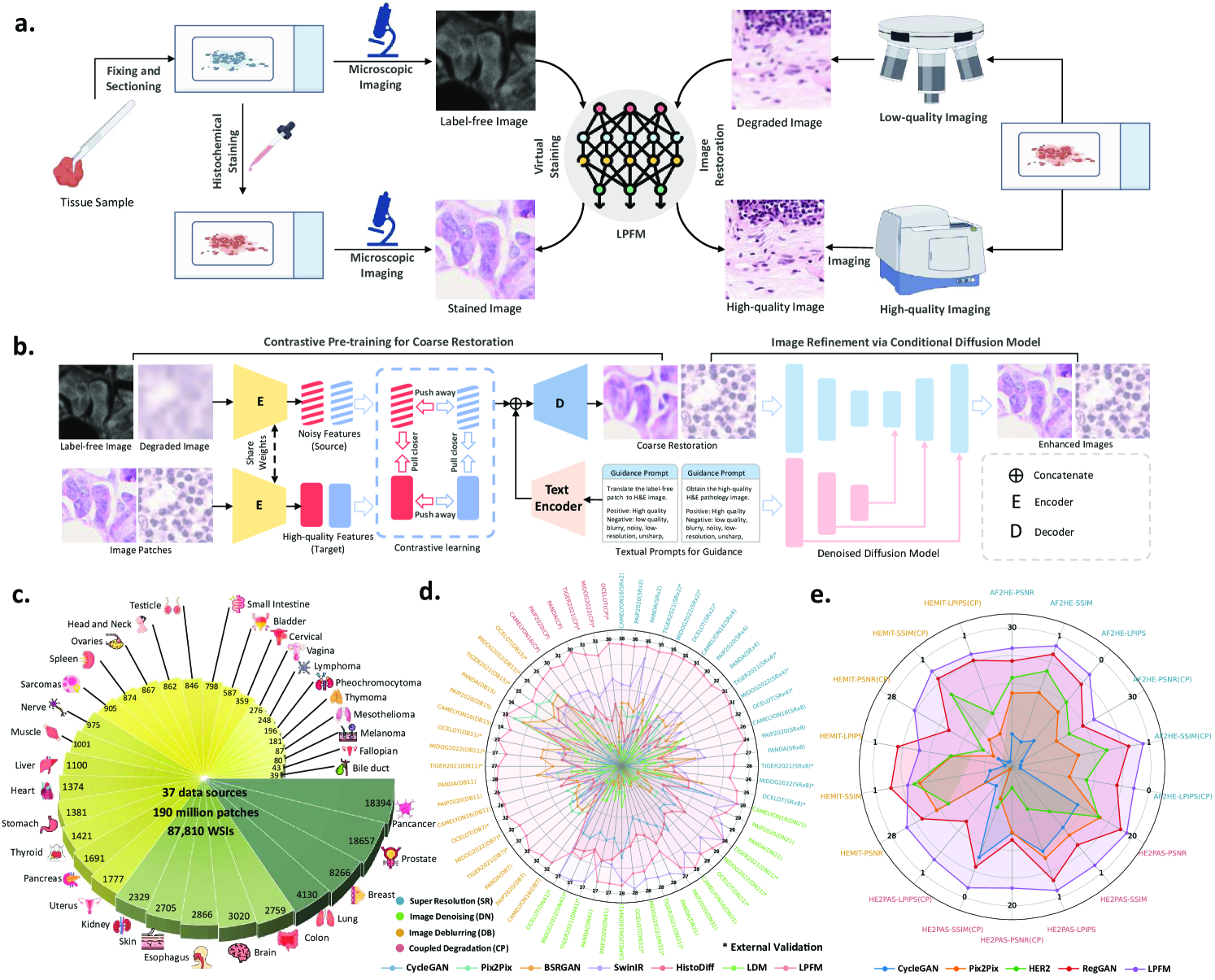

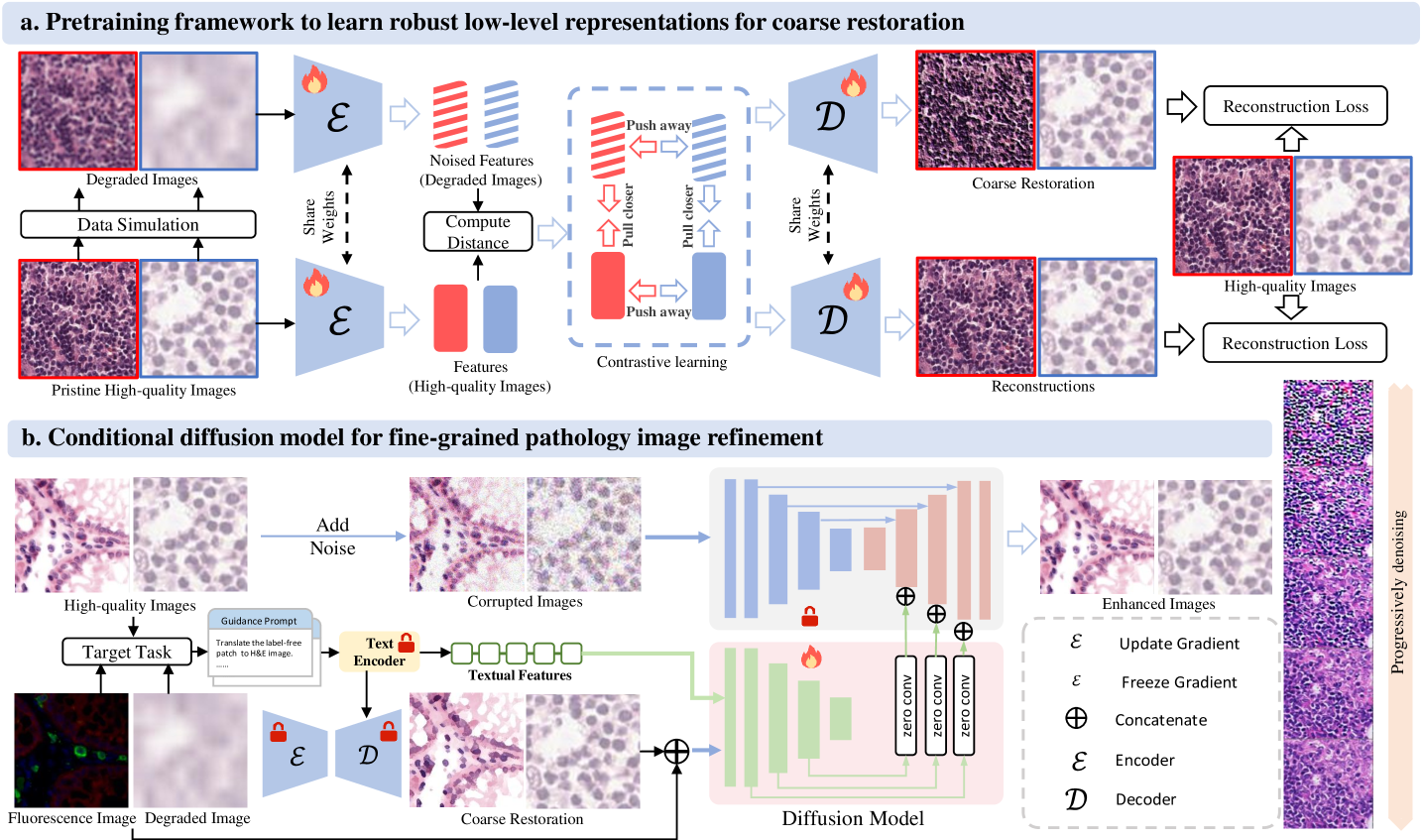

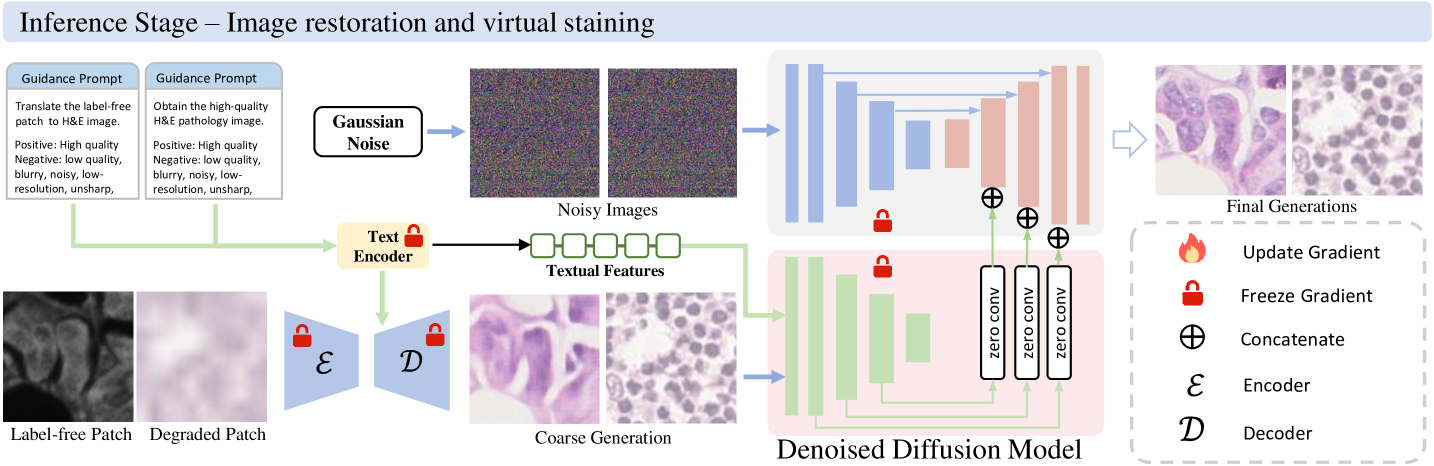

In this section, we conducted comprehensive evaluations across 66 distinct experimental tasks, systematically organized into six fundamental low-level vision categories: (i) super-resolution (18 tasks with varying scale factors and degradation models), (ii) image deblurring (18 tasks covering different kernel sizes), (iii) image denoising (18 tasks with varying noise intensities), (iv) coupled degradation restoration (6 tasks addressing composite artifacts), (v) virtual staining (3 tasks for stain transformation), and (vi) virtual staining for degraded pathology image (3 tasks combining physical degradations with stain conversion). Fig. 1 presents a comprehensive overview of the proposed low-level pathology foundation model (LPFM) for pathology image restoration and virtual staining. As shown in Fig. 1b, LPFM included a contrastive pretraining framework that tackled multiple coupled degradation problems, generating coarsely restored and virtually stained images. The pretraining framework was trained through a contrastive loss by pulling closer the latent features of paired degraded images and their high-quality counterparts, while pushing away the features of unpaired samples.

Building on the coarsely restored images, we proposed a conditional diffusion model that improved image quality through a guided denoising process, utilizing both the coarse restorations and textual prompts as conditional inputs. In Fig. 1d-e, our LPFM demonstrated superior performance across all tasks, establishing itself as the first unified foundation model capable of handling multiple low-level vision challenges in computational pathology. The key advantages of LPFM include exceptional generalization across tissue types and staining protocols, and robust performance on isolated/coupled degradations (present tense for enduring conclusions). To ensure a thorough assessment of image quality, we employed three complementary metrics: PSNR [35] for pixel-level fidelity, SSIM [36] for structural similarity, and LPIPS [37] for perceptual quality. These metrics were consistently used in our experiments (Sec. 4.3) to provide quantitative comparisons. All experiments were conducted on partitioned datasets with 95% confidence intervals and significance testing, ensuring reliable evaluation.

2.1 Super Resolution

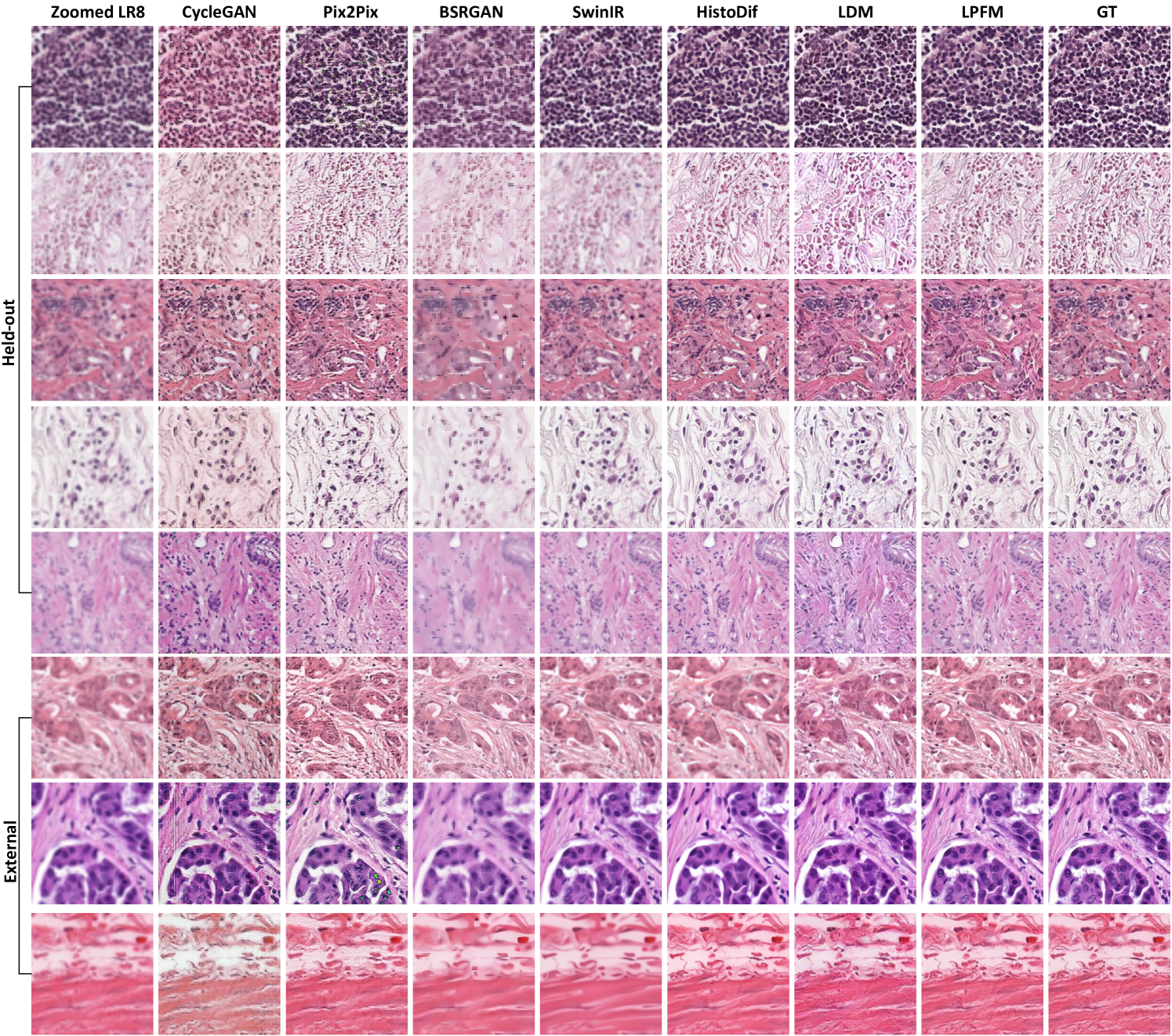

Super resolution refers to computational techniques that reconstruct high-resolution images from low-resolution inputs by recovering lost high-frequency details and fine structures. In digital pathology, this process enhances the visibility of diagnostically critical features that may be obscured due to limitations in scanning resolution or image acquisition conditions. The ability to faithfully reconstruct these microscopic details is particularly important because pathologists routinely examine tissue specimens at multiple magnification levels, where fine cellular and subcellular features directly inform diagnostic decisions. Therefore, it is important to evaluate the super-resolution abilities of different models. In this section, we conducted experiments on a total of 18 tasks, including 9 internal tests and 9 external tests. The detailed experimental results are presented in Extended Data (Tab. 1-6). More generated samples are shown in Extended Data (Fig. 14).

To simulate realistic image degradation for super-resolution evaluation, we generated low-resolution counterparts by downscaling original high-resolution pathology images by factors of 2, 4, and 8 using the comprehensive degradation model detailed in Sec. 4.1.2. For internal validation, we employed three benchmark datasets (CAMELYON16[38], PANDA[39], and PAIP2020[40]), which were rigorously partitioned into training (70%), validation (10%), and test (20%) sets with no data overlap to ensure unbiased evaluation. All model comparisons were performed exclusively on the held-out test sets under standardized conditions. To further validate generalization capability, we incorporated external test datasets (TIGER2021, MIDOG2022 and OCELOT[41]) representing diverse tissue types, staining protocols, and scanner variations, providing robust assessment across different clinical scenarios and imaging conditions.

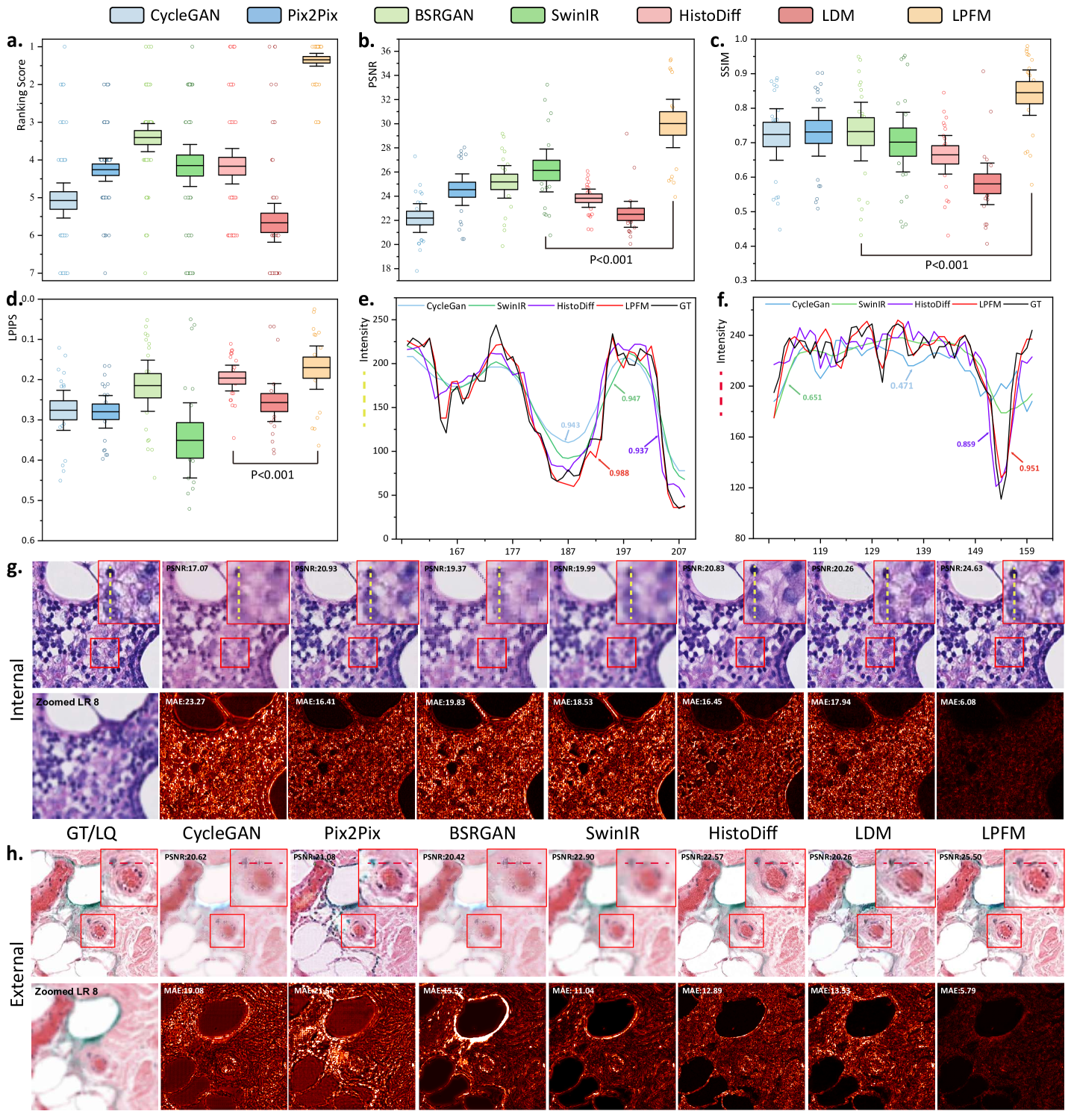

To comprehensively evaluate the performance of different methods across multiple super-resolution tasks, we conducted a thorough ranking analysis where each of the 18 super-resolution tasks was ranked according to all three metrics (Fig. 2a). Our proposed method demonstrated superior and consistent performance across the comprehensive evaluation. In the 18 super-resolution tasks assessed by three metrics, our approach achieved an outstanding average ranking of 1.33 across all evaluation criteria (Fig. 2a). More impressively, in 15 out of these 18 tasks, our method simultaneously secured either first or second place rankings in all three metrics (PSNR, SSIM, and LPIPS). Specifically, the LPFM attained remarkable quantitative results with average values of 30.27 dB (PSNR), 0.85 (SSIM), and 0.1647 (LPIPS) across all tasks, surpassing the second-best methods by significant margins of 4.14 dB and 0.12 in PSNR and SSIM, respectively (Fig. 2b-d).

Furthermore, we showed some generated visual samples of various methods in internal and external datasets (Fig. 2g-h). The mean absolute error (MAE) of the generated images and GT images was computed to evaluate the visual quality of the generated images. LPFM achieved the lowest MAE for the samples in internal and external samples. Additionally, we analyzed the intensity profiles of the generated samples in internal and external datasets produced by the top four best-performance models alongside the GT images (Fig. 2e-f). To better validate the fidelity of reconstructed details, we conducted pixel-wise Pearson Correlation analysis [42] between the generated images and GT images. The results demonstrated that LPFM achieved the highest correlation coefficient. As shown in Fig. 2e-f, the intensity curve of LPFM-generated images exhibited nearly perfect alignment with the GT profile, particularly in preserving critical high-frequency components that correspond to cellular boundaries and nuclear details. The quantitative correlation analysis, combined with our previous metric evaluations, provided comprehensive evidence that LPFM delivered both perceptually convincing and accurate super-resolution results for pathology images.

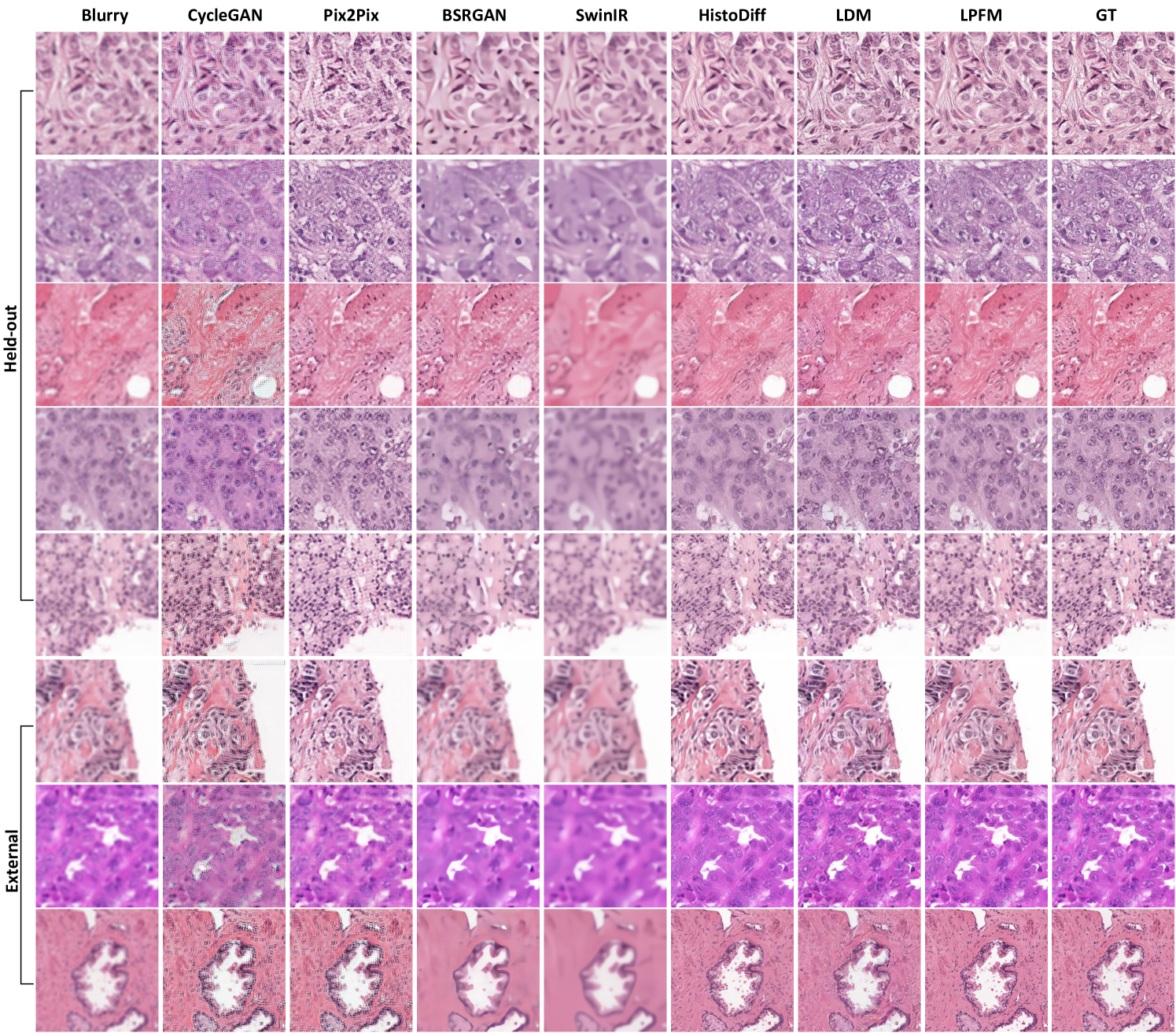

2.2 Image Deblurring

In computational pathology, effective deblurring enhances critical diagnostic features by restoring sharp boundaries and fine cellular details that may be lost due to optical limitations or focus variations during slide scanning. This capability directly impacts diagnostic accuracy in applications such as tumor margin assessment and mitotic figure detection.

Our evaluation framework comprised 18 deblurring tasks (9 internal and 9 external tests), with complete results available in Extended Data (Tab. 13-18). We simulated clinically relevant blur conditions using Gaussian kernels with varying parameters (kernel sizes: 7-15, : 1.5-3.5) as detailed in Sec. 4.1.2. The same rigorous dataset partitioning (CAMELYON16, PANDA, PAIP2020) and external validation protocol (OCELOT, MIDOG2022, TIGER2021) described in Sec. 2.1 were maintained. More generated samples are shown in Extended Data (Fig. 15).

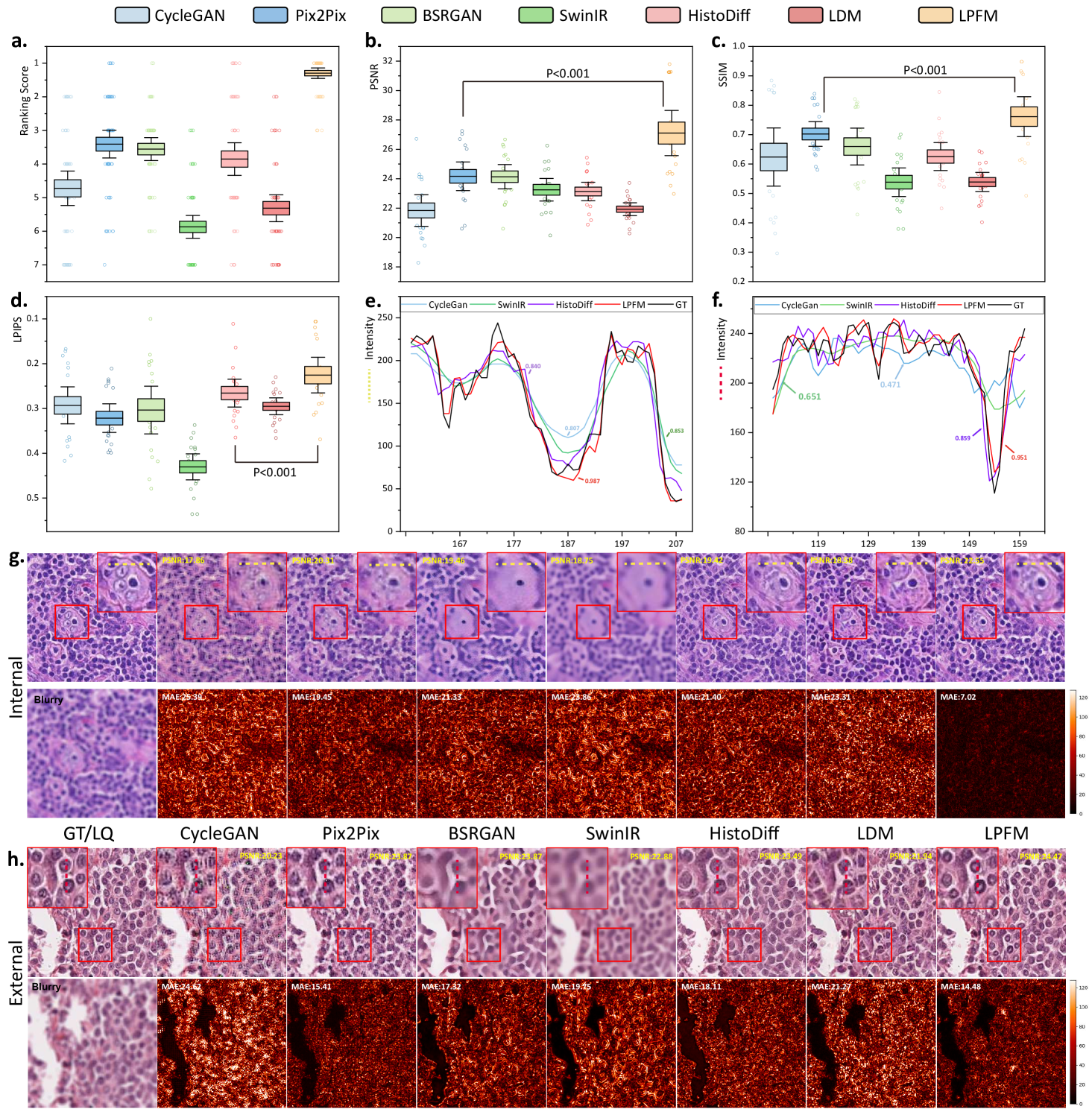

The statistical analysis showed that LPFM achieved the best average ranking scores across PSNR, SSIM and LPIPS metrics for the 18 deblurring tasks (Fig. 3a). Specifically, LPFM ranked among the top two methods in all three metrics for 16 out of 18 tasks (84.2% of cases), demonstrating remarkable consistency across different evaluation criteria. Notably, LPFM achieved the highest PSNR values in all 18 tasks, with an average score of 27.36 dB that significantly outperformed the second-best method (24.17 dB, +3.19 dB improvement). For structural similarity assessment, LPFM maintained superior performance with SSIM scores consistently leading all comparison methods across various blur conditions (Fig. 3c). The average SSIM of 0.770 substantially exceeded competing approaches, with particularly notable advantages in challenging cases involving large kernel sizes where LPFM achieved up to 0.948 SSIM versus 0.824-0.845 for other methods. Perceptual quality evaluation through LPIPS further confirmed LPFM’s advantages (Fig. 3d), with an average score of 0.220 representing 31.5% reduction in perceptual distance compared to traditional methods (CycleGAN: 0.293) and 17.3% improvement over recent diffusion-based approaches (HistoDiff: 0.266).

Visual comparisons presented in Fig. 3g-h showed LPFM consistently producing sharper cellular boundaries and better-preserved nuclear details compared to other methods. Quantitative analysis revealed LPFM achieved the lowest MAE across internal and external datasets, with internal samples showing 7.02 (versus 19.45 for the second-best method) and external datasets demonstrating 14.48 (versus 15.41 for the second-best method). The evaluation of structural consistency through the pixel-level PCC analysis further confirmed LPFM’s exceptional performance, achieving 0.987 on internal datasets and maintaining strong generalization with 0.953 on external datasets (Fig. 3e-f). These results collectively validated LPFM’s capability to faithfully restore diagnostically critical features while maintaining structural consistency.

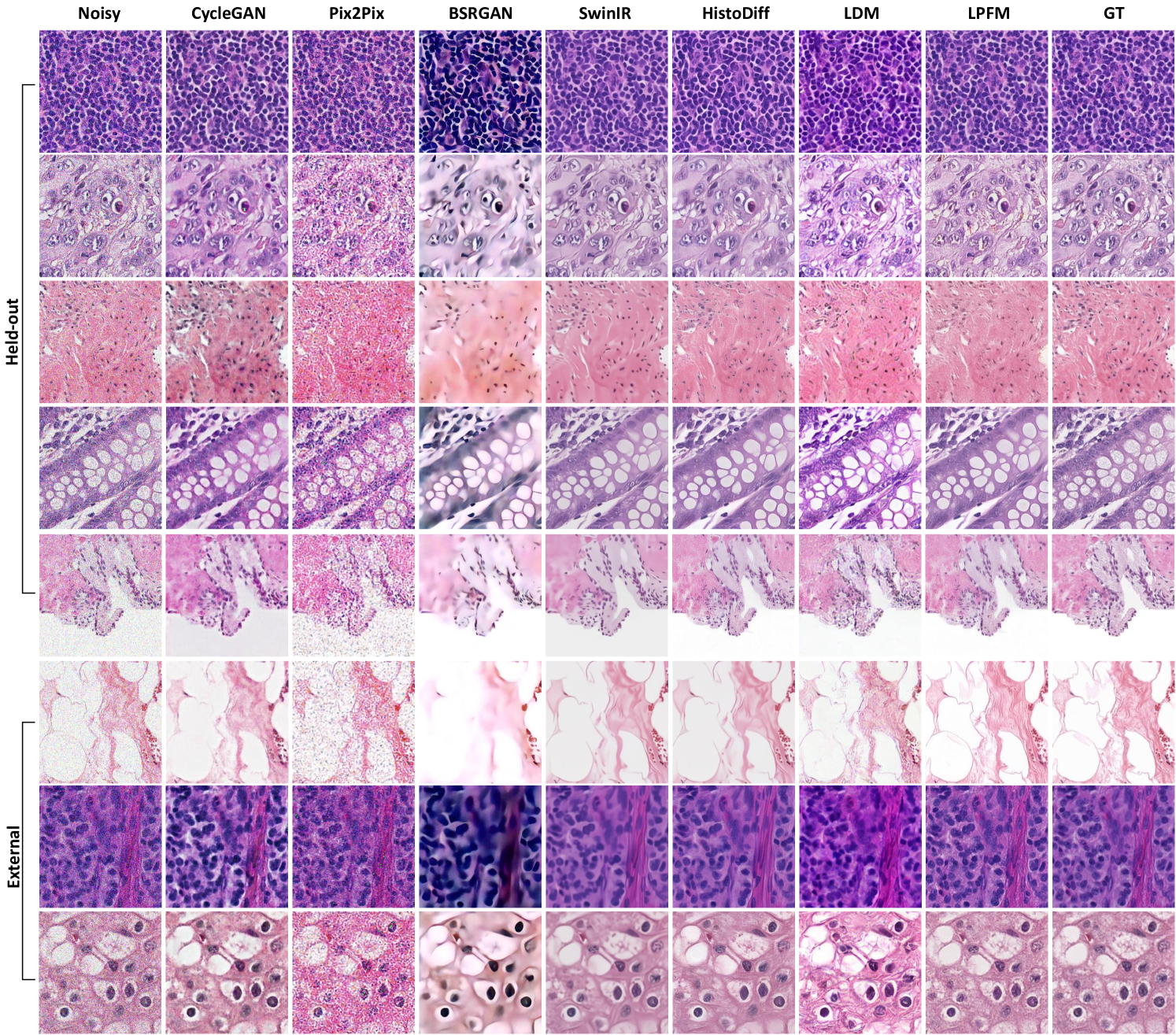

2.3 Image Denoising

Noise corruption in pathology images presents a significant challenge for both clinical diagnosis and computational analysis. Multiple factors introduce noise, including electronic sensor limitations during slide scanning, uneven staining artifacts, tissue preparation inconsistencies, and optical imperfections in imaging systems. These noise patterns obscure critical cellular and subcellular features essential for accurate diagnosis, including nuclear membrane integrity, chromatin distribution patterns, and subtle morphological characteristics. Therefore, it is necessary to remove possible noise inside pathology images and restore high-quality images for downstream tasks. To evaluate the noise restoration performance of various methods, we conducted 18 denoising experiments, including 9 internal and 9 external tests. The detailed experimental results are presented in Extended Data (Tab. 7-12). More generated samples are shown in Extended Data (Fig. 16).

To evaluate denoising performance, we generated synthetic datasets by corrupting high-quality pathology images with Gaussian noise at varying intensities ( = 21, 31, 41), following the degradation model in Sec. 4.1.2. We used the same internal datasets (CAMELYON16, PANDA, PAIP2020) with a 7:1:2 train/val/test split and further validated generalization on external datasets (OCELOT, MIDOG2022, TIGER2021).

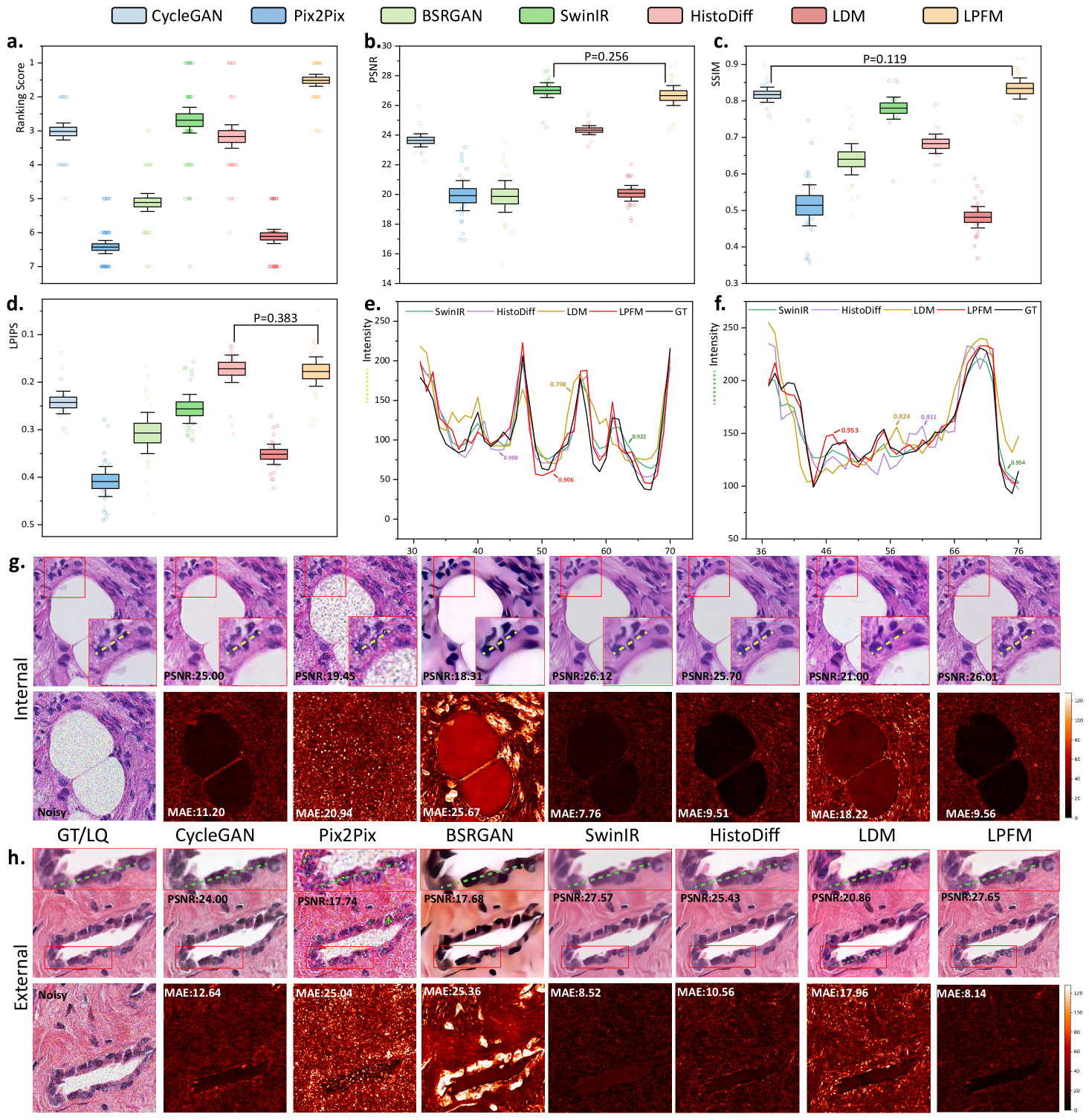

The ranking analysis of 18 denoising tasks revealed the superior performance of LPFM (Fig. 4a), which achieved an outstanding average ranking score of 1.48, significantly outperforming the second-best method SwinIR (average ranking 2.65) by 1.17. LPFM ranked among the top two methods in all three evaluation metrics (PSNR, SSIM, and LPIPS) for 14 out of the 18 tasks, showcasing its remarkable robustness in balancing different aspects of image quality. The substantial performance gap between LPFM and competing methods was particularly evident in high-noise scenarios (=41) where LPFM maintained superior detail preservation while effectively suppressing noise artifacts.

The comprehensive evaluation across all 18 denoising tasks revealed an interesting performance landscape among the compared methods (Fig. 4b-d). While LPFM did not achieve the highest scores in PSNR (SwinIR led with 27.02 dB average) or LPIPS (HistoDiff led with 0.172 average), it demonstrated exceptional balance across all three metrics (PSNR, SSIM, and LPIPS). Crucially, LPFM’s superior SSIM (average 0.837), which measures structural similarity, indicated that it best maintained critical tissue and cellular details essential for pathological diagnosis, even if its PSNR or LPIPS was marginally lower than the best performing methods. This balance is vital in medical imaging where over-smoothing (high PSNR but loss of detail) or perceptual artifacts (good LPIPS but unnatural textures) can compromise diagnostic accuracy.

Visual and quantitative analysis of denoising performance was provided in Fig. 4g-h. In terms of MAE metric, LPFM showed differentiated performance across internal and external datasets. On internal test sets, LPFM achieved an MAE of 9.56, slightly higher than SwinIR’s 7.76. However, in external validation datasets, LPFM showed superior generalization with the lowest MAE of 8.14, outperforming SwinIR’s 8.52. Structural consistency analysis through the pixel-level PCC (Pearson Correlation Coefficient) showed similar trends. On internal data, LPFM achieved a high PCC of 0.906 (second to SwinIR’s 0.922), while on external data it reached 0.953 - nearly identical to SwinIR’s 0.954. These results indicated that both methods preserved local structures well, with SwinIR having a marginal advantage on familiar data distributions. While SwinIR achieved strong quantitative scores, its outputs frequently exhibited over-smoothing artifacts that erased diagnostically important cellular details and tissue textures. In contrast, LPFM maintained superior perceptual quality, preserving nuclear boundaries and chromatin patterns, even if some pixel-level metrics showed slight disadvantages.

2.4 Virtual Staining

Virtual staining, enabled by AI models, offers a transformative approach in pathology by digitally replicating the appearance of chemically stained tissue samples without the need for physical dyes. This technology significantly accelerates diagnostic workflows, generating high-quality stained images in minutes rather than the hours or days required for traditional chemical staining methods, while also reducing costs associated with reagents and laboratory labor.

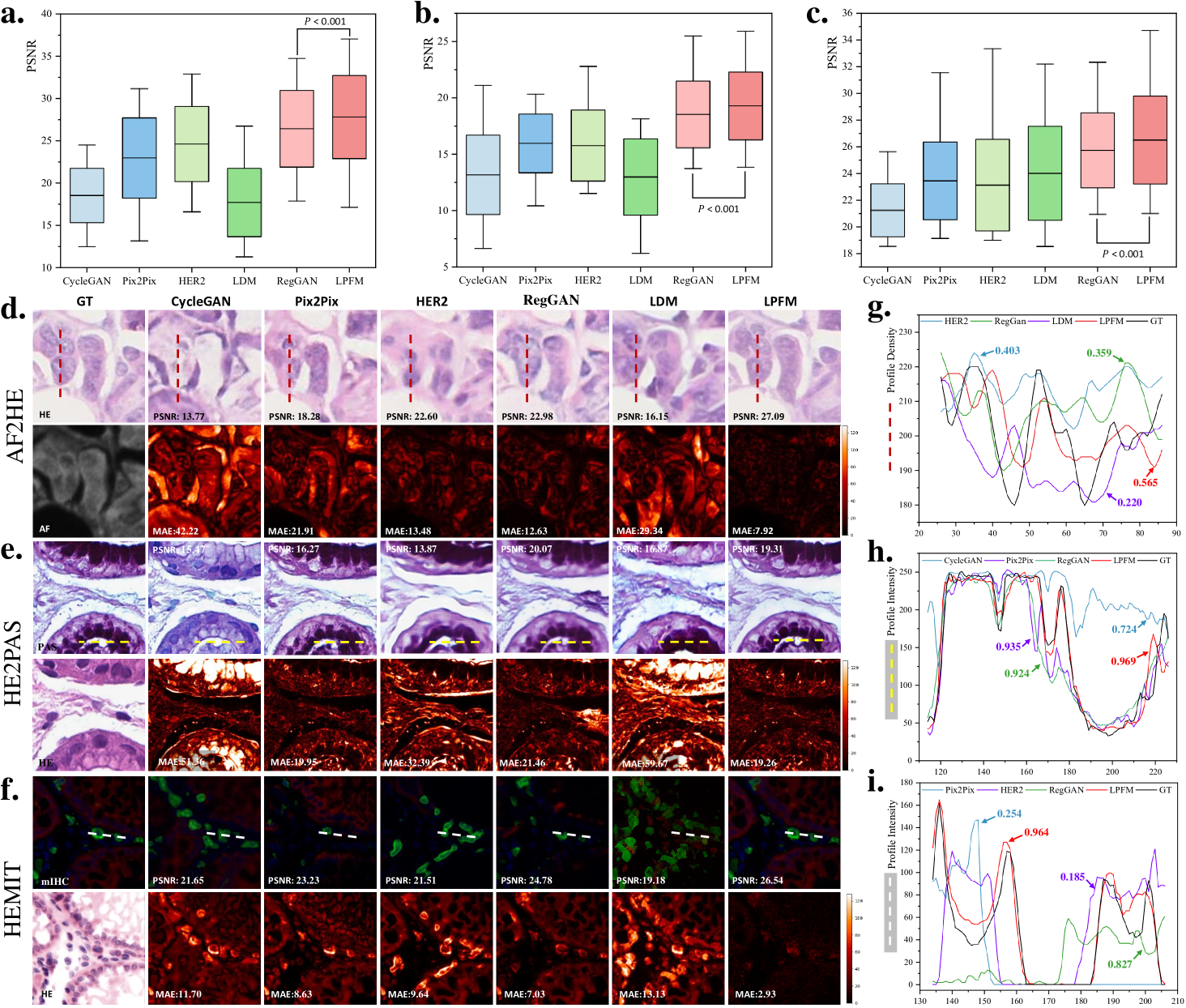

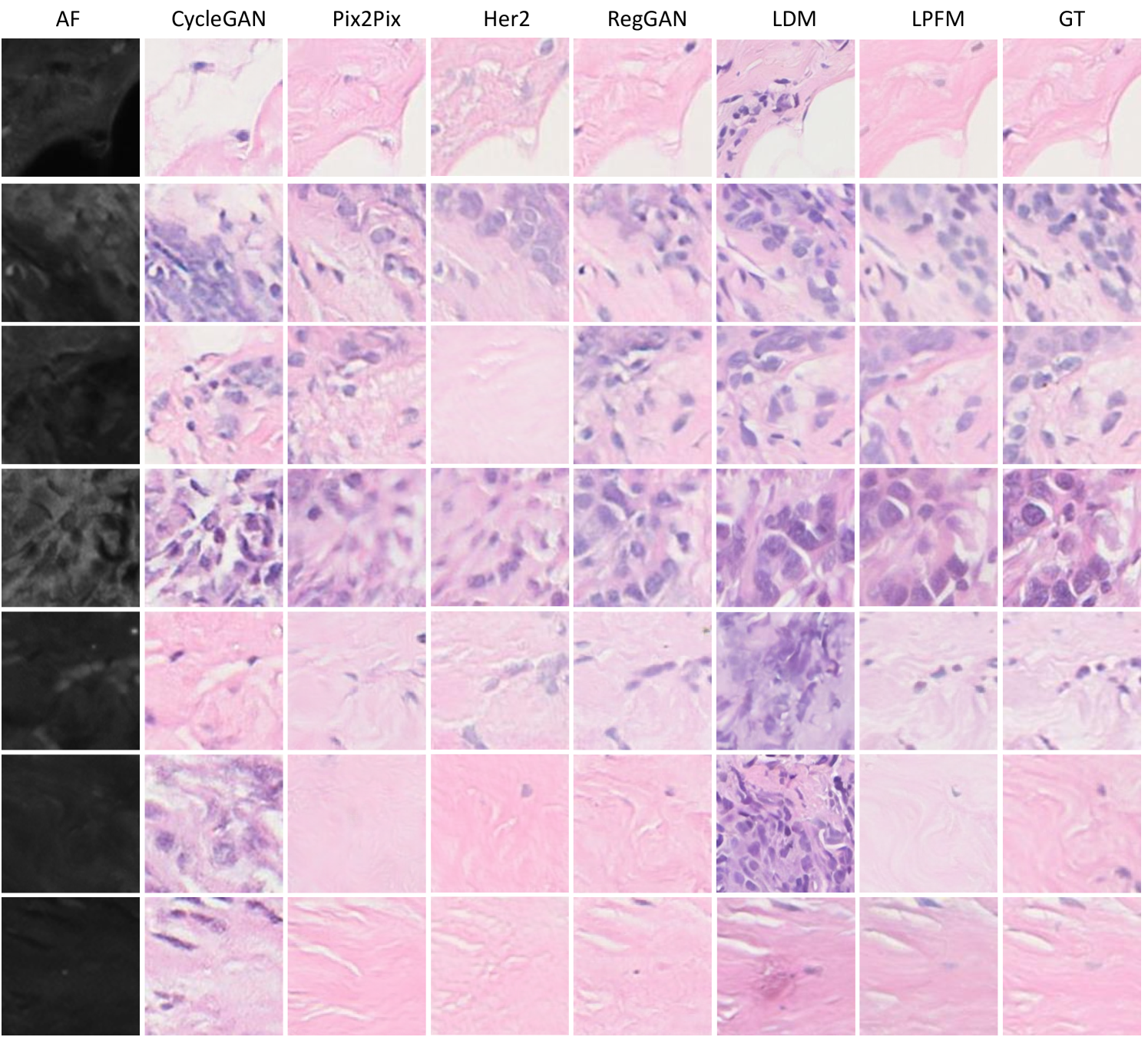

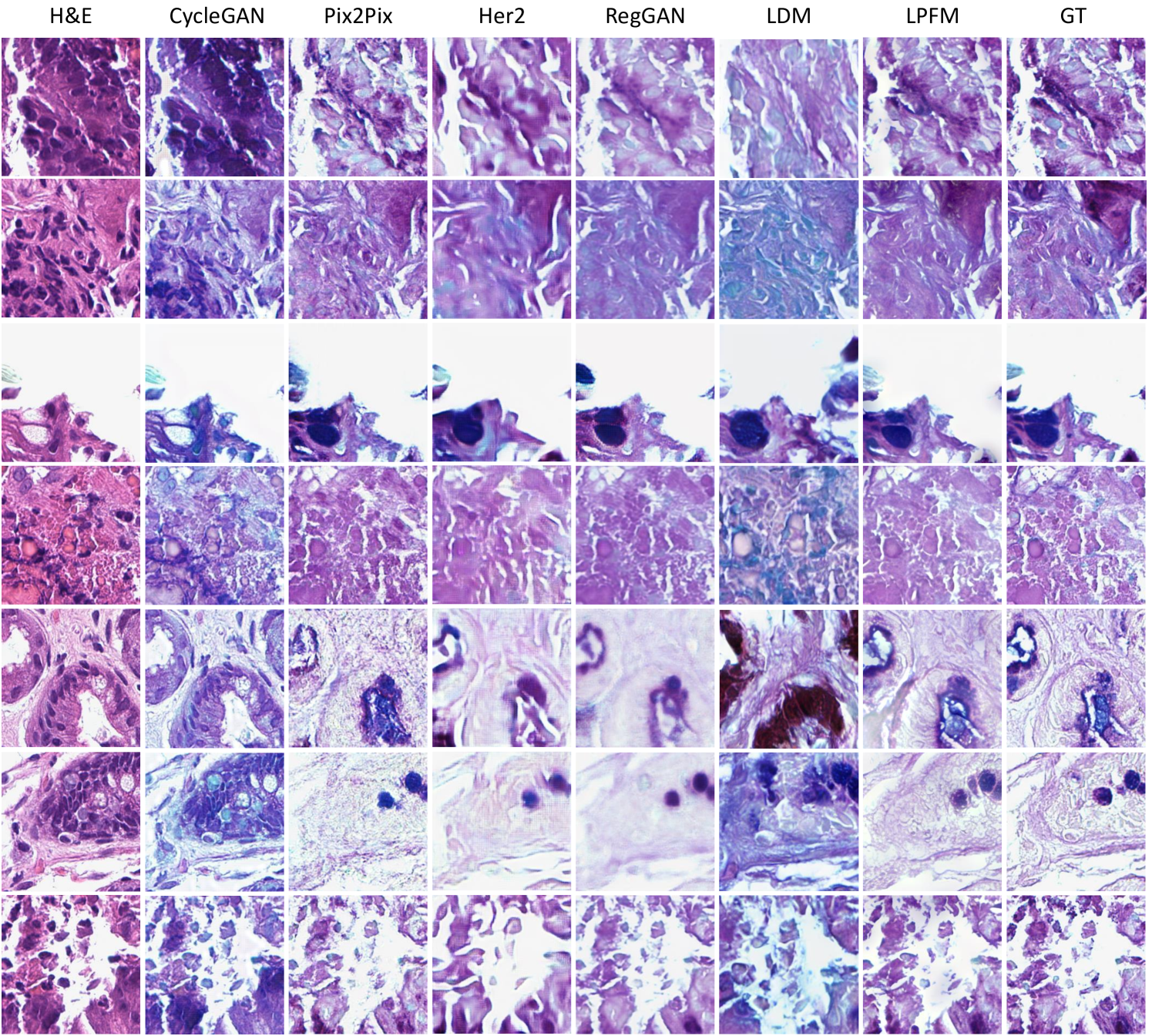

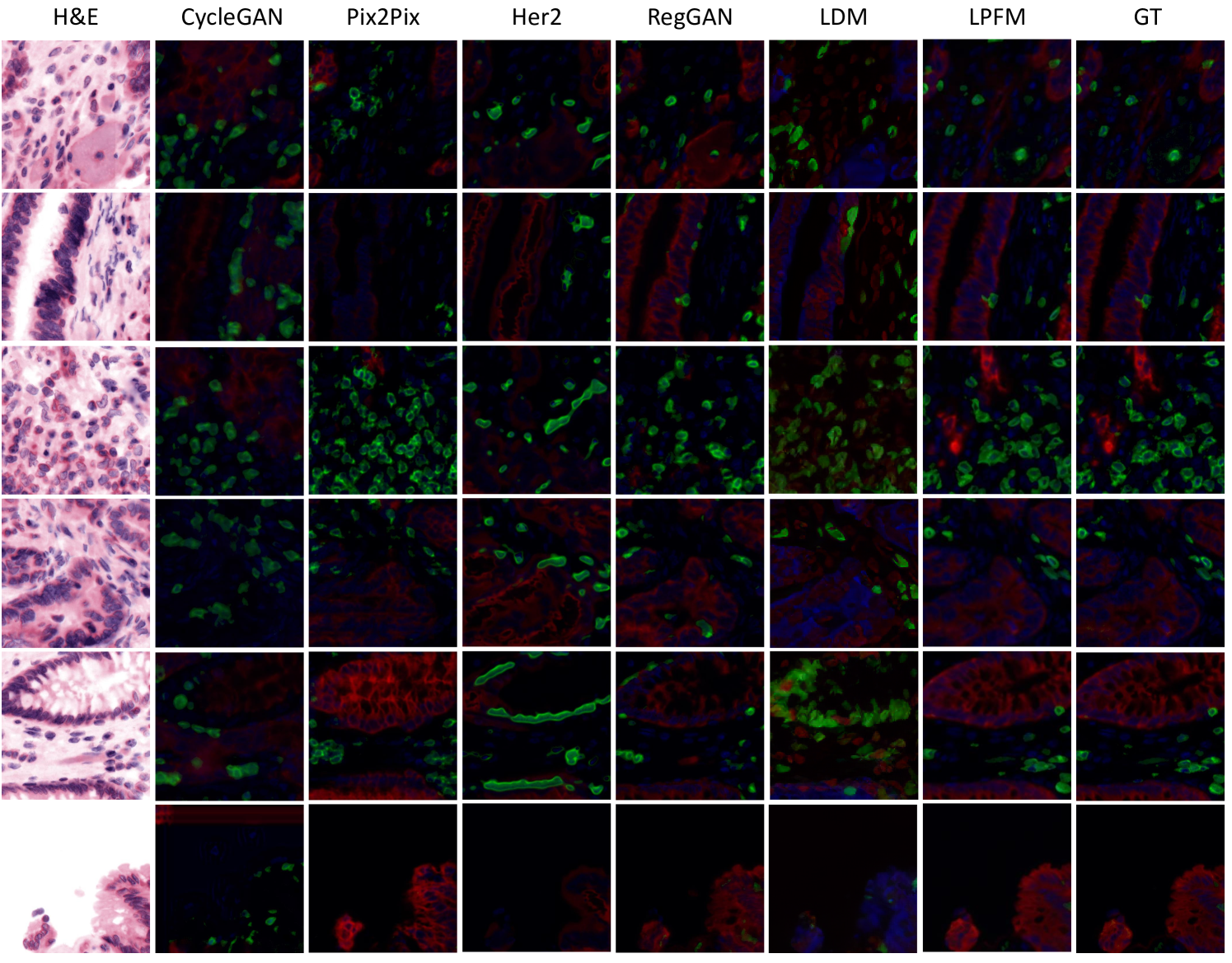

For the virtual staining tasks, we employed multiple paired staining datasets, including AF2HE [43], HE2PAS, and HEMIT [44] datasets, to rigorously validate the performance of LPFM and various compared methods. Each dataset served a distinct purpose: AF2HE evaluated the model’s ability to transform autofluorescence (AF) images into H&E stains, crucial for rapid preliminary diagnostics; HE2PAS assessed the conversion between H&E and Periodic Acid-Schiff-Alcian Blue (PAS-AB) stains, important for detecting glycoproteins and mucins in conditions like kidney and liver diseases; and HEMIT tested the model’s capability to predict multiplex immunohistochemistry (mIHC) staining from H&E, enabling advanced biomarker analysis without repeated physical staining. These datasets were selected to cover diverse staining modalities and clinical scenarios, ensuring robust validation across different tissue structures and diagnostic needs. The detailed experimental results are presented in Extended Data (Tab. 19-21). More generated samples are shown in Extended Data (Fig. 18-20).

To comprehensively evaluate our approach, we compared LPFM against several widely used methods for virtual staining tasks, each with distinct architectures and advantages. CycleGAN, an unsupervised generative adversarial network (GAN), excelled in unpaired image-to-image translation through its cyclic consistency loss, making it suitable when strictly paired training data was unavailable. Pix2Pix, a conditional GAN, leveraged paired data for precise pixel-to-pixel translation, offering superior performance in scenarios where exact input-output alignments were critical. HER2 specialized in histopathology image translation by incorporating hierarchical feature extraction, enhancing structural preservation in complex tissue architectures. RegGAN introduced a registration-based loss to improve spatial alignment between input and output images, particularly beneficial for maintaining morphological accuracy in virtual staining tasks. Lastly, Latent Diffusion Models (LDM) employed a denoising diffusion process in latent space, combining the generative power of diffusion models with computational efficiency.

Our proposed LPFM demonstrated superior performance across all three virtual staining datasets (AF2HE, HE2PAS, and HEMIT) when compared to existing methods, as evidenced by both quantitative metrics and qualitative assessments. Quantitatively, LPFM achieved the highest average PSNR values (Fig. 5a-c). Specifically, in the AF2HE task, LPFM achieved a PSNR of 27.81 dB, representing a 5.3% improvement over the second-best method RegGAN (26.42 dB). The superior performance was further confirmed in the HE2PAS and HEMIT tasks where LPFM attained PSNR values of 19.29 dB and 26.51 dB respectively, corresponding to 4.1% and 3.0% improvements over RegGAN.

The architectural innovations in LPFM yielded substantial benefits in both structural preservation and perceptual quality. For structural similarity, LPFM achieved SSIM scores of 0.763, 0.563, and 0.820 across the three tasks, outperforming the second-best methods by 4.5%, 33.8%, and 10.7% respectively. The perceptual quality metrics (LPIPS) showed consistent advantages, with LPFM demonstrating 20.1%, 7.0%, and 20.0% reductions in perceptual error compared to the leading alternatives for each task.

Qualitatively, LPFM-generated virtual stains (Fig. 5d-f) exhibited remarkable fidelity to chemical staining results, with significantly reduced artifacts and better preservation of critical diagnostic features compared to other methods. The MAE heatmaps revealed that LPFM produced the smallest errors in challenging regions such as cell nuclei boundaries (H&E), glomerular basement membranes (PAS-AB), and biomarker expression patterns (mIHC). The intensity profile analyses (Fig. 5g-i) further confirmed this advantage, with LPFM showing the highest Pearson correlation coefficients (PCC) with ground truth stained images, particularly in capturing fine-grained histological features.

2.5 Restoration for Coupled Degradations

Real-world pathology images often suffer from multiple coexisting degradations, including blur, noise, and low resolution. While existing methods perform well on single degradation types, their effectiveness significantly decreases when handling such composite cases. This performance drop primarily occurs because optimizing for one degradation type may interfere with addressing others, and models trained on isolated degradations fail to capture the complex interactions present in actual clinical images.

To rigorously evaluate model robustness under such challenging conditions, we constructed a comprehensive degradation framework by applying multiple sequential distortions to high-quality pathology images from our datasets. These distortions included randomized combinations of Gaussian blur, Poisson noise, and low resolution with parameters carefully sampled to reflect real clinical imaging conditions. The degradation process preserved the biological relevance of the images while introducing realistic artifacts.

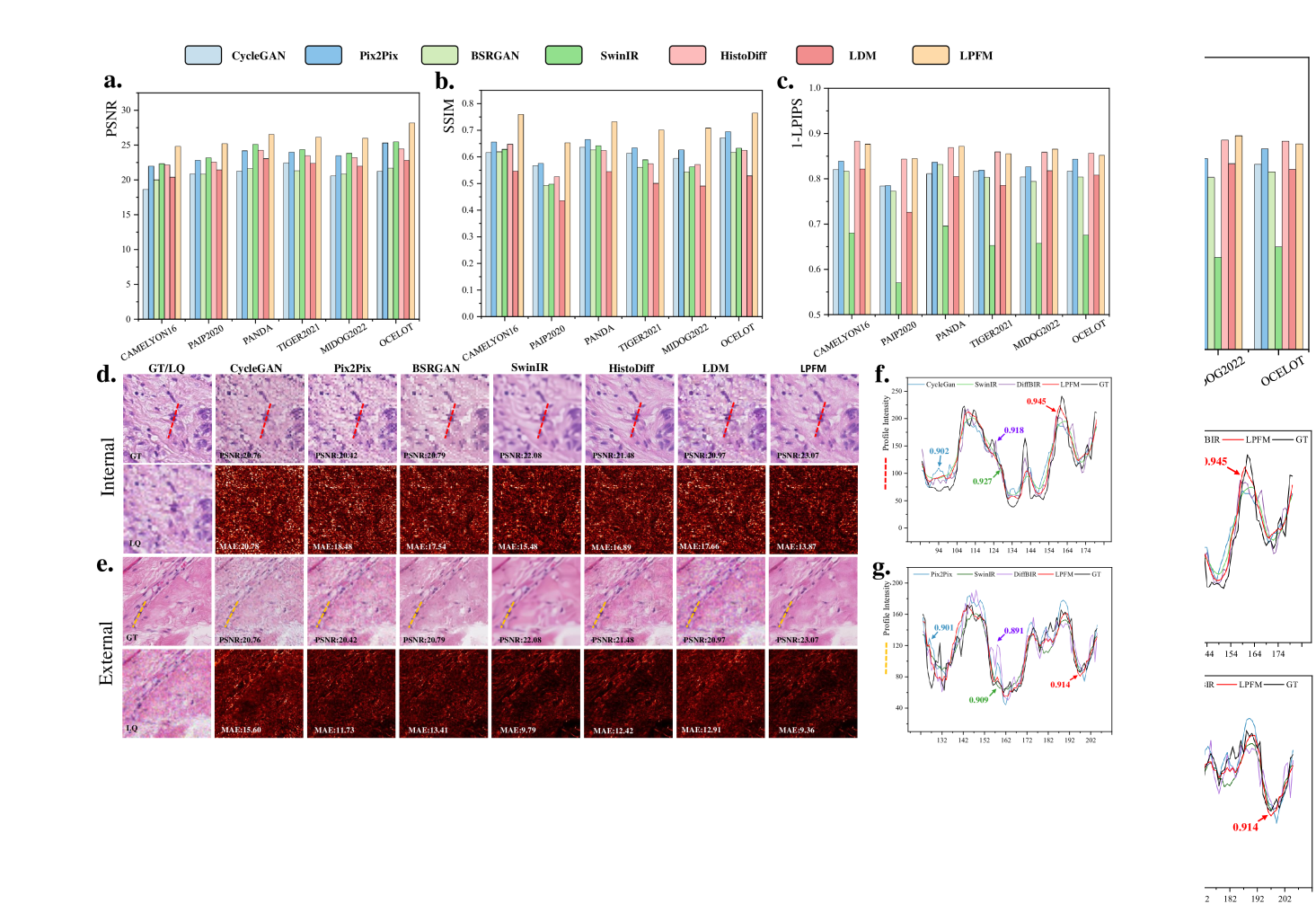

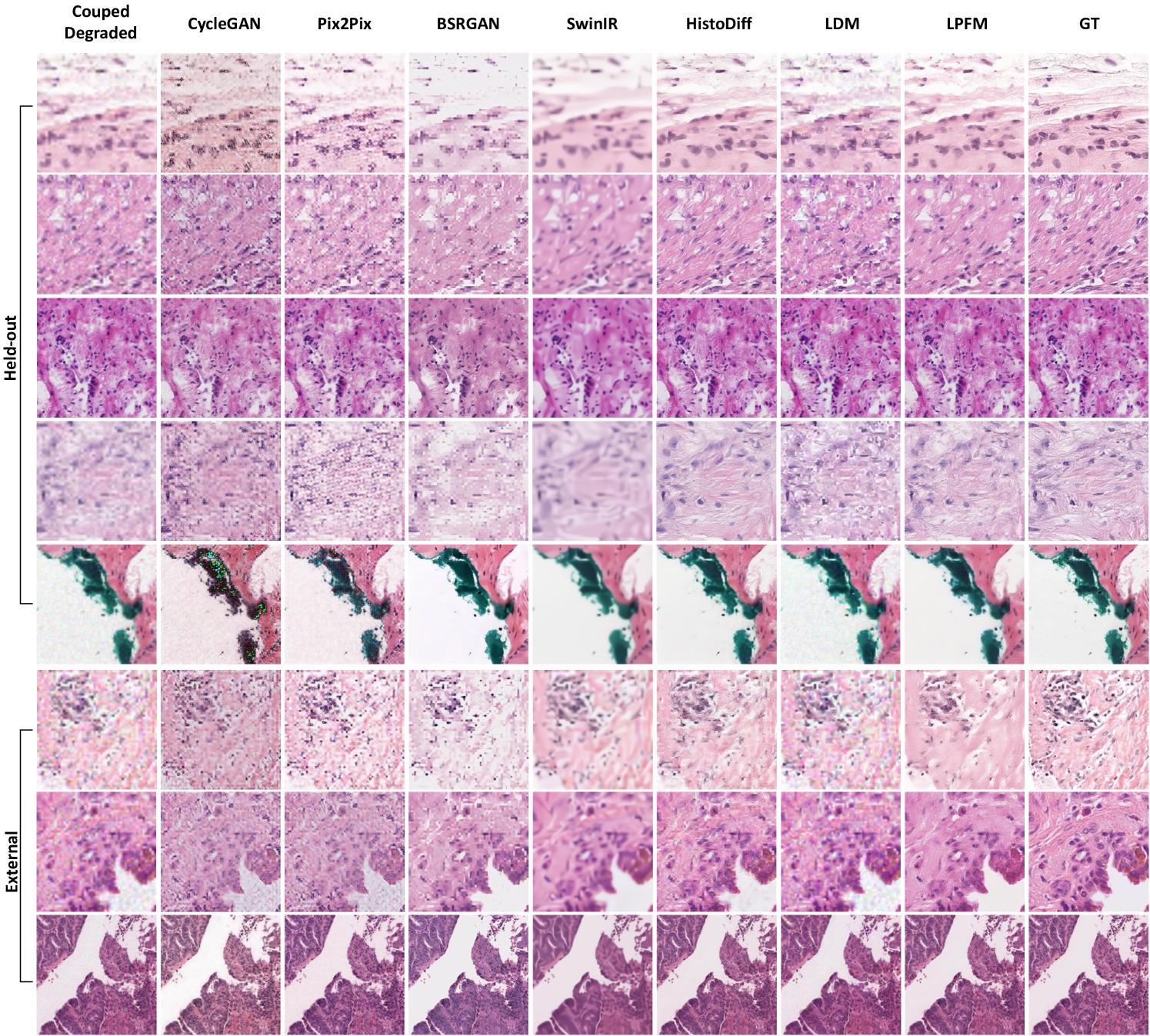

We evaluated our method using a rigorous two-tier validation strategy. First, internal testing was conducted on held-out datasets from the training distribution, including CAMELYON16, PAIP2020 and PANDA. Second, external evaluation was performed on completely independent datasets, including MIDOG2022, TIGER2021 and OCELOT, to assess generalization capability. The detailed experimental results are presented in Extended Data (Tab. 22-23). More generated samples are shown in Extended Data (Fig. 17).

As shown in Fig. 6a-c, the quantitative results demonstrated LPFM’s consistent advantages over competing approaches. In terms of PSNR, LPFM achieved a mean score of 26.15 dB, outperforming the second-best method SwinIR (24.05 dB) by a significant margin of 2.10 dB. This improvement was particularly notable in the OCELOT dataset where LPFM reached 28.20 dB, suggesting exceptional generalization capability to diverse tissue types. The SSIM results further confirmed this trend, with LPFM (0.720) substantially exceeding Pix2Pix (0.642) and other methods, indicating better structural preservation. LPFM maintained this performance advantage across all six datasets, demonstrating remarkable robustness to different degradation patterns and tissue characteristics.

LPIPS metrics, which assess perceptual quality, revealed additional insights. While HistoDiff achieved competitive LPIPS scores (0.138), LPFM demonstrated more balanced performance across all quality metrics. This suggested that while some methods might optimize for specific aspects of image quality, LPFM successfully maintained excellence in both pixel-level accuracy (PSNR) and perceptual similarity (LPIPS). The detailed experimental results are presented in Extended Data (Tab. 22-23).

Visual analysis of the results (Fig. 6d-e) corroborated these quantitative findings. Compared to other methods, LPFM better preserved nuclear details and tissue architecture while more effectively suppressing artifacts. This was especially evident in the error maps where LPFM showed minimal deviation from ground truth. The intensity profile (Fig. 6f-g) comparisons further demonstrated LPFM’s accuracy in maintaining original image characteristics, with Pearson correlation coefficients consistently exceeding 0.9 across all test cases.

2.6 Virtual Staining for Degraded Images

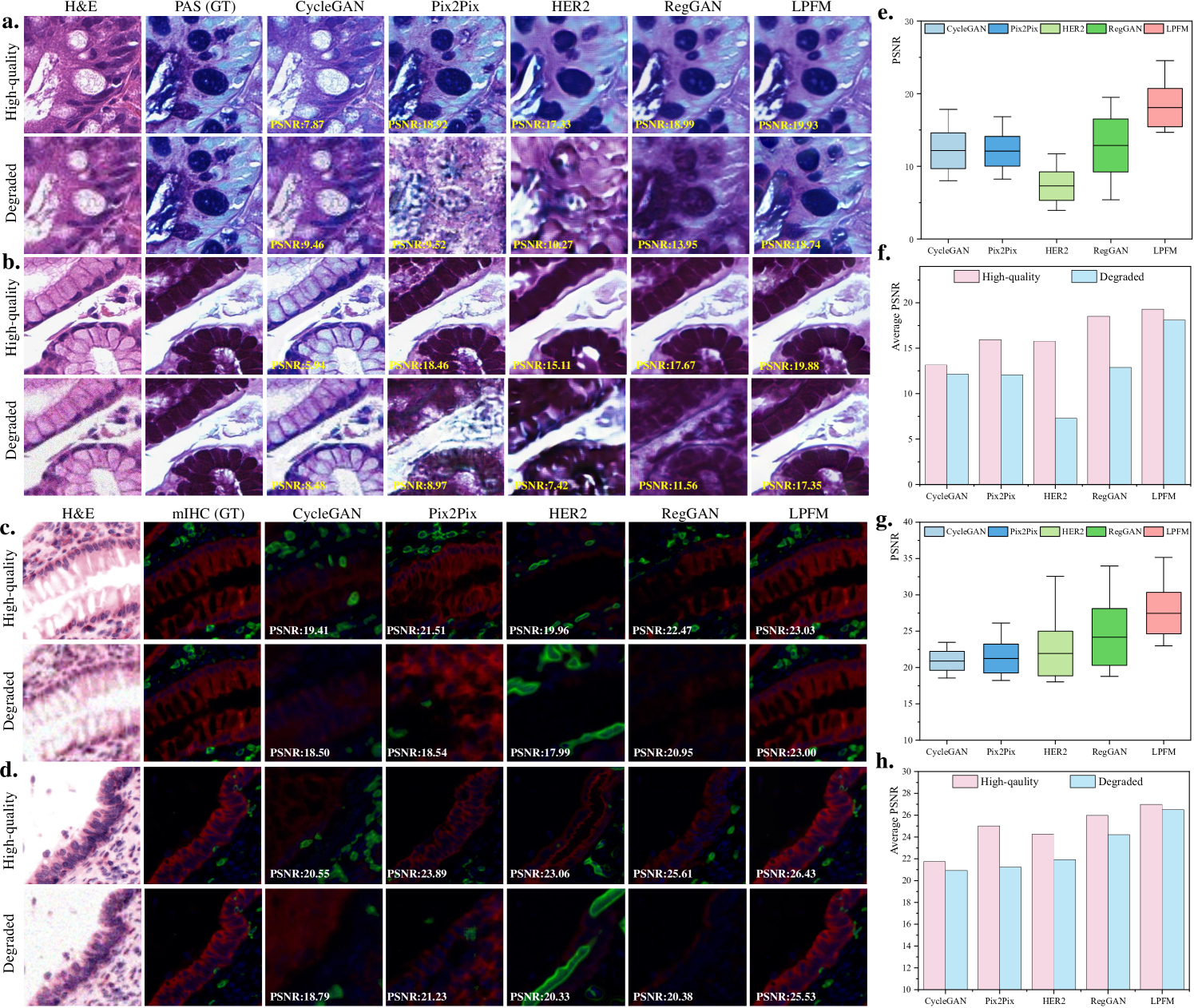

Clinical histopathology workflows frequently encounter degraded tissue specimens due to suboptimal staining, sectioning artifacts, or imaging imperfections. As illustrated in Fig. 7a-d, these degradations significantly impact virtual staining quality, motivating our rigorous evaluation of method robustness. Our experiments simulated realistic degradation scenarios by applying compound artifacts to H&E images from both HE2PAS and HEMIT datasets, then processing them through pretrained models without architectural modifications.

Our experimental design simulated this clinical challenge through a two-stage process (Fig. 6a-d). First, we applied coupled degradations (blur: =2.5, noise: =31, and 4 downsampling) to high-quality H&E images from HE2PAS and HEMIT datasets. These degraded inputs then underwent virtual staining to PAS-AB and mIHC respectively, without any intermediate restoration steps. This direct transformation tested the model’s ability to simultaneously address staining conversion and artifact suppression.

The quantitative results in Figure 7e-h demonstrated LPFM’s exceptional stability across degradation conditions. On HE2PAS (Figure 7e), LPFM maintained a narrow PSNR distribution (17.94-18.24 dB) for degraded inputs, outperforming RegGAN’s wider range (12.68-13.09 dB) while preserving superior mean PSNR (18.09 dB vs 12.89 dB). This robustness became more pronounced in Fig. 7f where LPFM showed merely 6.2

The HEMIT dataset results (Figure 7g) revealed an even more striking advantage - LPFM actually achieved higher PSNR on degraded inputs (26.99 dB) than on high-quality ones (26.49 dB), suggesting its unique capability to compensate for certain artifacts. As shown in Figure 7h, this inverse relationship contrasted sharply with other methods’ expected performance degradation, particularly HER2’s 3.1% drop. Visual comparisons in Figure 7c-d confirmed that LPFM successfully suppressed noise while preserving critical histological features in mIHC staining, whereas CycleGAN introduced false positive signals and Pix2Pix lost structural detail.

3 Discussion

The development of the Low-level Pathology Foundation Model (LPFM) represents a significant advancement in computational pathology by unifying diverse image restoration and virtual staining tasks within a single, adaptable framework. Our comprehensive evaluation across 66 distinct tasks demonstrates that this unified approach not only matches but frequently surpasses the performance of specialized models while offering unprecedented flexibility for clinical and research applications. The success of LPFM stems from its integration of several key innovations. The contrastive pre-training strategy enables the model to learn robust, stain-invariant feature representations that generalize across diverse tissue types and degradation patterns. This is complemented by a unified conditional diffusion framework that dynamically adapts to specific tasks through textual prompts, allowing clinicians to guide the enhancement process based on diagnostic priorities. Training on an extensive dataset encompassing 34 tissue types and 5 staining protocols further ensures broad applicability, from routine H&E analysis to specialized staining techniques. Notably, LPFM excels in handling coupled degradations, where it achieves a 2.10 dB PSNR improvement over leading specialized methods. This capability is particularly valuable given that clinical images often exhibit multiple concurrent artifacts that require simultaneous correction.

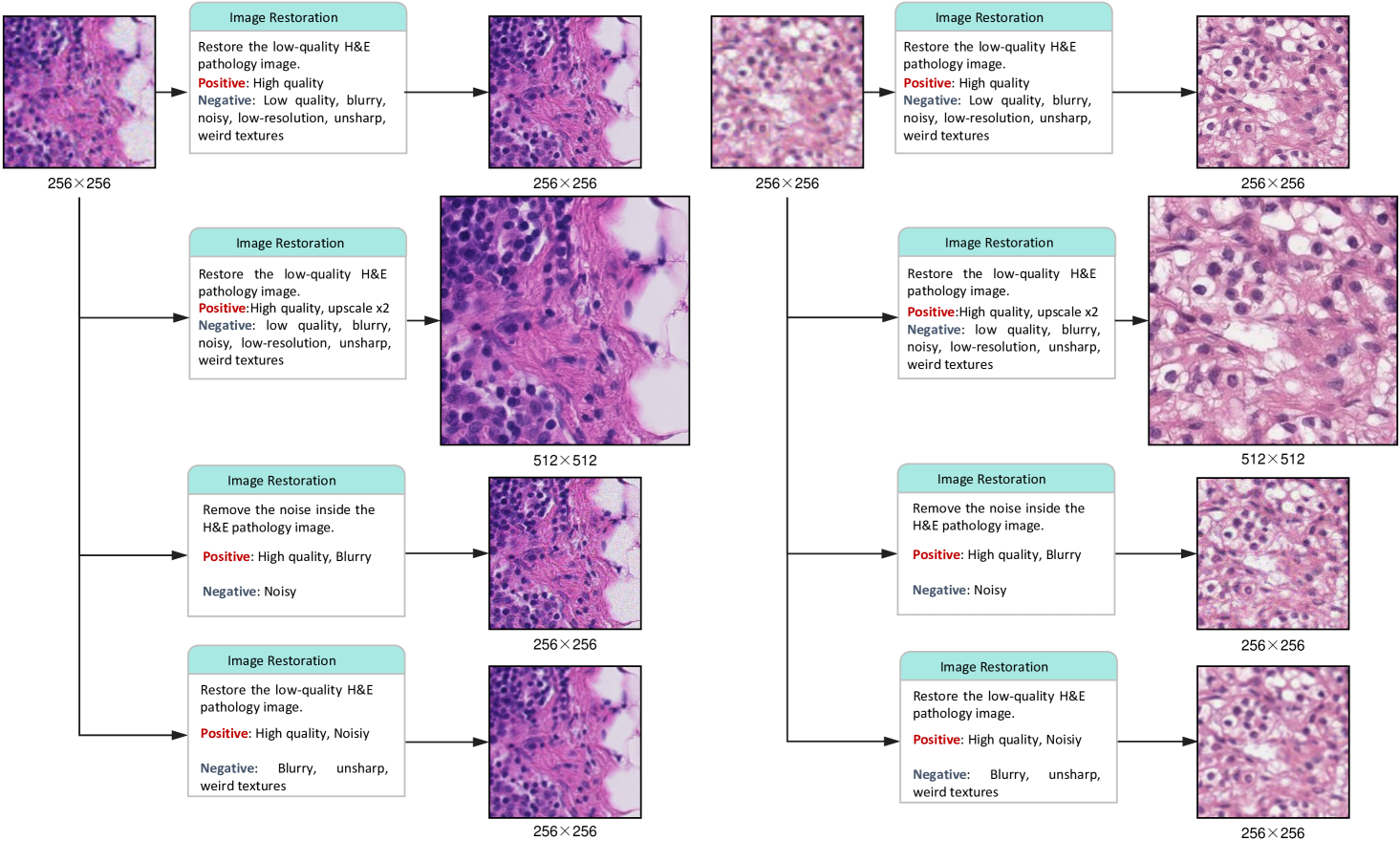

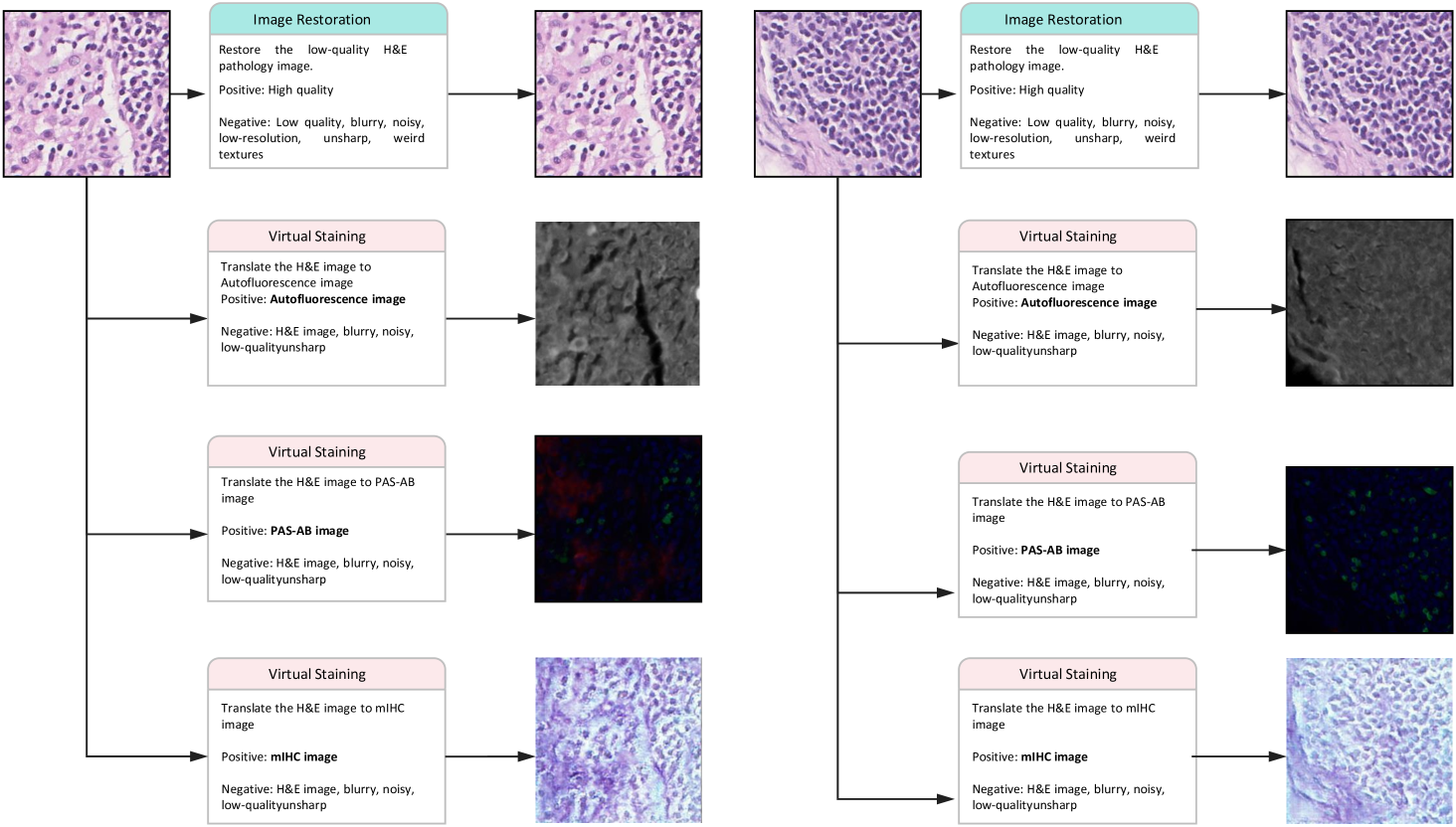

Textual Prompt Guidance. The prompt-guided architecture introduces a novel level of interactivity to pathology image processing. By allowing users to specify enhancement priorities through natural language instructions, the system adapts to diverse diagnostic needs without requiring architectural modifications. As shown in Fig. 10 and 11, the system dynamically adapts its image restoration or virtual staining targets based on natural language instructions without requiring architectural modifications.This flexibility addresses a major limitation of conventional approaches that enforce rigid processing pipelines, potentially enabling more personalized and context-aware low-level tasks.

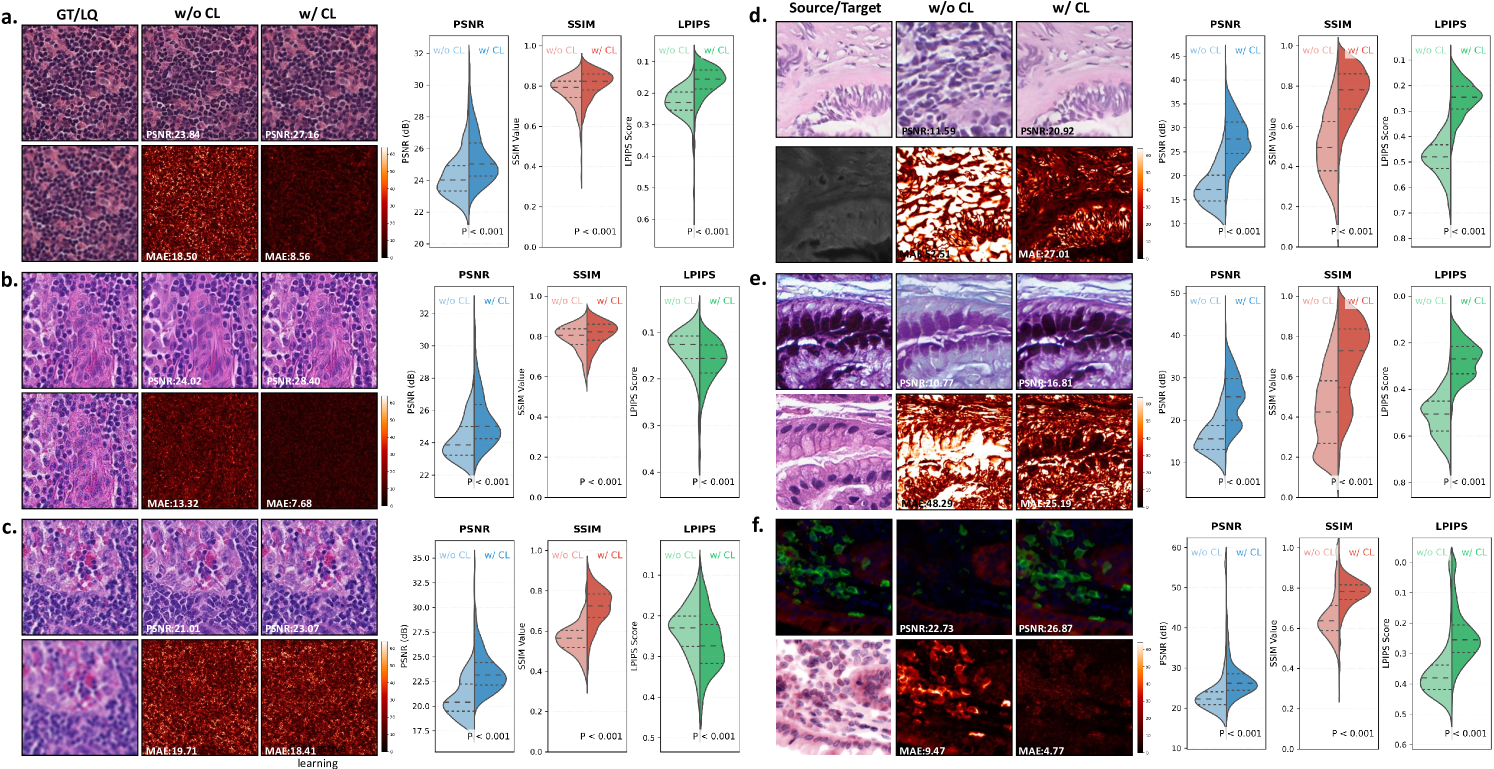

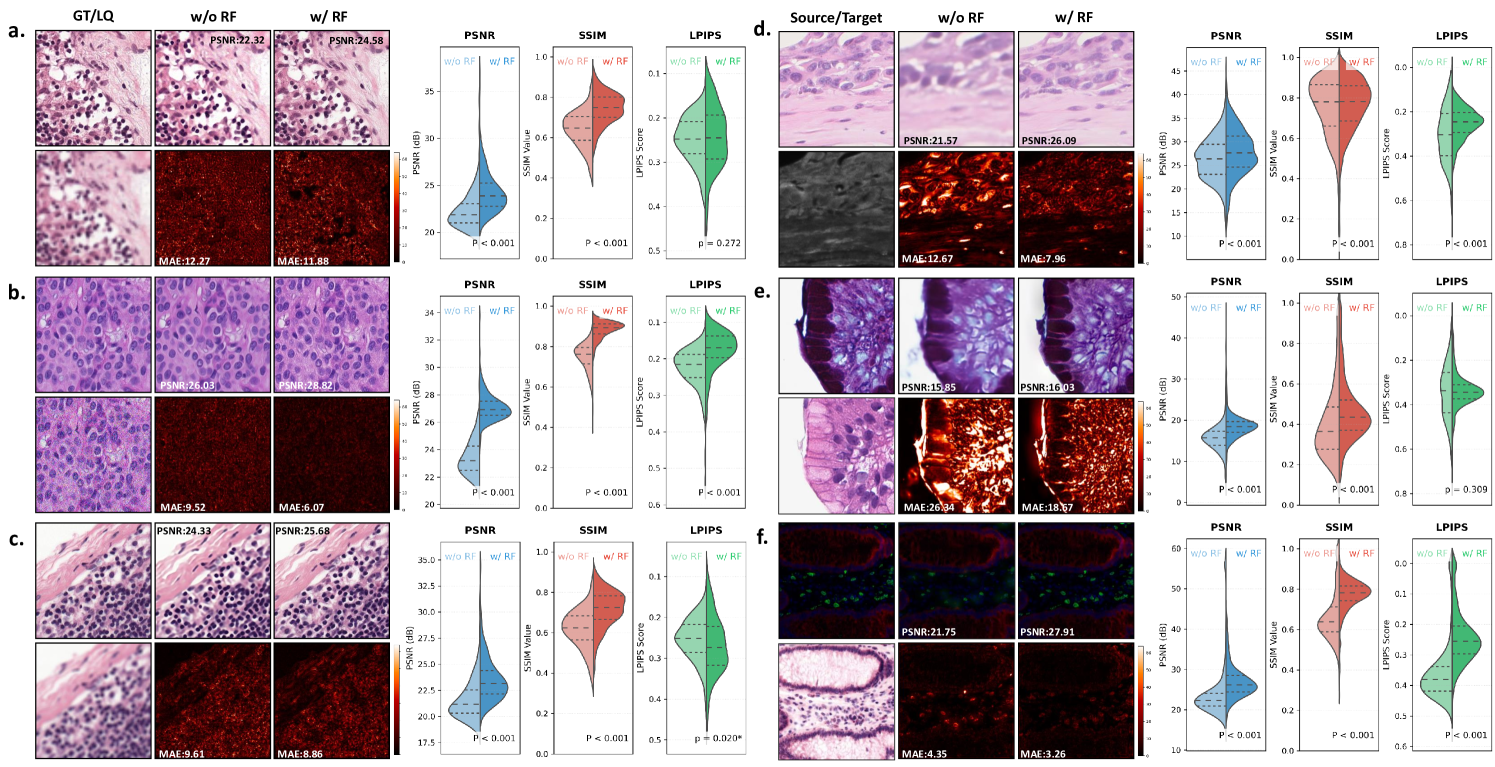

Effectiveness of Contrastive Pre-training. As demonstrated in Fig. 12, contrastive learning provides fundamental improvements to the model’s ability to handle both image restoration and virtual staining tasks. The visual comparisons clearly show that the contrastive pre-trained version better preserves fine cellular structures and tissue morphology compared to the non-contrastive baseline. This improvement is particularly evident in the mean average error (MAE) mapswhere the contrastive approach shows significantly reduced errors in diagnostically important regions. The quantitative results across all three evaluation metrics consistently confirm these visual observations, demonstrating that contrastive learning enables more robust feature representations for diverse pathology image processing tasks.

Effectiveness of Pathology Image Refinement. The refinement module plays a crucial role in enhancing output quality, as shown in Fig. 13. The conditional diffusion-based refinement effectively suppresses artifacts while preserving critical diagnostic features in both restoration and virtual staining tasks. Visual examination of the results reveals that the refined outputs maintain better structural consistency with the ground truth, particularly in challenging regions such as tissue boundaries and cellular details. The quantitative metrics consistently support these observations, with the refined versions showing superior performance across all evaluation criteria. The improvement is maintained across different types of image degradation and staining transformations, demonstrating the general applicability of the refinement approach.

Potential Limitation. Despite these advances, certain limitations must be acknowledged. While LPFM generalizes well across multiple scanners and staining protocols, its performance may vary when confronted with radically novel imaging modalities not represented in the training data. Additionally, like all generative models, there remains a small risk of introducing plausible but artifactual features in severely degraded inputs. These observations highlight important directions for future research, including extension to 3D pathology data, development of interactive refinement mechanisms, and creation of explainability tools to clarify enhancement decisions.

Clinical Implications. From a clinical perspective, LPFM’s virtual staining capabilities could transform diagnostic workflows. The model’s ability to generate high-quality PAS-AB and mIHC stains from H&E images with consistent structural preservation offers practical advantages, including reduced reagent costs, faster turnaround times, and conservation of precious biopsy material. These benefits would be especially impactful for rare specimens or when additional stains are needed retrospectively. While our quantitative and qualitative analyses confirm that virtually stained images maintain diagnostically critical features such as nuclear membranes and glycoprotein distributions, further clinical validation studies will be essential to assess diagnostic concordance with conventional staining methods. The broader implications of this work extend beyond immediate clinical applications. By demonstrating that a single model can excel at multiple low-level vision tasks while maintaining diagnostic reliability, LPFM reduces the computational overhead associated with deploying multiple specialized solutions in pathology workflows. This unification could improve the interoperability of computational tools while making advanced image enhancement more accessible to resource-limited settings. Moreover, the prompt-based control mechanism establishes a template for collaborative human-AI interaction in medical image interpretation where clinician expertise guides algorithmic processing to align with diagnostic requirements. Some examples of prompts controlling the generation are shown in Extended Data (Fig. 10-11).

Future works. Two critical directions warrant further investigation. First, extending LPFM to 3D pathology data represents a natural evolution, as volumetric imaging becomes increasingly important for comprehensive tissue analysis. This extension would enable artifact correction and virtual staining across z-stack acquisitions while presenting new computational challenges in processing high-dimensional pathology data. Second, large-scale clinical validation studies are essential to rigorously quantify diagnostic concordance between enhanced and ground-truth images across diverse disease subtypes and staining protocols, particularly focusing on whether model-generated features maintain diagnostic fidelity in critical regions like tumor margins or micrometastases.

In conclusion, LPFM advances the field of computational pathology by providing a versatile, high-performance solution for image quality enhancement that addresses both common and challenging real-world scenarios. The model’s ability to handle diverse tasks through a unified framework—while maintaining or improving upon the performance of specialized alternatives—suggests that foundation models can achieve transformative impact in medical imaging, much as they have in other domains. As the field progresses, the integration of such models into clinical workflows, coupled with ongoing technical refinements and rigorous validation, promises to enhance the accuracy, efficiency, and accessibility of pathological diagnosis.

4 Methods

4.1 Preparation Process

To ensure the robustness and generalizability of our model on image restoration and virtual staining tasks, we collected a comprehensive, multi-source dataset encompassing diverse tissue types and staining protocols. Our dataset includes H&E (Hematoxylin and Eosin), IHC (Immunohistochemistry), mIHC (multiplex immunohistochemistry) images, and PAS (Periodic Acid-Schiff) stained slides from multiple organs (e.g., liver, kidney, breast, and lung) (Extended Data Tab. 27).

4.1.1 Whole Slide Image Tiling and Stitching

Due to the extremely high resolution of WSIs (often exceeding 100,000 100,000 pixels), direct processing is computationally infeasible. Thus, we partition each WSI into smaller, manageable patches of size 256 256 pixels with 32 pixel overlapping, ensuring sufficient spatial context for image restoration and virtual staining while maintaining computational efficiency. After image restoration and virtual staining, the processed patches are reassembled into a complete WSI using grid-based stitching with 32-pixel overlapping boundaries to eliminate seam artifacts. Bilinear interpolation is applied to ensure smooth transitions between adjacent patches.

4.1.2 Degradation Simulation for Restoration Tasks

To generate paired training data for our image restoration tasks, we simulate three clinically relevant degradation types through carefully designed transformations of high-quality WSIs. Each degradation method was developed in consultation with pathologists to ensure biological plausibility. We mainly analyze three typical degradation types in pathology images, including low-resolution, blurry and noisy problems, which commonly occur during whole-slide image acquisition due to various factors such as optical limitations, tissue preparation artifacts, and scanning imperfections. Below we detail the specific implementations for each degradation type.

Low Resolution: In whole-slide imaging systems, resampling operations play a critical role in generating multi-resolution pyramids for pathological analysis. Our model incorporates three clinically validated interpolation methods (area-based, bilinear, and bicubic) that reflect the resampling algorithms used in commercial whole-slide scanners. Area-based interpolation best preserves nuclear morphology and intensity distributions, while bilinear maintains smooth tissue transitions and bicubic captures fine chromatin textures, though it may introduce slight edge enhancements. We intentionally exclude nearest-neighbor interpolation due to its tendency to create jagged nuclear borders and artificial discontinuities in tissue architecture that could mimic pathological features. During training, we randomly select among the three approved methods to ensure robustness across different scanner implementations. This approach was validated through consultation with pathologists, who confirmed it successfully maintains three key diagnostic features: nuclear membrane integrity for tumor grading, stromal texture for invasion assessment, and chromatin patterns for molecular characterization. Importantly, the method realistically simulates the multi-resolution acquisition process inherent to digital pathology workflows.

Image Blur: In computational pathology, blur artifacts commonly arise from optical defocus, tissue sectioning imperfections, and scanner vibrations. We model these effects using anisotropic Gaussian kernels that account for the directional variability observed in pathological imaging. The blur kernel is defined as:

| (1) |

where the covariance matrix controls the blur’s directionality; is the spatial coordinates; is the normalization constant. The covariance matrix could be further represented as follows:

| (2) | ||||

This formulation captures both isotropic defocus blur (when ) and anisotropic artifacts from scanner motion or uneven tissue surfaces (when ). The rotation matrix accounts for directional effects commonly seen in whole-slide scanning. We exclude unrealistically sharp kernels that could distort nuclear morphology and other diagnostically important features.

Image Noise: Noise artifacts primarily originate from two distinct physical processes: electronic sensor noise and quantum photon fluctuations. We model these phenomena using a composite noise formulation that accounts for the unique characteristics of pathological imaging. Additive Gaussian noise captures the electronic readout noise inherent in CCD/CMOS sensors, with its intensity modulated by the standard deviation of the normal distribution . This noise component manifests as color noise (when independently sampled across RGB channels), reflecting different scanner architectures.

Poisson noise models the fundamental quantum limitations of photon detectionwhere the variance scales linearly with signal intensity according to the Poisson distribution . This noise source is particularly relevant in high-magnification imaging of weakly stained regionswhere photon counts are inherently limited. The combination of these noise types effectively reproduces the characteristic graininess observed in low-light conditions while maintaining the structural integrity of diagnostically critical features such as nuclear membranes and chromatin patterns. Therefore, the noisy image can be represents as:

| (3) |

where represents the noise-free image intensity at pixel , denotes additive Gaussian noise. models Poisson-distributed quantum noise:

| (4) |

Our implementation preserves the Poisson noise’s signal-dependent nature while avoiding artificial amplification of staining variations, ensuring biologically plausible noise characteristics throughout the dynamic range of pathological specimens.

4.2 Network Architecture

The objective of this work is to develop a unified low-level pathology foundation model for image restoration and virtual staining. To ensure robust interactivity and enable precise control across multiple tasks, our model employs a prompt-based conditioning mechanism, which dynamically guides the generation process toward the desired output modality (e.g., restoration, virtual staining, or coupled degradation reversal).

Our framework employs a two-stage training approach to achieve high-fidelity pathology image generation through progressive refinement. In the first stage, a contrastive learning-based autoencoder learns to extract consistent low-level features and produce coarse restorations guided by task-specific prompts. Building upon this foundation, the second stage trains a denoising diffusion model that takes both the coarse reconstruction and prompt embedding as inputs to generate detailed, high-quality outputs. The diffusion model progressively refines the image through an iterative denoising process, while maintaining anatomical consistency from the coarse input and adhering to task-specific requirements through prompt conditioning. This hierarchical approach allows our unified model to first capture global structural information and then synthesize precise local details, enabling flexible performance across diverse tasks, including image restoration and virtual staining, without requiring architectural modifications. The diffusion model’s conditioning on both the initial reconstruction and textual prompts ensures that the final output not only preserves the input’s structural integrity but also accurately reflects the desired transformation specified by the prompt, whether it involves stain conversion, artifact removal, or resolution enhancement.

During the inference stage, our unified low-level pathology foundation model takes a user-defined prompt and noisy image as input to generate high-quality image through a controlled reverse diffusion process. The pretrained encoder first processes the textual or embedding-based prompt to extract task-specific conditioning signals, while the diffusion model progressively denoises the initial random noise across multiple timesteps. This iterative refinement enables the synthesis of both structurally accurate and task-aligned results - whether restoring low-quality H&E pathology images (prompt: "Obtain high-quality H&E pathology image") or generating specific stains (prompt: "Synthesize H&E staining") - while maintaining tissue-level consistency.

Contrastive Pretrainig for Coarse Restoration

In the pre-training phase, we aim to pretrain a low-level autoencoder that can extract consistent low-level features and produce coarse restorations guided by task-specific prompts. Following LDM[28] and CLIP[45], we pretrain the KL-Autoencoder as the low-level pathology image autoencoder to generate the coarse restorations and directly use the CLIP[45] text encoder to obtain the textual features for guidance.

Given the WSIs, we firstly pre-process the WSIs into 256256 pathology patches as early mentioned in sec. 4.1.1. As shown in Extended Data Fig. 8a, we adopt a unified training paradigm for low-level pathology tasks by leveraging contrastive learning to capture shared feature representations across different staining protocols and image quality levels. This approach enables the model to learn robust latent embeddings that are invariant to degradation types and staining variations in histopathology images. The contrastive loss operates in the latent space, pulling together features from different views (e.g., degraded/restored or differently stained versions) of the same tissue while pushing apart features from different tissue samples:

| (5) |

where and are positive pairs (different views of same tissue), are negative samples (different tissues), is the feature encoder, and is a temperature parameter.

For high-quality reference images in restoration tasks and target-stain images in virtual staining tasks, the KL-autoencoder is optimized to reconstruct the original input through the objective:

| (6) |

where and represent the encoder and decoder respectively. Simultaneously, when processing degraded inputs for restoration or source-stain images for virtual staining, the model is trained to generate enhanced outputs that approximate the target distribution using a composite loss function:

| (7) |

where represent the paired degraded or source-stained pathology images. The reconstruction loss ensures pixel-level accuracy by minimizing absolute differences between generated and target images. However, pixel-wise losses alone often produce blurry results, so we incorporate a perceptual loss (LPIPS) [37] that operates in the feature space of a pretrained VGG network [46]:

| (8) |

where denotes features from layer of a pretrained VGG network, and are spatial dimensions of the feature maps.

To achieve more realistic quality for pathology images, we employ an adversarial loss that aligns the generated image distribution with original pathology images through a discriminator.

| (9) |

where discriminator is trained to distinguish between real () and generated () images. Therefore, the final pretraining loss can be denoted as:

| (10) |

The textual prompts encoded by the CLIP text encoder are injected into the encoder through cross-attention layers, providing explicit guidance to steer the reconstruction toward the desired output. This integrated approach ensures the autoencoder learns semantically meaningful representations while maintaining flexibility across diverse pathology image processing tasks.

Conditional Diffusion for Image Refinement :

Building upon the pretrained autoencoder’s coarse restorations, we implement a conditional diffusion model to recover fine pathological details through noise-to-image synthesis. As shown in Extended Data Fig. 8b, we aim to remove the additive noises in the pathology images with the coarse image generation and textual prompts.

Our framework builds upon diffusion probabilistic models, which learn a target data distribution through iterative denoising of normally distributed variables. These models formulate the generation process as the reverse of a fixed Markov chain spanning timesteps, effectively implementing a sequence of denoising autoencoders . Following the standard controllable diffusion framework[47], we train a U-Net to iteratively denoise corrupted versions of high-quality pathology images. For a target image progressively noised to at timestep , the model is trained to predict and remove noise from corrupted versions of input images , following the reweighted variational lower bound objective:

| (11) |

with t uniformly sampled from .

In our diffusion model development, we adopt a two-phase training strategy to ensure robust noise modeling and precise conditional control. First, we pretrain a standard denoising diffusion model without any conditional inputs, optimizing the baseline objective from Eq. 11. This initial phase establishes fundamental denoising capabilities for pathology images. After convergence, we freeze these parameters and introduce a trainable controllable module that shares the U-Net[48] encoder with the fixed diffusion model. The complete architecture then processes both the coarse reconstruction and textual prompt embedding through and minimize the joint optimization:

| (12) |

where represents the predicted noised inside the image from the diffusion model. It should be noted that all the denoised process could be directly applied in the latent space of the pretrained autoencoder from the first stage. This latent diffusion approach can reduce computational costs as the dimensionality of the latent space is much lower than that of the original image space.

Inference Stage

In the inference stage, our model synthesizes high-quality pathology images through an iterative denoising process that combines coarse image reconstructions and textual prompts in Fig. 9. The generation begins with pure Gaussian noise and progressively refines it over T timesteps using the trained diffusion model conditioned on both the encoded coarse image and the prompt embedding . At each timestep t, the model predicts the noise component using:

| (13) |

and updates the image estimate through:

| (14) |

where defines the noise schedule and for . This process maintains anatomical fidelity through the z-conditioning while allowing precise control over stain characteristics and artifact correction via the prompt c. The complete inference typically converges in 50-100 steps using the DDIM scheduler, producing outputs that balance clinical utility with prompt-specific transformations. Our experiments demonstrate that this approach successfully generates diagnostically valid images while adhering to diverse transformation goals specified through textual prompts.

4.3 Evaluation Metrics

To quantitatively evaluate the performance of different methods in image restoration and virtual staining tasks, we employ three popular metrics: Peak Signal-to-Noise Ratio (PSNR) [35], Structural Similarity Index Measure (SSIM) [36], and Learned Perceptual Image Patch Similarity (LPIPS) [37]. These metrics assess different aspects of image quality, including pixel-level fidelity, structural similarity, and perceptual consistency.

Peak Signal-to-Noise Ratio (PSNR): PSNR measures the pixel-level similarity between the generated image and the ground truth. It is defined as:

| (15) |

where is the maximum possible pixel value (e.g., 255 for 8-bit images), and (Mean Squared Error) computes the average squared difference between the generated and reference images, is the total number of pixels, is the pixel value from the ground truth image, and is the corresponding pixel value from the generated image. A lower MAE indicates better pixel-wise accuracy. A higher PSNR or a lower MAE indicates better pixel-wise reconstruction accuracy. However, these metrics may not fully align with human perception, as they do not account for structural or semantic differences.

Structural Similarity Index Measure (SSIM): SSIM evaluates the structural similarity between two images considering luminance, contrast, and structure.

| (16) |

where , are the local means, , are the standard deviations, , is the cross-covariance, and , are small constants for stability. SSIM ranges from 0 to 1, with higher values indicating better preservation of structural details. Unlike PSNR, SSIM better correlates with human judgment by capturing perceptual quality.

Learned Perceptual Image Patch Similarity (LPIPS): LPIPS measures perceptual similarity using deep features extracted from a pre-trained neural network (e.g., VGG or AlexNet). The metric computes the weighted distance between deep feature representations of the generated and reference images:

| (17) |

where , denote deep features at layer , and are learned weights. A lower LPIPS score indicates better perceptual alignment with human vision, as it captures high-level semantic similarities rather than low-level pixel differences.

To make it clear, a good generated image should have high PSNR, high SSIM, and low LPIPS. High PSNR suggests strong pixel-wise accuracy but does not guarantee visually pleasing results. High SSIM indicates better structural preservation, such as edges and textures. Low LPIPS reflects superior perceptual quality, meaning the generated image is more realistic to human observers. By combining these metrics, we comprehensively assess the performance of different methods in terms of pixel fidelity, structural consistency, and perceptual quality.

4.4 Compared Methods

To thoroughly evaluate the performance of our proposed LPFM framework, we conduct extensive comparisons with eight state-of-the-art methods that represent the current spectrum of approaches in computational pathology image enhancement. These baseline methods encompass three major architectural paradigms: (1) generative adversarial networks (CycleGAN, Pix2Pix, BSRGAN, RegGAN), (2) transformer-based models (SwinIR), and (3) diffusion models (LDM, HistoDiff), along with (4) specialized hierarchical networks (HER2). This diverse selection enables us to assess LPFM’s advantages across different architectural designs and application scenarios, from general image-to-image translation to pathology-specific enhancement tasks. Each comparator was carefully selected based on its demonstrated effectiveness in either medical image analysis or general computer vision tasks with potential pathology applications.

CycleGAN [49]: This pioneering unpaired image-to-image translation framework employs cycle-consistent adversarial networks to learn bidirectional mappings between domains without requiring aligned training pairs. Its ability to handle unpaired data makes it particularly valuable for virtual staining applications where precisely registered stain pairs are often unavailable. The model consists of two generators (, ) and corresponding discriminators trained with adversarial and cycle-consistency losses.

Pix2Pix [26]: As a seminal conditional GAN architecture, Pix2Pix establishes the foundation for supervised pixel-to-pixel translation tasks. The model combines a U-Net generator with a PatchGAN discriminator, trained using both adversarial and L1 reconstruction losses. When paired training data is available (e.g., registered H&E-to-IHC stain pairs), Pix2Pix serves as a strong baseline for both virtual staining and restoration tasks, though it requires precise image alignment.

BSRGAN [50]: This blind super-resolution network introduces a comprehensive degradation model that simulates real-world imaging artifacts including blur, noise, and compression. The architecture combines a U-shaped network with residual dense blocks and channel attention mechanisms. BSRGAN’s ability to handle unknown and complex degradation patterns makes it particularly suitable for restoring historical pathology slides with varying quality issues.

SwinIR [11]: Representing the transformer-based paradigm, SwinIR leverages shifted window attention mechanisms within a hierarchical architecture for image restoration. The model processes images through shallow feature extraction, deep feature transformation using Swin Transformer blocks, and high-quality reconstruction modules. SwinIR demonstrates particular effectiveness in super-resolution and denoising tasks due to its ability to capture long-range dependencies in tissue structures.

LDM [28]: This latent diffusion model operates in a compressed perceptual space to achieve efficient high-resolution image generation. LDM combines an autoencoder for latent space projection with a diffusion process that gradually denoises images conditioned on task-specific inputs. The model’s memory efficiency enables processing of whole-slide images at high resolutions while maintaining computational tractability.

HistoDiff [51]: Specifically designed for histopathology, this diffusion model incorporates tissue-specific priors through a morphology-aware attention mechanism. The architecture integrates a pre-trained nuclei segmentation network to guide the diffusion process, ensuring preservation of diagnostically critical cellular features during enhancement. HistoDiff demonstrates particular strengths in stain normalization and artifact correction tasks.

HER2 [52]: This hierarchical enhancement network processes pathology images through parallel pathways at multiple magnification levels (5, 10, 20). The architecture employs cross-scale feature fusion and attention-guided skip connections to maintain consistency across scales. HER2 has shown excellent performance in virtual IHC staining tasks by explicitly modeling tissue structures at different resolution levels.

RegGAN [53]: This registration-enhanced GAN introduces spatial alignment constraints during training through a differentiable STN module. The model jointly optimizes for image translation quality and morphological consistency by incorporating landmark-based registration losses. RegGAN demonstrates superior performance in applications requiring precise spatial correspondence, such as sequential staining prediction and multi-modal image harmonization.

4.5 Datasets

Pretraining datasets. In this study, we curate the datasets including 87,810 whole-slide images (WSIs) and 190 million patches from 37 public datasets. Our unified low-level pathology foundation model is pretrained on all the datasets excluding MIDOG2022 [54], TIGER2021 [55], and OCELOT [56] which are reserved for external validation. The pretraining corpus comprises large-scale multi-organ cohorts including TCGA [57] (30,159 slides), GTEx [58] (25,711 slides), and CPTAC [59] (7,255 slides), alongside organ-specific references such as PANDA [39] (prostate, 10,616 slides), CAMELYON16/17 [4, 60] (breast, 1,397 slides combined), and BRACS [60] (breast tumor subtypes, 547 slides). Rare morphologies are represented by AML-Cytomorphology_LMU [61] (blood, 18K patches) and Osteosarcoma_Tumor [62] (bone, 1,144 patches), while stain diversity is ensured through HEMIT [44] (H&E/mIHC pairs), AF2HE [43](translate autofluorescence to H&E stain), and PASAB (translate H&E stain to PAS-AB stain). Artifact robustness derives from PAIP2019/2020 [63, 40] (liver/colon artifacts) and Post-NAT-BRCA [64] (post-treatment breast tissue), with stain translation priors learned from ACROBAT2023 [65] (H&E/IHC breast) and SPIE2019 [66] (multi-stain patches). This curated diversity enables our two-stage framework to first extract universal features from TCGA’s 120M patches and GTEx’s 31M patches, then refine them via targeted datasets like DiagSet [67] (prostate microstructures) and BCNB [68] (breast tumor margins), ultimately supporting prompt-guided adaptation across restoration and virtual staining tasks. More informations about the datasets are presented in Extended Data Tab. 30.

CAMELYON16 Dataset. The CAMELYON16[4] dataset comprises 270 hematoxylin and eosin (H&E) stained whole slide images (WSIs) of lymph node sections with pixel-level annotations for breast cancer metastases, containing a total of 400 annotated tumor regions. The WSIs were scanned at 40 magnification (0.25 um/pixel resolution) using Philips and Hamamatsu scanners, providing diversity in imaging characteristics. The dataset is particularly valuable for studying micro- and macro-metastases detection, with tumor regions ranging from 0.2 mm to over 5 cm in diameter. For our experiments, the WSIs were processed into 1,706,890 non-overlapping 256 256 patches, divided into training (1,194,823 patches), validation (170,689 patches), and test (341,378 patches) sets.

PAIP2020 Dataset. The PAIP2020[40] challenge dataset provides 50 H&E stained WSIs of liver resection specimens from hepatocellular carcinoma patients, including 30 training cases and 20 test cases. Scanned at 20 magnification (0.5 um/pixel) using Leica Aperio AT2 scanners, these images include detailed annotations for 15,742 viable tumor regions, 8,916 necrotic areas, and extensive non-tumor liver parenchyma. After processing, we obtained 892,450 patches (624,715 training, 89,245 validation, and 178,490 test) of size 256256 pixels. The dataset exhibits diverse tumor morphologies including trabecular, pseudoglandular, and compact growth patterns, making it particularly suitable for studying hepatic histopathology.

PANDA Dataset. As the largest publicly available prostate cancer dataset, PANDA[39] contains approximately 11,000 prostate biopsy WSIs (10,616 with Gleason grades) from the Radboud University Medical Center and Karolinska Institute. Scanned using three different scanner models (Hamamatsu, Philips, and Leica) at 20 magnification, the dataset covers the full spectrum of Gleason patterns (3-5) with expert-annotated Gleason scores. For our study, we utilized 8,492 WSIs (5,944 training, 1,699 validation, and 849 test), which were processed into 4.2 million 256256 patches (2.94M training, 840K validation, 420K test). The inclusion of multiple scanning systems makes this dataset valuable for studying scanner-invariant feature learning.

MIDOG2022 Dataset. The MIDOG2022[54] challenge dataset consists of 200 breast cancer WSIs (160 training, 40 test) from four different scanners (Hamamatsu, Roche, Leica, and Philips) with 5,712 annotated mitotic figures. The images were acquired at 40 magnification with 0.25 um/pixel resolution. After processing, we obtained 423,580 patches (296,506 training, 42,358 validation, 84,716 test) of size 256256 pixels. This dataset is uniquely designed to address domain shift challenges in digital pathology, containing carefully matched cases across scanners while maintaining consistent staining protocols.

TIGER2021 Dataset. The TIGER2021[55] dataset includes 500 WSIs (400 training, 100 test) of H&E stained prostatectomy specimens, containing 2.3 million annotated tumor cells and 1.8 million annotated non-tumor cells. The images were scanned at 40 magnification (0.25 um/pixel) using Philips Ultra Fast Scanners. For our experiments, we processed these into 1,125,400 patches (787,780 training, 112,540 validation, 225,080 test) of size 256256 pixels. The dataset provides comprehensive annotations for Gleason patterns 3-5 across multiple tissue cores.

OCELOT Dataset. The OCELOT[56] dataset contains 394 WSIs (315 training, 79 test) of H&E stained tissue sections from multiple cancer types (208 lung, 106 pancreas, 80 cervix) with focus on tumor microenvironment analysis. The images were acquired at 20 magnification (0.5 um/pixel) using Hamamatsu NanoZoomer scanners, yielding 936,250 processed patches (655,375 training, 93,625 validation, 187,250 test) of size 256256 pixels. Unique features include detailed annotations for 42,368 tumor regions, 38,915 stroma regions, and 15,742 lymphocyte clusters, as well as corresponding immunohistochemistry (IHC) slides for 126 selected cases.

AF2HE Dataset. The AF2HE [43] dataset comprises 15 samples of breast and liver cancer tissues. The samples were first imaged as whole slide images (WSI) using the autofluorescence (AF) technique without any chemical staining. The same slide was then stained with H&E to capture stained images. The WSIs were roughly aligned, and cropped into 128 128 patches and divided into a training set of 50,447 pairs and a test set of 4,422 pairs.

HE2PAS Dataset. This dataset was collected from the Prince of Wales Hospital in Hong Kong and comprises of 10,727 H&E and PAS-AB pairs for training and 1,191 pairs for testing. The image size is 128 128. Additionally, we collected another 2841 patches sampled from high-risk slides as the external validation.

HEMIT Dataset. The dataset utilized in this study was curated by Bian et al [44], and comprises cellular-wise registered pairs of H&E and multiplex immunohistochemistry (mIHC) images, sourced from the ImmunoAlzer project. We employed the official train-validation-test split (3717:630:945) across all methods. To mitigate computational complexity, we resize the image to scale of 512 512 from the original images. The three channels of the mIHC images correspond to 4’,6-diamidino-2-phenylindole (DAPI, red channel), Pan Cytokeratin (panCK, green channel), and cluster of differentiation 3 (CD3, blue), respectively

4.6 Code Availability

The code will be available on Github(https://github.com/ziniBRC/LPFM).

4.7 Ethics Declarations

This project has been reviewed and approved by the Human and Artefacts Research Ethics Committee (HAREC) of Hong Kong University of Science and Technology. The protocol number is HREP-2024-0429.

4.8 Author Contribution

Z.L. and H.C. conceived and designed the work. Z.L., Z.X., J.M. and W.L. curated the data included in the paper. Z.X. and Z.L. contributed to the technical implementation of the LPFM framework and performed the experimental evaluations. Z.L, J.H., F.H., and X.W. wrote the manuscript with inputs from all authors. R.C.K.C. supplied data and offered medical guidance. T.T.W.W. provided autofluorescence data. All authors reviewed and approved the final paper. H.C. supervised the research.

4.9 Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 62202403), Innovation and Technology Commission (Project No. MHP/002/22 and ITCPD/17-9), Research Grants Council of the Hong Kong Special Administrative Region, China (Project No: T45-401/22-N) and National Key R&D Program of China (Project No. 2023YFE0204000).

References

- [1] Ma, J., Chan, R., Wang, J., Fei, P. & Chen, H. A generalizable pathology foundation model using a unified knowledge distillation pretraining framework. \JournalTitleNature Biomedical Engineering (2025).

- [2] Chen, R. J. et al. Towards a general-purpose foundation model for computational pathology. \JournalTitleNature Medicine 30, 850–862 (2024).

- [3] Song, A. H. et al. Artificial intelligence for digital and computational pathology. \JournalTitleNature Reviews Bioengineering 1, 930–949 (2023).

- [4] Bejnordi, B. E. et al. Diagnostic assessment of deep learning algorithms for detection of lymph node metastases in women with breast cancer. \JournalTitleJama 318, 2199–2210 (2017).

- [5] Ma, J., Chan, R. & Chen, H. Pathbench: A comprehensive comparison benchmark for pathology foundation models towards precision oncology. \JournalTitlearXiv:2505.20202 (2025).

- [6] Lai, B., Fu, J. et al. Artificial intelligence in cancer pathology: Challenge to meet increasing demands of precision medicine. \JournalTitleInternational Journal of Oncology 63, 1–30 (2023).

- [7] Xu, Y. et al. A multimodal knowledge-enhanced whole-slide pathology foundation model. \JournalTitlearXiv preprint arXiv:2407.15362 (2024).

- [8] Srinidhi, C. L., Ciga, O. & Martel, A. L. Deep neural network models for computational histopathology: A survey. \JournalTitleMedical image analysis 67, 101813 (2021).

- [9] Yan, F., Chen, H., Zhang, S., Wang, Z. et al. Pathorchestra: A comprehensive foundation model for computational pathology with over 100 diverse clinical-grade tasks. \JournalTitlearXiv:2503.24345 (2025).

- [10] Zhuang, J. et al. Mim: Mask in mask self-supervised pre-training for 3d medical image analysis. \JournalTitleIEEE Transactions on Medical Imaging (2025).

- [11] Liang, J. et al. Swinir: Image restoration using swin transformer. In Proceedings of the IEEE/CVF international conference on computer vision, 1833–1844 (2021).

- [12] Jin, C., Chen, H. et al. Hmil: Hierarchical multi-instance learning for fine-grained whole slide image classification. \JournalTitleIEEE Transactions on Medical Imaging 44, 1796–1808 (2025).

- [13] Echle, A. et al. Deep learning in cancer pathology: a new generation of clinical biomarkers. \JournalTitleBritish journal of cancer 124, 686–696 (2021).

- [14] Wang, H. et al. Rethinking multiple instance learning for whole slide image classification: A bag-level classifier is a good instance-level teacher. \JournalTitleIEEE Transactions on Medical Imaging 43, 3964–3976 (2024).

- [15] Xiong, C., Chen, H., King, I. et al. Takt: Target-aware knowledge transfer for whole slide image classification. In International Conference on Medical Image Computing and Computer-Assisted Intervention (2024).

- [16] Zhang, K., Zuo, W., Chen, Y., Meng, D. & Zhang, L. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. \JournalTitleIEEE transactions on image processing 26, 3142–3155 (2017).

- [17] Chen, L. et al. Next token prediction towards multimodal intelligence: A comprehensive survey. \JournalTitlearXiv preprint arXiv:2412.18619 (2024).

- [18] Bulten, W. et al. Automated deep-learning system for gleason grading of prostate cancer using biopsies: a diagnostic study. \JournalTitleThe Lancet Oncology 21, 233–241 (2020).

- [19] Tolkach, Y., Dohmgörgen, T., Toma, M. & Kristiansen, G. High-accuracy prostate cancer pathology using deep learning. \JournalTitleNature Machine Intelligence 2, 411–418 (2020).

- [20] Siemion, K. et al. What do we know about inflammatory myofibroblastic tumors?–a systematic review. \JournalTitleAdvances in Medical Sciences 67, 129–138 (2022).

- [21] Coudray, N. et al. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. \JournalTitleNature medicine 24, 1559–1567 (2018).

- [22] Wang, J., Yue, Z., Zhou, S., Chan, K. C. & Loy, C. C. Exploiting diffusion prior for real-world image super-resolution. \JournalTitleInternational Journal of Computer Vision 132, 5929–5949 (2024).

- [23] Krithiga, R. & Geetha, P. Breast cancer detection, segmentation and classification on histopathology images analysis: a systematic review. \JournalTitleArchives of Computational Methods in Engineering 28, 2607–2619 (2021).

- [24] Xia, B. et al. Diffir: Efficient diffusion model for image restoration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 13095–13105 (2023).

- [25] Guo, Z., Chen, H. et al. Focus: Knowledge-enhanced adaptive visual compression for few-shot whole slide image classification. In Proceedings of the Computer Vision and Pattern Recognition Conference (2025).

- [26] Isola, P., Zhu, J.-Y., Zhou, T. & Efros, A. A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, 1125–1134 (2017).

- [27] Xie, S. et al. Towards unifying understanding and generation in the era of vision foundation models: A survey from the autoregression perspective. \JournalTitlearXiv preprint arXiv:2410.22217 (2024).

- [28] Rombach, R., Blattmann, A., Lorenz, D., Esser, P. & Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 10684–10695 (2022).

- [29] Qu, L. et al. Tokenflow: Unified image tokenizer for multimodal understanding and generation. \JournalTitlearXiv preprint arXiv:2412.03069 (2024).

- [30] Liang, M. et al. Multi-scale self-attention generative adversarial network for pathology image restoration. \JournalTitleThe Visual Computer 39, 4305–4321 (2023).

- [31] Xiong, J. et al. Autoregressive models in vision: A survey. \JournalTitlearXiv preprint arXiv:2411.05902 (2024).

- [32] Li, T., Tian, Y., Li, H., Deng, M. & He, K. Autoregressive image generation without vector quantization. \JournalTitleAdvances in Neural Information Processing Systems 37, 56424–56445 (2025).

- [33] Han, J. et al. Infinity: Scaling bitwise autoregressive modeling for high-resolution image synthesis. \JournalTitlearXiv preprint arXiv:2412.04431 (2024).

- [34] Fan, L. et al. Fluid: Scaling autoregressive text-to-image generative models with continuous tokens. \JournalTitlearXiv preprint arXiv:2410.13863 (2024).

- [35] Huynh-Thu, Q. & Ghanbari, M. Scope of validity of psnr in image/video quality assessment. \JournalTitleElectronics letters 44, 800–801 (2008).

- [36] Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: from error visibility to structural similarity. \JournalTitleIEEE transactions on image processing 13, 600–612 (2004).

- [37] Johnson, J., Alahi, A. & Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part II 14, 694–711 (Springer, 2016).

- [38] Litjens, G. et al. 1399 h&e-stained sentinel lymph node sections of breast cancer patients: the camelyon dataset. \JournalTitleGigaScience 7, giy065 (2018).

- [39] Bulten, W. et al. Artificial intelligence for diagnosis and gleason grading of prostate cancer: the panda challenge. \JournalTitleNature medicine 28, 154–163 (2022).

- [40] Kim, K. et al. Paip 2020: Microsatellite instability prediction in colorectal cancer. \JournalTitleMedical Image Analysis 89, 102886 (2023).

- [41] Ryu, J. et al. Ocelot: Overlapped cell on tissue dataset for histopathology. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 23902–23912 (2023).

- [42] Benesty, J., Chen, J., Huang, Y. & Cohen, I. Pearson correlation coefficient. In Noise reduction in speech processing, 1–4 (Springer, 2009).

- [43] Dai, W., Wong, I. H. & Wong, T. T. A weakly supervised deep generative model for complex image restoration and style transformation. \JournalTitleAuthorea Preprints (2022).

- [44] Bian, C. et al. Immunoaizer: A deep learning-based computational framework to characterize cell distribution and gene mutation in tumor microenvironment. \JournalTitleCancers 13, 1659 (2021).

- [45] Radford, A. et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, 8748–8763 (PmLR, 2021).

- [46] Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. \JournalTitlearXiv preprint arXiv:1409.1556 (2014).

- [47] Zhang, L., Rao, A. & Agrawala, M. Adding conditional control to text-to-image diffusion models.

- [48] Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention, 234–241 (Springer, 2015).

- [49] Zhu, J.-Y., Park, T., Isola, P. & Efros, A. A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Computer Vision (ICCV), 2017 IEEE International Conference on (2017).

- [50] Zhang, K., Liang, J., Van Gool, L. & Timofte, R. Designing a practical degradation model for deep blind image super-resolution. In IEEE International Conference on Computer Vision, 4791–4800 (2021).

- [51] Xu, X., Kapse, S. & Prasanna, P. Histo-diffusion: A diffusion super-resolution method for digital pathology with comprehensive quality assessment. \JournalTitlearXiv preprint arXiv:2408.15218 (2024).

- [52] DoanNgan, B., Angus, D., Sung, L. et al. Label-free virtual her2 immunohistochemical staining of breast tissue using deep learning. \JournalTitleBME frontiers (2022).

- [53] Rong, R. et al. Enhanced pathology image quality with restore–generative adversarial network. \JournalTitleThe American Journal of Pathology 193, 404–416 (2023).

- [54] Aubreville, M. et al. Domain generalization across tumor types, laboratories, and species—insights from the 2022 edition of the mitosis domain generalization challenge. \JournalTitleMedical Image Analysis 94, 103155 (2024).

- [55] Shephard, A. et al. Tiager: Tumor-infiltrating lymphocyte scoring in breast cancer for the tiger challenge. \JournalTitlearXiv preprint arXiv:2206.11943 (2022).

- [56] Ryu, J. et al. Ocelot: Overlapped cell on tissue dataset for histopathology. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 23902–23912 (2023).

- [57] Weinstein, J. N. et al. The cancer genome atlas pan-cancer analysis project. \JournalTitleNature genetics 45, 1113–1120 (2013).

- [58] Carithers, L. J. et al. A novel approach to high-quality postmortem tissue procurement: the gtex project. \JournalTitleBiopreservation and biobanking 13, 311–319 (2015).

- [59] Edwards, N. J. et al. The cptac data portal: a resource for cancer proteomics research. \JournalTitleJournal of proteome research 14, 2707–2713 (2015).

- [60] Bandi, P. et al. From detection of individual metastases to classification of lymph node status at the patient level: the camelyon17 challenge. \JournalTitleIEEE transactions on medical imaging 38, 550–560 (2018).

- [61] Matek, S. S. M. C., C. & Spiekermann, K. A single-cell morphological dataset of leukocytes from aml patients and non-malignant controls. \JournalTitleThe Cancer Imaging Archive (2019).

- [62] Leavey, P. et al. Osteosarcoma data from ut southwestern/ut dallas for viable and necrotic tumor assessment [data set]. \JournalTitleCancer Imaging Arch 14 (2019).

- [63] Kim, Y. J. et al. Paip 2019: Liver cancer segmentation challenge. \JournalTitleMedical image analysis 67, 101854 (2021).

- [64] Tafavvoghi, M., Bongo, L. A., Shvetsov, N., Busund, L.-T. R. & Møllersen, K. Publicly available datasets of breast histopathology h&e whole-slide images: a scoping review. \JournalTitleJournal of Pathology Informatics 15, 100363 (2024).