A universal detector of CNN-generated images using properties of checkerboard artifacts in the frequency domain

Abstract

We propose a novel universal detector for detecting images generated by using CNNs. In this paper, properties of checkerboard artifacts in CNN-generated images are considered, and the spectrum of images is enhanced in accordance with the properties. Next, a classifier is trained by using the enhanced spectrums to judge a query image to be a CNN-generated ones or not. In addition, an ensemble of the proposed detector with emphasized spectrums and a conventional detector is proposed to improve the performance of these methods. In an experiment, the proposed ensemble is demonstrated to outperform a state-of-the-art method under some conditions.

Index Terms:

GAN, CNN, checkerboard artifact, fake image,I Introduction

Convolutional neural networks (CNNs) have led to major breakthroughs in a wide range of applications. In contrast, they have generated new concerns and problems. Recent rapid advances in deep image synthesis techniques, such as generative adversarial networks (GANs) have easily generated fake images, so detecting manipulated images has become an urgent issue[1, 2].

To overcome this issues, we propose a universal detector of images generated by using CNNs in this paper. Recently, CNN-generated images were investigated to include a trace of checkerboard artifacts [3, 4, 5, 6], although the trance is weak in general. We focus on a trace of checkerboard artifacts in the frequency domain to detect CNN-generated images.

In the proposed method, the spectrum of images is enhanced in accordance with properties of checkerboard artifacts, and a classifier is trained by using the enhanced spectrums. In addition, an ensemble of the trained detector and a state-of-the-art detector[7] is proposed for improving the performance of the detectors. In an experiment, the proposed ensemble is demonstrated to outperform the state-of-the-art one under the use of 11 models.

II Related work

II-A Generator model

A variety of generator models have been proposed for image generation and image-to-image translation. Models based on variational autoencoder (VAE) or GAN are typical image generator models[8, 9]. Autoencoders including VAE translate an image into latent variable by using an encoder, and generate an image from by using a decoder. Since has the standard normal distribution, VAE can generate images from a noise having the standard normal distribution by the decoder. Deepfakes[10] with VAE become a major threat to the international community.

GAN models estimate a generative model and a discriminative model via an adversarial process. PGGAN[11], BigGAN[12], StyleGAN[13] and StyleGAN2[14] generate high resolution images from a random noise vector. In addition, CycleGAN[15] and StarGAN[16] translate an image from a source domain to a target domain, e.g. changing apples to oranges. GauGAN[17] were proposed to generate an image from an input semantic layout.

II-B Detecting CNN-generated images

The first approach for detecting CNN-generated images was inspired by photo-response non-uniformity noise (PRNU) that was used for discriminating camera devices[18, 19]. This approach enables us to discriminate GAN-generated images from fingerprints caused by GAN, and assume that the same GAN models are used for training and testing images.

To universally detect CNN-generated images even when images are not ones generated from a model used for training the detector, a universal detector was proposed, where it was trained by using images generated ony from AutoGAN[20]. In this work[20], the use of the frequency domain was demonstrated to improve the performance of the detector. In contrast, a universal detector by training only one specific GAN (PGGAN) was proposed[7], where RGB images are directly used for training a detector. This method was shown to detect not only PGGAN but also other generator models that were not used for training the detector.

III Proposed detector

III-A Detector with enhanced spectrum

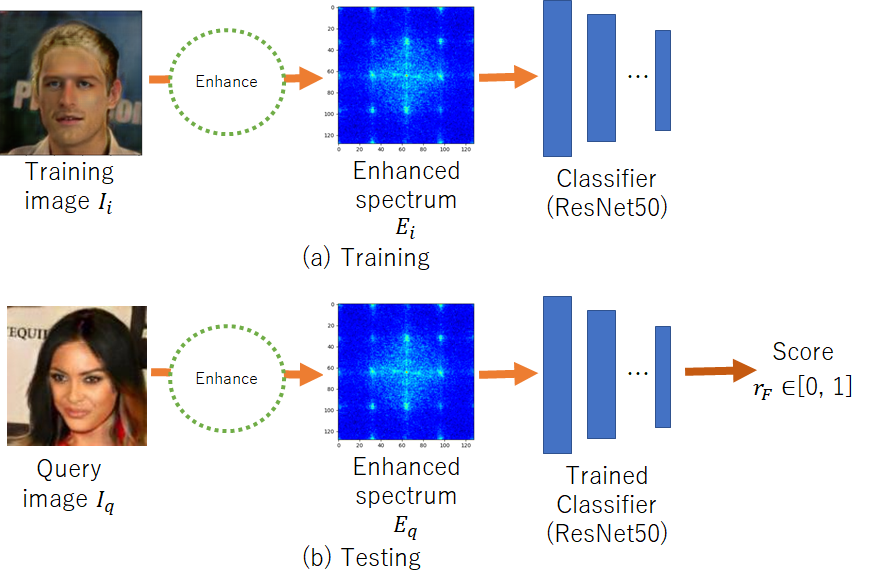

Figure 1 shows an overview of the proposed detector with enhanced spectrums. For training a classifier, a novel enhancement method for clearly showing checkerboard artifacts included in CNN-generated images is applied to training image . Enhanced spectrum calculated from are used for training the classifier. For testing, a query image is enhanced as well as for training, the spectrum calculated from is inputted to the trained classifier to judge it to be a CNN-generated one or not in accordance with an outputted probability score .

Enhanced spectrum is calculated as below.

-

1.

A median filter with a size of is appliled to , and the difference between and , is calculated, where is an output image from the median filter.

-

2.

Cropping into rectangles with a size of at random positions to generate images with a size of , .

-

3.

Applying -DFT to to obtain their spectrums .

-

4.

Computing enhanced spectrum as.

| (1) |

Similarly, enhanced spectrum is calculated by using query (see Fig.2).

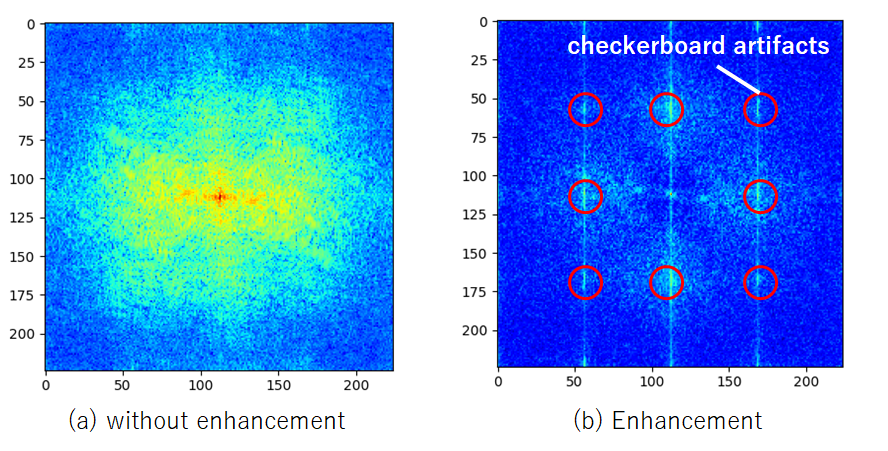

From properties of checkerboard artifacts, CNN-generated images are confirmed to have the influence of checkerboard artifacts at the same positions in the frequency domain for all cropped images. Accordingly, averaging spectrums as shown in the procedure can enhance the features that CNN-generated images have.

III-B Ensemble of RGB image and enhanced spectrum

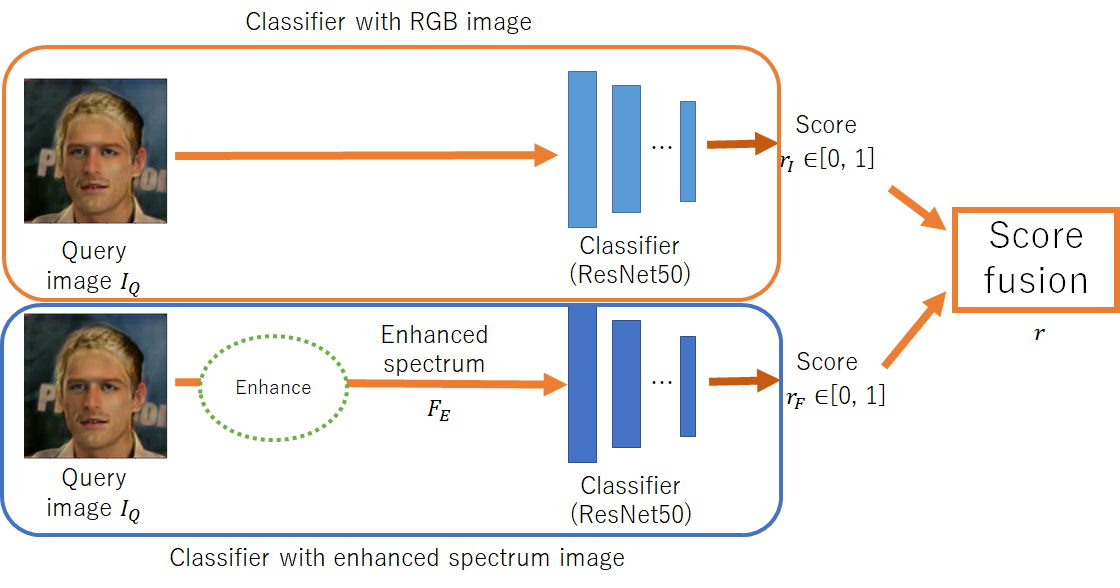

A state-of-the-art method for detecting CNN-generated images [7] is directly carried out with RGB images. In this paper, we also propose an ensemble of this conventional detector and the detector with enhanced spectrums.

An overview of the ensemble is shown in Fig. 3. Final probability score is calculated from and in accordance with Algorithm 1, where and are scores from the detector with enhanced spectrums and the detector with RGB images, respectively. Each detector has their own strengths and weaknesses, so this ensemble is expected to improve the performance of each detector.

IV Experiment results

IV-A Experiment setup

In this experiment, the dataset prepared by Wang et al. [7] was used, where the training dataset consist of 720K images generated by using PGGAN [11], and test datasets consisted of 4K images in which 2K images are generated by using 11 models as CNN-generated images, and the others are images captured by cameras.

The performance of detectors was evaluated by using F-score and average precision (AP). F-score is given by,

| (2) |

where and are the precision and recall at a selected threshold , and was selected for this evaluation. AP is also computed by summarizing a precision-recall curve as the weighted mean of precisions achieved at each threshold:

| (3) |

where and are the precision and recall at the threshold, and an input image is judged to be a CNN-generated image, when the probability score of the input image is higher than .

IV-B Experiment results

Table I and II show AP and F-score values under the use of test images from each model. Table I shows the proposed method (single) outperformed Wang’s method under a number of models that had similar network structures to PGGAN. In addition, the proposed method (ensemble) outperformed Wang’s method for almost all models. Wang’s method and the proposed method (single) have their own strengths and weaknesses for detecting CNN-generated images, respectively. In contrast, the ensemble enables us to adopt only strong points of each method. Table II also shows mean values calculated from AP values of 11 models. From the table, the proposed detector with enhanced spectrums was confirmed to improve the accuracy of Wang’s method, although the models were trained only by using images from PGGAN. The difference between Table I and Table II was caused due to the difference in the selection of threshold values.

| Methods | Input image | PGGAN | StyleGAN | StyleGAN2 | BigGAN | CycleGAN | StarGAN | GauGAN | CRN | IMLE | SAN | Deepfake |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Wang’s method[7] | RGB | 1.0000 | 0.6382 | 0.5390 | 0.3154 | 0.7663 | 0.7677 | 0.7406 | 0.8599 | 0.9374 | 0.0000 | 0.0489 |

| Proposed (single) | Spec. | 0.9652 | 0.7704 | 0.6063 | 0.8487 | 0.7446 | 0.6675 | 0.7276 | 0.7672 | 0.6226 | 0.4014 | 0.1585 |

| Proposed (ensemble) | RGB+Spec. | 1.0000 | 0.6839 | 0.5292 | 0.6831 | 0.8180 | 0.7695 | 0.7712 | 0.8976 | 0.9379 | 0.0000 | 0.0369 |

| Methods | Input image | PGGAN | StyleGAN | StyleGAN2 | BigGAN | CycleGAN | StarGAN | GauGAN | CRN | IMLE | SAN | Deepfake | mean |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Wang’s method[7] | RGB | 100.00 | 98.51 | 97.99 | 88.23 | 96.82 | 95.45 | 98.09 | 98.95 | 99.42 | 63.88 | 66.27 | 91.24 |

| Proposed (single) | Spec. | 99.56 | 90.92 | 86.38 | 94.27 | 92.30 | 82.22 | 84.75 | 82.90 | 62.29 | 73.91 | 60.13 | 82.69 |

| Proposed (ensemble) | RGB+Spec. | 100.00 | 98.76 | 98.02 | 94.37 | 98.17 | 95.53 | 98.50 | 99.07 | 99.52 | 69.24 | 66.21 | 92.51 |

V Conclusion

We proposed a universal detector with enhanced spectrums for detecting CNN-generated images. The proposed ensemble was confirmed to outperform the state-of-the-art under the use of 11 models, where the classifier for the detection was trained by using image generated only from one model, PGGAN.

References

- [1] L. Verdoliva, “Media forensics and deepfakes: An overview,” IEEE Journal of Selected Topics in Signal Processing, vol. 14, no. 5, pp. 910–932, 2020.

- [2] M. Tanaka and H. Kiya, “Fake-image detection with robust hashing,” in Proc. of IEEE 3rd Global Conference on Life Sciences and Technologies, 2021, pp. 40–43.

- [3] Y. Sugawara, S. Shiota, and H. Kiya, “Super-resolution using convolutional neural networks without any checkerboard artifacts,” in Proc. of IEEE International Conference on Image Processing, 2018, pp. 66–70.

- [4] Y. Kinoshita and H. Kiya, “Fixed smooth convolutional layer for avoiding checkerboard artifacts in cnns,” in Proc. in IEEE International Conference on Acoustics, Speech and Signal Processing, 2020, pp. 3712–3716.

- [5] Y. Sugawara, S. Shiota, and H. Kiya, “Checkerboard artifacts free convolutional neural networks,” APSIPA Transactions on Signal and Information Processing, vol. 8, p. e9, 2019.

- [6] T. Osakabe, M. Tanaka, Y. Kinoshita, and H. Kiya, “CycleGAN without checkerboard artifacts for counter-forensics of fake-image detection,” in International Workshop on Advanced Imaging Technology (IWAIT) 2021, vol. 11766, International Society for Optics and Photonics. SPIE, 2021, pp. 51 – 55.

- [7] S.-Y. Wang, O. Wang, R. Zhang, A. Owens, and A. A. Efros, “Cnn-generated images are surprisingly easy to spot… for now,” in Proc. of IEEE/CVF Conference on Computer Vision and Pattern Recognition, June 2020.

- [8] I. J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial networks,” arXive preprint arXive:1406.2661, 2014. [Online]. Available: https://arxiv.org/abs/1406.2661

- [9] D. P. Kingma and M. Welling, “Auto-encoding variational bayes,” arXive preprint arXive:1312.6114, 2014. [Online]. Available: https://arxiv.org/abs/1312.6114

- [10] Deepfakes github. [Online]. Available: https://github.com/deepfakes/faceswap

- [11] T. Karras, T. Aila, S. Laine, and J. Lehtinen, “Progressive growing of gans for improved quality, stability, and variation,” arXive preprint arXive:1710.10196, 2018. [Online]. Available: https://arxiv.org/abs/1710.10196

- [12] A. Brock, J. Donahue, and K. Simonyan, “Large scale gan training for high fidelity natural image synthesis,” arXive preprint arXive:1809.11096, 2019. [Online]. Available: https://arxiv.org/abs/1809.11096

- [13] T. Karras, S. Laine, and T. Aila, “A style-based generator architecture for generative adversarial networks,” in Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, June 2019.

- [14] T. Karras, S. Laine, M. Aittala, J. Hellsten, J. Lehtinen, and T. Aila, “Analyzing and improving the image quality of stylegan,” in Proc. of IEEE/CVF Conference on Computer Vision and Pattern Recognition, June 2020.

- [15] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in Proc. of IEEE International Conference on Computer Vision, Oct 2017.

- [16] Y. Choi, M. Choi, M. Kim, J.-W. Ha, S. Kim, and J. Choo, “Stargan: Unified generative adversarial networks for multi-domain image-to-image translation,” in Proc. of IEEE Conference on Computer Vision and Pattern Recognition, June 2018.

- [17] T. Park, M.-Y. Liu, T.-C. Wang, and J.-Y. Zhu, “Semantic image synthesis with spatially-adaptive normalization,” in Proc. of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, June 2019.

- [18] F. Marra, D. Gragnaniello, L. Verdoliva, and G. Poggi, “Do gans leave artificial fingerprints?” in Proc. of IEEE Conference on Multimedia Information Processing and Retrieval), 2019, pp. 506–511.

- [19] N. Yu, L. Davis, and M. Fritz, “Attributing fake images to gans: Learning and analyzing gan fingerprints,” in Proc. of IEEE/CVF International Conference on Computer Vision, 2019, pp. 7555–7565.

- [20] X. Zhang, S. Karaman, and S. Chang, “Detecting and simulating artifacts in gan fake images,” in Proc. of IEEE International Workshop on Information Forensics and Security, 2019, pp. 1–6.