Achieve the Minimum Width of Neural Networks for Universal Approximation

Abstract

The universal approximation property (UAP) of neural networks is fundamental for deep learning, and it is well known that wide neural networks are universal approximators of continuous functions within both the norm and the continuous/uniform norm. However, the exact minimum width, , for the UAP has not been studied thoroughly. Recently, using a decoder-memorizer-encoder scheme, Park et al. (2021) found that for both the -UAP of ReLU networks and the -UAP of ReLU+STEP networks, where are the input and output dimensions, respectively. In this paper, we consider neural networks with an arbitrary set of activation functions. We prove that both -UAP and -UAP for functions on compact domains share a universal lower bound of the minimal width; that is, . In particular, the critical width, , for -UAP can be achieved by leaky-ReLU networks, provided that the input or output dimension is larger than one. Our construction is based on the approximation power of neural ordinary differential equations and the ability to approximate flow maps by neural networks. The nonmonotone or discontinuous activation functions case and the one-dimensional case are also discussed.

1 Introduction

The study of the universal approximation property (UAP) of neural networks is fundamental for deep learning and has a long history. Early studies, such as Cybenkot (1989); Hornik et al. (1989); Leshno et al. (1993), proved that wide neural networks (even shallow ones) are universal approximators for continuous functions within both the norm () and the continuous/uniform norm. Further research, such as Telgarsky (2016), indicated that increasing the depth can improve the expression power of neural networks. If the budget number of the neuron is fixed, the deeper neural networks have better expression power Yarotsky & Zhevnerchuk (2020); Shen et al. (2022). However, this pattern does not hold if the width is below a critical threshold . Lu et al. (2017) first showed that the ReLU networks have the UAP for functions from to if the width is larger than , and the UAP disappears if the width is less than . Further research, Hanin & Sellke (2017); Kidger & Lyons (2020); Park et al. (2021), improved the minimum width bound for ReLU networks. Particularly, Park et al. (2021) revealed that the minimum width is for the UAP of ReLU networks and for the UAP of ReLU+STEP networks, where is a compact domain in .

For general activation functions, the exact minimum width for UAP is less studied. Johnson (2019) consider uniformly continuous activation functions that can be approximated by a sequence of one-to-one functions and give a lower bound for -UAP (means UAP for ). Kidger & Lyons (2020) consider continuous nonpolynomial activation functions and give an upper bound for -UAP. Park et al. (2021) improved the bound for -UAP (means UAP for ) to . A summary of known upper/lower bounds on minimum width for the UAP can be found in Park et al. (2021).

In this paper, we consider neural networks having the UAP with arbitrary activation functions. We give a universal lower bound, , to approximate functions from a compact domain to in the norm or continuous norm. Furthermore, we show that the critical width can be achieved by many neural networks, as listed in Table 1. Surprisingly, the leaky-ReLU networks achieve the critical width for the -UAP provided that the input or output dimension is larger than one. This result relies on a novel construction scheme proposed in this paper based on the approximation power of neural ordinary differential equations (ODEs) and the ability to approximate flow maps by neural networks.

| Functions | Activation | Minimum width | References |

| ReLU | Hanin & Sellke (2017) | ||

| ReLU | Park et al. (2021) | ||

| ReLU | Park et al. (2021) | ||

| ReLU+STEP | Park et al. (2021) | ||

| Conti. nonpoly‡ | Park et al. (2021) | ||

| Arbitrary | Ours (Lemma 1) | ||

| Leaky-ReLU | Ours (Theorem 2) | ||

| Leaky-ReLU+ABS | Ours (Theorem 3) | ||

| Arbitrary | Ours (Lemma 1) | ||

| ReLU+FLOOR | Ours (Lemma 4) | ||

| UOE†+FLOOR | Ours (Corollary 6) | ||

| UOE† | Ours (Theorem 5) | ||

| Continuous nonpolynomial that is continuously differentiable at some with . . | |||

| UOE means the function having universal ordering of extrema, see Definition 7 . | |||

1.1 Contributions

-

1)

Obtained the universal lower bound of width for feed-forward neural networks (FNNs) that have universal approximation properties.

-

2)

Achieved the critical width by leaky-ReLU+ABS networks and UOE+FLOOR networks. (UOE is a continuous function which has universal ordering of extrema. It is introduced to handle -UAP for one-dimensional functions. See Definition 7.)

-

3)

Proposed a novel construction scheme from a differential geometry perspective that could deepen our understanding of UAP through topology theory.

1.2 Related work

To obtain the exact minimum width, one must verify the lower and upper bounds. Generally, the upper bounds are obtained by construction, while the lower bounds are obtained by counterexamples.

Lower bounds. For ReLU networks, Lu et al. (2017) utilized the disadvantage brought by the insufficient size of the dimensions and proved a lower bound for -UAP; Hanin & Sellke (2017) considered the compactness of the level set and proved a lower bound for -UAP. For monotone activation functions or its variants, Johnson (2019) noticed that functions represented by networks with width have unbounded level sets, and Beise & Da Cruz (2020) noticed that such functions on a compact domain take their maximum value on the boundary . These properties allow one to construct counterexamples and give a lower bound for -UAP. For general activation functions, Park et al. (2021) used the volume of simplex in the output space and gave a lower bound for either -UAP or -UAP. Our universal lower bound, , is based on the insufficient size of the dimensions for both the input and output space, which combines the ideas from these references above.

Upper bounds. For ReLU networks, Lu et al. (2017) explicitly constructed a width- network by concatenating a series of blocks so that the whole network can be approximated by scale functions in to any given accuracy. Hanin & Sellke (2017); Hanin (2019) constructed a width- network using the max-min string approach to achieve -UAP for functions on compact domains; Park et al. (2021) proposed an encoder-memorizer-decoder scheme that achieves the optimal bounds of the UAP for . For general activation functions, Kidger & Lyons (2020) proposed a register model construction that gives an upper bound for -UAP. Based on this result, Park et al. (2021) improved the upper bound to for -UAP. In this paper, we adopt the encoder-memorizer-decoder scheme to calculate the universal critical width for -UAP by ReLU+FLOOR activation functions. However, the floor function is discontinuous. For -UAP, we reach the critical width by leaky-ReLU, which is a continuous network using a novel scheme based on the approximation power of neural ODEs.

ResNet and neural ODEs. Although our original aim is the UAP for feed-forward neural networks, our construction is related to the neural ODEs and residual networks (ResNet, He et al. (2016)), which include skipping connections. Many studies, such as E (2017); Lu et al. (2018); Chen et al. (2018), have emphasized that ResNet can be regarded as the Euler discretization of neural ODEs. The approximation power of ResNet and neural ODEs have also been examined by researchers. To list a few, Li et al. (2022) gave a sufficient condition that covers most networks in practice so that the neural ODE/dynamic systems (without extra dimensions) process -UAP for continuous functions, provided that the spatial dimension is larger than one; Ruiz-Balet & Zuazua (2021) obtained similar results focused on the case of one-hidden layer fields. Tabuada & Gharesifard (2020) obtained the -UAP for monotone functions, and for continuous functions it was obtained by adding one extra spatial dimension. Recently, Duan et al. (2022) noticed that the FNN could also be a discretization of neural ODEs, which motivates us to construct networks achieving the critical width by inheriting the approximation power of neural ODEs. For the excluded dimension one, we design an approximation scheme with leaky-ReLU+ABS and UOE activation functions.

1.3 Organization

We formally state the main results and necessary notations in Section 2. The proof ideas are given in Section 3 4, and 5. In Section 3, we consider the case where , which is basic for the high-dimensional cases. The construction is based on the properties of monotone functions. In Section 4, we prove the case where . The construction is based on the approximation power of neural ODEs. In Section 5, we consider the case where and discuss the case of more general activation functions. Finally, we conclude the paper in Section 6. All formal proofs of the results are presented in the Appendix.

2 Main results

In this paper, we consider the standard feed-forward neural network with neurons at each hidden layer. We say that a network with depth is a function with inputs and outputs , which has the following form:

| (1) |

where are bias vectors, are weight matrices, and is the activation function. For the case of multiple activation functions, for instance, and , we call a + network. In this situation, the activation function of each neuron is either or . In this paper, we consider arbitrary activation functions, while the following activation functions are emphasized: ReLU (), leaky-ReLU ( is a fixed positive parameter), ABS (), SIN (), STEP (), FLOOR () and UOE (universal ordering of extrema, which will be defined later).

Lemma 1.

For any compact domain and any finite set of activation functions , the networks with width do not have the UAP for both and .

-UAP and -UAP. The lemma indicates that is a universal lower bound for the UAP in both and . The main result of this paper illustrates that the minimal width can be achieved. We consider the UAP for these two function classes, i.e., -UAP and -UAP, respectively. Note that any compact domain can be covered by a big cubic, the functions on the former can be extended to the latter, and the cubic can be mapped to the unit cubic by a linear function. This allows us to assume to be a (unit) cubic without loss of generality.

2.1 -UAP

Theorem 2.

Let be a compact set; then, for the function class , the minimum width of leaky-ReLU networks having -UAP is exactly .

The theorem indicates that leaky-ReLU networks achieve the critical width , except for the case of . The idea is to consider the case where and let the network width equal . According to the results of Duan et al. (2022), leaky-ReLU networks can approximate the flow map of neural ODEs. Thus, we can use the approximation power of neural ODEs to finish the proof. Li et al. (2022) proved that many neural ODEs could approximate continuous functions in the norm. This is based on the fact that orientation preserving diffeomorphisms can approximate continuous functions Brenier & Gangbo (2003).

The exclusion of dimension one is because of the monotonicity of leaky ReLU. When we add a nonmonotone activation function such as the absolute value function or sine function, the -UAP at dimension one can be achieved.

Theorem 3.

Let be a compact set; then, for the function class , the minimum width of leaky-ReLU+ABS networks having -UAP is exactly .

2.2 -UAP

-UAP is more demanding than -UAP. However, if the activation functions could include discontinuous functions, the same critical width can be achieved. Following the encoder-memory-decoder approach in Park et al. (2021), the step function is replaced by the floor function, and one can obtain the minimal width .

Lemma 4.

Let be a compact set; then, for the function class , the minimum width of ReLU+FLOOR networks having -UAP is exactly .

Since ReLU and FLOOR are monotone functions, the -UAP critical width does not hold for . This seems to be the case even if we add ABS or SIN as an additional activator. However, it is still possible to use the UOE function (Definition 12).

Theorem 5.

The UOE networks with width have -UAP for functions in .

Corollary 6.

Let be a compact set; then, for the continuous function class , the minimum width of UOE+FLOOR networks having -UAP is exactly .

3 Approximation in dimension one ()

In this section, we consider one-dimensional functions and neural networks with a width of one. In this case, the expression of ReLU networks is extremely poor. Therefore, we consider the leaky ReLU activation with a fixed parameter . Note that leaky-ReLU is strictly monotonic, and it was proven by Duan et al. (2022) that any monotone function in can be uniformly approximated by leaky-ReLU networks with width one. This is useful for our construction to approximate nonmonotone functions. Since the composition of monotone functions is also a monotone function, to approximate nonmonotone functions we need to add a nonmonotone activation function.

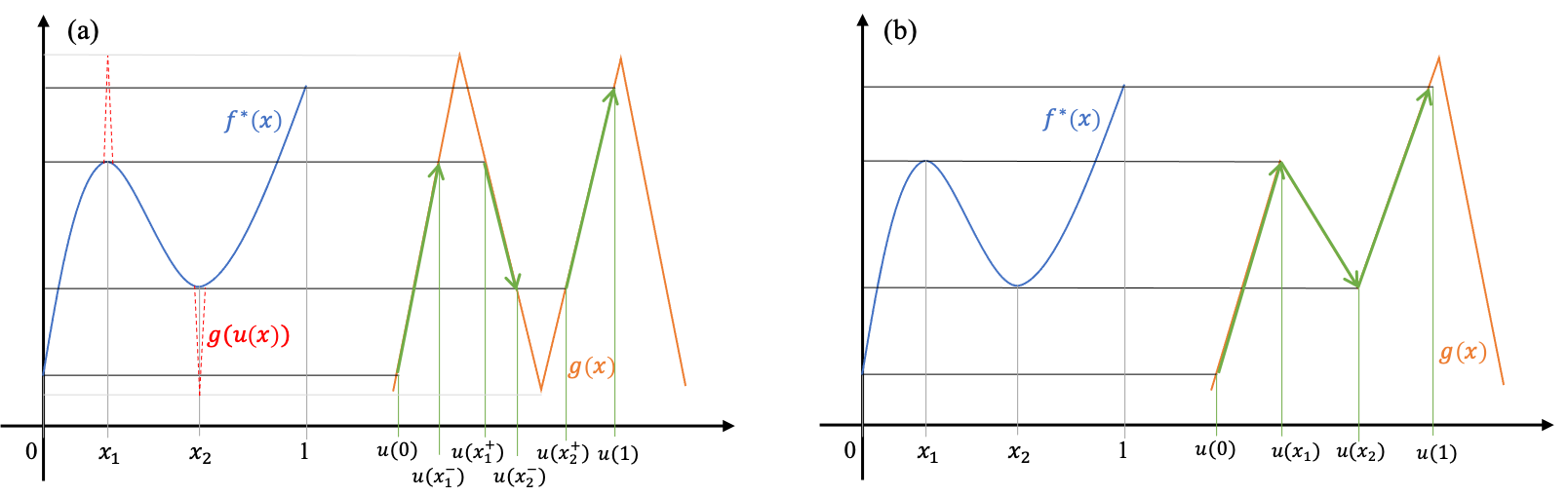

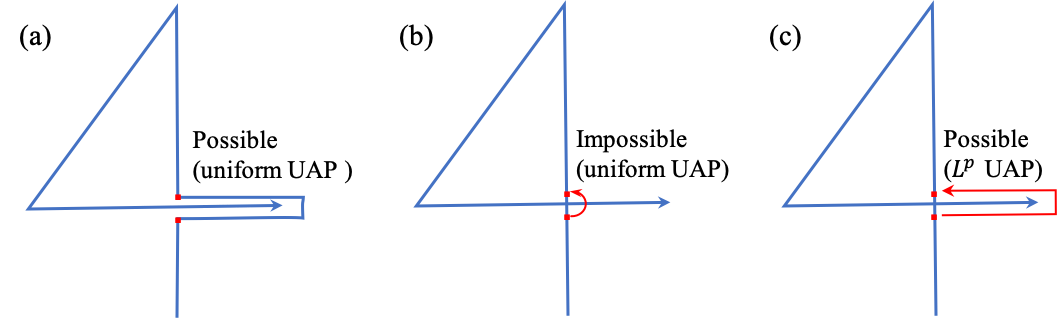

Let us consider simple nonmonotone functions, such as or . We show that leaky-ReLU+ABS or leaky-ReLU+SIN can approximate any continuous function under the norm. The idea, shown in Figure 1, is that the target function can be uniformly approximated by the polynomial , which can be represented as the composition

Here, the outer function is any continuous function whose value at extrema matches the value at extrema of , and the inner function is monotonically increasing, which adjusts the location of the extrema (see Figure 1). Since polynomials have a finite number of extrema, the inner function is piecewise continuous.

For -UAP, the approximation is allowed to have a large deviation on a small interval; therefore, the extrema could not be matched exactly (over a small error). For example, we can choose as the sine function or the sawtooth function (which can be approximated by ABS networks), and is a leaky-ReLU network approximating at each monotone interval of . Figure 1(a) shows an example of the composition.

For -UAP, matching the extrema while keeping the error small is needed. To achieve this aim, we introduce the UOE functions.

Definition 7 (Universal ordering of extrema (UOE) functions).

A UOE function is a continuous function in such that any (finite number of) possible ordering(s) of values at the (finite) extrema can be found in the extrema of the function.

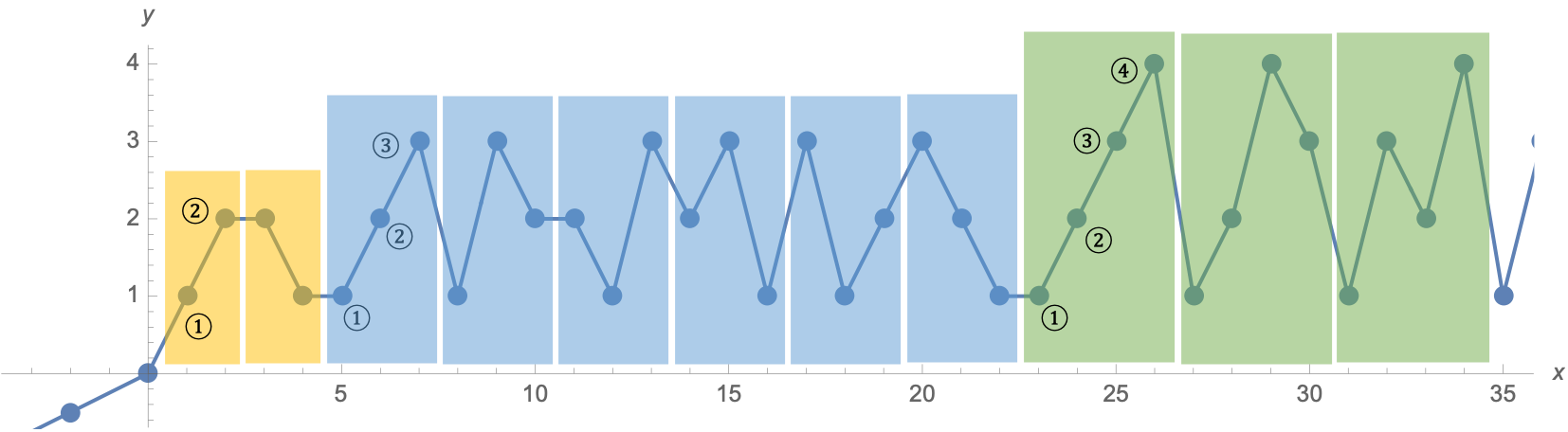

There are an infinite number of UOE functions. Here, we give an example, as shown in Figure 2. This UOE function is defined by a sequence ,

| (4) |

where is the concatenation of all permutations of positive integer numbers. The term UOE in this paper means this function . Since the UOE function can represent leaky-ReLU on any finite interval, this implies that the UOE networks can uniformly approximate any monotone functions.

To illustrate the -UAP of UOE networks, we only need to construct a continuous function matching the extrema of (see Figure 1(b)). That is, construct by the composition , where is a monotone and continuous function. This is possible since the UOE function contains any ordering of the extrema.

The following lemma summarizes the approximation of one-dimensional functions. As a consequence, Theorem 5 holds since functions in can be regarded as one-dimensional functions.

Lemma 8.

For any function and , there is a leaky-ReLU+ABS (or leaky-ReLU+SIN) network with width one and depth such that There is a leaky-ReLU+UOE network with a width of one and a depth of such that

4 Connection to the neural ODEs ()

Now, we turn to the high-dimensional case and connect the feed-forward neural networks to neural ODEs. To build this connection, we assume that the input and output have the same dimension, .

Consider the following neural ODE with one-hidden layer neural fields:

| (5) |

where and the time-dependent parameters are piecewise constant functions of . The flow map is denoted as , which is the function from to . According to the approximation results of neural ODEs (see Li et al. (2022); Tabuada & Gharesifard (2020); Ruiz-Balet & Zuazua (2021) for examples), we have the following lemma.

Lemma 9 (Special case of Li et al. (2022) ).

Let . Then, for any continuous function , any compact set , and any , there exist a time and a piecewise constant input so that the flow-map associated with the neural ODE (5) satisfies:

Next, we consider the approximation of the flow map associated with (5) by neural networks. Recently, Duan et al. (2022) found that leaky-ReLU networks could perform such approximations.

Lemma 10 (Theorem 2.2 in Duan et al. (2022)).

If the parameters in (5) are piecewise constants, then for any compact set and any , there is a leaky-ReLU network with width and depth such that

| (6) |

Combining these two lemmas, one can directly prove the following corollary, which is a part of our Theorem 2.

Corollary 11.

Let be a compact set and ; then, for the function class , the leaky-ReLU networks with width have -UAP.

Here, we summarize the main ideas of this result. Let us start with the discretization of the ODE by the splitting approach (see McLachlan & Quispel (2002) for example). Consider the spliting of (5) with , where is a scalar function and is the -th axis unit vector. Then for a given time step , ( large enough), the splitting method gives the following iteration of which approximates ,

| (7) |

where the map is defined as

| (8) |

Here the superscript in means the -th coordinate of . and take their value at . Note that the scalar functions and are monotone with respect to when is small enough. This allows us to construct leaky-ReLU networks with width to approximate each map and then approximate the flow-map, .

Note that Lemma 10 holds for all dimensions, while Lemma 9 holds for dimensions larger than one. This is because flow maps are orientation-preserving diffeomorphisms, and they can approximate continuous functions only for dimensions larger than one; see Brenier & Gangbo (2003). The approximation is based on control theory where the flow map can be adjusted to match any finite set of input-output pairs. This match does not hold for dimension one. However, the case of dimension one is discussed in the last section.

5 Achieving the minimal width

Now, we turn to the cases where the input and output dimensions cannot be equal.

5.1 Universal lower bound

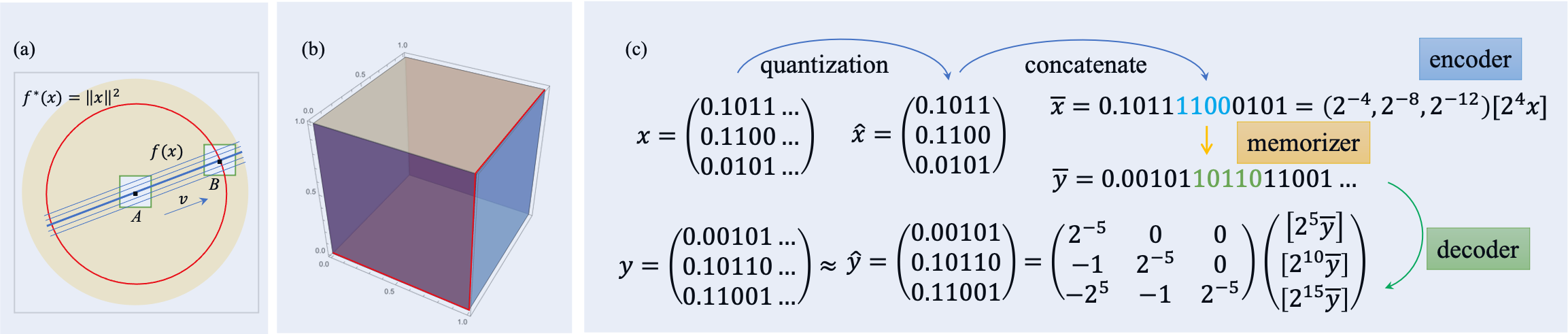

Here, we give a sketch of the proof of Lemma 1, which states that is a universal lower bound over all activation functions. Parts of Lemma 1 have been demonstrated in many papers, such as Park et al. (2021). Here, we give proof by two counterexamples that are simple and easy to understand from the topological perspective. It contains two cases: 1) there is a function that cannot be approximated by networks with width ; 2) there is a function that cannot be approximated by networks with width . Figure 3(a)-(b) shows the counterexamples that illustrate the essence of the proof.

For the first case, , we show that is what we want; see Figure 3(a). In fact, we can relax the networks to a function , where is a transformer from to and could be any function. A consequence is that there exists a direction (set as the vector satisfying , ) such that for all . Then, considering the sets and , we have

Since the volume of is a fixed positive number, the inequality implies that even the approximation for is impossible. The case of the norm and the uniform norm is impossible as well.

For the second case, , we show the example of , which is the parametrized curve from 0 to 1 along the edge of the cubic, see Figure 3(b). Relaxing the networks to a function , could be any function. Since the range of is in a hyperplane while has a positive distance to any hyperplane, the target cannot be approximated.

5.2 Achieving for -UAP

Now, we show that the lower bound for -UAP can be achieved by leaky-ReLU+ABS networks. Without loss of generality, we consider .

5.3 Achieving for -UAP

Here, we use the encoder-memorizer-decoder approach proposed in Park et al. (2021) to achieve the minimum width. Without loss of generality, we consider the function class . The encoder-memorizer-decoder approach includes three parts:

-

1)

an encoder maps to which quantizes each coordinate of by a -bit binary representation and concatenates the quantized coordinates into a single scalar value having a -bit binary representation;

-

2)

a memorizer maps each codeword to its target codeword ;

-

3)

a decoder maps to the quantized target that approximates the true target.

As illustrated in Figure 3(c), using the floor function instead of a step function, one can construct the encoder by FLOOR networks with width and the decoder by FLOOR networks with width . The memorizer is a one-dimensional scalar function that can be approximated by ReLU networks with a width of two or UOE networks with a width of one. Therefore, the minimal widths and are obtained, which demonstrate Lemma 4 and Corollary 6, respectively.

5.4 Effect of the activation functions

Here, we emphasize that our universal bound of the minimal width is optimized over arbitrary activation functions. However, it cannot always be achieved when the activation functions are fixed. Here, we discuss the case of monotone activation functions.

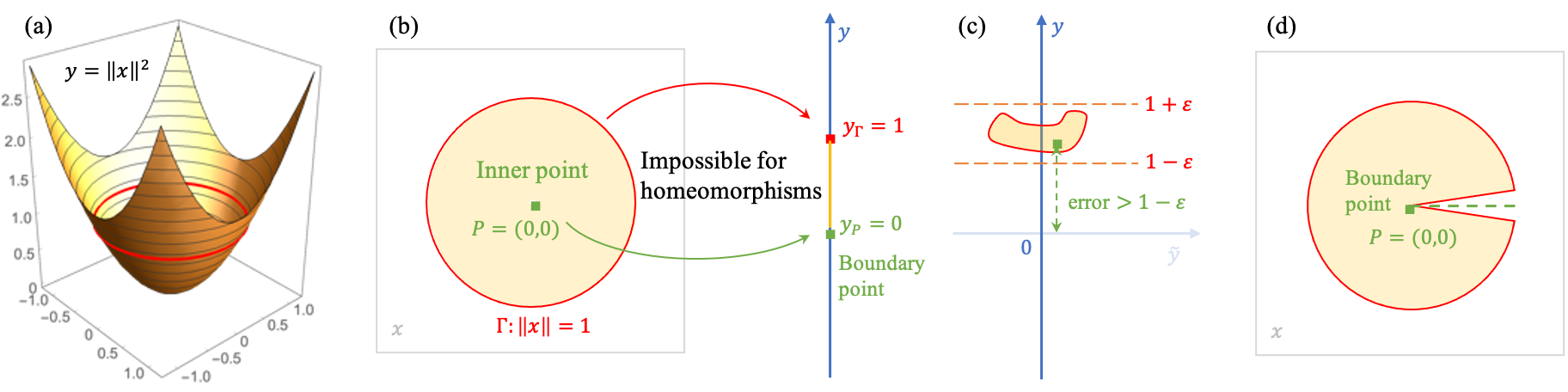

If the activation functions are strictly monotone and continuous (such as leaky-ReLU), a width of at least is needed for -UAP. This can be understood through topology theory. Leaky-ReLU, the nonsingular linear transformer, and its inverse are continuous and homeomorphic. Since compositions of homeomorphisms are also homeomorphisms, we have the following proposition: If and the weight matrix in leaky-ReLU networks are nonsingular, then the input-output map is a homeomorphism. Note that singular matrices can be approximated by nonsingular matrices; therefore, we can restrict the weight matrix in neural networks to the nonsingular case.

When , we can reformulate the leaky-ReLU network as , where is the homeomorphism. Note that considering the case where is sufficient, according to Hanin & Sellke (2017); Johnson (2019). They proved that the neural network width cannot approximate any scalar function with a level set containing a bounded path component. This can be easily understood from the perspective of topology theory. An example is to consider the function shown in Figure 4.

The case where . We present a simple example in Figure 5. The curve ‘4’ corresponding to a continuous function from to cannot be uniformly approximated. However, the approximation is still possible.

6 Conclusion

Let us summarize the main results and implications of this paper. After giving the universal lower bound of the minimum width for the UAP, we proved that the bound is optimal by constructing neural networks with some activation functions.

For the -UAP, our construction to achieve the critical width was based on the approximation power of neural ODEs, which bridges the feed-forward networks to the flow maps corresponding to the ODEs. This allowed us to understand the UAP of the FNN through topology theory. Moreover, we obtained not only the lower bound but also the upper bound.

For the -UAP, our construction was based on the encoder-memorizer-decoder approach in Park et al. (2021), where the activation sets contain a discontinuous function . It is still an open question whether we can achieve the critical width by continuous activation functions. Johnson (2019) proved that continuous and monotone activation functions need at least width . This implies that nonmonotone activation functions are needed. By using the UOE activation, we calculated the critical width for the case of . It would be of interest to study the case of in future research.

We remark that our UAP is for functions on a compact domain. Examining the critical width of the UAP for functions on unbounded domains is desirable for future research.

Acknowledgments

We thank anonymous reviewers for their valuable comments and useful suggestions. This research is supported by the National Natural Science Foundation of China (Grant No. 12201053).

References

- Beise & Da Cruz (2020) Hans-Peter Beise and Steve Dias Da Cruz. Expressiveness of neural networks having width equal or below the input dimension. arXiv preprint arXiv:2011.04923, 2020.

- Brenier & Gangbo (2003) Yann Brenier and Wilfrid Gangbo. Approximation of maps by diffeomorphisms. Calculus of Variations and Partial Differential Equations, 16(2):147–164, 2003.

- Chen et al. (2018) Ricky TQ Chen, Yulia Rubanova, Jesse Bettencourt, and David K Duvenaud. Neural ordinary differential equations. Advances in neural information processing systems, 31, 2018.

- Cybenkot (1989) George Cybenkot. Approximation by superpositions of a sigmoidal function. Mathematics of Control, Signals and Systems, 2(4):303–314, 1989.

- Duan et al. (2022) Yifei Duan, Li’ang Li, Guanghua Ji, and Yongqiang Cai. Vanilla feedforward neural networks as a discretization of dynamic systems. arXiv preprint arXiv:2209.10909, 2022.

- E (2017) Weinan E. A proposal on machine learning via dynamical systems. Communications in Mathematics and Statistics, 1(5):1–11, 2017.

- Hanin (2019) Boris Hanin. Universal function approximation by deep neural nets with bounded width and relu activations. Mathematics, 7(10):992, 2019.

- Hanin & Sellke (2017) Boris Hanin and Mark Sellke. Approximating continuous functions by relu nets of minimal width. arXiv preprint arXiv:1710.11278, 2017.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778, 2016.

- Hornik et al. (1989) Kurt Hornik, Maxwell Stinchcombe, and Halbert White. Multilayer feedforward networks are universal approximators. Neural Networks, 2(5):359–366, 1989.

- Johnson (2019) Jesse Johnson. Deep, Skinny Neural Networks are not Universal Approximators. In International Conference on Learning Representations, 2019.

- Kidger & Lyons (2020) Patrick Kidger and Terry Lyons. Universal approximation with deep narrow networks. In Conference on learning theory, pp. 2306–2327. PMLR, 2020.

- Leshno et al. (1993) Moshe Leshno, Vladimir Ya. Lin, Allan Pinkus, and Shimon Schocken. Multilayer feedforward networks with a nonpolynomial activation function can approximate any function. Neural Networks, 6(6):861–867, 1993.

- Li et al. (2022) Qianxiao Li, Ting Lin, and Zuowei Shen. Deep learning via dynamical systems: An approximation perspective. Journal of the European Mathematical Society, 2022.

- Lu et al. (2018) Yiping Lu, Aoxiao Zhong, Quanzheng Li, and Bin Dong. Beyond finite layer neural networks: Bridging deep architectures and numerical differential equations. In International Conference on Machine Learning, pp. 3276–3285. PMLR, 2018.

- Lu et al. (2017) Zhou Lu, Hongming Pu, Feicheng Wang, Zhiqiang Hu, and Liwei Wang. The expressive power of neural networks: A view from the width. volume 30, 2017.

- McLachlan & Quispel (2002) Robert I. McLachlan and G. Reinout W. Quispel. Splitting methods. Acta Numerica, 11:341–434, 2002.

- Park et al. (2021) Sejun Park, Chulhee Yun, Jaeho Lee, and Jinwoo Shin. Minimum Width for Universal Approximation. In International Conference on Learning Representations, 2021.

- Ruiz-Balet & Zuazua (2021) Domenec Ruiz-Balet and Enrique Zuazua. Neural ode control for classification, approximation and transport. arXiv preprint arXiv:2104.05278, 2021.

- Shen et al. (2022) Zuowei Shen, Haizhao Yang, and Shijun Zhang. Optimal approximation rate of ReLU networks in terms of width and depth. Journal de Mathématiques Pures et Appliquées, 157:101–135, 2022.

- Tabuada & Gharesifard (2020) Paulo Tabuada and Bahman Gharesifard. Universal approximation power of deep residual neural networks via nonlinear control theory. arXiv preprint arXiv:2007.06007, 2020.

- Telgarsky (2016) Matus Telgarsky. Benefits of Depth in Neural Networks. In Conference on Learning Theory, pp. 1517–1539. PMLR, 2016.

- Yarotsky & Zhevnerchuk (2020) Dmitry Yarotsky and Anton Zhevnerchuk. The phase diagram of approximation rates for deep neural networks. Advances in neural information processing systems, 33:13005–13015, 2020.

Appendix A Proof of the lemmas

A.1 Proof of Lemma 8

We give a definition and a lemma below that are useful for proving Lemma 8.

Definition 12.

We say two functions, , have the same ordering of extrema if they have the following properties:

-

1)

has only a finite number of extrema that are (increasing)

-

2)

and the two sequences,

and

have the same ordering, i.e.,

Lemma 13.

Let and be continuous functions in that have the same ordering of extrema; then, there are two strictly monotone functions, and , such that

Proof.

Here, we use the same notation in Definition 12. The functions and can be constructed as follows.

(1) Construct the outer function that tries to match the function values at the extrema. The only requirement is that

Since and have the same ordering, it is easy to construct such a function that is continuous and strictly increasing, for example, piecewise linear.

(2) Construct the inner function to match the location of the extrema. Denote , which satisfies . Since and are strictly monotone and continuous on the intervals and , respectively, we can construct the function on as

Combining each piece of , we have a strictly increasing and continuous function on the whole space . As a consequence, we have .

∎

Lemma 8.

For any function and ,

1) there is a leaky-ReLU+ABS (or leaky-ReLU+SIN) network with width one and depth such that

2) there is a leaky-ReLU + UOE network width one and depth such that

Proof.

We mainly provide proof of the second point, while the first point can be proven using the same scheme.

For any function and , we can approximate it by a polynomial with order such that

according to the well-known Weierstrass approximation theorem. Without a loss of generality, we can assume that is not the same at all of its extrema. Then, we can represent by the following composition, using Lemma 13 and the property of UOE:

| (9) |

where is the UOE function (4) and and are monotonically increasing continuous functions.

Then, we can approximate by UOE networks. Since and are monotone, there are UOE networks and such that and are arbitrarily small. Hence, there is a UOE network that can approximate such that

which implies that

This completes the proof of the second point.

For the first point, we only emphasize that it is easy to construct a function that has the same local maximum and local minimum in the interval and has small enough. This has the same ordering of extrema as the sawtooth function (or sine) and hence can be uniformly approximated by leaky-ReLU+ABS (or leaky-ReLU+SIN) networks . As a consequence, is small enough. ∎

A.2 Proof of Lemma 9

Lemma 9.

Let . Then, for any continuous function , any compact set , and any , there exist a time and a piecewise constant input so that the flow map associated with the neural ODE (5) satisfies:

Proof.

This is a special case of Theorem 2.3 in Li et al. (2022). ∎

A.3 Proof of Lemma 10

Lemma 10.

If the parameters in (5) are piecewise constants, then for any compact set and any , there is a leaky-ReLU network with width and depth such that

| (10) |

Proof.

It is Theorem 2.2 in Duan et al. (2022). ∎

A.4 Proof of Corollary 11

Corollary 11.

Let be a compact set and ; then, for the function class , the leaky-ReLU networks with width have -UAP.

Appendix B Proof of the main results

B.1 Proof of Lemma 1

Lemma 1.

For any compact domain and any finite set of activation functions , the networks with width do not have the UAP for both and .

Proof.

It is enough to show the following two counterexamples that cannot be approximated in the -norm.

1) cannot be approximated by any networks with widths less than . In fact, we can relax the networks to a function , where is a transformer from to and could be any function. A consequence is that there exists a direction (set as the vector satisfying , ) such that for all . Then, considering the sets and , we have

Since the volume of is a fixed positive number, the inequality implies that even the approximation for is impossible. The case of the norm and the uniform norm is impossible as well.

2) The function , the parametrized curve from 0 to 1 along the edge of the cubic, cannot be approximated by any networks with a width less than . Relaxing the networks to a function , could be any function. Since the range of is in a hyperplane while has a positive distance to any hyperplane, the target cannot be approximated. ∎

B.2 Proof of Theorem 2

Theorem 2.

Let be a compact set; then, for the function class , the minimum width of leaky-ReLU networks having -UAP is exactly .

Proof.

Using Lemma 1, we only need to prove two points: 1) the -UAP holds when , 2) when , there is a function that cannot be approximated by leaky-ReLU networks with width one (since width two is enough for the -UAP).

The first point is a consequence of Corollary 11 since we can extend the target function to dimension .

The second point is obvious since leaky-ReLU networks with a width of one are monotone functions that cannot approximate nonmonotone functions such as . ∎

B.3 Proof of Theorem 3

Theorem 3.

Let be a compact set; then, for the function class , the minimum width of leaky-ReLU+ABS networks having -UAP is exactly .

B.4 Proof of Lemma 4

Lemma 4.

Let be a compact set; then, for the function class , the minimum width of ReLU+FLOOR networks having -UAP is exactly .

Proof.

Recalling the results of Lemma 1, we only need to prove two points: 1) the C-UAP holds when , 2) when , there is a function that cannot be approximated by ReLU+FLOOR networks with width one (since width two is enough for the -UAP).

The first step can be constructed by the encoder-memorizer-decoder approach. The second point is obvious since ReLU+FLOOR networks with width one are monotone functions that cannot approximate nonmonotone functions such as . ∎

B.5 Proof of Theorem 5

Theorem 5.

The UOE networks with width have -UAP for functions in .

Proof.

Since functions in can be regarded as one-dimensional functions, it is enough to prove the case of , which is the result in Lemma 8. ∎

B.6 Proof of Corollary 6

Corollary 6.

Let be a compact set; then, for the continuous function class , the minimum width of UOE+FLOOR networks having -UAP is exactly .