Acoustic Communication and Sensing for Inflatable Modular Soft Robots

Abstract

Modular soft robots combine the strengths of two traditionally separate areas of robotics. As modular robots, they can be robust to the failure of individual units and can reconfigure. As soft robots, they can deform and undergo large shape changes to adapt to their environment, and they are inherently safe around humans. For sensing and communication, however, these robots also combine the challenges of both areas. Collectives of many robots require solutions that are scalable (low cost and complexity) and efficient (low power), and these solutions must also interface with the high-extension-ratio elastic bodies of soft robots. In this work, we address these challenges using acoustic signals produced by piezoelectric surface transducers. These transducers are cheap, simple, and low power, and they not only integrate with the elastic robot skins but also use them for signal transmission. To further increase scalability, the transducers are multi-functional, which is made possible by a relatively flat frequency response across the audible and ultrasonic ranges. With minimal hardware, they enable directional contact-based communication, audible-range communication at a distance, and exteroceptive sensing. We demonstrate, with multi-robot hardware implementations, a subset of the decentralized collective behaviors that these functions make possible. We show that acoustic waves offer distinct advantages over existing solutions in this domain.

I Introduction

Modular robots overcome individual platform limitations by physically connecting and reconfiguring to tailor their system-level capabilities to their application and environment [1]. At the same time, soft, shape-changing robots have distinct advantages over rigid-bodied robots [2]. These include passive adaptation to their environment through structural compliance, inherent safety in human-robot interaction, and the ability to exert relatively large forces and undergo relatively large strains with low-cost actuators. Modular soft robots take inspiration from biological collectives (as “cellular robots” [3]). They combine these advantages to produce useful behaviors that emerge from interactions between relatively simple individual units. A major barrier to progress, however, is that these robots also combine the challenges of both realms. For example, a significant challenge in designing modular robots for large collectives is balancing individual platform size, complexity, and cost against the architecture and functionality of the combined system. One potential solution is multi-functional components, which can fulfill the roles of multiple robotic subsystems without additional hardware. The soft, extensible structure of a modular soft robot compounds the challenge by further constraining the possible implementations. These must both tolerate high extension ratios and couple to elastic surfaces.

Many modular and swarm robots address the challenge of scalable inter-agent communication and sensing with infrared (IR) optical transmission [1]. This method is relatively short-range and constrained to line-of-sight, and it may be supplemented by wider-area radio-frequency networking [4]. In nature, by contrast, acoustic signaling is ubiquitous, including among the social insects that inspire many designers of modular and swarm robots [5]. These acoustic signals include substrate-borne vibrations, audible sound, and vibrations shared through direct body contact [6, 7]. Organisms use passive mechanical body structures to efficiently produce, receive, and transmit acoustic signals, from the audible range of the cricket [8] to the ultrasonic range of the moth [9]. Inspired by these examples, we observe that pre-tensioned elastic membranes make soft robots difficult to instrument for sensing and communication. Those same membranes, however, also make the robots particularly attractive for multi-functional, acoustics-based components.

Acoustic transducers are well suited to act as multi-functional components, in part because they can be operated across a wide spectrum. Following the Huygens-Fresnel principle, the directivity of an acoustic source depends on its size relative to the wavelength. A source much smaller than the wavelength radiates nearly omnidirectionally, while a source comparable to or larger than the wavelength forms a directional beam. In practice, this frequency-dependent directivity is beneficial for applications like ultrasonic obstacle detection [10]. There it limits the field-of-view of the transducer and focuses the signal, much as a lens does for an infrared source. It is, however, a challenge for speaker designers seeking desirable “dispersion patterns.” In addition to this variable directivity, the attenuation of acoustic waves in air increases with frequency; the absorption coefficient of air increases by approximately 30dB from 1kHz to 20kHz [11]. The simple commodity piezoelectric disc transducers used in this work have a relatively flat frequency response up to about 20kHz. They can therefore be operated with the attenuation and directivity best suited to the desired function.
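
The sketch below roughly illustrates how directivity scales with frequency. For illustration only, it treats the 27mm transducer disc as an ideal rigid circular piston in an infinite baffle; a bending piezo disc in a printed enclosure will deviate from this idealization. For such a piston of radius a, with k = 2πf/c, the first null of the radiation pattern occurs where ka·sin θ equals the first zero of the Bessel function J1 (about 3.8317). When ka is below that value there is no null, and the source is close to omnidirectional. The speed of sound of 343 m/s (air at roughly 20 °C) is also an assumption.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # assumed: air at roughly 20 degrees C
J1_FIRST_ZERO = 3.8317       # first positive zero of the Bessel function J1


def helmholtz_number(freq_hz: float, radius_m: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Return ka = 2*pi*f*a/c, the source size relative to the wavelength."""
    return 2.0 * math.pi * freq_hz * radius_m / c


def first_null_deg(freq_hz: float, radius_m: float, c: float = SPEED_OF_SOUND_M_S):
    """Angle (degrees off-axis) of the first null of an ideal baffled piston.

    Returns None when ka is too small for any null to exist, i.e. the
    source radiates close to omnidirectionally.
    """
    ka = helmholtz_number(freq_hz, radius_m, c)
    if ka <= J1_FIRST_ZERO:
        return None
    return math.degrees(math.asin(J1_FIRST_ZERO / ka))


if __name__ == "__main__":
    radius_m = 0.027 / 2  # 27 mm brass disc
    for f in (1_000, 6_000, 18_000, 20_000):
        wavelength_mm = 1000.0 * SPEED_OF_SOUND_M_S / f
        ka = helmholtz_number(f, radius_m)
        null = first_null_deg(f, radius_m)
        beam = "no null (near-omnidirectional)" if null is None else f"first null at {null:.1f} deg"
        print(f"{f / 1000:5.1f} kHz  wavelength={wavelength_mm:6.1f} mm  ka={ka:5.2f}  {beam}")
```

Under these assumptions, the 1kHz and 6kHz cases have no null. Around 18 to 20kHz, the main lobe narrows to a first null roughly 50 to 60 degrees off-axis, which matches the trend described above.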

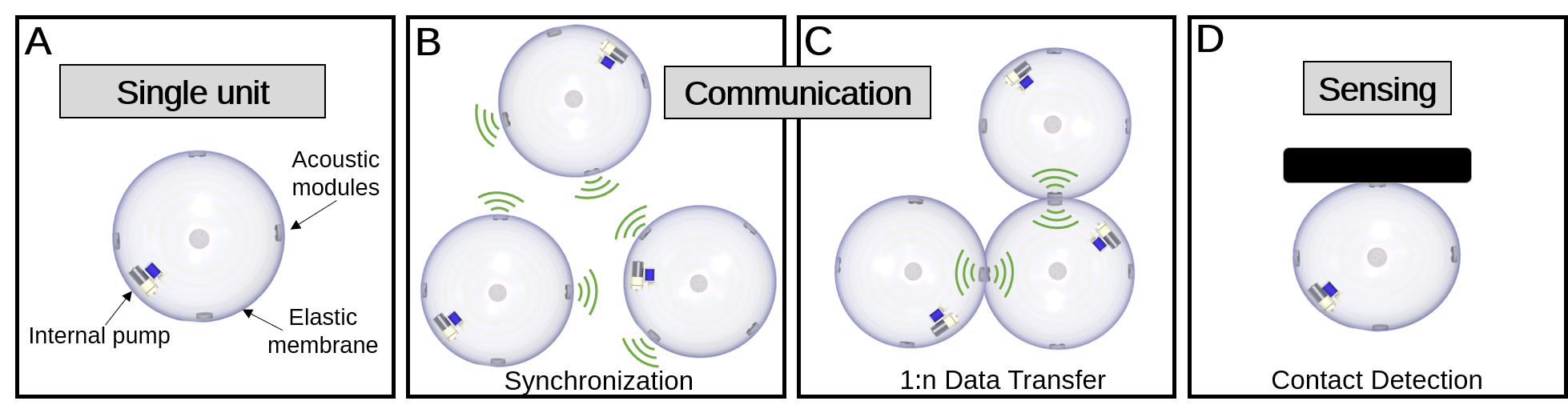

The contribution of this work is a communication and sensing modality (Fig. 1) based on surface-distributed “acoustic modules,” implemented on modular soft robots with high extension ratios (Fig. 2). These modules use piezoelectric transducers to both send and receive acoustic waves across the audible and ultrasonic spectra. The modules are scalable (i.e., of minimal cost and complexity) and efficient (i.e., each module consumes 60mW, compared with the 160mW IR emitter of the Kilobot [12]), and they help perform multiple core robotic functions. They not only integrate simply with elastic skins through surface attachment but also use the structure itself as a transmission medium that is robust to large shape change. Together, these properties make our solution cost-effective, capable, and versatile compared with other options for shape-changing modular soft robots. After discussing related work in Section II, we describe the technical implementation and test results in Section III. There we show core collective functions like communication between robots, synchronization at a distance, and sensing of external stimuli. In Section IV, we demonstrate two enabled collaborative behaviors in a group of three robots: synchronized lifting and a decentralized inchworm-based gait.

II Related Work

The most directly relevant related work includes other modular and swarm robots that use individual subsystems or components for multiple functions and other examples of acoustic sensing and communication in multi-robot systems.

II-A Multifunctional Hardware for Multi-robot Systems

The Linbot soft modular platform [13] is the work most directly related to ours. It uses a voice coil for actuation, sensing, and communication, exploiting the coil's wide frequency response much as we exploit that of our piezoelectric transducers. A Hall-effect sensor provides proprioception by sensing the voice coil position. Electromagnetic coupling between neighboring Linbots allows omnidirectional communication, and audible-range waves can be produced for external communication. To accomplish this, Linbots rely on rigid connections between the actuator core and the extents of the soft shell. As a result, they operate with shape changes of only up to approximately 30% along their principal axis. In contrast, our robots undergo maximum volume changes of close to 1000%, and their exterior surfaces do not remain in contact with the primary actuator.

Swarm platforms are relevant in this context because their designers also seek low-complexity, low-cost, scalable solutions [14]. The Kilobot [12] uses a single IR transmitter and receiver pair on its underside both to communicate with neighbors and to detect their distance. However, it does so only omnidirectionally and only up to about 10cm away. The Open E-Puck platform [15] uses 12 pairs of radially arranged IR transmitters and receivers to perform inter-robot communication as well as range and bearing measurements. This arrangement allows it to send and receive signals in specific directions. Our acoustic solution adds long-range (1m) communication with no line-of-sight requirement, as well as contact/deformation sensing. It does so while requiring only a single transducer instead of an emitter/receiver pair.

II-B Multi-robot Acoustic Sensing and Communication

A common use of acoustic waves in multi-robot systems is ultrasonic range estimation. Compared with radio-frequency solutions (e.g., RSSI-based ranging), the relatively slow speed of sound eases signal processing constraints by making direct time-of-flight measurements practical. Ultrasonic ranging is therefore a useful supplement for improving the robustness of distance estimation [16]. Relative positioning of multi-robot systems using ultrasonic ranging at distances up to seven meters has been demonstrated with an average absolute error of only 8mm [17].
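
The sketch below makes the timing argument concrete. It converts a one-way or round-trip acoustic time of flight to distance, then compares the timing resolution needed for a given range resolution with sound and with radio waves. The linear speed-of-sound approximation c ≈ 331.3 + 0.606·T (m/s, T in °C) for dry air is a standard textbook model assumed here. The function names are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def speed_of_sound_m_s(temp_c: float = 20.0) -> float:
    """Linear approximation for the speed of sound in dry air."""
    return 331.3 + 0.606 * temp_c


def tof_to_distance_m(tof_s: float, temp_c: float = 20.0, round_trip: bool = False) -> float:
    """Distance from acoustic time of flight; halve it for an echo (round trip)."""
    d = speed_of_sound_m_s(temp_c) * tof_s
    return d / 2.0 if round_trip else d


def timing_resolution_s(range_resolution_m: float, wave_speed_m_s: float) -> float:
    """Time resolution needed to resolve a given one-way distance step."""
    return range_resolution_m / wave_speed_m_s


if __name__ == "__main__":
    c = speed_of_sound_m_s(20.0)
    print(f"speed of sound at 20 C: {c:.2f} m/s")
    print(f"2.912 ms one-way -> {tof_to_distance_m(2.912e-3):.3f} m")
    # Resolving 8 mm needs ~23 microseconds with sound, but ~27 picoseconds with radio.
    print(f"8 mm with sound: {timing_resolution_s(8e-3, c) * 1e6:.1f} us")
    print(f"8 mm with radio: {timing_resolution_s(8e-3, SPEED_OF_LIGHT_M_S) * 1e12:.1f} ps")
```

The roughly six-orders-of-magnitude gap in required timing resolution is why acoustic time of flight can be measured with ordinary microcontroller timers.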

Unlike acoustic sensing, acoustic communication between autonomous robots is relatively underexplored. One exception is autonomous underwater vehicles, which are driven toward acoustic modes by the high electromagnetic absorption of seawater [18, 19]. For land-based multi-robot systems, audible-range communication has been noted as a potentially useful supplement to radio-frequency networking. The reason is that the relatively strong environmental attenuation of acoustic waves can encode information about the environment [20]. In this work, we take this idea further by using the soft, pressurized structure of the robot itself as the information-encoding transmission environment.

Outside of the robotics domain, acoustic communication has been shown between pressurized mylar balloons that act as amplifiers and speakers when actuated by piezoelectric transducers [21], which served as an inspiration for the communication-at-a-distance in this work.

III Implementation and Results

III-A System Hardware

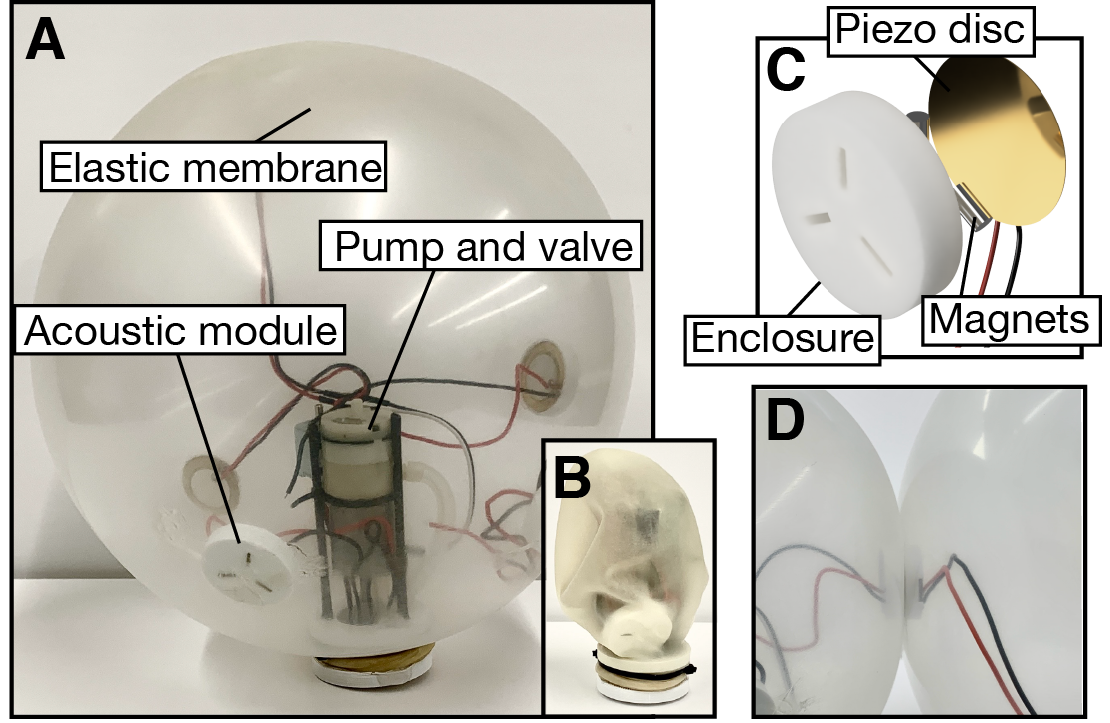

The inflatable robot units (Fig. 2A) are based on our co-authors’ recent prior work [22] demonstrating untethered cellular robots. Each unit consists of a latex membrane (45cm maximum diameter, 0.4mm thick) enclosing a DC air pump, a solenoid-controlled valve, and up to N acoustic modules distributed across the membrane. Here N = 8, limited by the analog switch array used in this work. Each acoustic module serves as a sensing, communication, and connection point through which the robots interact with their environment and with each other. Each additional acoustic module adds functionality (e.g., sensing resolution) but also complexity, an inherent tradeoff that merits future investigation.

The acoustic modules (Fig. 2C) comprise FDM 3D printed, cylindrical enclosures (30mm diameter, 7mm thickness, PLA) with 60 degree radially arrayed slots for diametrically polarized cylindrical magnets (3.2mm diameter, 6.4mm height). The magnet housings are slightly over-sized, allowing the magnets to reorient when connectors are drawn together, making them “genderless.” We rely upon passive reorientation of the units for alignment, although notably vibrations can be transmitted with sufficient signal-to-noise ratio through even imperfectly aligned modules. The piezoelectric transducers (27mm diameter brass plate with 20mm diameter ceramic piezo, 0.5mm thickness) are standard contact microphones, fixed into the printed enclosures with 3M 300LSE double sided adhesive tape. The acoustic modules are each fixed to the inside of the latex skin with the same tape.

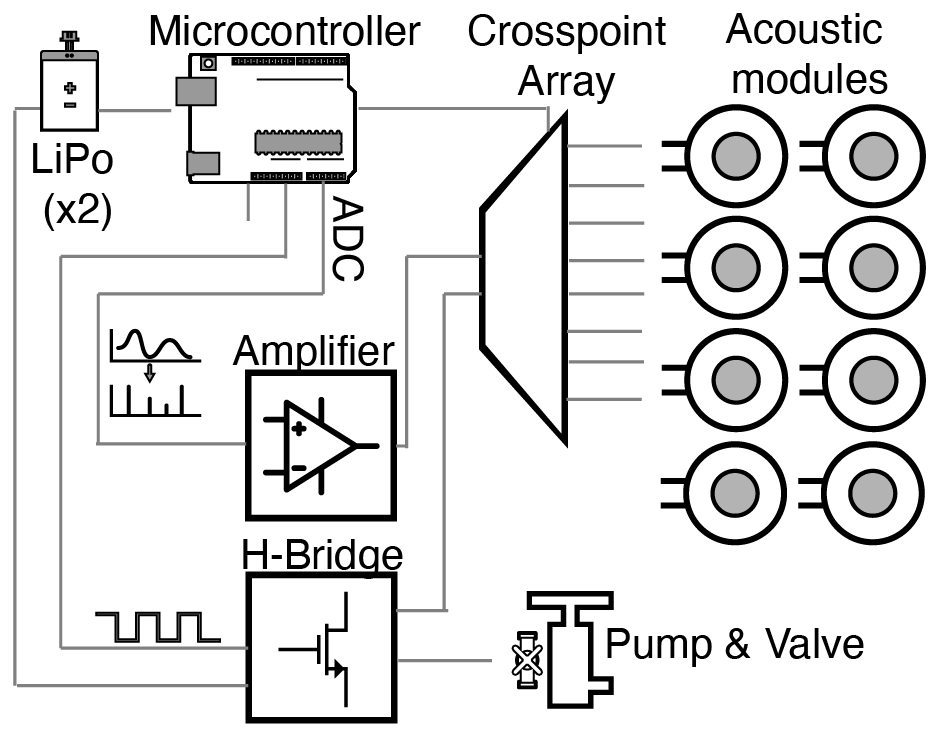

A Teensy 4, which contains a 600MHz Cortex-M7 processor, is sufficient to run the software-defined radio architecture used for acoustic communication. To minimize cost and complexity, the piezoelectric transducers are connected through an 8×16 analog switch array (MT8816) to two shared components. The first is a single dual-channel H-bridge motor driver (TB6612FNG). The second is a single audio amplifier with 60dB gain (MAX9814, which includes a preamplifier, variable gain stage, and output amplifier). Fig. 3 shows a block diagram of the system. Each piezoelectric transducer consumes approximately 60mW (10mA at 6V) during full-duty-cycle operation between 3kHz and 20kHz. The amplifier is designed for standard electret condenser microphones, and its poor impedance match with the piezoelectric transducer creates a high-pass filter with a corner around 2kHz. The units shown here have their electronics and power located outside the robot membrane. However, prior work shows that the required electronics, battery pack, and charging circuit can be incorporated inside the latex membrane in a 3D printed enclosure [22].
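
The sketch below suggests why this roll-off matters little for the 6kHz and ultrasonic signals used in this work. Purely as an assumption, it models the roll-off as an ideal first-order high-pass filter with its corner at the reported 2kHz. The real mismatch between a piezo disc and an electret-microphone input is probably not exactly first order.

```python
import math


def first_order_highpass_gain_db(freq_hz: float, corner_hz: float = 2_000.0) -> float:
    """Magnitude response of an ideal first-order high-pass filter, in dB.

    |H(f)| = (f/fc) / sqrt(1 + (f/fc)^2); -3 dB at the corner frequency.
    """
    ratio = freq_hz / corner_hz
    return 20.0 * math.log10(ratio / math.sqrt(1.0 + ratio * ratio))


if __name__ == "__main__":
    for f in (500, 1_000, 2_000, 6_000, 18_000):
        print(f"{f:>6} Hz: {first_order_highpass_gain_db(f):6.2f} dB")
```

Under this model, a 6kHz tone loses only about 0.5dB, while a 1kHz tone is attenuated by about 7dB.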

III-B Contact-based Communication

Swarm and modular robot systems designed for large agent counts typically rely heavily on local communication to overcome the challenges of scaling radio-based networks [14]. For modular robots, the connection points are natural avenues for information transfer, such as through direct electrical connections [23]. Methods that do not rely on mechanically flush or material-specific connections are more suitable for deformable surfaces; one example is IR transmit/receive pairs built into the connector faces [24]. In our robots, the piezoelectric transducers sit in the rigid enclosures of the magnetic connectors. They can transfer information as shared vibration even through imperfect contact between connectors. The received signal amplitude for an 18kHz tone is at its full value when all three magnets are aligned. It decreases by about 35% with two magnets aligned and by about 45% with one, never falling below about 40dB signal-to-noise ratio (SNR). An advantage over IR-based methods is that vibrations couple mechanically from the interior modules through the exterior surfaces of the robots. This removes any design constraints on optical properties.
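
To put the amplitude losses above on the same decibel scale as the SNR figure, the short helper below converts a fractional drop in received amplitude to a change in dB, using 20·log10 of the amplitude ratio. It is a generic conversion, not code from the robots.

```python
import math


def amplitude_drop_db(fractional_drop: float) -> float:
    """Change in level (dB) when amplitude falls by the given fraction (0 to 1)."""
    if not 0.0 <= fractional_drop < 1.0:
        raise ValueError("fractional_drop must be in [0, 1)")
    return 20.0 * math.log10(1.0 - fractional_drop)


if __name__ == "__main__":
    for aligned, drop in ((2, 0.35), (1, 0.45)):
        print(f"{aligned} magnet(s) aligned: {amplitude_drop_db(drop):.1f} dB")
```

A 35% or 45% amplitude loss corresponds to only about 3.7dB or 5.2dB, which is small relative to the roughly 40dB SNR that the link retains.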

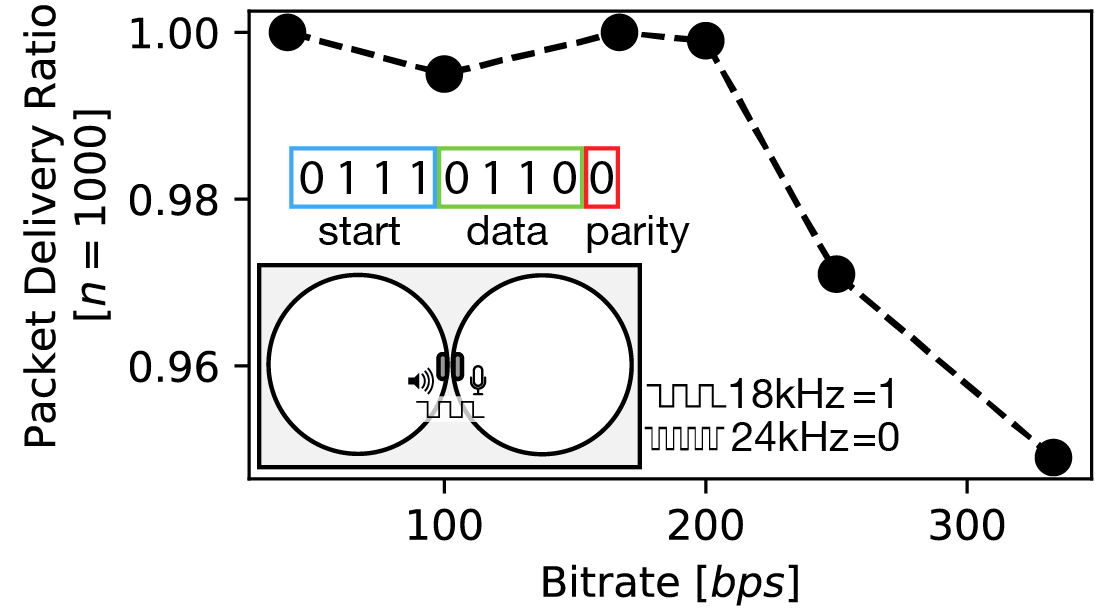

We implement acoustic communication with binary frequency-shift keying (FSK). We chose FSK over amplitude modulation because received amplitude varies with contact quality, which would otherwise cause bit errors. Fig. 4 shows the achievable packet delivery ratio (PDR) for a 1:1 module pair. Packets consist of a 4-bit start sequence, a 4-bit data field, and one parity bit. As symbol time shortens, the achievable PDR decreases. This decrease correlates with increasingly tight timing requirements, since a shorter symbol time requires stricter phase alignment. It also correlates with a decreased SNR, presumably because the piezoelectric transducers cannot ring up to full vibration amplitude before a bit transition.
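
The sketch below shows the packet format together with binary FSK modulation and per-symbol tone detection. The text specifies only binary FSK, a 0111 start sequence, 4 data bits, and one parity bit. The following are therefore assumptions for illustration: the two tone frequencies, the 5ms symbol length, even parity, and the use of the Goertzel algorithm for tone detection. The 50kS/s sample rate matches the receiver rate quoted in Section III-C. Both tones sit on exact Goertzel bins (k = f·N/fs), so the two symbols are orthogonal over one symbol period.

```python
import math

FS = 50_000              # samples per second (receiver rate quoted in III-C)
SYMBOL_SAMPLES = 250     # hypothetical 5 ms symbol
F_SPACE = 17_000         # hypothetical tone for bit 0 (bin 85 of 250)
F_MARK = 19_000          # hypothetical tone for bit 1 (bin 95 of 250)
START = [0, 1, 1, 1]     # start sequence from the text


def build_packet(data_bits):
    """Start sequence + 4 data bits + one (assumed even) parity bit."""
    if len(data_bits) != 4 or any(b not in (0, 1) for b in data_bits):
        raise ValueError("expected 4 binary data bits")
    return START + list(data_bits) + [sum(data_bits) % 2]


def modulate(bits):
    """Continuous-phase binary FSK: one tone per symbol, no phase jumps."""
    samples, phase = [], 0.0
    for bit in bits:
        step = 2.0 * math.pi * (F_MARK if bit else F_SPACE) / FS
        for _ in range(SYMBOL_SAMPLES):
            samples.append(math.sin(phase))
            phase += step
    return samples


def goertzel_power(block, freq_hz):
    """Signal power at one frequency, computed with the Goertzel recurrence."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq_hz / FS)
    s1 = s2 = 0.0
    for x in block:
        s1, s2 = x + coeff * s1 - s2, s1
    return s1 * s1 + s2 * s2 - coeff * s1 * s2


def demodulate(samples):
    """Decide each symbol by comparing power at the mark and space tones."""
    bits = []
    for i in range(0, len(samples) - SYMBOL_SAMPLES + 1, SYMBOL_SAMPLES):
        block = samples[i:i + SYMBOL_SAMPLES]
        bits.append(int(goertzel_power(block, F_MARK) > goertzel_power(block, F_SPACE)))
    return bits


def decode_packet(bits):
    """Return the 4 data bits, or None if the start sequence or parity is wrong."""
    if len(bits) != 9 or bits[:4] != START:
        return None
    data, parity = bits[4:8], bits[8]
    return data if sum(data) % 2 == parity else None


if __name__ == "__main__":
    sent = [1, 0, 1, 1]
    received = decode_packet(demodulate(modulate(build_packet(sent))))
    print("sent", sent, "received", received)
```

Comparing two tone powers, rather than thresholding one amplitude, is what makes FSK tolerant of the contact-dependent gain changes described above.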

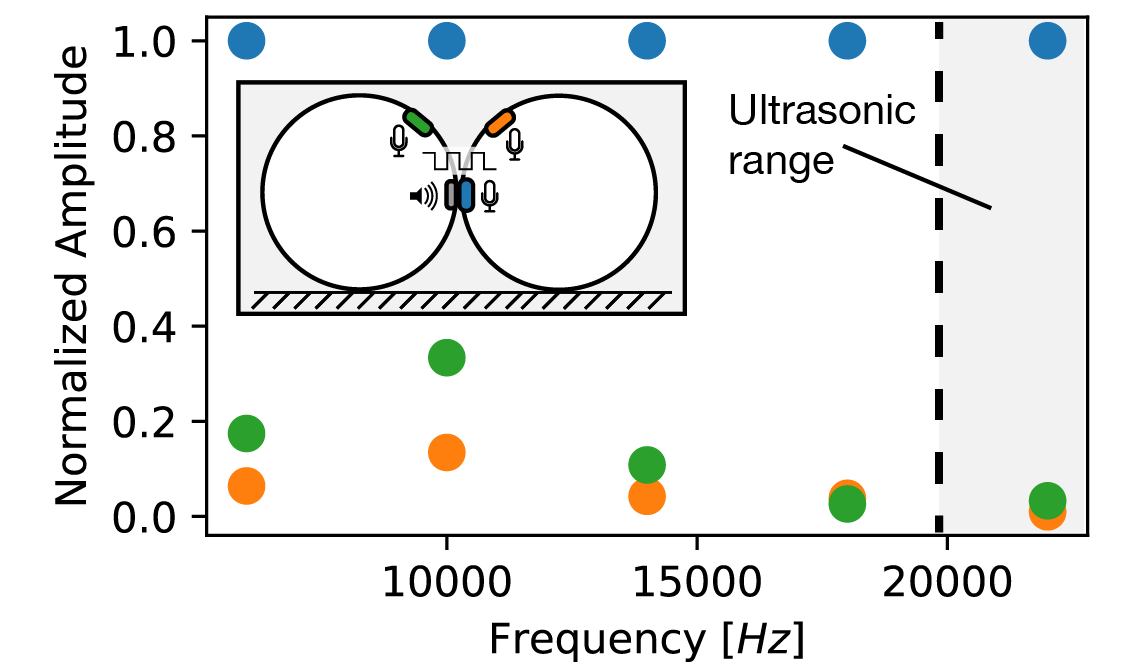

Because each robot has multiple individually addressable communication points, it can communicate directionally with any number of connected neighbors. For this to work, signals received at each transducer must be distinguishable from those received at neighboring nodes. The signals are mechanically coupled into the structure rather than transmitted by line-of-sight. They therefore radiate symmetrically from their coupling point through the elastic membrane and are also received at neighboring points. Fig. 5 compares the received signal amplitude at the receiver node with that at the neighboring nodes on both the sending and receiving robots. Vibrations traveling through the membrane are attenuated more strongly at higher frequencies, so less signal leaks to neighboring nodes. As a result, the intended receiver sees a higher SNR relative to its neighbors in the ultrasonic range. Ultrasonic signals are therefore the best option for sending information directionally through the connections. They also have the added benefits of being inaudible and unlikely to encounter relevant environmental noise.
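
One way to summarize a comparison like Fig. 5 as a single number is an isolation figure: the level at the intended receiver relative to the strongest leakage at any neighboring node, in dB. The sketch below computes that figure and picks the candidate tone with the best isolation. The metric and function names are illustrative choices. The amplitudes passed in would be measured values; none are assumed here.

```python
import math


def isolation_db(receiver_amplitude: float, neighbor_amplitudes) -> float:
    """Intended-receiver level relative to the loudest neighbor, in dB."""
    worst_leak = max(neighbor_amplitudes)
    if receiver_amplitude <= 0 or worst_leak <= 0:
        raise ValueError("amplitudes must be positive")
    return 20.0 * math.log10(receiver_amplitude / worst_leak)


def best_directional_tone(measurements):
    """Pick the frequency with the highest isolation.

    measurements maps frequency (Hz) -> (receiver_amplitude, [neighbor amplitudes]).
    """
    return max(measurements, key=lambda f: isolation_db(*measurements[f]))
```

Given the frequency-dependent attenuation described above, this selection would be expected to favor ultrasonic tones.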

Each robot has a single software-defined FSK receiver, which is connected to only one acoustic module at a time. To route data from multiple connections over this single channel, we implement an unslotted architecture based on the ALOHA protocol [25]. By default, the receiver time-multiplexes through the acoustic modules, dwelling on each for one packet duration while waiting to detect a start sequence (0111). If it detects one, it “locks” onto that module (i.e., continues listening there). If a full packet is decoded with the correct parity bit, an acknowledgement is sent through the same module. On the sending side, a robot continuously broadcasts a packet on all desired output modules for one packet duration, then listens on those modules for the acknowledgement. If no acknowledgement is received, the packet is resent.
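
The listening and sending behaviors can be sketched as below. The hardware is abstracted behind hypothetical callbacks. `read_window(module)` returns the bits demodulated on one module during one packet duration, and `send_ack(module)` transmits an acknowledgement. On the sender side, `transmit` and `ack_received` play the corresponding roles. The following are assumptions: the even-parity check, the retry limit, and resending only to modules that have not acknowledged. Classic ALOHA also waits a random backoff before retransmitting; that step is omitted here.

```python
START = [0, 1, 1, 1]
PACKET_BITS = 9  # 4 start + 4 data + 1 parity


def receive(read_window, send_ack, n_modules, max_sweeps=100):
    """Time-multiplex over modules; lock onto one when a start sequence appears."""
    for _ in range(max_sweeps):
        for module in range(n_modules):
            bits = read_window(module)
            start = next((i for i in range(len(bits) - 3) if bits[i:i + 4] == START), None)
            if start is None:
                continue  # nothing heard: move on to the next module
            packet = bits[start:]
            while len(packet) < PACKET_BITS:  # locked: keep listening on this module
                more = read_window(module)
                if not more:
                    break
                packet += more
            packet = packet[:PACKET_BITS]
            if len(packet) == PACKET_BITS and sum(packet[4:8]) % 2 == packet[8]:
                send_ack(module)  # acknowledge on the module the packet arrived on
                return module, packet[4:8]
    return None


def send(transmit, ack_received, modules, packet, max_retries=5):
    """Broadcast on all pending modules, then wait for ACKs; resend if missing."""
    pending = set(modules)
    for _ in range(max_retries):
        for module in pending:
            transmit(module, packet)
        pending = {m for m in pending if not ack_received(m)}
        if not pending:
            return True
    return False
```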

III-C Communication at a Distance

Our robots can collaborate without direct contact or line-of-sight by transmitting signals in the audible range. The same piezoelectric transducers produce these signals effectively thanks to their flat frequency response. In this case, the pressurized elastic skin acts as an omnidirectional pickup for airborne acoustic waves, letting the ostensibly contact-based piezoelectric transducers act as true microphones. The transducers are operated near their approximate resonance of 6kHz. At this frequency, signals from robots up to a meter away can be received through the air with a measured SNR of 7dB across the entire operational volume range. The received signal amplitude depends on several factors: the distance between robots, each robot’s volume, and the contact quality between the acoustic modules and the elastic membrane.

One important and fundamental function of decentralized multi-robot systems is the ability to synchronize in time [26]. In nature, animals use acoustic and optical signals (e.g., katydids [27] and fireflies [28], respectively) to achieve synchronicity. This process is known as “synchronized chorusing” and can be modeled formally as a population of pulse-coupled oscillators.

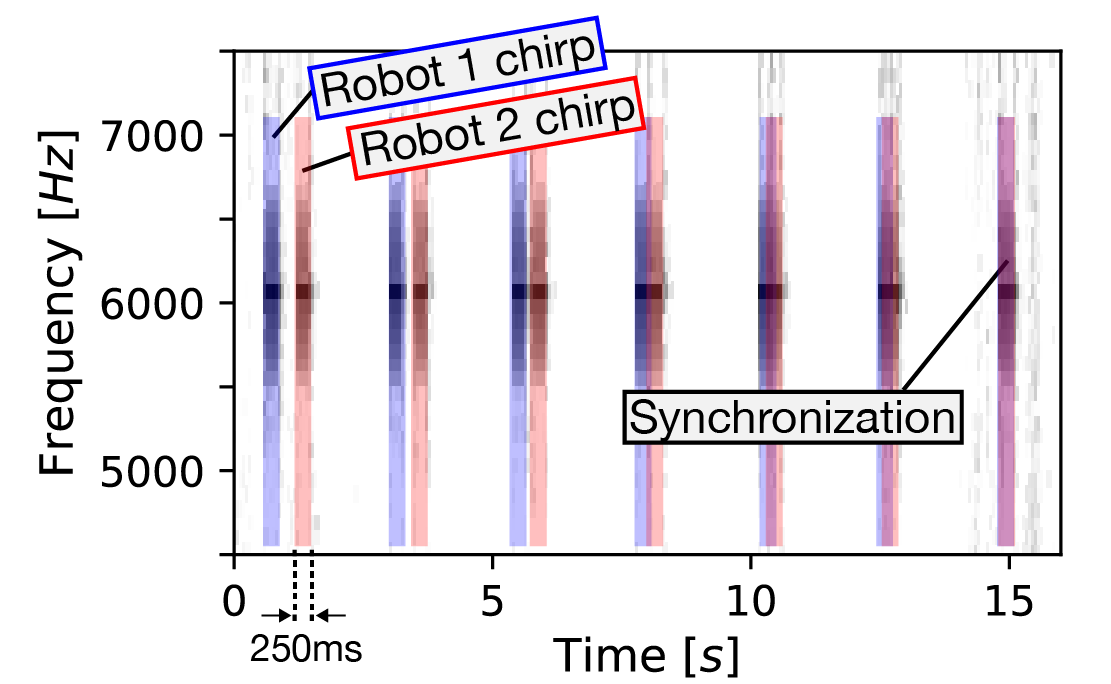

Here, pulse-coupled synchronization using audible signals is implemented simply; Fig. 6 shows an example spectrogram from the synchronization of two robots. Each robot starts with some initial phase offset from its neighbors (about 250ms in Fig. 6). After a first delay, all of a robot's transducers simultaneously produce a 6kHz synchronization pulse of fixed duration, and the cycle repeats after a second delay. During each delay interval, a module acting as the receiver is continuously sampled to detect amplitude peaks at 6kHz above a predetermined ambient noise threshold. At the end of each cycle, the tallied detections determine, in a binary fashion, whether the chirp should be shifted “forward” (earlier) or “backward” (later). For example, more detections during the first delay mean that the majority of neighboring robots are pulsing before this one. The robot then advances its phase without changing the period, by shortening the first delay and lengthening the second delay by the same amount.
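
The phase-update rule can be sketched as a small simulation. Each robot keeps a fixed cycle start and splits the rest of its period into the first delay, its chirp, and the second delay. A neighbor's chirp counts as “before” or “after” depending on which delay window it falls in. Chirps that overlap the robot's own chirp are ignored. Several choices here are illustrative and not specified in the text: the fixed step size, the overlap rule, the synchronous update of all robots, and the assumption that every robot hears every other.

```python
from dataclasses import dataclass


@dataclass
class Robot:
    start_ms: float         # fixed start of this robot's cycle (modulo the period)
    delay_before_ms: float  # first delay, before the robot's own chirp
    chirp_ms: float         # chirp (synchronization pulse) duration
    delay_after_ms: float   # second delay, after the chirp

    @property
    def period_ms(self) -> float:
        return self.delay_before_ms + self.chirp_ms + self.delay_after_ms

    @property
    def chirp_time_ms(self) -> float:
        return self.start_ms + self.delay_before_ms


def classify(listener: Robot, other_chirp_ms: float) -> str:
    """Label a neighbor's chirp relative to the listener's own chirp."""
    rel = (other_chirp_ms - listener.start_ms) % listener.period_ms
    if abs(rel - listener.delay_before_ms) < listener.chirp_ms:
        return "overlap"  # heard while (nearly) chirping: ignored
    return "before" if rel < listener.delay_before_ms else "after"


def shift_phase(robot: Robot, n_before: int, n_after: int, step_ms: float) -> None:
    """Move the chirp earlier or later without changing the period."""
    if n_before > n_after:      # most neighbors chirp earlier: advance
        shift = min(step_ms, robot.delay_before_ms)
        robot.delay_before_ms -= shift
        robot.delay_after_ms += shift
    elif n_after > n_before:    # most neighbors chirp later: delay
        shift = min(step_ms, robot.delay_after_ms)
        robot.delay_before_ms += shift
        robot.delay_after_ms -= shift


def simulate(robots, step_ms: float, cycles: int) -> None:
    """Run synchronous update rounds, assuming every robot hears every other."""
    for _ in range(cycles):
        chirps = [r.chirp_time_ms for r in robots]
        tallies = []
        for i, r in enumerate(robots):
            labels = [classify(r, c) for j, c in enumerate(chirps) if j != i]
            tallies.append((labels.count("before"), labels.count("after")))
        for r, (n_before, n_after) in zip(robots, tallies):
            shift_phase(r, n_before, n_after, step_ms)


if __name__ == "__main__":
    a = Robot(start_ms=0, delay_before_ms=400, chirp_ms=50, delay_after_ms=550)
    b = Robot(start_ms=250, delay_before_ms=400, chirp_ms=50, delay_after_ms=550)
    simulate([a, b], step_ms=10, cycles=30)
    print("chirp offset after 30 cycles:", b.chirp_time_ms - a.chirp_time_ms, "ms")  # 30 ms
```

Starting from the 250ms offset seen in Fig. 6, the two simulated robots close the gap by 20ms per cycle. They stop once their chirps overlap, while each keeps its 1000ms period.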

There is a tradeoff between synchronization time and the total number of robots within audible range. In the extreme case, every time slot in the listening period would contain a chirp. Detections before and after a robot's own pulse would then balance and provide no corrective signal. The listening period must therefore grow to accommodate additional robots, so the time to synchronize is expected to scale with the number of robots. Time-varying chirps (such as those produced by katydids [29]) could provide an additional layer of information that improves the scalability of this approach.

The synchronization accuracy depends on both the chirp duration and the digital signal processing at the receiver. The minimum chirp duration is bounded by the response time of the piezoelectric transducers and the associated SNR at the receiver. For the receiver processing, non-overlapping 256-point FFT segments at a sampling frequency of 50kS/s result in a minimum synchronization window of approximately 5ms (256 samples / 50kS/s = 5.12ms).
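
The timing and frequency granularity of this FFT framing follow directly from the two numbers given. The helper below reproduces the arithmetic and shows which FFT bin a 6kHz chirp falls in, using a bin spacing of fs/N.

```python
FS_HZ = 50_000   # sampling frequency from the text
N_FFT = 256      # non-overlapping FFT segment length from the text

FRAME_S = N_FFT / FS_HZ      # time covered by one FFT segment
BIN_HZ = FS_HZ / N_FFT       # frequency spacing between FFT bins


def nearest_bin(freq_hz: float) -> int:
    """Index of the FFT bin whose center is closest to freq_hz."""
    return round(freq_hz / BIN_HZ)


if __name__ == "__main__":
    k = nearest_bin(6_000)
    print(f"frame = {FRAME_S * 1e3:.2f} ms, bin spacing = {BIN_HZ:.4f} Hz")
    print(f"6 kHz chirp -> bin {k} (center {k * BIN_HZ:.1f} Hz)")
```

The 5.12ms frame sets the time quantization of chirp detections. The roughly 195Hz bin spacing places a 6kHz chirp in bin 31.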

III-D Exteroceptive Sensing

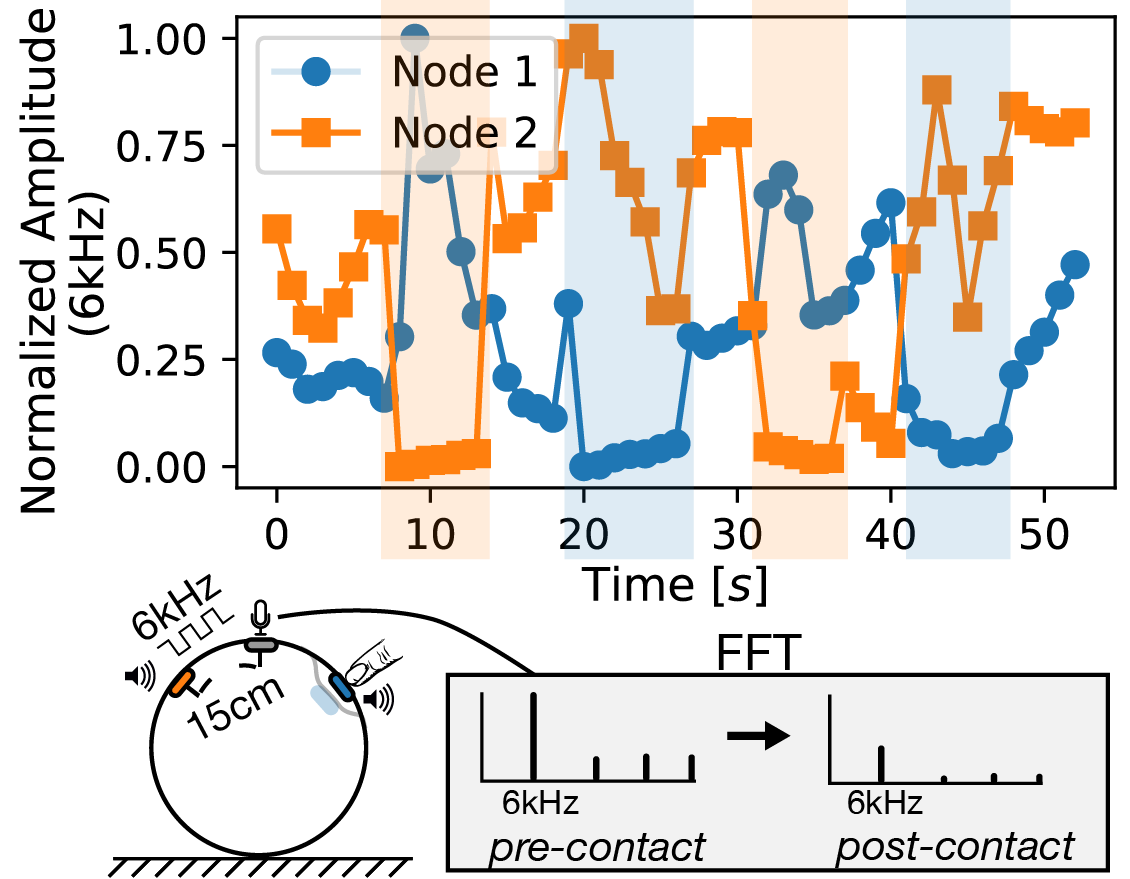

Sensing external stimuli like applied loads and environmental contacts is a critical robotic function. Existing solutions for soft robots, such as adding flexible signal transmission channels (e.g., optical channels [30] or printed traces [31]) throughout the robot surface, are costly, complex, and not robust to the high-percentage shape change exhibited by our robots. In order to sense contact we instead take advantage of the coupling between loads on the robot and the resultant attenuation of the acoustic waves being transmitted through the existing unmodified external surface, reducing instrumentation cost and complexity by taking advantage of the compliant nature of the robot.

Fig. 7 demonstrates that acoustic signals can be used to detect compression of the robot. Here, surrounding nodes transmit tones that are received at a central node. Regions are effectively “sensitized” by adding a continuously sampling receiver. Contact with areas centered on the transmitting modules has two effects. It damps the vibration of the piezoelectric transducer in its magnetic enclosure, and it decreases the coupling of the surrounding elastic membrane to the node. Together, these produce a clearly distinguishable drop in the received signal's FFT amplitude at the tone frequency. The size of the sensitive region is determined by the initial SNR of the received tones, which is a function of inflated volume, pressure, and contact quality. The spatial resolution is determined geometrically by the acoustic module area, the density of modules over the membrane, and the current inflated volume. This is the inverse of the private contact-based communication problem. Here, signal transmission to neighboring nodes should be maximized, which calls for audible-range signals (see Fig. 5). Importantly, contact at the receiver node itself manifests as decreased amplitude from all surrounding nodes. Switching the set of “sensitized” nodes by reconfiguring the analog crosspoint array could allow for disambiguation.
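
The detection logic can be sketched as a comparison against an uncontacted baseline. This is a sketch under illustrative assumptions consistent with the description above: a 6dB drop threshold, averaging of baseline frames, and the rule that a drop at every transmitting node flags possible contact at the receiver. The amplitudes would come from the FFT bin at each node's tone frequency.

```python
import math
from statistics import mean


def calibrate(baseline_frames):
    """Average per-node tone amplitude over frames recorded with no contact.

    baseline_frames: list of dicts mapping node id -> FFT amplitude at its tone.
    """
    nodes = baseline_frames[0].keys()
    return {n: mean(frame[n] for frame in baseline_frames) for n in nodes}


def detect_contacts(baseline, current, drop_db=6.0):
    """Return (nodes whose tone dropped by more than drop_db, receiver_suspected).

    receiver_suspected is True when every node dropped at once, which is how
    contact at the receiving node itself would appear.
    """
    touched = set()
    for node, ref in baseline.items():
        level_db = 20.0 * math.log10(max(current[node], 1e-12) / ref)
        if level_db < -drop_db:
            touched.add(node)
    receiver_suspected = bool(baseline) and len(touched) == len(baseline)
    return touched, receiver_suspected
```

When `receiver_suspected` is set, reconfiguring the crosspoint array so that a different node acts as the receiver, as suggested above, would help separate receiver contact from simultaneous contact everywhere.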

IV Autonomous Behavior Demonstrations

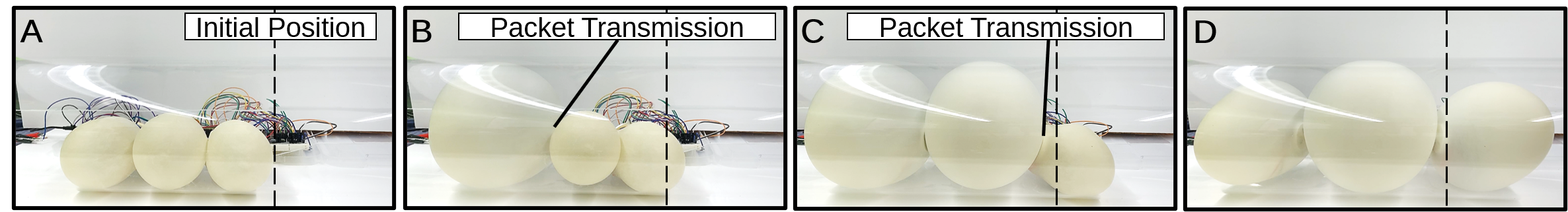

With only 1-DOF actuation per robot, connected robots can still locomote by coordinating an inchworm gait [22]. Contact-based communication allows the robots to selectively initiate inflation cycles in neighboring robots. Figure 8 shows a “one-dimensional” locomotion example using this acoustic communication strategy. Here, forward motion is only possible when the robots make full contact with the duct walls. The contact detection described in Section III-D could therefore be used to control the inflation and deflation cycles. Locomotion in the X-Y plane could be achieved with a group of at least six such interconnected robots communicating in this manner.

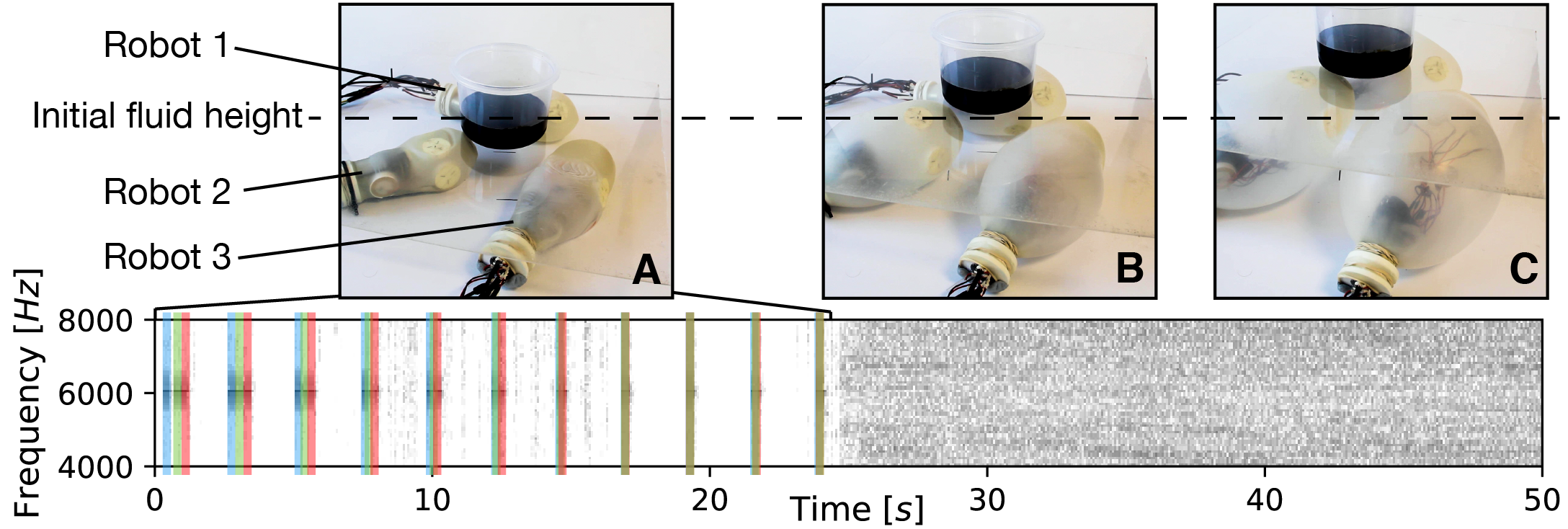

Clock synchronization is useful for applications like coordinated lifting of unstable or safety-critical objects, such as those that might be encountered in search-and-rescue or human-assistive contexts. Figure 9 shows a group of three robots lifting a balanced load in tandem. A centralized initiation signal tells all three robots to attempt a synchronous lift and sets an initial random clock offset. The robots then use audible-range communication to synchronize, as determined by a maximum number of FFT frames with detected chirps. Once this condition has held for at least four periods, they begin to inflate.

V Future Work

The architecture presented here could support additional sensing modalities with relatively minor hardware changes. In the future, sourcing or fabricating better-tuned or better-coupled transducers may be sufficient for monostatic ultrasonic range finding from each acoustic module [32]. Better tuning here means a higher quality factor in the ultrasonic region; better coupling could come from, e.g., an attached acoustic horn. Bistatic range finding would be an opportunity to take advantage of the robots' shape-changing nature. The robots could then act as reconfigurable “acoustic lenses” whose field-of-view varies with volume. More nuanced force and deformation sensing based on learned models, as in [33, 34], was not possible here for two reasons: contact quality between modules was poorly repeatable, and it varied over multiple inflate-deflate cycles. A more permanent way to distribute and fix the modules onto the membrane would enable more functionality. Multi-material composite membranes for the robot exterior could boost SNR through better acoustic impedance matching. They could also add region-dependent sensitivity at design time through acoustic waveguides, as in [35].

A collection of robots with wide-spectrum transmission capabilities raises a number of interesting questions about network architecture. For example, the audible-range clock synchronization could support a slotted network architecture (e.g., slotted ALOHA), increasing network throughput. In the future, a multi-hop mesh network based on acoustic signals could choose between omnidirectional audible broadcasts and neighbor-to-neighbor ultrasonic links depending on the traffic route. Additionally, using audible-range acoustic signals as a primary mode of communication opens opportunities to study how human-interpretable modes of multi-robot collaboration affect human operators and bystanders [36].
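
The classic ALOHA analysis quantifies the expected throughput gain from slotting. It assumes a Poisson-distributed offered load G, measured in packets per packet duration. Under that model, pure ALOHA achieves S = G·e^(-2G), peaking at 1/(2e) ≈ 18.4% at G = 0.5. Slotted ALOHA achieves S = G·e^(-G), peaking at 1/e ≈ 36.8% at G = 1. Whether acoustic traffic between the robots fits this Poisson model is an open assumption.

```python
import math


def pure_aloha_throughput(offered_load: float) -> float:
    """Successful packets per packet time for unslotted (pure) ALOHA."""
    return offered_load * math.exp(-2.0 * offered_load)


def slotted_aloha_throughput(offered_load: float) -> float:
    """Successful packets per slot for slotted ALOHA."""
    return offered_load * math.exp(-offered_load)


if __name__ == "__main__":
    print(f"pure ALOHA peak:    {pure_aloha_throughput(0.5):.3f}")   # 1/(2e), about 0.184
    print(f"slotted ALOHA peak: {slotted_aloha_throughput(1.0):.3f}")  # 1/e, about 0.368
```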

VI Conclusion

Acoustic waves differ fundamentally from electromagnetic (e.g., optical and radio-frequency) waves in their transmission properties. Simple, low-cost transducers are available that operate across broad swaths of the spectrum. By exploiting the frequency-dependent attenuation and directivity of acoustic waves, these transducers can serve functions ranging from communication to sensing. Further, high-extension-ratio pressurized membranes make soft shape-changing robots difficult to instrument. Those same membranes can instead become useful parts of the acoustic transduction strategy, acting as signal channels and state-dependent amplifiers/attenuators.

ACKNOWLEDGMENT

This work was supported in part by the Intelligence Community Postdoctoral Research Fellowship Program, administered by the Oak Ridge Institute for Science and Education through an Interagency Agreement between the U.S. DOE and ODNI.

References

- [1] A. Brunete, A. Ranganath, S. Segovia, J. P. de Frutos, M. Hernando, and E. Gambao, “Current trends in reconfigurable modular robots design,” International Journal of Advanced Robotic Systems, vol. 14, no. 3, p. 1729881417710457, May 2017.

- [2] C. Zhang, P. Zhu, Y. Lin, Z. Jiao, and J. Zou, “Modular Soft Robotics: Modular Units, Connection Mechanisms, and Applications,” Advanced Intelligent Systems, vol. 2, no. 6, p. 1900166, 2020.

- [3] C.-H. Yu, K. Haller, D. Ingber, and R. Nagpal, “Morpho: A Self-Deformable Modular Robot Inspired by Cellular Structure,” in 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems. Nice: IEEE, Sept. 2008, pp. 3571–3578.

- [4] J. Seo, J. Paik, and M. Yim, “Modular Reconfigurable Robotics,” Annual Review of Control, Robotics, and Autonomous Systems, vol. 2, no. 1, pp. 63–88, 2019.

- [5] R. B. Cocroft, “The public world of insect vibrational communication,” Molecular Ecology, vol. 20, no. 10, pp. 2041–2043, 2011.

- [6] P. S. M. Hill, “How do animals use substrate-borne vibrations as an information source?” Naturwissenschaften, vol. 96, no. 12, pp. 1355–1371, Dec. 2009.

- [7] B. Hölldobler, “Multimodal signals in ant communication,” Journal of Comparative Physiology A, vol. 184, no. 2, pp. 129–141, 1999.

- [8] F. Montealegre-Z, T. Jonsson, and D. Robert, “Sound radiation and wing mechanics in stridulating field crickets (Orthoptera: Gryllidae),” Journal of Experimental Biology, vol. 214, no. 12, pp. 2105–2117, June 2011.

- [9] H. G. Spangler, M. D. Greenfield, and A. Takessian, “Ultrasonic mate calling in the lesser wax moth,” Physiological Entomology, vol. 9, no. 1, pp. 87–95, 1984.

- [10] D. Bank, “A novel ultrasonic sensing system for autonomous mobile systems,” IEEE Sensors Journal, vol. 2, no. 6, pp. 597–606, Dec. 2002.

- [11] H. E. Bass, L. C. Sutherland, A. J. Zuckerwar, D. T. Blackstock, and D. M. Hester, “Atmospheric absorption of sound: Further developments,” The Journal of the Acoustical Society of America, vol. 97, no. 1, pp. 680–683, Jan. 1995.

- [12] M. Rubenstein, C. Ahler, and R. Nagpal, “Kilobot: A low cost scalable robot system for collective behaviors,” in 2012 IEEE International Conference on Robotics and Automation, May 2012, pp. 3293–3298.

- [13] R. M. McKenzie, M. E. Sayed, M. P. Nemitz, B. W. Flynn, and A. A. Stokes, “Linbots: Soft Modular Robots Utilizing Voice Coils,” Soft Robotics, vol. 6, no. 2, pp. 195–205, Dec. 2018.

- [14] M. Brambilla, E. Ferrante, M. Birattari, and M. Dorigo, “Swarm robotics: a review from the swarm engineering perspective,” Swarm Intelligence, vol. 7, no. 1, pp. 1–41, Mar. 2013.

- [15] A. Gutierrez, A. Campo, M. Dorigo, J. Donate, F. Monasterio-Huelin, and L. Magdalena, “Open E-puck Range Bearing miniaturized board for local communication in swarm robotics,” in 2009 IEEE International Conference on Robotics and Automation, May 2009, pp. 3111–3116.

- [16] L. Girod and D. Estrin, “Robust range estimation using acoustic and multimodal sensing,” in Proceedings 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems. Expanding the Societal Role of Robotics in the the Next Millennium (Cat. No.01CH37180), vol. 3, Oct. 2001, pp. 1312–1320 vol.3.

- [17] F. Rivard, J. Bisson, F. Michaud, and D. Letourneau, “Ultrasonic relative positioning for multi-robot systems,” in 2008 IEEE International Conference on Robotics and Automation, May 2008, pp. 323–328.

- [18] S. Chappell, J. Jalbert, P. Pietryka, and J. Duchesney, “Acoustic communication between two autonomous underwater vehicles,” in Proceedings of IEEE Symposium on Autonomous Underwater Vehicle Technology (AUV’94), July 1994, pp. 462–469.

- [19] A. Bahr, J. J. Leonard, and M. F. Fallon, “Cooperative Localization for Autonomous Underwater Vehicles,” The International Journal of Robotics Research, vol. 28, no. 6, pp. 714–728, June 2009.

- [20] P. Karimian, R. Vaughan, and S. Brown, “Sounds Good: Simulation and Evaluation of Audio Communication for Multi-Robot Exploration,” in 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Oct. 2006, pp. 2711–2716.

- [21] J. A. Paradiso, “The interactive balloon: Sensing, actuation and behavior in a common object,” IBM Systems Journal, vol. 35, no. 3.4, pp. 473–487, 1996.

- [22] M. R. Devlin, B. T. Young, N. D. Naclerio, D. A. Haggerty, and E. W. Hawkes, “An untethered soft cellular robot with variable volume, friction, and unit-to-unit cohesion,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2020, p. 7.

- [23] K. Gilpin, A. Knaian, and D. Rus, “Robot pebbles: One centimeter modules for programmable matter through self-disassembly,” in 2010 IEEE International Conference on Robotics and Automation, May 2010, pp. 2485–2492.

- [24] K. Gilpin, K. Kotay, D. Rus, and I. Vasilescu, “Miche: Modular Shape Formation by Self-Disassembly,” The International Journal of Robotics Research, vol. 27, no. 3-4, pp. 345–372, Mar. 2008.

- [25] N. Abramson, “THE ALOHA SYSTEM: another alternative for computer communications,” in Proceedings of the November 17-19, 1970, fall joint computer conference, ser. AFIPS ’70 (Fall). New York, NY, USA: Association for Computing Machinery, Nov. 1970, pp. 281–285.

- [26] V. Trianni and A. Campo, “Fundamental collective behaviors in swarm robotics,” in Springer Handbook of Computational Intelligence. Springer, 2015, pp. 1377–1394.

- [27] A. Ravignani, D. L. Bowling, and W. Fitch, “Chorusing, synchrony, and the evolutionary functions of rhythm,” Frontiers in Psychology, vol. 5, p. 1118, 2014.

- [28] F. Perez Diaz, “Firefly-Inspired Synchronization in Swarms of Mobile Agents,” Ph.D. dissertation, University of Sheffield, Sept. 2016.

- [29] R. Pipher and G. Morris, “Frequency modulation in Conocephalus nigropleurum, the black-sided meadow katydid (Orthoptera: Tettigoniidae),” The Canadian Entomologist, vol. 106, pp. 997–1001, Sept. 1974.

- [30] P. A. Xu, A. K. Mishra, H. Bai, C. A. Aubin, L. Zullo, and R. F. Shepherd, “Optical lace for synthetic afferent neural networks,” Science Robotics, vol. 4, no. 34, Sept. 2019.

- [31] I. Wicaksono, E. Kodama, A. Dementyev, and J. A. Paradiso, “SensorNets: Towards Reconfigurable Multifunctional Fine-grained Soft and Stretchable Electronic Skins,” in Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, ser. CHI EA ’20. New York, NY, USA: Association for Computing Machinery, Apr. 2020, pp. 1–8.

- [32] J. Borenstein and Y. Koren, “Obstacle avoidance with ultrasonic sensors,” IEEE Journal on Robotics and Automation, vol. 4, no. 2, pp. 213–218, Apr. 1988.

- [33] G. Laput, X. A. Chen, and C. Harrison, “SweepSense: Ad Hoc Configuration Sensing Using Reflected Swept-Frequency Ultrasonics,” in Proceedings of the 21st International Conference on Intelligent User Interfaces, ser. IUI ’16. New York, NY, USA: Association for Computing Machinery, Mar. 2016, pp. 332–335.

- [34] S. Swaminathan, M. Rivera, R. Kang, Z. Luo, K. B. Ozutemiz, and S. E. Hudson, “Input, Output and Construction Methods for Custom Fabrication of Room-Scale Deployable Pneumatic Structures,” Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, vol. 3, no. 2, pp. 62:1–62:17, June 2019.

- [35] T. M. Huh, C. Liu, J. Hashizume, T. G. Chen, S. A. Suresh, F. Chang, and M. R. Cutkosky, “Active Sensing for Measuring Contact of Thin Film Gecko-Inspired Adhesives,” IEEE Robotics and Automation Letters, vol. 3, no. 4, pp. 3263–3270, Oct. 2018.

- [36] D. Moore, N. Martelaro, W. Ju, and H. Tennent, “Making Noise Intentional: A Study of Servo Sound Perception,” in 2017 12th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Mar. 2017, pp. 12–21.