Active Exploration for Learning Symbolic Representations

Abstract

We introduce an online active exploration algorithm for data-efficiently learning an abstract symbolic model of an environment. Our algorithm is divided into two parts: the first part quickly generates an intermediate Bayesian symbolic model from the data that the agent has collected so far, which the agent can then use along with the second part to guide its future exploration towards regions of the state space that the model is uncertain about. We show that our algorithm outperforms random and greedy exploration policies on two different computer game domains. The first domain is an Asteroids-inspired game with complex dynamics but basic logical structure. The second is the Treasure Game, with simpler dynamics but more complex logical structure.

1 Introduction

Much work has been done in artificial intelligence and robotics on how high-level state abstractions can be used to significantly improve planning [21]. However, building these abstractions is difficult, and consequently, they are typically hand-crafted [16, 14, 8, 4, 5, 6, 22, 10].

A major open question is then the problem of abstraction: how can an intelligent agent learn high-level models that can be used to improve decision making, using only noisy observations from its high-dimensional sensor and actuation spaces? Recent work [12, 13] has shown how to automatically generate symbolic representations suitable for planning in high-dimensional, continuous domains. This work is based on the hierarchical reinforcement learning framework [1], where the agent has access to high-level skills that abstract away the low-level details of control. The agent then learns representations for the (potentially abstract) effect of using these skills. For instance, opening a door is a high-level skill, while knowing that opening a door typically allows one to enter a building would be part of the representation for this skill. The key result of that work was that the symbols required to determine the probability of a plan succeeding are directly determined by characteristics of the skills available to an agent. The agent can learn these symbols autonomously by exploring the environment, which removes the need to hand-design symbolic representations of the world.

It is therefore possible to learn the symbols by naively collecting samples from the environment, for example by random exploration. However, in an online setting the agent should be able to use its previously collected data to compute an exploration policy that leads to better data efficiency. We introduce such an algorithm, which is divided into two parts: the first part quickly generates an intermediate Bayesian symbolic model from the data that the agent has collected so far, while the second part uses the model plus Monte-Carlo tree search to guide the agent’s future exploration towards regions of the state space that the model is uncertain about. We show that our algorithm is significantly more data-efficient than more naive methods in two different computer game domains. The first domain is an Asteroids-inspired game with complex dynamics but basic logical structure. The second is the Treasure Game, with simpler dynamics but more complex logical structure.

2 Background

As a motivating example, imagine deciding the route you are going to take to the grocery store; instead of planning over the various sequences of muscle contractions that you would use to complete the trip, you would consider a small number of high-level alternatives such as whether to take one route or another. You also would avoid considering how your exact low-level state affected your decision making, and instead use an abstract (symbolic) representation of your state with components such as whether you are at home or at work, whether you have to get gas, whether there is traffic, etc. This simplification reduces computational complexity, and allows for increased generalization over past experiences. In the following sections, we introduce the frameworks that we use to represent the agent’s high-level skills, and symbolic models for those skills.

2.1 Semi-Markov Decision Processes

We assume that the agent’s environment can be described by a semi-Markov decision process (SMDP), given by a tuple $D = (S, O, R, P, \gamma)$, where $S \subseteq \mathbb{R}^d$ is a $d$-dimensional continuous state space, $O(s)$ returns a set of temporally extended actions, or options [21], available in state $s \in S$, $R(s', \tau \mid s, o)$ and $P(s', \tau \mid s, o)$ are the reward received and probability of termination in state $s' \in S$ after $\tau$ time steps following the execution of option $o$ in state $s \in S$, and $\gamma \in (0, 1]$ is a discount factor. In this paper, we are not concerned with the time taken to execute $o$, so we use $P(s' \mid s, o) = \int P(s', \tau \mid s, o)\, d\tau$.

An option $o$ is given by three components: $\pi_o$, the option policy that is executed when the option is invoked; $I_o \subseteq S$, the initiation set consisting of the states from which the option can be executed; and $\beta_o$, the termination condition, where $\beta_o(s)$ returns the probability that the option will terminate upon reaching state $s$. Learning models for the initiation set, rewards, and transitions of each option allows the agent to reason about the effect of its actions in the environment. To learn these option models, the agent has the ability to collect observations of the forms $(s, O(s))$ when entering a state $s$ and $(s, o, s', r)$ upon executing option $o$ from $s$.

2.2 Abstract Representations for Planning

We are specifically interested in learning option models which allow the agent to easily evaluate the success probability of plans. A plan $p = (o_1, \ldots, o_n)$ is a sequence of options to be executed from some starting state, and it succeeds if and only if it is able to be run to completion (regardless of the reward). Thus, a plan $p$ with starting state $s$ succeeds if and only if $s \in I_{o_1}$ and the termination state of each option (except for the last) lies in the initiation set of the following option, i.e. $s' \sim P(s' \mid s, o_1) \in I_{o_2}$, $s'' \sim P(s'' \mid s', o_2) \in I_{o_3}$, and so on.

Recent work [12, 13] has shown how to automatically generate a symbolic representation that supports such queries, and is therefore suitable for planning. This work is based on the idea of a probabilistic symbol, a compact representation of a distribution over infinitely many continuous, low-level states. For example, a probabilistic symbol could be used to classify whether or not the agent is currently in front of a door, or one could be used to represent the state that the agent would find itself in after executing its ‘open the door’ option. In both cases, using probabilistic symbols also allows the agent to be uncertain about its state.

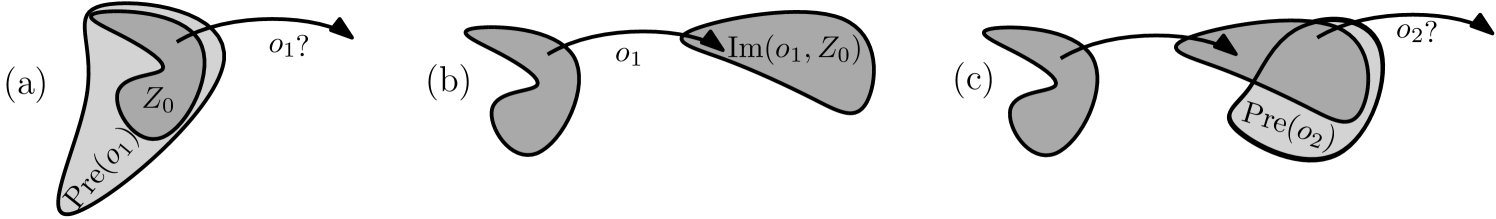

The following two probabilistic symbols are provably sufficient for evaluating the success probability of any plan [13]: the probabilistic precondition, $\mathrm{Pre}(o) = P(s \in I_o)$, which expresses the probability that an option $o$ can be executed from each state $s \in S$, and the probabilistic image operator:

$$\mathrm{Im}(o, Z) = \frac{\int_S P(s' \mid s, o)\, Z(s)\, P(I_o \mid s)\, ds}{\int_S Z(s)\, P(I_o \mid s)\, ds},$$

which represents the distribution over termination states if an option $o$ is executed from a distribution over starting states $Z$. These symbols can be used to compute the probability that each successive option in the plan can be executed, and these probabilities can then be multiplied to compute the overall success probability of the plan; see Figure 1 for a visual demonstration of a plan of length 2.
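To make the multiplication step concrete, here is a minimal sketch over a small finite state set, where the integrals in $\mathrm{Im}(o, Z)$ become sums. The option representation (a precondition function returning $P(I_o \mid s)$ paired with a transition function returning $P(\cdot \mid s, o)$), the function names, and the toy states are illustrative assumptions, not part of [13]. Each step's execution probability is the normalizer of the image operator, and the plan's success probability is the product of these normalizers.

```python
from typing import Callable, Dict, List, Tuple

State = str
Dist = Dict[State, float]
# Hypothetical option representation: (P(I_o | s), P(. | s, o)) over a finite state set.
Option = Tuple[Callable[[State], float], Callable[[State], Dist]]


def step(Z: Dist, option: Option) -> Tuple[float, Dist]:
    """Return (probability that o can run from Z, image Im(o, Z))."""
    pre, trans = option
    weighted = {s: z * pre(s) for s, z in Z.items()}   # Z(s) P(I_o | s)
    q = sum(weighted.values())                          # denominator of Im(o, Z)
    if q == 0.0:
        return 0.0, {}
    image: Dist = {}
    for s, w in weighted.items():
        for s_next, p in trans(s).items():
            image[s_next] = image.get(s_next, 0.0) + p * w / q
    return q, image


def plan_success(Z0: Dist, plan: List[Option]) -> float:
    """Multiply the per-step execution probabilities along the plan."""
    prob, Z = 1.0, Z0
    for option in plan:
        q, Z = step(Z, option)
        prob *= q
        if prob == 0.0:
            break
    return prob


o1 = (lambda s: 0.9 if s == "a" else 0.0, lambda s: {"b": 0.5, "c": 0.5})
o2 = (lambda s: {"b": 1.0, "c": 0.2}.get(s, 0.0), lambda s: {"a": 1.0})
print(plan_success({"a": 1.0}, [o1, o2]))  # approximately 0.9 * (0.5 * 1.0 + 0.5 * 0.2) = 0.54
```

Here $o_1$ runs from `a` with probability 0.9 and terminates in `b` or `c` equally often; $o_2$ is then executable with probability 0.6, giving roughly 0.54 overall.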

Subgoal Options Unfortunately, it is difficult to model $\mathrm{Im}(o, Z)$ for arbitrary options, so we focus on restricted types of options. A subgoal option [19] is a special class of option where the distribution over termination states (referred to as the subgoal) is independent of the distribution over starting states that it was executed from; e.g. if you make the decision to walk to your kitchen, the end result will be the same regardless of where you started from.

For subgoal options, the image operator can be replaced with the effects distribution $\mathrm{Eff}(o) = \mathrm{Im}(o, Z), \forall Z$, the resulting distribution over states after executing $o$ from any start distribution $Z$. Planning with a set of subgoal options is simple because for each ordered pair of options $o_i$ and $o_j$, it is possible to compute and store $G(o_i, o_j)$, the probability that $o_j$ can be executed immediately after executing $o_i$:

$$G(o_i, o_j) = \int_S P(I_{o_j} \mid s)\, \mathrm{Eff}(o_i)(s)\, ds.$$
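Because $G(o_i, o_j)$ is an expectation of $P(I_{o_j} \mid s)$ under $\mathrm{Eff}(o_i)$, one simple way to approximate it, assuming we can sample termination states from $\mathrm{Eff}(o_i)$ and evaluate a learned precondition for $o_j$, is Monte Carlo averaging. The sketch below is illustrative only; the function names, sample count, and toy one-dimensional example are hypothetical.

```python
import random
from typing import Callable, Dict, Hashable, Tuple

Sampler = Callable[[random.Random], float]   # draws s ~ Eff(o_i)
Precondition = Callable[[float], float]      # returns P(I_{o_j} | s)


def estimate_G(eff_i: Sampler, pre_j: Precondition, n: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of G(o_i, o_j) = E_{s ~ Eff(o_i)}[P(I_{o_j} | s)]."""
    rng = random.Random(seed)
    return sum(pre_j(eff_i(rng)) for _ in range(n)) / n


def G_table(effs: Dict[Hashable, Sampler],
            pres: Dict[Hashable, Precondition]) -> Dict[Tuple[Hashable, Hashable], float]:
    """Precompute G for every ordered pair of options so planning can look it up."""
    return {(i, j): estimate_G(effs[i], pres[j]) for i in effs for j in pres}


# Toy example: o_i ends uniformly in [0, 1]; o_j is only executable below 0.3.
effs = {"walk": lambda r: r.uniform(0.0, 1.0)}
pres = {"open": lambda s: 1.0 if s < 0.3 else 0.0, "any": lambda s: 1.0}
table = G_table(effs, pres)
print(round(table[("walk", "open")], 2))  # close to 0.3
```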

We use the following two generalizations of subgoal options. Abstract subgoal options model the more general case where executing an option leads to a subgoal for a subset of the state variables (called the mask), leaving the rest unchanged. For example, walking to the kitchen leaves the amount of gas in your car unchanged. More formally, the state vector can be partitioned into two parts $s = [a, b]$, such that executing $o$ leaves the agent in state $s' = [a, b']$, where $P(b')$ is independent of the distribution over starting states. The second generalization is the (abstract) partitioned subgoal option, which can be partitioned into a finite number of (abstract) subgoal options. For instance, an option for opening doors is not a subgoal option because there are many doors in the world; however, it can be partitioned into a set of subgoal options, with one for every door.
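The abstract subgoal property can be illustrated with a short simulation sketch, assuming a state is a flat list of floats and the mask is a list of variable indices. `execute_abstract_subgoal` and the toy kitchen example are hypothetical: only the masked variables ($b$) are redrawn from a start-independent distribution, and the rest ($a$) are copied unchanged.

```python
import random
from typing import Callable, List, Sequence


def execute_abstract_subgoal(
    s: Sequence[float],
    mask: Sequence[int],
    sample_subgoal: Callable[[random.Random], Sequence[float]],
    rng: random.Random,
) -> List[float]:
    """Return s' = [a, b']: variables outside the mask keep their values (a), while
    the masked variables are redrawn from a subgoal distribution that ignores s (b')."""
    s_next = list(s)
    for idx, value in zip(mask, sample_subgoal(rng)):
        s_next[idx] = value
    return s_next


def kitchen(r: random.Random) -> Sequence[float]:
    """Hypothetical subgoal for "walk to the kitchen": a noisy (x, y) location."""
    return (2.0 + r.gauss(0.0, 0.01), 3.0 + r.gauss(0.0, 0.01))


# Hypothetical state layout [x, y, gas]; the option's mask is {x, y}.
rng = random.Random(0)
print(execute_abstract_subgoal([0.0, 0.0, 7.5], [0, 1], kitchen, rng))
print(execute_abstract_subgoal([9.0, -4.0, 2.0], [0, 1], kitchen, rng))
```

Both calls end near $(2, 3)$ regardless of the start, while the gas variable keeps its starting value.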

The subgoal (and abstract subgoal) assumptions propose that the exact state from which option execution starts does not really affect the options that can be executed next. This is somewhat restrictive and often does not hold for options as given, but can hold for options once they have been partitioned. Additionally, the assumptions need only hold approximately in practice.

3 Online Active Symbol Acquisition

Previous approaches for learning symbolic models from data [12, 13] used random exploration. However, real world data from high-level skills is very expensive to collect, so it is important to use a more data-efficient approach. In this section, we introduce a new method for learning abstract models data-efficiently. Our approach maintains a distribution over symbolic models which is updated after every new observation. This distribution is used to choose the sequence of options that in expectation maximally reduces the amount of uncertainty in the posterior distribution over models. Our approach has two components: an active exploration algorithm which takes as input a distribution over symbolic models and returns the next option to execute, and an algorithm for quickly building a distribution over symbolic models from data. The second component is an improvement upon previous approaches in that it returns a distribution and is fast enough to be updated online, both of which we require.

3.1 Fast Construction of a Distribution over Symbolic Option Models

Now we show how to construct a more general model than $G$ that can be used for planning with abstract partitioned subgoal options. The advantages of our approach versus previous methods are that our algorithm is much faster, and the resulting model is Bayesian, both of which are necessary for the active exploration algorithm introduced in the next section.

Recall that the agent can collect observations of the forms $(s, o, s', r)$ upon executing option $o$ from $s$, and $(s, O(s))$ when entering a state $s$, where $O(s)$ is the set of available options in state $s$. Given a sequence of observations of this form, the first step of our approach is to find the factors [13], partitions of state variables that always change together in the observed data. For example, consider a robot which has options for moving to the nearest table and picking up a glass on an adjacent table. Moving to a table changes the $x$ and $y$ coordinates of the robot without changing the joint angles of the robot’s arms, while picking up a glass does the opposite. Thus, the $x$ and $y$ coordinates and the arm joint angles of the robot belong to different factors. Splitting the state space into factors reduces the number of potential masks (see end of Section 2.2) because we assume that if state variables $i$ and $j$ always change together in the observations, then this will always occur, e.g. we assume that moving to the table will never move the robot’s arms.¹

¹ The factors assumption is not strictly necessary as we can assign each state variable to its own factor. However, using this uncompressed representation can lead to an exponential increase in the size of the symbolic state space and a corresponding increase in the sample complexity of learning the symbolic models.

Finding the Factors Compute the set of observed masks $\mathcal{M}$ from the observations: each observation’s mask is the subset of state variables that differ substantially between $s$ and $s'$. Since we work in continuous, stochastic domains, we must distinguish minor random noise (independent of the action) from a substantial change in a state variable caused by action execution. In principle this requires modeling action-independent and action-dependent differences and distinguishing between them, but this is difficult to implement. Fortunately, we have found that in practice allowing some noise and using a simple threshold is often effective, even in noisier and more complex domains. For each state variable $i$, let $\mathcal{M}_i \subseteq \mathcal{M}$ be the subset of the observed masks that contain $i$. Two state variables $i$ and $j$ belong to the same factor if and only if $\mathcal{M}_i = \mathcal{M}_j$. Each factor $f$ is given by a set of state variables and thus corresponds to a subspace $S_f$. The factors are updated after every new observation.
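The factor-finding step can be sketched in a few lines, assuming each observation is a pair of low-level state vectors $(s, s')$ and a single fixed noise threshold (the threshold value here is arbitrary). Variables with identical sets of containing masks $\mathcal{M}_i$ are grouped into one factor; under this definition, variables that never change all share the empty set and end up in a single factor. The function names and the robot data are illustrative.

```python
from collections import defaultdict
from typing import FrozenSet, List, Sequence, Tuple

Transition = Tuple[Sequence[float], Sequence[float]]   # (s, s')


def observed_mask(s: Sequence[float], s_next: Sequence[float], threshold: float) -> FrozenSet[int]:
    """State variables whose change exceeds a fixed noise threshold."""
    return frozenset(i for i, (a, b) in enumerate(zip(s, s_next)) if abs(b - a) > threshold)


def find_factors(transitions: List[Transition], n_vars: int, threshold: float = 1e-2) -> List[List[int]]:
    masks = {observed_mask(s, s_next, threshold) for s, s_next in transitions}
    factors = defaultdict(list)
    for i in range(n_vars):
        M_i = frozenset(m for m in masks if i in m)   # observed masks containing variable i
        factors[M_i].append(i)                        # same M_i  <=>  same factor
    return sorted(factors.values())


# Hypothetical robot state [x, y, joint1, joint2].
transitions = [
    ([0, 0, 0, 0], [1, 2, 0, 0]),              # move to a table: x and y change
    ([1, 2, 0, 0], [1.001, 2, 0.5, 0.3]),      # pick up a glass: joints change, x jitter is noise
    ([1, 2, 0.5, 0.3], [3, 1, 0.5, 0.3]),      # move to another table
]
print(find_factors(transitions, 4))  # [[0, 1], [2, 3]]
```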

Let $S_{\mathrm{obs}}$ be the set of states that the agent has observed and let $S_{\mathrm{obs},f}$ be the projection of $S_{\mathrm{obs}}$ onto the subspace $S_f$ for some factor $f$; e.g. in the previous example there is an $S_{\mathrm{obs},f}$ which consists of the set of observed robot $(x, y)$ coordinates. It is important to note that the agent’s observations come only from executing partitioned abstract subgoal options. This means that $S_{\mathrm{obs},f}$ consists only of abstract subgoals, because for each $s \in S_{\mathrm{obs}}$, its projection $s_f$ was either unchanged from the previous state or changed to another abstract subgoal. In the robot example, all observed robot positions must be adjacent to a table because the robot can only execute an option that terminates with it adjacent to a table or one that does not change its coordinates. Thus, the states in $S_{\mathrm{obs}}$ can be imagined as a collection of abstract subgoals for each of the factors. Our next step is to build a set of symbols for each factor to represent its abstract subgoals, which we do using unsupervised clustering.

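Finding the Symbols For each factor $f$, we find the set of symbols $\Sigma_f$ by clustering $S_{\mathrm{obs},f}$. Let $c_f(s)$ be the corresponding symbol for state $s$ and factor $f$. We then map the observed states $s \in S_{\mathrm{obs}}$ to their corresponding symbolic states $s_d = (c_f(s))_f$, and the observations $(s, O(s))$ and $(s, o, s', r)$ to $(s_d, O(s))$ and $(s_d, o, s'_d, r)$, respectively.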

In the robot example, the observations would be clustered around tables that the robot could travel to, so there would be a symbol corresponding to each table.
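The clustering step can be sketched as follows. Appendix A states that DBSCAN [7] from scikit-learn [18] was used; to keep this example dependency-free, the sketch instead implements a minimal DBSCAN by hand (scikit-learn's `sklearn.cluster.DBSCAN(eps=..., min_samples=...)`, which labels noise as `-1`, would normally be the drop-in choice). The `eps` and `min_samples` values, the `symbolize` helper, and the toy robot data (two tables in $(x, y)$ and one arm-joint variable) are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

UNVISITED, NOISE = -2, -1


def dbscan(points: List[Tuple[float, ...]], eps: float, min_samples: int) -> List[int]:
    """Minimal DBSCAN: one cluster label per point, NOISE (-1) for outliers."""
    n = len(points)
    neighbors = [[j for j in range(n) if math.dist(points[i], points[j]) <= eps]
                 for i in range(n)]
    labels = [UNVISITED] * n
    cluster = 0
    for i in range(n):
        if labels[i] != UNVISITED:
            continue
        if len(neighbors[i]) < min_samples:   # not a core point; may become a border point later
            labels[i] = NOISE
            continue
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster           # border point: joins the cluster but does not expand it
                continue
            if labels[j] != UNVISITED:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_samples:
                queue.extend(neighbors[j])    # core point: keep growing the cluster
        cluster += 1
    return labels


def symbolize(states: Sequence[Sequence[float]], factors: List[List[int]],
              eps: float, min_samples: int) -> List[Tuple[int, ...]]:
    """Cluster each factor's projection S_obs,f and map every state to a tuple of symbols."""
    per_factor = [dbscan([tuple(s[i] for i in f) for s in states], eps, min_samples)
                  for f in factors]
    return [tuple(labels[k] for labels in per_factor) for k in range(len(states))]


states = [(0, 0, 0), (0.1, 0, 0), (5, 5, 0), (5.1, 5, 1), (0, 0.1, 1), (5, 5.1, 1)]
print(symbolize(states, [[0, 1], [2]], eps=0.5, min_samples=2))
# [(0, 0), (0, 0), (1, 0), (1, 1), (0, 1), (1, 1)]
```

Each symbolic state is a tuple with one symbol per factor: here, which table the robot is at and which arm configuration it is in.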

We want to build our models within the symbolic state space $S_d$. Thus we define the symbolic precondition, $\mathrm{Pre}(o, s_d)$, which returns the probability that the agent can execute an option $o$ from some symbolic state $s_d$, and the symbolic effects distribution for a subgoal option $o$, $\mathrm{Eff}(o)$, which maps $o$ to a subgoal distribution over symbolic states. For example, the robot’s ‘move to the nearest table’ option maps the robot’s current symbol to the one which corresponds to the nearest table.

The next step is to partition the options into abstract subgoal options (in the symbolic state space), e.g. we want to partition the ‘move to the nearest table’ option in the symbolic state space so that the symbolic states in each partition have the same nearest table.

Partitioning the Options For each option $o$, we initialize the partitioning $P_o$ so that each symbolic state starts in its own partition. We use independent Bayesian sparse Dirichlet-categorical models [20] for the symbolic effects distribution of each option partition.² We then perform Bayesian Hierarchical Clustering [9] to merge partitions which have similar symbolic effects distributions.³

² We use sparse Dirichlet-categorical models because there are a combinatorial number of possible symbolic state transitions, but we expect that each partition has non-zero probability for only a small number of them.

³ We use the closed-form solutions for Dirichlet-multinomial models provided by Heller and Ghahramani [9].
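A simplified sketch of the merge criterion follows. It is not full Bayesian Hierarchical Clustering: it uses a plain symmetric Dirichlet over a known, fixed number of outcomes rather than the sparse Dirichlet of [20], and it greedily merges the pair of partitions with the largest positive log Bayes factor, omitting the mixture-weight terms of [9]. All names and the toy counts are hypothetical.

```python
from collections import Counter
from itertools import combinations
from math import lgamma
from typing import FrozenSet, Hashable, List, Tuple

Partition = Tuple[FrozenSet[Hashable], Counter]   # (start symbolic states, effect outcome counts)


def log_marginal(counts: Counter, support: int, alpha: float = 1.0) -> float:
    """log p(counts) under a symmetric Dirichlet(alpha)-categorical model with `support` outcomes."""
    n = sum(counts.values())
    return (lgamma(support * alpha) - lgamma(support * alpha + n)
            + sum(lgamma(alpha + c) - lgamma(alpha) for c in counts.values()))


def merge_partitions(parts: List[Partition], support: int, alpha: float = 1.0) -> List[Partition]:
    """Greedily merge the pair with the largest positive log Bayes factor until none remains."""
    parts = list(parts)
    while True:
        best, best_gain = None, 0.0
        for i, j in combinations(range(len(parts)), 2):
            gain = (log_marginal(parts[i][1] + parts[j][1], support, alpha)
                    - log_marginal(parts[i][1], support, alpha)
                    - log_marginal(parts[j][1], support, alpha))
            if gain > best_gain:
                best, best_gain = (i, j), gain
        if best is None:
            return parts
        i, j = best
        merged = (parts[i][0] | parts[j][0], parts[i][1] + parts[j][1])
        parts = [p for k, p in enumerate(parts) if k not in (i, j)] + [merged]


# s1 and s2 always lead to outcome T1; s3 always leads to T2 (4 possible outcomes).
parts = [(frozenset({"s1"}), Counter({"T1": 5})),
         (frozenset({"s2"}), Counter({"T1": 4})),
         (frozenset({"s3"}), Counter({"T2": 5}))]
result = merge_partitions(parts, support=4)
print(sorted(sorted(p[0]) for p in result))  # [['s1', 's2'], ['s3']]
```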

There is a special case where the agent has observed that an option $o$ was available in some symbolic states $S_u$, but has yet to actually execute it from any $s_d \in S_u$. These states are not included in the Bayesian Hierarchical Clustering; instead, we have a special prior for the partition of $o$ that they belong to. After completing the merge step, the agent has a partitioning $P_o$ for each option $o$. Our prior is that with probability $q_o$,⁴ each $s_d \in S_u$ belongs to the partition $p \in P_o$ which contains the symbolic states most similar to $s_d$, and with probability $1 - q_o$ each $s_d$ belongs to its own partition. To determine the partition which is most similar to some symbolic state, we first find $F_o$, the smallest subset of factors which can still be used to correctly classify the symbolic states of $P_o$ into their partitions. We then map each $s_d \in S_u$ to the most similar partition by trying to match $s_d$ masked by $F_o$ with a masked symbolic state already in one of the partitions. If there is no match, $s_d$ is placed in its own partition.

⁴ This is a user-specified parameter.
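The special-case prior can be sketched for a single unexecuted symbolic state, assuming symbolic states are tuples of per-factor symbol indices, the mask $F_o$ is a list of factor indices, and partitions are sets of symbolic states. The helper names are hypothetical, and picking the first matching partition is a simplifying assumption for the case where several partitions could match.

```python
import random
from typing import Hashable, List, Optional, Sequence, Set, Tuple

SymState = Tuple[Hashable, ...]


def masked(s_d: SymState, mask: Sequence[int]) -> Tuple[Hashable, ...]:
    """Project a symbolic state onto the factors in the mask."""
    return tuple(s_d[f] for f in mask)


def assign_unexecuted(s_d: SymState, partitions: List[Set[SymState]], mask: Sequence[int],
                      q: float, rng: random.Random) -> Optional[int]:
    """Sample a partition index for an available-but-never-executed state s_d.
    Returns None when s_d should start its own singleton partition."""
    key = masked(s_d, mask)
    match = next((k for k, part in enumerate(partitions)            # first matching partition
                  if any(masked(t, mask) == key for t in part)), None)
    if match is not None and rng.random() < q:    # with probability q: join the matching partition
        return match
    return None                                   # no match, or with probability 1 - q: own partition


# Hypothetical symbolic states (table, arm); F_o = [0] (only the table factor matters).
partitions = [{(0, 1), (0, 2)}, {(1, 1)}]
print(assign_unexecuted((0, 3), partitions, mask=[0], q=1.0, rng=random.Random(0)))  # 0
print(assign_unexecuted((2, 0), partitions, mask=[0], q=1.0, rng=random.Random(0)))  # None
```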

Our final consideration is how to model the symbolic preconditions. The main concern is that many factors are often irrelevant for determining if some option can be executed. For example, whether or not you have keys in your pocket does not affect whether you can put on your shoe.

Modeling the Symbolic Preconditions Given an option $o$ and a subset of factors $F$, let $S_d^F$ be the symbolic state space projected onto $F$. We use independent Bayesian Beta-Bernoulli models for the symbolic precondition of $o$ in each masked symbolic state $s_d^F \in S_d^F$. For each option $o$, we use Bayesian model selection to find the subset of factors $F_o^*$ which maximizes the likelihood of the symbolic precondition models.
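The precondition model selection can be sketched with closed-form Beta-Bernoulli evidence, assuming a uniform Beta(1, 1) prior, exhaustive search over factor subsets (feasible only for a handful of factors), and ties broken in favour of smaller subsets. The names and the door/keys example, in which only the first factor matters, are illustrative; the marginal likelihood's built-in Occam penalty is what makes the irrelevant keys factor lose.

```python
from collections import defaultdict
from itertools import combinations
from math import lgamma
from typing import Hashable, List, Sequence, Tuple

Observation = Tuple[Tuple[Hashable, ...], bool]   # (symbolic state, was o executable?)


def log_beta(a: float, b: float) -> float:
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def log_evidence(data: List[Observation], factors: Sequence[int],
                 a: float = 1.0, b: float = 1.0) -> float:
    """Sum of Beta-Bernoulli marginal likelihoods, one model per masked symbolic state."""
    counts = defaultdict(lambda: [0, 0])
    for s_d, executable in data:
        key = tuple(s_d[f] for f in factors)
        counts[key][0 if executable else 1] += 1
    return sum(log_beta(a + k, b + m) - log_beta(a, b) for k, m in counts.values())


def select_factors(data: List[Observation], n_factors: int) -> Tuple[int, ...]:
    """Exhaustive Bayesian model selection over factor subsets, smallest first."""
    best, best_score = (), log_evidence(data, ())
    for size in range(1, n_factors + 1):
        for subset in combinations(range(n_factors), size):
            score = log_evidence(data, subset)
            if score > best_score:
                best, best_score = subset, score
    return best


# Two factors (at_door, has_keys); the option is executable exactly when at_door == 1.
data = [((door, keys), door == 1) for door in (0, 1) for keys in (0, 1) for _ in range(5)]
print(select_factors(data, n_factors=2))  # (0,)
```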

The final result is a distribution over symbolic option models $H$, which consists of the combined sets of independent symbolic precondition models and independent symbolic effects distribution models.

The complete procedure is given in Algorithm 1. A symbolic option model $M \sim H$ can be sampled by drawing parameters for each of the Bernoulli and categorical distributions from the corresponding Beta and sparse Dirichlet distributions, and drawing outcomes for each special-case partition prior $q_o$. It is also possible to consider distributions over other parts of the model, such as the symbolic state space and/or a more complicated one for the option partitionings, which we leave for future work.
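Sampling $M \sim H$ can be sketched with the standard library, assuming the posterior parameters are stored in dictionaries keyed by (option, masked state) or (option, partition), a hypothetical layout. For simplicity, the sketch draws each categorical from a plain Dirichlet over the outcomes listed for it rather than from a sparse Dirichlet, and it omits the partition-assignment draws for unexecuted states.

```python
import random
from typing import Dict, Hashable, List, Tuple

BetaParams = Dict[Hashable, Tuple[float, float]]          # (o, masked s_d) -> (a, b)
DirichletParams = Dict[Hashable, Dict[Hashable, float]]   # (o, partition) -> {outcome: alpha}


def sample_dirichlet(alphas: List[float], rng: random.Random) -> List[float]:
    """Dirichlet draw via normalized Gamma(alpha, 1) variates."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [d / total for d in draws]


def sample_model(pre_post: BetaParams, eff_post: DirichletParams, rng: random.Random):
    """Draw one symbolic option model M ~ H: a Bernoulli parameter per precondition
    entry and a categorical distribution per option partition."""
    pre = {k: rng.betavariate(a, b) for k, (a, b) in pre_post.items()}
    eff = {}
    for k, alphas in eff_post.items():
        outcomes = list(alphas)
        eff[k] = dict(zip(outcomes, sample_dirichlet([alphas[o] for o in outcomes], rng)))
    return pre, eff


rng = random.Random(0)
pre_post = {("move-clockwise", (3,)): (4.0, 1.0)}
eff_post = {("move-clockwise", 0): {(4,): 6.0, (5,): 1.0}}
pre, eff = sample_model(pre_post, eff_post, rng)
print(pre, eff)
```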

3.2 Optimal Exploration

In the previous section we have shown how to efficiently compute a distribution over symbolic option models $H$. Recall that the ultimate purpose of $H$ is to compute the success probabilities of plans (see Section 2.2). Thus, the quality of $H$ is determined by the accuracy of its predicted plan success probabilities, and efficiently learning $H$ corresponds to selecting the sequence of observations which maximizes the expected accuracy of $H$. However, it is difficult to calculate the expected accuracy of $H$ over all possible plans, so we define a proxy measure to optimize which is intended to represent the amount of uncertainty in $H$. In this section, we introduce our proxy measure, followed by an algorithm for finding the exploration policy which optimizes it. The algorithm operates in an online manner, building $H$ from the data collected so far, using $H$ to select an option to execute, updating $H$ with the new observation, and so on.

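First we define the standard deviation $\sigma_H$, the quantity we use to represent the amount of uncertainty in $H$. To define the standard deviation, we also need to define the distance and the mean.

We define the distance $K(M_1, M_2)$ from $M_1$ to $M_2$ to be the sum of the Kullback-Leibler (KL) divergences⁵ between their individual symbolic effect distributions plus the sum of the KL divergences between their individual symbolic precondition distributions:⁶

$$K(M_1, M_2) = \sum_{o} \Big[ \sum_{o_p} D_{\mathrm{KL}}\big(\mathrm{Eff}^{M_1}(o_p) \,\big\|\, \mathrm{Eff}^{M_2}(o_p)\big) + \sum_{s_d} D_{\mathrm{KL}}\big(\mathrm{Pre}^{M_1}(o, s_d) \,\big\|\, \mathrm{Pre}^{M_2}(o, s_d)\big) \Big],$$

where $o_p$ ranges over the partitions of option $o$, $s_d$ ranges over the masked symbolic states of $o$’s precondition model, and the superscript denotes the model.

⁵ The KL divergence has previously been used in other active exploration scenarios [17, 15].

⁶ Similarly to other active exploration papers, we define the distance to depend only on the transition models and not the reward models.

We define the mean, $E[H]$, to be the symbolic option model such that each Bernoulli symbolic precondition and categorical symbolic effects distribution is equal to the mean of the corresponding Beta or sparse Dirichlet distribution:

$$\mathrm{Pre}^{E[H]}(o, s_d) = \mathbb{E}_{M \sim H}\left[\mathrm{Pre}^{M}(o, s_d)\right], \qquad \mathrm{Eff}^{E[H]}(o_p) = \mathbb{E}_{M \sim H}\left[\mathrm{Eff}^{M}(o_p)\right].$$

The standard deviation is then simply $\sigma_H = \mathbb{E}_{M \sim H}\left[K(M, E[H])\right]$. This represents the expected amount of information which is lost if $E[H]$ is used to approximate $H$.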
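These definitions can be checked numerically with a small sketch, assuming a dictionary-based posterior layout (hypothetical) and estimating the outer expectation of $\sigma_H$ by Monte Carlo sampling; the text does not say how $\sigma_H$ is computed in practice. As the Beta and Dirichlet parameters grow, the sampled models concentrate around $E[H]$ and the estimate shrinks.

```python
import math
import random
from typing import Dict, Hashable, Tuple

Model = Tuple[Dict[Hashable, float], Dict[Hashable, Dict[Hashable, float]]]  # (Pre, Eff)


def kl_bernoulli(p: float, q: float) -> float:
    return sum(a * math.log(a / b) for a, b in ((p, q), (1 - p, 1 - q)) if a > 0)


def kl_categorical(p: Dict[Hashable, float], q: Dict[Hashable, float]) -> float:
    return sum(pk * math.log(pk / q[k]) for k, pk in p.items() if pk > 0)


def distance(m1: Model, m2: Model) -> float:
    """K(M1, M2): summed KL over effects distributions plus preconditions."""
    (pre1, eff1), (pre2, eff2) = m1, m2
    return (sum(kl_categorical(eff1[k], eff2[k]) for k in eff1)
            + sum(kl_bernoulli(pre1[k], pre2[k]) for k in pre1))


def mean_model(pre_post, eff_post) -> Model:
    """E[H]: posterior means of every Beta and Dirichlet."""
    pre = {k: a / (a + b) for k, (a, b) in pre_post.items()}
    eff = {k: {o: al / sum(alphas.values()) for o, al in alphas.items()}
           for k, alphas in eff_post.items()}
    return pre, eff


def std_dev(pre_post, eff_post, n: int = 2000, seed: int = 0) -> float:
    """Monte Carlo estimate of sigma_H = E_{M ~ H}[K(M, E[H])]."""
    rng = random.Random(seed)
    mean = mean_model(pre_post, eff_post)
    total = 0.0
    for _ in range(n):
        pre = {k: rng.betavariate(a, b) for k, (a, b) in pre_post.items()}
        eff = {}
        for k, alphas in eff_post.items():
            g = {o: rng.gammavariate(al, 1.0) for o, al in alphas.items()}
            z = sum(g.values())
            eff[k] = {o: v / z for o, v in g.items()}
        total += distance((pre, eff), mean)
    return total / n


few = std_dev({("o", 0): (2.0, 2.0)}, {("o", 0): {"A": 1.0, "B": 1.0}})
many = std_dev({("o", 0): (200.0, 200.0)}, {("o", 0): {"A": 100.0, "B": 100.0}})
print(few, many)  # the second estimate is much smaller
```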

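Now we define the optimal exploration policy for the agent, which aims to maximize the expected reduction in $\sigma_H$ after $H$ is updated with new observations. Let $H(w)$ be the posterior distribution over symbolic models when $H$ is updated with symbolic observations $w$ (the partitioning is not updated, only the symbolic effects distribution and symbolic precondition models), and let $W(H, i, \pi)$ be the distribution over symbolic observations drawn from the posterior of $H$ if the agent follows policy $\pi$ for $i$ steps. We define the optimal exploration policy as:

$$\pi^* = \arg\max_{\pi} \; \sigma_H - \mathbb{E}_{w \sim W(H, i, \pi)}\left[\sigma_{H(w)}\right].$$

For the convenience of our algorithm, we rewrite the second term by switching the order of the expectations:

$$\mathbb{E}_{w \sim W(H, i, \pi)}\left[\sigma_{H(w)}\right] = \mathbb{E}_{w \sim W(H, i, \pi)}\,\mathbb{E}_{M \sim H(w)}\left[K(M, E[H(w)])\right] = \mathbb{E}_{M \sim H}\,\mathbb{E}_{w \sim W(M, i, \pi)}\left[K(M, E[H(w)])\right],$$

where $W(M, i, \pi)$ is the distribution over symbolic observations if the agent follows $\pi$ for $i$ steps in an environment described by the model $M$.

Note that the objective function is non-Markovian because $H$ is continuously updated with the agent’s new observations, which changes $\sigma_H$. This means that $\pi^*$ is non-stationary, so Algorithm 2 approximates $\pi^*$ in an online manner using Monte-Carlo tree search (MCTS) [3] with the UCT tree policy [11]. $\pi_T$ is the combined tree and rollout policy for MCTS, given tree $T$.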
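For reference, the UCT selection rule [11] with the score normalization described in Appendix A (using the lowest and highest scores seen so far) can be sketched as below. The exploration constant, the node layout, and treating a rollout's score as a scalar such as a reduction in $\sigma_H$ are assumptions; this is not Algorithm 2 itself.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Hashable, List, Optional


@dataclass
class Node:
    visits: int = 0
    total: float = 0.0
    children: Dict[Hashable, "Node"] = field(default_factory=dict)


def uct_select(node: Node, c: float, lo: float, hi: float) -> Optional[Hashable]:
    """UCB1 over child options; mean scores are min-max normalized with the
    lowest (lo) and highest (hi) scores seen so far."""
    span = (hi - lo) or 1.0
    best, best_value = None, -math.inf
    for option, child in node.children.items():
        if child.visits == 0:
            return option                                   # expand untried options first
        mean = (child.total / child.visits - lo) / span
        value = mean + c * math.sqrt(math.log(node.visits) / child.visits)
        if value > best_value:
            best, best_value = option, value
    return best


def backpropagate(path: List[Node], score: float) -> None:
    """Add one visit and the rollout score to every node on the path."""
    for n in path:
        n.visits += 1
        n.total += score


root = Node(visits=20, children={"a": Node(10, 9.0), "b": Node(10, 1.0)})
print(uct_select(root, c=0.5, lo=0.0, hi=1.0))  # a
```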

There is a special case when the agent simulates the observation of a previously unobserved transition, which can occur under the sparse Dirichlet-categorical model. In this case, the amount of information gained is very large, and furthermore, the agent is likely to transition to a novel symbolic state. Rather than modeling the unexplored state space, instead, if an unobserved transition is encountered during an MCTS update, it immediately terminates with a large bonus to the score, a similar approach to that of the R-max algorithm [2]. The form of the bonus is -, where is the depth that the update terminated and is a constant. The bonus reflects the opportunity cost of not experiencing something novel as quickly as possible, and in practice it tends to dominate (as it should).

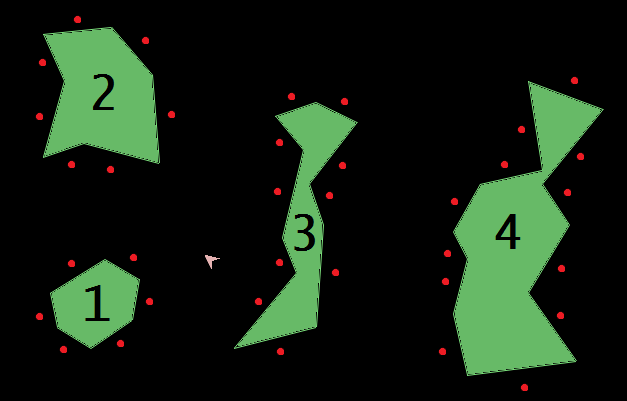

4 The Asteroids Domain

The Asteroids domain is shown in Figure 2(a) and was implemented using the physics simulator pybox2d. The agent controls a ship by either applying a thrust in the direction it is facing or applying a torque in either direction. The goal of the agent is to be able to navigate the environment without colliding with any of the four “asteroids.” The agent’s starting location is next to asteroid 1. The agent is given the following 6 options (see Appendix A for additional details):

1. move-counterclockwise and move-clockwise: the ship moves from the face it is currently adjacent to, to the midpoint of the adjacent face counterclockwise/clockwise on the same asteroid. Only available if the ship is at an asteroid.
2. move-to-asteroid-1, move-to-asteroid-2, move-to-asteroid-3, and move-to-asteroid-4: the ship moves to the midpoint of the closest face of asteroid 1-4 to which it has an unobstructed path. Only available if the ship is not already at the asteroid and an unobstructed path to some face exists.

Exploring with these options results in only one factor (for the entire state space), with symbols corresponding to each of the 35 asteroid faces as shown in Figure 2(a).

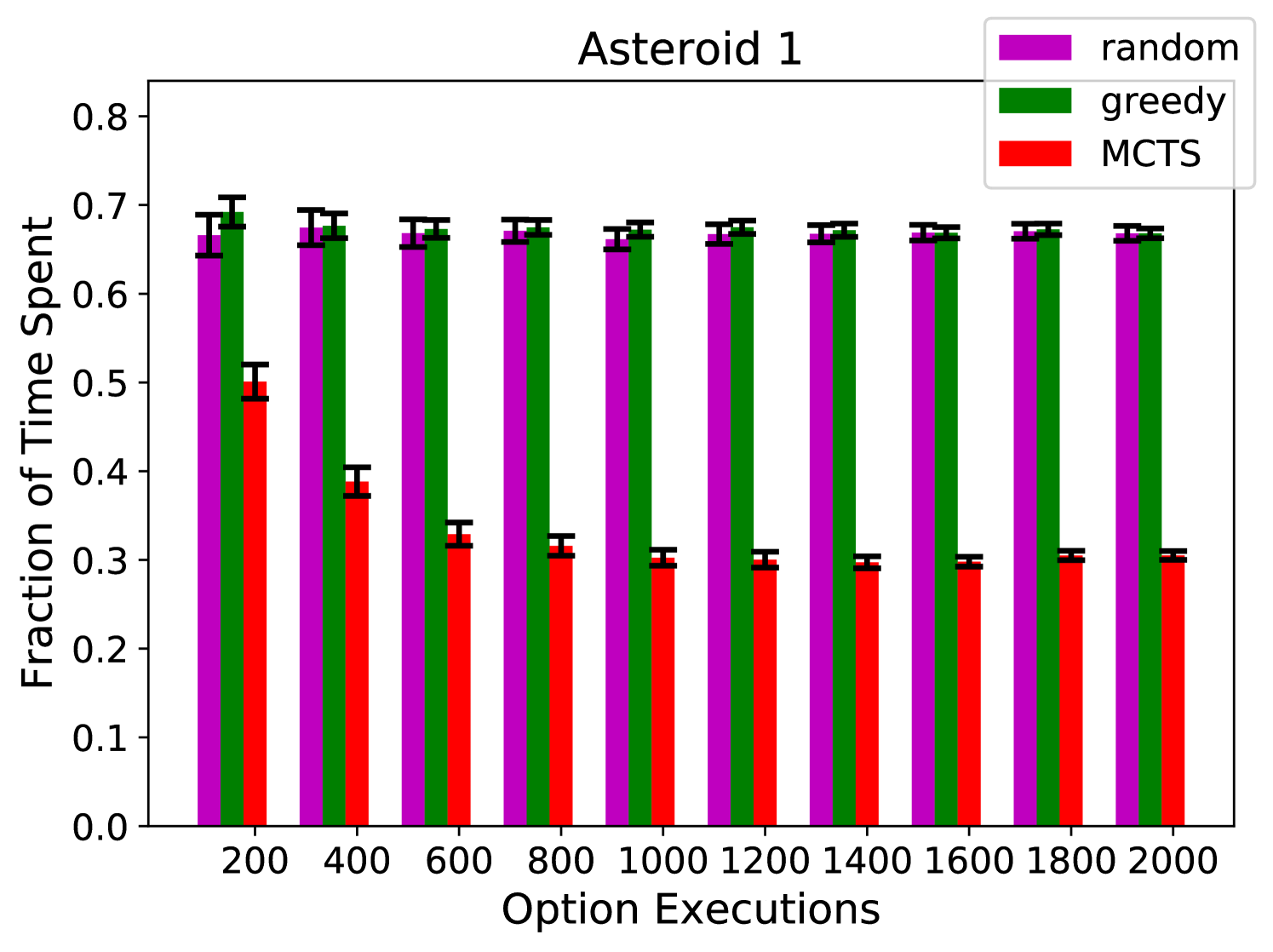

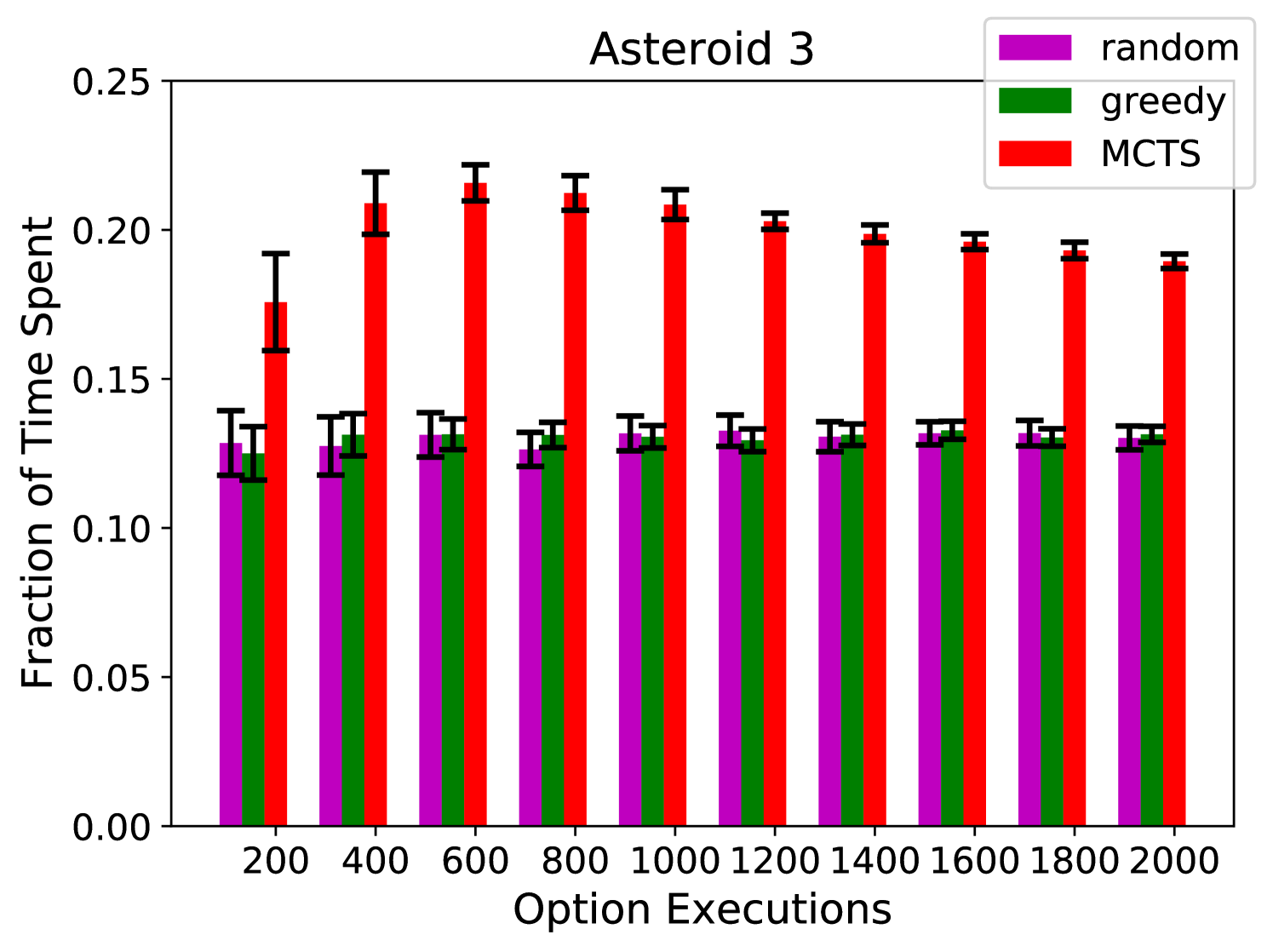

Results We tested the performance of three exploration algorithms: random, greedy, and our algorithm. For the greedy algorithm, the agent first computes the symbolic state space using steps 1-5 of Algorithm 1, and then chooses the option with the lowest execution count from its current symbolic state. The hyperparameter settings that we use for our algorithm are given in Appendix A.
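The greedy baseline's decision rule is simple enough to sketch directly. Counts are keyed by (symbolic state, option); the random tie-breaking rule and all names are assumptions, since the text only says that the least-executed option is chosen.

```python
import random
from collections import Counter
from typing import Hashable, Sequence


def greedy_option(symbolic_state: Hashable, available: Sequence[str],
                  counts: Counter, rng: random.Random) -> str:
    """Baseline: execute the available option tried least often from this symbolic state.
    Ties are broken uniformly at random (an assumption)."""
    fewest = min(counts[(symbolic_state, o)] for o in available)
    ties = [o for o in available if counts[(symbolic_state, o)] == fewest]
    return rng.choice(ties)


counts = Counter({((0,), "move-clockwise"): 3, ((0,), "move-counterclockwise"): 1})
options = ["move-clockwise", "move-counterclockwise", "move-to-asteroid-2"]
choice = greedy_option((0,), options, counts, random.Random(0))
counts[((0,), choice)] += 1  # record the execution
print(choice)  # move-to-asteroid-2
```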

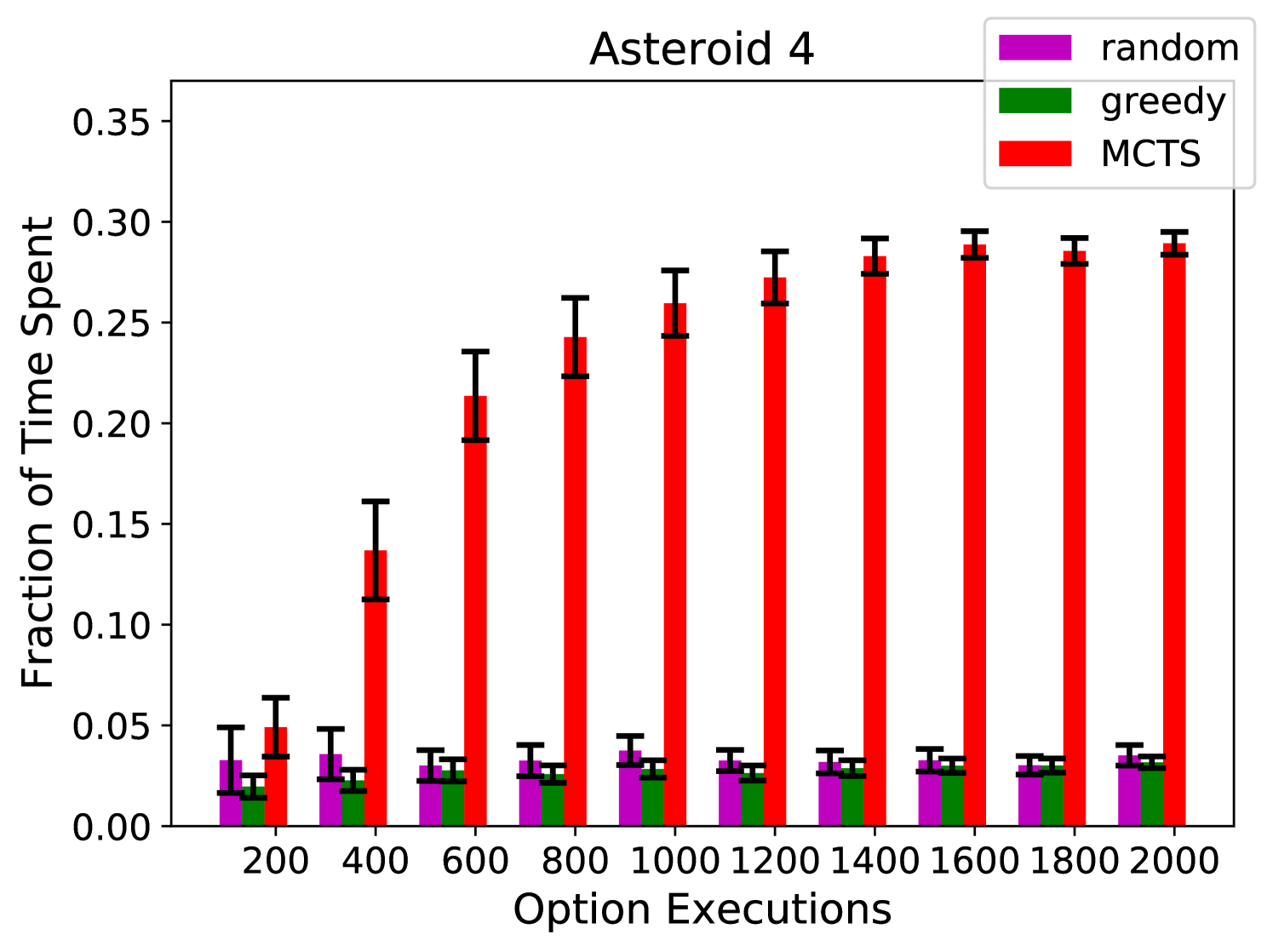

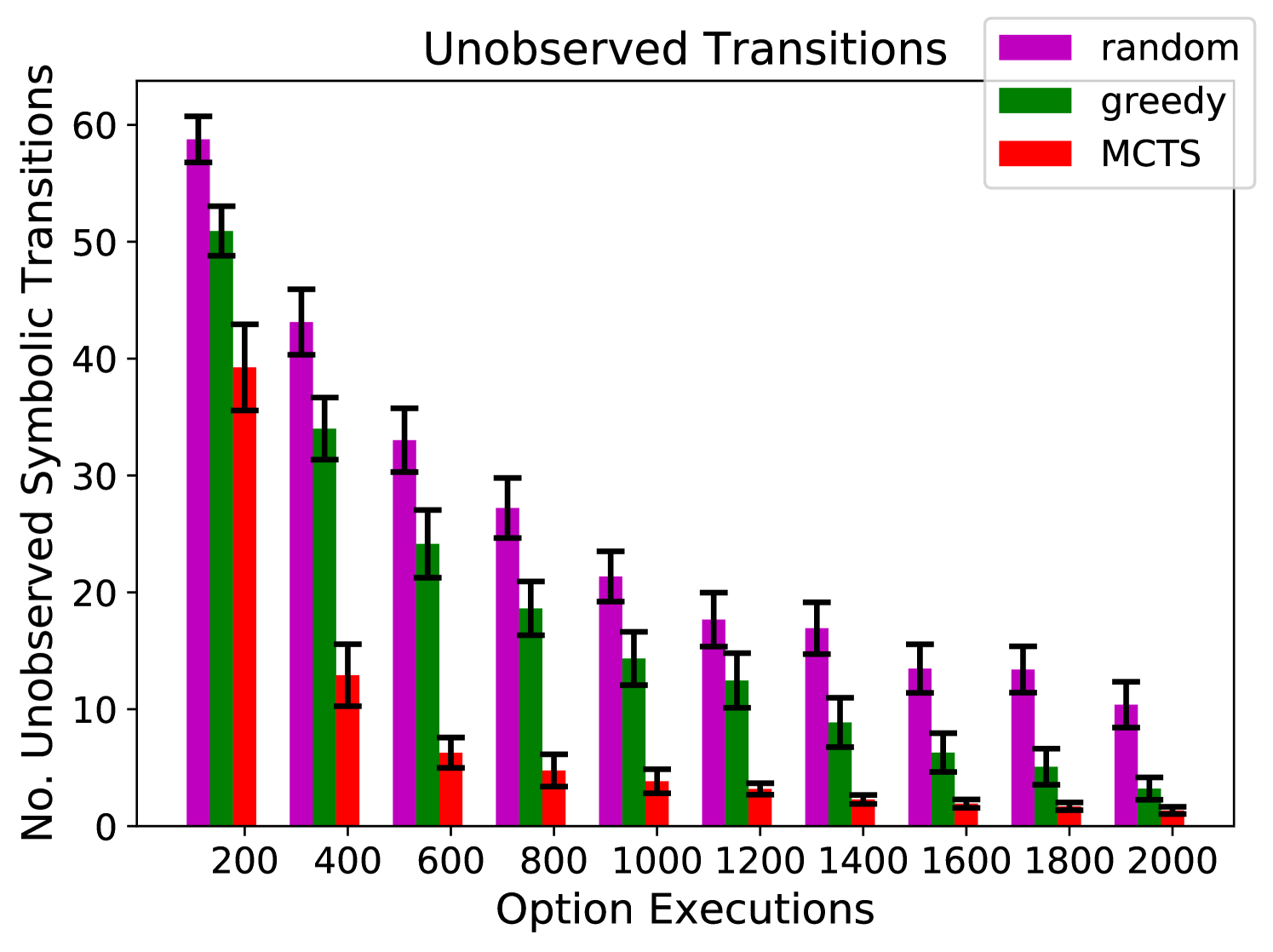

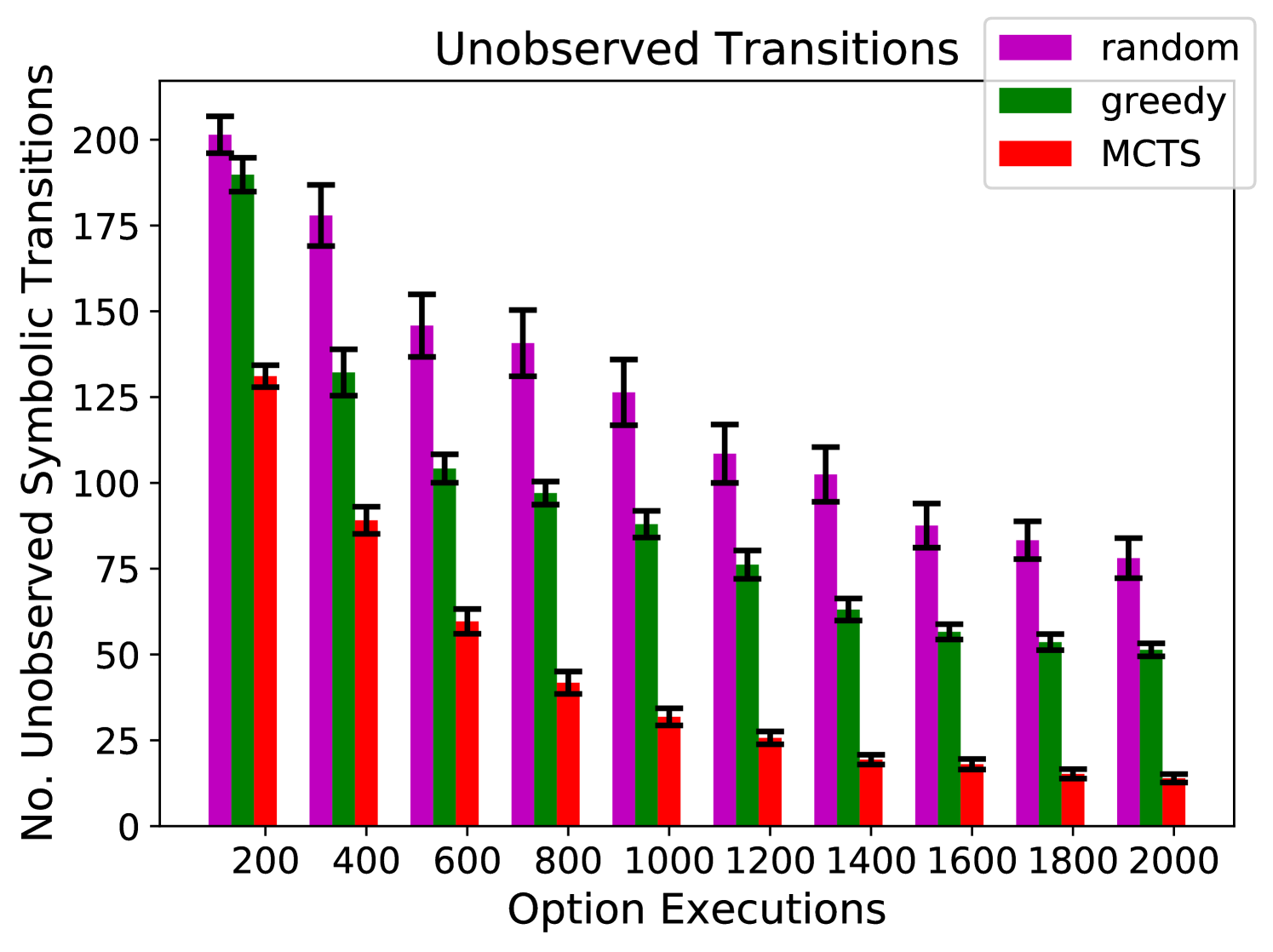

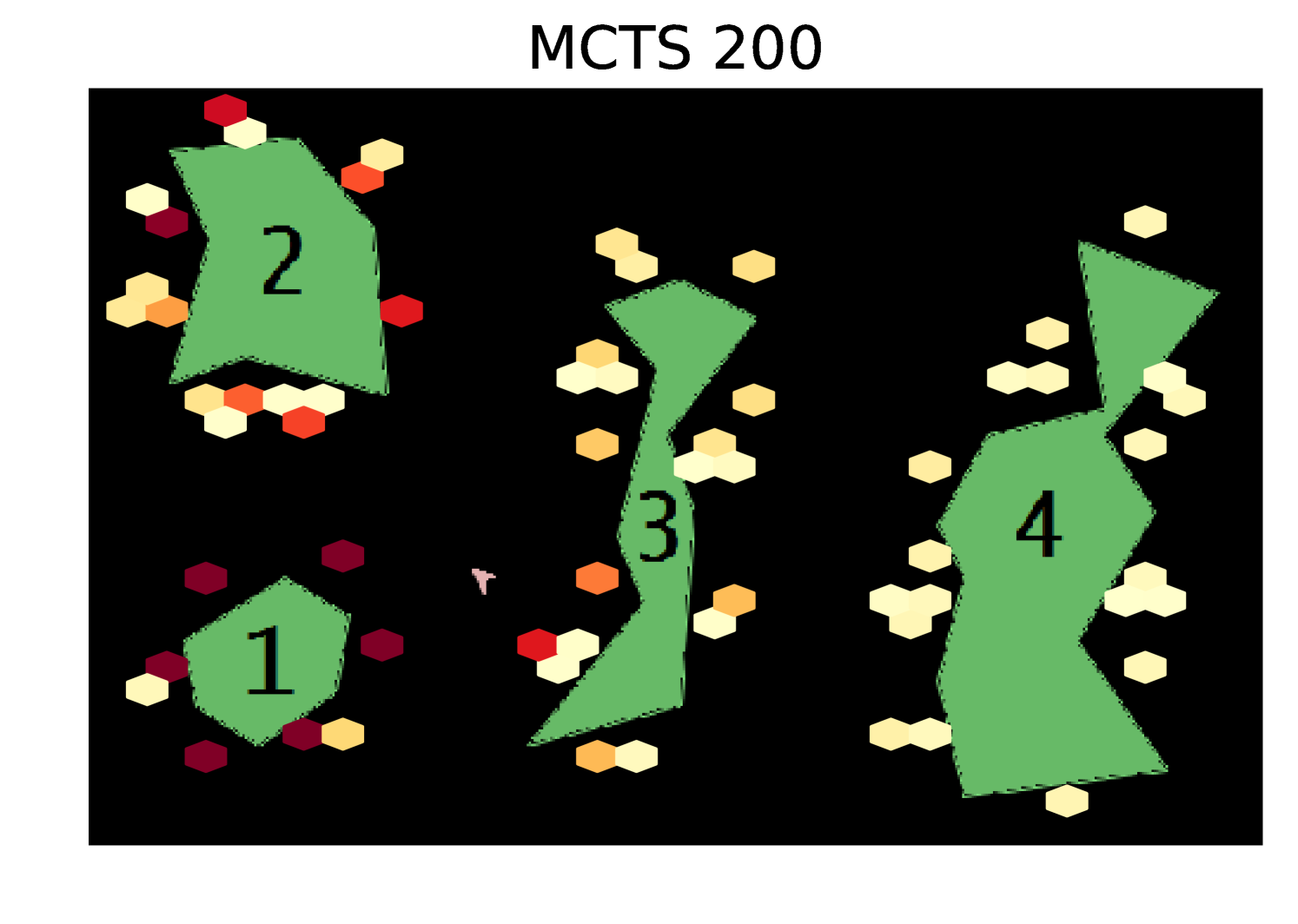

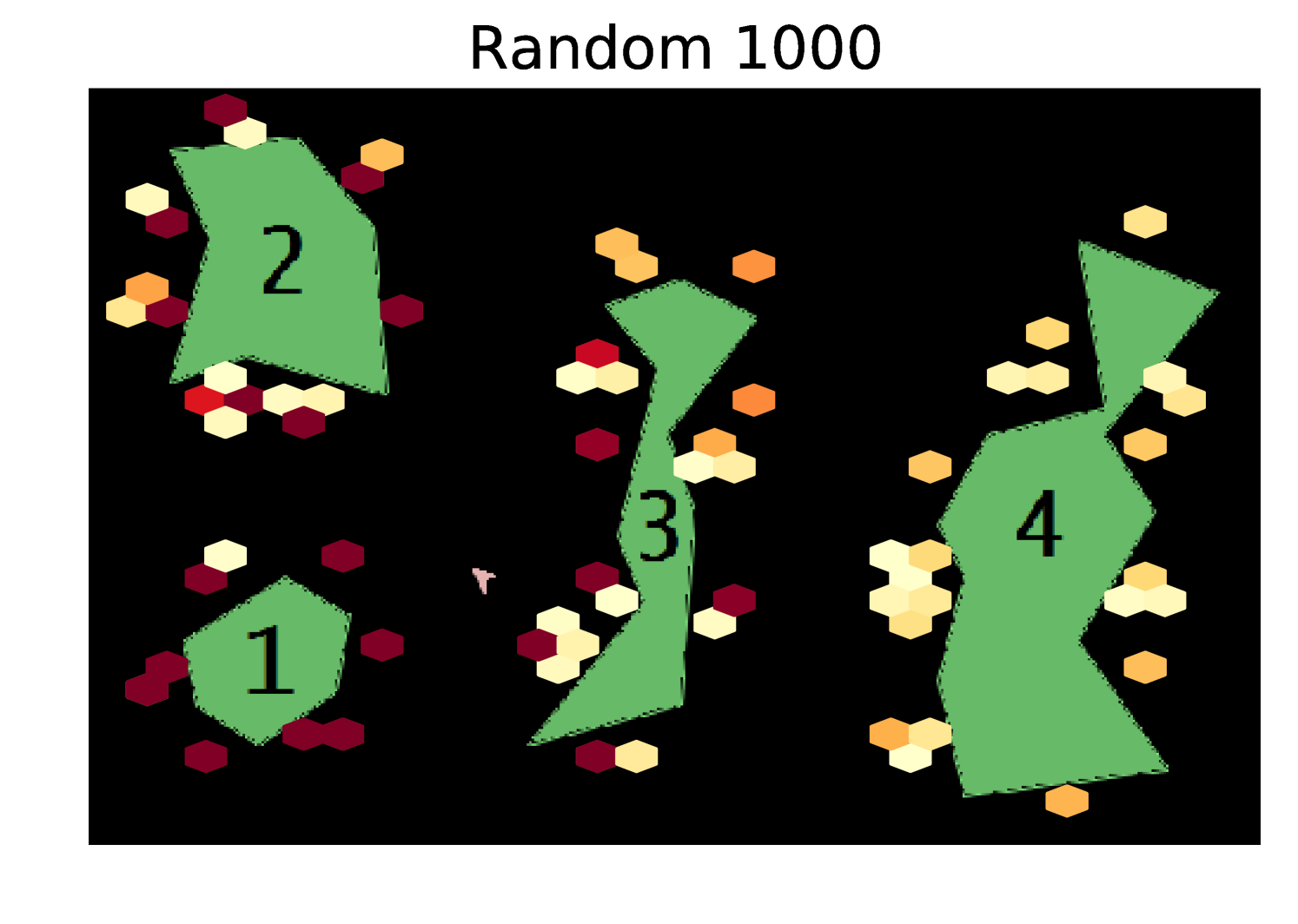

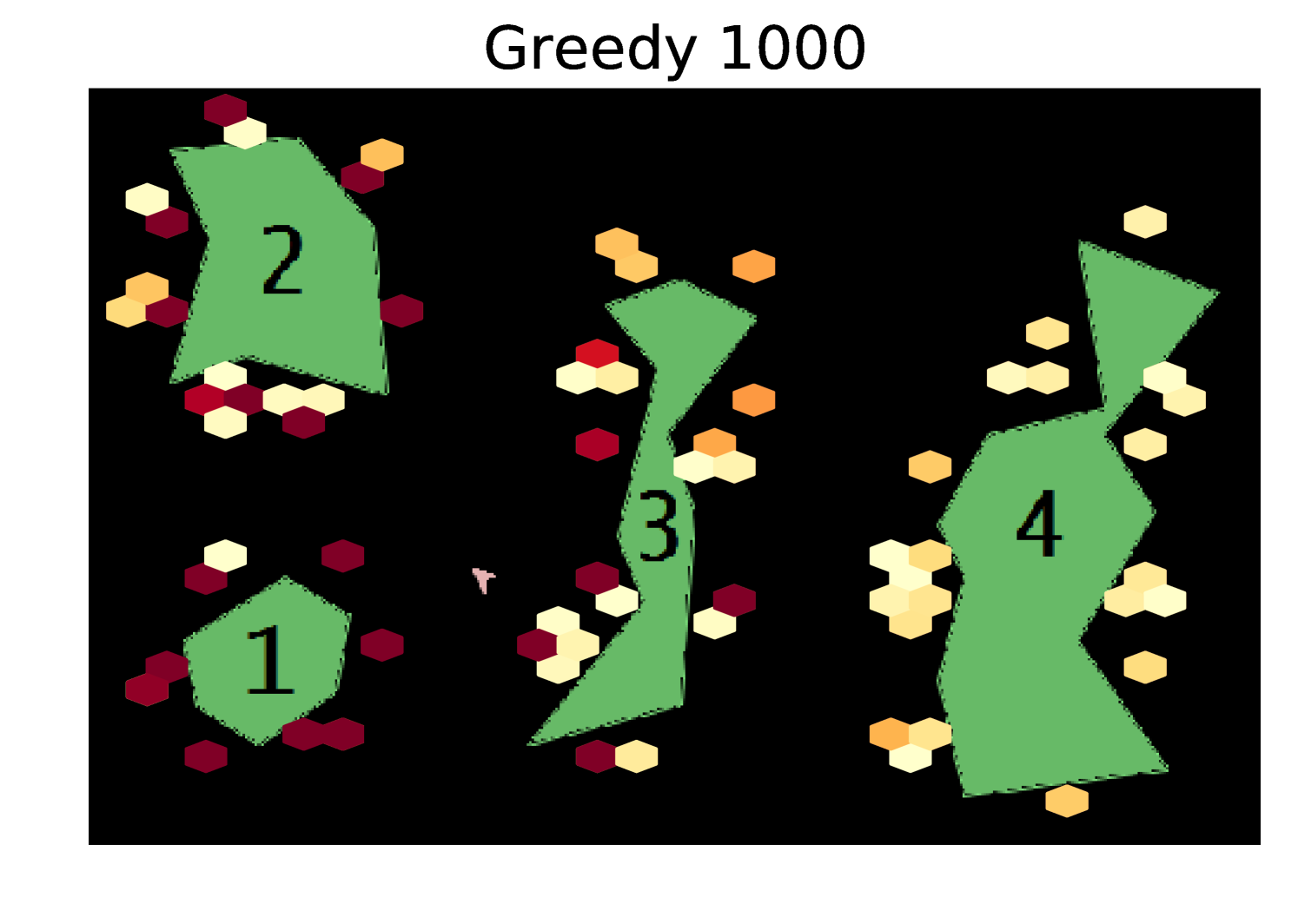

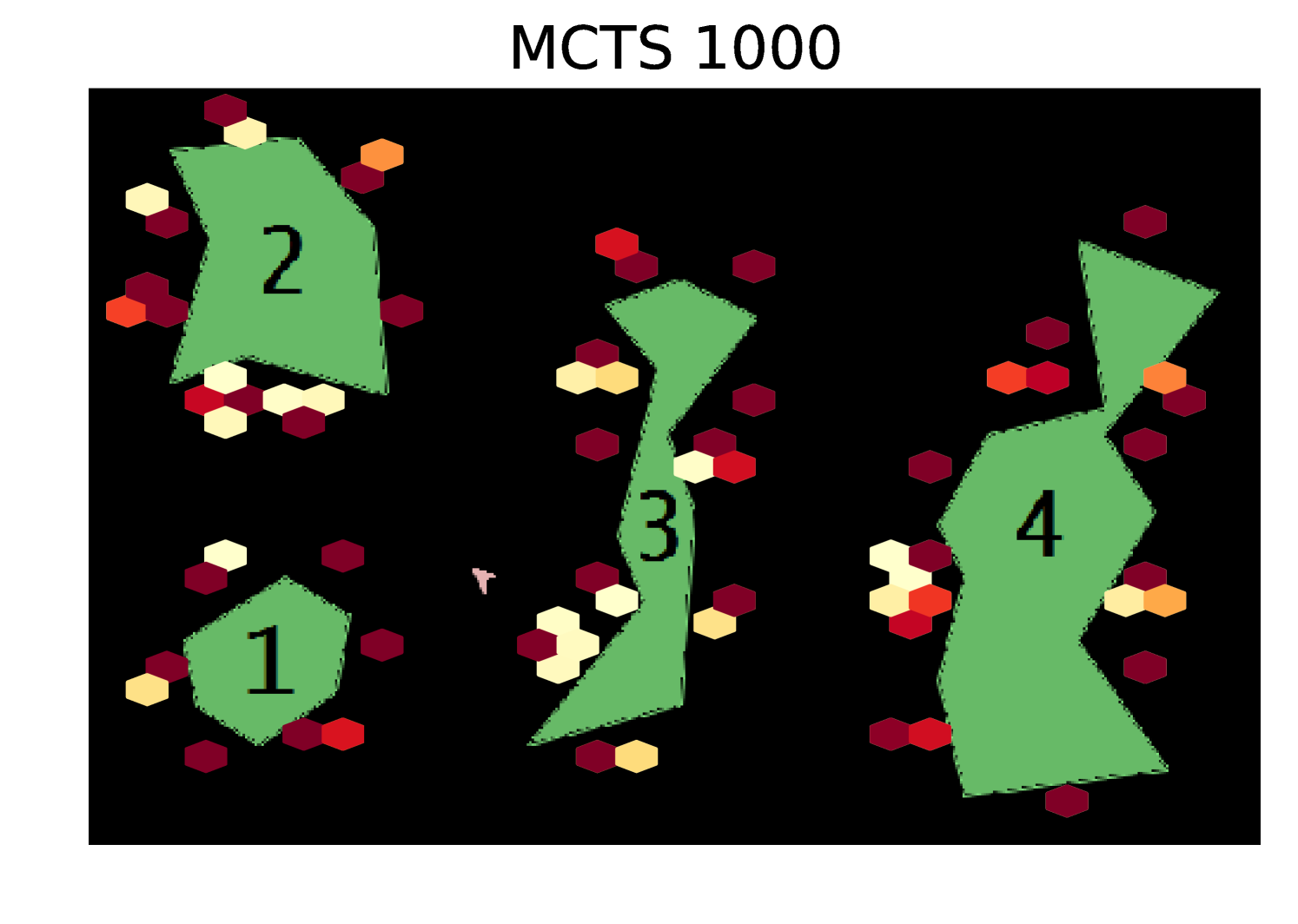

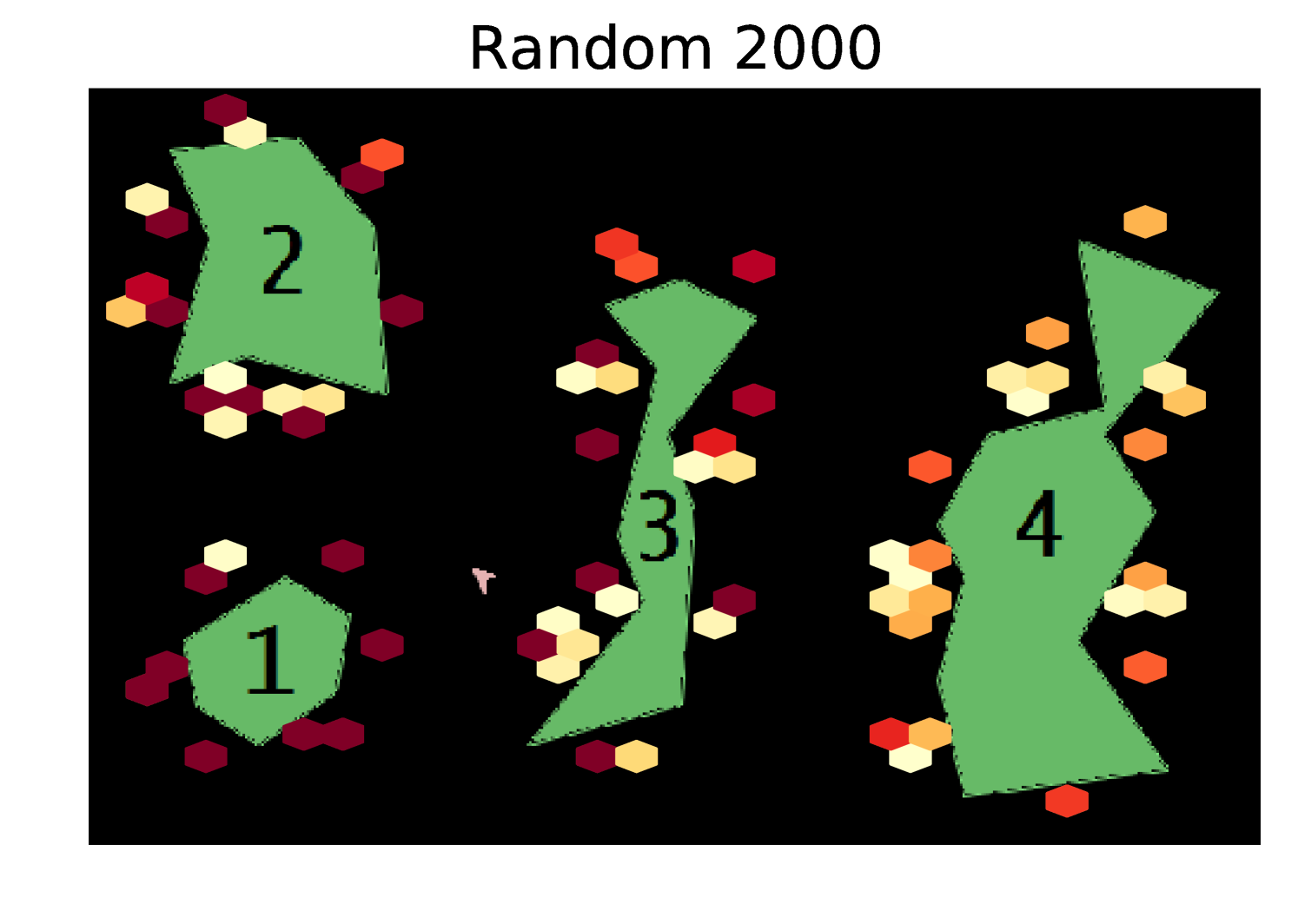

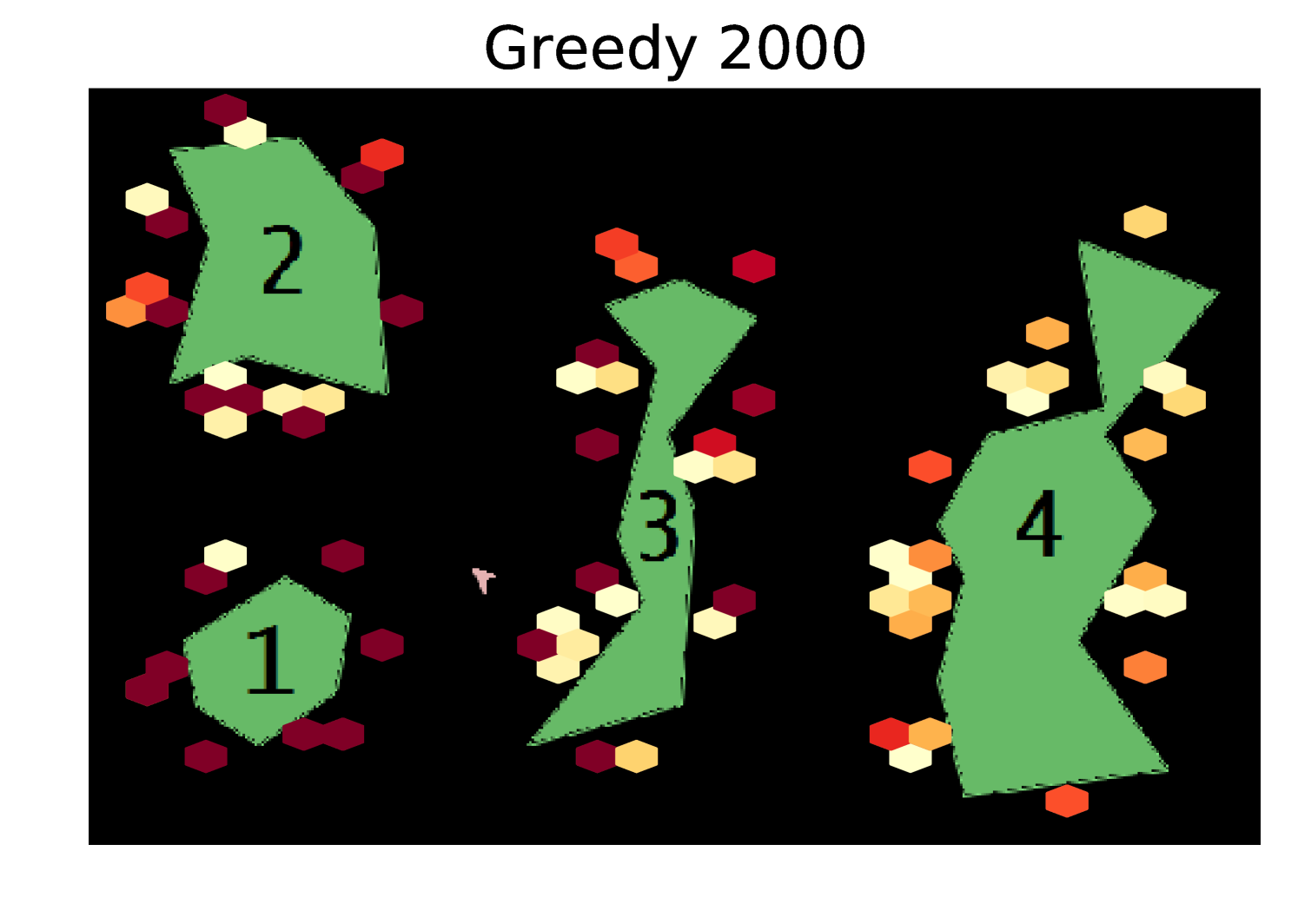

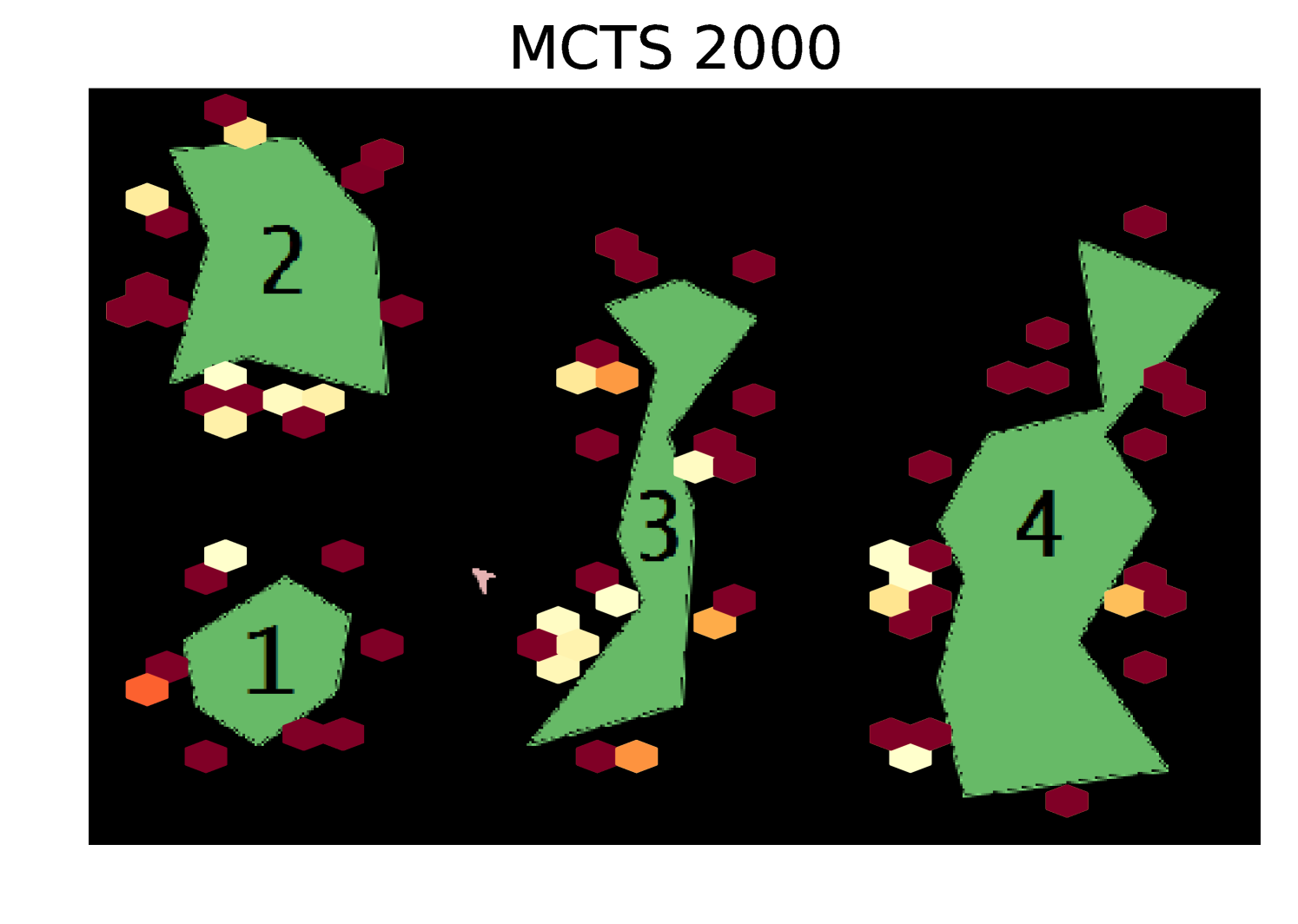

Figures 3(a), 3(b), and 3(c) show the percentage of time that the agent spends exploring asteroids 1, 3, and 4, respectively. The random and greedy policies have difficulty escaping asteroid 1, and are rarely able to reach asteroid 4. In contrast, our algorithm allocates its time much more evenly. Figure 3(d) shows the number of symbolic transitions that the agent has not observed (out of 115 possible).⁷ As we discussed in Section 3, the number of unobserved symbolic transitions is a good representation of the amount of information that the models are missing from the environment.

⁷ We used Algorithm 1 to build symbolic models from the data gathered by each exploration algorithm.

Our algorithm significantly outperforms random and greedy exploration. Note that these results use an uninformative prior, and the performance of our algorithm could be significantly improved by starting with more information about the environment. For additional intuition, Appendix A shows heatmaps of the coordinates visited by each of the exploration algorithms.

5 The Treasure Game Domain

The Treasure Game [13], shown in Figure 2(b), features an agent in a 2D, pixel-based, video-game-like world, whose goal is to obtain treasure and return to its starting position on a ladder at the top of the screen. The 9-dimensional state space is given by the $x$ and $y$ positions of the agent, key, and treasure, the angles of the two handles, and the state of the lock.

The agent is given 9 options: go-left, go-right, up-ladder, down-ladder, jump-left, jump-right, down-right, down-left, and interact. See Appendix A for a more detailed description of the options and the environment dynamics. Given these options, the 7 factors with their corresponding number of symbols are: -, 10; -, 9; -, 2; -, 2; - and -, 3; -, 2; and - and -, 2.

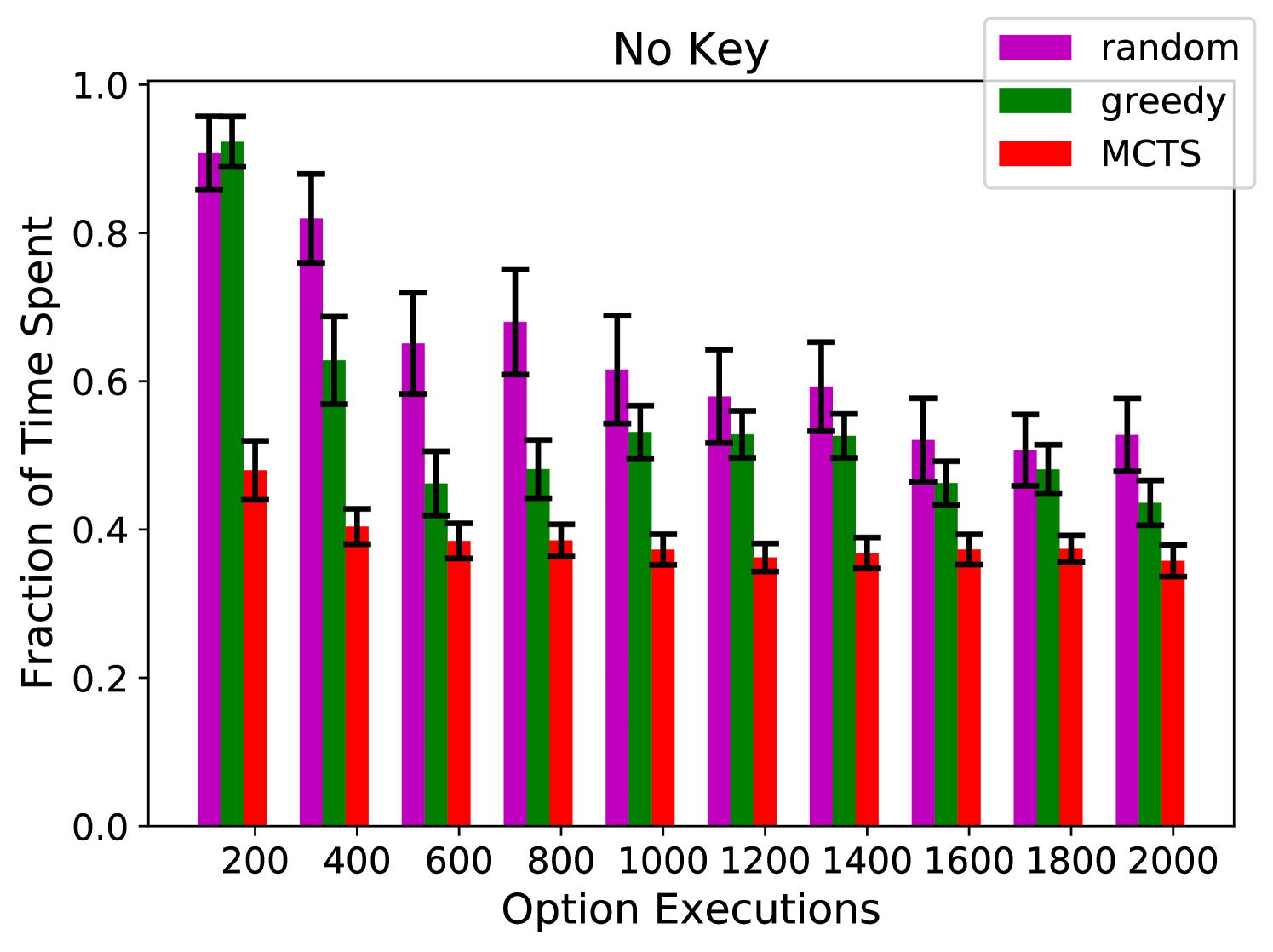

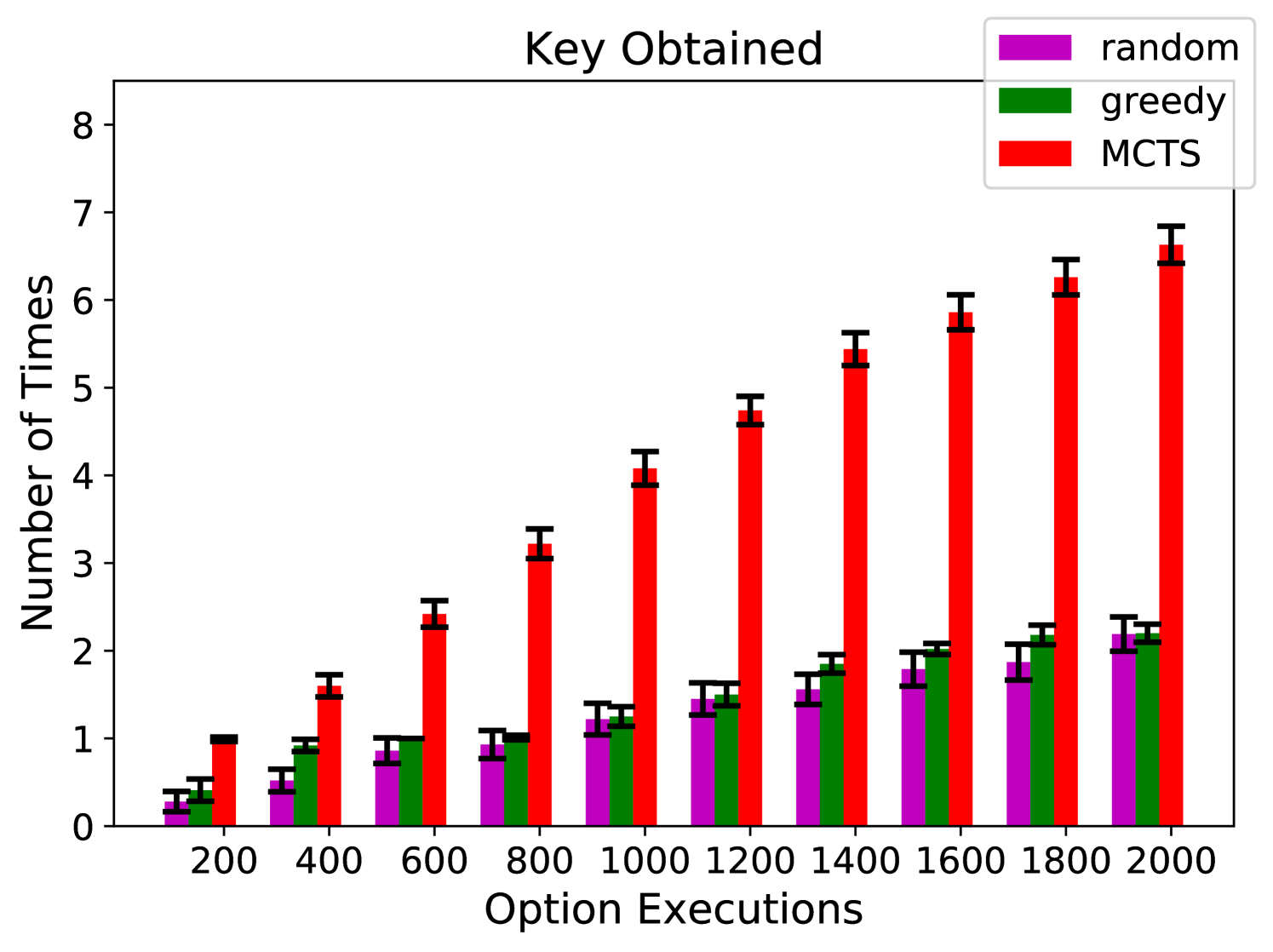

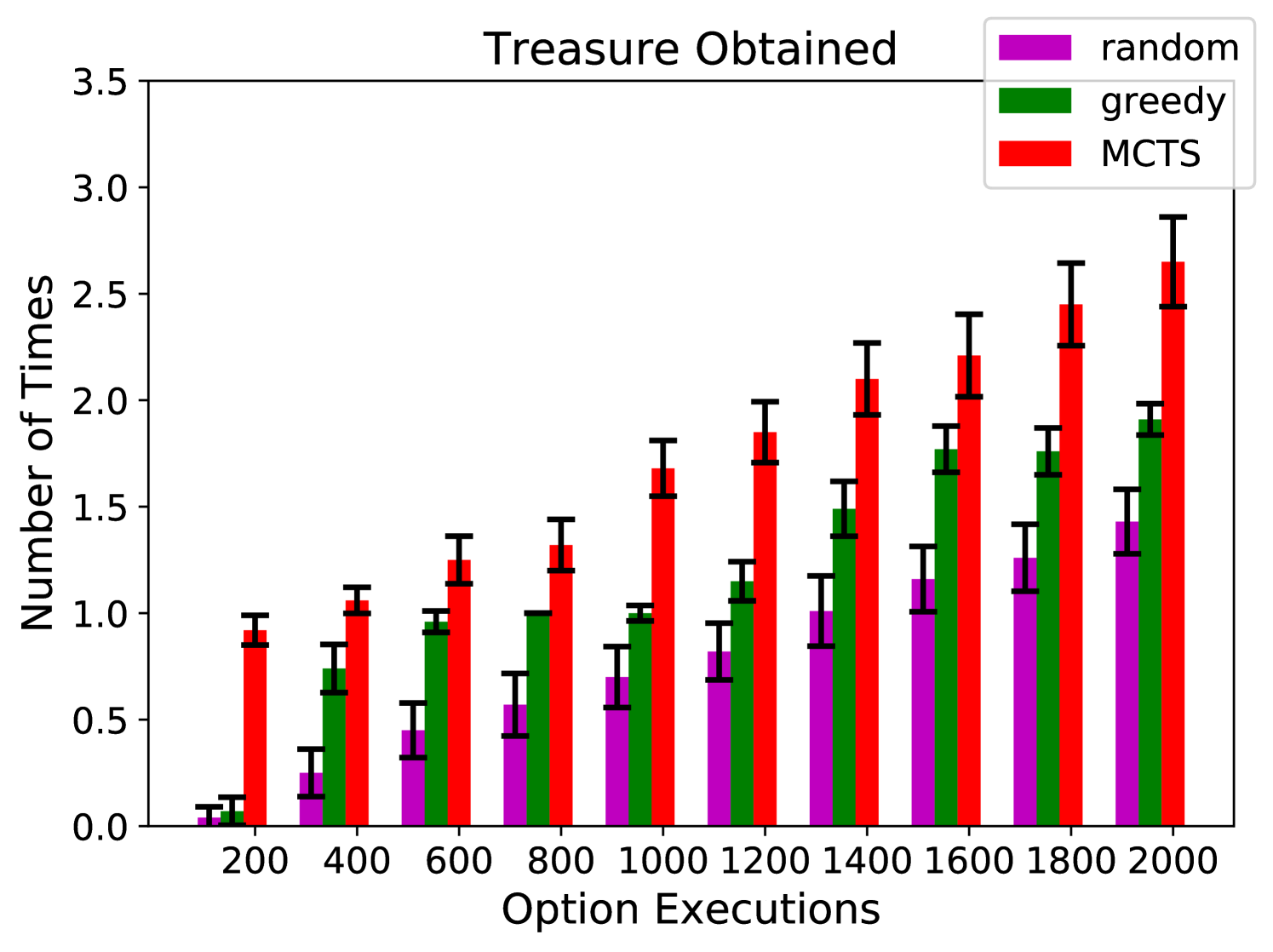

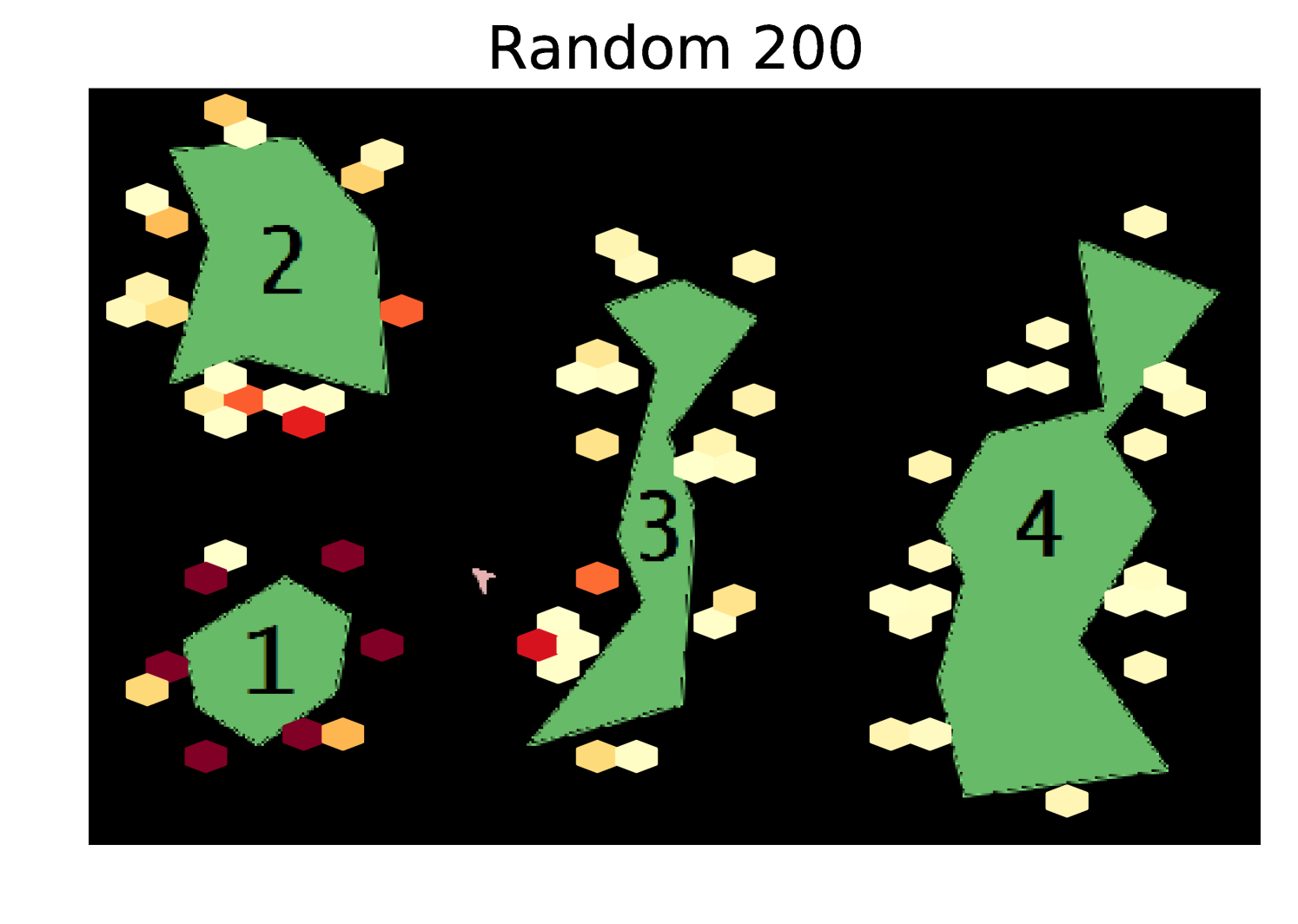

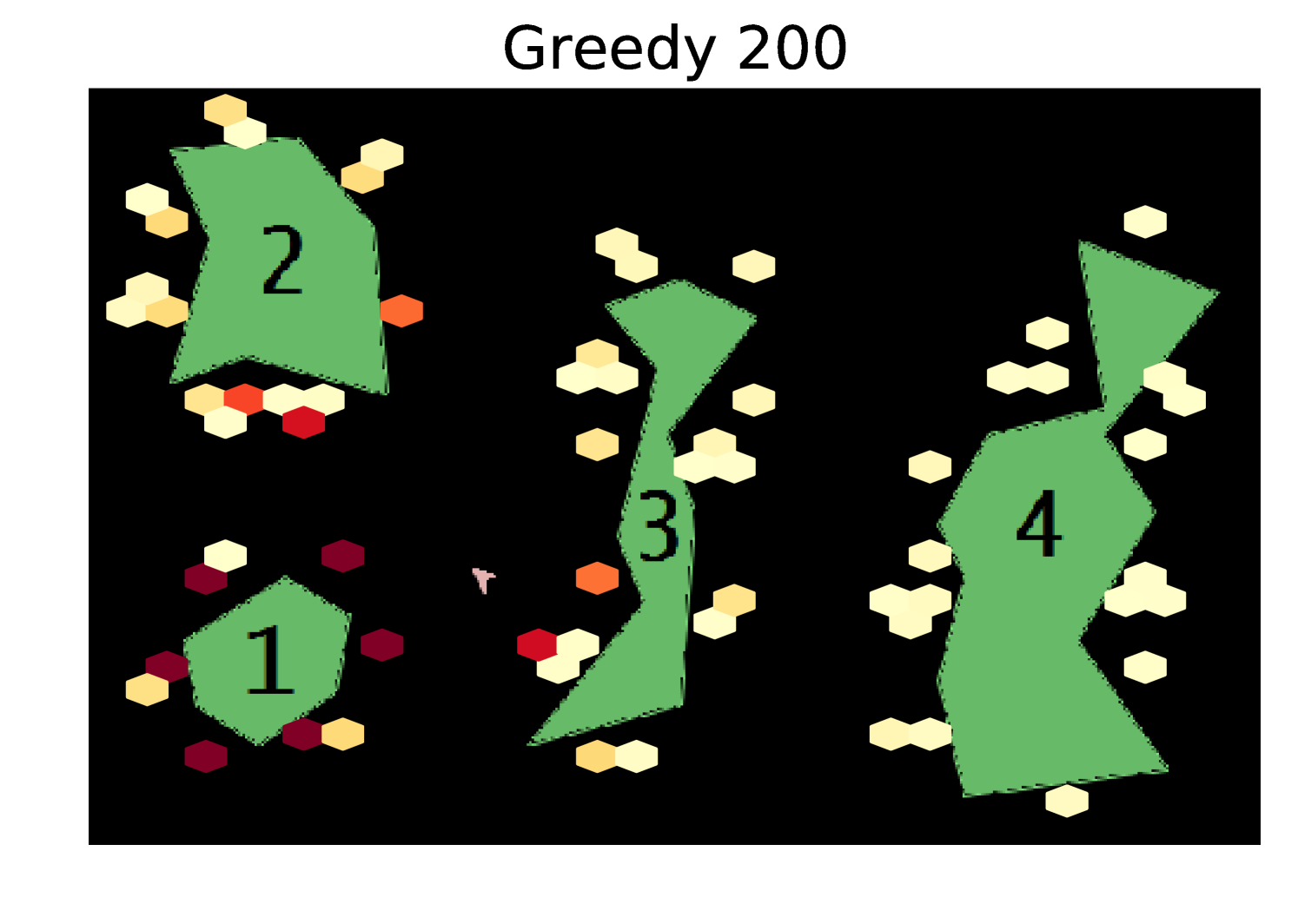

Results We tested the performance of the same three algorithms: random, greedy, and our algorithm. Figure 4(a) shows the fraction of time that the agent spends without having the key and with the lock still locked. Figures 4(b) and 4(c) show the number of times that the agent obtains the key and treasure, respectively. Figure 4(d) shows the number of unobserved symbolic transitions (out of 240 possible). Again, our algorithm performs significantly better than random and greedy exploration. The data from our algorithm has much better coverage, and thus leads to more accurate symbolic models. For instance, Figure 4(c) shows that random and greedy exploration did not obtain the treasure after 200 executions; without that data the agent would not know that it should have a symbol corresponding to possessing the treasure.

6 Conclusion

We have introduced a two-part algorithm for data-efficiently learning an abstract symbolic representation of an environment which is suitable for planning with high-level skills. The first part of the algorithm quickly generates an intermediate Bayesian symbolic model directly from data. The second part guides the agent’s exploration towards areas of the environment that the model is uncertain about. This algorithm is useful when the cost of data collection is high, as is the case in most real world artificial intelligence applications. Our results show that the algorithm is significantly more data efficient than using more naive exploration policies.

7 Acknowledgements

This research was supported in part by the National Institutes of Health under award number R01MH109177. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright notation thereon. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- [1] A.G. Barto and S. Mahadevan. Recent advances in hierarchical reinforcement learning. Discrete Event Dynamic Systems, 13(4):341–379, 2003.

- [2] Ronen I Brafman and Moshe Tennenholtz. R-max-a general polynomial time algorithm for near-optimal reinforcement learning. Journal of Machine Learning Research, 3(Oct):213–231, 2002.

- [3] C.B. Browne, E. Powley, D. Whitehouse, S.M. Lucas, P.I. Cowling, P. Rohlfshagen, S. Tavener, D. Perez, S. Samothrakis, and S. Colton. A survey of Monte-Carlo tree search methods. IEEE Transactions on Computational Intelligence and AI in Games, 4(1):1–43, 2012.

- [4] S. Cambon, R. Alami, and F. Gravot. A hybrid approach to intricate motion, manipulation and task planning. International Journal of Robotics Research, 28(1):104–126, 2009.

- [5] J. Choi and E. Amir. Combining planning and motion planning. In Proceedings of the IEEE International Conference on Robotics and Automation, pages 4374–4380, 2009.

- [6] Christian Dornhege, Marc Gissler, Matthias Teschner, and Bernhard Nebel. Integrating symbolic and geometric planning for mobile manipulation. In IEEE International Workshop on Safety, Security and Rescue Robotics, November 2009.

- [7] Martin Ester, Hans-Peter Kriegel, Jörg Sander, Xiaowei Xu, et al. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the 2nd International Conference on Knowledge Discovery and Data Mining, pages 226–231, 1996.

- [8] E. Gat. On three-layer architectures. In D. Kortenkamp, R.P. Bonnasso, and R. Murphy, editors, Artificial Intelligence and Mobile Robots. AAAI Press, 1998.

- [9] K.A. Heller and Z. Ghahramani. Bayesian hierarchical clustering. In Proceedings of the 22nd international conference on Machine learning, pages 297–304. ACM, 2005.

- [10] L. Kaelbling and T. Lozano-Pérez. Hierarchical planning in the Now. In Proceedings of the IEEE Conference on Robotics and Automation, 2011.

- [11] L. Kocsis and C. Szepesvári. Bandit based Monte-Carlo planning. In Machine Learning: ECML 2006, pages 282–293. Springer, 2006.

- [12] G.D. Konidaris, L.P. Kaelbling, and T. Lozano-Perez. Constructing symbolic representations for high-level planning. In Proceedings of the Twenty-Eighth Conference on Artificial Intelligence, pages 1932–1940, 2014.

- [13] G.D. Konidaris, L.P. Kaelbling, and T. Lozano-Perez. Symbol acquisition for probabilistic high-level planning. In Proceedings of the Twenty Fourth International Joint Conference on Artificial Intelligence, pages 3619–3627, 2015.

- [14] C. Malcolm and T. Smithers. Symbol grounding via a hybrid architecture in an autonomous assembly system. Robotics and Autonomous Systems, 6(1-2):123–144, 1990.

- [15] S.A. Mobin, J.A. Arnemann, and F. Sommer. Information-based learning by agents in unbounded state spaces. In Advances in Neural Information Processing Systems, pages 3023–3031, 2014.

- [16] N.J. Nilsson. Shakey the robot. Technical report, SRI International, April 1984.

- [17] L. Orseau, T. Lattimore, and M. Hutter. Universal knowledge-seeking agents for stochastic environments. In International Conference on Algorithmic Learning Theory, pages 158–172. Springer, 2013.

- [18] F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, et al. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12(Oct):2825–2830, 2011.

- [19] D. Precup. Temporal Abstraction in Reinforcement Learning. PhD thesis, Department of Computer Science, University of Massachusetts Amherst, 2000.

- [20] N. Friedman and Y. Singer. Efficient Bayesian parameter estimation in large discrete domains. In Advances in Neural Information Processing Systems 11: Proceedings of the 1998 Conference, volume 11, page 417. MIT Press, 1999.

- [21] R.S. Sutton, D. Precup, and S.P. Singh. Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning. Artificial Intelligence, 112(1-2):181–211, 1999.

- [22] J. Wolfe, B. Marthi, and S.J. Russell. Combined task and motion planning for mobile manipulation. In International Conference on Automated Planning and Scheduling, 2010.

Appendix A Environment Descriptions

A.1 Asteroids Domain Cont.

The agent’s 6 options are implemented using PD controllers for the torque and thrust. The options do not always work as intended; sometimes the ship will crash during the execution of an option, which resets the environment. If the ship tries to move from asteroid to asteroid , it crashes with probability . As designed, these options do not have the subgoal property because the outcome of executing each option depends on which face of which asteroid the option was executed from. However, they are partitioned subgoal options because their outcome depends only on which asteroid face they were executed from.

Results Cont. Figure 5 shows heatmaps of the coordinates visited by each exploration algorithm in the Asteroids domain. Our algorithm explores the state space much more uniformly than the random and greedy exploration algorithms.

A.2 Treasure Game Domain Cont.

The low-level actions available to the agent are move up, down, left, and right, jump, and interact. The 4 movement actions move the agent between 2 and 4 pixels uniformly at random in the appropriate direction. There are three doors which may block the path of the agent. The top two doors are always in opposite states (one open and one closed); flipping either of the two handles switches their status. The bottom door, which guards the treasure, can be opened by the agent obtaining the key and using it on the lock. The interact action is available when the agent is standing in front of a handle, or when it possesses the key and is standing in front of the lock. In the first case, executing the interact action flips the handle’s position with probability 0.8, and in the second case, the lock is unlocked and the agent loses the key. Whenever the agent has possession of the key and/or the treasure, they are displayed in the lower-right corner of the screen. Returning to the top ladder resets the environment. The agent’s 9 options are implemented using simple control loops:

1. go-right and go-left: the agent moves continuously right/left until it reaches a wall, edge, object it can interact with, or ladder. Only available when the agent’s way is not directly blocked.
2. up-ladder and down-ladder: the agent ascends/descends a ladder. Only available when the agent is directly below/above a ladder.
3. down-left and down-right: the agent falls off an edge onto the nearest solid cell on its left/right. Only available when they would succeed.
4. jump-left and jump-right: the agent jumps and moves left/right for about 48 pixels. Only available when the area above the agent’s head, and above its head and to the left/right, are clear.
5. interact: same as the low-level interact action.

These options, like the low-level actions they are composed of, all have at least a small amount of stochasticity in their outcomes. Additionally, when the agent executes one of the jump options to reach a faraway ledge, for instance when it is trying to get the key, it succeeds with probability 0.53, and misses the ledge and lands directly below with probability 0.47. These are abstract partitioned subgoal options.

A.3 Hyperparameter Settings

In each run the agent had access to the exact number of option executions it had to explore with. For MCTS, we used the UCT tree policy with , a random rollout policy, and performed updates. Also, during UCT option selection, we normalized a node’s score using the highest and lowest scores seen so far. For the sparse Dirichlet-Multinomial models, we used hyperparameter and the prior probability over the size of the support was given by a geometric distribution with parameter . For the state clustering (step 3 of Algorithm 1), we used the DBSCAN algorithm [7] implemented in scikit-learn [18] with parameters -, and for the Asteroids domain and for the Treasure Game domain. For Algorithm 2, we set . For all options , we set .