droneslab.github.io/NH

{yashturk, ykim35, kdantu}@buffalo.edu

Active Illumination Control in Low-Light Environments using NightHawk

Abstract

Subterranean environments such as culverts present significant challenges to robot vision due to dim lighting and a lack of distinctive features. Although onboard illumination can help, it introduces issues such as specular reflections, overexposure, and increased power consumption. We propose NightHawk¹, a framework that combines active illumination with exposure control to optimize image quality in these settings. NightHawk formulates an online Bayesian optimization problem to determine the best light intensity and exposure time for a given scene. We propose a novel feature detector-based metric to quantify image utility and use it as the objective function for the optimizer. We built NightHawk as an event-triggered recursive optimization pipeline and deployed it on a legged robot navigating a culvert beneath the Erie Canal. Results from field experiments demonstrate improvements in feature detection and matching of 47–197%, enabling more reliable visual estimation in challenging lighting conditions.

¹ This project was partially funded by NSF #1846320 and a gift from MOOG Inc.

1 Introduction

Environment illuminance plays a critical role in the performance of robot perception algorithms, many of which rely heavily on feature detection and matching. Illuminance directly influences image brightness and camera exposure, and ultimately image utility. This impact is especially pronounced where lighting conditions vary, such as indoor-outdoor transitions, shadowed areas, or environments with dynamic or low lighting. Standard vision sensors, including monocular, RGB-D, and stereo cameras, rely on autoexposure (AE) to adjust settings such as exposure time (shutter speed), gain (ISO), and aperture. While AE performs well in typical, well-lit scenarios, it struggles in extreme environments. The primary limitation of AE stems from its objective: keeping the mean pixel intensity around 50% so that the image is neither over- nor underexposed. This objective may not be optimal for robot vision tasks, whose goal is to reliably detect and match stable features. A neutrally exposed image does not necessarily guarantee this.

Several exposure control methods have been proposed [24], [6], [25], [8], [13], [10], [5]. For example, [11] controls camera exposure time, while [23] controls both exposure time and gain. Some of these methods, such as [21], [26], and NEWG [9], propose image utility metrics that quantify image quality from a feature detection and matching perspective. These metrics typically rely on image gradients, since most feature detectors exploit gradients for keypoint detection. Although these methods adjust camera parameters such as shutter speed and gain, they often fall short in challenging low-light or varying-light conditions. Insufficient scene radiance can necessitate excessively long exposures or high gains, leading to reduced frame rates and increased noise. Integrating an onboard light source offers a promising solution by augmenting scene illumination. However, naive control of onboard lighting can introduce undesirable artifacts, such as specular reflections [3] and overexposure, while also consuming significant robot power. Careful tuning and adjustment of light intensity are essential to mitigate these drawbacks.

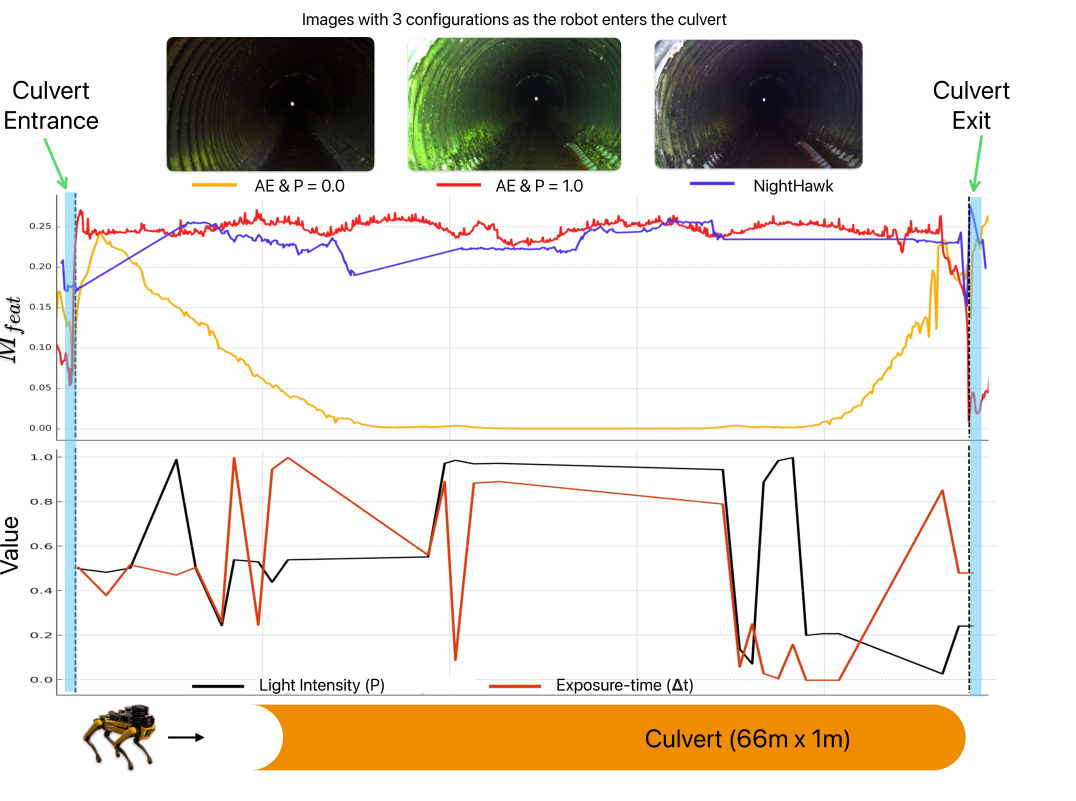

Our motivation stems from our efforts to inspect culverts beneath the Erie Canal in western New York. These culverts are long pipes with a 1-meter diameter that extend across the canal. As shown in Figure 1, they are dimly lit with extreme light variations at the entrances. They are characterized by repeating textures and features, posing a significant challenge for visual estimation.

We address these challenges with NightHawk, a novel framework that combines active illumination with exposure control. We also present a new image utility metric that leverages learning-based feature detectors to assess image quality and correlates strongly with feature matching performance in low-light settings. This metric serves as the objective function for our online Bayesian optimization process, enabling us to determine the optimal external light intensity and camera attribute values. Our key contributions are:

1. A novel image utility metric, based on a modern learning-based feature detector, that effectively quantifies feature detection and matching performance.
2. An active illumination and exposure control framework (NightHawk) that uses online Bayesian optimization to find the optimal external light intensity and exposure time.
3. Experimental validation in a challenging subterranean environment (a 66-meter-long, 1-meter-diameter culvert beneath the Erie Canal), demonstrating enhanced feature matching performance.

2 NightHawk Design

NightHawk is an external light and camera exposure-time control algorithm that uses event-triggered Bayesian optimization to provide optimal lighting and camera configurations. This section describes the image utility metric and the illumination control strategy.

2.1 Image Utility

Effective exposure control requires a reliable mechanism to quantify image quality. Conventional auto-exposure (AE) mechanisms rely on irradiance and aim to maintain a mean intensity of 50% (128 for 8-bit images). Prior approaches [21], [26], [9] control exposure using image gradient-based utility metrics. More recently, numerous learning-based feature detectors (e.g., SiLK [4], R2D2 [17]) have been trained in a self-supervised manner to estimate, for each pixel, the probability that it is "interesting". Further, detectors such as R2D2 explicitly output repeatability and reliability tensors aligned with the image dimensions, enabling a more nuanced assessment of feature quality. Inspired by this literature, we leverage such probabilities as direct feedback to assess image utility.
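The conventional brightness-targeting behavior contrasts with the feature-based approach. It can be sketched as a toy proportional AE step. This is an illustrative assumption and does not model any particular camera's AE. The function `ae_step`, its exposure limits, and the linear intensity-versus-exposure model are all hypothetical.

```python
import numpy as np


def ae_step(image: np.ndarray, exposure_ms: float, target: float = 128.0,
            min_ms: float = 0.05, max_ms: float = 30.0) -> float:
    """One update of a toy mean-intensity auto-exposure (AE) controller.

    Assumes pixel intensity grows roughly linearly with exposure time
    (saturation and sensor noise are ignored), so exposure is rescaled by
    target / mean and then clipped to a hypothetical allowed range.
    """
    mean = float(image.mean())
    if mean <= 0.0:
        return max_ms  # fully black frame: open up as far as allowed
    return float(np.clip(exposure_ms * target / mean, min_ms, max_ms))


# A dark 8-bit frame with mean intensity 32 at 10 ms asks for 40 ms,
# but the 30 ms ceiling caps it, so AE cannot reach its target in the dark.
dark = np.full((480, 640), 32, dtype=np.uint8)
print(ae_step(dark, exposure_ms=10.0))  # 30.0
```

The objective only concerns mean brightness. Once exposure hits its ceiling in a dark scene, the controller has no further recourse, and it never considers whether features are detectable.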

To quantify image utility, we use R2D2 as the base feature detection network. We compute the product of the mean repeatability and the square of the mean reliability. This yields a single scalar utility score $\mathcal{M}$ per image:

$$\mathcal{M} = \left(\frac{1}{N}\sum_{i=1}^{N} S_i\right)\left(\frac{1}{N}\sum_{i=1}^{N} R_i\right)^{2} \tag{1}$$

Here, $S_i$ is the repeatability at pixel $i$, $R_i$ is the reliability at pixel $i$, and $N$ is the total number of pixels. This metric describes the image’s utility for successful feature detection. Squaring the mean reliability amplifies its influence on the final score, reflecting the critical role of the descriptor in accurate matching. In Section 3, we compare $\mathcal{M}$ against other metrics and show that it correlates strongly with feature matching performance.
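The following is a minimal NumPy sketch of Eq. (1). It assumes the per-pixel repeatability and reliability maps have already been produced by the network as H×W arrays. The function name `image_utility` is hypothetical, and running R2D2 itself is out of scope here.

```python
import numpy as np


def image_utility(repeatability: np.ndarray, reliability: np.ndarray) -> float:
    """Mean repeatability times the squared mean reliability (Eq. (1)).

    Both arguments are per-pixel maps of identical H x W shape with values in
    [0, 1], e.g. the repeatability and reliability outputs of an R2D2-style
    network for one image.
    """
    if repeatability.shape != reliability.shape:
        raise ValueError("repeatability and reliability maps must share a shape")
    mean_rep = float(repeatability.mean())  # (1/N) * sum of per-pixel repeatability
    mean_rel = float(reliability.mean())    # (1/N) * sum of per-pixel reliability
    return mean_rep * mean_rel ** 2


rep = np.full((4, 6), 0.5)
rel = np.full((4, 6), 0.8)
print(round(image_utility(rep, rel), 4))  # 0.32
print(round(image_utility(rel, rep), 4))  # 0.2 (same two means, roles swapped)
```

Swapping the roles of the two means lowers the score from 0.32 to 0.2, which shows the extra weight the squared term places on reliability.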

2.2 Illumination and Exposure Control

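The illumination and exposure control problem is formulated as a multi-variable Bayesian optimization (BO) problem. The optimal light intensity $P$ and exposure time $t_e$ are determined by maximizing our image utility metric. A Gaussian process (GP) serves as the surrogate model. It represents the metric value $y$ as an unknown function of $P$ and $t_e$, observed with zero-mean Gaussian noise $\epsilon \sim \mathcal{N}(0, \sigma_n^2)$. For a query configuration $\mathbf{x}_*$, the mean and variance of the predictive distribution are given by:

$$\mu(\mathbf{x}_*) = \mathbf{k}_*^{\top}\left(\mathbf{K} + \sigma_n^{2}\mathbf{I}\right)^{-1}\mathbf{y} \tag{2}$$

$$\sigma^{2}(\mathbf{x}_*) = k(\mathbf{x}_*, \mathbf{x}_*) - \mathbf{k}_*^{\top}\left(\mathbf{K} + \sigma_n^{2}\mathbf{I}\right)^{-1}\mathbf{k}_* \tag{3}$$

Here, $\mathbf{x} = [P, t_e]$ denotes an input configuration, and $\mathbf{y}$ is the vector of metric values observed at the previously evaluated configurations. In this work, the Matérn kernel $k(\cdot,\cdot)$ [16] is used to construct the correlation matrix $\mathbf{K}$ (kernel values among the evaluated configurations) and the vector $\mathbf{k}_*$ (kernel values between $\mathbf{x}_*$ and the evaluated configurations). In addition, Expected Improvement (EI) [22] is used as the acquisition function to select the next evaluation point.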
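The BO loop can be sketched with scikit-learn's `GaussianProcessRegressor` and `Matern` kernel, with EI written out explicitly. This is a minimal illustration under stated assumptions and is not NightHawk's implementation. Several pieces are our own choices:

- the `WhiteKernel` noise term;
- the search bounds;
- maximizing EI over random candidates;
- the `evaluate` callback, which on the robot would apply the light and exposure settings, capture a frame, and return the metric.

`fake_metric` is a synthetic stand-in for that callback.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern, WhiteKernel


def expected_improvement(gp, candidates, best_y, xi=0.01):
    """Expected Improvement (for maximization) at each row of `candidates`."""
    mu, sigma = gp.predict(candidates, return_std=True)
    sigma = np.maximum(sigma, 1e-12)  # guard against zero predictive std
    improvement = mu - best_y - xi
    z = improvement / sigma
    return improvement * norm.cdf(z) + sigma * norm.pdf(z)


def bayes_opt(evaluate, bounds, n_init=5, n_iter=15, n_candidates=2000, seed=0):
    """Maximize evaluate(x) over a box with a GP surrogate (Matern kernel) and EI.

    bounds: (d, 2) array of [low, high] per input, e.g. light intensity in [0, 1]
    and exposure time in ms. Inputs are rescaled to [0, 1] before GP fitting.
    """
    rng = np.random.default_rng(seed)
    bounds = np.asarray(bounds, dtype=float)
    lo, hi = bounds[:, 0], bounds[:, 1]
    d = len(bounds)
    X = rng.uniform(lo, hi, size=(n_init, d))  # random initial configurations
    y = np.array([evaluate(x) for x in X])
    kernel = Matern(length_scale=np.ones(d), nu=2.5) + WhiteKernel(noise_level=1e-3)
    gp = GaussianProcessRegressor(kernel=kernel, normalize_y=True)
    for _ in range(n_iter):
        gp.fit((X - lo) / (hi - lo), y)
        cand = rng.uniform(lo, hi, size=(n_candidates, d))
        ei = expected_improvement(gp, (cand - lo) / (hi - lo), y.max())
        x_next = cand[np.argmax(ei)]  # most promising candidate under EI
        X = np.vstack([X, x_next])
        y = np.append(y, evaluate(x_next))
    best = int(np.argmax(y))
    return X[best], float(y[best])


# Hypothetical stand-in for "apply settings, capture a frame, compute the metric":
# a smooth bump peaking at 60% light intensity and 12 ms exposure.
def fake_metric(x):
    light, exposure_ms = x
    return -((light - 0.6) ** 2 + ((exposure_ms - 12.0) / 30.0) ** 2)


x_best, y_best = bayes_opt(fake_metric, bounds=[[0.0, 1.0], [0.1, 30.0]])
print(x_best, y_best)
```

The `WhiteKernel` term plays the role of the Gaussian observation noise $\sigma_n^2$ in the GP model. With `normalize_y=True`, `predict` returns the mean and standard deviation in the original metric units, so EI compares them directly against the best observed value.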

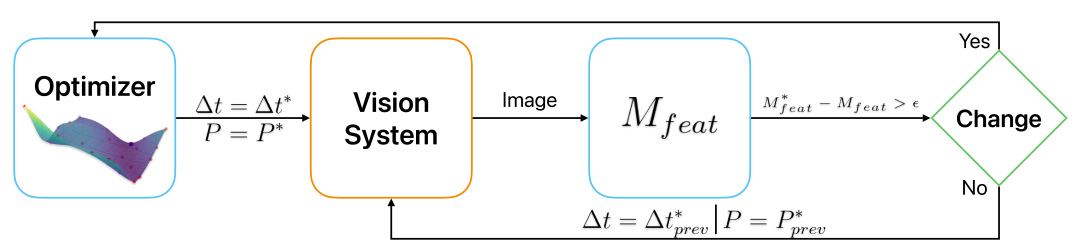

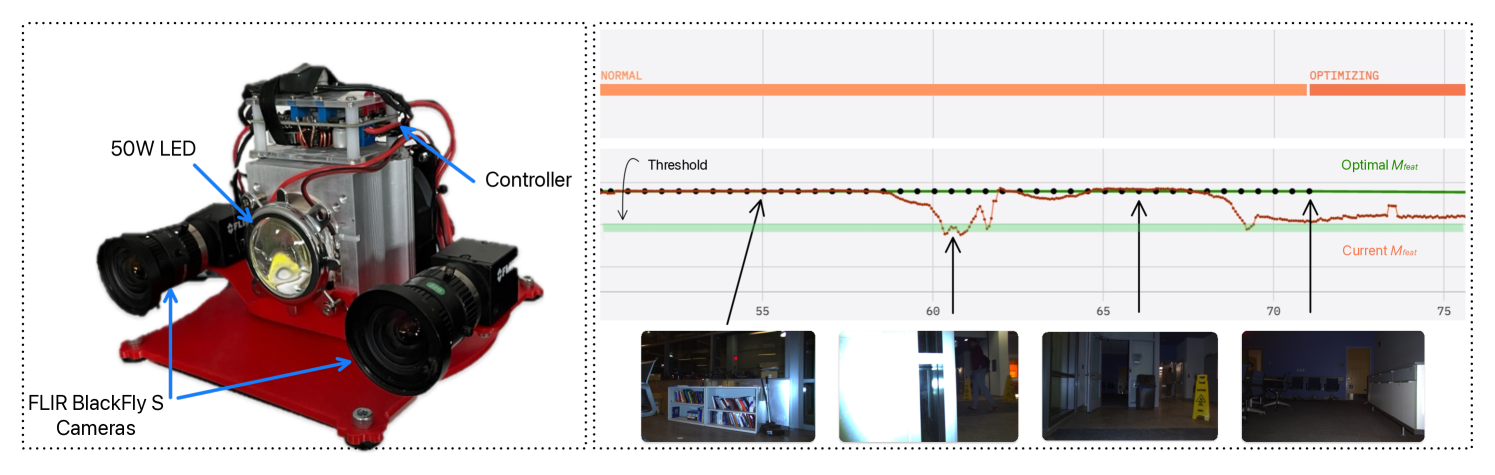

Figure 2 illustrates NightHawk’s overall architecture. The algorithm begins with BO to compute the optimal configuration (light intensity and exposure time), which yields the optimal metric value. After this configuration is applied, incoming images are passed to an image quality assessment module. This module computes the current metric value and compares it with the optimum. The user provides a threshold as the tolerance. As the robot moves, if the current metric value deviates from the optimum by more than this threshold, the system triggers another round of optimization.
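The event-triggered loop can be sketched as a small monitor. The class and function names are hypothetical. The trigger rule is also an assumption about how the threshold comparison works: the monitor fires when the live metric differs from the stored optimum by more than the user tolerance, in either direction. The numbers in the usage example are illustrative, not experimental data.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class ReoptimizationTrigger:
    """Decides when to re-run BO, given a user tolerance `delta`."""
    delta: float
    optimum: Optional[float] = None  # metric value reached by the most recent BO run

    def should_reoptimize(self, current: float) -> bool:
        if self.optimum is None:
            return True  # nothing optimized yet
        # Assumed rule: fire when the live metric drifts from the optimum by more than delta.
        return abs(self.optimum - current) > self.delta


def monitor(metric_stream: Iterable[float], trigger: ReoptimizationTrigger,
            optimize: Callable[[], float]) -> int:
    """Feed per-frame metric values; run `optimize` (which returns the new optimum)
    whenever the trigger fires. Returns the number of optimization runs."""
    runs = 0
    for value in metric_stream:
        if trigger.should_reoptimize(value):
            trigger.optimum = optimize()
            runs += 1
    return runs


# Made-up values: the first run settles at 0.30; a drop to 0.10 fires a second
# run that settles at 0.11, after which 0.12 stays within tolerance.
optima = iter([0.30, 0.11])
print(monitor([0.30, 0.31, 0.29, 0.10, 0.12], ReoptimizationTrigger(delta=0.05),
              lambda: next(optima)))  # 2
```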

3 Experiments and Results

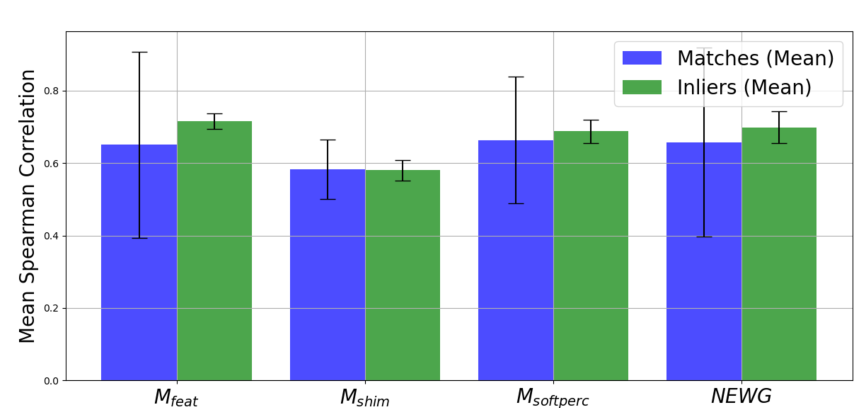

We benchmark our metric against established gradient-based utility measures. Figure 3 shows the correlation between each metric and feature matching performance between consecutive frames. The Spearman rank correlation is used to quantify this relationship.

Our metric demonstrates a strong positive correlation with feature matching performance across five diverse feature detectors (AKAZE [1], Shi-Tomasi [7], ORB [18], R2D2 [17], and SuperPoint [2]). One of the baseline metrics exhibits comparable correlation, but ours is more consistent, with lower variance. Further, its capacity to incorporate information from learning-based feature detectors distinguishes it from conventional metrics that rely solely on gradient-based features, enabling a more comprehensive evaluation of image utility. Finally, our metric offers a significant practical advantage when learning-based features are used for visual estimation. A single computation can serve both feature extraction and quality assessment (e.g., when using R2D2 features), which can help reduce compute overhead.
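The correlation analysis can be reproduced in outline with SciPy's `spearmanr`, summarizing consistency as the mean and variance of the per-detector correlations. This sketch rests on two assumptions. First, "feature matching performance" is taken to be the per-frame count of matches between consecutive frames. Second, the summary uses mean and variance. The helper names are hypothetical.

```python
import numpy as np
from scipy.stats import spearmanr


def metric_matching_correlation(metric_values, match_counts):
    """Spearman rank correlation between a per-frame utility metric and the
    number of features matched between consecutive frames."""
    rho, _p_value = spearmanr(metric_values, match_counts)
    return float(rho)


def correlation_summary(metric_values, matches_by_detector):
    """Mean and variance of the Spearman correlation across several detectors."""
    rhos = [metric_matching_correlation(metric_values, m)
            for m in matches_by_detector.values()]
    return float(np.mean(rhos)), float(np.var(rhos))


# Illustrative numbers only (not experimental data).
metric = [0.10, 0.20, 0.30, 0.40, 0.50]
matches = {"detector_a": [10, 40, 30, 80, 90],   # one rank swap -> rho = 0.9
           "detector_b": [1, 4, 9, 16, 25]}      # monotonic -> rho = 1.0
mean_rho, var_rho = correlation_summary(metric, matches)
print(round(mean_rho, 3), round(var_rho, 4))  # 0.95 0.0025
```

Because Spearman correlation works on ranks, any monotonic relationship scores 1.0 (as for `detector_b`), even when the relationship is nonlinear.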

We deploy NightHawk on a Boston Dynamics Spot robot equipped with a FLIR Blackfly S camera and a controllable 50 W LED. NightHawk is implemented using ROS 2 [12], PyTorch [14], and scikit-learn [15], and it runs online on an onboard NVIDIA Jetson Orin. For our image utility metric, we use a pre-trained R2D2 model provided by its authors. Depending on the chosen hyperparameters, an optimization run can take as little as 20 seconds. We conducted several teleoperated missions beneath the Erie Canal, capturing images under three camera configurations, all with fixed gain:

1. Auto-exposure, no external light (AE & P = 0.0): the camera relied solely on its built-in AE mechanism.
2. Auto-exposure, fixed external light (AE & P = 1.0): the camera used AE while the LED was held at a constant 100% intensity.
3. NightHawk optimization: our proposed method dynamically adjusted both exposure time and LED intensity to optimize image quality.

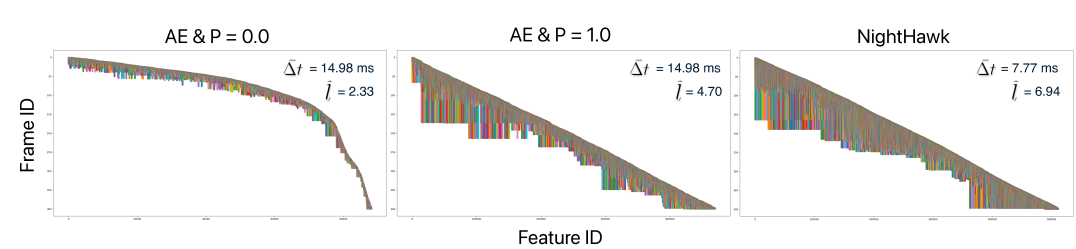

Figure 5 shows the image utility as the robot navigates through the culvert, while Figure 4 shows feature tracking performance. The highlighted regions indicate the time intervals during which the robot enters and exits the culvert. With the AE & P = 0.0 configuration, image utility drops sharply as the robot enters the culvert. AE initially adjusts to improve image quality, but utility eventually falls to near zero because of the environment’s darkness and AE’s limitations at maximum exposure settings. With the AE & P = 1.0 configuration, a similar initial drop in utility occurs as the robot transitions into the culvert. This drop is likely caused by reflections and exposure instability when the lighting suddenly changes. AE recovers after the drop, but the inconsistency has a lasting impact on feature detection and matching. With NightHawk, image utility is lower than with the fixed full-intensity light, but it avoids sharp drops. Image utility therefore remains more stable throughout the transition, supporting more consistent feature detection and matching. A similar trend is observed when the robot exits the culvert.

Feature tracking performance, shown in Figure 4, is suboptimal for the two baseline methods. In the AE & P = 0.0 setting, limited feature persistence results in short track lengths and poor image utility, despite a standard exposure time. Adding external light in the AE & P = 1.0 setting improves the average track length. However, it introduces visual artifacts, such as a greenish hue, due to reflections and uneven illumination. In contrast, NightHawk achieves the best performance, with a significantly higher average track length and a reduced exposure time. This enables longer and more consistent feature tracking while minimizing motion blur and avoiding overexposure.

Beyond enhancing feature performance, NightHawk also maintains well-balanced exposure times and light intensities by optimizing camera settings within a user-defined search space. Balanced exposure not only ensures consistent image quality but also reduces power consumption by avoiding unnecessary use of external lighting. Since camera exposure time directly limits the achievable frame rate, we constrain the maximum allowable exposure time in the optimizer based on the desired frame rate. This ensures that NightHawk produces solutions that meet the real-time performance requirements of our application while operating efficiently in terms of both computation and energy use.
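The frame-rate constraint reduces to simple arithmetic: exposure cannot exceed the frame period, 1/fps. A minimal sketch follows. The function name and the optional per-frame `overhead_ms` allowance are hypothetical, and sensor-specific readout timing is ignored.

```python
def max_exposure_ms(target_fps: float, overhead_ms: float = 0.0) -> float:
    """Longest exposure (ms) that still lets the camera deliver `target_fps`.

    `overhead_ms` is a hypothetical per-frame allowance for sensor readout or
    other fixed costs; real cameras have model-specific timing constraints.
    """
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    budget_ms = 1000.0 / target_fps - overhead_ms  # frame period minus overhead
    if budget_ms <= 0:
        raise ValueError("overhead leaves no time for exposure at this frame rate")
    return budget_ms


# At 20 fps each frame lasts 50 ms; at 25 fps with 2 ms of overhead the cap
# is 40 - 2 = 38 ms.
print(max_exposure_ms(20))        # 50.0
print(max_exposure_ms(25, 2.0))   # 38.0
```

This cap would then serve as the upper exposure bound of the optimizer's search box, e.g. `[[0.0, 1.0], [0.1, max_exposure_ms(20)]]` for (light intensity, exposure in ms), so BO can never propose a setting that breaks the frame-rate requirement.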

4 Experimental Insights

To evaluate the performance of NightHawk in real-world scenarios, we conducted field experiments in subterranean environments. These trials, illustrated in Figure 6, highlight the challenges posed by low-light, reflective, and texture-sparse conditions, and demonstrate how adaptive exposure and illumination significantly improve visual perception.

4.1 Inspection in Low Light Conditions

Optimizing Adaptive Lighting: A key challenge for robot perception in low-light and dynamic lighting environments is the ability to adapt. We observe that controlling only a camera's exposure settings, or mounting a light that stays on throughout the task, gives suboptimal feature detection and tracking results.

Controlling an adjustable light, identifying when the lighting conditions have changed, and adaptively reconfiguring the light and exposure jointly leads to much better feature detection and tracking (Figure 4), including a 47% improvement in feature tracking in our scenario. This also results in more robust robot perception, as indicated by the low variance in correlation in Figure 3.

Tuning to a given scenario: There are several trade-offs in incorporating NightHawk into an inspection system. The optimization takes time, as seen in the execution delays (several seconds per run) after the optimization is triggered in Figure 7. Additionally, the threshold must be tuned so that optimization is triggered neither so frequently that it disrupts task execution nor so infrequently that useful image capture suffers. In our runs, we tuned the threshold to respond to large changes. It fires once when the robot moves from outside into the culvert, and again when the robot is deep enough in the culvert that the lighting has completely changed. Note that NightHawk sets the light to 54% intensity when the robot enters the culvert, far less than the 100% setting in our baseline. This results in slightly lower scores (Figure 5) but good feature matching accuracy in our application.

Integrating other perception services: In theory, we could increase exposure without bound to improve image capture. However, most robot autonomy stacks include other services, such as localization and mapping, that assume continuity through motion and a certain frame rate for efficient execution [19], [20]. Further, motion causes blur, which long exposures exacerbate. We observe that adaptive lighting allows us to operate at a reasonable frame rate to serve such services while improving inspection in the culvert environment.

Energy Efficiency: A side benefit is reduced energy expenditure. Our setup in Figure 7 has a 50 W LED, and the total power output of the robot is 150 W. Keeping the light on at full intensity can therefore severely limit the robot's range and endurance. Figure 5 supports this point: the light intensity varies, rather than staying at full power, as the robot navigates the culvert.
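As a rough illustration of the savings, assume that the LED's electrical draw scales linearly with its commanded intensity. This is our assumption, not a measurement. At the 54% setting NightHawk selected inside the culvert, the LED would then draw

$$0.54 \times 50\,\mathrm{W} = 27\,\mathrm{W} = 18\% \text{ of the } 150\,\mathrm{W} \text{ total},$$

versus 50 W (about 33% of the total) at full intensity, leaving roughly 23 W more for the rest of the robot. Real LED drivers are often nonlinear, so actual savings may differ.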

4.2 Learning-based Metric

Our metric builds on ongoing research in learning-based feature detectors and uses R2D2. Its key advantage is that image utility computation and feature detection happen in a single pass. As demonstrated in Figure 3, it exhibits strong and reliable (low-variance) correlation with feature detection and matching. Beyond our application, we believe our metric could be broadly useful. Examples include applications that only need to adjust auto-exposure, or evaluating the image utility of a given scene for other purposes.
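The single-pass idea can be illustrated by selecting keypoints and computing the utility score from the same repeatability and reliability maps. This sketch assumes those maps are already available as arrays. The non-maximum suppression window and the 0.7 thresholds are illustrative assumptions, and the code does not call R2D2's own extraction code.

```python
import numpy as np
from scipy.ndimage import maximum_filter


def detect_and_score(repeatability, reliability, rep_thr=0.7, rel_thr=0.7, nms_size=3):
    """Return (keypoints as (x, y) rows, utility score) from one pair of maps.

    Keypoints: local maxima of the repeatability map that also pass both
    thresholds (loosely in the spirit of R2D2-style selection; values here are
    illustrative). Score: mean repeatability * (mean reliability) ** 2.
    """
    is_peak = repeatability == maximum_filter(repeatability, size=nms_size)
    keep = is_peak & (repeatability >= rep_thr) & (reliability >= rel_thr)
    ys, xs = np.nonzero(keep)
    keypoints = np.stack([xs, ys], axis=1)
    score = float(repeatability.mean()) * float(reliability.mean()) ** 2
    return keypoints, score


rep = np.zeros((5, 5))
rep[2, 3] = 0.9                  # a single confident peak at x=3, y=2
rel = np.full((5, 5), 0.8)
kps, score = detect_and_score(rep, rel)
print(kps.tolist(), round(score, 5))  # [[3, 2]] 0.02304
```

Both outputs are derived from the same two maps. Once the network has run, scoring the image adds only two means and a multiplication.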

4.3 System Optimizations

Onboard Optimization: Optimization must execute efficiently on resource-constrained robot hardware. We employ multi-threading and early stopping to accelerate convergence, which reduced optimization latency from 70 seconds to 20 seconds.
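The early-stopping criterion used onboard is not detailed here. One common choice, sketched below as an assumption, is a patience rule that stops once the best metric value has stopped improving. The names `should_stop`, `patience`, and `min_gain` are hypothetical. Inside a BO loop, the rule would be checked after each new evaluation.

```python
from itertools import accumulate
from typing import Sequence


def should_stop(values: Sequence[float], patience: int = 4, min_gain: float = 1e-3) -> bool:
    """Patience-based early stopping for a maximization loop.

    Stops once the best metric value seen so far has improved by no more than
    `min_gain` over the last `patience` evaluations.
    """
    best = list(accumulate(values, max))  # running best after each evaluation
    if len(best) <= patience:
        return False  # not enough history yet
    return best[-1] - best[-1 - patience] <= min_gain


print(should_stop([0.10, 0.20, 0.25, 0.22, 0.24, 0.20, 0.25]))  # True (plateau)
print(should_stop([0.10, 0.20, 0.25, 0.30, 0.28]))              # False (still improving)
```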

Parameter Control and Feedback: The optimizer adjusts the light intensity and exposure time, captures images, and receives the metric value as feedback. ROS 2 ensures time synchronization and precise hardware control.

Utility Computation: Our metric is computed online at high rates by applying CUDA acceleration to downsampled images, achieving 15 Hz on an NVIDIA Jetson Orin while other tasks run on the CPU.

Event-triggered Maneuvers: The optimizer can temporarily pause robot movement, determining optimal settings before the robot proceeds to the next waypoint. One promising solution is to move this evaluation to the background and perform it predictively, which would significantly reduce the delay.

5 Conclusion and Future Plan

We propose NightHawk, an active illumination and exposure control method that enhances visual estimation in low-light and dynamically lit environments. Field experiments demonstrate that deploying NightHawk in challenging environments improves feature tracking performance by 47–197%. These experiments also provide valuable insights into how exposure settings and onboard lighting affect feature detection, offering a foundation for applications where low-light visual estimation is critical.

In future work, we aim to generalize the Bayesian optimization process using learning-based methods, allowing NightHawk to perform exposure and illumination control in a single shot. This advancement would eliminate the need for the current event-triggered (stop-and-go) routine, significantly accelerating navigation.

6 Acknowledgments

We thank our colleagues Christo Aluckal, Kartikeya Singh, and Yashom Dighe for their invaluable assistance with the experiments. We also extend our gratitude to Matthew Lengel from the NY Canal Corporation for providing access to inspection sites and sharing his expertise on culvert infrastructure.

References

- [1] Pablo Alcantarilla, Jesus Nuevo, and Adrien Bartoli. Fast Explicit Diffusion for Accelerated Features in Nonlinear Scale Spaces. In Proceedings of the British Machine Vision Conference 2013, pages 13.1–13.11, Bristol, 2013. British Machine Vision Association.

- [2] Daniel DeTone, Tomasz Malisiewicz, and Andrew Rabinovich. SuperPoint: Self-Supervised Interest Point Detection and Description, April 2018. arXiv:1712.07629 [cs].

- [3] Kamak Ebadi, Lukas Bernreiter, Harel Biggie, Gavin Catt, Yun Chang, Arghya Chatterjee, Christopher E. Denniston, Simon-Pierre Deschênes, Kyle Harlow, Shehryar Khattak, Lucas Nogueira, Matteo Palieri, Pavel Petráček, Matěj Petrlík, Andrzej Reinke, Vít Krátký, Shibo Zhao, Ali-akbar Agha-mohammadi, Kostas Alexis, Christoffer Heckman, Kasra Khosoussi, Navinda Kottege, Benjamin Morrell, Marco Hutter, Fred Pauling, François Pomerleau, Martin Saska, Sebastian Scherer, Roland Siegwart, Jason L. Williams, and Luca Carlone. Present and Future of SLAM in Extreme Environments: The DARPA SubT Challenge. IEEE Transactions on Robotics, 40:936–959, 2024.

- [4] Pierre Gleize, Weiyao Wang, and Matt Feiszli. SiLK – Simple Learned Keypoints, April 2023. arXiv:2304.06194 [cs].

- [5] Ruben Gomez-Ojeda, Zichao Zhang, Javier Gonzalez-Jimenez, and Davide Scaramuzza. Learning-Based Image Enhancement for Visual Odometry in Challenging HDR Environments. In 2018 IEEE International Conference on Robotics and Automation (ICRA), pages 805–811, Brisbane, QLD, May 2018. IEEE.

- [6] Bin Han, Yicheng Lin, Yan Dong, Hao Wang, Tao Zhang, and Chengyuan Liang. Camera Attributes Control for Visual Odometry With Motion Blur Awareness. IEEE/ASME Transactions on Mechatronics, 28(4):2225–2235, August 2023.

- [7] Jianbo Shi and Carlo Tomasi. Good features to track. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition CVPR-94, pages 593–600, Seattle, WA, USA, 1994. IEEE Comput. Soc. Press.

- [8] Joowan Kim, Younggun Cho, and Ayoung Kim. Exposure Control Using Bayesian Optimization Based on Entropy Weighted Image Gradient. In 2018 IEEE International Conference on Robotics and Automation (ICRA), pages 857–864, Brisbane, QLD, May 2018. IEEE.

- [9] Joowan Kim, Younggun Cho, and Ayoung Kim. Proactive Camera Attribute Control Using Bayesian Optimization for Illumination-Resilient Visual Navigation. IEEE Transactions on Robotics, 36(4):1256–1271, August 2020.

- [10] Kyunghyun Lee, Ukcheol Shin, and Byeong-Uk Lee. Learning to Control Camera Exposure via Reinforcement Learning. In 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 2975–2983, Seattle, WA, USA, June 2024. IEEE.

- [11] Xiadong Liu, Zhi Gao, Huimin Cheng, Pengfei Wang, and Ben M. Chen. Learning-based Low Light Image Enhancement for Visual Odometry. In 2020 IEEE 16th International Conference on Control & Automation (ICCA), pages 1143–1148, Singapore, October 2020. IEEE.

- [12] Steven Macenski, Tully Foote, Brian Gerkey, Chris Lalancette, and William Woodall. Robot Operating System 2: Design, architecture, and uses in the wild. Science Robotics, 7(66):eabm6074, May 2022.

- [13] Ishaan Mehta, Mingliang Tang, and Timothy D. Barfoot. Gradient-Based Auto-Exposure Control Applied to a Self-Driving Car. In 2020 17th Conference on Computer and Robot Vision (CRV), pages 166–173, Ottawa, ON, Canada, May 2020. IEEE.

- [14] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32 (NeurIPS 2019), 2019.

- [15] Fabian Pedregosa, Gael Varoquaux, Alexandre Gramfort, Vincent Michel, Bertrand Thirion, Olivier Grisel, Mathieu Blondel, Peter Prettenhofer, Ron Weiss, Vincent Dubourg, Jake Vanderplas, Alexandre Passos, and David Cournapeau. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research, 12:2825–2830, 2011.

- [16] Carl Edward Rasmussen and Christopher K. I. Williams. Gaussian Processes for Machine Learning. Adaptive Computation and Machine Learning. MIT Press, Cambridge, MA, 2006.

- [17] Jerome Revaud, Philippe Weinzaepfel, César De Souza, Noe Pion, Gabriela Csurka, Yohann Cabon, and Martin Humenberger. R2D2: Repeatable and Reliable Detector and Descriptor, June 2019. arXiv:1906.06195 [cs].

- [18] Ethan Rublee, Vincent Rabaud, Kurt Konolige, and Gary Bradski. ORB: An efficient alternative to SIFT or SURF. In 2011 International Conference on Computer Vision, pages 2564–2571, Barcelona, Spain, November 2011. IEEE.

- [19] Sofiya Semenova, Steven Ko, Yu David Liu, Lukasz Ziarek, and Karthik Dantu. A Comprehensive Study of Systems Challenges in Visual Simultaneous Localization and Mapping Systems. ACM Transactions on Embedded Computing Systems, 24(1):1–31, January 2025.

- [20] Sofiya Semenova, Steven Y. Ko, Yu David Liu, Lukasz Ziarek, and Karthik Dantu. A quantitative analysis of system bottlenecks in visual SLAM. In Proceedings of the 23rd Annual International Workshop on Mobile Computing Systems and Applications, pages 74–80, Tempe Arizona, March 2022. ACM.

- [21] Inwook Shim, Joon-Young Lee, and In So Kweon. Auto-adjusting camera exposure for outdoor robotics using gradient information. In 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 1011–1017, Chicago, IL, USA, September 2014. IEEE.

- [22] Jasper Snoek, Hugo Larochelle, and Ryan P. Adams. Practical Bayesian Optimization of Machine Learning Algorithms, August 2012. arXiv:1206.2944 [stat].

- [23] Justin Tomasi, Brandon Wagstaff, Steven L. Waslander, and Jonathan Kelly. Learned Camera Gain and Exposure Control for Improved Visual Feature Detection and Matching. IEEE Robotics and Automation Letters, 6(2):2028–2035, April 2021.

- [24] Shuyang Zhang, Jinhao He, Bohuan Xue, Jin Wu, Pengyu Yin, Jianhao Jiao, and Ming Liu. An Image Acquisition Scheme for Visual Odometry based on Image Bracketing and Online Attribute Control. In 2024 IEEE International Conference on Robotics and Automation (ICRA), pages 381–387, Yokohama, Japan, May 2024. IEEE.

- [25] Shuyang Zhang, Jinhao He, Yilong Zhu, Jin Wu, and Jie Yuan. Efficient Camera Exposure Control for Visual Odometry via Deep Reinforcement Learning. IEEE Robotics and Automation Letters, 10(2):1609–1616, February 2025.

- [26] Zichao Zhang, Christian Forster, and Davide Scaramuzza. Active exposure control for robust visual odometry in HDR environments. In 2017 IEEE International Conference on Robotics and Automation (ICRA), pages 3894–3901, Singapore, Singapore, May 2017. IEEE.