Adaptive Dilated Convolution For Human Pose Estimation

Abstract

Most existing human pose estimation (HPE) methods exploit multi-scale information by fusing feature maps of four different spatial sizes, i.e. , , , and of the input image. There are two drawbacks of this strategy: 1) feature maps of different spatial sizes may be not well aligned spatially, which potentially hurts the accuracy of keypoint location; 2) these scales are fixed and inflexible, which may restrict the generalization ability over various human sizes. Towards these issues, we propose an adaptive dilated convolution (ADC). It can generate and fuse multi-scale features of the same spatial sizes by setting different dilation rates for different channels. More importantly, these dilation rates are generated by a regression module. It enables ADC to adaptively adjust the fused scales and thus ADC may generalize better to various human sizes. ADC can be end-to-end trained and easily plugged into existing methods. Extensive experiments show that ADC can bring consistent improvements to various HPE methods. The source codes will be released for further research.

I Introduction

Human Pose Estimation (HPE) aims to locate skeletal keypoints (e.g. ear, shoulder, elbow, etc.) of all persons in the given RGB image. It is fundamental to action recognition and has wide applications in human-computer interaction, animation, etc. This paper is interested in single-person pose estimation, which is the basis of multi-person pose estimation [1, 2].

HPE involves two sub-tasks: location (determining where the keypoints are) and classification (determining which kinds the keypoints are). The location needs plenty of local details to get pixel-level accuracy. While classification requires a relatively larger receptive field to extract discriminative semantic representations [3]. Consequently, HPE methods have to fuse multi-scale information to make a balance between these two sub-tasks [4]. Most nowadays HPE methods [5, 6, 7, 8, 9] repeatedly downscale feature maps to enlarge the receptive fields. Feature maps of different spatial sizes (i.e. , , , and of the input image) are then resized and summed to exploit multi-scale information.

This strategy has made great achievements in HPE [7, 4, 8], but it still leaves to be desired. In this strategy, feature maps are downscaled by strided convolution (or pooling). As shown in Figure 1, during the downscaling, multiple pixels on the larger feature maps are merged into the same pixel on the smaller ones. The location information will be destroyed in this process. While during the upscaling, even if the transposed convolution [10] is used, it is hard to recover the destroyed location information. Consequently, there will be multiple possible corresponding positions on the upscaled feature maps for original single pixel. Although the final resized feature maps have the same spatial sizes, their pixels may be not well aligned. This spatial non-alignment potentially hurts the accuracy of location. Thus, it may be more preferred to fuse multi-scale features of the same spatial sizes.

An alternative method is to use dilated convolution, instead of downscaling, to enlarge receptive fields. In [11, 12], multiple convolutional layers with different dilation rates are used to extract feature maps at different scales. These feature maps have the same spatial sizes and are well aligned spatially. They are concatenated and fused by convolution to exploit multi-scale information. However, these dilation rates are still manually set and fixed, which may restrict the generalization ability over various human sizes.

Towards these issues, we propose an adaptive dilated convolution (ADC) in this paper. As shown in Figure 2, it divides channels into different dilation groups and uses a dilation-rates regression module to adaptively generate dilation rates for these groups. Compared with previous multi-scale fusion methods, ADC has three advantages: i) Instead of using multiple independent dilated convolution layers, ADC directly assigns different dilation rates to its channels. In this way, ADC can generate and fuse multi-scale features in a single layer, which is more elegant and efficient. ii) ADC allows fractional dilation rates, which enables ADC to adjust receptive fields with finer granularity, instead of only four fixed integer scales. Thus ADC may be able to exploit richer and finer multi-scale information. iii) The dilation rates in ADC are adaptively generated, which could help ADC to generalize better to various human sizes.

ADC can be easily plugged into existing HPE methods and trained end-to-end by standard back-propagation. Our contributions can be summarized into three points:

-

1.

We attempt to address the spatial non-alignment and inflexibility problems in nowadays multi-scale fusion methods of HPE. These problems are important to the accuracy of location and generalization ability over various human sizes.

-

2.

We propose an adaptive dilated convolution (ADC), which could flexibly fuse well-aligned multi-scale features in a single convolutional layer by adaptively generating dilation rates for different channels.

-

3.

The proposed ADC can be easily plugged into existing HPE methods and extensive experiments show that ADC can bring these methods consistent improvements.

II Related Works

II-A Multi-scale Fusion

Multi-scale fusion is widely adopted in many high-level vision tasks, such as detection [13, 14, 15], semantic segmentation [16], etc. On the one hand, these tasks involve both location and classification. They need multi-scale information to make a balance between these two sub-tasks. On the other hand, these tasks need to tackle objects of various sizes. They scale-invariant representations to get more stable performances. In these tasks, most methods [7, 13, 16] firstly extract a feature pyramid, which contains feature maps of different spatial sizes, and then fuse feature maps to obtain multi-scale information. However, as we have discussed above, the fused features may be not well spatially-aligned. This non-alignment may hurt the accuracy of location. For detection and segmentation, this influence could be ignored, because their evaluation metrics (IOU) are less sensitive to the accuracy of location. While HPE methods are evaluated by OKS, which will be heavily influenced by pixel-level errors. Thus the non-alignment may restrict the performance of HPE methods. In the proposed adaptive dilated convolution, multi-scale features are of the same spatial sizes, which may be more friendly to human pose estimation.

II-B Dilated Convolution

The main idea of dilated convolution is to insert zeros between pixels of convolution kernels. It is widely used in segmentation [17, 18] to enlarge the receptive fields while keeping the resolutions of feature maps. As the size of its receptive field can be easily changed by adjusting its dilation rate, dilated convolution is also used to aggregate multi-scale context information. For example, in [11], the outputs of convolutional layers with different dilation rates are fused to exploit multi-scale context information. And in [12], a similar idea is adopted in an atrous spatial pyramid pooling (ASPP) module. However, these dilation rates of different layers are manually set and can only be integers, which are not flexible enough. Instead, the dilation rates in ADC can be fractional and are adaptively generated, which enables it to learn more suitable receptive fields for objects of various sizes. Besides, every dilation group in ADC can represent features at a scale, which enables ADC to fuse richer multi-scale information yet in a simpler way.

III Adaptive Dilated Convolution

III-A Constant Dilation Rates

As shown in Figure 3, original dilated convolution can be decomposed into two steps: 1) sampling according to a index set over the input feature map ; 2) matrix multiplication of the sampled values and convolutional kernel . The index set is defined by the dilation rate and size of kernel :

| (1) |

where is the number of channels in , denotes rounding down to the nearest integer. Specially, if and then

| (2) | ||||

For value at location of the output feature map , we have

| (3) |

where enumerates the indexes in , and denotes the corresponding convolutional kernel for the output channel.

The receptive field for each channel in convolutional layer is defined as the square covered by index set . In original dilated convolution, the receptive fields of all channels are the same. Their sizes are:

| (4) | ||||

Specially, when , the size of receptive field is .

III-B Adaptive Dilation Rates

In adaptive dilated convolution, the dilation rates are no longer manually set. As shown in Figure 2, we use a dilation-rates regression module (DRM) to adaptively generate the dilation rates for different channels. DRM consists of a global average pooling layer and two fully connected layers with nonlinear activations. Suppose DRM is denoted as a function , then generated dilation rate is

| (5) |

We divide the input channels into dilation groups. Each group contains channels. The channels in the same group shares the same dilation rate. Thus the shape of is . And the dilation rate of the input channel is . If , then each channel has its own dilation rate. If , then all channels share the same dilation rate.

Consequently, the sampling index set becomes

| (6) |

In cases where is fractional, as shown in Figure 3, we use bilinear interpolation to get the sampling values. Suppose denotes the interpolated value at on , then we have:

| (7) |

Similarly, in the channel of adaptive dilated convolution, the size of receptive field is:

| (8) | ||||

Consequently, different dilation groups have different sizes of receptive fields. And thus ADC can fuse multi-scale information in a single layer.

III-C Analysis and Discussion

Comparison with Yu et al. In [11], Yu et al. use multiple dilated convolutional layers with different dilation rates to extract features at different scales. ADC adopts a similar idea, but implements it in a simple yet efficient way. Firstly, ADC consists of only one convolutional layer. It does not use independent dilated convolutional layers or extra concatenation. Thus ADC is much more computation-economic and time-saving. Secondly, every dilation group in ADC represents features at a different scale, which enables ADC to exploit richer multi-scale information than [11]. Thirdly, the dilation rates in ADC can be fractional and are adaptively generated, instead of manually set integers. It helps ADC to generalize better to persons of various sizes.

Comparison with deformable convolution. In [19], Dai et al. propose a deformable convolutional layer, which allows the sampling index set to be non-grid and irregular. It assigns an offset for each index in , instead of only modifying the dilation rates. Compared with adaptive dilated convolution, deformable convolution enjoys higher degrees of freedom, but it also has a much higher computational cost. More importantly, the offsets introduced in deformable convolution are completely unconstrained and independent. This may cause the input and output feature maps to lose their spatial correspondence, which also potentially hurts the accuracy of location. We also experimentally proved in Sec IV-A5 that the proposed adaptive dilated convolution is more suitable to human pose estimation than deformable convolution.

Comparison with scale-adaptive convolution. In [21], Zhang et al. propose a scale-adaptive convolution (SAC) to address inconsistent predictions of large objects and invisibility of small objects in scene parsing. SAC adaptively generates pixel-wise dilation rates to acquire flexible-size receptive fields along spatial dimensions. It works well for scene parsing, which needs to tackle objects of various sizes within a single image. However, in single person pose estimation, size inconsistent across different images plays a more important role, which could be better alleviated via multi-scale fusion along channel dimension. In SAC, different pixels can have different sizes of receptive fields, but different channels share the same dilation rates. Consequently, ADC may be more suitable for single person pose estimation than SAC. In Sec IV-A5, we also experimentally prove that ADC works better than SAC in HPE methods.

III-D Instantiation

We plug ADC into the backbones of frequently used HPE models, including the family of SimpleBaseline [22] and HRNet [4]. Their backbones are built up with residual blocks [23]. As shown in Figure 4, we replace one ordinary convolution layer in the original residual block by ADC. The weights of the last layer in the dilated-rates regression module are initialized as zeros and its bias are initialized as ones. Thus, the generated dilation rates in ADC are initialized are ones. The dilation groups are set as , in which case each group contains only one channel. Thus every channel can exploit context information at different scales, and ADC could fuse as much richer multi-scale information as it can. We also experimentally demonstrate that the performance is positively correlated to in Sec IV-A4.

IV Experiments

IV-A Experiments on COCO

Dataset. All of our experiments about human pose estimation are done on COCO dataset [24]. It contains over persons and persons. Our models are trained on COCO train2017 ( images), and evaluated on COCO val2017 ( images) and COCO test-dev ( images).

Evaluation metric. We use the standard evaluation metric Object Keypoint Similarity (OKS) to evaluate our models. , where is the Euclidean distance between the detected keypoint and its corresponding ground-truth, is the visibility flag of the ground-truth, denotes the person scale, and is a per-keypoint constant that controls falloff. We report the standard average precision () and recall, including ( at OKS=), , (mean of scores from OKS= to OKS= with the increment as , ( scores for person of medium sizes) and ( scores for persons of large sizes).

Training. Following the setting of [4], we augment the data by random rotation ([, ]), random scaling ([, ]), random translation ([, ]), random horizontal flip and half body transform [25]. Then we crop out each single person according to their ground-truth bounding boxes. These crops are resized to (or ) and input to the HPE model.

The models are optimized by Adam [26] optimizer, and the initial learning rate is set as . For the family of HRNet, each model is trained for 210 epochs and the learning rate decays to and at and epoch respectively. For the family of SimpleBaseline, each model is trained for 140 epochs and the learning rate decays to and at and epoch respectively. All models are trained and tested on Tesla V100 GPUs. More details can be referred to the Github repository Pose***https://github.com/leoxiaobin/deep-high-resolution-net.pytorch.git.

Testing. During testing, we use the same person detection results provided in [22], which are widely used for many single-person HPE models [4, 3]. Single persons are cropped out according to the detection results and then resized and input to the HPE models. The flip test [4] is also performed in all experiments. Each keypoint location is predicted by adjusting the highest heatvalue location with a quarter offset in the direction from the highest response to the second-highest response [4].

IV-A1 Ablation Study

To fully demonstrate the superiority of ADC, we perform ablation studies on different models, including the family of SimpleBaseline [22] and HRNet [4]. The results are shown in Table I. As one can see, ADC can bring consistent improvement for different models. For the smallest model, i.e. SimpleBaseline-Res50, ADC brings an improvement of on score. For the largest model, i.e. HRNet-W48, there is still an improvement of on score. The increments decay as the scores increase. This may because it is harder to improve the performance of a more accurate model. From and , we can see that the improvements in medium and large persons are roughly the same. It indicates that ADC benefits equally the keypoint detection of large and medium persons.

IV-A2 Error Analysis

In this section, we use the error analysis tool in [27] to further explore how ADC help HPE models achieve better results. We mainly study four types of errors: 1) jitter: small error around the correct keypoint location; 2) missing: large localization error, the detected keypoint is not within the proximity of any body part; 3) inversion: confusion between semantically similar parts belonging to the same instance. The detection is in the proximity of the true keypoint location of the wrong body part; 4) swap: confusion between semantically similar parts of different instances. The detection is within the proximity of a body part belonging to a different person. We use SimpleBaseline-Res50 as the baseline model, and plot the error analysis results with and without ADC in Figure 5. As one can see, ADC can reduce the proportion of all four types of errors. Especially, the proportion of missing error is reduced by . It suggests that ADC could help the model to be more robust and detect keypoints in more cases. This may be attributed to that ADC can adaptively adjust the dilation rates. The jitter error and inversion error directly indicate the accuracy of location and classification respectively. The proportions of these two errors are both reduced by . It suggests that ADC can simultaneously benefit the location and classification of keypoints.

IV-A3 Statistical Analysis

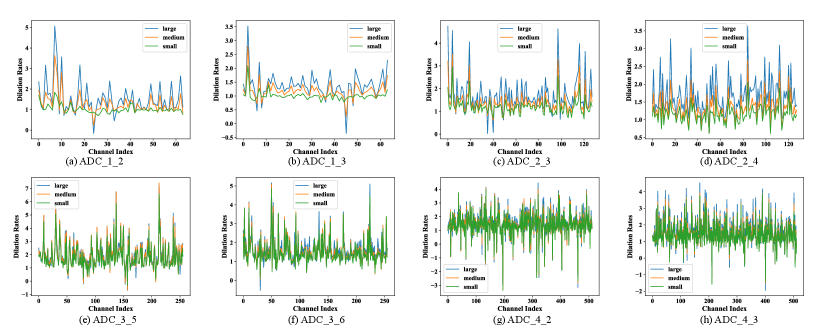

In this section, we make a statistical analysis to further investigate how the generated dilation rates in ADC are related to the sizes of test persons. We divide the test persons in COCO val2017 into three types according to the areas of their bounding boxes. Persons whose bounding boxes have areas: 1) smaller than are divided into the small group ( persons); 2) greater than but smaller than are divided into the medium group ( persons); 3) greater than are divided into the large group ( persons). We still use SimpleBaseline-Res50 with ADC as the studied model. The backbone, i.e. Resnet-50, has four stages, and they contain , , , residual blocks respectively.

We plot the means and variations of the dilation rates of different channels in Figure 6. For example, Figure 6 (a) shows the mean dilation rates of ADC in the third block of the first stage ( channels). Figure 6 (especially the top row) suggests that the dilation rates are closely related to the sizes of test persons. The dilation rates for larger persons are more likely to larger. It enables ADC to be more robust over various human sizes. Besides, the dilation rates for the deeper block also tend to have larger means (bottom row). It may because that deeper blocks are more concerned with semantic features and need larger dilation rates to enlarge the receptive fields. Additionally, the mean dilation rates of different channels in the same layer are quite different. The larger variance of these dilation rates also indicates that ADC can fuse rich multi-scale information via different channels.

IV-A4 Study of Dilation Groups

We perform comparative experiments to explore the influence of dilation groups . We use the SimpleBaseline-Res50 as the baseline. We gradually improve from to . The results are shown in Table II. As one can see, the model performance becomes better when increases. It suggests that the number of different dilation rates matters, which also indicates the importance of multi-scale information fusion in HPE.

| Groups | ||||

|---|---|---|---|---|

IV-A5 Compared with Other Methods

In this section, we experimentally prove that ADC is more suitable to human pose estimation than deformable convolution (DC) [19] and scale-adaptive convolution (SAC) [21]. Comparative experiments are performed on SimpleBaseline-Res50. As shown in Table III, although DC can bring an improvement on the baseline, its performance is inferior to that of ADC. As we have discussed in Sec III-C, the unconstrained and independent offsets of DC may cause the input and output feature maps to lose their spatial correspondence, which potentially hurt the accuracy of location. SAC can alleviate the size inconsistent along spatial dimensions, but involves little multi-scale fusion along the channel dimension, which is more important in HPE. Consequently, the improvement of SAC is lower than both DC and ADC.

IV-B Experiments for Semantic Segmentation

Similar to human pose estimation, semantic segmentation also requires rich multi-scale information to make a balance between local and semantic features. Thus the proposed ADC should also benefit the performance of semantic segmentation models. In this section, we plug ADC into different models to demonstrate its benefits on semantic segmentation.

We use CityScapes [28] as our training (2975 images) and validation (500 images) datasets. We use FCN [29], PSANet [30], DeepLabV3 [12] and DeepLabV3+ [17] as our baseline models. The input sizes are set as . All models are trained for iterations. More details can be referred to the Github repository mmsegmention†††https://github.com/open-mmlab/mmsegmentation.git. As shown in Table IV, ADC can bring consistent improvements to different Semantic Scene Parsing models. For FCN, ADC even improves the mIOU by .

V Conclusion

In this paper, we mainly focus on multi-scale fusion methods in human pose estimation. Existing HPE methods usually fuse feature maps of different spatial sizes to exploit multi-scale information. However, the location information is irreversibly destroyed during the downscaling, and thus the upscaled feature maps may be not well spatially-aligned. This non-alignment potentially hurts the accuracy of keypoint location. Besides, scales of these feature maps are fixed and inflexible, which may restrict its generalization over different human sizes. In this paper, we propose an adaptive dilated convolution (ADC), which exploits multi-scale information by fusing channels with different dilation rates. In this way, each channel in ADC can represent features at a scale, and thus ADC can exploit richer multi-scale information from features of the same spatial sizes. More importantly, the dilation rates for different channels in ADC are adaptively generated, which enables ADC to adjust the scales according to the sizes of test persons. As a result, ADC can help HPE fuse better aligned and more generalized multi-scale features. Extensive experiments on both human pose estimation and semantic segmentation prove that ADC can bring consistent improvements to these methods.

Acknowledgements

This work is jointly supported by National Key Research and Development Program of China (2016YFB1001000), Key Research Program of Frontier Sciences, CAS (ZDBS-LY-JSC032), Shandong Provincial Key Research and Development Program (2019JZZY010119), and CAS-AIR.

References

- [1] A. Newell, K. Yang, and J. Deng, “Stacked hourglass networks for human pose estimation,” in ECCV. Springer, 2016, pp. 483–499.

- [2] S.-E. Wei, V. Ramakrishna, T. Kanade, and Y. Sheikh, “Convolutional pose machines,” in CVPR, 2016, pp. 4724–4732.

- [3] Y. Cai, Z. Wang, Z. Luo, B. Yin, A. Du, H. Wang, X. Zhang, X. Zhou, E. Zhou, and J. Sun, “Learning delicate local representations for multi-person pose estimation,” in ECCV. Springer, 2020, pp. 455–472.

- [4] K. Sun, B. Xiao, D. Liu, and J. Wang, “Deep high-resolution representation learning for human pose estimation,” in CVPR, 2019, pp. 5693–5703.

- [5] Y. Chen, Z. Wang, Y. Peng, Z. Zhang, G. Yu, and J. Sun, “Cascaded pyramid network for multi-person pose estimation,” in CVPR, 2018, pp. 7103–7112.

- [6] K. Su, D. Yu, Z. Xu, X. Geng, and C. Wang, “Multi-person pose estimation with enhanced channel-wise and spatial information,” in CVPR, 2019, pp. 5674–5682.

- [7] W. Li, Z. Wang, B. Yin, Q. Peng, Y. Du, T. Xiao, G. Yu, H. Lu, Y. Wei, and J. Sun, “Rethinking on multi-stage networks for human pose estimation,” arXiv preprint arXiv:1901.00148, 2019.

- [8] Z. Luo, Z. Wang, Y. Cai, G. Wang, Y. Huang, L. Wang, E. Zhou, and J. Sun, “Efficient human pose estimation by learning deeply aggregated representations,” arXiv preprint arXiv:2012.07033, 2020.

- [9] Z. Luo, Z. Wang, Y. Huang, T. Tan, and E. Zhou, “Rethinking the heatmap regression for bottom-up human pose estimation,” arXiv preprint arXiv:2012.15175, 2020.

- [10] V. Dumoulin and F. Visin, “A guide to convolution arithmetic for deep learning,” arXiv preprint arXiv:1603.07285, 2016.

- [11] F. Yu and V. Koltun, “Multi-scale context aggregation by dilated convolutions,” arXiv preprint arXiv:1511.07122, 2015.

- [12] L.-C. Chen, G. Papandreou, F. Schroff, and H. Adam, “Rethinking atrous convolution for semantic image segmentation,” arXiv preprint arXiv:1706.05587, 2017.

- [13] T.-Y. Lin, P. Dollár, R. Girshick, K. He, B. Hariharan, and S. Belongie, “Feature pyramid networks for object detection,” in CVPR, 2017, pp. 2117–2125.

- [14] T. Kong, F. Sun, C. Tan, H. Liu, and W. Huang, “Deep feature pyramid reconfiguration for object detection,” in ECCV, 2018, pp. 169–185.

- [15] G. Ghiasi, T.-Y. Lin, and Q. V. Le, “Nas-fpn: Learning scalable feature pyramid architecture for object detection,” in CVPR, 2019, pp. 7036–7045.

- [16] S. Liu, L. Qi, H. Qin, J. Shi, and J. Jia, “Path aggregation network for instance segmentation,” in CVPR, 2018, pp. 8759–8768.

- [17] L.-C. Chen, Y. Zhu, G. Papandreou, F. Schroff, and H. Adam, “Encoder-decoder with atrous separable convolution for semantic image segmentation,” in ECCV, 2018.

- [18] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille, “Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs,” IEEE transactions on pattern analysis and machine intelligence, vol. 40, no. 4, pp. 834–848, 2017.

- [19] J. Dai, H. Qi, Y. Xiong, Y. Li, G. Zhang, H. Hu, and Y. Wei, “Deformable convolutional networks,” in ICCV, 2017, pp. 764–773.

- [20] R. Zhang, S. Tang, Y. Zhang, J. Li, and S. Yan, “Scale-adaptive convolutions for scene parsing,” in ICCV, 2017, pp. 2031–2039.

- [21] ——, “Scale-adaptive convolutions for scene parsing,” in ICCV, 2017, pp. 2031–2039.

- [22] B. Xiao, H. Wu, and Y. Wei, “Simple baselines for human pose estimation and tracking,” in ECCV, 2018, pp. 466–481.

- [23] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in CVPR, 2016, pp. 770–778.

- [24] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in ECCV. Springer, 2014, pp. 740–755.

- [25] Z. Wang, W. Li, B. Yin, Q. Peng, T. Xiao, Y. Du, Z. Li, X. Zhang, G. Yu, and J. Sun, “Mscoco keypoints challenge 2018,” in ECCVW, vol. 5, 2018.

- [26] D. P. Kingma and J. Ba, “Adam: a method for stochastic optimization. corr abs/1412.6980 (2014),” 2014.

- [27] M. R. Ronchi and P. Perona, “Benchmarking and error diagnosis in multi-instance pose estimation,” in ICCV, Oct 2017.

- [28] M. Cordts, M. Omran, S. Ramos, T. Rehfeld, M. Enzweiler, R. Benenson, U. Franke, S. Roth, and B. Schiele, “The cityscapes dataset for semantic urban scene understanding,” in CVPR, 2016, pp. 3213–3223.

- [29] E. Shelhamer, J. Long, and T. Darrell, “Fully convolutional networks for semantic segmentation,” IEEE transactions on pattern analysis and machine intelligence, vol. 39, no. 4, pp. 640–651, 2017.

- [30] H. Zhao, Y. Zhang, S. Liu, J. Shi, C. Change Loy, D. Lin, and J. Jia, “Psanet: Point-wise spatial attention network for scene parsing,” in ECCV, 2018, pp. 267–283.