Adaptive Gaussian Fuzzy Classifier for Real-Time Emotion Recognition in Computer Games

Abstract

Human emotion recognition has become necessary for more realistic and interactive machines and computer systems. The greatest challenge is the availability of high-performance algorithms that effectively manage individual differences and nonstationarities in physiological data streams, i.e., algorithms that self-customize to a user with no subject-specific calibration data. We describe an evolving Gaussian Fuzzy Classifier (eGFC), supported by an online semi-supervised learning algorithm, to recognize emotion patterns from electroencephalogram (EEG) data streams. We extract features from the Fourier spectrum of EEG data. The data, from a public dataset, are provided by 28 individuals playing the games ‘Train Sim World’, ‘Unravel’, ‘Slender The Arrival’, and ‘Goat Simulator’. Different emotions prevail, namely, boredom, calmness, horror, and joy. We analyze the effect of individual electrodes, time window lengths, and frequency bands on the accuracy of user-independent eGFCs. We conclude that both brain hemispheres may assist classification, especially electrodes on the frontal (Af3-Af4), occipital (O1-O2), and temporal (T7-T8) areas. We observe that patterns may be found in any frequency band; however, the Alpha (8-13Hz), Delta (1-4Hz), and Theta (4-8Hz) bands, in this order, are the most correlated with emotion classes. eGFC has been shown to be effective for real-time learning of EEG data. It reaches 72.2% accuracy using a variable rule base, 10-second windows, and 1.8ms/sample processing time in a highly stochastic, time-varying 4-class classification problem.

I Introduction

Machines can recognize human emotions from non-physiological data, such as facial expressions, gestures, body language, eye-blink count, and voice tone, and from physiological data, such as electroencephalogram (EEG), electromyogram (EMG), and electrocardiogram (ECG) signals [1][2]. Computer vision and audition, physiological features, and brain-computer interfaces (BCI) are typical approaches to emotion recognition. The potential outcomes of non-physiological and physiological data analysis span a variety of domains in which machine intelligence has offered decision-making support, control of mechatronic systems, and greater realism, efficiency, and interaction [3].

Classifiers of EEG data are usually based on Support Vector Machines (SVM) with different kernels, the k-Nearest Neighbors (kNN) algorithm, Linear Discriminant Analysis (LDA), Naive Bayes (NB), and the Multi-Layer Perceptron (MLP) neural network [3] [4]. Deep learning has also been applied to affective computing from physiological data. A deep neural network that classifies the states of relaxation, anxiety, excitement, and fun from skin conductance and pulse signals, with accuracy similar to that of shallow methods, is given in [5]. A Deep Belief Network (DBN), i.e., a probabilistic generative deep model, to classify positive, neutral, and negative emotions is proposed in [6]. Electrodes and frequency bands are selected through the weight distributions of the trained DBN, and differential asymmetries between the left and right brain hemispheres emerge as relevant features. In [1], a Dynamical-Graph Convolutional Neural Network (DG-CNN), trained by error back-propagation, learns an adjacency matrix among EEG channels and outperforms DBN, Transductive SVM, Transfer Component Analysis (TCA), and other methods on benchmark EEG datasets.

Fuzzy methods have been proposed to handle uncertainties in emotion recognition, especially from speech data and facial images. A Two-Stage Fuzzy Fusion strategy combined with a CNN (TSFF-CNN) is described in [7]. Facial expression and speech modalities are aggregated for a final classification decision. The method manages the ambiguity and uncertainty of emotional state information, and TSFF-CNN outperformed non-fuzzy deep methods. In [8], a fuzzy multi-class SVM uses features from the biorthogonal wavelet transform of facial images to detect emotions, namely, happiness, sadness, surprise, anger, disgust, and fear. An Adaptive Neuro-Fuzzy Inference System (ANFIS) that combines facial expression and EEG features has been shown to be superior to single-source classifiers in [9]. The ANFIS model identifies the valence status stimulated by watching movies.

Multi-scale Inherent Fuzzy Entropy (I-FuzzyEn) uses empirical mode decomposition and fuzzy membership functions to evaluate the complexity of EEG data [10]. Complexity estimates are useful as a health bio-signature, and FuzzyEn has been shown to be more robust than analogous non-fuzzy methods. The Online weighted Adaptation Regularization for Regression (OwARR) algorithm [11] estimates driver drowsiness from EEG data. OwARR uses fuzzy sets in part of its regularization procedure, and some offline training steps are needed to select domains. The aim is to reduce the need for subject-specific EEG calibration data. An ensemble of models using a swarm-optimized fuzzy integral for motor imagery recognition and robotic arm control is given in [12]. The ensemble uses the Sugeno or Choquet fuzzy integral, supported by particle swarm optimization (PSO), to identify the mental representation of movements.

These classifier-design methods implicitly assume stationary data sources, since models are expected to retain their training performance during online operation with fixed structure and parameters. However, physiological data change due to movement artifacts, electrode and channel configuration, and environmental conditions. Fatigue, attention, and stress also affect both user-dependent and user-independent classifiers in an uncertain way. In the present study, we address real-time emotion classification from EEG data streams (visual and auditory evoked potentials) generated by computer game players. We summarize a recently proposed semi-supervised evolving Gaussian Fuzzy Classification (eGFC) method [13]. eGFC is an instance of the Evolving Granular System [14] [15], a general-purpose online learning framework, i.e., a family of algorithms and methods to autonomously construct classifiers, regressors, predictors, and controllers in which any aspect of a problem may assume a non-pointwise (e.g., interval, fuzzy, rough, statistical) uncertain characterization, including data, parameters, features, learning equations, and covering regions [16] [17] [18]. eGFC has been applied to power quality classification in smart grids [13] and to anomaly detection in data centers [19].

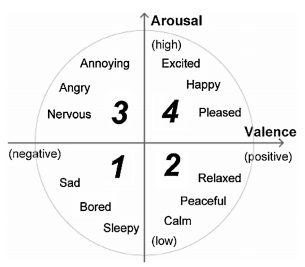

We use a public EEG dataset [20], which contains ordered samples obtained from individuals exposed to visual and auditory stimuli. Brain activity is recorded by the 14 channels of the Emotiv EPOC+ EEG device. We pre-process raw data by means of time windows and frequency filters. We extract ten features per EEG channel, namely, the maximum and mean values from the Delta (1-4Hz), Theta (4-8Hz), Alpha (8-13Hz), Beta (13-30Hz), and Gamma (30-64Hz) bands, i.e., 140 features in total. A single user-independent eGFC model is developed from scratch based on sequential data from 28 consecutive players. Once a predominant emotion is recognized according to the Arousal-Valence system, i.e., boredom, calmness, horror, or joy, each related to a specific computer game, feedback can be implemented in a real or virtual environment to promote a higher level of realism and interactivity. The feedback step is out of the scope of this study.

Our contributions in relation to the literature are: (i) a new user-independent, time-varying fuzzy classifier, eGFC, equipped with online learning to deal with uncertainties and nonstationarities of EEG data. eGFC incorporates new spatio-temporal patterns with no human intervention by using adaptive granularity and an interpretable rule base. It requires neither storing data samples nor knowing the task and the number of classes a priori; (ii) an analysis of the effect of time window lengths, brain regions, frequency bands, and dimensionality reduction on classification performance.

II Evolving Gaussian Fuzzy Classifier

We summarize the semi-supervised evolving classification method, eGFC [13]. Although eGFC handles partially labeled data, we assume that labels become available some time steps after the class estimate. A single supervised learning step is performed using an input-output pair once the output is available. eGFC uses Gaussian membership functions to cover the data domain with granules. Recursive equations construct its rule base and update granules to deal with changes and provide nonlinear and smooth class boundaries [13] [19].

II-A Rule Structure

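Rules are created and updated depending on the behavior of the system over time. An eGFC rule, say $R^i$, is

$$R^i:\ \text{IF } x_1 \text{ is } A_1^i \text{ AND } \ldots \text{ AND } x_n \text{ is } A_n^i \text{ THEN } y \text{ is } C^i$$

in which $x_j$, $j = 1, \ldots, n$, are features, and $y$ is a class. The data stream is denoted $(\mathbf{x}, y)^{[h]}$, $h = 1, 2, \ldots$. Moreover, $A_j^i$, $j = 1, \ldots, n$; $i = 1, \ldots, c$, are Gaussian functions built and updated incrementally; $C^i$ is a class label. $A_j^i = G(\mu_j^i, \sigma_j^i)$ has height 1, and is characterized by the modal value $\mu_j^i$ and dispersion $\sigma_j^i$. Rules $R^i$, $i = 1, \ldots, c$, set up a zero-order Takagi-Sugeno model. The number of rules, $c$, is variable. We give a semi-supervised way of constructing eGFC from scratch.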
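A minimal Python sketch of how such a rule base can be evaluated follows. It assumes the unit-height Gaussian form $\exp(-(x-\mu)^2/(2\sigma^2))$ for each $A_j^i$, uses the minimum t-norm for rule activation, and assumes a winner-takes-all output (the class of the most active labeled rule). The `Granule` class and all function names are hypothetical and do not come from [13].

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Granule:
    """Hypothetical container for one rule R^i."""
    mu: List[float]               # modal values mu_j^i, one per feature
    sigma: List[float]            # dispersions sigma_j^i, one per feature
    label: Optional[int] = None   # class C^i; None while still undefined


def gaussian(x: float, mu: float, sigma: float) -> float:
    """Unit-height Gaussian membership, assumed form exp(-(x - mu)^2 / (2 sigma^2))."""
    return math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))


def activation(g: Granule, x: Sequence[float]) -> float:
    """Rule activation: minimum t-norm over the n feature memberships."""
    return min(gaussian(xj, mj, sj) for xj, mj, sj in zip(x, g.mu, g.sigma))


def classify(rules: List[Granule], x: Sequence[float]) -> Optional[int]:
    """Winner-takes-all output: class of the most active labeled rule."""
    labeled = [g for g in rules if g.label is not None]
    if not labeled:
        return None  # no class can be estimated yet
    return max(labeled, key=lambda g: activation(g, x)).label


# Usage: two rules on a 2-feature unit square.
rules = [Granule([0.2, 0.3], [0.1, 0.1], label=1),
         Granule([0.8, 0.7], [0.1, 0.1], label=4)]
print(classify(rules, [0.75, 0.72]))  # -> 4
```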

II-B Adding Classification Rules

Rules are created and evolved as data become available. A new granule, $\gamma^{c+1}$, and rule, $R^{c+1}$, are created if none of the existing rules is sufficiently activated by $\mathbf{x}^{[h]}$.

Let $\rho^{[h]}$ be an adaptive threshold. If

$$T\big(A_1^i(x_1^{[h]}), \ldots, A_n^i(x_n^{[h]})\big) \le \rho^{[h]} \quad \forall i, \tag{1}$$

in which $T$ is the minimum triangular norm, then the eGFC structure is expanded. The threshold $\rho$ dictates how large granules can be. Different values result in different granular perspectives [18]. Section II-D gives recursive equations to update $\rho$.

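A new granule $\gamma^{c+1}$ has membership functions $A_j^{c+1}$, $j = 1, \ldots, n$, with

$$\mu_j^{c+1} = x_j^{[h]} \quad \text{and} \quad \sigma_j^{c+1} = \frac{1}{2\pi}. \tag{2}$$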

The intuition is to start big. Dispersions diminish when new samples activate the same granule. This strategy is appealing for a compact model structure [17].

The class $C^{c+1}$ is initially undefined. If the corresponding output, $y^{[h]}$, associated with $\mathbf{x}^{[h]}$, becomes available, then

$$C^{c+1} = y^{[h]}. \tag{3}$$

Otherwise, the first labeled sample that arrives after the $h$-th time step and activates the rule above the threshold $\rho$ is used to define its class.

If a labeled sample activates a labeled rule but their labels differ, a new (partially overlapping) granule is created to represent the new information. Partially overlapping Gaussian granules tagged with different labels tend to have their modal values moved apart and their dispersions reduced by the updating procedure (Section II-C). With this initial rule parameterization, preference is given to granules balanced along their dimensions. eGFC realizes the principle of justifiable granularity [21], but allows Gaussians to find more appropriate locations and dispersions.
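The creation logic of (1)-(3) can be sketched as below, under stated assumptions: the Gaussian form $\exp(-(x-\mu)^2/(2\sigma^2))$, an initial dispersion of $1/(2\pi)$ for new granules (an assumed "start big" value for unit-scaled data), and a simplified deferred labeling in which only the most active compatible rule adopts the class of a labeled sample. `Rule` and `maybe_create` are hypothetical names.

```python
import math
from typing import List, Optional, Sequence

SIGMA0 = 1.0 / (2.0 * math.pi)  # assumed wide initial dispersion ("start big")


class Rule:
    """Hypothetical rule: Gaussian parameters, optional class, sample counter."""

    def __init__(self, x: Sequence[float], label: Optional[int] = None):
        self.mu = list(x)               # (2): modal values at the new sample
        self.sigma = [SIGMA0] * len(x)  # (2): same wide dispersion per feature
        self.label = label              # (3): y^[h] if known, otherwise None
        self.count = 1                  # samples absorbed so far


def activation(r: Rule, x: Sequence[float]) -> float:
    """Minimum t-norm of assumed Gaussians exp(-(x - mu)^2 / (2 sigma^2))."""
    return min(math.exp(-((xj - m) ** 2) / (2.0 * s ** 2))
               for xj, m, s in zip(x, r.mu, r.sigma))


def maybe_create(rules: List[Rule], x: Sequence[float],
                 y: Optional[int], rho: float) -> bool:
    """Expand the rule base if no compatible rule is activated above rho.

    Returns True if a rule was created. A rule is compatible with a labeled
    sample if its class is undefined or equal to y; unlabeled samples are
    compatible with every rule, so for them this reduces to test (1).
    """
    active = [r for r in rules if activation(r, x) > rho]
    compatible = [r for r in active if y is None or r.label in (None, y)]
    if not compatible:
        rules.append(Rule(x, y))  # new granule and rule
        return True
    if y is not None:
        best = max(compatible, key=lambda r: activation(r, x))
        if best.label is None:
            best.label = y        # deferred labeling of an unlabeled rule
    return False
```

A labeled sample whose active rules all carry other classes triggers a new, partially overlapping rule, which mirrors the conflict case described above.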

II-C Updating Parameters

The $i$-th rule is a candidate to be updated if it is sufficiently activated by an unlabeled sample, $\mathbf{x}^{[h]}$, according to

$$T\big(A_1^i(x_1^{[h]}), \ldots, A_n^i(x_n^{[h]})\big) > \rho^{[h]}. \tag{4}$$

Only the most active rule, $R^{i^*}$, is chosen for adaptation if two or more rules reach the $\rho$ level for the unlabeled $\mathbf{x}^{[h]}$. For a labeled sample, i.e., for a pair $(\mathbf{x}, y)^{[h]}$, the class $C^{i^*}$ of the most active rule, if defined, must match $y^{[h]}$. Otherwise, the second most active rule among those that reached the $\rho$ level is chosen for adaptation, and so on. If none of the rules is apt, a new one is created (Section II-B).
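The selection policy for unlabeled and labeled samples amounts to scanning the rules that satisfy (4) from most to least active. The hypothetical function `select_rule` below returns the index of the rule to adapt, or `None` when a new rule must be created; it takes precomputed activation degrees so that it does not depend on a particular membership function.

```python
from typing import List, Optional, Sequence


def select_rule(acts: Sequence[float], labels: Sequence[Optional[int]],
                rho: float, y: Optional[int] = None) -> Optional[int]:
    """Return the index of the rule to adapt, or None if a new rule is needed.

    acts[i]  : activation degree of rule i for the current sample
    labels[i]: class of rule i (None while still undefined)
    y        : class of the sample, or None for an unlabeled sample
    """
    # Candidates satisfy (4); visit them from most to least active.
    candidates: List[int] = sorted((i for i, a in enumerate(acts) if a > rho),
                                   key=lambda i: acts[i], reverse=True)
    for i in candidates:
        # Unlabeled sample: the most active candidate wins.
        # Labeled sample: skip rules whose defined class differs from y.
        if y is None or labels[i] is None or labels[i] == y:
            return i
    return None  # no rule is apt: structural expansion (Section II-B)
```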

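To include $\mathbf{x}^{[h]}$ in $\gamma^{i^*}$, the learning algorithm updates the modal values and dispersions of $A_j^{i^*}$, $j = 1, \ldots, n$, as

$$\mu_j^{i^*}(\text{new}) = \frac{(\varpi^{i^*} - 1)\,\mu_j^{i^*}(\text{old}) + x_j^{[h]}}{\varpi^{i^*}} \tag{5}$$

and

$$\sigma_j^{i^*}(\text{new}) = \left(\frac{\varpi^{i^*} - 2}{\varpi^{i^*} - 1}\big(\sigma_j^{i^*}(\text{old})\big)^2 + \frac{1}{\varpi^{i^*}}\big(x_j^{[h]} - \mu_j^{i^*}(\text{old})\big)^2\right)^{1/2}, \tag{6}$$

in which $\varpi^{i^*}$ is the number of samples absorbed by $\gamma^{i^*}$, including $\mathbf{x}^{[h]}$.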
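Assuming that (5) and (6) are the usual recursive mean and unbiased standard-deviation updates, with a counter $\varpi$ of the samples absorbed by the granule, each granule can be updated in constant time per feature. The sketch below is illustrative; `update_granule` and the counter convention are assumptions.

```python
import math
import statistics
from typing import List, Sequence


def update_granule(mu: List[float], sigma: List[float], count: int,
                   x: Sequence[float]) -> int:
    """Absorb sample x into a granule in place and return the new count.

    Assumed forms of (5)-(6): running mean and running unbiased standard
    deviation, where w (varpi) counts the absorbed samples including x.
    Requires count >= 1, i.e. the granule was created from one sample.
    """
    w = count + 1
    for j, xj in enumerate(x):
        old_mu = mu[j]
        mu[j] = ((w - 1) * old_mu + xj) / w                          # (5)
        var = (w - 2) / (w - 1) * sigma[j] ** 2 + (xj - old_mu) ** 2 / w
        sigma[j] = math.sqrt(var)                                    # (6)
    return w


# Usage: the recursion reproduces batch statistics of the absorbed samples.
data = [[0.2, 0.5], [0.3, 0.4], [0.25, 0.7], [0.1, 0.6]]
mu, sigma, n = list(data[0]), [1 / (2 * math.pi)] * 2, 1  # created from data[0]
for x in data[1:]:
    n = update_granule(mu, sigma, n, x)
col0 = [row[0] for row in data]
print(mu[0], statistics.mean(col0))
print(sigma[0], statistics.stdev(col0))
```

Under this assumed form, the weight $(\varpi-2)/(\varpi-1)$ is zero at the first update, so the wide initial dispersion is replaced by the spread of the first two samples; afterwards, $\mu$ and $\sigma$ coincide with the sample mean and sample standard deviation of the absorbed data.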

II-D Time-Varying Granularity

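Let the activation threshold, $\rho$, be time-varying [16]. The threshold assumes values in the unit interval and is adapted according to the overall average dispersion

$$\sigma_{\text{avg}}^{[h]} = \frac{1}{cn}\sum_{i=1}^{c}\sum_{j=1}^{n}\sigma_j^i, \tag{7}$$

in which $c$ and $n$ are the number of rules and features. Thus,

$$\rho(\text{new}) = \frac{\sigma_{\text{avg}}^{[h]}}{\sigma_{\text{avg}}^{[h-1]}}\,\rho(\text{old}). \tag{8}$$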

Given $\rho^{[h]}$, the rules' activation degrees are compared to $\rho^{[h]}$ to decide between a parametric or a structural change of eGFC. For the starting value, $\rho^{[0]}$, we suggest the default given in [13].
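A sketch of the threshold adaptation follows, assuming that (7) is the plain average of all dispersions and that (8) rescales $\rho$ by the ratio between the current and previous average dispersions. Clipping the result to $[0, 1]$ is an added assumption that keeps $\rho$ in the unit interval; the function names are hypothetical.

```python
from typing import List


def average_dispersion(sigmas: List[List[float]]) -> float:
    """Eq. (7): mean of sigma_j^i over the c rules (rows) and n features (columns)."""
    c, n = len(sigmas), len(sigmas[0])
    return sum(sum(row) for row in sigmas) / (c * n)


def update_threshold(rho_old: float, avg_old: float, avg_new: float) -> float:
    """Assumed form of (8): rescale rho by the change in average dispersion,
    clipped to [0, 1]."""
    return min(1.0, max(0.0, rho_old * avg_new / avg_old))


# Usage: granules shrink to half their average width, so rho is halved too.
prev = average_dispersion([[0.2, 0.2], [0.2, 0.2]])
curr = average_dispersion([[0.1, 0.1], [0.1, 0.1]])
print(update_threshold(0.3, prev, curr))
```

The intuition behind this form is that narrower Gaussians produce lower activation degrees, so lowering $\rho$ along with the dispersions helps avoid creating rules merely because the granules have contracted.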

II-E Merging

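A distance measure between Gaussian objects is

$$D(\gamma^{i_1}, \gamma^{i_2}) = \frac{1}{n}\sum_{j=1}^{n}\Big(\big|\mu_j^{i_1} - \mu_j^{i_2}\big| + \sigma_j^{i_1} + \sigma_j^{i_2} - 2\sqrt{\sigma_j^{i_1}\sigma_j^{i_2}}\Big). \tag{9}$$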

eGFC may combine the pair of granules with the smallest distance $D(\cdot)$. Both granules must be either unlabeled or tagged with the same class label. The merging decision is based on a threshold, $\Delta$, or on expert judgment regarding the suitability of combining granules into a more compact model. For data within the unit hypercube, we use a small default value of $\Delta$, which means that the candidate granules must be quite similar and, in fact, carry nearly the same information.

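A new granule, say $\gamma^{i}$, which results from $\gamma^{i_1}$ and $\gamma^{i_2}$, is built by Gaussians with modal values

$$\mu_j^{i} = \frac{\mu_j^{i_1} + \mu_j^{i_2}}{2}, \quad j = 1, \ldots, n, \tag{10}$$

and dispersion

$$\sigma_j^{i} = \sigma_j^{i_1} + \sigma_j^{i_2}. \tag{11}$$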
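The merging step can be sketched as follows. The exact forms of (9)-(11) are assumptions here: the distance is taken as the feature average of $|\mu^{i_1}_j-\mu^{i_2}_j| + \sigma^{i_1}_j + \sigma^{i_2}_j - 2\sqrt{\sigma^{i_1}_j\sigma^{i_2}_j}$, the merged modal value as the midpoint, and the merged dispersion as the sum of the two dispersions, so that the new Gaussian covers both originals. Only pairs with equal labels (including both undefined) are considered, and a merge happens when the smallest distance is below the threshold $\Delta$; `merge_closest` is a hypothetical name.

```python
import math
from itertools import combinations
from typing import List, Optional, Tuple

# (mu, sigma, label): modal values, dispersions, class (None if undefined)
Granule = Tuple[List[float], List[float], Optional[int]]


def distance(g1: Granule, g2: Granule) -> float:
    """Assumed form of (9): mean over features of |mu1-mu2| + (sqrt(s1)-sqrt(s2))^2."""
    (m1, s1, _), (m2, s2, _) = g1, g2
    return sum(abs(a - b) + sa + sb - 2.0 * math.sqrt(sa * sb)
               for a, b, sa, sb in zip(m1, m2, s1, s2)) / len(m1)


def merge_closest(granules: List[Granule], delta: float) -> bool:
    """Merge the closest same-label pair in place if its distance is below delta."""
    best = None
    for i, k in combinations(range(len(granules)), 2):
        if granules[i][2] != granules[k][2]:
            continue  # granules with different labels are never merged
        d = distance(granules[i], granules[k])
        if best is None or d < best[0]:
            best = (d, i, k)
    if best is None or best[0] >= delta:
        return False
    _, i, k = best
    (m1, s1, label), (m2, s2, _) = granules[i], granules[k]
    merged = ([(a + b) / 2.0 for a, b in zip(m1, m2)],  # assumed (10): midpoint
              [sa + sb for sa, sb in zip(s1, s2)],      # assumed (11): summed spread
              label)
    del granules[k]  # k > i, so index i stays valid
    granules[i] = merged
    return True
```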

II-F Deleting Rules

A rule is removed from the eGFC model if it is inconsistent with the current environment. In other words, if a rule is not activated for a number of time steps, say $h_r$, then it is deleted from the rule base. However, if a class is rare, it may be appropriate to set $h_r = \infty$ and keep the inactive rules. Removing rules periodically helps to keep the rule base up to date.
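Inactivity-based deletion only requires the last time step at which each rule was activated. The hypothetical helper below drops rules that have been idle for more than a horizon $h_r$ steps; passing `float('inf')` reproduces the option of keeping inactive rules for rare classes. The rule identifiers are illustrative.

```python
from typing import Dict, List


def prune_inactive(last_active: Dict[int, int], h: int, h_r: float) -> List[int]:
    """Delete and return the ids of rules idle for more than h_r steps.

    last_active maps a (hypothetical) rule id to the last step it was activated;
    h is the current time step.
    """
    stale = [rid for rid, t in last_active.items() if h - t > h_r]
    for rid in stale:
        del last_active[rid]
    return stale


# Usage: at step 300 with h_r = 100, only rule 0 (last active at step 100) is removed.
registry = {0: 100, 1: 250, 2: 290}
print(prune_inactive(registry, h=300, h_r=100))
```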

II-G Online Learning from Data Stream

III Methodology

We want to classify emotions from EEG data (visual and auditory evoked potentials). We describe how features are extracted and how models are evaluated based on individual channels and on the multichannel BCI system.

III-A About the Data

eGFC is evaluated on EEG streams generated by individuals playing computer games. Each game predominantly elicits a particular emotion, according to the quadrants of the Arousal-Valence circle [22]. On the left side of the valence dimension are negative emotions, e.g., ‘angry’, ‘bored’, and related adjectives, whereas on the right side are positive emotions, e.g., ‘happy’, ‘calm’, and related adjectives. The upper part of the arousal dimension characterizes high emotional arousal or behavioral expression, while the lower part designates apathy. We assign a number to each quadrant (Figure 1) according to our classification problem. The classes are: bored, calm, angry, and happy. As the players effectively change the outcome of the game, their mental activity is high. They cognitively process images, build stories mentally, and evaluate characters and situations. A priori, emotions are not confined to any quadrant [2] [23].
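The quadrant-to-class assignment can be written as a small lookup, shown here purely for illustration. It assumes signed valence and arousal coordinates centred on the circle and the class numbers of Section III-A (boredom 1, calmness 2, anger 3, happiness 4); the treatment of points exactly on an axis is arbitrary. In this study, the label of a sample comes from the game being played, not from measured valence and arousal; `quadrant_class` is hypothetical.

```python
# Class numbers as assigned to the four games in Section III-A.
CLASSES = {1: "boredom", 2: "calmness", 3: "anger", 4: "happiness"}


def quadrant_class(valence: float, arousal: float) -> int:
    """Map signed valence/arousal (origin at the circle centre) to a class number."""
    if valence < 0:
        return 3 if arousal >= 0 else 1  # negative valence: angry (high) / bored (low)
    return 4 if arousal >= 0 else 2      # positive valence: happy (high) / calm (low)


print(CLASSES[quadrant_class(0.7, 0.6)])  # -> happiness
```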

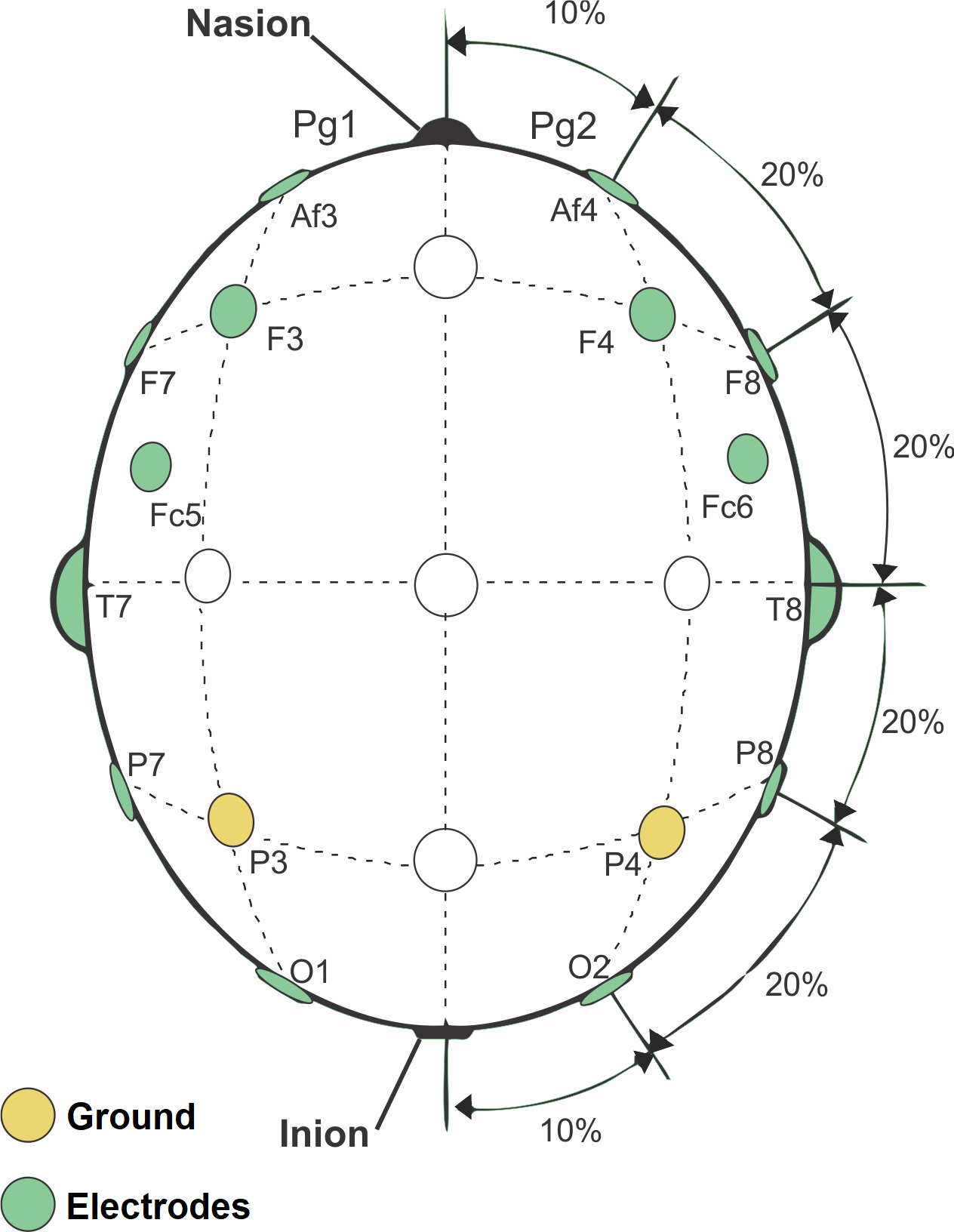

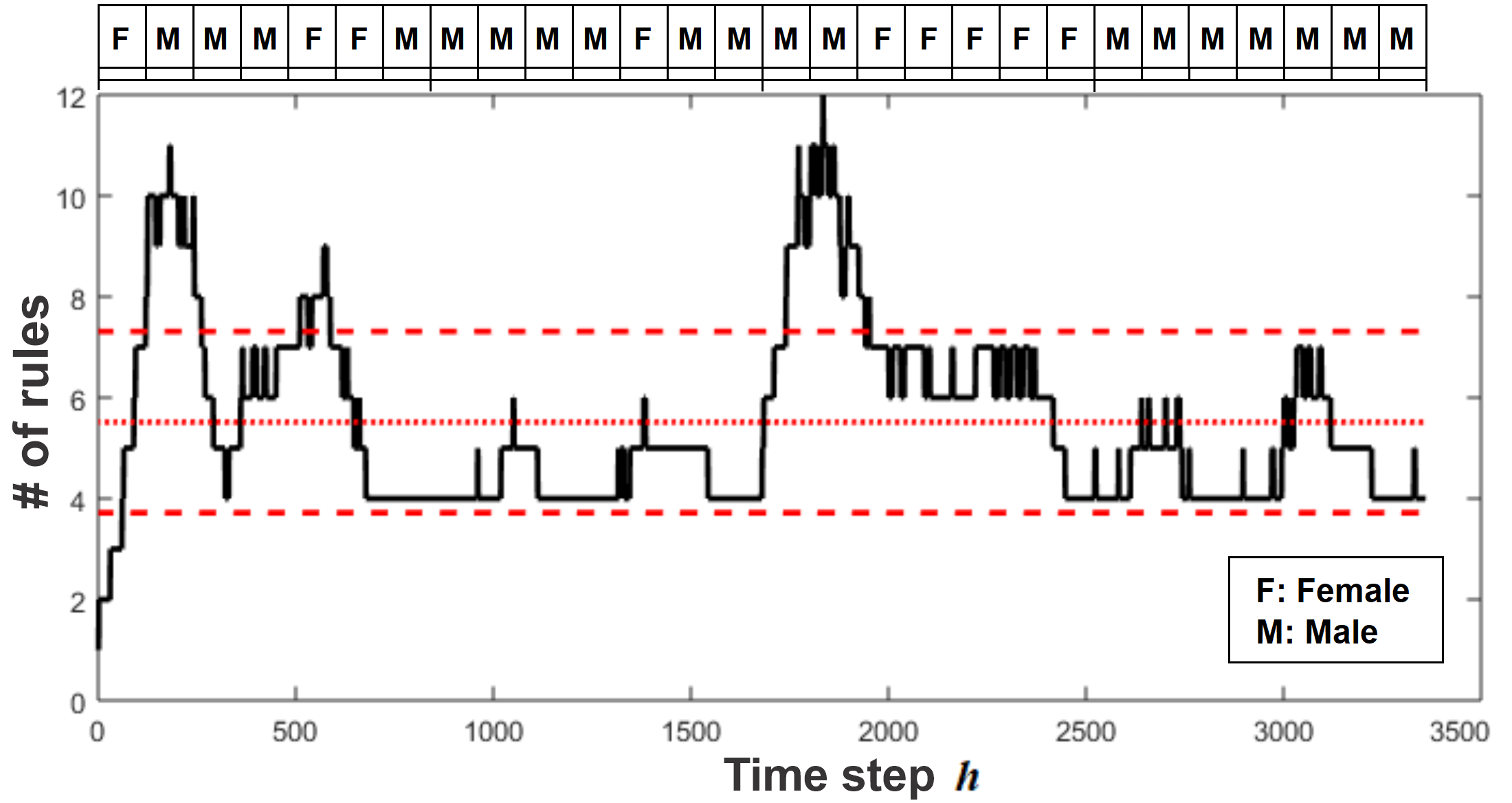

The raw data are provided in [20]. The data were produced by 28 healthy individuals (experimental group) aged 20 to 27 years. Each individual plays each game for 5 minutes (20 minutes in total) using the Emotiv EPOC+ EEG device and earphones. The male-female (M-F) order of players is: F M M M F F M M M M M F M M M M F F F F F M M M M M M M. A single user-independent eGFC model is evolved for the experimental group, aiming at reducing individual uncertainty and enhancing the reliability and generalizability of the system. Brain activity is recorded from 14 electrodes placed on the scalp according to the 10-20 System, namely, at Af3, Af4, F3, F4, F7, F8, Fc5, Fc6, T7, T8, P7, P8, O1, and O2 (Figure 2). A letter identifies the lobe: F stands for Frontal, T for Temporal, P for Parietal, and O for Occipital. Even and odd numbers refer to positions on the right and left brain hemispheres, respectively. The sampling frequency is 128Hz. Each individual produces 38,400 samples per game (128Hz × 300s), and 153,600 samples in total.

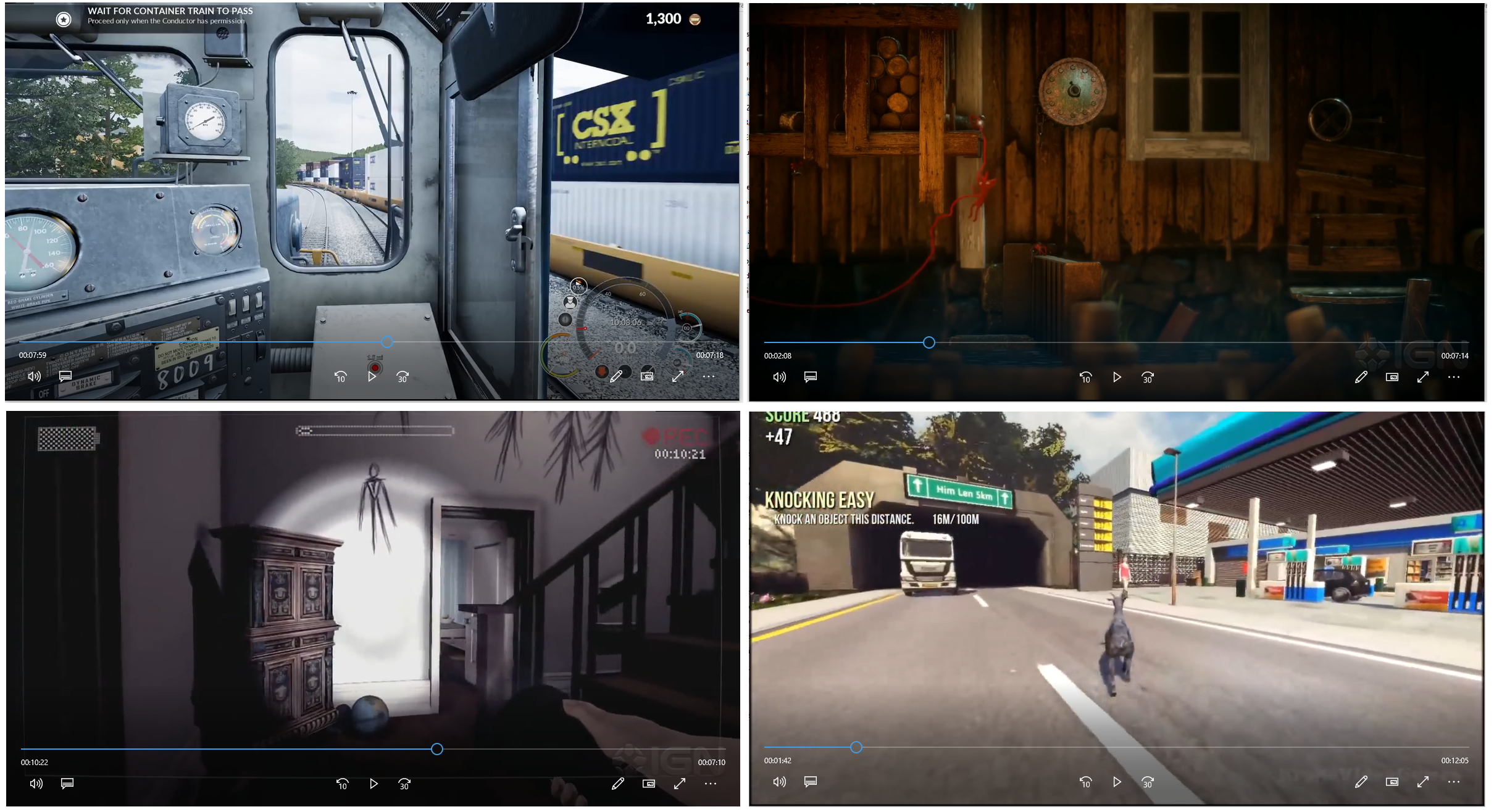

The experiments are conducted in a dark and quiet room. A laptop with a 15.6-inch screen and 16GB, high-quality graphics rendering is used. The games are always played in the same order: ‘Train Sim World’, ‘Unravel’, ‘Slender The Arrival’, and ‘Goat Simulator’. Figure 3 illustrates their interfaces. According to a survey, the predominant emotions for the games are, respectively, boredom (Class 1), calmness (Class 2), anger/nervousness (Class 3), and happiness (Class 4).

III-B About Feature Extraction and Experiments

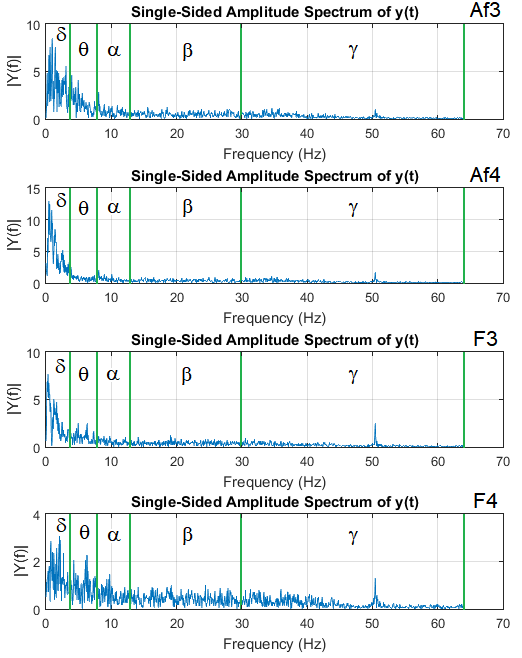

A fifth-order sinc filter is applied to the raw data to suppress movement artifacts [20]. Subsequently, feature extraction is performed. We generate 10 features from each of the 14 EEG channels: the maximum and mean values of 5 bands of the Fourier spectrum, known as Delta (1-4Hz), Theta (4-8Hz), Alpha (8-13Hz), Beta (13-30Hz), and Gamma (30-64Hz). If 5-minute time windows are used to construct the frequency spectrum and compose a processed sample to be fed to eGFC, then each individual produces 4 samples, i.e., one sample per game, or one sample per class or predominant emotion. Therefore, the 28 participants generate 112 processed samples. We also evaluate 1-minute, 30-second, and 10-second time windows, which yield 560, 1120, and 3360 processed samples, respectively. Examples of spectra using 30-second time windows over data streams from the frontal electrodes Af3, Af4, F3, and F4 are illustrated in Figure 4. Notice the higher energy in the Delta, Theta, and Alpha bands, and that the maximum and mean values per band can be easily obtained to compose a 10-feature processed sample.
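The per-channel feature extraction can be sketched as follows. It assumes that the features are the maximum and mean of the one-sided DFT magnitude within each band, with half-open band limits and no tapering window; the sinc filter is omitted. A naive DFT keeps the example dependency-free, whereas a real pipeline would use an FFT library. The helper `processed_samples` reproduces the sample counts given in the text for each window length. All function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence

FS = 128  # sampling frequency of the recordings (Hz)
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 64)}  # half-open [lo, hi) in Hz


def magnitude_spectrum(x: Sequence[float]) -> List[float]:
    """One-sided DFT magnitudes |X_k|, k = 0..N/2 (naive O(N^2) for clarity)."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]


def band_features(x: Sequence[float], fs: int = FS) -> List[float]:
    """Max and mean spectral magnitude per band: 10 features for one channel."""
    mag = magnitude_spectrum(x)
    n = len(x)
    feats: List[float] = []
    for lo, hi in BANDS.values():
        vals = [m for k, m in enumerate(mag) if lo <= k * fs / n < hi]
        feats += [max(vals), sum(vals) / len(vals)]
    return feats


def processed_samples(window_s: int, subjects: int = 28, games: int = 4,
                      game_s: int = 300) -> int:
    """Number of processed samples when each 5-minute game is cut into windows."""
    return subjects * games * (game_s // window_s)


# Usage: a 2-second, 10 Hz test tone puts its peak in the Alpha band.
tone = [math.sin(2 * math.pi * 10 * t / FS) for t in range(2 * FS)]
features = band_features(tone)
print(len(features), [processed_samples(w) for w in (300, 60, 30, 10)])
```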

In a first experiment, the data of individual electrodes are analyzed (univariate time series analysis). Ten-feature samples, $\mathbf{x}^{[h]} = (x_1, \ldots, x_{10})^{[h]}$, $h = 1, 2, \ldots$, are fed to eGFC, which evolves from scratch. We adopt the train-after-test approach, i.e., eGFC first gives an estimate $\hat{y}^{[h]}$; then the true class $y^{[h]}$ of $\mathbf{x}^{[h]}$ becomes available, and the pair $(\mathbf{x}, y)^{[h]}$ is used for a supervised learning step. Classification accuracy, $Acc$, is obtained recursively from

$$Acc(\text{new}) = \frac{h-1}{h}\,Acc(\text{old}) + \frac{1}{h}\,\tau, \tag{12}$$

in which $\tau = 1$ if the estimate is correct, i.e., $\hat{y}^{[h]} = y^{[h]}$, and $\tau = 0$ otherwise. A random-guess classifier has an expected $Acc$ of 0.25 (25%) in the 4-class problem. Higher values indicate that some learning from the data has taken place. From the investigation of individual electrodes, we expect to identify the more discriminative areas of the brain.

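A measure of model compactness is the average number of fuzzy granules over time,

$$c_{\text{avg}}(\text{new}) = \frac{h-1}{h}\,c_{\text{avg}}(\text{old}) + \frac{1}{h}\,c^{[h]}, \tag{13}$$

in which $c^{[h]}$ is the number of granules at time step $h$.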
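Both (12) and (13) are running means, updated once per time step of the train-after-test loop. The hypothetical helper below computes them from sequences of true classes, estimates, and rule counts, assuming that $\tau$ and $c^{[h]}$ are averaged with weights $(h-1)/h$ and $1/h$.

```python
from typing import Sequence, Tuple


def running_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                    n_rules: Sequence[int]) -> Tuple[float, float]:
    """Recursive accuracy (12) and average number of granules (13)."""
    acc, c_avg = 0.0, 0.0
    for h, (y, y_hat, c) in enumerate(zip(y_true, y_pred, n_rules), start=1):
        tau = 1.0 if y_hat == y else 0.0   # 1 for a correct estimate
        acc = (h - 1) / h * acc + tau / h  # (12)
        c_avg = (h - 1) / h * c_avg + c / h  # (13)
    return acc, c_avg


# Usage: three of four estimates are correct; the rule base holds 1, 2, 2, 3 rules.
print(running_metrics([1, 2, 3, 4], [1, 2, 4, 4], [1, 2, 2, 3]))
```

Because each step only needs the previous value and the current observation, neither metric requires storing the stream.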

Second, we consider the global 140-feature problem (multivariate time series analysis), with 10 features extracted from each electrode. Thus, $\mathbf{x}^{[h]} = (x_1, \ldots, x_{140})^{[h]}$, $h = 1, 2, \ldots$, are the eGFC inputs. The classifier self-develops on the fly based on the train-after-test strategy. Dimensionality reduction is performed using the non-parametric Spearman's Correlation-based Score method [24]. Essentially, a feature is ranked higher if it is less correlated with the other features and more correlated with the class. A Leave-$n$-Feature-Out procedure then gradually eliminates the lowest-ranked features.
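A sketch of correlation-based ranking and gradual feature removal follows. Spearman's coefficient $r_s$ is computed as the Pearson correlation of average ranks. The score, $|r_s(f_j, y)|$ minus the mean $|r_s(f_j, f_k)|$ over the other features, is a hypothetical instance of the principle stated above and not necessarily the formula of [24]; the default removal step of five features mirrors Table II. At least two non-constant features are assumed.

```python
from statistics import mean
from typing import List, Sequence


def ranks(v: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(v)), key=lambda i: v[i])
    r = [0.0] * len(v)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and v[order[j + 1]] == v[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return r


def spearman(a: Sequence[float], b: Sequence[float]) -> float:
    """Spearman's r_s as the Pearson correlation of the two rank vectors."""
    ra, rb = ranks(a), ranks(b)
    ma, mb = mean(ra), mean(rb)
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    va = sum((x - ma) ** 2 for x in ra)
    vb = sum((y - mb) ** 2 for y in rb)
    return cov / (va * vb) ** 0.5


def feature_scores(X: Sequence[Sequence[float]], y: Sequence[int]) -> List[float]:
    """Hypothetical score: relevance to the class minus mean redundancy."""
    cols = list(zip(*X))  # one tuple per feature
    scores = []
    for j, col in enumerate(cols):
        relevance = abs(spearman(col, y))
        redundancy = mean(abs(spearman(col, other))
                          for k, other in enumerate(cols) if k != j)
        scores.append(relevance - redundancy)
    return scores


def leave_n_out(scores: Sequence[float], n: int = 5,
                min_features: int = 10) -> List[List[int]]:
    """Feature subsets obtained by repeatedly dropping the n lowest-ranked features."""
    order = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    subsets, m = [], len(order)
    while m >= min_features:
        subsets.append(order[:m])
        m -= n
    return subsets
```

With 140 features, a step of five, and a floor of ten, this schedule produces 27 subsets, one per row of Table II.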

IV Results

IV-A Single Channel Experiment

We evaluate individual EEG channels to discover the more propitious brain regions in terms of their relation to emotion patterns. Default hyper-parameters [13] are used to initialize eGFC. Input samples contain 10 entries. Table I shows the accuracy and compactness of eGFC models for different time window lengths.

Table I: Accuracy, $Acc$ (%), and average number of granules, $c_{\text{avg}}$, of single-channel eGFC models per brain hemisphere for different time window lengths

5-minute time window

| Ch (left) | $Acc$ (%) | $c_{\text{avg}}$ | Ch (right) | $Acc$ (%) | $c_{\text{avg}}$ |
|---|---|---|---|---|---|
| Af3 | 18.8 | 24.8 | Af4 | 19.6 | 22.9 |
| F3 | 23.2 | 21.3 | F4 | 20.5 | 21.2 |
| F7 | 17.0 | 21.2 | F8 | 25.0 | 19.1 |
| Fc5 | 24.1 | 22.5 | Fc6 | 20.5 | 19.0 |
| T7 | 22.3 | 20.2 | T8 | 24.1 | 21.2 |
| P7 | 18.8 | 21.7 | P8 | 18.8 | 21.7 |
| O1 | 21.4 | 21.9 | O2 | 21.4 | 21.9 |
| Avg. | 20.8 | 21.9 | Avg. | 21.4 | 21.0 |

1-minute time window

| Ch (left) | $Acc$ (%) | $c_{\text{avg}}$ | Ch (right) | $Acc$ (%) | $c_{\text{avg}}$ |
|---|---|---|---|---|---|
| Af3 | 43.4 | 17.3 | Af4 | 41.6 | 13.1 |
| F3 | 37.0 | 14.3 | F4 | 39.6 | 15.5 |
| F7 | 41.3 | 14.5 | F8 | 31.6 | 10.3 |
| Fc5 | 38.4 | 16.1 | Fc6 | 41.8 | 18.6 |
| T7 | 43.9 | 14.4 | T8 | 50.4 | 15.9 |
| P7 | 37.9 | 17.4 | P8 | 33.8 | 15.7 |
| O1 | 45.0 | 16.3 | O2 | 40.5 | 16.6 |
| Avg. | 41.0 | 15.8 | Avg. | 39.9 | 15.1 |

30-second time window

| Ch (left) | $Acc$ (%) | $c_{\text{avg}}$ | Ch (right) | $Acc$ (%) | $c_{\text{avg}}$ |
|---|---|---|---|---|---|
| Af3 | 51.3 | 11.7 | Af4 | 43.9 | 8.9 |
| F3 | 40.5 | 10.3 | F4 | 42.9 | 8.8 |
| F7 | 44.2 | 10.3 | F8 | 37.5 | 6.7 |
| Fc5 | 41.3 | 10.8 | Fc6 | 44.7 | 11.6 |
| T7 | 40.4 | 10.2 | T8 | 49.6 | 11.3 |
| P7 | 46.3 | 9.4 | P8 | 42.7 | 10.3 |
| O1 | 45.9 | 10.1 | O2 | 44.7 | 12.8 |
| Avg. | 44.3 | 10.4 | Avg. | 43.7 | 10.1 |

10-second time window

| Ch (left) | $Acc$ (%) | $c_{\text{avg}}$ | Ch (right) | $Acc$ (%) | $c_{\text{avg}}$ |
|---|---|---|---|---|---|
| Af3 | 52.6 | 6.3 | Af4 | 44.0 | 5.5 |
| F3 | 41.8 | 6.1 | F4 | 43.8 | 5.9 |
| F7 | 46.3 | 6.1 | F8 | 40.2 | 4.8 |
| Fc5 | 40.5 | 5.7 | Fc6 | 46.8 | 6.8 |
| T7 | 40.4 | 5.7 | T8 | 51.5 | 6.2 |
| P7 | 46.9 | 6.7 | P8 | 45.2 | 6.5 |
| O1 | 47.7 | 6.6 | O2 | 45.2 | 7.0 |
| Avg. | 45.2 | 6.2 | Avg. | 45.2 | 6.1 |

From Table I we notice that the mean accuracy for 5-minute windows, about 21.1%, does not reflect learning, since it is below the 25% expected from random guessing. This suggests that the filtering effect of extracting features from 5-minute windows suppresses details that are crucial to distinguish patterns. Emotions tend to be sustained over shorter periods. Accuracies greater than 25% arise for shorter windows. The average accuracy for 1-minute windows, about 40.4%, is significant, especially given the limitation of observing a single electrode. As the window length decreases, accuracy increases and the model becomes more compact. This results from the availability of a larger number of processed samples (extracted from a larger number of shorter time windows), and from the ability of the learning algorithm to lead eGFC to a more stable setup after merging and deleting granules. The difference between the average accuracy for 30-second windows (about 44.0%) and 10-second windows (about 45.2%) is small, which suggests saturation around 45-46%. Windows shorter than 10 seconds therefore seem unnecessary. Asymmetries between the accuracy of models evolved for the left and right brain hemispheres can be noticed. Although the right hemisphere is known to deal with emotional interpretation and to be responsible for creativity and intuition, logical interpretation, typical of the left hemisphere, is also present as players seek reasons to justify decisions. Thus, patterns emerge in both hemispheres.

In general, focusing on the 30- and 10-second windows, the pair of frontal electrodes Af3-Af4 gives the best recognition results, especially Af3. The frontal cortex includes the premotor and primary motor cortices, which control voluntary movements of body parts. Thus, patterns that arise from the Af3-Af4 streams may be strongly related to brain commands to move hands and arms, which are indirectly related to the emotions of the players. For a spectator, rather than a game actor, a classification model based solely on Af3-Af4 may therefore have lower accuracy. The pair Af3-Af4 is closely followed by the pairs O1-O2 (occipital lobe) and T7-T8 (temporal lobe), which is somewhat consistent with the results in [25] [26]. The temporal lobe is involved in audition, language processing, declarative memory, and visual and emotional perception. The occipital lobe contains the primary visual cortex and areas of visual association. The neighboring electrodes P7-P8 also offer relevant information for classification.

IV-B Multiple Channel Experiment

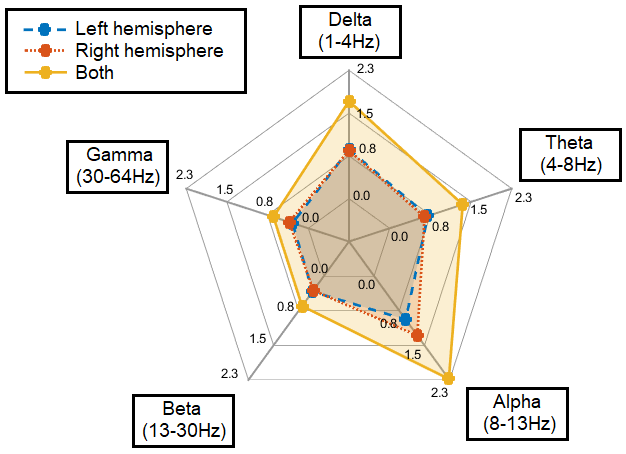

The multivariate 140-feature stream is fed to eGFC. We consider 10-second windows over the 20-minute recordings of each individual, the best setup found in the previous experiment. Features are ranked using Spearman's Correlation Score. To give quantitative evidence, we sum the monotonic correlations between each band and the emotion classes (a sum of 14 terms, one per EEG channel). Figure 5 shows the result in dark yellow, and its decomposition per brain hemisphere. The global values are 2.2779, 1.7478, 1.3759, 0.6639, and 0.6564 for the Alpha, Delta, Theta, Beta, and Gamma bands, respectively; the higher, the better.

We apply the leave-features-out strategy to evaluate the user-independent eGFC. Each eGFC model delineates class boundaries in a different multidimensional space. Table II shows the results for the multichannel streams. A quad-core laptop (i7-8550U, 1.80GHz, 8GB RAM, Windows 10) is used. We observe that brain activity patterns are found in all frequency bands, viz., Delta, Theta, Alpha, Beta, and Gamma, since even lower-ranked features improve the estimates. Features from any channel may assist the classifier decision. The greatest eGFC accuracy, 72.20%, occurs using all features extracted from the spectrum. In this case, a stream of 3,360 samples is processed in 6.032 seconds (1.8ms per sample). Since a sample is generated every 10s (10-second windows) and processed in 1.8ms, eGFC operates in real time with a wide margin, which suggests that it can also cope with much larger rule bases and higher data volumes, such as data generated by additional electrodes and by other physiological and non-physiological means.
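The real-time argument reduces to simple arithmetic on the reported figures (140 features, 3,360 samples, 6.032 s of CPU time on the laptop described above, one new sample every 10 s):

$$
\begin{aligned}
t_{\text{sample}} &= \frac{6.032\ \text{s}}{3360} \approx 1.80\ \text{ms},\\
\frac{10\ \text{s}}{t_{\text{sample}}} &\approx 5.6 \times 10^{3}.
\end{aligned}
$$

That is, on this hardware, each 10-second window leaves a time budget more than three orders of magnitude larger than the cost of processing one sample.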

Table II: Accuracy, $Acc$ (%), average number of rules, $c_{\text{avg}}$, and CPU time of eGFC on the multichannel streams (10-second windows) for decreasing numbers of features

| # Features | $Acc$ (%) | $c_{\text{avg}}$ | CPU Time (s) |
|---|---|---|---|
| 140 | 72.20 | 5.52 | 6.032 |
| 135 | 71.10 | 5.70 | 5.800 |
| 130 | 71.46 | 5.68 | 5.642 |
| 125 | 71.25 | 5.75 | 5.624 |
| 120 | 70.89 | 5.79 | 5.401 |
| 115 | 70.92 | 5.68 | 5.193 |
| 110 | 70.68 | 5.73 | 4.883 |
| 105 | 70.68 | 5.71 | 4.676 |
| 100 | 70.24 | 5.78 | 4.366 |
| 95 | 70.12 | 5.69 | 4.290 |
| 90 | 69.91 | 5.66 | 3.983 |
| 85 | 66.31 | 5.53 | 3.673 |
| 80 | 65.09 | 5.45 | 3.534 |
| 75 | 65.48 | 5.26 | 3.348 |
| 70 | 64.08 | 5.26 | 2.950 |
| 65 | 63.42 | 5.37 | 2.775 |
| 60 | 62.56 | 5.33 | 2.684 |
| 55 | 60.95 | 5.33 | 2.437 |
| 50 | 60.57 | 5.57 | 2.253 |
| 45 | 60.39 | 5.53 | 2.135 |
| 40 | 54.13 | 5.14 | 1.841 |
| 35 | 52.29 | 5.28 | 1.591 |
| 30 | 52.52 | 5.54 | 1.511 |
| 25 | 50.95 | 5.61 | 1.380 |
| 20 | 51.42 | 5.58 | 1.093 |
| 15 | 49.28 | 5.57 | 0.923 |
| 10 | 48.63 | 5.58 | 0.739 |

The average number of fuzzy rules along the learning steps, around 5.5, is similar for all streams analyzed (Table II). An example of eGFC structural evolution (best case, 72.20%) is shown in Figure 6. Notice that the emphasis of the model and algorithm is on maintaining accuracy during online operation, at the price of occasionally merging and deleting rules. If a higher level of memory is desired, the default eGFC hyper-parameters can be changed by turning off the rule-removal threshold, $h_r$ (i.e., setting $h_r = \infty$), and setting the merging parameter, $\Delta$, to a lower value. Figure 6 also shows that the male-female shifts due to the consecutive use of the EEG device do not require completely new granules and rules. Parametric adaptation of Gaussian granules is often enough to accommodate slightly different behaviors. Nevertheless, if a higher level of memory is allowed, rules for both genders can be kept separately. In general, the results of evolving fuzzy intelligence using EEG streams as the only source of physiological data are encouraging.

V Conclusion

We have evaluated online learning with an evolving fuzzy classifier, eGFC, for emotion recognition in games. The players are subject to visual and auditory stimuli while using an EEG device. One emotion (boredom, calmness, anger, or happiness) prevails in each game, according to the Arousal-Valence system. A total of 140 features are extracted from the frequency spectra of 14 electrodes/brain regions. We analyzed the Delta, Theta, Alpha, Beta, and Gamma bands. We examined the contribution of single electrodes to emotion recognition, and the effect of window lengths and dimensionality reduction on the overall accuracy of the eGFC models. We conclude: (i) electrodes on both brain hemispheres assist the recognition of emotions expressed through a variety of spatio-temporal patterns; (ii) the frontal (Af3-Af4) area, followed by the occipital (O1-O2) and temporal (T7-T8) areas, in this order, is slightly more discerning than the others; (iii) although patterns may be found in any band, the Alpha (8-13Hz) band, followed by the Delta (1-4Hz) and Theta (4-8Hz) bands, is the most monotonically correlated with the emotion classes; (iv) eGFC learns from and processes 140-feature samples in 1.8 milliseconds per sample, so it is suitable for real-time applications considering EEG and other data sources; (v) a greater number of features covering the entire spectrum and the use of 10-second windows led eGFC to its best accuracy, 72.20%, using from 4 to 12 granules and rules. In the future, wavelet transforms will be evaluated. A deep neural network (as feature extractor) will be connected to eGFC for real-time emotion recognition. We also intend to evaluate an ensemble of customized evolving classifiers.

Acknowledgement

This work received support from the Serrapilheira Institute (Serra - 1812-26777).

References

- [1] T. Song, W. Zheng, P. Song, and Z. Cui, “EEG emotion recognition using dynamical graph convolutional neural networks,” IEEE Trans. Affect. Comput., vol. 11, no. 3, pp. 532–541, 2020.

- [2] G. Vasiljevic and L. Miranda, “Brain–computer interface games based on consumer-grade EEG devices: A systematic literature review,” Int. J. Hum.-Comput. Interact., vol. 36, no. 2, pp. 105–142, 2020.

- [3] M. Alarcao and M. J. Fonseca, “Emotions recognition using EEG signals: A survey,” IEEE Trans. Affect. Comput., vol. 10, no. 3, pp. 374–393, 2019.

- [4] X. Gu, Z. Cao, A. Jolfaei, P. Xu, D. Wu, T.-P. Jung, and C.-T. Lin, “EEG-based brain-computer interfaces (BCIs): A survey of recent studies on signal sensing technologies and computational intelligence approaches and their applications,” arXiv:2001.11337, 2020, 22 pp.

- [5] H. P. Martinez, Y. Bengio, and G. N. Yannakakis, “Learning deep physiological models of affect,” IEEE Comput. Intell. Mag., vol. 8, no. 2, pp. 20–33, 2013.

- [6] W.-L. Zheng and B.-L. Lu, “Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks,” IEEE Trans. Auton. Mental Develop., vol. 7, no. 3, pp. 162–175, 2015.

- [7] M. Wu, W. Su, L. Chen, W. Pedrycz, and K. Hirota, “Two-stage fuzzy fusion based-convolution neural network for dynamic emotion recognition,” IEEE Trans. Affect. Comput., pp. 1–1, 2020.

- [8] Y. Zhang, Z. Yang, H. Lu, X. Zhou, P. Phillips, Q. Liu, and S. Wang, “Facial emotion recognition based on biorthogonal wavelet entropy, fuzzy support vector machine, and stratified cross validation,” IEEE Access, vol. 4, pp. 8375–8385, 2016.

- [9] G. Lee, M. Kwon, S. Kavuri, and M. Lee, “Emotion recognition based on 3D fuzzy visual and EEG features in movie clips,” Neurocomputing, vol. 144, pp. 560–568, Nov. 2014.

- [10] Z. Cao and C.-T. Lin, “Inherent fuzzy entropy for the improvement of EEG complexity evaluation,” IEEE Trans. Fuzzy Syst., vol. 26, no. 2, pp. 1032–1035, 2017.

- [11] D. Wu, V. J. Lawhern, S. Gordon, B. J. Lance, and C.-T. Lin, “Driver drowsiness estimation from EEG signals using online weighted adaptation regularization for regression (OwARR),” IEEE Trans. Fuzzy Syst., vol. 25, no. 6, pp. 1522–1535, 2016.

- [12] S.-L. Wu, Y.-T. Liu, T.-Y. Hsieh, Y.-Y. Lin, C.-Y. Chen, C.-H. Chuang, and C.-T. Lin, “Fuzzy integral with particle swarm optimization for a motor-imagery-based brain–computer interface,” IEEE Trans. Fuzzy Syst., vol. 25, no. 1, pp. 21–28, 2016.

- [13] D. Leite, L. Decker, M. Santana, and P. Souza, “EGFC: Evolving Gaussian fuzzy classifier from never-ending semi-supervised data streams - With application to power quality disturbance detection and classification,” in IEEE World Congr. Comput. Intell. (WCCI - FUZZ-IEEE), 2020.

- [14] D. Leite, “Evolving Granular Systems,” Ph.D. dissertation, School of Electr. Comput. Eng., University of Campinas (UNICAMP), 2012.

- [15] I. Skrjanc, J. A. Iglesias, A. Sanchis, D. Leite, E. Lughofer, and F. Gomide, “Evolving fuzzy and neuro-fuzzy approaches in clustering, regression, identification, and classification: A survey,” Inf. Sci., vol. 490, pp. 344–368, 2019.

- [16] C. Garcia, D. Leite, and I. Skrjanc, “Incremental missing-data imputation for evolving fuzzy granular prediction,” IEEE Trans. Fuzzy Syst., vol. 28, no. 10, pp. 2348–2362, 2020.

- [17] D. Leite, G. Andonovski, I. Skrjanc, and F. Gomide, “Optimal rule-based granular systems from data streams,” IEEE Trans. Fuzzy Syst., vol. 28, no. 3, pp. 583–596, 2020.

- [18] D. Leite, P. Costa, and F. Gomide, “Evolving granular neural networks from fuzzy data streams,” Neural Networks, vol. 38, pp. 1–16, 2013.

- [19] L. Decker, D. Leite, L. Giommi, and D. Bonacorsi, “Real-time anomaly detection in data centers for log-based predictive maintenance using an evolving fuzzy-rule-based approach,” in IEEE World Congr. Comput. Intell. (WCCI - FUZZ-IEEE), 2020, 8 pp.

- [20] T. B. Alakus, M. Gonen, and I. Turkoglu, “Database for an emotion recognition system based on EEG signals and various computer games - GAMEEMO,” Biomed. Signal Process. Control, vol. 60, art. no. 101951, 2020, 12 pp.

- [21] X. Wang, W. Pedrycz, A. Gacek, and X. Liu, “From numeric data to information granules: A design through clustering and the principle of justifiable granularity,” Knowl.-Based Syst., vol. 101, pp. 100–113, 2016.

- [22] J. Russell, “A circumplex model of affect,” J. Pers. Soc. Psychol., vol. 39, no. 6, pp. 1161–1178, 1980.

- [23] F. Laamarti, M. Eid, and A. El Saddik, “An overview of serious games,” Int. J. Comput. Games Technol., vol. 2014, 2014, 15 pp.

- [24] E. Soares, P. Costa, B. Costa, and D. Leite, “Ensemble of evolving data clouds and fuzzy models for weather time series prediction,” Appl. Soft Comput., vol. 64, pp. 445–453, 2018.

- [25] X. Li, D. Song, P. Zhang, Y. Zhang, Y. Hou, and B. Hu, “Exploring EEG features in cross-subject emotion recognition,” Front. Neurosci., vol. 12, pp. 162–176, Mar. 2018.

- [26] W.-L. Zheng, J.-Y. Zhu, and B.-L. Lu, “Identifying stable patterns over time for emotion recognition from EEG,” IEEE Trans. Affect. Comput., vol. 10, no. 3, pp. 417–429, 2019.